Cisco Crosswork Situation Manager 7.3.x Implementer Guide

Powered by Moogsoft AIOps 7.3

Contents

Cisco Crosswork Situation Manager 7.3.0 Supported Environments

Scale Your Cisco Crosswork Situation Manager Implementation

High Availability Configuration Hierarchy

High Availability for Third Party Component Dependencies

HA Control utility command reference

Install Cisco Crosswork Situation Manager

Pre-installation for Cisco Crosswork Situation Manager v7.3.x

System Setup for Cisco Crosswork Situation Manager

Control Cisco Crosswork Situation Manager Processes

Encrypt Database Communications

Configure External Authentication

Configure Historic Data Retention

Change passwords for default users

Upgrade Cisco Crosswork Situation Manager

RPM upgrade to Cisco Crosswork Situation Manager v7.3.x

Tarball upgrade to Cisco Crosswork Situation Manager v7.3.x

RPM - Upgrade database components

RPM - Upgrade data ingestion components

RPM - Migrate from MySQL to Percona

Tarball - Upgrade UI components

Tarball - Upgrade Core components

Tarball - Upgrade database components

Tarball - Upgrade data ingestion components

Tarball - Migrate from MySQL to Percona

Configuration Migration Utility

Finalize and validate the upgrade

Uninstall Cisco Crosswork Situation Manager

Stop Core Cisco Crosswork Situation Manager and Supporting Services

Uninstall Core Cisco Crosswork Situation Manager Packages and Remove Directories and Users

Uninstall Supporting Applications

Uninstall Remaining Packages and Remove Yum Repositories

Monitor and Troubleshoot Cisco Crosswork Situation Manager

Monitor Component CPU and Memory Usage

Monitor Moogfarmd Data Processing Performance

Monitor RabbitMQ Message Bus Performance

Monitor System Performance Metrics

Troubleshoot Installation and Upgrade

Troubleshoot Integrations Controller

Troubleshoot Required Services for a Functional Production System

Troubleshoot Slow Alert/Situation Creation

Obtaining Documentation and Submitting a Service Request

Implementer Guide

The Implementer Guide contains instructions to help you install and configure Cisco Crosswork Situation Manager.

To install the system and handle common post-installation setup, see Install Cisco Crosswork Situation Manager and System Setup for Cisco Crosswork Situation Manager.

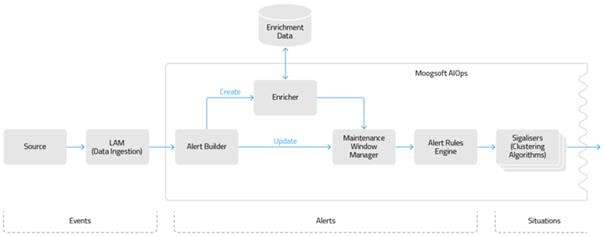

After you have the base system up and running, you can begin to ingest event data from your monitoring sources. Integrations covers most integration topics. For details on common data ingestion configuration tasks, see Configure Data Ingestion.

Much of the value of Cisco Crosswork Situation Manager comes from its ability to process your raw event data, deduplicate the events, and transform the data into alerts that comprise Situations. It is critical to configure the system to create meaningful Situations for you and to present the Situations to the right teams. Figuring out your needs for your Situation design will help you make decisions about the right data processing choices for you.

Based upon your Situation design choices and the type of data available from your monitoring sources, you can follow the Clustering Algorithm Guide to choose the correct clustering algorithms for your system. Then you have several options to Configure Data Processing to achieve your goals. See also the Administrator Guide.

To keep your system running and healthy, see Monitor and Troubleshoot Cisco Crosswork Situation Manager.

Plan Your Implementation

Cisco Crosswork Situation Manager 7.3.0 Supported Environments

The following operating systems, browsers, and third-party software are either supported or required in order to run Cisco Crosswork Situation Manager.

Any operating systems and browsers not listed in the sections below are not officially recommended or supported.

Operating Systems

You can run Cisco Crosswork Situation Manager on the following versions of Red Hat Enterprise Linux® (RHEL) and CentOS Linux:

| OS | Versions |
| --- | --- |
| RHEL | v7 |
| CentOS | v7 |

Note: No other Linux distributions are currently supported.

Browsers

You can use the following browsers for the Cisco Crosswork Situation Manager UI:

| Browser | Version |
| --- | --- |
| | Latest |
| | Latest |
| | Latest |
| | Latest |
| | v11 |

Supported Third-Party Software

The latest default installation of Cisco Crosswork Situation Manager comes with the following third-party applications:

| Application | Version |
| --- | --- |
| Apache Tomcat® | v9.0.22 |
| Elasticsearch | v6.8.1 (LTS version) |
| Percona | v5.7.26 |
| Nginx | v1.14.0 or above |
| RabbitMQ | v3.7.4 |

Other supported application packages include:

| Application | Version |
| --- | --- |
| Erlang | v20.1.7 |
| JDK | OpenJDK 11.0.2.7-0.el7_6 |
| Apache Tomcat® Native | v1.2.23 or above |

Integration Support

The following table outlines the vendor supported integrations for the current version of Cisco Crosswork Situation Manager alongside the corresponding supported software versions.

Integrations support IPv6 connectivity.

| Integration Version | Supported Software / Version |
| --- | --- |
| Ansible Tower Integration v1.10 | Ansible Tower v3.0, 3.1 |
| Apache Kafka Integration v1.12 | Apache Kafka v0.9, 1.1, 2.2 |
| AppDynamics Integration v2.2 | AppDynamics v4.0, 4.1 |
| AWS CloudWatch Integration v2.0 | aws-java-sdk v1.11 |
| AWS SNS Integration v1.2 | AWS SNS v2016-06-28 |
| BMC Remedy Integration v1.8 | Remedy v9.1 |
| CA UIM Integration v1.8 | CA Nimsoft UIM v8.4 |
| CA Spectrum Integration v2.2 | CA Spectrum v10.2 |
| Catchpoint Integration v1.0 | Catchpoint v2019 |
| Cherwell Service Management Integration v1.5 | Cherwell v9.3 |
| Datadog Polling Integration v1.3 | Datadog v2018 |
| Datadog Webhook Integration v1.11 | Datadog v5.21 |
| Dynatrace APM Plugin Integration v1.8 | Dynatrace v6.5, 7.0 |
| Dynatrace APM Polling Integration v2.2 | Dynatrace v6.5, 7.0 |
| Dynatrace Notification Integration v1.5 | Dynatrace v6.5 |
| Dynatrace Synthetic Integration v1.12 | Dynatrace Synthetic v2017 |
| Email Integration v2.5 | IMAP, IMAPS, POP3, POP3S |
| EMC Smarts Integration v1.3 | RabbitMQ v3.7.4 and Smarts v9.5 |
| ExtraHop Integration v1.2 | ExtraHop v2018 |
| FluentD Integration v1.10 | FluentD v0.12 |
| Grafana Integration v1.2 | Grafana v5.2.4 |
| HP NNMi Integration v2.5 | HP NNMi v10.2 |
| HP OMi Plugin Integration v1.8 | HP OMi v10.1 |
| HP OMi Polling Integration v2.5 | HP OMi v10.1 |
| JIRA Service Desk Integration v1.10 | JIRA Service Desk v7.6 |
| JIRA Software Integration v1.10 | JIRA Software v7, JIRA Cloud |
| JMS Integration v1.11 | ActiveMQ v5.14, JBoss v10, WebLogic v12.0 |
| Microsoft Azure Integration v1.2 | Microsoft Azure Monitor v2018 |
| Microsoft Azure Classic Integration v1.2 | Microsoft Azure Classic v2018 |
| Microsoft SCOM Integration v2.6 | Microsoft SCOM v2012, 2016 |
| Microsoft Teams Integration v1.0 | Microsoft Teams v1.2.00.3961 |
| Nagios Integration v2.10 | Nagios vXI |
| New Relic Integration v1.10 | New Relic v2016 |
| New Relic Polling Integration v2.0 | New Relic v2.3 |
| New Relic Insights Polling Integration v1.0 | New Relic v2.3 |
| Node.js Integration v1.9 | Node.js v1.6 |
| NodeRED Integration v1.9 | Nagios Red v016, 017 |
| OEM Integration v2.3 | Oracle Enterprise Manager v12c, 13c |
| Office 365 Email Integration v1.0 | |
| Pingdom Integration v1.9 | Pingdom v2017 |
| Sensu Integration v1.0 | Sensu Core v1.8 |
| ServiceNow Integration v4.3 | ServiceNow vNew York, Madrid, London, Kingston |
| SevOne Integration v1.5 | SevOne v5.7.2.0 |
| Site24x7 Integration v1.0 | Site24x7 v17.4.3, 17.4.4 |
| Slack Integration v1.7 | Slack v3.1 |
| SolarWinds Integration v3.2 | SolarWinds v11.5, 12.2 |
| Splunk Integration v2.5 | Splunk v6.5, 6.6, 7.0 |
| Splunk Streaming Integration v1.0 | Splunk v7.2, 7.3 |
| Sumo Logic Integration v1.1 | Sumo Logic v2018 |
| VMware vCenter Integration v2.3 | VMware vCenter v6.0, 6.5 |
| VMware vROps Integration v2.3 | VMware vROps v6.6 |
| VMware vSphere Integration v2.4 | VMware vSphere v6.0, 6.5 |
| VMware vRealize Log Insight Integration v2.4 | VMware vRealize Log Insight v4.3 |
| WebSphere MQ Integration v1.12 | WebSphere MQ v8 |
| xMatters Integration v1.6 | xMatters v5.5 |
| Zabbix Integration v1.0 | Zabbix v3.4 |
| Zabbix Polling Integration v3.4 | Zabbix v3.2 |
| Zenoss Integration v2.4 | Zenoss v4.2 |

Sizing Recommendations

The sizing recommendations below are guidelines for small, medium and large Cisco Crosswork Situation Manager systems based on input data rate and volume.

In the context of this guide, Managed Devices (MDs) are all of the components in the network infrastructure that generate and emit events.

Small

| Environment | CPU | File System |
| --- | --- | --- |
| 1000 to 5000 Managed Devices (MDs); less than 20 users; up to 5 integrations; less than 20 Alerts per second | 8 Cores; 32GB RAM; 2 x 1GB Ethernet; Physical or Virtual Server | 1 TB Local or SAN. See Retention Policy. |

Medium

| Environment | CPU | File System |
| --- | --- | --- |
| 5000 to 20,000 MDs; between 20 and 40 users; between 6 and 10 integrations; between 20 and 100 Alerts per second | 16 Cores; 64GB RAM; 2 x 1GB Ethernet; Physical or Virtual Server | 1 TB Local or SAN. See Retention Policy. |

Large

| Environment | CPU | File System |
| --- | --- | --- |
| More than 20,000 MDs; more than 40 users; more than 10 integrations; more than 100 Alerts per second | 24+ Cores; 128GB RAM; 2 x 1GB Ethernet; Physical or Virtual Server | 1 TB Local or SAN. See Retention Policy. |
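The Small, Medium, and Large thresholds above can be expressed as a simple lookup. The following Python sketch is illustrative only and is not part of the product: the names `Workload` and `recommend_tier` are hypothetical, and it assumes that the most demanding metric decides the tier and that a boundary value such as exactly 5000 MDs counts as the larger tier.

```python
from dataclasses import dataclass

# Tier order matters: a larger index means a larger deployment.
TIERS = ("small", "medium", "large")


@dataclass
class Workload:
    managed_devices: int
    users: int
    integrations: int
    alerts_per_second: float


def _tier_index(value, medium_from, large_above):
    """Return 0 (small), 1 (medium) or 2 (large) for a single metric."""
    if value > large_above:
        return 2
    if value >= medium_from:
        return 1
    return 0


def recommend_tier(w: Workload) -> str:
    # Assumption: the most demanding metric decides the overall tier.
    indexes = [
        _tier_index(w.managed_devices, 5000, 20000),
        _tier_index(w.users, 20, 40),
        _tier_index(w.integrations, 6, 10),
        _tier_index(w.alerts_per_second, 20, 100),
    ]
    return TIERS[max(indexes)]
```

For example, `recommend_tier(Workload(3000, 10, 3, 150))` returns `"large"` under these assumptions, because the alert rate alone exceeds 100 per second.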

Virtualization Restrictions

Consider the following restrictions for virtual environments:

· Ideally, all Moog servers (guests) should be on the same compute node (host), sharing a hypervisor or virtual machine monitor. This minimizes latency between Moog guests.

· If servers are subject to automated resource balancing (for example, vMotion) and may move between compute nodes, move all Moog servers at the same time. If this is not possible, constrain Moog servers to movements that minimize the resulting network distance.

· If Moog servers are distributed among compute nodes, minimize the network “distance” (logical hops) between the nodes.

· Network latency between components may affect event processing throughput. This is especially true between the core and database servers.

Shared Storage

On any shared compute platform Cisco makes the following recommendations:

· Increase the minimum resource requirements by at least 33% to account for shared resource usage and allocation.

· Storage latency reduces effective throughput at the core processing layer and should be minimized within the available constraints of a SAN.

· Cisco Crosswork Situation Manager should be treated as a highly transactional system and not placed on the same compute node as other highly transactional applications that may cause SAN resource contention.

· SAN port and array port contention should be minimized.

· Storage medium should be as fast as possible to minimize the transaction times to the database.

Retention Policy

You can calculate the amount of disk space in GB required for the database server using the following calculation:

(es x eps x d x 86,400) x 1.2 / 1,000,000

For this calculation: es = average event size in KB, eps = average events per second, d = number of days of retention and 86,400 represents the number of seconds per day.

For the majority of event sources, you can reasonably estimate a 2KB event size. However, some sources, such as Microsoft SCOM, have larger than average events. The 2KB base accounts for other event-based and alert-based storage, such as an alert's Situation membership and Situation room thread sizes.

The average event rate is across all LAMs and integrations.

Note:

If you do not enable the Archiver tool, the historic database will grow indefinitely. See Archive Situations and Alerts for more information.

For example, the following calculation represents a 400 day retention period with an average event size of 2KB at 300 events per second:

(2 x 300 x 400 x 86,400) x 1.2 / 1,000,000 = 24,883.2 GB.
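The retention calculation above translates directly into a small helper. This is a minimal Python sketch of the formula as stated in this section; the function and constant names are hypothetical, and calling the 1.2 multiplier an overhead factor is an assumption, since the guide does not name it.

```python
SECONDS_PER_DAY = 86_400
OVERHEAD_FACTOR = 1.2  # the 1.2 multiplier from the formula; the name is an assumption


def database_disk_gb(event_size_kb: float, events_per_second: float, retention_days: int) -> float:
    """Disk space in GB: (es x eps x d x 86,400) x 1.2 / 1,000,000."""
    total_kb = event_size_kb * events_per_second * retention_days * SECONDS_PER_DAY
    return total_kb * OVERHEAD_FACTOR / 1_000_000


# The 400-day example: 2KB events at 300 events per second.
print(round(database_disk_gb(2, 300, 400), 1))
```

Because the result scales linearly with each input, halving the retention period or the average event rate halves the estimated disk space.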

Server Roles

In order to plan your Cisco Crosswork Situation Manager deployment, it helps to understand the different components of Moogsoft AIOps and the options for distributing them among multiple physical or virtual machines.

A server role within a Cisco Crosswork Situation Manager installation is a functional entity containing components that must be installed on the same machine. You can distribute different roles to different machines.

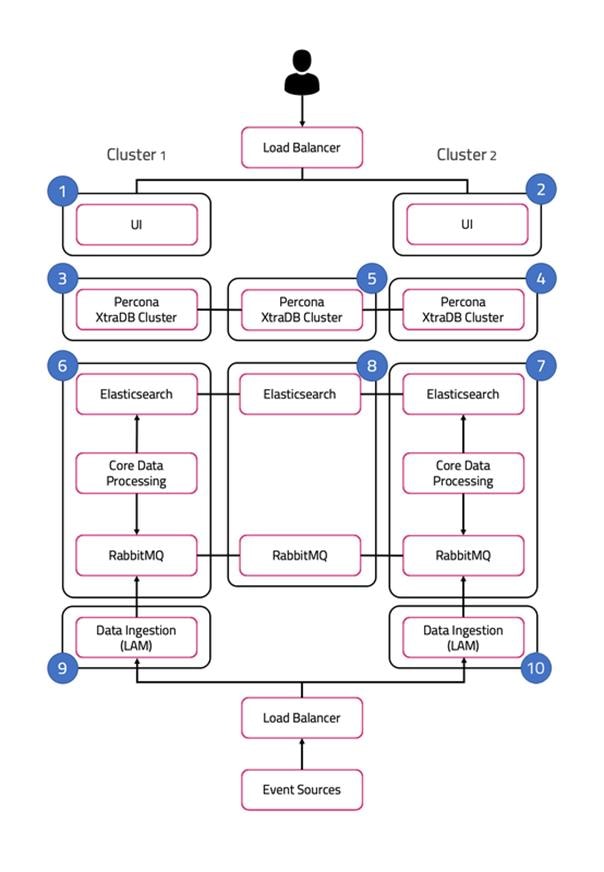

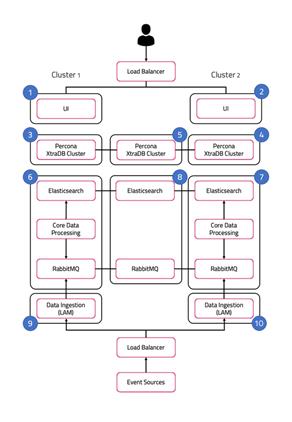

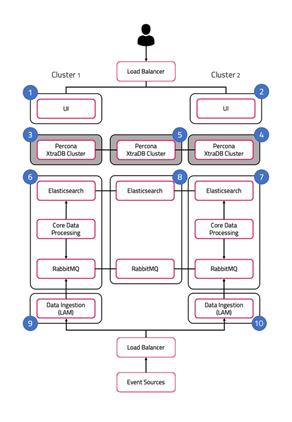

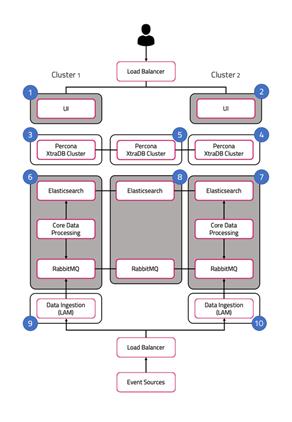

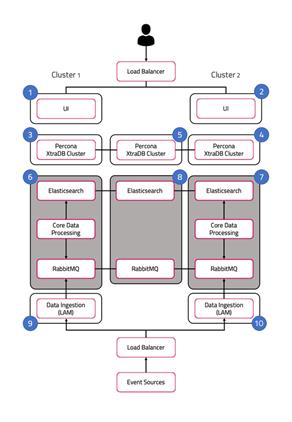

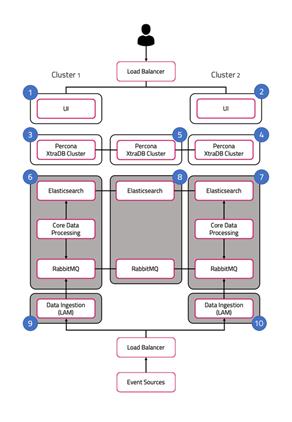

The following diagram illustrates the typical deployment strategy for the components of Cisco Crosswork Situation Manager in a highly available configuration:

The architecture is built upon two clusters with software components that serve several roles. See also HA Reference Architecture.

In the case of a single-server installation, you install all the roles on one machine.

UI role

The UI role comprises Nginx and Apache Tomcat, represented in the diagram as numbers 1 and 2. The Cisco Crosswork Situation Manager servlet groups run in an active / active configuration.

Nginx is the proxy for the web application server and for integrations.

Tomcat is the web application server. It reads and writes to the Message Bus and the database.

Database role

Percona XtraDB Cluster serves the database role, represented in the diagram as numbers 3, 4, and 5. The cluster runs in active / active standby / active standby mode.

Percona XtraDB Cluster is the system datastore that handles transactional data from other parts of the system: LAMs (integrations), data processing, and the web application server.

HA Proxy handles database query routing and load balancing.

See Database Strategy for more information.

Core role

The Core role, represented by numbers 6 and 7 in the diagram, comprises the following:

· Moogfarmd, the Cisco Crosswork Situation Manager data processing component. Moogfarmd consumes messages from the Message Bus and processes event data in a series of servlet-like modules called Moolets. It reads and writes to the database and publishes messages to the bus.

· RabbitMQ, which provides the message queue. It receives published messages from integrations. It publishes messages destined for data processing (Moogfarmd) and the web application server.

· Elasticsearch, which provides the UI search capability. It indexes documents from the indexer Moolet in the data processing series. It returns search results to Tomcat.

In HA deployments, Moogfarmd automatically runs in active / passive mode.

In concert with the Redundancy role server, RabbitMQ and Elasticsearch run in active / active / active mode.

Redundancy role

The redundancy role, represented by number 8 in the diagram, provides the third node required for true HA for RabbitMQ and Elasticsearch.

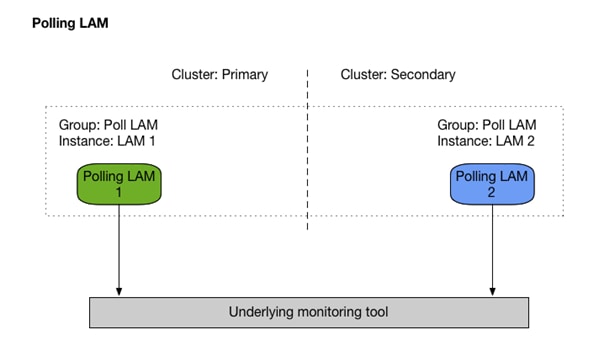

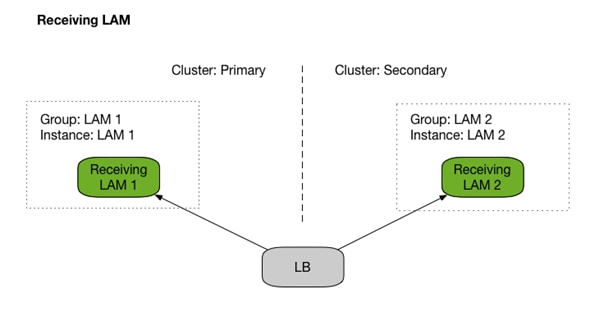

Data ingestion role

Link Access Modules (LAMs) make up the data ingestion role represented by numbers 9 and 10 in the diagram. Receiving LAMs listen for events from monitoring sources and Polling LAMs poll monitoring sources for events. Both parse and encode raw events into discrete events, and then write the discrete events to the Message Bus.

In HA deployments, receiving LAMs run in active / active mode, but polling LAMs run in active / passive mode.

Load balancers

The load balancers in front of the UI server role and the data ingestion server role are the customer's responsibility.

Scale Your Cisco Crosswork Situation Manager Implementation

Cisco Crosswork Situation Manager supports several options to help you scale your implementation to meet your performance needs. See Monitor and Troubleshoot Cisco Crosswork Situation Manager to learn how to monitor your system for signs that it is time to scale.

For information on the performance tuning capabilities of individual Cisco Crosswork Situation Manager components, see Monitor Component Performance.

Horizontal Scaling

Cisco Crosswork Situation Manager currently supports horizontal scaling at the integration (LAM) and visualization (Nginx + Tomcat) layers.

· You can add more LAMs, either on additional servers or on the same server, to achieve higher event rates. In this case, you have the option to configure event sources to send to the parallel LAMs separately or to implement a load balancer in front of the LAMs.

· You can add Nginx/Tomcat UI "stacks" behind a load balancer to increase performance for UI users. Adding UI stacks does not always provide better performance. It can degrade performance by adding more connection pressure to the database.

The following are typical horizontal scaling scenarios:

· You can add an additional LAM to process incoming events if you see that, despite attempts to tune the number of threads for an individual LAM, its event rate hits a plateau. This is a sign that the LAM is the bottleneck, so adding other instances of the LAM behind a load balancer will allow a higher event processing rate.

· You can add an additional UI stack if database pool diagnostics for Tomcat suggest that all or most of the database connections are constantly busy with long running connections, but the database itself is performing fine.

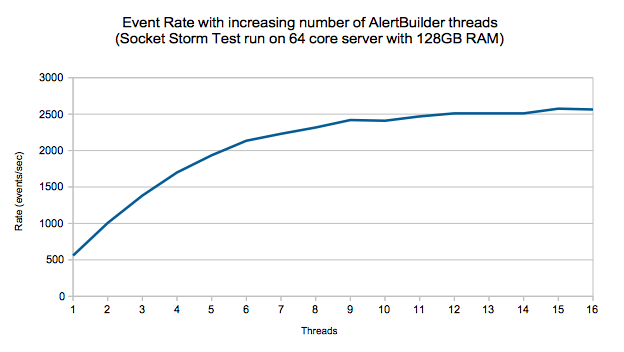

The data processing layer (moogfarmd) is not currently well suited to horizontal scaling. Moolets of the same type cannot currently share processing. Adding more Moolets like the AlertBuilder in an attempt to increase the event processing rate is likely to lead to database problems.

Vertical Scaling

All Cisco Crosswork Situation Manager components ultimately benefit from running on the best available hardware, but the data processing layer (moogfarmd) benefits most from this approach. Depending on the number and complexity of Moolets in your configuration, you will see performance benefits in data processing on servers that have the fastest CPUs, numerous cores, and a large amount of memory. This enables you to increase the number of threads for moogfarmd to improve processing speed. You should also locate the database on the most powerful server feasible (clock speed, number of cores, and memory) with the biggest and fastest disk.

Distributed Installations

In some cases, you can distribute Cisco Crosswork Situation Manager components among different hosts to gain performance, because doing so reduces resource contention on a single server. The most common distribution is to install the database on a separate server, ideally within the same fast network to minimize the risk of latency. An additional benefit of this move is that it allows you to run a clustered or master/slave database for redundancy.

Another common distribution is to install the UI stack (Nginx) on a separate server within the same fast network.

Some integrations (LAMs) benefit from being closer to the source, so they are candidates for distribution.

See Server Roles and Distributed HA Installation for more information.

High Availability Overview

Cisco Crosswork Situation Manager supports high availability (HA) architectures to improve its fault tolerance. Each component supports a multi-node architecture to enable redundancy, failover, or both to minimize the risk of data loss, for example, in the case of a hardware failure.

This topic covers the architectures you can use to achieve HA with Cisco Crosswork Situation Manager. For an example of how to set up a single site HA system, see Distributed HA Installation. See HA Reference Architecture for a detailed diagram of the components in a single site HA configuration.

Distributed HA architectures

Cisco Crosswork Situation Manager supports high availability in distributed architectures where different machines host a subset of the stack. You can run one or more of the server roles on its own machine.

See Server Roles for details of the HA architecture server roles in Cisco Crosswork Situation Manager.

If you run more than one server role on a machine, choose a primary role for the server. The primary role dictates which additional roles are supported on the machine as follows:

| Primary Role | Supported Secondary Roles |
| --- | --- |
| Core | UI, Data ingestion and Database |
| UI | Data ingestion |
| Data Ingestion | UI |
| Database | Redundancy |
| Redundancy | Database |
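The role compatibility table above can be encoded as a lookup to sanity-check a planned server layout. The following Python sketch is illustrative only; the dictionary and function names are hypothetical, and the role names are normalized to a single spelling.

```python
# Primary role -> secondary roles the table lists as supported.
SUPPORTED_SECONDARY_ROLES = {
    "Core": {"UI", "Data ingestion", "Database"},
    "UI": {"Data ingestion"},
    "Data ingestion": {"UI"},
    "Database": {"Redundancy"},
    "Redundancy": {"Database"},
}


def unsupported_roles(primary, secondary):
    """Return the requested secondary roles that the table does not list for this primary role."""
    allowed = SUPPORTED_SECONDARY_ROLES[primary]
    return sorted(set(secondary) - allowed)
```

For example, `unsupported_roles("UI", ["Database"])` returns `["Database"]`, which signals that a server whose primary role is UI should not also host the Database role.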

See Scale Your Cisco Crosswork Situation Manager Implementation for information on how to increase capacity within the HA architecture.

Contact your Cisco technical representative to discuss scaling your deployment.

See Sizing Recommendations for more information on hardware sizes and capacity.

After you decide on the best HA architecture for your environment, you can plan your implementation.

Resilience and failover

Cisco Crosswork Situation Manager provides support for automatic failover between the two nodes within an HA pair, for example, from one instance of Moogfarmd to another, or from one instance of a LAM to another. However, there is no automatic failover between multiple HA pairs. For example, there is no failover from a primary site to a second site, such as a disaster recovery replica.

Cisco Crosswork Situation Manager does not support automated fail-back for any architecture. For example, consider an HA pair of Moogfarmd instances. When the instance of Moogfarmd in cluster 1 becomes unavailable, the instance in cluster 2 enters an active state. When the instance from cluster 1 recovers and becomes available, the instance in cluster 2 remains active.
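The failover and fail-back behavior described above can be modeled as a small state machine. This Python sketch is a conceptual illustration, not the product's implementation: the `HaPair` class is hypothetical, and the behavior when both instances are unavailable is an assumption that the guide does not cover.

```python
class HaPair:
    """Two instances of one component; at most one is active at a time."""

    def __init__(self, first, second):
        self.available = {first: True, second: True}
        self.active = first

    def _peer(self, name):
        return next(n for n in self.available if n != name)

    def mark_unavailable(self, name):
        self.available[name] = False
        if self.active == name:
            peer = self._peer(name)
            # Automatic failover: the peer takes over if it is available.
            self.active = peer if self.available[peer] else None

    def mark_available(self, name):
        self.available[name] = True
        # No automatic fail-back: a recovered instance does not reclaim the active role.
        # Assumption: if nothing is active at all, the recovered instance becomes active.
        if self.active is None:
            self.active = name


pair = HaPair("cluster1", "cluster2")
pair.mark_unavailable("cluster1")  # cluster2 becomes active
pair.mark_available("cluster1")    # cluster2 remains active
print(pair.active)
```

Returning the active role to the recovered instance is therefore a manual step in this model, which matches the statement that automated fail-back is not supported.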

High Availability Configuration Hierarchy

Cisco Crosswork Situation Manager deployments use a tiered hierarchy of clusters, groups, and instances to achieve High Availability.

A cluster is a collection of Cisco Crosswork Situation Manager components. To achieve HA you need at least two clusters that include all the Cisco Crosswork Situation Manager components. You need an additional, third machine, for message queue and search components.

A group comprises a single component or two identical components that provide resilience over two or more clusters. Cisco Crosswork Situation Manager automatically controls the active or passive behavior and failover of the instances within a group.

An example of a group is a Socket LAM configured for the same source in two separate clusters. Other groups include the following:

· Servlets for the UI.

· Moogfarmd for data processing.

· Individual LAMs for data ingestion. For example, the REST LAM.

An instance is an individual component running within a group. Each instance in a group provides resilience for the other instance. For example, the primary instance of a Socket LAM pairs with a secondary instance in the second cluster to make a group.

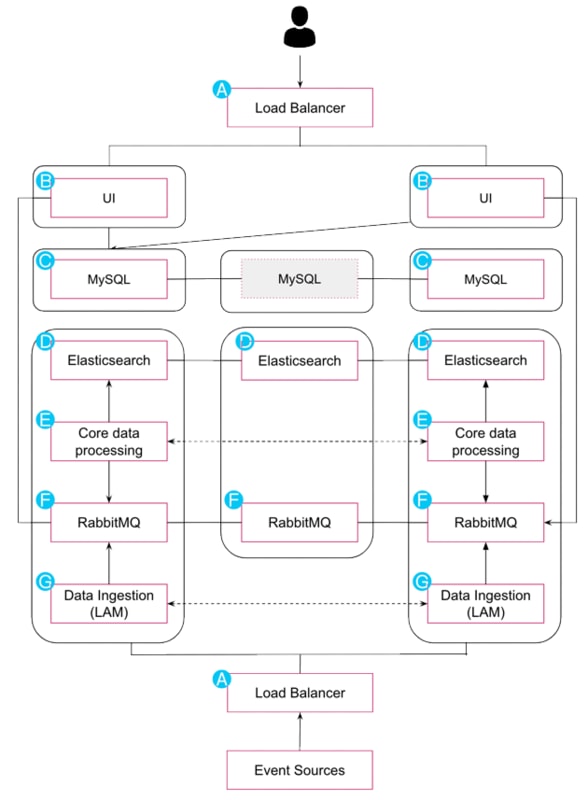

HA Reference Architecture

The diagram in this topic represents a Cisco Crosswork Situation Manager High Availability deployment to a single site: one datacenter, LAN, or availability zone. To support this architecture, all servers must have sufficient connection speed amongst themselves so that latency between hosts does not exceed 5 ms.

A) Load balancers / VIPs

All Cisco Crosswork Situation Manager components have their own HA mechanism that provides failover capabilities, but it is also a best practice to use one or more load balancers. You can use either software or hardware load balancers with the following requirements and recommendations:

· Load balancers must use TCP.

· You must implement health checks using your preferred approach to remove unhealthy servers from the cluster.

· The load balancer should provide load balancing capabilities and a VIP for each server role. For example: one UI VIP per site, one LAM VIP per site.

· Sticky sessions are recommended.

· You can choose your preferred load balancing approach. For example, round robin or least-connection.

B) User interface

The Cisco Crosswork Situation Manager UI comprises the following components:

· Nginx: The web server that provides static UI content and acts as a proxy for the application server. For HA deployments, install a minimum of two Nginx instances on separate servers and optionally cluster the Nginx instances.

· Apache Tomcat: The web server that provides servlet and API support. For HA deployments, install a minimum of two Tomcat instances on separate servers and optionally cluster the instances.

The UI components run in active/active configuration, so configure servlet instances to run in separate groups.

Required Ports: 80, 443

C) Database

Cisco Crosswork Situation Manager uses Percona XtraDB as the system database. HA requires a minimum of three server nodes configured in each cluster with latency between them not exceeding 5 ms.

Required Ports: 3306

D) Search and indexing

Cisco Crosswork Situation Manager uses Elasticsearch to store active alert and Situation data to provide search functionality within the product. For HA deployments install a cluster of a minimum of three data servers with one active master server.

Required Ports: 9200, 9300

E) Core data processing

Moogfarmd is the core data processing application that controls all other services in Cisco Crosswork Situation Manager. It manages the clustering algorithms and other applets (Moolets) that run as part of the system. For HA deployments, install a minimum of two Moogfarmd services on separate servers. Moogfarmd can only run as a two-instance group in an active/passive mode.

Required Ports: 5701, 8901 for Hazelcast: the in-memory data grid that provides fault tolerance.

F) Message Bus

Cisco Crosswork Situation Manager uses RabbitMQ as the system Message Bus. It requires a minimum of three servers for HA. RabbitMQ relies on its native clustering functionality and mirrored queues to handle failover; it does not use the Cisco Crosswork Situation Manager load balancing feature.

Required Ports: 5672, 4369, 15672

G) Data ingestion

Cisco Crosswork Situation Manager uses the following types of Link Access Modules (LAMs) to ingest data:

· Polling LAMs that periodically connect to a data source using an integration API to collect event data.

· Receiving LAMs that provide an endpoint for data sources to post event data.

For HA deployments:

· Install two instances of each LAM. When both instances are in the same group, they run in active/passive mode.

· For LAMs deployed over an unreliable link such as a WAN, or across data centers, you should deploy a caching LAM strategy that includes a database and message queue on the LAM Servers.

· You can load balance receiving LAMs and configure them as active/active to increase capacity.
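The "Required Ports" entries in sections B through F lend themselves to a quick connectivity check between hosts. The following Python sketch uses only the standard library; the dictionary keys, function names, and the example hostname are hypothetical, and a successful TCP connection only shows that the port is reachable, not that the service behind it is healthy.

```python
import socket

# Ports as listed under "Required Ports" in sections B through F.
REQUIRED_PORTS = {
    "UI (Nginx, Tomcat)": [80, 443],
    "Database (Percona XtraDB)": [3306],
    "Search (Elasticsearch)": [9200, 9300],
    "Core (Moogfarmd, Hazelcast)": [5701, 8901],
    "Message Bus (RabbitMQ)": [5672, 4369, 15672],
}


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def closed_ports(host, ports, timeout=2.0):
    """Return the subset of ports that could not be reached on the host."""
    return [p for p in ports if not port_is_open(host, p, timeout)]
```

For example, `closed_ports("db1.example.com", REQUIRED_PORTS["Database (Percona XtraDB)"])` returns an empty list when port 3306 is reachable on that placeholder host.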

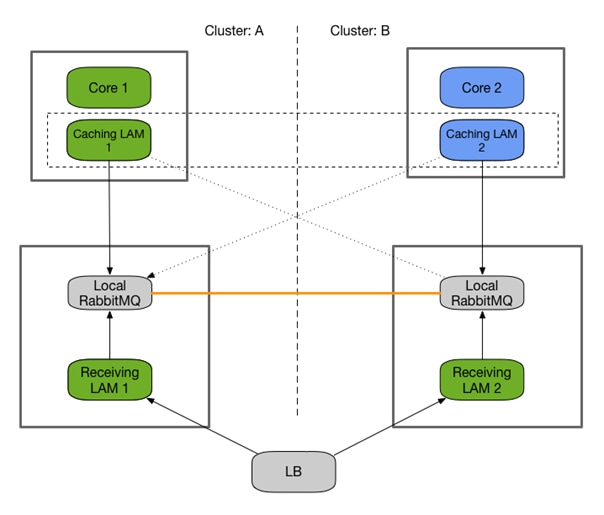

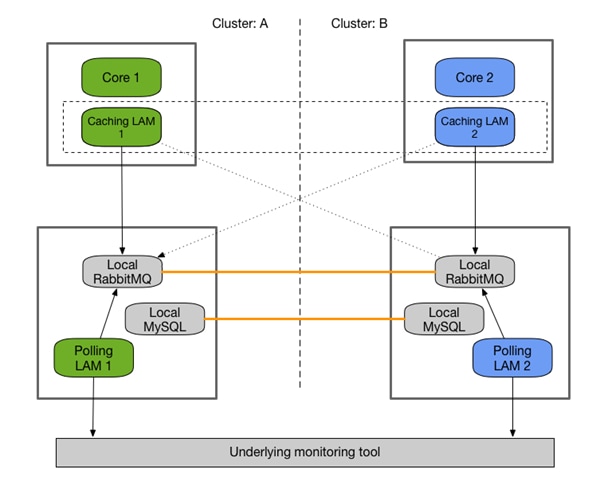

HA Architecture for LAMs

The Cisco Crosswork Situation Manager HA architecture provides increased resilience against LAM and server restarts by caching ingested data to the disk. It requires installing a local RabbitMQ cluster which is used by LAMs for publishing.

A remote caching LAM, located next to the Core role, connects to the local RabbitMQ cluster, picks the events from the queue and publishes them to the central RabbitMQ cluster for Moogfarmd to process.

If no caching LAM is available to consume the events from the local RabbitMQ cluster, the data is cached to disk until the server runs out of memory.

HA architecture

This architecture is recommended for hybrid installations, where the core processing is located in the cloud and LAMs are on-premise, or for a full on-premise configuration where LAMs are housed remotely to the core components.

Polling LAMs run in an active / passive mode and must connect to a local database in order to negotiate their state. This requires a local MySQL instance that runs with master / master replication.

Installation steps

If you are setting up the Store and Forward architecture, perform the following steps:

· Set up LAM 1 and 2 Roles (see Install with Caching LAM)

· Set up Caching LAM 1 and 2 Roles (see Caching LAM)

Otherwise, perform the standard installation steps:

· Set up LAM 1 and 2 Roles (see Install without Caching LAM)

High Availability for Third Party Component Dependencies

You can configure Cisco Crosswork Situation Manager dependencies such as Percona XtraDB Cluster, Elasticsearch, RabbitMQ, and Grafana to work effectively in highly available deployments.

See High Availability for details on high availability deployments of Cisco Crosswork Situation Manager and deployment scenarios.

Configure Percona XtraDB Cluster for HA

For information on Percona XtraDB Cluster in Cisco Crosswork Situation Manager, see Database Strategy. For an example configuration, see Set Up the Database for HA. For further information, refer to the documentation about Percona XtraDB Cluster.

Configure RabbitMQ for HA

You can improve the performance and reliability of your Cisco Crosswork Situation Manager deployment by:

· Distributing your RabbitMQ brokers on different hosts.

· Clustering your multiple RabbitMQ brokers.

· Mirroring your message queues across multiple nodes.

See Set Up the Core Role for HA and Set Up the Redundancy Server Role for an example configuration. For more information, see Message System Deployment and refer to the RabbitMQ documentation on Clustering and Mirrored Queues.

Configure Elasticsearch for HA

There are different ways to configure Elasticsearch for distributed installations. See Set Up the Core Role for HA and Set Up the Redundancy Server Role for an example configuration.

Refer to the Elasticsearch documentation on Clustering for more details.

Configure Grafana for HA

To set up Grafana for distributed installations, you should configure each Grafana instance to connect to a Cisco Crosswork Situation Manager UI load balancer such as HA Proxy rather than the Cisco Crosswork Situation Manager UI stack.

Alternatively, you can point it at the Apache Tomcat server or Nginx server. Refer to the Grafana documentation on Setting Up Grafana for High Availability.

HA Control utility command reference

The Cisco Crosswork Situation Manager HA Control Utility ha_cntl is a command line utility to:

· Control instance, process group, or cluster failover. For example, to switch from passive to active mode.

· View the current status of all clusters, process groups, and instances. See High Availability Configuration Hierarchy for more information.

Normally you should configure groups in HA to use automatic failover in production. Use the HA Control utility to check the status of the HA system or to initiate failover in non-production scenarios.

Usage

ha_cntl [ --activate cluster[.group[.instance]] | --deactivate cluster[.group[.instance]] | --diagnostics cluster[.group[.instance]] [ --assumeyes ] | --view ] [ --loglevel (INFO|WARN|ALL) ] [ --time_out <seconds> ] | --help

| Argument | Input | Description |
| -a, --activate | String <cluster[.group[.instance_name]]> | Activate all groups within a cluster, a specific group within a cluster, or a single instance. |
| -d, --deactivate | String <cluster[.group[.instance_name]]> | Deactivate all groups within a cluster, a specific group within a cluster or a single instance. |
| -i, --diagnostics | String <arg> | Print additional diagnostics where available to process log file. |
| -l, --loglevel | String, one of INFO, WARN, or ALL | Log level controlling the amount of information logged by the utility. |
| -t, --time_out | String <number of seconds> | Amount of time in seconds to wait for the last answer. Defaults to 2. |
| -v, --view | - | View the current status of all instances, process groups, and clusters. |
| -y, --assumeyes | - | Answer "yes" for all prompts. |
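To illustrate the cluster[.group[.instance]] target syntax used by --activate and --deactivate, the following is a minimal sketch that assembles a target string from its parts. The function name ha_target is a hypothetical helper, not part of the product, and the cluster, group, and instance names you pass to it must match your own HA configuration.

```shell
# Hypothetical helper: join a cluster, an optional group, and an optional
# instance into the cluster[.group[.instance]] form used by ha_cntl.
ha_target() (
    cluster=$1
    group=$2
    instance=$3
    if [ -z "$cluster" ]; then
        echo "cluster name required" >&2
        exit 1
    fi
    target=$cluster
    if [ -n "$group" ]; then
        target="$target.$group"
        # An instance is only appended when a group is also given.
        if [ -n "$instance" ]; then
            target="$target.$instance"
        fi
    fi
    printf '%s\n' "$target"
)
```

For example, in a non-production test you could pass the result to the utility as $MOOGSOFT_HOME/bin/ha_cntl --activate "$(ha_target SECONDARY moog_farmd)".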

Example

$MOOGSOFT_HOME/bin/ha_cntl -v

Getting system status

Cluster: [SECONDARY] passive

Process Group: [UI] Passive (no leader - all can be active)

Instance: [servlets] Passive

Component: moogpoller - not running

Component: moogsvr - not running

Component: toolrunner - not running

Process Group: [moog_farmd] Passive (only leader should be active)

Instance: FARM Passive Leader

Moolet: AlertBuilder - not running (will run on activation)

Moolet: AlertRulesEngine - not running (will run on activation)

Moolet: Cookbook - not running (will run on activation)

Moolet: Speedbird - not running (will run on activation)

Moolet: TemplateMatcher - not running

Process Group: [rest_lam] Passive (no leader - all can be active)

Instance: REST2 Passive

Process Group: [socket_lam] Passive (only leader should be active)

Instance: SOCK2 Passive Leader

Cluster: [PRIMARY] active

Process Group: [UI] Active (no leader - all can be active)

Instance: [servlets] Active

Component: moogpoller - running

Component: moogsvr - running

Component: toolrunner - running

Process Group: [moog_farmd] Active (only leader should be active)

Instance: FARM Active Leader

Moolet: AlertBuilder - running

Moolet: AlertRulesEngine - running

Moolet: Cookbook - running

Moolet: Default Cookbook - running

Moolet: Speedbird - running

Moolet: TemplateMatcher - not running

Process Group: [rest_lam] Active (no leader - all can be active)

Instance: REST1 Active

Process Group: [socket_lam] Active (only leader should be active)

Instance: SOCK1 Active Leader
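If you want to check the status from a script, the output above can be filtered for a single cluster. The following is a minimal sketch that assumes the "Cluster: [NAME] state" line format shown in this example; the function name cluster_state is hypothetical, and the output format may differ between versions.

```shell
# Hypothetical helper: read "ha_cntl -v" output on stdin and print the state
# (for example "active" or "passive") reported for the named cluster.
# Returns non-zero when the cluster name does not appear in the output.
cluster_state() {
    awk -v name="$1" '
        $1 == "Cluster:" && $2 == "[" name "]" { print $3; found = 1 }
        END { exit (found ? 0 : 1) }
    '
}
```

A typical call would be $MOOGSOFT_HOME/bin/ha_cntl -v | cluster_state PRIMARY.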

Install Cisco Crosswork Situation Manager

Use this guide to learn how to install Cisco Crosswork Situation Manager.

If you are installing another version, see Welcome to the Cisco Docs! for more information. Refer to the following topics to help choose the right environment for your Cisco Crosswork Situation Manager deployment:

· The Cisco Crosswork Situation Manager 7.3.0 Supported Environments topic details supported operating systems and system requirements.

· The Sizing Recommendations topic will help you make sure you select hardware to support your data ingestion and user requirements.

If you are upgrading Cisco Crosswork Situation Manager, see Upgrade Cisco Crosswork Situation Manager.

Deployment options

You have the option to install all Cisco Crosswork Situation Manager packages on a single machine. However, the modular approach of the Cisco Crosswork Situation Manager distribution means fewer dependencies between individual packages. This means you have the flexibility to install different components to different machines. See Server Roles for a description of how you can distribute the different components amongst multiple machines.

· For smaller deployments, you can run all the components on a single machine.

— If you have root access to the machine and want to use Yum to install, see v7.3.x - RPM installation.

— If you do not have root access to the machine where you are installing and you want more control over where you install Cisco Crosswork Situation Manager, see v7.3.x - Tarball installation.

· For most production deployments, you may install different components to different machines in order to distribute the workload. See High Availability Overview for more information.

Pre-installation for Cisco Crosswork Situation Manager v7.3.x

Before you start to install Cisco Crosswork Situation Manager v7.3.x, you must perform certain pre-installation tasks.

The instructions to follow depend on your preferred mode of deployment:

· RPM: Use this method if you have root access to your Cisco Crosswork Situation Manager server(s) and you do not want to change the default installation locations.

· Tarball: Use this method if you need to run the process as a non-root user, or you want the ability to deploy to a non-default location and install all components under one directory.

The Tarball installer is hosted on the Cisco "speedy" Yum repository: https://speedy.moogsoft.com/installer/. Contact Cisco Support for access if you do not already have an account.

· Use the Offline RPM instructions if you have root access but your Cisco Crosswork Situation Manager server(s) do not have access to the internet.
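The choice between the three methods above comes down to two questions: whether you have root access and whether the server can reach the internet. The following sketch encodes that decision as a small function. The name choose_install_method is hypothetical, and the mapping is a simplification: Tarball is also a valid choice with root access if you want a non-default installation location.

```shell
# Hypothetical helper: map two yes/no answers to a deployment method.
# Arguments: has_root (yes|no), has_internet (yes|no).
choose_install_method() {
    case "$1:$2" in
        yes:yes) echo "RPM" ;;
        yes:no)  echo "Offline RPM" ;;
        no:yes|no:no) echo "Tarball" ;;
        *)
            echo "usage: choose_install_method yes|no yes|no" >&2
            return 1
            ;;
    esac
}
```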

For pre-installation instructions, refer to one of the following topics:

· RPM pre-installation for 7.3.x

· Tarball pre-installation for 7.3.x

· Offline RPM pre-installation for 7.3.x

Cisco Crosswork Situation Manager v7.3.x - RPM pre-installation steps

You must perform certain preparatory tasks before you install Cisco Crosswork Situation Manager v7.3.x.

Follow these steps if you have root access to the machine or machines on which you will install Cisco Crosswork Situation Manager, and you can connect to Yum repositories outside your network from those machines.

For Offline RPM pre-installation steps, see v7.3.x - Offline RPM pre-installation steps.

For Tarball pre-installation steps, see v7.3.x - Tarball pre-installation steps.

Before you begin

Before you begin to prepare for the installation, verify the following:

· You have root access to the system where you plan to install Cisco Crosswork Situation Manager.

· You have credentials to connect to the Cisco "speedy" Yum repository.

· You are familiar with the supported versions of third party software, as outlined in Cisco Crosswork Situation Manager 7.3.0 Supported Environments.

Pre-installation steps

Complete the following steps before you perform a RPM installation of Cisco Crosswork Situation Manager v7.3.x:

1. Create the Cisco Crosswork Situation Manager Yum repository as a new file /etc/yum.repos.d/aiops.repo with the following contents. Replace the login and password in the baseurl property with your Cisco "speedy" Yum repository credentials.

[moogsoft-aiops]

name=moogsoft-aiops

baseurl=https://<username>:<password>@speedy.moogsoft.com/repo/aiops/esr

enabled=1

gpgcheck=0

sslverify=0

2. Optional: GPG key validation of the RPMs

To validate the RPMs before installation, follow these steps:

a. Download the key from this site:

https://keys.openpgp.org/vks/v1/by-fingerprint/2529C94A49E42429EDAAADAEC7A2253BFC50512A

b. Copy the key to the server onto which the RPMs or tarball will be installed (it will be an .asc file)

c. Import the key:

gpg --import 2529C94A49E42429EDAAADAEC7A2253BFC50512A.asc

d. Download all the '7.3.0' RPMs and .sig files from the speedy yum repository using a browser, providing speedy credentials when asked by the browser:

https://<speedyusername>:<speedypassword>@speedy.moogsoft.com/repo/aiops/esr/x86_64

e. Move the RPMs and .sig files into the same folder. For example, /tmp, as used in the example below.

f. Copy the following code into a bash terminal and run it to perform the validation:

while read -r RPM

do

echo "Current RPM: $RPM"

gpg --verify "${RPM}.sig" "${RPM}" 2>&1

done < <(find /tmp -name '*.rpm')

g. Confirm that all the commands for each RPM report:

Good signature from "Moogsoft Information Security Team <security@moogsoft.com>"

h. You can now remove the RPMs and .sig files. Yum will download the packages from the online repository for the actual installation.

3. Create an Elasticsearch Yum repository as a new file /etc/yum.repos.d/elasticsearch.repo with the following contents:

[elasticsearch-6.x]

name=Elasticsearch repository for 6.x packages

baseurl=https://artifacts.elastic.co/packages/6.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

4. Install the RabbitMQ Erlang el7 package. For example:

yum -y install https://github.com/rabbitmq/erlang-rpm/releases/download/v20.1.7/erlang-20.1.7-1.el7.centos.x86_64.rpm

Alternatively you can find the file at https://github.com/rabbitmq/erlang-rpm/releases/tag/v20.1.7.

5. Install the RabbitMQ Yum repository. For example:

curl -s https://packagecloud.io/install/repositories/rabbitmq/rabbitmq-server/script.rpm.sh | sudo bash

Verify that the /etc/yum.repos.d/rabbitmq_rabbitmq-server.repo file has been created.

6. Install the Elasticsearch public key. For example:

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

7. Create a Bash script named create_nginx_repo.sh with the following contents:

#!/bin/bash

echo '[nginx]' > /etc/yum.repos.d/nginx.repo

echo 'name=nginx repo' >> /etc/yum.repos.d/nginx.repo

echo 'baseurl=http://nginx.org/packages/OS/OSRELEASE/$basearch/' >> /etc/yum.repos.d/nginx.repo

echo 'gpgcheck=0' >> /etc/yum.repos.d/nginx.repo

echo 'enabled=1' >> /etc/yum.repos.d/nginx.repo

OS_VERSION=$(cat /etc/system-release)

case "$OS_VERSION" in

CentOS*release\ 7* )

sed -i -e 's/OS/centos/' -e 's/OSRELEASE/7/' /etc/yum.repos.d/nginx.repo;;

Red\ Hat*release\ 7* )

sed -i -e 's/OS/rhel/' -e 's/OSRELEASE/7/' /etc/yum.repos.d/nginx.repo;;

esac

8. Execute the create_nginx_repo.sh script to create the Nginx Yum repo. For example:

bash create_nginx_repo.sh

9. Refresh the local Yum repo cache and verify that the NSS and OpenSSL packages are up to date on your system. For example:

yum clean all

yum -y update nss openssl

10. Install Java 11:

yum -y install java-11-openjdk-headless-11.0.2.7 java-11-openjdk-11.0.2.7 java-11-openjdk-devel-11.0.2.7

11. Install the Extra Packages for Enterprise Linux (EPEL) Yum repository and enable the optional packages:

yum install https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

Verify that the /etc/yum.repos.d/epel.repo file has been created.

12. If the Operating System is RHEL, enable the 'extras' repos:

subscription-manager repos --enable "rhel-*-optional-rpms" --enable "rhel-*-extras-rpms" --enable "rhel-ha-for-rhel-*-server-rpms"

13. Install Percona on all servers that will house a database node. The script configures multiple nodes to run as a cluster. A single node is also supported. This command should be run on an internet-connected host in order to download the installer. If this host does not have internet access, download the script on a different host before copying it to this host. Substitute your "speedy" Yum repo user credentials:

cat > aiops_repo.sh << _EOF_

#!/bin/bash

clear

echo "Please provide access credentials for the 'speedy' yum repository in order to download the Percona setup script"

echo

read -p "AIOps Repository Username: " AIOPS_USER

export AIOPS_USER

read -p "AIOps Repository Password: " -s AIOPS_PASS

export AIOPS_PASS

curl -L -O https://\$AIOPS_USER:\$AIOPS_PASS@speedy.moogsoft.com/repo/aiops/install_percona_nodes.sh 2>/dev/null

echo

_EOF_

bash aiops_repo.sh;

Now run the script:

bash install_percona_nodes.sh

The script guides you through the installation process. To configure a single database node on the same server as Cisco Crosswork Situation Manager, use these settings:

— Configure Percona as "Primary".

— Do not set the server to "DB only".

— Set the first database node IP address to the server IP address.

— When prompted to enter the IP addresses of the second and third nodes, press Enter to skip these settings.

14. Set SELinux to permissive mode or disable it completely. For example, to set SELinux to permissive mode:

setenforce 0

If you want to disable SELinux at boot time, edit the file /etc/sysconfig/selinux.

After you have completed these steps, proceed with your installation or upgrade. See Upgrade Cisco Crosswork Situation Manager for the instructions relevant to your deployment.
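Before moving on, you may want to confirm that the repository files created in the steps above are in place. The following is a minimal sketch, assuming the file names used in this topic (aiops.repo, elasticsearch.repo, rabbitmq_rabbitmq-server.repo, nginx.repo, and epel.repo). The function name missing_repo_files is hypothetical.

```shell
# Hypothetical helper: print the name of each expected repository file that
# is missing from the given directory (default /etc/yum.repos.d).
# Returns non-zero if any file is missing.
missing_repo_files() (
    dir=${1:-/etc/yum.repos.d}
    status=0
    for f in aiops.repo elasticsearch.repo rabbitmq_rabbitmq-server.repo \
             nginx.repo epel.repo; do
        if [ ! -f "$dir/$f" ]; then
            echo "$f"
            status=1
        fi
    done
    exit "$status"
)
```

Running missing_repo_files with no arguments checks /etc/yum.repos.d and prints nothing when all five files exist.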

Cisco Crosswork Situation Manager v7.3.x - Offline RPM pre-installation steps

You must perform certain preparatory tasks before you install Cisco Crosswork Situation Manager v7.3.x.

Follow these steps if you have root access to the machine or machines on which you will install or upgrade Cisco Crosswork Situation Manager, but you cannot connect to Yum repositories outside your network from those machines.

For RPM pre-installation steps, see v7.3.x - RPM pre-installation steps.

For Tarball pre-installation steps, see v7.3.x - Tarball pre-installation steps.

Before you begin

Before you begin to prepare for the installation, verify the following:

· You have root access to the system where you plan to install Cisco Crosswork Situation Manager.

· You are familiar with the supported versions of third party software, as outlined in Cisco Crosswork Situation Manager 7.3.0 Supported Environments.

Download the installation files

Complete the following steps before you perform an offline RPM installation of Cisco Crosswork Situation Manager v7.3.x:

1. Download the two archives required for the offline installation, using the following links:

— The BASE repository containing the dependent packages to install for RHEL/CentOS 7:

https://speedy.moogsoft.com/offline/aiops/2019-10-03-1570143328-MoogsoftBASE7_offline_repo.tar.gz

— The ESR repository containing the standard RPMs and ancillary packages (Apache Tomcat, RabbitMQ, JRE, etc):

2. Copy the downloaded Tarball files to your offline system.

3. Download the Percona and dependency packages using cURL on an internet-connected host:

curl -L -O http://repo.percona.com/percona/yum/release/7/RPMS/x86_64/Percona-XtraDB-Cluster-shared-57-5.7.26-31.37.1.el7.x86_64.rpm;

curl -L -O http://repo.percona.com/percona/yum/release/7/RPMS/x86_64/Percona-XtraDB-Cluster-client-57-5.7.26-31.37.1.el7.x86_64.rpm;

curl -L -O http://repo.percona.com/percona/yum/release/7/RPMS/x86_64/Percona-XtraDB-Cluster-server-57-5.7.26-31.37.1.el7.x86_64.rpm;

curl -L -O http://repo.percona.com/percona/yum/release/7/RPMS/x86_64/Percona-XtraDB-Cluster-shared-compat-57-5.7.26-31.37.1.el7.x86_64.rpm;

curl -L -O http://repo.percona.com/percona/yum/release/7/RPMS/x86_64/percona-xtrabackup-24-2.4.15-1.el7.x86_64.rpm;
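The five download commands in the last step share a base URL and, for the XtraDB Cluster packages, a version suffix. As a sketch of how the same list could be generated rather than typed, the following function prints the five URLs; the function name percona_urls is hypothetical, and the versions are copied from the commands above.

```shell
# Hypothetical helper: print one download URL per Percona package listed in
# this topic. Package names and versions are copied from the curl commands.
percona_urls() (
    base=http://repo.percona.com/percona/yum/release/7/RPMS/x86_64
    pxc_suffix=57-5.7.26-31.37.1.el7.x86_64.rpm
    for part in shared client server shared-compat; do
        echo "$base/Percona-XtraDB-Cluster-$part-$pxc_suffix"
    done
    echo "$base/percona-xtrabackup-24-2.4.15-1.el7.x86_64.rpm"
)
```

You could then download the files with percona_urls | while read -r url; do curl -f -L -O "$url"; done, where the -f option makes cURL report HTTP errors instead of saving an error page.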

Prepare the local Yum repositories

Follow these steps to create local Yum repositories to house the installation packages. If you are running a distributed installation, perform these steps on each machine that will run Cisco Crosswork Situation Manager components.

1. Create two directories to house the repositories. For example:

sudo mkdir -p /media/localRPM/BASE/

sudo mkdir -p /media/localRPM/ESR/

2. Extract the two Tarball files into separate directories. For example:

tar xzf *-MoogsoftBASE7_offline_repo.tar.gz -C /media/localRPM/BASE/

tar xzf *-MoogsoftESR_7.3.0_offline_repo.tar.gz -C /media/localRPM/ESR/

3. Back up the existing /etc/yum.repos.d directory. For example:

mv /etc/yum.repos.d /etc/yum.repos.d-backup

4. Create an empty /etc/yum.repos.d directory. For example:

mkdir /etc/yum.repos.d

5. Create a local.repo file ready to contain the local repository details:

vi /etc/yum.repos.d/local.repo

6. Edit local.repo and configure the baseurl paths for BASE and ESR to point to your directories. For example:

[BASE]

name=MoogCentOS-$releasever - MoogRPM

baseurl=file:///media/localRPM/BASE/RHEL

gpgcheck=0

enabled=1

[ESR]

name=MoogCentOS-$releasever - MoogRPM

baseurl=file:///media/localRPM/ESR/RHEL

gpgcheck=0

enabled=1

7. Clean the Yum cache:

yum clean all

8. Verify that Yum can detect the newly created repositories. For example:

yum info "moogsoft-*"

Available Packages

Arch : x86_64

Version : 7.3.0

Release : XYZ

Size : 76 M

Repo : ESR

Summary : Algorithmic Intelligence for IT Operations

URL : https://www.moogsoft.com

License : Proprietary

Description : Moogsoft AIOps (7.3.0) - Build: XYZ - (Revision: XYZ)

The results should include the following packages:

Name : moogsoft-db

Name : moogsoft-integrations

Name : moogsoft-integrations-ui

Name : moogsoft-mooms

Name : moogsoft-search

Name : moogsoft-server

Name : moogsoft-ui

Name : moogsoft-utils

Name : moogsoft-common

9. Install Percona on all servers that will house a database node. The script configures multiple nodes to run as a cluster. A single node is also supported. This command should be run on an internet-connected host in order to download the installer. If this host does not have internet access, download the script on a different host before copying it to this host.

Ensure that every host on which you will install Percona has the Percona RPMs (detailed above) in the current directory and that the offline Yum repository has been deployed.

Substitute your "speedy" Yum repo user credentials:

cat > aiops_repo.sh << _EOF_

#!/bin/bash

clear

echo "Please provide access credentials for the 'speedy' yum repository in order to download the Percona setup script"

echo

read -p "AIOps Repository Username: " AIOPS_USER

export AIOPS_USER

read -p "AIOps Repository Password: " -s AIOPS_PASS

export AIOPS_PASS

curl -L -O https://\$AIOPS_USER:\$AIOPS_PASS@speedy.moogsoft.com/repo/aiops/install_percona_nodes.sh 2>/dev/null

echo

_EOF_

bash aiops_repo.sh;

Now run the script:

bash install_percona_nodes.sh;

The script guides you through the installation process. To configure a single database node on the same server as Cisco Crosswork Situation Manager, use these settings:

— Configure Percona as "Primary".

— Do not set the server to "DB only".

— Set the first database node IP address to the server IP address.

— When prompted to enter the IP addresses of the second and third nodes, press Enter to skip these settings.

10. Install Java 11:

yum -y install java-11-openjdk-headless-11.0.2.7 java-11-openjdk-11.0.2.7 java-11-openjdk-devel-11.0.2.7

11. Set SELinux to permissive mode or disable it completely. For example, to set SELinux to permissive mode:

setenforce 0

If you want to disable SELinux at boot time, edit the file /etc/sysconfig/selinux.

12. Optional: GPG key validation of the RPMs

To validate the RPMs before installation, follow these steps:

a. Download the key from this site:

https://keys.openpgp.org/vks/v1/by-fingerprint/2529C94A49E42429EDAAADAEC7A2253BFC50512A

b. Copy the key to the server onto which the RPMs or tarball will be installed (it will be an .asc file)

c. Import the key:

gpg --import 2529C94A49E42429EDAAADAEC7A2253BFC50512A.asc

d. Download all the '7.3.0' RPMs and .sig files from the speedy yum repository using a browser, providing speedy credentials when asked by the browser:

https://<speedyusername>:<speedypassword>@speedy.moogsoft.com/repo/aiops/esr/x86_64

e. Move the RPMs and .sig files into the same folder. For example, /tmp, as used in the example below.

f. Copy the following code into a bash terminal and run it to perform the validation:

while read -r RPM

do

echo "Current RPM: $RPM"

gpg --verify "${RPM}.sig" "${RPM}" 2>&1

done < <(find /media/localRPM/ESR/RHEL/ -name '*.rpm')

g. Confirm that all the commands for each RPM report:

Good signature from "Moogsoft Information Security Team <security@moogsoft.com>"

Your local Yum repositories are now ready. Proceed with your offline installation or upgrade. See Upgrade Cisco Crosswork Situation Manager for the instructions relevant to your deployment.
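If you ran the optional GPG validation and saved its output, reading through it by eye is error-prone when there are many packages. The following is a minimal sketch that lists any RPM whose block of output lacks a "Good signature from" line. It assumes the "Current RPM: <path>" lines printed by the validation loop in this topic, and the function name report_bad_signatures is hypothetical.

```shell
# Hypothetical helper: read the validation loop's output on stdin and print
# the path of every RPM that was not followed by a "Good signature from" line.
report_bad_signatures() {
    awk '
        /^Current RPM: / {
            if (rpm != "" && !good) print rpm
            rpm = substr($0, 14)   # text after the "Current RPM: " prefix
            good = 0
            next
        }
        /Good signature from/ { good = 1 }
        END { if (rpm != "" && !good) print rpm }
    '
}
```

An empty result means every package in the log reported a good signature.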

Cisco Crosswork Situation Manager v7.3.x - Tarball pre-installation steps

You must perform certain preparatory tasks before you install Cisco Crosswork Situation Manager v7.3.x.

Follow these steps if you do not have root access to the machine or machines on which you will install Cisco Crosswork Situation Manager.

For RPM pre-installation steps, see v7.3.x - RPM pre-installation steps.

For Offline RPM pre-installation steps, see v7.3.x - Offline RPM pre-installation steps.

Before you begin

Before you begin to prepare for the installation, verify the following:

· You have a CentOS 7 / RHEL 7 system on which to install Cisco Crosswork Situation Manager.

· You have removed any existing environment variables such as $MOOGSOFT_HOME from previous installations.

· You have identified the Linux user you will use to perform the installation.

· Optional: Ask an administrator to set the ulimit maximum for open files and max user processes for the installation user. This requires root privileges. For example, on a busy system you could increase both to 65535.

· You have selected a working directory in which to run the installation. The installation directory requires a minimum of 7 GB, and more if you store the Percona database in the installation directory, to allow for database and Elasticsearch artefacts and log file growth.

· You have credentials to connect to the Cisco "speedy" Yum repository.

· You are familiar with the supported versions of third party software, as outlined in Cisco Crosswork Situation Manager 7.3.0 Supported Environments.

· Ports 8443 and 8080 are open on your server.

· You are running OpenSSL v1.0.2 or later.
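Some of the prerequisites above can be checked from the command line. The following is a minimal sketch with two hypothetical helpers: version_ge compares dotted version strings and has_free_gb checks free disk space. It assumes GNU sort (for the -V option) and a POSIX df, both of which are assumptions about your host rather than requirements stated in this guide.

```shell
# Hypothetical helper: succeed when version $1 is greater than or equal to
# version $2. Relies on "sort -V" from GNU coreutils.
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: succeed when the file system that holds directory $1
# has at least $2 GB available.
has_free_gb() (
    avail_kb=$(df -Pk "$1" | awk 'NR == 2 { print $4 }')
    [ "$avail_kb" -ge $(( $2 * 1024 * 1024 )) ]
)
```

For example, version_ge "$(openssl version | awk '{ print $2 }')" 1.0.2 checks the OpenSSL requirement and has_free_gb ~/install 7 checks the disk space minimum, where ~/install stands in for your chosen working directory. The current open file and process limits for the installation user are shown by ulimit -n and ulimit -u.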

Pre-installation steps

Before you perform a Tarball installation of Cisco Crosswork Situation Manager v7.3.x, complete the following tasks on the server on which you will install Cisco Crosswork Situation Manager:

1. Install Kernel Asynchronous I/O (AIO) Support for Linux and libgfortran. For example:

mkdir -p ~/install/libraries/ && cd ~/install/libraries/

for PACKAGE in libquadmath-4.8.5-39.el7.x86_64.rpm libgfortran-4.8.5-39.el7.x86_64.rpm; do

curl -L -O http://mirror.centos.org/centos/7/os/x86_64/Packages/$PACKAGE && \

rpm2cpio $PACKAGE | cpio -idmv && \

rm -f $PACKAGE

done

echo "export LD_LIBRARY_PATH=$(pwd)/usr/lib64:\$LD_LIBRARY_PATH" >> ~/.bashrc && \

source ~/.bashrc

cd -

2. Install Percona dependencies. This step requires root privileges:

curl -L -O http://repo.percona.com/percona/yum/release/7/RPMS/x86_64/qpress-11-1.el7.x86_64.rpm;

curl -L -O http://mirrors.vooservers.com/centos/7.6.1810/extras/x86_64/Packages/libev-4.15-7.el7.x86_64.rpm;

curl -L -O http://mirrors.clouvider.net/CentOS/7.6.1810/updates/x86_64/Packages/perl-5.16.3-294.el7_6.x86_64.rpm;

curl -L -O http://mirrors.clouvider.net/CentOS/7.6.1810/updates/x86_64/Packages/perl-Pod-Escapes-1.04-294.el7_6.noarch.rpm;

curl -L -O http://mirrors.clouvider.net/CentOS/7.6.1810/updates/x86_64/Packages/perl-libs-5.16.3-294.el7_6.x86_64.rpm;

curl -L -O http://mirrors.clouvider.net/CentOS/7.6.1810/updates/x86_64/Packages/perl-macros-5.16.3-294.el7_6.x86_64.rpm;

curl -L -O http://centos.serverspace.co.uk/centos/7.6.1810/os/x86_64/Packages/xinetd-2.3.15-13.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Carp-1.26-244.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Compress-Raw-Bzip2-2.061-3.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Compress-Raw-Zlib-2.061-4.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-DBD-MySQL-4.023-6.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-DBI-1.627-4.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Data-Dumper-2.145-3.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Digest-1.17-245.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Digest-MD5-2.52-3.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Encode-2.51-7.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Exporter-5.68-3.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-File-Path-2.09-2.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-File-Temp-0.23.01-3.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Filter-1.49-3.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Getopt-Long-2.40-3.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-HTTP-Tiny-0.033-3.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-IO-Compress-2.061-2.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Net-Daemon-0.48-5.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-PathTools-3.40-5.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-PlRPC-0.2020-14.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Pod-Perldoc-3.20-4.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Pod-Simple-3.28-4.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Pod-Usage-1.63-3.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Scalar-List-Utils-1.27-248.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Socket-2.010-4.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Storable-2.45-3.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Text-ParseWords-3.29-4.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Time-HiRes-1.9725-3.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-Time-Local-1.2300-2.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-constant-1.27-2.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-parent-0.225-244.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-podlators-2.5.1-3.el7.noarch.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-threads-1.87-4.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/perl-threads-shared-1.43-6.el7.x86_64.rpm;

curl -L -O http://mirror.as29550.net/mirror.centos.org/7.6.1810/os/x86_64/Packages/socat-1.7.3.2-2.el7.x86_64.rpm;

curl -L -O http://mirror.sov.uk.goscomb.net/centos/7.6.1810/updates/x86_64/Packages/rsync-3.1.2-6.el7_6.1.x86_64.rpm;

curl -L -O http://mirror.sov.uk.goscomb.net/centos/7.6.1810/os/x86_64/Packages/lsof-4.87-6.el7.x86_64.rpm

yum install *.rpm

3. Install Percona on all servers that will house a database node. The script configures multiple nodes to run as a cluster. A single node is also supported. Substitute your "speedy" Yum repo user credentials:

cat > aiops_repo.sh << _EOF_

#!/bin/bash

clear

echo "Please provide access credentials for the 'speedy' yum repository in order to run the Percona setup script"

echo

read -p "AIOps Repository Username: " AIOPS_USER

export AIOPS_USER

read -p "AIOps Repository Password: " -s AIOPS_PASS

export AIOPS_PASS

curl -L -O https://\$AIOPS_USER:\$AIOPS_PASS@speedy.moogsoft.com/repo/aiops/install_percona_nodes_tarball.sh 2>/dev/null

echo

_EOF_

bash aiops_repo.sh

Now run the script:

bash install_percona_nodes_tarball.sh

The script guides you through the installation process. To configure a single database node on the same server as Cisco Crosswork Situation Manager, use these settings:

— Configure Percona as "Primary".

— Do not set the server to "DB only".

— Set the first database node IP address to the server IP address.

— When prompted to enter the IP addresses of the second and third nodes, press Enter to skip these settings.

4. Execute the .bashrc file:

source ~/.bashrc

The pre-installation steps are now complete. To continue with the Cisco Crosswork Situation Manager installation, see v7.3.x - Tarball installation.
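Because the libraries extracted in step 1 are found through LD_LIBRARY_PATH, it can be worth confirming that the variable contains the extraction directory in the shell you will install from. The following is a minimal sketch; the function name path_contains is hypothetical.

```shell
# Hypothetical helper: succeed when directory $2 is one of the
# colon-separated entries in the list $1.
path_contains() {
    case ":$1:" in
        *":$2:"*) return 0 ;;
        *)        return 1 ;;
    esac
}
```

For example, path_contains "$LD_LIBRARY_PATH" "$HOME/install/libraries/usr/lib64" || echo "Run: source ~/.bashrc" reports when the current shell has not picked up the setting, assuming you used the example directory from step 1.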

RPM installation

This topic describes how to install Cisco Crosswork Situation Manager v7.3.x on a single host.

Follow these steps if you have root access to the machine or machines on which you will install Cisco Crosswork Situation Manager, and you can connect to Yum repositories outside your network from those machines.

To install Cisco Crosswork Situation Manager in a highly available distributed environment, see Distributed HA Installation.

For Tarball installation steps, see v7.3.x - Tarball installation.

Before you begin

Before you start to install Cisco Crosswork Situation Manager, complete all steps in one of the following documents:

· v7.3.x - RPM pre-installation steps: If you have root access to the machine or machines on which you will install Cisco Crosswork Situation Manager, and you can connect to Yum repositories outside your network from those machines.

· v7.3.x - Offline RPM pre-installation steps: If you have root access to the machine or machines on which you will install or upgrade Cisco Crosswork Situation Manager, but you cannot connect to Yum repositories outside your network from those machines.

Install Cisco Crosswork Situation Manager

To complete an RPM installation of Cisco Crosswork Situation Manager v7.3.x, perform the following steps:

1. Download and install the Cisco Crosswork Situation Manager RPM packages, using one of the following methods according to your deployment type:

— If you are performing an RPM installation:

yum -y install moogsoft-server-7.3.0 \

moogsoft-db-7.3.0 \

moogsoft-utils-7.3.0 \

moogsoft-search-7.3.0 \

moogsoft-ui-7.3.0 \

moogsoft-common-7.3.0 \

moogsoft-mooms-7.3.0 \

moogsoft-integrations-7.3.0 \

moogsoft-integrations-ui-7.3.0

— If you are performing an offline RPM installation, navigate to the location where you copied the RPM files and install them:

yum install *.rpm

2. Edit your ~/.bashrc file to contain the following lines:

export MOOGSOFT_HOME=/usr/share/moogsoft

export APPSERVER_HOME=/usr/share/apache-tomcat

export JAVA_HOME=/usr/java/latest

export PATH=$PATH:$MOOGSOFT_HOME/bin:$MOOGSOFT_HOME/bin/utils
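After editing ~/.bashrc, open a new shell or run source ~/.bashrc, then confirm that the variables are set before you initialize the system. The following is a minimal sketch; the function name missing_moog_env is hypothetical, and it checks only that the three variables are non-empty, not that the paths exist.

```shell
# Hypothetical helper: print the name of each required variable that is
# unset or empty. Returns non-zero if any is missing.
missing_moog_env() (
    status=0
    for name in MOOGSOFT_HOME APPSERVER_HOME JAVA_HOME; do
        # Indirect lookup of the variable named in $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "$name"
            status=1
        fi
    done
    exit "$status"
)
```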

Initialize Cisco Crosswork Situation Manager

When the installation process is complete, initialize Cisco Crosswork Situation Manager as follows:

1. Run the initialization script moog_init.sh (replace <zone_name> with your desired RabbitMQ VHOST):

$MOOGSOFT_HOME/bin/utils/moog_init.sh -qI <zone_name> -u root

The script prompts you to accept the End User License Agreement (EULA) and guides you through the initialization process.

The zone_name sets up a virtual host for the Message Bus. If you have multiple systems sharing the same bus, set a different zone name for each.

2. If you are deploying more than one database, configure HA Proxy to load-balance the database nodes. The following script requires root privileges. Run this script on any host running any Cisco Crosswork Situation Manager components. Provide your "speedy" Yum repo user credentials when prompted:

cat > aiops_repo.sh << _EOF_

#!/bin/bash

clear

echo "Please provide access credentials for the 'speedy' yum repository in order to run the haproxy setup script"

echo

read -p "AIOps Repository Username: " AIOPS_USER

export AIOPS_USER

read -p "AIOps Repository Password: " -s AIOPS_PASS

export AIOPS_PASS

echo

bash <(curl -s -k https://\$AIOPS_USER:\$AIOPS_PASS@speedy.moogsoft.com/repo/aiops/haproxy_installer.sh)

_EOF_

bash aiops_repo.sh

3. Restart Moogfarmd and Apache Tomcat:

service moogfarmd restart

Run an unattended installation

The moog_init script provides the ability to run a 'quiet' installation and to automatically accept the terms of the EULA. This means that you can write a bash script to automatically execute both the installation script and the initialization script on the same host. For example:

~/moogsoft-aiops-install-7.3.0.sh \

-d ~/moogsoft &&

~/moogsoft/bin/utils/moog_init.sh \

-qI MoogsoftAIOps -p MySQLpasswd -u root --accept-eula

Run moog_init.sh -h for a description of all options.

Verify the installation

To verify that the installation has completed successfully, follow the steps outlined in Validate the installation.

Change passwords for default users

When the installation is complete, it is critical that you change the passwords for the default users created during the installation process. See Change passwords for default users for more information.

Tarball installation

This topic describes how to install Cisco Crosswork Situation Manager on a single host using the tarball archives, previously known as the non-root install.

Follow these steps if you do not have root access to the machine or machines on which you will install Cisco Crosswork Situation Manager.

To install Cisco Crosswork Situation Manager in a highly available distributed environment, see Distributed HA Installation.

For RPM installation steps, see Prepare for an RPM Installation.

Before you begin

Before you start to install Cisco Crosswork Situation Manager, complete all steps in the following document:

v7.3.x - Tarball pre-installation steps.

Install Cisco Crosswork Situation Manager

To complete a Tarball installation of Cisco Crosswork Situation Manager v7.3.x, perform the following steps:

1. Download the tarball installer, using one of the following options:

— Download via a web browser from https://speedy.moogsoft.com/installer and use the Yum user credentials provided by Cisco support.

— Use the following cURL command, substituting your "speedy" Yum repo user credentials:

curl -L -O "https://<username>:<password>@speedy.moogsoft.com/installer/moogsoft-aiops-7.3.0.tgz"

2. Optional: GPG key validation of the Tarball

To validate the tarball before installation, follow these steps:

a. Download the key from this site:

https://keys.openpgp.org/vks/v1/by-fingerprint/2529C94A49E42429EDAAADAEC7A2253BFC50512A

b. Copy the key to the server onto which the Tarball will be installed (it will be a .asc file)

c. Import the key:

gpg --import 2529C94A49E42429EDAAADAEC7A2253BFC50512A.asc

d. Download the moogsoft-aiops-7.3.0.tgz.sig file from the same 'speedy' path:

curl -L -O "https://<username>:<password>@speedy.moogsoft.com/installer/moogsoft-aiops-7.3.0.tgz.sig"

e. Ensure the .tgz and .sig files are in the same folder, then run the following command in a bash terminal to perform the validation:

gpg --verify moogsoft-aiops-7.3.0.tgz.sig moogsoft-aiops-7.3.0.tgz

f. Confirm that the report states:

Good signature from "Moogsoft Information Security Team <security@moogsoft.com>"
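If you script the validation, the same confirmation can be automated by searching the gpg report for the expected line. The following is a minimal sketch; has_good_signature is a hypothetical helper, and it assumes gpg prints its messages in English.

```shell
# Hypothetical helper: succeed only if the gpg report read from stdin
# contains a good-signature line for the expected signer address.
has_good_signature() {
    grep -q 'Good signature from .*<security@moogsoft[.]com>'
}

# Usage: gpg writes its report to stderr, so merge it into stdout first.
# LC_ALL=C keeps the message text in English.
# LC_ALL=C gpg --verify moogsoft-aiops-7.3.0.tgz.sig moogsoft-aiops-7.3.0.tgz 2>&1 \
#     | has_good_signature || echo "Signature check failed"
```

A matching line shows that the signature is valid for the imported key. It does not by itself establish that the key is trusted, so compare the fingerprint with the one in the download URL as well.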

3. Unzip and untar the Cisco Crosswork Situation Manager distribution archive in your working directory:

tar -xf moogsoft-aiops-7.3.0.tgz

The distribution archive contains the following files:

— A README.txt file

— The installation script: moogsoft-aiops-install-7.3.0.sh

— The distribution archive: moogsoft-aiops-dist-7.3.0.tgz

4. Execute the installation script moogsoft-aiops-install-7.3.0.sh in your working directory to install Cisco Crosswork Situation Manager.

bash moogsoft-aiops-install-7.3.0.sh

The script guides you through the installation process. The installation directory defaults to <working-directory>/Cisco. You can change this if you wish.

5. Set the $MOOGSOFT_HOME environment variable to point to your installation directory, and add $MOOGSOFT_HOME/bin/utils to the path. For example:

echo "export MOOGSOFT_HOME=~/moogsoft" >> ~/.bashrc

echo "export PATH=\$PATH:\$MOOGSOFT_HOME/bin/utils" >> ~/.bashrc && \

source ~/.bashrc
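Running the echo commands above more than once appends duplicate lines to ~/.bashrc. If you expect to re-run the setup, an idempotent append avoids that. The following is a minimal sketch; append_once is a hypothetical helper name.

```shell
# Hypothetical helper: append a line to a file only if that exact line
# is not already present, so repeated runs do not duplicate exports.
append_once() {
    local line=$1 file=$2
    touch "$file"
    # -x matches whole lines, -F treats the text literally, -q suppresses output.
    grep -qxF -e "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage: single quotes keep $PATH and $MOOGSOFT_HOME unexpanded in the file.
# append_once 'export MOOGSOFT_HOME=~/moogsoft' ~/.bashrc
# append_once 'export PATH=$PATH:$MOOGSOFT_HOME/bin/utils' ~/.bashrc
```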

Initialize Cisco Crosswork Situation Manager

When the installation process is complete, initialize Cisco Crosswork Situation Manager as follows:

1. Configure the Toolrunner to execute locally by setting "execute_locally: true" in $MOOGSOFT_HOME/config/servlets.conf:

sed -i 's/# execute_locally: false,/,execute_locally: true/1' $MOOGSOFT_HOME/config/servlets.conf
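The sed command edits servlets.conf in place and prints nothing, so it can be worth confirming the change before you continue. The following is a minimal sketch; toolrunner_is_local is a hypothetical helper, and its pattern assumes the entry looks like the text the sed expression produces.

```shell
# Hypothetical helper: succeed if the given file has an active (uncommented)
# "execute_locally: true" entry. The layout is inferred from the sed
# expression above, not from a documented schema.
toolrunner_is_local() {
    grep -Eq '^[^#]*execute_locally[[:space:]]*:[[:space:]]*true' "$1"
}

# Usage:
# toolrunner_is_local "$MOOGSOFT_HOME/config/servlets.conf" \
#     && echo "Toolrunner is set to execute locally"
```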

2. Run the initialization script moog_init:

$MOOGSOFT_HOME/bin/utils/moog_init.sh -qI <zone_name> -u root

The script prompts you to accept the End User License Agreement (EULA) and guides you through the initialization process.

The zone_name sets up a virtual host for the Message Bus. If you have multiple systems sharing the same bus, set a different zone name for each.

To set processes (Percona, RabbitMQ, Moogfarmd, and so on) to restart when the system is rebooted, use the -k flag. For example:

$MOOGSOFT_HOME/bin/utils/moog_init.sh -k

For more information see Configure Services to Restart.

3. If you are deploying more than one database, configure HA Proxy to load-balance the database nodes. The following script requires root privileges. Run this script on any host running any Cisco Crosswork Situation Manager components. Provide your "speedy" Yum repo user credentials when prompted:

cat > aiops_repo.sh << _EOF_

#!/bin/bash

clear

echo "Please provide access credentials for the 'speedy' yum repository in order to download the Percona setup script"

echo

read -p "AIOps Repository Username: " AIOPS_USER

export AIOPS_USER

read -p "AIOps Repository Password: " -s AIOPS_PASS

export AIOPS_PASS

curl -L -O https://\$AIOPS_USER:\$AIOPS_PASS@speedy.moogsoft.com/repo/aiops/install_percona_nodes_tarball.sh 2>/dev/null

echo

_EOF_

bash aiops_repo.sh;

Then run the script:

bash install_percona_nodes_tarball.sh

4. Restart Moogfarmd and Apache Tomcat:

$MOOGSOFT_HOME/bin/utils/process_cntl moog_farmd restart

$MOOGSOFT_HOME/bin/utils/process_cntl apache-tomcat restart

Run an unattended installation

The moog_init script provides the ability to run a 'quiet' installation and to automatically accept the terms of the EULA. This means that you can write a bash script to automatically execute both the installation script and the initialization script on the same host. For example:

~/moogsoft-aiops-install-7.3.0.sh \

-d ~/moogsoft &&

~/moogsoft/bin/utils/moog_init.sh \

-qI MoogsoftAIOps -p MySQLpasswd -u root --accept-eula

Run moog_init.sh -h for a description of all options.

Verify the installation

To verify that the installation has completed successfully, follow the steps outlined in Validate the installation.

Change passwords for default users

When the installation is complete, it is critical that you change the passwords for the default users created during the installation process. See Change passwords for default users for more information.

Distributed HA Installation

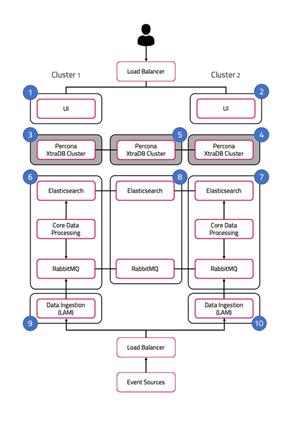

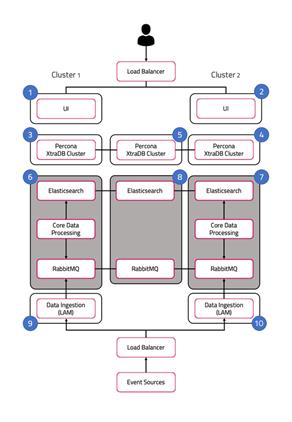

This topic describes the installation steps for a fully distributed system running with HA, as illustrated in the diagrams above.

Note that prior to performing a distributed HA installation, you must complete Pre-installation for Cisco Crosswork Situation Manager v7.3.x.

To view a list of connectivity ports for a fully distributed HA architecture see Distributed HA system Firewall.

The installation assumes an HA configuration across two clusters called Cluster1 and Cluster2.

Note that both Core instances and polling LAMs are part of the same respective Cisco Crosswork Situation Manager process group since they run in an active / passive configuration with auto-failover enabled.

UI stacks, as well as receiving LAMs, should run as part of two distinct Cisco Crosswork Situation Manager process groups as both instances in the HA pair are active.

Install a fully distributed HA system

1. Set up Percona XtraDB Cluster. See Set Up the Database for HA for more information.

2. Set up Core 1 and 2 roles. See Set Up the Core Role for HA for more information.

3. Set up UI 1 and 2 roles. See Set Up the User Interface Role for HA for more information.

4. Set up LAM 1 and 2 roles. See Install without Caching LAM for more information.

5. (Optional) Set up Caching LAM 1 and 2 roles.

Install a minimally distributed HA system

For any other minimally distributed HA setup, follow the same high-level installation steps as described above.

The instructions list the steps for installing a specific role. If you collocate multiple roles on the same server in a minimally distributed installation, you may need to run several sets of instructions on that server. Where the steps overlap, perform them only once. For instance, if you collocate the Core 1 and UI 1 roles, you only need to configure HA Proxy once.

Distributed HA system Firewall

Connectivity within a fully distributed HA architecture:

| Source | Destination | Ports | Bi-directional |
| --- | --- | --- | --- |
| UI 1, UI 2 | Core 1, Core 2 | 5672, 9200 | - |
| UI 1, UI 2 | RedServ | 5672, 9200 | - |
| UI 1, UI 2 | DB 1, DB 2, DB 3 | 3306, 9198 | - |
| Core 1 | Core 2 | 5701, 9300, 4369, 5672 | Yes |
| Core 1, Core 2 | RedServ | 9300, 4369, 5672 | Yes |
| Core 1, Core 2 | DB 1, DB 2, DB 3 | 3306, 9198 | - |
| LAM 1, LAM 2 | Core 1, Core 2, RedServ | 5672 | - |
| LAM 1, LAM 2 | DB 1, DB 2, DB 3 | 3306, 9198 | - |
| DB 1 | DB 2, DB 3 | 3306, 4567, 4444, 4568 | Yes |

If you change any of the default ports, substitute the new values in the table above. The ports are used as follows:

| Port | Purpose |
| --- | --- |
| 9200 | Used for inbound Elasticsearch REST API |
| 9300 | Used for Elastic nodes communication within a cluster |
| 5672 | Access to mooms bus (RabbitMQ) |
| 15672 | Access to mooms (RabbitMQ) console |
| 4369 | Required for mooms (RabbitMQ) cluster |
| 5701 | Required for Hazelcast cluster |
| 8091 | Access the Hazelcast cluster info via Hazelcast's |
| 3306 | Regular MySQL port |
| 4567 | For group communication in Percona XtraDB Cluster |
| 4444 | For State Snapshot Transfer in Percona XtraDB Cluster |
| 4568 | For Incremental State Transfer in Percona XtraDB Cluster |
| 9198 | Allows HAProxy to check the node's Percona XtraDB Cluster status via http |
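How you open these ports depends on the firewall in use on each host. As a minimal sketch, assuming firewalld manages the host firewall, the following hypothetical helper prints the firewall-cmd commands for a list of TCP ports so that you can review them before running them as root. The helper name print_firewall_cmds is illustrative only.

```shell
# Hypothetical helper: print (not run) the firewalld commands that would
# open each TCP port given as an argument. Assumes firewalld is in use.
print_firewall_cmds() {
    local port
    for port in "$@"; do
        case $port in
            # Reject empty or non-numeric arguments.
            ''|*[!0-9]*) echo "skipping invalid port: $port" >&2; continue ;;
        esac
        echo "firewall-cmd --permanent --add-port=${port}/tcp"
    done
    echo "firewall-cmd --reload"
}

# Example: the Percona XtraDB Cluster ports listed above, for a DB node.
print_firewall_cmds 3306 4567 4444 4568
```

Printing the commands instead of executing them keeps the sketch safe to run on any host. Restricting each rule to the specific source hosts in the connectivity table would be a stricter alternative.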

See Distributed HA Installation for the full installation steps for a fully distributed system running with HA.

Set Up the Database for HA

For HA, the Cisco Crosswork Situation Manager database layer uses the Percona XtraDB Cluster mechanism.

See Database Strategy for more information about the supported database platform.

HA architecture

In our distributed HA installation, the database components are installed on servers 3, 4, and 5:

The roles are installed as follows:

Server 3: DB 1.

Server 4: DB 2.

Server 5: DB 3.

Fully distributed installation

See Distributed HA Installation for a reference diagram and steps to achieve a fully distributed installation.

Minimally distributed installation