Cisco Solution for EMC VSPEX Microsoft Fast Track 3.0

Table of Contents

About Cisco Validated Design (CVD) Program

Cisco Solution for EMC VSPEX Microsoft Fast Track 3.0

Benefits of Cisco Unified Computing System

Benefits of EMC VNXe3300 Storage Array

Benefits of Microsoft Private Cloud Fast Track Small Implementation

Active Directory Domain Services

Install the Remote Server Administration Toolkit (RSAT) on the Configuration Workstation

Cisco Unified Computing System Deployment Procedure

Initial Cisco UCS Configuration

Scripted Configuration for Fast Track

Initial EMC VNXe3300 Configuration

Cisco UCS Service Profile Creation

Configure MPIO on Windows Server 2012

Configure the iSCSI Sessions to the VNXe

Validate the Host iSCSI Configuration

Configuration of Hyper-V Failover Cluster

Create Additional Virtual Machines

Appendix A: PowerShell Scripts

Example VNXe Unisphere CLI Commands to Change the MTU size on the VNXe

Example Script to Configure Maximum Transmission Unit (MTU) Size on Windows Server 2012

Sample mtu.txt File Used for Input with the MTU Script

Example Script to Configure MPIO

Example Script to Configure iSCSI Sessions on Windows Server 2012

Example Script to Create iSCSI LUNs Using ESI PowerShell

Sample CFG_Storage.txt File Used for Input with the LUN Creation Script

Example Script to Mask iSCSI LUNs to Hosts Using ESI PowerShell

Sample CFG_Access.txt File Used for Input with the LUN Masking Script

Cisco Solution for EMC VSPEX Microsoft Fast Track 3.0

Microsoft Hyper-V Small Implementation

Last Updated: September 4, 2013

Building Architectures to Solve Business Problems

About the Authors

Tim Cerling, Technical Marketing Engineer, Cisco

Tim Cerling is a Technical Marketing Engineer with Cisco's Datacenter Group, focusing on delivering customer-driven solutions on Microsoft Hyper-V and System Center products. Tim has been in the IT business since 1979. He started working with Windows NT 3.5 on the DEC Alpha product line during his 19-year tenure with DEC, and he has continued working with Windows Server technologies since then with Compaq, Microsoft, and now Cisco. During his twelve years as a Windows Server specialist at Microsoft, he co-authored a book on Microsoft virtualization technologies, Mastering Microsoft Virtualization. Tim holds a BA in Computer Science from the University of Iowa.

Acknowledgments

For their support and contribution to the design, validation, and creation of this Cisco Validated Design, we would like to thank:

•

Mike Mankovsky—Cisco

•

Mike McGhee—EMC

•

Txomin Barturen—EMC

About Cisco Validated Design (CVD) Program

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information visit www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, IronPort, the IronPort logo, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2013 Cisco Systems, Inc. All rights reserved

Cisco Solution for EMC VSPEX Microsoft Fast Track 3.0

Executive Summary

Private cloud technologies have proven themselves in large data centers and hosting organizations. The ability to quickly deploy new virtual machines, change virtual machine configurations, live migrate virtual machines to different hosts before performing maintenance on physical components, and similar capabilities have cut operational expenses.

These benefits have been harder to attain in smaller configurations. Installing cloud technologies often requires purchasing a large management infrastructure to provide the benefits listed above. With the improved built-in management capabilities of Windows Server 2012, the improved Hyper-V that ships with it, and the Cisco UCS PowerTool PowerShell module, it is now possible to bring some of the benefits of cloud technologies to small and medium businesses or to remote offices of larger businesses.

This guide provides the steps necessary to configure a Microsoft Fast Track Small Implementation cloud built on EMC VSPEX, which is built on Cisco Unified Computing System and EMC VNXe technologies.

Benefits of Cisco Unified Computing System

Cisco Unified Computing System is the first converged data center platform that combines industry-standard, x86-architecture servers with networking and storage access into a single converged system. The system is entirely programmable using unified, model-based management to simplify and speed deployment of enterprise-class applications and services running in bare-metal, virtualized, and cloud computing environments.

The system's x86-architecture rack-mount and blade servers are powered by Intel Xeon processors. These industry-standard servers deliver world-record performance to power mission-critical workloads. Cisco servers, combined with a simplified, converged architecture, drive better IT productivity and superior price/performance for lower total cost of ownership (TCO). Building on Cisco's strength in enterprise networking, Cisco Unified Computing System is integrated with a standards-based, high-bandwidth, low-latency, virtualization-aware unified fabric. The system is wired once to support the desired bandwidth and carries all Internet protocol, storage, inter-process communication, and virtual machine traffic with security isolation, visibility, and control equivalent to physical networks. The system meets the bandwidth demands of today's multicore processors, eliminates costly redundancy, and increases workload agility, reliability, and performance.

Cisco Unified Computing System is designed from the ground up to be programmable and self-integrating. A server's entire hardware stack, ranging from server firmware and settings to network profiles, is configured through model-based management. With Cisco virtual interface cards, even the number and type of I/O interfaces is programmed dynamically, making every server ready to power any workload at any time. With model-based management, administrators manipulate a model of a desired system configuration, associate a model's service profile with hardware resources, and the system configures itself to match the model. This automation speeds provisioning and workload migration with accurate and rapid scalability. The result is increased IT staff productivity, improved compliance, and reduced risk of failures due to inconsistent configurations.

The power of this programmability is demonstrated in how quickly this configuration can be deployed. Cisco UCS PowerTool is used to configure the converged fabric and define the pools, templates, and profiles needed to implement a small business or branch implementation of Hyper-V. After a text file is edited to define customer-specific values, a PowerShell script is run. This takes a few minutes instead of a couple of hours working in front of a GUI. It ensures consistent deployments without requiring a high level of Cisco UCS expertise.

Cisco Fabric Extender technology reduces the number of system components to purchase, configure, manage, and maintain by condensing three network layers into one. It eliminates both blade server and hypervisor-based switches by connecting fabric interconnect ports directly to individual blade servers and virtual machines. Virtual networks are now managed exactly as physical networks are, but with massive scalability. This represents a radical simplification over traditional systems, reducing capital and operating costs while increasing business agility, simplifying and speeding deployment, and improving performance.

Cisco Unified Computing System helps organizations go beyond efficiency: it helps them become more effective through technologies that breed simplicity rather than complexity. The result is flexible, agile, high-performance, self-integrating information technology, reduced staff costs with increased uptime through automation, and more rapid return on investment.

Benefits of EMC VNXe3300 Storage Array

The EMC VNXe series redefines networked storage for the small business to small enterprise user, delivering an unequaled combination of features, simplicity, and efficiency. These unified storage systems provide true storage consolidation capability with seamless management and a unique application-driven approach that eliminates the boundaries between applications and their storage. VNXe systems are uniquely capable of delivering unified IP storage for NAS and iSCSI while simplifying operations and reducing management overhead. Although only iSCSI is defined for the Fast Track configuration, the system can be extended to include CIFS and NFS to enable NAS environments.

The VNXe3300 is equipped with two controllers for performance, scalability, and redundancy. The high-availability design, including mirrored cache and dual active controllers, is architected to eliminate single points-of-failure. If an outage occurs, data in the VNXe write cache is safely stored in Flash memory, eliminating time-limited battery backup and external power supplies.

The VNXe hardware platforms take advantage of the latest processor technology from Intel and include features that help meet future needs for growth and change. This includes Flex I/O expansion, which provides the capability of adding 1 Gb/s or 10 Gb/s ports to extend connectivity and performance. In addition, the latest 6 Gb/s serial-attached SCSI (SAS) drives and enclosures are used to enable enterprise performance and end-to-end data integrity features. The VNXe systems also support Flash drives for performance-intensive applications.

The system can grow from as small as 6 drives to as large as 150 to allow for extreme flexibility in growth and performance for changing environments. The drives presented to the system are organized into pools for simple capacity management and ease of expansion. Advanced storage efficiency can be achieved through the use of the VNXe's thin provisioning capability, which enables on-demand allocation of storage. In NAS environments file-level deduplication and compression can be used to reduce physical capacity needs by 50 percent or more.

VNXe systems were designed with a management philosophy in mind: keep it simple. It's storage from the application's point of view, with one clear way to handle any task. From initial installation to creating storage for virtual servers, the VNXe management interfaces, including Unisphere, save steps and time. Provisioning storage for 500 mailboxes or 100 GB of virtual server storage can be done in less than 10 minutes. Application-driven provisioning and management enables you to easily consolidate your storage.

Benefits of Microsoft Private Cloud Fast Track Small Implementation

Microsoft Fast Track private cloud solutions, built on Microsoft Windows Server and System Center, dramatically change the way that enterprise customers produce and consume IT services by creating a layer of abstraction over pooled IT resources. But small and medium businesses might not need all the features provided by a full System Center implementation. Enter the Fast Track Small Implementation, a design specifically for small/medium businesses and branch locations of larger businesses.

The Microsoft Hyper-V Cloud Fast Track Program provides a reference architecture for building private clouds on each organization's unique terms. Each fast-track solution helps organizations implement private clouds with increased ease and confidence. Among the benefits of the Microsoft Hyper-V Cloud Fast Track Program are faster deployment, reduced risk, and a lower cost of ownership.

Faster deployment:

•

End-to-end architectural and deployment guidance

•

Streamlined infrastructure planning due to predefined capacity

•

Enhanced functionality and automation through deep knowledge of infrastructure

•

Integrated management for virtual machine (VM) and infrastructure deployment

•

Self-service portal for rapid and simplified provisioning of resources

Reduced risk:

•

Tested, end-to-end interoperability of compute, storage, and network

•

Predefined, out-of-box solutions based on a common cloud architecture that has already been tested and validated

•

High degree of service availability through automated load balancing

Lower cost of ownership:

•

A cost-optimized, platform and software-independent solution for rack system integration

•

High performance and scalability with Windows Server 2012 operating system advanced platform editions of Hyper-V technology

•

Minimized backup times and fulfilled recovery time objectives for each business critical environment

Audience

This document describes the architecture and deployment procedures of an infrastructure composed of Cisco, EMC, and Microsoft virtualization technologies. The intended audience of this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to deploy the VSPEX architecture.

Architecture

There are two reference architectures for the Fast Track Small Implementation. The first is based on a Cluster-in-a-Box design which utilizes low-cost storage options connected to clustered RAID controllers. The second architecture is built on a storage solution that off-loads storage processing to a SAN. The Cisco/EMC solution is based on the clustered SAN design.

The Clustered SAN design pattern uses the highly available Windows Server 2012 Hyper-V clustered architecture with traditional SAN storage. The Clustered SAN design pattern enables the storage network and network paths to be combined over a single medium, which requires less infrastructure by offering a converged network design. The design pattern employs an Ethernet infrastructure that serves as the transport for the management and failover networks, and provides logical separation between these networks.

This topology uses a traditional SAN-based solution with two to four server nodes connected and clustered. The virtual machines all run within the Hyper-V cluster and use the networking infrastructure, whether it follows the converged or the non-converged design. The requirements of Microsoft's Fast Track architecture map onto the VSPEX program of validated configurations of Cisco UCS servers and EMC VNX storage.

The Cisco/EMC solution uses LAN on motherboard (LOM) connections for management of the hosts. All other networking is handled through a converged fabric that divides the redundant connections into multiple individual LANs for the different functions, for example, Live Migration and iSCSI.

Figure 1 Cisco Unified Computing System and EMC Reference Design Pattern
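The converged fabric described above is divided into per-function VLANs by the Appendix A script, which uses Cisco UCS PowerTool. The following is a minimal sketch, not the script's actual code: it assumes a hypothetical VLAN plan (the names and IDs are placeholders), checks that every ID is a valid 802.1Q VLAN ID and that no name or ID is duplicated, and shows how the plan could be pushed with the PowerTool cmdlets Get-UcsLanCloud and Add-UcsVlan once Connect-Ucs has opened a session.

```powershell
# Hypothetical VLAN plan: the names and IDs are placeholders. The values the
# Appendix A script actually creates come from the customer worksheet.
$VlanPlan = @(
    @{ Name = 'LiveMigration'; Id = 100 }
    @{ Name = 'iSCSI-A'; Id = 101 }
    @{ Name = 'iSCSI-B'; Id = 102 }
)

function Test-VlanPlan {
    # Returns $true when every ID is a valid 802.1Q VLAN ID (1-4094)
    # and no VLAN name or ID appears more than once.
    param([hashtable[]]$Plan)
    if (-not $Plan) { return $false }
    foreach ($vlan in $Plan) {
        if ($vlan.Id -lt 1 -or $vlan.Id -gt 4094) { return $false }
    }
    $names = @($Plan | ForEach-Object { $_.Name } | Sort-Object -Unique)
    $ids = @($Plan | ForEach-Object { $_.Id } | Sort-Object -Unique)
    return (($names.Count -eq $Plan.Count) -and ($ids.Count -eq $Plan.Count))
}

function Publish-VlanPlan {
    # Creates each VLAN in the UCS LAN cloud. Assumes Cisco UCS PowerTool is
    # loaded and Connect-Ucs has already opened a session.
    param([hashtable[]]$Plan)
    if (-not (Test-VlanPlan -Plan $Plan)) { throw 'VLAN plan failed validation.' }
    foreach ($vlan in $Plan) {
        Get-UcsLanCloud | Add-UcsVlan -Name $vlan.Name -Id $vlan.Id
    }
}
```

Validating the plan before touching the fabric interconnects keeps a typo from leaving a partially built set of VLANs.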

Bill of Materials

This solution is designed to scale from a small configuration of two Cisco UCS C220 M3 servers to a maximum of four servers. The associated VNXe3300 storage array can also scale from the 22 disks shown in the table below up to 150 disks, depending on storage requirements.

Cisco Bill of Materials

Table 1 lists the bill of materials for Cisco.

Table 1 Cisco Bill of Materials

EMC Bill of Materials

Table 2 lists the bill of materials for EMC.

Table 2 EMC Bill of Materials

Configuration Guidelines

This document provides details for configuring a fully redundant, highly available configuration. Therefore, each step identifies which component is being configured, A or B. For example, Fabric Interconnect A and Fabric Interconnect B identify the two Cisco UCS 6248UP Fabric Interconnect switches, and Service Processor A and Service Processor B (SP-A and SP-B) identify the two service processors in the EMC VNXe3300 storage array. To indicate that the reader should include information pertinent to their environment in a given step, <italicized text> appears as part of the command structure.

This document is intended to allow the reader to fully configure the customer environment. To expedite configuration of the UCS environment, PowerShell scripts are included in Appendix A. The Create-UcsHyperVFastTrack.ps1 script contains generic values for variables at the beginning of the script, and these values must be tailored for the customer environment. The following table can be used to record the appropriate values for the customer installation. Many of these values may be used as is, but they can optionally be altered.

Table 3 Customer Worksheet for Create-UcsHyperVFastTrack.ps1

A second sample PowerShell script, Create-UcsHyperVIscsi.ps1, creates the service profiles necessary to enable the servers to boot from iSCSI. This script uses the following variables. Again, the customer will have to change values to reflect their environment.

Table 4 Customer Worksheet for Create-UcsHyperVIscsi.ps1
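Because the worksheet values are typed into variables at the top of the scripts, a mistyped address usually surfaces only when a script fails partway through. As a minimal sketch, assuming hypothetical variable names (the real names are those defined at the top of Create-UcsHyperVFastTrack.ps1 and Create-UcsHyperVIscsi.ps1), the address entries can be checked for dotted-quad IPv4 form before either script is run:

```powershell
# Hypothetical worksheet entries. Use the variable names and values that
# actually appear at the top of the Appendix A scripts.
$Worksheet = [ordered]@{
    UcsClusterAddress = '192.168.10.10'
    IscsiTargetA      = '192.168.20.50'
    IscsiTargetB      = '192.168.30.50'
}

function Test-WorksheetAddress {
    # Writes the name of every entry whose value is not a dotted-quad IPv4
    # address with all four octets in the range 0-255.
    param([System.Collections.IDictionary]$Entries)
    foreach ($name in $Entries.Keys) {
        $value = [string]$Entries[$name]
        $isValid = $false
        if ($value -match '^\d{1,3}(\.\d{1,3}){3}$') {
            $tooLarge = @($value.Split('.') | Where-Object { [int]$_ -gt 255 })
            $isValid = ($tooLarge.Count -eq 0)
        }
        if (-not $isValid) { $name }
    }
}
```

An empty result from @(Test-WorksheetAddress -Entries $Worksheet) means every entry passed; any names returned point at the worksheet rows to recheck.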

Active Directory Domain Services

Active Directory Domain Services (AD DS) is a required foundational component and is provided as part of Windows Server 2012. Previous versions are not directly supported for all workflow provisioning and de-provisioning automation. It is assumed that AD DS is already deployed at the customer site; deploying these services is not in scope for the typical deployment.

•

AD DS in guest virtual machine. For standalone, business-in-a-box configurations, the preferred approach is to run AD DS in a guest virtual machine, using the Windows Server 2012 feature that allows a Windows Failover Cluster to start before AD DS is running in the guest. For more information on deploying Domain Services within virtual machines, see the Microsoft TechNet article "Things to consider when you host Active Directory domain controllers in virtual hosting environments."

•

Forests and domains. The preferred approach is to integrate into an existing AD DS forest and domain, but this is not a hard requirement. A dedicated resource forest or domain may also be employed as an additional part of the deployment. This solution supports multiple domains or multiple forests in a trusted environment using two-way forest trusts.

•

Trusts (multi-domain or inter-forest support). This solution enables multi-domain support within a single forest in which two-way forest (Kerberos) trusts exist between all domains.

The Cisco/EMC solution is designed to integrate with an existing AD DS infrastructure. For a new installation that does not have an AD infrastructure, the AD DS domain controllers can be built as virtual machines on the cluster hosts. If the AD DS infrastructure is based on Windows Server 2008 or earlier, the virtual machines running AD DS should not be configured as highly available virtual machines.
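Whether the AD DS virtual machines may be made highly available depends on the version of the existing AD DS infrastructure. The helper below is a hypothetical sketch of that rule: it treats the infrastructure as Windows Server 2008 or earlier if any domain controller reports such an operating system, and it takes operating system strings as input so the decision logic can be checked offline.

```powershell
function Test-DcVmHighAvailabilityAllowed {
    # Returns $false if no domain controllers are supplied, or if any of them
    # runs Windows 2000, Windows Server 2003, or Windows Server 2008 (incl. R2).
    param([string[]]$OperatingSystem)
    if (-not $OperatingSystem) { return $false }
    foreach ($os in $OperatingSystem) {
        if ($os -match '\b20(00|03|08)\b') { return $false }
    }
    return $true
}
```

On a workstation with the Active Directory module for Windows PowerShell (feature RSAT-AD-PowerShell), the input could come from Get-ADDomainController -Filter * | ForEach-Object OperatingSystem.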

Configure the Workstation

It is recommended to have a Windows 8 or Windows Server 2012 workstation configured with certain prerequisite software and joined to the same domain that the Hyper-V servers will join. A properly configured workstation makes installing the solution easier. The following software is recommended for the workstation:

•

Java 7 - required for running UCS Manager. UCS Manager version 2.0(3a) and later works with Java 7. http://java.com/en/download/ie_manual.jsp?locale=en

•

Cisco UCS PowerTool for UCSM, version 0.9.10.0. http://developer.cisco.com/web/unifiedcomputing/pshell-download

–

Cisco UCS PowerTool requires Microsoft .NET Framework 2.0. Windows 8 and Windows Server 2012 do not install it by default; install the .NET Framework 3.5 feature, which includes .NET Framework 2.0.

•

PuTTY - an SSH and Telnet client helpful in initial configuration of the Cisco UCS 6248UP Fabric Interconnects. http://www.chiark.greenend.org.uk/~sgtatham/putty/download.html

•

PL-2303 USB-to-Serial driver - used to connect to the Cisco UCS 6248UP Fabric Interconnects through a serial cable connected to a USB port on the workstation. http://plugable.com/drivers/prolific/

•

If the workstation is a Windows Server 2012 system:

–

Install the Hyper-V Management Tools by issuing this PowerShell cmdlet: Install-WindowsFeature -Name RSAT-Hyper-V-Tools -IncludeAllSubFeature

–

Install the Windows Failover Clustering Tools by issuing this PowerShell cmdlet: Install-WindowsFeature -Name RSAT-Clustering -IncludeAllSubFeature
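On a Windows Server 2012 workstation, the prerequisites above can be checked and installed in one pass. This is a sketch under stated assumptions: it adds the .NET Framework 3.5 feature (NET-Framework-Core, which contains the .NET Framework 2.0 runtime) alongside the two RSAT features, and the function names Get-MissingFeature and Install-WorkstationPrerequisite are hypothetical.

```powershell
# Windows Server 2012 feature names for the tools listed above.
# NET-Framework-Core is .NET Framework 3.5, which includes .NET Framework 2.0.
$RequiredFeatures = @('RSAT-Hyper-V-Tools', 'RSAT-Clustering', 'NET-Framework-Core')

function Get-MissingFeature {
    # Pure helper: writes each required name that is not in the installed list.
    param([string[]]$Required, [string[]]$Installed)
    $Required | Where-Object { $Installed -notcontains $_ }
}

function Install-WorkstationPrerequisite {
    # Windows Server 2012 only: queries installed features and adds the missing
    # ones. -SourcePath optionally points at \sources\sxs on the install media.
    param([string]$SourcePath)
    $installed = Get-WindowsFeature | Where-Object Installed | ForEach-Object Name
    foreach ($feature in (Get-MissingFeature -Required $RequiredFeatures -Installed $installed)) {
        if ($SourcePath) {
            Install-WindowsFeature -Name $feature -IncludeAllSubFeature -Source $SourcePath
        }
        else {
            Install-WindowsFeature -Name $feature -IncludeAllSubFeature
        }
    }
}
```

Run Install-WorkstationPrerequisite -SourcePath D:\sources\sxs from an elevated PowerShell session, where D: is assumed to hold the Windows Server 2012 installation media; .NET Framework 3.5 often needs that source because its payload is not stored on the installed system.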

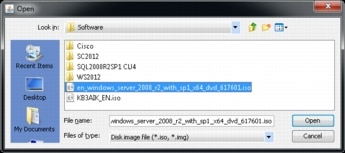

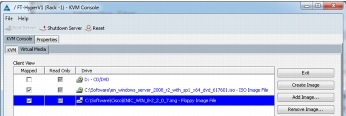

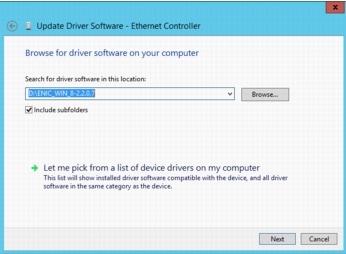

You will also need to have copies of the Windows Server 2012 installation media and the Cisco drivers 2.0(4a) for the P81E (www.cisco.com/cisco/software/type.html?mdfid=283862063&flowid=25886). Store these in a directory on your configuration workstation.

Install the Remote Server Administration Toolkit (RSAT) on the Configuration Workstation

Appendix A of this document contains several PowerShell scripts. These are sample scripts. They have been tested, but they are provided as is, with no warranty against errors and no support. They do, however, help greatly in configuring the Hyper-V implementation properly and quickly. Some of the scripts require editing to reflect customer-specific configurations. It is best to create a file share on the configuration workstation and place all the PowerShell scripts on that share. Most of the scripts run from the configuration workstation, but some may have to be run locally on the server being configured; having them on a file share makes them easy to access.

For each of the PowerShell scripts contained in Appendix A, do the following:

1.

Open Notepad.

2.

Copy the contents of a section in Appendix A.

3.

Paste into Notepad.

4.

Save the file, using the name of the section in Appendix A as the file name. When saving, set the "Save as type:" field to "All Files (*.*)". For example, section Create-UcsHyperVFastTrack.ps1 should be saved as "Create-UcsHyperVFastTrack.ps1".
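If the "Save as type:" field is left at "Text Documents (*.txt)", Notepad appends .txt and the saved file will not run as a script. The following sketch, using a hypothetical function name, renames any *.ps1.txt files in the scripts folder back to *.ps1 and reports the new names:

```powershell
function Repair-ScriptExtension {
    # Renames files such as Create-UcsHyperVFastTrack.ps1.txt to
    # Create-UcsHyperVFastTrack.ps1 and writes each new name.
    param([Parameter(Mandatory)][string]$Path)
    Get-ChildItem -LiteralPath $Path -Filter '*.ps1.txt' -File | ForEach-Object {
        # Drop the trailing ".txt" (4 characters).
        $newName = $_.Name.Substring(0, $_.Name.Length - 4)
        Rename-Item -LiteralPath $_.FullName -NewName $newName
        $newName
    }
}
```

The share itself can be created on Windows 8 or Windows Server 2012 with, for example, New-SmbShare -Name FastTrack -Path C:\FastTrack -ReadAccess Everyone, where the share name and folder are placeholders.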

Deployment

This document details the steps necessary to deploy the base infrastructure components and to provision Microsoft Hyper-V as the foundation for virtualized workloads. At the end of these deployment steps, you will be prepared to provision applications on top of a Microsoft Hyper-V virtualized infrastructure. The outlined procedure includes:

•

Cabling Information

•

Cisco Unified Computing System Deployment Procedure

•

Initial EMC VNXe3300 Configuration

•

Installation of Windows Server 2012 Datacenter Edition

•

Configuration of Hyper-V Failover Cluster

The VSPEX solution provides for a flexible implementation. This guide provisions a basic configuration; specific customer installations may vary slightly. For example, this guide shows how to configure a two-node Windows Server 2012 Hyper-V Failover Cluster. Adding a third and fourth node is just a matter of adding the names of those nodes to the cluster configuration wizard or PowerShell commands. Although a specific customer implementation may deviate from the information that follows, the best practices, features, and configurations listed in this section should still be used as a reference for building a customized Cisco UCS with EMC VNXe3300 Microsoft Private Cloud Fast Track Small Implementation.
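The PowerShell route mentioned above can be sketched with the FailoverClusters cmdlets Test-Cluster and New-Cluster. The function names, cluster name, and address are hypothetical; the node-count check simply enforces the two-to-four-node range of this design.

```powershell
function Assert-FastTrackNodeCount {
    # This design uses 2 to 4 nodes; duplicate names (case-insensitive) count once.
    param([string[]]$Node)
    $count = @($Node | Sort-Object -Unique).Count
    if ($count -lt 2 -or $count -gt 4) {
        throw "Expected 2 to 4 unique cluster nodes, got $count."
    }
    $count
}

function New-FastTrackCluster {
    # Requires the Failover Clustering tools on the configuration workstation.
    param(
        [Parameter(Mandatory)][string]$ClusterName,
        [Parameter(Mandatory)][string[]]$Node,
        [Parameter(Mandatory)][string]$StaticAddress
    )
    Assert-FastTrackNodeCount -Node $Node | Out-Null
    # Run cluster validation first, then create the cluster.
    Test-Cluster -Node $Node
    New-Cluster -Name $ClusterName -Node $Node -StaticAddress $StaticAddress
}
```

A third or fourth node added later can be joined with Add-ClusterNode -Cluster <cluster name> -Name <node name>, after rerunning Test-Cluster with all node names.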

Cabling Information

The following information is provided as a reference for cabling the physical equipment in a Cisco/EMC VSPEX environment. The tables include both local and remote device and port locations in order to simplify cabling requirements. The PowerShell command file in Appendix A is written to conform to this cabling information. Changes made to the customer cabling need to be reflected in the command file by editing the associated variables.

Table 5 Cisco UCS C220 M3 Server 1 Cabling Information

Table 6 Cisco UCS C220 M3 Server 2 Cabling Information

Table 7 Cisco UCS 2232PP Fabric Extender A Cabling Information

Table 8 Cisco UCS 2232PP Fabric Extender B Cabling Information

Table 9 Cisco UCS 6248UP Fabric Interconnect A Cabling Information

Table 10 Cisco UCS 6248UP Fabric Interconnect B Cabling Information

Table 11 EMC VNXe3300 Service Processor A Cabling Information

| Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|
| Eth 0 | 10 GE (Fibre) | UCS 6248UP A | Eth 1/25 |
| Eth 1 | 10 GE (Fibre) | UCS 6248UP B | Eth 1/25 |

Table 12 EMC VNXe3300 Service Processor B Cabling Information

| Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|
| Eth 0 | 10 GE (Fibre) | UCS 6248UP A | Eth 1/26 |
| Eth 1 | 10 GE (Fibre) | UCS 6248UP B | Eth 1/26 |
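The Appendix A script assumes the cabling in Tables 11 and 12, so it can help to keep that cabling as data and check it for redundancy before editing the script variables. The structure and function below are hypothetical; they confirm that each service processor has one link to each fabric interconnect and that no fabric interconnect port is used twice.

```powershell
# Tables 11 and 12 as data. This structure is hypothetical; the Appendix A
# script keeps its own variables for these ports.
$VnxeCabling = @(
    [pscustomobject]@{ SP = 'A'; LocalPort = 'Eth 0'; RemoteDevice = 'UCS 6248UP A'; RemotePort = 'Eth 1/25' }
    [pscustomobject]@{ SP = 'A'; LocalPort = 'Eth 1'; RemoteDevice = 'UCS 6248UP B'; RemotePort = 'Eth 1/25' }
    [pscustomobject]@{ SP = 'B'; LocalPort = 'Eth 0'; RemoteDevice = 'UCS 6248UP A'; RemotePort = 'Eth 1/26' }
    [pscustomobject]@{ SP = 'B'; LocalPort = 'Eth 1'; RemoteDevice = 'UCS 6248UP B'; RemotePort = 'Eth 1/26' }
)

function Test-VnxeCablingRedundancy {
    # $true when each service processor has exactly two links, one to each
    # fabric interconnect, and no fabric interconnect port is used twice.
    param([object[]]$Cabling)
    if (-not $Cabling) { return $false }
    foreach ($group in ($Cabling | Group-Object -Property SP)) {
        $fabrics = @($group.Group | ForEach-Object { $_.RemoteDevice } | Sort-Object -Unique)
        if ($group.Count -ne 2 -or $fabrics.Count -ne 2) { return $false }
    }
    $ports = @($Cabling | ForEach-Object { '{0}|{1}' -f $_.RemoteDevice, $_.RemotePort })
    $uniquePorts = @($ports | Sort-Object -Unique)
    return ($ports.Count -eq $uniquePorts.Count)
}
```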

Cisco Unified Computing System Deployment Procedure

Initial Cisco UCS Configuration

The following section provides a detailed procedure for configuring the Cisco Unified Computing System. These steps should be followed precisely because a failure to do so could result in an improper configuration.

Cisco UCS 6248 A

1.

Connect to the console port on the first Cisco UCS 6248 fabric interconnect.

2.

At the prompt to enter the configuration method, enter console to continue.

3.

If asked to either do a new setup or restore from backup, enter setup to continue.

4.

Enter y to continue to set up a new fabric interconnect.

5.

Enter y to enforce strong passwords.

6.

Enter the password for the admin user.

7.

Enter the same password again to confirm the password for the admin user.

8.

When asked if this fabric interconnect is part of a cluster, answer y to continue.

9.

Enter A for the switch fabric.

10.

Enter the cluster name for the system name.

11.

Enter the Mgmt0 IPv4 address.

12.

Enter the Mgmt0 IPv4 netmask.

13.

Enter the IPv4 address of the default gateway.

14.

Enter the cluster IPv4 address.

15.

To configure DNS, answer y.

16.

Enter the DNS IPv4 address.

17.

Answer y to set up the default domain name.

18.

Enter the default domain name.

19.

Review the settings that were printed to the console, and if they are correct, answer yes to save the configuration.

20.

Wait for the login prompt to make sure the configuration has been saved.

Cisco UCS 6248 B

1.

Connect to the console port on the second Cisco UCS 6248 fabric interconnect.

2.

When prompted to enter the configuration method, enter console to continue.

3.

The installer detects the presence of the partner fabric interconnect and adds this fabric interconnect to the cluster. Enter y to continue the installation.

4.

Enter the admin password for the first fabric interconnect.

5.

Enter the Mgmt0 IPv4 address.

6.

Answer yes to save the configuration.

7.

Wait for the login prompt to confirm that the configuration has been saved.

Log into Cisco UCS Manager

These steps provide details for logging into the Cisco UCS environment.

1.

Open a web browser and navigate to the Cisco UCS 6248 fabric interconnect cluster address.

2.

Select the Launch link to download the Cisco UCS Manager software.

3.

If prompted to accept security certificates, accept as necessary.

4.

When prompted, enter admin as the user name, enter the administrative password, and click Login to log in to the Cisco UCS Manager software.
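Cisco UCS PowerTool can open the same session from PowerShell, which is also a quick way to confirm that the credentials work before running the configuration script. A minimal sketch, assuming the PowerTool module is named CiscoUcsPs (as in the 0.9.x releases) and using a hypothetical function name:

```powershell
function Get-FastTrackFabricInterconnect {
    # Connects with Cisco UCS PowerTool, lists the fabric interconnects,
    # and always disconnects afterward.
    param([Parameter(Mandatory)][string]$ClusterAddress)
    Import-Module CiscoUcsPs   # assumed module name for PowerTool 0.9.x
    $credential = Get-Credential -UserName admin -Message "Cisco UCS Manager at $ClusterAddress"
    $null = Connect-Ucs -Name $ClusterAddress -Credential $credential
    try {
        # Each fabric interconnect is a network element (sys/switch-A, sys/switch-B).
        Get-UcsNetworkElement | Select-Object Dn, OobIfIp
    }
    finally {
        Disconnect-Ucs
    }
}
```

For example, Get-FastTrackFabricInterconnect -ClusterAddress <cluster IP address> should list the two fabric interconnects.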

Scripted Configuration for Fast Track

Appendix A contains a PowerShell script that can be run to configure the Microsoft Private Cloud Fast Track Small Implementation environment. It contains default values that should be edited to reflect what has been captured in the customer worksheet shown in Table 3. Only the variables at the beginning of the script should be edited. This script makes extensive use of Cisco UCS PowerTool.

Note

This script contains a section for defining a server qualification policy. That policy will need to be edited to reflect the customer's particular server models.

1.

Connect your configuration workstation to the network. Ensure proper network access to the Cisco UCS Manager by pinging the fabric interconnect network address.

2.

Open a PowerShell window. Enter the command Get-ExecutionPolicy.

3.

If the above command returns the value "Restricted", enter the command Set-ExecutionPolicy RemoteSigned. Enter Y at the confirmation prompt. By default, PowerShell is set up to prevent the execution of script files. Setting the execution policy to RemoteSigned will enable the execution of the Create-UcsHyperVFastTrack script.

4.

Change to the directory in which you stored the PowerShell scripts.

5.

Type .\Create-UcsHyperVFastTrack.ps1 and press Enter.

6.

You can use the UCS Manager GUI that you opened earlier to view the configuration just built.
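Steps 1 through 5 can be combined as follows. This is a sketch with hypothetical function names. AllSigned also blocks the unsigned sample script, so the check treats only RemoteSigned, Unrestricted, and Bypass as sufficient rather than testing for Restricted alone.

```powershell
function Test-ScriptPolicyAllowsLocalScript {
    # RemoteSigned, Unrestricted, and Bypass run an unsigned local script.
    # Restricted blocks all scripts; AllSigned requires a signature.
    param([Parameter(Mandatory)][string]$Policy)
    return @('RemoteSigned', 'Unrestricted', 'Bypass') -contains $Policy
}

function Invoke-FastTrackUcsScript {
    param(
        [Parameter(Mandatory)][string]$UcsAddress,
        [string]$ScriptPath = '.\Create-UcsHyperVFastTrack.ps1'
    )
    # Step 1: confirm that the fabric interconnect cluster address answers ping.
    if (-not (Test-Connection -ComputerName $UcsAddress -Count 2 -Quiet)) {
        throw "Cannot reach Cisco UCS Manager at $UcsAddress."
    }
    # Steps 2 and 3: check the effective execution policy and relax it if needed.
    # Changing the default LocalMachine scope requires an elevated session.
    $policy = [string](Get-ExecutionPolicy)
    if (-not (Test-ScriptPolicyAllowsLocalScript -Policy $policy)) {
        Set-ExecutionPolicy -ExecutionPolicy RemoteSigned   # prompts for confirmation
    }
    # Steps 4 and 5: run the configuration script from the scripts directory.
    & $ScriptPath
}
```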

Initial EMC VNXe3300 Configuration

Unpack, Rack, and Install

The VNXe base system included with this Fast Track solution includes one VNXe disk processor enclosure (DPE) and one disk-array enclosure (DAE). The system also includes thirty 300 GB 15K RPM SAS drives.

The VNXe system package will include the 3U DPE with capacity for 15 disk drives, an adjustable rail kit, power cords, a service cable, and a front bezel with key. The 3U DAE package also has capacity for 15 disk drives, includes an adjustable rail kit, power cords, serial attached SCSI (SAS) cables, and a front bezel with key.

The VNXe System installation guide (available online at http://www.emc.com/vnxesupport) provides detailed information on how to rack, cable, and power-up the VNXe system. At a high level, the process includes the following:

•

When applicable, install VNXe components in a rack - install the included rail kits and secure the VNXe components inside the rack. Because of the weight of the system, two people should be available to lift the hardware.

•

Install the two 10 Gb optical UltraFlex I/O modules, one in each storage processor. Detailed information on adding the I/O modules can be found in the "EMC VNXe3300 Adding Input/Output Modules" document available at www.emc.com/vnxesupport.

•

Connect the dual port 10Gb I/O modules to the switch ports as outlined in the Cabling Information tables in this document.

•

Connect cables to the VNXe system components: connect the cables between the DPE and the DAE, and connect the DPE management ports, one per storage processor, to the appropriate "top of rack" switch used for external connectivity.

•

Connect power cables and power up the system - connect power to the VNXe components and wait until the LEDs indicate that the system is ready.

Connect to the VNXe

Option 1 - Automatic IP Address Assignment for the VNXe Management Port

If you are running the VNXe on a dynamic network that includes DHCP servers, DNS servers, and Dynamic DNS services, the management IP address can be assigned automatically. By default, the VNXe system management port is configured to use DHCP and will accept an IP address offered by a DHCP server on the network.

Perform the following steps to automatically assign an IP address to your VNXe system management port:

After you power up the VNXe system, check the status of the SP fault/status LEDs. If they are solid blue, a management IP address has been assigned. If they are blue and flash amber every three seconds, no management IP address has been assigned; in that case, check the connectivity between the system, the DNS server, and the DHCP server.

Open a web browser and access the VNXe management interface by entering serial_number.domain as the URL in the browser's address bar, where serial_number is the serial number of the VNXe system and domain is the network domain name.
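Before opening the browser, it can help to confirm that Dynamic DNS has registered the name. This is a minimal sketch assuming the serial_number.domain naming described above; the function names and the sample serial number in the test are hypothetical.

```powershell
# Hypothetical helpers: build and resolve the DHCP/Dynamic DNS name of the VNXe management port.
function Get-VnxeManagementName {
    param(
        [Parameter(Mandatory = $true)][string]$SerialNumber,
        [Parameter(Mandatory = $true)][string]$Domain
    )
    # serial_number.domain, tolerating a leading dot on the domain.
    return '{0}.{1}' -f $SerialNumber, $Domain.TrimStart('.')
}

function Resolve-VnxeManagementName {
    param([Parameter(Mandatory = $true)][string]$HostName)
    try {
        # .NET resolver; emits every address registered for the name.
        [System.Net.Dns]::GetHostAddresses($HostName) | ForEach-Object { $_.IPAddressToString }
    }
    catch {
        Write-Warning "$HostName did not resolve; check the DHCP and Dynamic DNS services."
    }
}
```

If `Resolve-VnxeManagementName -HostName (Get-VnxeManagementName -SerialNumber <serial> -Domain <domain>)` returns no address, investigate DHCP and DNS before troubleshooting the browser.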

Option 2 - Manual Static IP Address Assignment for the VNXe Management Port

To manually assign a static IP address for the VNXe system management port, the VNXe Connection Utility is required. To use the VNXe connection utility to assign a network address to the VNXe system, perform the following steps:

1.

Download and run the VNXe Connection Utility software.

a.

Download the software from www.emc.com/vnxesupport (under Downloads)

b.

Install the VNXe connection utility on a Windows computer. To use the Auto Discover method discussed below, install on a computer connected to the same subnet as the VNXe management port.

c.

Launch the VNXe Connection Utility

Use the connection utility to assign a management IP address to the VNXe system. After launching the utility, select one of the following options:

d.

Select Auto Discover and click Next to assign an IP address to a VNXe on the local subnet

–

View the VNXe systems, select the Product ID/SN of the desired system and click Next. If you do not see your VNXe, click Discover to scan the subnet again.

–

Specify a name, an IP address, subnet mask, and gateway, and then click Next.

–

The Configuration Summary screen appears. When all entries are complete, click Finish. The Configuring the VNXe Device screen will appear while the settings are implemented. The setup can take up to 10 minutes.

–

Click the Start Unisphere button to log in to Unisphere on the selected system.

e.

Or select Manual Configuration and click Next to assign a Management IP address to a VNXe system.

–

Specify a name, an IP address, subnet mask, and default gateway for the VNXe system and then click Save file to flash drive

–

Connect the flash drive to the USB port on either storage processor of the VNXe system to assign the IP address to the system.

–

Open a web browser to the IP address assigned to the VNXe system in order to connect to Unisphere.
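Because Option 2 relies on values entered by hand, a quick consistency check can catch a mistyped gateway before the settings are saved. This is a minimal sketch using a hypothetical `Test-SameSubnet` function (IPv4 only). The sample addresses reuse the management range from the Appendix A script purely as an example.

```powershell
# Hypothetical helper: does the gateway lie in the same IPv4 subnet as the address?
function Test-SameSubnet {
    param(
        [Parameter(Mandatory = $true)][string]$Address,
        [Parameter(Mandatory = $true)][string]$Gateway,
        [Parameter(Mandatory = $true)][string]$SubnetMask
    )
    $a = ([System.Net.IPAddress]::Parse($Address)).GetAddressBytes()
    $g = ([System.Net.IPAddress]::Parse($Gateway)).GetAddressBytes()
    $m = ([System.Net.IPAddress]::Parse($SubnetMask)).GetAddressBytes()
    for ($i = 0; $i -lt 4; $i++) {
        # Compare the network portion of each of the four IPv4 octets.
        if (($a[$i] -band $m[$i]) -ne ($g[$i] -band $m[$i])) { return $false }
    }
    return $true
}
```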

Initial VNXe Configuration

After connecting to the VNXe system in the previous steps, log in to Unisphere with the following default credentials:

•

Username: admin

•

Password: Password123#

The first time Unisphere is launched, the Unisphere Configuration wizard will run. The wizard provides the steps necessary to configure the following system settings:

•

Passwords for the default system administrator and service accounts

•

Advanced proactive EMC support through the ESRS and ConnectEMC features

•

DNS and NTP time synchronization support settings

•

Storage pool configuration: automatic or custom storage pool configuration (more details in the following sections)

•

Unisphere Storage Server settings for managing iSCSI and shared folder storage (more details in the following sections)

Storage Pool Considerations

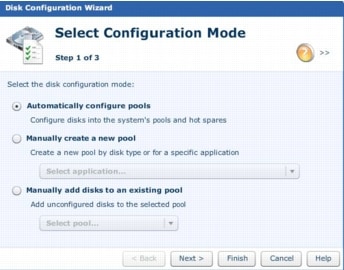

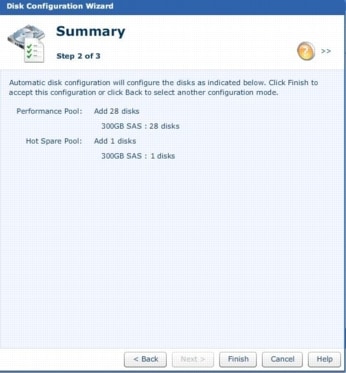

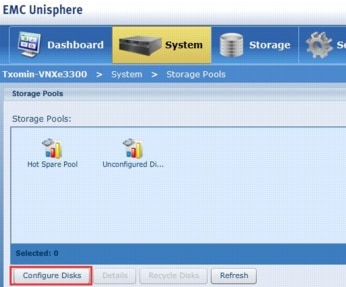

The VNXe3300 supports a range of drive technologies and RAID protection schemes. For the proposed solution, Cisco and EMC have implemented a base configuration that uses a single drive type and RAID protection scheme: thirty 300 GB 15K RPM SAS drives in a RAID 5 configuration.

In a VNXe3300, RAID 5 is implemented in seven-drive (6 data + 1 parity) sets. With 30 drives, there are four 6+1 RAID 5 sets, and the remaining two drives are intended to be configured as hot spares.

When using the automatically configure pools option, the VNXe will allocate existing disks into capacity, performance and/or extreme performance pools, depending on the number and type of available disks. The rules used are the following:

•

NL-SAS disks are allocated in multiples of six in RAID6 (4+2) groups with no assigned spare disks. For example, if 45 NL-SAS disks are available, the capacity pool uses 42 of the disks, does not allocate any spare disks and leaves three disks unassigned. If needed, you can manually create a hot spare with NL-SAS disks.

•

In a VNXe3300 system, SAS disks are assigned in multiples of seven in RAID 5 (6+1) groups. One spare disk is assigned for the first 30 disks, and another spare disk is assigned for each additional group of 30 disks. For the base Fast Track configuration of 30 SAS disks, a performance pool is created with 28 disks (four seven-disk groups), one hot spare is allocated, and one disk is left unassigned. The unassigned disk can be added manually as a second hot spare.

•

In a VNXe3300, Flash drives are assigned in multiples of five in RAID 5 (4+1) groups. A spare disk is assigned if there are leftover drives. For example, if 11 Flash drives are available, the extreme performance pool uses 10 disks (in two five-disk groups) and allocates one spare disk.
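The allocation rules above can be checked with a small calculator. This is a minimal sketch with a hypothetical `Get-VnxeAutoPoolLayout` function, not an EMC tool. For SAS it assumes that spares (one per 30 disks or part thereof) are set aside before the 6+1 groups are formed, and for Flash that leftover drives yield a single spare. The document confirms only the worked examples.

```powershell
# Hypothetical calculator for the VNXe3300 automatic pool rules described above.
function Get-VnxeAutoPoolLayout {
    param(
        [Parameter(Mandatory = $true)][ValidateSet('NL-SAS', 'SAS', 'Flash')][string]$DiskType,
        [Parameter(Mandatory = $true)][ValidateRange(0, 1000)][int]$DiskCount
    )
    switch ($DiskType) {
        'NL-SAS' { $groupSize = 6; $spares = 0 }                                    # RAID 6 (4+2), no spares
        'SAS'    { $groupSize = 7; $spares = [int][Math]::Ceiling($DiskCount / 30) } # RAID 5 (6+1)
        'Flash'  { $groupSize = 5; $spares = 0 }                                    # RAID 5 (4+1)
    }
    $groups = [int][Math]::Floor(($DiskCount - $spares) / $groupSize)
    $used = $groups * $groupSize
    # Assumption: Flash gets one spare whenever drives are left over.
    if ($DiskType -eq 'Flash' -and ($DiskCount - $used) -gt 0) { $spares = 1 }
    [pscustomobject]@{
        DiskType   = $DiskType
        Groups     = $groups
        PoolDisks  = $used
        HotSpares  = $spares
        Unassigned = [int]($DiskCount - $used - $spares)
    }
}
```

For the base configuration, `Get-VnxeAutoPoolLayout -DiskType SAS -DiskCount 30` reports four groups, 28 pool disks, one hot spare, and one unassigned disk.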

Instead of configuring the storage pools automatically, custom storage pools can be created with the manually create a new pool option. Custom pools can be used to optimize storage for an application with specific performance, capacity or cost efficiency requirements. The pool RAID types that can be configured, dependent on drive technology, can be seen in the following table:

Table 13 Storage Pool Options

It is recommended to use the automatically configure pools option with the base configuration. If this is not done as a part of the initial VNXe configuration during the first time Unisphere is launched, it can be done with the following steps:

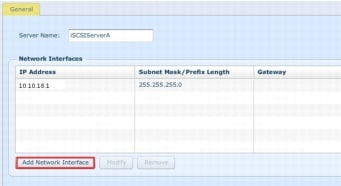

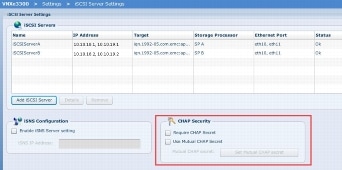

iSCSI Server Configuration

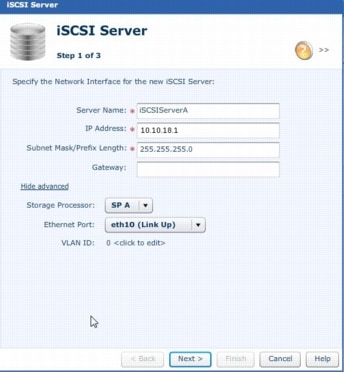

The iSCSI Storage Server is the portal through which hosts in the Fast Track configuration access storage. The goal of the proposed iSCSI server configuration is to provide redundancy, multipathing, and balanced access across all 10 GbE connections and both storage processors. Each 10 GbE I/O module has two ports, referred to as eth10 and eth11. Because there is an I/O module in each storage processor, both SPA and SPB have eth10 and eth11 connections.

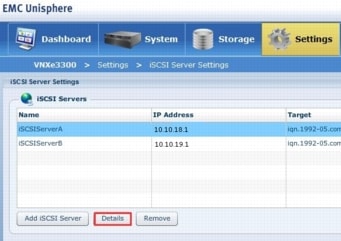

iSCSI servers will run on either SPA or SPB. This means storage assigned to a given iSCSI server will only be available to one SP at a given time. To utilize both SPA and SPB concurrently, two iSCSI servers will be created.

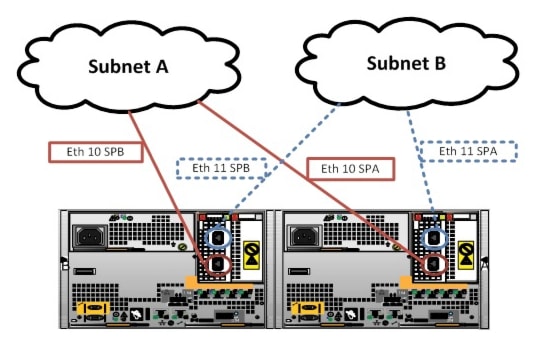

With respect to iSCSI server high availability, the eth10 and eth11 connections are paired across the storage processors. If an iSCSI server whose IP address is assigned to eth10 on SPA must move to SPB (for example, during maintenance), the IP address moves to the corresponding eth10 port on SPB. Therefore, eth10 on both SPs must be connected to the same subnet, and likewise for eth11. The following figure shows a logical example of the connections.

Figure 2 Logical Network Connections

The iSCSI server configuration also has redundant connectivity while running on its respective storage processor. This means that both eth10 and eth11 are assigned an IP address for each iSCSI server, giving each iSCSI server both redundant ports and redundant fabric connections when running on either SPA or SPB. The following table provides an example.

Table 14 Sample IP Configuration

In summary, the key considerations for configuring iSCSI connectivity and the iSCSI storage servers are the following:

•

VNXe generic iSCSI storage is presented to only one SP at a given time. To ensure that both SPs are active, two iSCSI Storage Servers are created.

•

Two IP interfaces are configured for each iSCSI Storage Server. These IP interfaces should be associated with two separate physical interfaces on the same SP.

•

The two physical interfaces used by each iSCSI Storage Server are connected to separate subnets.
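These considerations can be illustrated with a generated plan. This is a minimal sketch assuming two /24 iSCSI subnets. The 192.168.18 and 192.168.19 prefixes are placeholders chosen to follow the iSCSI-A and iSCSI-B VLAN tags (18 and 19) in the Appendix A script, and the host octets are not the values in Table 14. `New-IscsiServerPlan` is a hypothetical name.

```powershell
# Hypothetical sample plan: one iSCSI Storage Server per SP, each with an eth10
# interface on subnet A and an eth11 interface on subnet B (placeholder values).
function New-IscsiServerPlan {
    param(
        [string]$SubnetA = '192.168.18',   # assumed iSCSI-A /24
        [string]$SubnetB = '192.168.19',   # assumed iSCSI-B /24
        [ValidateRange(1, 253)][int]$FirstHostOctet = 200
    )
    $octet = $FirstHostOctet
    foreach ($sp in 'SPA', 'SPB') {
        $server = "iSCSI-$sp"
        # Two interfaces per server: two physical ports on the same SP, two subnets.
        [pscustomobject]@{ Server = $server; SP = $sp; Port = 'eth10'; Address = "$SubnetA.$octet" }
        [pscustomobject]@{ Server = $server; SP = $sp; Port = 'eth11'; Address = "$SubnetB.$octet" }
        $octet++
    }
}
```

Because eth10 on SPA and eth10 on SPB share subnet A (and likewise for eth11), either server's addresses remain reachable if the server moves to the peer SP.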

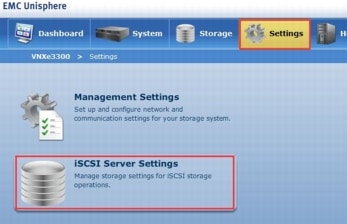

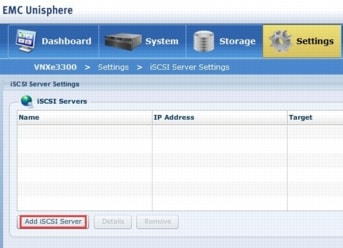

To configure iSCSI Storage Servers, do the following:

The Cisco networking environment uses a Maximum Transmission Unit (MTU) size of 9000 bytes for the iSCSI connections to the VNXe. An example script that changes the MTU through the VNXe Unisphere CLI is provided in the appendix. To set the matching MTU size through Unisphere, perform the following steps:

Note

Make sure to change the MTU size on the appropriate Windows Server 2012 network interfaces to match the network topology. This can be done with the Set-NetIPInterface PowerShell cmdlet. See the appendix for an example script.
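The following is a minimal sketch of the host side, assuming the iSCSI NICs are named iSCSI-A and iSCSI-B as in the Appendix A vNIC templates. `Set-IscsiJumboFrames` and `Get-DontFragmentPayload` are hypothetical names. The second function computes the largest ICMP payload that fits in one unfragmented frame, for an end-to-end `ping -f -l` test.

```powershell
# Hypothetical helpers for jumbo-frame configuration and verification.
function Get-DontFragmentPayload {
    param([Parameter(Mandatory = $true)][int]$Mtu)
    # The 20-byte IPv4 header and 8-byte ICMP echo header are carried inside the MTU.
    return $Mtu - 28
}

function Set-IscsiJumboFrames {
    param(
        [Parameter(Mandatory = $true)][string[]]$InterfaceAlias,
        [int]$Mtu = 9000
    )
    foreach ($alias in $InterfaceAlias) {
        # Set-NetIPInterface (NetTCPIP module) sets the IPv4 MTU for the interface.
        Set-NetIPInterface -InterfaceAlias $alias -AddressFamily IPv4 -NlMtuBytes $Mtu
    }
}
```

For example, run `Set-IscsiJumboFrames -InterfaceAlias 'iSCSI-A','iSCSI-B'`, then `ping -f -l 8972 <VNXe iSCSI address>`. A "Packet needs to be fragmented" reply suggests that some hop is not configured for 9000 bytes.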

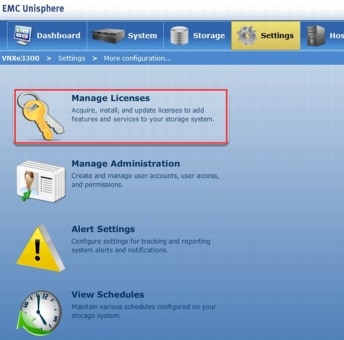

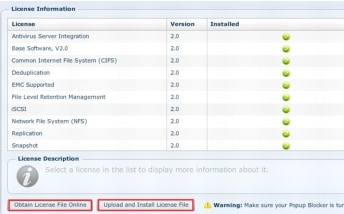

Licensing

Obtaining a license file as well as uploading and installing the file can be accomplished from within Unisphere: under Settings > More configuration... > Manage Licenses.

Update the VNXe Operating Environment

If it is available, update the VNXe Operating Environment to the MR4 release, which was not yet available when this document was written. Otherwise, update the VNXe software as described below.

For Windows Server 2012 support with Windows Failover Clustering, the VNXe operating environment must be at MR3 SP1.1 - 2.3.1.20356 and include hotfix 2.3.1.20364.1.2.001.192. More information regarding the required hotfix can be found in knowledge base article emc306921.

VNXe software can be updated from within Unisphere at Settings > More configuration... > Update Software.

First, ensure that at least MR3 SP1.1 is installed. Then install the hotfix mentioned above.

After the hotfix is installed, file parameters must be updated on the storage processor. See knowledge base article emc289415 for details.
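The minimum-version requirement can be checked mechanically once the version string is read from Unisphere. This is a minimal sketch with a hypothetical `Test-VnxeOeMinimum` function. The version is typed in by hand, and the hotfix identifier is not checked because it has more than four components and is not a valid `[version]`.

```powershell
# Hypothetical helper: compare a VNXe OE version against the MR3 SP1.1 minimum (2.3.1.20356).
function Test-VnxeOeMinimum {
    param(
        [Parameter(Mandatory = $true)][string]$InstalledVersion,
        [string]$MinimumVersion = '2.3.1.20356'
    )
    # [version] compares each dotted component numerically, so 2.3.1.9 is lower than 2.3.1.20356.
    return ([version]$InstalledVersion) -ge ([version]$MinimumVersion)
}
```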

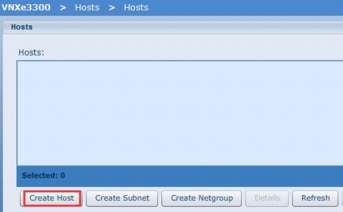

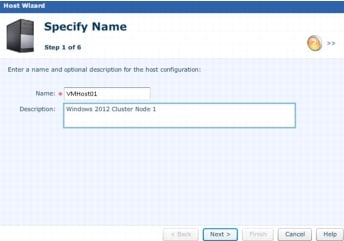

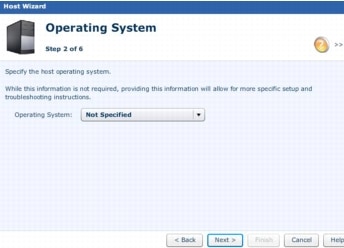

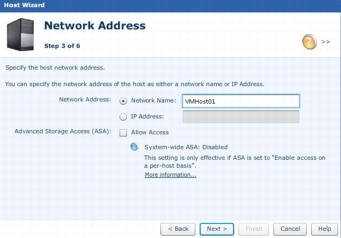

Create Host Configurations

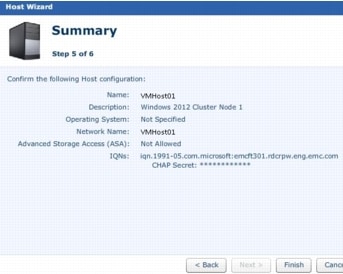

To present storage from the VNXe to the servers in the Fast Track environment, a host configuration profile must be created on the VNXe. The host profile defines the iSCSI Qualified Name (IQN) used by each server. It also defines the one-way Challenge-Handshake Authentication Protocol (CHAP) secret for the IQN(s) associated with the host. Follow these steps to configure the host:
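The values a host profile needs can be gathered and sanity-checked on each server before typing them into Unisphere. This is a minimal sketch with hypothetical helper names. It relies on the fact that the Microsoft iSCSI initiator accepts CHAP secrets of 12 to 16 characters, and it uses a simplified IQN pattern.

```powershell
# Hypothetical helpers for preparing a VNXe host configuration profile.
function Get-LocalIscsiIqn {
    # Get-InitiatorPort (Storage module) reports the software initiator's IQN in NodeAddress.
    Get-InitiatorPort | Where-Object { $_.NodeAddress -like 'iqn.*' } |
        Select-Object -ExpandProperty NodeAddress -Unique
}

function Test-ChapSecret {
    param([Parameter(Mandatory = $true)][string]$Secret)
    # The Microsoft iSCSI initiator requires a CHAP secret of 12 to 16 characters.
    return ($Secret.Length -ge 12 -and $Secret.Length -le 16)
}

function Test-IqnFormat {
    param([Parameter(Mandatory = $true)][string]$Iqn)
    # Simplified check: iqn.<yyyy-mm>.<reversed domain>[:<unique name>],
    # for example the Windows default iqn.1991-05.com.microsoft:<fqdn>.
    return $Iqn -match '^iqn\.\d{4}-\d{2}\.[a-z0-9.-]+(:.+)?$'
}
```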

Provisioning Storage

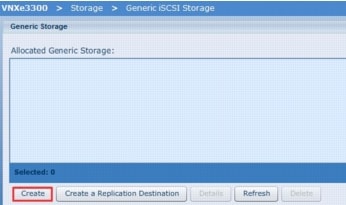

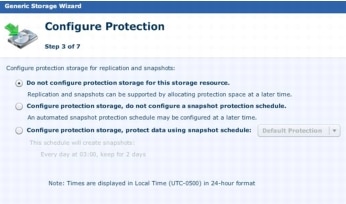

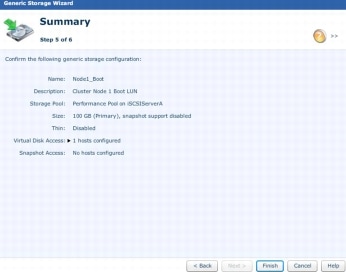

Potentially repetitive tasks, such as provisioning storage, can be performed through Unisphere or scripted. EMC offers PowerShell cmdlets through a free product called EMC Storage Integrator (ESI). The appendix provides an example script that uses ESI version 2.1 to provision storage. More information on ESI can be found at support.emc.com by searching for "ESI". The following table provides an example of how to use Unisphere to provision storage:

Cisco UCS Service Profile Creation

The Create-UcsHyperVIscsi.ps1 script in Appendix A is used to create service profiles based on the values entered in Tables 3 and 4.

This script file is designed to be run from the configuration workstation or server.

•

Connect your configuration workstation to the network. Ensure proper network access to the UCS Manager by pinging the fabric interconnect network address.

•

Edit the Create-UcsHyperVIscsi.ps1 file to contain the customer values entered in Table 4.

•

Save the file.

•

Connect to the directory in which you stored the PowerShell script file. Type .\Create-UcsHyperVIscsi.ps1

Server Configuration

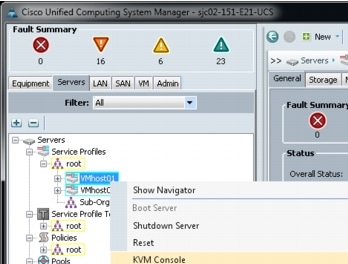

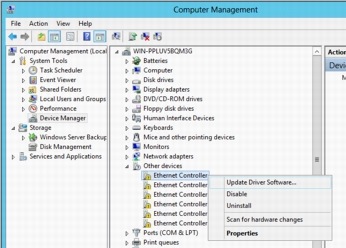

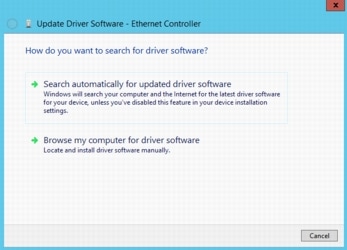

Installation of Windows Server 2012 Datacenter Edition

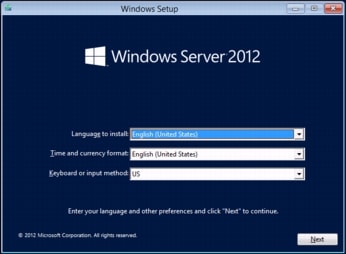

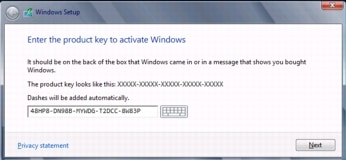

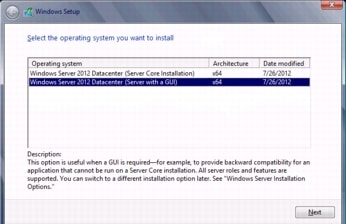

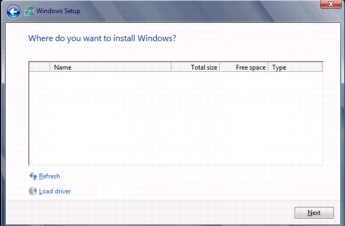

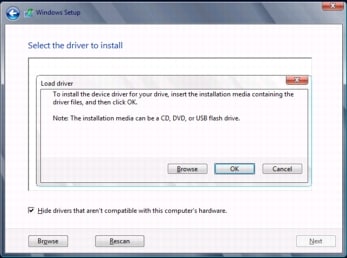

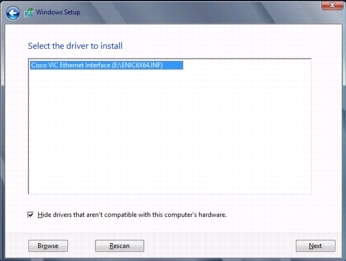

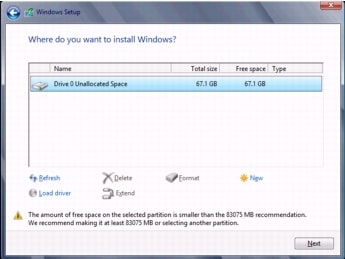

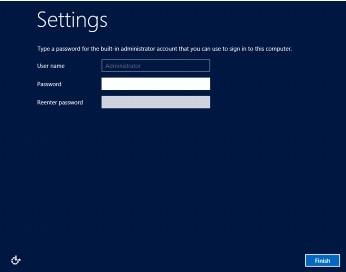

The instructions presented here for installing Windows Server 2012 Datacenter Edition assume an installation from the Microsoft installation DVD. If the customer already has an automated deployment process in place, such as Windows Deployment Services, follow the customer's installation procedure.

You will install Windows Server 2012 Datacenter Edition to the Cisco UCS C220 M3 servers by working through the UCSM KVM.

Local Configuration Tasks

After the operating system is installed, several tasks must be performed so that the hosts can be managed remotely for the rest of these instructions. In an existing customer environment, the customer may handle some of these tasks through Active Directory Group Policy objects. Configuring Group Policy to handle these tasks is beyond the scope of this document, so review them with the customer.

Remote Management

The server must be configured so that it can be managed remotely. This requires setting specific firewall rules, so the customer's security department should approve these rules.

•

Log on to the server you have just configured and connect to the file share on the configuration workstation.

•

Open a PowerShell window. Enter the command Get-ExecutionPolicy.

•

If the above command returns the value "Restricted", enter the command Set-ExecutionPolicy Unrestricted. Enter Y at the confirmation prompt. By default, PowerShell is set up to prevent the execution of script files. Setting the execution policy to Unrestricted enables the execution of the Create-UcsHyperVRemoteMgmt script.

•

Connect to the file share directory on the configuration workstation.

•

Type .\Create-UcsHyperVRemoteMgmt.ps1
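For reference, the following is a minimal sketch of the kind of settings that commonly enable remote management. It is not the contents of Create-UcsHyperVRemoteMgmt.ps1. The firewall rule groups listed are built-in Windows Server 2012 groups used by Server Manager and MMC consoles, and each should be approved by the customer's security department. The function names are hypothetical.

```powershell
# Hypothetical sketch of typical remote-management settings; confirm each with the customer.
function Get-RemoteMgmtFirewallGroups {
    # Built-in Windows Firewall rule groups used by remote MMC consoles and Server Manager.
    return @(
        'Remote Event Log Management',
        'Remote Service Management',
        'Remote Volume Management',
        'Windows Firewall Remote Management',
        'Windows Management Instrumentation (WMI)'
    )
}

function Enable-RemoteMgmt {
    # Configures the WinRM listener and its firewall exception for PowerShell remoting.
    Enable-PSRemoting -Force
    foreach ($group in (Get-RemoteMgmtFirewallGroups)) {
        Enable-NetFirewallRule -DisplayGroup $group
    }
}
```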

Assigning Storage To Hosts

If the storage device was created but not assigned to any hosts, access can be specified later through the storage device details area of Unisphere. The appendix also provides an example script that uses ESI to assign storage to a host. The following table provides an example of how to use Unisphere to assign storage.

Modify Windows Server 2012 iSCSI registry parameters

The registry settings in the following table should be modified on each server that uses iSCSI to connect to the VNXe. The settings apply to both the native Windows Server 2012 MPIO DSM and PowerPath unless otherwise noted.

1.

In Windows, run the regedit.exe command to start the Windows Registry Editor.

2.

Navigate to HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet.

3.

Right-click the key and select Find.

4.

Search for the registry values in the table below. The values are located under the following key:

a.

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Class\{***}\***\Parameters

b.

*** indicates a value unique to a given computer.
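The Find step can be scripted. This is a minimal sketch with hypothetical function names. It assumes the Table 15 entries are numeric (DWORD) values, and it takes value names and data from Table 15 rather than assuming them. Searching the whole Class key recursively can take a little while.

```powershell
# Hypothetical helpers for the registry steps above; value names and data come from Table 15.
function Find-IscsiParametersKey {
    param(
        [Parameter(Mandatory = $true)][string]$ValueName,
        [string]$ClassRoot = 'HKLM:\SYSTEM\CurrentControlSet\Control\Class'
    )
    # Equivalent of regedit's Find: Parameters keys under Class\{***}\*** that hold the value.
    Get-ChildItem -Path $ClassRoot -Recurse -ErrorAction SilentlyContinue |
        Where-Object { $_.PSChildName -eq 'Parameters' -and ($_.GetValueNames() -contains $ValueName) }
}

function Set-IscsiParameter {
    param(
        [Parameter(Mandatory = $true)][string]$ValueName,
        [Parameter(Mandatory = $true)][int]$Value
    )
    foreach ($key in @(Find-IscsiParametersKey -ValueName $ValueName)) {
        # -Force overwrites the existing value; DWORD is assumed for numeric table entries.
        New-ItemProperty -Path $key.PSPath -Name $ValueName -Value $Value -PropertyType DWord -Force | Out-Null
        Write-Output ('{0}\{1} = {2}' -f $key.Name, $ValueName, $Value)
    }
}
```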

Table 15 Registry Setting Values

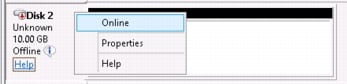

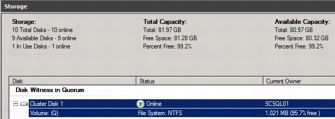

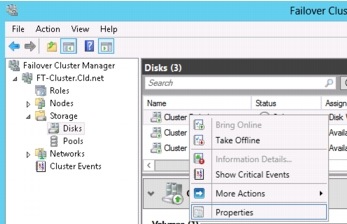

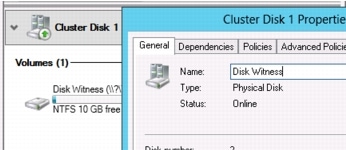

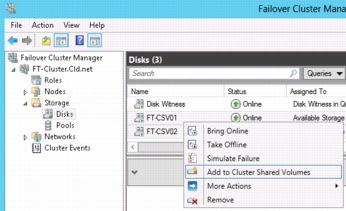

Prepare Disks for Clustering

Earlier, iSCSI LUNs were created and masked to present multiple LUNs to the servers for use by the cluster. It is good practice to ensure that each server that will participate in the cluster can properly access and mount the presented LUNs. Also, Microsoft Failover Clustering expects the LUNs to be formatted as NTFS volumes. These steps ensure that the disks are ready for use by the cluster and that each node has access to them.

Perform all of the following steps only from the first server that will be a cluster node. These steps prepare the disks for clustering.

On subsequent servers, simply follow the steps to bring the disks online and then take them offline. This confirms that the disks are accessible from each node in the cluster. If you encounter an error bringing the disks online or offline, you will most likely need to troubleshoot the iSCSI configuration of that server.
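The same checks can be run with the Windows Server 2012 Storage module. This is a minimal sketch with hypothetical function names. `Initialize-ClusterDisk` is meant for the first node only, and it assumes the new LUNs are RAW and should be GPT-partitioned. The filter is kept separate so it works on any objects that have a BusType property.

```powershell
# Hypothetical helpers for preparing iSCSI disks for clustering (Storage module).
function Select-IscsiDisk {
    param([Parameter(ValueFromPipeline = $true)]$Disk)
    process { if ($Disk.BusType -eq 'iSCSI') { $Disk } }
}

function Initialize-ClusterDisk {
    # First node only: bring each RAW iSCSI disk online, then initialize, partition, and format it as NTFS.
    Get-Disk | Select-IscsiDisk | Where-Object { $_.PartitionStyle -eq 'RAW' } | ForEach-Object {
        Set-Disk -Number $_.Number -IsOffline $false
        Set-Disk -Number $_.Number -IsReadOnly $false
        Initialize-Disk -Number $_.Number -PartitionStyle GPT
        New-Partition -DiskNumber $_.Number -UseMaximumSize |
            Format-Volume -FileSystem NTFS -Confirm:$false | Out-Null
    }
}

function Test-IscsiDiskAccess {
    # Every node: bring each iSCSI disk online, report it, then take it offline again.
    foreach ($d in @(Get-Disk | Select-IscsiDisk)) {
        Set-Disk -Number $d.Number -IsOffline $false
        Get-Disk -Number $d.Number | Select-Object Number, FriendlyName, Size, OperationalStatus
        Set-Disk -Number $d.Number -IsOffline $true
    }
}
```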

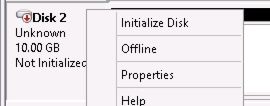

Initial Network Configuration

What is seen in the following sample screen shots may vary significantly from the actual customer environment, because the customer network has many variables and these samples do not cover every variation. The samples assume that there is no DHCP server (a DHCP server would make this a little easier, but is beyond the scope of this document). Without a DHCP server, all NICs are initially configured with APIPA addresses in the 169.254.0.0/16 range. These steps assign fixed IP addresses to all the NICs.

The first step is to find the NIC through which host management is performed. This is not the out-of-band NIC used by UCS Manager, but the NIC dedicated to host management.

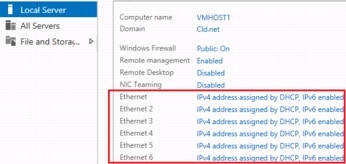

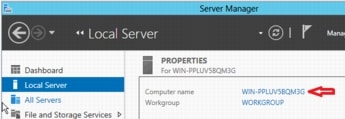

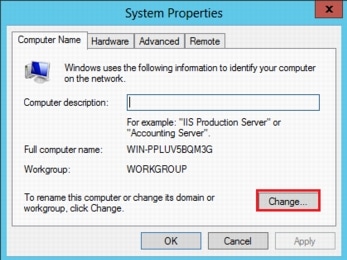

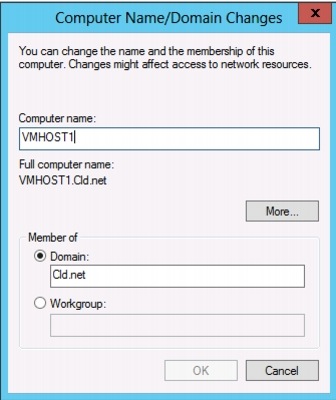

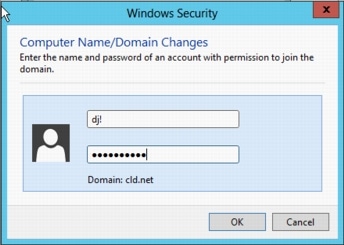
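An alternative to identifying the management NIC visually is to match its MAC address against the Mgmt vNIC shown in the server's service profile in UCS Manager. This is a minimal sketch with hypothetical helper names. It assumes the MAC address is copied from UCS Manager by hand.

```powershell
# Hypothetical helpers for locating the host management NIC.
function Test-ApipaAddress {
    param([Parameter(Mandatory = $true)][string]$Address)
    # APIPA (IPv4 link-local) addresses fall in 169.254.0.0/16.
    return $Address.StartsWith('169.254.')
}

function ConvertTo-NormalizedMac {
    param([Parameter(Mandatory = $true)][string]$MacAddress)
    # UCS Manager shows 00:25:B5:...; Get-NetAdapter shows 00-25-B5-...; compare hex digits only.
    return ($MacAddress -replace '[^0-9A-Fa-f]', '').ToUpperInvariant()
}

function Find-NicByMac {
    param([Parameter(Mandatory = $true)][string]$MacAddress)
    $target = ConvertTo-NormalizedMac -MacAddress $MacAddress
    Get-NetAdapter | Where-Object { (ConvertTo-NormalizedMac -MacAddress $_.MacAddress) -eq $target } |
        Select-Object Name, InterfaceDescription, MacAddress, Status
}

function Get-ApipaNic {
    # NICs still on an APIPA address have not yet been given a fixed address.
    Get-NetIPAddress -AddressFamily IPv4 | Where-Object { Test-ApipaAddress -Address $_.IPAddress } |
        Select-Object InterfaceAlias, IPAddress
}
```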

Join Computer to Domain

Final Network Configuration

The configuration workstation contains a PowerShell script file that eases the process of renaming the NICs from the generic names assigned during the installation to names that match the Service Profile used on each server. It contains default values for accessing the UCS Manager. These variables will need to be edited to reflect the customer's configuration.

This script file is designed to be run from a domain-joined workstation or server.

1.

Connect your configuration workstation to the network. Ensure proper network access to the UCS Manager by pinging the fabric interconnect network address.

2.

Edit the Rename-UcsHyperVNICs.ps1 file to contain the admin user name and password for the customer environment, and the virtual IP address (VIP) of the UCS Manager.

3.

Save the file.

4.

Connect to the directory in which you stored the PowerShell script file. Type .\Rename-UcsHyperVNICs.ps1

After the NICs have been renamed, you can configure static IP addresses for them. Set-UcsHyperVIps.ps1 is a PowerShell script that assigns a fixed IP address to four NICs. The addresses are in the format 192.168.<vlan>.<hostnum>, where <vlan> is the VLAN tag of the associated network and <hostnum> is a value that is the same across all of the host's IP addresses. Things are simpler if you use the same <hostnum> value that you used for the Mgmt NIC. The script also configures the NICs not to register themselves automatically in DNS.

This script file is designed to be run from a domain-joined workstation or server.

1.

Connect your configuration workstation to the network.

2.

Edit the script to reflect the customer's NIC names and VLAN tags.

3.

Save the script.

4.

Connect to the directory in which you stored the Set-UcsHyperVIps script file. Type .\Set-UcsHyperVIps.ps1
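The addressing scheme can be expressed as a small function. This is a minimal sketch, not the contents of Set-UcsHyperVIps.ps1. The default VLAN tags are the CSV, ClusComm, and LiveMigration entries of the vNIC array in the Appendix A script, the /24 prefix length is an assumption, and the function names are hypothetical.

```powershell
# Hypothetical helpers for the 192.168.<vlan>.<hostnum> scheme.
function Get-HostNicAddress {
    param(
        [Parameter(Mandatory = $true)][ValidateRange(1, 254)][int]$HostNum,
        [hashtable]$VlanByNic = @{ CSV = 12; ClusComm = 16; LiveMigration = 11 },
        [int]$PrefixLength = 24   # assumption: each VLAN is a /24
    )
    foreach ($nic in ($VlanByNic.Keys | Sort-Object)) {
        $vlan = [int]$VlanByNic[$nic]
        # The VLAN tag becomes the third octet, so it must fit in 0-255.
        if ($vlan -lt 0 -or $vlan -gt 255) { throw "VLAN $vlan for $nic does not fit in an IPv4 octet" }
        [pscustomobject]@{ Nic = $nic; Address = "192.168.$vlan.$HostNum"; PrefixLength = $PrefixLength }
    }
}

function Set-HostNicAddress {
    param([Parameter(Mandatory = $true)][int]$HostNum)
    foreach ($entry in @(Get-HostNicAddress -HostNum $HostNum)) {
        New-NetIPAddress -InterfaceAlias $entry.Nic -IPAddress $entry.Address -PrefixLength $entry.PrefixLength | Out-Null
        # Keep these addresses out of DNS, as the Fast Track script does.
        Set-DnsClient -InterfaceAlias $entry.Nic -RegisterThisConnectionsAddress $false
    }
}
```

With `-HostNum 21`, for example, the CSV NIC receives 192.168.12.21 and the LiveMigration NIC receives 192.168.11.21.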

Role and Feature Installation

The Add-UcsHyperVFeatures.ps1 PowerShell script adds the Hyper-V role and the Failover Clustering and MPIO features. MPIO is required to create dual paths to the iSCSI LUNs. Adding the Hyper-V role requires a server reboot, which the script handles automatically.

This script can be run from the configuration workstation.

1.

Connect to the location of the PowerShell scripts

2.

Type .\Add-UcsHyperVFeatures.ps1

It takes a couple of minutes for the features to be added. After the Hyper-V server has rebooted, the virtual switches must be created for use by the VMs.

1.

Connect to the location of the PowerShell scripts

2.

Type .\Create-UcsHyperVSwitches.ps1
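The following is a minimal sketch of what these two steps involve. It is not the actual contents of Add-UcsHyperVFeatures.ps1 or Create-UcsHyperVSwitches.ps1. The function names are hypothetical, and the switch sketch assumes the VM access NIC has already been renamed to its vNIC name, VMaccess.

```powershell
# Hypothetical helpers mirroring the two scripts above; not their actual contents.
function Get-FastTrackFeatureName {
    # Windows Server 2012 names for the Hyper-V role and the Failover Clustering and MPIO features.
    return @('Hyper-V', 'Failover-Clustering', 'Multipath-IO')
}

function Install-FastTrackFeature {
    param([Parameter(Mandatory = $true)][string]$ComputerName)
    # -Restart lets the target reboot automatically, which Hyper-V requires.
    Install-WindowsFeature -ComputerName $ComputerName -Name (Get-FastTrackFeatureName) `
        -IncludeManagementTools -Restart
}

function New-FastTrackVmSwitch {
    param(
        [Parameter(Mandatory = $true)][string]$ComputerName,
        [string]$AdapterName = 'VMaccess'   # assumes the NIC carries its vNIC name after renaming
    )
    # External switch on the VM access NIC, not shared with the management OS.
    New-VMSwitch -ComputerName $ComputerName -Name $AdapterName -NetAdapterName $AdapterName `
        -AllowManagementOS $false
}
```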

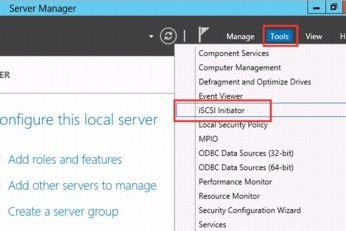

Configure MPIO on Windows Server 2012

MPIO can be configured either from the MPIO GUI after the feature is enabled or from PowerShell. To configure the required settings from the MPIO GUI, perform the following steps:

1.

Go to the Server Manager

2.

Select Tools then MPIO

3.

From the MPIO GUI, click the Discover Multi-Path tab

4.

Select Add support for iSCSI devices, and click Add

5.

Do not reboot the node when prompted

6.

Click the MPIO Devices tab

7.

Select Add

8.

Enter "EMC Celerra"

Note

EMC is followed by 5 spaces and Celerra is followed by 9 spaces

9.

Select OK and reboot the server

Alternatively, the following PowerShell commands can be used to configure MPIO.

Note

A reboot is required after these commands even though there may not be a prompt to reboot.

1.

Enable-MSDSMAutomaticClaim -BusType iSCSI

2.

New-MSDSMSupportedHW -VendorId "EMC" -ProductId "Celerra"
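The spacing rule in the GUI note comes from the fixed-width SCSI inquiry fields: an 8-character vendor ID followed by a 16-character product ID, each padded with spaces. This is a minimal sketch with a hypothetical `Get-MpioHardwareId` function that builds the 24-character string for the MPIO Devices dialog. After either method, `Get-MSDSMSupportedHW` lists the registered hardware IDs.

```powershell
# Hypothetical helper: build the padded hardware ID that the MPIO GUI expects.
function Get-MpioHardwareId {
    param(
        [Parameter(Mandatory = $true)][ValidateLength(1, 8)][string]$VendorId,
        [Parameter(Mandatory = $true)][ValidateLength(1, 16)][string]$ProductId
    )
    # 8-character vendor field + 16-character product field, space padded.
    return $VendorId.PadRight(8) + $ProductId.PadRight(16)
}
```

`Get-MpioHardwareId -VendorId EMC -ProductId Celerra` yields "EMC" followed by five spaces and "Celerra" followed by nine spaces, which matches the note above.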

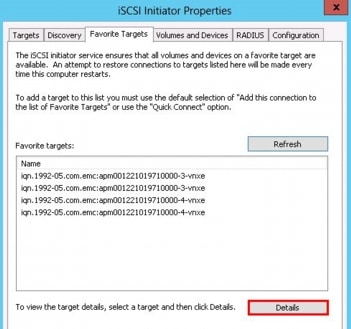

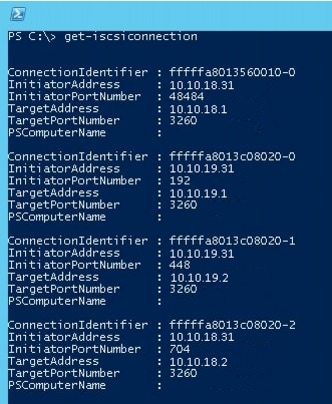

Configure the iSCSI Sessions to the VNXe

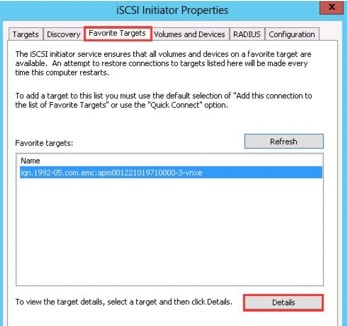

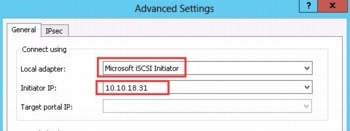

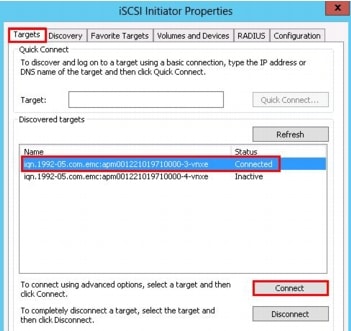

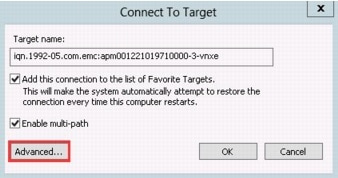

The following steps will show how to configure the iSCSI connections to the VNXe through the iSCSI Initiator Properties GUI. The appendix also includes a PowerShell script that can be used to accomplish the same task.
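For comparison with the appendix script, the following is a minimal sketch of the same idea using the Windows Server 2012 iSCSI cmdlets. It pairs each initiator NIC only with VNXe portals on its own subnet (assumed to be /24), enables MPIO and persistence, and uses one-way CHAP. The helper names, addresses, and IQNs are hypothetical.

```powershell
# Hypothetical helpers: subnet-matched, multipath-enabled iSCSI sessions to one VNXe iSCSI server.
function Get-SubnetKey {
    param([Parameter(Mandatory = $true)][string]$Address)
    # Assumption: /24 subnets, so the first three octets identify the subnet.
    return $Address.Substring(0, $Address.LastIndexOf('.'))
}

function Get-IscsiSessionPair {
    param(
        [Parameter(Mandatory = $true)][string[]]$InitiatorAddress,
        [Parameter(Mandatory = $true)][string[]]$TargetPortalAddress
    )
    foreach ($i in $InitiatorAddress) {
        foreach ($t in $TargetPortalAddress) {
            if ((Get-SubnetKey -Address $i) -eq (Get-SubnetKey -Address $t)) {
                [pscustomobject]@{ Initiator = $i; Target = $t }
            }
        }
    }
}

function Connect-VnxeIscsiServer {
    param(
        [Parameter(Mandatory = $true)][string]$TargetNodeAddress,      # IQN of one VNXe iSCSI server
        [Parameter(Mandatory = $true)][string[]]$TargetPortalAddress,  # that server's eth10/eth11 addresses
        [Parameter(Mandatory = $true)][string[]]$InitiatorAddress,     # this host's iSCSI NIC addresses
        [Parameter(Mandatory = $true)][string]$ChapUsername,
        [Parameter(Mandatory = $true)][string]$ChapSecret
    )
    foreach ($pair in @(Get-IscsiSessionPair -InitiatorAddress $InitiatorAddress -TargetPortalAddress $TargetPortalAddress)) {
        New-IscsiTargetPortal -TargetPortalAddress $pair.Target -InitiatorPortalAddress $pair.Initiator | Out-Null
        Connect-IscsiTarget -NodeAddress $TargetNodeAddress -TargetPortalAddress $pair.Target `
            -InitiatorPortalAddress $pair.Initiator -IsMultipathEnabled $true -IsPersistent $true `
            -AuthenticationType ONEWAYCHAP -ChapUsername $ChapUsername -ChapSecret $ChapSecret | Out-Null
    }
}
```

Run `Connect-VnxeIscsiServer` once for each of the two iSCSI Storage Servers. With two initiator NICs on separate subnets, each server then has two sessions per host.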

Validate the Host iSCSI Configuration

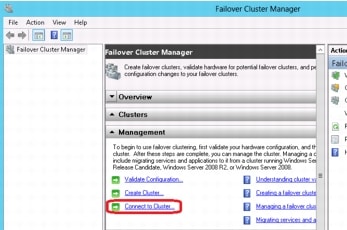
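A quick command-line cross-check of the validation is to summarize the sessions per target. This is a minimal sketch with a hypothetical `Get-IscsiSessionSummary` function that consumes `Get-IscsiSession` output. With two initiator NICs on separate subnets, each VNXe target would be expected to show two connected, persistent sessions. Running `mpclaim -s -d` additionally lists the disks claimed by MPIO.

```powershell
# Hypothetical helper: summarize iSCSI sessions per target (pipe Get-IscsiSession into it).
function Get-IscsiSessionSummary {
    param([Parameter(ValueFromPipeline = $true)]$Session)
    begin { $all = @() }
    process { $all += $Session }
    end {
        $all | Group-Object -Property TargetNodeAddress | ForEach-Object {
            [pscustomobject]@{
                Target     = $_.Name
                Sessions   = $_.Count
                Connected  = @($_.Group | Where-Object { $_.IsConnected }).Count
                Persistent = @($_.Group | Where-Object { $_.IsPersistent }).Count
            }
        }
    }
}
```

Usage: `Get-IscsiSession | Get-IscsiSessionSummary | Format-Table -AutoSize`.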

Configuration of Hyper-V Failover Cluster

At this point, you should have configured the physical Cisco UCS C220 M3 rack servers so that they are ready to form a cluster. The preparation steps on each server included:

•

Installation of Windows Server 2012 Datacenter Edition

•

Prepared the installation for remote management

•

Identified the management NIC and assigned a fixed IP address.

•

Joined to the domain.

•

Renamed the NICs to reflect the names of the service profile used to create the server instance

•

Set fixed IP addresses on remaining NICs

•

Ensured cluster disks are available and ready for use

•

Installed requisite roles and features and configured virtual switches

Log on to the configuration workstation with an account that has privileges to create computer objects in Active Directory. The domain administrator account has these privileges.

Note

Some customers limit access to the domain administrator account. Some customers also prestage the cluster name object (CNO) in Active Directory before the clusters are created. Work with the customer's domain administrators to ensure that you are following their practices, and modify the following steps accordingly.
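The validation and creation steps can also be run from PowerShell with the FailoverClusters module. This is a minimal sketch with a hypothetical wrapper. The cluster name, node names, and static address are placeholders for the customer's values.

```powershell
# Hypothetical wrapper: validate, then create, the Hyper-V cluster (FailoverClusters module).
function New-FastTrackCluster {
    param(
        [Parameter(Mandatory = $true)][string]$ClusterName,
        [Parameter(Mandatory = $true)][ValidateCount(2, 64)][string[]]$Node,
        [Parameter(Mandatory = $true)][string]$StaticAddress
    )
    # Test-Cluster runs the validation tests and produces a report; review it before continuing.
    $report = Test-Cluster -Node $Node
    Write-Output "Validation report: $report"
    # Creating the cluster also creates its cluster name object (CNO) in Active Directory,
    # which is why the account needs permission to create computer objects.
    New-Cluster -Name $ClusterName -Node $Node -StaticAddress $StaticAddress
}
```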

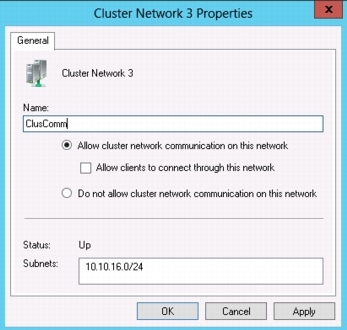

Set Cluster Network Purposes

The cluster automatically attempts to determine which network is to be used for which purpose. Though it does a good job most of the time, it is better to ensure that the networks are performing the functions you want them to. Microsoft Failover Clustering assigns a metric value to each network, and that metric value is used to determine each network's use. The network with the lowest metric value is always used for Cluster Shared Volumes. The next lowest metric is always used for the primary Live Migration network.

This script can be run from the configuration workstation. This script assumes that the CSV network is named "CSV" and the live migration network is named "LiveMigration". If your networks have different names, you will need to modify the script to reflect your names.

1.

Connect to the location of the PowerShell scripts

2.

Type .\Set-UcsHyperVClusterMetric.ps1
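The ordering rule described above can be set and checked directly. This is a minimal sketch, not the contents of Set-UcsHyperVClusterMetric.ps1. It assumes networks named "CSV" and "LiveMigration", as the script does. The metric values 900 and 950 are arbitrary examples; the check confirms the resulting order rather than relying on them.

```powershell
# Hypothetical helpers: set and verify cluster network metrics (FailoverClusters module).
function Test-ClusterMetricOrder {
    param([Parameter(Mandatory = $true)][hashtable]$MetricByNetwork)
    # Sort networks by metric; CSV must be lowest and LiveMigration next lowest.
    $ordered = @($MetricByNetwork.GetEnumerator() | Sort-Object -Property Value | ForEach-Object { $_.Key })
    return ($ordered.Count -ge 2 -and $ordered[0] -eq 'CSV' -and $ordered[1] -eq 'LiveMigration')
}

function Set-FastTrackClusterMetric {
    param([int]$CsvMetric = 900, [int]$LiveMigrationMetric = 950)
    # Assigning Metric by hand replaces the automatically assigned value for that network.
    (Get-ClusterNetwork -Name 'CSV').Metric = $CsvMetric
    (Get-ClusterNetwork -Name 'LiveMigration').Metric = $LiveMigrationMetric
    $current = @{}
    Get-ClusterNetwork | ForEach-Object { $current[$_.Name] = $_.Metric }
    return Test-ClusterMetricOrder -MetricByNetwork $current
}
```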

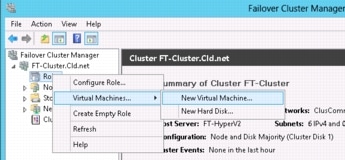

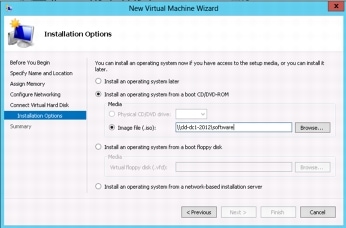

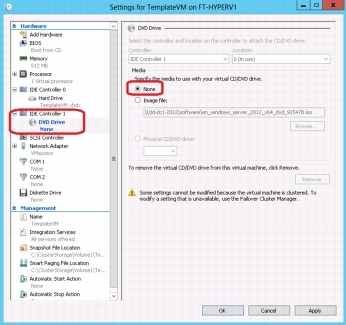

Create First Virtual Machine

This section describes how to create the first virtual machine. This first virtual machine will be turned into a template that can be used to quickly create additional virtual machines. The steps to build the template are as follows:

1.

Create a generic virtual machine.

2.

Install Windows Server 2012 Datacenter Edition.

3.

Tailor the configuration to meet customer base standards, including things like firewall settings, management utilities, network definitions, and so forth.

4.

Run Microsoft's sysprep utility on the machine to prepare it for cloning. Microsoft only supports cloned machines if they have been built from a computer image that has been prepared by sysprep.

5.

Store the virtual machine in a library location for later cloning.
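Steps 1 and 4 can be sketched with the Hyper-V module. This is a minimal sketch with hypothetical function names. The VM name, memory, VHDX size, switch name, and the C:\ClusterStorage\Volume1 path are placeholders. The sysprep switches shown generalize the image, boot to the out-of-box experience on next start, and shut the VM down.

```powershell
# Hypothetical helpers for template steps 1 and 4; names, sizes, and paths are placeholders.
function Get-TemplateVhdPath {
    param(
        [Parameter(Mandatory = $true)][string]$Path,
        [Parameter(Mandatory = $true)][string]$Name
    )
    return '{0}\{1}\{1}.vhdx' -f $Path.TrimEnd('\'), $Name
}

function New-TemplateVm {
    param(
        [string]$Name = 'FT3-Template',
        [string]$Path = 'C:\ClusterStorage\Volume1',   # assumed CSV volume 1 mount point
        [string]$SwitchName = 'VMaccess',
        [int64]$MemoryStartupBytes = 2GB,
        [uint64]$VhdSizeBytes = 60GB
    )
    # Step 1: a generic VM with a new, empty VHDX.
    New-VM -Name $Name -Path $Path -MemoryStartupBytes $MemoryStartupBytes `
        -NewVHDPath (Get-TemplateVhdPath -Path $Path -Name $Name) -NewVHDSizeBytes $VhdSizeBytes `
        -SwitchName $SwitchName
}

function Get-SysprepArgument {
    # Step 4, inside the guest: %WINDIR%\System32\Sysprep\sysprep.exe with these switches.
    return @('/generalize', '/oobe', '/shutdown')
}
```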

The following steps MUST be performed from one of the nodes of the cluster.

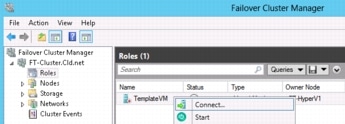

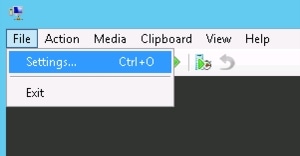

Create Additional Virtual Machines

After the template has been made, a PowerShell script can be used to create as many virtual machines as needed. The Create-UcsHyperVSingleVM.ps1 PowerShell script contains the commands necessary to create new VMs. This is a sample script and may need to be edited to reflect the customer environment.

By default, this script copies the VHDX file of a virtual machine that has had sysprep run against it from CSV volume 1 to CSV volume 2. If these are not the desired locations, the script must be edited. Because of the way CSV works, it is best to run this script from the cluster node that owns CSV volume 2 (or whichever destination CSV is selected). Running it from the owning node is not a strict requirement, but it is more efficient.

This script must be run from a cluster node.

1.

Connect to the file share that stores the scripts.

2.

Connect to the location of the PowerShell scripts

3.

Type .\Add-UcsHyperVSingleVM.ps1

4.

Start and connect to the newly created virtual machine, and run the out-of-box experience (OOBE) to complete the cloning process.

5.

Tailor the operating system to the customer's specification.
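The cloning flow described above can be sketched as follows. This is a minimal sketch that is not the Appendix A script. The source VHDX path, the destination CSV path, the "Cluster Disk 2" CSV name, the switch name, and the memory size are all placeholders. `Test-IsCsvOwner` reports whether the current node owns the destination CSV, which is the more efficient place to run the copy.

```powershell
# Hypothetical sketch: clone a sysprepped VHDX to another CSV and register a highly available VM.
function Get-CloneVhdPath {
    param(
        [Parameter(Mandatory = $true)][string]$CsvRoot,
        [Parameter(Mandatory = $true)][string]$VmName
    )
    return '{0}\{1}\{1}.vhdx' -f $CsvRoot.TrimEnd('\'), $VmName
}

function Test-IsCsvOwner {
    param([string]$CsvName = 'Cluster Disk 2')   # placeholder CSV resource name
    return ((Get-ClusterSharedVolume -Name $CsvName).OwnerNode.Name -eq $env:COMPUTERNAME)
}

function New-ClonedVm {
    param(
        [Parameter(Mandatory = $true)][string]$VmName,
        [string]$SourceVhd = 'C:\ClusterStorage\Volume1\FT3-Template\FT3-Template.vhdx',
        [string]$DestinationCsv = 'C:\ClusterStorage\Volume2',
        [string]$SwitchName = 'VMaccess',
        [int64]$MemoryStartupBytes = 2GB
    )
    $vhd = Get-CloneVhdPath -CsvRoot $DestinationCsv -VmName $VmName
    New-Item -ItemType Directory -Path (Split-Path -Path $vhd -Parent) -Force | Out-Null
    Copy-Item -Path $SourceVhd -Destination $vhd
    New-VM -Name $VmName -Path $DestinationCsv -MemoryStartupBytes $MemoryStartupBytes -VHDPath $vhd -SwitchName $SwitchName | Out-Null
    # Register the VM with the cluster so that it is highly available.
    Add-ClusterVirtualMachineRole -VMName $VmName | Out-Null
}
```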

Appendix A: PowerShell Scripts

The following PowerShell scripts are provided as is and are not warranted to work in all situations. They are provided as samples that will have to be modified to reflect each installation. They have been tested and are known to work, but they do not include error checking. Incorrect changes to any one of the files can render the script inoperable, so some experience with PowerShell is recommended on the part of the person making the modifications.

Create-UcsHyperVFastTrack.ps1

# Global Variables - These need to be tailored to the customer environment
$ucs = "192.168.171.129"
$ucsuser = "Admin"
$ucspass = "admin"
$mgtippoolblockfrom = "10.29.130.5"
$mgtippoolblockto = "10.29.130.12"
$mgtippoolgw = "10.29.130.1"
$maintpolicy = "immediate" # Possible values "immediate", "timer-automatic", "user-ack"
$WarningPreference = "SilentlyContinue"
# vNIC information - build an array of values for working with vNICs, vNIC templates, VLANs
# Values are: Name, MTU size, SwitchID, VLAN tag, order, QoS Policy
$vnicarray = @()
$vnicarray +=, ("CSV", "9000", "A-B", "12", "5", "")
$vnicarray +=, ("ClusComm", "9000", "A-B", "16", "3", "")
$vnicarray +=, ("LiveMigration", "9000", "B-A", "11", "4", "LiveMigration")
$vnicarray +=, ("Mgmt", "1500", "A-B", "1", "1", "")
$vnicarray +=, ("VMaccess", "1500", "A-B", "1", "2", "")
# VLAN information for Appliance ports
$iScsiVlanA = "iSCSI-A"
$iSCSIVlanAID = 18
$iScsiVlanB = "iSCSI-B"
$iScsiVlanBID = 19
$serverports = 3, 4, 5, 6
$applianceport1 = 29
$applianceport2 = 30
$qoslivemigration = "platinum"
$qosiscsi = "gold"
############
# The following variables are used for Tenant definitions
#
$tenantname = "FastTrack3" # Used to create sub-organization and resource names
$tenantnum = "F3" # Two hex characters to distinguish pool values
$macpoolblockfrom = "00:25:B5:" + $tenantnum + ":01:01"
$macpoolblockto = "00:25:B5:" + $tenantnum + ":01:FF"
$wwpnpoolblockfrom = "20:00:00:25:B5:" + $tenantnum + ":02:01"
$wwpnpoolblockto = "20:00:00:25:B5:" + $tenantnum + ":02:10"
$wwnnpoolblockfrom = "20:00:00:25:B5:" + $tenantnum + ":03:01"
$wwnnpoolblockto = "20:00:00:25:B5:" + $tenantnum + ":03:10"
$uuidpoolblockfrom = "00" + $tenantnum + "-000000000001"
$uuidpoolblockto = "00" + $tenantnum + "-00000000002F"
##### ------------------------------------------------- #####
## Start of code
# Import Modules
if ((Get-Module | where { $_.Name -ilike "CiscoUcsPS" }).Name -ine "CiscoUcsPS")
{
    Write-Host "Loading Module: Cisco UCS PowerTool Module"
    Import-Module CiscoUcsPs
}
Set-UcsPowerToolConfiguration -SupportMultipleDefaultUcs $false | Out-Null
Try {
    # Login to UCS
    Write-Host "UCS: Logging into UCS Domain: $ucs"
    $ucspasswd = ConvertTo-SecureString $ucspass -AsPlainText -Force
    $ucscreds = New-Object System.Management.Automation.PSCredential ($ucsuser, $ucspasswd)
    $ucslogin = Connect-Ucs -Credential $ucscreds $ucs
    $rootorg = Get-UcsManagedObject -Dn "org-root"
    # Create Server Ports
    Write-Host "UCS: Creating Server Ports"
    $mo = Get-UcsFabricServerCloud
    ForEach ($srvrprt in $serverports)
    {
        $mo | Add-UcsServerPort -PortId $srvrprt -SlotId 1 | Out-Null
    }
    # Create Management IP Pool
    Write-Host "UCS: Creating Management IP Pool"
    Get-UcsOrg -Level root | Get-UcsIpPool -Name "ext-mgmt" -LimitScope | Add-UcsIpPoolBlock -DefGw $mgtippoolgw -From $mgtippoolblockfrom -To $mgtippoolblockto | Out-Null
    # Create QoS for Live Migration, iSCSI, and Best Effort
    Write-Host "UCS: Creating QoS for Live Migration, iSCSI, and Best Effort"
    Start-UcsTransaction
    $mo = Get-UcsQosClass -Priority $qosiscsi | Set-UcsQosClass -AdminState enabled -Mtu 9000 -Force | Out-Null
    $mo = Get-UcsQosClass -Priority $qoslivemigration | Set-UcsQosClass -AdminState enabled -Mtu 9000 -Force | Out-Null
    $mo = Get-UcsBestEffortQosClass | Set-UcsBestEffortQosClass -Mtu 9000 -Force | Out-Null
    $mo = $rootorg | Add-UcsQosPolicy -Name LiveMigration
    $mo | Set-UcsVnicEgressPolicy -Prio $qoslivemigration -Force | Out-Null
    $mo = $rootorg | Add-UcsQosPolicy -Name iSCSI
    $mo | Set-UcsVnicEgressPolicy -Prio $qosiscsi -Force | Out-Null
    Complete-UcsTransaction | Out-Null
    # Create any needed VLANs
    Write-Host "UCS: Creating VLANs"
    Start-UcsTransaction
    $i = 0
    while ($i -lt $vnicarray.length)
    {
        $entry = $vnicarray[$i]
        Get-UcsLanCloud | Add-UcsVlan -Name $entry[0] -Id $entry[3] | Out-Null
        $i++
    }
    Complete-UcsTransaction | Out-Null
    # Create VLANs for Appliance Ports
    Write-Host "UCS: Creating VLANs for Appliance Ports"
    Start-UcsTransaction
    Get-UcsFiLanCloud -Id "A" | Add-UcsVlan $iScsiVlanA -Id $iScsiVlanAID -DefaultNet "no" -Sharing "none" | Out-Null
    Get-UcsFiLanCloud -Id "B" | Add-UcsVlan $iScsiVlanB -Id $iScsiVlanBID -DefaultNet "no" -Sharing "none" | Out-Null
    Get-UcsFabricApplianceCloud -Id "A" | Add-UcsVlan -Id $iScsiVlanAID -Name $iScsiVlanA | Out-Null
    Get-UcsFabricApplianceCloud -Id "B" | Add-UcsVlan -Id $iScsiVlanBID -Name $iScsiVlanB | Out-Null
    Complete-UcsTransaction | Out-Null
    #####
    ## Create Tenant sub-organization, templates, pools, and policies
    #
    # Create Tenant sub-organization
    Write-Host "UCS: $tenantname - Creating sub-organization"
    $tenantorg = Add-UcsOrg -Org root -Name $tenantname
    # Create Local Disk Configuration Policy
    Write-Host "UCS: Creating Local Disk Configuration Policy"
    $tenantorg | Add-UcsLocalDiskConfigPolicy -Mode "any-configuration" -Name $tenantname -ProtectConfig "yes" | Out-Null
    # Create Mac Pool
    Write-Host "UCS: $tenantname - Creating MAC Pool"
    Start-UcsTransaction
    $mo = $tenantorg | Add-UcsMacPool -Name $tenantname
    $mo | Add-UcsMacMemberBlock -From $macpoolblockfrom -To $macpoolblockto | Out-Null
    Complete-UcsTransaction | Out-Null
    # Create WWPN Pool
    Write-Host "UCS: $tenantname - Creating WWPN Pool"
    Start-UcsTransaction
    $mo = $tenantorg | Add-UcsWwnPool -Name wwpn $tenantname -Purpose "port-wwn-assignment"
    $mo | Add-UcsWwnMemberBlock -From $wwpnpoolblockfrom -To $wwpnpoolblockto | Out-Null
    Complete-UcsTransaction | Out-Null
    # Create WWNN Pool
    Write-Host "UCS: $tenantname - Creating WWNN Pool"
    Start-UcsTransaction
    $mo = $tenantorg | Add-UcsWwnPool -Name wwnn $tenantname -Purpose "node-wwn-assignment"
    $mo | Add-UcsWwnMemberBlock -From $wwnnpoolblockfrom -To $wwnnpoolblockto | Out-Null
    Complete-UcsTransaction | Out-Null
    # Create UUID Suffix Pool
    Write-Host "UCS: $tenantname - Creating UUID Suffix Pool"
    Start-UcsTransaction
    $mo = $tenantorg | Add-UcsUuidSuffixPool -Name $tenantname
    $mo | Add-UcsUuidSuffixBlock -From $uuidpoolblockfrom -To $uuidpoolblockto | Out-Null
    Complete-UcsTransaction | Out-Null
    # Create BIOS, Power, and Scrub Policies
    Write-Host "UCS: $tenantname - Creating BIOS and Scrub Policies"
    Add-UcsBiosPolicy -Name $tenantname | Set-UcsBiosVfQuietBoot -VpQuietBoot disabled -Force | Out-Null
    Add-UcsPowerPolicy -Name $tenantname -Prio "no-cap" | Out-Null
    Add-UcsScrubPolicy -org $rootorg -Name $tenantname -BiosSettingsScrub "no" -DiskScrub "no" | Out-Null
    Add-UcsMaintenancePolicy -org $rootorg -Name $tenantname -UptimeDisr $maintpolicy | Out-Null
    # Create Server Pool
    Write-Host "UCS: $tenantname - Creating Server Pool"
    $serverpool = $tenantorg | Add-UcsServerPool -Name $tenantname
    # Create Server Qualification Policy (edit to reflect the customer's server models)
    Write-Host "UCS: $tenantname - Creating Server Qualification Policy"
    Start-UcsTransaction
    $serverqualpol = $tenantorg | Add-UcsServerPoolQualification -Name $tenantname
    $serveradaptorqual = $serverqualpol | Add-UcsAdaptorQualification
    $serveradaptorcapqual = $serveradaptorqual | Add-UcsAdaptorCapQualification -Maximum "unspecified" -Model "N2XX-ACPCI01" -Type "virtualized-eth-if"
    $serverrackqual = $serverqualpol | Add-UcsRackQualification -MinId 1 -MaxId 8
    Complete-UcsTransaction | Out-Null
    # Create Server Pool Policy (for dynamic server pools based on qualification policy)
    Write-Host "UCS: $tenantname - Creating Server Pool Policy"
    $serverpoolpol = $tenantorg | Add-UcsServerPoolPolicy -Name $tenantname -PoolDn $serverpool.dn -Qualifier $serverqualpol.Name
    # Create VNIC templates
    Write-Host "UCS: $tenantname - Creating VNIC templates"
    Start-UcsTransaction
    $i = 0
    while ($i -lt $vnicarray.length)
    {
        $entry = $vnicarray[$i]
        $vnictemplate = $tenantorg | Add-UcsVnicTemplate -IdentPoolName $tenantname -Name $entry[0] -Mtu $entry[1] -SwitchId $entry[2] -Target "adaptor" -TemplType "updating-template" -QosPolicyName $entry[5]
        Add-UcsVnicInterface -VnicTemplate $vnictemplate -DefaultNet "yes" -Name $entry[0] | Out-Null
        $i++
    }
    $mo = $tenantorg | Add-UcsVnicTemplate -IdentPoolName $tenantname -Mtu 9000 -Name $iSCSIVlanA -QosPolicyName "iSCSI" -SwitchId "A" -TemplType "updating-template"
    $mo | Add-UcsVnicInterface -DefaultNet "yes" -Name $iSCSIVlanA | Out-Null
    $mo = $tenantorg | Add-UcsVnicTemplate -IdentPoolName $tenantname -Mtu 9000 -Name $iSCSIVlanB -QosPolicyName "iSCSI" -SwitchId "B" -Target "adaptor" -TemplType "updating-template"
    $mo | Add-UcsVnicInterface -DefaultNet "yes" -Name $iSCSIVlanB | Out-Null
    Complete-UcsTransaction | Out-Null
    # Create Appliance Ports for iSCSI connection.
    Write-Host "UCS: Creating Appliance Ports for iSCSI connectivity"
    Start-UcsTransaction
    Get-UcsFabricApplianceCloud -Id "A" | Add-UcsAppliancePort -AdminSpeed "10gbps" -AdminState "enabled" -PortId $applianceport2 -PortMode "trunk" -Prio $qosiscsi -SlotId 1 | Out-Null
    Get-UcsFabricApplianceCloud -Id "A" | Add-UcsAppliancePort -AdminSpeed "10gbps" -AdminState "enabled" -PortId $applianceport1 -PortMode "trunk" -Prio $qosiscsi -SlotId 1 | Out-Null
    Get-UcsApplianceCloud | Get-UcsVlan -Name vlan $iScsiVlanA -LimitScope | Add-UcsVlanMemberPort -AdminState "enabled" -PortId $applianceport1 -SlotId 1 -SwitchId "A"
    Get-UcsApplianceCloud | Get-UcsVlan -Name vlan $iScsiVlanA -LimitScope | Add-UcsVlanMemberPort -AdminState "enabled" -PortId $applianceport2 -SlotId 1 -SwitchId "A"
    Get-UcsFabricApplianceCloud -Id "B" | Add-UcsAppliancePort -AdminSpeed "10gbps" -AdminState "enabled" -PortId $applianceport2 -PortMode "trunk" -Prio $qosiscsi -SlotId 1 | Out-Null
    Get-UcsFabricApplianceCloud -Id "B" | Add-UcsAppliancePort -AdminSpeed "10gbps" -AdminState "enabled" -PortId $applianceport1 -PortMode "trunk" -Prio $qosiscsi -SlotId 1 | Out-Null
    Get-UcsApplianceCloud | Get-UcsVlan -Name vlan $iScsiVlanB -LimitScope | Add-UcsVlanMemberPort -AdminState "enabled" -PortId $applianceport1 -SlotId 1 -SwitchId "B"
    Get-UcsApplianceCloud | Get-UcsVlan -Name vlan $iScsiVlanB -LimitScope | Add-UcsVlanMemberPort -AdminState "enabled" -PortId $applianceport2 -SlotId 1 -SwitchId "B"
    Complete-UcsTransaction | Out-Null
    # Create Service Profile Template (using MAC, WWPN, Server Pools, VLANs, etc.
previously created steps) with desired power state to downWrite-Host "UCS: $tenantname - Creating SP Template: $tenantname in UCS org:" $tenantorg .dnStart-UcsTransaction$mo = $tenantorg | Add-UcsServiceProfile -ExtIPState "none" -IdentPoolName $tenantname -LocalDiskPolicyName $tenantname -PowerPolicyName $tenantname -ScrubPolicyName $tenantname -Name $tenantname -Type "updating-template" -Uuid "0"$mo | Add-UcsVnicDefBeh -Action "none" -Type "vhba" | Out-Null$i = 0while ( $i -lt $vnicarray .length){$entry = $vnicarray [ $i ]$mo | Add-UcsVnic -AdaptorProfileName "Windows" -Addr "derived" -AdminVcon "1" -Name $entry [ 0 ] -NwTemplName $entry [ 0 ] -order $entry [ 4 ] | Out-Null$i ++}Complete-UcsTransaction | Out-Null# Logout of UCSWrite-Host "UCS: Logging out of UCS: $ucs "Disconnect-Ucs}Catch{Write-Host "Error occurred in script:"Write-Host ${Error}exit}Create-UcsHyperVIscsi.ps1
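The script reads every entry of `$vnicarray` by position, but the array itself is set before the UCS configuration steps run. Judging from how the loops use it, field 0 becomes the VLAN, vNIC template, and vNIC name, field 1 the `-Mtu`, field 2 the `-SwitchId`, field 3 the VLAN `-Id`, field 4 the vNIC `-Order`, and field 5 the `-QosPolicyName`. The sketch below illustrates that layout. All vNIC names, VLAN IDs, MTUs, and QoS assignments in it are hypothetical example values, not the values used in this design, and treating an empty QoS policy name as "no policy" is an assumption.

```powershell
# Hypothetical $vnicarray definition illustrating the field order that
# Create-UcsHyperVIscsi.ps1 relies on. Every value here is an example only.
#   [0] vNIC / VLAN name   [1] MTU          [2] fabric ("A" or "B")
#   [3] VLAN ID            [4] vNIC order   [5] QoS policy name
# An empty QoS policy name is assumed to mean "no QoS policy".
$vnicarray = @(
    @("Mgmt",          "1500", "A", "10", "1", ""),
    @("CSV",           "9000", "B", "11", "2", ""),
    @("LiveMigration", "9000", "A", "12", "3", "LiveMigration"),
    @("VMAccess",      "1500", "B", "13", "4", "")
)

# Walk the entries the same way the script does and show which
# UCS cmdlet parameter each field feeds.
foreach ($entry in $vnicarray)
{
    "{0}: -Mtu {1} -SwitchId {2} -Id (VLAN) {3} -Order {4} -QosPolicyName '{5}'" -f $entry[0], $entry[1], $entry[2], $entry[3], $entry[4], $entry[5]
}
```

Because the script indexes fields by position, any entry added to the array has to supply all six fields in this order; a missing field would silently shift the MTU, fabric, or VLAN ID into the wrong parameter.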