Cisco HyperFlex for Google Cloud’s Anthos

Cisco HyperFlex with HX-CSI Plugin for Google Cloud’s Anthos GKE on-prem Deployment

Published: May 2020

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2020 Cisco Systems, Inc. All rights reserved.

About the Anthos Ready Partner Initiative

Anthos Ready designates Partner solutions that meet Google Cloud’s qualification requirements and have been verified to work with Anthos to meet the infrastructure and application development needs of enterprise customers. Partner solutions that complete and maintain the applicable qualification requirements are awarded the Works with Anthos badge which may be used with the qualified solution.

https://cloud.google.com/partners/anthos-ready

Table of Contents

Cisco Unified Computing System

Cisco UCS 6332-16UP Fabric Interconnect

Cisco HyperFlex HX-Series Nodes

Cisco HyperFlex HXAF240c-M5SX All-Flash Node

Cisco VIC 1387 MLOM Interface Cards

Cisco HyperFlex Data Platform Software

Cisco HyperFlex Connect HTML5 Management Web Page

Cisco HyperFlex HX Data Platform Administration Plug-in

Cisco HyperFlex HX Data Platform Controller

Data Operations and Distribution

Data Write and Compression Operations

Data Destaging and Deduplication

Cisco HyperFlex CSI Storage Plugin

Continuous Integration / Continuous Delivery with Anthos

Google Cloud Marketplace for Kubernetes Apps

Anthos GKE on-prem Network Topology

Deployment Hardware and Software

Cisco HyperFlex Cluster Configuration

Deploy Cisco HyperFlex Data Platform Installer VM

Cisco HyperFlex Cluster Creation

Run Cluster Post Installation Script

Adding a Converged Node through HX Installer

Upgrade Cisco HyperFlex Data Platform

Anthos GKE on-prem Prerequisites

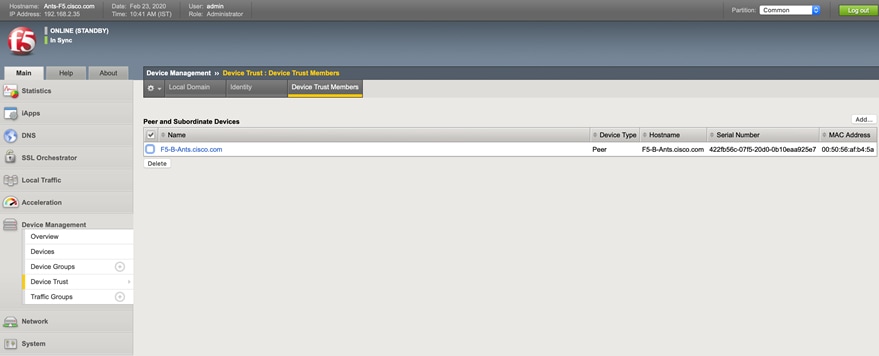

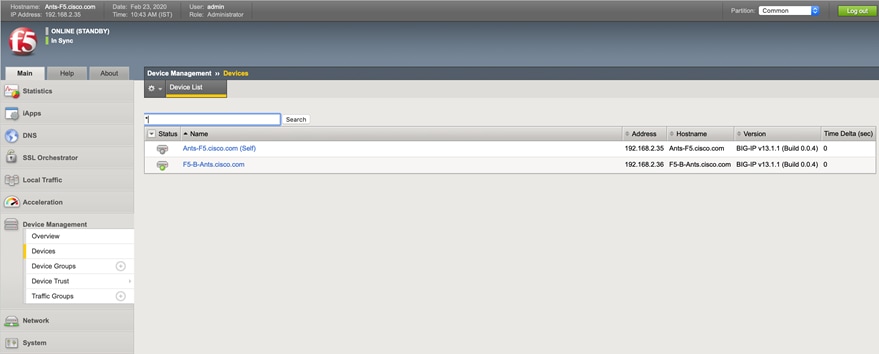

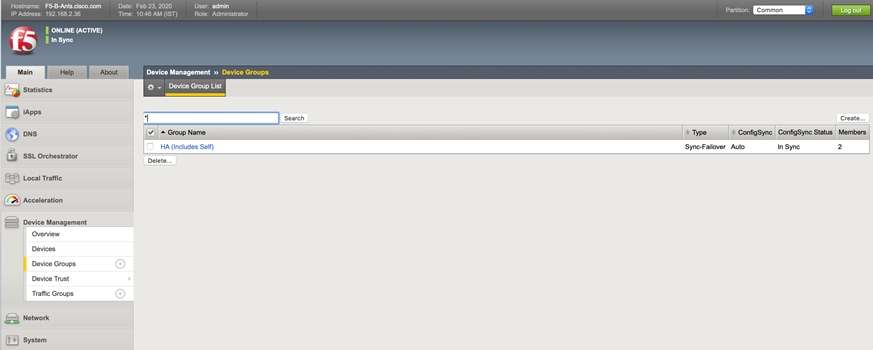

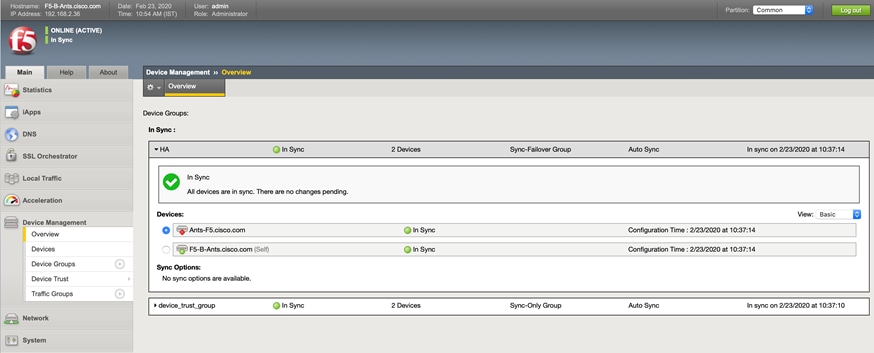

L4 Load Balancer Configuration

Configuring Private Docker Repository

Setting up Proxy Environment (optional)

Configuring Static IPs for Admin and User Cluster

Creating Cluster Configuration File

Prepare Cluster with Configuration File

Create Cluster with Configuration file

Deploy Additional User Cluster

Enabling Ingress for L4 Load Balancer

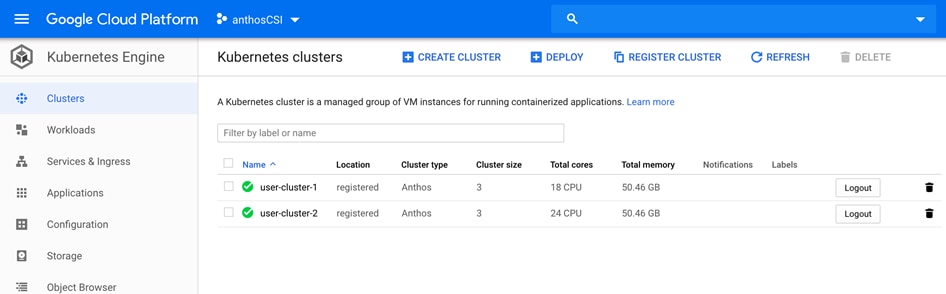

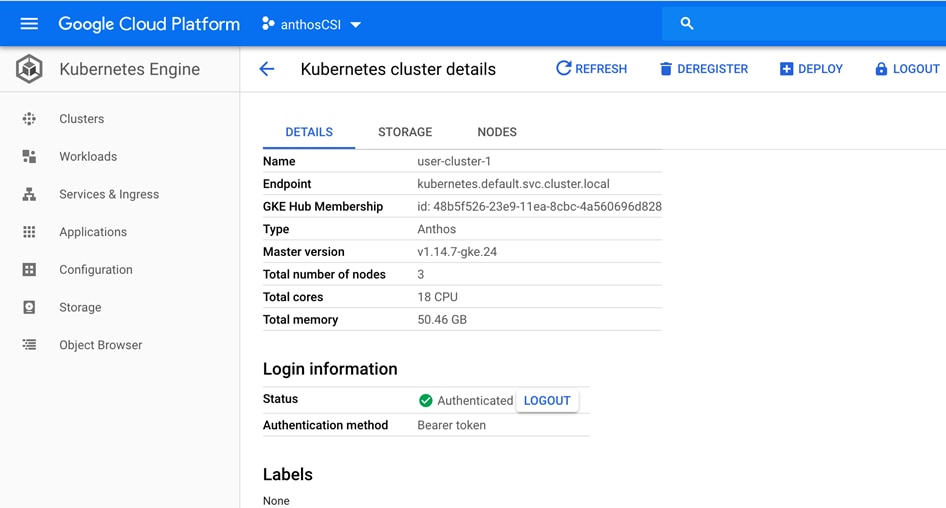

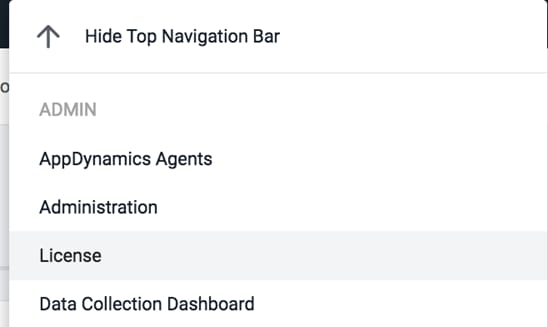

Enabling Access to User Clusters with Google Cloud Console

Google Cloud Platform Marketplace

Installing HX-CSI Storage Plugin

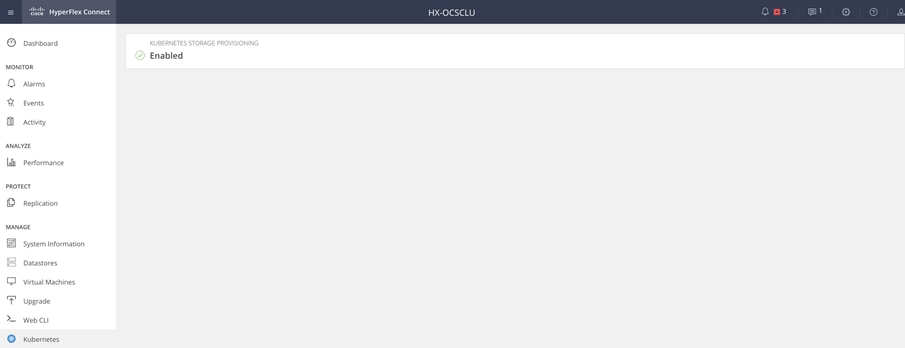

Enabling Kubernetes Integration in Cisco HyperFlex Connect

Installing the Cisco HyperFlex CSI Plugin for Kubernetes

Verifying Cisco HyperFlex CSI Storage Class Creation

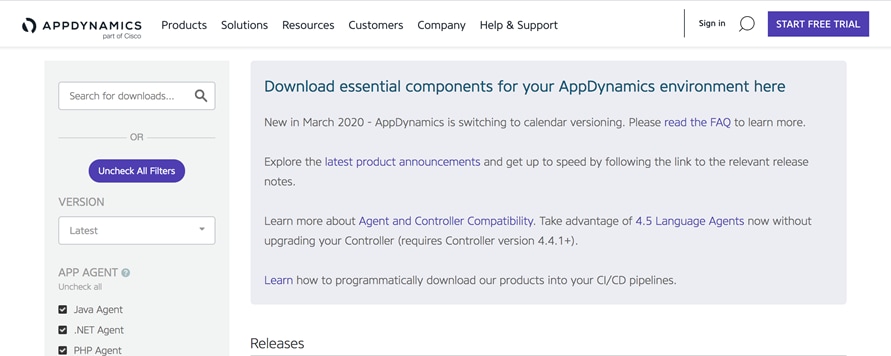

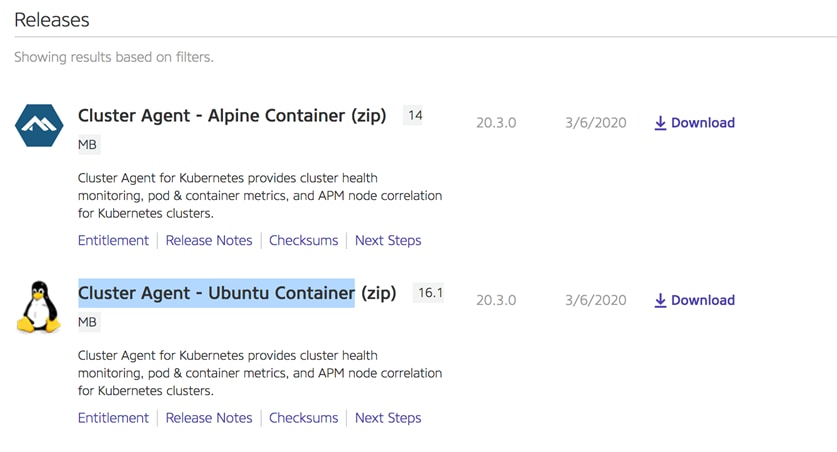

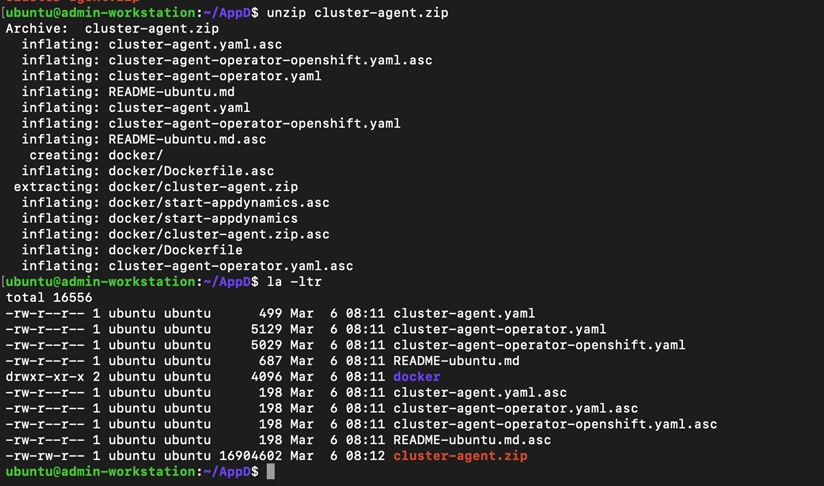

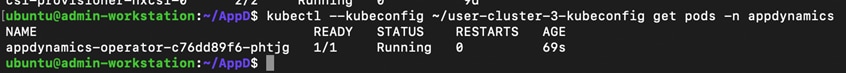

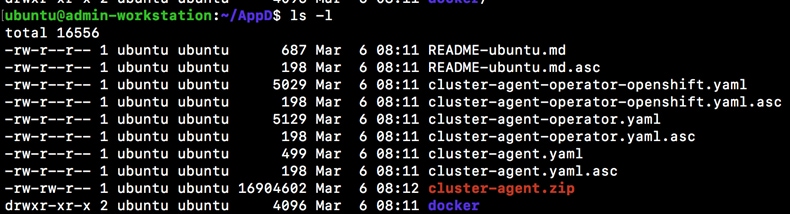

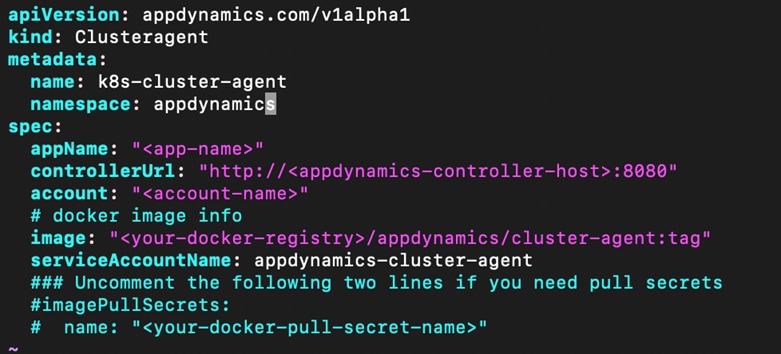

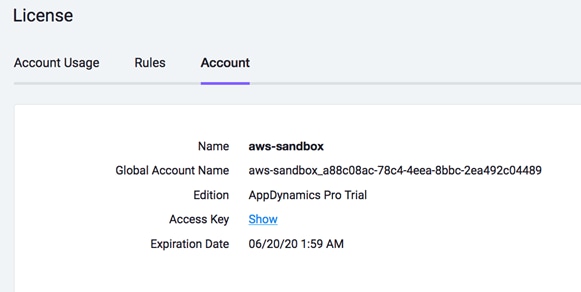

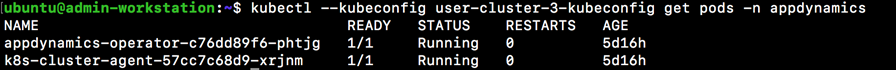

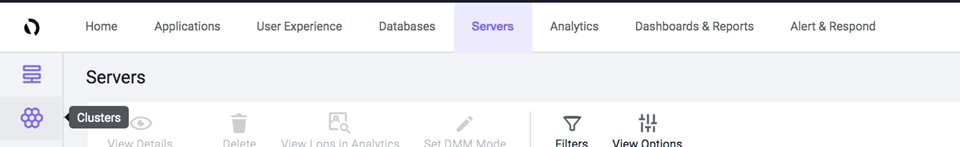

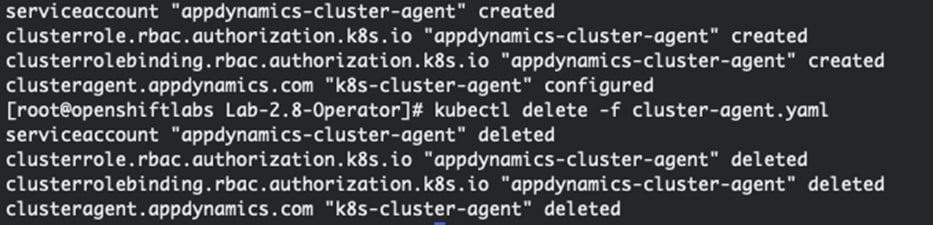

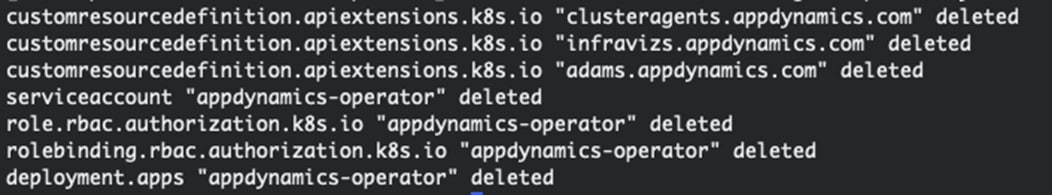

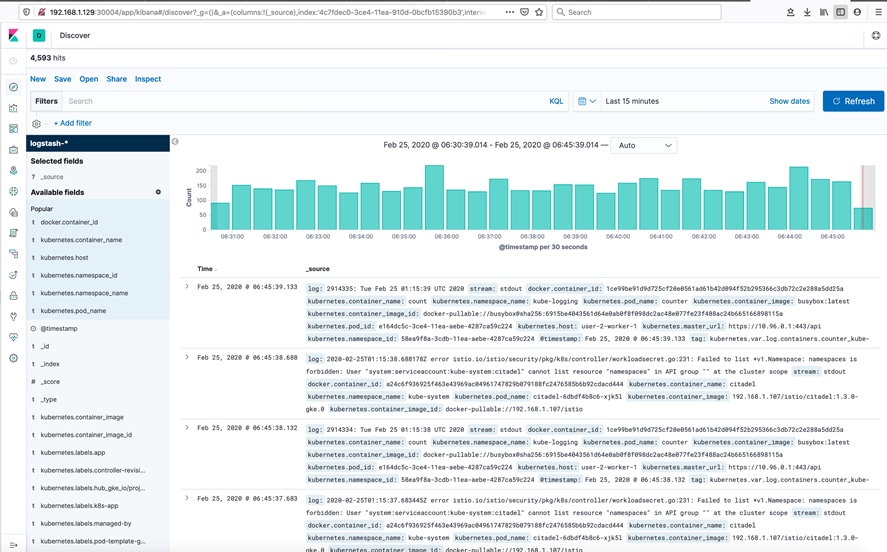

Installing AppDynamics for Monitoring Anthos GKE on-prem Cluster

Prerequisites for Cluster Agent Deployment

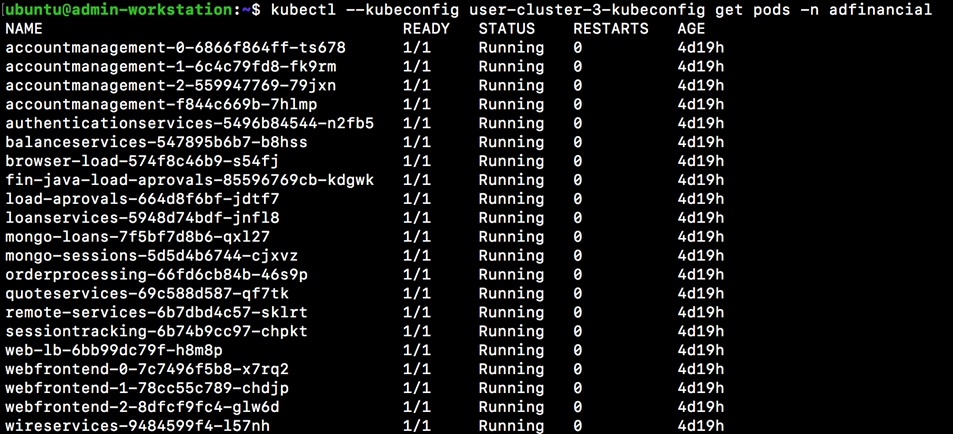

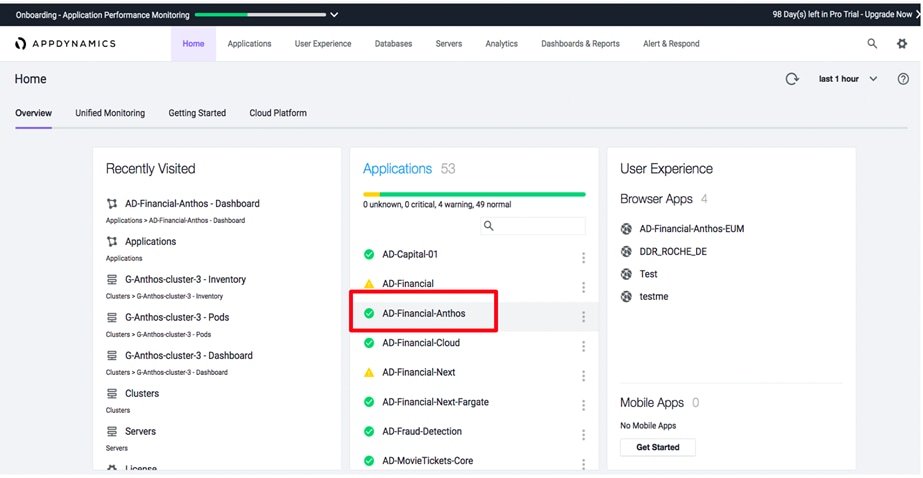

Monitor Demo Application Deployed on Anthos GKE on-prem cluster

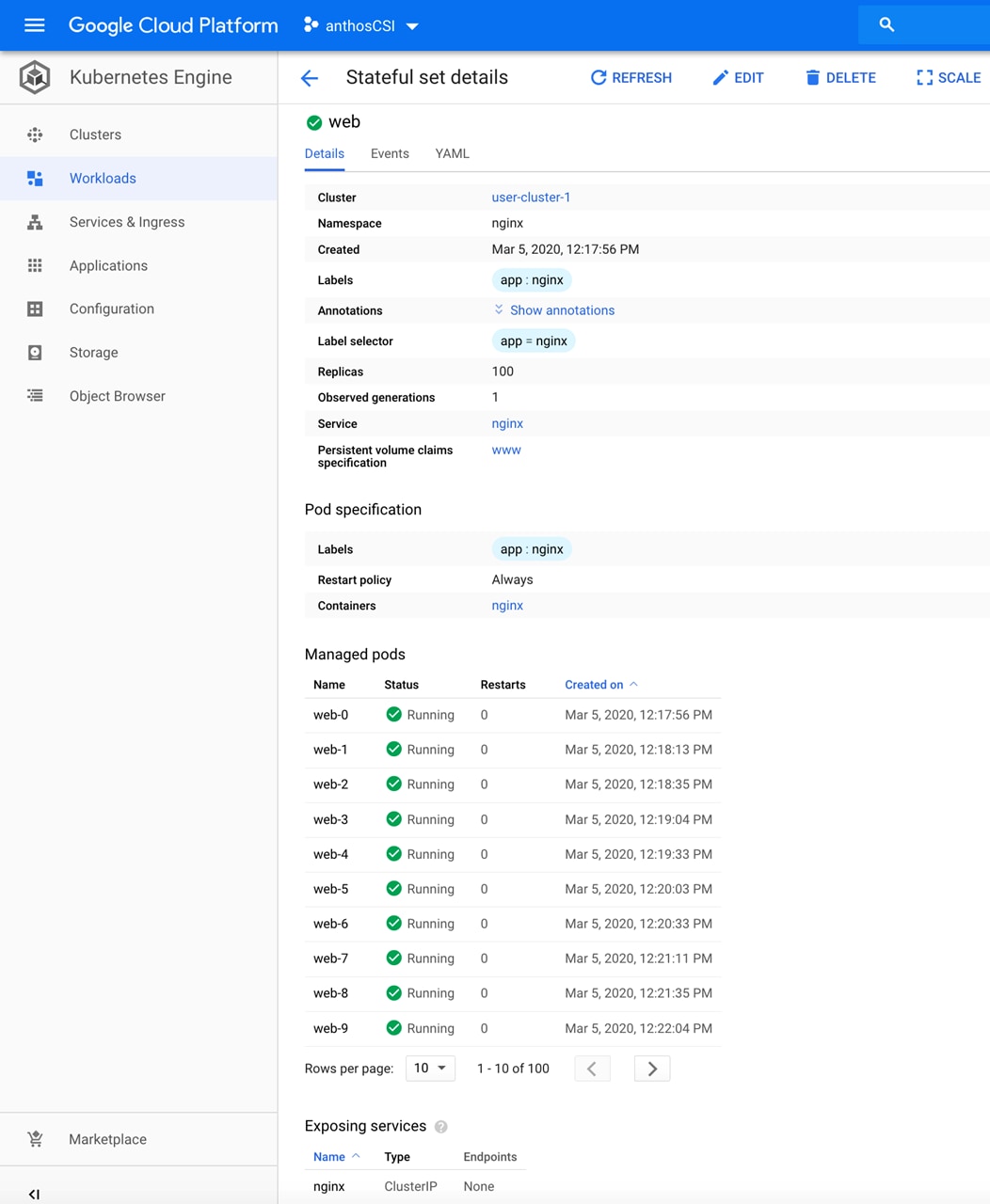

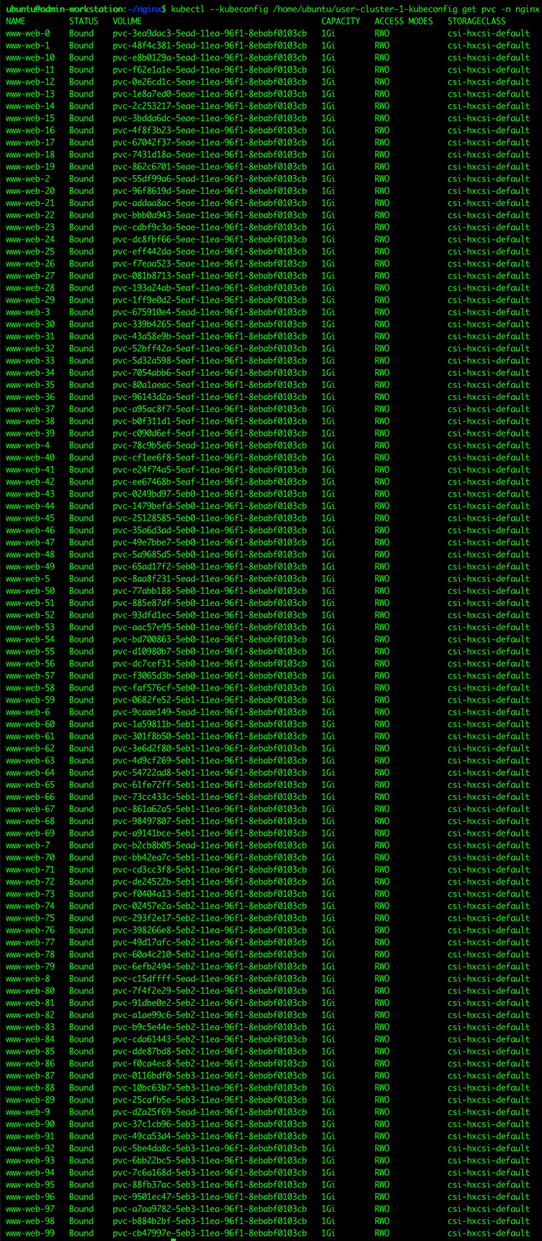

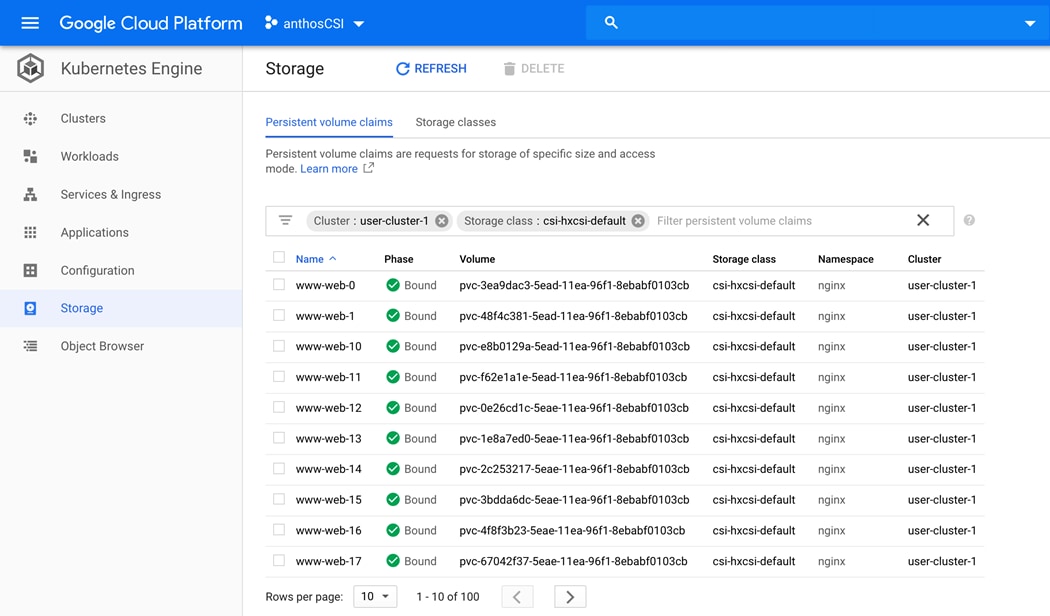

Stateful Application Deployment

Sizing Considerations for Anthos GKE on Cisco HyperFlex

Appendix – Stateful Application YAML Examples

Stateful Application Deployment

Stateful Application for Scale Test

As enterprises undergo digital transformation, customers are modernizing their IT infrastructure to provide the performance, availability, scalability, and agility required to meet today's business demands. HyperConverged Infrastructure (HCI), the software-defined storage platform, has been the fastest growing platform providing an excellent fit for such growing business needs.

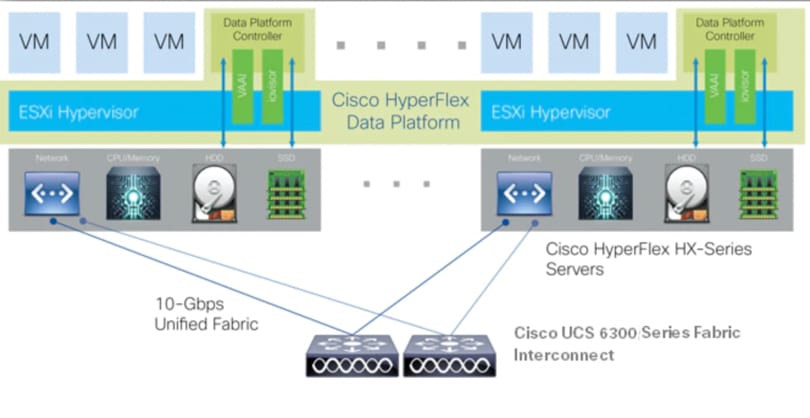

Cisco HyperFlex™ unifies compute, storage, and networking from the core to the edge. Cisco HyperFlex provides best-in-class HyperConverged Infrastructure that dramatically simplifies IT needs by providing a common building block for virtualized as well as Kubernetes-based workloads.

Google Cloud’s Anthos is an open hybrid and multi-cloud application platform that enables users to modernize existing applications and build new ones while running them anywhere in a secure manner. Built on open source technologies pioneered by Google, including Kubernetes, Istio, and Knative, Anthos enables consistency between on-premises and cloud environments and helps accelerate application development. Anthos includes GKE on-prem, which helps businesses modernize on-premises and transition to the cloud with hybrid architectures, promoting agility and innovation.

By bringing together Anthos and Cisco HyperFlex hyperconverged infrastructure, customers can unlock new levels of speed and agility by simplifying the building, running, and managing of modern applications in a hybrid cloud environment. Running Anthos on Cisco HyperFlex enables independent scaling of compute and storage and provides guaranteed performance and simplified management. This solution enables users to quickly deploy and manage Kubernetes-based applications on Cisco HyperFlex or in the cloud.

Kubernetes Monitoring with Cisco’s AppDynamics (optional component) gives organizations visibility into application and business performance, providing unparalleled insights into containerized applications, Kubernetes clusters and underlying infrastructure metrics—all through a single pane of glass.

This document details the deployment steps and best practices for this solution.

Introduction

HyperConverged Infrastructures coalesce the computing, memory, hypervisor, and storage devices of servers into a single platform of virtual servers. There is no longer a separate storage system, since the servers running the hypervisors also provide the software-defined storage resources to store the virtual servers, effectively storing the virtual machines on themselves. The benefits include:

· The silos are gone

· HCI is self-contained

· Simpler to use, faster to deploy, easier to consume

· Flexible and with high performance

The Cisco HyperFlex Kubernetes CSI Integration allows Cisco HyperFlex to dynamically provide persistent storage to stateful containerized workloads (from Kubernetes version 1.13 or later). The integration enables orchestration of the entire Persistent Volume object lifecycle to be offloaded and managed by Cisco HyperFlex, while being driven (initiated) by developers and users through standard Kubernetes Persistent Volume Claim objects. Developers and users get the benefit of leveraging Cisco HyperFlex for their Kubernetes persistent storage needs with zero additional administration overhead from their perspective. The HyperFlex Container Storage Interface (CSI) plug-in extends the performance, efficiency, and resiliency of HyperFlex to Kubernetes stateful applications.
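As a minimal sketch of what "driven through standard Kubernetes Persistent Volume Claim objects" can look like, the snippet below builds a claim manifest and prints it as JSON. The claim name, the requested size, and the storage class name `csi-hxcsi-default` are illustrative assumptions, not values taken from this section; substitute the storage class that exists in your cluster.

```python
import json

# Assumption: placeholder storage class name, not confirmed by this section.
STORAGE_CLASS = "csi-hxcsi-default"


def build_pvc(name: str, size_gi: int, storage_class: str = STORAGE_CLASS) -> dict:
    """Return a PersistentVolumeClaim manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # A single node mounts the volume read-write.
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


# Hypothetical claim name and size, used only for illustration.
pvc = build_pvc("demo-data", 10)
print(json.dumps(pvc, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest can be saved to a file and applied with `kubectl apply -f <file>`. The CSI driver named by the storage class then provisions the backing volume.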

Anthos GKE on-prem deployed on Cisco HyperFlex provides a Container-as-a-Service environment with Anthos version 1.2 or higher. Cisco HyperFlex is qualified as an Anthos Ready Platform. This solution provides end-to-end orchestration, management, and a scalable architecture to deploy Anthos on Cisco HyperFlex, with the HyperFlex CSI (Container Storage Interface) driver providing persistent storage to the containerized workloads. The HyperFlex CSI driver is also qualified by Google. The solution also includes Cisco AppDynamics, which provides the application visibility needed to monitor containerized workloads running on Anthos GKE on-prem and in the cloud, and shows how AppDynamics integrates through lightweight monitoring agents.

Audience

The intended audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers deploying the Cisco HyperFlex System and Anthos. External references are provided wherever applicable, but readers are expected to be familiar with VMware-specific technologies, infrastructure concepts, networking connectivity, and security policies of the customer installation.

Purpose of this Document

This document describes the steps required to deploy, configure, and manage a standard Cisco HyperFlex system (in other words, a Cisco HyperFlex cluster connected to Cisco UCS Fabric Interconnects) to support Anthos. The document is based on all known best practices using the software, hardware, and firmware revisions specified in the document at the time of publication. This document showcases the installation, configuration, and expansion of Cisco HyperFlex standard clusters with converged nodes, and the deployment of Anthos GKE on-prem in a typical customer datacenter environment. While readers of this document are expected to have sufficient knowledge to install and configure the products used, configuration details that are important to the deployment of this solution are provided in this document.

Solution Summary

The Cisco HyperFlex system provides a fully contained virtual server platform, with compute and memory resources, integrated networking connectivity, a distributed high-performance log-based filesystem for VM storage, and the hypervisor software for running the virtualized servers, all within a single Cisco UCS management domain. Cisco HyperFlex is compatible with and supports Kubernetes and provides Cisco HyperFlex CSI (HX-CSI) integration for dynamic provisioning of persistent storage to stateful Kubernetes workloads running on HyperFlex platform. The HX-CSI plugin is based on the Kubernetes Container Storage Interface (CSI) specification. Customers can now use the HX-CSI plugin to provision and manage persistent volumes in Kubernetes version 1.13 and later.

Figure 1 Cisco HyperFlex System for Kubernetes Overview

Cisco Unified Computing System

Cisco Unified Computing System (Cisco UCS) is a next-generation data center platform that unites compute, network, and storage access. The platform, optimized for virtual environments, is designed using open industry-standard technologies and aims to reduce total cost of ownership (TCO) and increase business agility. The system integrates a low-latency, lossless 10 Gigabit Ethernet, 25 Gigabit Ethernet or 40 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. It is an integrated, scalable, multi chassis platform in which all resources participate in a unified management domain.

The main components of Cisco Unified Computing System are:

· Computing: The system is based on an entirely new class of computing system that incorporates rack-mount and blade servers based on Intel Xeon Processors.

· Network: The system is integrated onto a low-latency, lossless, 10Gbps, 25Gbps or 40Gbps unified network fabric, with an option for 100Gbps uplinks. This network foundation consolidates LANs, SANs, and high-performance computing networks which are often separate networks today. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables, and by decreasing the power and cooling requirements.

· Virtualization: The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

· Storage access: The system provides consolidated access to both SAN storage and Network Attached Storage (NAS) over the unified fabric. By unifying storage access, Cisco Unified Computing System can access storage over Ethernet, Fibre Channel, Fibre Channel over Ethernet (FCoE), and iSCSI. This provides customers with their choice of storage protocol and physical architecture, and enhanced investment protection. In addition, the server administrators can pre-assign storage-access policies for system connectivity to storage resources, simplifying storage connectivity, and management for increased productivity.

· Management: The system uniquely integrates all system components which enable the entire solution to be managed as a single entity by Cisco UCS Manager (UCSM). Cisco UCS Manager has an intuitive GUI, a CLI, and a robust API to manage all system configuration and operations. Cisco UCS can also be managed by Cisco Intersight, a cloud-based management and monitoring platform which offers a single pane of glass portal for multiple Cisco UCS systems across multiple locations.

Cisco Unified Computing System is designed to deliver:

· A reduced Total Cost of Ownership and increased business agility.

· Increased IT staff productivity through just-in-time provisioning and mobility support.

· A cohesive, integrated system which unifies the technology in the data center. The system is managed, serviced and tested as a whole.

· Scalability through a design for hundreds of discrete servers and thousands of virtual machines and the capability to scale I/O bandwidth to match demand.

· Industry standards supported by a partner ecosystem of industry leaders.

Cisco UCS Fabric Interconnect

Cisco UCS Fabric Interconnect (FI) is a core part of Cisco Unified Computing System, providing both network connectivity and management capabilities for the system. Depending on the model chosen, Cisco UCS Fabric Interconnect offers line-rate, low-latency, lossless Ethernet, Fibre Channel over Ethernet (FCoE) and Fibre Channel connectivity. Cisco UCS Fabric Interconnects provide the management and communication backbone for the Cisco UCS C-Series, S-Series and HX-Series Rack-Mount Servers, Cisco UCS B-Series Blade Servers and Cisco UCS 5100 Series Blade Server Chassis. All servers and chassis, and therefore all blades, attached to the Cisco UCS Fabric Interconnects become part of a single, highly available management domain. In addition, by supporting unified fabrics, Cisco UCS Fabric Interconnects provide both the LAN and SAN connectivity for all servers within its domain. The product family supports Cisco low-latency, lossless Ethernet unified network fabric capabilities, which increase the reliability, efficiency, and scalability of Ethernet networks. The Fabric Interconnect supports multiple traffic classes over the Ethernet fabric from the servers to the uplinks. Significant TCO savings come from an FCoE-optimized server design in which network interface cards (NICs), host bus adapters (HBAs), cables, and switches can be consolidated.

Cisco UCS 6332-16UP Fabric Interconnect

Cisco UCS 6332-16UP Fabric Interconnect is a one-rack-unit (1RU) 10/40 Gigabit Ethernet, FCoE, and native Fibre Channel switch offering up to 2430 Gbps of throughput. The switch has 24 40Gbps fixed Ethernet and FCoE ports, plus 16 1/10Gbps fixed Ethernet, FCoE, or 4/8/16 Gbps FC ports. Up to 18 of the 40Gbps ports can be reconfigured as 4x10Gbps breakout ports, providing up to 88 total 10Gbps ports, although Cisco HyperFlex nodes must use a 40GbE VIC adapter in order to connect to a Cisco UCS 6300 Series Fabric Interconnect.

Figure 2 Cisco UCS 6332-16UP Fabric Interconnect

When used for a Cisco HyperFlex deployment, due to mandatory QoS settings in the configuration, the 6332 and 6332-16UP will be limited to a maximum of four 4x10Gbps breakout ports, which can be used for other non-HyperFlex servers.
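The port counts quoted above can be checked with a short calculation. This is only an arithmetic sketch of the figures stated in this section (24 fixed 40Gbps ports, 16 fixed 1/10Gbps ports, up to 18 breakout-capable ports, and the limit of four breakout ports in a HyperFlex deployment); the function and constant names are illustrative.

```python
FIXED_40G_PORTS = 24       # 40Gbps Ethernet/FCoE ports
FIXED_10G_PORTS = 16       # 1/10Gbps Ethernet, FCoE, or FC ports
MAX_BREAKOUT_PORTS = 18    # 40Gbps ports that can be split into 4x10Gbps
LANES_PER_BREAKOUT = 4
HYPERFLEX_BREAKOUT_LIMIT = 4  # limit stated for HyperFlex deployments


def total_10g_ports(breakout_ports: int) -> int:
    """10Gbps ports available when `breakout_ports` 40Gbps ports are split."""
    if not 0 <= breakout_ports <= MAX_BREAKOUT_PORTS:
        raise ValueError("breakout_ports must be between 0 and 18")
    return FIXED_10G_PORTS + breakout_ports * LANES_PER_BREAKOUT


def remaining_40g_ports(breakout_ports: int) -> int:
    """40Gbps ports left after `breakout_ports` of them are split."""
    if not 0 <= breakout_ports <= MAX_BREAKOUT_PORTS:
        raise ValueError("breakout_ports must be between 0 and 18")
    return FIXED_40G_PORTS - breakout_ports


print(total_10g_ports(MAX_BREAKOUT_PORTS))            # 88
print(total_10g_ports(HYPERFLEX_BREAKOUT_LIMIT))      # 32
print(remaining_40g_ports(HYPERFLEX_BREAKOUT_LIMIT))  # 20
```

With all 18 breakout-capable ports split, the result matches the 88 total 10Gbps ports quoted above. Under the HyperFlex limit of four breakout ports, 20 of the 40Gbps ports remain unsplit.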

Cisco HyperFlex HX-Series Nodes

A standard HyperFlex cluster requires a minimum of three HX-Series “converged” nodes (that is, nodes with shared disk storage). Data is replicated across at least two of these nodes, and a third node is required for continuous operation in the event of a single-node failure. Each node that has disk storage is equipped with at least one high-performance SSD drive for data caching and rapid acknowledgment of write requests. Each node also is equipped with additional disks, up to the platform’s physical limit, for long term storage and capacity.

Cisco HyperFlex HXAF240c-M5SX All-Flash Node

This capacity optimized Cisco HyperFlex all-flash model contains a 240 GB M.2 form factor solid-state disk (SSD) that acts as the boot drive, a 240 GB housekeeping SSD drive, either a single 375 GB Optane NVMe SSD, a 1.6 TB NVMe SSD or 1.6 TB SAS SSD write-log drive installed in a rear hot swappable slot, and six to twenty-three 960 GB or 3.8 TB SATA SSD drives for storage capacity. Optionally, the Cisco HyperFlex Acceleration Engine card can be added to improve write performance and compression. For configurations requiring self-encrypting drives, the caching SSD is replaced with an 800 GB SAS SED SSD, and the capacity disks are also replaced with 960 GB or 3.8 TB SED SSDs.

Figure 3 HXAF240c-M5SX Node

In HX-series all-flash nodes either a 375 GB Optane NVMe SSD, a 1.6 TB SAS SSD or 1.6 TB NVMe SSD caching drive may be chosen. While the Optane and NVMe options can provide a higher level of performance, the partitioning of the three disk options is the same, therefore the amount of cache available on the system is the same regardless of the model chosen. Caching amounts are not factored in as part of the overall cluster capacity, only the capacity disks contribute to total cluster capacity.

Cisco VIC 1387 MLOM Interface Cards

Cisco UCS VIC 1387 Card is a dual-port Enhanced Quad Small Form-Factor Pluggable (QSFP+) 40Gbps Ethernet and Fibre Channel over Ethernet (FCoE)-capable PCI Express (PCIe) modular LAN-on-motherboard (mLOM) adapter installed in the Cisco UCS HX-Series Rack Servers. Cisco UCS VIC 1387 is used in conjunction with the Cisco UCS 6332 or 6332-16UP model Fabric Interconnects.

The mLOM is used to install a Cisco VIC without consuming a PCIe slot, which provides greater I/O expandability. It incorporates next-generation converged network adapter (CNA) technology from Cisco, providing investment protection for future feature releases. The card enables a policy-based, stateless, agile server infrastructure that can present up to 256 PCIe standards-compliant interfaces to the host, each dynamically configured as either a network interface card (NIC) or host bus adapter (HBA). The personality of the interfaces is set programmatically using the service profile associated with the server. The number, type (NIC or HBA), identity (MAC address and World Wide Name [WWN]), failover policy, adapter settings, bandwidth, and quality-of-service (QoS) policies of the PCIe interfaces are all specified using the service profile.

Figure 4 Cisco VIC 1387 mLOM Card

Hardware revision V03 or later of the Cisco VIC 1387 card is required for the Cisco HyperFlex HX-series servers.

Cisco HyperFlex Data Platform Software

The Cisco HyperFlex HX Data Platform is a purpose-built, high-performance, distributed file system with a wide array of enterprise-class data management services. The data platform’s innovations redefine distributed storage technology, exceeding the boundaries of first-generation hyperconverged infrastructures. The data platform has all the features expected in an enterprise shared storage system, eliminating the need to configure and maintain complex Fibre Channel storage networks and devices. The platform simplifies operations and helps ensure data availability. Enterprise-class storage features include the following:

· Data protection creates multiple copies of the data across the cluster so that data availability is not affected if single or multiple components fail (depending on the replication factor configured).

· Stretched clusters allow nodes to be evenly split between two physical locations, keeping a duplicate copy of all data in both locations, thereby providing protection in case of an entire site failure.

· Logical availability zones provide multiple logical groupings of nodes and distribute the data across these groups in such a way that no single group has more than one copy of the data. This enables enhanced protection from node failures, allowing for more nodes to fail while the overall cluster remains online.

· Deduplication is always on, helping reduce storage requirements in virtualization clusters in which multiple operating system instances in guest virtual machines result in large amounts of replicated data.

· Compression further reduces storage requirements, reducing costs, and the log-structured file system is designed to store variable-sized blocks, reducing internal fragmentation.

· Replication copies virtual machine level snapshots from one Cisco HyperFlex cluster to another, to facilitate recovery from a cluster or site failure, via a failover to the secondary site of all VMs.

· Encryption stores all data on the caching and capacity disks in an encrypted format, to prevent accidental data loss or data theft. Key management can be done using local Cisco UCS Manager managed keys, or third-party Key Management Systems (KMS) via the Key Management Interoperability Protocol (KMIP).

· Thin provisioning allows large volumes to be created without requiring storage to support them until the need arises, simplifying data volume growth and making storage a “pay as you grow” proposition.

· Fast, space-efficient clones rapidly duplicate virtual storage volumes so that virtual machines can be cloned simply through metadata operations, with actual data copied only for write operations.

· Snapshots help facilitate backup and remote-replication operations, which are needed in enterprises that require always-on data availability.

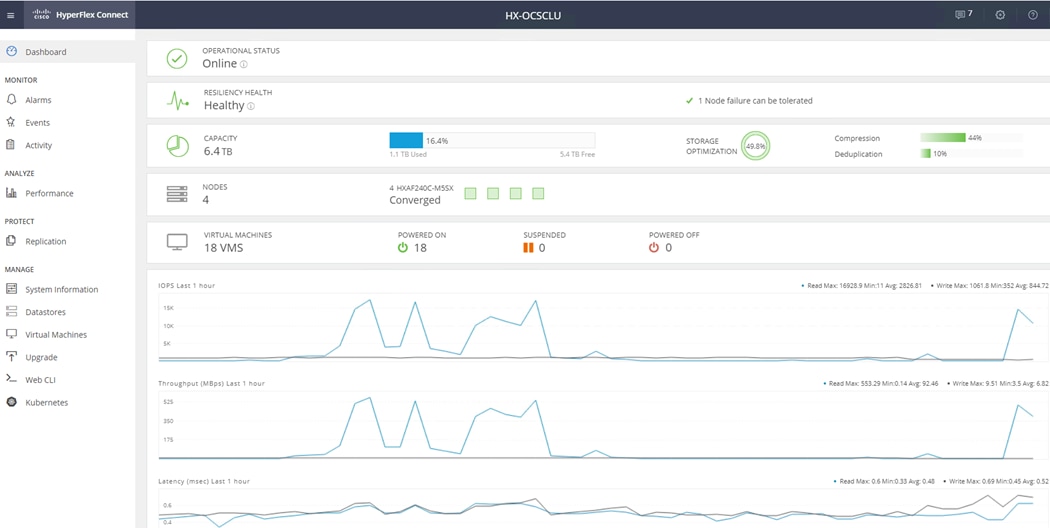

Cisco HyperFlex Connect HTML5 Management Web Page

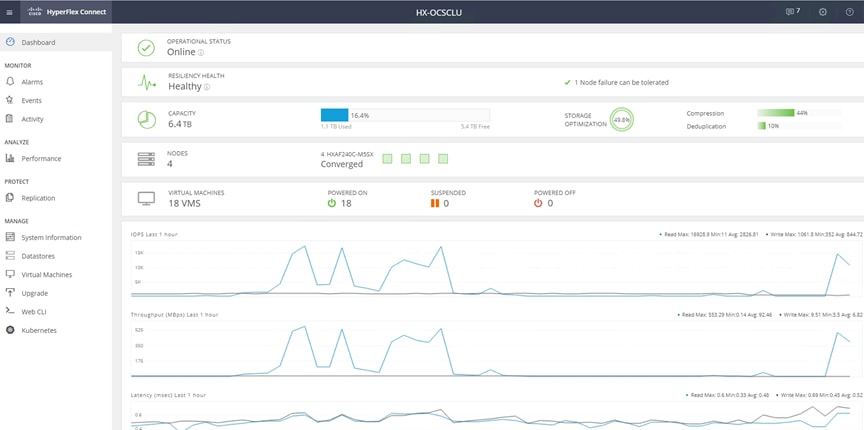

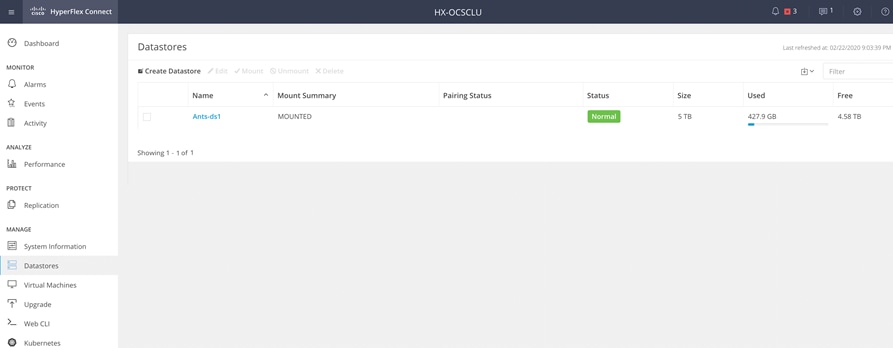

An HTML 5 based Web UI named HyperFlex Connect is available for use as the primary management tool for Cisco HyperFlex. Through this centralized point of control for the cluster, administrators can create volumes, monitor the data platform health, and manage resource use. Administrators can also use this data to predict when the cluster will need to be scaled. To use the HyperFlex Connect UI, connect using a web browser to the HyperFlex cluster IP address: http://<hx controller cluster ip>.

Figure 5 HyperFlex Connect UI

Cisco HyperFlex HX Data Platform Administration Plug-in

The Cisco HyperFlex HX Data Platform is also administered secondarily through a VMware vSphere web client plug-in, which is deployed automatically by the Cisco HyperFlex installer.

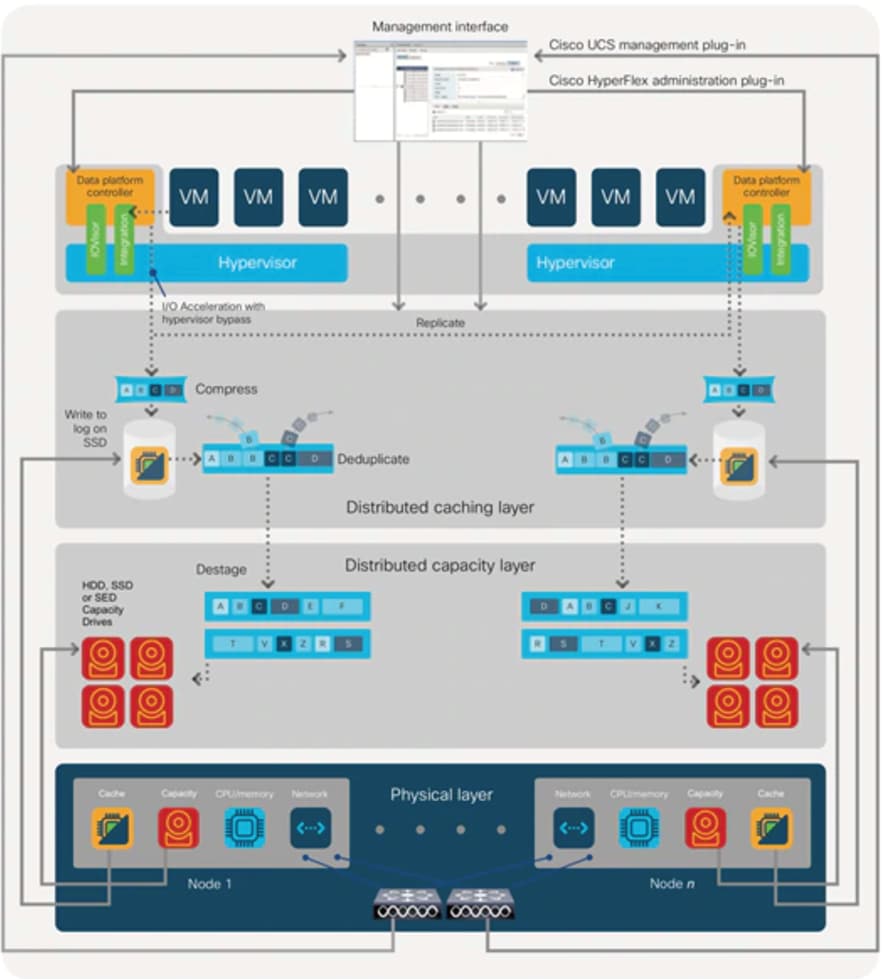

Cisco HyperFlex HX Data Platform Controller

A Cisco HyperFlex HX Data Platform controller resides on each node and implements the distributed file system. The controller runs as software in user space within a virtual machine, and intercepts and handles all I/O from the guest virtual machines. The Storage Controller Virtual Machine (SCVM) uses the VMDirectPath I/O feature to provide direct PCI passthrough control of the physical server’s SAS disk controller, or direct control of the PCI-attached NVMe-based SSDs. This method gives the controller VM full control of the physical disk resources, utilizing the SSD drives as a read/write caching layer, and the HDDs or SSDs as a capacity layer for distributed storage. The controller integrates the data platform into the VMware vSphere cluster through the use of three preinstalled VMware ESXi vSphere Installation Bundles (VIBs) on each node:

· IO Visor: This VIB provides a network file system (NFS) mount point so that the ESXi hypervisor can access the virtual disks that are attached to individual virtual machines. From the hypervisor’s perspective, it is simply attached to a network file system. The IO Visor intercepts guest VM IO traffic, and intelligently redirects it to the HyperFlex SCVMs.

· VMware API for Array Integration (VAAI): This storage offload API allows vSphere to request advanced file system operations such as snapshots and cloning. The controller implements these operations via manipulation of the filesystem metadata rather than actual data copying, providing rapid response, and thus rapid deployment of new environments.

· stHypervisorSvc: This VIB adds enhancements and features needed for HyperFlex data protection and VM replication.

Data Operations and Distribution

The Cisco HyperFlex HX Data Platform controllers handle all read and write operation requests from the guest VMs to their virtual disks (VMDK) stored in the distributed datastores in the cluster. The data platform distributes the data across multiple nodes of the cluster, and also across multiple capacity disks of each node, according to the replication level policy selected during the cluster setup. This method avoids storage hotspots on specific nodes, and on specific disks of the nodes, and thereby also avoids networking hotspots or congestion from accessing more data on some nodes versus others.

Replication Factor

The policy for the number of duplicate copies of each storage block is chosen during cluster setup and is referred to as the replication factor (RF).

· Replication Factor 3: For every I/O write committed to the storage layer, 2 additional copies of the blocks written will be created and stored in separate locations, for a total of 3 copies of the blocks. Blocks are distributed in such a way as to ensure multiple copies of the blocks are not stored on the same disks, nor on the same nodes of the cluster. This setting can tolerate simultaneous failures of 2 entire nodes in a cluster of 5 nodes or greater, without losing data and resorting to restore from backup or other recovery processes. RF3 is recommended for all production systems.

· Replication Factor 2: For every I/O write committed to the storage layer, 1 additional copy of the blocks written will be created and stored in separate locations, for a total of 2 copies of the blocks. Blocks are distributed in such a way as to ensure multiple copies of the blocks are not stored on the same disks, nor on the same nodes of the cluster. This setting can tolerate a failure of 1 entire node without losing data and resorting to restore from backup or other recovery processes. RF2 is suitable for non-production systems, or environments where the extra data protection is not needed. HyperFlex stretched clusters use the RF2 setting; however, 2 copies of the data are kept in each half of the cluster, so effectively four copies are stored.
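The effect of the replication factor on capacity and fault tolerance can be sketched as follows. The capacity estimate simply divides raw capacity by the number of copies and ignores filesystem overhead, deduplication, and compression, so it is an approximation rather than a sizing tool. The failure-tolerance function encodes only the cases stated above and returns `None` for anything this section does not cover.

```python
from typing import Optional


def usable_capacity_tb(raw_capacity_tb: float, rf: int) -> float:
    """Rough usable capacity: every block is stored `rf` times."""
    if rf not in (2, 3):
        raise ValueError("this sketch only models RF2 and RF3")
    return raw_capacity_tb / rf


def tolerated_node_failures(rf: int, node_count: int) -> Optional[int]:
    """Simultaneous node failures tolerated, per the cases described above."""
    if rf == 2:
        return 1
    if rf == 3 and node_count >= 5:
        return 2
    # Other combinations are not described in this section.
    return None


print(usable_capacity_tb(90.0, 3))    # 30.0
print(usable_capacity_tb(90.0, 2))    # 45.0
print(tolerated_node_failures(3, 5))  # 2
```

The example shows the trade-off behind the RF3 recommendation: the same raw capacity yields less usable space, in exchange for surviving two simultaneous node failures in clusters of five or more nodes.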

Data Write and Compression Operations

Internally, the contents of each virtual disk are subdivided and spread across multiple servers by the HXDP software. For each write operation, the data is intercepted by the IO Visor module on the node where the VM is running, a primary node is determined for that particular operation via a hashing algorithm, and the data is then sent to the primary node via the network. The primary node compresses the data in real time and writes the compressed data to the write log on its caching SSD. Replica copies of that compressed data are sent via the network and written to the write log on the caching SSD of the remote nodes in the cluster, according to the replication factor setting. For example, at RF=3 a write operation will be written to the write log of the primary node for that virtual disk address, and two additional writes will be committed in parallel on two other nodes. Because the virtual disk contents have been divided and spread out via the hashing algorithm for each unique operation, this method results in all writes being spread across all nodes, avoiding the problems with data locality and “noisy” VMs consuming all the IO capacity of a single node. At RF=3, the write operation will not be acknowledged until all three copies are written to the caching layer SSDs. Written data is also cached in a write log area resident in memory in the controller VM, along with the write log on the caching SSDs. This process speeds up read requests when reads are requested of data that has recently been written.
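The idea of choosing a primary node by hashing can be illustrated with a small sketch. This is not the HXDP algorithm, which this section does not describe: the use of SHA-256, the key format, and the choice of the next nodes in list order as replicas are all assumptions made for illustration. The node and disk names are hypothetical.

```python
import hashlib
from collections import Counter
from typing import List


def place_write(vdisk_id: str, block_offset: int, nodes: List[str], rf: int) -> List[str]:
    """Pick a primary node by hashing the write address, then rf-1 replicas."""
    if rf > len(nodes):
        raise ValueError("replication factor cannot exceed the node count")
    key = f"{vdisk_id}:{block_offset}".encode()
    digest = hashlib.sha256(key).digest()
    primary = int.from_bytes(digest[:8], "big") % len(nodes)
    # Assumption: replicas go to the following nodes, so all copies are on
    # distinct nodes.
    return [nodes[(primary + i) % len(nodes)] for i in range(rf)]


nodes = ["hx-node-1", "hx-node-2", "hx-node-3", "hx-node-4"]
placement = place_write("vm01-disk0", 4096, nodes, rf=3)
print(placement)

# Count how writes from one virtual disk spread across the cluster.
writes_per_node = Counter()
for offset in range(0, 1000 * 4096, 4096):
    writes_per_node.update(place_write("vm01-disk0", offset, nodes, rf=3))
print(writes_per_node)
```

Because the primary depends on the address of each write and not on where the VM runs, the writes of a single busy virtual disk land on every node instead of saturating one.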

Data Destaging and Deduplication

The Cisco HyperFlex HX Data Platform constructs multiple write log caching segments on the caching SSDs of each node in the distributed cluster. As write cache segments become full and based on policies accounting for I/O load and access patterns, those write cache segments are locked and new writes roll over to a new write cache segment. The data in the now locked cache segment is destaged to the HDD capacity layer of the nodes for the Hybrid system or to the SSD capacity layer of the nodes for the All-Flash or All-NVMe systems. During the destaging process, data is deduplicated before being written to the capacity storage layer, and the resulting data can now be written to the HDDs or SSDs of the server. On hybrid systems, the now deduplicated and compressed data is also written to the dedicated read cache area of the caching SSD, which speeds up read requests of data that has recently been written. When the data is destaged to the capacity disks, it is written in a single sequential operation, avoiding disk head seek thrashing on the spinning disks and accomplishing the task in the minimal amount of time. Since the data is already deduplicated and compressed before being written, the platform avoids additional I/O overhead often seen on competing systems, which must later do a read/dedupe/compress/write cycle. Deduplication, compression and destaging take place with no delays or I/O penalties to the guest VMs making requests to read or write data, which benefits both the HDD and SSD configurations.
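As a rough illustration of deduplication during destaging, the following Python sketch destages one locked write log segment into a capacity store keyed by content fingerprint. The fingerprinting method (SHA-256), the in-memory dictionary, and the function name `destage` are assumptions for illustration; the source does not describe how HXDP identifies duplicate blocks.

```python
import hashlib


def destage(segment: list[bytes], capacity: dict[str, bytes]) -> list[str]:
    """Destage a locked write log segment, storing each unique block once.

    Returns one fingerprint reference per block, in the original order,
    so the logical layout can still be rebuilt from the references.
    """
    refs = []
    for block in segment:
        fingerprint = hashlib.sha256(block).hexdigest()
        if fingerprint not in capacity:
            # Only blocks not already present reach the capacity layer.
            capacity[fingerprint] = block
        refs.append(fingerprint)
    return refs


# Hypothetical segment with one duplicated 4 KB block.
capacity_layer: dict[str, bytes] = {}
segment = [b"a" * 4096, b"b" * 4096, b"a" * 4096]
refs = destage(segment, capacity_layer)
print(len(segment), "blocks logged,", len(capacity_layer), "blocks stored")
```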

Figure 6 HyperFlex HX Data Platform Data Movement

Data Read Operations

For data read operations, data may be read from multiple locations. For data that was very recently written, the data is likely to still exist in the write log of the local platform controller memory, or the write log of the local caching layer disk. If local write logs do not contain the data, the distributed filesystem metadata will be queried to see if the data is cached elsewhere, either in write logs of remote nodes, or in the dedicated read cache area of the local and remote caching SSDs of hybrid nodes. Finally, if the data has not been accessed in a significant amount of time, the filesystem will retrieve the requested data from the distributed capacity layer. As requests for reads are made to the distributed filesystem and the data is retrieved from the capacity layer, the caching SSDs of hybrid nodes populate their dedicated read cache area to speed up subsequent requests for the same data. This multi-tiered distributed system with several layers of caching techniques ensures that data is served at the highest possible speed, leveraging the caching SSDs of the nodes fully and equally. All-flash and all-NVMe configurations do not employ a dedicated read cache, because such caching does not provide any performance benefit since the persistent data copy already resides on high-performance SSDs.
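The tiered lookup described above can be sketched in Python as follows. The tier names, the dictionary-based stores, and the function `read_block` are hypothetical, and the relative order of the remote write logs and the read cache is an assumption, since the text only says both are consulted through the filesystem metadata.

```python
from typing import Optional

# Lookup order following the paragraph above; tier names are illustrative.
READ_TIERS = (
    "local_memory_write_log",
    "local_ssd_write_log",
    "remote_write_log",
    "read_cache",  # present on hybrid nodes only
    "capacity_layer",
)


def read_block(
    block_id: str, tiers: dict[str, dict[str, bytes]], hybrid: bool
) -> tuple[Optional[bytes], Optional[str]]:
    """Return (data, tier that served it), or (None, None) if not found."""
    for tier in READ_TIERS:
        if tier == "read_cache" and not hybrid:
            continue  # all-flash and all-NVMe nodes have no dedicated read cache
        data = tiers.get(tier, {}).get(block_id)
        if data is None:
            continue
        if tier == "capacity_layer" and hybrid:
            # Hybrid nodes populate the read cache for subsequent requests.
            tiers.setdefault("read_cache", {})[block_id] = data
        return data, tier
    return None, None


hybrid_tiers = {"capacity_layer": {"blk-7": b"payload"}}
first = read_block("blk-7", hybrid_tiers, hybrid=True)
second = read_block("blk-7", hybrid_tiers, hybrid=True)
print(first[1], "->", second[1])
```

On a hybrid node the second read of the same block is served from the read cache, while an all-flash node keeps reading directly from the capacity layer.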

In summary, the Cisco HyperFlex HX Data Platform implements a distributed, log-structured file system that performs data operations via two configurations:

· In a Hybrid configuration, the data platform provides a caching layer using SSDs to accelerate read requests and write responses, and it implements a storage capacity layer using HDDs.

· In an All-Flash or all-NVMe configuration, the data platform provides a dedicated caching layer using high endurance SSDs to accelerate write responses, and it implements a storage capacity layer also using SSDs. Read requests are fulfilled directly from the capacity SSDs, as a dedicated read cache is not needed to accelerate read operations.

Cisco HyperFlex CSI Storage Plugin

Container Storage Interface (CSI) is a standard for exposing arbitrary block and file storage systems to containerized workloads on Kubernetes. Using the CSI standard interface, third-party storage providers can write and deploy plugins exposing their storage systems in Kubernetes. HX-CSI is Cisco’s storage plugin implementation for containerized workloads that consumes the CSI framework.

Cisco HyperFlex Container Storage Interface (CSI) is an out-of-tree, container-based Kubernetes storage integration that is deployed and consumed through standard Kubernetes primitives such as Persistent Volume Claims and Storage Classes. Cisco HyperFlex CSI supports the following features:

· Dynamic creation and deletion of volumes

· Dynamic volume attach and detach

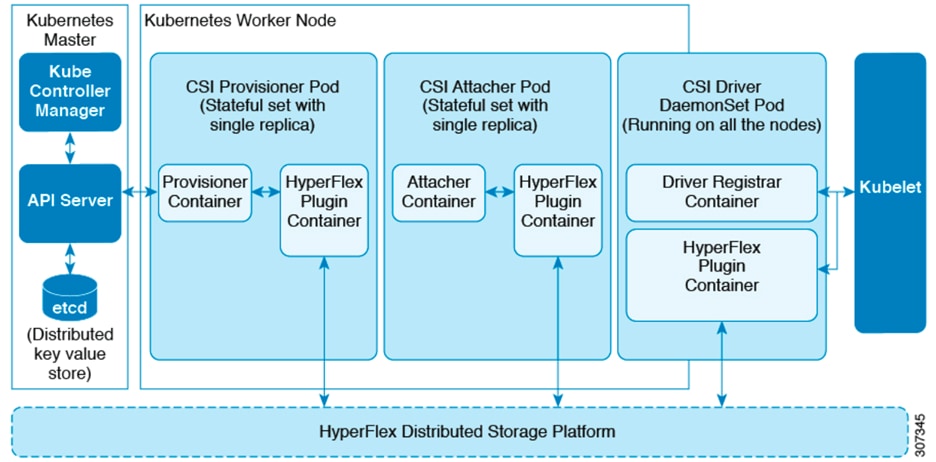

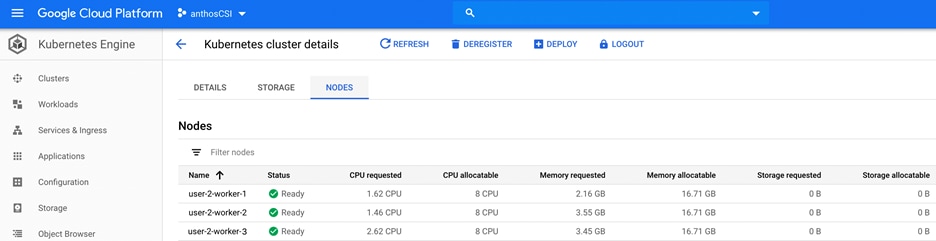

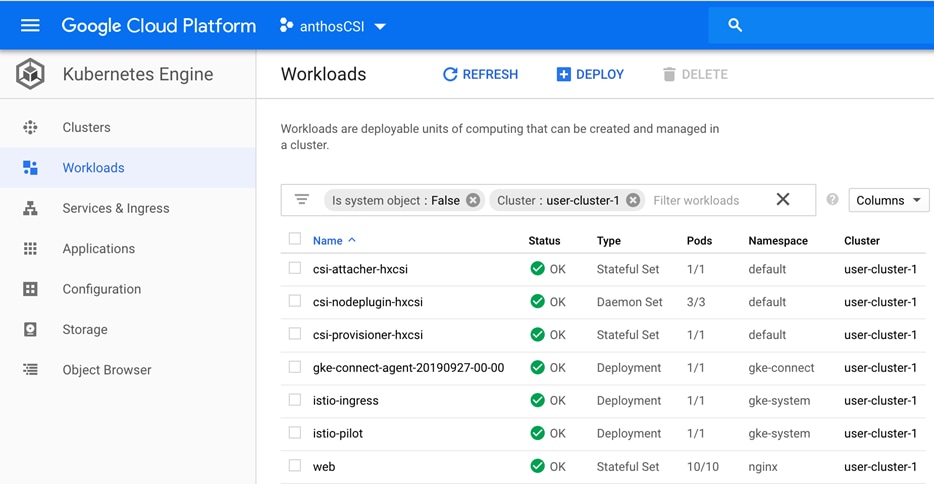

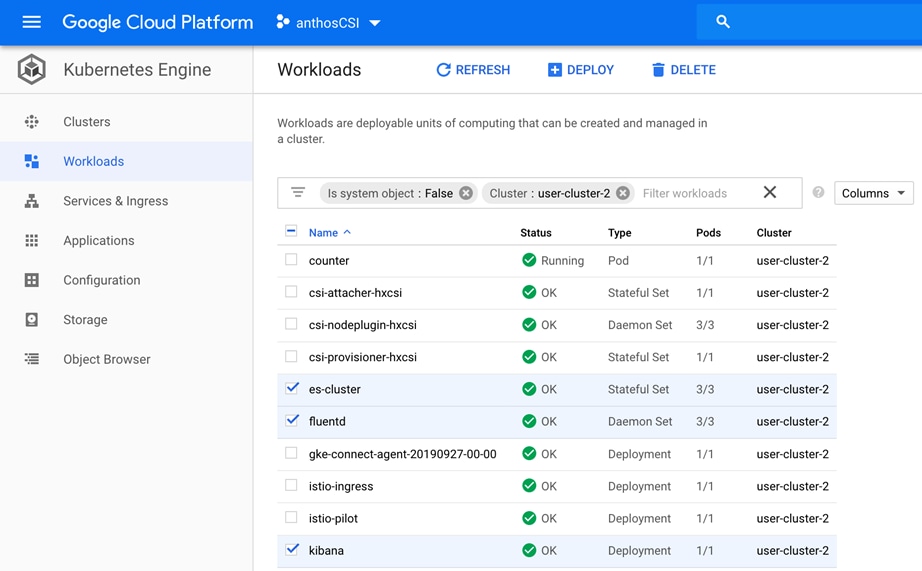

The Cisco HyperFlex CSI plugin is deployed as containers on the Anthos cluster. Figure 7 shows the different components of the Cisco HyperFlex CSI deployment and how they interact with each other.

Figure 7 Cisco HX-CSI Storage Plugin

The following are the pods that get deployed as part of HX-CSI:

· csi-attacher-hxcsi

- Type - StatefulSet

- Number of Instances - One per Kubernetes Cluster

- Purpose - Required by CSI, but not currently used in the Cisco deployment.

· csi-provisioner-hxcsi

- Type - StatefulSet

- Number of Instances - One per Kubernetes Cluster

- Purpose - Watches Kubernetes Persistent Volume Claim objects and triggers CreateVolume and DeleteVolume operations as part of CSI spec.

· csi-nodeplugin-hxcsi

- Type - DaemonSet

- Number of Instances - One per Kubernetes Worker Node

- Purpose - Discovery and formatting of provisioned HyperFlex iSCSI LUNs on Kubernetes worker nodes. Implements NodeStage/NodeUnstage and NodePublish/NodeUnpublish Volume APIs as part of Kubernetes CSI spec.
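Since HX-CSI is consumed through Storage Classes and Persistent Volume Claims, the following Python sketch builds a minimal pair of manifests and prints them as JSON, which `kubectl apply -f` accepts alongside YAML. The provisioner string `csi-hxcsi`, the object names, and the 10Gi size are assumptions for illustration; check the provisioner name registered by the HX-CSI release in use.

```python
import json

# Assumption: replace with the provisioner name registered by your HX-CSI release.
PROVISIONER = "csi-hxcsi"


def storage_class(name: str) -> dict:
    """Build a StorageClass that points at the (assumed) HX-CSI provisioner."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": PROVISIONER,
    }


def volume_claim(name: str, storage_class_name: str, size: str) -> dict:
    """Build a PersistentVolumeClaim; the provisioner pod reacts to it."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical names; a v1 List lets both objects be applied from one file.
manifest = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        storage_class("hxcsi-example"),
        volume_claim("demo-claim", "hxcsi-example", "10Gi"),
    ],
}
print(json.dumps(manifest, indent=2))
```

Creating the claim is what causes csi-provisioner-hxcsi to trigger a CreateVolume operation; deleting it triggers DeleteVolume.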

Cisco Nexus Switch

The Cisco Nexus 93180YC-EX-24 Switch is a 1RU switch with latency of less than 1 microsecond. The 24 downlink ports (licensed to use any 24 ports out of the 48 ports) on the 93180YC-EX-24 can be configured to work as 1, 10, or 25Gbps ports, offering deployment flexibility and investment protection. The 6 uplink ports can support 40 and 100Gbps or a combination of 1, 10, 25, 40, 50, and 100Gbps connectivity, thus offering flexible migration options. The switch has FC-FEC enabled for 25Gbps and supports up to 3m in DAC connectivity.

Figure 8 Cisco Nexus 93180YC-EX Switch

Any upstream switch in Cisco’s Nexus portfolio can be used for this solution. There is no dependency or recommendation to use the above mentioned switch.

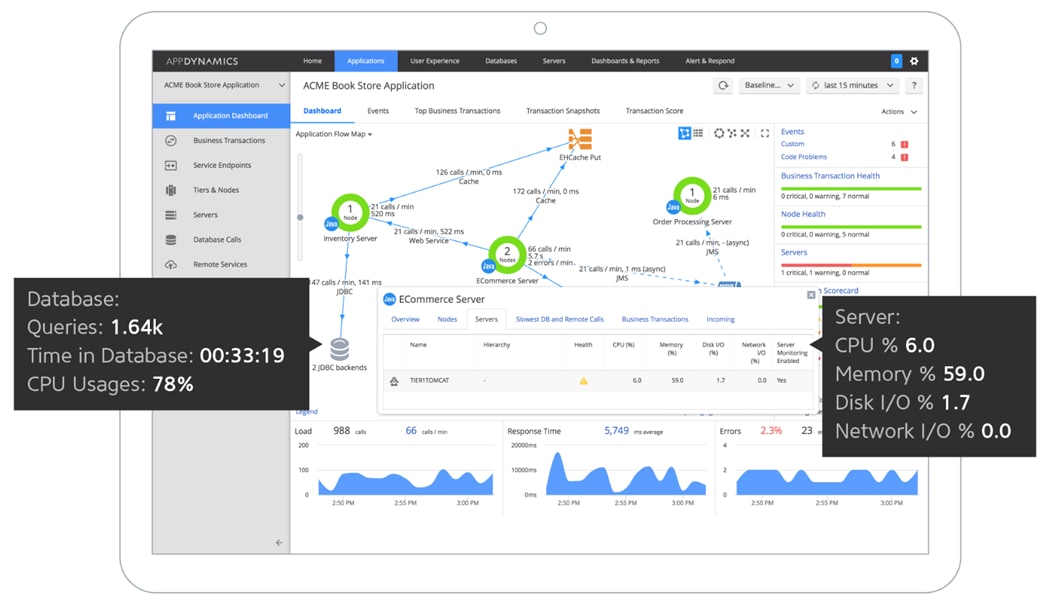

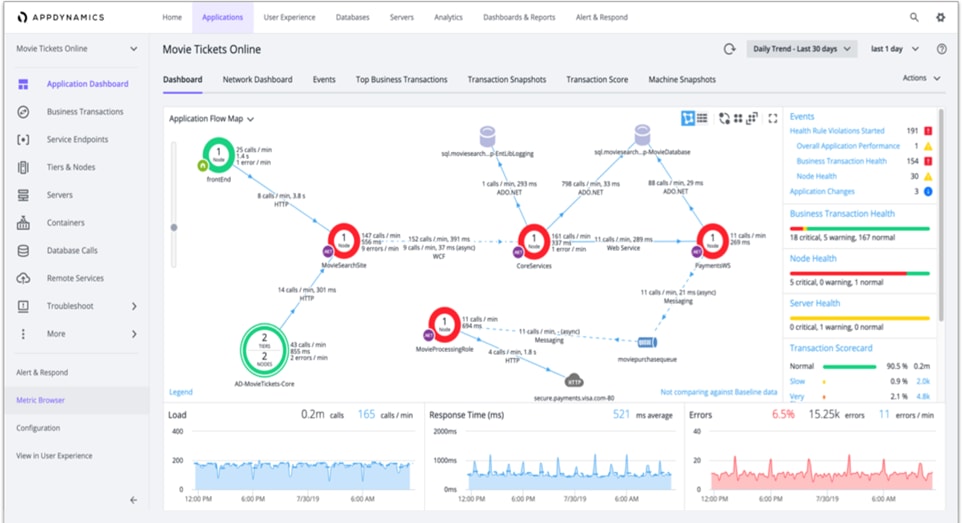

Cisco AppDynamics

AppDynamics is designed for production and pre-production environments. It gives users visibility into their entire application topology in a single pane of glass. AppDynamics provides real-time monitoring, business insights, anomaly detection, and full visibility for the entire application landscape, so that customers can spend less time fixing issues and more time driving innovation and delivering an exceptional customer experience. For more information on AppDynamics, see: https://www.appdynamics.com/.

AppDynamics provides the following capabilities:

· Application Performance Monitoring

The Application Performance Management (APM) solution monitors applications for users and gives them the power to ensure flawless customer experiences. Complex distributed applications demand end-to-end management. The APM solution delivers application mapping, dynamic baselining, and code-level diagnostics.

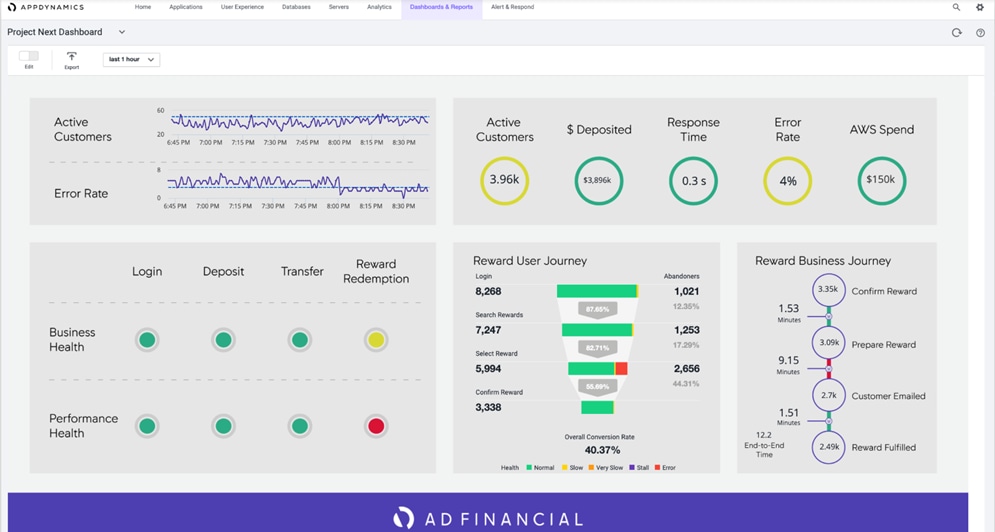

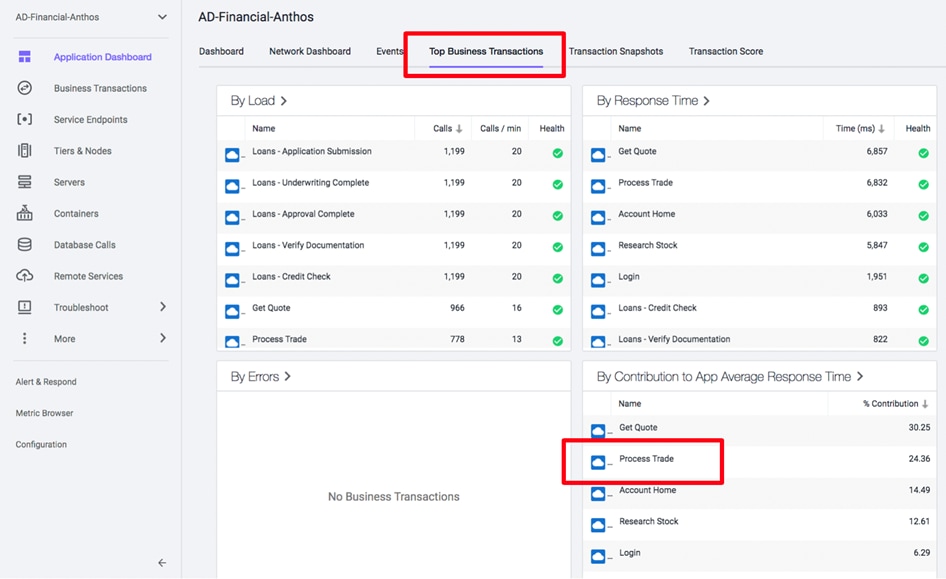

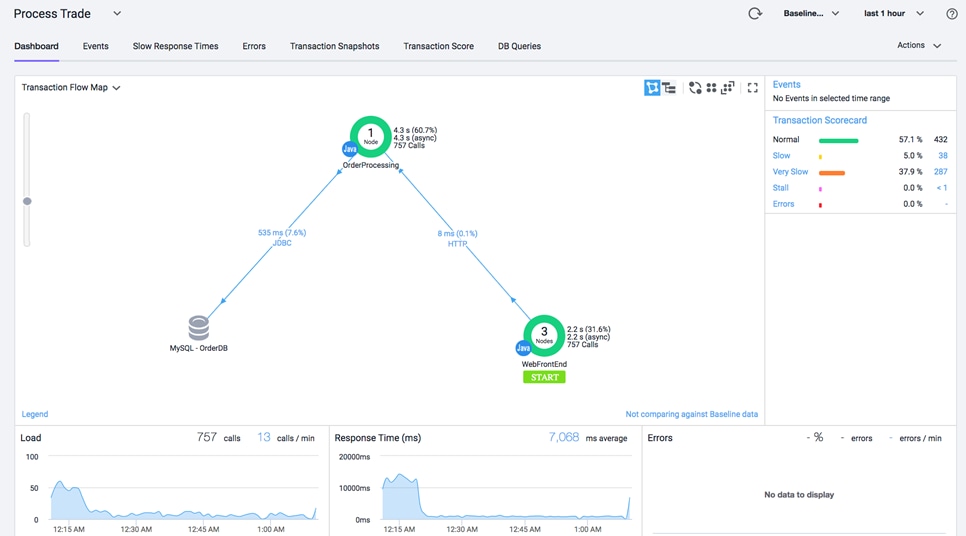

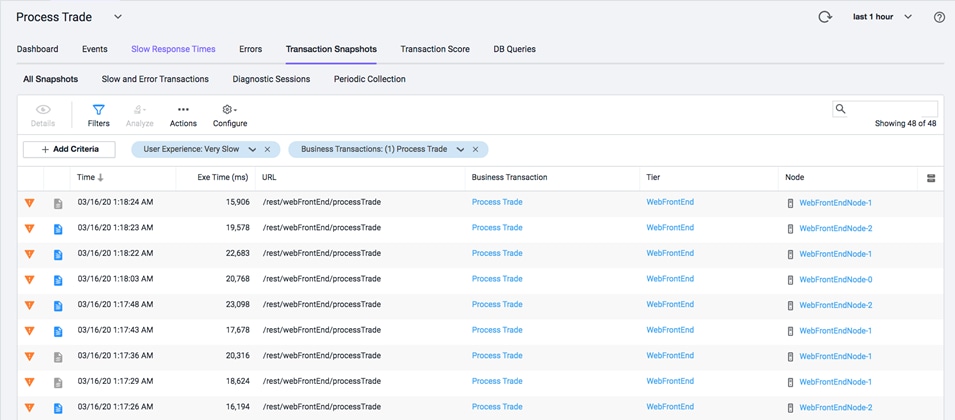

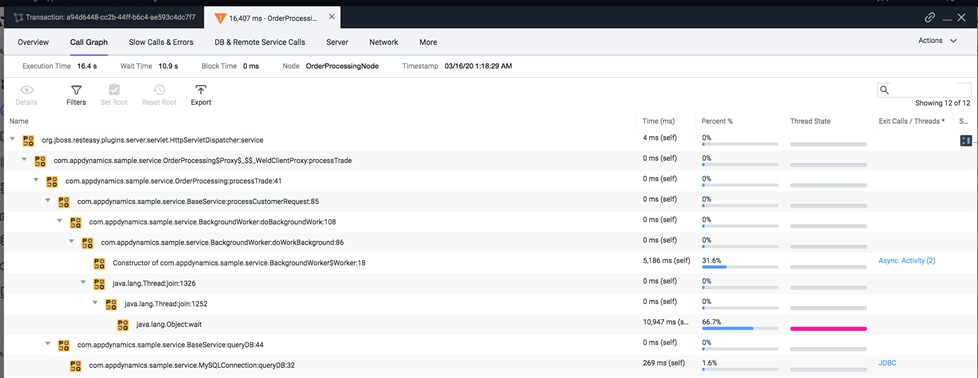

Figure 9 AppDynamics APM

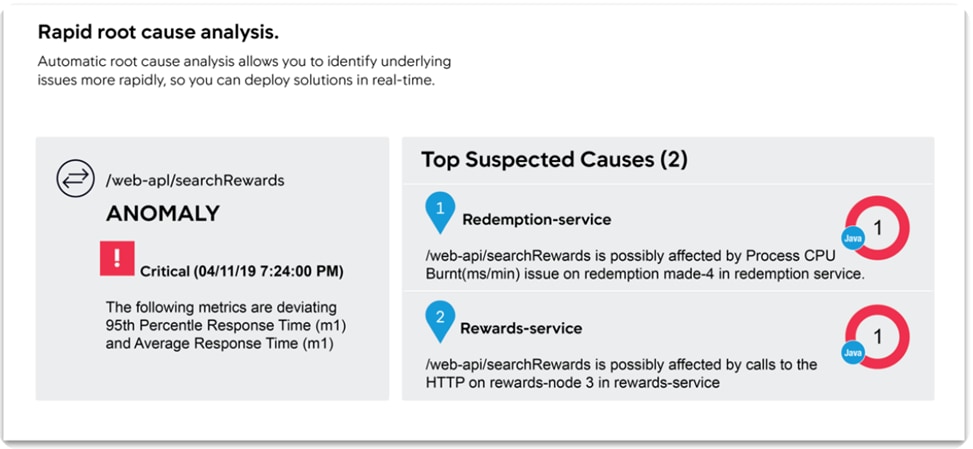

Figure 10 AppDynamics Baselining and Anomaly Detection

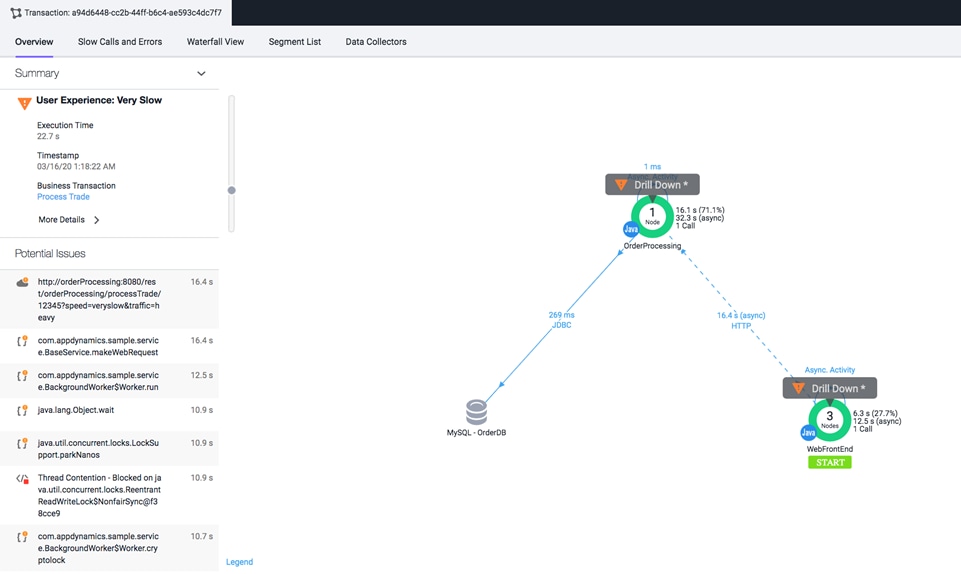

Figure 11 AppDynamics Root Cause Analysis

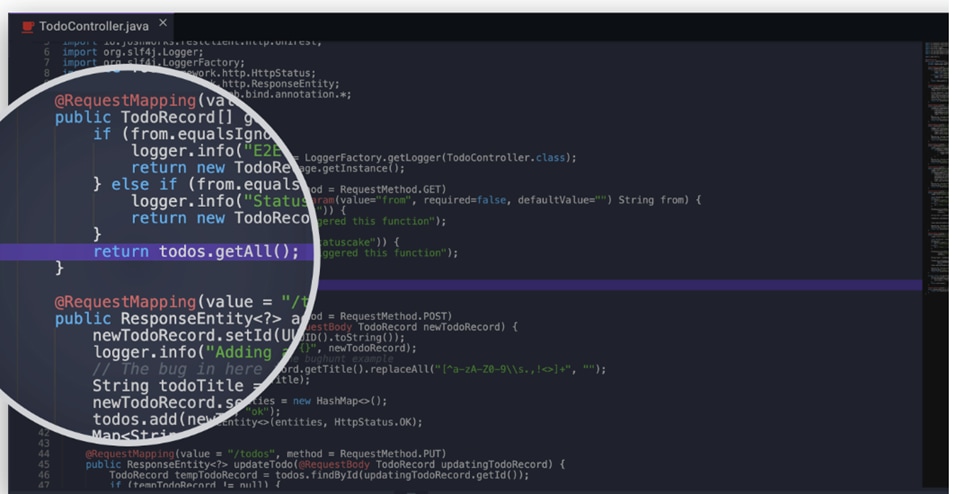

Figure 12 Root Cause Analysis at Code Level

For more information on APM, see: https://www.appdynamics.com/product/application-performance-management

Business Performance Monitoring

Business IQ is an industry first that translates code-level monitoring into immediate, clear and actionable insights, by correlating application performance, user experience and business outcomes. From the end user’s front-end to the application back-end, AppDynamics is the only platform that unifies full stack performance monitoring for business and IT departments.

Figure 13 AppDynamics Business Performance Monitoring

For more information on Business Performance Monitoring, see: https://www.appdynamics.com/product/business-iq

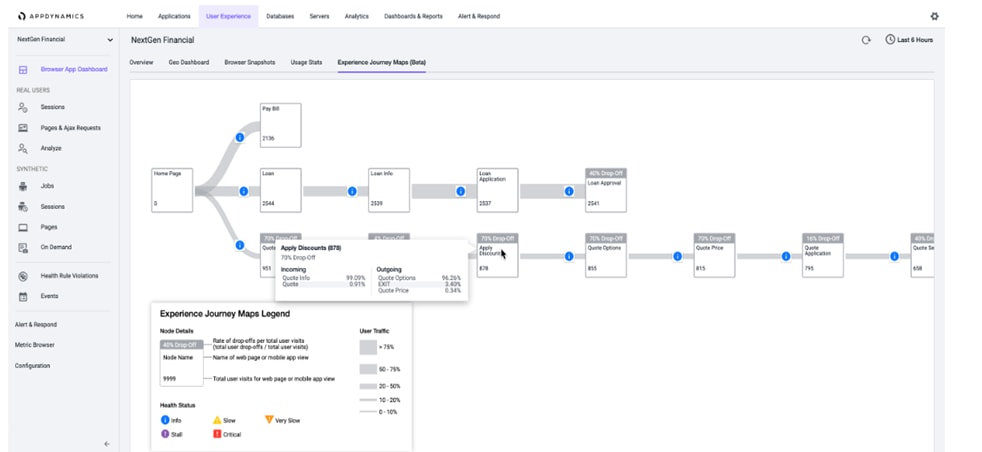

End User Monitoring

Create better customer experiences and quickly solve issues by automatically capturing errors, crashes, network requests, page load details, and other metrics. Also, End User Monitoring gives users a clear understanding of how third-party APIs and content services are impacting performance, giving users more leverage to enforce SLAs.

Figure 14 AppDynamics End User Monitoring

For more details on End User Monitoring, see: https://www.appdynamics.com/product/end-user-monitoring

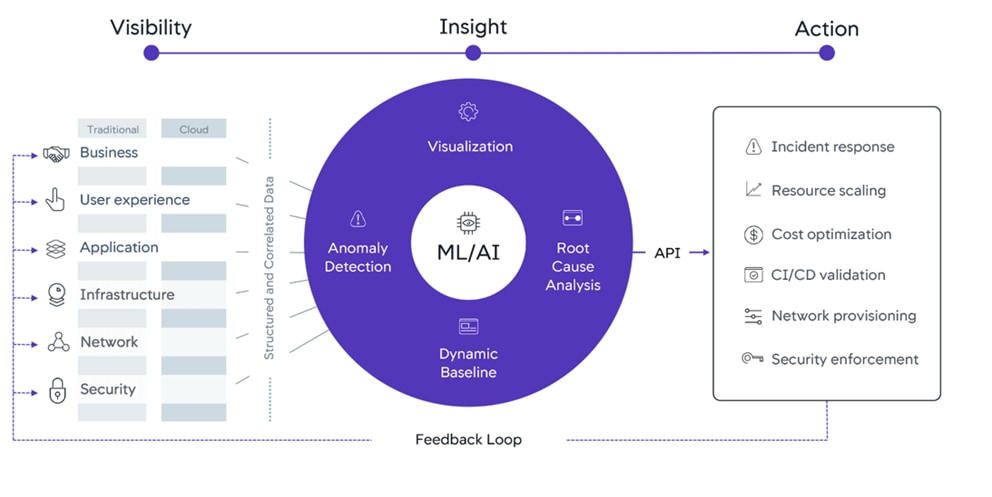

AIOps

Together with Cisco, AppDynamics provides our vision for AIOps: the Central Nervous System for IT. The Central Nervous System is a platform that delivers deep, cross-domain visibility and insights with the ability to automate actions, reduce the amount of time-consuming IT tasks, and enable teams to drive innovation. With the Central Nervous System for IT, AppDynamics and Cisco empower businesses with AI-powered insights and automation that help them take the right action, at exactly the right time.

Figure 15 AppDynamics Central Nervous System

For information on Central Nervous System, see: https://www.appdynamics.com/central-nervous-system

Cloud

Whether users are building a new cloud application or migrating an existing one, AppDynamics provides the cloud monitoring tools that they need to accelerate their journey to the cloud.

For information on Cloud Monitoring, see: https://www.appdynamics.com/solutions/cloud/cloud-monitoring

AppDynamics supports various cloud platforms and cloud-native technologies, including:

· Public Cloud like AWS, GCP and Azure

· Serverless Functions

· Docker Monitoring

· Kubernetes Monitoring

Infrastructure Visibility

Infrastructure Visibility makes sure that application performance is fully supported by the infrastructure, with server, database, and network performance visibility at the user’s end. It lets users drill down into their business transactions to see and troubleshoot server, database, and network issues before they affect customers, and do it within minutes.

Figure 16 AppDynamics Infrastructure Visibility

For information on Infrastructure Visibility, see: https://www.appdynamics.com/product/application-performance-management/infrastructure-visibility

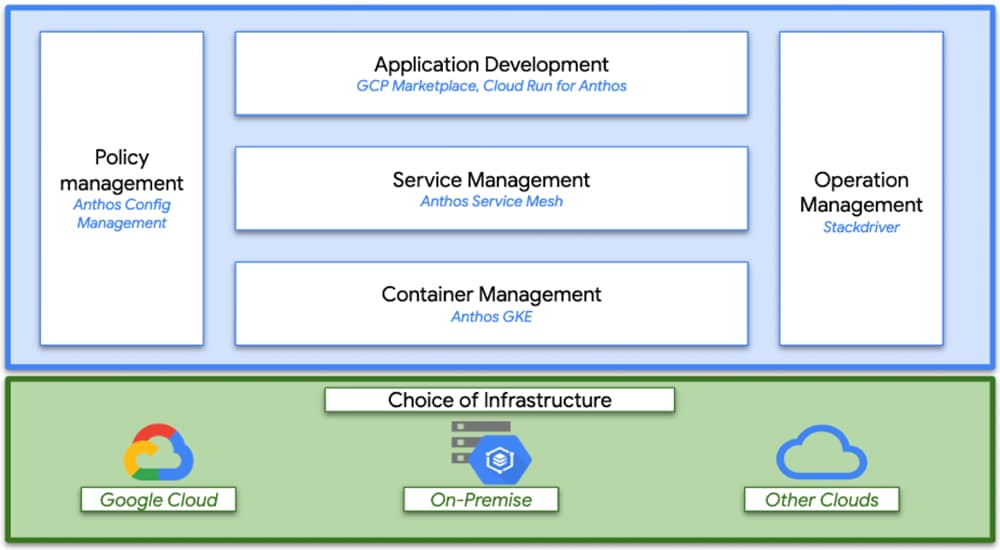

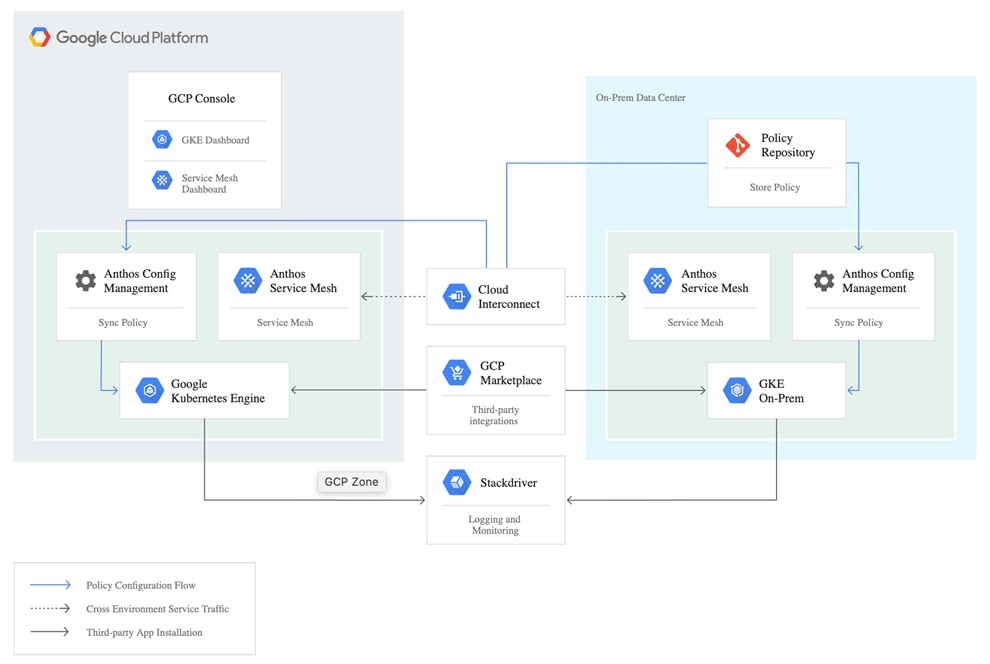

Google Cloud’s Anthos

Anthos is a platform composed of multiple products and capabilities designed to help organizations modernize their applications and run them on hybrid and multi-cloud environments. Anthos GKE on-prem is a Google provided Kubernetes experience designed to run within the data center and be part of a hybrid cloud architecture that enables organizations to construct and manage modern hybrid-cloud applications. GKE on-prem, a solution built on open-source technologies, runs on-premises in a VMware vSphere-based infrastructure, and can be managed from a single control point along with Anthos GKE in Google Cloud and in other clouds. Adopting containers, microservices, service mesh, and other transformational technologies enables organizations to experience consistent application development cycles and production-ready workloads in local and cloud-based environments. Figure 17 depicts the Anthos overview showing the connectivity between cloud and on-premises.

For more information about Anthos, see: https://cloud.google.com/anthos/. Anthos is a platform that offers a collection of products and capabilities:

· Anthos Config Management - Automates the policy and security of hybrid Kubernetes deployments.

· Anthos Service Mesh - Enhances application observability (trace path), security, and control with an Istio-powered service mesh.

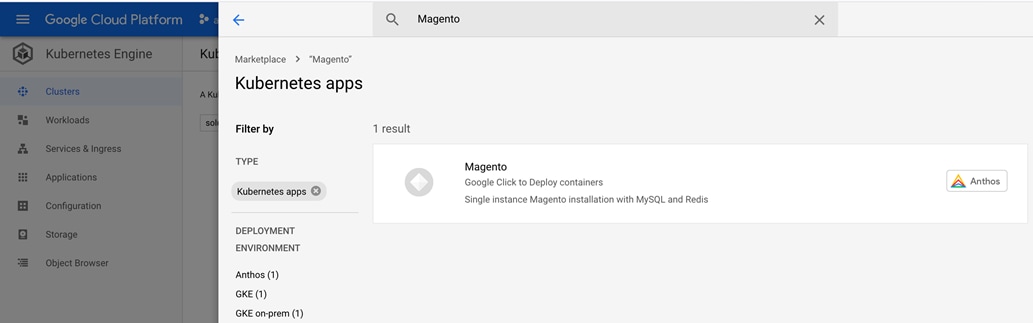

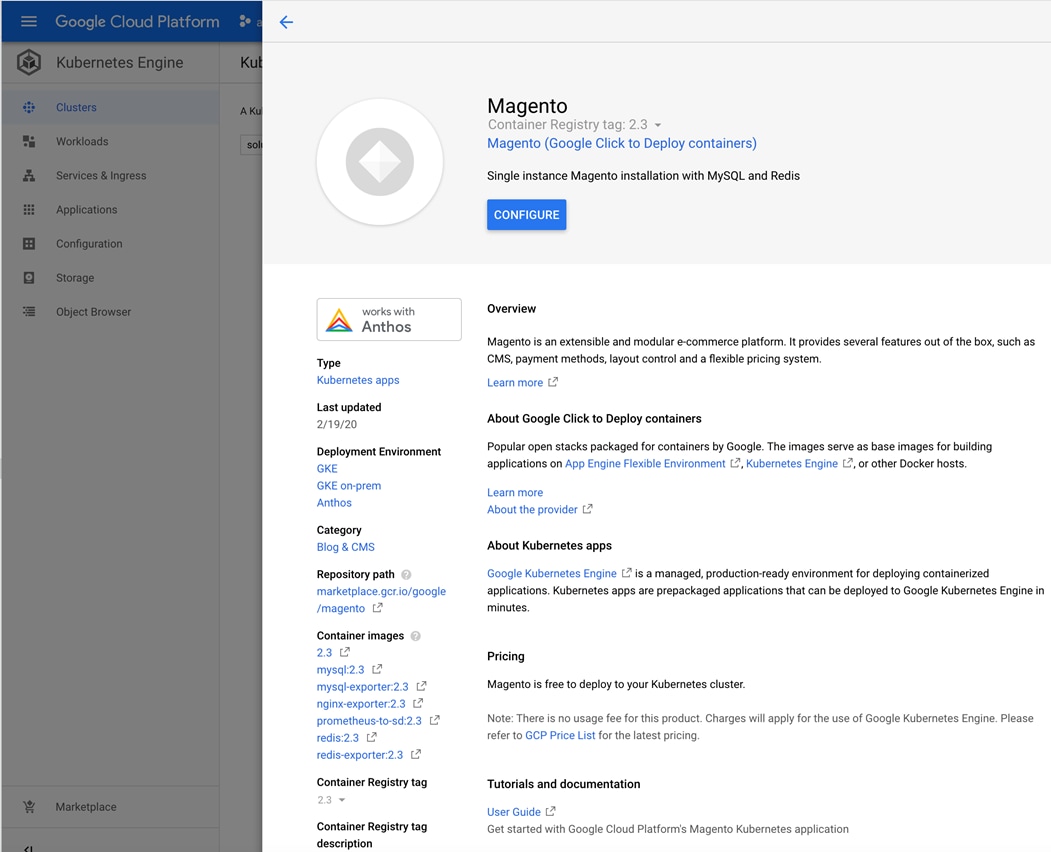

· Google Cloud Marketplace for Kubernetes Applications - A catalog of curated container applications available for easy deployment.

· Migrate for Anthos - Automatic migration of physical services and VMs from on-premises to the cloud.

· Stackdriver - Management service offered by Google for logging and monitoring cloud instances.

· Anthos GKE deployed on-prem - Hybrid cloud software that brings Google Kubernetes Engine (GKE) to on-premises data centers.

Figure 17 Google Cloud’s Anthos overview

Figure 18 Interconnection of Anthos GKE on-prem with GCP

Kubernetes Orchestration

Enterprises have embraced container technology for application-specific workload requirements to adapt to ever-changing IT needs. Containers by design require less overhead to deploy: all that is needed is the packaging of application code and supporting libraries together, because all other services depend on the host OS. Rather than managing a complete virtual machine (VM) environment, developers can instead focus on the application development process. As container technology began to find appeal in the enterprise landscape, enterprises had a need for both fault tolerance and application scaling. In response, Google partnered with the Linux Foundation to form the Cloud Native Computing Foundation (CNCF). Together, they introduced Kubernetes (K8s), an open-source platform for orchestrating and managing containers.

Anthos GKE

Anthos GKE is a certified distribution of Kubernetes in the Google Cloud. It allows end users to easily deploy managed, production-ready Kubernetes clusters, enabling developers to focus primarily on application development rather than on the management of their environment. Deploying Kubernetes clusters in Anthos GKE offers the following benefits:

· Simplified application deployment - Anthos GKE allows for rapid development, and also provides updates of applications and services. By providing simple descriptions of the expected system resources (compute, memory, and storage) required by the application containers, the Kubernetes Engine automatically provisions and manages the lifecycle of the cluster environment.

· Monitoring – GCP (Google Cloud Platform) provides a single pane of glass for managing clusters and workloads. Anthos GKE clusters are continually monitored by Google Site Reliability Engineers (SREs) to make sure that clusters behave as expected by collecting regular metrics and observing the use of assigned system resources. The dashboard provides visibility into cluster health to make sure the deployed applications are highly available and there is no single point of failure.

· Securing Clusters in Google Cloud - An end user can ensure that clusters are secure and accessible by customizing network policies available from Google Cloud’s Global Virtual Private Cloud. Public services can be placed behind a single global IP address for load balancing purposes. A single IP can help provide high availability for applications and protect against Distributed Denial of Service (DDoS) and other forms of attacks that might hinder service performance.

· Easily Scaling – An end user can enable auto-scaling on their cluster to easily counter both planned and unexpected increases in application demands. Auto-scaling helps make sure that system resources are always available by increasing capacity during high-demand windows. It also allows the cluster to return to its previous state and size after peak demand subsides.

Anthos GKE Deployed on-prem

Anthos GKE deployed on-prem (GKE on-prem) is an extension of Google Kubernetes Engine that is deployed in an end user’s private data center. An organization can deploy the same container applications designed to run in Google Cloud and in Kubernetes clusters on premises. GKE on-prem offers the following benefits:

· Cost - End users can realize significant cost savings by utilizing their own physical resources for their application deployments instead of provisioning resources in their Google Cloud environment.

· Develop and publish - On-premises deployments can be used while applications are in development, which allows for testing of applications in the privacy of a local data center before being made publicly available in the cloud.

· Security – Customers with increased security concerns, or with sensitive data sets that they do not want to store in the public cloud, can run their applications securely in their own data centers, fulfilling their organizational requirements.

Anthos Config Management

Anthos Config Management is a key component of Anthos. With Anthos Config Management, users can create a common configuration across all their infrastructure, including custom policies, and apply it both on-premises and in the cloud. Anthos Config Management evaluates changes and rolls them out to all Kubernetes clusters so that the user’s desired state is always reflected. Anthos Config Management allows cluster operators to manage single clusters, multi-tenant clusters, and multi-cluster Kubernetes deployments by using files, called configs, stored in a Git repository. Some configs are Kubernetes object manifests. Other configs are not object manifests, but instead provide information needed by Anthos Config Management. Users can write configs in YAML or JSON. Anthos Config Management watches for updates to these files and applies changes to all relevant clusters automatically. A configuration-as-code approach allows users to manage the configuration of Google Kubernetes Engine (GKE) or Anthos GKE deployed on-prem clusters by using the same principles that users may already be using to manage their applications deployed in Kubernetes. With Anthos Config Management, users can do the following:

· Require code reviews before changes are pushed to the live environment, and audit exactly which commit caused a configuration change.

· Reduce the risk of shadow ops, where unvetted changes are pushed to live clusters, and where it is difficult to understand the differences between a documented configuration and users’ live environment. Users can require that all cluster configuration changes are propagated by using Anthos Config Management and lock down direct access to the Kubernetes API.

· Apply configuration changes to hundreds of clusters with a single Git commit instead of writing scripts to run thousands of kubectl apply commands manually.

· Ensure that a change is pushed to each relevant cluster and only relevant clusters, based on metadata applied to each cluster.

· Use Continuous Integration/Continuous Deployment (CI/CD) pipelines to test their changes and apply them automatically when tests pass.

· Use a revert-then-investigate strategy to roll back breaking changes and get users’ live clusters back into a good working state before fixing the problematic change and applying it as a new commit. This strategy reduces downtime due to configuration-related outages.

Anthos Config Management synchronizes the states of users’ clusters with their Git repository. The Config Management Operator custom controller monitors the Git repository and the states of the clusters, keeping them consistent for each Kubernetes object that has been chosen. If the Operator fails to apply changes to a resource, the resource is left in the last known good state. Anthos Config Management can configure a single cluster or multiple clusters from a single Git repository.
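The "last known good state" behavior can be pictured with a small reconciliation sketch in Python. This is not the Config Management Operator's code: the `reconcile` function, the dictionary model of cluster state, and the `fake_apply` callback are hypothetical, and the sketch ignores pruning of objects removed from the repository.

```python
from typing import Callable


def reconcile(
    desired: dict[str, dict],
    live: dict[str, dict],
    apply: Callable[[str, dict], None],
) -> list[str]:
    """Apply repository state to a cluster, object by object.

    Objects whose apply call fails are left untouched in `live`,
    that is, in their last known good state. Returns the failed names.
    """
    failed = []
    for name, spec in desired.items():
        if live.get(name) == spec:
            continue  # already in sync
        try:
            apply(name, spec)
        except Exception:
            failed.append(name)
        else:
            live[name] = spec
    return failed


def fake_apply(name: str, spec: dict) -> None:
    """Stand-in for a cluster API call that rejects invalid objects."""
    if spec.get("invalid"):
        raise ValueError(f"{name} rejected by the API server")


live_state = {"quota": {"cpu": "4"}}
repo_state = {
    "quota": {"cpu": "8", "invalid": True},
    "namespace-dev": {"labels": {"env": "dev"}},
}
failed = reconcile(repo_state, live_state, fake_apply)
print("failed:", failed, "live:", live_state)
```

In this run the new namespace is applied, while the rejected quota change leaves the previously working quota in place until a corrected commit arrives.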

Continuous Integration / Continuous Delivery with Anthos

Digital transformation in the enterprise extends beyond just technology into the organizational and process changes. CI/CD is part of the larger transition to a DevOps mentality that is occurring in the enterprise. Anthos is designed to support CI/CD and DevOps processes and tools by providing integrations into Google Cloud services or allowing operations teams to plug into their existing workflows. Anthos is built to work with Google Cloud CI/CD supporting tools, such as: Cloud Build, Google Container Registry and Source Repositories to provide a foundation out of the box to integrate seamlessly with Google Cloud. However, Anthos also fully supports third-party CI/CD tools for builds, pipelines, deployments and more to ensure that Anthos customers have the flexibility and choice to meet their application needs. For a sample CI/CD model deployment with Anthos, see: https://cloud.google.com/solutions/partners/a-hybrid-cloud-native-devsecops-pipeline-with-jfrog-artifactory-and-gke-on-prem

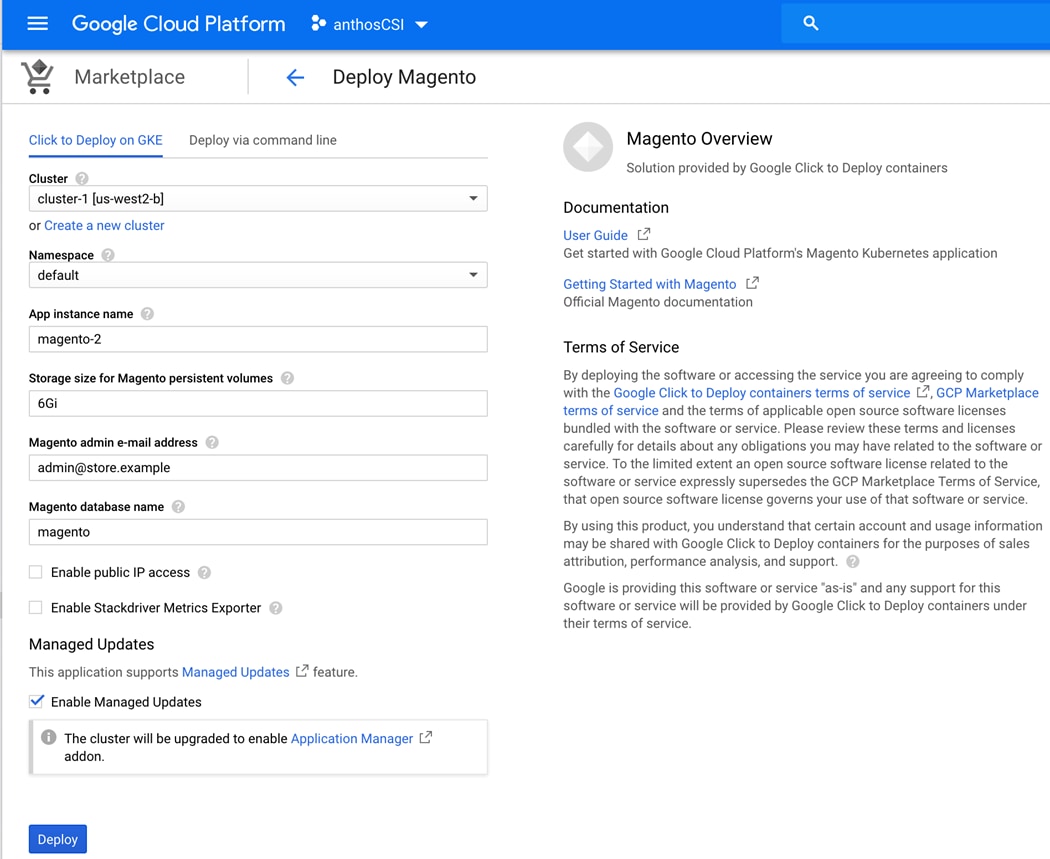

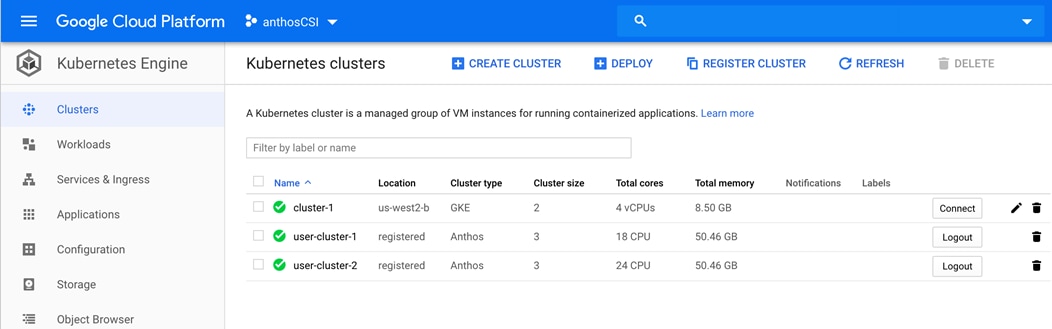

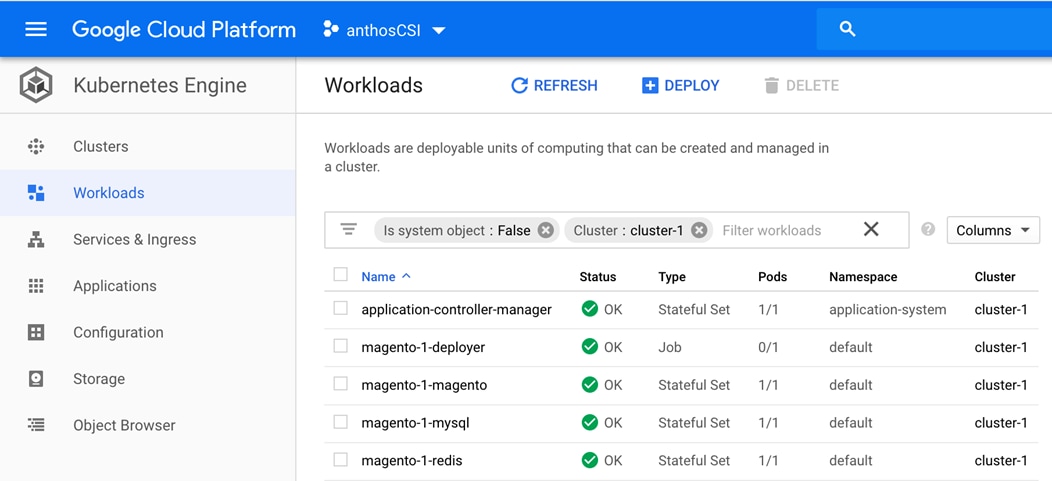

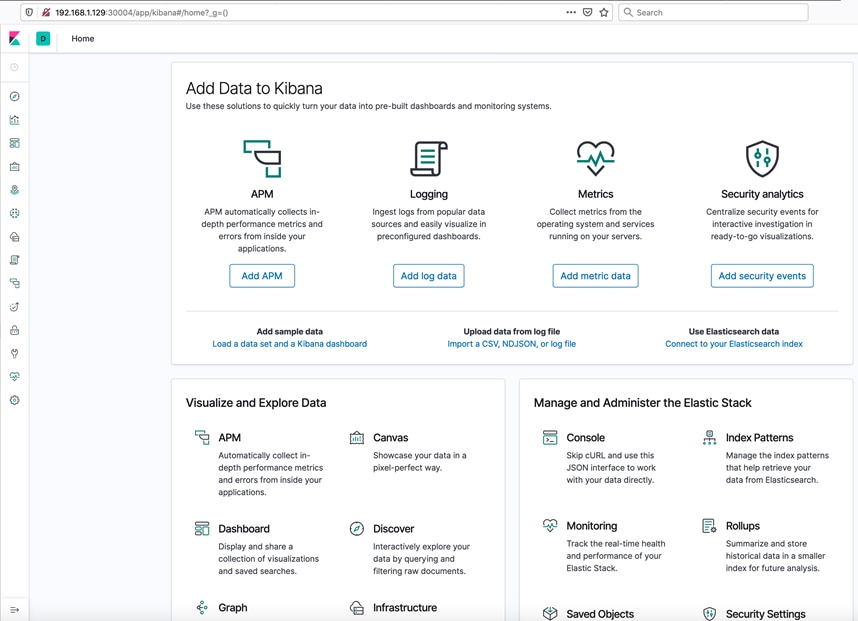

Google Cloud Marketplace for Kubernetes Apps

Kubernetes applications are enterprise-ready containerized solutions with prebuilt deployment templates, featuring portability, simplified licensing, and consolidated billing. They can be run on Anthos, in the cloud, on-premises, or on Kubernetes clusters hosted in other environments. These are not just container images, but open-source, Google-built, and commercial applications that increase developer productivity, available now on GCP Marketplace. The Kubernetes apps in Google Cloud Marketplace include container images and configuration files, such as a kubectl configuration or a Helm chart. When users deploy an app from Google Cloud Marketplace, the Kubernetes resources are created in their cluster, and they can manage the resources as a group.

Deploying a Kubernetes app from the Google Cloud Marketplace is as easy as discovering the application from within the marketplace, selecting the cluster (in the cloud or on-prem) that users wish to deploy to, selecting or creating the desired namespace, setting the application-specific options, and clicking deploy. From there, Marketplace will push the application according to the application provider’s specifications, and users can monitor the progress of the deployment from the Google Cloud Console.

The Google Cloud Marketplace integrates billing with the apps that are deployed. Usage metering follows the application no matter where it is deployed or to which environment it is moved. Billing takes place only through GCP and is consolidated with the rest of the user’s spend, resulting in just one bill. Lastly, users pay only for what they use via the transparent consumption-based billing model. See the published solutions with simple click to deploy to Google Kubernetes Engine, and the flexibility to deploy to Kubernetes clusters on-premises or in third-party clouds at: https://console.cloud.google.com/marketplace/browse?filter=solution-type%3Ak8s.

Cloud Run for Anthos

Cloud Run is Google Cloud’s app modernization platform. As an Anthos integration, it provides custom machine types, VPC networking, and integration with existing Kubernetes-based solutions. Cloud Run for Anthos provides a flexible serverless development platform on Google Kubernetes Engine (GKE) and is powered by Knative, an open-source project that supports serverless workloads on Kubernetes. Cloud Run is built upon this open standard, which enables the portability of applications deployed in the user environment. Cloud Run is also available as a fully managed serverless compute platform, without Kubernetes, that automatically scales stateless containers. Cloud Run is serverless: it abstracts away all infrastructure management, so that users can focus on what matters most, building great applications. With Cloud Run for Anthos, users can run containers on Anthos, which supports both Google Cloud and on-premises environments, and can:

· Write code the way the user wants, using their favorite languages (Go, Python, Java, C#, PHP, Ruby, Node.js, Shell, and others)

· Abstract away all infrastructure management for a simple developer experience

· Only pay when the user is running the code

Requirements

The following sections detail the physical hardware, software revisions, and firmware versions required to install a single cluster of the Cisco HyperFlex system. A maximum cluster size of 64 nodes can be obtained by combining 32 converged nodes and 32 compute-only nodes.

Physical Components

Table 1 HyperFlex System Components of this Solution

| Components | Hardware Used |
| --- | --- |
| Fabric Interconnect | A pair of Cisco UCS 6332-16UP Fabric Interconnects |
| Servers | Four Cisco HyperFlex HXAF240c-M5SX All-Flash rack servers (up to 32 servers are supported) |

For complete server specifications and more information, please refer to the Cisco HyperFlex HX240c M5 Node Spec Sheet.

Table 2 lists the hardware component options for the HX240c-M5SX server model used in this solution.

Table 2 Cisco HyperFlex HX240c M5SX All Flash Node Server Configuration

| Cisco HyperFlex HX240c M5SX All Flash Node options | Hardware required |
| --- | --- |
| Processors | 2 Intel Xeon Gold 6240 CPUs with 18 cores each |
| Memory | 12 x 16 GB = 192 GB |
| Disk controllers | Cisco 12Gbps modular SAS controller |
| Solid-state disks (SSDs) | 1 x 240 GB 6Gbps SATA SSD for housekeeping tasks; 6 x 960 GB 6Gbps SATA SSDs for capacity tier; 1 x 400 GB SAS SSD for caching tier |
| Network | 1 x Cisco UCS VIC 1387 mLOM; 1 x Cisco UCS VIC 1385 PCIe card |
| Boot device | 1 x 240 GB M.2 form-factor 6Gbps SATA SSD |

For additional information on supported hardware components for the HX240c-M5SX server and other HX models, see: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/hx_4_vsi_vmware_esxi.html#_Toc24465141

Software Components

Table 3 lists the software components and their versions used in this solution.

Table 3 Software components and versions

| Component |

Software required |

| VMware hypervisor |

VMware ESXi 6.5.0 U3 13932383 |

| VMware vCenter management server |

VMware vCenter Server 6.5 8307201 |

| Cisco HyperFlex HX Data Platform |

Cisco HyperFlex HX Data Platform 4.0.1b |

| Cisco UCS firmware |

Cisco UCS infrastructure: Cisco UCS C-Series bundle 4.0.4d |

| GKE on-prem Admin Appliance |

gke-on-prem-admin-appliance-vsphere-1.2.2-gke.2.ova |

| GKE bundle |

gke-onprem-vsphere-1.2.2-gke.2-full.tgz |

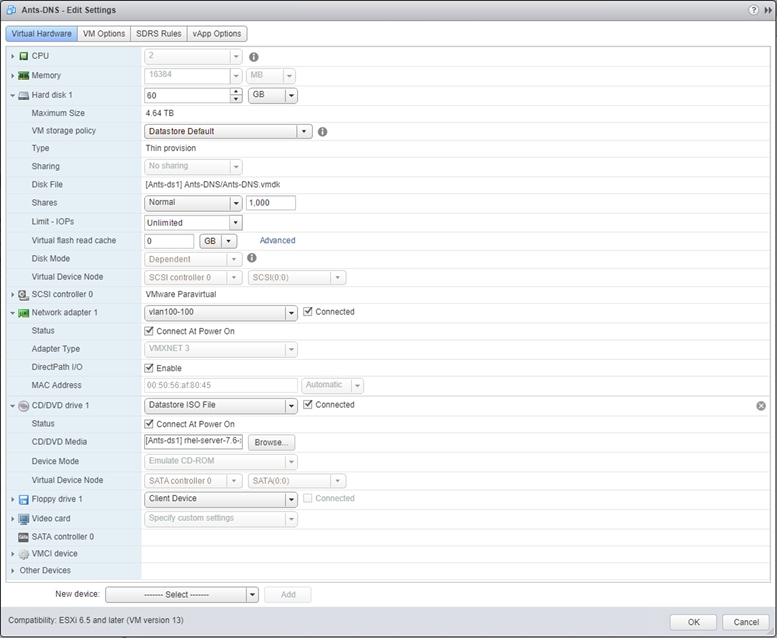

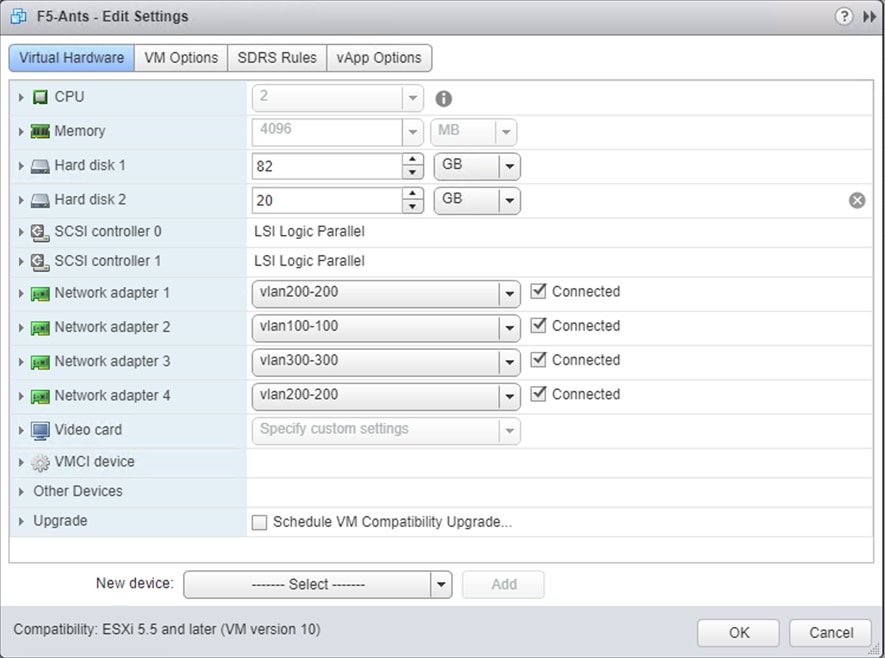

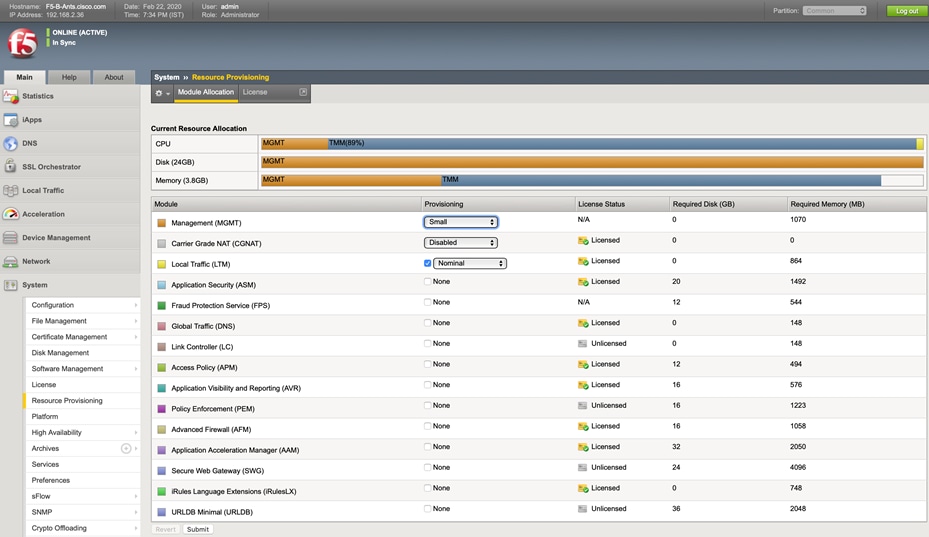

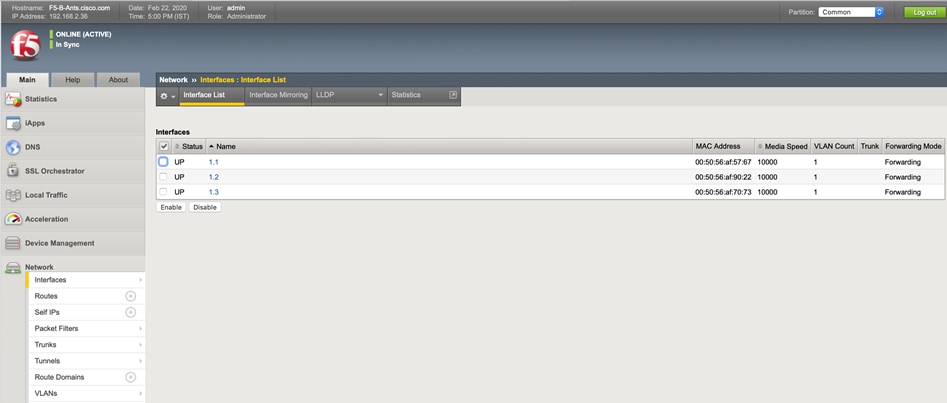

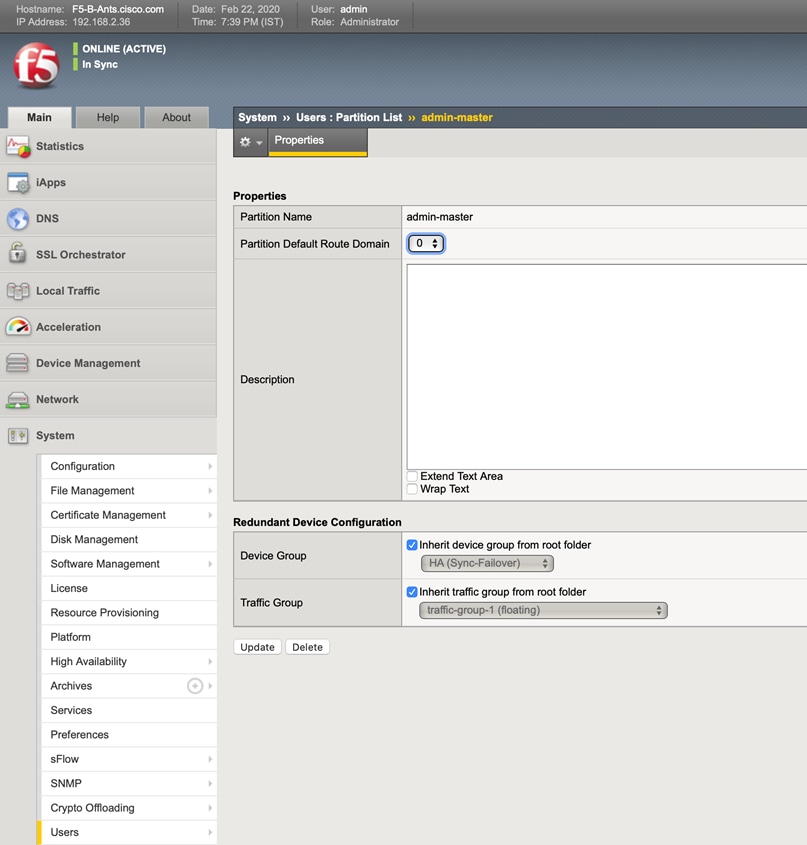

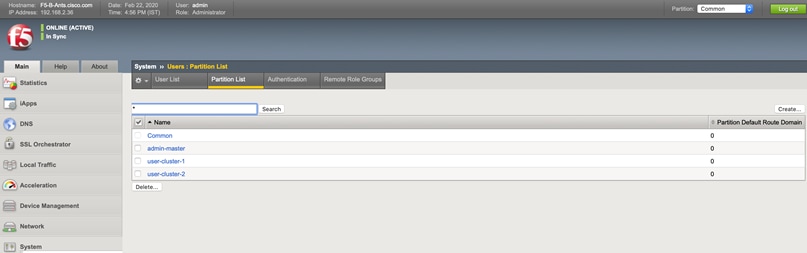

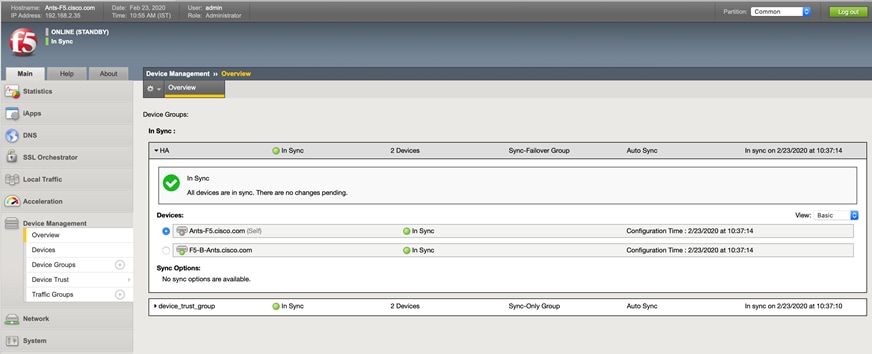

| F5 load balancer |

F5 BIG-IP VE 13.1.3-0.0.6.ALL-scsi.ova |

| Cisco HyperFlex CSI |

HX-CSI version 1.0 |

| Kubernetes version |

Kubernetes version 1.14 |

Users need an enterprise-level GCP account to deploy and manage this solution. For more information, see: https://cloud.google.com/resource-manager/docs/creating-managing-projects#creating_a_project

Although this solution has been validated with Anthos 1.2, Cisco HyperFlex is qualified as an Anthos Ready platform for the latest Anthos versions. For more information, see: https://cloud.google.com/anthos/gke/docs/on-prem/partner-platforms.

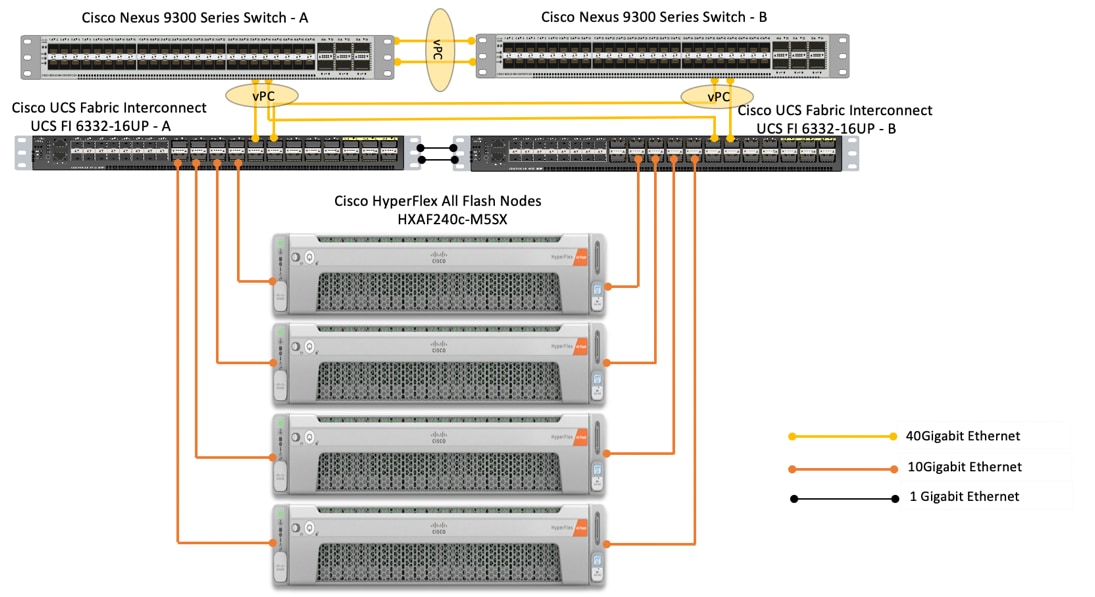

Physical Topology

Topology Overview

The Cisco HyperFlex system is composed of a pair of Cisco UCS Fabric Interconnects along with up to thirty-two HX-Series rack-mount servers per cluster. Up to thirty-two compute-only servers can also be added per HyperFlex cluster. Adding Cisco UCS rack-mount servers and/or Cisco UCS 5108 Blade chassis, which house Cisco UCS blade servers, allows for additional compute resources in an extended cluster design. The two Fabric Interconnects both connect to every HX-Series rack-mount server, every Cisco UCS 5108 blade chassis, and every Cisco UCS rack-mount server. Upstream network connections, also referred to as “northbound” network connections, are made from the Fabric Interconnects to the customer datacenter network at the time of installation.

Figure 19 depicts the reference architecture proposed for this solution. In our solution we have deployed Google Cloud’s Anthos on a four-node Cisco HyperFlex (HX) cluster. Each node in the cluster is a Cisco UCS HXAF240c-M5SX All Flash server. The HX cluster is connected to a pair of Cisco UCS 6332-16UP Fabric Interconnects. Cisco UCS Manager is management software that runs on the Fabric Interconnects. The Cisco UCS Manager UI is highly intuitive and provides complete visibility into the infrastructure components. The FIs are connected to the upstream switches; in our solution we used Cisco Nexus 93180YC-EX switches.

Figure 19 Reference Architecture

Infrastructure services such as DNS, NTP and VMware vCenter are recommended to be installed outside the HyperFlex cluster. Customers can leverage these existing services to deploy and manage the HyperFlex cluster.

The HyperFlex storage solution has several data protection techniques, as explained in detail in the Technology Overview section. One of these is data replication, which needs to be configured at HyperFlex cluster creation. Based on the specific performance and data protection requirements, customers can choose either a replication factor of two (RF2) or three (RF3). In this solution we have configured the test HyperFlex cluster with replication factor 3 (RF3).
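To make the capacity cost of the replication factor concrete, the short Python calculation below uses the hardware of this solution (four nodes, each with 6 x 960 GB capacity SSDs). It is a back-of-the-envelope sketch only: it ignores filesystem and metadata overhead as well as any savings from deduplication and compression, so it should not be read as a sizing result. The function name is hypothetical.

```python
def capacity_after_replication_gb(nodes: int, disks_per_node: int, disk_gb: int, rf: int) -> float:
    """Raw capacity divided by the number of copies kept of every block."""
    raw_gb = nodes * disks_per_node * disk_gb
    return raw_gb / rf


# Values taken from Table 1 and Table 2 of this document.
raw_gb = 4 * 6 * 960
after_rf3 = capacity_after_replication_gb(4, 6, 960, rf=3)
after_rf2 = capacity_after_replication_gb(4, 6, 960, rf=2)
print(f"raw: {raw_gb} GB, RF3: {after_rf3:.0f} GB, RF2: {after_rf2:.0f} GB")
```

With these inputs the raw 23,040 GB shrinks to 7,680 GB at RF3 and 11,520 GB at RF2, before overhead and data reduction are taken into account.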

As described in the Technology Overview section, Cisco HyperFlex distributed file system software runs inside a controller VM, which gets installed on each cluster node. These controller VMs pool and manage all the storage devices and expose the underlying storage as NFS mount points to the VMware ESXi hypervisors. The ESXi hypervisors expose these NFS mount points as datastores to the guest virtual machines to store their data.

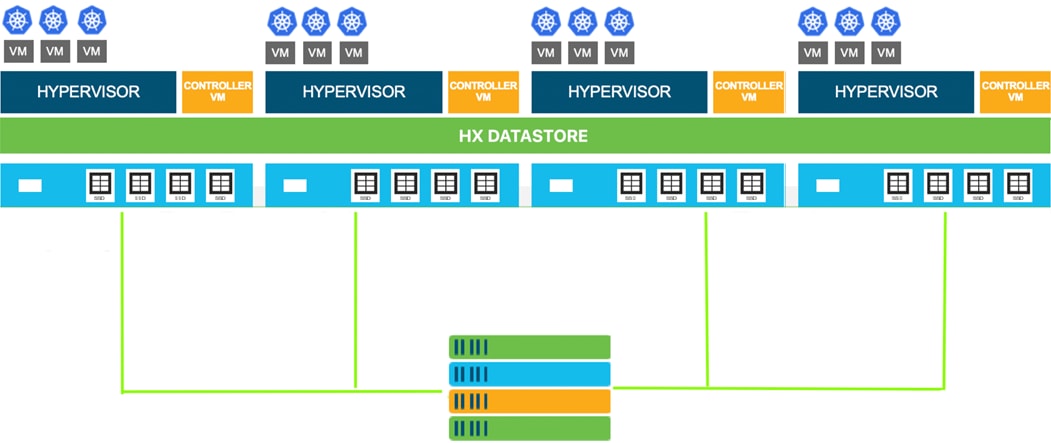

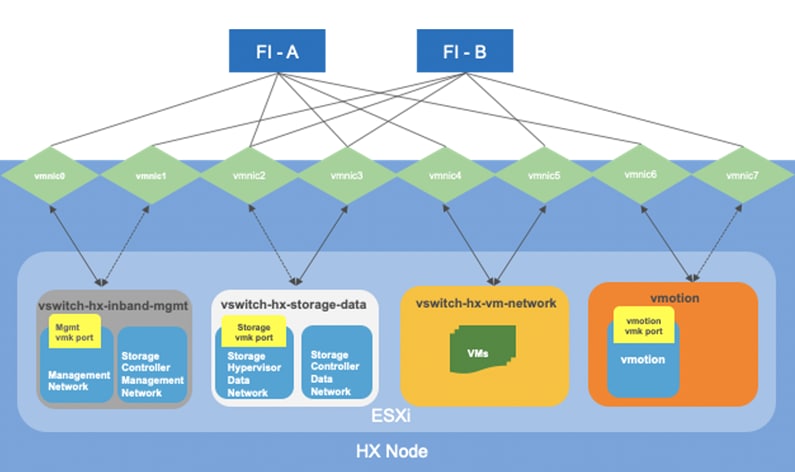

Logical Topology

The logical architecture of this solution is designed to support and run Anthos GKE on-prem within a four node Cisco UCS HXAF240c-M5SX All Flash – HyperFlex cluster, which provides physical redundancy for the containerized workloads running on Anthos VMs.

Figure 20 Cisco HyperFlex Logical Architecture

ESXi Host Design

The following sections detail the design of the elements within the VMware ESXi hypervisors, system requirements, virtual networking and the configuration of ESXi for the Cisco HyperFlex HX Distributed Data Platform.

Virtual Networking Design

The Cisco HyperFlex system has a pre-defined virtual network design at the ESXi hypervisor level. Four different virtual switches are created by the HyperFlex installer, each using two uplinks, which are each serviced by a vNIC defined in the UCS service profile. The vSwitches created are:

· vswitch-hx-inband-mgmt: This is the default vSwitch0, which is renamed by the ESXi kickstart file as part of the automated installation. The default vmkernel port, vmk0, is configured in the standard Management Network port group. The switch has two uplinks, active on fabric A and standby on fabric B, without jumbo frames. A second port group is created for the Storage Platform Controller VMs to connect to with their individual management interfaces. The VLAN is not a native VLAN as assigned to the vNIC template, and is therefore assigned in ESXi/vSphere.

· vswitch-hx-storage-data: This vSwitch is created as part of the automated installation. A vmkernel port, vmk1, is configured in the Storage Hypervisor Data Network port group, which is the interface used for connectivity to the HX Datastores via NFS. The switch has two uplinks, active on fabric B and standby on fabric A, with jumbo frames required. A second port group is created for the Storage Platform Controller VMs to connect to with their individual storage interfaces. The VLAN is not a native VLAN as assigned to the vNIC template, and is therefore assigned in ESXi/vSphere.

· vswitch-hx-vm-network: This vSwitch is created as part of the automated installation. The switch has two uplinks, active on both fabrics A and B, without jumbo frames. The VLAN is not a native VLAN as assigned to the vNIC template, and is therefore assigned in ESXi/vSphere.

· vmotion: This vSwitch is created as part of the automated installation. The switch has two uplinks, active on fabric A and standby on fabric B, with jumbo frames required. The VLAN is not a native VLAN as assigned to the vNIC template, and is therefore assigned in ESXi/vSphere.
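The four vSwitches described above can be summarized as a small lookup table. The following Python sketch is illustrative only: the class and function names are assumptions made for this example and are not part of the HyperFlex installer. It records which fabric is active or standby for each vSwitch and whether jumbo frames are required, then checks that every vSwitch has an uplink on both fabrics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VSwitch:
    name: str
    active_fabrics: tuple    # fabrics carrying traffic in normal operation
    standby_fabrics: tuple   # fabrics used only after a failover
    jumbo_frames: bool       # True where the text says jumbo frames are required


# Values transcribed from the vSwitch list above.
VSWITCHES = [
    VSwitch("vswitch-hx-inband-mgmt", ("A",), ("B",), False),
    VSwitch("vswitch-hx-storage-data", ("B",), ("A",), True),
    VSwitch("vswitch-hx-vm-network", ("A", "B"), (), False),
    VSwitch("vmotion", ("A",), ("B",), True),
]


def check_design(vswitches):
    """Return a list of problems; an empty list means the table is consistent."""
    problems = []
    for vs in vswitches:
        active, standby = set(vs.active_fabrics), set(vs.standby_fabrics)
        if active | standby != {"A", "B"}:
            problems.append(f"{vs.name}: expected one uplink on each fabric")
        if active & standby:
            problems.append(f"{vs.name}: a fabric cannot be both active and standby")
    return problems


def jumbo_switches(vswitches):
    """Names of the vSwitches that require jumbo frames."""
    return [vs.name for vs in vswitches if vs.jumbo_frames]


if __name__ == "__main__":
    print(check_design(VSWITCHES))
    print(jumbo_switches(VSWITCHES))
```

A table like this can be compared against the actual vSphere configuration when verifying an installation by hand.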

Figure 21 ESXi Network Design

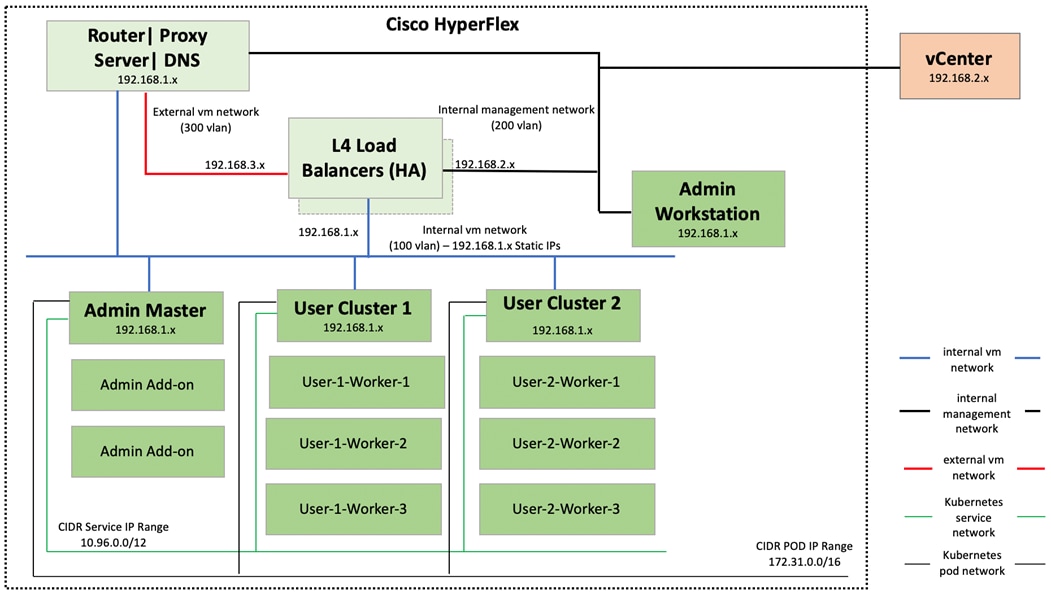

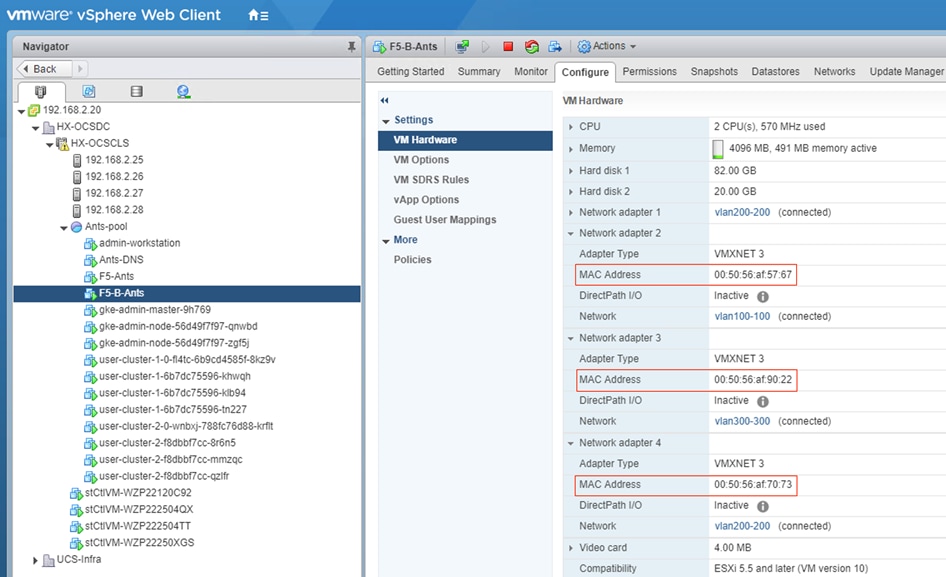

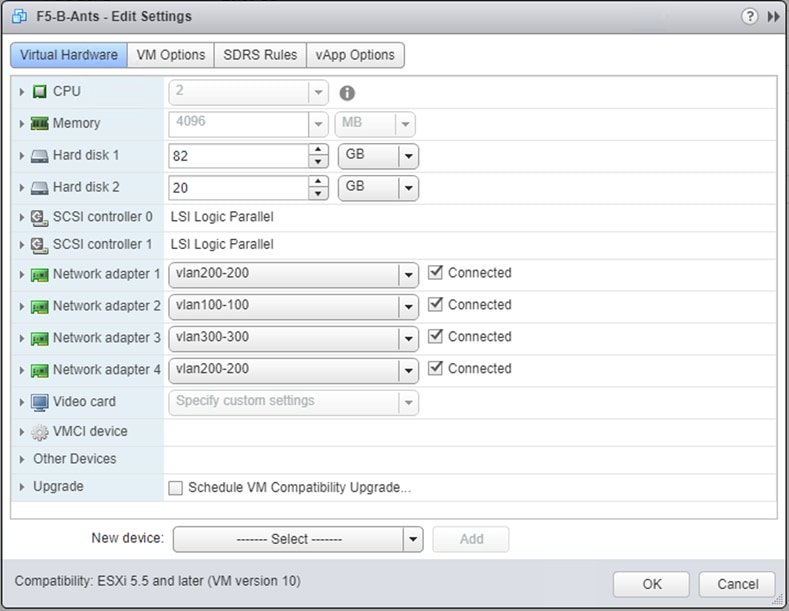

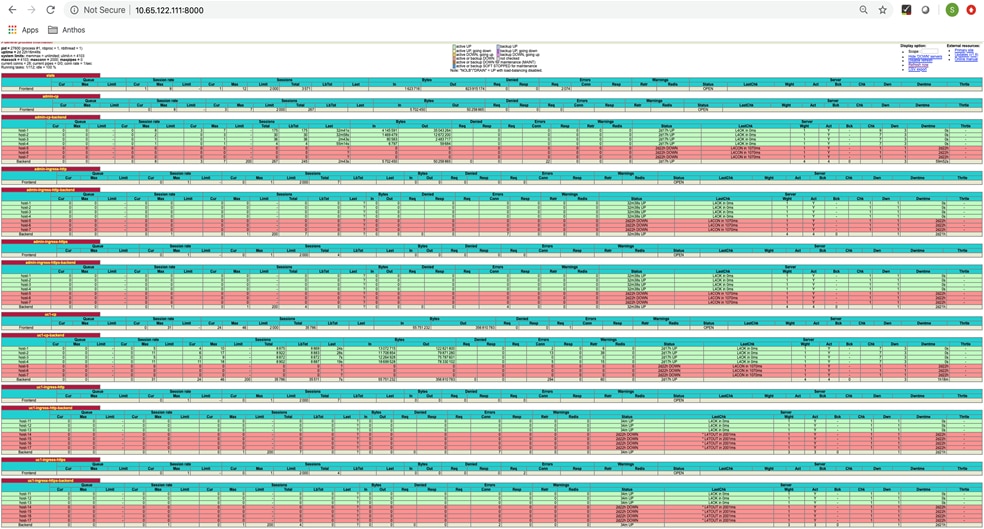

Anthos GKE on-prem Network Topology

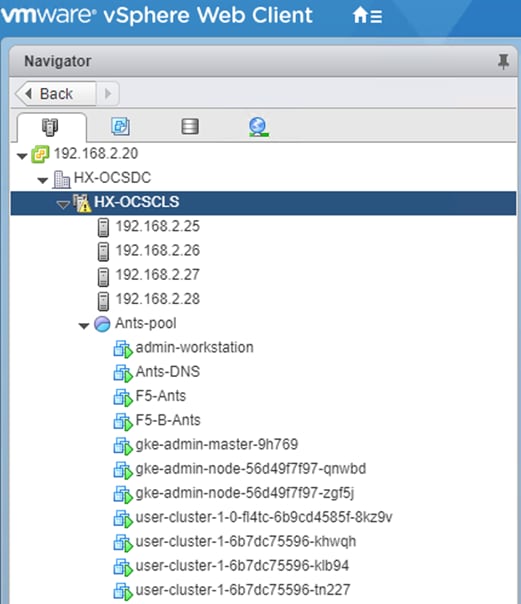

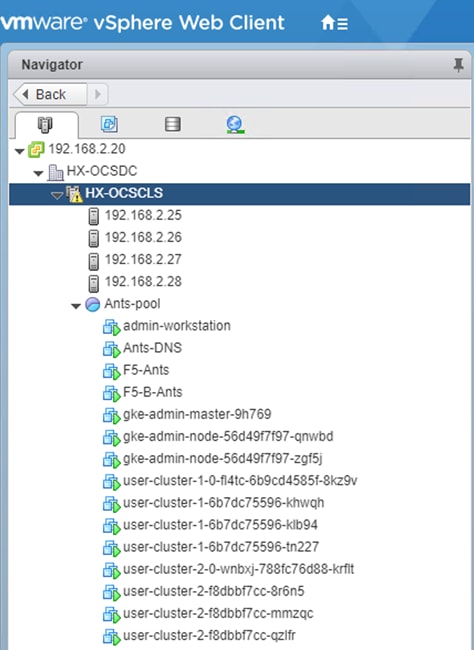

Figure 22 shows the network topology diagram for Anthos GKE deployed on-prem on vSphere on Cisco HyperFlex. All the green boxes in the figure represent VMs within the Cisco HyperFlex platform. The boxes represented in a dark green color are created during Anthos GKE on-prem cluster bring-up.

The admin workstation deployment is automated using Terraform, and this node serves as the administrative host for Anthos cluster creation. The VM that runs the admin control plane is called the admin master. The admin master manages the admin control plane, which includes the Kubernetes API server, the scheduler, and several controllers for the admin cluster, and it also manages the user cluster masters. User masters run on nodes in the admin cluster, not in the user clusters themselves. User clusters are where containerized workloads and services are deployed and run. This layer serves as the scale-out layer for Anthos GKE on-prem.
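The placement rules in the paragraph above can be sketched as a small data model. This Python example is only an illustration: the class names and VM names are hypothetical and do not correspond to any Anthos API. It shows the one point that is easy to miss, namely that a user cluster's master is hosted in the admin cluster while its worker nodes live in the user cluster.

```python
from dataclasses import dataclass, field


@dataclass
class AdminCluster:
    admin_master: str
    # user cluster name -> name of the user master VM hosted in the admin cluster
    user_masters: dict = field(default_factory=dict)


@dataclass
class Topology:
    admin_workstation: str
    admin_cluster: AdminCluster
    # user cluster name -> list of worker node VMs
    user_clusters: dict = field(default_factory=dict)

    def add_user_cluster(self, name, workers):
        """Register a user cluster; its master is placed in the admin cluster."""
        self.admin_cluster.user_masters[name] = f"{name}-master"
        self.user_clusters[name] = list(workers)


if __name__ == "__main__":
    topo = Topology("admin-workstation", AdminCluster("admin-master"))
    topo.add_user_cluster("user-cluster-1", ["user-node-1", "user-node-2"])
    print(topo.admin_cluster.user_masters)
    print(topo.user_clusters)
```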

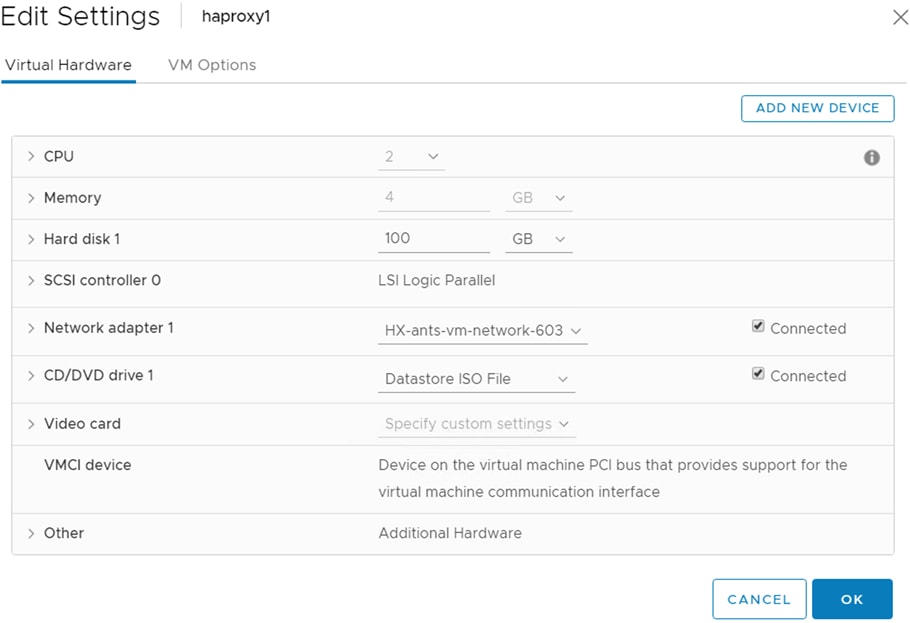

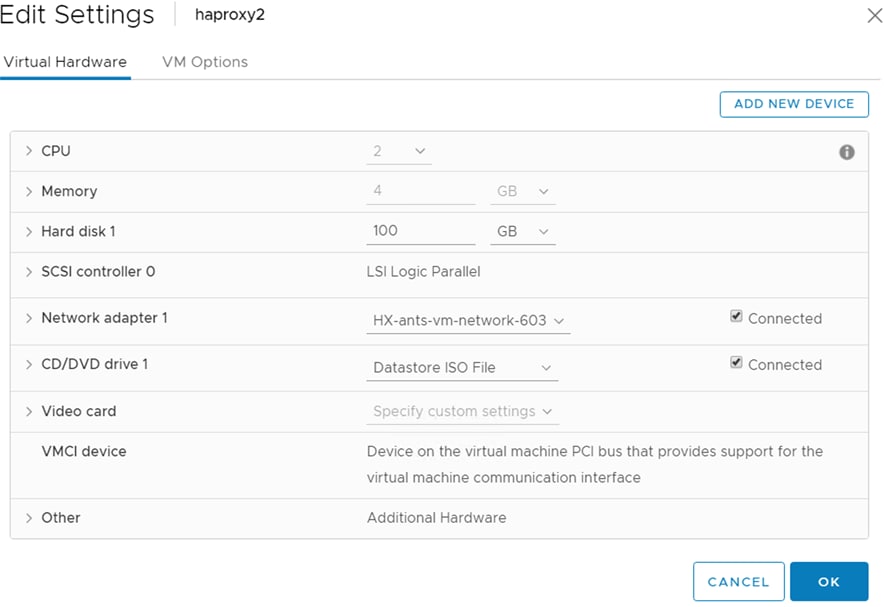

The light green boxes represent VMs that must be created before the cluster creation process as a prerequisite. vCenter is outside the Cisco HyperFlex environment. The vCenter appliance and DNS can be newly created, or users can leverage their existing setup for the Anthos deployment. Anthos GKE requires an L4 load balancer. In addition, the end user's network needs to support outbound traffic to the internet so that the admin workstation and cluster nodes can fetch GKE on-prem components and call required Google services.

Figure 22 Network Diagram for Anthos GKE on-prem Deployment

This section provides a detailed deployment procedure to get Anthos GKE on-prem up and running in the end user's data center.

For the sake of simplicity, the entire deployment process is grouped into the following subsections:

1. Cisco Nexus switch configuration

2. Cisco UCS Manager configuration

3. Cisco HyperFlex installation

4. Anthos GKE on-prem prerequisites

5. Anthos GKE on-prem deployment

For detailed information about the configuration steps for subsections 1 and 2, go to: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/hx_4_vsi_vmware_esxi.html. This solution captures details on installation procedures for subsections 3 to 5.

Cisco HyperFlex Cluster Configuration

This subsection provides detailed steps on installing and configuring the HX cluster.

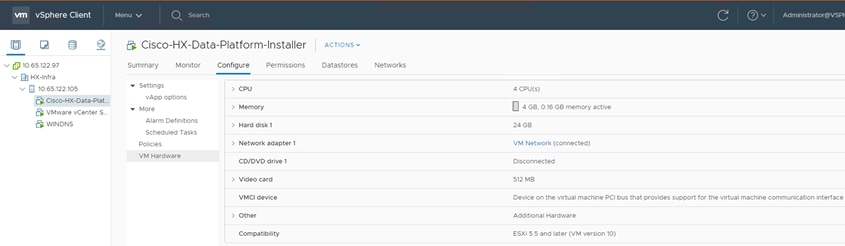

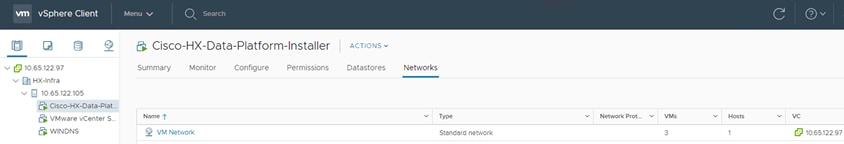

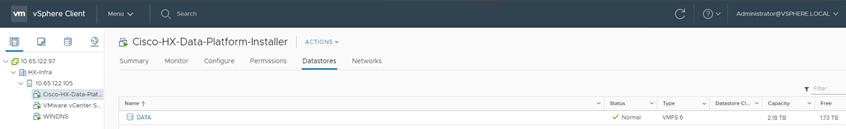

Deploy Cisco HyperFlex Data Platform Installer VM

To deploy the HyperFlex Data Platform (HXDP) installer VM, follow these steps:

1. Download the latest installer OVA from Cisco.com at: https://software.cisco.com/download/home/286319572/type/286305994/release/4.0(2a)?catid=286305510

2. Deploy the OVA to an existing ESXi host in the environment, using either the existing vCenter Thick Client or the vSphere Web Client.

3. Log into vCenter web client via vCenter management IP address: https://<FQDN or IP address for VC>.

4. Under Hosts and Clusters, select the ESXi host on which to deploy the HX DP installer OVA.

5. Right-click the ESXi host and select the option Deploy OVF Template.

6. Browse for the HX DP OVA and click Next.

7. Enter a name for the OVF template to deploy and select the datacenter. Click Next.

8. Review and verify the details of the OVF template to deploy. Click Next.

9. Select the virtual disk format, set the VM storage policy to datastore default, and select the datastore for the OVF deployment. Click Next.

10. Select the destination port group for the network adapter. Click Next.

11. Fill in the parameters requested for hostname, gateway, DNS, IP address, and netmask. Alternatively, leave all of them blank for a DHCP-assigned address.

12. Review the settings selected for the OVF deployment and select the check box for Power on after deployment. Click Finish.
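Step 11 allows either a complete set of static network parameters or all fields left blank for DHCP. A minimal Python sketch of that rule follows. The field names and the function name are hypothetical (they are not the OVF property keys used by the installer OVA), a single DNS server is assumed, and the gateway check is an extra sanity test added for this example.

```python
import ipaddress

# Hypothetical field names used only for this example.
FIELDS = ("hostname", "gateway", "dns", "ip_address", "netmask")


def check_installer_network(params):
    """Return 'dhcp' if every field is blank, or 'static' if all fields are
    filled in and consistent. Raise ValueError otherwise."""
    values = {key: (params.get(key) or "").strip() for key in FIELDS}

    # All blank: the installer VM receives a DHCP-assigned address.
    if not any(values.values()):
        return "dhcp"

    # A partially filled form is neither valid static nor valid DHCP input.
    missing = [key for key, value in values.items() if not value]
    if missing:
        raise ValueError("missing static settings: " + ", ".join(missing))

    # ipaddress raises ValueError itself for malformed addresses or netmasks.
    network = ipaddress.ip_network(
        f"{values['ip_address']}/{values['netmask']}", strict=False
    )
    if ipaddress.ip_address(values["gateway"]) not in network:
        raise ValueError("gateway is outside the installer VM subnet")
    ipaddress.ip_address(values["dns"])
    return "static"


if __name__ == "__main__":
    print(check_installer_network({}))
```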

Cisco HyperFlex Cluster Creation

To create a HyperFlex cluster, follow these steps:

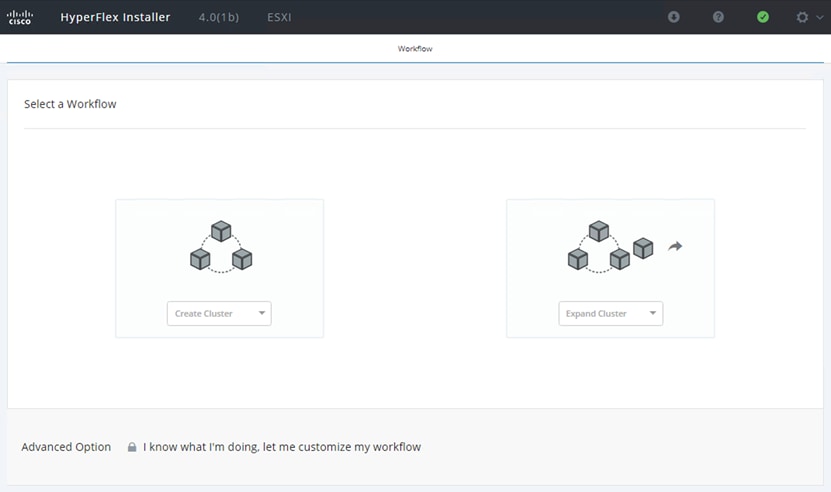

1. Select the workflow for cluster creation to deploy a new HyperFlex cluster on Cisco HXAF240c-M5SX nodes.

2. On the credentials page, enter the access details for Cisco UCS Manager and vCenter server. Click Continue.

3. Choose the top-most check box at the top right corner of the HyperFlex installer to select all unassociated servers. Click Continue after completing server selection.

4. Enter the details for the Cisco UCS Manager configuration:

a. Enter VLAN ID for hx-inband-mgmt, hx-storage-data, hx-vmotion, vm-network.

b. MAC Pool Prefix: The prefix to use for each HX MAC address pool. Please select a prefix that does not conflict with any other MAC address pool across all Cisco UCS domains.

c. The blocks in the MAC address pool will have the following format:

§ ${prefix}:${fabric_id}${vnic_id}:${service_profile_id}

§ The first three bytes should always be “00:25:B5”.

5. Enter a range of IP addresses to create a block of IP addresses for external management and access to CIMC/KVM.

6. The Cisco UCS firmware version is set to 4.0.x, which is the Cisco UCS Manager release required for HyperFlex 4.0.x installation.

7. Enter the HyperFlex cluster name.

8. Enter the Org name to be created in Cisco UCS Manager. Click Continue.

9. To configure the Hypervisor settings, follow these steps:

a. In the Configure common Hypervisor Settings section, enter:

§ Subnet Mask

§ Gateway

§ DNS server(s)

b. In the Hypervisor Settings section:

§ Select check box Make IP Address and Hostnames Sequential if they are following in sequence.

§ Provide the IP Address.

§ Provide the Host Name or enter Static IP address and Host Names manually for each node. Click Continue.

10. To add the IP addresses, follow these steps:

a. On the IP Addresses page, check the box Make IP Addresses Sequential or enter the IP address manually for each node for the following requested values:

§ Storage Controller/Management

§ Hypervisor/Data

§ Storage Controller/Data

b. Enter subnet and gateway details for the Management and Data subnets configured. Click Continue to proceed.

11. On the Cluster Configuration page, enter the following:

a. Cluster Name

b. Set Replication Factor: 2 or 3

c. Controller VM password

d. vCenter Configuration

§ vCenter Datacenter name

§ vCenter Cluster name

e. System Services

§ DNS Server(s)

§ NTP Server(s)

§ Time Zone

f. Auto Support

§ Click the check box for Enable Auto Support

§ Mail Server

§ Mail Sender

§ ASUP Recipient(s)

g. Advanced Networking

§ Management vSwitch

§ Data vSwitch

h. Advanced Configuration

§ Click the check box to Optimize for VDI only deployment

§ Enable jumbo Frames on Data Network

§ Clean up disk partitions (if the cluster was in use previously)

i. vCenter Single-Sign-On server

12. The configuration details can be exported to a JSON file by clicking the down arrow icon in the top right corner of the Web browser page.

13. Review the configuration details in the Configuration pane on the right side of the page. Verify the IP addresses entered on the Credentials page, the server selection for the cluster deployment and creation workflow, the Cisco UCS Manager configuration, the Hypervisor configuration, and the IP addresses.

14. Click Start after verifying the details.

When the installation workflow begins, it will go through the Cisco UCS Manager validation.

15. After a successful validation, the workflow continues with the Cisco UCS Manager configuration.

16. After a successful Cisco UCS Manager configuration, the installer proceeds with the Hypervisor configuration.

17. After a successful Hypervisor configuration, the deploy validation task runs. It checks for required components and accessibility before the Deploy task is performed on the Storage Controller VM.

18. The installer performs the deployment task after successfully validating the Hypervisor configuration.

19. After the ESXi host configuration and the Controller VM software components are deployed successfully, the HyperFlex installer runs a validation check prior to creating the cluster.

20. After a successful validation, the installer creates and starts the HyperFlex cluster service.

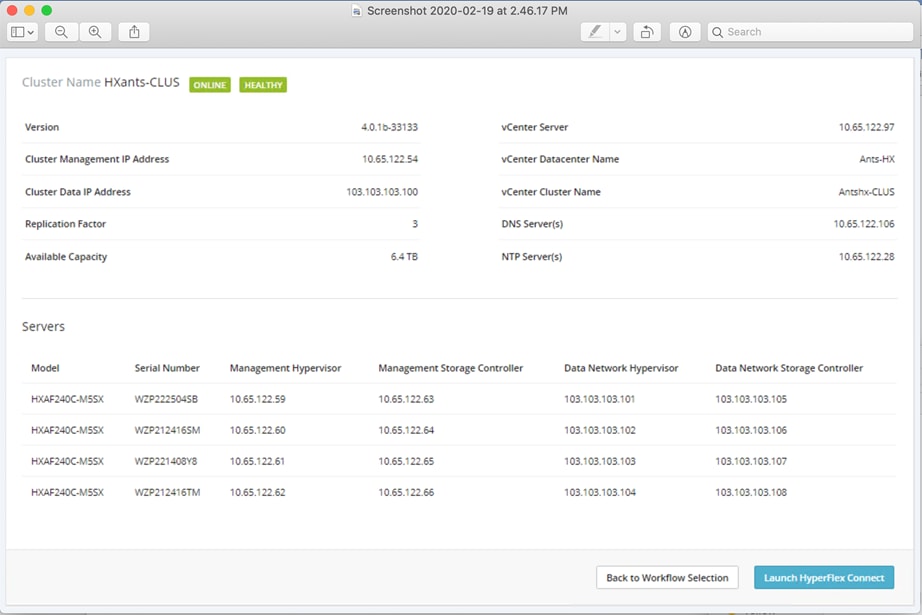

21. After a successful HyperFlex Installer VM workflow completion, the installer GUI provides a summary of the cluster that has been created.

22. Click Launch vSphere Web Client.
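The MAC address pool format from step 4 of the procedure above can be illustrated with a short Python sketch. It rests on several assumptions that the text does not state: the prefix is taken to be four bytes (the fixed 00:25:B5 plus one user-chosen byte), the fabric ID is the letter A or B, the vNIC ID is a single hexadecimal digit, and the service profile ID fills the last byte. The function name and the sample prefix byte ED are hypothetical.

```python
import re


def hx_mac(prefix, fabric_id, vnic_id, service_profile_id):
    """Build one MAC address as prefix:fabric+vnic:service_profile.

    Assumed layout (not confirmed by the text): a 4-byte prefix, one byte
    made of the fabric letter and a vNIC digit, one byte for the profile.
    """
    prefix = prefix.upper()
    # The first three bytes must always be 00:25:B5.
    if not re.fullmatch(r"00:25:B5:[0-9A-F]{2}", prefix):
        raise ValueError("prefix must look like 00:25:B5:XX")
    if fabric_id not in ("A", "B"):
        raise ValueError("fabric_id must be 'A' or 'B'")
    if not 0 <= vnic_id <= 0xF:
        raise ValueError("vnic_id must fit in one hex digit")
    if not 0 <= service_profile_id <= 0xFF:
        raise ValueError("service_profile_id must fit in one byte")
    return f"{prefix}:{fabric_id}{vnic_id:X}:{service_profile_id:02X}"


if __name__ == "__main__":
    # "ED" is an arbitrary example value for the user-chosen fourth byte.
    print(hx_mac("00:25:b5:ed", "A", 1, 1))
```

Checking a candidate prefix this way before installation is one way to avoid overlapping with MAC address pools in other Cisco UCS domains.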

The Cisco HyperFlex installer creates and configures a controller VM on each converged node. The controller VMs are named using the convention “stctlvm-<Serial Number for Cisco UCS Node>”.

Do not change the name or any resource configuration for the controller VM.
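Because the controller VMs must not be renamed or resized, it can help to identify them by name before running any bulk VM operation. The following Python sketch assumes the naming convention quoted above; the helper names and the serial number are hypothetical placeholders, and the comparison ignores letter case as a precaution.

```python
CONTROLLER_PREFIX = "stctlvm-"


def controller_vm_name(serial_number):
    """Expected controller VM name for a node with the given serial number."""
    serial = serial_number.strip()
    if not serial:
        raise ValueError("serial number must not be empty")
    return f"{CONTROLLER_PREFIX}{serial}"


def is_controller_vm(vm_name):
    """True if the VM name follows the controller VM naming convention."""
    return vm_name.lower().startswith(CONTROLLER_PREFIX)


def safe_to_modify(vm_names):
    """Filter out controller VMs from a list of VM names."""
    return [name for name in vm_names if not is_controller_vm(name)]


if __name__ == "__main__":
    # "SERIAL0001" is a placeholder, not a real Cisco UCS serial number.
    names = [controller_vm_name("SERIAL0001"), "admin-workstation"]
    print(safe_to_modify(names))
```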

For more information about the Cisco HyperFlex installation steps with screenshots, see: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/hyperflex_30_vsi_esxi.html#_Toc514225549. Note that this link is for Cisco HyperFlex 3.0; users can refer to this guide to verify their configuration against the screenshots.

Run Cluster Post Installation Script

After a successful installation of HyperFlex cluster, run the post_install script by logging into the Data Platform Installer VM via SSH, using the credentials configured earlier.

A built-in post install script automates basic final configuration tasks like enabling HA/DRS on HyperFlex cluster, configuring VMkernel for vMotion interface, creating datastore for ESXi logging, and so on.

To run the script, use any tool of choice to make a secure connection to the Cisco HyperFlex Data Platform installer using its IP address and port 22.

1. Authenticate with the credentials provided earlier. (The user name is root, with the user's password if the defaults were not changed.)

2. When authenticated, enter post_install at the command prompt, then press Enter.

3. Provide a valid vCenter administrator user name and password, and the vCenter URL or IP address.

4. Type y for yes to each of the prompts that follow. Choose yes at the Add VM network VLANs prompt if more VLANs are needed; this is required to allow the additional VLANs used by the three-arm interfaces created for the F5 load balancer.

5. Provide the requested user credentials, the vMotion netmask, VLAN ID and an IP address on the vMotion VLAN for each host when prompted for the vmkernel IP.
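The connection described above can be opened with any SSH client. As a minimal sketch, the Python helper below only builds the OpenSSH command line for it; the function name and the example IP address are hypothetical. It assumes the OpenSSH client is installed and that post_install can be started directly as a remote command, which may not hold in every environment. If it does not, log in first and type post_install at the prompt as in step 2.

```python
import shlex


def post_install_command(installer_ip, user="root", port=22):
    """Return the ssh argument list that starts post_install on the installer VM.

    -t requests a terminal because the script asks its questions interactively.
    """
    return ["ssh", "-t", "-p", str(port), f"{user}@{installer_ip}", "post_install"]


if __name__ == "__main__":
    # 192.0.2.10 is a documentation address standing in for the installer VM.
    print(shlex.join(post_install_command("192.0.2.10")))
```

The returned list can be passed to subprocess.run when the session should be launched from a script rather than typed by hand.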

For more details on running the post installation script, go to: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/hx_4_vsi_vmware_esxi.html#_Toc24465202

Log into HyperFlex Connect

To configure the Cisco HyperFlex Cluster, follow these steps:

1. Log into HX Installer VM through a web browser: http://<Installer_VM_IP_Address>

2. The HyperFlex Connect dashboard shows details about the cluster status, capacity, and performance.

3. The System Information page provides details on System Overview, Nodes, and Disks.

4. Click Datastores, then click Create Datastore to create a datastore for the Anthos GKE on-prem deployment.

Adding a Converged Node through HX Installer

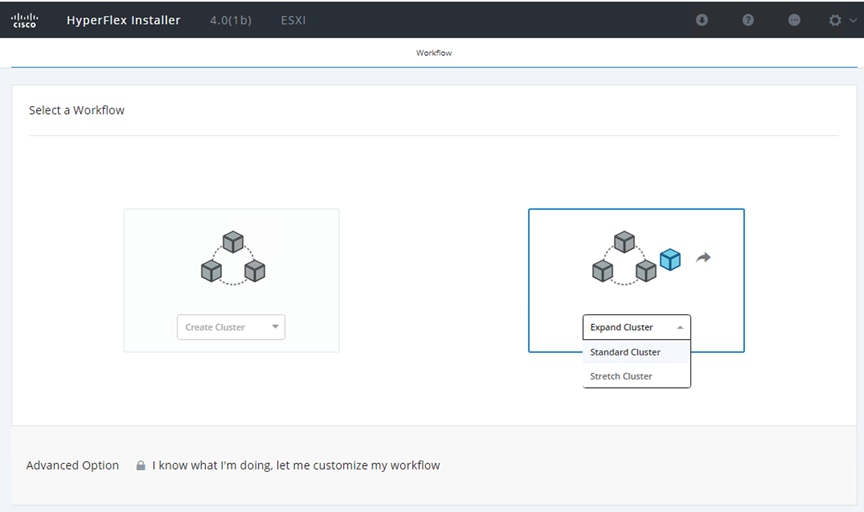

Cisco HyperFlex allows users to expand their cluster non-disruptively. Converged, compute-only, and storage nodes can be scaled independently. This solution shows how a converged node can be added to the existing cluster.

The HyperFlex installer has a wizard for Cluster Expansion with converged nodes. This procedure is very similar to the initial HyperFlex cluster setup. The following process assumes a new Cisco HX node has been ordered, therefore it is pre-configured from the factory with the proper hardware, firmware, and ESXi hypervisor installed. To add converged storage nodes to an existing HyperFlex cluster, follow these steps:

1. On the HyperFlex installer webpage click the dropdown menu for Expand Cluster, then click Standard Cluster.

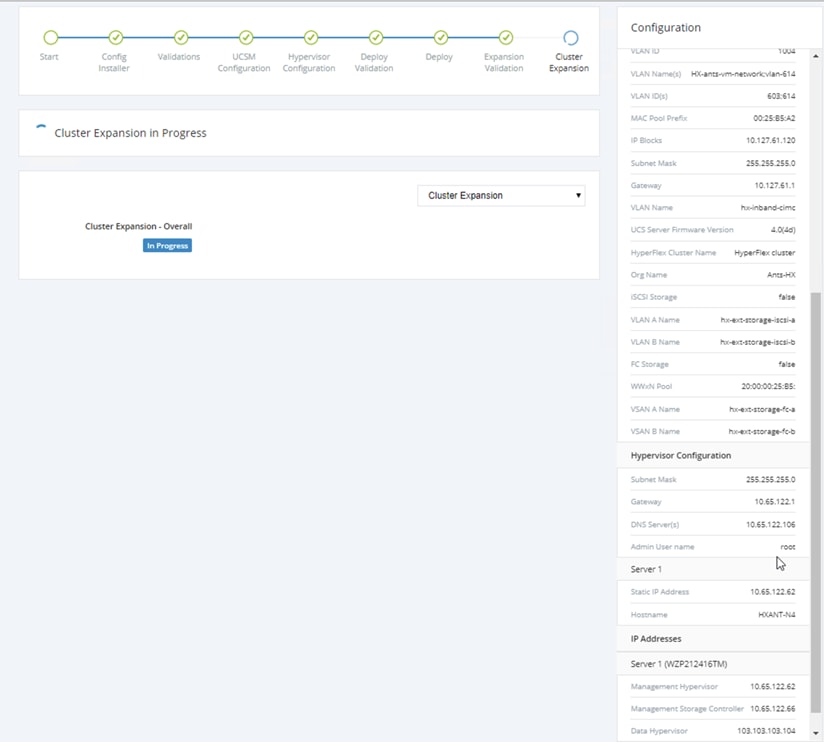

2. Enter the Cisco UCS Manager and vCenter DNS hostname or IP address, the admin usernames, and the passwords for the UCS domain of the existing cluster nodes. Optionally, the user can import a JSON file that has the configuration information. Click Continue.

3. Select the HX cluster to expand and enter the cluster management password, then click Continue.

4. Select the unassociated HX-series server to be added to the existing HX cluster, then click Continue.

5. On the UCSM Configuration page, all the settings should be pre-populated with the correct values for the existing cluster. The only required change is to create an additional IP address block for the hx-ext-mgmt IP address pool. Enter a range of IP addresses sufficient to assign to the new server, along with the subnet mask and gateway address, then click Continue.

6. Enter the subnet mask, gateway, DNS, and IP addresses for the Hypervisors (ESXi hosts) as well as host names, then click Continue. The IPs will be assigned through Cisco UCS Manager to the ESXi systems.

7. Enter the additional IP addresses for the Management and Data networks of the new node. Enter the current password that is set on the Controller VMs. Select Clean up disk partitions if this is not a first-time installation. Click Start.

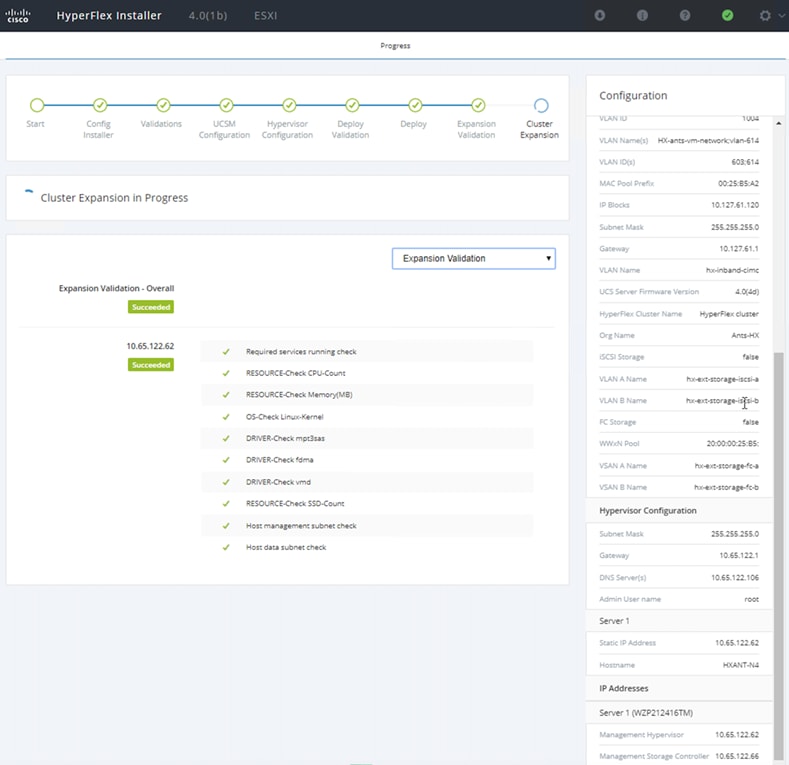

8. Validation of the configuration now starts and automatically proceeds with the configuration process if there are no warnings or errors.

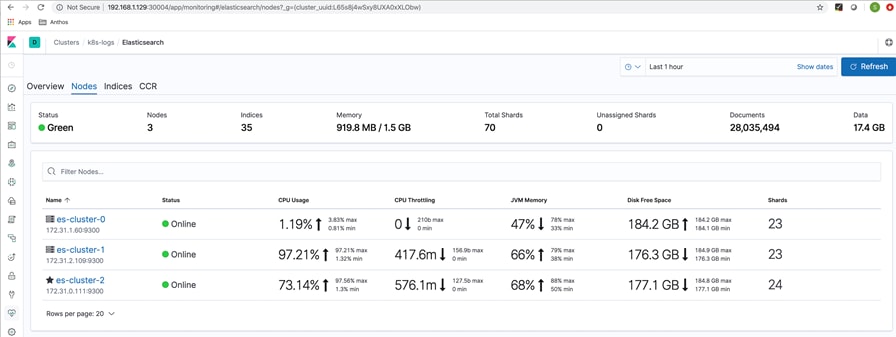

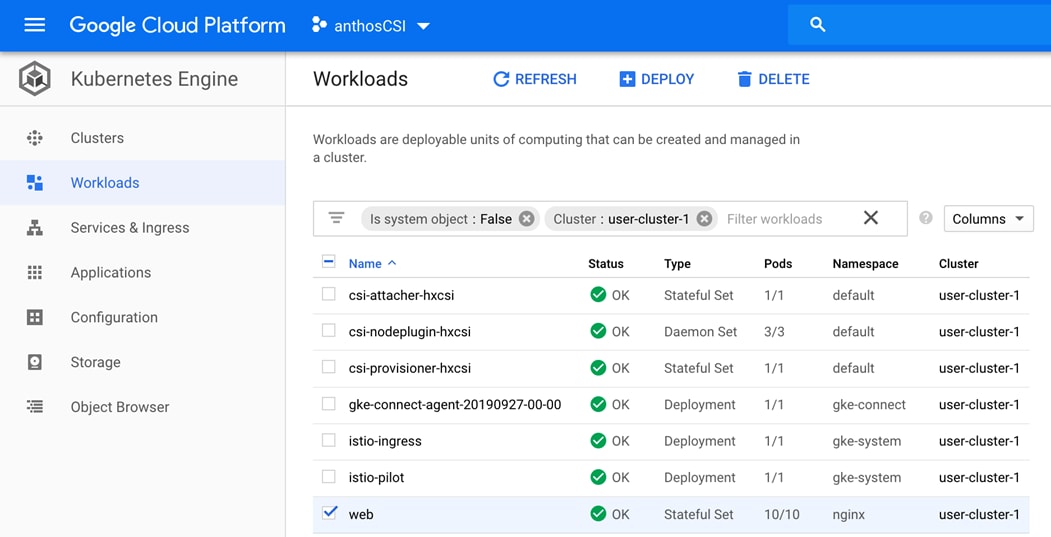

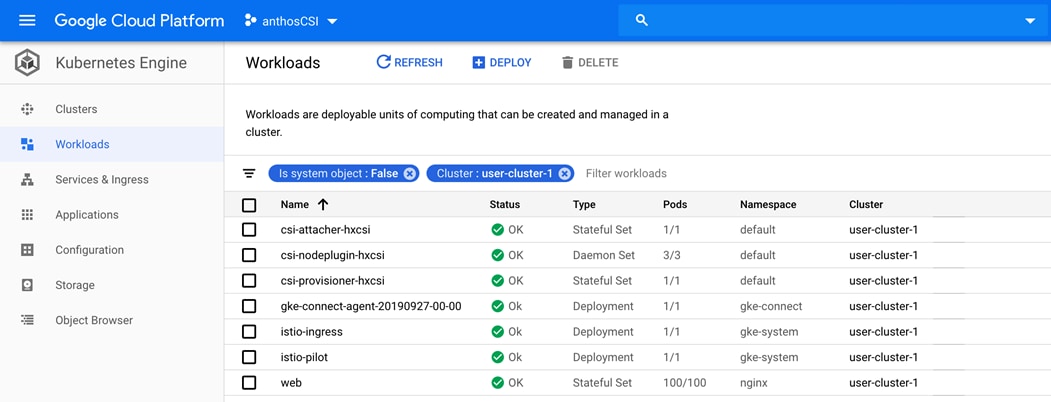

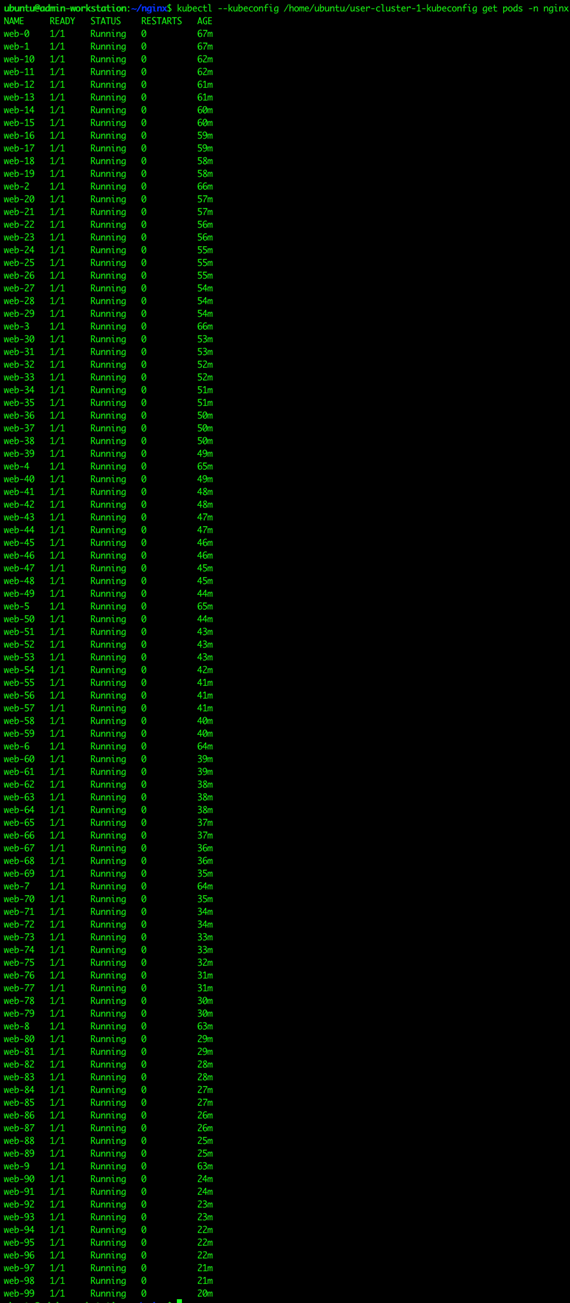

9. Once the HX installer completes the deployment, the summary screen shows the node added to the existing cluster.
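Step 5 of the expansion procedure asks for an IP address block that is large enough for the new server and that fits the given subnet mask and gateway. A rough way to check such a block before entering it in the installer is sketched below in Python. The function name and all addresses are hypothetical, and the exclusion of the gateway, network, and broadcast addresses is a conservative assumption for this example rather than documented installer behavior.

```python
import ipaddress


def ext_mgmt_block(first_ip, last_ip, netmask, gateway, new_servers):
    """Return the usable addresses in [first_ip, last_ip], or raise ValueError
    if the block is malformed or too small for the number of new servers."""
    first = ipaddress.ip_address(first_ip)
    last = ipaddress.ip_address(last_ip)
    gateway_addr = ipaddress.ip_address(gateway)
    network = ipaddress.ip_network(f"{first_ip}/{netmask}", strict=False)

    if last < first:
        raise ValueError("the last address precedes the first address")
    if last not in network or gateway_addr not in network:
        raise ValueError("the block and the gateway must share one subnet")

    # Walk the inclusive range and skip addresses that cannot be assigned.
    reserved = {gateway_addr, network.network_address, network.broadcast_address}
    usable = []
    for value in range(int(first), int(last) + 1):
        address = ipaddress.ip_address(value)
        if address not in reserved:
            usable.append(str(address))

    if len(usable) < new_servers:
        raise ValueError("the block is too small for the new servers")
    return usable


if __name__ == "__main__":
    print(ext_mgmt_block("192.0.2.50", "192.0.2.51", "255.255.255.0", "192.0.2.1", 1))
```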