FlexPod Datacenter with Microsoft Hyper-V Windows Server 2016 and Cisco ACI 3.0

Deployment Guide for FlexPod Datacenter with Microsoft Hyper-V Windows Server 2016, Cisco ACI 3.0, and NetApp AFF A-Series

Last Updated: May 28, 2018

About the Cisco Validated Design (CVD) Program

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2018 Cisco Systems, Inc. All rights reserved.

Table of Contents

Deployment Hardware and Software

Infrastructure Servers Prerequisites

Cisco Application Policy Infrastructure Controller (APIC) Verification

Initial ACI Fabric Setup Verification

Setting Up Out-of-Band Management IP Addresses for New Leaf and Switches

Verifying Time Zone and NTP Server

Verifying BGP Route Reflectors

Set Up Fabric Access Policy Setup

Create LLDP Interface Policies

Create BPDU Filter/Guard Policies

Create Virtual Port Channels (vPCs)

VPC – UCS Fabric Interconnects

Configuring Common Tenant for Management Access

Create Security Filters in Tenant Common

Create Application Profile for IB-Management Access

Create Application Profile for Host Connectivity

NetApp All Flash FAS A300 Controllers

Complete Configuration Worksheet

Set Onboard Unified Target Adapter 2 Port Personality

Set Auto-Revert on Cluster Management

Set Up Management Broadcast Domain

Set Up Service Processor Network Interface

Disable Flow Control on 10GbE and 40GbE Ports

Disable Unused FCoE Capability on CNA Ports

Configure Network Time Protocol

Configure Simple Network Management Protocol

Enable Cisco Discovery Protocol

Create Jumbo Frame MTU Broadcast Domains in ONTAP

Create Storage Virtual Machine

Modify Storage Virtual Machine Options

Create Load-Sharing Mirrors of SVM Root Volume

Create Block Protocol Service(s)

Create Gold Management Host Boot LUN

Create Witness and iSCSI Datastore LUNs

Add Infrastructure SVM Administrator

Upgrade Cisco UCS Manager Software to Version 3.2(1d)

Add Block of IP Addresses for KVM Access

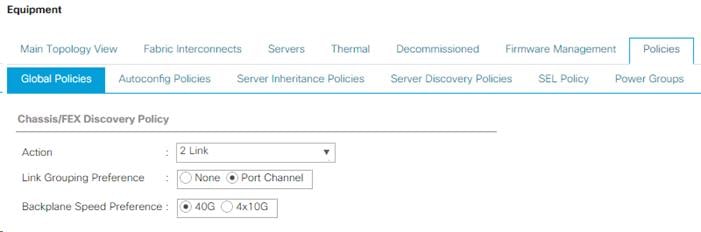

Edit Policy to Automatically Discover Server Ports

Verify Server and Enable Uplink Ports

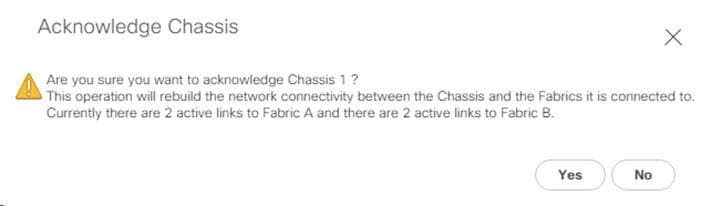

Acknowledge Cisco UCS Chassis and FEX

Re-Acknowledge Any Inaccessible C-Series Servers

Create Uplink Port Channels to Cisco Nexus 9332 Switches

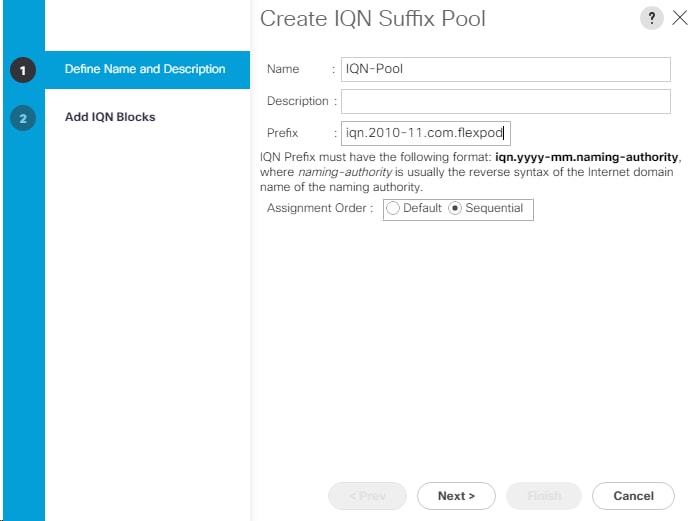

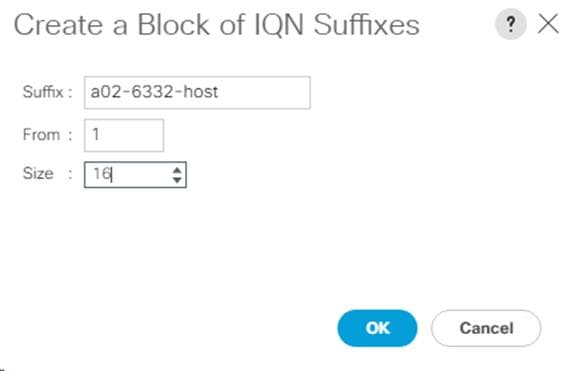

Create an IQN Pool for iSCSI Boot

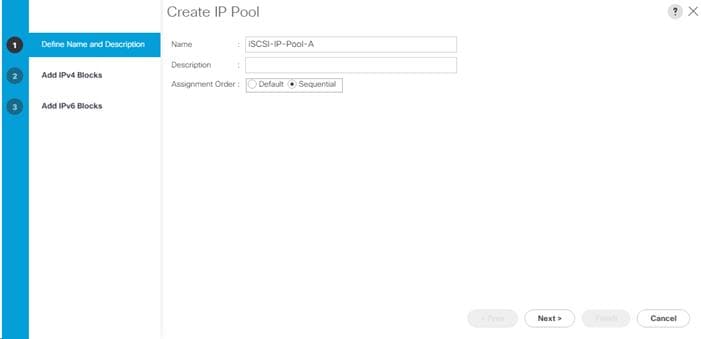

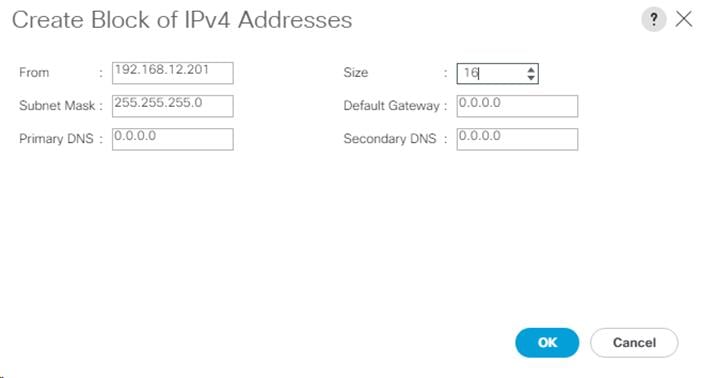

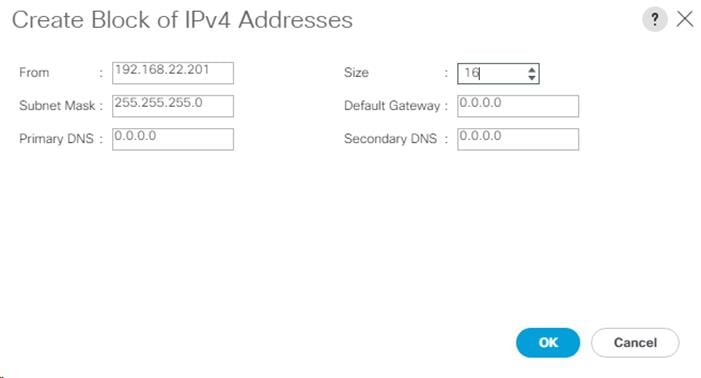

Create iSCSI Boot IP Address Pools

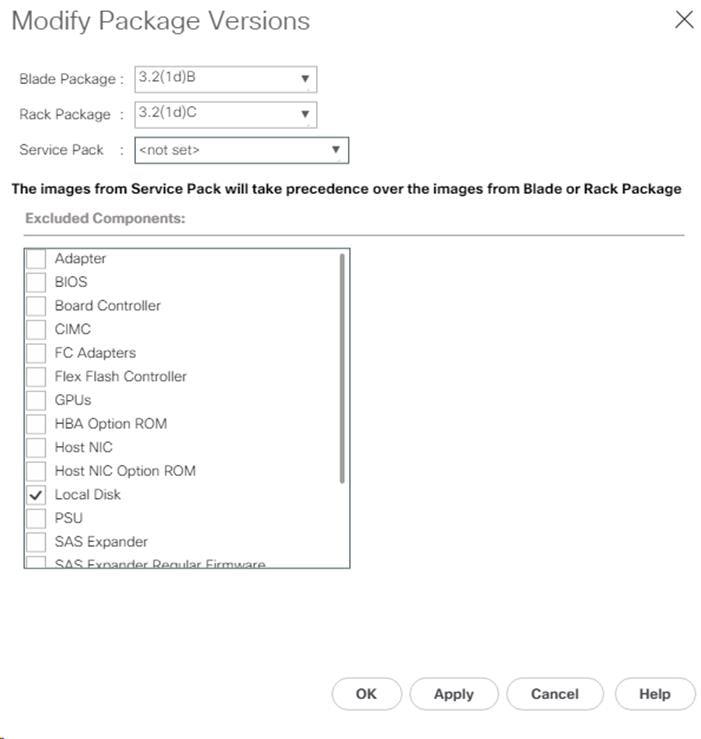

Modify Default Host Firmware Package

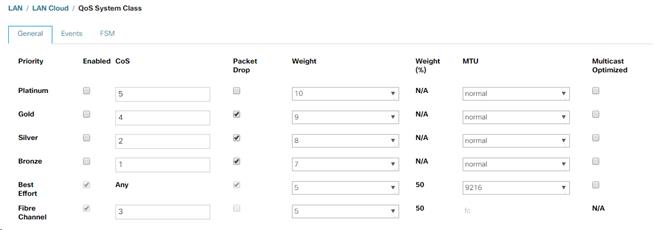

Set Jumbo Frames in Cisco UCS Fabric

Create Local Disk Configuration Policy (Optional)

Create Server Pool Qualification Policy (Optional)

Update the Default Maintenance Policy

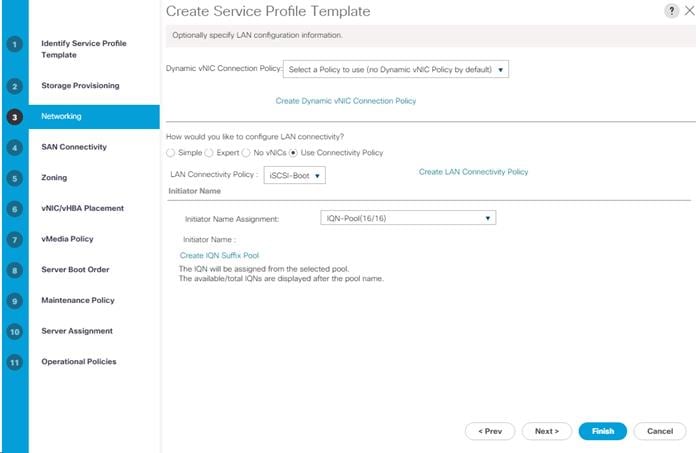

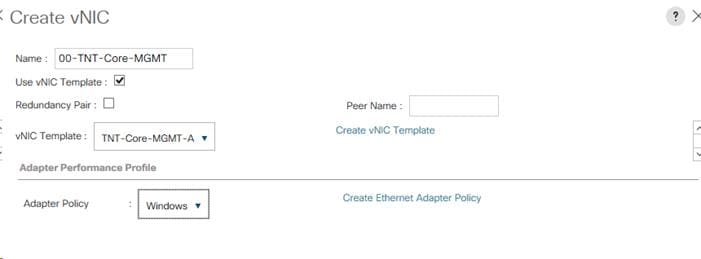

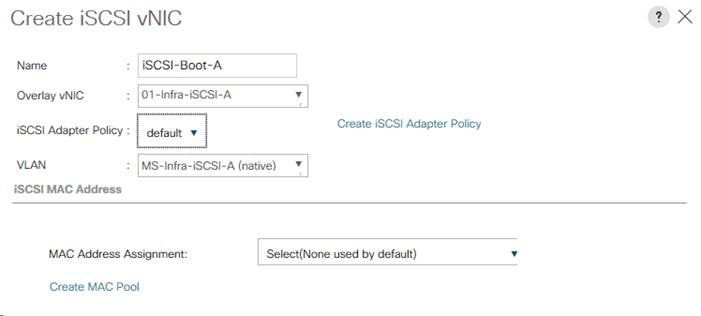

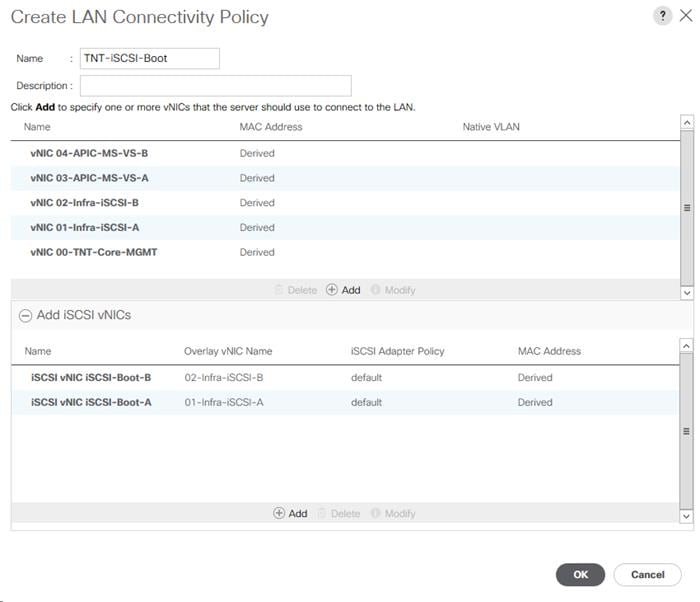

Create LAN Connectivity Policy for iSCSI Boot

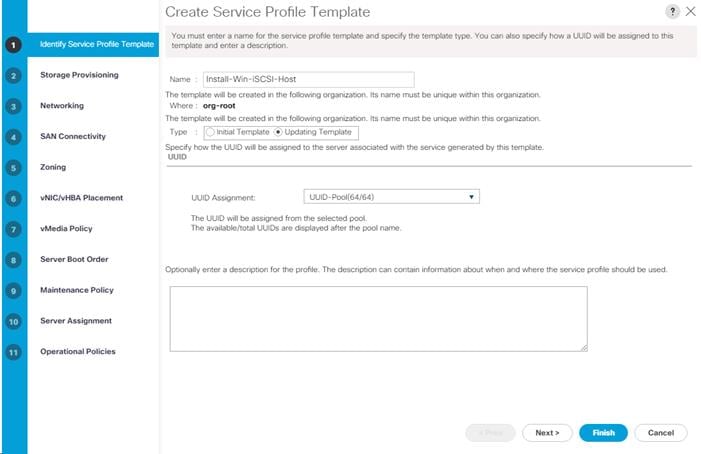

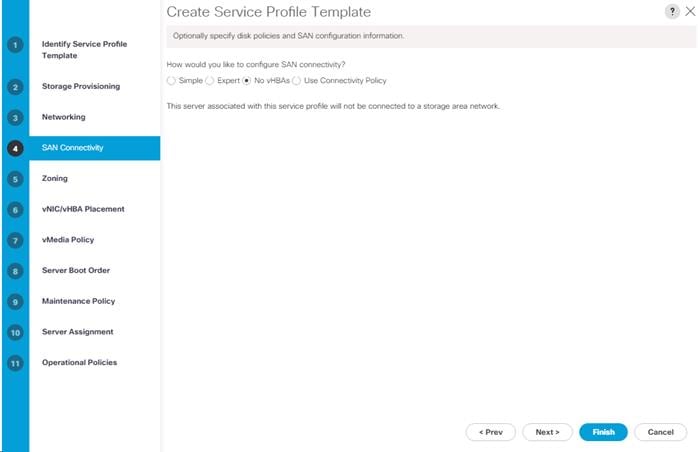

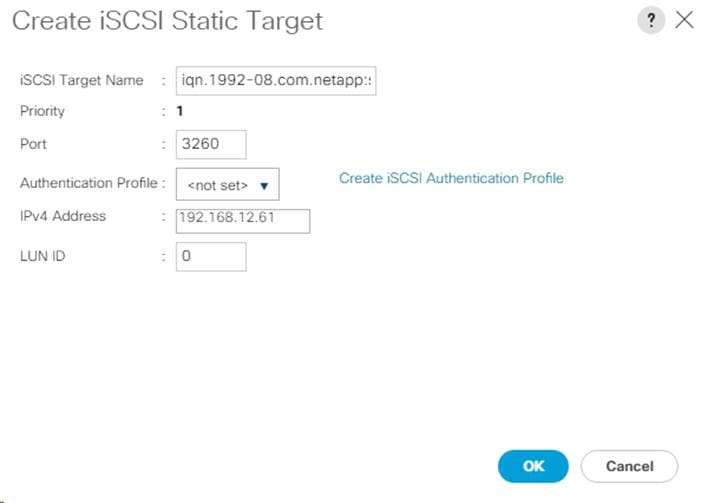

Create iSCSI Boot Service Profile Templates

Create Multipath Service Profile Template

Storage Configuration – Boot LUNs

NetApp ONTAP Boot Storage Setup

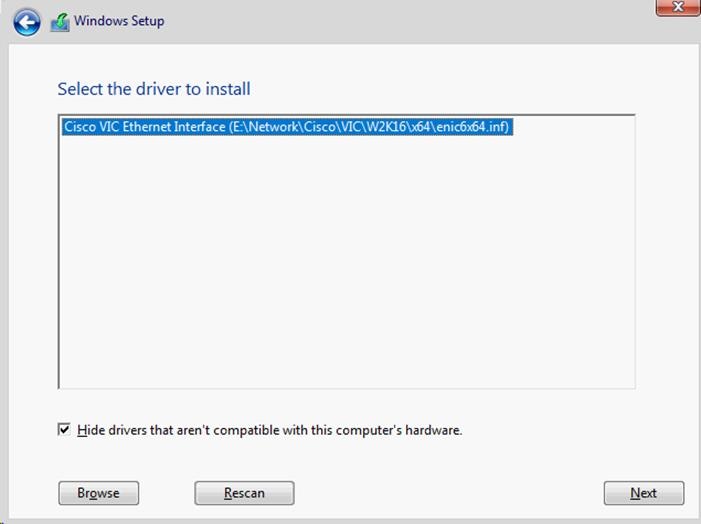

Microsoft Windows Server 2016 Hyper-V Deployment Procedure

Setting Up Microsoft Windows Server 2016

Host Renaming and Join to Domain

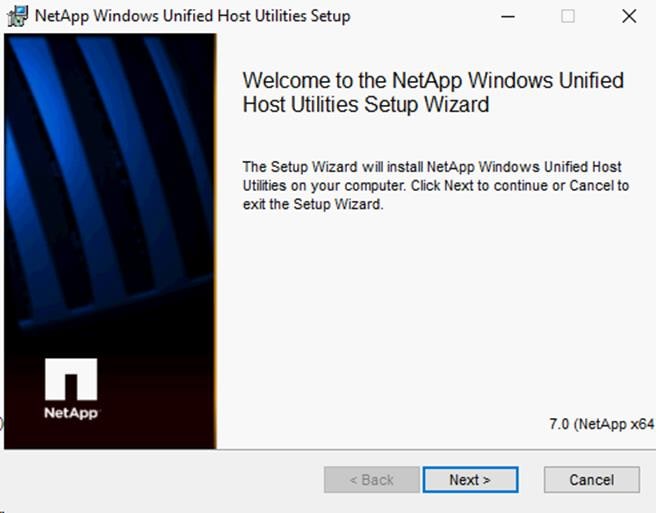

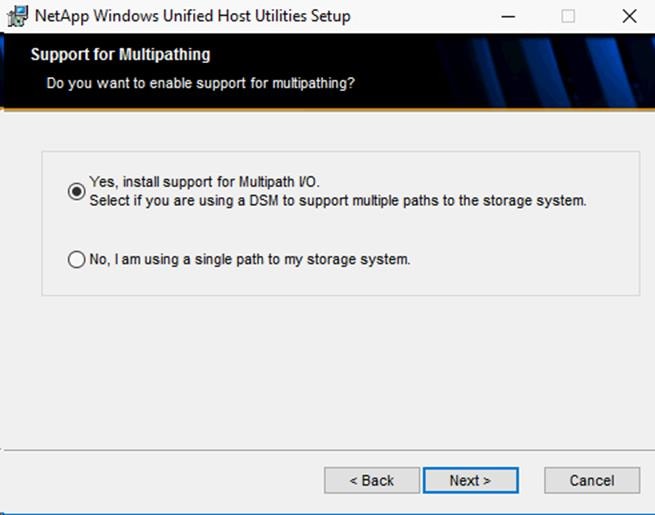

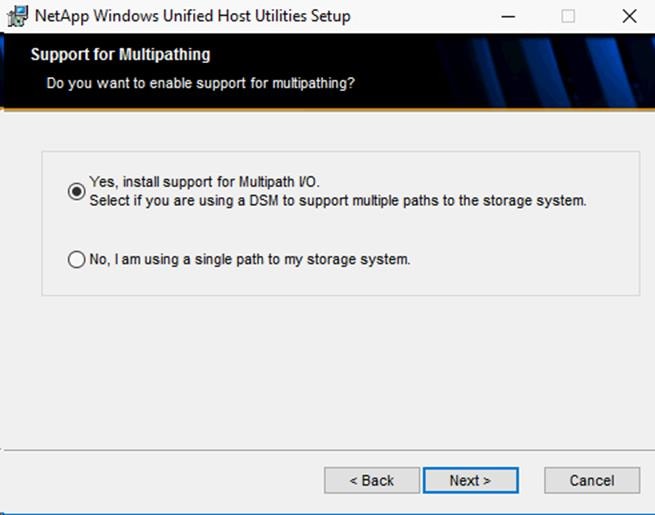

Install NetApp Windows Unified Host Utilities

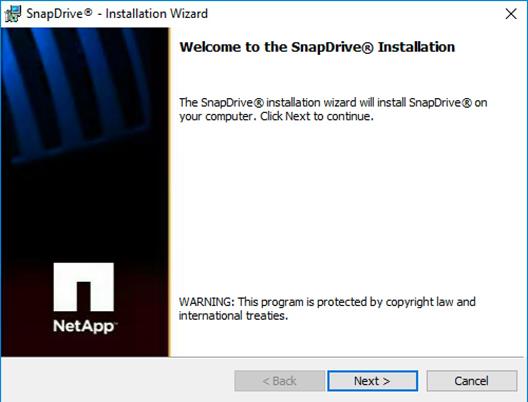

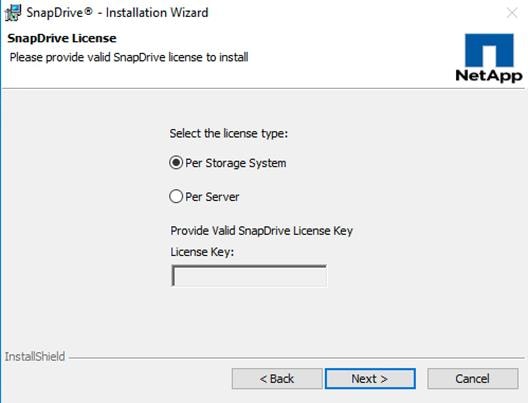

Install NetApp SnapDrive 7.1.4 for Windows

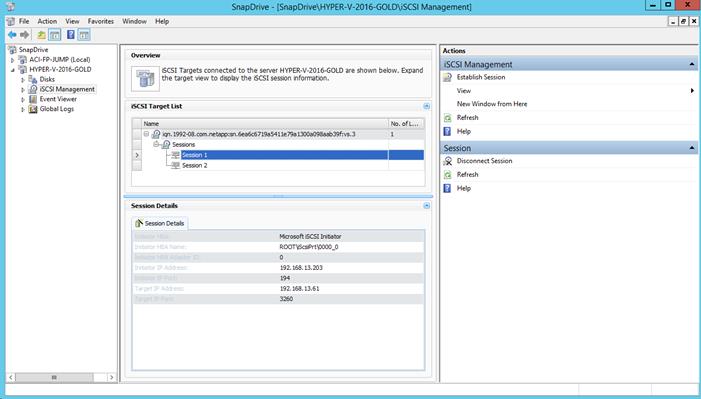

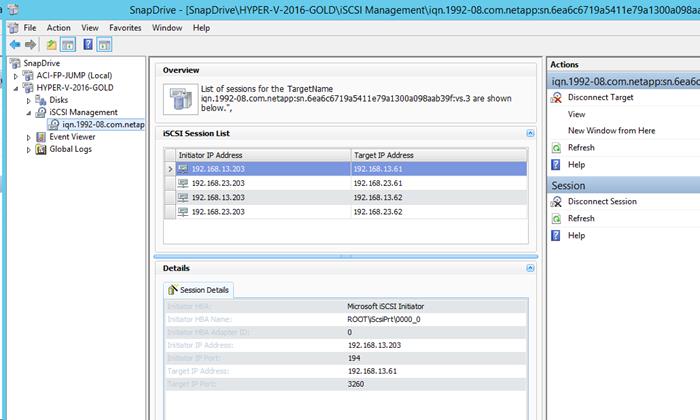

Configuring Access for SnapDrive for Windows

Downloading SnapDrive 7.1.4 for Windows

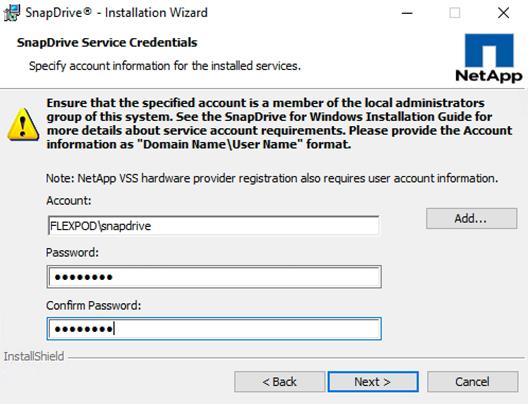

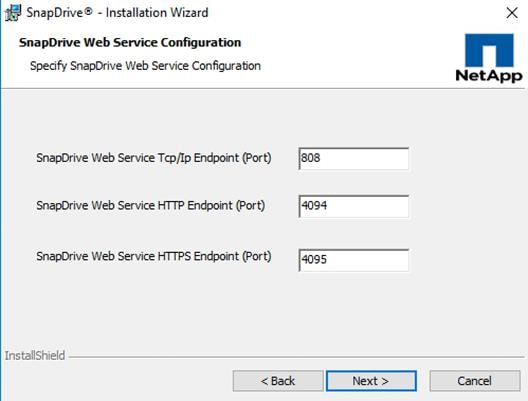

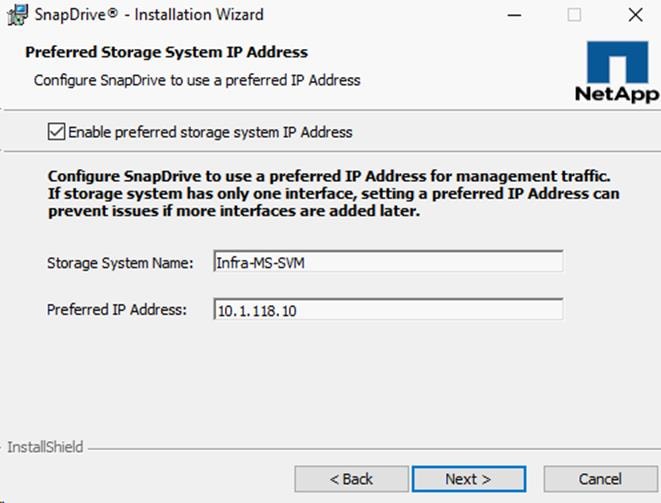

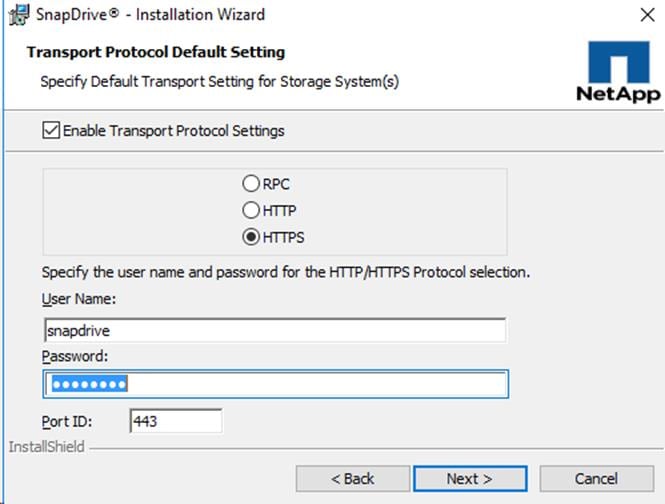

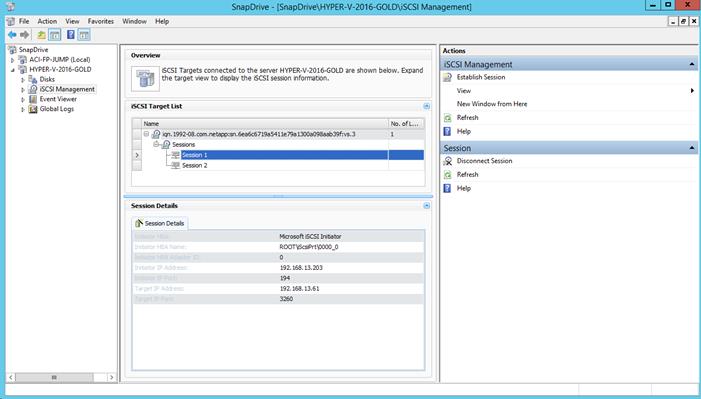

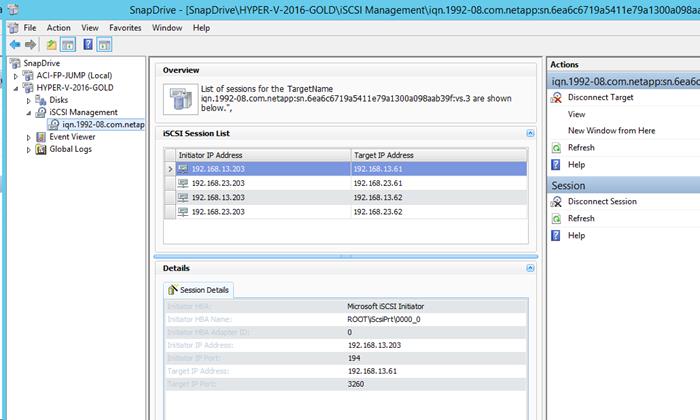

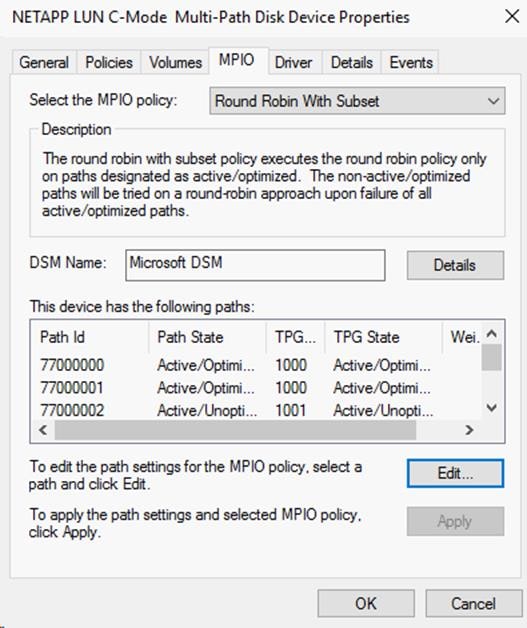

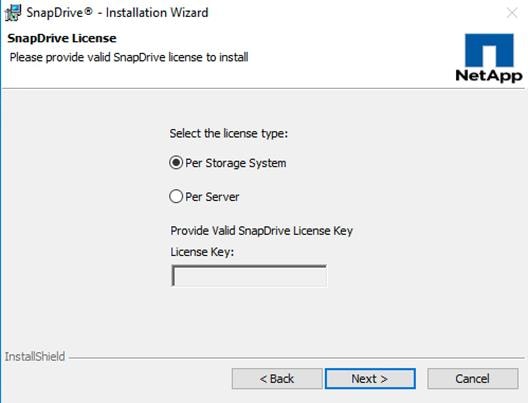

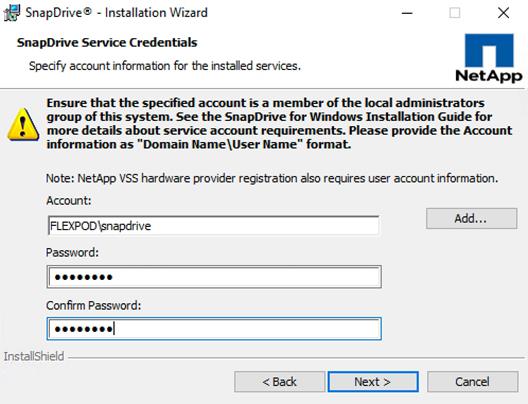

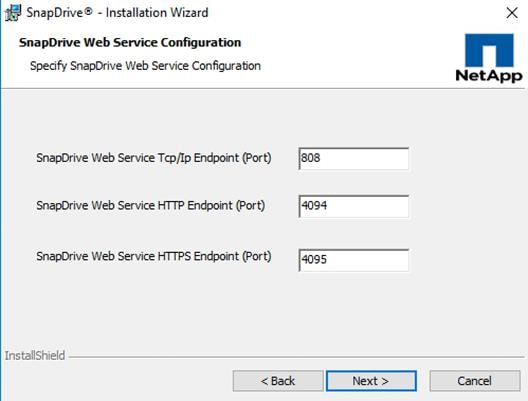

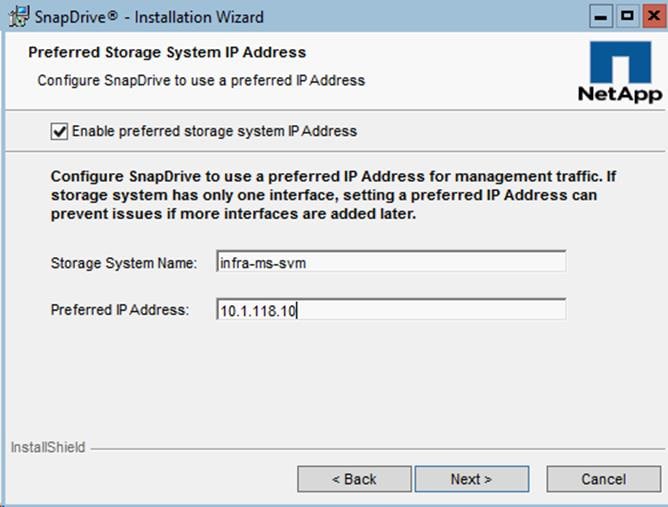

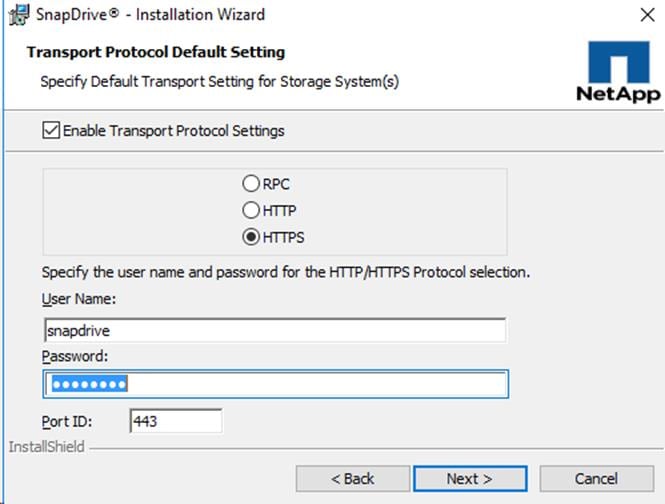

Installing SnapDrive for Windows

Clone and Remap Server LUNs for Sysprep Image

Clone and Remap Server LUNs for Production Images

Install Roles and Features Required for Hyper-V

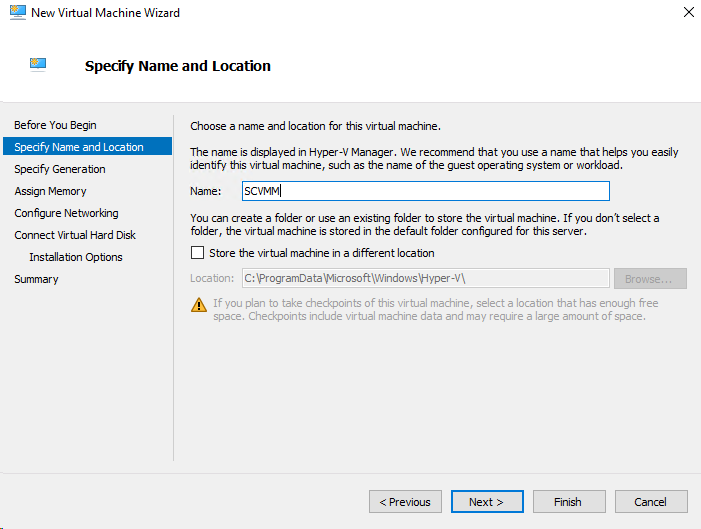

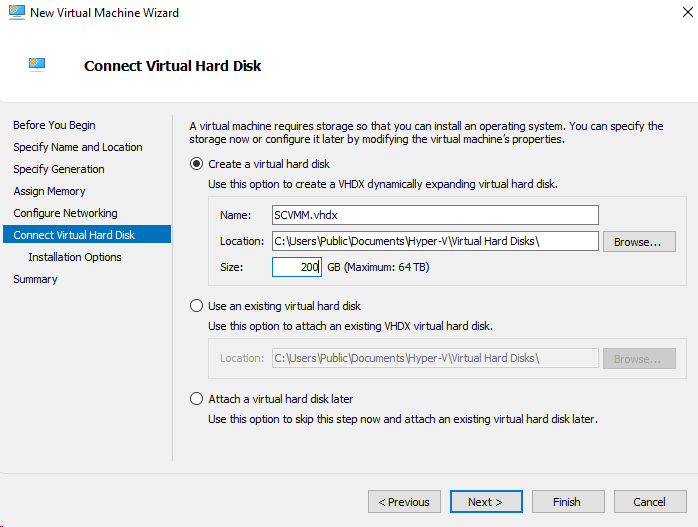

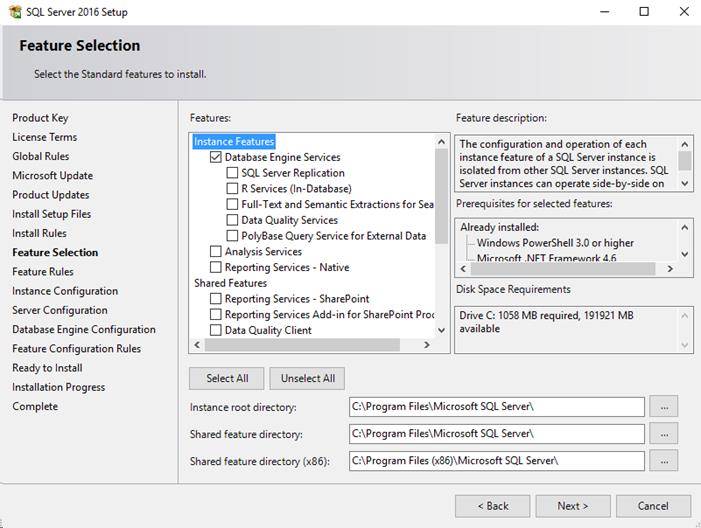

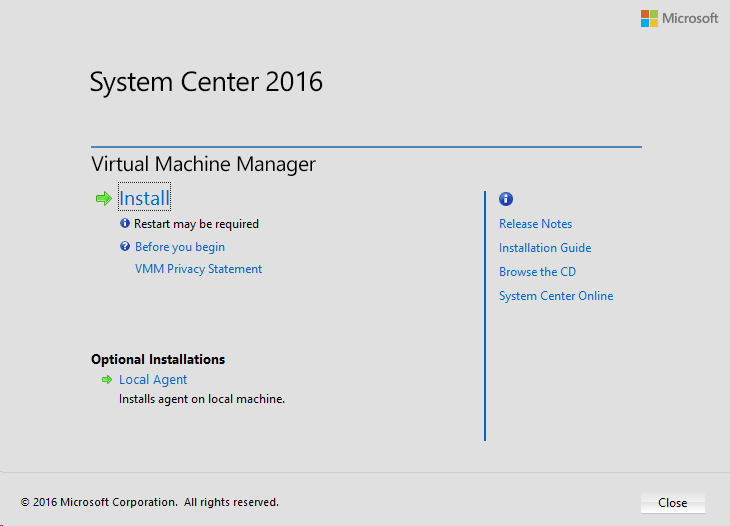

Build System Center Virtual Machine Manager (SCVMM) Virtual Machine (VM)

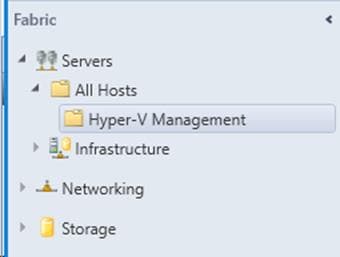

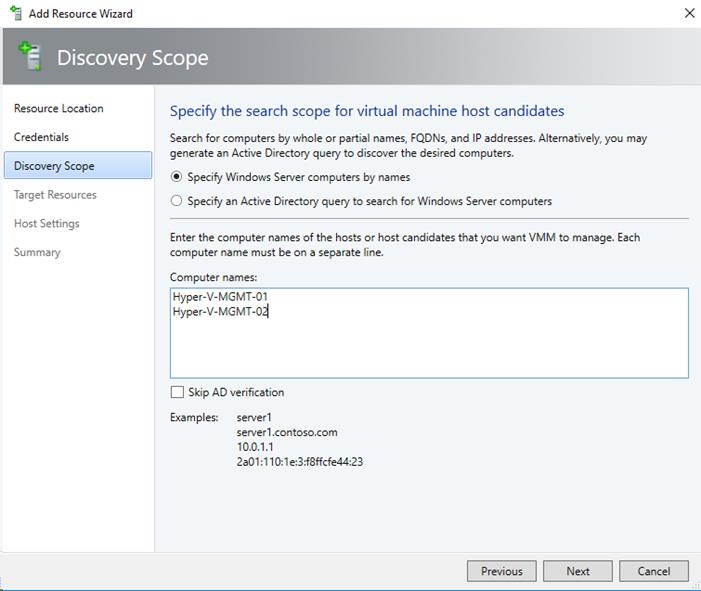

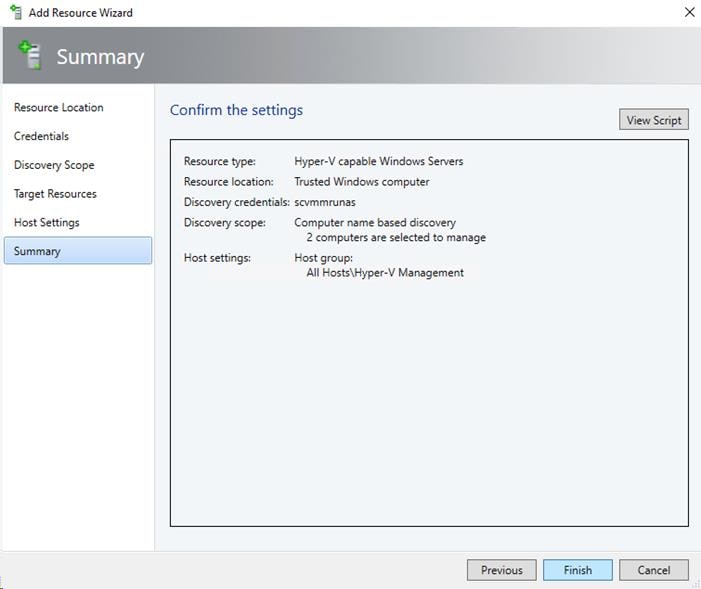

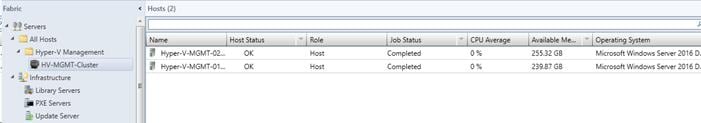

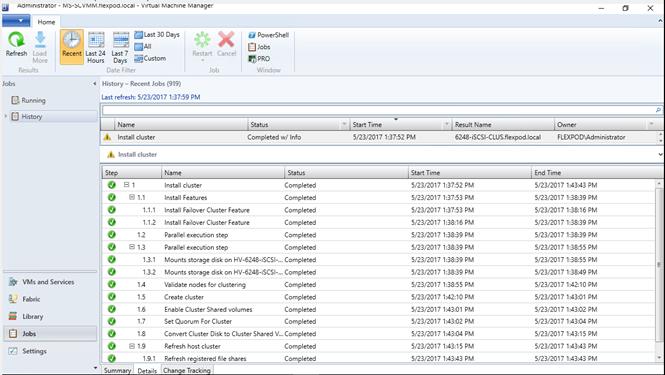

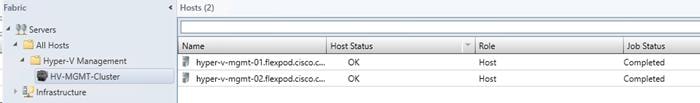

Deploying and Managing the Management Hyper-V Cluster Using System Center 2016 VMM

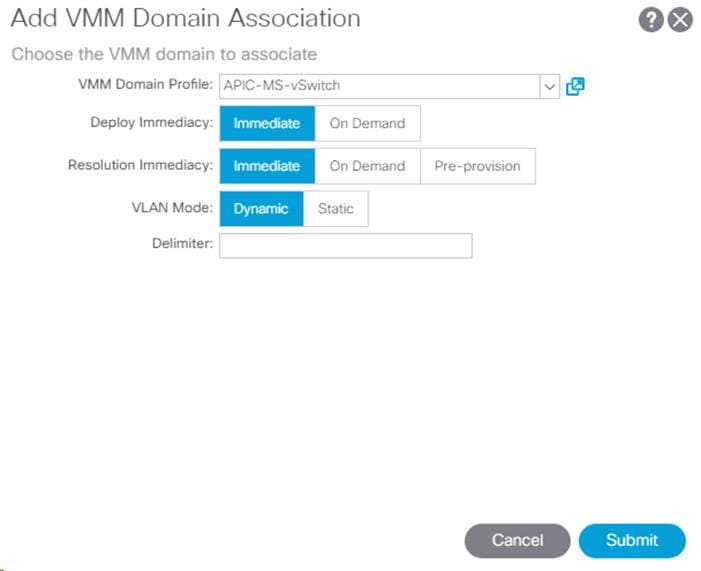

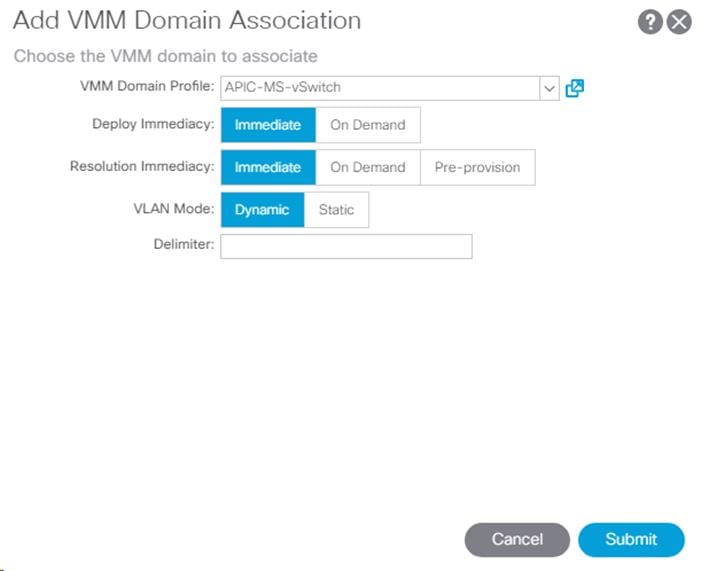

Creating APIC-Controlled Hyper-V Networking

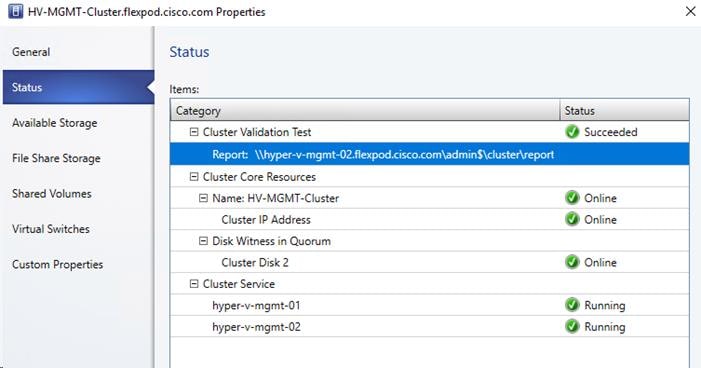

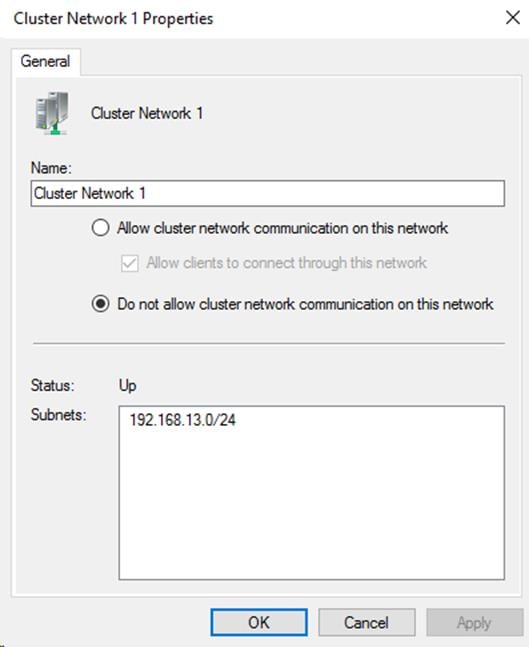

Create Windows Failover Cluster

Build a Windows Server 2016 Virtual Machine for Cloning

NetApp SMI-S Provider Configuration

NetApp SMI-S Integration with VMM

Build Windows Active Directory Servers for ACI Fabric Core Services

Build Microsoft Systems Center Operations Manager (SCOM) Server VM

Cisco UCS Management Pack Suite Installation and Configuration

Cisco UCS Manager Integration with SCOM

About Cisco UCS Management Pack Suite

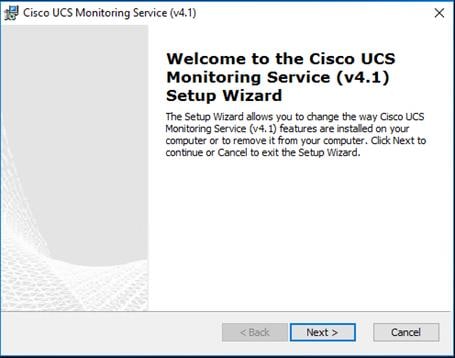

Installing Cisco UCS Monitoring Service

Adding a Firewall Exception for the Cisco UCS Monitoring Service

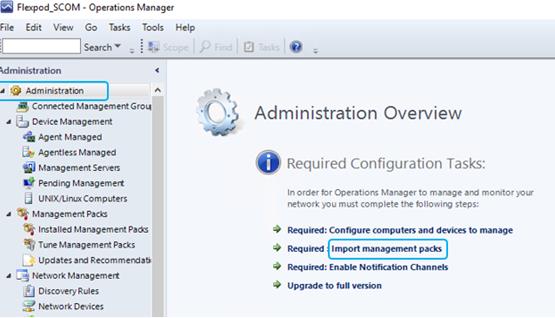

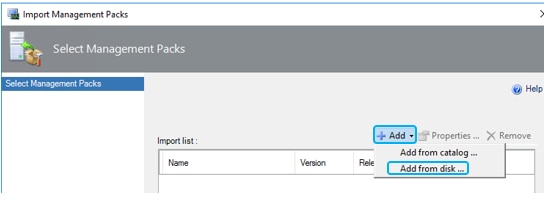

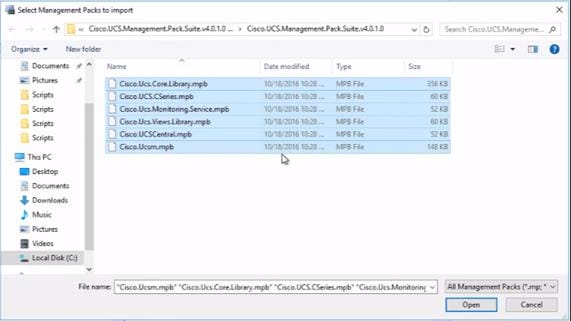

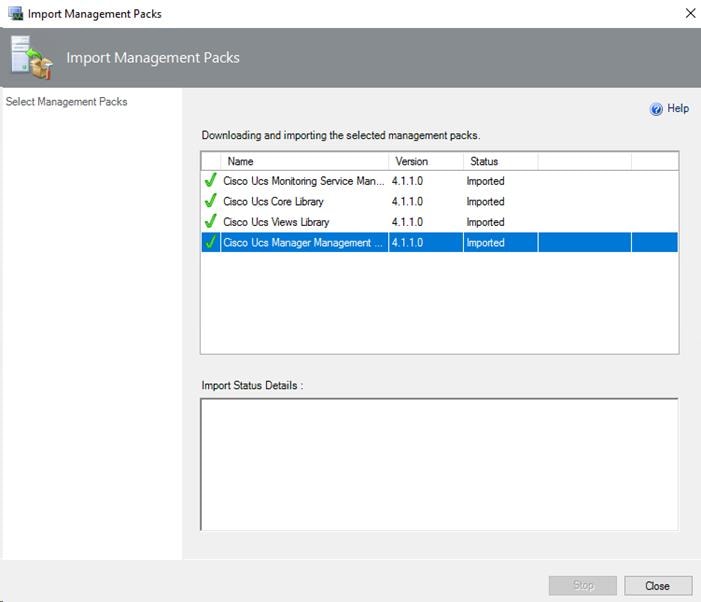

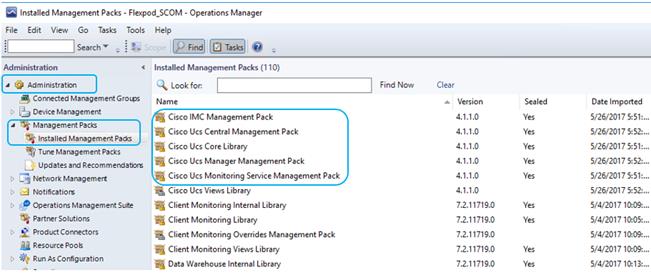

Installing the Cisco UCS Management Pack Suite

Adding Cisco UCS Domains to the Operations Manager

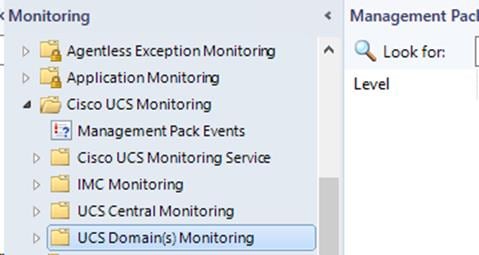

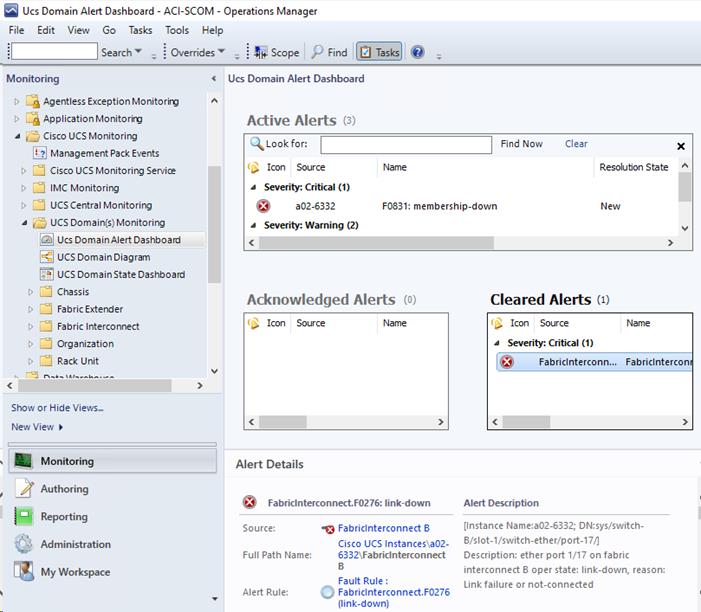

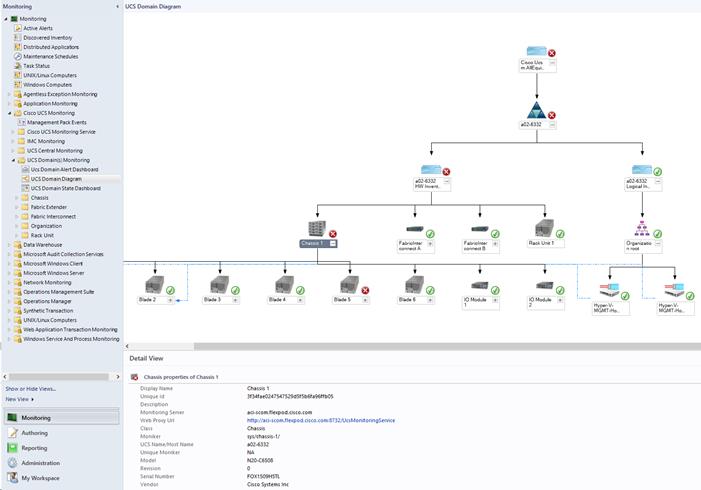

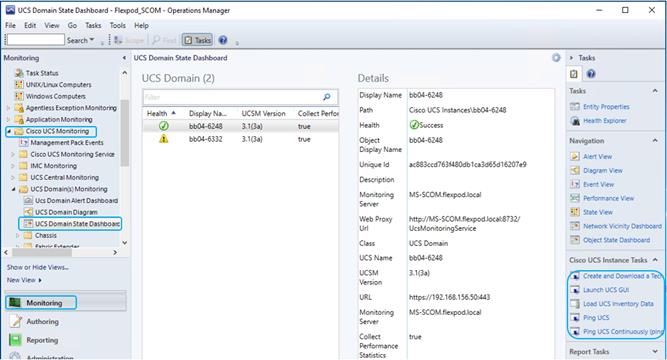

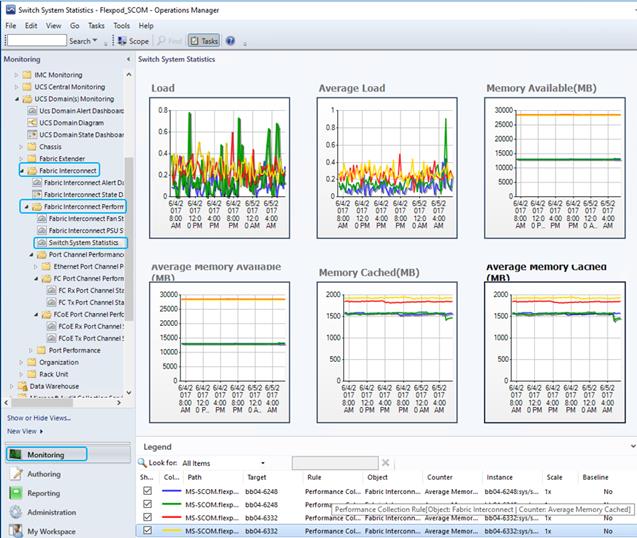

Cisco UCS Manager Monitoring Dashboards

Cisco UCS Manager Plug-in for SCVMM

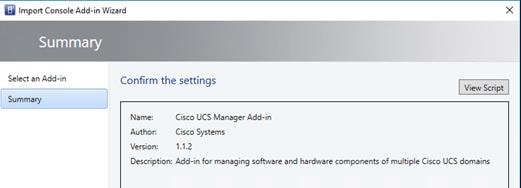

Cisco UCS Manager Plug-in Installation

Cisco UCS Domain Registration

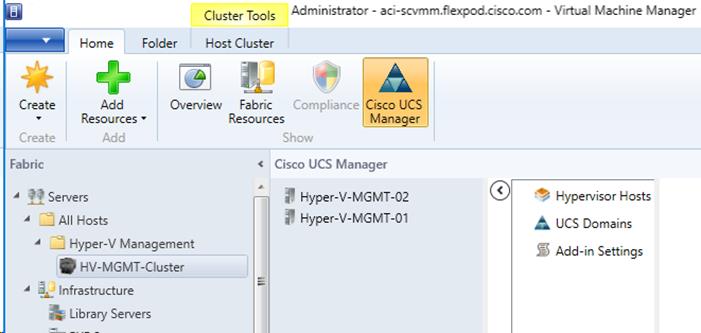

Using the Cisco UCS SCVMM Plugin

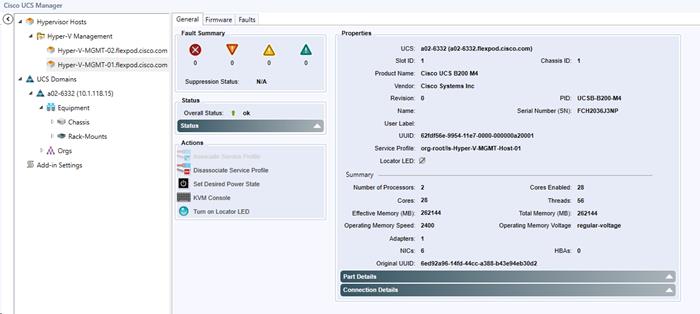

Viewing the Server Details from the Hypervisor Host View

Viewing Registered UCS Domains

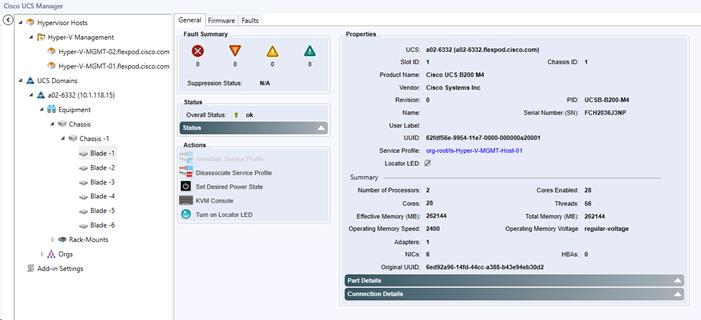

Viewing the UCS Blade Server Details

Viewing the UCS Rack-Mount Server Details

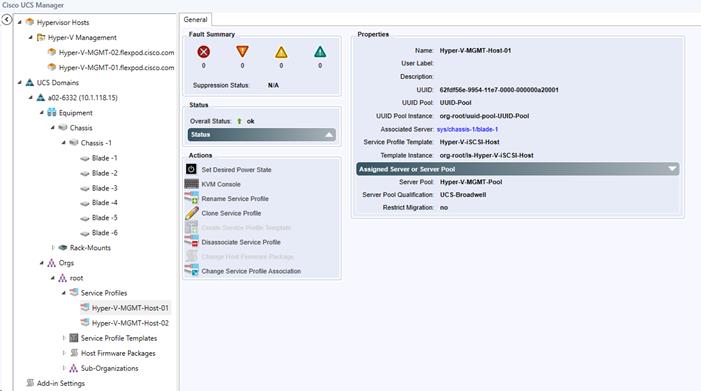

Viewing the Service Profile Details

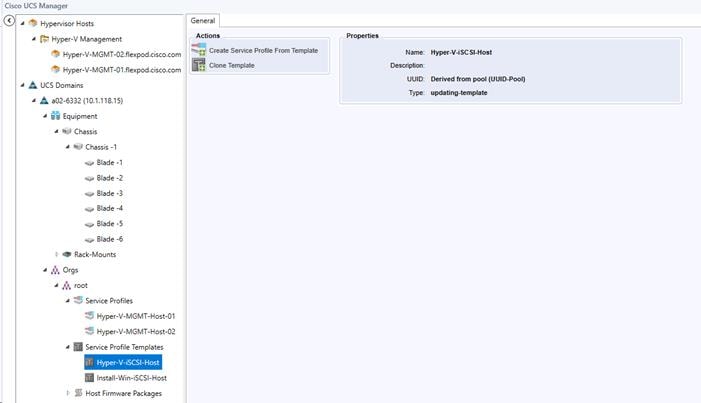

Viewing the Service Profile Template Details

Viewing the Host Firmware Package Details

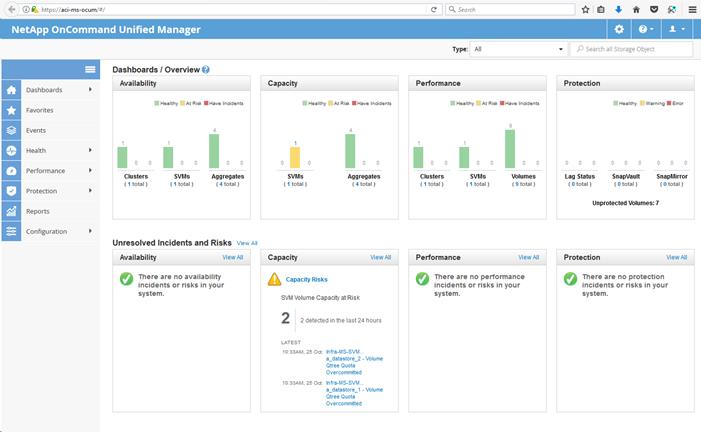

NetApp FlexPod Management Tools Setup

NetApp SnapManager for Hyper-V

Downloading SnapManager for Hyper-V

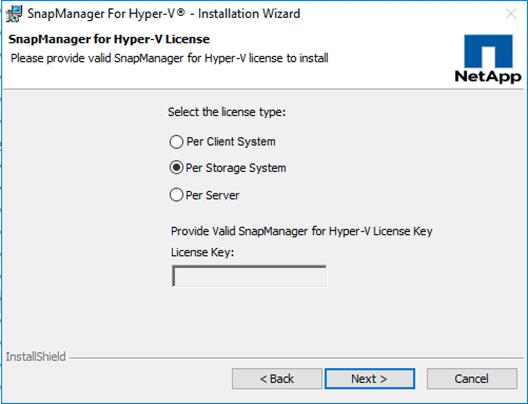

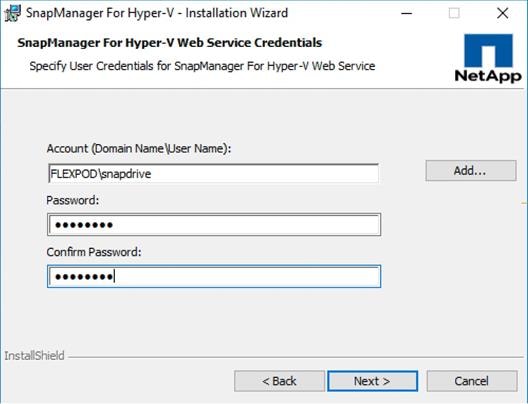

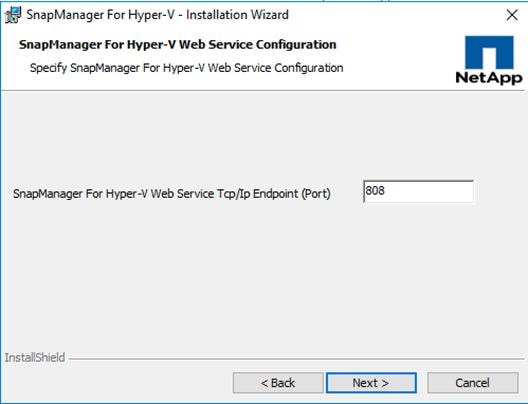

Installing SnapManager for Hyper-V

NetApp OnCommand Plug-in for Microsoft

Downloading OnCommand Plug-in for Microsoft

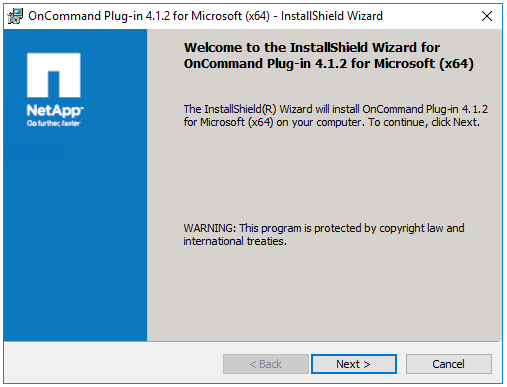

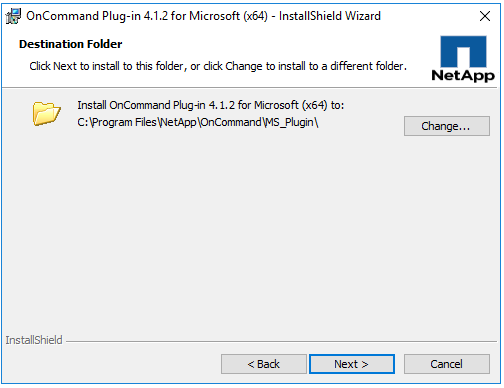

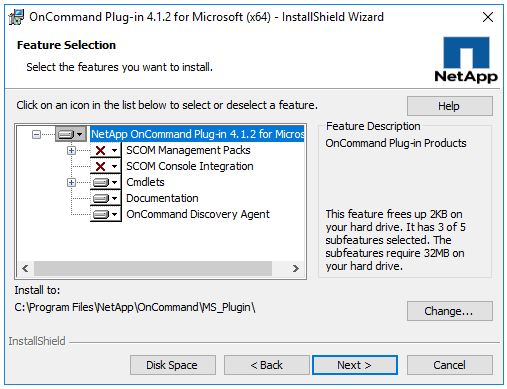

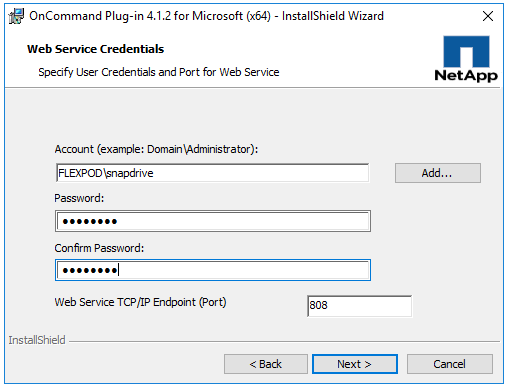

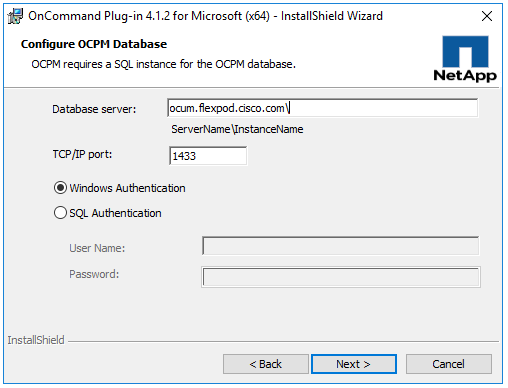

Installing NetApp OnCommand Plug-In for Microsoft

Storage Configuration – Boot LUNs for Tenant Hyper-V Hosts

NetApp ONTAP Boot Storage Setup

Add Supernet Routes to Core-Services Devices

Adding the Supernet Route in a Windows VM or Host

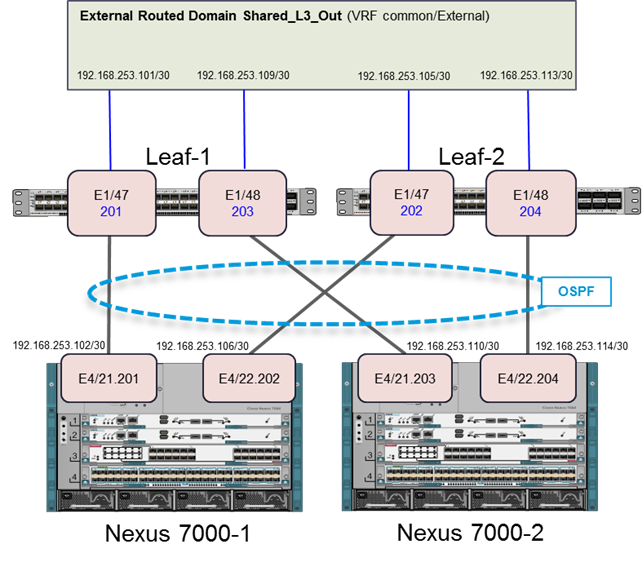

Configuring the Nexus 7000s for ACI Connectivity (Sample)

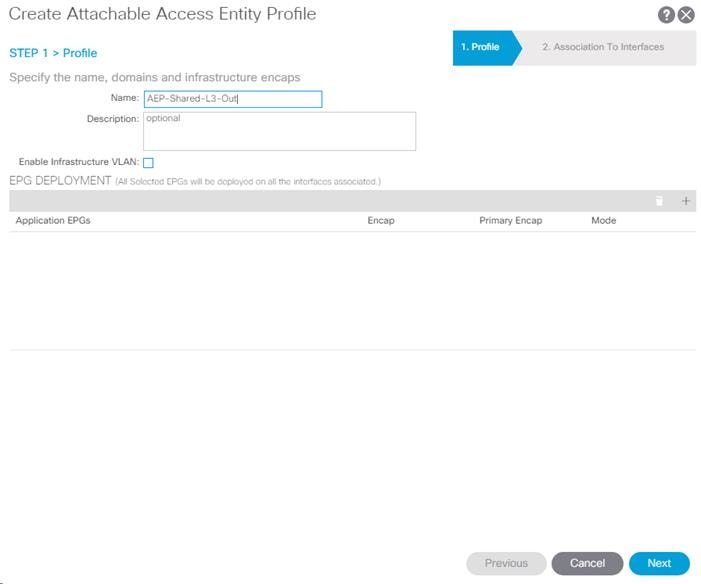

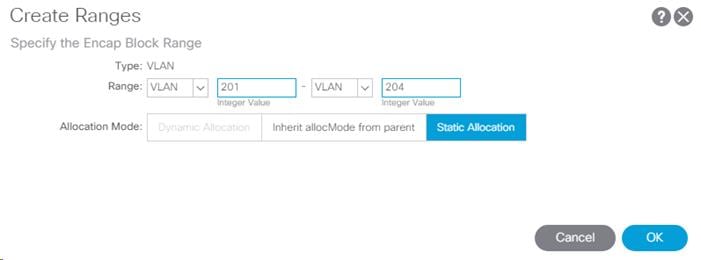

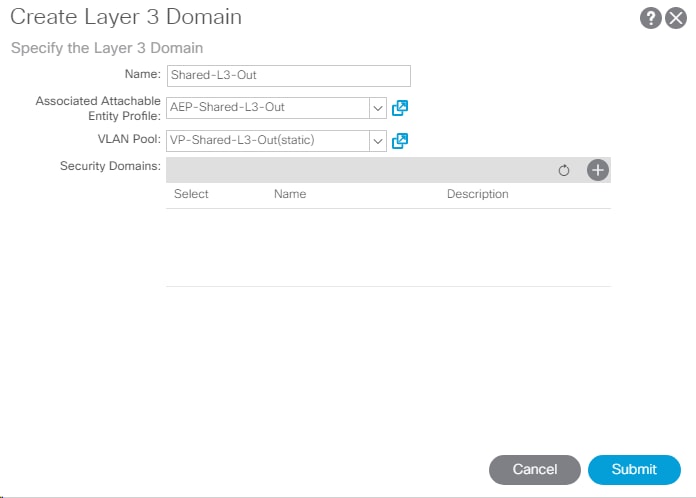

Configuring ACI Shared Layer 3 Out

Lab Validation Tenant Configuration

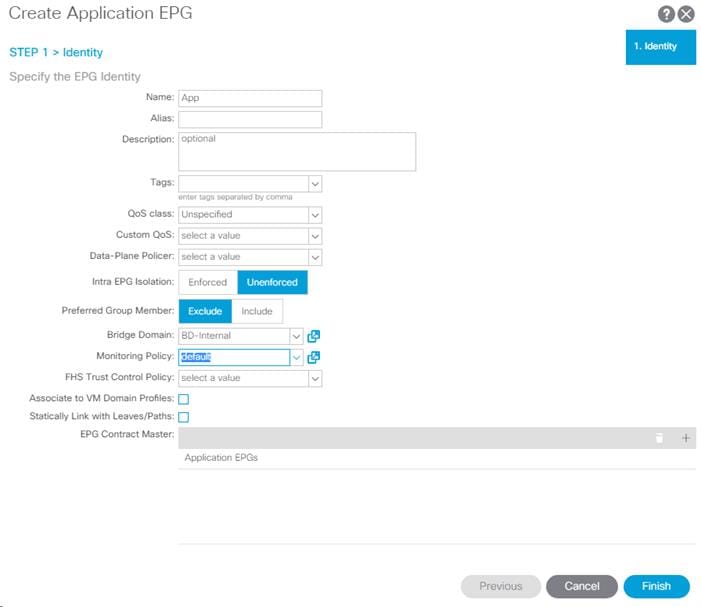

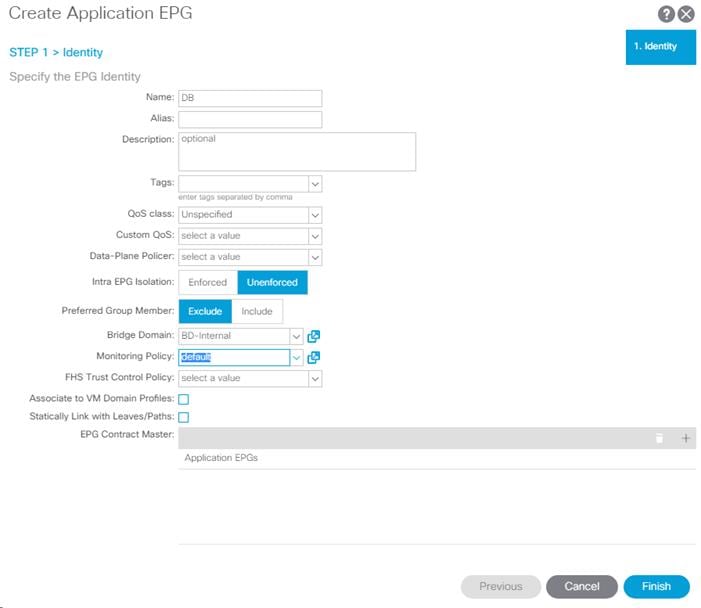

Deploy ACI Application (MS-TNT-A) Tenant

Create Tenant Broadcast Domains in ONTAP

Create Storage Virtual Machine

Modify Storage Virtual Machine Options

Create Load-Sharing Mirrors of SVM Root Volume

Create Block Protocol Service(s)

Add Quality of Service (QoS) Policy to Monitor Application Workload

Configure Cisco UCS for the Tenant

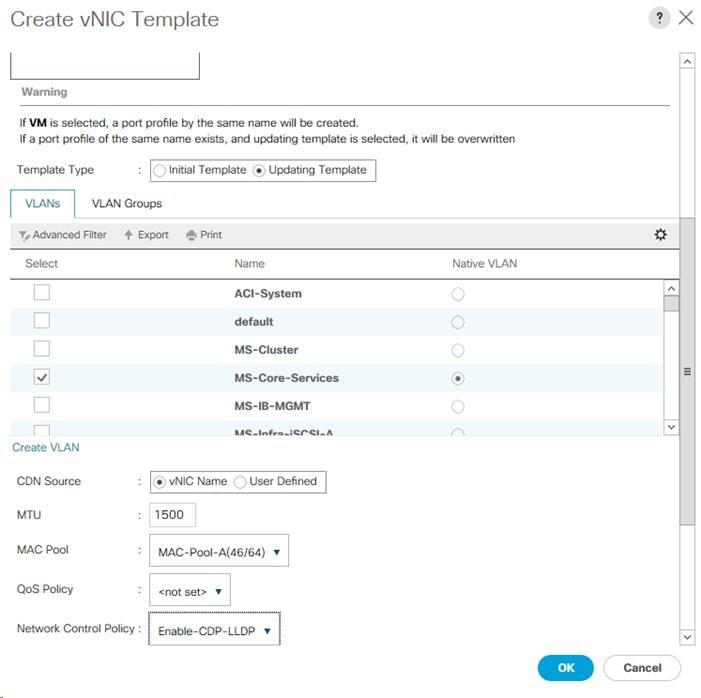

Add Tenant Host Management vNIC Template

Create Tenant LAN Connectivity Policy for iSCSI Boot

Create Tenant Service Profile Template

Add New Application-Specific Server Pool

Create New Service Profiles for Tenant Servers

Configure Storage SAN Boot for the Tenant

Hyper-V Boot LUN in Infra-MS-SVM for First Tenant Host

Clustered Data ONTAP iSCSI Boot Storage Setup

Microsoft Hyper-V Server Deployment Procedure for Tenant Hosts

Setting Up Microsoft Hyper-V Server 2016

Host Renaming and Join to Domain

Install NetApp Windows Unified Host Utilities

Clone and Remap Server LUNs for Sysprep Image

Clone and Remap Server LUNs for Production Image

Deploying and Managing the Tenant Hyper-V Cluster Using System Center 2016 VMM

Fabric – Networking – Install APIC Hyper-V Agent and Add Host to SCVMM Virtual Switch

Add TNT iSCSI Sessions to Hosts

Create Windows Failover Cluster

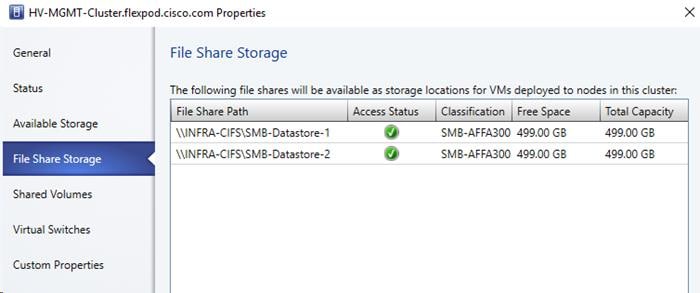

Add Tenant iSCSI Datastores (Optional)

Build a Second Tenant (Optional)

Set Onboard Unified Target Adapter 2 Port Personality

Add FCP Storage Protocol to Infrastructure SVM

Create FCP Storage Protocol in Infrastructure SVM

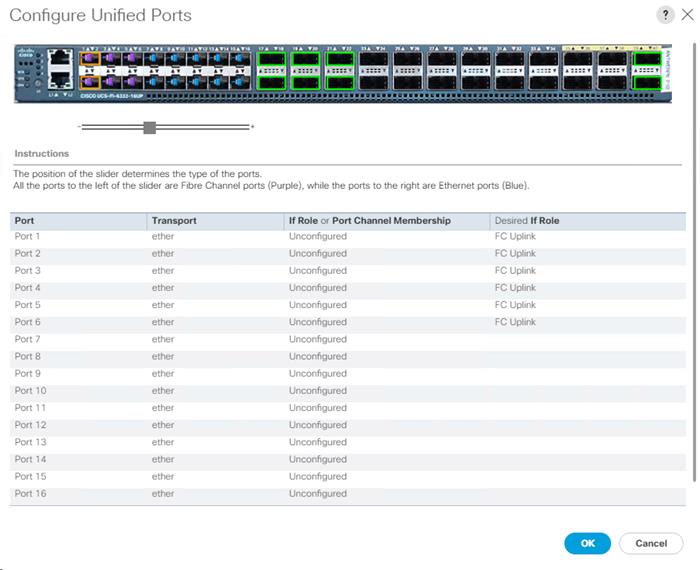

Configure FC Unified Ports (UP) on UCS Fabric Interconnects

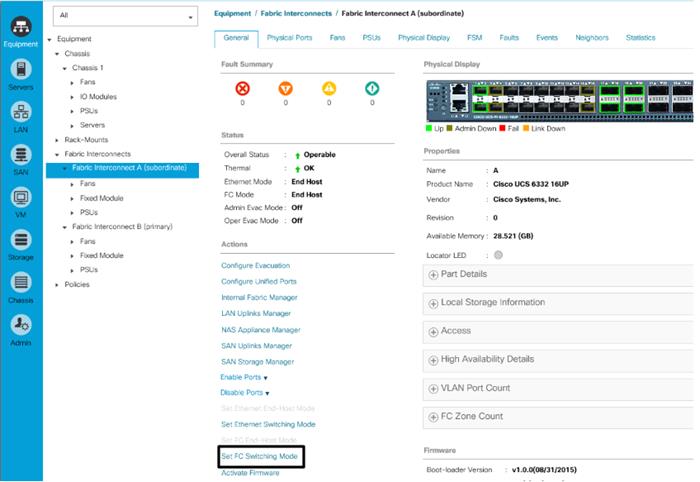

Place Cisco UCS Fabric Interconnects in Fiber Channel Switching Mode

Assign VSANs to FC Storage Ports

Create a WWNN Pool for FC Boot

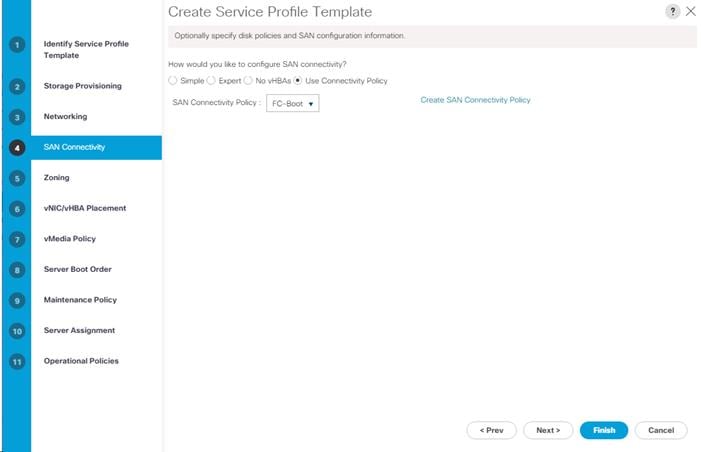

Create SAN Connectivity Policy

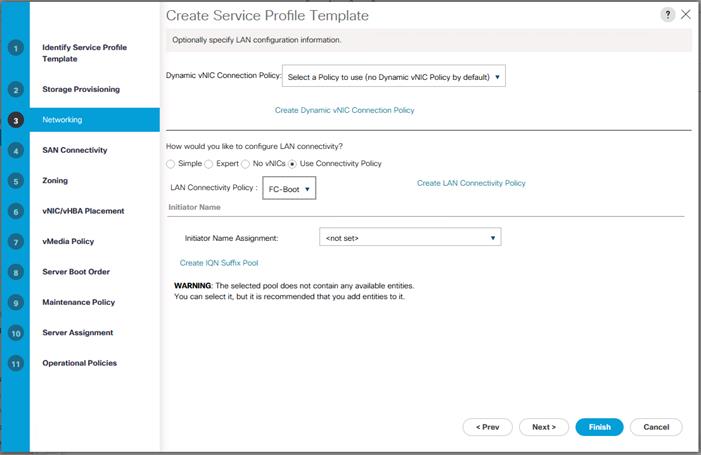

Create LAN Connectivity Policy for FC Boot

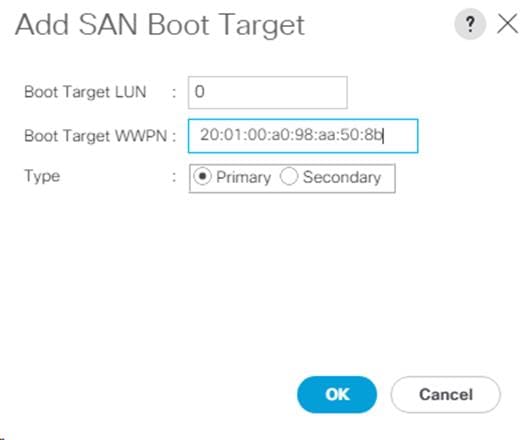

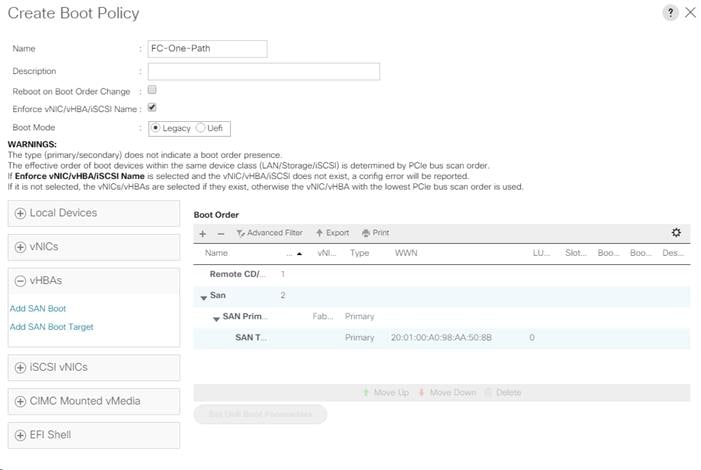

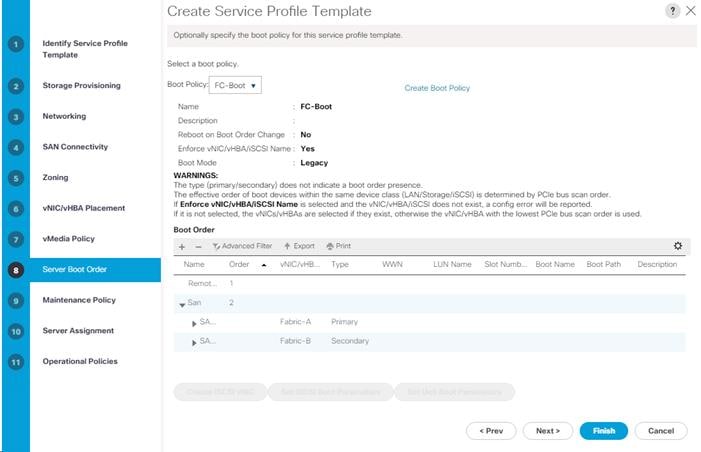

Create Boot Policy (FC Boot) With a Single Path for Windows Installation

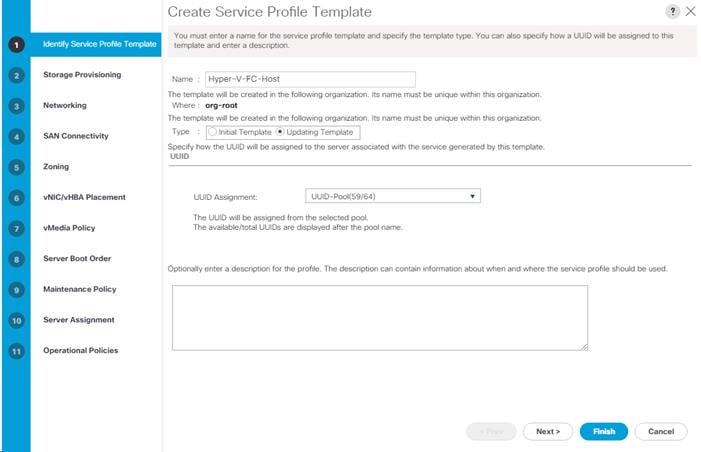

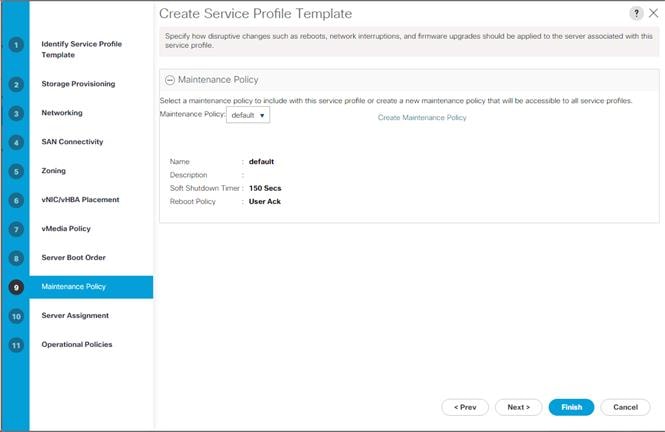

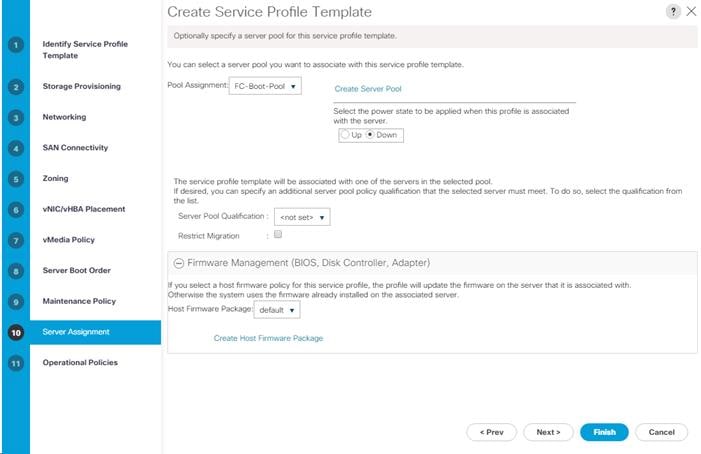

Create Service Profile Templates

Add More Servers to FlexPod Unit

Adding Direct Connected Tenant FC Storage

Create Storage Connection Policies

Map Storage Connection Policies vHBA Initiator Groups in SAN Connectivity Policy

Cisco Validated Designs include systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of customers. Cisco and NetApp have partnered to deliver FlexPod, which serves as the foundation for a variety of workloads and enables efficient architectural designs that are based on customer requirements. A FlexPod solution is a validated approach for deploying Cisco and NetApp technologies as a shared cloud infrastructure.

This document describes the Cisco and NetApp® FlexPod Datacenter with Cisco UCS Manager unified software release 3.2(1d), Cisco Application Centric Infrastructure (ACI) 3.0(1k), and Microsoft Hyper-V 2016. Cisco UCS Manager (UCSM) 3.2 provides consolidated support for all the current Cisco UCS Fabric Interconnect models (6200, 6300, and 6324 (Cisco UCS Mini)), 2200/2300 series IOMs, Cisco UCS B-Series servers, and Cisco UCS C-Series servers, including Cisco UCS B200 M5 servers. FlexPod Datacenter with Cisco UCS unified software release 3.2(1d) and Microsoft Hyper-V 2016 is a predesigned, best-practice data center architecture built on Cisco Unified Computing System (UCS), the Cisco Nexus® 9000 family of switches, Cisco Application Policy Infrastructure Controller (APIC), and NetApp All Flash FAS (AFF).

This document primarily focuses on deploying a Microsoft Hyper-V 2016 cluster on FlexPod Datacenter using the iSCSI and SMB storage protocols. The Appendix describes the configuration changes required to use the Fibre Channel (FC) storage protocol with the same deployment model.

FC storage traffic does not flow through the ACI Fabric and is not covered by the ACI policy model.

Introduction

The current industry trend in data center design is towards shared infrastructures. By using virtualization along with pre-validated IT platforms, enterprise customers have embarked on the journey to the cloud by moving away from application silos and toward shared infrastructure that can be quickly deployed, thereby increasing agility and reducing costs. Cisco and NetApp have partnered to deliver FlexPod, which uses best-of-breed storage, server, and network components to serve as the foundation for a variety of workloads, enabling efficient architectural designs that can be quickly and confidently deployed.

Audience

The audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineers, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

Purpose of this Document

This document provides step-by-step configuration and implementation guidelines for the FlexPod Datacenter with Cisco UCS Fabric Interconnects, NetApp AFF, and Cisco ACI solution. It primarily focuses on deploying a Microsoft Hyper-V 2016 cluster on FlexPod Datacenter using the iSCSI and SMB storage protocols. The Appendix describes the configuration changes required to use the FC storage protocol with the same deployment model.

What’s New?

The following design elements distinguish this version of FlexPod from previous FlexPod models:

· Support for the Cisco UCS 3.2(1d) unified software release, Cisco UCS B200-M5 servers, Cisco UCS B200-M4 servers, and Cisco UCS C220-M4 servers

· Support for Cisco ACI version 3.0(1k)

· Support for the latest release of NetApp ONTAP® 9.1

· SMB, iSCSI, and FC storage design

· Validation of Microsoft Hyper-V 2016

Architecture

FlexPod architecture is highly modular, or pod-like. Although each customer's FlexPod unit might vary in its exact configuration, after a FlexPod unit is built, it can easily be scaled as requirements and demands change. This includes both scaling up (adding resources within a FlexPod unit) and scaling out (adding FlexPod units). Specifically, FlexPod is a defined set of hardware and software that serves as an integrated foundation for all virtualization solutions. FlexPod validated with Microsoft Hyper-V 2016 includes NetApp All Flash FAS storage, Cisco ACI® networking, Cisco Unified Computing System (Cisco UCS®), Microsoft System Center Operations Manager, and Microsoft System Center Virtual Machine Manager in a single package. The design is flexible enough that the networking, computing, and storage can fit in a single data center rack or be deployed according to a customer's data center design. Port density enables the networking components to accommodate multiple configurations of this kind.

The reference architectures detailed in this document highlight the resiliency, cost benefit, and ease of deployment across multiple storage protocols. A storage system capable of serving multiple protocols across a single interface allows for customer choice and investment protection because it truly is a wire-once architecture.

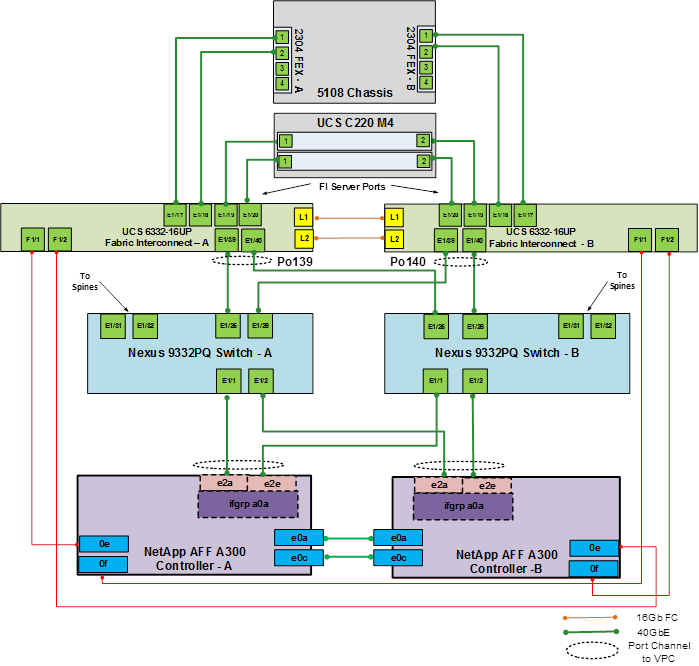

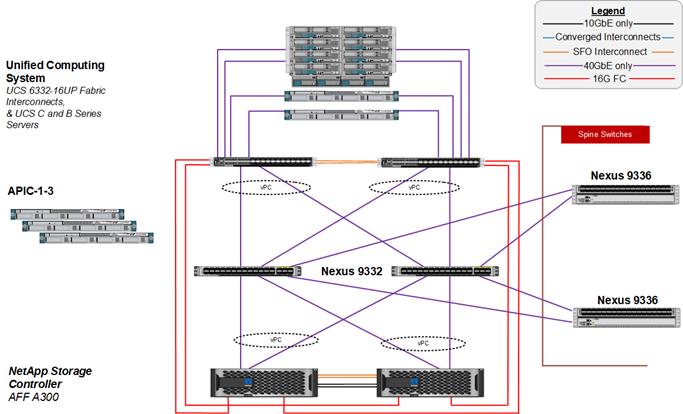

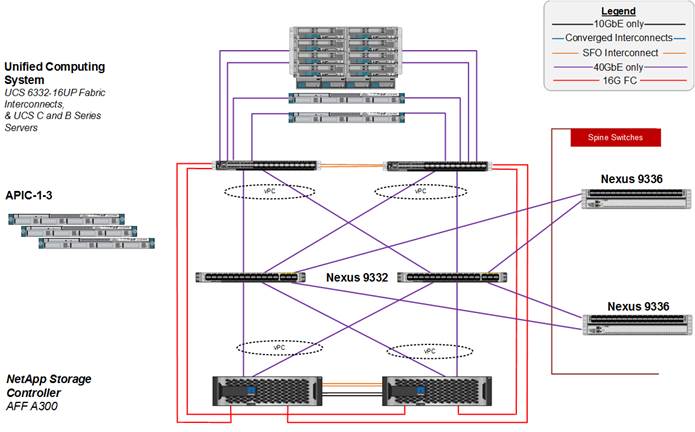

Figure 1 shows Microsoft Hyper-V built on FlexPod components and the physical cabling with the Cisco UCS 6332-16UP Fabric Interconnects. The Nexus 9336PQ switches serve as spine switches in the Cisco ACI spine-leaf fabric, while the Nexus 9332PQ switches serve as 40GE leaf switches. The Cisco APICs attach to the ACI fabric with 10GE connections. This attachment can be made either through other leaf switches in the fabric that have 10GE ports, such as the Nexus 93180YC-EX, or through Cisco QSFP to SFP/SFP+ Adapter (QSA) modules in the Nexus 9332PQ switches. 10GE breakout cables are not supported when the 9332PQ is in ACI mode.

This design has end-to-end 40Gb Ethernet connections from the Cisco UCS blades and Cisco UCS C-Series rackmount servers, through a pair of Cisco UCS Fabric Interconnects and the Cisco Nexus 9000 switches, to the NetApp AFF A300. These 40GE paths carry SMB, iSCSI, and virtual machine (VM) traffic that has Cisco ACI policy applied. This infrastructure can be expanded by connecting 16Gb FC or 10Gb FCoE links between the Cisco UCS Fabric Interconnects and the NetApp AFF A300, as shown below, or by introducing a pair of Cisco MDS switches between the Cisco UCS Fabric Interconnects and the NetApp AFF A300 to provide FC/FCoE block-level shared storage access. Note that FC/FCoE storage access does not have ACI policy applied. The FC configuration shown below is covered in the appendix of this document; the FCoE and MDS options are also supported. The reference architecture reinforces the "wire-once" strategy, because additional storage can be introduced into the existing architecture without re-cabling from the hosts to the Cisco UCS Fabric Interconnects.

Physical Topology

Figure 1 FlexPod with Cisco UCS 6332-16UP Fabric Interconnects

The reference 40Gb-based hardware configuration includes:

· Three Cisco APICs

· Two Cisco Nexus 9336PQ fixed spine switches

· Two Cisco Nexus 9332PQ leaf switches

· Two Cisco UCS 6332-16UP fabric interconnects

· One chassis of Cisco UCS blade servers

· Two Cisco UCS C220M4 rack servers

· One NetApp AFF A300 (HA pair) running ONTAP with disk shelves and solid state drives (SSD)

A 10GE-based design with Cisco UCS 6200 Fabric Interconnects is also supported, but not covered in this deployment guide. All systems and fabric links feature redundancy and provide end-to-end high availability. For server virtualization, this deployment includes Microsoft Hyper-V 2016. Although this is the base design, each of the components can be scaled flexibly to support specific business requirements. For example, more (or different) blades and chassis could be deployed to increase compute capacity, additional disk shelves could be deployed to improve I/O capacity and throughput, or special hardware or software features could be added to introduce new features.

Software Revisions

Table 1 lists the software revisions for this solution.

| Layer | Device | Image | Comments |
| --- | --- | --- | --- |
| Compute | Cisco UCS Fabric Interconnects 6200 and 6300 Series; Cisco UCS B200 M5, B200 M4, and C220 M4 | 3.2(1d) Infrastructure Bundle; 3.2(1d) Server Bundle | Includes the Cisco UCS-IOM 2304, Cisco UCS Manager, Cisco UCS VIC 1340, and Cisco UCS VIC 1385 |
| Network | Cisco APIC | 3.0(1k) | |
| Network | Cisco Nexus 9000 ACI | n9000-13.0(1k) | |
| Storage | NetApp AFF A300 | ONTAP 9.1P5 | |
| Software | Cisco UCS Manager | 3.2(1d) | |
| Software | Microsoft System Center Virtual Machine Manager | 2016 (version 4.0.2051.0) | |
| Software | Microsoft Hyper-V | 2016 | |
| Software | Microsoft System Center Operations Manager | 2016 (version 7.2.11878.0) | |

Configuration Guidelines

This document provides details on configuring a fully redundant, highly available reference model for a FlexPod unit with NetApp ONTAP storage. Therefore, each step identifies the component being configured as either 01 or 02, or A and B. In this CVD, node01 and node02 identify the two NetApp storage controllers provisioned in this deployment model. Similarly, Cisco Nexus A and Cisco Nexus B refer to the pair of Cisco Nexus switches configured, and the Cisco UCS Fabric Interconnects are identified in the same way. Additionally, this document details the steps for provisioning multiple Cisco UCS hosts; these examples are identified as Hyper-V-Host-01 and Hyper-V-Host-02 to represent infrastructure hosts deployed to each of the fabric interconnects. Finally, to indicate that you should include information pertinent to your environment in a given step, <text> appears as part of the command structure. See the following example for the network port vlan create command:

Usage:

network port vlan create ?
  [-node] <nodename>                          Node
  { [-vlan-name] {<netport>|<ifgrp>}          VLAN Name
  | -port {<netport>|<ifgrp>}                 Associated Network Port
  [-vlan-id] <integer> }                      Network Switch VLAN Identifier

Example:

network port vlan create -node <node01> -vlan-name i0a-<vlan id>
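When the same command is repeated for several nodes and VLANs, it can help to fill the <text> placeholders programmatically so that none is left unfilled. The following is a minimal sketch that works only on the command text and does not connect to ONTAP. The node name aff-a300-01 is hypothetical; 3013 is the MS-Infra-iSCSI-A VLAN ID from Table 2.

```python
import re

# Matches <...> placeholders such as <node01> or <vlan id>.
PLACEHOLDER = re.compile(r"<([^<>]+)>")


def fill_placeholders(template: str, values: dict[str, str]) -> str:
    """Replace each <name> in template with values[name].

    Raises KeyError when a placeholder has no value, so a command that
    still contains <text> is never printed by mistake.
    """
    def substitute(match: re.Match) -> str:
        key = match.group(1)
        if key not in values:
            raise KeyError(f"no value supplied for <{key}>")
        return values[key]

    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    template = "network port vlan create -node <node01> -vlan-name i0a-<vlan id>"
    # Hypothetical node name; 3013 is MS-Infra-iSCSI-A from Table 2.
    print(fill_placeholders(template, {"node01": "aff-a300-01", "vlan id": "3013"}))
```

Raising an error on a missing value, instead of leaving the placeholder in place, keeps an incomplete command from being pasted into the cluster shell.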

This document is intended to enable you to fully configure the customer environment. In this process, various steps require you to insert customer-specific naming conventions, IP addresses, and VLAN schemes, as well as to record appropriate MAC addresses. Table 2 lists the VLANs necessary for deployment as outlined in this guide. In this table, VS indicates VLANs dynamically assigned from the APIC-controlled Microsoft virtual switch.

| VLAN Name | VLAN Purpose | ID Used in Validating This Document |

| Out-of-Band-Mgmt | VLAN for out-of-band management interfaces | 3911 |

| MS-IB-MGMT | VLAN for in-band management interfaces | 118/218/318/418/VS |

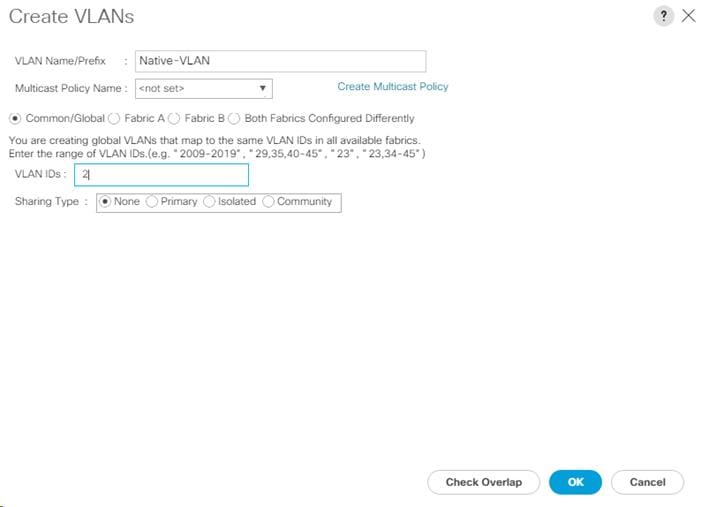

| Native-VLAN | VLAN to which untagged frames are assigned | 2 |

| MS-Infra-SMB-VLAN | VLAN for SMB traffic | 3053/3153/VS |

| MS-LVMN-VLAN | VLAN designated for the movement of VMs from one physical host to another. | 906/VS |

| MS-Cluster-VLAN | VLAN for cluster connectivity | 907/VS |

| MS-Infra-iSCSI-A | VLAN for iSCSI Boot on Fabric A | 3013/3113 |

| MS-Infra-iSCSI-B | VLAN for iSCSI Boot on Fabric B | 3023/3123 |
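Several rows in the VLAN table mix static IDs with VS (dynamically assigned) entries. Before entering these values into the switches and ONTAP, you might want to check that every static ID is valid and that no ID appears under more than one VLAN name. The following is a minimal sketch of such a check, assuming the table is transcribed as plain strings. The 1-4094 range is the usable 802.1Q VLAN ID range.

```python
def parse_vlan_ids(field: str) -> tuple[list[int], bool]:
    """Split an ID field such as "118/218/318/418/VS".

    Returns the static VLAN IDs and a flag that is True when "VS"
    (a VLAN dynamically assigned by the APIC-controlled virtual switch) is present.
    """
    static_ids: list[int] = []
    dynamic = False
    for token in field.split("/"):
        token = token.strip()
        if token.upper() == "VS":
            dynamic = True
            continue
        vlan_id = int(token)
        # Usable 802.1Q VLAN IDs are 1-4094; 0 and 4095 are reserved.
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"VLAN ID out of range: {vlan_id}")
        static_ids.append(vlan_id)
    return static_ids, dynamic


# Transcribed from the VLAN table: VLAN name -> ID used in this validation.
VLAN_TABLE = {
    "Out-of-Band-Mgmt": "3911",
    "MS-IB-MGMT": "118/218/318/418/VS",
    "Native-VLAN": "2",
    "MS-Infra-SMB-VLAN": "3053/3153/VS",
    "MS-LVMN-VLAN": "906/VS",
    "MS-Cluster-VLAN": "907/VS",
    "MS-Infra-iSCSI-A": "3013/3113",
    "MS-Infra-iSCSI-B": "3023/3123",
}


def find_reused_ids(table: dict[str, str]) -> dict[int, list[str]]:
    """Return static VLAN IDs that are listed under more than one VLAN name."""
    owners: dict[int, list[str]] = {}
    for name, field in table.items():
        static_ids, _ = parse_vlan_ids(field)
        for vlan_id in static_ids:
            owners.setdefault(vlan_id, []).append(name)
    return {vlan_id: names for vlan_id, names in owners.items() if len(names) > 1}


if __name__ == "__main__":
    print(find_reused_ids(VLAN_TABLE) or "no static VLAN ID is reused")
```

For the values in this guide the check returns an empty result. A non-empty result would usually indicate a transcription error in a customer-specific VLAN plan.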

Table 3 lists the VMs necessary for deployment as outlined in this document.

| Virtual Machine Description | Host Name |
| --- | --- |
| Active Directory (AD) | ACI-FP-AD1, ACI-FP-AD2 |
| Microsoft System Center Virtual Machine Manager | MS-SCVMM |
| Microsoft System Center Operations Manager | MS-SCOM |

Physical Infrastructure

FlexPod Cabling

The information in this section is provided as a reference for cabling the physical equipment in a FlexPod environment. To simplify cabling requirements, the tables include both local and remote device and port locations.

The tables in this section contain details for the prescribed and supported configuration of the NetApp AFF A300 running NetApp ONTAP® 9.1.

For any modifications of this prescribed architecture, consult the NetApp Interoperability Matrix Tool (IMT).

This document assumes that out-of-band management ports are plugged into an existing management infrastructure at the deployment site. These interfaces will be used in various configuration steps. Make sure to use the cabling directions in this section as a guide.

The NetApp storage controller and disk shelves should be connected according to best practices for the specific storage controller and disk shelves. For disk shelf cabling, refer to the Universal SAS and ACP Cabling Guide: https://library.netapp.com/ecm/ecm_get_file/ECMM1280392.

Figure 2 details the cable connections used in the validation lab for the 40Gb end-to-end with Fibre Channel topology based on the Cisco UCS 6332-16UP Fabric Interconnect. Four 16Gb links connect the Cisco UCS Fabric Interconnects directly to the NetApp AFF controllers. An additional 1Gb management connection is required to an out-of-band network switch that sits outside the FlexPod infrastructure. The Cisco UCS fabric interconnects and Cisco Nexus switches are connected to the out-of-band network switch, and each NetApp AFF controller has two connections to the out-of-band network switch.

Figure 2 FlexPod Cabling with Cisco UCS 6332-16UP Fabric Interconnect

Active Directory DC/DNS

Most customer production environments already have Active Directory and DNS infrastructure configured, so the FlexPod with Microsoft Windows Server 2016 Hyper-V deployment model does not require an additional domain controller to be set up. In that case, the optional domain controller is either omitted from the configuration or used as a resource domain. In this document, we used an existing AD domain controller with the AD-integrated DNS server role running on the same server, which was available in our lab environment. We will configure two additional AD/DNS servers connected to the Core Services endpoint group (EPG) in the ACI fabric. These AD/DNS servers will be configured as additional domain controllers in the same domain as the prerequisite AD/DNS server.

Microsoft System Center 2016

This document details the steps to install Microsoft System Center Operations Manager (SCOM) and Virtual Machine Manager (SCVMM). The Microsoft guidelines to install SCOM and SCVMM 2016 can be found at:

· SCOM: https://docs.microsoft.com/en-us/system-center/scom/deploy-overview

· SCVMM: https://docs.microsoft.com/en-us/system-center/vmm/install-console

This section provides a detailed procedure for configuring the Cisco ACI fabric for use in a FlexPod environment. It is written for the case where the FlexPod components are added to an existing Cisco ACI fabric in several new ACI tenants. The required fabric setup is verified, but previous configuration of the ACI fabric is assumed.

Follow these steps precisely because failure to do so could result in an improper configuration.

Physical Connectivity

Follow the physical connectivity guidelines for FlexPod as covered in the FlexPod Cabling section.

In ACI, both spine and leaf switches are configured using the APIC; individual configuration of the switches is not required. Cisco APIC discovers the ACI infrastructure switches using LLDP and acts as the central control and management point for the entire configuration.

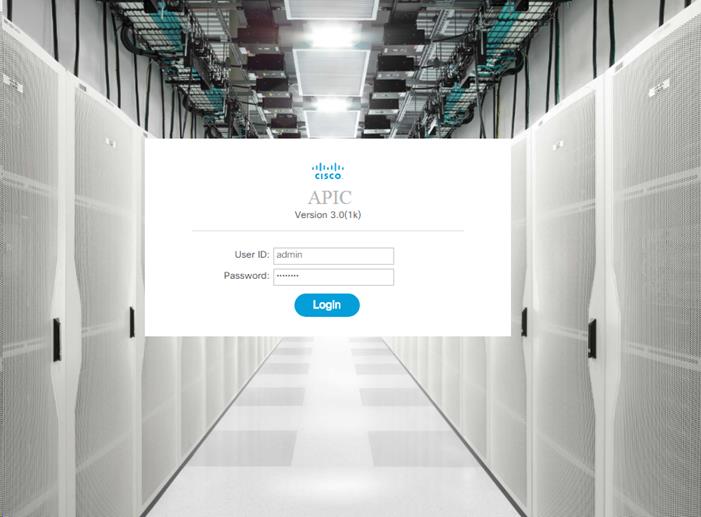

Cisco Application Policy Infrastructure Controller (APIC) Verification

This subsection verifies the setup of the Cisco APIC. Cisco recommends a cluster of at least three APICs controlling an ACI fabric.

1. Log in to the APIC GUI by pointing a web browser to the out-of-band IP address configured for the APIC. Log in with the admin user ID and password.

In this validation, Google Chrome was used as the web browser. It might take a few minutes before the APIC GUI is available after the initial setup.

2. Take the appropriate action to close any warning or information screens.

3. At the top in the APIC home page, select the System tab followed by Controllers.

4. On the left, select the Controllers folder. Verify that at least 3 APICs are available and have redundant connections to the fabric.
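If you record what the Controllers page shows, for example during a site survey, the two conditions in step 4 can be checked mechanically. The following is a minimal sketch over hand-entered data. The ApicRecord fields are hypothetical and do not correspond to any APIC API object. Treating "redundant" as at least two working fabric links is an assumption that matches dual-homing each APIC to the leaf pair.

```python
from dataclasses import dataclass


@dataclass
class ApicRecord:
    """One controller as noted from System > Controllers (hypothetical fields)."""
    name: str
    fabric_links_up: int  # operational connections from this APIC to leaf switches


def verify_apic_cluster(apics: list[ApicRecord], min_controllers: int = 3) -> list[str]:
    """Return a list of problems; an empty list means both checks passed."""
    problems = []
    if len(apics) < min_controllers:
        problems.append(
            f"only {len(apics)} APIC(s) found; at least {min_controllers} recommended"
        )
    for apic in apics:
        # Redundant attachment is taken to mean two or more working fabric links.
        if apic.fabric_links_up < 2:
            problems.append(f"{apic.name} does not have redundant fabric connections")
    return problems


if __name__ == "__main__":
    cluster = [ApicRecord("apic1", 2), ApicRecord("apic2", 2), ApicRecord("apic3", 1)]
    for problem in verify_apic_cluster(cluster):
        print(problem)
```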

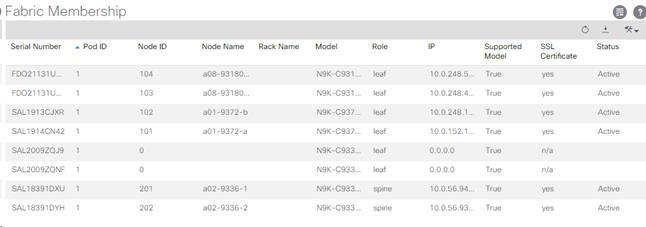

Cisco ACI Fabric Discovery

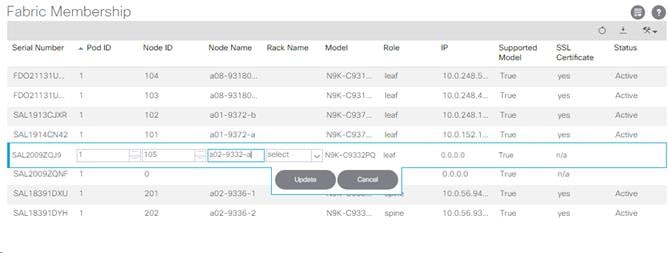

This section details the steps for adding the two Nexus 9332PQ leaf switches to the Fabric. These switches are automatically discovered in the ACI Fabric and are manually assigned node IDs. To add Nexus 9332PQ leaf switches to the Fabric, complete the following steps:

1. At the top in the APIC home page, select the Fabric tab.

2. In the left pane, select and expand Fabric Membership.

3. The two 9332 Leaf Switches will be listed on the Fabric Membership page with Node ID 0 as shown:

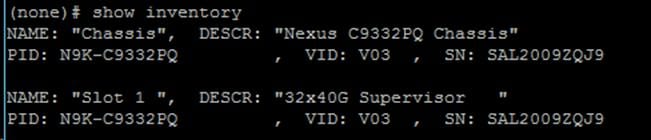

4. Connect to the two Nexus 9332 leaf switches using serial consoles and log in as admin with no password (press Enter). Use the show inventory command to get each leaf's serial number.

5. Match the serial numbers from the leaf listing to determine the A and B switches under Fabric Membership.

6. In the APIC GUI, under Fabric Membership, double-click the A leaf in the list. Enter a Node ID and a Node Name for the Leaf switch and click Update.

7. Repeat step 6 for the B leaf in the list.

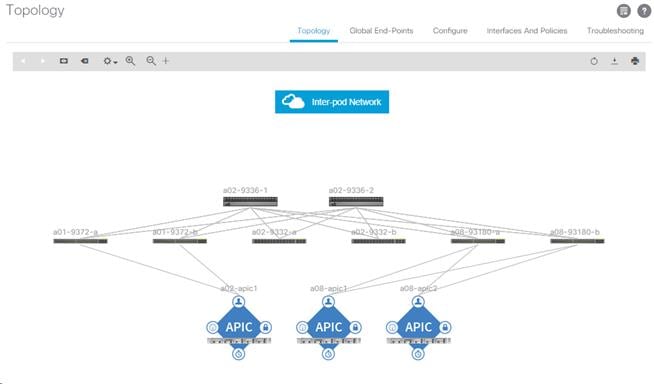

8. Click Topology in the left pane. The discovered ACI Fabric topology will appear. It may take a few minutes for the Nexus 9332 leaf switches to appear; click the refresh button until the complete topology is displayed.
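Steps 4 through 7 match serial numbers read on each console against the unregistered entries (Node ID 0) under Fabric Membership. The following is a minimal sketch of that matching, useful for preparing the values before you enter them in the GUI. The serial numbers and node names are hypothetical placeholders. Node IDs 105 and 106 follow the example range used later in this guide.

```python
def plan_leaf_registration(
    membership_serials: set[str],
    console_serials: dict[str, str],
    planned: dict[str, tuple[int, str]],
) -> dict[str, tuple[int, str]]:
    """Map each discovered serial number to the (Node ID, Node Name) to enter.

    membership_serials: serials listed under Fabric Membership with Node ID 0.
    console_serials: leaf label ("A" or "B") -> serial read with show inventory.
    planned: leaf label -> (node_id, node_name) chosen for that leaf.
    """
    registration = {}
    for leaf, serial in console_serials.items():
        if serial not in membership_serials:
            raise ValueError(f"leaf {leaf} serial {serial} is not listed in Fabric Membership")
        registration[serial] = planned[leaf]
    node_ids = [node_id for node_id, _ in registration.values()]
    if len(node_ids) != len(set(node_ids)):
        raise ValueError("the same Node ID is planned for more than one leaf")
    return registration


if __name__ == "__main__":
    # Hypothetical serial numbers and node names.
    membership = {"SERIAL-AAA", "SERIAL-BBB"}
    consoles = {"A": "SERIAL-AAA", "B": "SERIAL-BBB"}
    plan = {"A": (105, "leaf-a"), "B": (106, "leaf-b")}
    for serial, (node_id, name) in sorted(plan_leaf_registration(membership, consoles, plan).items()):
        print(serial, node_id, name)
```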

Initial ACI Fabric Setup Verification

This section details the steps for the initial setup of the Cisco ACI Fabric, where the software release is validated, out-of-band management IPs are assigned to the new leaf switches, the NTP setup is verified, and the fabric BGP route reflectors are verified.

Software Upgrade

To upgrade the software, complete the following steps:

1. In the APIC GUI, at the top select Admin > Firmware.

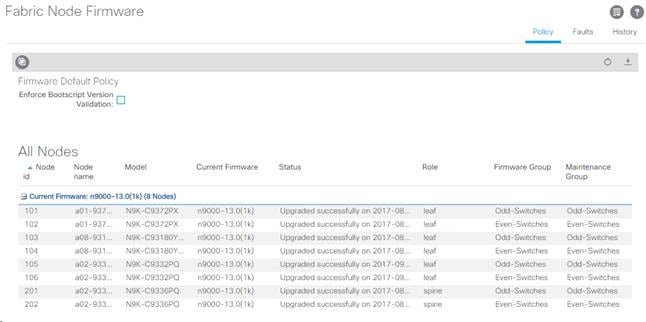

2. This document was validated with ACI software release 3.0(1k). Select Fabric Node Firmware in the left pane under Firmware Management. All switches should show the same firmware release and the release version should be at minimum n9000-13.0(1k). The switch software version should also match the APIC version.

3. Click Admin > Firmware > Controller Firmware. If the APICs are not all at the same release, or are below release 3.0(1k), follow the Cisco APIC Controller and Switch Software Upgrade and Downgrade Guide to upgrade both the APICs and the switches to a minimum release of 3.0(1k) on the APICs and 13.0(1k) on the switches.
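The release checks in steps 2 and 3 can be expressed as a small comparison. This Python sketch parses release strings in the form shown above, 3.0(1k) and n9000-13.0(1k), and assumes, as in this validation, that a switch release is numbered ten major versions above its matching APIC release. Collecting the versions from the APIC is left out; the inputs are plain lists.

```python
import re

# Matches "3.0(1k)" and the "13.0(1k)" part of "n9000-13.0(1k)"
_RELEASE = re.compile(r"(\d+)\.(\d+)\((\d+)([a-z]*)\)")


def parse_release(text):
    """Return a sortable (major, minor, maintenance, letters) tuple."""
    match = _RELEASE.search(text)
    if match is None:
        raise ValueError(f"unrecognized release string: {text!r}")
    major, minor, maint, letters = match.groups()
    return int(major), int(minor), int(maint), letters


def check_releases(apic_releases, switch_releases,
                   apic_min="3.0(1k)", switch_min="n9000-13.0(1k)"):
    """Return a list of problems; an empty list means the checks pass."""
    problems = []
    for label, releases, minimum in (("APIC", apic_releases, apic_min),
                                     ("switch", switch_releases, switch_min)):
        if len(set(releases)) > 1:
            problems.append(f"{label} releases differ: {sorted(set(releases))}")
        for release in releases:
            if parse_release(release) < parse_release(minimum):
                problems.append(f"{label} release {release} is below {minimum}")
    if apic_releases and switch_releases:
        # Assumed pairing: switch major version = APIC major version + 10
        apic, switch = parse_release(apic_releases[0]), parse_release(switch_releases[0])
        if (switch[0] - 10,) + switch[1:] != apic:
            problems.append("switch release does not match the APIC release")
    return problems
```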

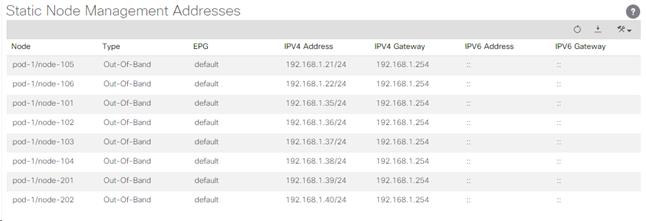

Setting Up Out-of-Band Management IP Addresses for New Leaf Switches

To set up out-of-band management IP addresses, complete the following steps:

1. To add out-of-band management interfaces for all the switches in the ACI Fabric, select Tenants > mgmt.

2. Expand Tenant mgmt on the left. Right-click Node Management Addresses and select Create Static Node Management Addresses.

3. Enter the node number range for the new leaf switches (105-106 in this example).

4. Select the checkbox for Out-of-Band Addresses.

5. Select default for Out-of-Band Management EPG.

6. The IPs are assigned from a consecutive range, one per node (two IPs in this example). Enter the starting IP address and netmask in the Out-Of-Band IPV4 Address field.

7. Enter the out of band management gateway address in the Gateway field.

8. Click SUBMIT, then click YES.

9. On the left, expand Node Management Addresses and select Static Node Management Addresses. Verify the mapping of IPs to switching nodes.

10. Direct out-of-band access to the switches is now available for SSH.
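Step 6 assigns addresses consecutively, one per node in the range. The sketch below reproduces that arithmetic with Python's standard ipaddress module so the plan can be checked before clicking SUBMIT. The mgmtRsOoBStNode class, its attributes, and the topology/pod-1/node-N target DN are assumed from the APIC object model (such objects are normally children of uni/tn-mgmt/mgmtp-default/oob-default); the addresses used are hypothetical.

```python
import ipaddress


def plan_oob_addresses(first_node, last_node, start_cidr, gateway, pod=1):
    """Give each node in [first_node, last_node] the next consecutive address."""
    start = ipaddress.ip_interface(start_cidr)
    network = start.network
    gw = ipaddress.ip_address(gateway)
    if gw not in network:
        raise ValueError("the gateway must be on the out-of-band management subnet")
    bodies = []
    for offset, node in enumerate(range(first_node, last_node + 1)):
        addr = start.ip + offset
        # Reject addresses that fall off the subnet or collide with reserved ones
        if addr not in network or addr in (network.network_address,
                                           network.broadcast_address, gw):
            raise ValueError(f"{addr} is not a usable address for node {node}")
        bodies.append({"mgmtRsOoBStNode": {"attributes": {
            "tDn": f"topology/pod-{pod}/node-{node}",
            "addr": f"{addr}/{network.prefixlen}",
            "gw": str(gw),
        }}})
    return bodies
```

For nodes 105-106 starting at a hypothetical 192.168.156.15/24, the leaves receive .15 and .16.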

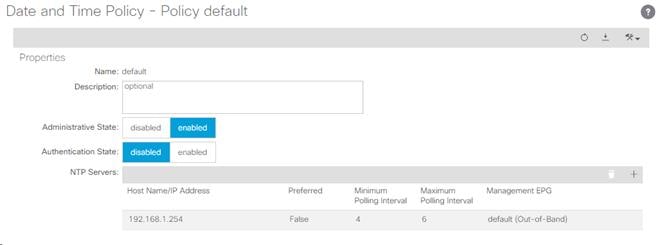

Verifying Time Zone and NTP Server

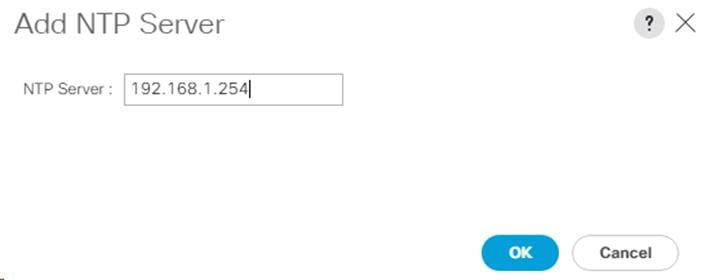

This procedure will allow customers to verify setup of an NTP server for synchronizing the fabric time. To verify the time zone and NTP server set up, complete the following steps:

1. To verify NTP setup in the fabric, select and expand Fabric > Fabric Policies > Pod Policies > Policies > Date and Time.

2. Select default. In the Datetime Format - default pane, verify the correct Time Zone is selected and that Offset State is enabled. Adjust as necessary and click Submit and Submit Changes.

3. On the left, select Policy default. Verify that at least one NTP Server is listed.

4. If necessary, on the right use the + sign to add NTP servers accessible on the out of band management subnet. Enter an IP address accessible on the out of band management subnet and select the default (Out-of-Band) Management EPG. Click Submit to add the NTP server. Repeat this process to add all NTP servers.
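For reference, the NTP servers added in step 4 can also be described as a single request body for the default Date and Time policy. This is a sketch only: the datetimePol, datetimeNtpProv, and datetimeRsNtpProvToEpg classes and the uni/fabric/time-default DN are assumed from the APIC object model, and the server addresses are hypothetical.

```python
# Default out-of-band management EPG, as selected in step 4
OOB_EPG_DN = "uni/tn-mgmt/mgmtp-default/oob-default"


def ntp_policy_payload(servers, preferred=None):
    """Body for the default Date and Time policy (assumed DN uni/fabric/time-default)."""
    if not servers:
        raise ValueError("at least one NTP server is required")
    providers = []
    for server in servers:
        providers.append({"datetimeNtpProv": {
            "attributes": {"name": server,
                           "preferred": "yes" if server == preferred else "no"},
            # Reach the NTP server through the out-of-band management EPG
            "children": [{"datetimeRsNtpProvToEpg": {"attributes": {"tDn": OOB_EPG_DN}}}],
        }})
    return {"datetimePol": {"attributes": {"name": "default"}, "children": providers}}
```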

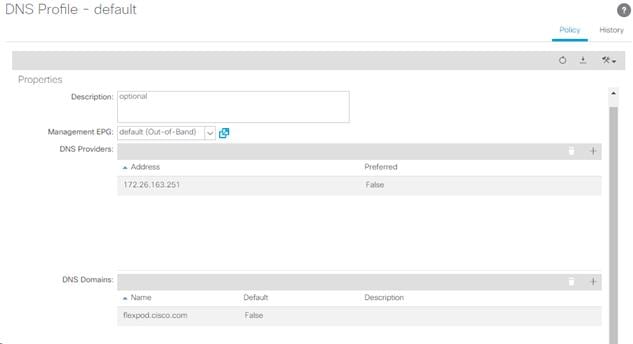

Verifying Domain Name Servers

To verify optional DNS in the ACI fabric, complete the following steps:

1. Select and expand Fabric > Fabric Policies > Global Policies > DNS Profiles > default.

2. Verify the DNS Providers and DNS Domains.

3. If necessary, in the Management EPG drop-down, select the default (Out-of-Band) Management EPG. Use the + signs to the right of DNS Providers and DNS Domains to add DNS servers and the DNS domain name. Note that the DNS servers should be reachable from the out of band management subnet. Click SUBMIT to complete the DNS configuration.
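The DNS settings from step 3 map onto one DNS profile body. In this sketch the dnsProfile, dnsProv, dnsDomain, and dnsRsProfileToEpg classes and the uni/fabric/dnsp-default DN are assumptions about the APIC object model, treating the first server as preferred is an illustrative choice, and the addresses and domain name are hypothetical.

```python
OOB_EPG_DN = "uni/tn-mgmt/mgmtp-default/oob-default"


def dns_profile_payload(servers, domains, default_domain):
    """Body for the default DNS profile (assumed DN uni/fabric/dnsp-default)."""
    if default_domain not in domains:
        raise ValueError("the default domain must be one of the listed DNS domains")
    # DNS servers are reached through the default out-of-band management EPG
    children = [{"dnsRsProfileToEpg": {"attributes": {"tDn": OOB_EPG_DN}}}]
    for index, addr in enumerate(servers):
        # Treat the first server listed as the preferred provider
        children.append({"dnsProv": {"attributes": {
            "addr": addr, "preferred": "yes" if index == 0 else "no"}}})
    for name in domains:
        children.append({"dnsDomain": {"attributes": {
            "name": name, "isDefault": "yes" if name == default_domain else "no"}}})
    return {"dnsProfile": {"attributes": {"name": "default"}, "children": children}}
```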

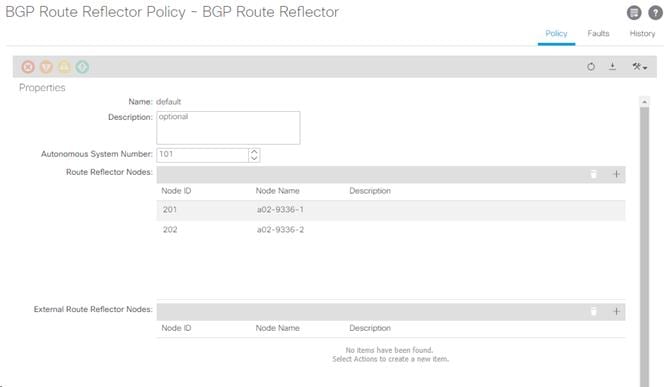

Verifying BGP Route Reflectors

In this ACI deployment, both the spine switches should be set up as BGP route-reflectors to distribute the leaf routes throughout the fabric. To verify the BGP Route Reflector, complete the following steps:

1. Select and expand System > System Settings > BGP Route Reflector.

2. Verify that a unique Autonomous System Number has been selected for this ACI fabric. If necessary, use the + sign on the right to add the two spines to the list of Route Reflector Nodes. Click SUBMIT to complete configuring the BGP Route Reflector.

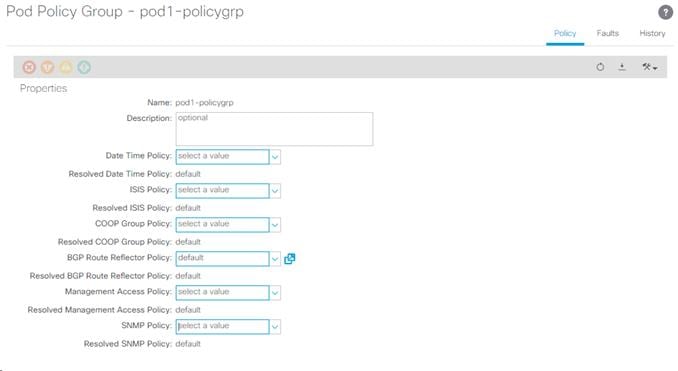

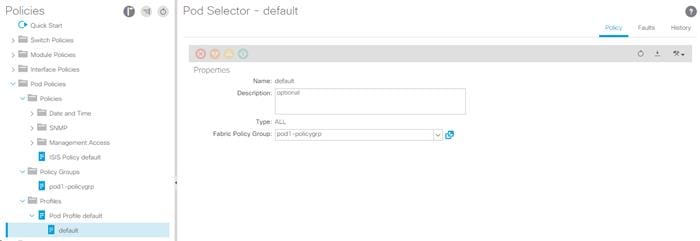

3. To verify the BGP Route Reflector has been enabled, select and expand Fabric > Fabric Policies > Pod Policies > Policy Groups. Under Policy Groups make sure a policy group has been created and select it. The BGP Route Reflector Policy field should show “default.”

4. If a Policy Group has not been created, on the left, right-click Policy Groups under Pod Policies and select Create Pod Policy Group. In the Create Pod Policy Group window, name the Policy Group pod1-policygrp. Select the default BGP Route Reflector Policy. Click SUBMIT to complete creating the Policy Group.

5. On the left expand Profiles under Pod Policies and select Pod Profile default > default.

6. Verify that the pod1-policygrp or the Fabric Policy Group identified above is selected. If the Fabric Policy Group is not selected, view the drop-down list to select it and click Submit.
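The route reflector settings verified above (one fabric ASN, both spines as route reflector nodes) can be sketched as a body for the default BGP policy. The bgpInstPol, bgpAsP, bgpRRP, and bgpRRNodePEp classes are assumed from the APIC object model, spine node IDs 201 and 202 are hypothetical, and the Pod Policy Group binding from steps 3 to 6 is not covered.

```python
def bgp_route_reflector_payload(asn, spine_node_ids):
    """Body for the default BGP Route Reflector policy (assumed DN uni/fabric/bgpInstP-default)."""
    if not 1 <= asn <= 4294967295:
        raise ValueError("ASN must fit in 4 bytes (1-4294967295)")
    if len(spine_node_ids) < 2 or len(set(spine_node_ids)) != len(spine_node_ids):
        raise ValueError("list at least two spines, each only once")
    rr_nodes = [{"bgpRRNodePEp": {"attributes": {"id": str(n)}}} for n in spine_node_ids]
    return {"bgpInstPol": {
        "attributes": {"name": "default"},
        "children": [
            {"bgpAsP": {"attributes": {"asn": str(asn)}}},
            {"bgpRRP": {"attributes": {}, "children": rr_nodes}},
        ],
    }}
```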

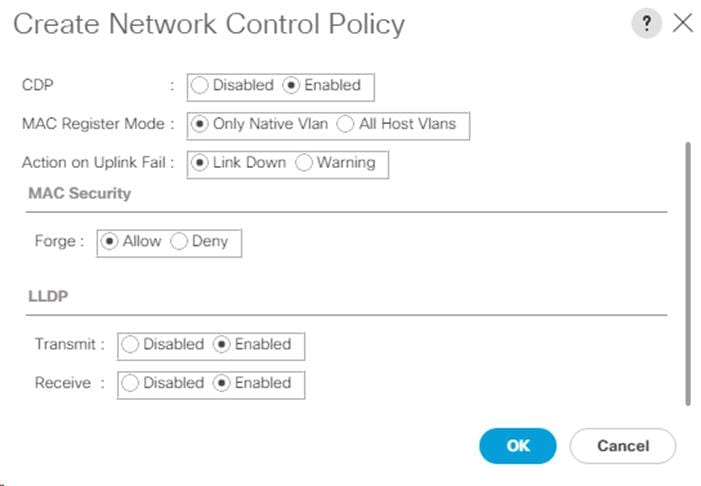

Fabric Access Policy Setup

This section details the steps to create the access policies that define parameters for CDP, LLDP, LACP, and so on. These policies are used during vPC and VM domain creation. In an existing fabric, these policies may already exist; existing policies can be used if they are configured the same way as listed here. To define fabric access policies, complete the following steps:

1. Log in to the APIC GUI.

2. In the APIC UI, select and expand Fabric > Access Policies > Interface Policies > Policies.

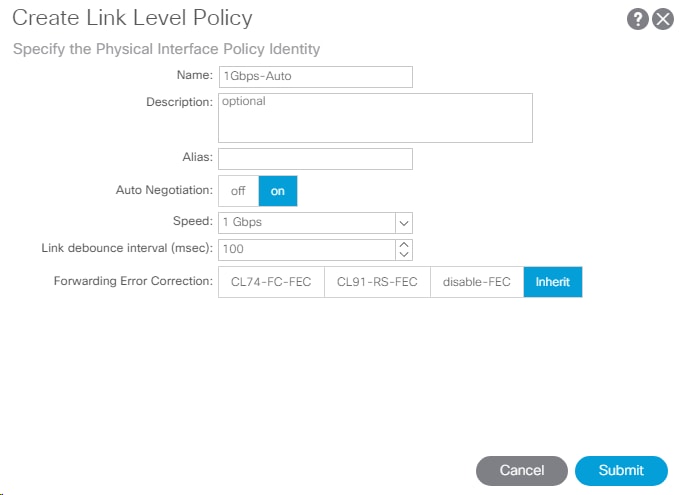

Create Link Level Policies

This procedure will create link level policies for setting up the 1Gbps, 10Gbps, and 40Gbps link speeds. To create the link level policies, complete the following steps:

1. In the left pane, right-click Link Level and select Create Link Level Policy.

2. Name the policy as 1Gbps-Auto and select the 1Gbps Speed.

3. Click Submit to complete creating the policy.

4. In the left pane, right-click Link Level and select Create Link Level Policy.

5. Name the policy 10Gbps-Auto and select the 10Gbps Speed.

6. Click Submit to complete creating the policy.

7. In the left pane, right-click Link Level and select Create Link Level Policy.

8. Name the policy 40Gbps-Auto and select the 40Gbps Speed.

9. Click Submit to complete creating the policy.
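The three link level policies differ only in name and speed, so they can be generated from a small table. In this sketch the fabricHIfPol class, the "1G"/"10G"/"40G" speed encodings, and autoNeg "on" (matching the -Auto suffix) are assumptions about the APIC object model; the bodies would be posted under uni/infra.

```python
# Policy name -> assumed APIC speed value
LINK_LEVEL_POLICIES = {"1Gbps-Auto": "1G", "10Gbps-Auto": "10G", "40Gbps-Auto": "40G"}


def link_level_payloads():
    """Return one fabricHIfPol body per policy, with auto-negotiation left on."""
    return [
        {"fabricHIfPol": {"attributes": {"name": name, "speed": speed, "autoNeg": "on"}}}
        for name, speed in LINK_LEVEL_POLICIES.items()
    ]
```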

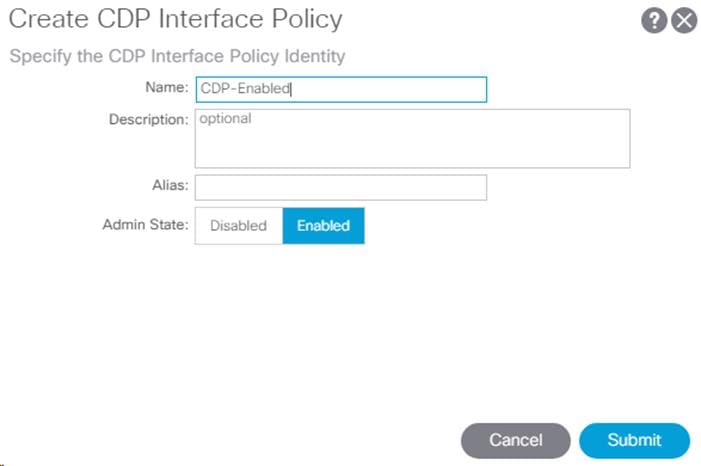

Create CDP Policy

This procedure creates policies to enable or disable CDP on a link. To create a CDP policy, complete the following steps:

1. In the left pane, right-click CDP interface and select Create CDP Interface Policy.

2. Name the policy as CDP-Enabled and enable the Admin State.

3. Click Submit to complete creating the policy.

4. In the left pane, right-click the CDP Interface and select Create CDP Interface Policy.

5. Name the policy CDP-Disabled and disable the Admin State.

6. Click Submit to complete creating the policy.
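Expressed as request bodies, the two CDP policies are a pair of objects that differ only in administrative state. The cdpIfPol class and its adminSt values are assumed from the APIC object model; like the other interface policies, they would sit under uni/infra.

```python
def cdp_payloads():
    """CDP-Enabled and CDP-Disabled interface policies (assumed class cdpIfPol)."""
    return [
        {"cdpIfPol": {"attributes": {"name": f"CDP-{state.capitalize()}", "adminSt": state}}}
        for state in ("enabled", "disabled")
    ]
```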

Create LLDP Interface Policies

This procedure will create policies to enable or disable LLDP on a link. To create an LLDP Interface policy, complete the following steps:

1. In the left pane, right-click LLDP Interface and select Create LLDP Interface Policy.

2. Name the policy as LLDP-Enabled and enable both Transmit State and Receive State.

3. Click Submit to complete creating the policy.

4. In the left pane, right-click LLDP Interface and select Create LLDP Interface Policy.

5. Name the policy as LLDP-Disabled and disable both the Transmit State and Receive State.

6. Click Submit to complete creating the policy.
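Both LLDP policies keep Transmit and Receive in the same state, which a single helper can enforce. The lldpIfPol class and the adminRxSt/adminTxSt attribute names are assumptions about the APIC object model.

```python
def lldp_payload(name, enabled):
    """Transmit and Receive always follow the same state, as in steps 2 and 5."""
    state = "enabled" if enabled else "disabled"
    return {"lldpIfPol": {"attributes": {"name": name, "adminRxSt": state, "adminTxSt": state}}}


LLDP_POLICIES = [lldp_payload("LLDP-Enabled", True), lldp_payload("LLDP-Disabled", False)]
```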

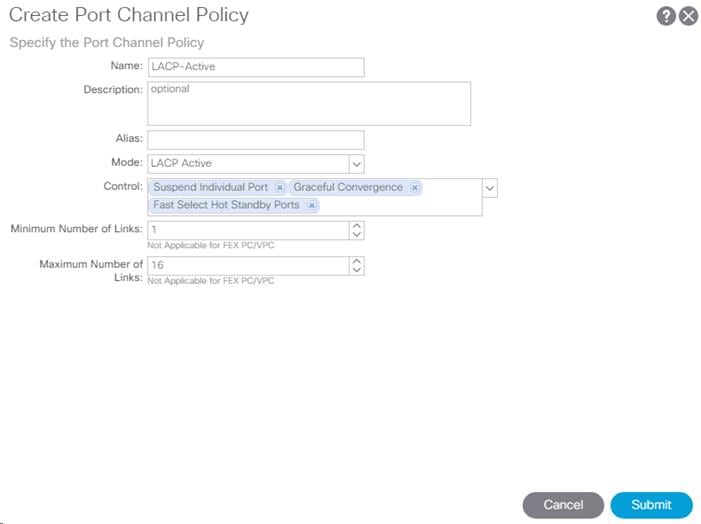

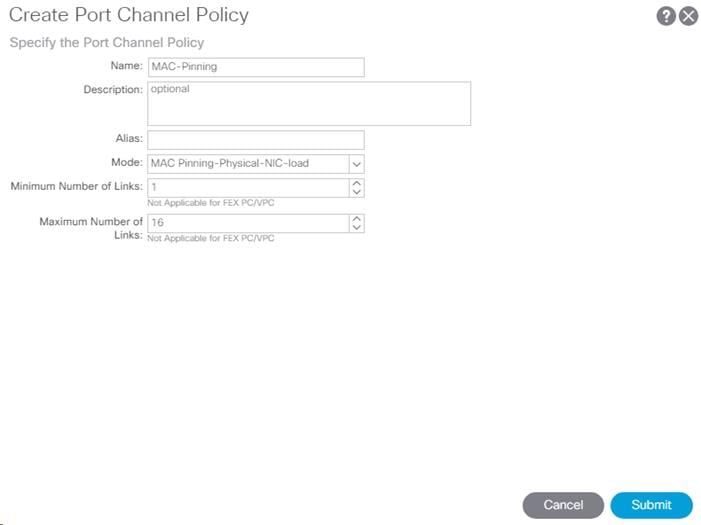

Create Port Channel Policy

This procedure will create policies to set the LACP Active mode, LACP Mode On, and MAC Pinning mode configurations. To create the Port Channel policies, complete the following steps:

1. In the left pane, right-click the Port Channel and select Create Port Channel Policy.

2. Name the policy as LACP-Active and select LACP Active for the Mode. Do not change any of the other values.

3. Click Submit to complete creating the policy.

4. In the left pane, right-click Port Channel and select Create Port Channel Policy.

5. Name the policy as MAC-Pinning and select MAC Pinning-Physical-NIC-load for the Mode. Do not change any of the other values.

6. Click Submit to complete creating the policy.

7. In the left pane, right-click Port Channel and select Create Port Channel Policy.
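The GUI mode labels used above correspond to values of a single mode attribute. This sketch maps only the two labels named in the steps; the lacpLagPol class and the "active" and "mac-pin-nicload" values are assumptions about the APIC object model. Leaving every other attribute out keeps the APIC defaults, which mirrors "Do not change any of the other values."

```python
# GUI mode label -> assumed lacpLagPol "mode" value
MODE_VALUES = {
    "LACP Active": "active",
    "MAC Pinning-Physical-NIC-load": "mac-pin-nicload",
}


def port_channel_payload(name, gui_mode):
    """Only the mode is set; all other attributes keep their defaults."""
    if gui_mode not in MODE_VALUES:
        raise ValueError(f"no API value recorded for mode {gui_mode!r}")
    return {"lacpLagPol": {"attributes": {"name": name, "mode": MODE_VALUES[gui_mode]}}}
```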

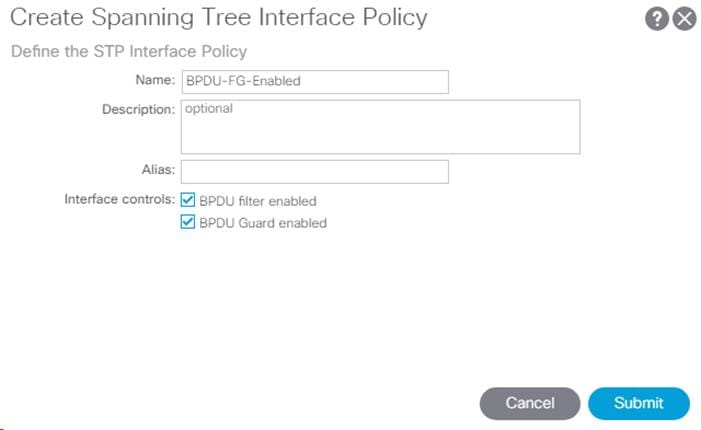

Create BPDU Filter/Guard Policies

This procedure will create policies to enable or disable BPDU filter and guard. To create a BPDU filter/Guard policy, complete the following steps:

1. In the left pane, right-click Spanning Tree Interface and select Create Spanning Tree Interface Policy.

2. Name the policy as BPDU-FG-Enabled and select both the BPDU filter and BPDU Guard Interface Controls.

3. Click Submit to complete creating the policy.

4. In the left pane, right-click Spanning Tree Interface and select Create Spanning Tree Interface Policy.

5. Name the policy as BPDU-FG-Disabled and make sure both the BPDU filter and BPDU Guard Interface Controls are cleared.

6. Click Submit to complete creating the policy.
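The BPDU filter and guard checkboxes can be represented as a comma-separated list of control flags. The stpIfPol class and the "bpdu-filter"/"bpdu-guard" flag names are assumed from the APIC object model; an empty string stands for both controls cleared.

```python
def stp_payload(name, bpdu_filter, bpdu_guard):
    """Spanning Tree Interface policy with the selected BPDU interface controls."""
    flags = []
    if bpdu_filter:
        flags.append("bpdu-filter")
    if bpdu_guard:
        flags.append("bpdu-guard")
    # An empty ctrl string leaves both interface controls cleared
    return {"stpIfPol": {"attributes": {"name": name, "ctrl": ",".join(flags)}}}
```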

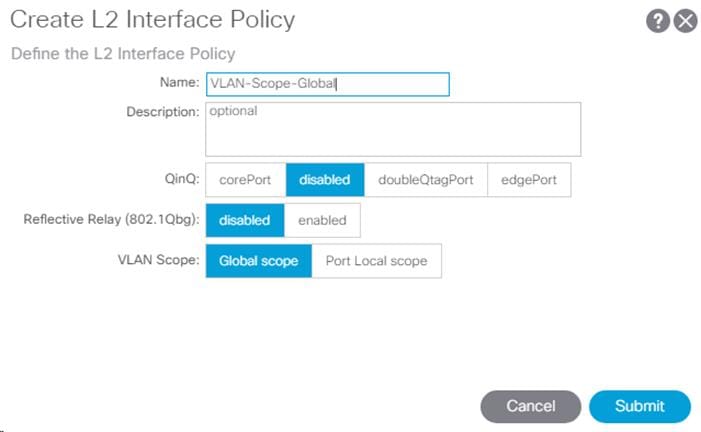

Create Global VLAN Policy

To create policies to enable global scope for all the VLANs, complete the following steps:

1. In the left pane, right-click the L2 Interface and select Create L2 Interface Policy.

2. Name the policy as VLAN-Scope-Global and make sure Global scope is selected. Do not change any of the other values.

3. Click Submit to complete creating the policy.
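The VLAN-Scope-Global policy sets one attribute. The l2IfPol class and its "global"/"portlocal" scope values are assumptions about the APIC object model.

```python
def l2_interface_payload(name="VLAN-Scope-Global", scope="global"):
    """L2 Interface policy; 'global' makes a VLAN ID significant across the whole leaf."""
    if scope not in ("global", "portlocal"):
        raise ValueError("VLAN scope must be 'global' or 'portlocal'")
    return {"l2IfPol": {"attributes": {"name": name, "vlanScope": scope}}}
```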

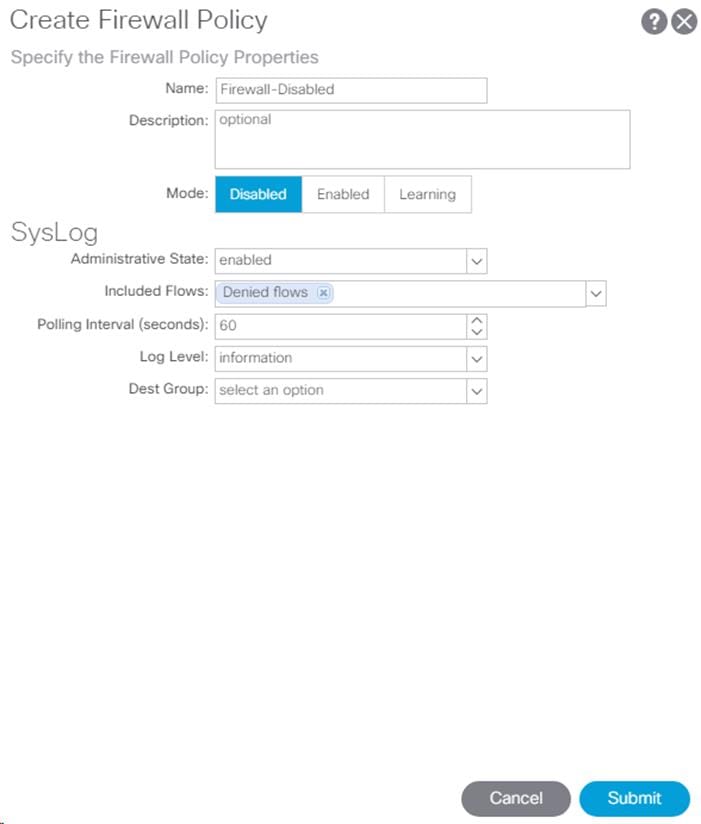

Create Firewall Policy

To create policies to disable a firewall, complete the following steps:

1. In the left pane, right-click Firewall and select Create Firewall Policy.

2. Name the policy Firewall-Disabled and select Disabled for Mode. Do not change any of the other values.

3. Click Submit to complete creating the policy.

Create Virtual Port Channels (vPCs)

This section details the steps to set up vPCs for connectivity to the In-Band Management Network, Cisco UCS, and NetApp Storage.

VPC - Management Switch

To set up vPCs for connectivity to the existing In-Band Management Network, complete the following steps:

This deployment guide covers the configuration for a pre-existing Cisco Nexus management switch. You can adjust the management configuration depending on your connectivity setup. The In-Band Management Network provides connectivity of Management Virtual Machines and Hosts in the ACI fabric to existing services on the In-Band Management network outside of the ACI fabric. Layer 3 connectivity is assumed between the In-Band and Out-of-Band Management networks. This setup creates management networks that are physically isolated from tenant networks. In this validation, a 10GE vPC from two 10GE capable leaf switches in the fabric is connected to a port-channel on a Nexus 5K switch outside the fabric. Note that this vPC is not created on the Nexus 9332 leaves, but on existing leaves that have 10GE ports.

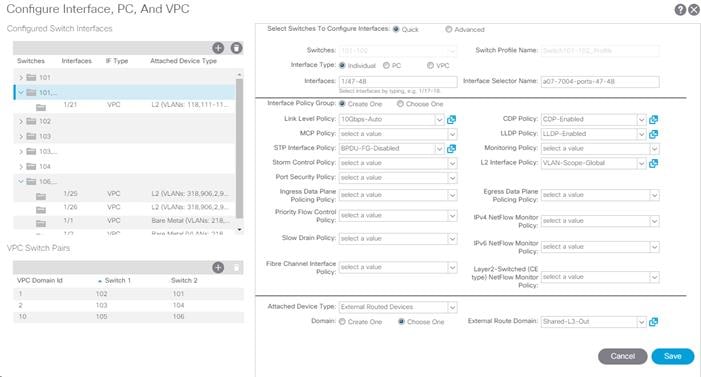

1. In the APIC GUI, at the top select Fabric > Access Policies > Quick Start.

2. In the right pane select Configure an interface, PC and VPC.

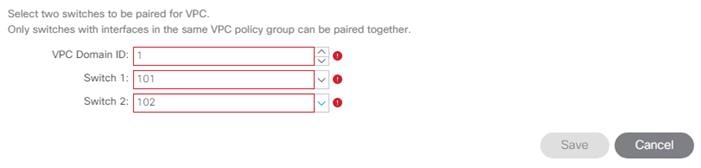

3. In the configuration window, configure a VPC domain between the leaf switches by clicking “+” under VPC Switch Pairs. If a VPC Domain already exists between the two switches being used for this vPC, skip to step 7.

4. Enter a VPC Domain ID (1 in this example).

5. From the drop-down list, select Switch A and Switch B IDs to select the two leaf switches.

6. Click SAVE.

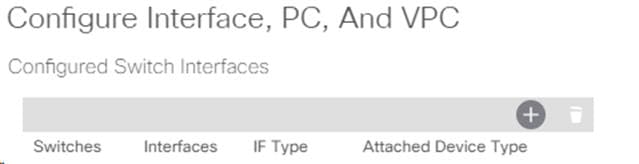

7. Click the “+” under Configured Switch Interfaces.

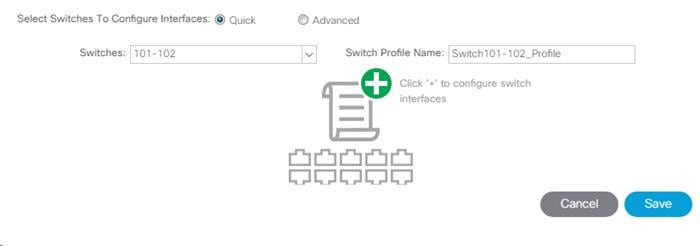

8. From the Switches drop-down list on the right, select both the leaf switches being used for this vPC.

9. Leave the system generated Switch Profile Name in place.

10. Click the big green “+” to configure switch interfaces.

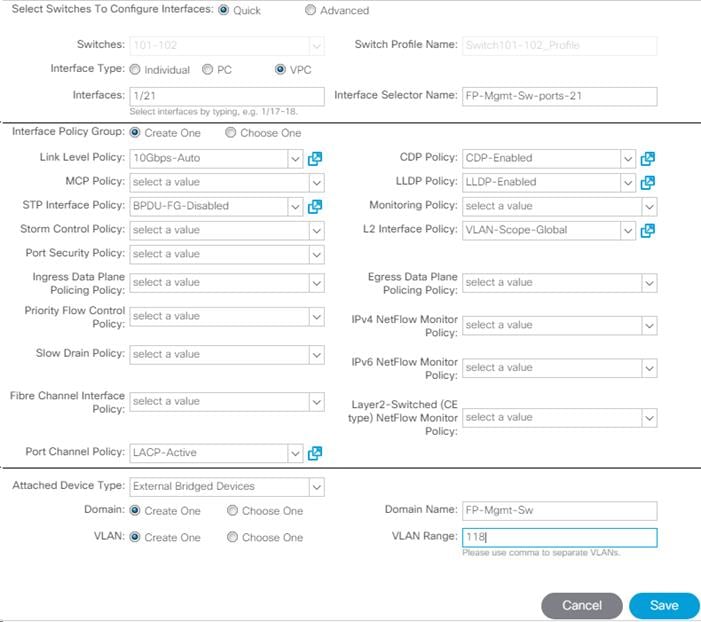

11. Configure various fields as shown in the figure below. In this screen shot, port 1/21 on both leaf switches is connected to a Nexus switch using 10Gbps links.

12. Click Save.

13. Click Save again to finish configuring the switch interfaces.

14. Click Submit.

To validate the configuration, log into the Nexus switch and verify the port-channel is up (show port-channel summary).
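A vPC domain pairs exactly two leaves, and a leaf can take part in only one vPC domain. The sketch below checks that rule for the two domains used in this guide (ID 1 for the existing 10GE leaves and ID 10 for the Nexus 9332 pair) and builds explicit protection group bodies. The fabricExplicitGEp and fabricNodePEp classes are assumed from the APIC object model; the group names and node IDs 101 and 102 for the 10GE leaves are hypothetical.

```python
def vpc_domain_payloads(domains):
    """domains: {protection_group_name: (vpc_domain_id, leaf_a, leaf_b)}."""
    used_ids, used_leaves, bodies = set(), set(), []
    for name, (domain_id, leaf_a, leaf_b) in domains.items():
        if domain_id in used_ids:
            raise ValueError(f"vPC domain ID {domain_id} is already in use")
        if leaf_a == leaf_b or {leaf_a, leaf_b} & used_leaves:
            raise ValueError(f"{name}: needs two distinct leaves not already in a vPC domain")
        used_ids.add(domain_id)
        used_leaves.update((leaf_a, leaf_b))
        bodies.append({"fabricExplicitGEp": {
            "attributes": {"name": name, "id": str(domain_id)},
            "children": [{"fabricNodePEp": {"attributes": {"id": str(n)}}}
                         for n in (leaf_a, leaf_b)],
        }})
    return bodies
```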

VPC – UCS Fabric Interconnects

Complete the following steps to set up vPCs for connectivity to the UCS Fabric Interconnects.

Figure 3 VLANs Configured for Cisco UCS

Table 4 VLANs for Cisco UCS Hosts

| Name | VLAN |
|------|------|
| Native | <2> |
| MS-Core-Services | <318> |
| MS-IB-Mgmt | <418> |
| MS-LVMN | <906> |
| MS-Cluster | <907> |
| MS-Infra-SMB | <3153> |
| MS-Infra-iSCSI-A | <3113> |
| MS-Infra-iSCSI-B | <3123> |

MS-Core-Services and MS-IB-MGMT will be in the same bridge domain and subnet; they have to be in different VLANs because we are using the VLAN-Scope-Global L2 Interface Policy.

MS-LVMN, MS-Cluster, and MS-Infra-SMB VLANs are configured in this section and will be in place if needed on the manually created Hyper-V Virtual Switch. The EPG should be configured; it is not necessary to configure the actual VLAN or UCS static port mapping, but you can configure these without any negative effects.

1. In the APIC GUI, select Fabric > Access Policies > Quick Start.

2. In the right pane, select Configure an interface, PC and VPC.

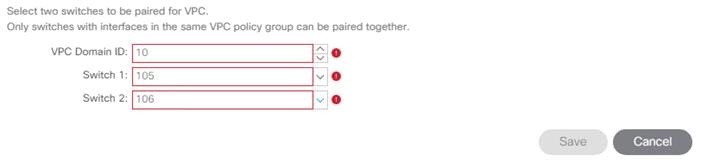

3. In the configuration window, configure a VPC domain between the 9332 leaf switches by clicking “+” under VPC Switch Pairs.

4. Enter a VPC Domain ID (10 in this example).

5. From the drop-down list, select 9332 Switch A and 9332 Switch B IDs to select the two leaf switches.

6. Click Save.

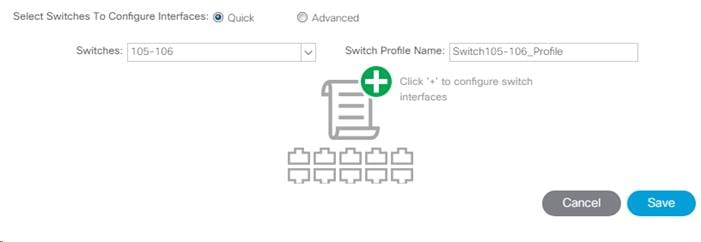

7. Click the “+” under Configured Switch Interfaces.

8. Select the two Nexus 9332 switches under the Switches drop-down list.

9. Click the big green "+" to add switch interfaces.

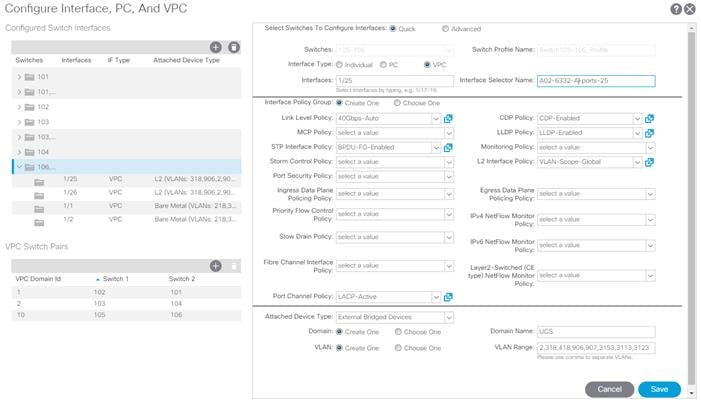

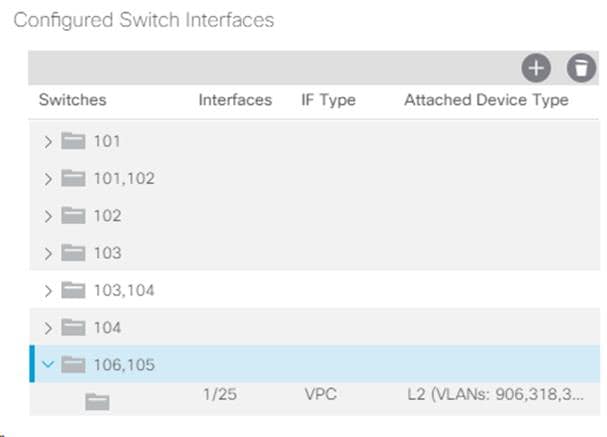

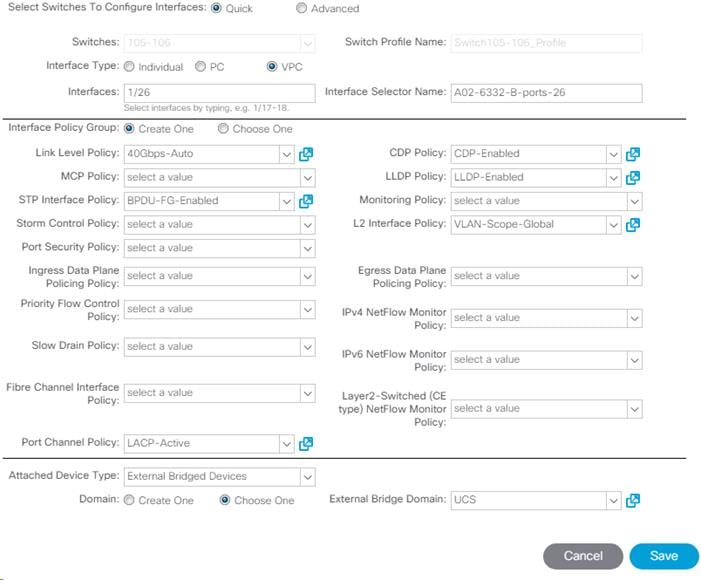

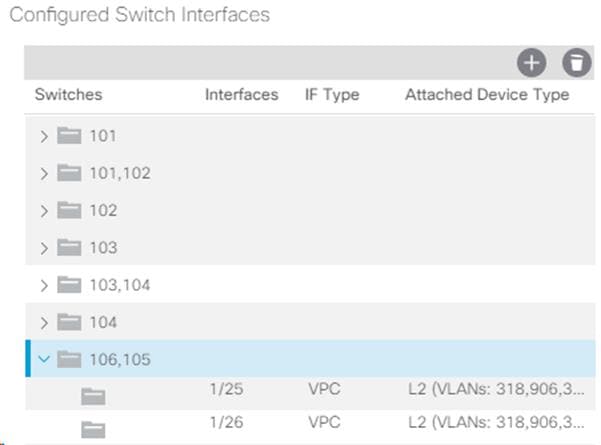

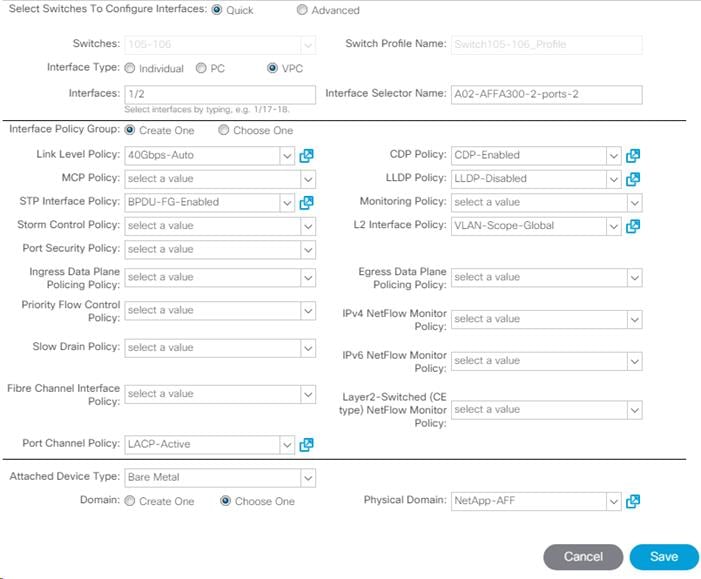

10. Configure various fields as shown in the figure below. In this screenshot, port 1/25 on both leaf switches is connected to UCS Fabric Interconnect A using 40Gbps links.

11. Click Save.

12. Click Save again to finish configuring the switch interfaces.

13. Click Submit.

14. From the right pane, select Configure an interface, PC and VPC.

15. Select the switches configured in the last step under Configured Switch Interfaces.

16. Click the big green "+" to add switch interfaces.

17. Configure various fields as shown in the screenshot. In this screenshot, port 1/26 on both leaf switches is connected to UCS Fabric Interconnect B using 40Gbps links. Instead of creating a new domain, the External Bridge Domain created in the last step (UCS) is attached to the FI-B as shown below.

18. Click Save.

19. Click Save again to finish configuring the switch interfaces.

20. Click Submit.

21. Optional: Repeat this procedure to configure any additional UCS domains. For a uniform configuration, the External Bridge Domain (UCS) will be utilized for all the Fabric Interconnects.

VPC – NetApp AFF Cluster

Complete the following steps to set up vPCs for connectivity to the NetApp AFF storage controllers. The VLANs configured for NetApp are shown in the table below.

Since Global VLAN Scope is being used in this environment, unique VLAN IDs must be used for each different entry point into the ACI fabric. The VLAN IDs for the same named VLANs are different.

Table 5 VLANs for Storage

| Name | VLAN |
|------|------|
| MS-IB-MGMT | <218> |
| MS-Infra-SMB | <3053> |
| MS-Infra-iSCSI-A | <3013> |
| MS-Infra-iSCSI-B | <3023> |
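Because Global VLAN Scope requires a distinct VLAN ID at each entry point into the fabric, the UCS and storage VLAN tables can be cross-checked before any static ports are bound. This Python sketch uses the VLAN IDs from Tables 4 and 5 and reports any ID that appears more than once; the entry-point labels "UCS" and "NetApp" are illustrative.

```python
# VLAN IDs from Table 4 (UCS) and Table 5 (storage)
UCS_VLANS = {"Native": 2, "MS-Core-Services": 318, "MS-IB-Mgmt": 418, "MS-LVMN": 906,
             "MS-Cluster": 907, "MS-Infra-SMB": 3153, "MS-Infra-iSCSI-A": 3113,
             "MS-Infra-iSCSI-B": 3123}
STORAGE_VLANS = {"MS-IB-MGMT": 218, "MS-Infra-SMB": 3053, "MS-Infra-iSCSI-A": 3013,
                 "MS-Infra-iSCSI-B": 3023}


def find_vlan_collisions(entry_points):
    """Return {vlan_id: [entry/vlan-name, ...]} for every ID used more than once."""
    owners = {}
    for entry, vlans in entry_points.items():
        for name, vlan_id in vlans.items():
            if not 1 <= vlan_id <= 4094:
                raise ValueError(f"{entry}/{name}: VLAN {vlan_id} is out of range")
            owners.setdefault(vlan_id, []).append(f"{entry}/{name}")
    return {vid: users for vid, users in owners.items() if len(users) > 1}
```

For the values in this guide, find_vlan_collisions({"UCS": UCS_VLANS, "NetApp": STORAGE_VLANS}) returns an empty dictionary.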

1. In the APIC GUI, select Fabric > Access Policies > Quick Start.

2. In the right pane, select Configure an interface, PC and VPC.

3. Select the paired Nexus 9332 switches configured in the last step under Configured Switch Interfaces.

4. Click the big green "+" to add switch interfaces.

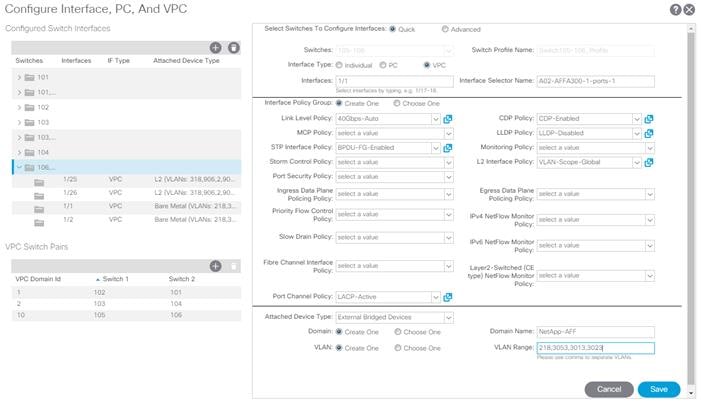

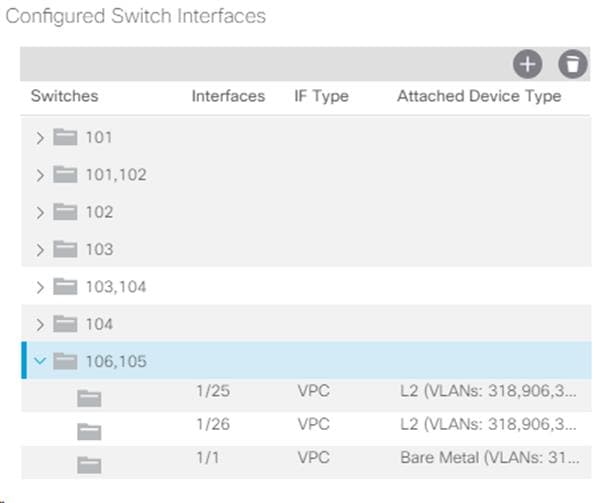

5. Configure various fields as shown in the screenshot below. In this screen shot, port 1/1 on both leaf switches is connected to Storage Controller 1 using 40Gbps links.

6. Click Save.

7. Click Save again to finish configuring the switch interfaces.

8. Click Submit.

9. From the right pane, select Configure an interface, PC and VPC.

10. Select the paired Nexus 9332 switches configured in the last step under Configured Switch Interfaces.

11. Click the big green "+" to add switch interfaces.

12. Configure various fields as shown in the screenshot below. In this screenshot, port 1/2 on both leaf switches is connected to Storage Controller 2 using 40Gbps links. Instead of creating a new domain, the Bare Metal Domain created in the previous step (NetApp-AFF) is attached to the storage controller 2 as shown below.

13. Click Save.

14. Click Save again to finish configuring the switch interfaces.

15. Click Submit.

16. Optional: Repeat this procedure to configure any additional NetApp AFF storage controllers. For a uniform configuration, the Bare Metal Domain (NetApp-AFF) will be utilized for all the Storage Controllers.

Configuring Common Tenant for Management Access

This section details the steps to set up in-band management access in Tenant common. This design allows all the other tenant EPGs to access the common management segment for Core Services VMs such as AD/DNS.

1. In the APIC GUI, select Tenants > common.

2. In the left pane, expand Tenant common and Networking.

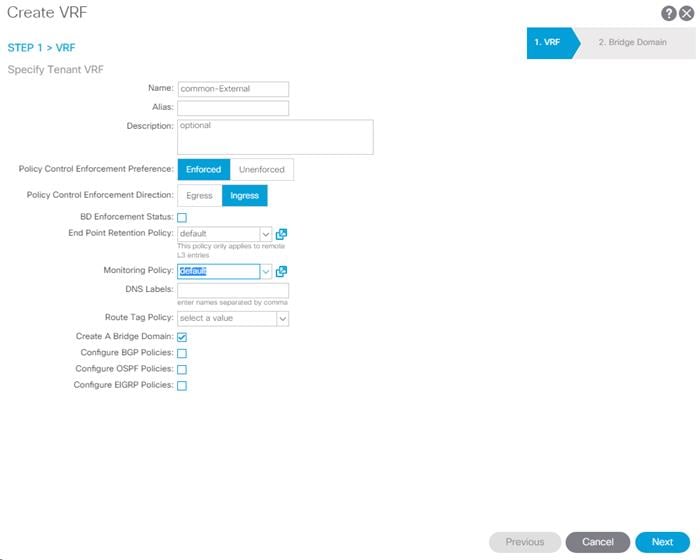

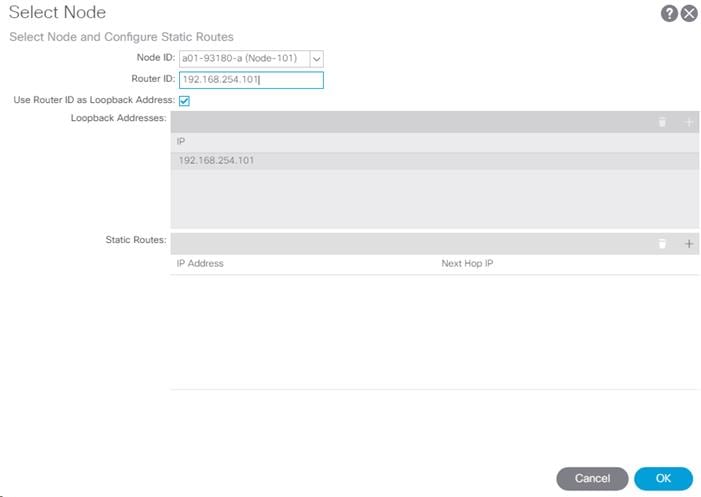

Create VRFs

To create VRFs, complete the following steps:

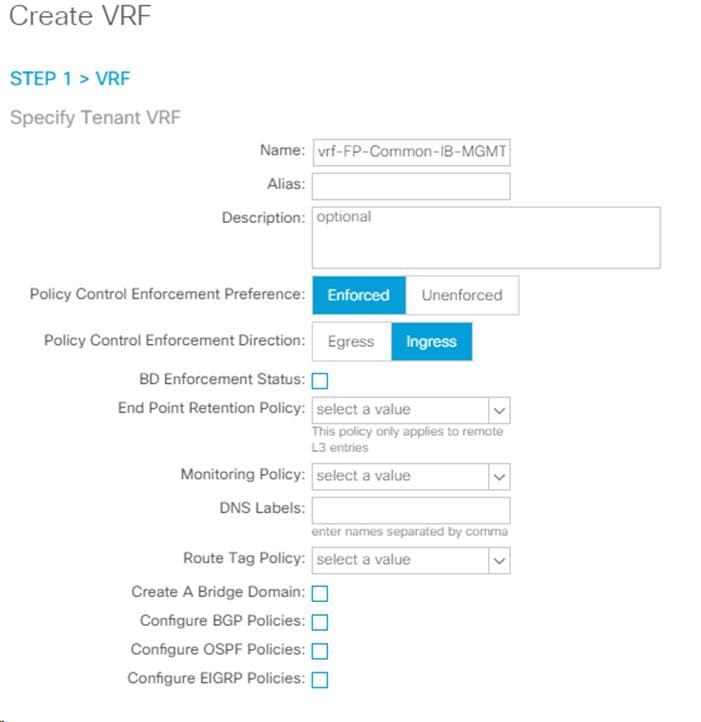

1. Right-click VRFs and select Create VRF.

2. Enter vrf-FP-Common-IB-MGMT as the name of the VRF.

3. Uncheck Create A Bridge Domain.

4. Click Finish.

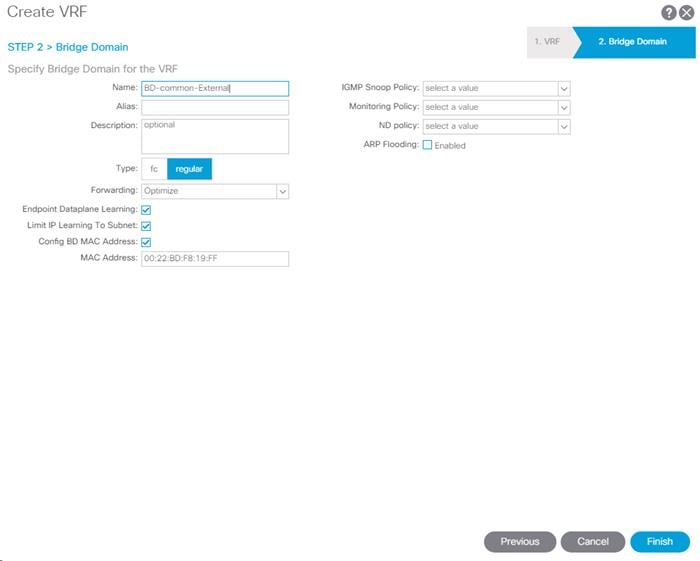

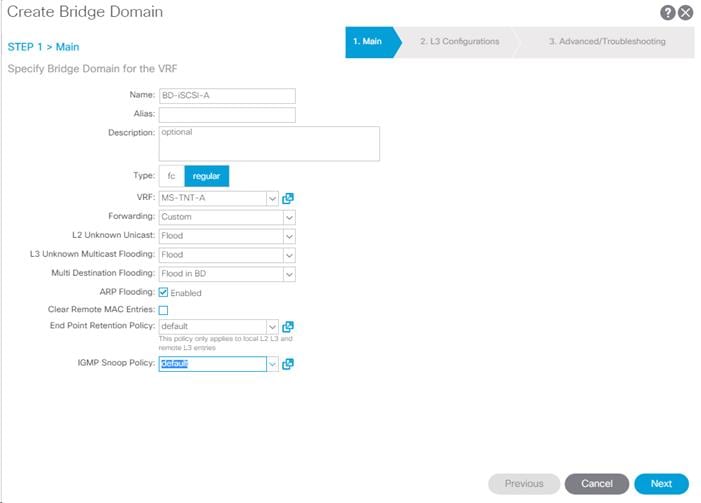

Create Bridge Domains

To create Bridge domains, complete the following steps:

1. In the APIC GUI, select Tenants > common.

2. In the left pane, expand Tenant common and Networking.

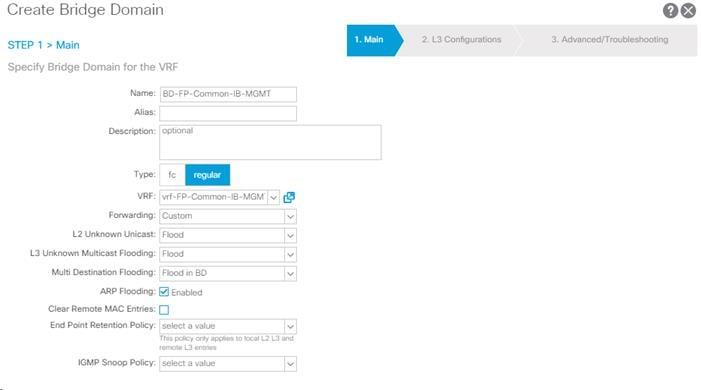

3. Right-click the Bridge Domain and select Create Bridge Domain.

4. Name the Bridge Domain BD-FP-Common-IB-MGMT.

5. Select vrf-FP-Common-IB-MGMT from the VRF drop-down list.

6. Select Custom under Forwarding and enable the flooding as shown in the screenshot below.

7. Click Next.

8. Do not change any configuration on the next screen (L3 Configurations). Select Next.

9. No changes are needed for Advanced/Troubleshooting. Click FINISH.
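The VRF and bridge domain created in the two procedures above can be sketched as one Tenant common request body. The fvCtx, fvBD, and fvRsCtx classes and the unkMacUcastAct/arpFlood attributes are assumed from the APIC object model, and reading "enable the flooding" as L2 unknown unicast flooding plus ARP flooding is an interpretation of the screenshot rather than something stated in the text.

```python
def common_ib_mgmt_payload(vrf="vrf-FP-Common-IB-MGMT", bd="BD-FP-Common-IB-MGMT"):
    """Tenant common with the IB-MGMT VRF and its bridge domain."""
    return {"fvTenant": {"attributes": {"name": "common"}, "children": [
        {"fvCtx": {"attributes": {"name": vrf}}},
        {"fvBD": {
            # Custom forwarding: flood unknown unicast and ARP (assumed encoding)
            "attributes": {"name": bd, "unkMacUcastAct": "flood", "arpFlood": "yes"},
            "children": [{"fvRsCtx": {"attributes": {"tnFvCtxName": vrf}}}],
        }},
    ]}}
```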

Create Application Profile

To create an Application profile, complete the following steps:

When the APIC-Controlled Microsoft Virtual Switch is used later in this document, for port-group naming, the Tenant name, Application Profile name, and EPG name are concatenated together to form the port-group name. Since the port-group name must be less than 64 characters, short names are used here for Tenant, Application Profile, and EPG.
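The length limit above is easy to check up front. This sketch concatenates the Tenant, Application Profile, and EPG names and rejects results of 64 characters or more; the "|" separator is an assumption for illustration, since the text does not state which delimiter is used.

```python
def port_group_name(tenant, app_profile, epg, separator="|", max_len=64):
    """Concatenate the names as described above and enforce the length limit."""
    name = separator.join((tenant, app_profile, epg))
    if len(name) >= max_len:  # the name must be less than 64 characters
        raise ValueError(f"{name!r} is {len(name)} characters; shorten one of the names")
    return name
```

With the names used in this section, port_group_name("common", "MS-IB-MGMT", "MS-Core-Services") yields a 34-character name, well within the limit.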

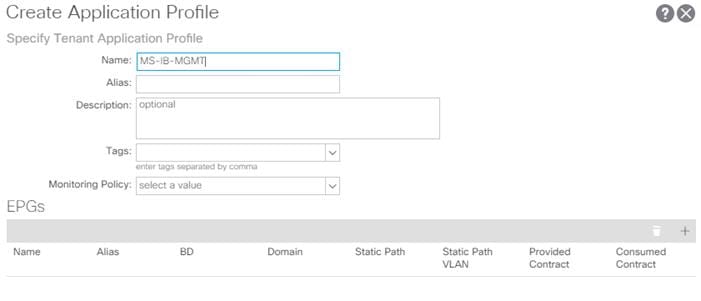

1. In the APIC GUI, select Tenants > common.

2. In the left pane, expand Tenant common and Application Profiles.

3. Right-click Application Profiles and select Create Application Profile.

4. Enter MS-IB-MGMT as the name of the application profile.

5. Click Submit.

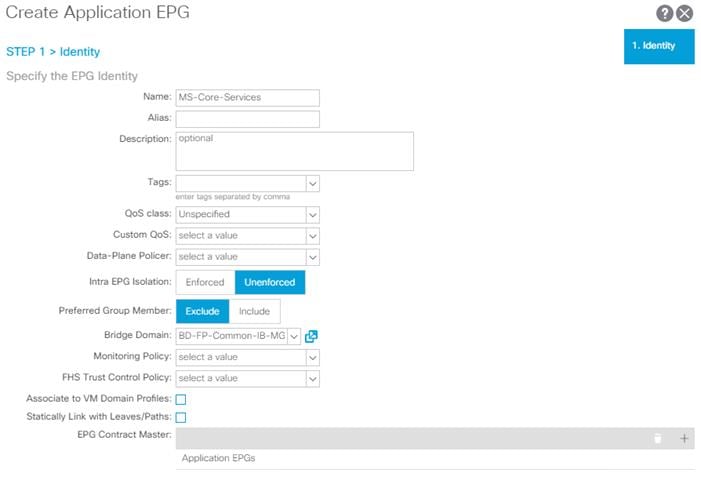

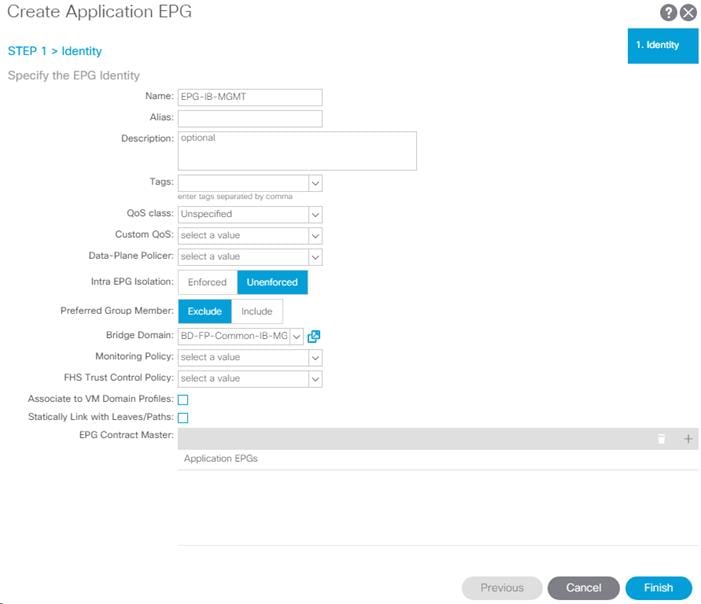

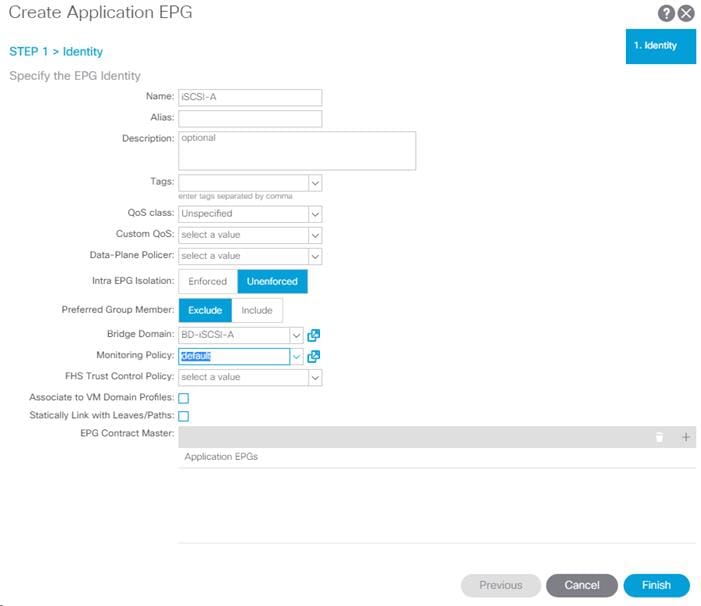

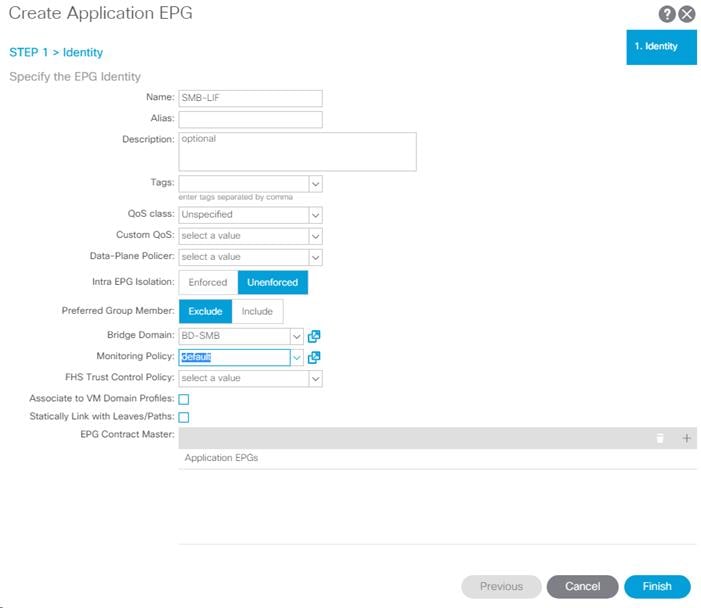

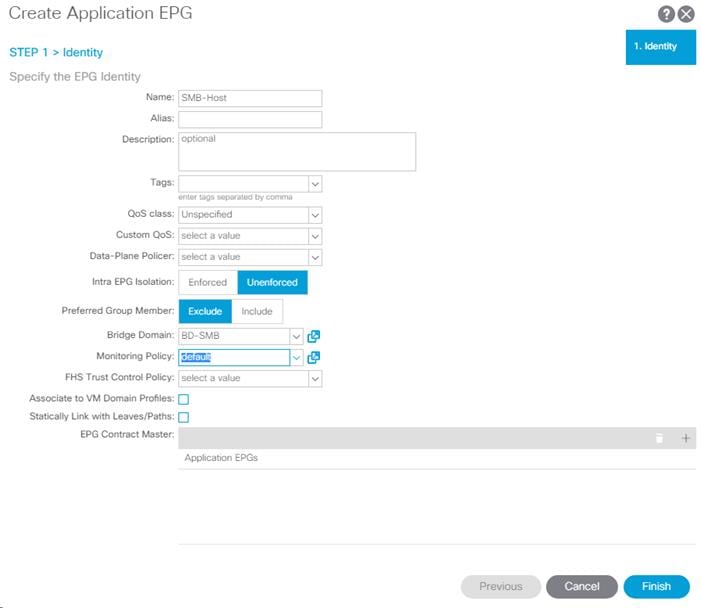

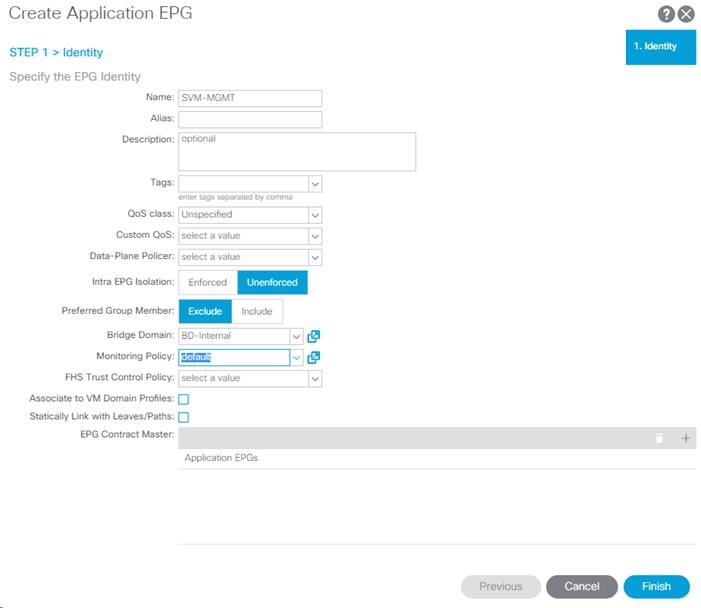

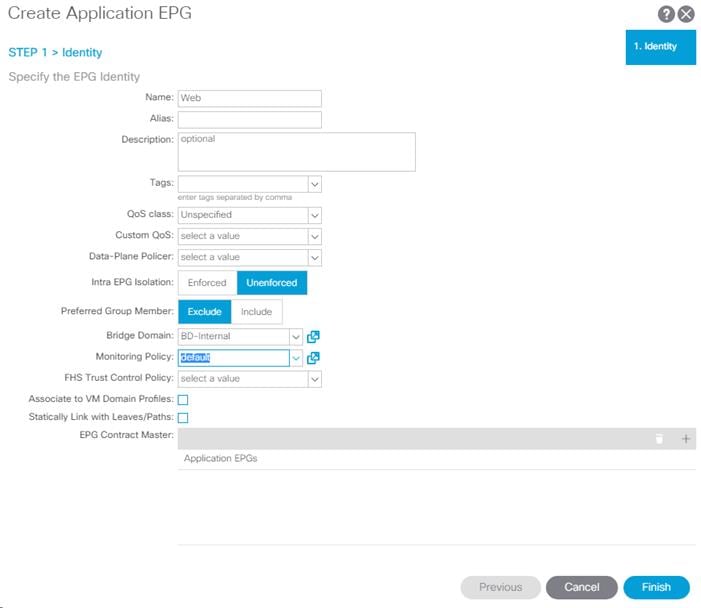

Create EPG

To create EPG, complete the following steps:

1. Expand the MS-IB-MGMT Application Profile and right-click the Application EPGs.

2. Select Create Application EPG.

3. Enter MS-Core-Services as the name of the EPG.

4. Select BD-FP-Common-IB-MGMT from the drop-down list for Bridge Domain.

5. Click Finish.

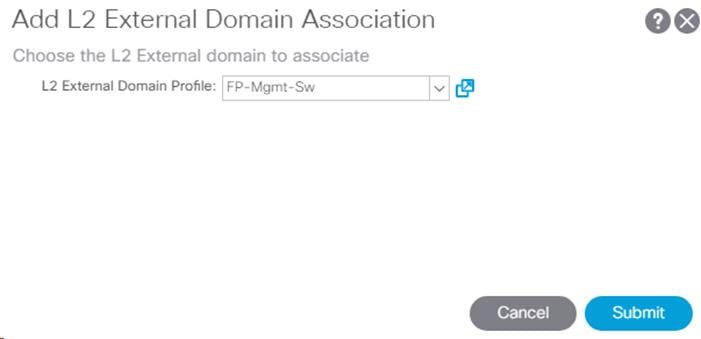

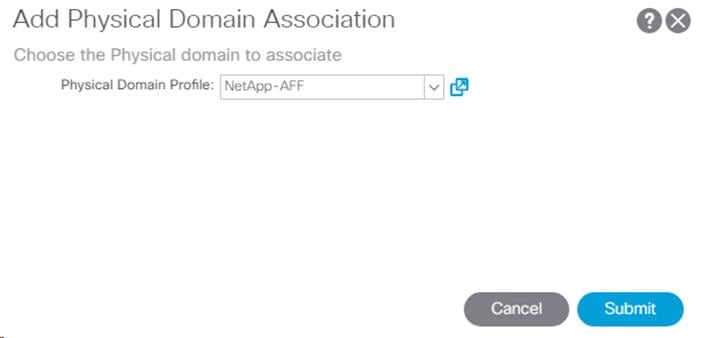

Set Domains

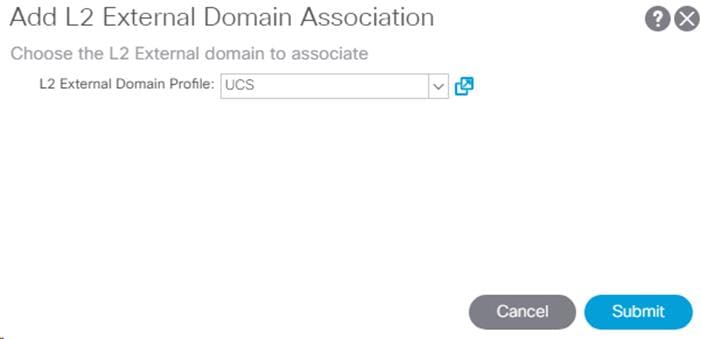

To set Domains, complete the following steps:

1. Expand the newly created EPG and click Domains.

2. Right-click Domains and select Add L2 External Domain Association.

3. Select the FP-Mgmt-Sw as the L2 External Domain Profile.

4. Click Submit.

5. Right-click Domains and select Add L2 External Domain Association.

6. Select the UCS as the L2 External Domain Profile.

7. Click Submit.
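The application profile, EPG, and the two L2 External Domain associations from the last three procedures can be sketched as one body. The fvAp, fvAEPg, fvRsBd, and fvRsDomAtt classes and the uni/l2dom-NAME domain DN format are assumptions about the APIC object model.

```python
def core_services_epg_payload(domain_dns=("uni/l2dom-FP-Mgmt-Sw", "uni/l2dom-UCS")):
    """MS-Core-Services EPG under the MS-IB-MGMT application profile in Tenant common."""
    epg_children = [{"fvRsBd": {"attributes": {"tnFvBDName": "BD-FP-Common-IB-MGMT"}}}]
    # One fvRsDomAtt per L2 External Domain association added in Set Domains
    epg_children += [{"fvRsDomAtt": {"attributes": {"tDn": dn}}} for dn in domain_dns]
    return {"fvAp": {
        "attributes": {"name": "MS-IB-MGMT"},
        "children": [{"fvAEPg": {"attributes": {"name": "MS-Core-Services"},
                                 "children": epg_children}}],
    }}
```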

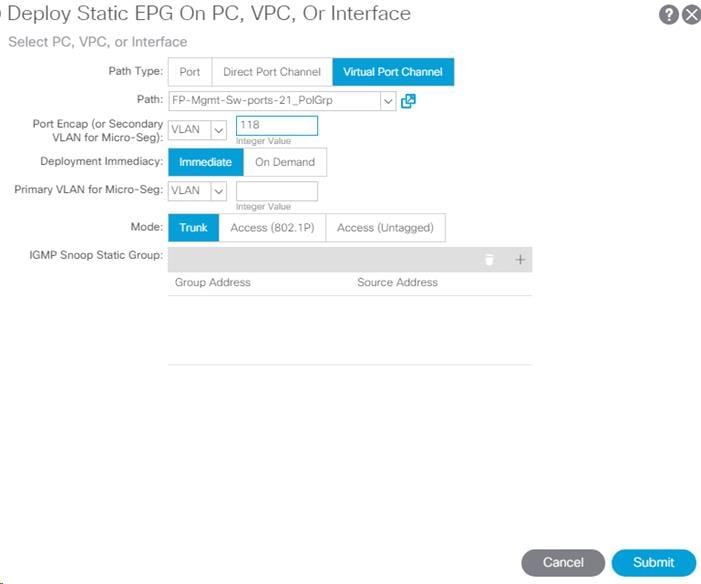

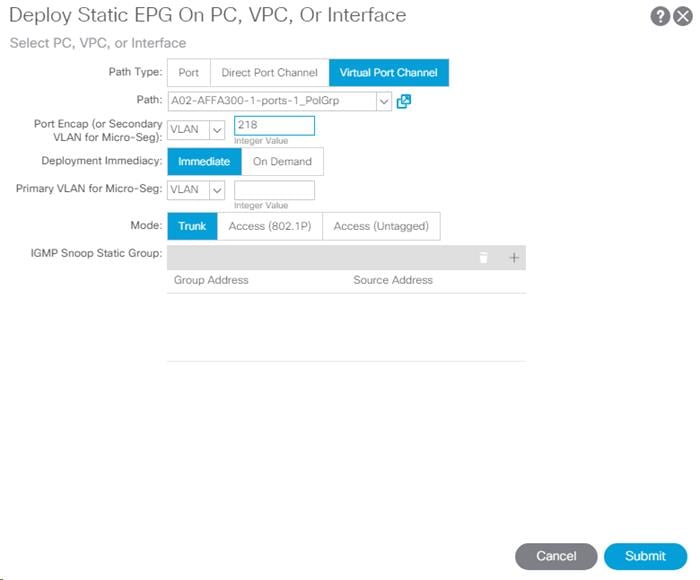

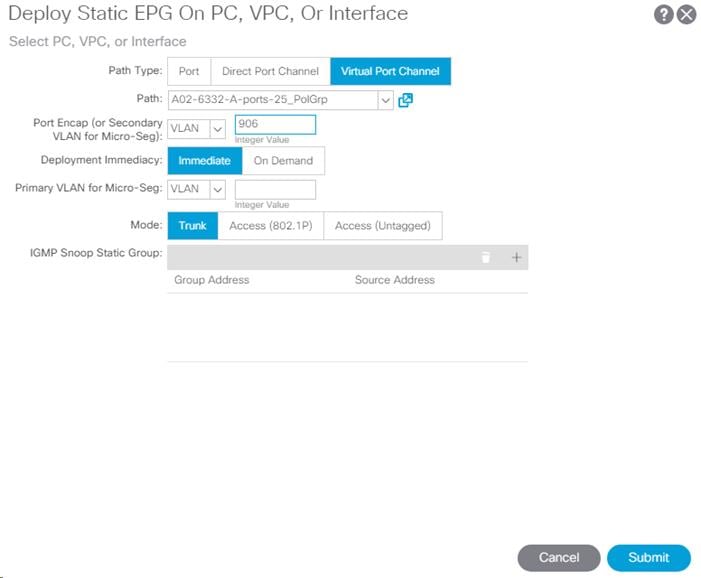

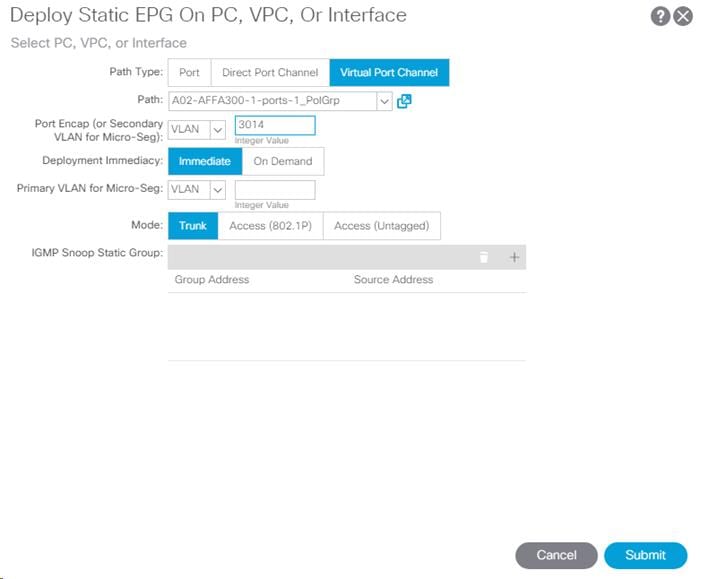

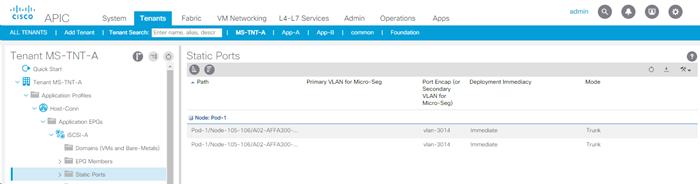

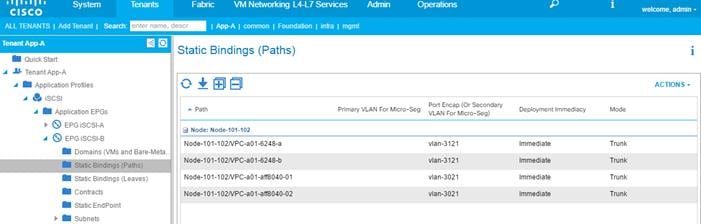

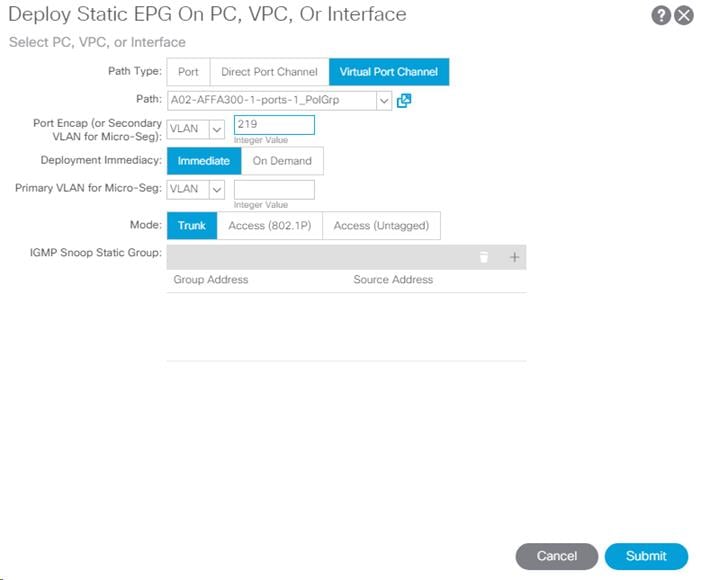

Set Static Ports

To set Static Ports, complete the following steps:

1. In the left pane, right-click Static Ports.

2. Select Deploy Static EPG on PC, VPC, or Interface.

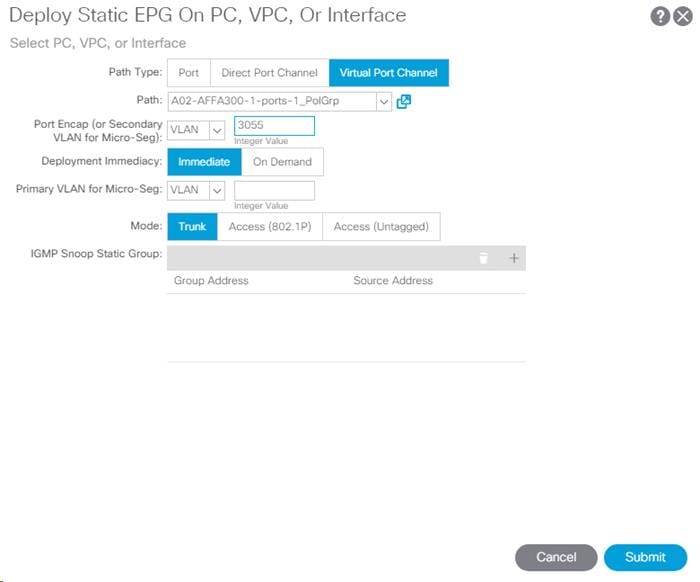

3. In the next screen, for the Path Type, select Virtual Port Channel and from the Path drop-down list, select the VPC for FP-Mgmt-Sw configured earlier.

4. Enter the IB-MGMT VLAN under Port Encap.

5. Change Deployment Immediacy to Immediate.

6. Set the Mode to Trunk.

7. Click Submit.

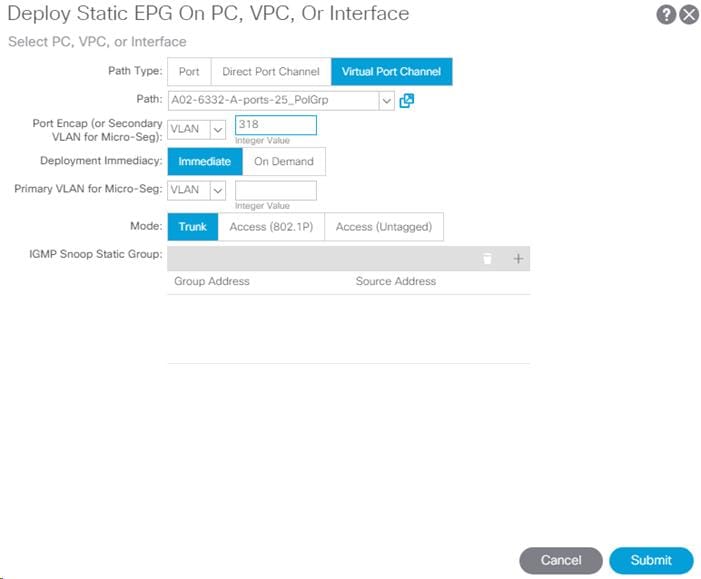

8. In the left pane, right-click Static Ports.

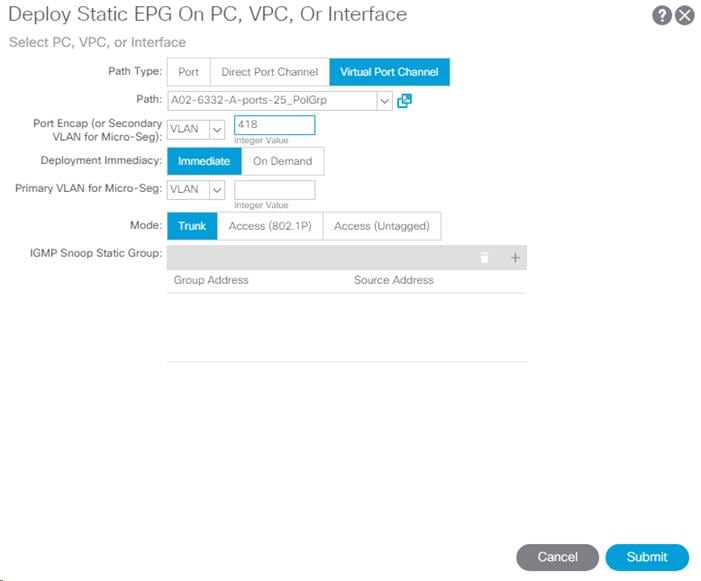

9. Select Deploy Static EPG on PC, VPC, or Interface.

10. In the next screen, for the Path Type, select Virtual Port Channel and from the Path drop-down list, select the VPC for UCS Fabric Interconnect A configured earlier.

11. Enter the UCS Core-Services VLAN under Port Encap.

This VLAN should be a different VLAN than the one entered above for the Management Switch.

12. Change Deployment Immediacy to Immediate.

13. Set the Mode to Trunk.

14. Click Submit.

15. In the left pane, right-click Static Ports.

16. Select Deploy Static EPG on PC, VPC, or Interface.

17. In the next screen, for the Path Type, select Virtual Port Channel and from the Path drop-down list, select the VPC for UCS Fabric Interconnect B configured earlier.

18. Enter the UCS MS-Core-Services VLAN under Port Encap.

This VLAN should be a different VLAN than the one entered above for the Management Switch.

19. Change Deployment Immediacy to Immediate.

20. Set the Mode to Trunk.

21. Click Submit.
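Each static port binding above combines a vPC path, a VLAN encapsulation, trunk mode, and immediate deployment. The sketch below builds one such binding. The fvRsPathAtt class, the topology/pod-1/protpaths-A-B/pathep-[NAME] path format, and the encodings "regular" for Trunk and "immediate" for Deployment Immediacy are assumptions about the APIC object model; the vPC policy group name in the example is hypothetical.

```python
def vpc_path_dn(leaf_a, leaf_b, vpc_policy_group, pod=1):
    """Path DN for a vPC; the lower node ID is listed first."""
    low, high = sorted((leaf_a, leaf_b))
    return f"topology/pod-{pod}/protpaths-{low}-{high}/pathep-[{vpc_policy_group}]"


def static_port_payload(leaf_a, leaf_b, vpc_policy_group, vlan_id):
    """Static EPG binding on a vPC: trunk mode, immediate deployment."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError(f"VLAN {vlan_id} is out of range")
    return {"fvRsPathAtt": {"attributes": {
        "tDn": vpc_path_dn(leaf_a, leaf_b, vpc_policy_group),
        "encap": f"vlan-{vlan_id}",
        "instrImedcy": "immediate",  # Deployment Immediacy: Immediate
        "mode": "regular",           # Mode: Trunk
    }}}
```

For example, static_port_payload(105, 106, "vpc-FI-A", 318) describes the Fabric Interconnect A binding for the MS-Core-Services VLAN from Table 4.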

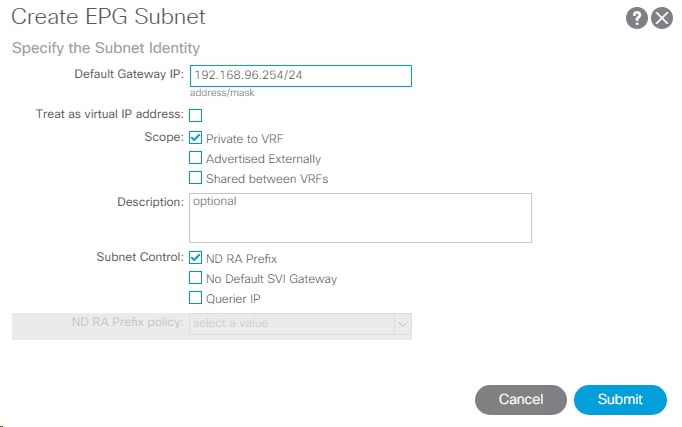

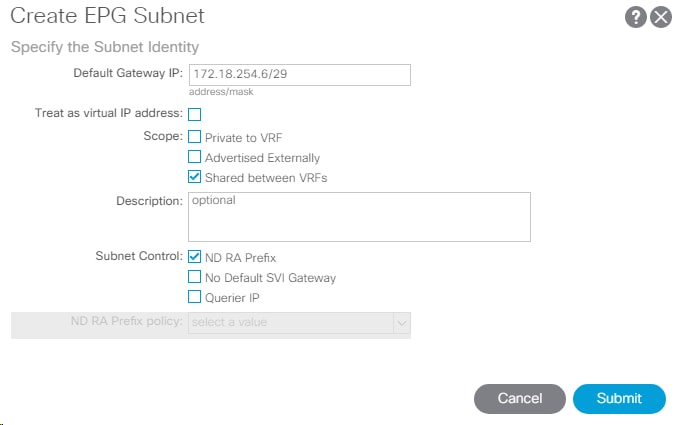

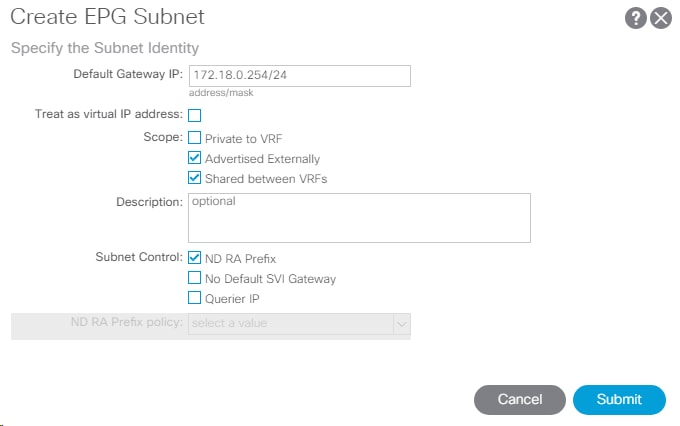

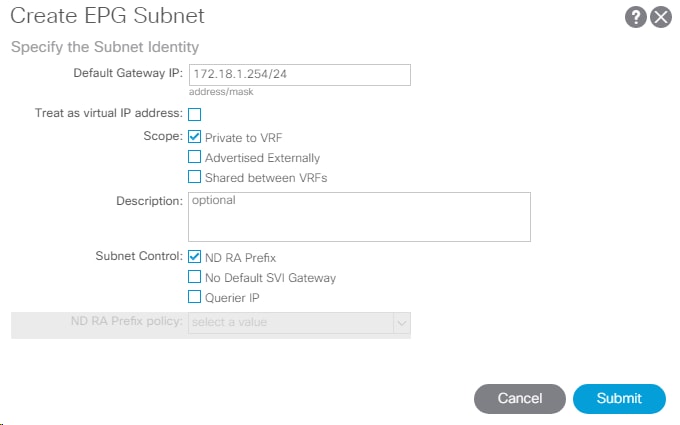

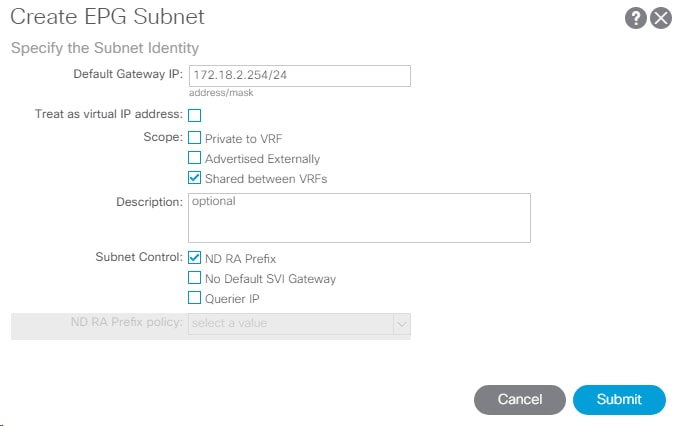

Create EPG Subnet

A subnet gateway for this Core Services EPG provides Layer 3 connectivity to Tenant subnets. To create an EPG Subnet, complete the following steps:

1. In the left pane, right-click Subnets and select Create EPG Subnet.

2. In CIDR notation, enter an IP address and subnet mask to serve as the gateway within the ACI fabric for routing between the Core Services subnet and Tenant subnets. This IP should be different from the IB-MGMT subnet gateway. In this lab validation, 10.1.118.1/24 is the IB-MGMT subnet gateway and is configured externally to the ACI fabric; 10.1.118.254/24 is used for the EPG subnet gateway. Set the Scope of the subnet to Shared between VRFs.

3. Click Submit to create the Subnet.
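Step 2 depends on two addresses that share one subnet while remaining distinct. A short check with Python's standard ipaddress module, using the lab values from step 2, is sketched below together with the resulting subnet body; the fvSubnet class and mapping Shared between VRFs to scope "shared" are assumptions about the APIC object model.

```python
import ipaddress


def epg_subnet_payload(gateway_cidr, external_gateway):
    """Validate the in-fabric gateway against the external IB-MGMT gateway."""
    gw = ipaddress.ip_interface(gateway_cidr)
    external = ipaddress.ip_address(external_gateway)
    if external not in gw.network:
        raise ValueError("both gateways must be on the IB-MGMT subnet")
    if gw.ip == external:
        raise ValueError("the EPG gateway must differ from the external IB-MGMT gateway")
    return {"fvSubnet": {"attributes": {"ip": str(gw), "scope": "shared"}}}
```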

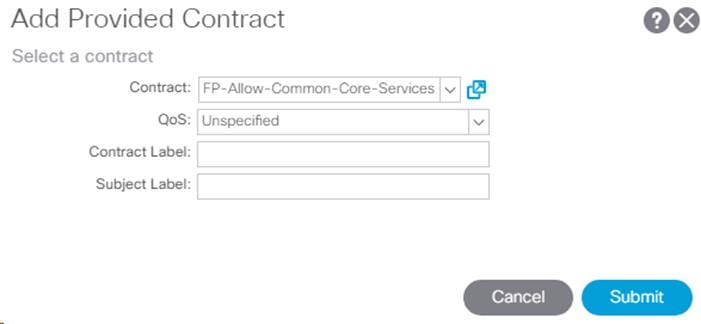

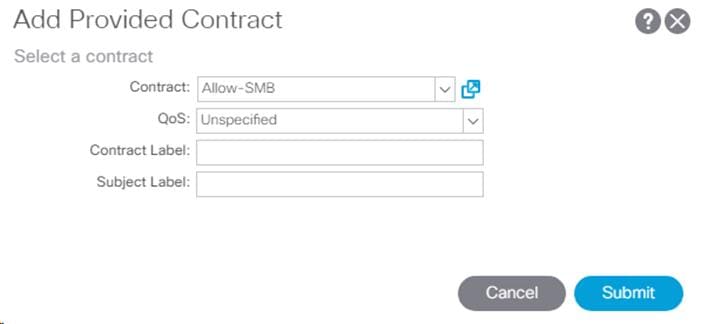

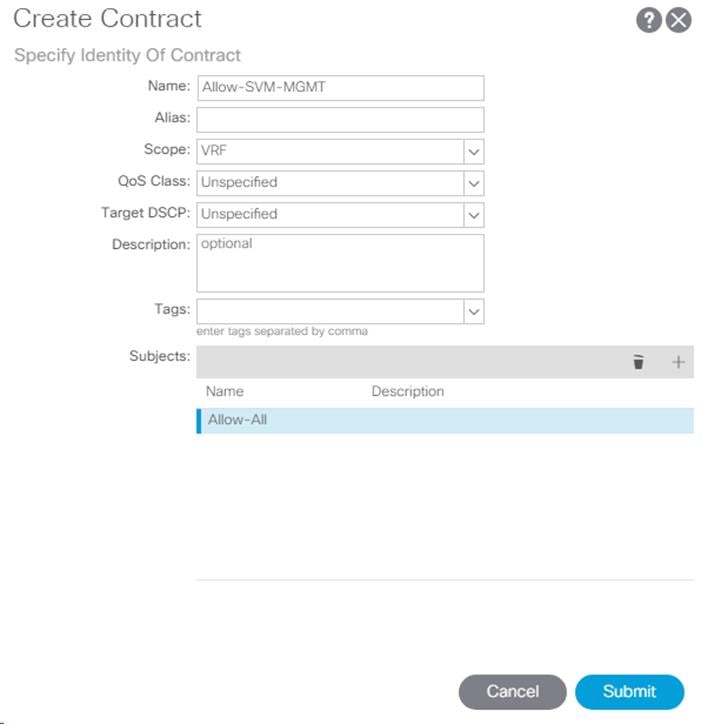

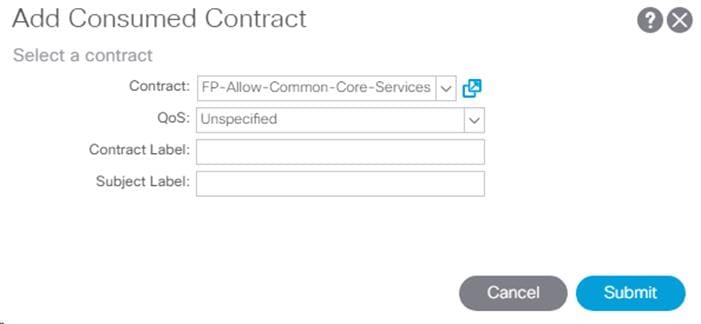

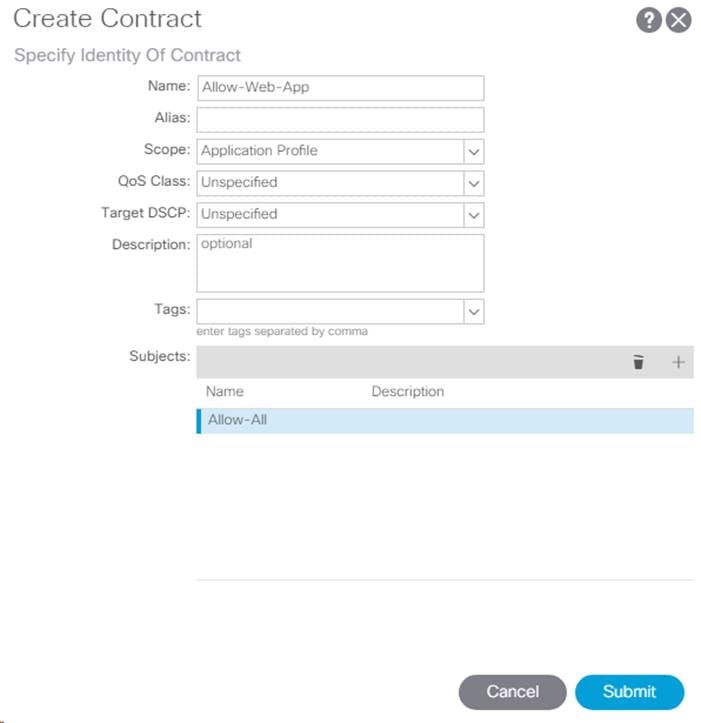

Create Provided Contract

To create Provided Contract, complete the following steps:

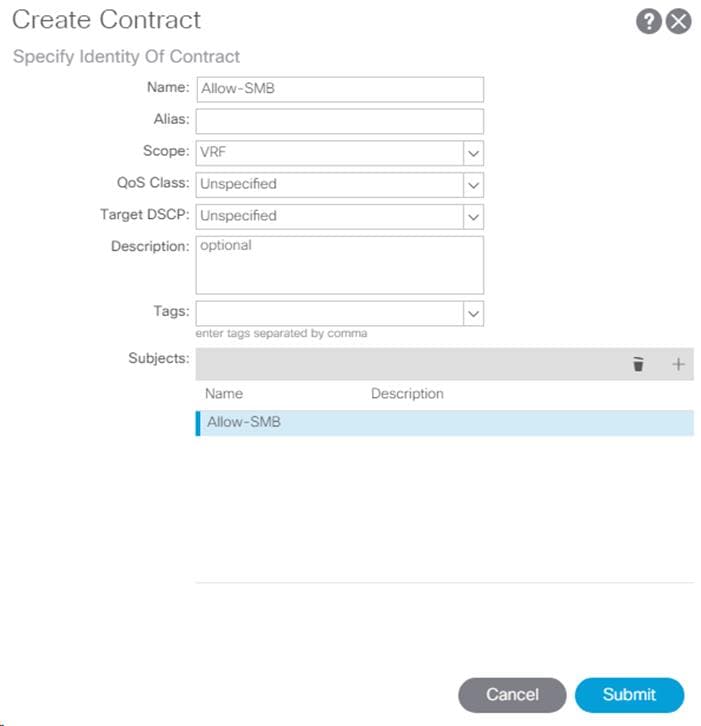

1. In the left pane, right-click Contracts and select Add Provided Contract.

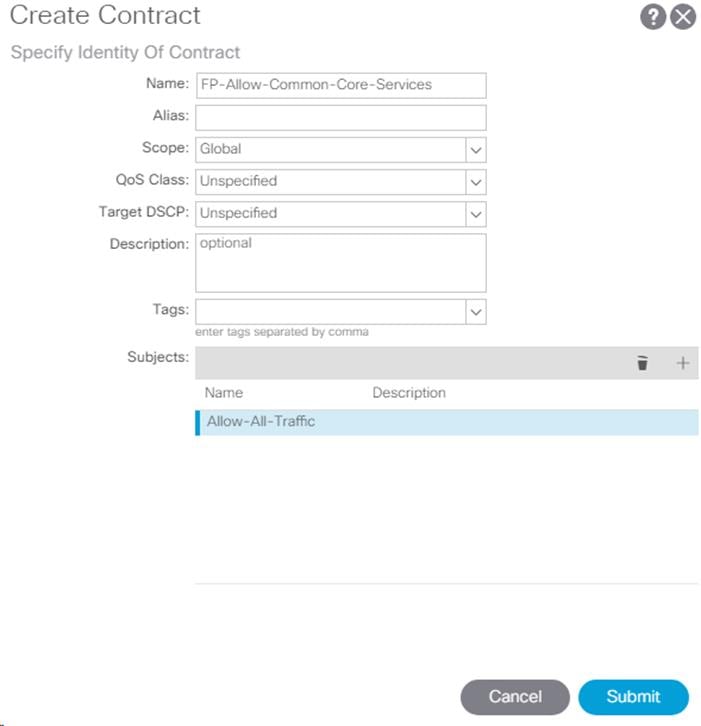

2. In the Add Provided Contract window, select Create Contract from the drop-down list.

3. Name the Contract FP-Allow-Common-Core-Services.

4. Set the scope to Global.

5. Click + to add a Subject to the Contract.

The following steps create a contract to allow all the traffic between various tenants and the common management segment. You are encouraged to limit the traffic by setting restrictive filters.

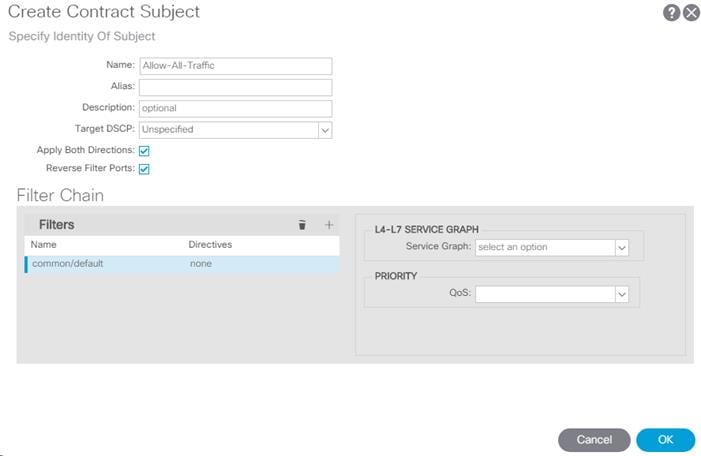

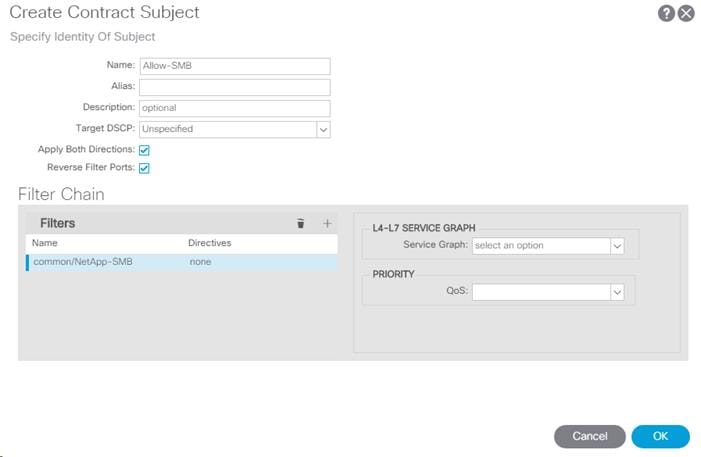

6. Name the subject Allow-All-Traffic.

7. Click + under Filter Chain to add a Filter.

8. From the drop-down Name list, select common/default.

9. In the Create Contract Subject window, click Update to add the Filter Chain to the Contract Subject.

10. Click OK to add the Contract Subject.

The Contract Subject Filter Chain can be modified later.

11. Click Submit to finish creating the Contract.

12. Click Submit to finish adding a Provided Contract.
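The contract built in steps 2 through 11, and the relation that makes MS-Core-Services its provider, can be sketched as follows. The vzBrCP, vzSubj, vzRsSubjFiltAtt, and fvRsProv classes are assumptions about the APIC object model. A more restrictive filter name could be passed in place of default, in line with the recommendation above.

```python
def allow_all_contract_payload(name="FP-Allow-Common-Core-Services", filter_name="default"):
    """Global-scope contract with one subject that uses the given filter."""
    return {"vzBrCP": {
        "attributes": {"name": name, "scope": "global"},
        "children": [{"vzSubj": {
            "attributes": {"name": "Allow-All-Traffic"},
            "children": [{"vzRsSubjFiltAtt": {"attributes": {"tnVzFilterName": filter_name}}}],
        }}],
    }}


def provided_contract(name="FP-Allow-Common-Core-Services"):
    """Relation added under the MS-Core-Services EPG to provide the contract."""
    return {"fvRsProv": {"attributes": {"tnVzBrCPName": name}}}
```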

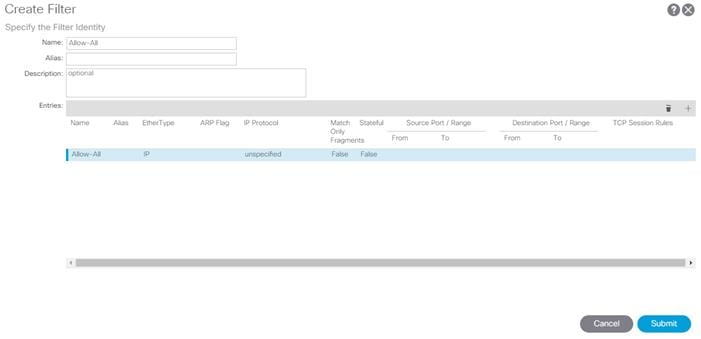

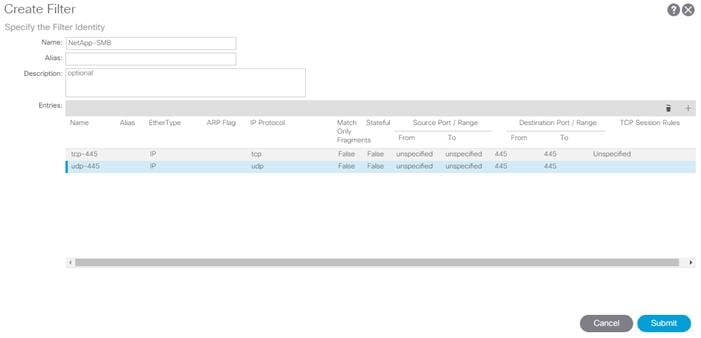

Create Security Filters in Tenant Common

To create Security Filters for SMB/CIFS with NetApp Storage and for iSCSI, complete the following steps. This section can also be used to set up other filters necessary to your environment.

1. In the APIC GUI, at the top select Tenants > common.

2. On the left, expand Tenant common, Security Policies, and Filters.

3. Right-click Filters and select Create Filter.

4. Name the filter Allow-All.

5. Click the + sign to add an Entry to the Filter.

6. Name the Entry Allow-All and select EtherType IP.

7. Leave the IP Protocol set at Unspecified.

8. Click UPDATE to add the Entry.

9. Click SUBMIT to complete adding the Filter.

10. Right-click Filters and select Create Filter.

11. Name the filter NetApp-SMB.

12. Click the + sign to add an Entry to the Filter.

13. Name the Entry tcp-445 and select EtherType IP.

14. Select the tcp IP Protocol and enter 445 for From and To under the Destination Port / Range by backspacing over Unspecified and entering the number.

15. Click UPDATE to add the Entry.

16. Click the + sign to add another Entry to the Filter.

17. Name the Entry udp-445 and select EtherType IP.

18. Select the tcp IP Protocol and enter 445 for From and To under the Destination Port / Range by backspacing over Unspecified and entering the number.

19. Click UPDATE to add the Entry.

20. Click SUBMIT to complete adding the Filter.

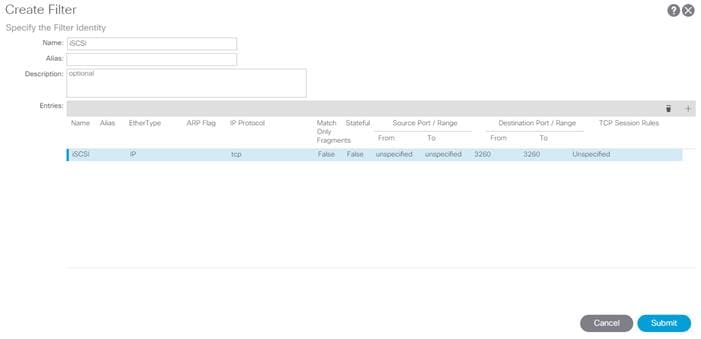

21. Right-click Filters and select Create Filter.

22. Name the filter iSCSI.

23. Click the + sign to add an Entry to the Filter.

24. Name the Entry iSCSI and select EtherType IP.

25. Select the TCP IP Protocol and enter 3260 for From and To under the Destination Port / Range by backspacing over Unspecified and entering the number.

26. Click UPDATE to add the Entry.

27. Click SUBMIT to complete adding the Filter.

By adding these Filters to Tenant common, they can be used from within any Tenant in the ACI Fabric.
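The three filters share one pattern: an IP entry that is either unrestricted or limited to a single destination port. In this sketch the vzFilter and vzEntry classes and the etherT/prot/dFromPort/dToPort attributes are assumptions about the APIC object model; the udp-445 entry is given the udp protocol to match its name.

```python
def filter_payload(name, entries):
    """entries: (entry_name, ip_protocol, destination_port); "unspecified" matches all IP."""
    children = []
    for entry_name, protocol, port in entries:
        attrs = {"name": entry_name, "etherT": "ip"}
        if protocol != "unspecified":
            # Single-port range: From and To carry the same value, as in the steps above
            attrs.update(prot=protocol, dFromPort=str(port), dToPort=str(port))
        children.append({"vzEntry": {"attributes": attrs}})
    return {"vzFilter": {"attributes": {"name": name}, "children": children}}


COMMON_FILTERS = [
    filter_payload("Allow-All", [("Allow-All", "unspecified", None)]),
    filter_payload("NetApp-SMB", [("tcp-445", "tcp", 445), ("udp-445", "udp", 445)]),
    filter_payload("iSCSI", [("iSCSI", "tcp", 3260)]),
]
```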

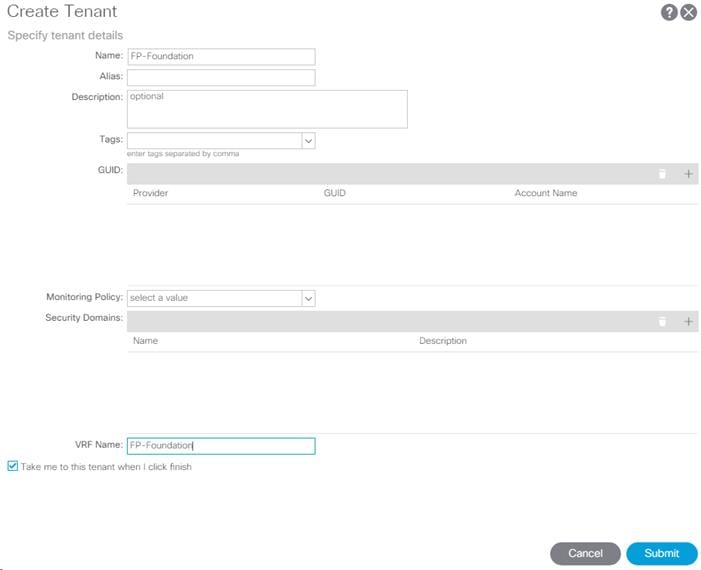

Deploy FP-Foundation Tenant

This section details the steps for creating the Foundation Tenant in the ACI Fabric. This tenant will host infrastructure connectivity for the compute (Microsoft Hyper-V on UCS nodes) and the storage environments. To deploy the FP-Foundation Tenant, complete the following steps:

1. In the APIC GUI, select Tenants > Add Tenant.

2. Name the Tenant as FP-Foundation.

3. For the VRF Name, enter FP-Foundation. Keep the check box “Take me to this tenant when I click finish” checked.

4. Click Submit to finish creating the Tenant.

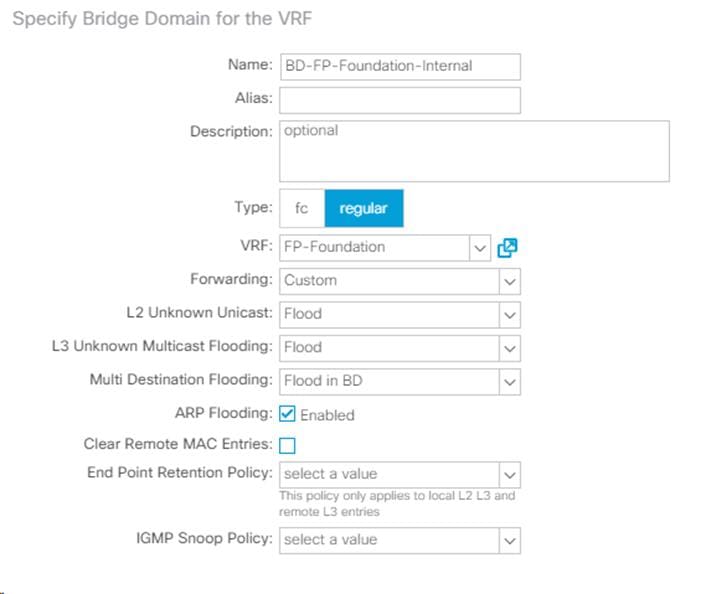

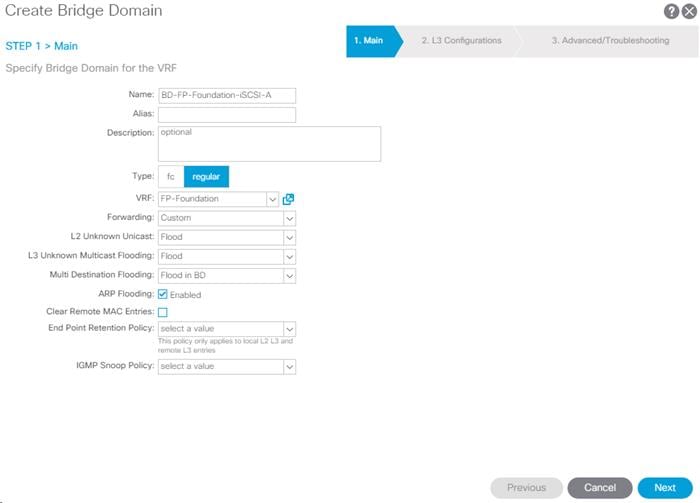

Create Bridge Domain

To create a Bridge Domain, complete the following steps:

1. In the left pane, expand Tenant FP-Foundation and Networking.

2. Right-click Bridge Domains and select Create Bridge Domain.

3. Name the Bridge Domain BD-FP-Foundation-Internal.

4. Select FP-Foundation from the VRF drop-down list.

5. Select Custom under Forwarding and enable the flooding.

6. Click Next.

7. Do not change any configuration on the next screen (L3 Configurations). Select Next.

8. No changes are needed for Advanced/Troubleshooting. Click Finish to finish creating Bridge Domain.

Create Application Profile for IB-Management Access

To create an application profile for IB-Management Access, complete the following steps:

1. In the left pane, expand tenant FP-Foundation, right-click Application Profiles and select Create Application Profile.

2. Name the Application Profile as AP-IB-MGMT and click Submit to complete adding the Application Profile.

Create EPG for IB-MGMT Access

This EPG will be used for Hyper-V hosts and management virtual machines that are in the IB-MGMT subnet, but that do not provide ACI fabric Core Services. For example, AD server VMs could be placed in the Core Services EPG defined earlier to provide DNS services to tenants in the Fabric. The SCVMM VM can be placed in the IB-MGMT EPG; it will have access to the Core Services VMs, but will not be reachable from Tenant VMs.

To create EPG for IB-MGMT access, complete the following steps:

1. In the left pane, expand Application Profiles and AP-IB-MGMT, right-click Application EPGs, and select Create Application EPG.

2. Name the EPG EPG-IB-MGMT.

3. From the Bridge Domain drop-down list, select Bridge Domain BD-FP-Common-IB-MGMT from Tenant common.

4. Click Finish to complete creating the EPG.

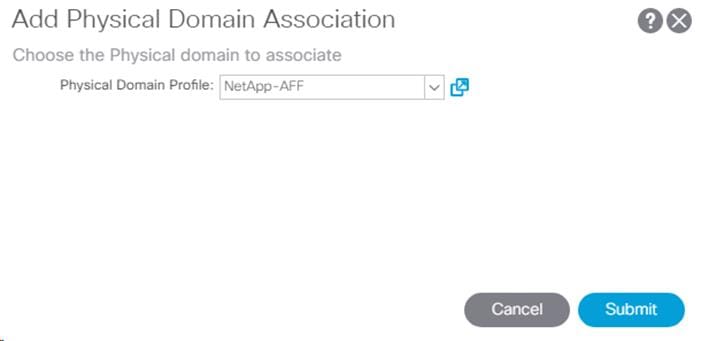

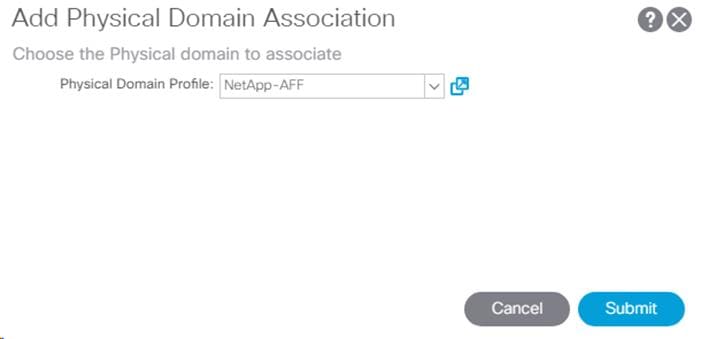

5. In the left menu, expand the newly created EPG, right-click Domains and select Add Physical Domain Association.

6. Select the NetApp-AFF Physical Domain Profile and click Submit.

7. In the left menu, right-click Static Ports and select Deploy Static EPG on PC, VPC, or Interface.

8. Select the Virtual Port Channel Path Type, then for Path select the vPC for the first NetApp AFF storage controller.

9. For Port Encap leave VLAN selected and fill in the storage IB-MGMT VLAN ID.

10. Set the Deployment Immediacy to Immediate and click Submit.

11. Repeat steps 7-10 to add the Static Port mapping for the second NetApp AFF storage controller.

12. In the left menu, right-click Domains and select Add L2 External Domain Association.

13. Select the UCS L2 External Domain Profile and click Submit.

14. In the left menu, right-click Static Ports and select Deploy Static EPG on PC, VPC, or Interface.

15. Select the Virtual Port Channel Path Type, then for Path select the vPC for the first UCS Fabric Interconnect.

16. For Port Encap leave VLAN selected and fill in the UCS IB-MGMT VLAN ID.

17. Set the Deployment Immediacy to Immediate and click Submit.

18. Repeat steps 14-17 to add the Static Port mapping for the second UCS Fabric Interconnect.

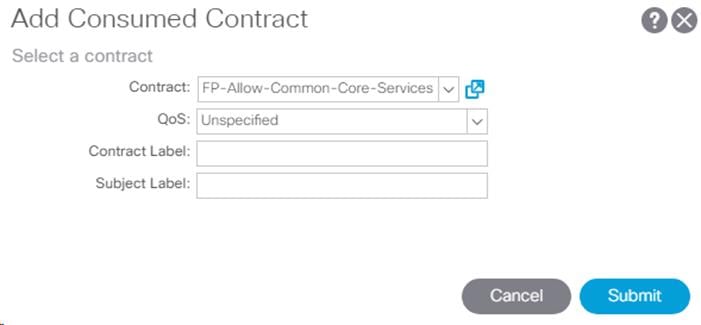

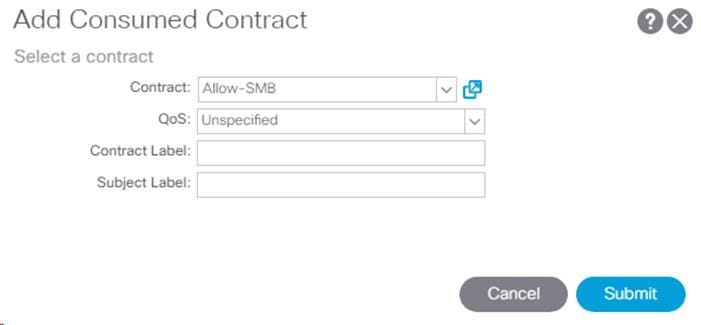

19. In the left menu, right-click Contracts and select Add Consumed Contract.

20. From the drop-down list for the Contract, select FP-Allow-Common-Core-Services from Tenant common.

21. Click Submit.

This EPG gives the Hyper-V hosts, and the VMs that do not provide Core Services, access to the existing in-band management network.
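The GUI steps for EPG-IB-MGMT map to a single APIC object tree. The minimal Python sketch below builds that tree as a JSON body and sends nothing. It rests on several assumptions. The domain object names (NetApp-AFF, UCS), the leaf pair 101-102, and the vPC policy group names in the path DNs are hypothetical placeholders. The IB-MGMT VLAN IDs are not given in this section, so they are parameters. The mode value "regular" is the API equivalent of the GUI Trunk setting.

```python
import json

# Hypothetical vPC path DNs: the leaf pair (101-102) and the vPC interface
# policy group names are placeholders for the values used in your fabric.
STORAGE_VPC_PATHS = [
    "topology/pod-1/protpaths-101-102/pathep-[VPC-AFF-Node01]",
    "topology/pod-1/protpaths-101-102/pathep-[VPC-AFF-Node02]",
]
UCS_VPC_PATHS = [
    "topology/pod-1/protpaths-101-102/pathep-[VPC-UCS-FI-A]",
    "topology/pod-1/protpaths-101-102/pathep-[VPC-UCS-FI-B]",
]


def static_path(tdn: str, vlan: int) -> dict:
    """Static port binding with Immediate deployment; 'regular' is the API value for Trunk."""
    return {"fvRsPathAtt": {"attributes": {
        "tDn": tdn,
        "encap": f"vlan-{vlan}",
        "instrImmedcy": "immediate",
        "mode": "regular",
    }}}


def ib_mgmt_epg_payload(storage_vlan: int, ucs_vlan: int) -> dict:
    """EPG-IB-MGMT with its bridge domain, domains, consumed contract, and static ports."""
    children = [
        # BD-FP-Common-IB-MGMT lives in tenant common; named relations that are
        # not found in the local tenant are resolved against tenant common.
        {"fvRsBd": {"attributes": {"tnFvBDName": "BD-FP-Common-IB-MGMT"}}},
        # Assumed domain object names: physical domain NetApp-AFF, L2 external domain UCS.
        {"fvRsDomAtt": {"attributes": {"tDn": "uni/phys-NetApp-AFF"}}},
        {"fvRsDomAtt": {"attributes": {"tDn": "uni/l2dom-UCS"}}},
        {"fvRsCons": {"attributes": {"tnVzBrCPName": "FP-Allow-Common-Core-Services"}}},
    ]
    children += [static_path(p, storage_vlan) for p in STORAGE_VPC_PATHS]
    children += [static_path(p, ucs_vlan) for p in UCS_VPC_PATHS]
    epg = {"fvAEPg": {"attributes": {"name": "EPG-IB-MGMT"}, "children": children}}
    ap = {"fvAp": {"attributes": {"name": "AP-IB-MGMT"}, "children": [epg]}}
    return {"fvTenant": {"attributes": {"name": "FP-Foundation"}, "children": [ap]}}


if __name__ == "__main__":
    # The storage and UCS IB-MGMT VLAN IDs below are placeholders.
    print(json.dumps(ib_mgmt_epg_payload(storage_vlan=13, ucs_vlan=113), indent=2))
```

All bindings for the EPG sit in one tree, so the configuration can be reviewed as a unit before it is posted.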

Create Application Profile for Host Connectivity

To create an application profile for host connectivity, complete the following steps:

1. In the left pane, under the Tenant FP-Foundation, right-click Application Profiles and select Create Application Profile.

2. Name the Profile AP-Host-Connectivity and click Submit to complete adding the Application Profile.

The following EPGs and the corresponding mappings will be created under this application profile.

Refer to Table 6 for the information required during the following configuration. Items marked by { } must be updated according to Table 6. Because all storage interfaces on a single interface group on a NetApp AFF A300 share the same MAC address, a different bridge domain must be used for each storage EPG.

Table 6 EPGs and mappings for AP-Host-Connectivity

| EPG Name | Bridge Domain | Domain | Static Port - Compute | Static Port - Storage |
|---|---|---|---|---|
| EPG-MS-LVMN | BD-FP-Foundation-Internal | L2 External: UCS | VPC for all UCS FIs, VLAN 906 | N/A |
| EPG-MS-Clust | BD-FP-Foundation-Internal | L2 External: UCS | VPC for all UCS FIs, VLAN 907 | N/A |
| EPG-Infra-iSCSI-A | BD-FP-Foundation-iSCSI-A | L2 External: UCS; Physical: NetApp-AFF | VPC for all UCS FIs, VLAN 3113 | VPC for all NetApp AFFs |
| EPG-Infra-iSCSI-B | BD-FP-Foundation-iSCSI-B | L2 External: UCS; Physical: NetApp-AFF | VPC for all UCS FIs, VLAN 3123 | VPC for all NetApp AFFs |
| EPG-Infra-SMB | BD-FP-Foundation-SMB | L2 External: UCS; Physical: NetApp-AFF | VPC for all UCS FIs, VLAN 3153 | VPC for all NetApp AFFs |
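The bridge domain rule from the note above can be checked mechanically before anything is configured. The minimal Python sketch below takes the Table 6 rows and flags any bridge domain shared by more than one EPG that has NetApp static ports. The row format and the function name shared_storage_bds are hypothetical. Compute-only EPGs, such as the two that share BD-FP-Foundation-Internal, are deliberately ignored.

```python
from collections import defaultdict

# Rows transcribed from Table 6: (EPG name, bridge domain, has NetApp static ports)
TABLE_6 = [
    ("EPG-MS-LVMN", "BD-FP-Foundation-Internal", False),
    ("EPG-MS-Clust", "BD-FP-Foundation-Internal", False),
    ("EPG-Infra-iSCSI-A", "BD-FP-Foundation-iSCSI-A", True),
    ("EPG-Infra-iSCSI-B", "BD-FP-Foundation-iSCSI-B", True),
    ("EPG-Infra-SMB", "BD-FP-Foundation-SMB", True),
]


def shared_storage_bds(rows):
    """Return {bridge domain: [EPGs]} for each BD used by more than one storage EPG.

    Storage interfaces on one interface group share a MAC address, so two
    storage EPGs in one BD would make the fabric see that MAC in two EPGs.
    """
    by_bd = defaultdict(list)
    for epg, bd, has_storage in rows:
        if has_storage:
            by_bd[bd].append(epg)
    return {bd: epgs for bd, epgs in by_bd.items() if len(epgs) > 1}


if __name__ == "__main__":
    conflicts = shared_storage_bds(TABLE_6)
    print("OK" if not conflicts else f"Shared storage BDs: {conflicts}")
```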

The MS-LVMN, MS-Cluster and MS-Infra-SMB VLANs are configured in Cisco UCS so that they are available, if needed, on the manually created Hyper-V virtual switch. Each of these EPGs should be created. Configuring the VLAN and the UCS static port mapping for them is optional, and configuring them has no negative effect.

Create Bridge Domains and EPGs

To create bridge domains and EPGs, complete the following steps:

1. For each row in Table 6, if the Bridge Domain does not already exist, expand Networking > Bridge Domains in the left pane.

2. Right-click Bridge Domains and select Create Bridge Domain.

3. Name the Bridge Domain {BD-FP-Foundation-iSCSI-A}.

4. Select the FP-Foundation VRF.

5. Select Custom for Forwarding and set up forwarding as shown in the screenshot.

6. Click Next.

7. Do not change any configuration on the next screen (L3 Configurations). Click Next.

8. No changes are needed for Advanced/Troubleshooting. Click Finish to create the Bridge Domain.

9. In the left pane, expand Application Profiles > AP-Host-Connectivity. Right-click on Application EPGs and select Create Application EPG.

10. Name the EPG {EPG-MS-LVMN}.

11. From the Bridge Domain drop-down list, select the Bridge Domain from the table.

12. Click Finish to complete creating the EPG.

13. In the left pane, expand the Application EPGs and EPG {EPG-MS-LVMN}.

14. Right-click Domains and select Add L2 External Domain Association.

15. From the drop-down list, select the previously defined {UCS} L2 External Domain Profile.

16. Click Submit to complete the L2 External Domain Association.

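17. Repeat the domain association steps (14-16) to add the remaining EPG-specific domains from Table 6. For the storage EPGs, use Add Physical Domain Association to add the NetApp-AFF Physical Domain.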

18. Right-click Static Ports and select Deploy Static EPG on PC, VPC, or Interface.

19. In the Deploy Static EPG on PC, VPC, or Interface window, select the Virtual Port Channel Path Type.

20. From the drop-down list, select the appropriate VPCs.

21. For Port Encap, enter the VLAN ID {906} (see Table 3).

22. Select Immediate for Deployment Immediacy and for Mode select Trunk.

23. Click Submit to complete adding the Static Path Mapping.

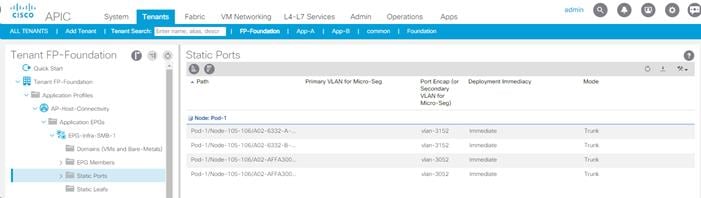

24. Repeat the above steps to add all the Static Path Mappings for the EPG listed in Table 6.

Table 7 EPGs and Subnets for AP-Host-Connectivity

| EPG Name | Subnet |
|---|---|
| EPG-MS-LVMN | 192.168.96.254/24 |
| EPG-MS-Clust | 192.168.97.254/24 |
| EPG-Infra-iSCSI-A | 192.168.12.254/24 |
| EPG-Infra-iSCSI-B | 192.168.22.254/24 |
| EPG-Infra-SMB | 192.168.53.254/24 |

25. On the left under the EPG, right-click Subnets and select Create EPG Subnet.

26. In the Create EPG Subnet window, enter the Subnet from Table 7 as the Default Gateway IP.

27. Click Submit to complete adding the subnet.

28. Repeat the above steps to complete adding the EPGs and subnets in Table 6 and Table 7.
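Steps 1-28 combine Tables 6 and 7 into bridge domains, EPGs, domain associations, UCS static ports, and EPG subnets. The minimal Python sketch below builds the equivalent APIC JSON body and checks that each gateway address is a usable host address. It sends nothing and makes these assumptions. The VRF object name is FP-Foundation. The domain names and vPC path DNs are hypothetical placeholders. Bridge domain forwarding settings are left at APIC defaults, which is not the Custom forwarding shown in the screenshot. NetApp static ports are omitted because Table 6 does not list their VLANs.

```python
import ipaddress
import json

# (EPG, bridge domain, UCS VLAN, gateway/prefix, attach NetApp-AFF domain)
# transcribed from Tables 6 and 7.
ROWS = [
    ("EPG-MS-LVMN", "BD-FP-Foundation-Internal", 906, "192.168.96.254/24", False),
    ("EPG-MS-Clust", "BD-FP-Foundation-Internal", 907, "192.168.97.254/24", False),
    ("EPG-Infra-iSCSI-A", "BD-FP-Foundation-iSCSI-A", 3113, "192.168.12.254/24", True),
    ("EPG-Infra-iSCSI-B", "BD-FP-Foundation-iSCSI-B", 3123, "192.168.22.254/24", True),
    ("EPG-Infra-SMB", "BD-FP-Foundation-SMB", 3153, "192.168.53.254/24", True),
]

# Hypothetical vPC path DNs for the UCS fabric interconnects.
UCS_PATHS = [
    "topology/pod-1/protpaths-101-102/pathep-[VPC-UCS-FI-A]",
    "topology/pod-1/protpaths-101-102/pathep-[VPC-UCS-FI-B]",
]


def check_gateway(gw: str) -> None:
    """Reject a gateway that is the network or broadcast address of its subnet."""
    iface = ipaddress.ip_interface(gw)
    net = iface.network
    if iface.ip in (net.network_address, net.broadcast_address):
        raise ValueError(f"{gw} is not a usable host address")


def host_connectivity_payload() -> dict:
    bds, epgs = {}, []
    for epg, bd, vlan, gw, storage in ROWS:
        check_gateway(gw)
        # One fvBD per distinct bridge domain, bound to the assumed VRF name;
        # forwarding options are APIC defaults, not the custom settings.
        bds[bd] = {"fvBD": {"attributes": {"name": bd}, "children": [
            {"fvRsCtx": {"attributes": {"tnFvCtxName": "FP-Foundation"}}}]}}
        children = [
            {"fvRsBd": {"attributes": {"tnFvBDName": bd}}},
            {"fvRsDomAtt": {"attributes": {"tDn": "uni/l2dom-UCS"}}},
            {"fvSubnet": {"attributes": {"ip": gw}}},
        ]
        if storage:
            # Domain only; NetApp static ports need VLANs that Table 6 omits.
            children.append({"fvRsDomAtt": {"attributes": {"tDn": "uni/phys-NetApp-AFF"}}})
        # UCS static ports: Immediate deployment, Trunk (API mode "regular").
        children += [{"fvRsPathAtt": {"attributes": {
            "tDn": p, "encap": f"vlan-{vlan}",
            "instrImmedcy": "immediate", "mode": "regular"}}} for p in UCS_PATHS]
        epgs.append({"fvAEPg": {"attributes": {"name": epg}, "children": children}})
    ap = {"fvAp": {"attributes": {"name": "AP-Host-Connectivity"}, "children": epgs}}
    return {"fvTenant": {"attributes": {"name": "FP-Foundation"},
                         "children": list(bds.values()) + [ap]}}


if __name__ == "__main__":
    print(json.dumps(host_connectivity_payload(), indent=2))
```

The rows are kept as data rather than repeated GUI steps, so a change to Table 6 or Table 7 only needs to be made in one place.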

Per NetApp best practices, run the following command at the LOADER prompt of each NetApp controller to improve LUN stability during copy operations. To access the LOADER prompt, connect to the controller through the serial console port or the Service Processor, and press Ctrl-C to halt the boot process when prompted.

setenv bootarg.tmgr.disable_pit_hp 1

For more information about the workaround, see: http://nt-ap.com/2w6myr4

For more information about Windows Offloaded Data Transfers see: https://technet.microsoft.com/en-us/library/hh831628(v=ws.11).aspx

NetApp All Flash FAS A300 Controllers

NetApp Hardware Universe

The NetApp Hardware Universe (HWU) application provides supported hardware and software components for any specific ONTAP version. It provides configuration information for all the NetApp storage appliances currently supported by ONTAP software. It also provides a table of component compatibilities. Confirm that the hardware and software components that you would like to use are supported with the version of ONTAP that you plan to install by using the HWU application at the NetApp Support site.

To access the HWU application to view the System Configuration guides, complete the following steps:

1. Click the Controllers tab to view the compatibility between different versions of the ONTAP software and the NetApp storage appliances with your desired specifications.

2. To compare components by storage appliance, click Compare Storage Systems.

Controllers

Follow the physical installation procedures for the controllers found in the AFF A300 Series product documentation at the NetApp Support site.

Disk Shelves

NetApp storage systems support a wide variety of disk shelves and disk drives. The complete list of disk shelves that are supported by the AFF A300 is available at the NetApp Support site.

For SAS disk shelves with NetApp storage controllers, refer to the SAS Disk Shelves Universal SAS and ACP Cabling Guide for proper cabling guidelines.

NetApp ONTAP 9.1

Complete Configuration Worksheet

Before running the setup script, complete the cluster setup worksheet from the ONTAP 9.1 Software Setup Guide. You must have access to the NetApp Support site to open the cluster setup worksheet.

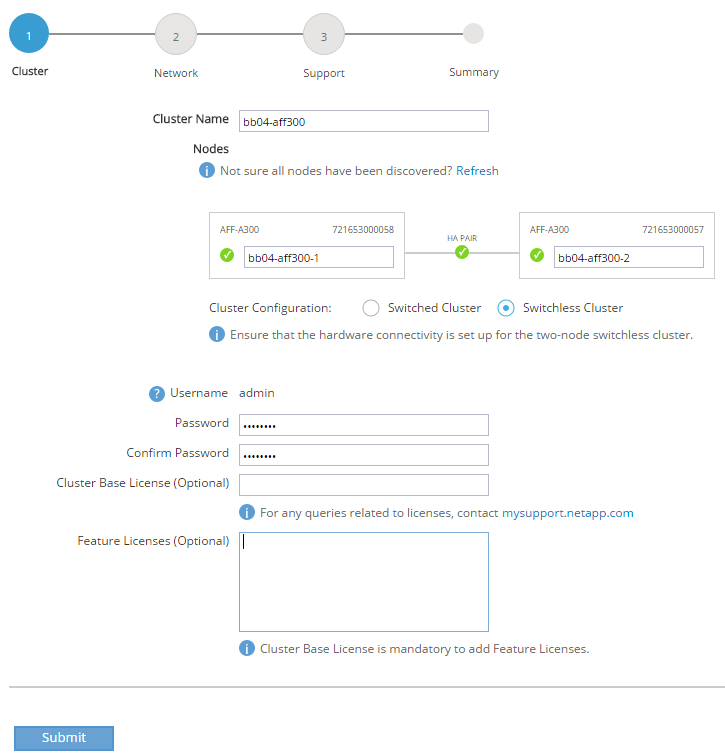

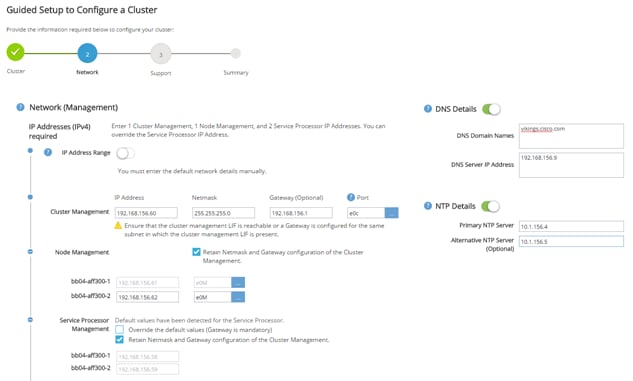

Configure ONTAP Nodes

Before running the setup script, review the configuration worksheets in the ONTAP 9.1 Software Setup Guide to learn about configuring ONTAP. Table 8 lists the information needed to configure two ONTAP nodes. Customize the cluster detail values with the information applicable to your deployment.

Table 8 ONTAP Software Installation Prerequisites

| Cluster Detail | Cluster Detail Value |
|---|---|
| Cluster node 01 IP address | <node01-mgmt-ip> |
| Cluster node 01 netmask | <node01-mgmt-mask> |
| Cluster node 01 gateway | <node01-mgmt-gateway> |
| Cluster node 02 IP address | <node02-mgmt-ip> |
| Cluster node 02 netmask | <node02-mgmt-mask> |
| Cluster node 02 gateway | <node02-mgmt-gateway> |
| Data ONTAP 9.1 URL | <url-boot-software> |
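Before the values in Table 8 are typed at the console, it can help to confirm that they are consistent with each other. The minimal Python sketch below uses the standard ipaddress module for three checks. Each node's e0M gateway must sit on the node's own subnet, the node IPs must be unique, and the boot software URL must point at a web server. The function names and the example addresses (from the 192.0.2.0/24 documentation range) are hypothetical. The check does not test whether the web server is actually reachable.

```python
import ipaddress
from urllib.parse import urlparse


def check_node_mgmt(ip: str, mask: str, gateway: str) -> None:
    """Raise ValueError if the gateway is not a different host on the node's e0M subnet."""
    net = ipaddress.ip_network(f"{ip}/{mask}", strict=False)
    if ipaddress.ip_address(gateway) not in net:
        raise ValueError(f"gateway {gateway} is outside {net}")
    if ipaddress.ip_address(ip) == ipaddress.ip_address(gateway):
        raise ValueError("node IP and gateway must differ")


def check_prereqs(nodes, url: str) -> None:
    """nodes: list of (ip, netmask, gateway) tuples, one per ONTAP node."""
    ips = [ip for ip, _, _ in nodes]
    if len(set(ips)) != len(ips):
        raise ValueError("node management IPs must be unique")
    for ip, mask, gw in nodes:
        check_node_mgmt(ip, mask, gw)
    # Only a format check: the URL must name an http(s) web server.
    if urlparse(url).scheme not in ("http", "https"):
        raise ValueError("boot software URL must be an http or https URL")


if __name__ == "__main__":
    nodes = [("192.0.2.11", "255.255.255.0", "192.0.2.1"),
             ("192.0.2.12", "255.255.255.0", "192.0.2.1")]
    check_prereqs(nodes, "http://192.0.2.50/ontap/image.tgz")
    print("Table 8 values look consistent")
```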

Configure Node 01

To configure node 01, complete the following steps:

1. Connect to the storage system console port. You should see a Loader-A prompt. However, if the storage system is in a reboot loop, press Ctrl-C to exit the autoboot loop when the following message displays:

Starting AUTOBOOT press Ctrl-C to abort…

2. Allow the system to boot up.

autoboot

3. Press Ctrl-C when prompted.

If ONTAP 9.1 is not the version of software being booted, continue with the following steps to install new software. If ONTAP 9.1 is the version being booted, select option 8 and enter y to reboot the node, then continue with step 14.

4. To install new software, select option 7.

5. Enter y to perform an upgrade.

6. Select e0M for the network port you want to use for the download.

7. Enter y to reboot now.

8. Enter the IP address, netmask, and default gateway for e0M.

<node01-mgmt-ip> <node01-mgmt-mask> <node01-mgmt-gateway>

9. Enter the URL where the software can be found.

This web server must be reachable.

<url-boot-software>

10. Press Enter for the user name, indicating no user name.

11. Enter y to set the newly installed software as the default to be used for subsequent reboots.

12. Enter y to reboot the node.

When installing new software, the system might perform firmware upgrades to the BIOS and adapter cards, causing reboots and possible stops at the Loader-A prompt. If these actions occur, the system might deviate from this procedure.

13. Press Ctrl-C when the following message displays:

Press Ctrl-C for Boot Menu

14. Select option 4 for Clean Configuration and Initialize All Disks.

15. Enter y to zero disks, reset config, and install a new file system.

16. Enter y to erase all the data on the disks.

The initialization and creation of the root aggregate can take 90 minutes or more to complete, depending on the number and type of disks attached. When initialization is complete, the storage system reboots. Note that SSDs take considerably less time to initialize. You can continue with node 02 configuration while the disks for node 01 are zeroing.
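The node 01 procedure branches at step 3, depending on whether ONTAP 9.1 is already the booted version. The minimal Python sketch below turns that branch into an ordered operator checklist that can be printed for each node. It does not drive the console, and the function name node_boot_checklist is hypothetical. The action wording paraphrases the steps above, and the IP, mask, gateway, and URL arguments stand for the Table 8 values.

```python
def node_boot_checklist(already_91: bool, ip: str, mask: str, gw: str, url: str):
    """Return (step, operator action) pairs for one node, following the
    Configure Node procedure. This is a checklist only; nothing is automated."""
    steps = [
        ("1", "At the console, if the node loops, press Ctrl-C at 'Starting AUTOBOOT'"),
        ("2", "At the LOADER prompt, enter: autoboot"),
        ("3", "Press Ctrl-C when prompted"),
    ]
    if already_91:
        # ONTAP 9.1 is already booting: option 8 and y, then resume at step 14.
        steps += [("3a", "Select option 8"), ("3b", "Enter y to reboot the node")]
    else:
        steps += [
            ("4", "Select option 7 to install new software"),
            ("5", "Enter y to perform an upgrade"),
            ("6", "Select e0M as the download port"),
            ("7", "Enter y to reboot now"),
            ("8", f"Enter e0M IP, netmask, gateway: {ip} {mask} {gw}"),
            ("9", f"Enter the software URL: {url}"),
            ("10", "Press Enter for no user name"),
            ("11", "Enter y to make the new software the default"),
            ("12", "Enter y to reboot the node"),
            ("13", "Press Ctrl-C at 'Press Ctrl-C for Boot Menu'"),
        ]
    steps += [
        ("14", "Select option 4 (Clean Configuration and Initialize All Disks)"),
        ("15", "Enter y to zero disks, reset config, and install a new file system"),
        ("16", "Enter y to erase all the data on the disks"),
    ]
    return steps


if __name__ == "__main__":
    for step, action in node_boot_checklist(False, "<node01-mgmt-ip>", "<node01-mgmt-mask>",
                                            "<node01-mgmt-gateway>", "<url-boot-software>"):
        print(f"{step:>3}  {action}")
```

The same checklist applies to node 02 with the node 02 values from Table 8.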

Configure Node 02

To configure node 02, complete the following steps:

1. Connect to the storage system console port. You should see a Loader-A prompt. However, if the storage system is in a reboot loop, press Ctrl-C to exit the autoboot loop when the following message displays:

Starting AUTOBOOT press Ctrl-C to abort…

2. Allow the system to boot up.

autoboot

3. Press Ctrl-C when prompted.

If ONTAP 9.1 is not the version of software being booted, continue with the following steps to install new software. If ONTAP 9.1 is the version being booted, select option 8 and enter y to reboot the node, then continue with step 14.

4. To install new software, select option 7.

5. Enter y to perform an upgrade.

6. Select e0M for the network port you want to use for the download.

7. Enter y to reboot now.

8. Enter the IP address, netmask, and default gateway for e0M.

<node02-mgmt-ip> <node02-mgmt-mask> <node02-mgmt-gateway>

9. Enter the URL where the software can be found.

This web server must be reachable.

<url-boot-software>

10. Press Enter for the user name, indicating no user name.

11. Enter y to set the newly installed software as the default to be used for subsequent reboots.

12. Enter y to reboot the node.

When installing new software, the system might perform firmware upgrades to the BIOS and adapter cards, causing reboots and possible stops at the Loader-A prompt. If these actions occur, the system might deviate from this procedure.

13. Press Ctrl-C when you see this message:

Press Ctrl-C for Boot Menu

14. Select option 4 for Clean Configuration and Initialize All Disks.

15. Enter y to zero disks, reset config, and install a new file system.

16. Enter y to erase all the data on the disks.

The initialization and creation of the root aggregate can take 90 minutes or more to complete, depending on the number and type of disks attached. When initialization is complete, the storage system reboots. Note that SSDs take considerably less time to initialize.

Set Up Node

To set up a node, complete the following steps:

1. From a console port program attached to the storage controller A (node 01) console port, run the node setup script. This script appears when ONTAP 9.1 boots on the node for the first time.

2. Follow the prompts to set up node 01:

Welcome to the cluster setup wizard.

You can enter the following commands at any time:

"help" or "?" - if you want to have a question clarified,

"back" - if you want to change previously answered questions, and

"exit" or "quit" - if you want to quit the setup wizard.

Any changes you made before quitting will be saved.

You can return to cluster setup at any time by typing “cluster setup”.

To accept a default or omit a question, do not enter a value.

This system will send event messages and weekly reports to NetApp Technical Support.

To disable this feature, enter "autosupport modify -support disable" within 24 hours.

Enabling AutoSupport can significantly speed problem determination and resolution should a problem occur on your system.

For further information on AutoSupport, see:

http://support.netapp.com/autosupport/

Type yes to confirm and continue {yes}: yes

Enter the node management interface port [e0M]: Enter

Enter the node management interface IP address: <node01-mgmt-ip>

Enter the node management interface netmask: <node01-mgmt-mask>

Enter the node management interface default gateway: <node01-mgmt-gateway>

A node management interface on port e0M with IP address <node01-mgmt-ip> has been created

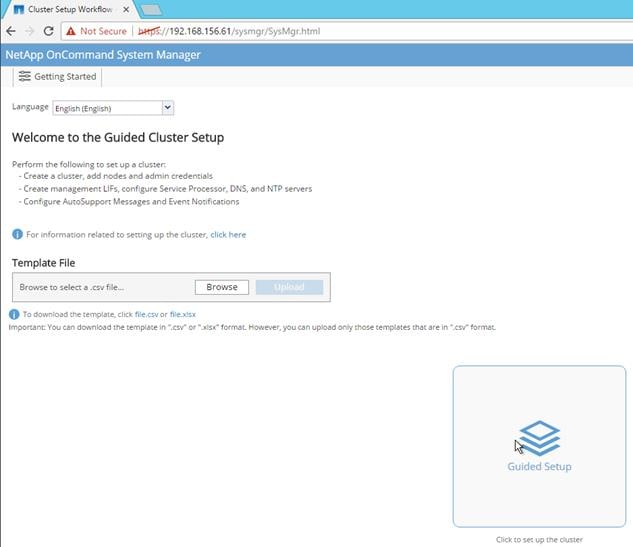

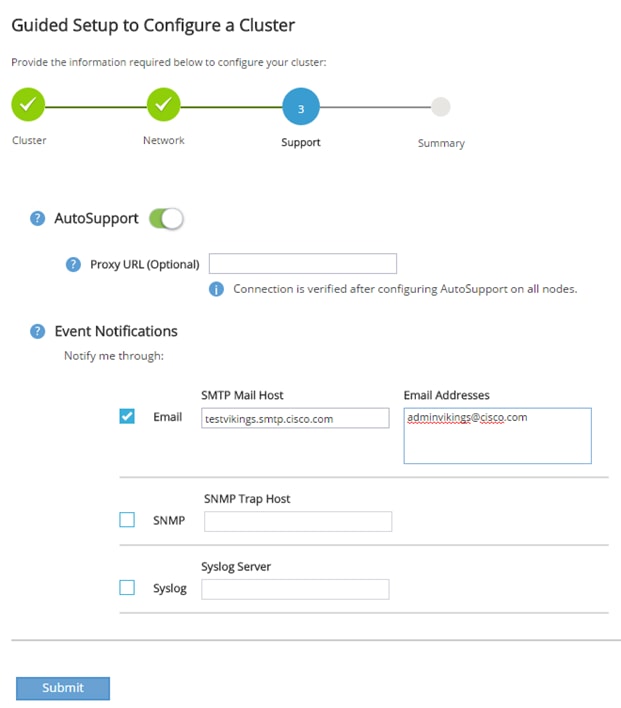

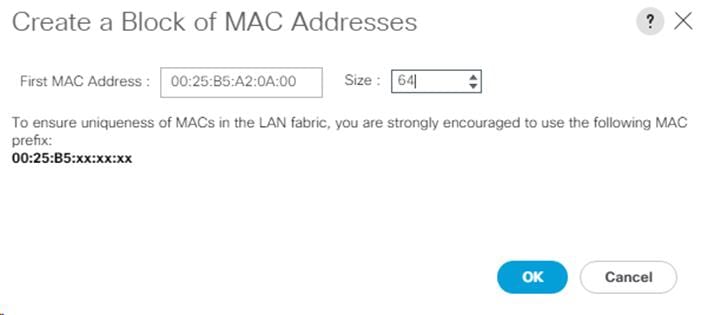

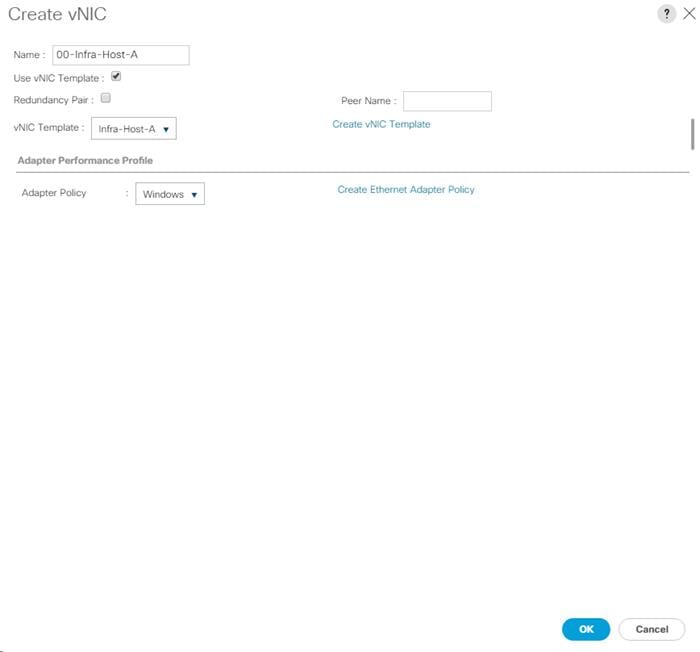

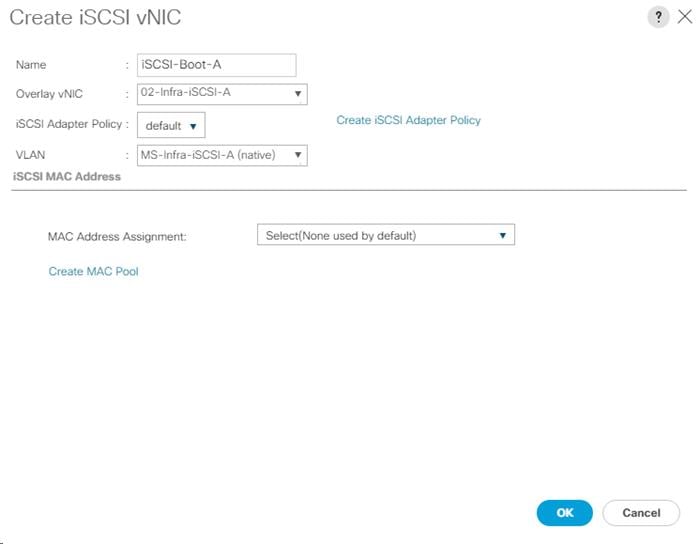

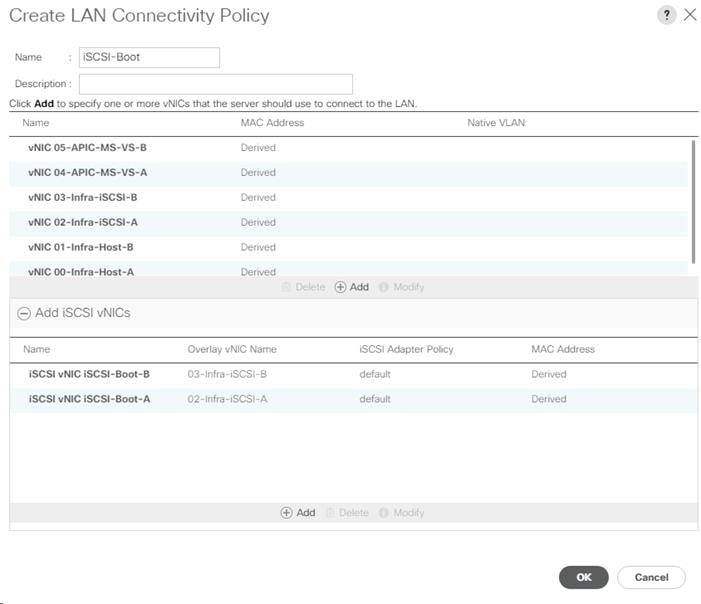

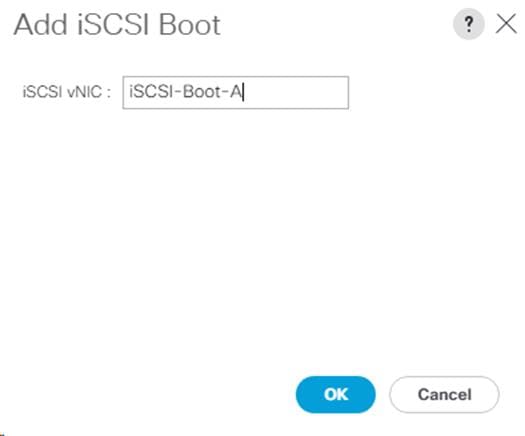

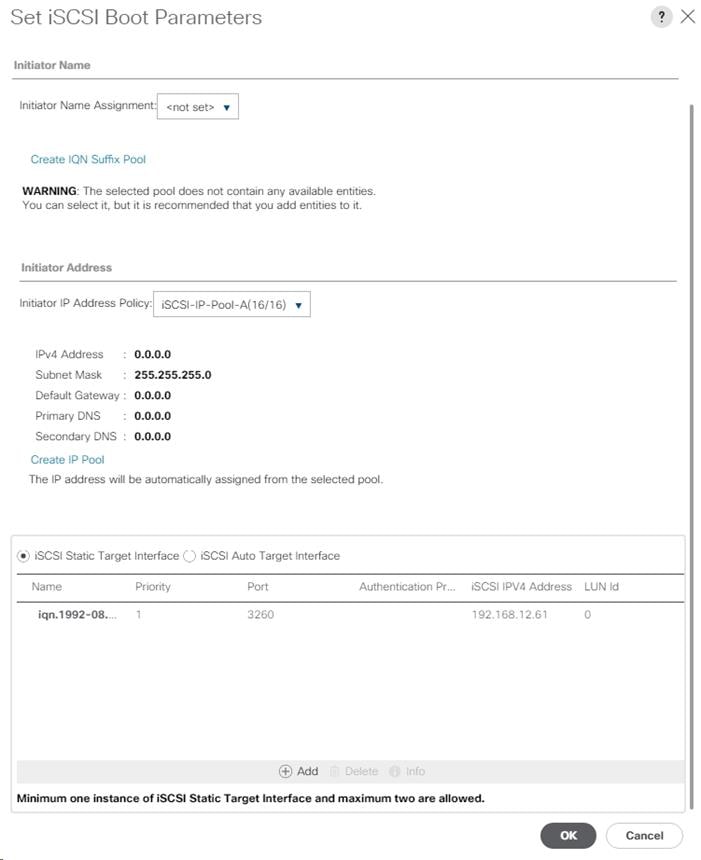

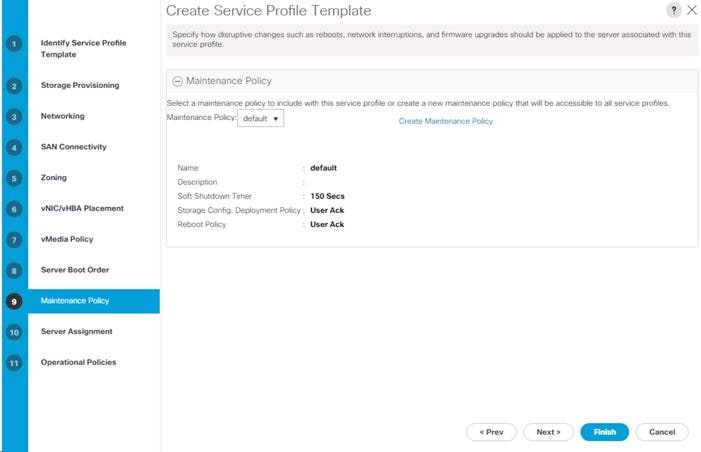

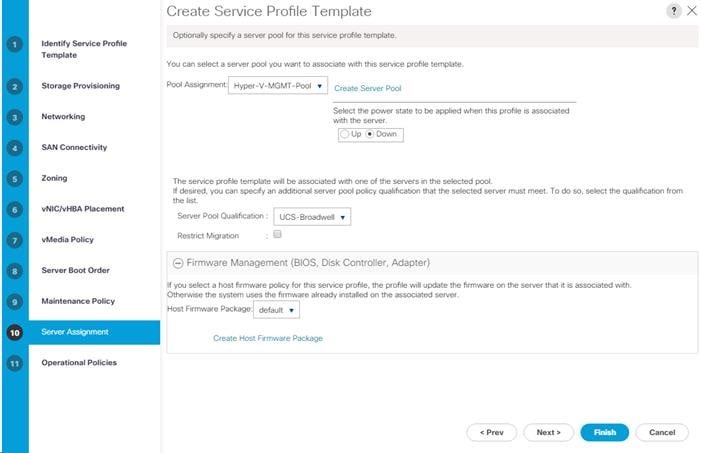

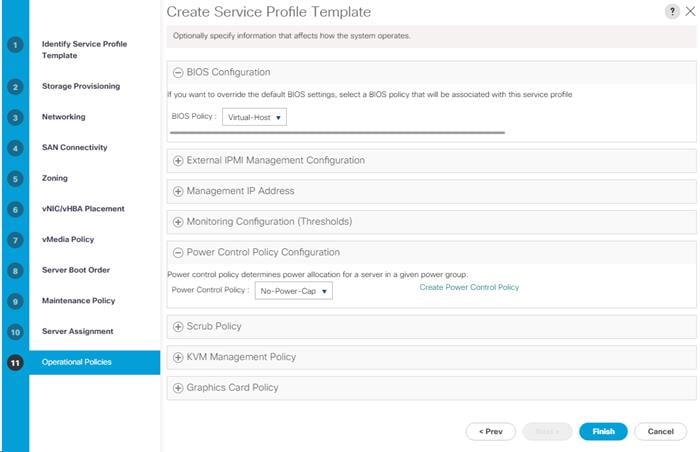

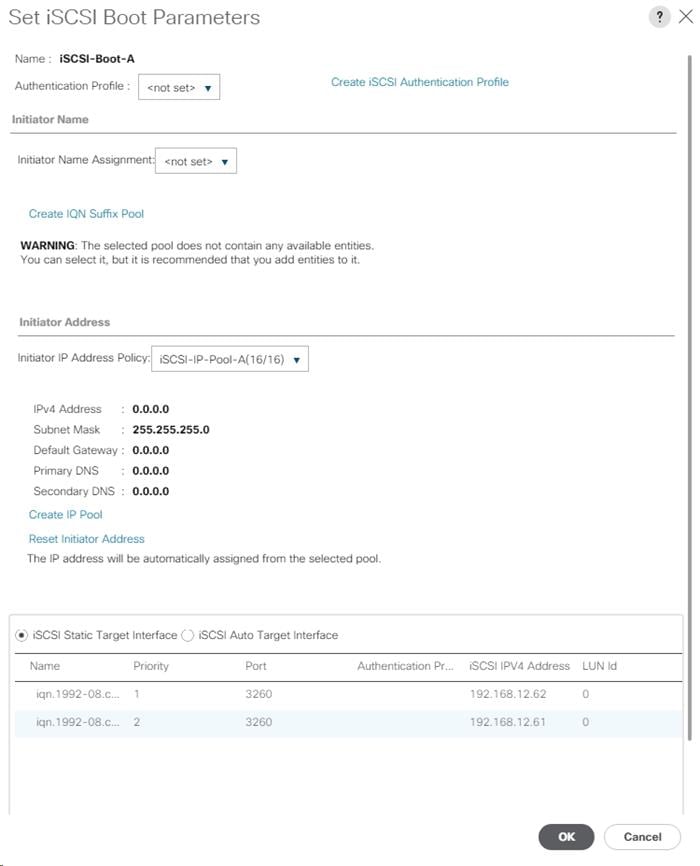

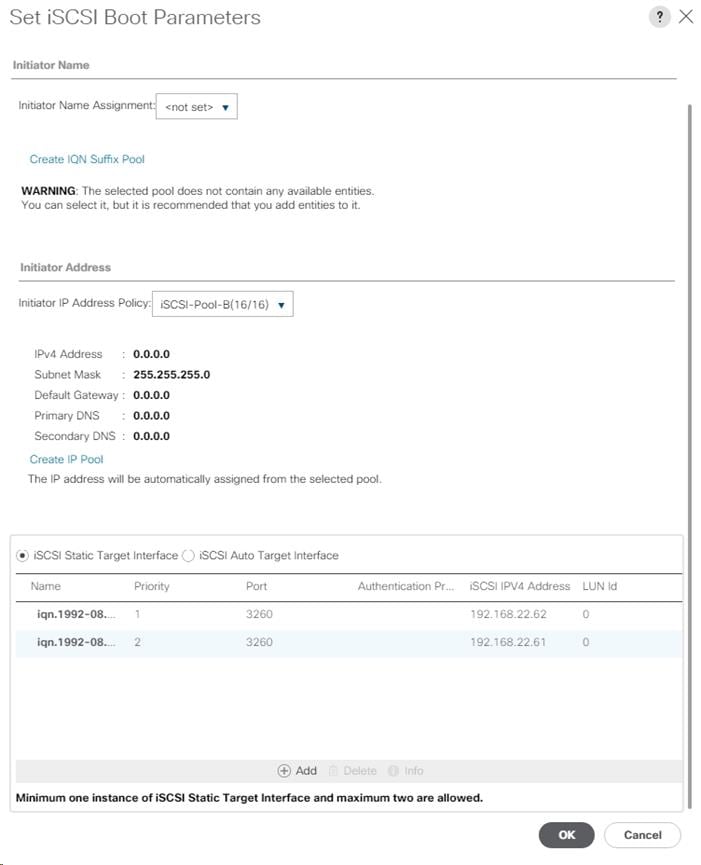

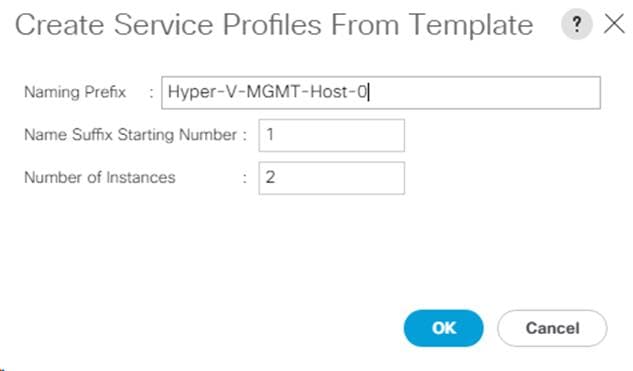

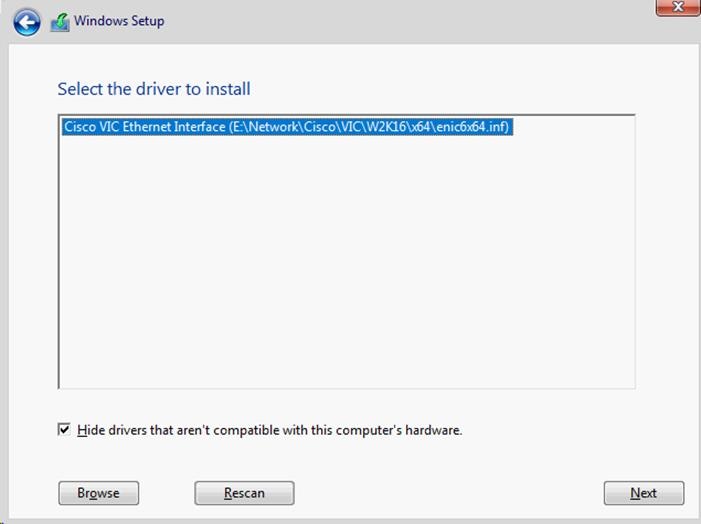

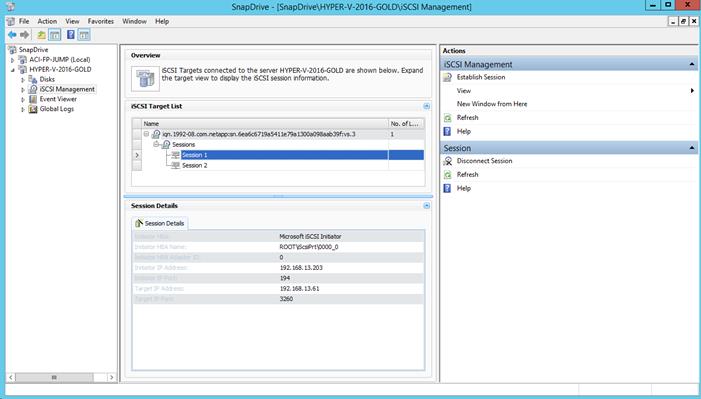

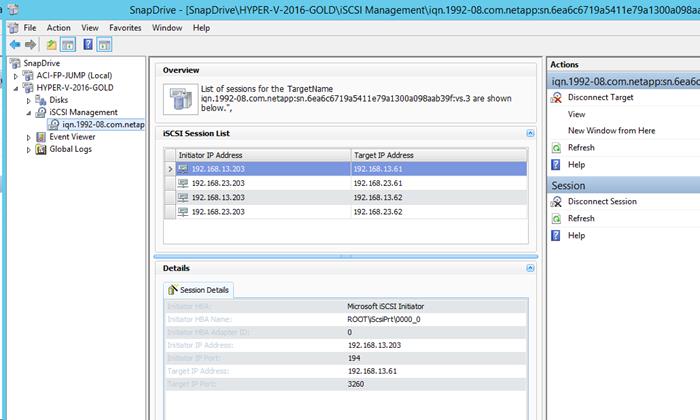

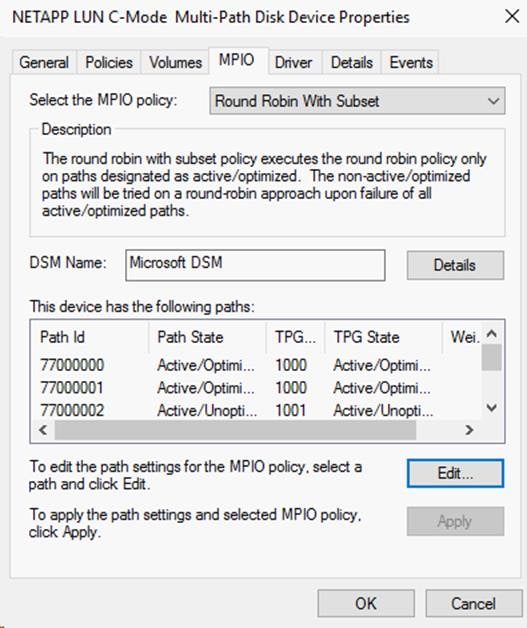

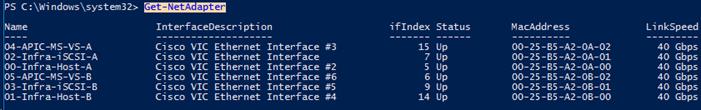

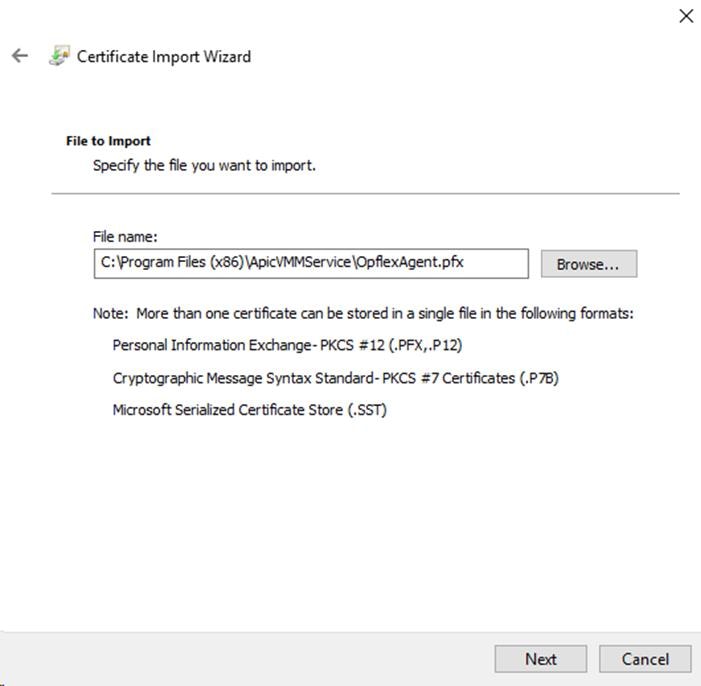

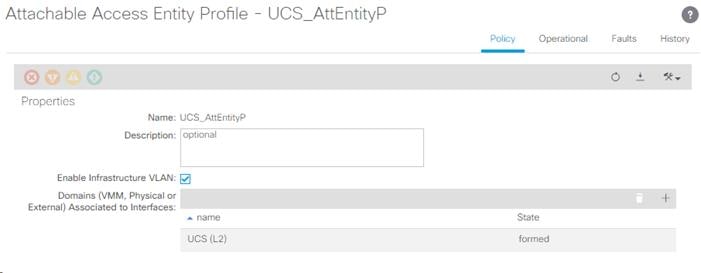

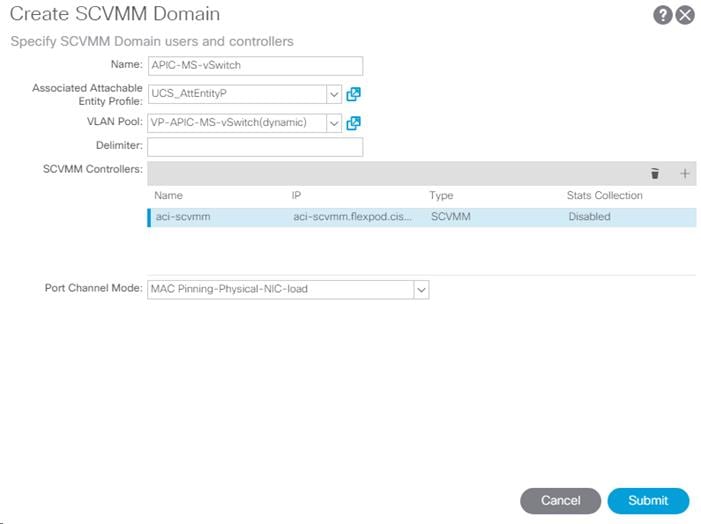

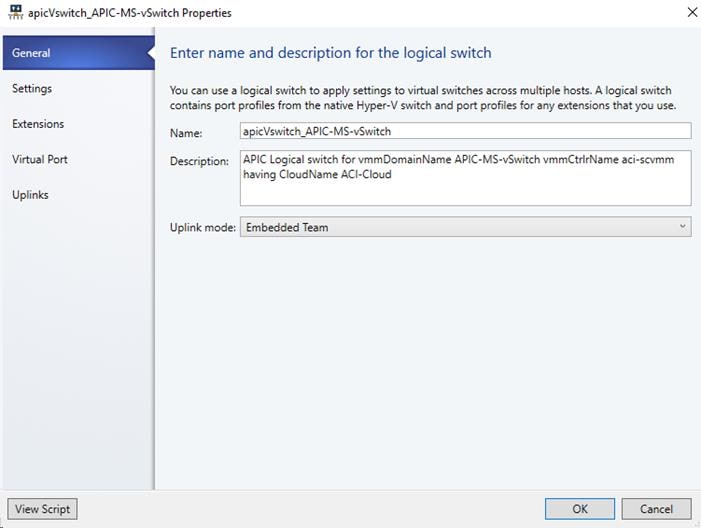

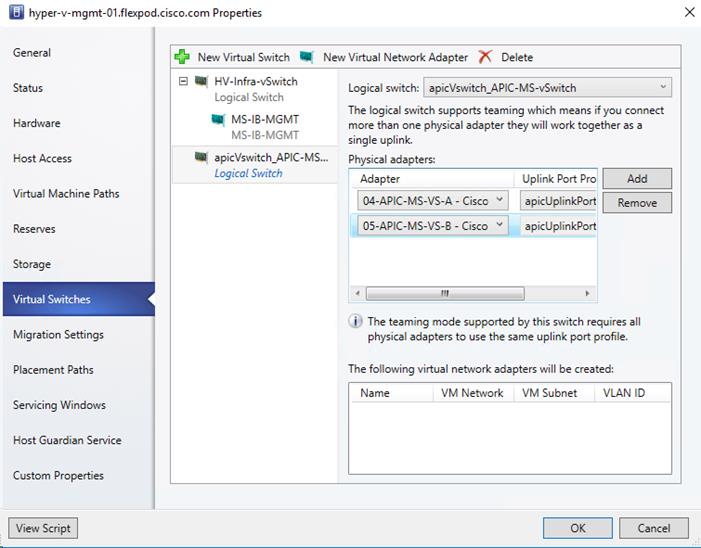

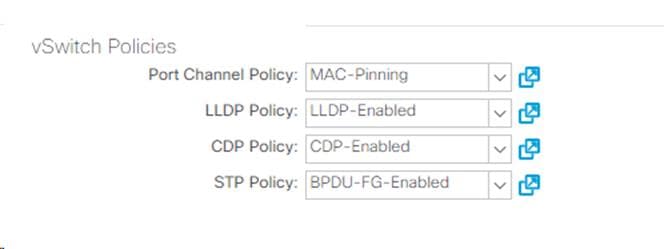

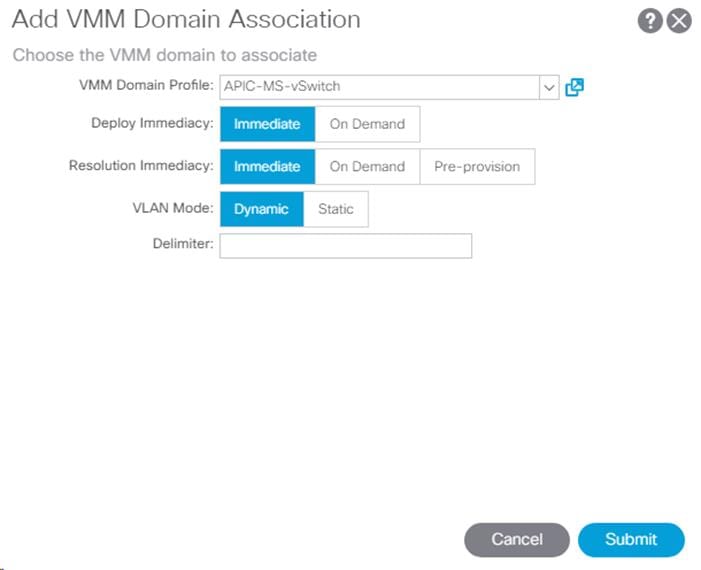

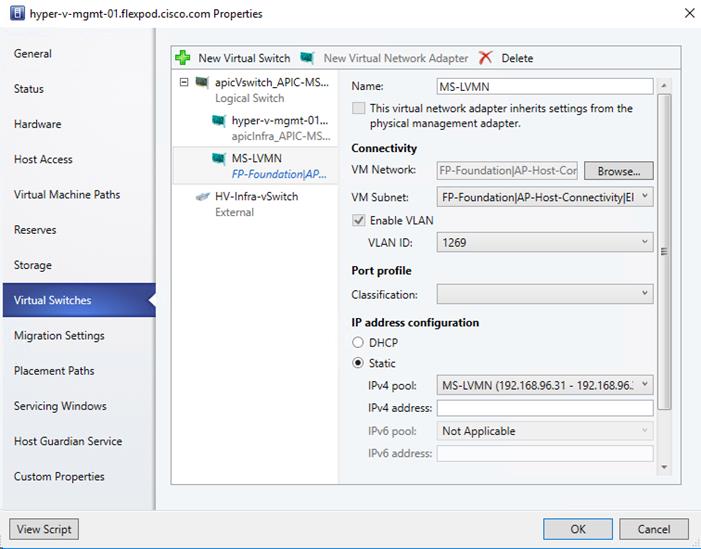

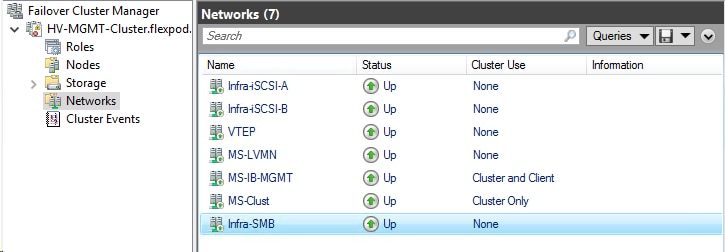

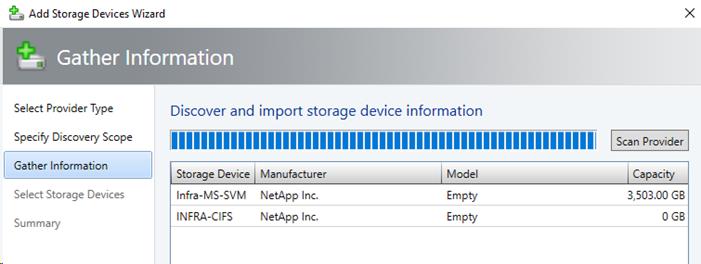

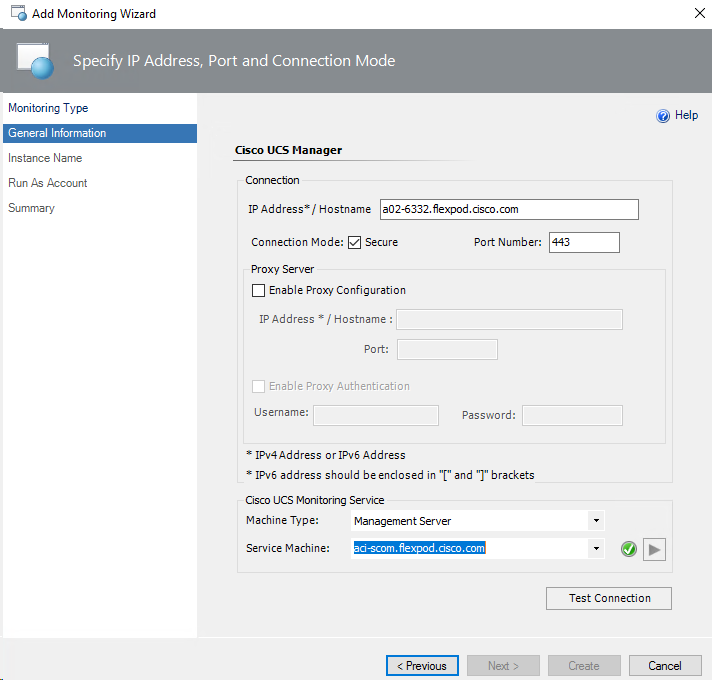

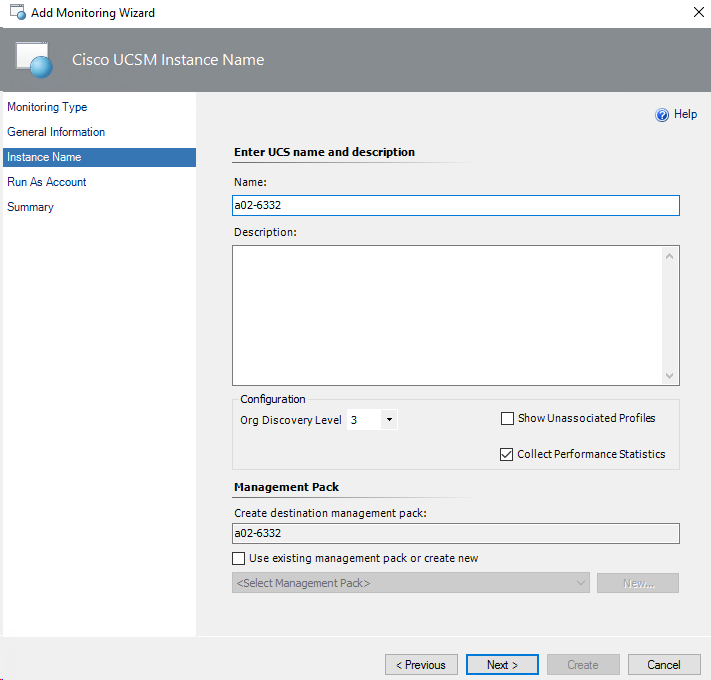

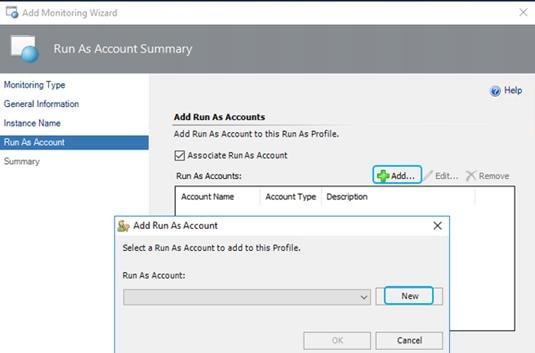

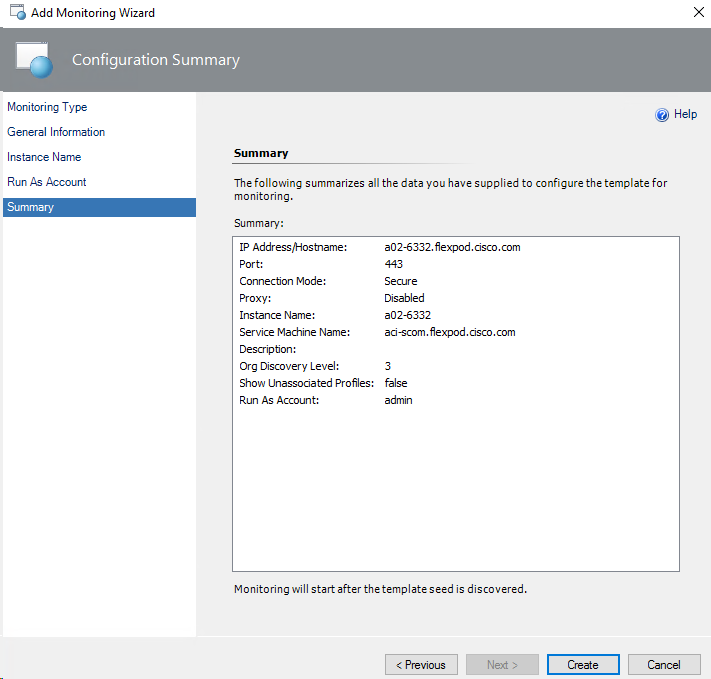

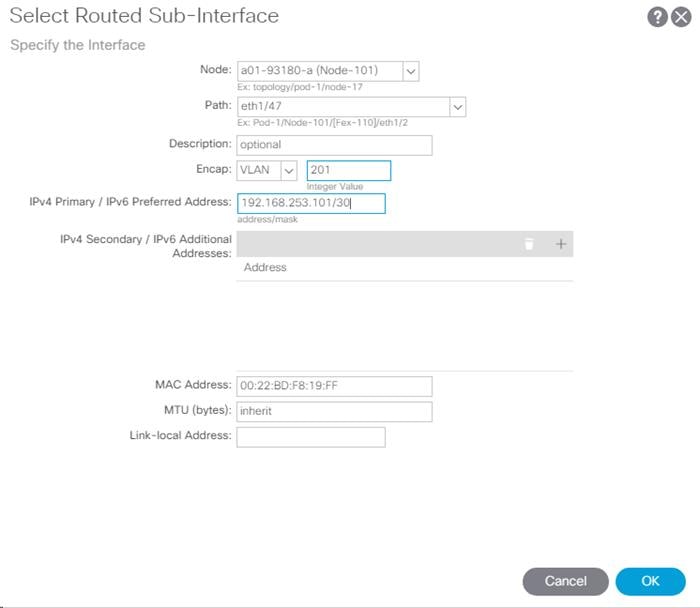

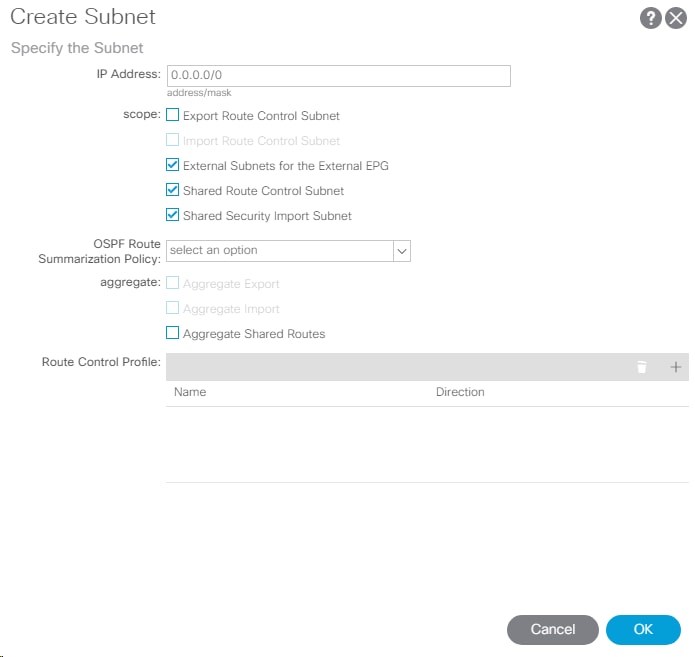

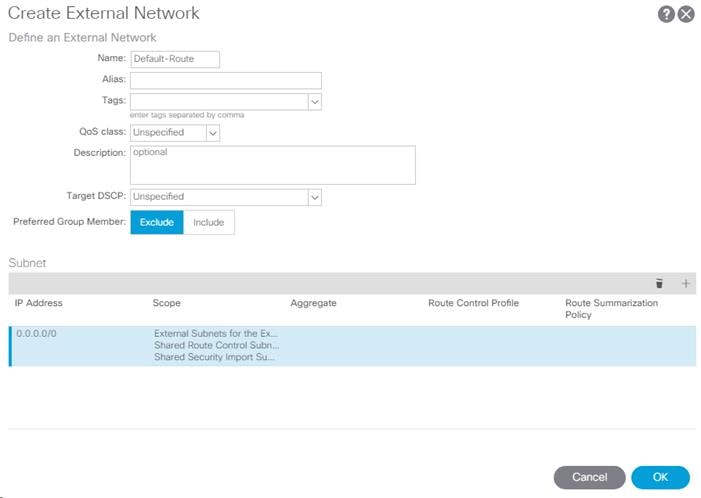

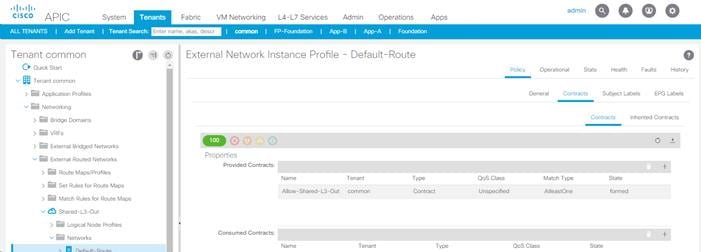

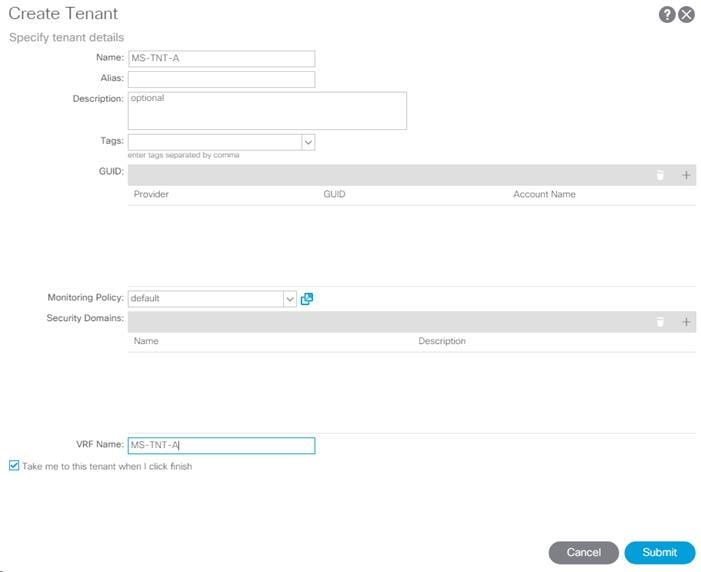

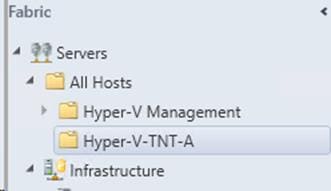

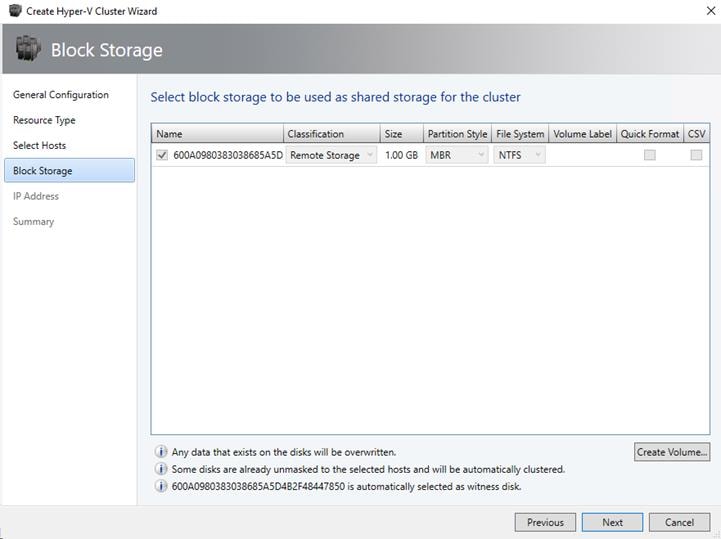

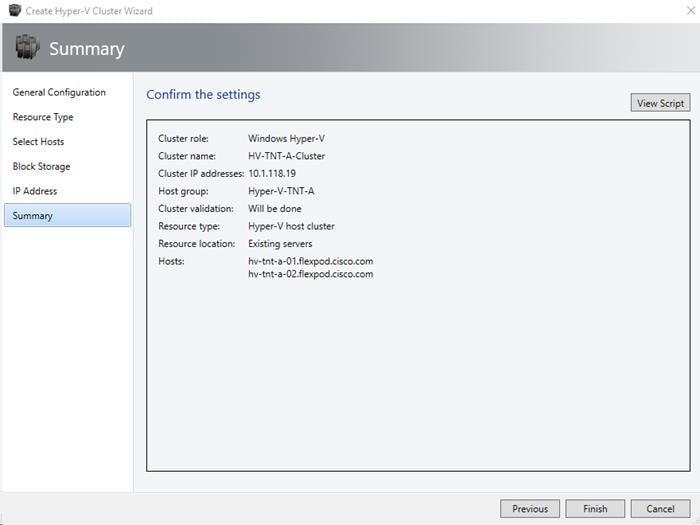

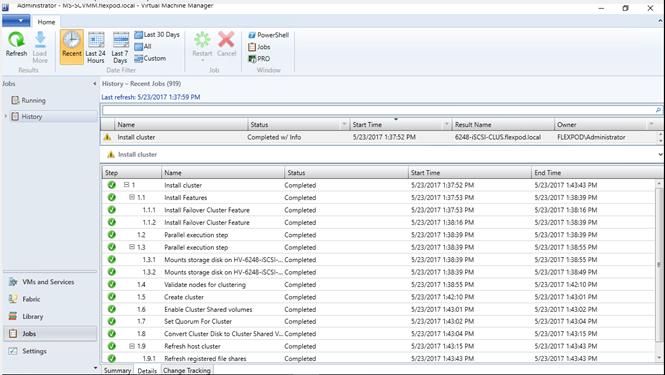

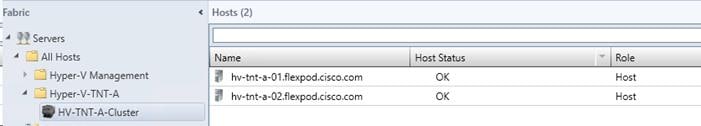

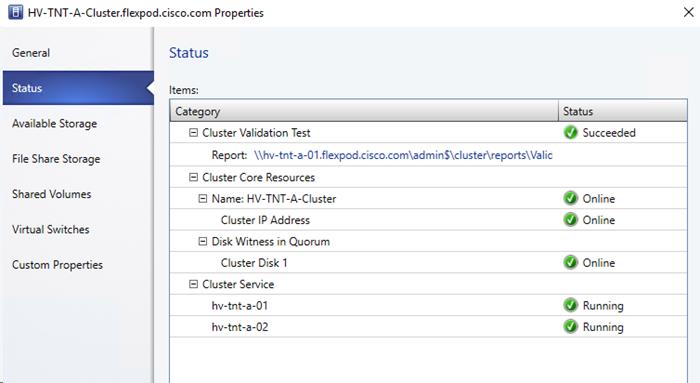

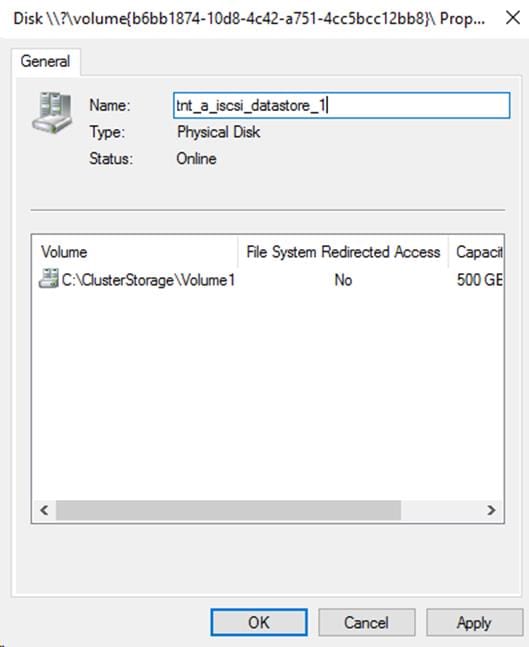

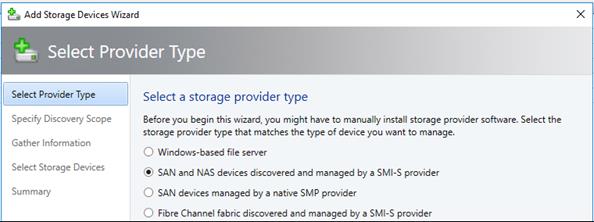

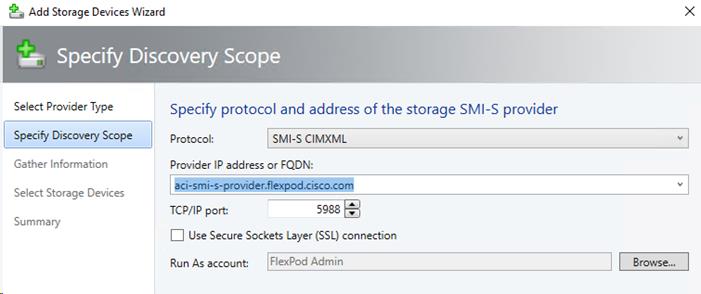

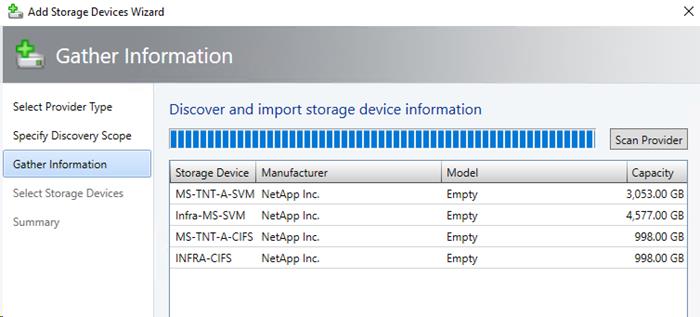

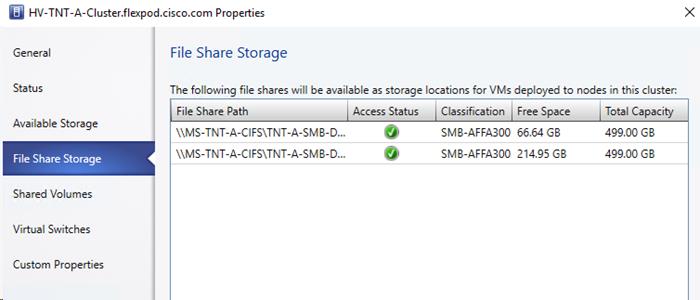

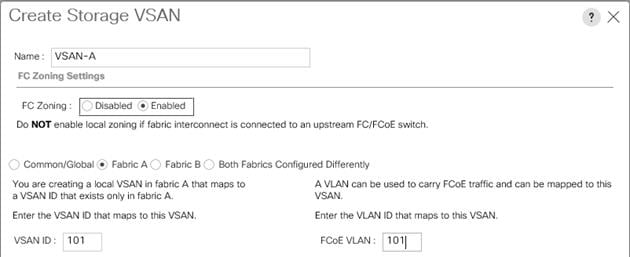

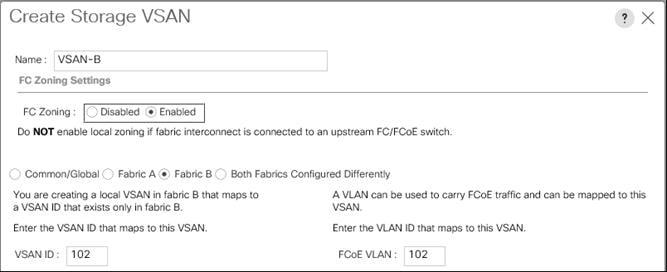

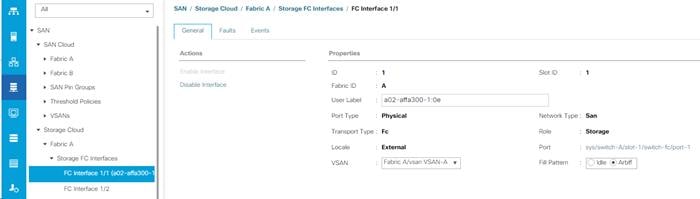

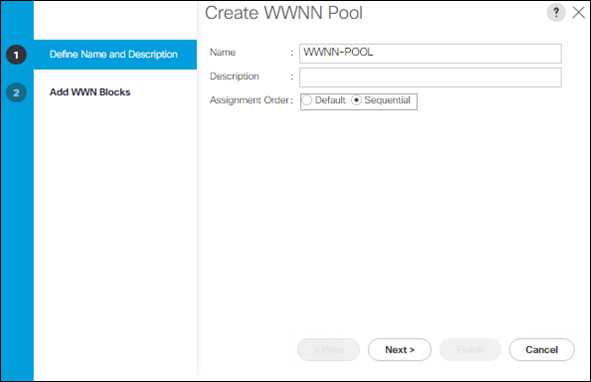

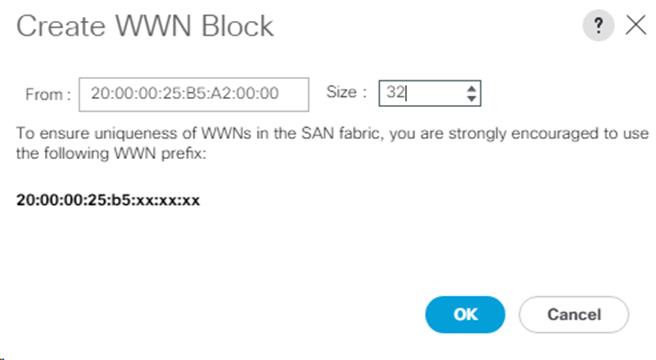

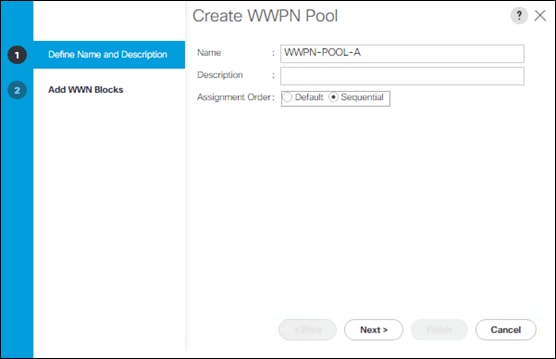

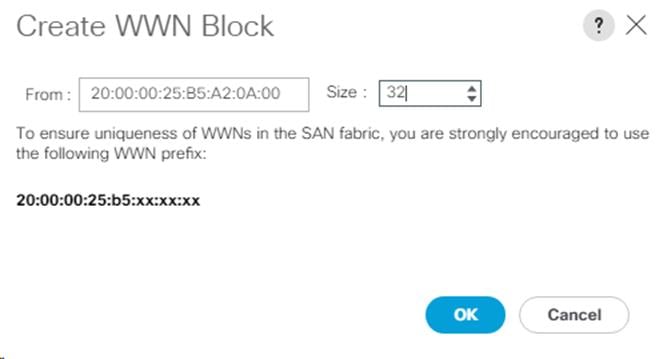

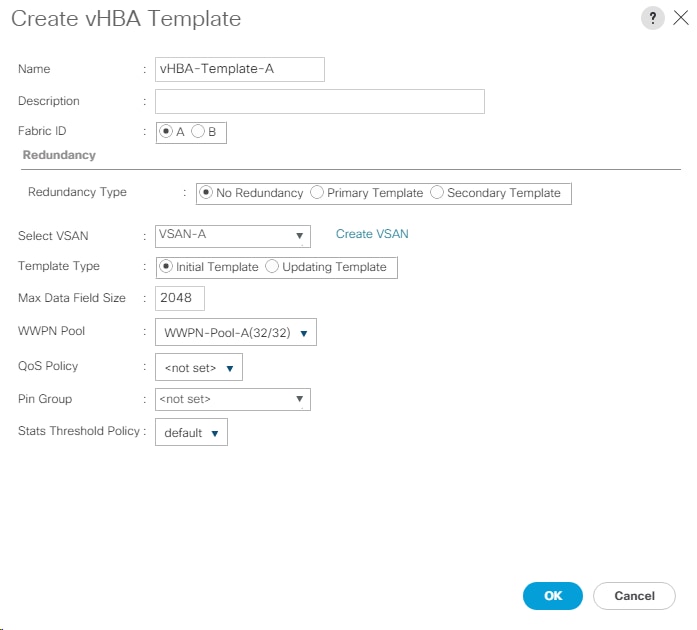

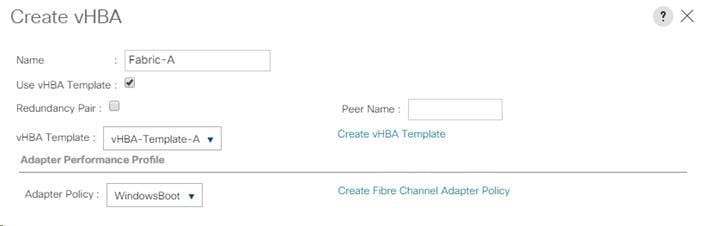

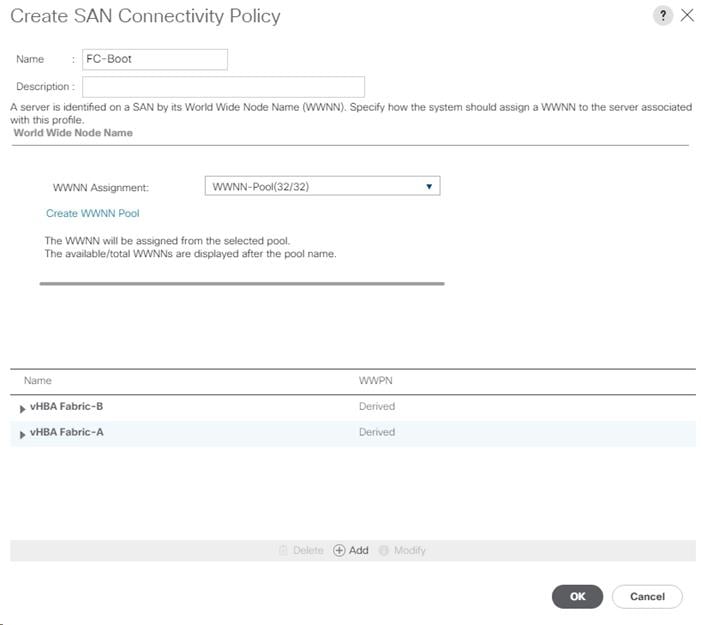

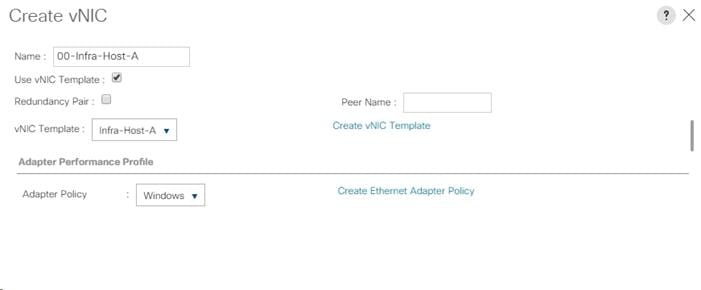

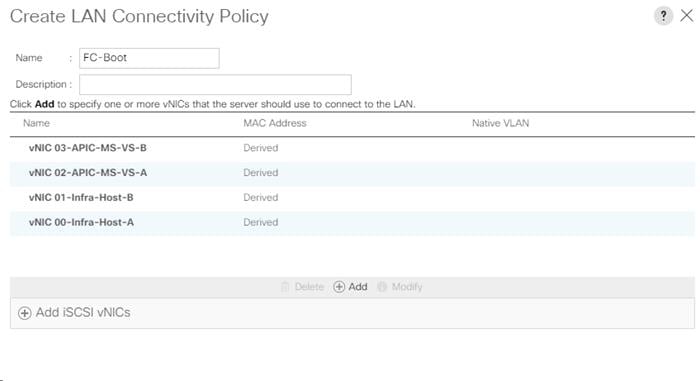

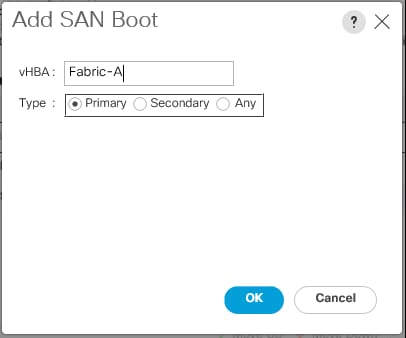

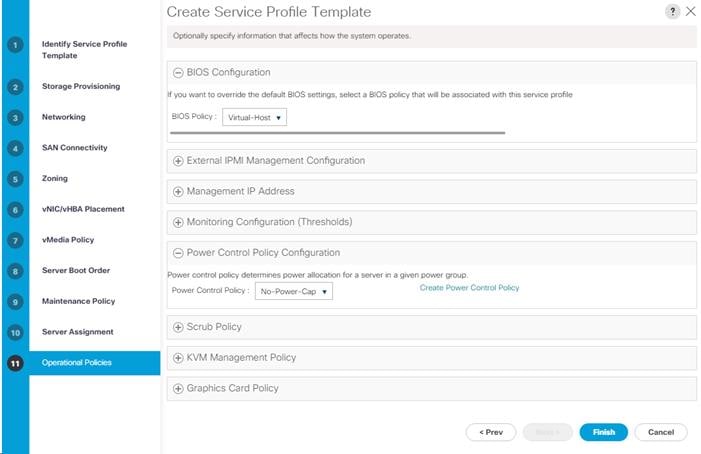

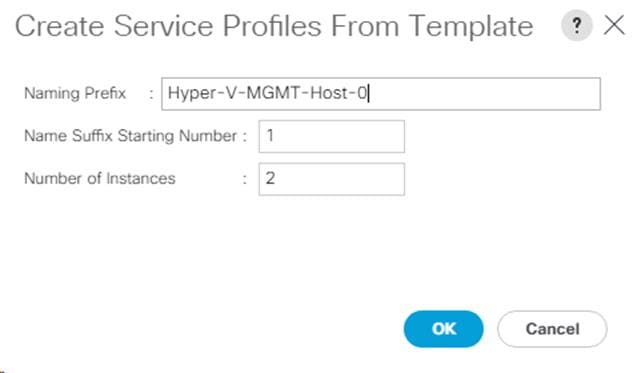

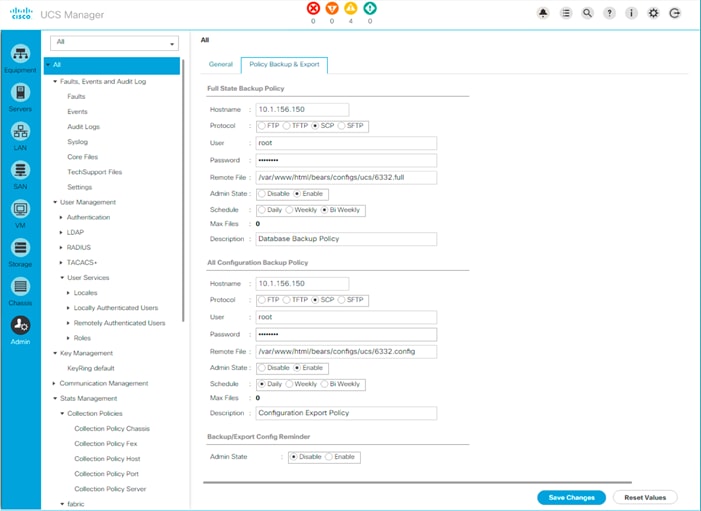

Use your web browser to complete cluster setup by accessing https://<node01-mgmt-ip>