FlashStack for SAP HANA TDI


Bias-Free Language

The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product. Learn more about how Cisco is using Inclusive Language.


Deployment Guide for FlashStack for SAP HANA TDI

Published: November 2020

In partnership with Pure Storage.

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries. LDR1.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2020 Cisco Systems, Inc. All rights reserved.


Cisco® Validated Designs (CVDs) consist of systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of customers and to guide them from design to deployment.

This document discusses the deployment requirements and procedures to install and operate SAP HANA Tailored Data Center Integration (TDI) deployments on FlashStack, a converged infrastructure jointly developed by Cisco and Pure Storage. The predesigned FlashStack solution serves as a foundation for a variety of workloads and enables efficient architectural designs based on customer requirements.

FlashStack for SAP HANA is a validated approach to deploying Cisco and Pure Storage technologies as appliance-like infrastructure. The reference architecture builds on the Cisco® Unified Computing System™ (Cisco UCS®) platform based on 2nd Generation Intel Xeon Scalable processors, equipped either with DDR4 memory modules only or with a mixed memory configuration of DDR4 modules and Intel® Optane™ DC Persistent Memory Modules (DC PMM). The Cisco UCS servers connect through Cisco switching products to the Pure Storage® FlashArray//X.

This document details the required configuration steps for SAP HANA TDI deployments whether in SAP HANA Scale-Up or Scale-Out configuration running on either Red Hat Enterprise Linux for SAP Solutions or SUSE Linux Enterprise Server for SAP Applications.

Industry trends indicate a vast data center transformation toward shared infrastructure, multi-tenant workloads, and cloud computing. Business agility requires application agility, so IT teams must provision applications quickly and resources must scale up (and out) as needed.

Cisco and Pure Storage jointly developed FlashStack, which uses best-in-class storage, server, and network components to serve as the foundation for a variety of workloads, enabling efficient architectural designs that can be quickly and confidently deployed. FlashStack converged infrastructure provides the advantage of having the compute, storage, and network stack integrated with the programmability of Cisco UCS and the on-demand growth and expandability of Evergreen storage from Pure Storage. Users experience appliance-level simplicity with cloud-like efficiencies and economics while maintaining their SAP HANA TDI-based re-deployment/re-use options as their landscape evolves.

SAP HANA is SAP SE’s implementation of in-memory database technology. The SAP HANA database combines transactional and analytical SAP workloads and thereby takes advantage of low-cost main memory (RAM), the data-processing capabilities of multicore processors, and faster data access. Cisco UCS servers equipped with second-generation Intel® Xeon® Scalable processors support mixed Intel® Optane™ DC PM and DDR4 memory configurations, which not only significantly increase the maximum supported memory size but also reduce the SAP HANA startup time.

The Pure Storage FlashArray//X provides out-of-the-box file sharing capabilities without compromise, thus enabling distributed SAP HANA Scale-Out deployments. It enables organizations to consolidate their SAP landscape and run SAP application servers as well as multiple SAP HANA databases hosted on the same infrastructure.

The target audience for this document includes, but is not limited to, field consultants, professional services, IT managers, partner engineers, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

This deployment guide provides step-by-step configuration and implementation guidelines for the FlashStack data center solution for SAP HANA TDI and showcases the scalability, manageability, and simplicity of the FlashStack converged infrastructure solution when deploying mission-critical SAP HANA applications.

The previous FlashStack reference architecture has been updated with the latest Cisco and Pure Storage hardware and software components:

● Support for the Cisco UCS 4.1(1) unified software release.

● Cisco UCS B-Series M5 Blade Servers with second-generation Intel® Xeon® Scalable processors and Cisco 1400 Series Virtual Interface Cards (VICs). The same applies to UCSM-managed Cisco UCS C220, C240, and C480 M5 Rack Servers.

● Validation with Intel® Optane™ Data Center persistent memory modules (DC PMM)

● Cisco UCS 6454 Fabric Interconnects and Cisco UCS 2408 Fabric Extender

● Validation with Nexus® 9300-FX Switches

● Pure Storage FlashArray//X R3 with DirectFlash Modules

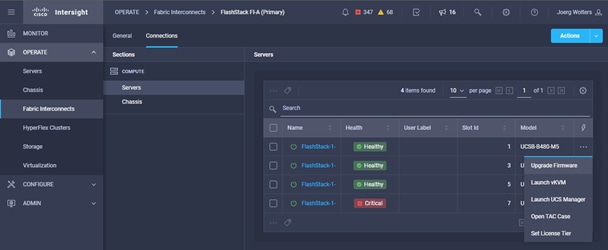

● Cisco Intersight Management and Monitoring

Software versions used in this validation reflect the current version at the time of the publication. Review and implement the Cisco suggested release mentioned on the corresponding Cisco UCS Hardware and Software compatibility list at the time of actual implementation.

The FlashStack platform is a flexible and highly modular converged infrastructure solution. It delivers pre-validated storage, networking, and server technologies and scales easily as requirements and demand change. FlashStack is a defined set of hardware and software that serves as an integrated foundation for both virtualized and non-virtualized workloads. Cisco and Pure Storage carefully validated and verified the FlashStack architecture and its many use cases while creating a portfolio of detailed documentation, information, and references to assist customers in transforming their data centers to this shared infrastructure model.

This portfolio includes, but is not limited to, the following items:

● Best practice architectural design

● Implementation and deployment guidelines

● SAP application sizing recommendations

All components are connected and configured according to best practices of both Cisco and Pure Storage and provide the ideal platform to run a variety of enterprise workloads with confidence. FlashStack can scale up for greater performance and capacity (adding compute, network, or storage resources individually as required), or it can scale out for environments that require multiple consistent deployments.

The validated reference architecture follows the FlashStack for SAP HANA TDI design guide and uses the Pure Storage FlashArray//X, Cisco Nexus 9300 Series and Cisco MDS 9100 Series switches as switching elements, and Cisco 6400 Series Fabric Interconnects for system management. Each of the Cisco and Pure Storage component families shown offers platform and resource options to scale the infrastructure up or down, while supporting the same features and functionality that are required under the configuration and connectivity best practices of FlashStack.

Validation tests confirm the functionality and resilience of the whole solution.

Solution Architecture

FlashStack for SAP HANA TDI provides an end-to-end architecture that demonstrates support for multiple SAP HANA workloads including high availability and secure multi-tenancy. The architecture builds around the Cisco UCS compute and Pure Storage FlashArray//X connected by Cisco MDS Multilayer SAN Switches and is further enabled by Cisco Nexus Switches.

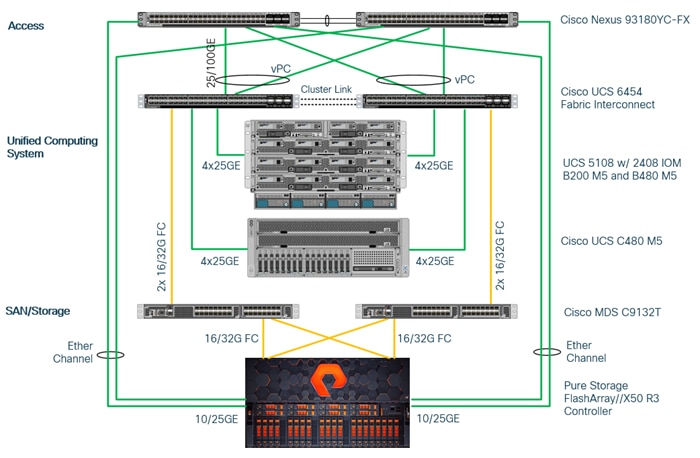

These components form a powerful and scalable design, built on the best practices of Cisco and Pure Storage to create an ideal platform for running a variety of enterprise application workloads. Figure 1 illustrates the topology of the FlashStack solution for SAP HANA TDI.

The Cisco Nexus Switches handle the Ethernet traffic and uplink to the customer network. The chassis with Cisco UCS 2408 FEX leverages 25GE connections to the Fabric Interconnects. The validated design uses 25GE connections from the Fabric Interconnect (FI) to the Cisco Nexus switches and 16 Gb Fibre Channel connections towards the MDS switches and the FlashArray//X.

The FlashStack environment scales easily when requirements and demand change. We recommend adding four additional connections between the Fabric Interconnects and the Cisco Nexus switches and defining a dedicated port channel that handles only SAP HANA backup network traffic.

Figure 1. High-level Physical Topology of the Validated FlashStack Solution

The information in this deployment guide follows a complete configuration of a customer environment. The installation steps below require various configuration variables that are specific to the customer environment and naming conventions, such as host names, IP addresses, VLAN schemes, or MAC addresses. Appendix 1: Configuration Variables lists the configuration variables used throughout this deployment guide. Once it is completed with the customer-specific site variables, it can serve as a reference during the deployment.

The following non-FlashStack system configuration needs to be in place before you start:

● Internal and external DNS records

● Firewall & Proxy configuration

● Active Directory Domain (required for an SAP HANA Scale-Out scenario only)

The lab infrastructure uses a management pod that includes a pair of Cisco Nexus 9000 Switches in standalone mode for the out-of-band management network and a pair of Cisco UCS C220 M5 Rack Servers running VMware ESXi. The hypervisor runs a vCenter Server Appliance, and the VMware hosts provide Active Directory Domain Services (AD DS), the Domain Name Service (DNS), and the Network Time Protocol (NTP) service. Installation and configuration of the management pod and its VMware hosts are not detailed further in this document, but they remain a prerequisite for completing the FlashStack installation successfully.

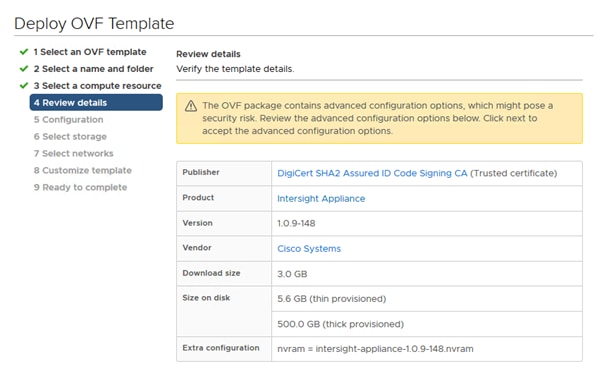

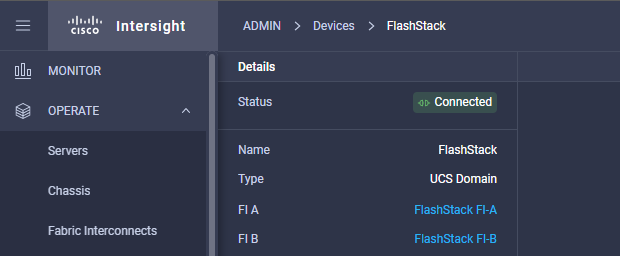

The management pod hosts the Cisco Intersight Assist virtual appliance, which connects the Pure Storage FlashArray//X to Cisco Intersight.

Cisco UCS Manager

FlashStack configurations with Cisco UCS 6454 Fabric Interconnects, Intel Cascade Lake processors, and Intel Optane DC PM modules require Cisco UCS Manager release 4.0(4) or later. Cisco UCS Manager provides unified, embedded management of all Cisco UCS software and hardware components.

The Cisco suggested release based on software quality, stability and longevity is release 4.1(1c). Beginning with Cisco UCS Manager Release 4.1(1), the KVM Console GUI is available as an HTML5-based application only and Java is no longer required to manage and install the environment.

Infrastructure Requirements

SAP defines hardware and software requirements to run SAP HANA TDI systems. This Cisco Validated Design uses guidelines provided by SAP and best practices provided by Cisco and Pure Storage.

SAP HANA 2.0 (TDI) supports servers equipped with the Intel Xeon processors E7-8880v3, E7-8890v3, E7-8880v4, E7-8890v4, and all Skylake CPUs with more than 8 cores. In addition, the Intel Xeon processor E5-26xx v4 is supported for SAP HANA Scale-Up deployments.

Appropriate SAP HANA memory sizing must be performed before considering an Intel Optane DC PM based configuration. More detailed information on the configuration and management is available in the whitepaper Cisco UCS for SAP HANA with Intel Optane DC PMM.

SAP HANA supports the following DDR4-only memory configurations:

● Homogeneous symmetric assembly of dual in-line memory modules (DIMMs); for example, DIMM size or speed should not be mixed

● Maximum use of all available memory channels

● SAP HANA 2.0 memory per socket ratio is up to 768 GB for SAP NetWeaver Business Warehouse (BW) and DataMart

● SAP HANA 2.0 memory per socket ratio is up to 1536 GB for SAP Business Suite on SAP HANA (SoH) on two or four-socket servers.

Mixed DC PM/DDR4 memory module configurations are supported with SAP HANA 2.0 SPS03 rev 35 and higher:

● Same size of all installed DDR4 memory modules

● Same size of all installed DC PM memory modules

● Homogeneous symmetric assembly of all memory modules; for example, each memory channel consists of DC PM and DDR4 memory modules.

● Maximum use of all available memory channels.
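The DDR4-only and mixed-memory rules above can be checked mechanically before ordering or assembling a server. The following is a minimal sketch, not a Cisco or SAP tool: the `MemoryChannel` model and `check_memory_config` function are hypothetical names, the per-socket DRAM limits are the 768 GB (BW) and 1536 GB (SoH) values listed above, and the number of channels per socket is passed in as an assumption (six for 2nd Generation Intel Xeon Scalable processors).

```python
from dataclasses import dataclass, field
from typing import List

# Per-socket DRAM limits for SAP HANA 2.0 from the list above, in GB.
MAX_DRAM_PER_SOCKET_GB = {"BW": 768, "SoH": 1536}


@dataclass
class MemoryChannel:
    """Module sizes (GB) installed in one memory channel; hypothetical model."""
    ddr4_gb: List[int]
    pmm_gb: List[int] = field(default_factory=list)  # empty for DDR4-only


def check_memory_config(sockets: int, channels_per_socket: int,
                        channels: List[MemoryChannel], workload: str) -> List[str]:
    """Return rule violations; an empty list means the layout passes."""
    problems = []
    # Maximum use of all available memory channels.
    if len(channels) != sockets * channels_per_socket:
        problems.append("not all memory channels are populated")
    # Same size for all DDR4 modules and for all DC PM modules.
    if len({size for ch in channels for size in ch.ddr4_gb}) > 1:
        problems.append("mixed DDR4 module sizes")
    if len({size for ch in channels for size in ch.pmm_gb}) > 1:
        problems.append("mixed DC PMM module sizes")
    # Symmetric assembly: every channel carries the same number of modules.
    if len({(len(ch.ddr4_gb), len(ch.pmm_gb)) for ch in channels}) > 1:
        problems.append("asymmetric module population across channels")
    # In a mixed configuration, every channel holds DDR4 and DC PM modules.
    if any(ch.pmm_gb for ch in channels) and not all(
            ch.ddr4_gb and ch.pmm_gb for ch in channels):
        problems.append("each channel needs both DDR4 and DC PMM modules")
    # DRAM capacity per socket for the selected workload.
    limit = MAX_DRAM_PER_SOCKET_GB[workload]
    if sum(sum(ch.ddr4_gb) for ch in channels) > sockets * limit:
        problems.append(f"DRAM exceeds {limit} GB per socket for {workload}")
    return problems


# Example: two sockets, six channels each, 2 x 64 GB DDR4 per channel (1.5 TB).
layout = [MemoryChannel([64, 64]) for _ in range(12)]
print(check_memory_config(2, 6, layout, "BW"))  # prints []
```

The function only reports problems and does not replace the certified configurations in Table 1 and Table 2.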

SAP HANA supports specific CPU and memory combinations only. Table 1 lists the certified Cisco UCS servers for SAP HANA with the supported memory and CPU configurations for different use cases. Mixed memory configurations with DRAM and DC PM modules are available with DC PMM to DRAM ratios from 1:1 to 4:1. Table 2 lists the maximum possible memory configurations using Intel Optane DC PMM.

Table 1. Supported DRAM Memory Configuration for FlashStack for SAP HANA TDI

| Cisco UCS Server | Intel Xeon CPU Sockets | Supported Memory | Scale-Up / Suite on HANA | Scale-Out |
|---|---|---|---|---|
| Cisco UCS B200 M5, Cisco UCS C220 M5, Cisco UCS C240 M5 | 2 | BW: 128 GB to 1.5 TB; SoH: 128 GB to 3 TB | Supported | Not supported |
| Cisco UCS B480 M5, Cisco UCS C480 M5 | 4 | BW: 256 GB to 3 TB; SoH: 256 GB to 6 TB | Supported | Supported (BW only) |

Table 2. Maximum DRAM/DC PM Memory Configuration for FlashStack for SAP HANA TDI

| Cisco UCS Server | Intel Xeon CPU Sockets | Max. Supported Memory (4:1) | Scale-Up / Suite on HANA | Scale-Out |
|---|---|---|---|---|
| Cisco UCS B200 M5, Cisco UCS C220 M5, Cisco UCS C240 M5 | 2 | BW: 1.5 TB DRAM + 6 TB DC PMM = 7.5 TB; SoH: 3 TB DRAM + 12 TB DC PMM = 15 TB | Supported | Not supported |
| Cisco UCS B480 M5, Cisco UCS C480 M5 | 4 | BW: 3 TB DRAM + 12 TB DC PMM = 15 TB; SoH: 6 TB DRAM + 24 TB DC PMM = 30 TB | Supported | Supported (BW only) |
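The Table 2 totals follow from a simple formula: DRAM = sockets × per-socket DRAM limit, DC PMM = DRAM × ratio, total = DRAM + DC PMM. The sketch below reproduces that arithmetic. The function name is hypothetical, the ratio range of 1:1 to 4:1 is the one stated in the text, and 1 TB is taken as 1024 GB, which matches the 768 GB × 2 = 1.5 TB figures in Table 1.

```python
from typing import Tuple

# Per-socket DRAM limits (GB) behind Table 1 and Table 2.
DRAM_PER_SOCKET_GB = {"BW": 768, "SoH": 1536}
MAX_PMM_TO_DRAM_RATIO = 4  # 4:1, the upper end of the supported range


def mixed_memory_tb(sockets: int, workload: str,
                    ratio: int = MAX_PMM_TO_DRAM_RATIO) -> Tuple[float, float, float]:
    """Return (DRAM, DC PMM, total) in TB for a given DC PMM:DRAM ratio."""
    if not 1 <= ratio <= MAX_PMM_TO_DRAM_RATIO:
        raise ValueError("DC PMM:DRAM ratio must be between 1:1 and 4:1")
    dram_tb = sockets * DRAM_PER_SOCKET_GB[workload] / 1024  # 1 TB = 1024 GB
    pmm_tb = dram_tb * ratio
    return dram_tb, pmm_tb, dram_tb + pmm_tb


for sockets in (2, 4):
    for workload in ("BW", "SoH"):
        print(sockets, workload, mixed_memory_tb(sockets, workload))
```

With the default 4:1 ratio, the loop reproduces the four Table 2 totals of 7.5 TB, 15 TB, 15 TB, and 30 TB.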

Network

SAP HANA data center deployments range from databases running on single hosts (Scale-Up) and distributed systems (Scale-Out) to complex Scale-Out systems with multiple hosts at a primary site and one or more secondary sites, operating SAP HANA with full fault tolerance and disaster recovery.

The different components of the SAP HANA platform communicate via different network channels. To apply the appropriate security and performance measures it is recommended to:

● Separate network communication into logical network zones.

● Enable redundancy for the internal and storage networks; redundancy is also important to meet high availability requirements.

● Separate the backup network from other SAP HANA network communication and configure an additional, exclusive port channel for backup network traffic.

Make sure to use the named VLANs to isolate traffic to the external LAN, including broadcast traffic.

Table 3. SAP HANA Network Requirements

| Zone | Network | Use Case | Scenario |
|---|---|---|---|
| Client Zone | Application Server Network | SAP Application Server to database communication | All |
| Client Zone | Client Network | User / Client Application to database communication | All |
| Client Zone | Data Source Network | Data import and external data integration | Optional |
| Internal Zone | Inter-Node Network | Node to node communication | Scale-Out |
| Internal Zone | System Replication Network | SAP HANA System Replication | SAP HANA System Replication and Disaster Tolerance |
| Storage Zone | NFS Shared Network | Shared SAP HANA binaries | Scale-Out |
| Storage Zone | Backup Network | Data Backup | Optional |
| Storage Zone | Storage Network | Node to Storage communication | All |
| Infrastructure Related | Administration Network | Infrastructure and SAP HANA administration | Optional |
| Infrastructure Related | Boot Network | Boot the Operating Systems via PXE/NFS or iSCSI | Optional |

The SAP HANA TDI network requirement whitepaper (http://scn.sap.com/docs/DOC-63221) describes more detailed network requirements and recommendations.
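Table 3 can also be expressed as data, which helps when planning VLANs per deployment type. The sketch below is hypothetical. `SAP_HANA_NETWORKS` and `required_networks` are illustrative names, and the `system_replication` flag is a simplification of the "SAP HANA System Replication and Disaster Tolerance" scenario. Optional networks are never returned, because whether to use them is a site decision.

```python
from typing import Dict, List, Tuple

# Table 3 as data: network name -> (zone, scenario in which it is needed).
SAP_HANA_NETWORKS: Dict[str, Tuple[str, str]] = {
    "Application Server Network": ("Client", "All"),
    "Client Network": ("Client", "All"),
    "Data Source Network": ("Client", "Optional"),
    "Inter-Node Network": ("Internal", "Scale-Out"),
    "System Replication Network": ("Internal", "System Replication"),
    "NFS Shared Network": ("Storage", "Scale-Out"),
    "Backup Network": ("Storage", "Optional"),
    "Storage Network": ("Storage", "All"),
    "Administration Network": ("Infrastructure", "Optional"),
    "Boot Network": ("Infrastructure", "Optional"),
}


def required_networks(scale_out: bool, system_replication: bool = False) -> List[str]:
    """Return the networks Table 3 marks as needed for the given deployment."""
    needed = []
    for name, (_zone, scenario) in SAP_HANA_NETWORKS.items():
        if (scenario == "All"
                or (scenario == "Scale-Out" and scale_out)
                or (scenario == "System Replication" and system_replication)):
            needed.append(name)
    return needed


# A Scale-Up system without replication needs only the three "All" networks.
print(required_networks(scale_out=False))
```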

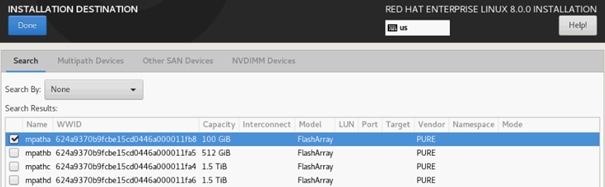

Storage

FlashStack provides consolidated access to both SAN storage and Network Attached Storage (NAS) over a unified fabric. For SAP HANA Scale-Out scenarios, the Pure Storage FlashArray//X provides out-of-the-box NFS capabilities to share the SAP HANA binaries and maps the Fibre Channel storage LUNs to the SAP HANA server hosts with a point-to-point connection. The SAP HANA Storage Connector (see SAP Note 1900823 - SAP HANA Storage Connector API) manages the remapping of the SAP HANA data and log volumes in the event of a failover to the standby host.

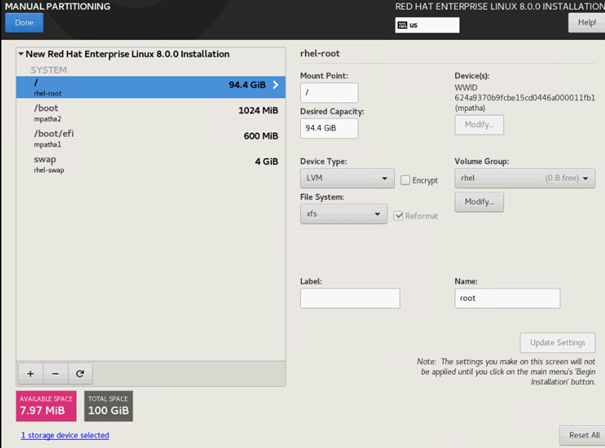

The recommended file system sizes (Table 4) for SAP HANA worker nodes depend on the total amount of physical server memory and the given SAP HANA scenario.

Table 4. File System Size Requirements

| Mount Point | Scale-Up | Scale-Out |
|---|---|---|
| / (incl. swap) | 62 GB | 62 GB |
| /usr/sap | 50 GB | 50 GB |
| /hana/shared | 1 x RAM or 1 TB (whichever is less) | 1 x RAM of a single worker node for every 4 worker nodes |
| /hana/data/<SID> | 1 x RAM | 1 x RAM |
| /hana/log/<SID> | ½ x RAM if server memory is ≤ 512 GB; 512 GB if server memory is > 512 GB | ½ x RAM if server memory is ≤ 512 GB; 512 GB if server memory is > 512 GB |

All relevant information about storage requirements is documented in this white paper: https://www.sap.com/documents/2015/03/74cdb554-5a7c-0010-82c7-eda71af511fa.html.
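The sizing rules in Table 4 reduce to a few formulas per mount point. The following sketch computes them for one worker node. The function name is hypothetical, and for Scale-Out it assumes that a partial group of four worker nodes still needs another 1 x RAM for /hana/shared (rounding up). That rounding is an interpretation, not a statement from the table.

```python
import math
from typing import Dict, Optional


def hana_fs_sizes_gb(ram_gb: int, scale_out_workers: Optional[int] = None) -> Dict[str, float]:
    """Recommended sizes in GB per Table 4; pass scale_out_workers for Scale-Out."""
    sizes = {
        "/ (incl. swap)": 62,
        "/usr/sap": 50,
        "/hana/data/<SID>": ram_gb,
        # 1/2 x RAM up to 512 GB of memory, otherwise a flat 512 GB.
        "/hana/log/<SID>": ram_gb / 2 if ram_gb <= 512 else 512,
    }
    if scale_out_workers:
        # 1 x RAM of one worker node per 4 worker nodes, rounded up (assumption).
        sizes["/hana/shared"] = ram_gb * math.ceil(scale_out_workers / 4)
    else:
        # Scale-Up: 1 x RAM or 1 TB, whichever is less.
        sizes["/hana/shared"] = min(ram_gb, 1024)
    return sizes


print(hana_fs_sizes_gb(3072))                         # Scale-Up, 3 TB RAM
print(hana_fs_sizes_gb(1536, scale_out_workers=5))    # Scale-Out, 5 workers
```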

Operating System

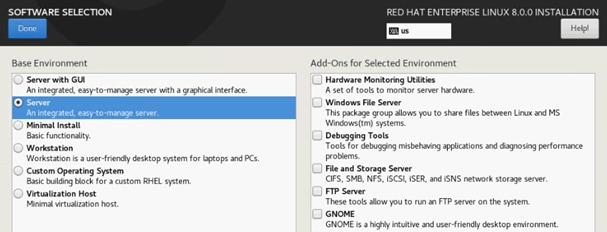

The following operating systems support SAP HANA with Intel Optane DC PM:

● SUSE Linux Enterprise Server for SAP Applications 12 SP4 or later and 15 or later

● Red Hat Enterprise Linux for SAP Solutions 7.6 or later and 8.0 or later

Review SAP note 2235581 - SAP HANA: Supported Operating Systems to evaluate compatibility information between Linux operating system release and SAP HANA platform releases.
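The minimum releases listed above can be encoded for an automated pre-installation check. In this sketch, the distribution keys and the `(major, minor)` tuple encoding are hypothetical: a SLES service pack is treated as the minor number, so SLES 12 SP4 becomes `(12, 4)`. Major releases that are not listed above return `False`, so SAP Note 2235581 remains the authoritative source.

```python
from typing import Dict, Tuple

# Minimum (major, minor/service pack) per major release, as listed above.
MIN_RELEASES: Dict[str, Dict[int, Tuple[int, int]]] = {
    "SLES for SAP": {12: (12, 4), 15: (15, 0)},
    "RHEL for SAP": {7: (7, 6), 8: (8, 0)},
}


def meets_optane_minimum(distro: str, version: Tuple[int, int]) -> bool:
    """True if version is at or above the listed minimum for its major release.

    Major releases not listed above return False; check SAP Note 2235581.
    """
    minimum = MIN_RELEASES.get(distro, {}).get(version[0])
    return minimum is not None and version >= minimum


print(meets_optane_minimum("SLES for SAP", (15, 1)))  # True, validated release
print(meets_optane_minimum("RHEL for SAP", (8, 1)))   # True, validated release
```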

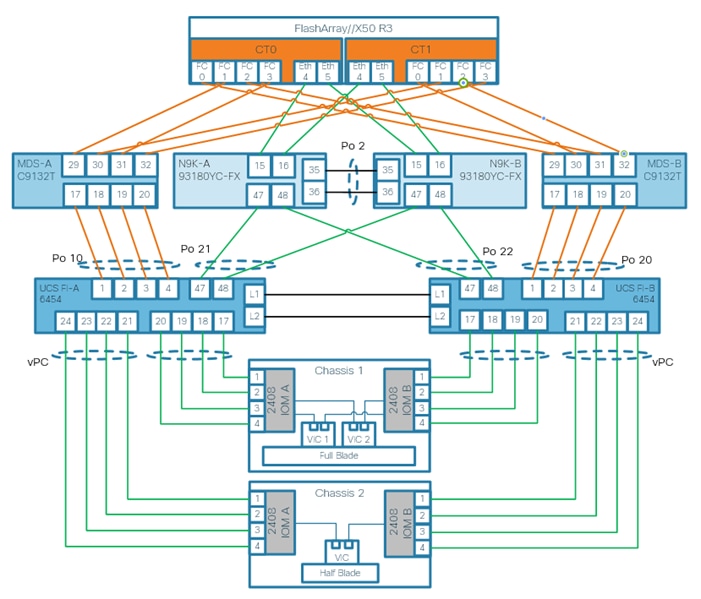

Figure 2 shows the cabling topology for IP network configuration of FlashStack for SAP HANA.

Figure 2. FlashStack Network Device Cabling Topology

The following tables list both local and remote device port locations for easier reference. They also capture the out-of-band management port connectivity into a pre-existing management infrastructure.

Table 5. Cisco Nexus-A 93180YC-FX Device Cabling

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Nexus 93180YC-FX-A | Eth 1/47 | 25GbE | Cisco UCS FI-A | Eth 1/47 |
| Nexus 93180YC-FX-A | Eth 1/48 | 25GbE | Cisco UCS FI-B | Eth 1/47 |
| Nexus 93180YC-FX-A | Eth 1/53 | 40GbE | Cisco N9K-Mgmt-A | Eth 1/49 |
| Nexus 93180YC-FX-A | Eth 1/54 | 40GbE | Cisco N9K-Mgmt-B | Eth 1/49 |
| Nexus 93180YC-FX-A | Eth 1/15 | 10/25GbE | Pure Storage FlashArray//X CT0 | Eth 4 |
| Nexus 93180YC-FX-A | Eth 1/16 | 10/25GbE | Pure Storage FlashArray//X CT1 | Eth 4 |
| Nexus 93180YC-FX-A | Eth 1/35 | 10GbE | Cisco N9K-B (peer-link) | Eth 1/35 |
| Nexus 93180YC-FX-A | Eth 1/36 | 10GbE | Cisco N9K-B (peer-link) | Eth 1/36 |
| Nexus 93180YC-FX-A | MGMT | 1GbE | Customer’s Management Switch | Eth 1/23 |

Table 6. Cisco Nexus-B 93180YC-FX Device Cabling

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Nexus 93180YC-FX-B | Eth 1/47 | 25GbE | Cisco UCS FI-A | Eth 1/48 |
| Nexus 93180YC-FX-B | Eth 1/48 | 25GbE | Cisco UCS FI-B | Eth 1/48 |
| Nexus 93180YC-FX-B | Eth 1/9 | 40GbE | Cisco N9K-Mgmt-A | Eth 1/50 |
| Nexus 93180YC-FX-B | Eth 1/11 | 40GbE | Cisco N9K-Mgmt-B | Eth 1/50 |
| Nexus 93180YC-FX-B | Eth 1/15 | 10/25GbE | Pure Storage FlashArray//X CT0 | Eth 5 |
| Nexus 93180YC-FX-B | Eth 1/16 | 10/25GbE | Pure Storage FlashArray//X CT1 | Eth 5 |
| Nexus 93180YC-FX-B | Eth 1/35 | 10GbE | Cisco N9K-A (peer-link) | Eth 1/35 |
| Nexus 93180YC-FX-B | Eth 1/36 | 10GbE | Cisco N9K-A (peer-link) | Eth 1/36 |
| Nexus 93180YC-FX-B | MGMT | 1GbE | Customer’s Management Switch | Any |

Use Twinax cables for the Ethernet connectivity from the FlashArray//X iSCSI ports to the Nexus 93180YC-FX switches. These links provide access to the SAP HANA shared file system.

Table 7. Cisco UCS Fabric Interconnect A Device Cabling

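| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco UCS 6454 Fabric Interconnect A | fc 1/1 | FC uplink | Cisco MDS-A 9148T | fc 1/17 |
| Cisco UCS 6454 Fabric Interconnect A | fc 1/2 | FC uplink | Cisco MDS-A 9148T | fc 1/18 |
| Cisco UCS 6454 Fabric Interconnect A | fc 1/3 | FC uplink | Cisco MDS-A 9148T | fc 1/19 |
| Cisco UCS 6454 Fabric Interconnect A | fc 1/4 | FC uplink | Cisco MDS-A 9148T | fc 1/20 |
| Cisco UCS 6454 Fabric Interconnect A | Eth 1/17 | 25GbE | Cisco UCS 5108 - IOM-A 2408 | 1/1 |
| Cisco UCS 6454 Fabric Interconnect A | Eth 1/18 | 25GbE | Cisco UCS 5108 - IOM-A 2408 | 1/2 |
| Cisco UCS 6454 Fabric Interconnect A | Eth 1/19 | 25GbE | Cisco UCS 5108 - IOM-A 2408 | 1/3 |
| Cisco UCS 6454 Fabric Interconnect A | Eth 1/20 | 25GbE | Cisco UCS 5108 - IOM-A 2408 | 1/4 |
| Cisco UCS 6454 Fabric Interconnect A | Eth 1/47 | 25GbE | Cisco Nexus 93180YC-FX-A | Eth 1/47 |
| Cisco UCS 6454 Fabric Interconnect A | Eth 1/48 | 25GbE | Cisco Nexus 93180YC-FX-B | Eth 1/47 |
| Cisco UCS 6454 Fabric Interconnect A | L1 | 1GbE | Cisco UCS FI-B | L1 |
| Cisco UCS 6454 Fabric Interconnect A | L2 | 1GbE | Cisco UCS FI-B | L2 |
| Cisco UCS 6454 Fabric Interconnect A | MGMT | 1GbE | Customer’s Management Switch | Any |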

Table 8. Cisco UCS Fabric Interconnect B Device Cabling

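| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco UCS 6454 Fabric Interconnect B | fc 1/1 | FC uplink | Cisco MDS-B 9148T | fc 1/17 |
| Cisco UCS 6454 Fabric Interconnect B | fc 1/2 | FC uplink | Cisco MDS-B 9148T | fc 1/18 |
| Cisco UCS 6454 Fabric Interconnect B | fc 1/3 | FC uplink | Cisco MDS-B 9148T | fc 1/19 |
| Cisco UCS 6454 Fabric Interconnect B | fc 1/4 | FC uplink | Cisco MDS-B 9148T | fc 1/20 |
| Cisco UCS 6454 Fabric Interconnect B | Eth 1/17 | 25GbE | Cisco UCS 5108 - IOM-B 2408 | 2/1 |
| Cisco UCS 6454 Fabric Interconnect B | Eth 1/18 | 25GbE | Cisco UCS 5108 - IOM-B 2408 | 2/2 |
| Cisco UCS 6454 Fabric Interconnect B | Eth 1/19 | 25GbE | Cisco UCS 5108 - IOM-B 2408 | 2/3 |
| Cisco UCS 6454 Fabric Interconnect B | Eth 1/20 | 25GbE | Cisco UCS 5108 - IOM-B 2408 | 2/4 |
| Cisco UCS 6454 Fabric Interconnect B | Eth 1/47 | 25GbE | Cisco Nexus 93180YC-FX-A | Eth 1/48 |
| Cisco UCS 6454 Fabric Interconnect B | Eth 1/48 | 25GbE | Cisco Nexus 93180YC-FX-B | Eth 1/48 |
| Cisco UCS 6454 Fabric Interconnect B | L1 | 1GbE | Cisco UCS FI-A | L1 |
| Cisco UCS 6454 Fabric Interconnect B | L2 | 1GbE | Cisco UCS FI-A | L2 |
| Cisco UCS 6454 Fabric Interconnect B | MGMT | 1GbE | Customer’s Management Switch | Any |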

Table 9. Cisco MDS-A 9148T Device Cabling

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| MDS-A 9148T | fc 1/17 | 32Gb FC | Cisco UCS FI-A | fc 1/1 |
| MDS-A 9148T | fc 1/18 | 32Gb FC | Cisco UCS FI-A | fc 1/2 |
| MDS-A 9148T | fc 1/19 | 32Gb FC | Cisco UCS FI-A | fc 1/3 |
| MDS-A 9148T | fc 1/20 | 32Gb FC | Cisco UCS FI-A | fc 1/4 |
| MDS-A 9148T | fc 1/29 | 16/32Gb FC | Pure Storage FlashArray//X CT0 | CT0.FC0 |
| MDS-A 9148T | fc 1/30 | 16/32Gb FC | Pure Storage FlashArray//X CT1 | CT1.FC0 |
| MDS-A 9148T | fc 1/31 | 16/32Gb FC | Pure Storage FlashArray//X CT0 | CT0.FC2 |
| MDS-A 9148T | fc 1/32 | 16/32Gb FC | Pure Storage FlashArray//X CT1 | CT1.FC2 |
| MDS-A 9148T | MGMT | 1GbE | Customer’s Management Switch | Any |

Table 10. Cisco MDS-B 9148T Device Cabling

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| MDS-B 9148T | fc 1/17 | 32Gb FC | Cisco UCS FI-B | fc 1/1 |
| MDS-B 9148T | fc 1/18 | 32Gb FC | Cisco UCS FI-B | fc 1/2 |
| MDS-B 9148T | fc 1/19 | 32Gb FC | Cisco UCS FI-B | fc 1/3 |
| MDS-B 9148T | fc 1/20 | 32Gb FC | Cisco UCS FI-B | fc 1/4 |
| MDS-B 9148T | fc 1/29 | 16/32Gb FC | Pure Storage FlashArray//X CT0 | CT0.FC1 |
| MDS-B 9148T | fc 1/30 | 16/32Gb FC | Pure Storage FlashArray//X CT1 | CT1.FC1 |
| MDS-B 9148T | fc 1/31 | 16/32Gb FC | Pure Storage FlashArray//X CT0 | CT0.FC3 |
| MDS-B 9148T | fc 1/32 | 16/32Gb FC | Pure Storage FlashArray//X CT1 | CT1.FC3 |
| MDS-B 9148T | MGMT | 1GbE | Customer’s Management Switch | Any |

Table 11. Pure Storage FlashArray//X 50 R3 Device Cabling

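| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| FlashArray//X 50 R3 | CT0.FC0 | 16/32Gb FC | Cisco MDS-A 9148T | fc 1/29 |
| FlashArray//X 50 R3 | CT1.FC0 | 16/32Gb FC | Cisco MDS-A 9148T | fc 1/30 |
| FlashArray//X 50 R3 | CT0.FC2 | 16/32Gb FC | Cisco MDS-A 9148T | fc 1/31 |
| FlashArray//X 50 R3 | CT1.FC2 | 16/32Gb FC | Cisco MDS-A 9148T | fc 1/32 |
| FlashArray//X 50 R3 | CT0.FC1 | 16/32Gb FC | Cisco MDS-B 9148T | fc 1/29 |
| FlashArray//X 50 R3 | CT1.FC1 | 16/32Gb FC | Cisco MDS-B 9148T | fc 1/30 |
| FlashArray//X 50 R3 | CT0.FC3 | 16/32Gb FC | Cisco MDS-B 9148T | fc 1/31 |
| FlashArray//X 50 R3 | CT1.FC3 | 16/32Gb FC | Cisco MDS-B 9148T | fc 1/32 |
| FlashArray//X 50 R3 | CT0 eth4 | 10/25GbE | Nexus 93180YC-FX-A | Eth 1/15 |
| FlashArray//X 50 R3 | CT0 eth5 | 10/25GbE | Nexus 93180YC-FX-B | Eth 1/15 |
| FlashArray//X 50 R3 | CT1 eth4 | 10/25GbE | Nexus 93180YC-FX-A | Eth 1/16 |
| FlashArray//X 50 R3 | CT1 eth5 | 10/25GbE | Nexus 93180YC-FX-B | Eth 1/16 |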
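Because every link appears in two tables, one from each end, a quick consistency check can catch transcription errors before cabling starts. The sketch below encodes the Fibre Channel links listed in Table 11 from both ends, using short, hypothetical device labels, and reports any link whose remote end does not point back. A remote device without its own table, such as the customer management switch, is skipped.

```python
from typing import Dict, List, Tuple

Row = Tuple[str, str, str]  # (local port, remote device, remote port)

# FC links as listed in Table 11, expressed from both ends (short device labels).
FC_CABLING: Dict[str, List[Row]] = {
    "FlashArray": [
        ("CT0.FC0", "MDS-A", "fc1/29"), ("CT1.FC0", "MDS-A", "fc1/30"),
        ("CT0.FC2", "MDS-A", "fc1/31"), ("CT1.FC2", "MDS-A", "fc1/32"),
        ("CT0.FC1", "MDS-B", "fc1/29"), ("CT1.FC1", "MDS-B", "fc1/30"),
        ("CT0.FC3", "MDS-B", "fc1/31"), ("CT1.FC3", "MDS-B", "fc1/32"),
    ],
    "MDS-A": [
        ("fc1/29", "FlashArray", "CT0.FC0"), ("fc1/30", "FlashArray", "CT1.FC0"),
        ("fc1/31", "FlashArray", "CT0.FC2"), ("fc1/32", "FlashArray", "CT1.FC2"),
    ],
    "MDS-B": [
        ("fc1/29", "FlashArray", "CT0.FC1"), ("fc1/30", "FlashArray", "CT1.FC1"),
        ("fc1/31", "FlashArray", "CT0.FC3"), ("fc1/32", "FlashArray", "CT1.FC3"),
    ],
}


def check_cabling(tables: Dict[str, List[Row]]) -> List[str]:
    """Report links whose remote end, if it has a table, does not point back."""
    links = {}
    for device, rows in tables.items():
        for local_port, remote_dev, remote_port in rows:
            links[(device, local_port)] = (remote_dev, remote_port)
    errors = []
    for local, remote in links.items():
        if remote[0] in tables and links.get(remote) != local:
            errors.append(f"{local} -> {remote}, but {remote} -> {links.get(remote)}")
    return errors


print(check_cabling(FC_CABLING))  # []
```

The same structure extends to the Ethernet links in Tables 5 through 8 by adding one entry per device.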

Solution Components and Software Revisions

The following tables list the components and software revisions validated for the FlashStack for SAP HANA TDI deployment.

Table 12. Inventory and Bill of Material of the Validation Setup

| Vendor | Name | Version/Model | Description | Quantity |
|---|---|---|---|---|
| Cisco | Cisco Nexus 93180YC Switch | N9K-C93180YC-FX | Cisco Nexus 9300 Series Switches | 2 |
| Cisco | Cisco MDS 9148T 32G Multilayer Switch | DS-C9148T-K9 | Cisco MDS 9100 Series Multilayer Fabric Switches | 2 |
| Cisco | Cisco UCS 6454 Fabric Interconnect | UCS-FI-6454 | Cisco 6400 Series Fabric Interconnects | 2 |
| Cisco | Cisco UCS Fabric Extender | UCS-IOM-2408 | Cisco UCS 2408 I/O Module (8x 25G external, 32x 10G internal) | 4 |
| Cisco | Cisco UCS B480 M5 Blade Servers | UCSB-B480-M5 | Cisco UCS B-Series Blade Servers | 4 |
| Cisco | Cisco UCS VIC 1440 mLOM | UCSB-MLOM-40G-04 | Cisco UCS VIC 1400 Series adapters for blade servers | 4 |
| Cisco | Cisco UCS VIC 1480 | UCSB-VIC-M84-4P | Cisco UCS VIC 1400 Series adapters for blade servers | 4 |
| Pure Storage | FlashArray//X | FlashArray//X50 R3 | Pure Storage FlashArray//X | 1 |

Table 13. Hardware and Software Component Versions of the Validated Setup

| Vendor | Product | Version |
|---|---|---|
| Cisco | Cisco UCSM | 4.1(1c) |
| Cisco | Cisco UCS 6454 | 7.0(3)N2(4.11b) |
| Cisco | Cisco UCS B-Series M5 Servers | 4.1(1c) |
| Cisco | Cisco Nexus 93180YC Switches | 9.2(1) |
| Cisco | Cisco MDS 9148T 32G | 8.3(1) |
| Pure Storage | Purity//FA | 5.3.8 |
| SUSE | SUSE Linux Enterprise Server for SAP Applications | 15 SP1 |
| Red Hat | Red Hat Enterprise Linux for SAP Solutions | 8.1 |

Performance

The solution is designed to meet the SAP HANA TDI performance requirements defined by SAP SE. The Cisco UCS Fabric Interconnects handle all data traffic between SAP HANA nodes. Each SAP HANA server is equipped with a Cisco Virtual Interface Card that provides a minimum of 4 x 10GbE, and the storage network provides dedicated bandwidth between the SAP HANA servers and the FlashArray//X. The SAP HANA node-to-node network has 10 Gbps of dedicated, non-blocking bandwidth.

All FlashStack components can operate end-to-end with 32Gb Fibre Channel, but 16Gb Fibre Channel connectivity already meets the SAP HANA TDI performance requirements.
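The aggregate link bandwidth per fabric follows from the link counts in the Fabric Interconnect cabling tables: link count × line rate for one fabric, doubled for fabrics A and B. The sketch below does that arithmetic. It assumes nominal line rates (it ignores encoding and protocol overhead), 25GbE for the FI-to-IOM links as described in the solution architecture, and hypothetical names throughout.

```python
from typing import Dict, Tuple

# Links per fabric (one Fabric Interconnect each) from the FI cabling tables;
# speeds are nominal line rates in Gb/s.
LINKS_PER_FABRIC: Dict[str, Tuple[int, int]] = {
    "FI to MDS, 16G FC": (4, 16),
    "FI to MDS, 32G FC": (4, 32),
    "FI to IOM 2408, 25GbE": (4, 25),
    "FI to Nexus, 25GbE": (2, 25),
}


def aggregate_gbps(links: int, speed_gbps: int, fabrics: int = 2) -> Tuple[int, int]:
    """Return (per-fabric, all-fabrics) nominal bandwidth in Gb/s."""
    per_fabric = links * speed_gbps
    return per_fabric, per_fabric * fabrics


for name, (links, speed) in LINKS_PER_FABRIC.items():
    per_fabric, total = aggregate_gbps(links, speed)
    print(f"{name}: {per_fabric} Gb/s per fabric, {total} Gb/s across A and B")
```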

Cisco Hardware Configuration

Some hardware components like the Cisco UCS Fabric Interconnects or Cisco UCS B-Series blade servers are configured similarly. This document details steps for provisioning multiple Cisco UCS hosts which are identified sequentially, like:

HANA-Server0{1 | 2}.

Angle brackets (<>) indicate a character string that the user needs to enter like a variable pertinent to the customer environment or a password.
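When you prepare the command sequences in advance, you can substitute the `<var_...>` placeholders from a filled-in copy of Appendix 1 instead of typing them by hand. This is a minimal sketch with hypothetical names and values. It only matches tokens that start with `var_`, so prompt text such as `<1024-2048>` and path tokens such as `<SID>` pass through unchanged, and it fails if a value is missing.

```python
import re
from typing import Mapping

# Only <var_...> tokens are treated as placeholders, so prompt text such as
# "<1024-2048>" and path tokens such as "<SID>" pass through unchanged.
PLACEHOLDER = re.compile(r"<(var_[A-Za-z0-9_]+)>")


def fill_placeholders(text: str, values: Mapping[str, str]) -> str:
    """Substitute <var_...> placeholders; raise KeyError if any value is missing."""
    missing = sorted({name for name in PLACEHOLDER.findall(text) if name not in values})
    if missing:
        raise KeyError("no value for: " + ", ".join(missing))
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], text)


# Hypothetical site values; real ones come from Appendix 1.
site = {"var_nexus_A_hostname": "N9K-A", "var_oob_vlan_gw": "192.168.10.1"}
print(fill_placeholders("Enter the switch name : <var_nexus_A_hostname>", site))
```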

Cisco Nexus 9000 Series Switch Network Configuration

This section provides a detailed procedure to configure the Cisco Nexus 9000 Switches that are part of the FlashStack environment. The configuration steps are based on the cabling plan above. If systems are connected to different ports, configure the switches accordingly, following the guidelines described in this section.

Ensure the physical hardware installation and cabling are complete before you continue. First, create a local management connection through a console terminal to perform the initial configuration and to configure a switch IP address. Then configure the required features and virtual local area networks (VLANs) according to the device cabling documentation.

Connect to the serial or console port of the Nexus switch. After the initial boot, the NX-OS setup starts automatically and attempts to enter Power On Auto Provisioning (POAP).

Cisco Nexus Initial Configuration

To perform the initial Cisco Nexus switch configuration, follow these steps. Keep all settings on default if not listed otherwise.

1. Connect to the Nexus A console port and press the spacebar:

● Would you like to enter the basic configuration dialog (yes/no): yes

● Enter the switch name : <var_nexus_A_hostname>

● Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]:

● Mgmt0 IPv4 address : <var_nexus_A_mgmt0_ip>

● Out of Band Mgmt0 IPv4 netmask : <var_oob_vlan_net>

● Configure the default gateway? (yes/no) [y]:

● IPv4 address of the default gateway : <var_oob_vlan_gw>

● Number of rsa key bits <1024-2048> [2048]: 1024

● Configure the ntp server? (yes/no) [n]: y

● NTP server IPv4 address : <var_global_ntp_server_ip>

2. The configuration wizard lists a configuration summary at the end. Review the summary and save it.

3. Connect to the Nexus B console port and press the spacebar:

● Would you like to enter the basic configuration dialog (yes/no): yes

● Enter the switch name : <var_nexus_B_hostname>

● Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]:

● Mgmt0 IPv4 address : <var_nexus_B_mgmt0_ip>

● Out of Band Mgmt0 IPv4 netmask : <var_oob_vlan_net>

● Configure the default gateway? (yes/no) [y]:

● IPv4 address of the default gateway : <var_oob_vlan_gw>

● Number of rsa key bits <1024-2048> [2048]: 1024

● Configure the ntp server? (yes/no) [n]: y

● NTP server IPv4 address : <var_global_ntp_server_ip>

4. The configuration wizard lists a configuration summary at the end. Review the summary and save it.

Enable Cisco Nexus 9000 Series Switch Features and Spanning-Tree

To enable the required features and set the default spanning tree behavior on both Nexus switches, run the following commands:

1. Enter configuration mode:

N9K-A|B# config terminal

2. Enable features:

N9K-A|B(config)# feature udld

N9K-A|B(config)# feature lacp

N9K-A|B(config)# feature vpc

N9K-A|B(config)# feature interface-vlan

N9K-A|B(config)# feature lldp

3. Set Spanning-Tree:

N9K-A|B(config)# spanning-tree port type network default

N9K-A|B(config)# spanning-tree port type edge bpduguard default

N9K-A|B(config)# spanning-tree port type edge bpdufilter default

4. Persist the configuration:

N9K-A|B(config)# copy run start

Create VLANs for SAP HANA Traffic

Separate the SAP HANA network traffic into multiple VLANs. Create the VLANs in Nexus configuration mode according to the customer and SAP HANA scenario requirements:

1. Run the following commands on both Nexus switches to create the management VLAN:

N9K-A|B(config)# vlan <var_oob_vlan_id>

N9K-A|B(config-vlan)# name HANA-Node-Mgmt

N9K-A|B(config-vlan)# exit

2. Create additional VLANs using the same command syntax as shown above:

vlan <var_client_vlan_id> name HANA-Client

vlan <var_appserver_vlan_id> name HANA-AppServer

vlan <var_datasource_vlan_id> name HANA-DataSource

vlan <var_backup_vlan_id> name HANA-Node-Backup

3. Other SAP HANA scenarios, like Scale-Out or SAP HANA System Replication, can require additional VLANs:

vlan <var_nfs-shared_vlan_id> name HANA-NFSshared

vlan <var_internal_vlan_id> name HANA-Internode

vlan <var_replication_vlan_id> name HANA-System-Replication


Define the same VLAN ID for HANA-NFSshared and for the management services network that provides Active Directory Services and DNS within the SAP landscape.
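The VLAN steps above must be applied identically on both Nexus switches. The following Python sketch renders the `vlan`/`name`/`exit` stanzas from step 1 for every entry in a VLAN plan so the same text can be pasted into both switches. The function `render_vlans` and the VLAN IDs are hypothetical placeholders for the `<var_..._vlan_id>` values; the sketch only checks the 802.1Q range 1-4094 and does not check NX-OS reserved VLANs.

```python
from typing import Dict, List

# Hypothetical VLAN plan; replace the IDs with your <var_..._vlan_id> values.
HANA_VLANS: Dict[str, int] = {
    "HANA-Node-Mgmt": 76,
    "HANA-Client": 222,
    "HANA-AppServer": 223,
    "HANA-DataSource": 224,
    "HANA-Node-Backup": 225,
}


def render_vlans(plan: Dict[str, int]) -> List[str]:
    """Return NX-OS configuration lines that create and name each VLAN."""
    lines: List[str] = []
    for name, vlan_id in plan.items():
        # 802.1Q VLAN IDs are 1-4094; platform-reserved ranges are not checked.
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"{name}: VLAN ID {vlan_id} is outside 1-4094")
        lines += [f"vlan {vlan_id}", f"  name {name}", "  exit"]
    return lines


if __name__ == "__main__":
    # Paste the output into configuration mode on N9K-A and N9K-B.
    print("\n".join(render_vlans(HANA_VLANS)))
```

Generating both switch configurations from one plan avoids the typical mistake of a VLAN existing on only one vPC peer.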

Virtual Port-Channel Domain Configuration

To configure the virtual port channel domain, follow these steps:

1. Run the following command in Nexus configuration mode on the first switch to create the vPC domain:

N9K-A(config)# vpc domain <var_nexus_vpc_domain_id>

2. Define a lower role priority value to make this Nexus switch the primary vPC peer:

N9K-A(config-vpc-domain)# role priority 10

3. Use the management interfaces on the supervisors to establish a keepalive link:

N9K-A(config-vpc-domain)# peer-keepalive destination <var_nexus_B_mgmt0_ip> source <var_nexus_A_mgmt0_ip>

4. Enable the following features for this vPC domain:

N9K-A(config-vpc-domain)# peer-switch

N9K-A(config-vpc-domain)# delay restore 150

N9K-A(config-vpc-domain)# peer-gateway

N9K-A(config-vpc-domain)# auto-recovery

5. Complete the vPC configuration on the other Nexus switch:

N9K-B(config)# vpc domain <var_nexus_vpc_domain_id>

6. Define a higher role priority value than on the first Nexus switch to make this Nexus switch the secondary vPC peer:

N9K-B(config-vpc-domain)# role priority 20

7. Use the management interfaces on the supervisors to establish a keepalive link:

N9K-B(config-vpc-domain)# peer-keepalive destination <var_nexus_A_mgmt0_ip> source <var_nexus_B_mgmt0_ip>

8. Enable the following features for this vPC domain:

N9K-B(config-vpc-domain)# peer-switch

N9K-B(config-vpc-domain)# delay restore 150

N9K-B(config-vpc-domain)# peer-gateway

N9K-B(config-vpc-domain)# auto-recovery
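The two vPC domain configurations above differ only in the role priority and in the mirrored keepalive addresses. The following Python sketch renders both from one set of inputs so the destination and source of `peer-keepalive` cannot be swapped by mistake. The function names, the domain ID 10, and the RFC 5737 documentation addresses are hypothetical stand-ins for `<var_nexus_vpc_domain_id>` and the mgmt0 IP variables.

```python
from typing import List, Tuple


def vpc_domain(domain_id: int, priority: int, local_ip: str, peer_ip: str) -> List[str]:
    """Render the vPC domain commands used in this section for one switch."""
    return [
        f"vpc domain {domain_id}",
        f"  role priority {priority}",
        # The keepalive runs over mgmt0: destination is the peer, source is local.
        f"  peer-keepalive destination {peer_ip} source {local_ip}",
        "  peer-switch",
        "  delay restore 150",
        "  peer-gateway",
        "  auto-recovery",
    ]


def vpc_pair(domain_id: int, a_ip: str, b_ip: str) -> Tuple[List[str], List[str]]:
    """Return (N9K-A, N9K-B) configs; the lower priority makes N9K-A primary."""
    cfg_a = vpc_domain(domain_id, 10, a_ip, b_ip)
    cfg_b = vpc_domain(domain_id, 20, b_ip, a_ip)
    return cfg_a, cfg_b


if __name__ == "__main__":
    # Hypothetical values: domain 10 and documentation-range mgmt0 addresses.
    a, b = vpc_pair(10, "192.0.2.11", "192.0.2.12")
    print("\n".join(a))
    print("\n".join(b))
```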

Network Interface Configuration for the vPC Peer Links

To configure the network interface for the vPC peer links, follow these steps:

1. Define a port description for the vPC peer interfaces:

N9K-A(config)# interface eth1/35

N9K-A(config-if)# description vPC peer <var_nexus_B_hostname>:1/35

N9K-A(config-if)# interface eth1/36

N9K-A(config-if)# description vPC peer <var_nexus_B_hostname>:1/36

2. Define a port description for the vPC peer links on the secondary Nexus:

N9K-B(config)# interface eth1/35

N9K-B(config-if)# description vPC peer <var_nexus_A_hostname>:1/35

N9K-B(config-if)# interface eth1/36

N9K-B(config-if)# description vPC peer <var_nexus_A_hostname>:1/36


Perform the following configuration steps on both Nexus switches.

3. Apply a port channel to both vPC peer links and bring the interfaces up:

N9K-A|B(config)# interface eth1/35-36

N9K-A|B(config-if-range)# channel-group 2 mode active

N9K-A|B(config-if-range)# no shutdown

4. Define a description for the port channel connecting to the other Nexus:

N9K-A|B(config)# interface Po2

N9K-A|B(config-if)# description vPC peer-link

5. Make the port channel a switchport and configure a trunk to allow the HANA VLANs:

N9K-A|B(config-if)# switchport

N9K-A|B(config-if)# switchport mode trunk

N9K-A|B(config-if)# switchport trunk allowed vlan <var_oob_vlan_id>,<var_client_vlan_id>,<var_appserver_vlan_id>,<var_datasource_vlan_id>,<var_backup_vlan_id>,<var_nfs-shared_vlan_id>,<var_internal_vlan_id>,<var_replication_vlan_id>

6. Make the port channel the vPC peer link and bring it up:

N9K-A|B(config-if)# spanning-tree port type network

N9K-A|B(config-if)# vpc peer-link

N9K-A|B(config-if)# no shutdown

Configure vPC with the Cisco UCS Fabric Interconnects

To configure vPC with the Cisco UCS FIs, follow these steps:

1. The different SAP HANA network zones will use the vPC for the admin, client, and internal network traffic. Verify the neighbors with the following command:

N9K-A|B# show cdp neighbors

2. Define a port description for the interfaces connecting to the fabric interconnect:

N9K-A(config)# interface eth1/47

N9K-A(config-if)# description <var_ucs_clustername>-A:1/47

N9K-A(config)# interface eth1/48

N9K-A(config-if)# description <var_ucs_clustername>-B:1/47

N9K-B(config)# interface eth1/47

N9K-B(config-if)# description <var_ucs_clustername>-A:1/48

N9K-B(config)# interface eth1/48

N9K-B(config-if)# description <var_ucs_clustername>-B:1/48

3. Apply the interfaces to a port channel and bring them up:

N9K-A|B(config)# interface eth1/47

N9K-A|B(config-if)# channel-group 21 mode active

N9K-A|B(config-if)# no shutdown

N9K-A|B(config)# interface eth1/48

N9K-A|B(config-if)# channel-group 22 mode active

N9K-A|B(config-if)# no shutdown

4. Define a port channel description for port channel 21 and configure it:

N9K-A|B(config)# interface Po21

N9K-A|B(config-if)# description <var_ucs_clustername>-A

5. Make the port channel a switchport and configure a trunk to allow the HANA VLANs:

N9K-A|B(config-if)# switchport

N9K-A|B(config-if)# switchport mode trunk

N9K-A|B(config-if)# switchport trunk allowed vlan <var_oob_vlan_id>,<var_client_vlan_id>,<var_appserver_vlan_id>,<var_datasource_vlan_id>,<var_internal_vlan_id>,<var_replication_vlan_id>

6. Configure the port channel as a spanning-tree edge trunk port:

N9K-A|B(config-if)# spanning-tree port type edge trunk

7. Set MTU to support jumbo frames:

N9K-A|B(config-if)# mtu 9216

8. Make this a vPC port channel and bring it up:

N9K-A|B(config-if)# vpc 21

N9K-A|B(config-if)# no shutdown

9. Define a port channel description for port channel 22 and configure it:

N9K-A|B(config)# interface Po22

N9K-A|B(config-if)# description <var_ucs_clustername>-B

10. Make the port channel a switchport and configure a trunk to allow the HANA VLANs:

N9K-A|B(config-if)# switchport

N9K-A|B(config-if)# switchport mode trunk

N9K-A|B(config-if)# switchport trunk allowed vlan <var_oob_vlan_id>,<var_client_vlan_id>,<var_appserver_vlan_id>,<var_datasource_vlan_id>,<var_internal_vlan_id>,<var_replication_vlan_id>

11. Configure the port channel as a spanning-tree edge trunk port:

N9K-A|B(config-if)# spanning-tree port type edge trunk

12. Set MTU to support jumbo frames:

N9K-A|B(config-if)# mtu 9216

13. Make this a vPC port channel and bring it up:

N9K-A|B(config-if)# vpc 22

N9K-A|B(config-if)# no shutdown


(Optional) Configure additional vPCs for exclusive usage by the storage zone network, SAP HANA node backup network, or the NFS network if this is used for backup purposes.
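The peer link (Po2) carries every HANA VLAN, while the FI-facing port channels Po21 and Po22 omit the backup and NFS VLANs, which can move to dedicated vPCs as the note above describes. The following Python sketch builds both `switchport trunk allowed vlan` commands from one VLAN plan, as comma-separated lists without spaces, so the FI trunk list stays a subset of the peer-link list. The zone keys and VLAN IDs are hypothetical stand-ins for the `<var_..._vlan_id>` placeholders.

```python
from typing import Dict, Iterable

# Hypothetical VLAN IDs standing in for the <var_..._vlan_id> placeholders.
VLAN_PLAN: Dict[str, int] = {
    "oob": 76,
    "client": 222,
    "appserver": 223,
    "datasource": 224,
    "backup": 225,
    "nfs-shared": 177,
    "internal": 220,
    "replication": 221,
}

# Zones that this guide leaves off Po21/Po22 (they may get dedicated vPCs).
FI_EXCLUDED = {"backup", "nfs-shared"}


def allowed_vlan_cmd(vlan_ids: Iterable[int]) -> str:
    """Build a trunk command with a sorted, comma-separated list (no spaces)."""
    ids = sorted(set(vlan_ids))
    return "switchport trunk allowed vlan " + ",".join(str(v) for v in ids)


# Peer link: every VLAN in the plan.
peer_link_cmd = allowed_vlan_cmd(VLAN_PLAN.values())
# FI uplinks: every VLAN except the excluded zones.
fi_uplink_cmd = allowed_vlan_cmd(
    vid for zone, vid in VLAN_PLAN.items() if zone not in FI_EXCLUDED
)

if __name__ == "__main__":
    print("Po2:      ", peer_link_cmd)
    print("Po21/Po22:", fi_uplink_cmd)
```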

Configure Pure Storage FlashArray//X Connectivity

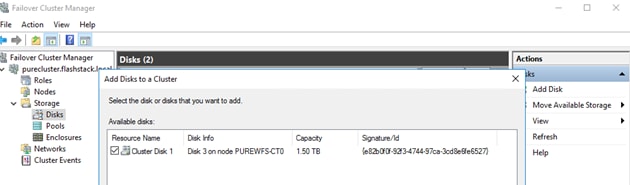

Purity//FA runs the platform-based Windows File Services (WFS) configuration, which enables NFS file system provisioning. WFS uses the iSCSI ports on the array controllers for southbound connectivity to the consumer nodes through the Nexus switches. The iSCSI ports act as uplink ports for the controller-hosted Windows Server 2016 VMs, which are configured as a failover cluster. Because the iSCSI ports on the array side do not support LACP, the corresponding switch ports are configured as access ports with spanning-tree port type edge.

To configure the switch ports that connect to the Pure Storage FlashArray//X iSCSI ports and provide IP connectivity to the NFS share for the SAP HANA Scale-Out scenario, follow these steps:

1. Define a port description for the interface connecting to the iSCSI port eth 2 on array controller CT0.

N9K-A(config)# interface eth1/15

N9K-A(config-if)# description FlashArray-CT0-iscsi-eth2

2. Configure the access port and assign the NFS network VLAN ID.

N9K-A(config-if)# switchport access vlan <var_nfs-shared_vlan_id>

N9K-A(config-if)# spanning-tree port type edge

N9K-A(config-if)# no shutdown

3. Define a port description for the interface connecting to the iSCSI port eth 2 on array controller CT1.

N9K-A(config)# interface eth1/16

N9K-A(config-if)# description FlashArray-CT1-iscsi-eth2

4. Configure the access port and assign the NFS network VLAN ID.

N9K-A(config-if)# switchport access vlan <var_nfs-shared_vlan_id>

N9K-A(config-if)# spanning-tree port type edge

N9K-A(config-if)# no shutdown

5. Optionally, connect eth1/17 and eth1/18 to FlashArray interface eth 4 (PCI Port 2).


Perform the same configuration on N9K-B, replacing iSCSI port eth 2 with eth 3 (and the optional port eth 4 with eth 5).

6. Persist the configuration on both Nexus devices.

N9K-A|B# copy run start
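The FlashArray access-port configuration above repeats the same four commands per link, with only the Nexus port, the array controller, and the array iSCSI port changing between N9K-A and N9K-B. The following Python sketch renders those stanzas for either switch. The function name `flasharray_ports` and the VLAN ID 177 are hypothetical, and the mapping of the optional ports (eth1/17 to CT0, eth1/18 to CT1) is an assumption extrapolated from the eth1/15 and eth1/16 pattern.

```python
from typing import List

# FlashArray iSCSI port per Nexus switch, as described above: N9K-A uses eth2
# (optional eth4), N9K-B uses eth3 (optional eth5).
ARRAY_PORTS = {"A": ("eth2", "eth4"), "B": ("eth3", "eth5")}


def flasharray_ports(switch: str, nfs_vlan: int, optional: bool = False) -> List[str]:
    """Render access-port configuration for the FlashArray WFS/NFS links."""
    base, extra = ARRAY_PORTS[switch]
    # (Nexus port, array controller, array port); the optional mapping of
    # eth1/17 -> CT0 and eth1/18 -> CT1 is an assumption.
    links = [("eth1/15", "CT0", base), ("eth1/16", "CT1", base)]
    if optional:
        links += [("eth1/17", "CT0", extra), ("eth1/18", "CT1", extra)]
    lines: List[str] = []
    for nexus_port, controller, array_port in links:
        lines += [
            f"interface {nexus_port}",
            f"  description FlashArray-{controller}-iscsi-{array_port}",
            f"  switchport access vlan {nfs_vlan}",
            "  spanning-tree port type edge",
            "  no shutdown",
        ]
    return lines


if __name__ == "__main__":
    # 177 is a hypothetical stand-in for <var_nfs-shared_vlan_id>.
    print("\n".join(flasharray_ports("A", 177)))
```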

Cisco MDS 9148T Switch Configuration

This section provides the configuration procedure for the Cisco MDS 9100 Series switches that are part of the FlashStack SAN environment. Figure 1 shows how the MDS switches connect to the Fabric Interconnects and the Pure Storage FlashArray//X, and Table 13 and Table 14 provide the port information required for the configuration.

If systems are connected on different ports, configure the switches accordingly, following the guidelines described in this section. Ensure the physical hardware installation and cabling are complete before you continue. First, create a local management connection through a console terminal to perform the initial configuration and to configure a switch IP address. Second, configure the required features and VSANs according to the device cabling documentation.


The FC ports connected to the Cisco UCS Fabric Interconnects come up only after Cisco UCS has been configured.

Cisco MDS Initial Configuration

To perform the initial Cisco MDS switch configuration, follow these steps. Keep the default values for all settings that are not listed.

1. Connect to the MDS A console port and press the spacebar:

● Enter the password for "admin": <var_mgmt_passwd>

● Confirm the password for "admin": <var_mgmt_passwd>

● Would you like to enter the basic configuration dialog (yes/no): yes

● Configure read-only SNMP community string (yes/no) [n]: yes

● SNMP community string :

● Enter the switch name : <var_mds-A_hostname>

● Mgmt0 IPv4 address : <var_mds-A_mgmt0_ip>

● Out of Band Mgmt0 IPv4 netmask : <var_oob_vlan_net>

● IPv4 address of the default gateway : <var_oob_vlan_gw>

● Number of rsa key bits <768-2048> [1024]: 2048

● Configure the ntp server? (yes/no) [n]: y

● NTP server IPv4 address : <var_global_ntp_server_ip>

● Configure default switchport interface state (shut/noshut) [shut]: noshut

● Configure default switchport trunk mode (on/off/auto) [on]: auto

● Configure default switchport port mode F (yes/no) [n]: y

2. The configuration wizard lists a configuration summary at the end. Review the summary and save it.

3. Connect to the MDS B console port and press the spacebar:

● Enter the password for "admin": <var_mgmt_passwd>

● Confirm the password for "admin": <var_mgmt_passwd>

● Would you like to enter the basic configuration dialog (yes/no): yes

● Configure read-only SNMP community string (yes/no) [n]: yes

● SNMP community string :

● Enter the switch name : <var_mds-B_hostname>

● Mgmt0 IPv4 address : <var_mds-B_mgmt0_ip>

● Out of Band Mgmt0 IPv4 netmask : <var_oob_vlan_net>

● IPv4 address of the default gateway : <var_oob_vlan_gw>

● Number of rsa key bits <768-2048> [1024]: 2048

● Configure the ntp server? (yes/no) [n]: y

● NTP server IPv4 address : <var_global_ntp_server_ip>

● Configure default switchport interface state (shut/noshut) [shut]: noshut

● Configure default switchport trunk mode (on/off/auto) [on]: auto

● Configure default switchport port mode F (yes/no) [n]: y

4. The configuration wizard lists a configuration summary at the end. Review the summary and save it.

Configure the Management Port and Enable Essential Features

To configure the management port and enable the essential features, follow these steps:

1. Enter the configuration mode and configure both MDS switches:

MDS-A|B# config terminal

2. Configure the management port:

MDS-A|B(config)# interface mgmt 0

MDS-A|B(config-if)# switchport speed 1000

MDS-A|B(config-if)# no shutdown

3. Enable features:

MDS-A|B(config)# feature fport-channel-trunk

MDS-A|B(config)# feature npiv

Create Port Channels and VSANs

To configure the Fibre Channel ports, follow these steps:

1. Create a port channel on both Cisco MDS 9148T switches for the uplink to the Cisco UCS Fabric Interconnect:

MDS-A(config)# interface port-channel <var_fc-pc_A_id>

MDS-B(config)# interface port-channel <var_fc-pc_B_id>

2. Create the VSAN that connects the Cisco UCS Fabric Interconnect and the Pure Storage FlashArray//X. Assign this VSAN to the interfaces that connect to the Pure Storage FlashArray//X, as well as to the interfaces and the port channel connected to the Cisco Fabric Interconnect:

MDS-A(config)# vsan database

MDS-A(config-vsan-db)# vsan <var_san_A_id>

MDS-A(config-vsan-db)# vsan <var_san_A_id> int port-channel <var_fc-pc_A_id>

MDS-A(config-vsan-db)# vsan <var_san_A_id> int fc 1/29

MDS-A(config-vsan-db)# vsan <var_san_A_id> int fc 1/30

MDS-A(config-vsan-db)# vsan <var_san_A_id> int fc 1/31

MDS-A(config-vsan-db)# vsan <var_san_A_id> int fc 1/32

MDS-A(config-vsan-db)# interface fc 1/29-32

MDS-A(config-if)# no shut

3. Repeat the commands on the Cisco MDS 9148T switch MDS-B and use the fabric B VSAN ID:

MDS-B(config)# vsan database

MDS-B(config-vsan-db)# vsan <var_san_B_id>

MDS-B(config-vsan-db)# vsan <var_san_B_id> int port-channel <var_fc-pc_B_id>

MDS-B(config-vsan-db)# vsan <var_san_B_id> int fc 1/29

MDS-B(config-vsan-db)# vsan <var_san_B_id> int fc 1/30

MDS-B(config-vsan-db)# vsan <var_san_B_id> int fc 1/31

MDS-B(config-vsan-db)# vsan <var_san_B_id> int fc 1/32

MDS-B(config-vsan-db)# interface fc 1/29-32

MDS-B(config-if)# no shut

4. Configure the port channel and add the interfaces connecting to the Cisco Fabric Interconnect:

MDS-A(config)# interface port-channel <var_fc-pc_A_id>

MDS-A(config-if)# channel mode active

MDS-A(config-if)# switchport mode F

MDS-A(config-if)# switchport trunk mode off

MDS-A(config-if)# switchport trunk allowed vsan <var_san_A_id>

MDS-A(config-if)# int fc1/17-20

MDS-A(config-if)# port-license acquire

MDS-A(config-if)# channel-group <var_fc-pc_A_id> force

MDS-A(config-if)# no shut

5. Repeat the commands on the Cisco MDS 9148T switch MDS-B and use the fabric B port channel:

MDS-B(config)# interface port-channel <var_fc-pc_B_id>

MDS-B(config-if)# channel mode active

MDS-B(config-if)# switchport mode F

MDS-B(config-if)# switchport trunk mode off

MDS-B(config-if)# switchport trunk allowed vsan <var_san_B_id>

MDS-B(config-if)# int fc1/17-20

MDS-B(config-if)# port-license acquire

MDS-B(config-if)# channel-group <var_fc-pc_B_id> force

MDS-B(config-if)# no shut
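The MDS configuration above is identical on both fabrics apart from the VSAN ID and the port channel ID. The following Python sketch renders one fabric's commands in the order used in this section: create the port channel, add it and the FlashArray-facing ports to the VSAN, then configure the F port channel and its FI-facing members. The function name `mds_fabric` and the example IDs (VSAN 10, port channel 10) are hypothetical stand-ins for `<var_san_A_id>` and `<var_fc-pc_A_id>`; the `fc1/x-y` range syntax assumes contiguous ports.

```python
from typing import List, Sequence


def mds_fabric(vsan: int, pc: int,
               array_ports: Sequence[int] = (29, 30, 31, 32),
               fi_ports: Sequence[int] = (17, 18, 19, 20)) -> List[str]:
    """Render VSAN membership and the F port channel for one MDS fabric."""
    lines = [f"interface port-channel {pc}", "vsan database", f"  vsan {vsan}",
             f"  vsan {vsan} interface port-channel {pc}"]
    # FlashArray-facing FC ports join the same VSAN as the FI port channel.
    lines += [f"  vsan {vsan} interface fc1/{p}" for p in array_ports]
    # Range syntax below assumes the ports are contiguous.
    lines += [f"interface fc1/{array_ports[0]}-{array_ports[-1]}", "  no shutdown"]
    lines += [
        f"interface port-channel {pc}",
        "  channel mode active",
        "  switchport mode F",
        "  switchport trunk mode off",
        f"  switchport trunk allowed vsan {vsan}",
        f"interface fc1/{fi_ports[0]}-{fi_ports[-1]}",
        "  port-license acquire",
        f"  channel-group {pc} force",
        "  no shutdown",
    ]
    return lines


if __name__ == "__main__":
    # Hypothetical fabric A values; use the fabric B IDs on MDS-B.
    print("\n".join(mds_fabric(vsan=10, pc=10)))
```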

Persist the Configuration

To persist the configuration, follow this step:

1. Save the configuration changes on both MDS switches:

MDS-A|B# copy run start

Cisco UCS Configuration Overview

A detailed explanation of the Cisco UCS infrastructure installation and connectivity is beyond the scope of this document. For additional information on specific configuration steps or options, review the Cisco UCS Manager Installation and Upgrade Guides.

High-Level Steps to Configure Cisco Unified Computing System

From a high-level perspective, the following steps are required to configure the Cisco UCS infrastructure and service profile templates:

1. Initial Fabric Interconnect configuration for a cluster setup.

2. Configure Fabric Interconnects for Chassis and Blade discovery.

3. Configure LAN and SAN in Cisco UCS Manager.

4. Configure UUID, IP, MAC, WWNN and WWPN pools.

5. Configure vNIC and vHBA templates.

6. Configure ethernet uplink port-channels.

7. Create Service Profile templates.

Cisco Fabric Interconnect Initial Configuration

The first time you access a fabric interconnect in a Cisco UCS instance, a setup wizard prompts you for the following information required to configure the system.

To configure the initial Cisco Fabric Interconnect, follow these steps:

1. Perform the initial Cisco FI configuration. Keep the default values for all settings that are not listed.

2. Connect to the FI-A console port and press space:

● Enter the setup mode; setup newly or restore from backup.(setup/restore)? setup

● You have chosen to setup a new fabric interconnect? Continue? (y/n): y

● Enter the password for "admin": <var_password>

● Enter the same password for "admin": <var_password>

● Is this fabric interconnect part of a cluster (select 'no' for standalone)? (yes/no) [n]: y

● Which switch fabric (A|B): A

● Enter the system name: <var_ucs_clustername>

● Physical switch Mgmt0 IPv4 address: <var_ucsa_mgmt_ip>

● Physical switch Mgmt0 IPv4 netmask: <var_oob_vlan_net>

● IPv4 address of the default gateway: <var_oob_vlan_gw>

● Cluster IPv4 address: <var_ucs_cluster_ip>

● Configure DNS Server IPv4 address? (yes/no) [no]: y

● DNS IPv4 address: <var_nameserver_ip>

● Configure the default domain name? y

● Default domain name: <var_dns_domain_name>

3. The configuration wizard lists a configuration summary at the end. Review the summary and save it.

4. Connect to the FI-B console port and press the spacebar:

● Enter the configuration method: console

● Installer has detected the presence of a peer Fabric interconnect. This Fabric interconnect will be added to the cluster. Do you want to continue {y|n} y

● Enter the admin password for the peer fabric interconnect: <var_password>

● Physical switch Mgmt0 IPv4 address: <var_ucsb_mgmt_ip>

● Apply and save the configuration (select ‘no’ if you want to re-enter)? (yes/no): y

5. Wait for the login prompt to make sure that the configuration has been saved.
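Both setup dialogs above share one netmask and default gateway, so a typo in one of the four addresses is easy to miss until the cluster fails to form. The following Python sketch, using only the standard `ipaddress` module, checks that the FI-A, FI-B, cluster, and gateway addresses are distinct hosts in one subnet. It assumes, as is typical but not stated in the wizard, that the cluster IP lives in the same out-of-band subnet; the function name and the RFC 5737 addresses are hypothetical.

```python
import ipaddress


def check_fi_mgmt(fi_a: str, fi_b: str, cluster: str, gateway: str,
                  netmask: str) -> ipaddress.IPv4Network:
    """Verify the FI setup addresses share one subnet and do not collide."""
    net = ipaddress.IPv4Network(f"{fi_a}/{netmask}", strict=False)
    roles = {"FI-A": fi_a, "FI-B": fi_b, "cluster": cluster, "gateway": gateway}
    for role, addr in roles.items():
        ip = ipaddress.IPv4Address(addr)
        # Network and broadcast addresses cannot be assigned to a host.
        if ip not in net or ip in (net.network_address, net.broadcast_address):
            raise ValueError(f"{role} address {addr} is not a host in {net}")
    if len(set(roles.values())) != len(roles):
        raise ValueError("FI-A, FI-B, cluster and gateway addresses must differ")
    return net


if __name__ == "__main__":
    # Hypothetical values for <var_ucsa_mgmt_ip>, <var_ucsb_mgmt_ip>,
    # <var_ucs_cluster_ip>, <var_oob_vlan_gw> and <var_oob_vlan_net>.
    print(check_fi_mgmt("192.0.2.11", "192.0.2.12", "192.0.2.10",
                        "192.0.2.1", "255.255.255.0"))
```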

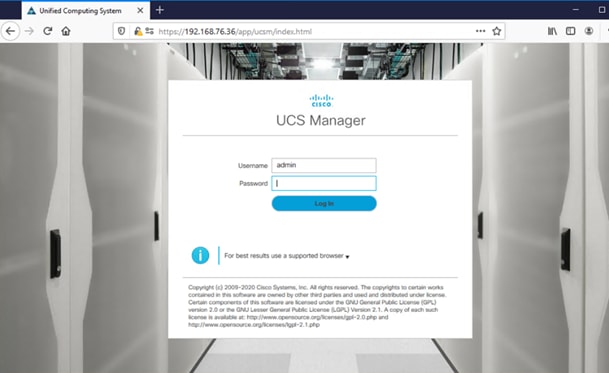

With the Fabric Interconnects configured, continue the configuration from the web frontend of Cisco UCS Manager. To access the frontend, follow these steps:

1. Open a web browser and navigate to the FI cluster address https://<var_ucs_cluster_ip>.

2. Click Launch UCS Manager (accept the security certificate warning if prompted).

3. Enter the username “admin” and the Cisco UCS admin password <var_password>, and then click Log In.

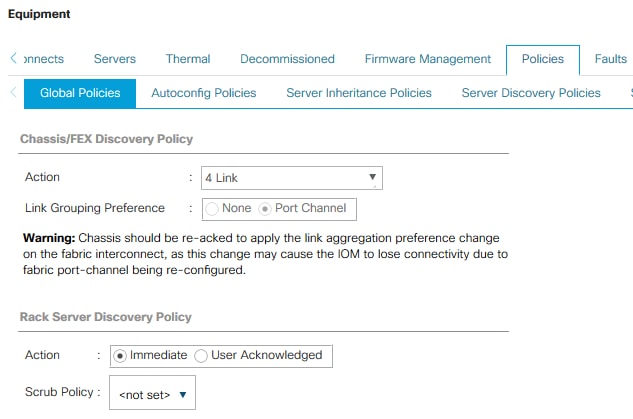

To modify the discovery policy to enable discovery of the Cisco UCS B-Series chassis and the Cisco UCS C-Series server connectivity, follow these steps:

1. In Cisco UCS Manager, click the Equipment tab in the navigation pane and select Equipment in the list on the left.

2. In the right pane, click the Policies tab.

3. Under Global Policies, set the Chassis/FEX Discovery Policy to match the number of uplink ports that are cabled between the chassis or fabric extenders (FEXes) and the fabric interconnects. Keep the default Port Channel setting for Link Grouping Preference.

4. Under Rack Server Discovery Policy, change the action to Immediate.

5. Click Save Changes.

6. Click OK.

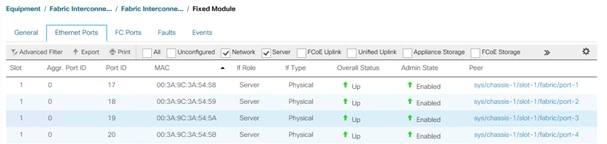

To enable server and uplink ports, follow these steps on both Fabric Interconnects:

1. In Cisco UCS Manager, click the Equipment tab in the navigation pane.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A|B (primary | subordinate) > Fixed Module.

3. Go to the Ethernet Ports tab.

4. Select the ports that are connected to the chassis and / or to the Cisco C-Series Server (two per FI), right-click them, and select Configure as Server Port.

5. Click Yes to confirm server ports and click OK.

6. Verify that the ports connected to the chassis and / or to the Cisco C-Series Server are now configured as server ports.

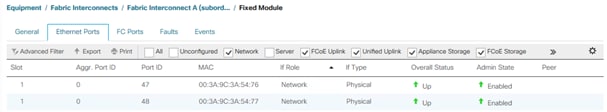

Configure Ethernet Uplink Ports

To configure the ethernet uplink ports, follow these steps on both Fabric Interconnects:


Select ports in the range 49-54 for 40/100GE Uplink Port connectivity.

1. Configure the ports connected to the Cisco Nexus 9000 switches as Ethernet uplink ports. Ports 17-48 provide 10/25GE uplink connectivity.

2. In Cisco UCS Manager, click the Equipment tab in the navigation pane.

3. Select Equipment > Fabric Interconnects > Fabric Interconnect A|B (primary | subordinate) > Fixed Module.

4. Go to the Ethernet Ports tab.

5. Select ports that are connected to the Cisco Nexus switches, right-click them, and select Configure as Uplink Port.

6. Click Yes to confirm uplink ports and click OK.

Next, enable FlashStack to provision the volumes that the FlashStack Cisco UCS hosts use to boot from Fibre Channel LUNs and as application data volumes.

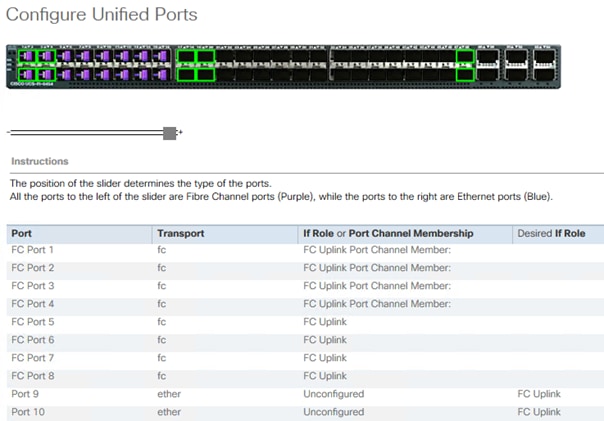

Configure Unified Ports

The Configure Unified Ports wizard allows you to change the port mode from Ethernet to Fibre Channel. Configure the first set of ports from the left, for example ports 1-4 of the fixed module, for Fibre Channel. The remaining ports can be used as Ethernet uplink ports to the Nexus switches.


While configuring the Fixed Module Ports, moving the slider bar enables sets of ports from the left of the module as FC ports. The remainder is available for Ethernet uplinks. Because this setup uses 4 ports for the uplink to the MDS switches, configuring the first set of 8 ports as FC ports is sufficient.

Follow these steps on both Fabric Interconnects:

1. In Cisco UCS Manager, click the Equipment tab in the navigation pane.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A|B (primary | subordinate)

3. In the General tab of the right pane, scroll to Actions and select Configure Unified Ports. Confirm the warning.

4. Move the slider bar to the right to enable the first set of 8 ports for the FC Uplink role. Click OK.

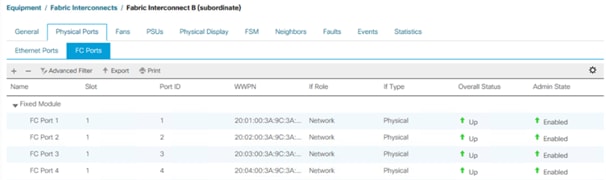

A change to the fixed module requires a reboot of the Fabric Interconnects. After the FIs have rebooted, enable the FC uplink ports by following these steps:

1. In Cisco UCS Manager, click the Equipment tab in the navigation pane.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A|B (primary | subordinate)

3. Expand the FC ports.

4. Select the ports connected to the Cisco MDS switch, right-click them, and select Enable.

5. Click Yes to confirm to enable the ports and click OK.

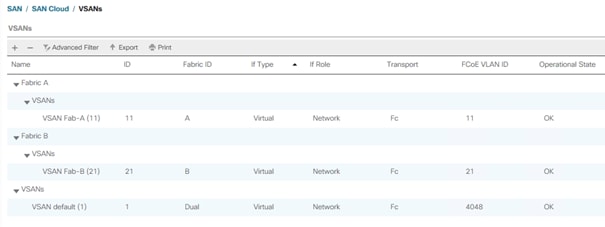

A VSAN is a security mechanism for storage, comparable to a VLAN in Ethernet networks.

The storage connectivity is achieved through the northbound Cisco MDS fabric switches. Unlike LAN connectivity, physical northbound storage connectivity does not support vPCs: FI-A must connect through MDS-A, and FI-B through MDS-B, to the FlashArray//X. The Fabric Interconnects do not cross-connect with the MDS switches.

Port channel configuration to combine multiple storage FC uplink ports to provide physical link redundancy is possible.

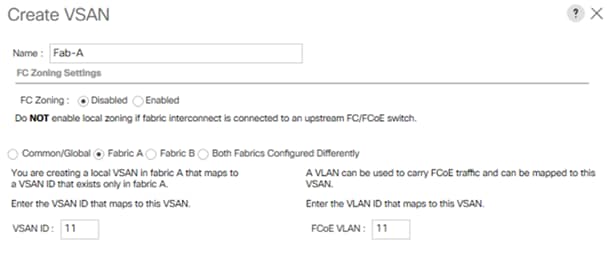

To configure VSAN, follow these steps:

1. In Cisco UCS Manager, click the SAN tab in the navigation pane.

2. Select SAN > SAN Cloud > VSANs.

3. Right-click VSANs and select Create VSAN.

4. Enter Fab-A as the name of the VSAN to be used for Fabric-A.

5. Keep FC Zoning set to ‘Disabled’ and select Fabric A. Enter VSAN ID <var_san_A_id>, which maps to the VSAN on MDS-A. Use the same value for the FCoE VLAN ID.

6. Click OK and then click OK again.

7. Repeat steps 1-6 to create VSAN Fab-B with VSAN ID <var_san_B_id>, which maps to the VSAN on MDS-B.

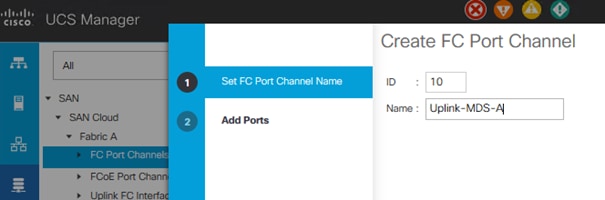

Configure the FC uplinks from the Fabric Interconnects towards the Cisco MDS fabric switches. A port channel bundles the interfaces into a group to provide increased bandwidth and redundancy and to load balance the VSAN traffic. The port channel pair has corresponding F-port-channel-trunks defined on the MDS switches to allow the fabric logins from the N Port Virtualization (NPV) enabled Fabric Interconnects. This provides non-disruptive redundancy if individual member links fail.

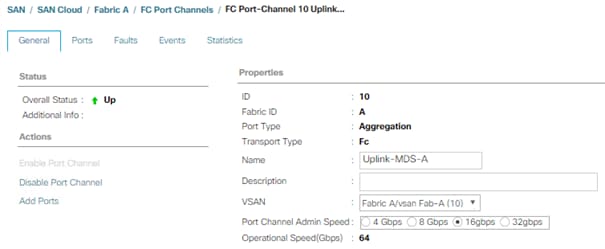

To configure the necessary port channels out of the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the SAN tab in the navigation pane.

2. Under SAN > SAN Cloud, expand the Fabric A tree.

3. Right-click FC Port Channels.

4. Select Create FC Port Channel.

5. Enter the unique ID 10 and FC port channel name Uplink-MDS-A. Click Next.

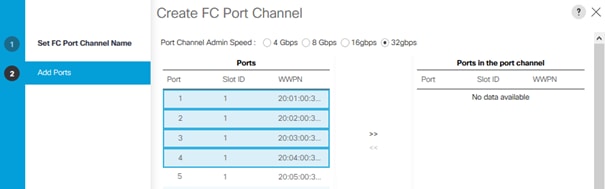

6. Set Port Channel Admin Speed to 16gbps. Select the following ports to be added to the port channel:

◦ Slot ID 1 and port 1

◦ Slot ID 1 and port 2

◦ Slot ID 1 and port 3

◦ Slot ID 1 and port 4


The selected port channel admin speed and ports are based on the uplink port connectivity and device cabling in this lab setup and might differ in your data center configuration.

7. Click the >> symbol to add the ports to the FC port channel.

8. To create the port channel, click Finish.

9. Click OK.

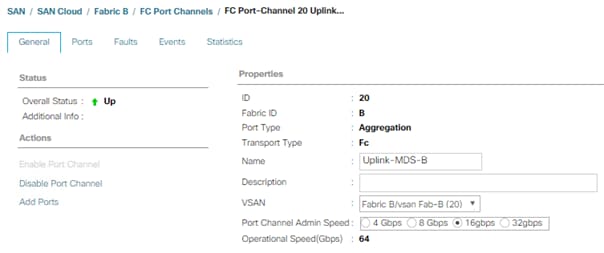

10. Under SAN > SAN Cloud, expand the Fabric B tree.

11. Right-click FC Port Channels.

12. Select Create FC Port Channel.

13. Enter the unique ID 20 and FC port channel name Uplink-MDS-B. Click Next.

14. Set Port Channel Admin Speed to 16gbps. Select the following ports to be added to the port channel:

◦ Slot ID 1 and port 1

◦ Slot ID 1 and port 2

◦ Slot ID 1 and port 3

◦ Slot ID 1 and port 4


The selected port channel admin speed and ports are based on the uplink port connectivity and device cabling in this lab setup and might differ in your data center configuration.

15. Click the >> symbol to add the ports to the FC port channel.

16. To create the port channel, click Finish.

17. Click OK.

Assign Respective Fabric FC Channels to the VSANs

To assign the FC port channels to the respective fabric VSANs just created, follow these steps:

1. In Cisco UCS Manager, click the SAN tab > SAN Cloud > Fabric A > FC Port Channels.

2. Select FC Port-Channel 10 Uplink-MDS-A

3. On the right pane, change the VSAN information from default (1) to Fab-A VSAN 10 created for Fabric-A.

4. Click the SAN tab > SAN Cloud > Fabric B > FC Port Channels.

5. Select FC Port-Channel 20 Uplink-MDS-B.

6. On the right pane, change the VSAN information from default (1) to Fab-B VSAN 20 created for Fabric-B.
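Each FC port channel in Cisco UCS Manager pairs with an F port channel on the MDS switch of the same fabric, and the two ends must agree on the VSAN. The following Python sketch compares the UCS and MDS sides of one fabric and reports cross-fabric cabling, VSAN mismatches, or differing member-link counts. The `FcUplink` class and `check_fc_fabric` function are hypothetical; the example values (VSAN 10 on fabric A, VSAN 20 on fabric B, FI ports 1-4, MDS ports fc1/17-20) follow the lab setup in this guide.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class FcUplink:
    device: str               # e.g. "FI-A" or "MDS-A"
    fabric: str               # "A" or "B"
    vsan: int
    members: Tuple[str, ...]  # physical FC ports in the port channel


def check_fc_fabric(ucs: FcUplink, mds: FcUplink) -> List[str]:
    """Return a list of mismatches between the FI and MDS side of one fabric."""
    problems: List[str] = []
    # FI-A must reach MDS-A and FI-B must reach MDS-B; no cross-connects.
    if ucs.fabric != mds.fabric:
        problems.append(f"{ucs.device} must not connect to {mds.device}")
    if ucs.vsan != mds.vsan:
        problems.append(f"VSAN mismatch: {ucs.vsan} vs {mds.vsan}")
    if len(ucs.members) != len(mds.members):
        problems.append("member link counts differ")
    return problems


if __name__ == "__main__":
    fi_a = FcUplink("FI-A", "A", 10, ("1/1", "1/2", "1/3", "1/4"))
    mds_a = FcUplink("MDS-A", "A", 10, ("fc1/17", "fc1/18", "fc1/19", "fc1/20"))
    print(check_fc_fabric(fi_a, mds_a) or "fabric A is consistent")
```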

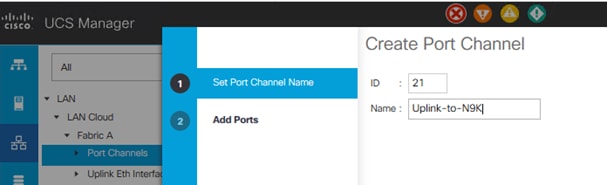

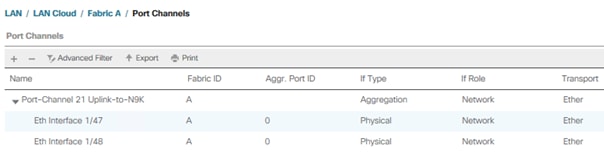

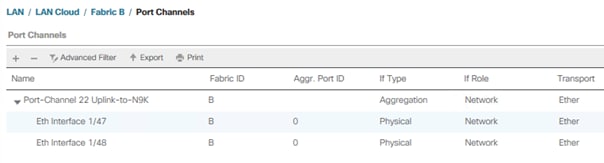

Create LAN Uplink Port Channels

Configure the LAN uplinks from the Fabric Interconnects towards the northbound Nexus switches. A port channel bundles the interfaces into a group to provide increased bandwidth and redundancy and to load balance the SAP network zone traffic across these physical interfaces.

For example, create port channel 21 on FI-A and port channel 22 on FI-B. These port channel pairs have corresponding vPCs defined on the N9Ks to ensure seamless redundancy and failover for the north-south network traffic in the rare case of an IOM or VIC port failure.

In this example configuration, two pairs of 2 x 25GE ports connect the FIs and the Nexus switches and handle the network traffic of all network zones except the internal node-to-node traffic. While this is sufficient for most use cases, you can extend the configuration with additional port channel pairs if required, for example to separate network-intensive traffic such as backup.


The port selection is based on the uplink port connectivity and device cabling in this lab setup and might differ in your data center configuration.

To configure the necessary port channels from FI-A and FI-B to the uplink Cisco Nexus switches follow these steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Under LAN > LAN Cloud, expand the Fabric A tree.

3. Right-click Port Channels.

4. Select Create Port Channel.

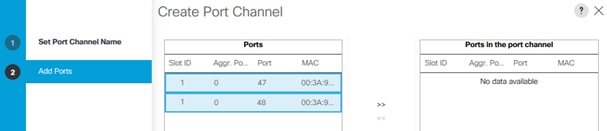

5. Enter the unique ID 21 and port channel name Uplink-to-N9K, then click Next.

6. Select the following ports and add them to the port channel:

◦ Slot ID 1 and port 47

◦ Slot ID 2 and port 48

7. Click the >> symbol to add the ports to the port channel.

8. Click Finish to create the port-channel.

9. Click Ok.

10. Under LAN > LAN Cloud, expand the Fabric B tree.

11. Right-click Port Channels.

12. Select Create Port Channel.

13. Enter the unique ID 22 and port channel name Uplink-to-N9K, then click Next.

14. Select the following ports and add them to the port channel:

◦ Slot ID 1 and port 47

◦ Slot ID 2 and port 48

15. Click the >> symbol to add the ports to the port channel.

16. Click Finish to create the port channel.

17. Click OK.
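The two port channels can be captured as a small planning record and sanity-checked before cabling. The following is a minimal sketch, not a Cisco UCS Manager API: `UplinkPortChannel` and `validate_pair` are hypothetical names, and the 25GE member speed is an assumption taken from the 2 x 25GE example described earlier. It also shows the arithmetic behind that example: two 25GE members give each FI 50 Gbps of aggregate uplink capacity.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class UplinkPortChannel:
    """Hypothetical planning model of one FI uplink port channel.

    This is not a Cisco UCS Manager API object; it only mirrors the values
    entered in the Create Port Channel wizard.
    """
    fabric: str                # "A" or "B"
    pc_id: int                 # port channel ID, e.g. 21 on FI-A, 22 on FI-B
    name: str                  # e.g. "Uplink-to-N9K"
    members: list[tuple[int, int]] = field(default_factory=list)  # (slot_id, port)
    member_speed_gbps: int = 25  # assumption: 25GE member links

    def bandwidth_gbps(self) -> int:
        # Aggregate capacity of the bundle. Load balancing is per flow,
        # so a single flow is still limited to one member link.
        return len(self.members) * self.member_speed_gbps


def validate_pair(pc_a: UplinkPortChannel, pc_b: UplinkPortChannel) -> list[str]:
    """Return human-readable problems for an FI-A/FI-B port channel pair."""
    problems: list[str] = []
    if (pc_a.fabric, pc_b.fabric) != ("A", "B"):
        problems.append("expected one port channel on FI-A and one on FI-B")
    if pc_a.pc_id == pc_b.pc_id:
        # Convention in this guide: distinct IDs (21/22), each matched to its own vPC.
        problems.append("use distinct port channel IDs on FI-A and FI-B")
    for pc in (pc_a, pc_b):
        if len(pc.members) < 2:
            problems.append(f"fabric {pc.fabric}: fewer than two member ports, no link redundancy")
        if len(set(pc.members)) != len(pc.members):
            problems.append(f"fabric {pc.fabric}: duplicate member port")
    return problems


# Values from the steps above (member ports as cabled in this lab setup).
pc_a = UplinkPortChannel("A", 21, "Uplink-to-N9K", [(1, 47), (2, 48)])
pc_b = UplinkPortChannel("B", 22, "Uplink-to-N9K", [(1, 47), (2, 48)])

print(pc_a.bandwidth_gbps(), pc_b.bandwidth_gbps())  # 50 50
print(validate_pair(pc_a, pc_b))                       # []
```

Keeping the plan as data makes it easy to add a second port channel pair for backup traffic and run the same checks on it.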


A second uplink port channel set can be configured and exclusively used for backup network traffic.

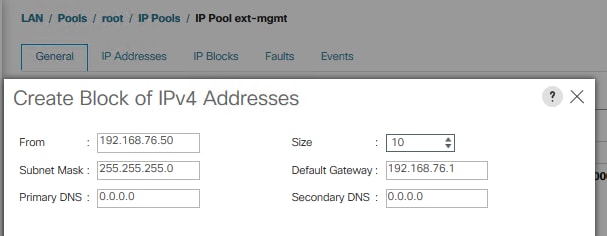

Add Block of IP Addresses for KVM Access

To create a block of IP addresses for server Keyboard, Video, Mouse (KVM) access in the Cisco UCS environment, follow these steps:


This block of IP addresses should be in the same subnet as the management IP addresses for the Cisco UCS Manager.

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select Pools > root > IP Pools > IP Pool ext-mgmt.

3. In the Actions pane, select Create Block of IPv4 Addresses.

4. Enter the starting IP address of the block, the number of IP addresses required, and the subnet and gateway information.

5. Click OK to create the IP block.

6. Click OK in the confirmation notification.
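Before entering the block in step 4, it can help to confirm that the range stays inside the management subnet and does not collide with addresses already assigned. The following is a minimal sketch using Python's standard `ipaddress` module; `plan_kvm_block` is a hypothetical helper, and all example addresses are placeholders, not values from this validation.

```python
from __future__ import annotations

import ipaddress


def plan_kvm_block(start: str, size: int, mgmt_cidr: str, gateway: str,
                   in_use: set[str] | None = None) -> list[ipaddress.IPv4Address]:
    """Return the `size` consecutive addresses beginning at `start`.

    Raises ValueError if the gateway or any block address falls outside the
    management subnet, hits the network/broadcast address, or collides with
    an address already in use (for example the FI and cluster management IPs).
    """
    net = ipaddress.IPv4Network(mgmt_cidr, strict=True)
    gw = ipaddress.IPv4Address(gateway)
    taken = {ipaddress.IPv4Address(a) for a in (in_use or set())}
    taken.add(gw)
    if gw not in net:
        raise ValueError(f"gateway {gw} is not in {net}")
    first = ipaddress.IPv4Address(start)
    block = [first + i for i in range(size)]
    for addr in block:
        if addr not in net or addr in (net.network_address, net.broadcast_address):
            raise ValueError(f"{addr} is not a usable host address in {net}")
        if addr in taken:
            raise ValueError(f"{addr} is already in use")
    return block


# Hypothetical management subnet and FI-A, FI-B, and cluster IP addresses.
FI_MGMT_IPS = {"192.168.76.11", "192.168.76.12", "192.168.76.13"}
block = plan_kvm_block("192.168.76.100", 16, "192.168.76.0/24", "192.168.76.1",
                       in_use=FI_MGMT_IPS)
print(block[0], block[-1])  # 192.168.76.100 192.168.76.115
```

The starting address and block size are what step 4 asks for; the check only guards against typos that would push the block out of the management subnet.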

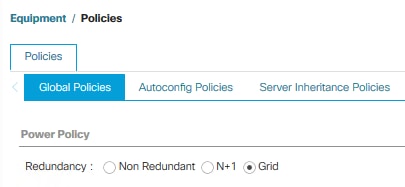

To run Cisco UCS with two independent power distribution units, the redundancy must be configured as Grid. Follow these steps:

1. In Cisco UCS Manager, click the Equipment tab in the navigation pane.

2. Select Equipment > Policies.

3. Select Global Policies in the work pane.

4. Set the Power Policy Redundancy to Grid.

5. Click Save Changes.

6. Click OK.

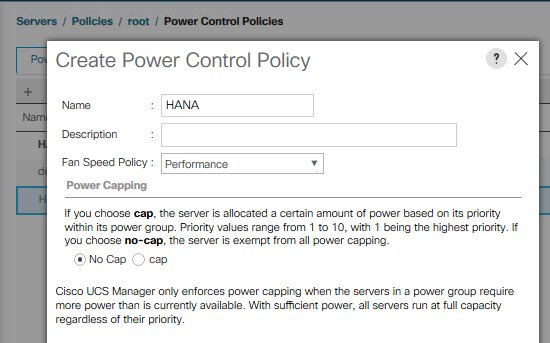

Power Control Policy

The Power Capping feature in Cisco UCS is designed to save power in the data center. This feature conflicts with the high-performance behavior of SAP HANA. Choose the “No Cap” option for the power control policy so that the power supply to the SAP HANA server nodes is not restricted.


A power control policy is recommended to ensure sufficient power supply for high-performance and critical workload applications like SAP HANA.

To create a power control policy for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Power Control Policies.

3. Right-click Power Control Policies and select Create Power Control Policy.

4. Enter HANA as the Power Control Policy name.

5. (Optional) Provide a description.

6. Set Fan Speed Policy to Performance from the drop-down list.

7. For Power Capping, select the No Cap radio button.

8. Click OK to create the power control policy.

9. Click OK.

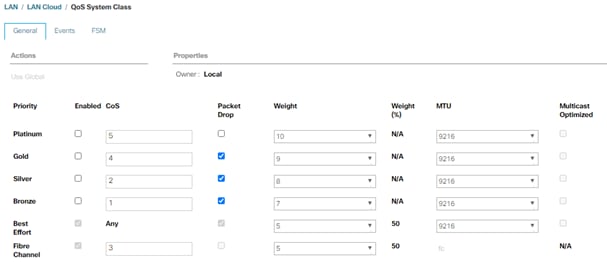

Set Jumbo Frames in Cisco UCS Fabric

The core network requirements for SAP HANA are covered by Cisco UCS defaults. The Service Profile is configured to distribute the traffic across Fabric Interconnect A and B.

To configure jumbo frames and enable quality of service in the Cisco UCS fabric, follow these steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > LAN Cloud > QoS System Class.

3. In the right pane, click the General tab.

4. In the MTU column, enter 9216.

5. Click Save Changes at the bottom of the window.

6. Click OK.
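The fabric MTU of 9216 leaves headroom above a typical 9000-byte jumbo frame MTU on the host interfaces. The following sketch shows the arithmetic, assuming a host IP MTU of 9000 (not configured in this section) and assuming the fabric MTU is compared against the full Ethernet frame including header, 802.1Q tag, and FCS. This is the conservative reading; platforms differ in which bytes they count toward the MTU.

```python
# Ethernet framing overhead (bytes) that rides on top of the IP MTU.
ETH_HEADER = 14   # destination MAC (6) + source MAC (6) + EtherType (2)
DOT1Q_TAG = 4     # IEEE 802.1Q VLAN tag, present on tagged traffic
FCS = 4           # frame check sequence

FABRIC_MTU = 9216  # value entered in the QoS System Class MTU column


def frame_size(ip_mtu: int, vlan_tagged: bool = True) -> int:
    """Largest Ethernet frame produced by an interface with this IP MTU."""
    return ip_mtu + ETH_HEADER + (DOT1Q_TAG if vlan_tagged else 0) + FCS


def fits_fabric(ip_mtu: int, fabric_mtu: int = FABRIC_MTU) -> bool:
    # Conservative assumption: compare the full tagged frame, headers and FCS included.
    return frame_size(ip_mtu) <= fabric_mtu


print(frame_size(9000), fits_fabric(9000))  # 9022 True
print(FABRIC_MTU - frame_size(9000))        # 194 bytes of headroom
```

The same function also shows why a host MTU equal to the fabric MTU (9216) would not fit under this conservative interpretation.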

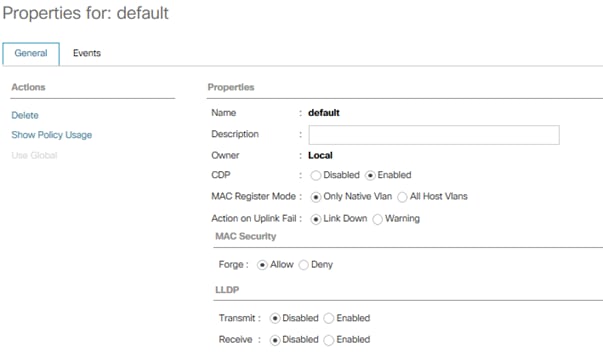

Enable CDP in the Default Network Control Policy

To enable Cisco Discovery Protocol (CDP) in the default Network Control Policy so that the MAC address of the endpoint can be learned, follow these steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > Policies > root > Network Control Policies.

3. Double-click Default in the work pane.

4. Select the Enabled button for CDP.

5. Click Save Changes at the bottom of the window.

6. Click OK.

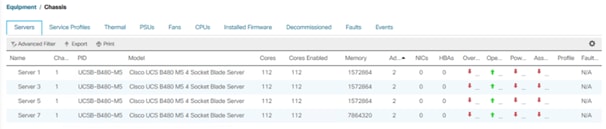

Acknowledge Cisco UCS Chassis and Rack-Mount Servers

To acknowledge all Cisco UCS chassis and rack-mount servers, follow these steps:

1. In Cisco UCS Manager, click the Equipment tab in the navigation pane.

2. Expand Chassis and select each chassis that is listed. Right-click each chassis and select Acknowledge Chassis.

3. Expand Rack-Mounts to list the discovered servers. The servers automatically enter the discovery phase.

4. Ensure that discovery completes successfully and that no major or critical faults are reported for any of the servers.

Firmware Update

Obtain the Cisco UCS Manager release software bundles and transfer the Cisco UCS infrastructure software bundle, the related Cisco UCS B-Series and C-Series software bundles, and the Capability Catalog file to the Cisco Fabric Interconnect.

To update the firmware to the Cisco recommended release, review the Cisco UCS Manager Firmware Management Guide (https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/ucs-manager/GUI-User-Guides/Firmware-Mgmt/4-1/b_UCSM_GUI_Firmware_Management_Guide_4-1/b_UCSM_GUI_Firmware_Management_Guide_4-1_chapter_011.html).


At the time of this validation the recommended firmware package release is 4.1(1c).
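To check whether a system already runs the recommended release, UCS version strings can be compared field by field. The following is a minimal sketch, assuming versions follow the `major.minor(maintenance patch-letter)` form such as 4.1(1c); `parse_version` and `meets_recommended` are hypothetical helpers, and strings in any other format are rejected rather than guessed.

```python
import re
from typing import NamedTuple


class UcsVersion(NamedTuple):
    major: int
    minor: int
    maintenance: int
    patch: str  # trailing letter(s), e.g. "c" in 4.1(1c); "" if absent


# Assumed format: <major>.<minor>(<maintenance><patch letters>), e.g. "4.1(1c)".
_VERSION_RE = re.compile(r"^(\d+)\.(\d+)\((\d+)([a-z]*)\)$")

RECOMMENDED = "4.1(1c)"  # recommended package at the time of this validation


def parse_version(text: str) -> UcsVersion:
    match = _VERSION_RE.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognized UCS version string: {text!r}")
    return UcsVersion(int(match[1]), int(match[2]), int(match[3]), match[4])


def meets_recommended(installed: str, recommended: str = RECOMMENDED) -> bool:
    # NamedTuple compares field by field, left to right, like a plain tuple;
    # patch letters are compared alphabetically (assumption: "a" < "b" < "c").
    return parse_version(installed) >= parse_version(recommended)


for v in ("4.0(4h)", "4.1(1a)", "4.1(1c)", "4.1(2a)"):
    print(v, meets_recommended(v))
```

Numeric fields are converted to integers so that, for example, a maintenance release of 10 sorts after 9 instead of being compared as text.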

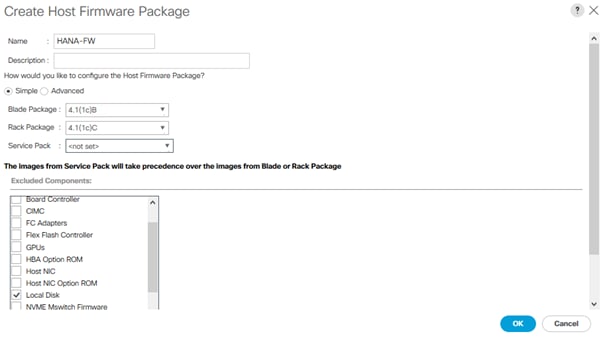

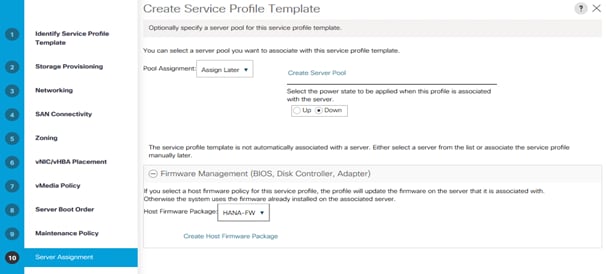

Firmware management policies allow the administrator to select the corresponding packages for a given server configuration. These policies often include packages for adapter, BIOS, board controller, FC adapters, host bus adapter (HBA) option ROM, and storage controller properties.

To create a firmware management policy for a given server configuration in the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Firmware Packages.

3. Right-click Host Firmware Packages and select Create Host Firmware Package.

4. Enter HANA-FW as the name of the host firmware package.

5. Leave Simple selected.

6. Select the version 4.1(1c) for both the Blade and Rack Packages.

7. Click OK to create the host firmware package.

8. Click OK.

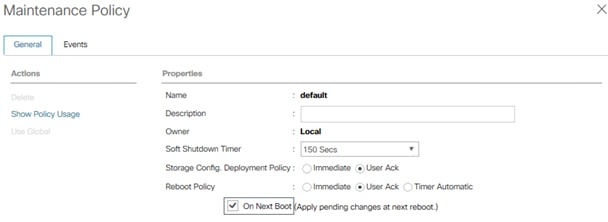

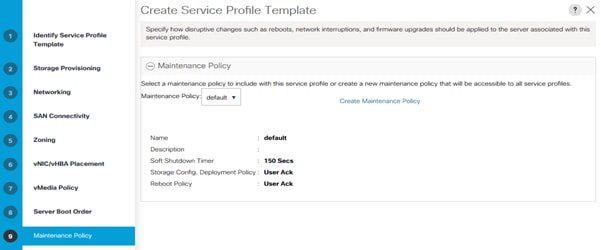

Update Default Maintenance Policy

The Reboot Policy “User Ack” waits for the administrator to acknowledge the server reboot before configuration changes take effect. To update the default Maintenance Policy with this reboot policy for SAP HANA servers, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root.

3. Select Maintenance Policies > default.

4. Change the Reboot Policy to User Ack.

5. Click Save Changes.

6. Click OK to accept the change.

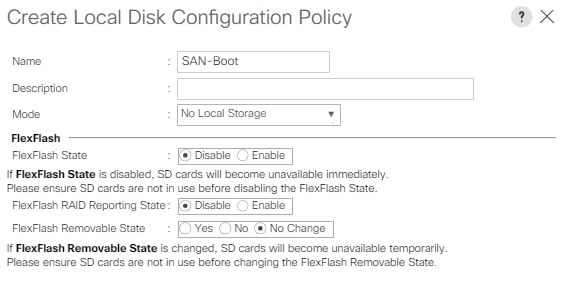

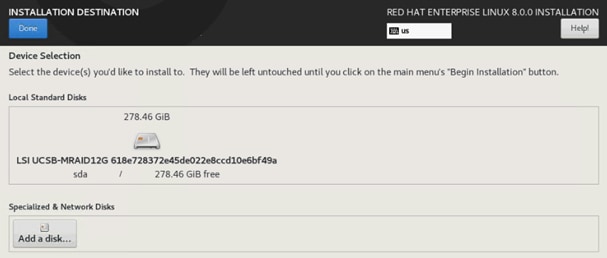

Create Local Disk Configuration Policy

All nodes are set to boot from SAN for this Cisco Validated Design as part of the Service Profile template. Booting from SAN has numerous benefits, including disaster recovery, lower cooling and power requirements for each server because local drives are not required, and better performance.


A local disk configuration policy is required only if the servers in the environment have local disks.

To configure the local disk policy, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Local Disk Config Policies.

3. Right-click Local Disk Config Policies and select Create Local Disk Configuration Policy.

4. Enter SAN-Boot as the policy name.

5. Change the Mode drop-down list to No Local Storage.

6. Keep the other defaults and confirm with OK.

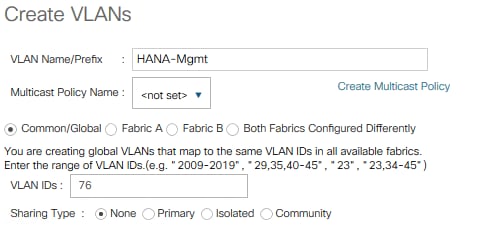

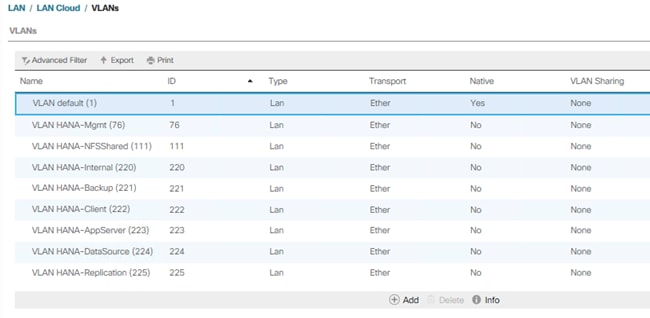

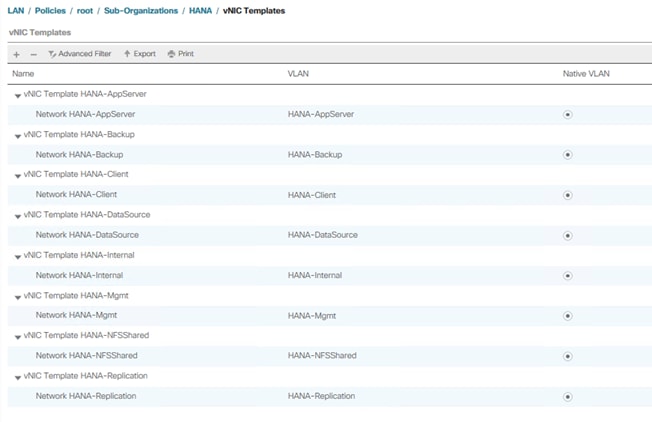

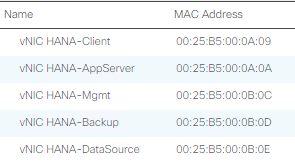

Within Cisco UCS, all network types of an SAP HANA system are represented by defined VLANs. The SAP network design guidelines recommend seven SAP HANA-related networks and two infrastructure-related networks.

The total number of VLANs depends on the SAP HANA installation scenario and might differ in a customer environment. If SAP HANA System Replication is not configured, the replication network is not needed. The same applies to the internal host communication network when no scale-out scenario is required.

The VLAN IDs can be changed if required to match the VLAN IDs in the customer’s network – for example, ID 221 for backup should match the configured VLAN ID at the customer uplink network switches.

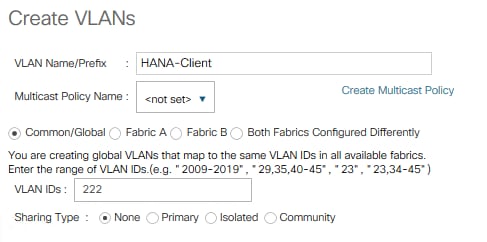

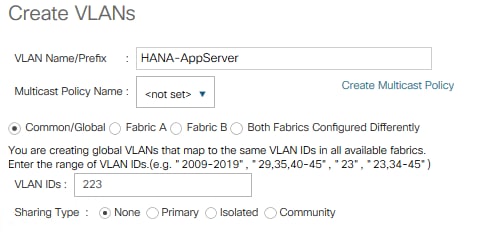

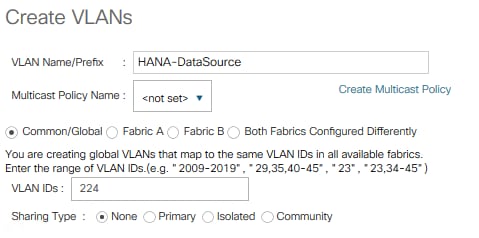

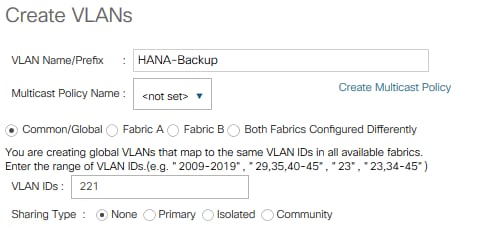

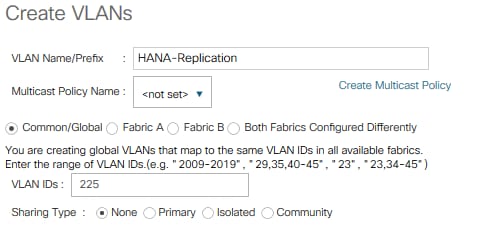

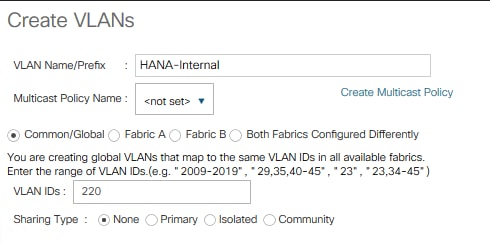

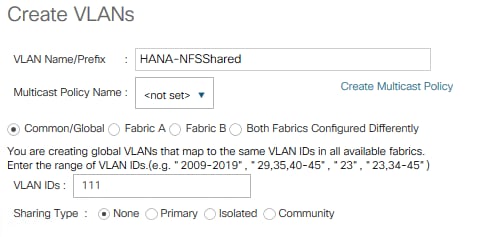

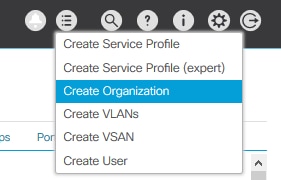

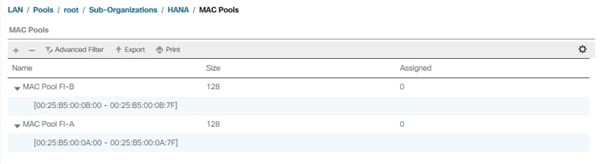

To configure the necessary VLANs for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > LAN Cloud > VLANs.

3. Right-click VLANs and select Create VLANs.

4. Enter HANA-Mgmt as VLAN name of the HANA Management network.

5. Keep the Common/Global option selected for the scope of the VLAN.

6. Enter <var_oob_vlan_id> as the ID of the HANA Management network.

7. Keep the Sharing Type as None.

8. Click OK and confirm with OK.

9. Repeat steps 1-8 to create all required VLANs.

10. Create VLAN HANA-Client using <var_client_vlan_id>.

11. Create VLAN HANA-AppServer using <var_appserver_vlan_id>.

12. Create VLAN HANA-DataSource using <var_datasource_vlan_id>.

13. Create VLAN HANA-Backup using <var_backup_vlan_id>.

14. Create VLAN HANA-Replication using <var_replication_vlan_id>.

15. Create VLAN HANA-Internal for internal node-to-node traffic using <var_internal_vlan_id>.

16. Create VLAN HANA-NFSshared for the /hana/shared NFS network.
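Because the VLAN IDs must match those configured on the customer uplink switches, it can help to validate the planned name-to-ID mapping before entering it. The following is a minimal sketch; the numeric IDs are hypothetical placeholders for the `<var_*_vlan_id>` values (only 221 for backup appears in this guide), the no-whitespace naming rule is an assumption, and the reserved VLAN range is left as a parameter because it depends on the Fabric Interconnect model.

```python
from __future__ import annotations

# Hypothetical example IDs: replace with the <var_*_vlan_id> values for your network.
# Only the backup ID (221) comes from the example in this guide.
VLAN_PLAN = {
    "HANA-Mgmt": 76,
    "HANA-Client": 222,
    "HANA-AppServer": 223,
    "HANA-DataSource": 224,
    "HANA-Backup": 221,
    "HANA-Replication": 225,
    "HANA-Internal": 226,
    "HANA-NFSshared": 227,
}


def validate_vlan_plan(plan: dict[str, int], reserved: range = range(0)) -> list[str]:
    """Return problems with a VLAN name -> ID plan.

    `reserved` should be the VLAN range your Fabric Interconnect model reserves
    internally (see the Cisco UCS Manager documentation); none is assumed here.
    """
    problems: list[str] = []
    seen: dict[int, str] = {}
    for name, vid in plan.items():
        if not name or any(ch.isspace() for ch in name):
            problems.append(f"{name!r}: VLAN names should not be empty or contain whitespace")
        if not 1 <= vid <= 4094:  # usable IEEE 802.1Q VLAN ID range
            problems.append(f"{name}: ID {vid} outside 1-4094")
        if vid in reserved:
            problems.append(f"{name}: ID {vid} is in the reserved range")
        if vid in seen:
            problems.append(f"{name}: ID {vid} already used by {seen[vid]}")
        else:
            seen[vid] = name
    return problems


print(validate_vlan_plan(VLAN_PLAN))  # []
```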

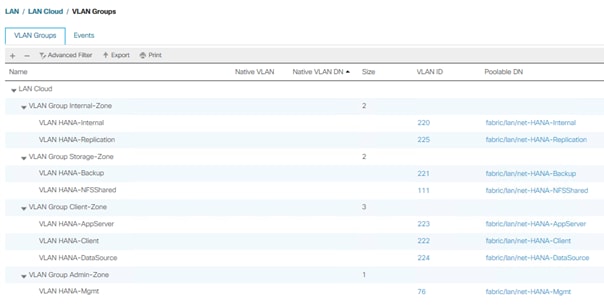

A summary of all previously created VLANs is shown below:

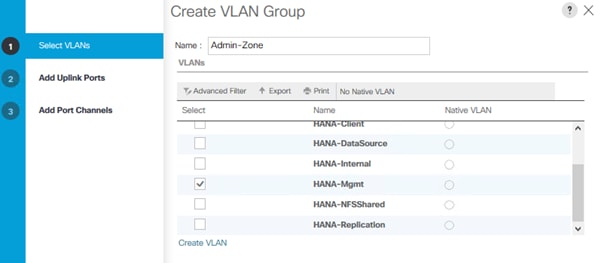

Create VLAN Groups to simplify management and to allocate bandwidth to a dedicated uplink on the Fabric Interconnect. SAP groups the recommended SAP HANA networks into zones, which translate to VLAN Groups in the Cisco UCS configuration:

● Client Zone – including AppServer, Client and DataSource networks

● Internal Zone – including Inter-node and System Replication networks

● Storage Zone – including Backup and IP storage networks

● (Optional) Admin Zone – including the Management network and, if any, the Linux cluster network
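The zone-to-VLAN Group mapping can be checked in the same spirit: each VLAN created earlier should land in one group so that its traffic is assigned to a single dedicated uplink. The following is a minimal sketch; the group names are hypothetical, placing HANA-NFSshared in the Storage Zone as an IP storage network is an assumption, and the one-group-per-VLAN rule reflects the dedicated-uplink intent described above rather than a documented Cisco UCS constraint.

```python
from __future__ import annotations

from collections import Counter

# VLAN names created in the VLAN configuration steps.
VLANS = {"HANA-Mgmt", "HANA-Client", "HANA-AppServer", "HANA-DataSource",
         "HANA-Backup", "HANA-Replication", "HANA-Internal", "HANA-NFSshared"}

# Hypothetical group names; membership follows the SAP zone list above.
VLAN_GROUPS = {
    "Client-Zone": ["HANA-AppServer", "HANA-Client", "HANA-DataSource"],
    "Internal-Zone": ["HANA-Internal", "HANA-Replication"],
    "Storage-Zone": ["HANA-Backup", "HANA-NFSshared"],  # assumption: NFS counts as IP storage
    "Admin-Zone": ["HANA-Mgmt"],
}


def check_groups(vlans: set[str], groups: dict[str, list[str]]) -> list[str]:
    """Each VLAN should belong to exactly one group, and groups should only
    reference VLANs that exist."""
    problems: list[str] = []
    counts = Counter(v for members in groups.values() for v in members)
    for vlan in sorted(vlans):
        if counts[vlan] == 0:
            problems.append(f"{vlan} is not in any VLAN group")
        elif counts[vlan] > 1:
            problems.append(f"{vlan} is in {counts[vlan]} VLAN groups")
    for vlan in sorted(set(counts) - vlans):
        problems.append(f"{vlan} is referenced by a group but was not created")
    return problems


print(check_groups(VLANS, VLAN_GROUPS))  # []
```

If a scenario omits a network (for example, no System Replication), remove it from both the VLAN set and its group so the check stays consistent.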

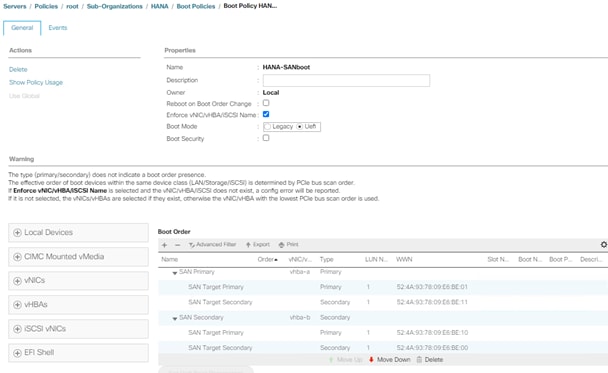

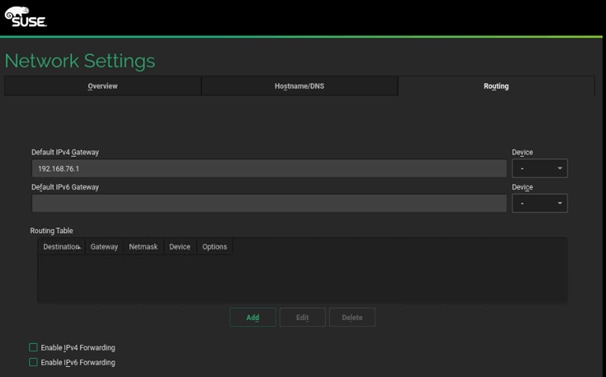

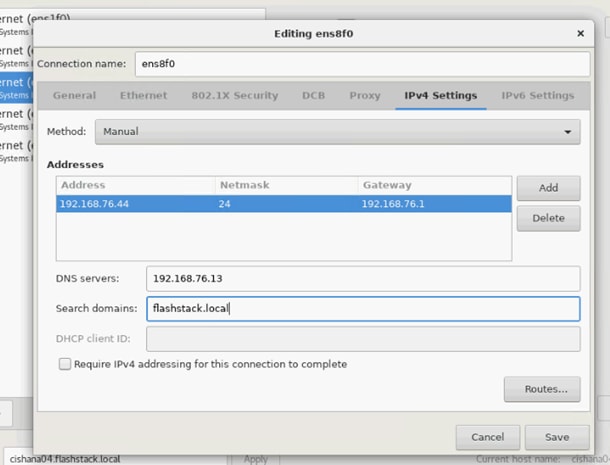

For this deployment guide, we create four VLAN Groups. Depending on customer requirements and the SAP HANA scenario, the number of VLAN Groups might differ in a customer environment.

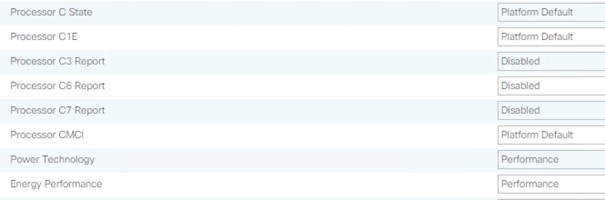

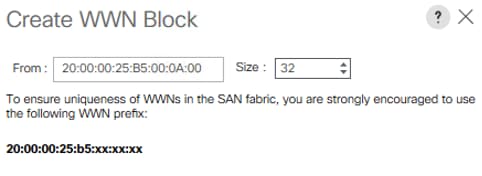

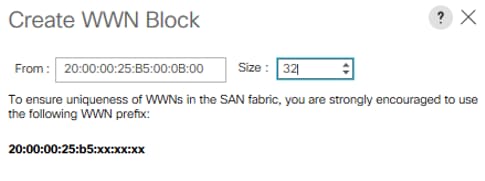

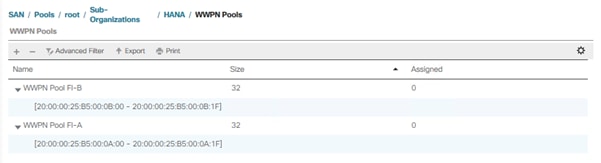

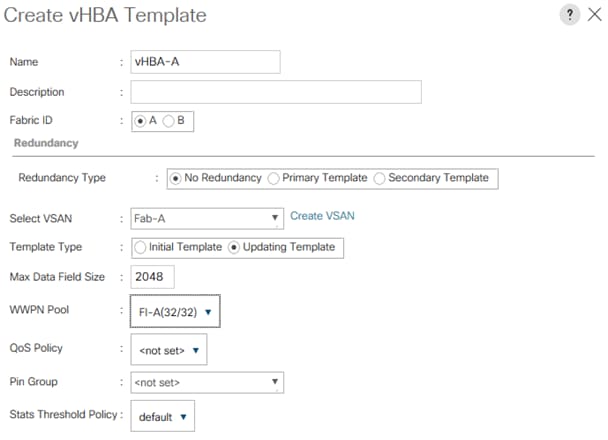

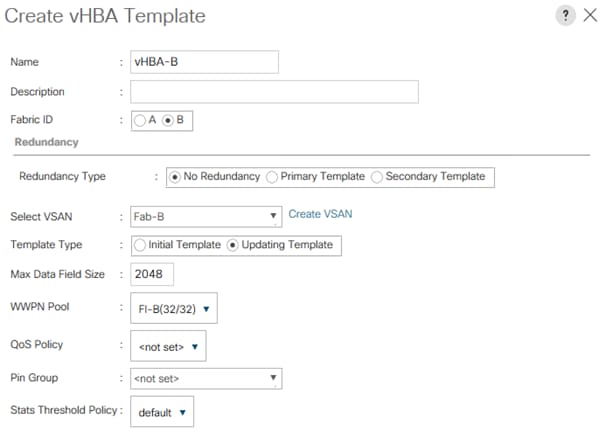

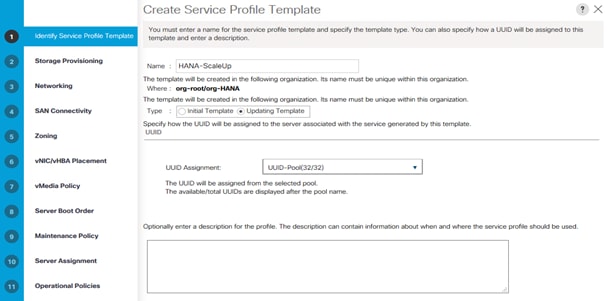

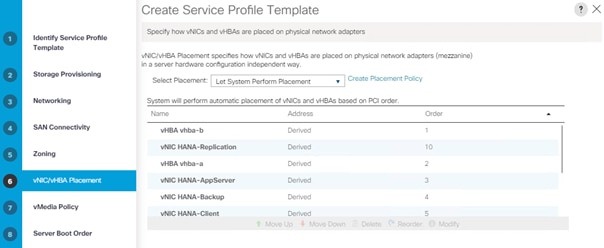

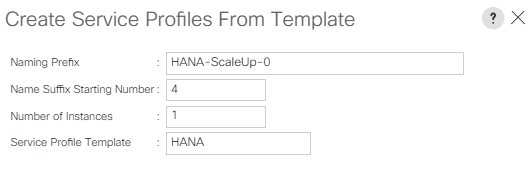

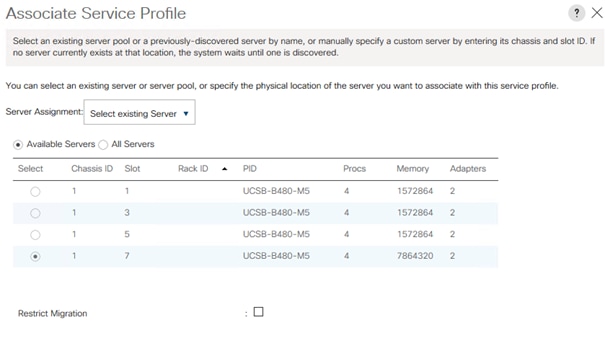

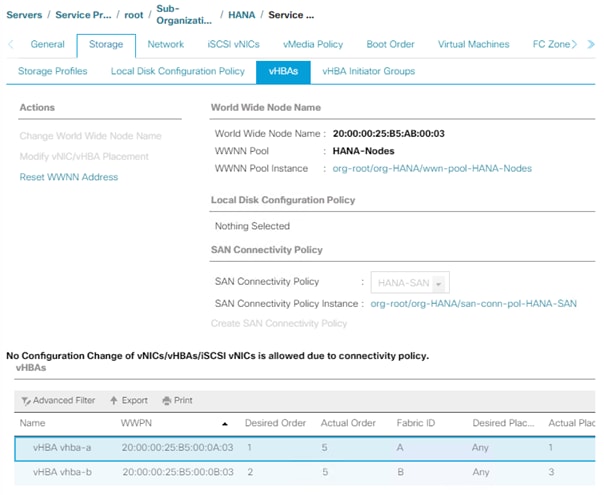

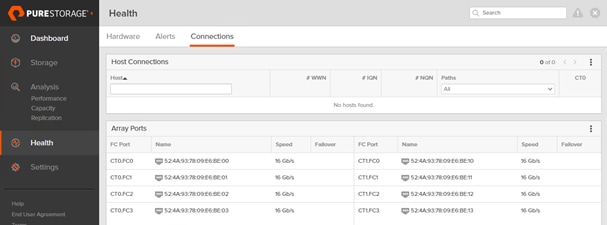

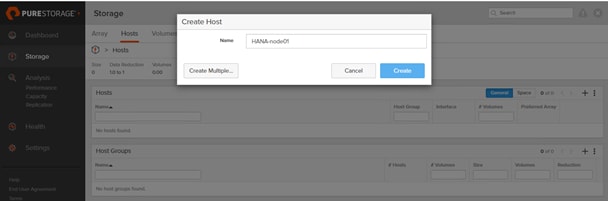

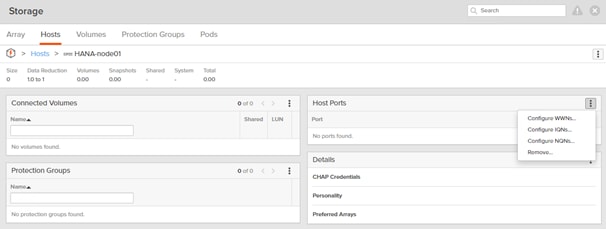

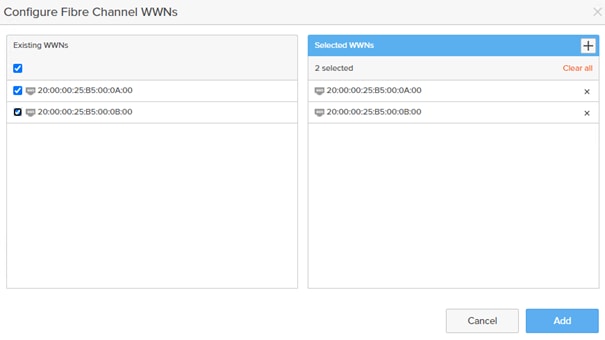

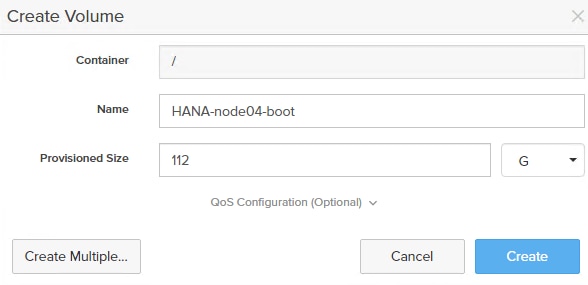

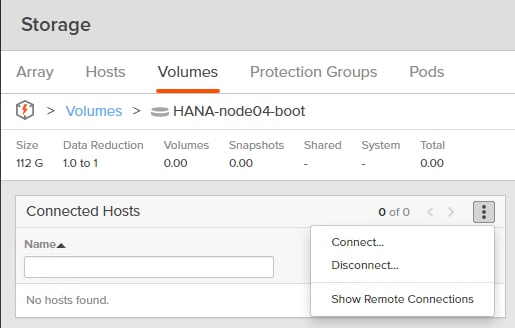

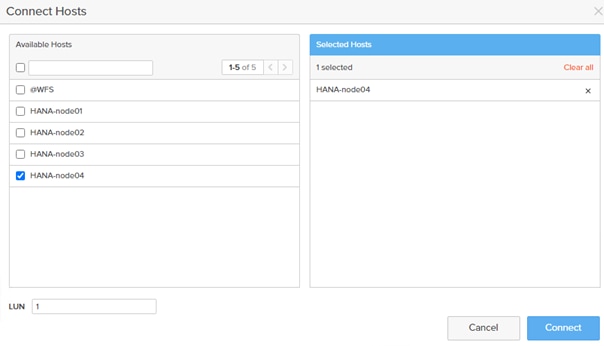

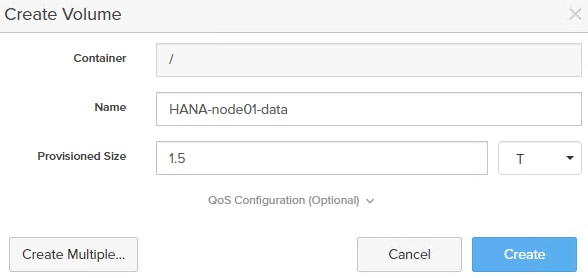

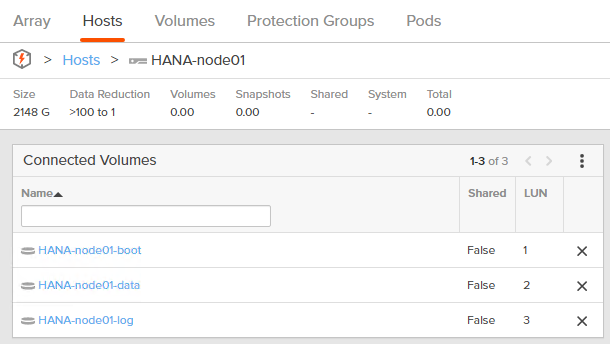

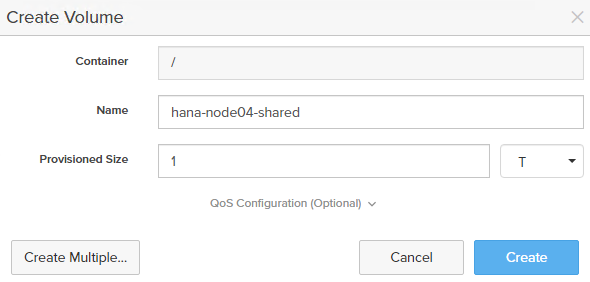

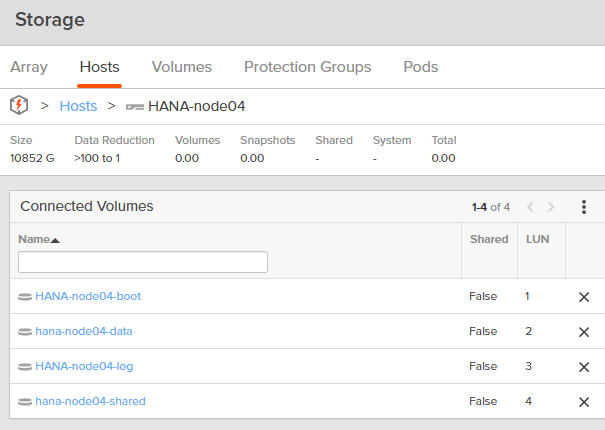

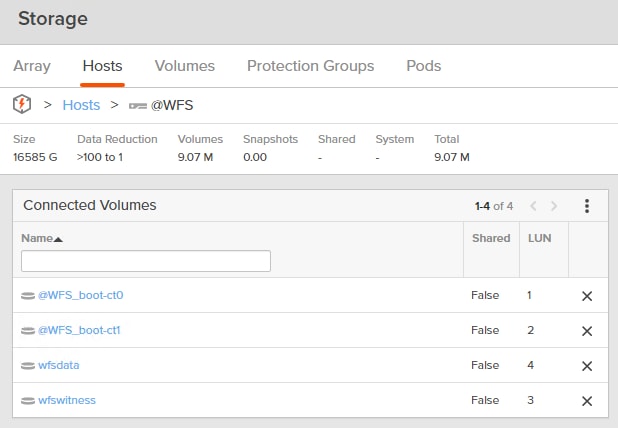

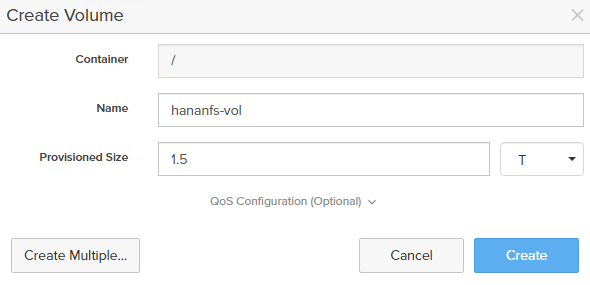

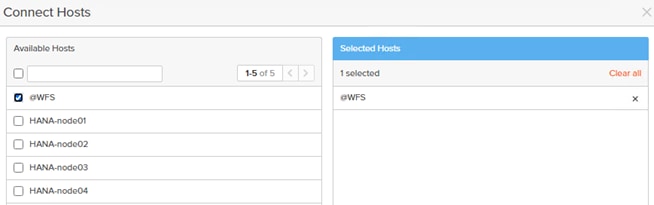

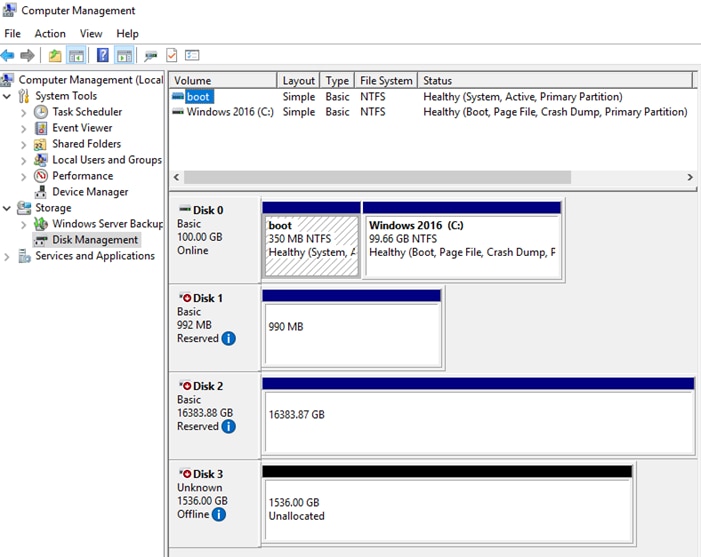

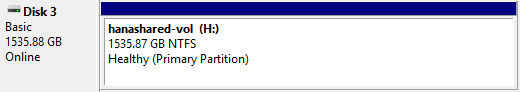

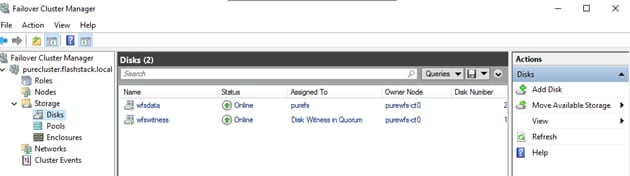

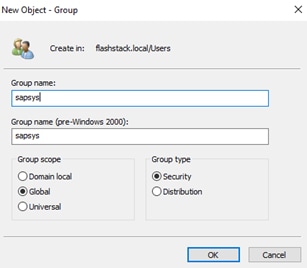

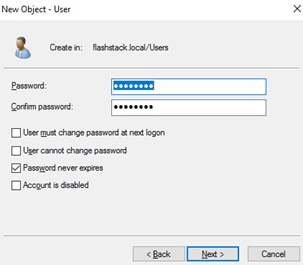

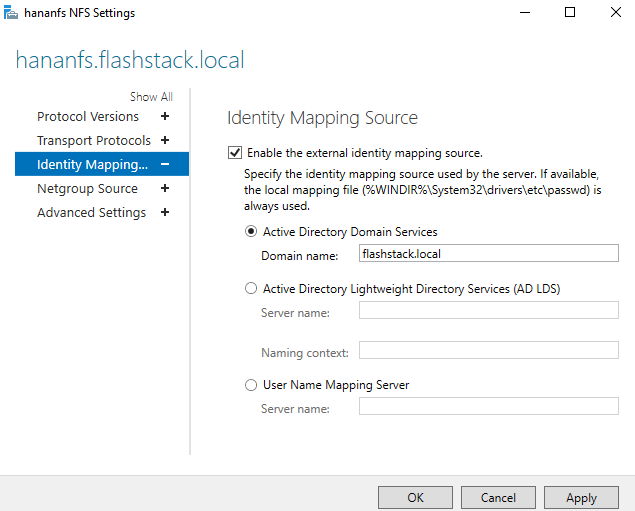

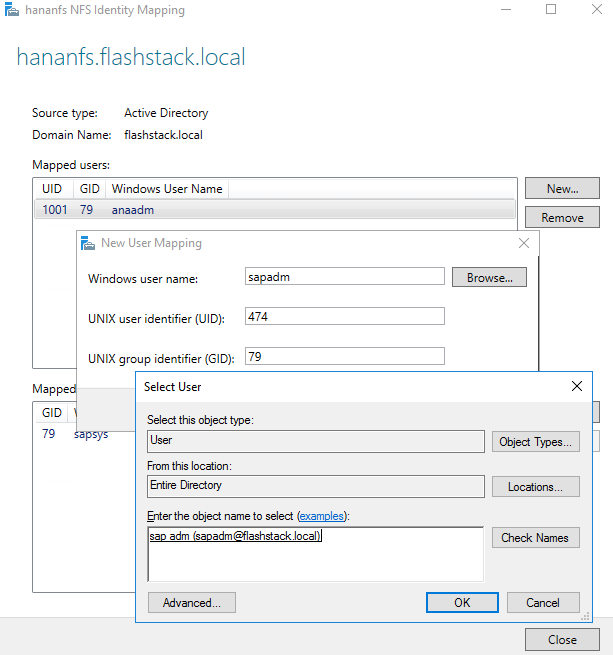

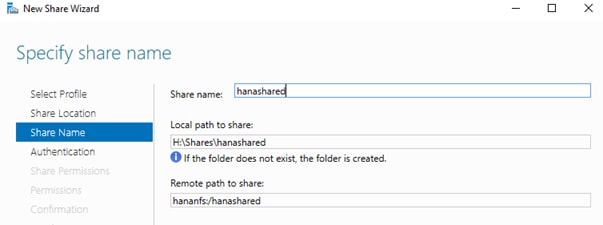

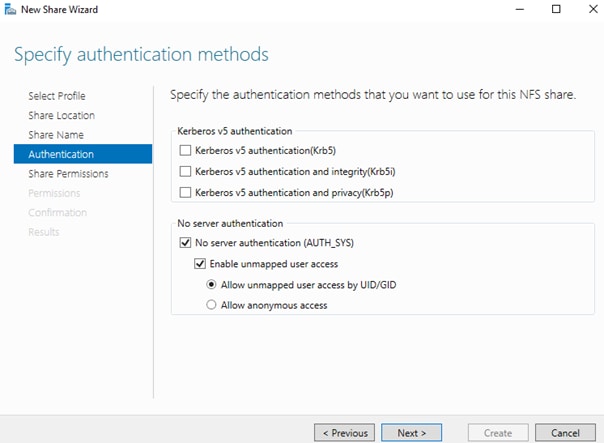

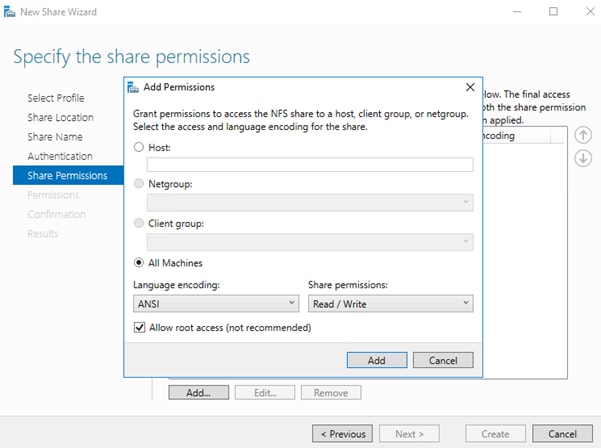

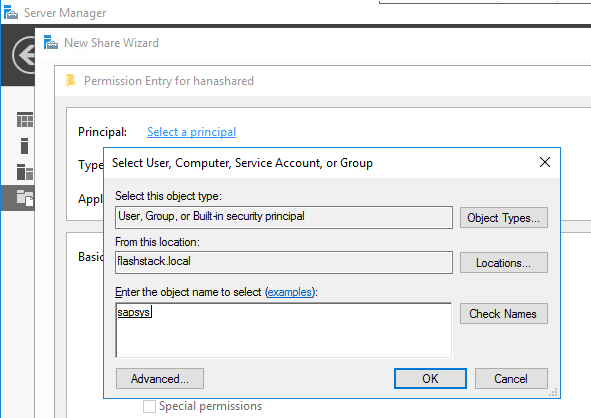

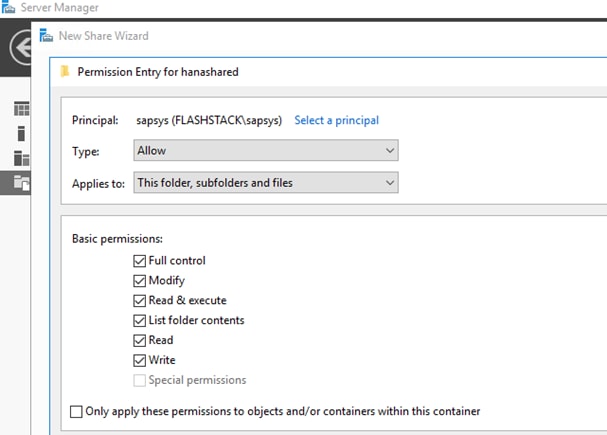

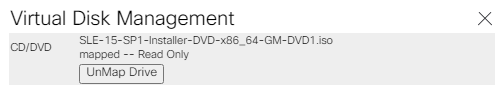

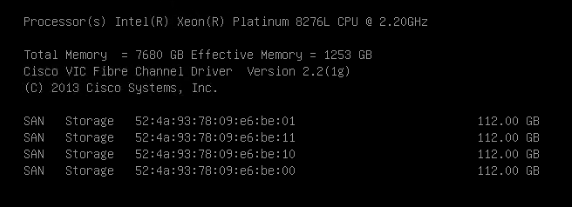

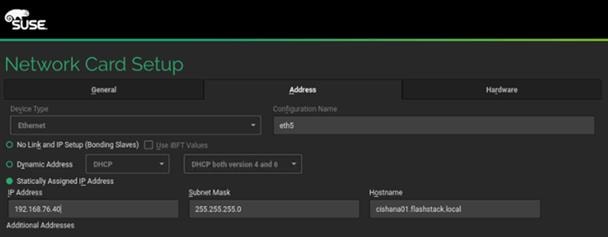

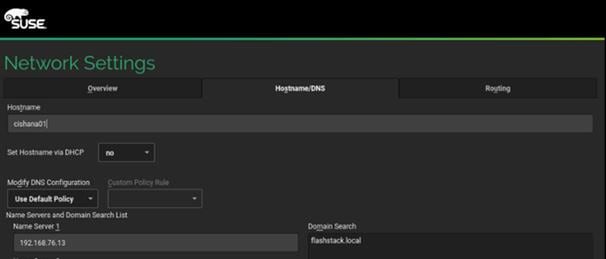

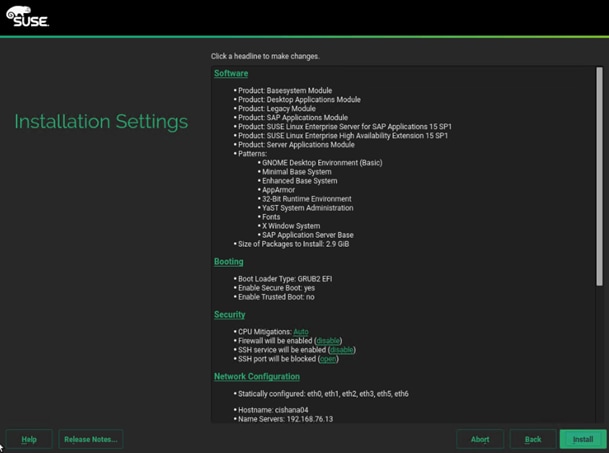

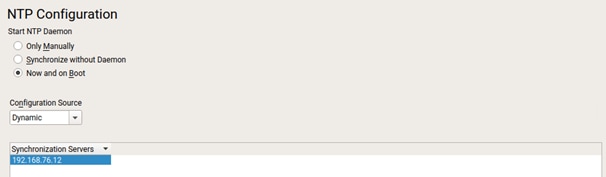

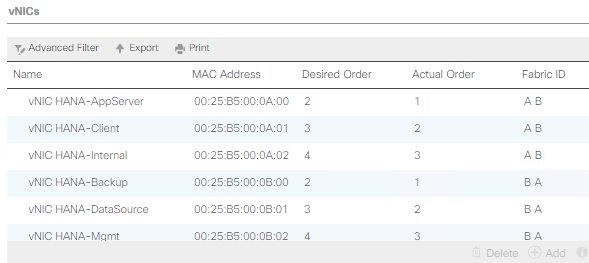

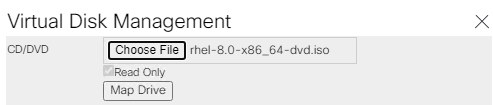

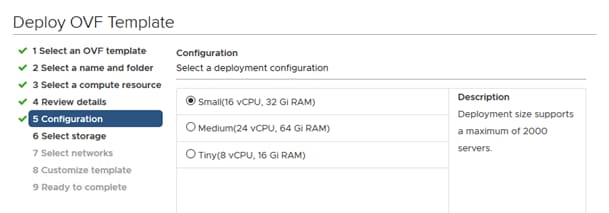

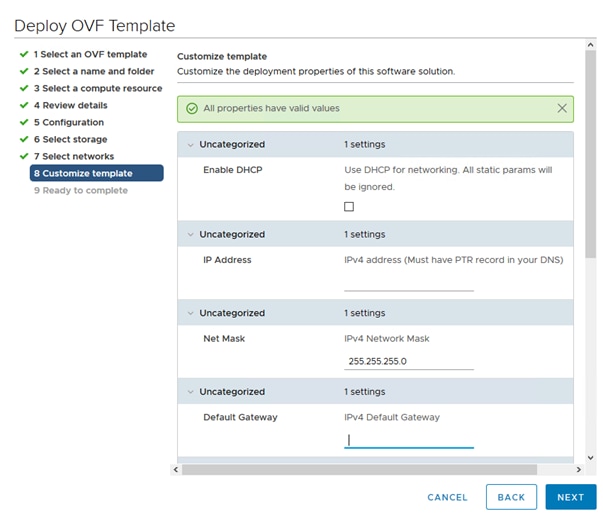

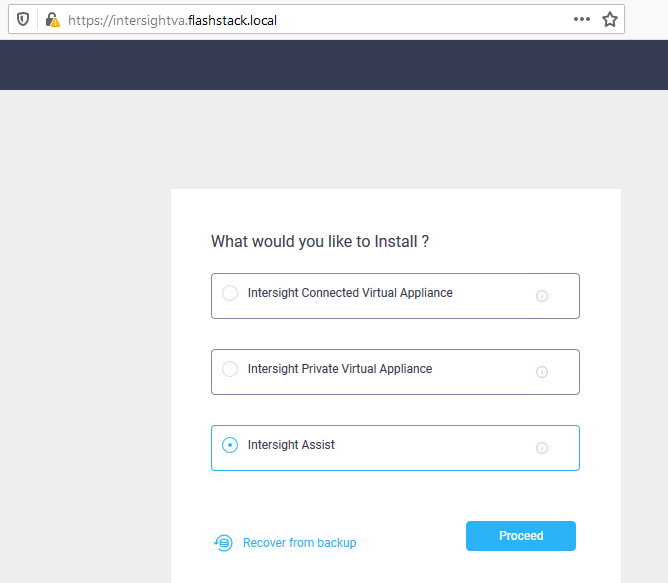

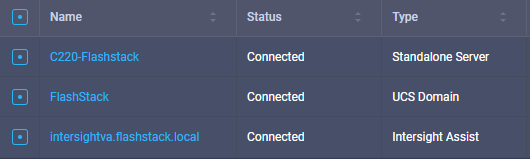

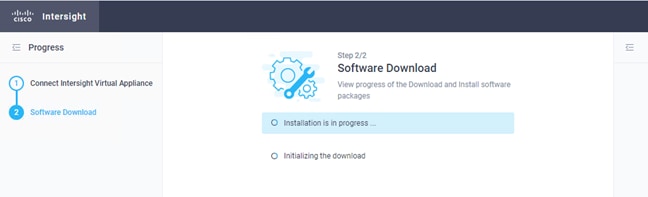

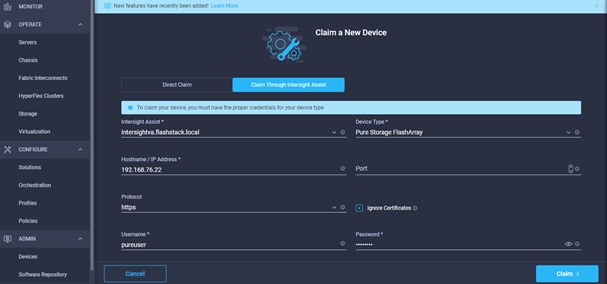
