Cisco Nexus 1000V System Management Configuration Guide, Release 4.2(1)SV2(2.1)

Updated: July 14, 2014

Chapter: Configuring iSCSI Multipath

Configuring iSCSI Multipath

This chapter contains the following sections:

- Information About iSCSI Multipath

- Guidelines and Limitations

- Pre-requisites

- Default Settings

- Configuring iSCSI Multipath

- Uplink Pinning and Storage Binding

- Converting to a Hardware iSCSI Configuration

- Changing the VMkernel NIC Access VLAN

- Verifying the iSCSI Multipath Configuration

- Managing Storage Loss Detection

- Related Documents

- Feature History for iSCSI Multipath

Information About iSCSI Multipath

This section includes the following topics:

Overview

The iSCSI multipath feature sets up multiple routes between a server and its storage devices for maintaining a constant connection and balancing the traffic load. The multipathing software handles all input and output requests and passes them through on the best possible path. Traffic from host servers is transported to shared storage using the iSCSI protocol that packages SCSI commands into iSCSI packets and transmits them on the Ethernet network.

iSCSI multipath provides path failover. In the event a path or any of its components fails, the server selects another available path. In addition to path failover, multipathing reduces or removes potential bottlenecks by distributing storage loads across multiple physical paths.
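The failover and load-distribution behavior described above can be illustrated with a minimal Python sketch. It assumes a simple round-robin policy over healthy paths; the `Path` and `Multipath` names are hypothetical, and the actual path selection policy is implemented by the host multipathing software, not by this code.

```python
from dataclasses import dataclass


@dataclass
class Path:
    """One route from the host to the storage target (hypothetical model)."""
    name: str
    healthy: bool = True


class Multipath:
    """Round-robin over healthy paths; skips failed ones (assumed policy)."""

    def __init__(self, paths):
        self.paths = list(paths)
        self._next = 0  # index where the next search starts

    def pick(self):
        """Return the next healthy path, or raise if every path has failed."""
        count = len(self.paths)
        for offset in range(count):
            index = (self._next + offset) % count
            if self.paths[index].healthy:
                self._next = (index + 1) % count
                return self.paths[index]
        raise RuntimeError("no healthy path to storage")


paths = [Path("path-a"), Path("path-b")]
mp = Multipath(paths)
first = mp.pick().name    # load is spread across both paths
second = mp.pick().name
paths[0].healthy = False  # simulate a failed path
after_failure = [mp.pick().name for _ in range(3)]
```

With two healthy paths the requests alternate; once one path is marked failed, every request uses the surviving path.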

The daemon on an ESX server communicates with the iSCSI target in multiple sessions using two or more VMkernel NICs on the host and pinning them to physical NICs on the Cisco Nexus 1000V. Uplink pinning is the only function of multipathing provided by the Cisco Nexus 1000V. Other multipathing functions such as storage binding, path selection, and path failover are provided by code running in the VMkernel.

Setting up iSCSI Multipath is accomplished in the following steps:

Uplink Pinning

Each VMkernel port created for iSCSI access is pinned to one physical NIC. This overrides any NIC teaming policy or port bundling policy. All traffic from the VMkernel port uses only the pinned uplink to reach the upstream switch.

Storage Binding

Each VMkernel port is bound to the iSCSI host bus adapter (VMHBA) associated with the physical NIC to which the VMkernel port is pinned.

The ESX or ESXi host creates the following VMHBAs for the physical NICs.

For detailed information about how to use an iSCSI storage area network (SAN), see the iSCSI SAN Configuration Guide.

Supported iSCSI Adapters

iSCSI Multipath Setup on the VMware Switch

Before enabling or configuring multipathing, networking must be configured for the software or hardware iSCSI adapter. This involves creating a Linux kernel iSCSI port for the traffic between the iSCSI adapter and the physical NIC.

Uplink pinning is done manually by the admin directly on the OpenStack controller.

Storage binding is also done manually by the admin directly on the KVM host or using RCLI.

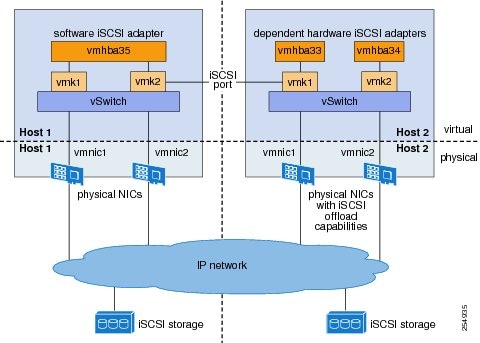

For software iSCSI, only oneVMHBA is required for the entire implementation. All Linux kernel ports are bound to this adapter. For example, in the following illustration, both vmk1 and vmk2 are bound to VMHBA35.

For hardware iSCSI, a separate adapter is required for each NIC. Each Linux kernel port is bound to the adapter of the physical KVM NIC to which it is pinned. For example, in the following illustration, vmk1 is bound to VMHBA33, the iSCSI adapter associated with vmnic1 and to which vmk1 is pinned. Similarly vmk2 is bound to VMHBA34.

The following are the adapters and NICs used in the hardware and software iSCSI multipathing configuration shown in the iSCSI Multipath illustration.

| HBA Type | HBA | VMkernel NIC | VM NIC |
|---|---|---|---|
| Software HBA | VMHBA35 | 1 | 1 |
| Software HBA | VMHBA35 | 2 | 2 |
| Hardware HBA | VMHBA33 | 1 | 1 |
| Hardware HBA | VMHBA34 | 2 | 2 |
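The binding rule for the two adapter types can be sketched in a few lines of Python. This is only an illustration of the rule stated above, using the adapter and NIC names from the illustration; the `expected_bindings` function and its parameters are hypothetical and are not part of any Cisco or VMware tool.

```python
def expected_bindings(pinning, mode, software_hba=None, nic_to_hba=None):
    """Return the VMHBA each VMkernel NIC should be bound to.

    pinning      -- dict of VMkernel NIC name -> pinned physical NIC name
    mode         -- "software" or "hardware"
    software_hba -- the single software iSCSI adapter (software mode)
    nic_to_hba   -- dict of physical NIC -> its hardware iSCSI adapter
    """
    if mode == "software":
        # Software iSCSI: every VMkernel port binds to the one adapter.
        return {vmk: software_hba for vmk in pinning}
    if mode == "hardware":
        # Hardware iSCSI: bind to the adapter of the pinned physical NIC.
        return {vmk: nic_to_hba[nic] for vmk, nic in pinning.items()}
    raise ValueError("mode must be 'software' or 'hardware'")


# Names taken from the illustration described in this section.
pinning = {"vmk1": "vmnic1", "vmk2": "vmnic2"}
software = expected_bindings(pinning, "software", software_hba="vmhba35")
hardware = expected_bindings(
    pinning, "hardware", nic_to_hba={"vmnic1": "vmhba33", "vmnic2": "vmhba34"}
)
```

In software mode both VMkernel NICs map to vmhba35; in hardware mode vmk1 maps to vmhba33 and vmk2 maps to vmhba34, matching the table.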

Guidelines and Limitations

The following are guidelines and limitations for the iSCSI multipath feature:

Only port profiles of type vEthernet can be configured with capability iscsi-multipath.

The port profile used for iSCSI multipath must be an access port profile, not a trunk port profile.

The following are not allowed on a port profile configured with capability iscsi-multipath:

Only VMkernel NIC ports can inherit a port profile configured with capability iscsi-multipath.

The Cisco Nexus 1000V imposes the following limitations if you try to override its automatic uplink pinning.

The iSCSI initiators and storage must already be operational.

VMkernel ports must be created before enabling or configuring the software or hardware iSCSI for multipathing.

VMkernel networking must be functioning for the iSCSI traffic.

Before removing from the DVS an uplink to which an active VMkernel NIC is pinned, you must first remove the binding between the VMkernel NIC and its VMHBA. The following system message displays as a warning:

vsm# 2010 Nov 10 02:22:12 sekrishn-bl-vsm %VEM_MGR-SLOT8-1-VEM_SYSLOG_ALERT: sfport : Removing Uplink Port Eth8/3 (ltl 19), when vmknic lveth8/1 (ltl 49) is pinned to this port for iSCSI Multipathing

Hardware iSCSI is new in Cisco Nexus 1000V Release 4.2(1)SV1(5.1). If you configured software iSCSI multipathing in a previous release, the following are preserved after upgrade:

An iSCSI target and initiator should be in the same subnet.

iSCSI multipathing on the Nexus 1000V currently only allows a single vmknic to be pinned to one vmnic.

Pre-requisites

The iSCSI Multipath feature has the following prerequisites:

You must understand VMware iSCSI SAN storage virtualization. For detailed information about how to use VMware ESX and VMware ESXi systems with an iSCSI storage area network (SAN), see the iSCSI SAN Configuration Guide.

You must know how to set up the iSCSI Initiator on your VMware ESX/ESXi host.

The host is already functioning with one of the following:

You must understand iSCSI multipathing and path failover.

VMware kernel NICs configured to access the SAN external storage are required.

Default Settings

| Parameter | Default |
|---|---|
| Type (port-profile) | vEthernet |
| Description (port-profile) | None |
| VMware port group name (port-profile) | The name of the port profile |
| Switchport mode (port-profile) | Access |
| State (port-profile) | Disabled |

Configuring iSCSI Multipath

Use the following procedures to configure iSCSI Multipath:

Uplink Pinning and Storage Binding

Use this section to configure iSCSI multipathing between hosts and targets over the iSCSI protocol by assigning the vEthernet interface to an iSCSI multipath port profile configured with a system VLAN.

- Process for Uplink Pinning and Storage Binding

- Creating a Port Profile for a VMkernel NIC

- Creating VMkernel NICs and Attaching the Port Profile

- Manually Pinning the NICs

- Identifying the iSCSI Adapters for the Physical NICs

- Binding the VMkernel NICs to the iSCSI Adapter

Process for Uplink Pinning and Storage Binding

Refer to the following process for uplink pinning and storage binding:

| Step 1 | Create a port profile, using the Creating a Port Profile for a VMkernel NIC procedure. |

| Step 2 | Create the VMkernel NICs, using the Creating VMkernel NICs and Attaching the Port Profile procedure. |

| Step 3 | Do one of the following: If you want to override the automatic pinning of NICs, go to the Manually Pinning the NICs procedure. If not, continue with storage binding. |

| Step 4 | You have completed uplink pinning. Continue with the next step for storage binding. |

| Step 5 | Identify the iSCSI adapters, using the Identifying the iSCSI Adapters for the Physical NICs procedure. |

| Step 6 | Bind the NICs to the adapter, using the Binding the VMkernel NICs to the iSCSI Adapter procedure. |

| Step 7 | Verify the configuration, using the Verifying the iSCSI Multipath Configuration procedure. |

Creating a Port Profile for a VMkernel NIC

You can use this procedure to create a port profile for a VMkernel NIC.

Before starting this procedure, you must know or do the following:

You have already configured the host with one port channel that includes two or more physical NICs.

Multipathing must be configured on the interface by using this procedure to create an iSCSI multipath port profile and then assigning the interface to it.

You are logged in to the CLI in EXEC mode.

You know the VLAN ID for the VLAN you are adding to this iSCSI multipath port profile.

The port profile must be an access port profile. It cannot be a trunk port profile. This procedure includes steps to configure the port profile as an access port profile.

Creating VMkernel NICs and Attaching the Port Profile

You can use this procedure to create VMkernel NICs and attach a port profile to them, which triggers the automatic pinning of the VMkernel NICs to physical NICs.

Before starting this procedure, you must know or do the following:

You have already created a port profile using the Creating a Port Profile for a VMkernel NIC procedure, and you know the name of this port profile.

The VMkernel ports are created directly on the vSphere client.

Create one VMkernel NIC for each physical NIC that carries the iSCSI VLAN. The number of paths to the storage device is the same as the number of VMkernel NICs created.

Step 2 of this procedure triggers automatic pinning of VMkernel NICs to physical NICs, so you must understand the following rules for automatic pinning:

A VMkernel NIC is pinned to an uplink only if the VMkernel NIC and the uplink carry the same VLAN.

The hardware iSCSI NIC is picked first if there are many physical NICs carrying the iSCSI VLAN.

The software iSCSI NIC is picked only if there is no available hardware iSCSI NIC.

Two VMkernel NICs are never pinned to the same hardware iSCSI NIC.

Two VMkernel NICs can be pinned to the same software iSCSI NIC.
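The automatic pinning rules in this section can be modeled with a short Python sketch. It is an illustration of the stated rules only: the `Uplink` class and `auto_pin` function are hypothetical, and the tie-breaking order (first matching uplink in the list) is an assumption, not documented behavior of the Cisco Nexus 1000V.

```python
from dataclasses import dataclass


@dataclass
class Uplink:
    """A physical NIC as seen by the pinning logic (hypothetical model)."""
    name: str
    vlans: frozenset
    hardware_iscsi: bool


def auto_pin(vmk_vlans, uplinks):
    """Map each VMkernel NIC to an uplink name, or None if none qualifies.

    vmk_vlans -- dict of VMkernel NIC name -> its access VLAN
    uplinks   -- list of Uplink objects available on the host
    """
    used_hardware = set()
    result = {}
    for vmk, vlan in vmk_vlans.items():
        # Rule: the VMkernel NIC and the uplink must carry the same VLAN.
        candidates = [u for u in uplinks if vlan in u.vlans]
        # Rule: prefer hardware iSCSI NICs, never sharing one between vmks.
        free_hw = [u for u in candidates
                   if u.hardware_iscsi and u.name not in used_hardware]
        # Rule: software iSCSI NICs are a fallback and may be shared.
        software = [u for u in candidates if not u.hardware_iscsi]
        if free_hw:
            choice = free_hw[0].name  # assumption: first match wins
            used_hardware.add(choice)
        elif software:
            choice = software[0].name
        else:
            choice = None
        result[vmk] = choice
    return result


uplinks = [
    Uplink("vmnic1", frozenset({100}), hardware_iscsi=True),
    Uplink("vmnic2", frozenset({100}), hardware_iscsi=False),
    Uplink("vmnic3", frozenset({200}), hardware_iscsi=True),
]
pins = auto_pin({"vmk1": 100, "vmk2": 100, "vmk3": 100, "vmk4": 300}, uplinks)
```

In this example vmk1 takes the only hardware NIC on VLAN 100, vmk2 and vmk3 share the software NIC, and vmk4 is left unpinned because no uplink carries VLAN 300.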

Manually Pinning the NICs

Note | If the pinning done automatically by Cisco Nexus 1000V is not optimal, or if you want to change the pinning, use this procedure to override it with the vemcmd command on the ESX host. |

Before starting this procedure, you must know or do the following:

You are logged in to the ESX host.

You have already created VMkernel NICs and attached a port profile to them.

Before changing the pinning, you must remove the binding between the iSCSI VMkernel NIC and the VMHBA. This procedure includes a step for doing this.

Manual pinning persists across ESX host reboots. Manual pinning is lost if the VMkernel NIC is moved from the DVS to the vSwitch and back.

| Step 1 | List the binding for each VMHBA to identify the binding to remove (iSCSI VMkernel NIC to VMHBA) with the command esxcli swiscsi nic list -d vmhbann.

Example: esxcli swiscsi nic list -d vmhba33

vmk6

pNic name: vmnic2

ipv4 address: 169.254.0.1

ipv4 net mask: 255.255.0.0

ipv6 addresses:

mac address: 00:1a:64:d2:ac:94

mtu: 1500

toe: false

tso: true

tcp checksum: false

vlan: true

link connected: true

ethernet speed: 1000

packets received: 3548617

packets sent: 102313

NIC driver: bnx2

driver version: 1.6.9

firmware version: 3.4.4

vmk5

pNic name: vmnic3

ipv4 address: 169.254.0.2

ipv4 net mask: 255.255.0.0

ipv6 addresses:

mac address: 00:1a:64:d2:ac:94

mtu: 1500

toe: false

tso: true

tcp checksum: false

vlan: true

link connected: true

ethernet speed: 1000

packets received: 3548617

packets sent: 102313

NIC driver: bnx2

driver version: 1.6.9

firmware version: 3.4.4

|

| Step 2 | Remove the binding between the iSCSI VMkernel NIC and the VMHBA. If active iSCSI sessions exist between the host and targets, the iSCSI port cannot be disconnected.

Example: esxcli swiscsi nic remove --adapter vmhba33 --nic vmk6

esxcli swiscsi nic remove --adapter vmhba33 --nic vmk5

|

| Step 3 | From the ESX host, display the auto pinning configuration with the command vemcmd show iscsi pinning.

Example: ~ # vemcmd show iscsi pinning

Vmknic LTL Pinned_Uplink LTL

vmk6 49 vmnic2 19

vmk5 50 vmnic1 18

|

| Step 4 | Manually pin the VMkernel NIC to the physical NIC, overriding the auto pinning configuration, with the command vemcmd set iscsi pinning vmk-ltl vmnic-ltl. Example: ~ # vemcmd set iscsi pinning 50 20 |

| Step 5 | You have completed this procedure. Return to the Process for Uplink Pinning and Storage Binding section. |
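When the pinning has to be checked on several hosts, the output of vemcmd show iscsi pinning can be parsed with a short script. The following Python sketch assumes the output is a header line followed by whitespace-separated rows, as in the example in this procedure; the function names are hypothetical, and the only commands it refers to are the ones shown above.

```python
def parse_iscsi_pinning(text):
    """Parse 'vemcmd show iscsi pinning' output into a list of dicts.

    Assumes four whitespace-separated columns per row:
    Vmknic, LTL, Pinned_Uplink, LTL. Header, prompt, and blank
    lines are skipped because their LTL fields are not numeric.
    """
    rows = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 4:
            continue
        if not (parts[1].isdigit() and parts[3].isdigit()):
            continue
        rows.append({
            "vmknic": parts[0],
            "vmk_ltl": int(parts[1]),
            "uplink": parts[2],
            "uplink_ltl": int(parts[3]),
        })
    return rows


def build_pin_command(vmk_ltl, vmnic_ltl):
    """Build the manual pinning command from the two LTL numbers."""
    return f"vemcmd set iscsi pinning {int(vmk_ltl)} {int(vmnic_ltl)}"


sample = """~ # vemcmd show iscsi pinning
Vmknic LTL Pinned_Uplink LTL
vmk6 49 vmnic2 19
vmk5 50 vmnic1 18
"""
rows = parse_iscsi_pinning(sample)
command = build_pin_command(rows[1]["vmk_ltl"], 20)
```

Here `rows` holds one entry per VMkernel NIC, and `command` reproduces the example from Step 4, which pins the VMkernel NIC at LTL 50 to the uplink at LTL 20.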

Identifying the iSCSI Adapters for the Physical NICs

You can use one of the following procedures in this section to identify the iSCSI adapters associated with the physical NICs.

Identifying iSCSI Adapters on the vSphere Client

You can use this procedure on the vSphere client to identify the iSCSI adapters associated with the physical NICs.

Before beginning this procedure, you must know or do the following:

Identifying iSCSI Adapters on the Host Server

You can use this procedure on the ESX or ESXi host to identify the iSCSI adapters associated with the physical NICs.

Before beginning this procedure, you must do the following:

Binding the VMkernel NICs to the iSCSI Adapter

You can use this procedure to manually bind the VMkernel NICs to the iSCSI adapter corresponding to the pinned physical NICs.

Before starting this procedure, you must know or do the following:

Converting to a Hardware iSCSI Configuration

You can use the procedures in this section on an ESX 5.1 host to convert from a software iSCSI configuration to a hardware iSCSI configuration.

Before starting the procedures in this section, you must know or do the following:

Removing the Binding to the Software iSCSI Adapter

You can use this procedure to remove the binding between the iSCSI VMkernel NIC and the software iSCSI adapter.

Adding the Hardware NICs to the DVS

You can use this procedure, if the hardware NICs are not on Cisco Nexus 1000V DVS, to add the uplinks to the DVS using the vSphere client.

Before starting this procedure, you must know or do the following:

What to Do Next

You have completed this procedure. Return to the Process for Converting to a Hardware iSCSI Configuration section.

Changing the VMkernel NIC Access VLAN

You can use the procedures in this section to change the access VLAN, or the networking configuration, of the iSCSI VMkernel NIC.

Process for Changing the Access VLAN

You can use the following steps to change the VMkernel NIC access VLAN:

| Step 1 | In the vSphere Client, disassociate the storage configuration made on the iSCSI NIC. |

| Step 2 | Remove the path to the iSCSI targets. |

| Step 3 | Remove the binding between the VMkernel NIC and the iSCSI adapter using the Removing the Binding to the Software iSCSI Adapter procedure. |

| Step 4 | Move the VMkernel NIC from the Cisco Nexus 1000V DVS to the vSwitch. |

| Step 5 | Change the access VLAN, using the Changing the Access VLAN procedure. |

| Step 6 | Move the VMkernel NIC back from the vSwitch to the Cisco Nexus 1000V DVS. |

| Step 7 | Find an iSCSI adapter, using the Identifying the iSCSI Adapters for the Physical NICs procedure. |

| Step 8 | Bind the NIC to the adapter, using the Binding the VMkernel NICs to the iSCSI Adapter procedure. |

| Step 9 | Verify the iSCSI multipathing configuration, using the Verifying the iSCSI Multipath Configuration procedure. |

Changing the Access VLAN

Before starting this procedure, you must know or do the following:

What to Do Next

You have completed this procedure.

Verifying the iSCSI Multipath Configuration

You can use the commands in this section to verify the iSCSI multipath configuration.

| Command | Purpose |
|---|---|
| ~ # vemcmd show iscsi pinning | Displays the auto pinning of VMkernel NICs. |
| esxcli swiscsi nic list -d vmhba33 | Displays the iSCSI adapter binding of VMkernel NICs. |
| show port-profile [brief \| expand-interface \| usage] [name profile-name] | Displays the port profile configuration. |

Managing Storage Loss Detection

This section describes the command that provides the configuration to detect storage connectivity losses and provides support when storage loss is detected. When VSMs are hosted on remote storage systems such as NFS or iSCSI, storage connectivity can be lost. This connectivity loss can cause unexpected VSM behavior.

Use the following command syntax to configure storage loss detection:

system storage-loss { log | reboot } [ time <interval> ]

no system storage-loss [ { log | reboot } ] [ time <interval> ]

The time interval is how often the VSM checks the storage connectivity status. If a storage loss is detected, a syslog message is displayed. The default interval is 30 seconds, and you can configure an interval from 30 to 600 seconds. The default configuration for this command is:

system storage-loss log time 30

Note | Configure this command in EXEC mode. Do not use configuration mode. |

The following describes how this command manages storage loss detection:

Log only: A syslog message is displayed stating that a storage loss has occurred. The administrator must take action immediately to avoid an unexpected VSM state.

Reboot: The VSM on which the storage loss is detected is reloaded automatically to avoid an unexpected VSM state.

Note | Do not keep both the active and standby VSMs on the same remote storage, so that storage losses do not affect the VSM operations. |
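If the storage loss command is generated by a script, the documented limits can be checked before the command is sent to the VSM. The following Python sketch builds the command string from the syntax and the 30 to 600 second range given above; the `storage_loss_command` function is a hypothetical helper, not part of the Cisco CLI.

```python
def storage_loss_command(action="log", interval=30):
    """Build a 'system storage-loss' command string.

    action   -- "log" (syslog only) or "reboot" (reload the VSM)
    interval -- check interval in seconds, 30 to 600 inclusive
    """
    if action not in ("log", "reboot"):
        raise ValueError("action must be 'log' or 'reboot'")
    if not isinstance(interval, int) or not 30 <= interval <= 600:
        raise ValueError("interval must be an integer from 30 to 600")
    return f"system storage-loss {action} time {interval}"


default_command = storage_loss_command()
reboot_command = storage_loss_command("reboot", 120)
```

Called with no arguments, the helper returns the default configuration stated in this section. Values outside the documented range raise an error instead of producing a command the VSM would reject.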

Before beginning this procedure, you must know or do the following:

You are logged in to the CLI in EXEC mode.

Related Documents

| Related Topic | Document Title |
|---|---|
| VMware SAN Configuration | VMware SAN Configuration Guide |

Feature History for iSCSI Multipath

| Feature | Releases | Feature Information |
|---|---|---|
| Hardware iSCSI Multipath | 4.2(1)SV1(4) | Added support for hardware iSCSI Multipath. |
| Configuring iSCSI Multipath | 4.0(4)SV1(1) | This feature was introduced. |