Cisco SD-WAN Large Global WAN Design Case Study


Bank of the Earth Company Background and Legacy Network

Network Topology (Fabric and Service VPN Data Planes)

Data Center Head-End SD-WAN Router Design

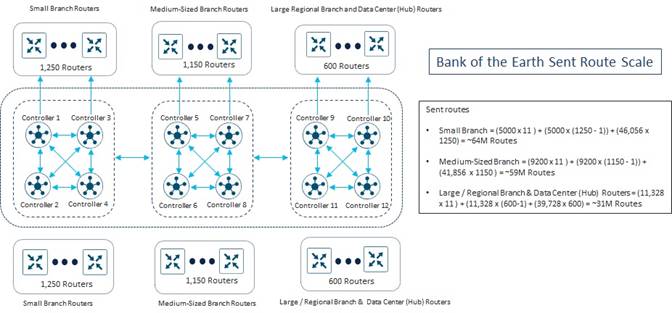

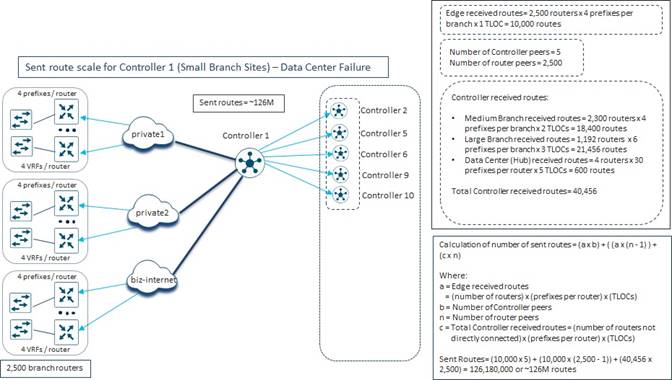

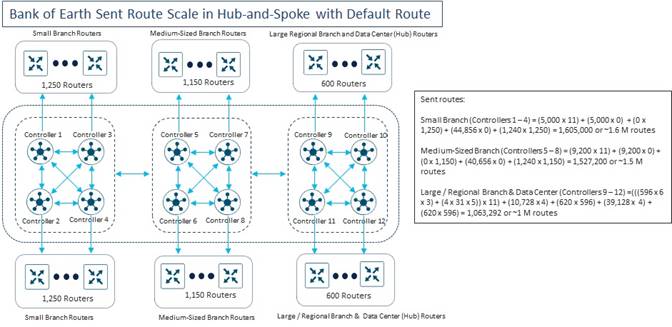

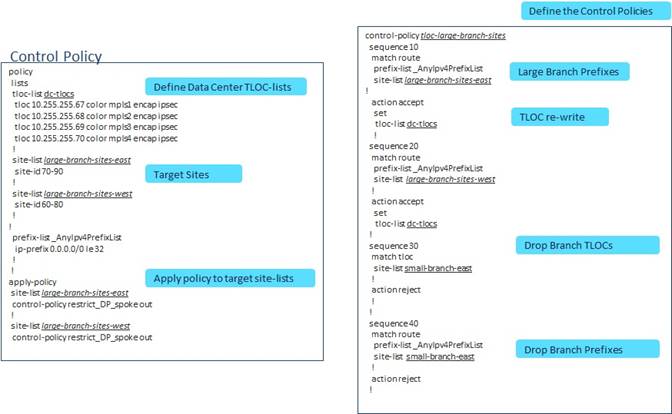

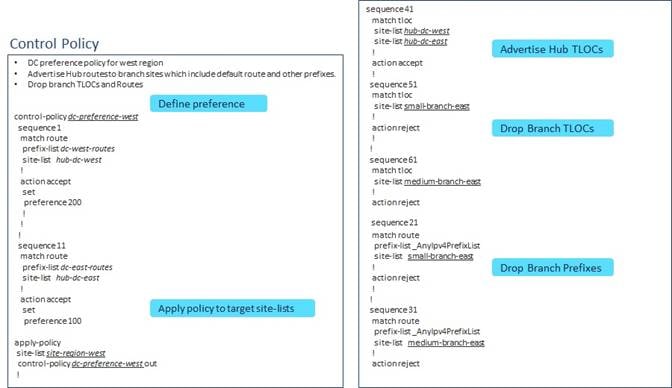

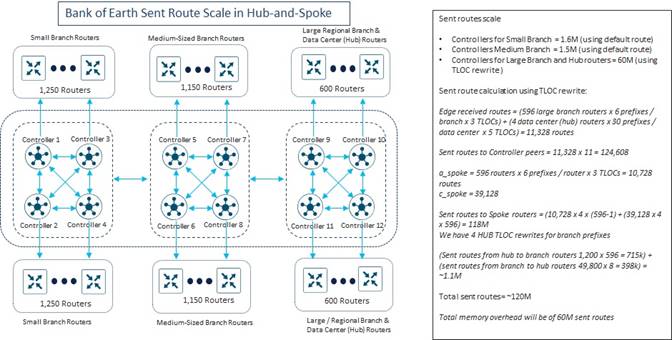

SD-WAN Controller OMP Route Calculations

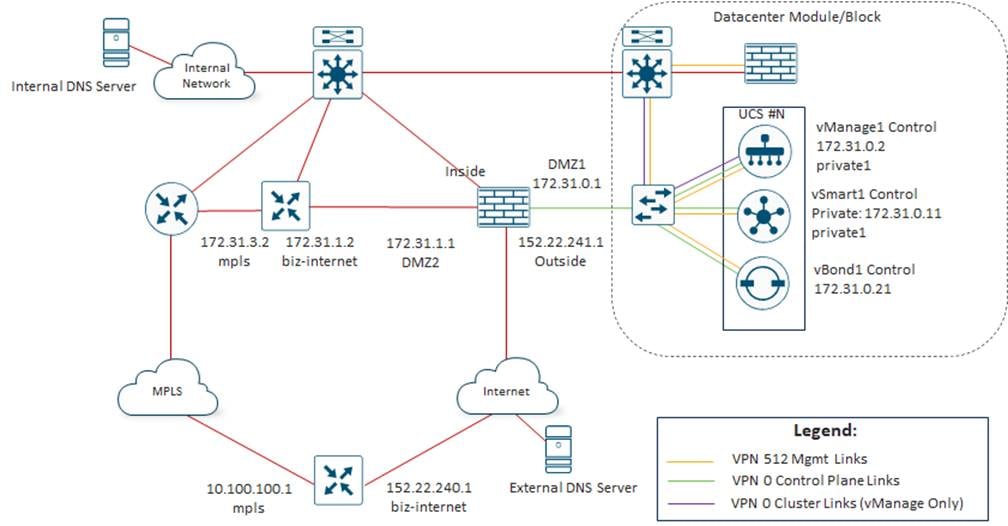

SD-WAN Controller Server Layout and IP Addressing

Appendix A: Changes from Previous Versions

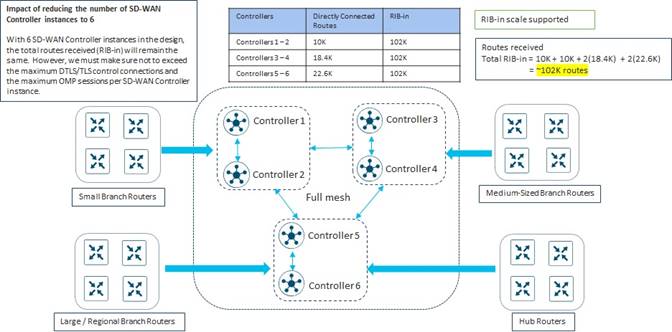

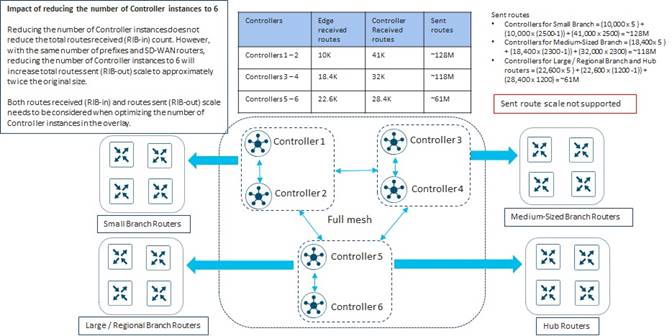

Appendix C: Alternative SD-WAN Controller Design

Appendix D: SD-WAN Controller Affinity Deep-Dive

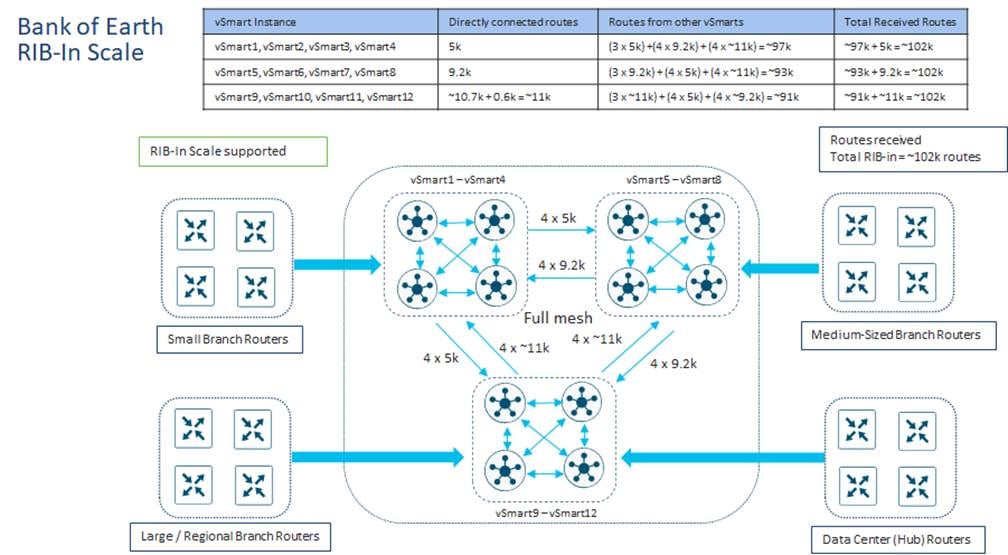

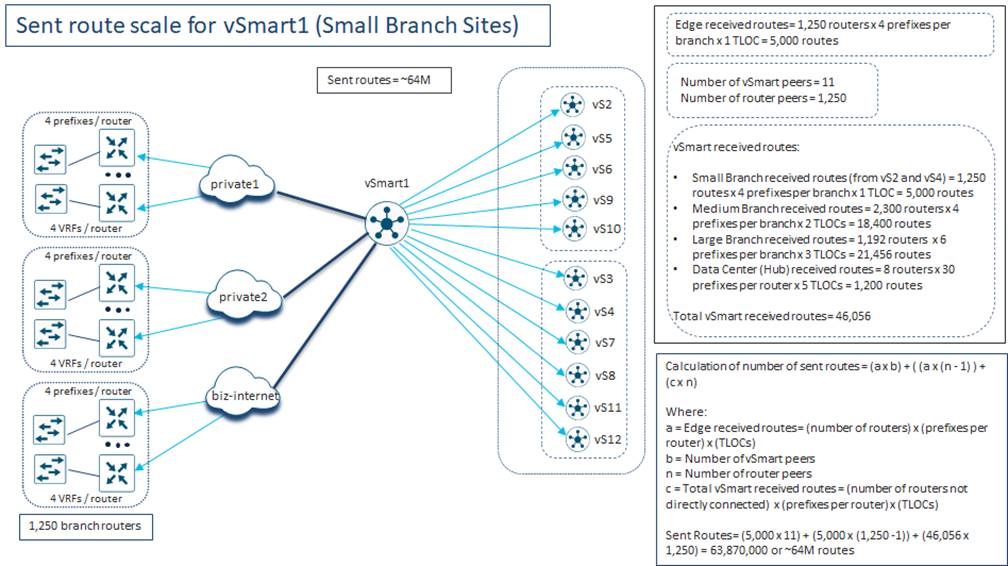

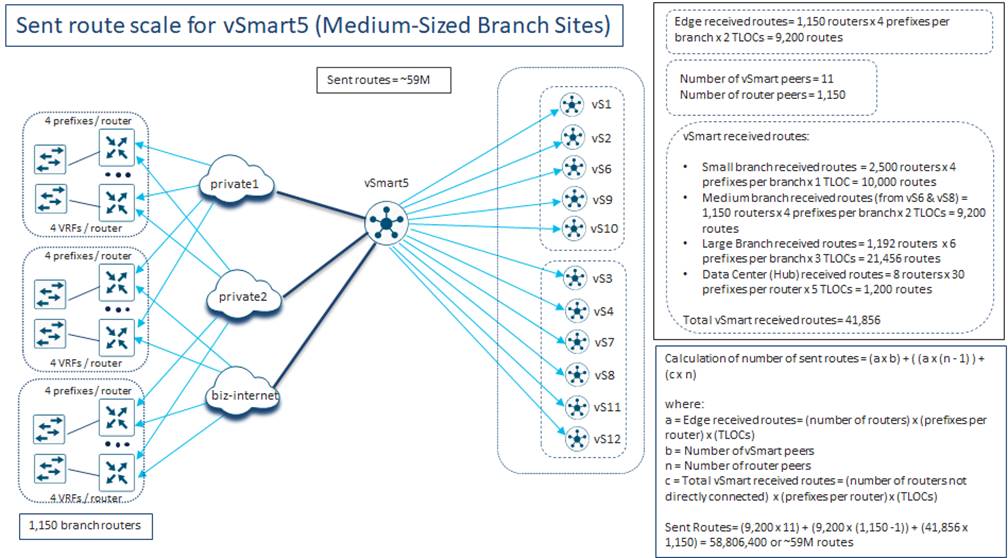

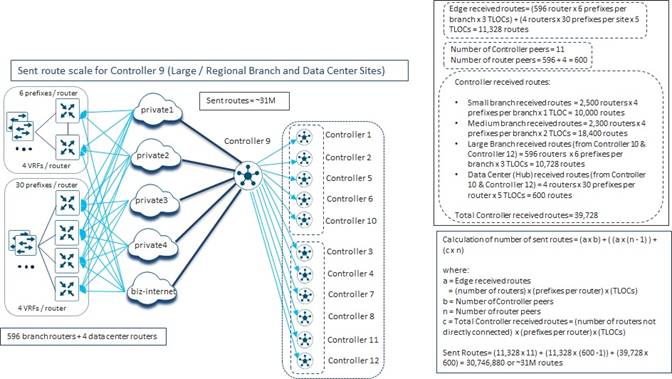

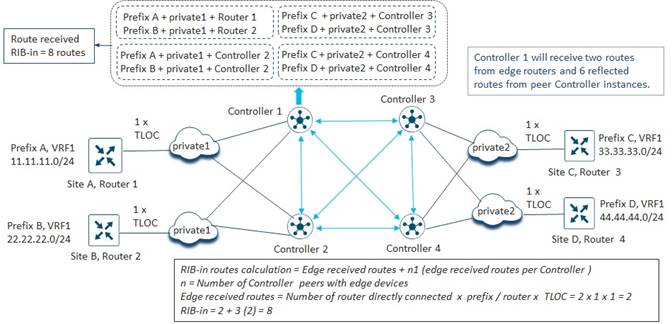

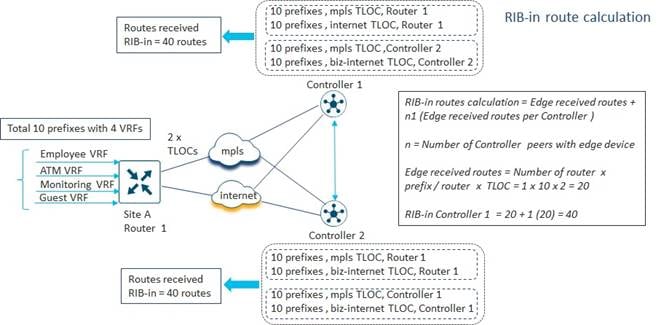

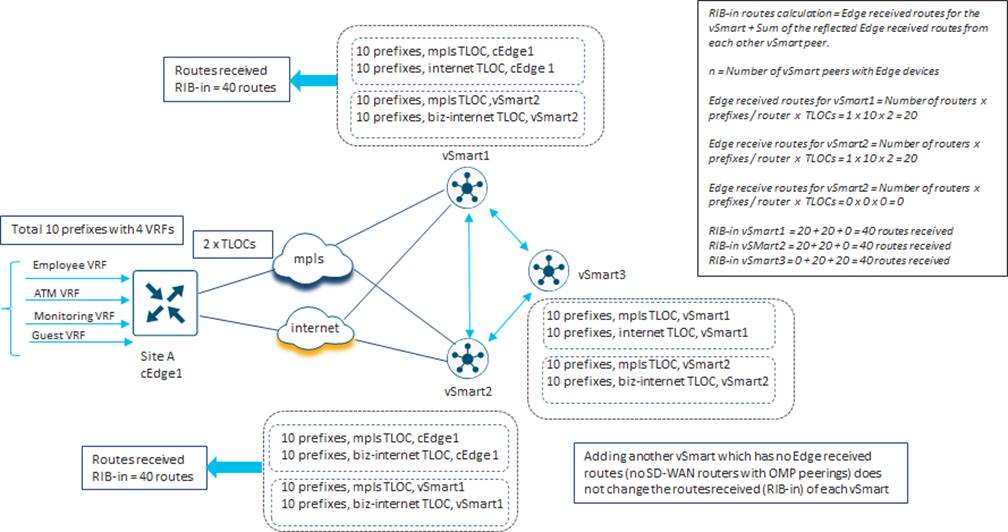

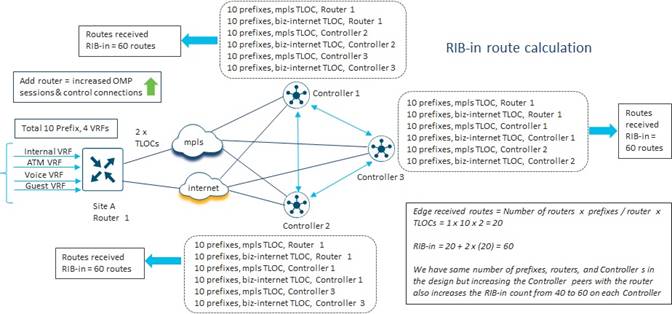

Appendix E: Examples for Calculating OMP Routes (RIB-in & RIB-out) in a Deployment

The designs discussed within this document are presented in the form of a case study for a fictional large global WAN customer – Bank of the Earth. Bank of the Earth is not a real customer and the network discussed within this document is not a real network. However, the designs presented within this guide are based on actual customer deployments. The purpose of this document is to present some of the considerations that a network engineer will need to focus attention upon and address when designing and implementing a large Cisco Catalyst SD-WAN deployment.

This guide is intended to provide technical guidance around the concepts required for the design of a large global WAN using Cisco Catalyst SD-WAN technology.

Audience

The audience for this document includes network design engineers, network operations personnel, and security operations personnel who wish to implement Cisco Catalyst SD-WAN networks.

Bank of the Earth Company Background and Legacy Network

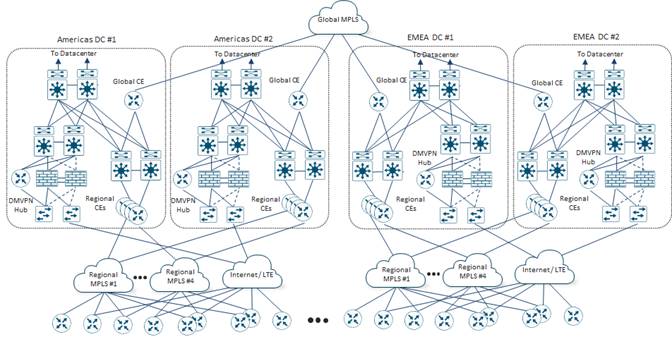

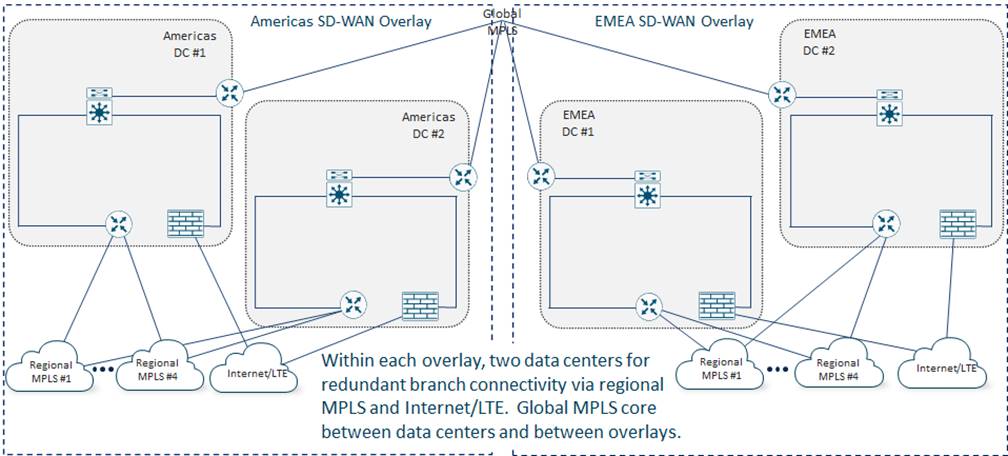

Bank of the Earth is a large multi-national commercial and retail bank with branches located throughout Europe (EMEA) and North America (Americas). Their legacy network is based upon a combination of MPLS Layer 3 VPN (wired or private LTE/5G) and Dynamic Multipoint VPN (DMVPN) over Internet technologies.

Bank of the Earth uses a traditional global MPLS carrier core to connect four main data center sites – two located in Europe (EMEA DC#1 and EMEA DC#2) and two located in North America (Americas DC#1 and Americas DC#2). Due to the business criticality of Bank of the Earth’s financial applications, redundant data centers are maintained within each geographic area (Americas and EMEA) – each capable of supporting all the branch locations within their respective geographic areas.

Bank of the Earth’s legacy network design is largely the result of acquisitions and mergers over the years. For example, Bank of the Earth originally had data center and branch sites only on the western side of the Americas. Through mergers and acquisitions, they acquired branch and data center sites on the eastern side of the Americas. Then, through consolidation, Bank of the Earth was able to reduce the number of data centers to two: one located on the western side of the Americas, which primarily services branches on the western side, and one located on the eastern side of the Americas, which primarily services branches on the eastern side. A similar growth pattern occurred in EMEA.

Up to four regional MPLS carriers connect branch sites to both data centers within each geographic area (EMEA and the Americas). Again, this is largely the result of mergers and acquisitions over the years. For example, regional MPLS providers #1 and #2 primarily service branch locations in the western side of the Americas, while regional MPLS providers #3 and #4 primarily service branch locations in the eastern side of the Americas. Since MPLS contracts are often long-term, spanning multiple years, Bank of the Earth has yet to consolidate to a smaller set of regional MPLS carriers. DMVPN over Internet connectivity is also used between some branch locations and the data centers within their respective geographic areas.

The following figure provides a high-level overview of Bank of the Earth’s legacy network.

Branch Design

Bank of the Earth has slightly under 10,800 branch sites overall. Branch sites vary considerably in size and design.

Large / Regional Branch Sites have dual active circuits from different regional MPLS carriers, with Internet (wired broadband or public LTE/5G) connectivity on both routers to provide additional bandwidth and an additional layer of high availability. Bank of the Earth has made the business decision that Large / Regional Branch Sites are business critical to their operations, and therefore require the deployment of dual routers for WAN access.

Medium-Sized Branch Sites typically have either one MPLS circuit from a regional carrier along with Internet (wired broadband or public LTE/5G) connectivity, or dual MPLS circuits from two regional carriers. In either scenario, both circuits are actively used. Bank of the Earth has made the business decision that the criticality of Medium-Sized Branch Sites does not warrant dual routers. Instead, a strategy for rapid replacement of networking equipment has been adopted for these sites. Hence, only a single router is deployed in Medium-Sized Branch Sites.

Small Branch Sites vary widely from a single ATM co-located within a larger retail site, such as a grocery store; to a small kiosk, which offers limited financial services, located within a shopping mall. Generally, a single circuit – either MPLS or Internet (wired broadband or LTE/5G) – is provisioned, depending upon what was available at the site. Bank of the Earth has made the business decision that the criticality of Small Branch Sites is low, and therefore does not warrant even dual circuits. A single router with integrated switch ports is typically deployed within Small Branch Sites. Where the number of required switch ports exceeds the number of ports on the integrated switch, a small standalone Layer 2 switch may be deployed along with the router.

The following table shows the distribution of Large / Regional, Medium-Sized, and Small Branch Sites within the Bank of the Earth network.

Table 1. Branch Sizes within the Bank of the Earth Network

| Branch or Data Center | Americas Sites | EMEA Sites | Total Sites | Routers Per Site | Americas Routers | EMEA Routers | Total Routers | Circuits per Site |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Large / Regional | 596 | 596 | 1,192 | 2 | 1,192 | 1,192 | 2,384 | 4 |
| Medium-Sized | 2,300 | 2,300 | 4,600 | 1 | 2,300 | 2,300 | 4,600 | 2 |
| Small | 2,500 | 2,500 | 5,000 | 1 | 2,500 | 2,500 | 5,000 | 1 |
| Data Center | 2 | 2 | 4 | 4 | 8 | 8 | 16 | 5 |
| Total | 5,398 | 5,398 | 10,796 | --- | 6,000 | 6,000 | 12,000 | --- |

| Technical Note: |

| For brevity, the two sides of the network (Americas and EMEA) within the Bank of the Earth case study are considered identical. In other words, the number of branch and data center sites, number of devices per site, number of circuits per site, etc., are the same for both sides of the network. Even the design of each of the data centers is considered identical. In actual production deployments this will likely not be the case. However, by assuming both sides are identical, much of the redundancy of having to repeat information can be eliminated. Within this case study, each side of the Bank of the Earth network (Americas and EMEA) translates to a separate SD-WAN overlay. Hence, the discussion within this guide will be focused primarily on one SD-WAN overlay – with the assumption that the design principles discussed for one overlay can be equally applied to the other overlay. Again, this is done to reduce the amount of information that has to be duplicated within this case study. In a real deployment, each SD-WAN overlay would have to be designed separately. |
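The totals in Table 1 can be cross-checked with a few lines of arithmetic. The following is a minimal sketch in Python; the per-overlay figures come from Table 1, while the dictionary and function names are illustrative only and not part of any Cisco tooling.

```python
# Per-overlay figures taken from Table 1 (one side of the network).
# Each entry maps a site category to (number of sites, routers per site).
SITES_PER_OVERLAY = {
    "Large / Regional": (596, 2),
    "Medium-Sized": (2300, 1),
    "Small": (2500, 1),
    "Data Center": (2, 4),
}


def overlay_totals(sites):
    """Return (total sites, total routers) for one overlay."""
    total_sites = sum(count for count, _ in sites.values())
    total_routers = sum(count * per_site for count, per_site in sites.values())
    return total_sites, total_routers


def branch_routers(sites):
    """Return the router count at branch sites only (head-ends excluded)."""
    return sum(
        count * per_site
        for name, (count, per_site) in sites.items()
        if name != "Data Center"
    )


if __name__ == "__main__":
    sites, routers = overlay_totals(SITES_PER_OVERLAY)
    print("One overlay:", sites, "sites,", routers, "routers")
    # The case study treats the Americas and EMEA as identical, so double it.
    print("Both overlays:", 2 * sites, "sites,", 2 * routers, "routers")
    print("Branch routers per overlay:", branch_routers(SITES_PER_OVERLAY))
```

Because the case study treats both sides as identical, doubling the per-overlay result reproduces the Total Sites and Total Routers columns.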

Data Center Design

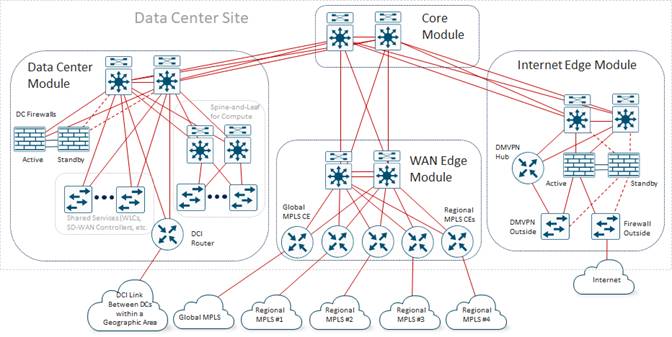

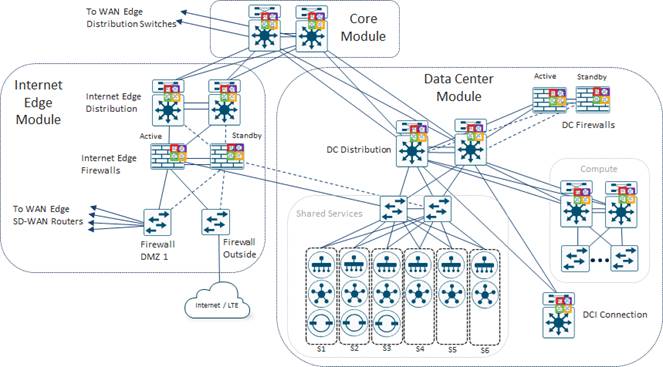

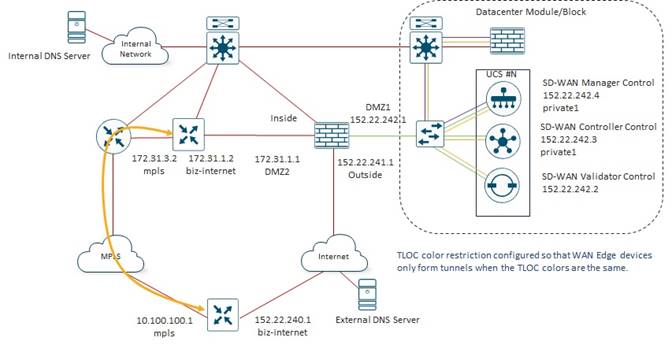

The following figure shows the general layout of one of the Data Center sites showing the legacy connectivity before migrating to SD-WAN.

Each Data Center Site consists of separate Core, Data Center, WAN Edge, and Internet Edge functional blocks / modules which connect to form a traditional hierarchical LAN design. Each Data Center Site is connected to all the regional MPLS carriers within its geographic area (Americas or EMEA), as well as the Bank of the Earth global MPLS backbone through the WAN Edge module. Each Data Center Site also has Internet connectivity, which is used for outbound Internet connectivity from the data center and branch sites, as well as for Internet-based DMVPN connections to some branch sites. The data center functional block / module within the Data Center site houses on-prem compute as well as a DCI link between Data Center Sites within the geographic area (Americas or EMEA).

As legacy router platforms within branch locations began to reach their end-of-life, Bank of the Earth made the business decision to migrate to SD-WAN simultaneously with refreshing their aging router platforms. The primary motivation for migrating to SD-WAN was centralized configuration and policy management, which would offset the need for additional staff to continue to deploy and maintain Bank of the Earth’s overall wide-area network. Additional benefits identified by Bank of the Earth were the Application Visibility (AV) and Application Aware Routing (AAR) features of Cisco Catalyst SD-WAN, which could be leveraged to make optimal use of bandwidth to sites with multiple MPLS and/or Internet transports. Finally, the future benefits of integration with cloud-based security services via Secure Access Service Edge (SASE), as well as Direct Internet Access (DIA) from the branch for Software-as-a-Service (SaaS) applications and guest access were a consideration.

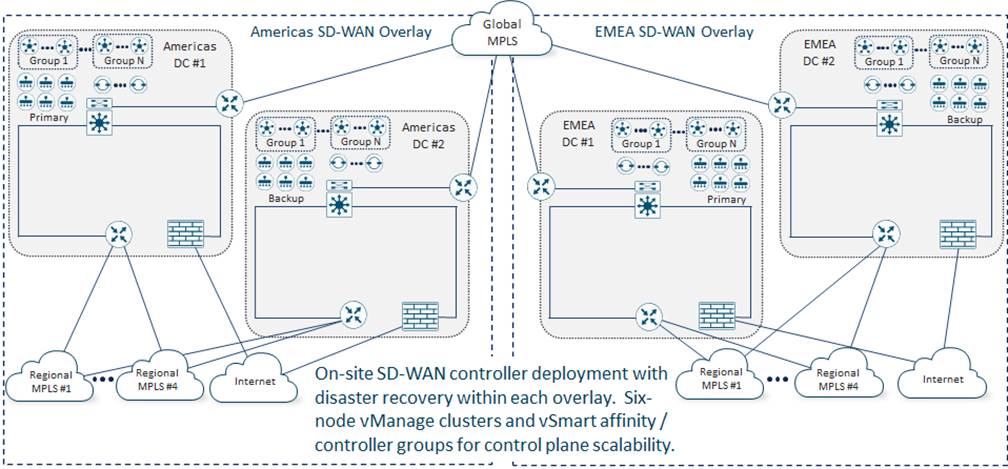

The overall Bank of the Earth Cisco Catalyst SD-WAN deployment consists of two overlays based on geographic area – EMEA and the Americas.

This design choice aligns with the location of their data centers – two in Europe and two in North America – as well as the existing connectivity of branch sites to their respective data centers through regional MPLS carriers within each geographic area.

The primary drivers for the decision to go with two overlays were as follows:

● Since the total number of branch sites within Bank of the Earth’s network is approximately 10,800, the total number of SD-WAN devices required within the branch sites alone exceeds the number of devices currently supported by a single Cisco Catalyst SD-WAN overlay.

| Technical Note: |

| Guidance as to server size recommendations, as well as the maximum number of devices supported per SD-WAN overlay based upon the software release, can be found at the following link: https://www.cisco.com/c/en/us/td/docs/routers/sdwan/release/notes/compatibility-and-server-recommendations/ch-server-recs-20-9-combined.html |

● Each SD-WAN overlay defines a separate fault-domain within their overall network deployment. SD-WAN devices within an overlay rely on the centralized control and management planes provided by the SD-WAN Manager, SD-WAN Controllers and SD-WAN Validators. Hence, implementing multiple overlays constrains each fault-domain to a smaller number of SD-WAN devices within a smaller geographic area.

● Fewer SD-WAN devices within each overlay results in fewer OMP routes per overlay. This can result in lower CPU and memory load on each of the SD-WAN Controllers within the overlay.

● Fewer SD-WAN devices and sites within each overlay can result in smaller centralized policy per overlay. Smaller centralized policy, together with fewer SD-WAN Controllers per overlay (a consequence of fewer SD-WAN routers per overlay), can result in faster deployment of, and modifications to, centralized policy within the overlay. Bank of the Earth needed to make sure the policy push times fit within the scheduled change windows allowed for network changes.

● Fewer SD-WAN routers within each overlay can result in lower SAIE (formerly known as DPI) / statistics collection per overlay. This can result in lower load in terms of CPU and memory on the SD-WAN Manager instances within each cluster, as well as longer storage times (in terms of days and/or weeks) that the statistics are available for viewing within SD-WAN Manager.

● Fewer SD-WAN routers within each overlay can result in a lower number of API calls needed to troubleshoot and monitor the SD-WAN devices within the overlay. This can result in lower CPU utilization on each SD-WAN Manager instance within the cluster that services the API calls.

Bank of the Earth also recognizes the complications associated with the choice of implementing two overlays:

● Two separate overlays must be managed, including creating and maintaining separate templates and/or profiles for SD-WAN devices within each overlay; as well as separate policies which must be configured and managed within the SD-WAN Manager cluster within each overlay. In other words, there is no single management pane of glass with multiple overlays.

● There is no end-to-end application visibility and/or statistics collection between overlays within SD-WAN Manager. If Bank of the Earth desires end-to-end application visibility and/or statistics they can use functionality such as the collection and export of flow data via NetFlow to 3rd party tools, such as LiveAction. Alternatively, they can leverage the APIs within SD-WAN Manager to periodically export data to 3rd party tools which can provide a consolidated view of application visibility and/or statistics across their overall network deployment.

● Segmentation via Service VPNs can be implemented within each SD-WAN overlay. However, Bank of the Earth must extend segmentation across the global MPLS network if they wish to maintain segmentation between overlays.

● There is additional cost in terms of physical servers needed to run two sets of SD-WAN control components (primary and backup SD-WAN Manager clusters, in addition to SD-WAN Controllers and SD-WAN Validators) within each overlay.

Despite the complications associated with implementing separate overlays, Bank of the Earth decided that the benefits of greater scale, potentially smaller centralized policy and faster policy push, and smaller fault domains outweighed the loss of a single management pane of glass, the additional work needed to extend segmentation across the global MPLS network, and the additional cost of physical servers.

Bank of the Earth also actively monitors the status and health of each of the SD-WAN overlays, including CPU load and memory usage on each of the SD-WAN Manager and SD-WAN Controller instances, the number of routes received and sent by each SD-WAN Controller instance, how long it takes to deploy policy and make configuration changes to their network, etc. This monitoring data is used to determine if each existing SD-WAN overlay needs to be further split into multiple overlays, each with fewer SD-WAN devices, at some point in the future, to maintain their performance and scale targets.

Network Topology (Fabric and Service VPN Data Planes)

The primary data flow pattern within Bank of the Earth’s legacy network was client-server based. Devices owned, managed, and located within Bank of the Earth branch sites access applications running on servers within the on-prem data centers in each of their respective geographic areas. Bank of the Earth saw no reason why the data flow patterns would be any different when migrating to an SD-WAN network infrastructure. Hence, the client-server data flow pattern favored the deployment of a hub-and-spoke fabric data plane topology within each SD-WAN overlay.

With a hub-and-spoke fabric data plane topology each branch site only forms persistent SD-WAN tunnels to the head-end routers located within the data center hub sites, and not with other branch sites. Since each Service VPN relies on the underlying fabric data plane topology, a hub-and-spoke fabric data plane topology implies that the data plane topology of each Service VPN is also hub-and-spoke.

In a hub-and-spoke fabric data plane topology, each branch router only needs to form one SD-WAN tunnel to each of the head-end routers within each data center, for each WAN transport (VPN 0) tunnel interface / TLOC – assuming the use of color restriction between TLOCs. This can allow for the deployment of router platforms that support fewer overall SD-WAN tunnels at the branch sites. Within a hub-and-spoke fabric data plane topology, it is only the head-end routers within the data centers which must be sized appropriately to support large numbers of SD-WAN tunnels.

Referring to Table 1 above, the number of branch routers within each overlay is just under 6,000. Bank of the Earth concluded that the number of SD-WAN tunnels required to form a full-mesh within each SD-WAN overlay would exceed the maximum number of tunnels supported by the router platforms they were targeting for the branch locations. A larger router platform could be deployed within each branch site, but that would exceed the price-point they were targeting for the branch locations. Therefore, Bank of the Earth made the decision to implement a hub-and-spoke fabric data plane topology within each SD-WAN overlay.
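The scale difference between the two topologies can be illustrated with simple arithmetic. The sketch below is in Python and rests on simplifying assumptions that are not taken from the source: every router has the same number of TLOCs, color restriction is in use, and Tunnel Groups are ignored (so the hub-and-spoke figure is an upper bound). The function names are hypothetical.

```python
def full_mesh_tunnels_per_router(total_routers, tlocs_per_router=1):
    """Tunnels a single router terminates in a full mesh.

    Assumes color restriction, so each local TLOC builds one tunnel to
    each of the other routers in the overlay.
    """
    return (total_routers - 1) * tlocs_per_router


def hub_and_spoke_tunnels_per_branch(head_end_routers, tlocs_per_router=1):
    """Upper bound on tunnels a branch router terminates when it only
    peers with the data center head-end routers (Tunnel Groups ignored)."""
    return head_end_routers * tlocs_per_router


if __name__ == "__main__":
    # Table 1: 6,000 routers per overlay, 8 of which are head-end routers.
    print("Full mesh, single TLOC:", full_mesh_tunnels_per_router(6000))
    print("Hub-and-spoke, single TLOC:", hub_and_spoke_tunnels_per_branch(8))
    print("Hub-and-spoke, two TLOCs:", hub_and_spoke_tunnels_per_branch(8, 2))
```

Even for a single-TLOC branch router, the full-mesh figure is several orders of magnitude larger than the hub-and-spoke figure, which is why only the head-end routers need to be sized for large tunnel counts.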

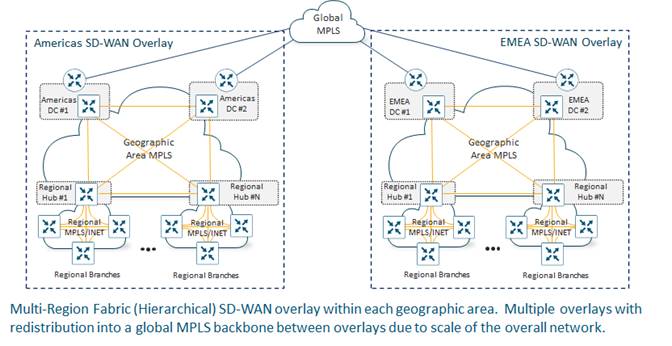

Hierarchical SD-WAN Consideration

As part of the SD-WAN initiative, Bank of the Earth considered re-designing the network infrastructure within each SD-WAN overlay to be based on multiple smaller regions, hierarchically connected to an SD-WAN backbone. This would require the deployment of a multi-region fabric Cisco Catalyst SD-WAN design (also known as a hierarchical SD-WAN design) – as shown in the following figure.

A multi-region fabric (hierarchical) SD-WAN design can result in reduced complexity, in terms of the centralized control policy, needed to create a hub-and-spoke topology. However, it would also require Bank of the Earth to re-architect their network to utilize some of the Large / Regional Branch Sites as SD-WAN Regional Hub Sites.

By deploying multiple Regional Hub Sites, the number of SD-WAN devices per regional fabric is smaller, and therefore the number of SD-WAN tunnels required within each regional full-mesh of branch sites would also be smaller. This would allow Bank of the Earth to utilize SD-WAN routers with lower price-points within the branch sites. However, this design would also require significant work in re-distributing circuits and circuit bandwidth from existing Data Center Sites to Regional Hub Sites to accommodate the design. The expanded role of the Large / Regional Branch Sites functioning as SD-WAN Regional Hub Sites would also require Bank of the Earth to re-evaluate their support model, which is based primarily upon having the necessary staff, physical space for equipment, uninterruptible power supplies (UPS), air conditioning, etc., within their Data Center Sites today. Therefore, Bank of the Earth decided to table the multi-region fabric (hierarchical) SD-WAN design initially and will consider it as a possible future design.

Network Topology Summary and Decision

Bank of the Earth concluded that the fabric data plane topology (and therefore, all the Service VPNs) within each SD-WAN overlay will be hub-and-spoke, based upon the following:

● The data flow patterns within their legacy MPLS-based network match a hub-and-spoke network topology. Bank of the Earth did not see any upcoming changes to the data flow pattern with or without SD-WAN.

● A hub-and-spoke network topology decreases the number of tunnels required at each of the branch sites. This allowed Bank of the Earth to deploy platforms that support fewer SD-WAN tunnels, which also fit within their price point, at the branch locations. It is only the head-end hub routers that needed to be scaled in terms of throughput and tunnel capacity.

● Bank of the Earth was satisfied that scalability of the data throughput at the head-end (in a hub-and-spoke topology) could be accomplished through horizontal scaling – by deploying multiple Catalyst router platforms at the head-end data center locations. This is discussed in the Data Center Design section of this document.

● Bank of the Earth was satisfied that scalability of the tunnel count supported at the head-end (in a hub-and-spoke topology) could be accomplished through multiple Catalyst router platforms with the use of TLOC color restriction and Tunnel Groups. This is also discussed in the Data Center Design section of this document.

Bank of the Earth also recognized the following complications to the choice of a hub-and-spoke fabric data plane topology:

● Spoke-to-spoke (branch-to-branch) traffic must hairpin through the hub (head-end) routers. For voice and/or video applications, this could increase end-to-end latency, lowering the overall quality of experience (QoE) of the call. Bank of the Earth plans to evaluate the use of Dynamic On-Demand Tunnels or a multi-region fabric SD-WAN design (also known as a hierarchical SD-WAN design) at a future point, as a possible means of mitigating this potential issue.

● A hub-and-spoke network topology introduces more complexity in terms of the centralized control policy needed to create the hub-and-spoke topology itself, particularly if TLOC rewrites are needed. The added complexity could result in larger centralized control policy, which could take longer to deploy or change within a production network. Bank of the Earth plans to evaluate a multi-region fabric (hierarchical) SD-WAN design at a future point, as a means of mitigating this potential issue as well.

After completing the migration from their legacy MPLS-based network design to SD-WAN, Bank of the Earth made the business decision to implement segmentation across their network for increased security. Up to four separate Service VPNs may be deployed within branch and data center sites. Strict separation of traffic between Service VPNs is both required and maintained by Bank of the Earth.

These Service VPNs consist of the following:

● Service VPN 10 (Employee VPN) – Used for internal business applications

● Service VPN 20 (ATM VPN) – Used for ATMs and other devices accessing internal financial applications

● Service VPN 30 (Monitoring VPN) – Used for logging information (syslog and/or SNMP traps) and in-band management

● Service VPN 40 (Guest VPN) – Used primarily for guest Wi-Fi access to the customer-facing Bank of the Earth web applications reachable via the Internet

Bank of the Earth provides additional customer services which are accessed primarily through public-facing web applications. These applications are currently hosted within Bank of the Earth’s on-prem data centers but may transition to cloud-hosted data centers at some point in the future. A portal where customers can opt in to the guest Wi-Fi services at a branch site gives Bank of the Earth additional opportunities to reach their customers with more personalized service, and gives customers a means to access these services.

Medium-Sized and Large / Regional Branch Sites require all four Service VPNs. Small Branch Sites require Service VPN 20 (ATM VPN) and Service VPN 30 (Monitoring VPN). If a customer-facing representative is located at the Small Branch Site, meaning that the site offers more than just access to ATMs, the site may also require Service VPN 10 (Employee VPN) and Service VPN 40 (Guest VPN). Bank of the Earth has made the business decision to extend Service VPN 40 (Guest VPN) to small branch offices where it is cost effective to provision and maintain guest Wi-Fi access in such small locations. Where it is not cost effective, customers can still access customer-facing web applications either through LTE/5G Internet access directly from the customer’s mobile device or through guest Wi-Fi networks provided by the mall operator or retail store in which the small branch is located.
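The Service VPN requirements per site type can be expressed as a small lookup. The following Python sketch is one possible reading of the rules above: it assumes that a staffed Small Branch Site always receives Service VPN 10, and that Service VPN 40 is added only when the site is staffed and guest Wi-Fi is cost effective. The function and parameter names are hypothetical.

```python
SERVICE_VPNS = {10: "Employee", 20: "ATM", 30: "Monitoring", 40: "Guest"}


def required_service_vpns(site_type, staffed=False, guest_wifi_cost_effective=False):
    """Return the sorted list of Service VPN IDs a site needs.

    site_type is one of "small", "medium", or "large".
    The two flags only matter for small sites (an assumed interpretation).
    """
    if site_type in ("medium", "large"):
        return sorted(SERVICE_VPNS)
    if site_type == "small":
        vpns = {20, 30}  # ATM and Monitoring are always required
        if staffed:
            vpns.add(10)  # Employee VPN for the customer-facing representative
            if guest_wifi_cost_effective:
                vpns.add(40)  # Guest VPN only where it is worth provisioning
        return sorted(vpns)
    raise ValueError(f"unknown site type: {site_type!r}")


if __name__ == "__main__":
    for args in [("small",), ("small", True), ("small", True, True), ("medium",)]:
        vpns = required_service_vpns(*args)
        print(args, [f"VPN {v} ({SERVICE_VPNS[v]})" for v in vpns])
```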

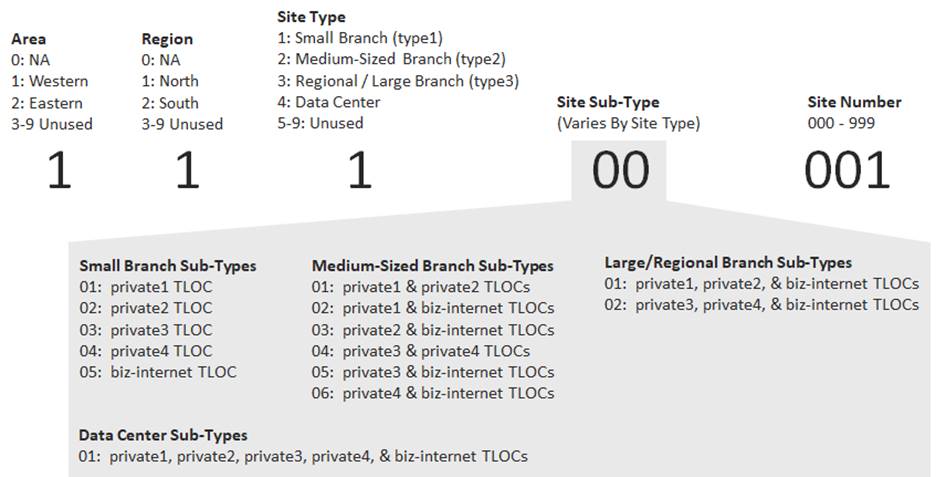

Bank of the Earth has decided upon an eight-digit Site ID numbering scheme for their branch and data center sites within each SD-WAN overlay.

The site ID numbering scheme takes into consideration the following:

● The geographic area (west or east) within the overlay. As discussed in the Bank of the Earth Company Background and Legacy Network section, Bank of the Earth has grown largely through acquisitions and mergers. After consolidation, Bank of the Earth has two remaining data centers – DC#1 and DC#2 – within each overlay. DC#1 primarily services the branch locations in the western side of each overlay, and DC#2 primarily services the branch locations on the eastern side of each overlay.

● The geographic region (north or south) within each geographic area. As discussed in the Bank of the Earth Company Background and Legacy Network section, regional MPLS carriers primarily service branch sites within a geographic region, although each of the data centers, DC#1 (Western DC) and DC#2 (Eastern DC), has connections to all regional MPLS carriers for redundancy purposes.

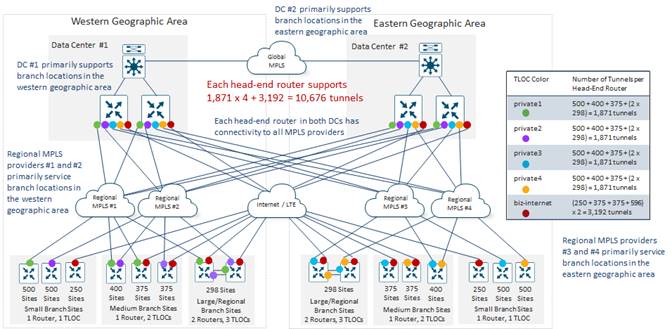

Regional MPLS Carrier #1, corresponding to TLOC color private1, primarily services northwestern branch sites

Regional MPLS Carrier #2, corresponding to TLOC color private2, primarily services southwestern branch sites

Regional MPLS Carrier #3, corresponding to TLOC color private3, primarily services northeastern branch sites

Regional MPLS Carrier #4, corresponding to TLOC color private4, primarily services southeastern branch sites

The centralized control policy applied to the branch sites also takes into consideration whether the branch is located within the northern or southern region of each geographic area – effectively splitting the branch sites within the overlay into four quadrants – northwest, southwest, northeast, and southeast. Note that since the data centers only reflect the geographic area (west or east) separate digits are used within the Site ID to identify geographic area (1 – West and 2 – East) and region (0 – Not Applicable, 1 – North, and 2 – South).

● The site type within the overlay. Bank of the Earth has identified four site types.

1 – Type 1 sites correspond to Small Branch Sites

2 – Type 2 sites correspond to Medium-Sized Branch Sites

3 – Type 3 sites correspond to Large / Regional Branch Sites

4 – Type 4 sites correspond to Data Center Sites

Each of the site types is discussed in the Branch SD-WAN Router Design section of this document.

● The site sub-type depends on the site type. Site sub-types reflect the different combinations of regional MPLS carriers, as well as any Internet connections, that each branch or data center SD-WAN router supports.

● The site number reflects the specific instance of the branch or data center site.

Bank of the Earth has implemented this Site ID numbering scheme to give them flexibility when configuring centralized control policy, with the ability to add and/or remove geographic areas, regions, site types, site sub-types, and site numbers. For example, the centralized control policy deployed within each overlay distinguishes between data center and branch sites. Furthermore, the centralized control policy applied to the branch sites can also take into consideration whether the branch is located on the western or eastern side of the overlay.

Bank of the Earth has identified the following SD-WAN branch prototypes, based upon the size of the branch.

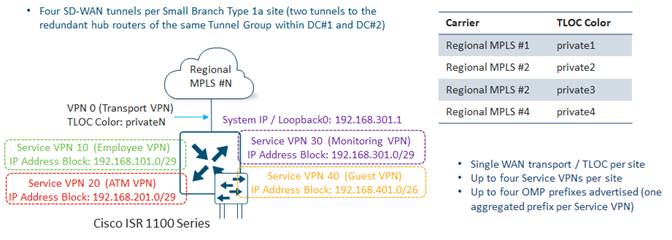

Small Branch (Type 1) Sites

Bank of the Earth has defined two Small Branch (Type 1) prototypes as follows:

● Small Branch Type 1a – Single router, single regional MPLS WAN circuit

● Small Branch Type 1b – Single router, single Internet circuit

Small Branch Type 1a Sites

Small Branch Type 1a sites consist of a single SD-WAN router with a single MPLS circuit.

Because there is only a single MPLS WAN circuit, there is a single WAN transport (VPN 0) tunnel interface / TLOC per Small Branch Type 1a site. The TLOC color privateN (where N is from 1 to 4) matches one of the four regional MPLS carriers within the overlay, as shown in the figure above. Color restriction is configured such that SD-WAN tunnels only form to the WAN transport tunnel interfaces of the Data Center head-end routers connected to the same regional MPLS carrier.

The WAN transport (VPN 0) tunnel interface of each Small Branch Type 1a router is also configured to be part of either Tunnel Group 1 or 2, to balance the SD-WAN tunnels from the Small Branch sites across the head-end routers within the two Data Center Sites within each overlay. As a result of this configuration, a total of four SD-WAN tunnels are initiated from each Small Branch Type 1a Site.
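The combined effect of color restriction and Tunnel Groups can be modeled as a pairwise match between branch and head-end TLOCs. The Python sketch below is a simplified model, not the product's tunnel-establishment logic. It assumes that each data center has four head-end routers (per Table 1), that two of them are placed in Tunnel Group 1 and two in Tunnel Group 2, and that every head-end router has a TLOC for each color. That split is an assumption made here so that the model reproduces the four tunnels stated above; router names are hypothetical.

```python
from dataclasses import dataclass
from itertools import product

COLORS = ("private1", "private2", "private3", "private4", "biz-internet")


@dataclass(frozen=True)
class Tloc:
    router: str
    color: str
    tunnel_group: int


def head_end_tlocs():
    """Build the head-end TLOCs for both data centers in one overlay."""
    tlocs = []
    for dc, router in product((1, 2), (1, 2, 3, 4)):
        group = 1 if router <= 2 else 2  # assumed split of routers into groups
        for color in COLORS:
            tlocs.append(Tloc(f"dc{dc}-he{router}", color, group))
    return tlocs


def forms_tunnel(branch, hub):
    """Simplified rule: same color (restriction) and same Tunnel Group."""
    return branch.color == hub.color and branch.tunnel_group == hub.tunnel_group


def tunnels_from(branch_tlocs, hub_tlocs):
    """Return every (branch TLOC, hub TLOC) pair that would form a tunnel."""
    return [(b, h) for b in branch_tlocs for h in hub_tlocs if forms_tunnel(b, h)]


if __name__ == "__main__":
    branch = [Tloc("branch-1a", "private1", 1)]
    for _, hub in tunnels_from(branch, head_end_tlocs()):
        print("tunnel to", hub.router, hub.color)
```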

Each Small Branch Type 1a Site supports up to four Service VPNs – VPNs 10, 20, 30, and 40. Service VPNs 10 (Employee VPN) and 40 (Guest VPN) are only needed for Small Branch Type 1a sites when they include a small, secured kiosk, such as a bank branch located within a larger store or shopping mall. Within the small, secured kiosk locations, one or two Ethernet ports can be made available to connect a PC / laptop, where a branch employee can assist customers. Service VPN 20 (ATM VPN) is used for Automated Teller Machine (ATM) connections. Service VPN 30 (Monitoring VPN) is used for the Loopback0 interface, which is configured to be the source of logging information (Syslog) as well as SNMP traps.

Bank of the Earth has selected the Cisco ISR 1100 Series platform for Small Branch Type 1a Sites. This platform provides 4 Gigabit Ethernet switch ports. Small Branch Type 1a sites can support up to four ATMs, depending upon whether a port is needed for a branch employee PC / laptop.

IP addressing for each Small Branch Type 1a site has been carefully selected so that only a single aggregate prefix is advertised within OMP for each of the Service VPNs. The IP addressing shown in the figure above provides an example of how the addressing can be selected such that aggregate prefixes can be advertised for each Service VPN. Hence for each Small Branch Type 1a site, up to four OMP prefixes are advertised.
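Whether a site's subnets collapse to a single aggregate per Service VPN can be checked with Python's standard `ipaddress` module. The sketch below is a minimal illustration; the subnets are hypothetical and are not the addresses shown in the figure.

```python
import ipaddress


def site_aggregates(subnets_by_vpn):
    """Collapse each Service VPN's subnets into the fewest covering prefixes."""
    result = {}
    for vpn, subnets in subnets_by_vpn.items():
        networks = [ipaddress.ip_network(s) for s in subnets]
        result[vpn] = [str(n) for n in ipaddress.collapse_addresses(networks)]
    return result


def advertises_single_aggregate(subnets_by_vpn):
    """True when every Service VPN collapses to exactly one prefix."""
    return all(len(p) == 1 for p in site_aggregates(subnets_by_vpn).values())


if __name__ == "__main__":
    # Hypothetical addressing for one small branch site.
    site = {
        10: ["10.10.1.0/26", "10.10.1.64/26"],
        20: ["10.20.1.0/27", "10.20.1.32/27"],
        30: ["10.30.1.1/32"],
    }
    print(site_aggregates(site))
    print(advertises_single_aggregate(site))
```

Subnets that are adjacent and aligned on a common boundary merge into one prefix; a gap between them (for example `10.10.1.0/26` and `10.10.1.128/26`) leaves two prefixes, and the site would advertise more OMP routes than planned.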

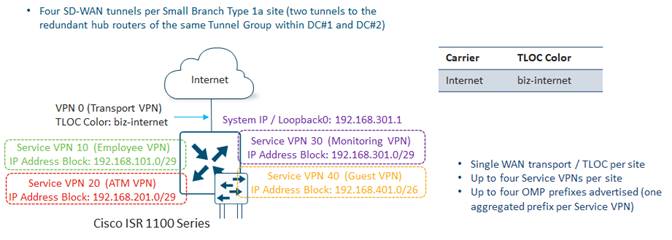

Small Branch Type 1b Sites

Small Branch Type 1b sites consist of a single SD-WAN router with a single Internet circuit.

The only difference between Small Branch Type 1a and Small Branch Type 1b is the TLOC color. Small Branch Type 1b is configured with a TLOC color of biz-internet.

Small Branch Sites Summary

The following table summarizes the TLOCs, DTLS/TLS control connections, OMP sessions, SD-WAN tunnels, and advertised OMP prefixes from the Small Branch Sites within each Bank of the Earth overlay.

Table 2. Small Branch Sites Summary

| Parameter | Per Overlay |
| --- | --- |
| Sites | 2,500 |
| TLOCs per Site | 1 |
| SD-WAN Controller DTLS / TLS Control Connections per Site | 2 |
| Total SD-WAN Controller DTLS / TLS Control Connections | 5,000 |
| OMP Sessions per Site | 2 |
| Total OMP Sessions | 5,000 |
| SD-WAN Tunnels per Site | 4 |
| Total SD-WAN Tunnels From All Small Branch Sites | 10,000 |
| OMP Prefixes Advertised per TLOC | Up to 4 |
| OMP Prefixes Advertised per Site (Single TLOC) | Up to 4 |
| Total OMP Prefixes Advertised From All Small Branch Sites | Up to 10,000 |

Medium-Sized Branch (Type 2) Sites

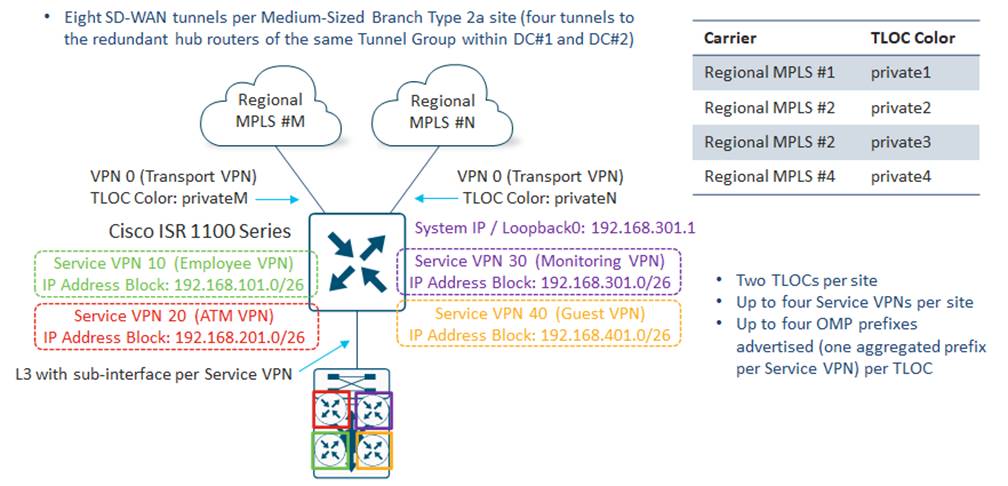

Bank of the Earth has defined two Medium-Sized Branch (Type 2) prototypes as follows:

● Medium-Sized Branch Type 2a – Single router with two regional MPLS WAN circuits

● Medium-Sized Branch Type 2b – Single router with one regional MPLS circuit and one Internet circuit

Medium-Sized Branch Type 2a Sites

Medium-Sized Branch Type 2a Sites consist of a single SD-WAN router with two MPLS circuits – each connected to one of the four regional MPLS carriers within each geographic area. Due to the geographic nature of the regional MPLS carriers, Medium-Sized Branch Type 2a Sites are connected to regional MPLS providers #1 and #2, or to regional MPLS providers #3 and #4. Those are the only two combinations of regional MPLS providers connected to Medium-Sized Branch (Type 2a) Sites.

Because there are two MPLS WAN circuits, there are two WAN transport (VPN 0) tunnel interfaces / TLOCs per Medium-Sized Branch Type 2a site. The TLOC color of each Medium-Sized Branch router WAN transport (VPN 0) tunnel interface matches one of the regional MPLS providers, as shown in the figure above. TLOC color restriction is configured such that SD-WAN tunnels only form to the WAN transport tunnel interfaces of the data center head-end routers connected to the same regional MPLS carrier.

Both WAN transport (VPN 0) tunnel interfaces of each Medium-Sized Branch Type 2a router are also configured to be part of either Tunnel Group 1 or 2, to balance the SD-WAN tunnels from the Medium-Sized Branch sites across the head-end routers within the two Data Center Sites within each overlay. As a result of this configuration, a total of eight SD-WAN tunnels are initiated from each Medium-Sized Branch Type 2a Site.

Each Medium-Sized Branch Type 2a Site supports up to four Service VPNs – VPNs 10, 20, 30, and 40. Service VPN 10 (Employee VPN), is for internal employee PCs / laptops. Service VPN 20 (ATM VPN) is used for Automated Teller Machine (ATM) connections. Service VPN 30 (Monitoring VPN) is used for the Loopback0 interface which is configured to be the source of logging information (Syslog) as well as SNMP traps. Service VPN 40 (Guest VPN) is used primarily for wireless guest Internet access which is currently backhauled to the Data Center sites before being sent to the Internet.

Bank of the Earth has selected the Cisco ISR 1100 Series platform for Medium-Sized Branch Type 2a Sites. To maintain segmentation between the Service VPNs, VRF-Lite is implemented on the Layer 3 distribution switch within the site. A single Layer 3 1 Gigabit Ethernet connection with four sub-interfaces (one for each Service VPN) connects the Medium-Sized Branch Type 2a SD-WAN router with the Layer 3 distribution switch. Layer 2 access switches may further connect to the Layer 3 distribution switch – with each Layer 2 access switch supporting one or more VLANs. A single VLAN or multiple VLANs can map back into the VRFs / Service VPNs within the Layer 3 distribution switch. This allows for either a single IP subnet or multiple IP subnets per Service VPN as needed.

IP addressing for each Medium-Sized Branch Type 2a Site has been carefully selected so that only a single aggregate prefix is advertised within OMP for each of the Service VPNs. The IP addressing shown in the figure above provides an example of how the addressing can be selected such that aggregate prefixes can be advertised for each Service VPN. When multiple subnets are needed within a Service VPN, the IP addressing is carefully chosen such that a single aggregated prefix can be sent from the site for that Service VPN. Hence for each Medium-Sized Branch Type 2a Site, up to four OMP prefixes are advertised.

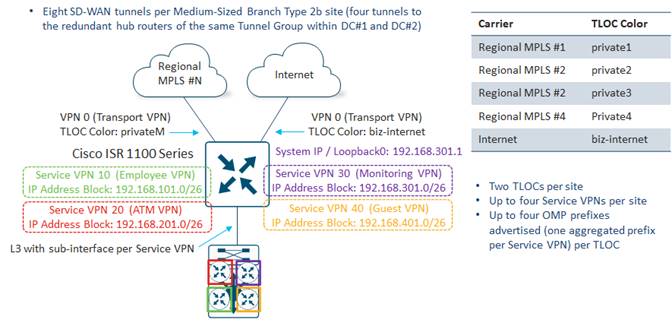

Medium-Sized Branch Type 2b Sites

Medium-Sized Branch Type 2b Sites consist of a single SD-WAN router with one regional MPLS circuit and one Internet circuit.

The only difference between Medium-Sized Branch Type 2a and Type 2b Sites is the TLOC-color of the second WAN transport. Medium-Sized Branch Type 2b SD-WAN routers are configured with a TLOC-color of biz-internet for the Internet-facing interface.

Medium-Sized Branch Sites Summary

The following table summarizes the TLOCs, DTLS / TLS control connections, OMP sessions, SD-WAN tunnels, and advertised OMP prefixes from the Medium-Sized Branch Sites within each overlay.

Table 3. Medium-Sized Branch Sites Summary

| Parameter | Per Overlay |
| --- | --- |
| Sites | 2,300 |
| TLOCs per Site | 2 |
| SD-WAN Controller DTLS / TLS Control Connections per Site | 4 |
| Total SD-WAN Controller DTLS / TLS Control Connections | 9,200 |
| OMP Sessions per Site | 2 |
| Total OMP Sessions | 4,600 |
| SD-WAN Tunnels per Site | 8 |
| Total SD-WAN Tunnels From All Medium-Sized Branch Sites | 18,400 |
| OMP Prefixes Advertised per TLOC | Up to 4 |
| OMP Prefixes Advertised per Site (2 TLOCs) | Up to 8 |
| Total OMP Prefixes Advertised From All Medium-Sized Branch Sites | Up to 18,400 |

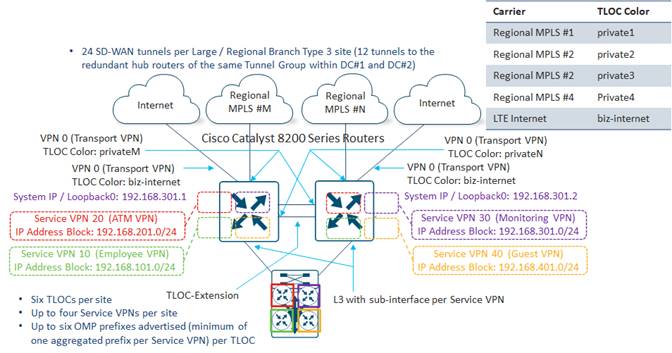

Large / Regional Branch (Type 3) Sites

Bank of the Earth has defined a single Large / Regional Branch (Type 3) prototype as follows:

● Large / Regional Branch Type 3 – Dual routers, each with dual regional MPLS WAN circuits (one via TLOC-Extension) and one Internet circuit

Large / Regional Branch Type 3 Sites

Large / Regional Branch Type 3 sites consist of two SD-WAN routers. Each SD-WAN router has one direct connection to one of the four regional MPLS service carriers within each geographic area. However, each SD-WAN router connects to a different regional MPLS provider for redundancy purposes. Each SD-WAN router also has a second connection to the opposite MPLS provider through a TLOC-Extension interface on the opposite router. Due to the geographic nature of the regional MPLS carriers, Large / Regional Branch Type 3 sites are connected to regional MPLS providers #1 and #2, or to regional MPLS providers #3 and #4. Those are the only two combinations of regional MPLS providers connected to Large / Regional Branch (Type 3) Sites. Finally, each Large / Regional Branch SD-WAN router has a direct connection to an Internet Service Provider (ISP) as well. The Internet connection provides a further level of high availability, as well as additional bandwidth to the branch. At some point in the future, it may also provide a path for Direct Internet Access (DIA), for example for guest Wi-Fi access or Software-as-a-Service (SaaS) application access. To reduce the number of SD-WAN tunnels at each Large / Regional Branch Type 3 Site, Bank of the Earth has made the business decision not to use TLOC-Extension for the Internet connection.

Each Large / Regional Branch Type 3 Site has a total of 6 WAN transport (VPN 0) tunnel interfaces / TLOCs. The TLOC colors of each of the Large / Regional Branch router MPLS tunnel interfaces match one of the regional MPLS providers, as shown in the figure above. The Internet TLOC color is biz-internet. TLOC color restriction is configured such that SD-WAN tunnels only form to the WAN transport tunnel interfaces of the data center head-end routers connected to the same regional MPLS carrier and/or the Internet connection.

The tunnel interfaces of each Large / Regional Branch Type 3 SD-WAN router are also configured to be part of either Tunnel Group 1 or 2, to balance the SD-WAN tunnels from the Large / Regional Branch Sites across the head-end routers within the two Data Center Sites within each overlay. As a result of this configuration, a total (from both SD-WAN routers) of 24 SD-WAN tunnels are initiated from each Large / Regional Branch Type 3 Site.

Each Large / Regional Branch Type 3 site supports up to four Service VPNs – VPN 10, 20, 30, and 40. Service VPN 10 (Employee VPN), is for employee PCs / laptops. Service VPN 20 (ATM VPN) is used for Automated Teller Machine (ATM) connections. Service VPN 30 (Monitoring VPN) is used for the Loopback0 interface which is configured to be the source of logging information (Syslog) as well as SNMP traps. Service VPN 40 (Guest VPN) is used primarily for wireless guest Internet access which is currently backhauled to the Data Center Sites before being sent to the Internet.

Bank of the Earth has selected the Cisco Catalyst 8200 Series platform for Large / Regional Branch Type 3 Sites. To maintain segmentation between the Service VPNs, VRF-Lite is implemented on the Layer 3 distribution switch within the site. A single Layer 3 10 Gigabit Ethernet connection with four sub-interfaces (one for each Service VPN) connects each Large / Regional Branch Type 3 SD-WAN router with the Layer 3 distribution switch. Layer 2 access switches may connect to the Layer 3 distribution switch, with each Layer 2 access switch supporting one or more VLANs. A single VLAN or multiple VLANs can map back into the VRFs / Service VPNs within the Layer 3 distribution switch. This allows for either a single IP subnet or multiple IP subnets per Service VPN as needed.

IP addressing for each Large / Regional Branch Type 3 site has been carefully selected so that ideally only a single aggregate prefix is advertised within OMP for each of the Service VPNs. The IP addressing shown in the figure above provides an example of how the addressing can be selected such that aggregate prefixes can be advertised for each Service VPN. When multiple subnets are needed within a Service VPN, the IP addressing is carefully chosen such that a single aggregated prefix can be sent from the site for that Service VPN. However, with the larger number of devices which need to be supported at each Large / Regional Branch Type 3 Site, Bank of the Earth recognizes that in some situations additional IP addressing space needs to be allocated per branch. Hence for each Large / Regional Branch Type 3 Site, up to six OMP prefixes can be advertised.

Large / Regional Branch Sites Summary

The following table summarizes the TLOCs, DTLS / TLS control connections, OMP sessions, SD-WAN tunnels, and advertised OMP prefixes from the Large / Regional Branch Sites within each overlay.

Table 4. Large / Regional Branch Sites Summary

| Parameter | Americas Overlay |
| --- | --- |
| Sites | 596 |
| TLOCs per Site | 6 |
| SD-WAN Controller DTLS / TLS Control Connections per Site | 12 |
| Total SD-WAN Controller DTLS / TLS Control Connections | 7,152 |
| OMP Sessions per Site | 4 |
| Total OMP Sessions | 2,384 |
| SD-WAN Tunnels per Site | 24 |
| Total SD-WAN Tunnels From All Large / Regional Branch Sites | 14,304 |
| OMP Prefixes Advertised per TLOC | Up to 6 |
| OMP Prefixes Advertised per Site (6 TLOCs) | Up to 36 |
| Total OMP Prefixes Advertised from All Large / Regional Branch Sites | Up to 21,456 |
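The per-site rows in the three branch summary tables appear to follow from a few simple multiplications. The following Python sketch is one way to reproduce them; it is an illustration, not a Cisco sizing tool. It assumes two control connections per TLOC (the default max-control-connections value of 2 mentioned in this guide), two OMP sessions per router, and one SD-WAN tunnel from each branch TLOC to each of four head-end routers (one pair in each of the two Data Center Sites). The class and constant names are hypothetical.

```python
from dataclasses import dataclass

# Default max-control-connections per TLOC, as stated in this guide.
MAX_CONTROL_CONNECTIONS = 2
# Assumption: each router holds one OMP session per SD-WAN Controller it connects to.
OMP_SESSIONS_PER_ROUTER = 2
# Assumption: each TLOC builds one tunnel to each of the four head-end routers
# in its Tunnel Group (one pair in each of the two Data Center Sites).
HEAD_END_ROUTERS_PER_TUNNEL_GROUP = 4


@dataclass(frozen=True)
class BranchPrototype:
    name: str
    sites: int
    routers_per_site: int
    tlocs_per_site: int
    max_prefixes_per_tloc: int

    def per_site(self) -> dict:
        """Per-site counts derived from the TLOC and router counts."""
        return {
            "control_connections": self.tlocs_per_site * MAX_CONTROL_CONNECTIONS,
            "omp_sessions": self.routers_per_site * OMP_SESSIONS_PER_ROUTER,
            "tunnels": self.tlocs_per_site * HEAD_END_ROUTERS_PER_TUNNEL_GROUP,
            "max_prefixes": self.tlocs_per_site * self.max_prefixes_per_tloc,
        }

    def totals(self) -> dict:
        """Per-site counts multiplied by the number of sites."""
        return {key: value * self.sites for key, value in self.per_site().items()}


# Site counts, TLOC counts, and prefix limits are taken from the summary tables.
PROTOTYPES = [
    BranchPrototype("Small (Type 1)", 2500, 1, 1, 4),
    BranchPrototype("Medium-Sized (Type 2)", 2300, 1, 2, 4),
    BranchPrototype("Large / Regional (Type 3)", 596, 2, 6, 6),
]

for proto in PROTOTYPES:
    print(proto.name, proto.per_site(), proto.totals())
```

Keeping the multipliers as named constants makes it easy to see how a design change, such as a different max-control-connections value, would ripple through the control connection and tunnel counts.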

Common Branch Design Principles

Regardless of the branch prototype, Bank of the Earth has decided that all branch locations will adhere to the following design principles:

● Use of IP prefix aggregation

● Use of TLOC color restriction

● Use of Tunnel Groups

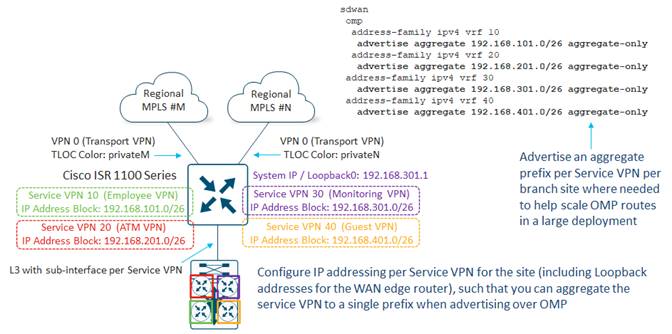

IP Prefix Aggregation

The following figure provides an example of how the IP addressing of a Medium-Sized Branch Site can be carefully selected and configured such that a single aggregated IP prefix can be advertised per Service VPN via OMP from the site.

In the example above, the following IP address blocks have been allocated for the Medium-Sized Branch Site:

● 192.168.101.0/26 has been allocated for Service VPN 10 (Employee VPN) of the branch

● 192.168.201.0/26 has been allocated for Service VPN 20 (ATM VPN) of the branch

● 192.168.301.0/26 has been allocated for Service VPN 30 (Monitoring VPN) of the branch

● 192.168.401.0/26 has been allocated for Service VPN 40 (Guest VPN) of the branch

Each Service VPN can consist of multiple IP subnets, and multiple VLANs connected via the downstream Layer 3 distribution switch and optionally additional Layer 2 access switches (not shown in the figure above).

By carefully choosing the IP address space of each Service VPN, the various subnets can be aggregated into a single prefix, corresponding to the IP address block shown in the figure above, for each Service VPN. Advertising an aggregate prefix per Service VPN – rather than multiple prefixes – per branch location, can help reduce the overall number of OMP routes within large deployments, helping to scale the deployment. This will be highlighted further within the OMP Routes section of this guide.
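As a rough illustration of the aggregation idea, the following Python sketch uses the standard library `ipaddress` module to check that a set of subnets collapses into a single prefix and stays inside the block allocated to a Service VPN. The individual /28 and /27 subnets are hypothetical and are not taken from the figure; only the 192.168.101.0/26 block comes from the example above.

```python
import ipaddress

# Block allocated to Service VPN 10 (Employee VPN) in the example above.
allocated = ipaddress.ip_network("192.168.101.0/26")

# Hypothetical subnets carved out of that block for separate VLANs.
employee_subnets = [
    ipaddress.ip_network("192.168.101.0/28"),
    ipaddress.ip_network("192.168.101.16/28"),
    ipaddress.ip_network("192.168.101.32/27"),
]


def aggregate(subnets):
    """Collapse adjacent subnets into the fewest covering prefixes."""
    return list(ipaddress.collapse_addresses(subnets))


def fits_block(subnets, block):
    """Return True if every subnet falls inside the allocated block."""
    return all(subnet.subnet_of(block) for subnet in subnets)


print(aggregate(employee_subnets))              # [IPv4Network('192.168.101.0/26')]
print(fits_block(employee_subnets, allocated))  # True
```

If a subnet outside the allocated block were added (for example, a hypothetical 192.168.102.0/28), `aggregate` would return two prefixes, and the site would advertise an additional OMP prefix for that Service VPN.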

Also note that in the example above, a Loopback0 interface has been configured on the SD-WAN router within Service VPN 30 (Monitoring VPN). A Loopback interface is often used as a source for SNMP traps and logging information sent remotely. It also often serves as the in-band network management IP address used to reach the SD-WAN routers. Because of this, the Loopback IP address is often advertised via OMP as well. Choosing the Loopback IP address from within the aggregated prefix for the Service VPN again reduces the overall number of OMP routes within large deployments. Likewise, if a Loopback interface is used on downstream switches for monitoring and/or management purposes, its IP address should be selected from the IP address range of Service VPN 30 (Monitoring VPN), so that a single aggregated prefix can still be advertised by OMP for that Service VPN.

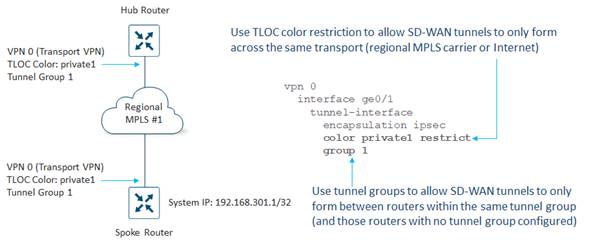

TLOC Color Restriction and Tunnel Groups

The use of TLOC color restriction and tunnel groups will be discussed further in the Data Center Design section of this document.

For the Bank of the Earth deployment, the same Tunnel Group is configured on all WAN (VPN 0) transports / TLOCs for a given branch site (Small Branch, Medium-Sized Branch, or Large / Regional Branch). In other words, the routers at a given branch site have all their tunnel interfaces configured for either Tunnel Group 1 or Tunnel Group 2. Half the branches within an overlay are configured to use Tunnel Group 1, and the other half are configured to use Tunnel Group 2. This ensures that half the branches within an overlay form SD-WAN tunnels to one pair of head-end routers in each Data Center Site, while the other half of the branches within that overlay form SD-WAN tunnels to the other pair of head-end routers in each Data Center Site. Although this reduces the number of SD-WAN tunnels required at both the branch and data center SD-WAN routers, the primary benefit is the reduction in the overall number of SD-WAN tunnels that need to be supported at the head-end routers within each Data Center Site as the number of branch sites increases.

TLOC color restriction is also configured, such that SD-WAN tunnels are only formed across the same regional MPLS carrier or the Internet between the branch site SD-WAN router WAN transport tunnel interfaces and the data center hub SD-WAN router WAN transport tunnel interfaces. This also reduces the number of SD-WAN tunnels required at both the branch and data center SD-WAN routers.

When migrating from their legacy MPLS-based network to an SD-WAN network, Bank of the Earth made the business decision to maintain the design of redundant data centers within each geographic area. Each data center serves as a head-end location for implementing the hub-and-spoke SD-WAN data plane network topology discussed previously.

Data Center Head-End SD-WAN Router Design

Bank of the Earth realized early in the design process that they would need to use two methods of scaling the data plane of the head-end SD-WAN routers at the data center locations – vertical scaling and horizontal scaling. Both are necessary for the data centers to be able to handle the expected number of SD-WAN tunnels from all the branch sites, as well as the desired aggregated head-end throughput of up to 40 Gbps per data center.

Vertical scaling involves deploying head-end routers which can handle higher throughput and higher SD-WAN tunnel capacity. Throughput of SD-WAN routers is expressed in terms of millions of packets per second (Mpps) and gigabits per second (Gbps). This reflects the fact that throughput is constrained by how many packets per second the SD-WAN router can process. Hence the larger the packet size (for example 1,400 bytes), the higher the throughput in Gbps. Likewise, the lower the packet size (for example 64 bytes), the lower the throughput in Gbps. Actual customer networks do not have just one packet size. Therefore, for realistic throughput numbers, a mixture of packet sizes is used, based upon experience with existing customer networks. This is referred to as IMIX traffic. Hence, Bank of the Earth based their decision as to the platform choice for their data center head-end routers on throughput capacity of the platform with IMIX traffic.
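The relationship between packet rate, packet size, and throughput can be made concrete with a short calculation. The following Python sketch is illustrative only: the 2 Mpps forwarding rate and the packet-size mix are hypothetical values chosen for the example, and they do not represent any Cisco platform or the IMIX profile Cisco uses for its published numbers.

```python
def throughput_gbps(mpps: float, packet_bytes: float) -> float:
    """Convert a packet rate (Mpps) and packet size (bytes) to Gbps."""
    return mpps * 1e6 * packet_bytes * 8 / 1e9


def average_packet_bytes(mix: dict) -> float:
    """Weighted average packet size for a {size_bytes: relative_weight} mix."""
    total_weight = sum(mix.values())
    return sum(size * weight for size, weight in mix.items()) / total_weight


# Hypothetical values for illustration only.
RATE_MPPS = 2.0
EXAMPLE_MIX = {64: 7, 576: 4, 1400: 1}  # many small packets, few large ones

for size in (64, 1400):
    print(f"{size:>5} bytes: {throughput_gbps(RATE_MPPS, size):.3f} Gbps")

mixed = average_packet_bytes(EXAMPLE_MIX)
print(f"mixed ({mixed:.0f} bytes average): {throughput_gbps(RATE_MPPS, mixed):.3f} Gbps")
```

At the same packet rate, the 1,400-byte case yields roughly 22 times the Gbps figure of the 64-byte case, which is why a mixed-size profile gives a more realistic basis for platform selection than either extreme.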

Throughput is also based on the features enabled on the SD-WAN router platforms. Since Bank of the Earth has requirements for SAIE (formerly known as DPI) / statistics collection, they based their decision as to the platform of choice for their data center head-end routers on the combination of feature sets which include IPsec encapsulation on the SD-WAN overlay tunnels, Quality of Service (QoS), DPI, and Flexible NetFlow (FNF) collection and export.

After discussing platform choices with their Cisco account team, Bank of the Earth decided to implement Catalyst 8500 Series platforms as head-end SD-WAN routers within each of the data centers within each of the overlays.

Bank of the Earth made the business decision to maintain the existing four regional MPLS provider circuits, as well as the Internet circuit within each data center. Therefore, each SD-WAN head-end router has a WAN transport (VPN 0) tunnel interface connection to each of the four regional MPLS service providers, through their respective MPLS CE routers. In addition to this, each head-end SD-WAN router has a WAN transport (VPN 0) tunnel interface connection to the Internet via the Internet Edge firewall within the data center. Hence, each data center head-end SD-WAN router is configured with five TLOCs. The following figure shows the TLOC colors implemented at the head-end (and branch) routers for the Bank of the Earth SD-WAN network.

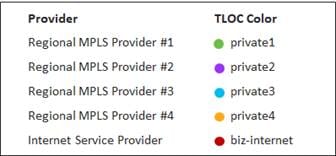

Use of TLOC Color Restriction

When branch and head-end SD-WAN routers support multiple WAN transport (VPN 0) tunnel interfaces / TLOCs, the number of SD-WAN tunnels between endpoints typically increases. The number of SD-WAN tunnels depends upon whether the customer has chosen to implement TLOC color restriction or not. TLOC color restriction prevents the formation of SD-WAN tunnels between TLOCs of different colors, as shown in the figure below.

The use of TLOC color restriction can significantly reduce the number of SD-WAN tunnels needed at the head-end routers in a hub-and-spoke network topology. Because of this, Bank of the Earth decided to implement color restriction on each of the regional MPLS TLOCs (private1 through private4) and the Internet TLOC (biz-internet), within each SD-WAN overlay, to reduce the number of SD-WAN tunnels required on each head-end router within the data center sites.
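To see the effect on tunnel counts, the following Python sketch enumerates the TLOC pairings between one branch router and one head-end router, with and without color restriction. It is a simplified model: it assumes a Medium-Sized Branch Type 2a router uses the private1 and private2 colors, and the unrestricted count assumes IP reachability between every pair of transports, which may not hold in practice. The function name is hypothetical.

```python
from itertools import product

# TLOC colors on each data center head-end router, per this guide.
HEAD_END_COLORS = ["private1", "private2", "private3", "private4", "biz-internet"]


def tunnel_pairs(branch_colors, head_end_colors, restrict: bool):
    """Return the (branch, head-end) color pairs that would form a tunnel."""
    pairs = product(branch_colors, head_end_colors)
    if restrict:
        # With color restriction, only same-color TLOCs form a tunnel.
        return [(b, h) for b, h in pairs if b == h]
    return list(pairs)


# Assumed colors for a Medium-Sized Branch Type 2a router.
medium_2a = ["private1", "private2"]

print(len(tunnel_pairs(medium_2a, HEAD_END_COLORS, restrict=False)))  # 10
print(len(tunnel_pairs(medium_2a, HEAD_END_COLORS, restrict=True)))   # 2
```

In this model, color restriction reduces the tunnels from this branch to a single head-end router from ten to two. Multiplied across thousands of branches, that difference is what drives the head-end tunnel scale.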

| Technical Note |

| Since MPLS carriers typically utilize private (RFC 1918) IP addressing within their networks, and since Internet Service Providers (ISPs) may assign publicly routable (non-RFC 1918) IP addressing to devices requiring Internet access, it may not be possible in all scenarios to form SD-WAN tunnels between the two transports – regional MPLS and Internet – or even between two different regional MPLS carriers. |

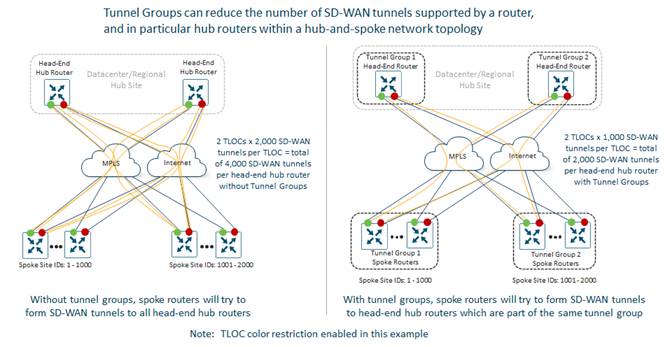

Use of Tunnel Groups

Even with a hub-and-spoke fabric data plane topology and with TLOC color restriction, the data plane scale of the head-end Data Center Sites (hubs) is constrained both by the throughput as well as the maximum number of SD-WAN tunnels supported by the head-end SD-WAN routers. In a hub-and-spoke fabric data plane topology, when the number of branches in a single overlay becomes very large, even with TLOC color restriction the number of SD-WAN tunnels that need to be supported can exceed the capabilities of a single head-end router. Likewise, the throughput requirements of the hub site can exceed the capabilities of a single head-end router.

A pair of head-end SD-WAN routers can be used for resiliency and to load-balance traffic across the head-end routers, helping to alleviate the data throughput concerns. However, since the spoke (branch) routers still form SD-WAN tunnels to each hub router of the head-end router pair, redundant head-end routers do not alleviate the issue of head-end tunnel scale.

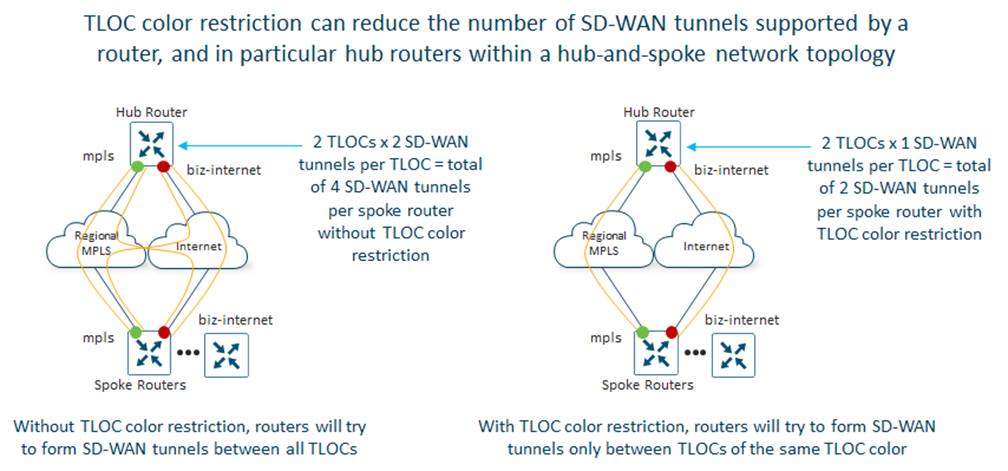

Bank of the Earth ran the calculations regarding the number of SD-WAN tunnels required at each data center head-end router for each overlay if all branch sites simply connected to a single pair of head-end routers as shown in the following figure.

The number of tunnels required is based on the branch prototype designs and the numbers of each branch type (Small, Medium-Sized, or Large / Regional) as discussed in the Branch SD-WAN Router Design section of this document. Also, as discussed within the Branch SD-WAN Router Design, each Bank of the Earth overlay is split into eastern and western geographic areas, with MPLS providers primarily servicing branches within their respective geographic areas.

As can be seen in the figure above, with a single pair of SD-WAN routers per data center, each data center head-end router would need to support over 10,000 SD-WAN tunnels. This exceeded the tunnel capabilities of the hardware platform that Bank of the Earth was targeting for their data center head-end SD-WAN routers.

One method to increase the total number of tunnels which can be supported at the head-end data center site – and therefore the total number of branch devices that can be supported in a hub-and-spoke fabric data plane topology – is to scale the data plane horizontally at the hub sites. Horizontal data plane scaling involves provisioning multiple SD-WAN routers (or multiple pairs of SD-WAN routers) at the head-end – and allowing only certain spoke (branch) routers to form SD-WAN tunnels to certain head-end (data center) routers. This can be done through Tunnel Groups, as shown in the following figure.

With Tunnel Groups, routers will only form SD-WAN tunnels between each other if the Tunnel Group number is the same, or if one or both sides are configured with no Tunnel Group (default Tunnel Group).
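The matching rule described above can be expressed as a small predicate. This Python sketch models only the rule as stated in this guide; it uses `None` to represent a tunnel interface with no Tunnel Group configured, and the function name is hypothetical.

```python
from typing import Optional


def tunnel_groups_allow(group_a: Optional[int], group_b: Optional[int]) -> bool:
    """Return True if two tunnel interfaces may form an SD-WAN tunnel.

    A tunnel is allowed when both sides use the same Tunnel Group number,
    or when at least one side has no Tunnel Group configured (None).
    """
    if group_a is None or group_b is None:
        return True
    return group_a == group_b


print(tunnel_groups_allow(1, 1))     # True: same Tunnel Group
print(tunnel_groups_allow(1, 2))     # False: different Tunnel Groups
print(tunnel_groups_allow(1, None))  # True: one side has no Tunnel Group
```

Note that this check is separate from TLOC color restriction; in the Bank of the Earth design both conditions must be satisfied before a tunnel forms.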

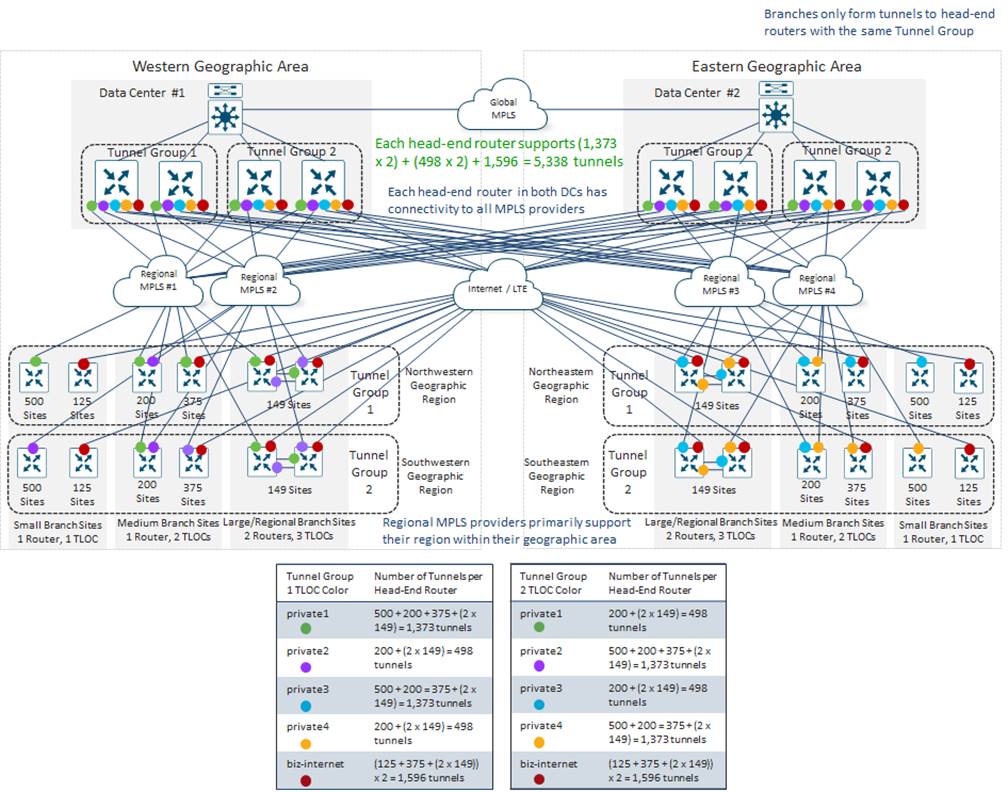

To scale out the ability of each data center site to support the required number of SD-WAN tunnels from all the branch sites within each overlay, Bank of the Earth re-designed the data centers to support two pairs of SD-WAN routers. Each pair of SD-WAN routers within each data center would be part of a different SD-WAN Tunnel Group, as shown in the following figure.

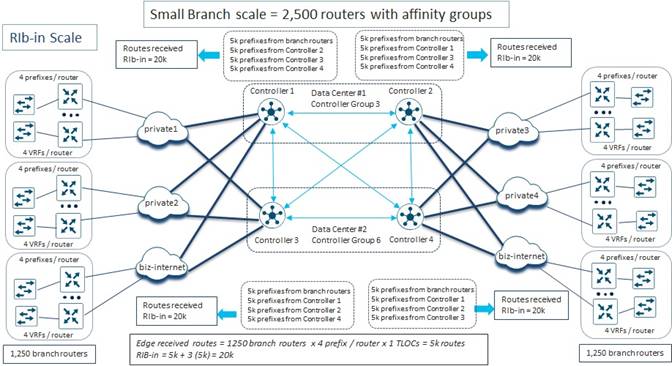

Since Bank of the Earth had already considered both the geographic area and region in the design of their Site ID numbering scheme, they could easily make use of this information to determine which branch sites should be part of Tunnel Group 1 and which branch sites should be part of Tunnel Group 2, to balance the SD-WAN tunnel count load between the sets of data center head-end SD-WAN routers.

Hence, Bank of the Earth used the Site ID numbering scheme information to configure all TLOCs within branch sites in the “north” regions (northwest and northeast) to be part of Tunnel Group 1 and all TLOCs within branch sites in the “south” regions (southwest and southeast) to be part of Tunnel Group 2. This effectively split the SD-WAN tunnel count load equally between the two sets of data center head-end SD-WAN routers.

As can be seen in the figure above, since the branch sites are now equally distributed across the sets of head-end SD-WAN routers, each set of routers must handle less than 5,500 SD-WAN tunnels. This is within the capabilities of the router platform which Bank of the Earth had targeted for the data center head-end hardware platform, based on feedback from their Cisco account team.
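The tunnel counts quoted in this section can be approximated from the branch summary tables. The following Python sketch is a simplified model rather than the exact calculation behind the figures: it assumes that, with TLOC color restriction, each branch TLOC forms exactly one SD-WAN tunnel to each head-end router it is allowed to reach, and that branches are split evenly across Tunnel Groups. The function name is hypothetical.

```python
# (sites, TLOCs per site) taken from the branch summary tables.
BRANCH_TYPES = {
    "small": (2500, 1),
    "medium": (2300, 2),
    "large": (596, 6),
}


def tunnels_per_head_end(branch_types, tunnel_groups: int) -> float:
    """Tunnels terminated by one head-end router when branch sites are
    split evenly across `tunnel_groups` sets of head-end routers."""
    branch_tlocs = sum(sites * tlocs for sites, tlocs in branch_types.values())
    return branch_tlocs / tunnel_groups


print(tunnels_per_head_end(BRANCH_TYPES, 1))  # 10676.0 (single pair per data center)
print(tunnels_per_head_end(BRANCH_TYPES, 2))  # 5338.0 (two Tunnel Groups)
```

Under these assumptions the model gives roughly 10,700 tunnels per head-end router without Tunnel Groups and roughly 5,300 with two Tunnel Groups, consistent with the "over 10,000" and "less than 5,500" figures discussed in this section.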

Note that this design also increases the throughput capacity of the overall data center site, since the traffic load from the branch sites is now spread across two sets of Catalyst head-end routers. Note also that Bank of the Earth did not have to implement redundant sets of data center head-end SD-WAN routers. They could have implemented a single head-end SD-WAN router within each Tunnel Group (Tunnel Groups 1 and 2) at each data center, to scale the tunnel capacity at each data center. Even with the loss of a single data center within each SD-WAN overlay, branch sites still have connectivity to the other data center within their SD-WAN overlay – as well as connectivity to the data center and branch sites within the other SD-WAN overlay via the global MPLS network. This provides a first level of high-availability within the data plane of each SD-WAN overlay. However, a pair of head-end SD-WAN routers within each Tunnel Group in each data center provides a second level of high-availability – albeit at the cost of additional SD-WAN tunnels which need to be supported by each branch SD-WAN router, as well as additional OMP routes between the SD-WAN Controller instances and the SD-WAN routers within the overlay. The additional OMP routes result from the additional paths that the extra SD-WAN tunnels create between the branch routers and the data centers. The OMP Route Calculation section of this document discusses the calculation of the OMP routes and scalability considerations of the design.

Head-End SD-WAN Router Physical Connectivity

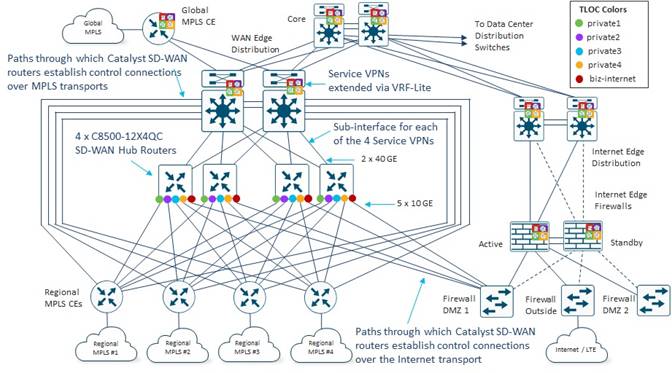

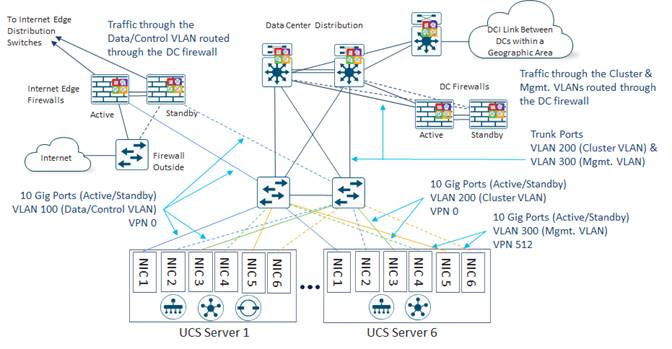

The following figure shows the design of the head-end SD-WAN routers within each data center.

Each WAN transport of the head-end SD-WAN router is connected to a regional MPLS provider CE by way of a Layer 3 (routed) 10 Gbps interface. The IP addressing for the subnets for each of these connections must be advertised into their respective regional MPLS provider networks. It is necessary for the WAN transport (VPN 0) interfaces of the SD-WAN routers at the branch sites to have IP reachability with the WAN transport (VPN 0) interfaces of the data center SD-WAN head-end routers for SD-WAN tunnels to be formed between the branch and data center sites.

The LAN (Service VPN) side of each of the data center SD-WAN head-end routers connects to a pair of WAN Edge Distribution switches by way of two Layer 3 (routed) 40 Gbps interfaces. Each physical interface on the head-end SD-WAN routers is configured with four sub-interfaces – one for each Service VPN. Each Service VPN is extended to a separate virtual routing and forwarding instance (VRF) running on the WAN Edge Distribution switches. VRF-Lite configured on the WAN Edge Distribution switches extends the segmentation across the data center as well as across the global MPLS network. This ensures that segmentation is extended throughout Bank of the Earth’s network.

BGP routing is enabled – per Service VPN – between the data center head-end SD-WAN routers and the WAN Edge Distribution switches. As OMP routes from the branch sites are redistributed into BGP at each data center head-end SD-WAN router, the prefixes from the branch sites are tagged with a BGP community. Inbound BGP route filtering at the data center head-end SD-WAN routers is used to filter out branch routes that cross the global MPLS backbone between data centers and between overlays, to prevent unintentional loops from forming due to the redistribution of OMP routes to BGP and vice-versa.
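The tag-and-filter logic described above can be modeled in a few lines. The following Python sketch is a conceptual illustration of the loop-prevention idea only; it is not router configuration. The community value `65000:100`, the `BgpRoute` class, and the function names are all hypothetical, since this guide does not specify them.

```python
from dataclasses import dataclass, field

# Hypothetical community value; the guide does not specify one.
BRANCH_COMMUNITY = "65000:100"


@dataclass
class BgpRoute:
    prefix: str
    communities: set = field(default_factory=set)


def redistribute_omp_to_bgp(prefix: str) -> BgpRoute:
    """Tag a branch prefix learned via OMP as it is redistributed into BGP."""
    return BgpRoute(prefix, {BRANCH_COMMUNITY})


def inbound_filter(routes):
    """Drop routes that carry the branch tag, so a branch prefix that has
    crossed the backbone is not redistributed back into OMP."""
    return [route for route in routes if BRANCH_COMMUNITY not in route.communities]


# A branch prefix tagged at another head-end router, plus an untagged data center prefix.
received = [redistribute_omp_to_bgp("192.168.101.0/26"), BgpRoute("10.0.0.0/8")]
print([route.prefix for route in inbound_filter(received)])  # ['10.0.0.0/8']
```

Because the tag is applied at the point of redistribution, any head-end router can recognize a branch-originated prefix regardless of which data center or overlay it arrived from.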

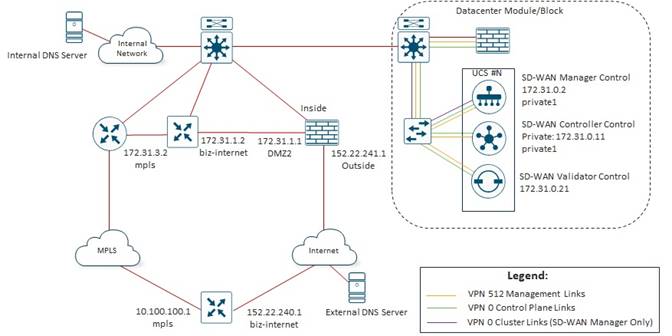

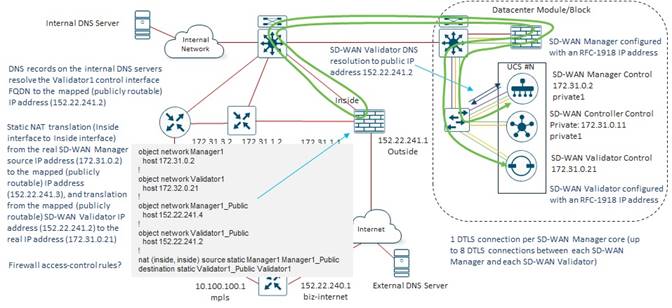

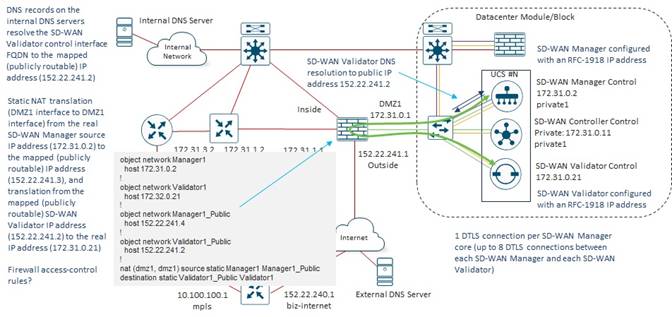

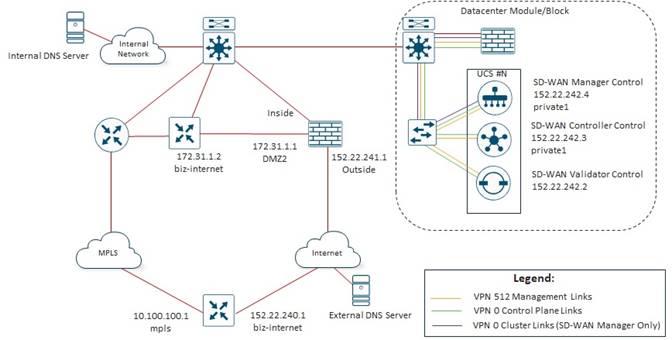

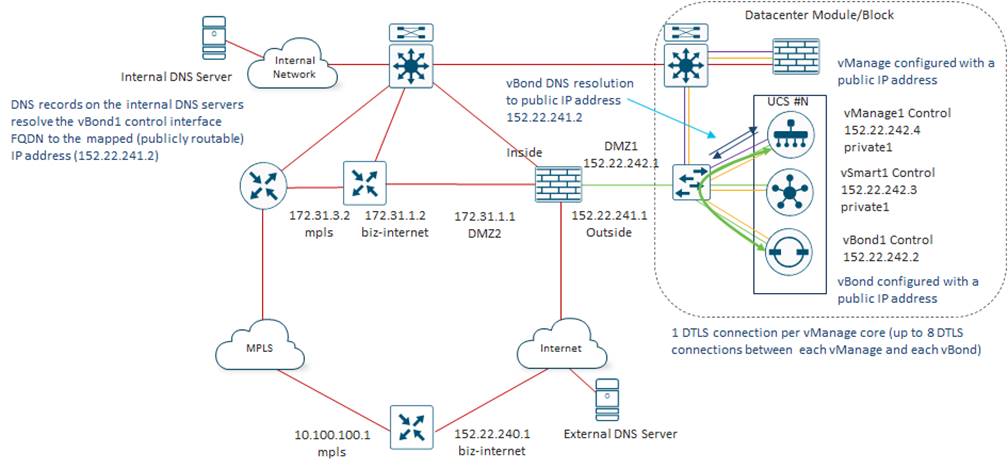

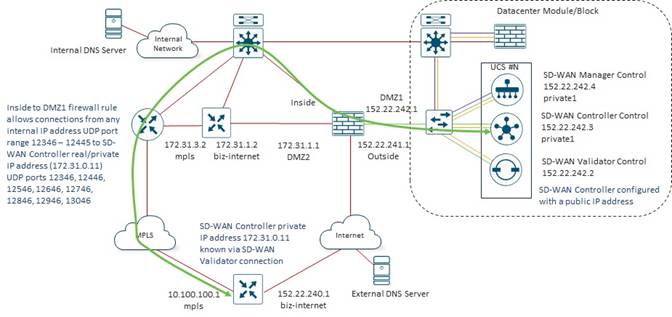

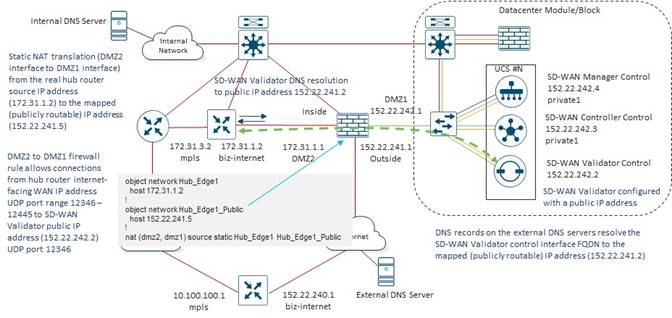

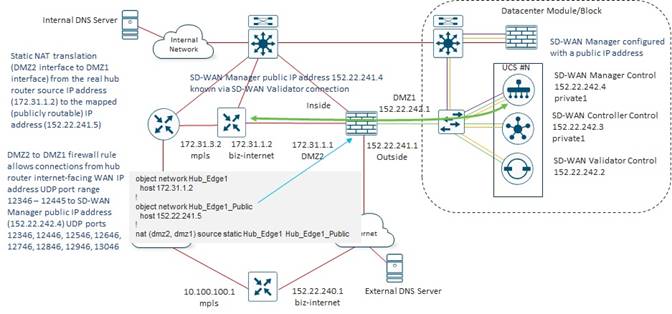

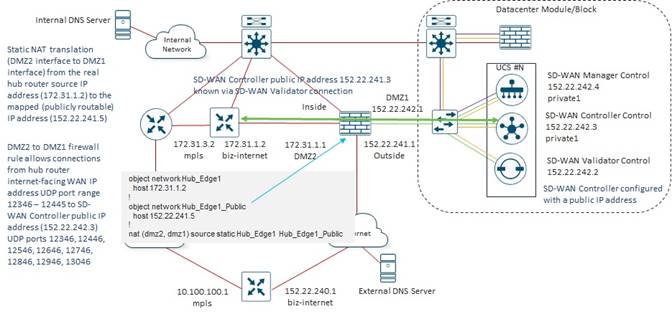

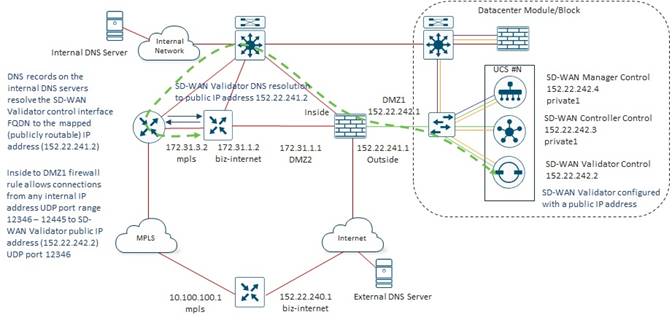

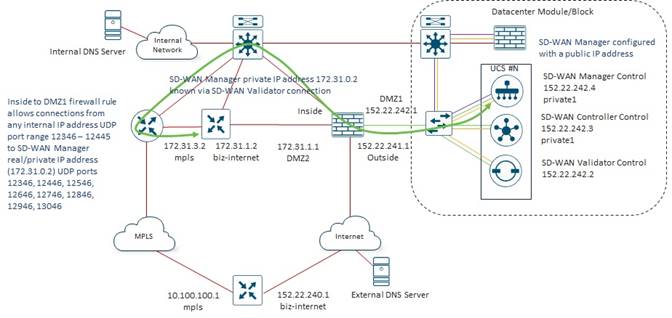

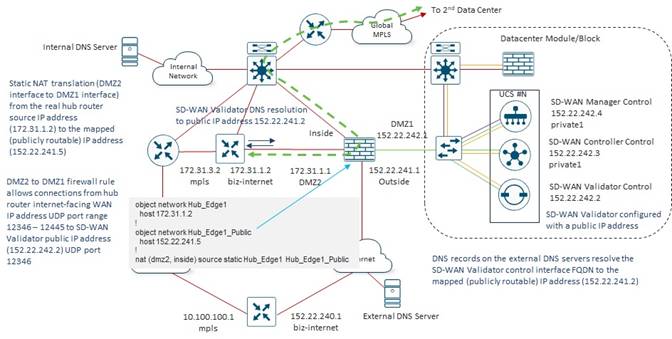

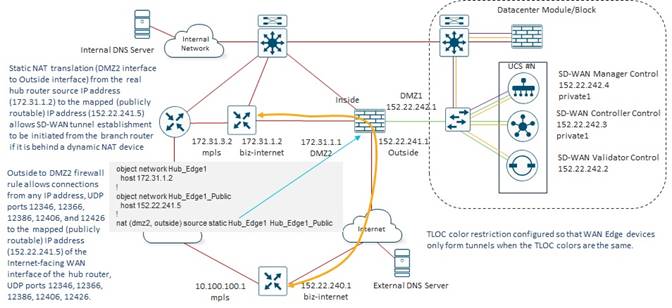

The regional MPLS CEs were left in place with the Bank of the Earth design for a specific reason. It is necessary for the WAN transport (VPN 0) interfaces of the SD-WAN routers at the branch sites (and the data center head-end routers) to have IP reachability to the SD-WAN Validator, SD-WAN Manager, and SD-WAN Controllers. Specifically, each WAN transport (VPN 0) interface of an SD-WAN router that is connected to a regional MPLS provider needs to be able to initiate a DTLS control connection to the control (non-management) interface of the SD-WAN Validator controllers located within each of the data centers. Likewise, each WAN transport (VPN 0) interface of an SD-WAN router that is connected to a regional MPLS provider needs to be able to initiate a DTLS/TLS control connection to the control (non-management) interface of two SD-WAN Controller instances, which may be in either data center within an overlay. As discussed in the SD-WAN Controller Design section, Bank of the Earth made the decision to leave the max-control-connections setting of all their SD-WAN routers at the default of 2. Finally, each SD-WAN router that is connected to a regional MPLS provider may need to initiate a DTLS/TLS control connection to the control (non-management and non-cluster-replication) interface of an SD-WAN Manager instance within the SD-WAN Manager cluster – regardless of whether it is the primary SD-WAN Manager cluster or the secondary / disaster recovery SD-WAN Manager cluster.

The IP addressing for SD-WAN control components must be advertised into their respective regional MPLS provider networks. Further, there must be a path by which the SD-WAN routers can reach the SD-WAN control components within the data centers. Bank of the Earth achieved this by leaving the existing regional MPLS CEs, with their connections into the WAN Edge Distribution switches in place. This also provided a path by which non-migrated MPLS branch sites could communicate with migrated SD-WAN branch sites during the migration phase of their SD-WAN rollout.

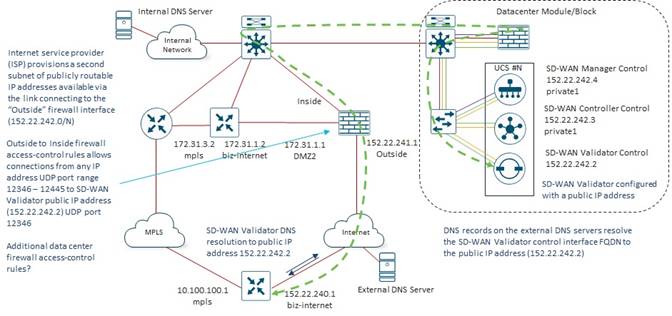

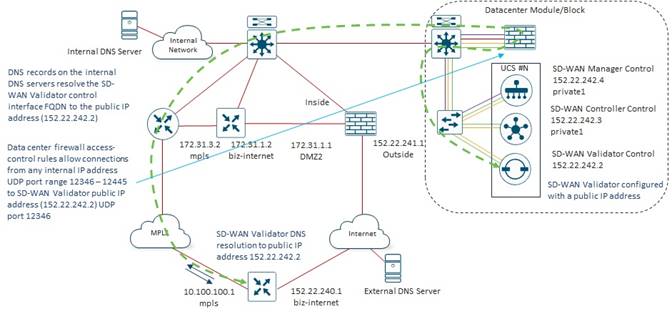

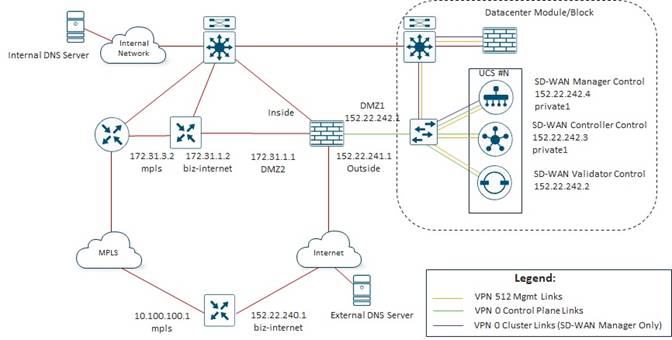

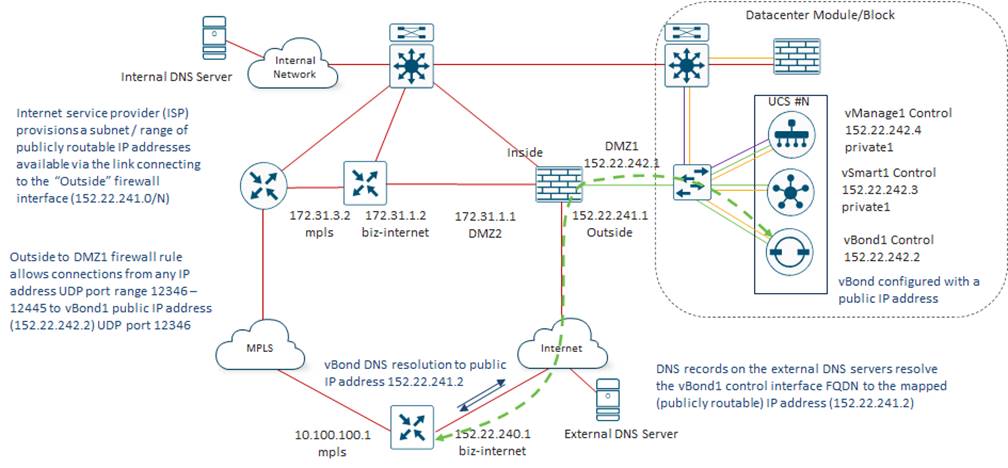

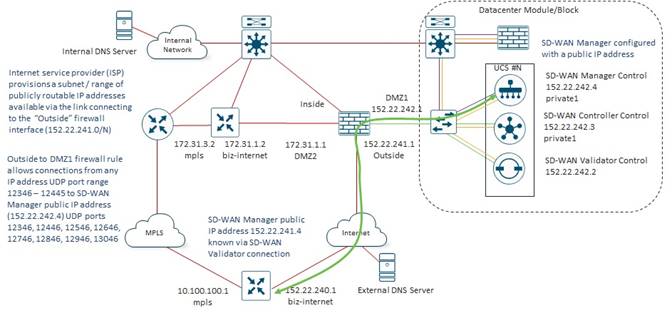

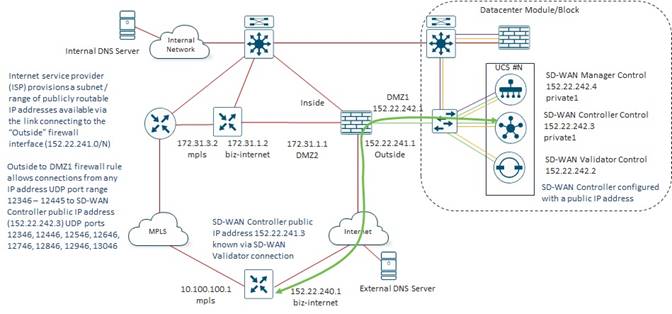

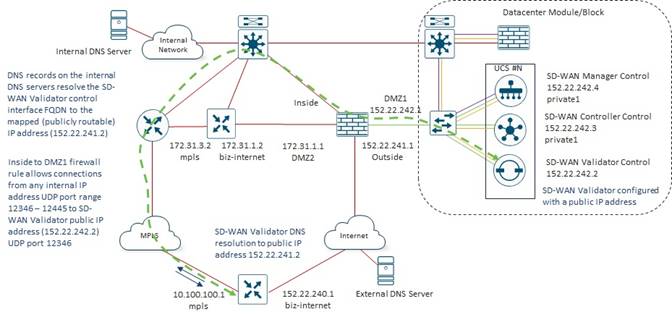

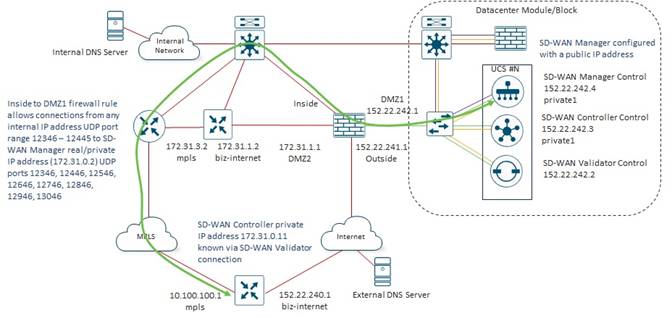

Likewise, each WAN transport (VPN 0) interface of an SD-WAN router that is connected to an Internet provider needs to be able to initiate a DTLS control connection to the control (non-management) interface of the SD-WAN Validator controllers located within each of the data centers. Each of these interfaces also needs to be able to initiate a DTLS/TLS control connection to the control (non-management) interface of two SD-WAN Controller instances, which may be in either data center within an overlay. Finally, each SD-WAN router that is connected to an Internet provider may need to initiate a DTLS/TLS control connection to the control (non-management and non-cluster-replication) interface of an SD-WAN Manager instance within the SD-WAN Manager cluster – regardless of whether it is the primary SD-WAN Manager cluster or the secondary / disaster recovery SD-WAN Manager cluster.

Hence, the IP addressing for the SD-WAN control components must also be advertised as publicly routable IP addresses on the Internet. Further, there must be a path by which the SD-WAN routers can reach the SD-WAN control components within the data centers via the Internet WAN transports. Bank of the Earth achieved this through the Internet Edge firewall. Note that the IP addressing of the SD-WAN control components is discussed in the SD-WAN Controller IP Addressing section of this document.

| Technical Note |

| Cisco SD-WAN has been rebranded to Cisco Catalyst SD-WAN. As part of this rebranding, the vManage name has been changed to SD-WAN Manager, the vSmart name has been changed to SD-WAN Controller, and the vBond name has been changed to SD-WAN Validator. Together, the vManage, vSmart, and vBond will be referred to as SD-WAN control components or the SD-WAN control complex in this document. |

Bank of the Earth made the decision to implement on-prem SD-WAN Validator, SD-WAN Controller, and SD-WAN Manager control components, as opposed to Cisco CloudOps or MSP cloud-hosted control components. This is primarily due to their internal security operations guidance that all data (including management & control plane data) for the SD-WAN network remain on-site.

SD-WAN Manager Design

Bank of the Earth followed Cisco recommendations for SD-WAN control component sizing within each overlay, found at the following URL:

Within each overlay, for disaster recovery purposes a primary 6-node SD-WAN Manager cluster operating in single tenant mode was deployed in one data center, with a backup 6-node SD-WAN Manager cluster deployed in the other data center. The decision to implement 6-node SD-WAN Manager clusters is based on the total number of SD-WAN routers deployed in each overlay (greater than 4,000) and the requirement to enable SD-WAN Application Intelligence Engine (SAIE) statistics collection and processing on the SD-WAN Manager cluster itself, rather than offload it to the Cisco cloud-hosted vAnalytics service.

Each SD-WAN Manager instance is deployed with 32 vCPUs, 128 GB RAM, and 10 TB of storage (thick provisioning) on separate UCS servers, based on Cisco recommendations.
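The per-instance sizing adds up quickly across both clusters. The following is a minimal sketch of that arithmetic, assuming the backup cluster is sized identically to the primary cluster (one 6-node cluster in each data center, as described above). The class and function names are hypothetical and used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ManagerInstance:
    """Per-instance sizing quoted in the text."""
    vcpus: int = 32
    ram_gb: int = 128
    storage_tb: int = 10


NODES_PER_CLUSTER = 6      # 6-node SD-WAN Manager cluster
CLUSTERS_PER_OVERLAY = 2   # primary cluster plus backup (DR) cluster


def overlay_totals(instance: ManagerInstance = ManagerInstance()) -> dict:
    """Sum the resources of every SD-WAN Manager instance in one overlay."""
    count = NODES_PER_CLUSTER * CLUSTERS_PER_OVERLAY
    return {
        "instances": count,
        "vcpus": count * instance.vcpus,
        "ram_gb": count * instance.ram_gb,
        "storage_tb": count * instance.storage_tb,
    }


totals = overlay_totals()
print(totals)
```

Under these assumptions, a single overlay needs 12 SD-WAN Manager instances, each on its own UCS server.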

Although Bank of the Earth could have begun with a 3-node SD-WAN Manager cluster and scaled it out to a 6-node cluster as the number of SD-WAN routers or the daily amount of SAIE statistics collected exceeded the capabilities of a 3-node cluster, they decided to implement the required SD-WAN controller instances for the final end-state of each SD-WAN overlay. This was based on the business decision that minimizing the potential risk of disrupting the SD-WAN deployment during the migration from a 3-node cluster to a 6-node cluster outweighed the cost of purchasing and implementing additional hardware up front.

Likewise, Bank of the Earth could have started with less than 10 TB of disk storage allocated per SD-WAN Manager instance and expanded it as the amount of SAIE statistics collection increased over time. However, based on their expectations for total daily SAIE statistics collection when each SD-WAN overlay is fully deployed, they decided to allocate the full 10 TB of disk space up front on each UCS server.

Finally, administrator-triggered failover between the primary and backup SD-WAN Manager clusters was implemented, since failover using an arbitrator is no longer supported by Cisco. Database synchronization between the primary and backup SD-WAN Manager clusters occurs over the DCI link between the primary and secondary data centers within each overlay.

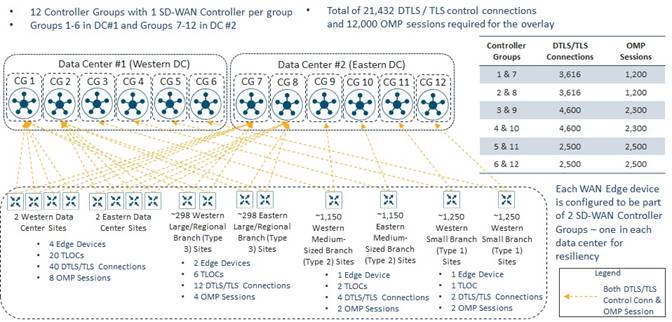

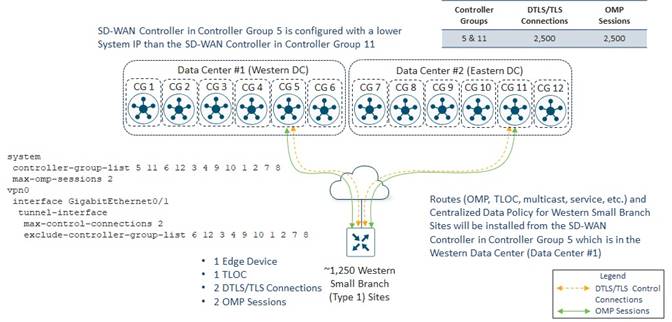

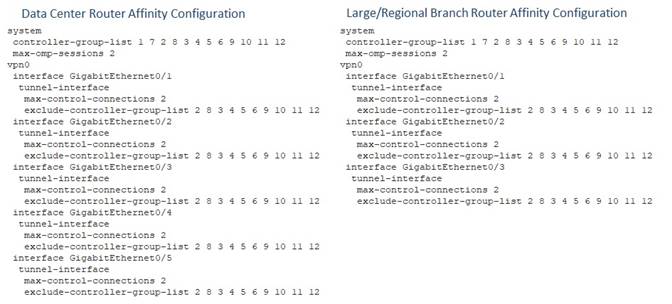

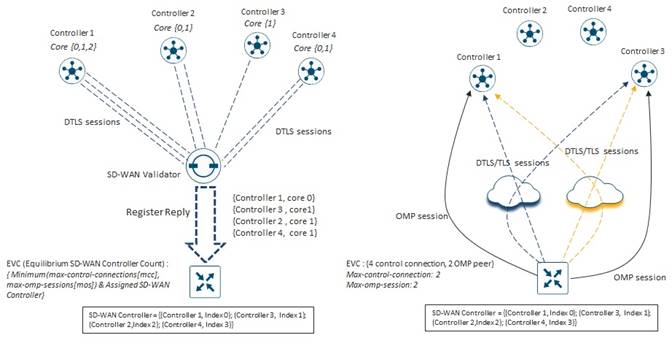

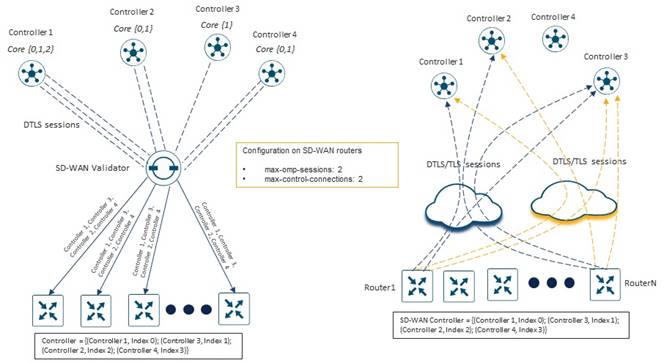

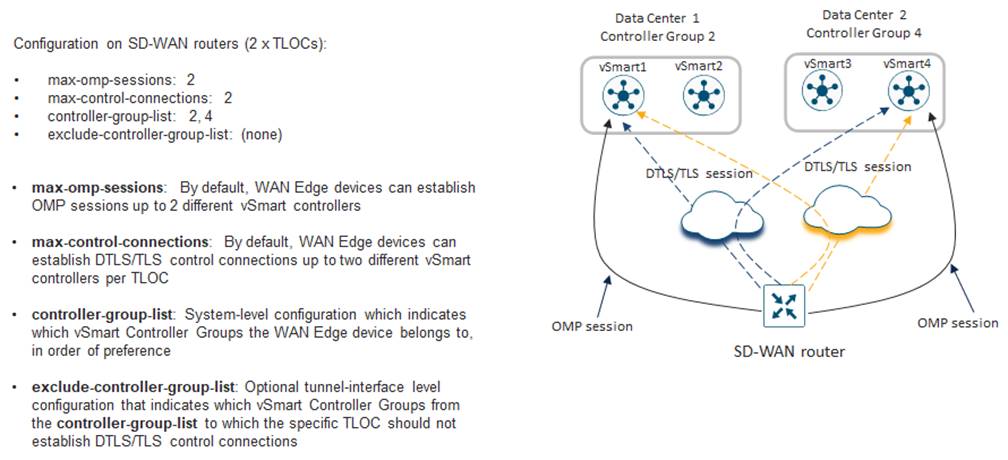

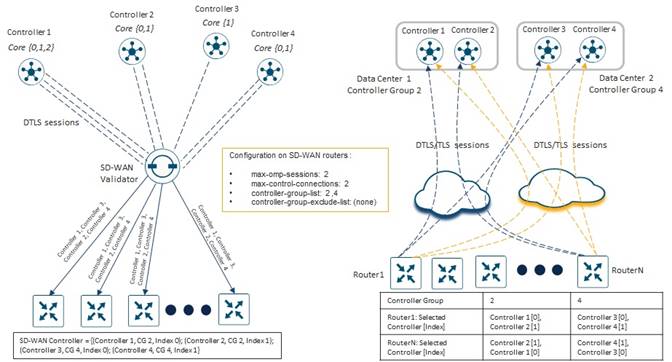

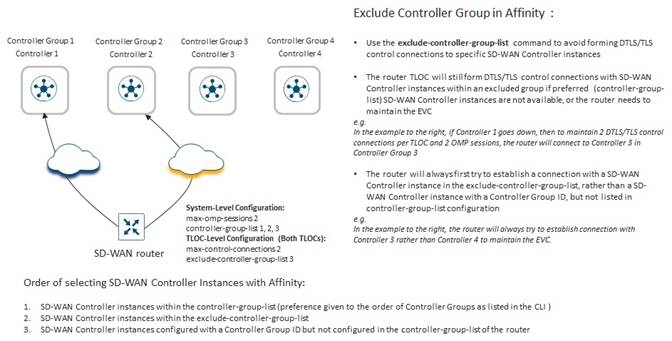

When designing a large SD-WAN deployment, it is generally recommended to separate SD-WAN Controller instances into different Controller Groups, and then use the system-level controller-group-list and tunnel-interface level exclude-controller-group-list commands on the SD-WAN routers to control which SD-WAN routers form DTLS/TLS control connections and OMP sessions to which SD-WAN Controller instances. This is referred to as SD-WAN Controller Affinity.

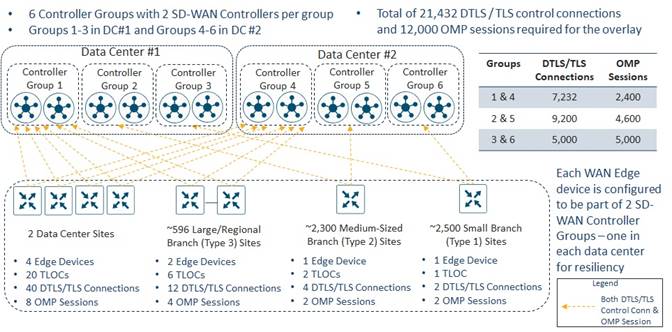

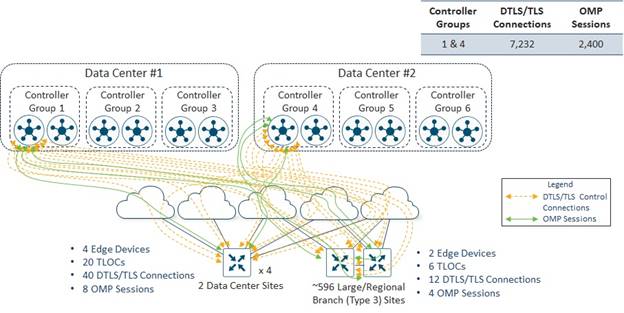

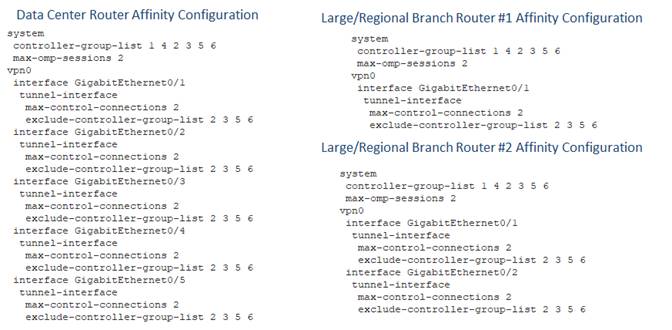

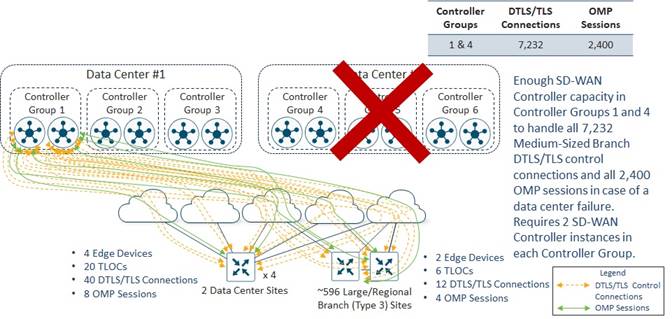

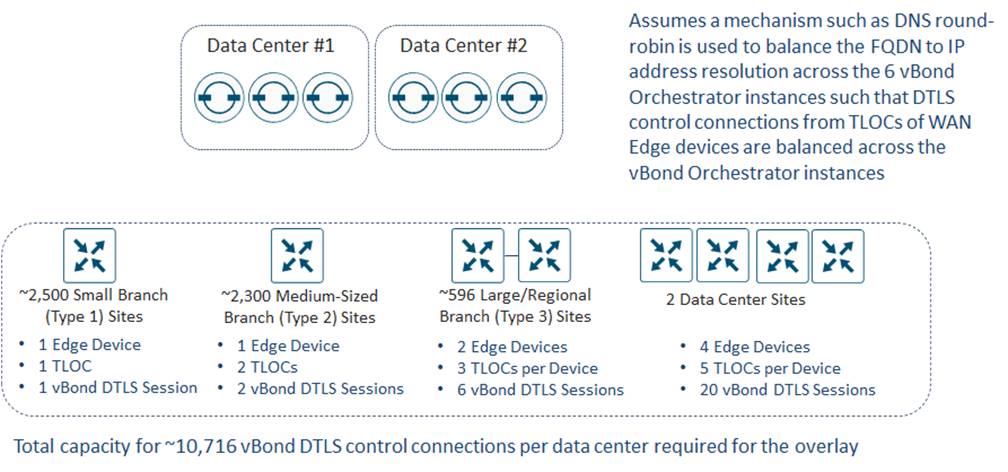

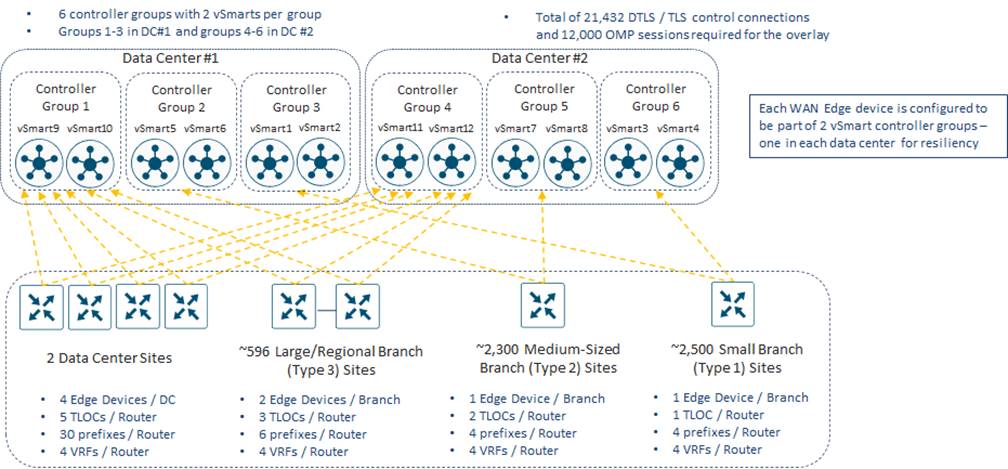

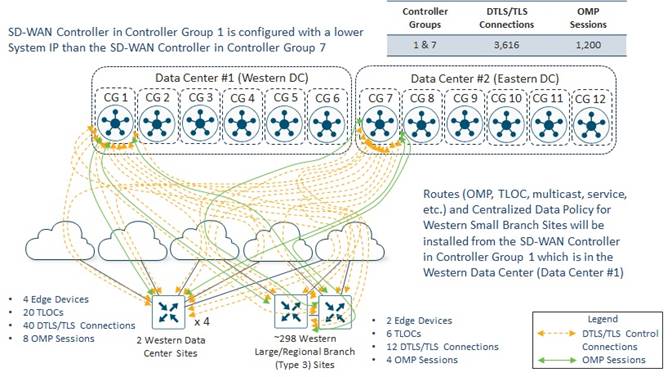

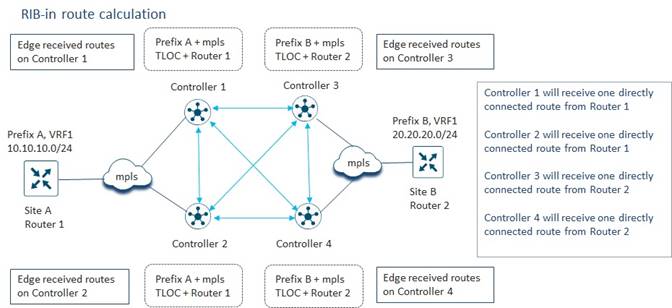

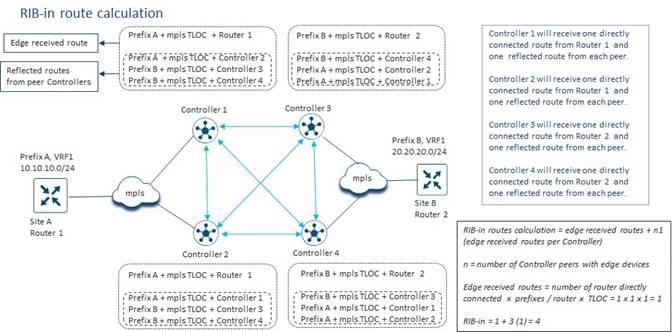

For the SD-WAN Controller design for Bank of the Earth’s overlays, a total of six SD-WAN Controller Groups are configured with two SD-WAN Controller instances in each Controller Group. Controller Groups 1 – 3 are configured in Data Center #1, and Controller Groups 4 – 6 are configured in Data Center #2.

Each SD-WAN router is configured to be a member of two SD-WAN Controller Groups (Groups 1 and 4, Groups 2 and 5, or Groups 3 and 6) – with one Controller Group in each data center.
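The group layout and pairings above can be expressed as a small data model. The sketch below checks that each pairing spans both data centers and derives the Controller Groups a router would exclude. Treating the exclude list as simply "every group not in the router's pair" is an assumption made for illustration, and the function names are hypothetical.

```python
# Controller Groups per data center, as described in the text.
DATA_CENTER_GROUPS = {"DC1": (1, 2, 3), "DC2": (4, 5, 6)}

# The three group pairings a router may be assigned.
GROUP_PAIRS = [(1, 4), (2, 5), (3, 6)]


def data_center_of(group: int) -> str:
    """Return the data center hosting a given Controller Group."""
    for dc, groups in DATA_CENTER_GROUPS.items():
        if group in groups:
            return dc
    raise ValueError(f"unknown Controller Group {group}")


def excluded_groups(pair: tuple) -> list:
    """Assumption: a router excludes every group outside its own pair."""
    all_groups = [g for groups in DATA_CENTER_GROUPS.values() for g in groups]
    return sorted(g for g in all_groups if g not in pair)


for pair in GROUP_PAIRS:
    # Each pairing must contain one group from each data center.
    assert {data_center_of(g) for g in pair} == {"DC1", "DC2"}
    print(pair, "excludes", excluded_groups(pair))
```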

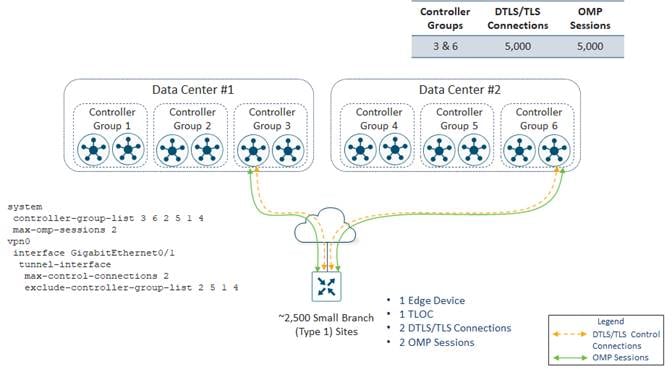

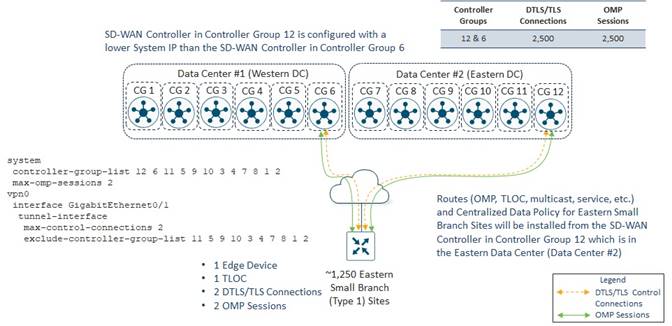

Small Branch Sites

SD-WAN routers within Small Branch Sites are configured to be members of SD-WAN Controller Groups 3 and 6.

By default, each SD-WAN router will establish DTLS/TLS control connections to two SD-WAN Controller instances over each TLOC. This is controlled at the WAN transport tunnel-interface level by the max-control-connections command. Likewise, each SD-WAN router will establish OMP connections to two SD-WAN Controllers by default. This is controlled by the max-omp-sessions command. Bank of the Earth decided to leave these settings at the default values.

Each Small Branch Site has a single SD-WAN router which has a single WAN transport, and therefore a single TLOC. Therefore, each Small Branch Site initiates two DTLS/TLS control connections – one to an SD-WAN Controller in Controller Group 3 and the other to an SD-WAN Controller in Controller Group 6. One OMP session is initiated to the SD-WAN Controller in Controller Group 3 over the DTLS/TLS control connection, and one OMP session is initiated to the SD-WAN Controller in Controller Group 6 over the DTLS/TLS control connection – for a total of two OMP sessions per Small Branch Site.

Since there are approximately 2,500 Small Branch Sites within the Americas overlay, there are a total of 2 x 2,500 = 5,000 DTLS/TLS control connections established between all the Small Branch Sites and all the SD-WAN Controller instances in Controller Groups 3 and 6. Likewise, there are a total of 2 x 2,500 = 5,000 OMP sessions established between all the Small Branch Sites and all the SD-WAN Controller instances in Controller Groups 3 and 6. More specifically, 2,500 DTLS/TLS control connections and 2,500 OMP sessions will be formed to the SD-WAN Controller instances in Controller Group 3 and 2,500 DTLS/TLS control connections and 2,500 OMP sessions will be formed to the SD-WAN Controller instances in Controller Group 6. A load-balancing algorithm within the SD-WAN router – based on the router’s System IP address – ensures approximately equal distribution of the DTLS/TLS control connections and OMP sessions across both SD-WAN Controller instances within each Controller Group.
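The Small Branch Site totals follow directly from the site count and the default settings. A minimal sketch of the calculation, using the approximate figures quoted above (the variable names are illustrative only):

```python
SITES = 2_500                 # approximate Small Branch Sites, Americas overlay
TLOCS_PER_ROUTER = 1          # single WAN transport per small branch router
MAX_CONTROL_CONNECTIONS = 2   # per TLOC (default)
MAX_OMP_SESSIONS = 2          # per router (default)
GROUPS = (3, 6)               # Controller Groups assigned to small branches

# Control connections scale with TLOCs; OMP sessions are counted per router.
control_total = SITES * TLOCS_PER_ROUTER * MAX_CONTROL_CONNECTIONS
omp_total = SITES * MAX_OMP_SESSIONS

# Each router places one connection and one session in each of its two groups.
control_per_group = control_total // len(GROUPS)
omp_per_group = omp_total // len(GROUPS)

print(f"control connections: {control_total} total, {control_per_group} per group")
print(f"OMP sessions:        {omp_total} total, {omp_per_group} per group")
```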

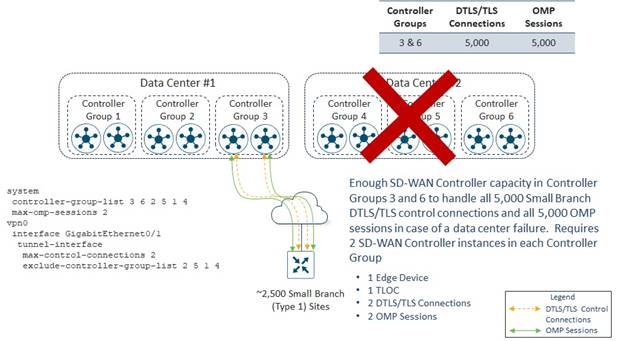

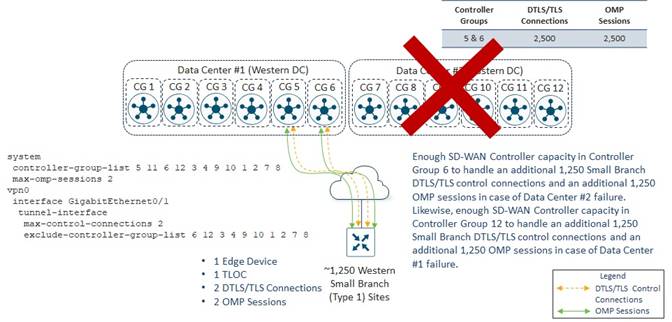

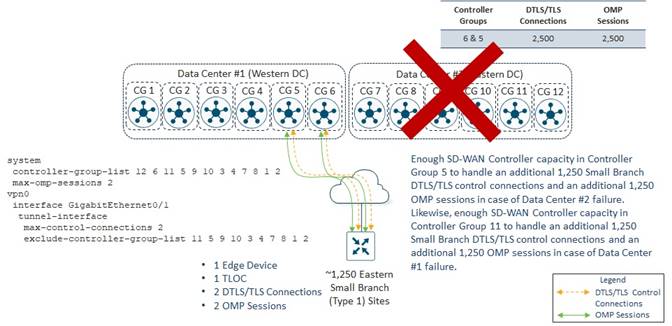

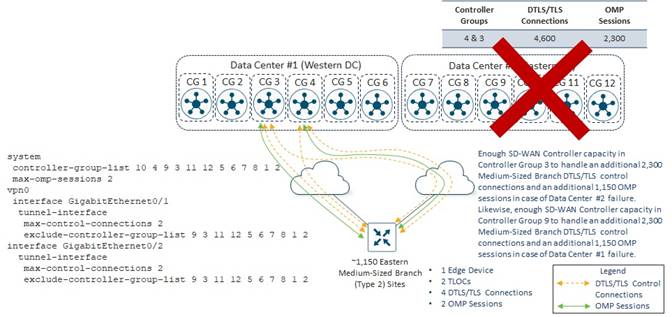

Note that a single SD-WAN Controller instance in each of Controller Groups 3 and 6 is sufficient to handle the 2,500 DTLS/TLS control connections and 2,500 OMP sessions. However, Bank of the Earth wanted the SD-WAN Controller design to specifically address the scenario of a failure of one of the data centers within a given SD-WAN overlay. In the event of a failure of one of the data centers, all Small Branch Site SD-WAN routers will lose DTLS/TLS control connections and OMP sessions to the SD-WAN Controller instances in the Controller Group to which they belong within that data center.

In the example above, if Data Center #2 fails, the Small Branch Site SD-WAN routers will lose DTLS/TLS control connections and OMP sessions to all SD-WAN Controller instances in Controller Group 6. Because the Small Branch Site SD-WAN routers are configured with the command max-omp-sessions 2 and the WAN transport interface is configured with the tunnel-interface level command max-control-connections 2, the SD-WAN router will be out of equilibrium – both regarding the number of DTLS/TLS control connections on the WAN transport tunnel-interface and the overall number of OMP sessions it has formed with SD-WAN Controllers.

The SD-WAN router will attempt to establish a second DTLS/TLS control connection over the WAN transport tunnel-interface. Since the WAN transport tunnel-interface has been configured to exclude Controller Groups 1, 2, 4, and 5, and since there is a second SD-WAN Controller instance within Controller Group 3, each small branch SD-WAN router will establish a second DTLS/TLS control connection to the second SD-WAN Controller instance within Controller Group 3. Over this second DTLS/TLS control connection, each small branch SD-WAN router will form a second OMP session. Note that an SD-WAN router will never form a second DTLS/TLS control connection or OMP session to an SD-WAN Controller instance with which it already has a DTLS/TLS control connection and OMP session.

At this point, each Small Branch Site SD-WAN router will have met the requirement for two DTLS/TLS control connections for the WAN transport tunnel-interface, as specified in the max-control-connections 2 command, and the requirement for two OMP sessions, as specified in the max-omp-sessions 2 command. Note that since the TLOC of the Small Branch Site SD-WAN router is not connected to both of its “assigned” SD-WAN Controller instances, it is still considered “out of equilibrium”.
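The reconnection behavior described above can be illustrated with a small simulation. This is a sketch only: the instance names are hypothetical, and the "first eligible controller" selection order is an assumption, since the text does not describe how the router chooses among eligible instances. The rules modeled come from the text: skip unreachable controllers, skip excluded Controller Groups, and never connect twice to the same instance.

```python
from dataclasses import dataclass

MAX_CONTROL_CONNECTIONS = 2  # tunnel-interface default, per the text


@dataclass(frozen=True)
class Controller:
    name: str          # hypothetical instance name
    group: int         # Controller Group number
    data_center: int   # 1 or 2


# Groups 1-3 are in Data Center #1, groups 4-6 in Data Center #2,
# with two SD-WAN Controller instances per group.
CONTROLLERS = [
    Controller(f"ctrl-g{g}-{i}", g, 1 if g <= 3 else 2)
    for g in range(1, 7)
    for i in (1, 2)
]
BY_NAME = {c.name: c for c in CONTROLLERS}


def pick_replacement(connected, excluded_groups, failed_dc):
    """Return a reachable, non-excluded controller not already connected."""
    for c in CONTROLLERS:
        if c.data_center == failed_dc:
            continue  # unreachable: its data center is down
        if c.group in excluded_groups:
            continue  # excluded on the tunnel-interface
        if c in connected:
            continue  # never connect twice to the same instance
        return c
    return None


# Small branch router before the failure: one connection per assigned group.
connected = [BY_NAME["ctrl-g3-1"], BY_NAME["ctrl-g6-1"]]
excluded = {1, 2, 4, 5}

# Data Center #2 fails, so connections into it are lost.
connected = [c for c in connected if c.data_center != 2]

# The router tries to get back to max-control-connections.
while len(connected) < MAX_CONTROL_CONNECTIONS:
    replacement = pick_replacement(connected, excluded, failed_dc=2)
    if replacement is None:
        break
    connected.append(replacement)

names = [c.name for c in connected]
print(names)
```

After the simulated failure, both control connections land on the two instances of Controller Group 3, which matches the outcome described in the text.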

With two SD-WAN Controller instances in each of Controller Groups 3 and 6, if either Data Center #1 or #2 fails, sufficient SD-WAN Controller capacity is provisioned within each Controller Group to maintain the DTLS/TLS control connections and OMP sessions for the SD-WAN routers in the Small Branch Sites. Put another way, Small Branch Site SD-WAN routers have been compartmentalized to use only SD-WAN Controller instances within Controller Groups 3 and 6 during normal operations and in the event of the failure of one of the two data centers. This provides Bank of the Earth a deterministic way of ensuring there is sufficient SD-WAN Controller capacity for the Small Branch Sites, rather than trying to figure out how to spread the individual DTLS/TLS control connections and OMP sessions across the remaining Controller Groups without overrunning the capacity of any given SD-WAN Controller instance. This is also particularly useful as Bank of the Earth adds or removes Small Branch Sites over time. Note that two SD-WAN Controller instances within each Controller Group already provide some excess capacity for Bank of the Earth to add additional Small Branch Sites. However, the downside of this design is that it requires provisioning double the number of SD-WAN Controller instances necessary for all DTLS/TLS control connections and OMP sessions from the Small Branch Sites.
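The capacity trade-off can be made concrete by comparing the per-instance load in normal operation with the load after a data center failure. The sketch below assumes a perfectly even split across the two instances in a group (the text says only "approximately equal"), and the function name is hypothetical.

```python
SITES = 2_500                 # approximate Small Branch Sites
TLOCS_PER_ROUTER = 1
MAX_CONTROL_CONNECTIONS = 2   # per TLOC
INSTANCES_PER_GROUP = 2


def connections_per_instance(data_center_failed: bool) -> float:
    """Control connections carried by each instance in a surviving group."""
    # Normally each TLOC places one connection in each of its two groups.
    # After a data center failure, both connections land in the surviving
    # group, one on each instance.
    into_group = TLOCS_PER_ROUTER * (
        MAX_CONTROL_CONNECTIONS if data_center_failed else 1
    )
    return SITES * into_group / INSTANCES_PER_GROUP


normal = connections_per_instance(data_center_failed=False)
failed = connections_per_instance(data_center_failed=True)
print(f"normal operation:    {normal:.0f} per instance")
print(f"data center failure: {failed:.0f} per instance")
```

In the failure case, each surviving instance carries the full 2,500 connections, which is the load the text says a single instance can handle. Because small branch routers have one TLOC and two OMP sessions, the OMP session counts per instance are the same.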

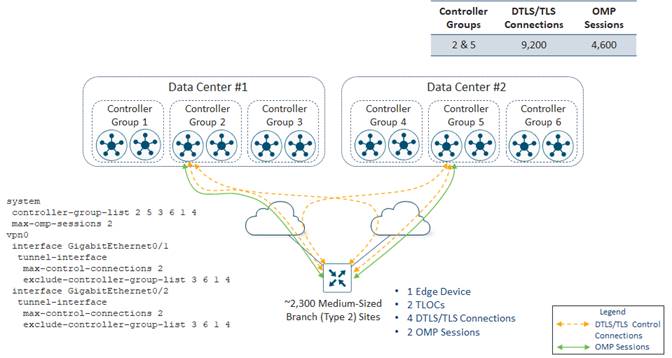

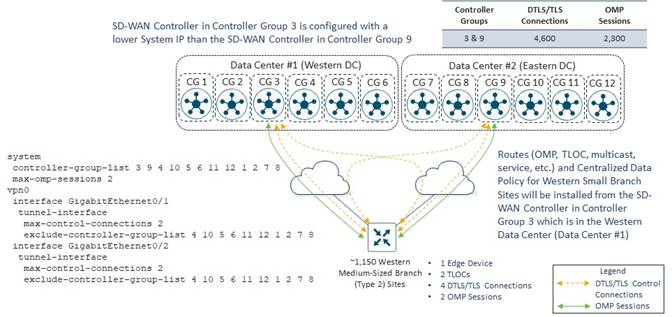

Medium-Sized Branch Sites

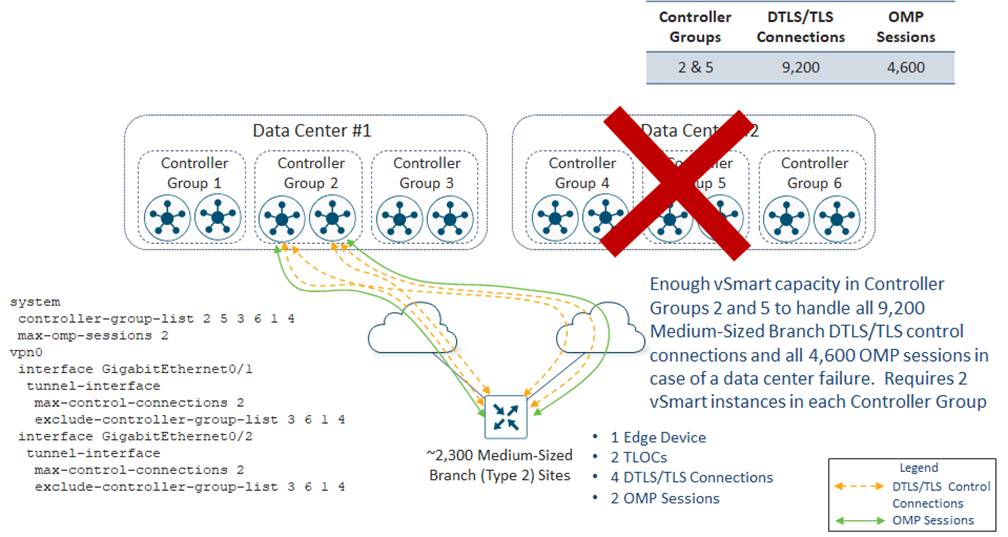

Routers within the Medium-Sized Branch Sites are configured to be members of SD-WAN Controller Groups 2 and 5.

As with the Small Branch Site SD-WAN routers, Bank of the Earth decided to leave the max-control-connections and max-omp-sessions settings at their default values of 2.

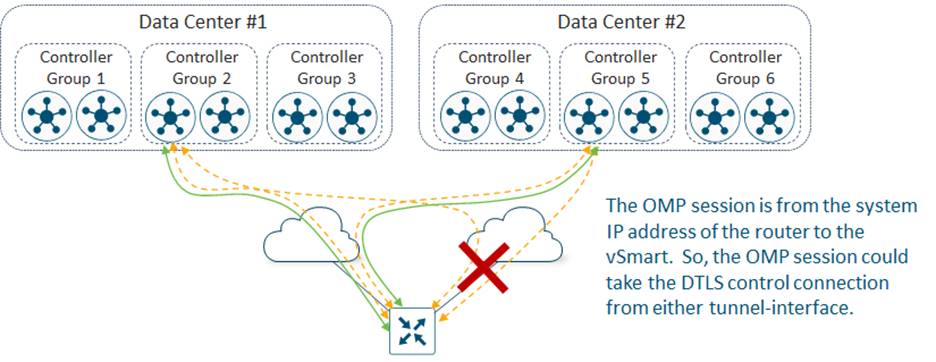

Each Medium-Sized Branch Site has a single SD-WAN router which has two WAN transports / TLOCs. Therefore, a total of four DTLS/TLS control connections and two OMP sessions are initiated from each Medium-Sized Branch Site. Each Medium-Sized Branch Site initiates two DTLS/TLS control connections – one to an SD-WAN Controller instance in Controller Group 2 and the other to an SD-WAN Controller instance in Controller Group 5 – from each of the two WAN transport tunnel-interfaces on the router. One OMP session is established to the SD-WAN Controller instance in Controller Group 2 over one of the DTLS/TLS control connections, and one OMP session is established to the SD-WAN Controller instance in Controller Group 5 over one of the DTLS/TLS control connections.

Since there are two DTLS/TLS control connections – one from each WAN transport tunnel-interface on the router – the OMP session may be established over either of these DTLS/TLS control connections on either WAN transport tunnel-interface. This is one of the benefits which Bank of the Earth recognized when they made the decision to leave the max-control-connections and max-omp-sessions settings at their default values for the Medium-Sized Branch Sites. If one of the WAN transport interfaces on a Medium-Sized Branch Site SD-WAN router goes down, the OMP session(s) to the SD-WAN Controller instance(s) which are riding over the DTLS/TLS control connections on that WAN transport tunnel-interface can simply switch over to the other DTLS/TLS control connection on the other WAN transport tunnel-interface. The SD-WAN Controller instance(s) will not see a loss of OMP peering, and therefore will not have to withdraw all routes (OMP, TLOC, multicast, service, etc.) available via the OMP peer.
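The switchover behavior can be pictured with a toy model of a single OMP peering between one router and one SD-WAN Controller instance. This is an illustrative sketch, not the router's actual logic: the TLOC names and the function are hypothetical, and the rule "stay on the current control connection if it is up, otherwise move to any surviving one" is an assumption drawn from the description above.

```python
def omp_transport(control_connections_up, current=None):
    """Pick the TLOC whose control connection carries the OMP session.

    control_connections_up maps a TLOC name to True when its DTLS/TLS
    control connection to the controller is up. Returns None when no
    control connection remains, meaning the OMP peering is lost.
    """
    if current is not None and control_connections_up.get(current):
        return current  # keep using the current control connection
    for tloc, up in control_connections_up.items():
        if up:
            return tloc  # move the session to a surviving connection
    return None


# Both WAN transports up: the session rides one of them.
state = {"tloc-a": True, "tloc-b": True}
carrier = omp_transport(state)

# The first transport fails: the session moves, the peering survives.
state["tloc-a"] = False
after_failure = omp_transport(state, current=carrier)

# Both transports fail: the peering is lost.
state["tloc-b"] = False
after_both = omp_transport(state, current=after_failure)

print(carrier, after_failure, after_both)
```

Only the last case corresponds to a loss of OMP peering, which is when the SD-WAN Controller would have to withdraw the routes learned from that peer.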

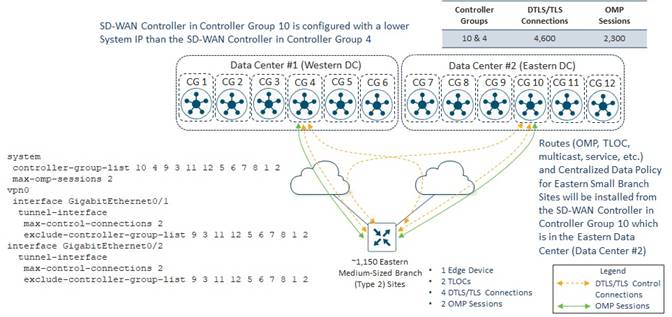

Since there are approximately 2,300 Medium-Sized Branch Sites within the Americas overlay, there are a total of 4 x 2,300 = 9,200 DTLS/TLS control connections established between all the Medium-Sized Branch Sites and all the SD-WAN Controller instances in Controller Groups 2 and 5. Likewise, there are a total of 2 x 2,300 = 4,600 OMP sessions established between all the Medium-Sized Branch Sites and all the SD-WAN Controller instances in Controller Groups 2 and 5. More specifically, 4,600 DTLS/TLS control connections and 2,300 OMP sessions will be formed to the SD-WAN Controller instances in Controller Group 2 and 4,600 DTLS/TLS control connections and 2,300 OMP sessions will be formed to the SD-WAN Controller instances in Controller Group 5. A load-balancing algorithm within the SD-WAN router – based on the router’s System IP address – ensures approximately equal distribution of the DTLS/TLS control connections and OMP sessions across both SD-WAN Controller instances within each Controller Group.
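For sites with more than one TLOC, the control connection count and the OMP session count diverge: control connections are counted per TLOC, while OMP sessions are counted per router. A minimal sketch with a hypothetical helper function, using the approximate figures quoted above:

```python
def control_plane_totals(sites, tlocs_per_router,
                         max_control_connections=2, max_omp_sessions=2,
                         groups=2):
    """Return overlay-wide and per-group control connection and OMP counts."""
    control = sites * tlocs_per_router * max_control_connections
    omp = sites * max_omp_sessions
    return {
        "control_connections": control,
        "omp_sessions": omp,
        "control_connections_per_group": control // groups,
        "omp_sessions_per_group": omp // groups,
    }


# Medium-Sized Branch Sites: about 2,300 routers with two TLOCs each.
medium = control_plane_totals(sites=2_300, tlocs_per_router=2)
for key, value in medium.items():
    print(f"{key}: {value}")
```

Adding a second TLOC doubles the control connections per router but leaves the OMP session count unchanged.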

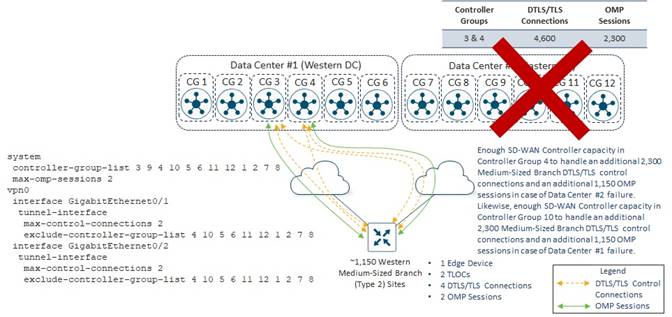

As with the small branch SD-WAN Controller design, a single SD-WAN Controller in each of Controller Groups 2 and 5 is sufficient to handle 4,600 DTLS/TLS control connections and 2,300 OMP sessions. However, Bank of the Earth wanted the SD-WAN Controller design to specifically address the scenario of a failure of one of the data centers within a given SD-WAN overlay. In the event of a failure of one of the data centers, all Medium-Sized Branch Site SD-WAN routers will lose DTLS/TLS control connections and OMP sessions to the SD-WAN Controller instances in the Controller Group to which they belong within that data center.

In the example above, if Data Center #2 fails, the Medium-Sized Branch Site SD-WAN routers will lose DTLS/TLS control connections and OMP sessions to all SD-WAN Controller instances in Controller Group 5. Because the Medium-Sized Branch Site SD-WAN routers are configured with the command max-omp-sessions 2 and each WAN transport is configured with the tunnel-interface level command max-control-connections 2, the SD-WAN routers will be out of equilibrium – both with respect to the number of DTLS/TLS control connections on each WAN transport tunnel-interface and the overall number of OMP sessions established with the SD-WAN Controllers.

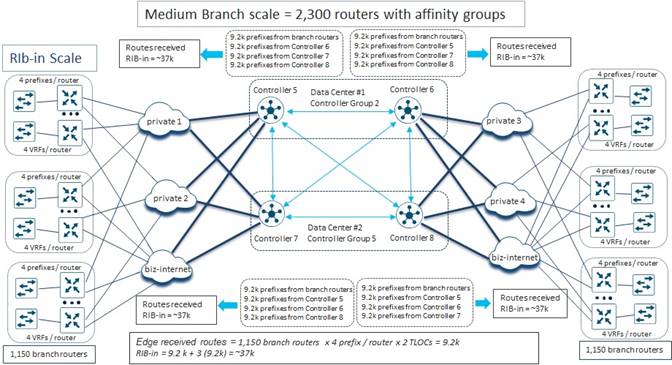

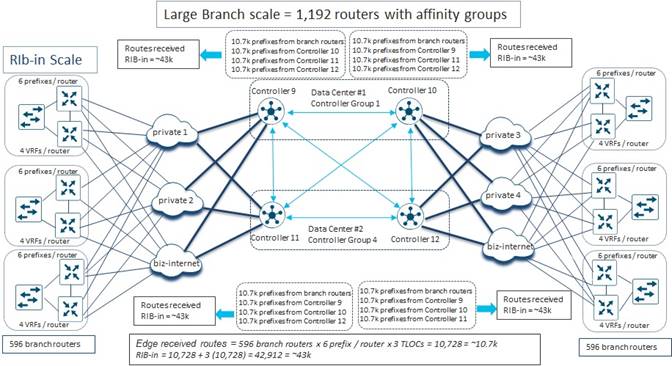

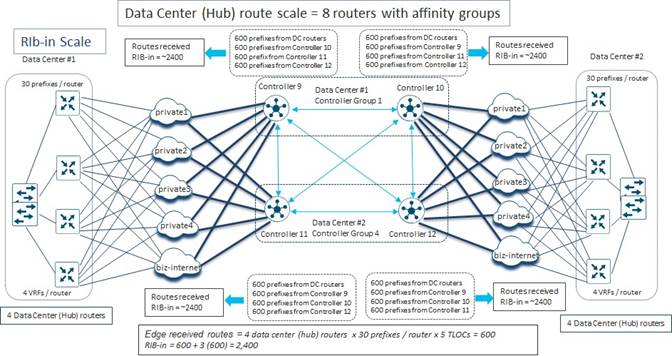

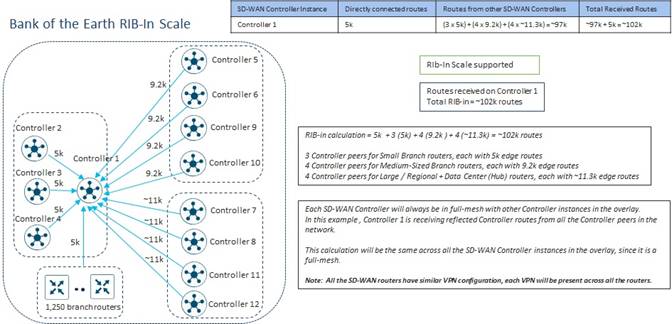

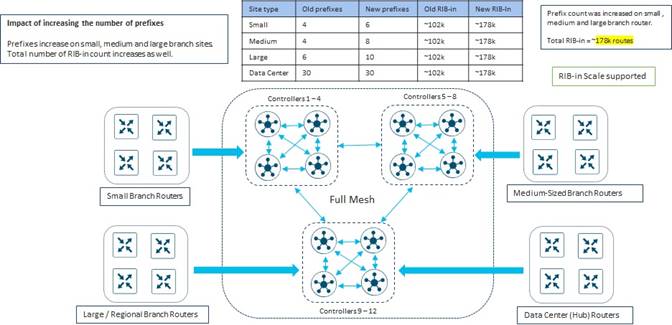

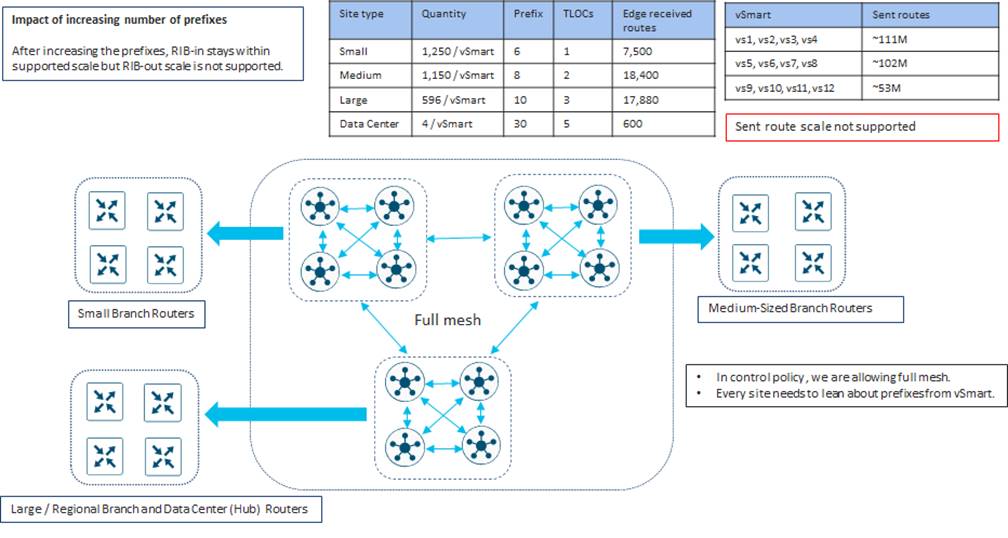

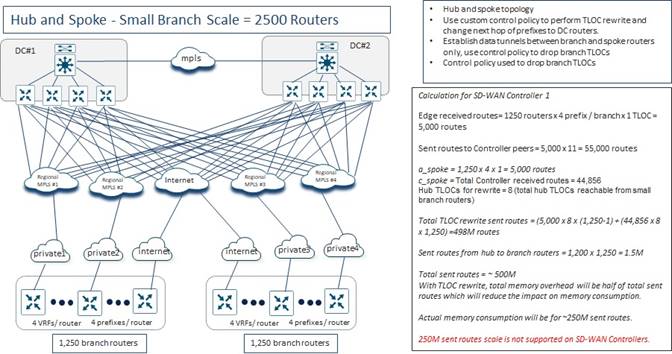

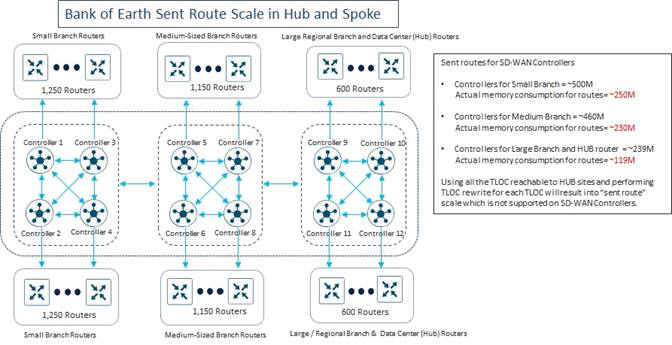

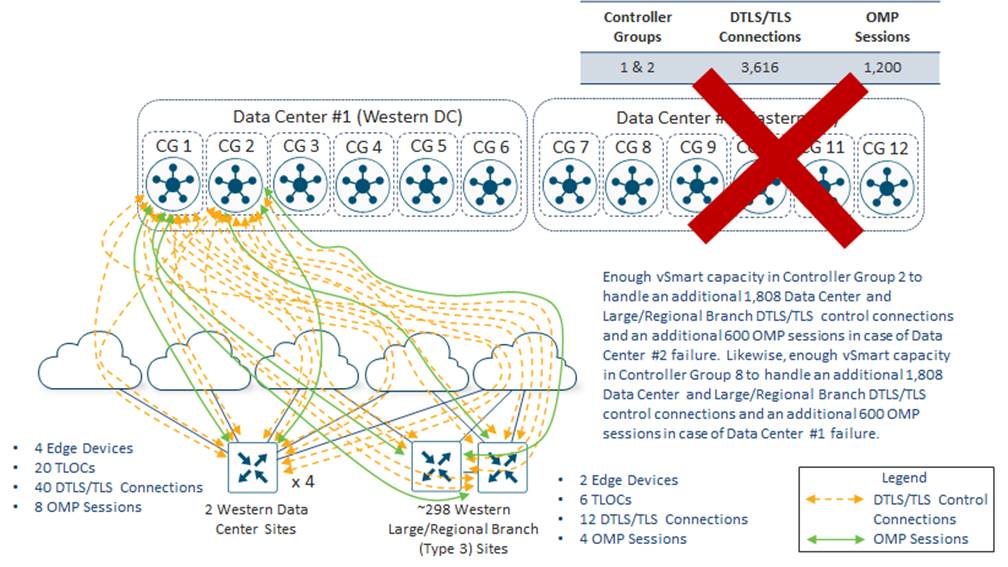

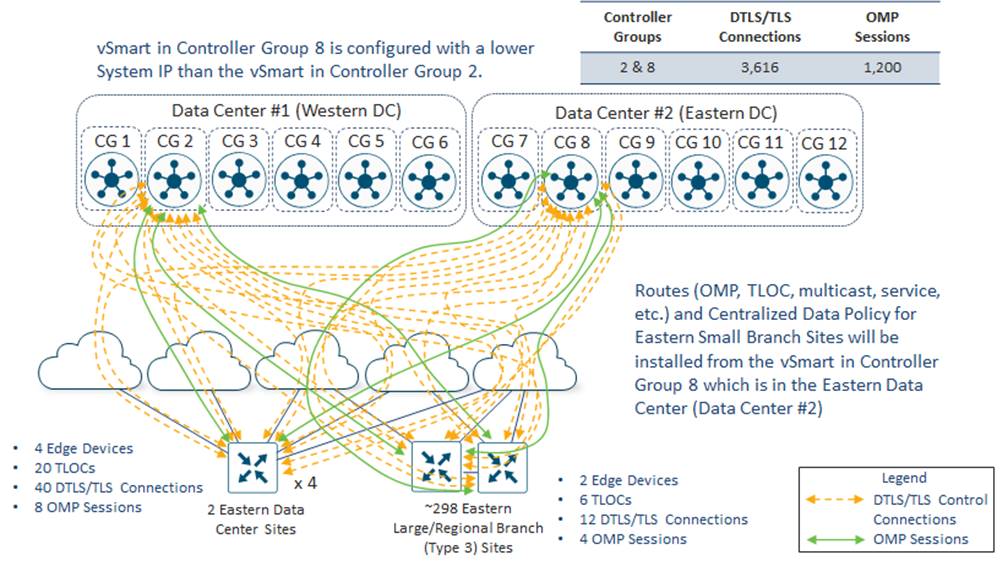

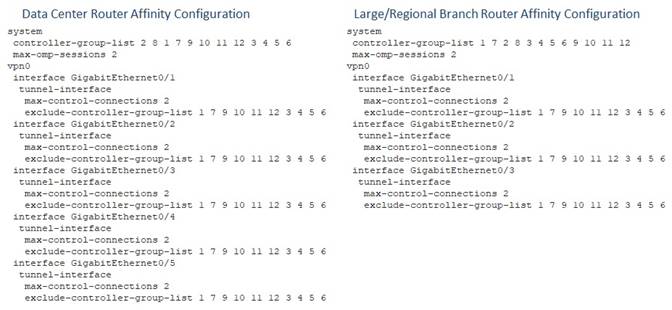

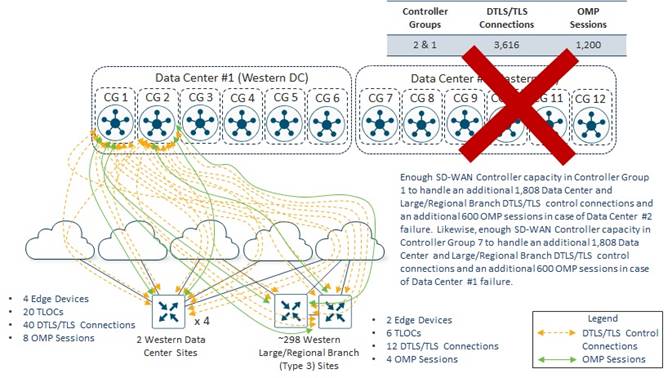

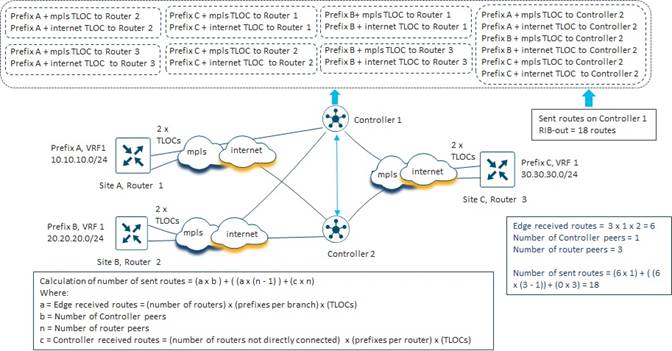

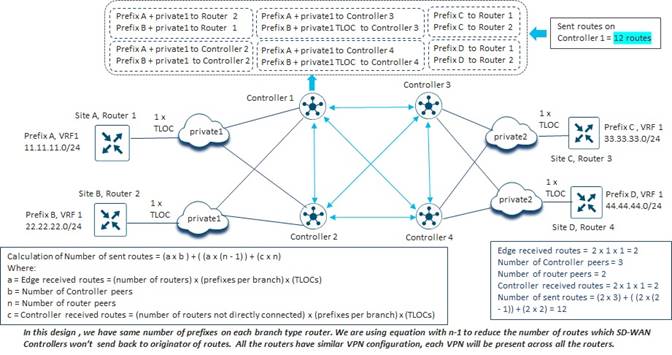

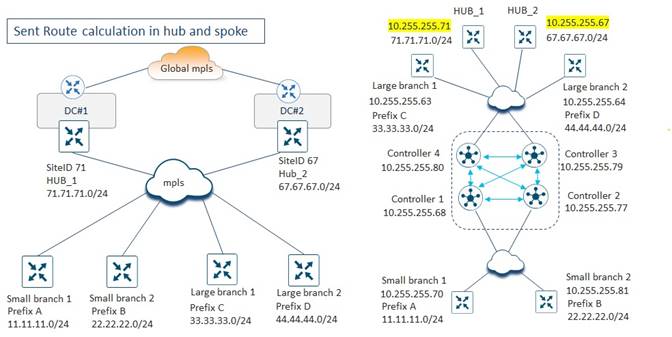

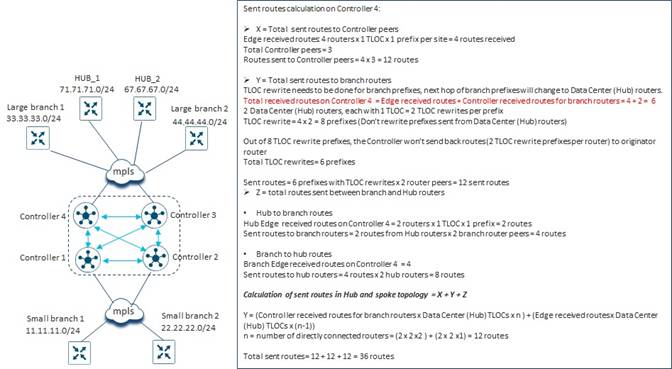

The SD-WAN routers will attempt to establish a second DTLS/TLS control connection over each WAN transport tunnel-interface. Since the WAN transport tunnel-interfaces have been configured to exclude Controller Groups 1, 3, 4, and 6, and since there is a second SD-WAN Controller instance within Controller Group 2, each WAN transport tunnel-interface will establish a second DTLS/TLS control connection to the second SD-WAN Controller instance within Controller Group 2. Over one of the two DTLS/TLS control connections (because there are two WAN transport tunnel-interfaces), each Medium-Sized Branch Site SD-WAN router will form an OMP session with the second SD-WAN Controller instance. At this point, each Medium-Sized Branch Site SD-WAN router will have met the requirement for two DTLS/TLS control connections for each WAN transport tunnel-interface, as specified in the max-control-connections 2 command, and the requirement for two OMP sessions, as specified in the max-omp-sessions 2 command. Note that since neither TLOC of the Medium-Sized Branch Site SD-WAN router is connected to both of its “assigned” SD-WAN Controller instances, both TLOCs are still considered “out of equilibrium”.
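The distinction between meeting the configured maximums and being in equilibrium can be sketched as two separate checks. This is a simplified interpretation of the text rather than the router's actual algorithm: it treats a TLOC as in equilibrium only when its control connections cover every Controller Group assigned to the router. The TLOC names and function names are hypothetical.

```python
MAX_CONTROL_CONNECTIONS = 2
ASSIGNED_GROUPS = {2, 5}  # Medium-Sized Branch Site routers


def meets_max_connections(tloc_groups):
    """True when every TLOC has the configured number of connections."""
    return all(
        len(groups) == MAX_CONTROL_CONNECTIONS
        for groups in tloc_groups.values()
    )


def in_equilibrium(tloc_groups, assigned=ASSIGNED_GROUPS):
    """True when every TLOC reaches each of the router's assigned groups."""
    return all(set(groups) == set(assigned) for groups in tloc_groups.values())


# Each list holds the Controller Group of every control connection on a TLOC.
normal = {"tloc-a": [2, 5], "tloc-b": [2, 5]}
after_dc2_failure = {"tloc-a": [2, 2], "tloc-b": [2, 2]}

for label, state in (("normal", normal), ("DC #2 failed", after_dc2_failure)):
    print(label, meets_max_connections(state), in_equilibrium(state))
```

After the failure, the connection count is satisfied but the equilibrium check is not, which mirrors the situation described above.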