Cisco UCS and MDS Better Together White Paper


● Advanced design options

● Easier management

● Better support

This document explains the main aspects of the combined data center solution, highlighting both the synergy of Cisco UCS with the Cisco MDS 9000 Series and its differentiation from alternative combinations.

The Cisco Unified Computing System™ (Cisco UCS®) brings unique capabilities that help scale and simplify x86 server deployments. To deliver these benefits, it is built on unique features that are new in the industry and derived more from its architecture than its individual components. The Cisco® MDS 9000 Series provides high-bandwidth, highly scalable, and highly available secure storage connectivity and unifies physical and virtual storage, enabling transparent communication and management. The Cisco MDS 9000 Series of products are an excellent counterpart to Cisco UCS, delivering exceptional performance, flexibility, topology-independent availability, management simplicity, and scalability to help customers derive value from the most challenging application environments.

New applications can be deployed transparently while using Cisco UCS and the Cisco MDS 9000 Series, according to the testimony of numerous customers, and underscored by the tremendous market success of these solutions. The Cisco UCS X-Series Modular System powered by Cisco Intersight™ is the latest addition to the UCS portfolio. Cisco Intersight represents an innovative cloud-hosted operational model for the hybrid cloud data center. The 64G switching module is the latest hardware addition to the Cisco MDS 9000 Series while Cisco SAN Analytics and the Cisco Dynamic Ingress Rate Limiting feature represent the new and most innovative software enhancements. By combining these highly differentiated sets of innovations, customers immediately realize the benefits of a future-ready IT solution.

In the era of digitalization, mobility, social networking, and the Internet of Things (IoT), data center and cloud infrastructure increasingly represent a strategic business asset for organizations of all kinds. Companies are looking at how to best design, scale, and integrate their various constituent products and technologies, making them work together with a simplified management approach. Application uptime 24 hours a day, every day, is now a key requirement, and always-on application functioning is not just a marketing message but a goal for everyone in our hybrid-cloud epoch.

In the storage networking market, Cisco is the innovation-driven market leader for block-, file-, and object-storage networking. Cisco’s leadership manifests in a complete state-of-the-art portfolio of switches supporting Ethernet, Fibre Channel, and Fibre Channel over Ethernet (FCoE) unified networking. The same portfolio can support block-access upper-layer protocols such as SCSI and NVMe, providing an ideal choice when migrating from traditional storage to all-flash arrays. Those fast storage devices often support NVMe/FC, NVMe over TCP, or NVMe over RoCEv2 as connectivity options.

Cisco is the technology leader in Fibre Channel storage networking, driving innovation toward highly available congestion-free fabrics with deep traffic inspection at scale. With more than 35,000 customers worldwide, more than 180,000 chassis sold, and more than 18 million ports shipped since 2002, Cisco is a well-established and credible Fibre Channel vendor with a long-term horizon.

A large selection of Fortune 500 data centers relies on Cisco MDS 9000 Series field-proven reliability, achieving nonstop operations, up to 99.9999 percent availability, and a demonstrated director uptime of more than fifteen years. The purpose-built Application-Specific Integrated Circuits (ASICs) in Cisco MDS 9000 Series products consistently deliver top performance and robust features, plus massive scalability with ease of use and detailed control, offering low Total Cost of Ownership (TCO) for enterprises. Deterministic performance and latency, wire-speed throughput without the concerns of local switching, investment protection, and complete backward compatibility, all make the Cisco MDS 9000 Series the best candidate for bare-metal, virtualized, and containerized applications and next-generation flash-based storage solutions. As a standards-based product line, the Cisco MDS 9000 Series can be used to connect servers from any vendor with any qualified Host Bus Adapter (HBA) model. It can also be used to connect multiple qualified disk arrays from several vendors. This offers our customers a wide choice from a qualified ecosystem of devices and without compromises.

Cisco UCS is the number-one x86 blade server solution in the world. Introduced in 2009, Cisco UCS revolutionized the server market, combining computing, network connectivity (LAN), and storage connectivity (SAN) resources in a single system. Cisco UCS can consolidate both physical and virtual workloads onto a single, centrally managed, and automated system for computing, networking, and storage access. In this offering, Cisco pioneered unique features such as stateless computing, service profiles and unified fabrics. This innovation has allowed more than 55,000 Cisco customers to reduce IT efforts and costs through unified management of all components and an optimized use of resources.

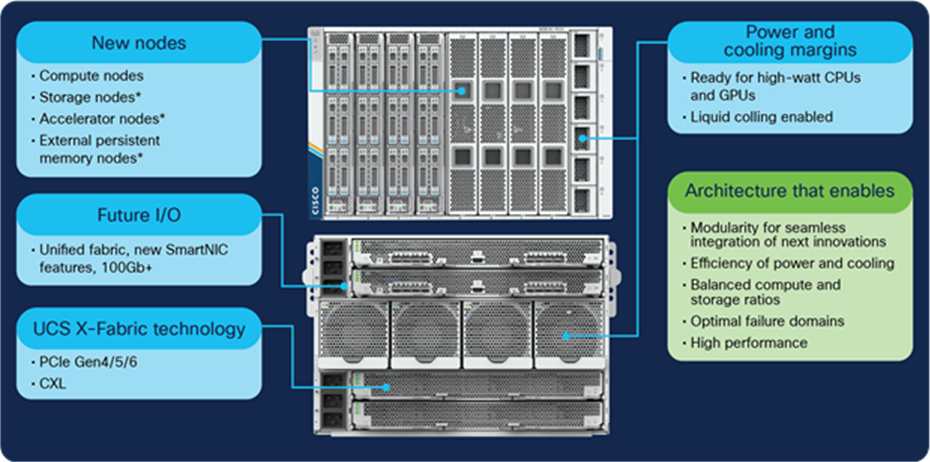

With the introduction of the Cisco UCS X-Series Modular System, Cisco has redefined once again the compute market, setting the stage for the next decade of technology innovation. The UCS X-Series Modular System enables you to feel safe investing in a system that’s designed for the future. Hardware adaptability is the main thrust of the Cisco UCS X-Series, and so any sort of modern application can be run at scale, including virtualized workloads, enterprise applications, traditional and in-memory database management systems, cloud-native applications, big data clusters, and AI/ML workloads (Figure 1).

Figure 1. Cisco UCS X-Series Modular System highlights

The new offering is different from past rack or blade models, as it combines the best of both into a highly flexible system architecture based on node types. Powered by Cisco Intersight, the Cisco UCS X-Series Modular System is cloud managed, which makes it a powerful answer for an IT landscape that is becoming more and more cloud-centric. With its midplane-free chassis, the Cisco UCS X-Series Modular System is ready to accommodate compute nodes, GPU nodes, and storage nodes. The new titanium-certified power supply units and the increased size of nodes help alleviate power concerns, making the system capable of hosting future high-power CPUs and acceleration cards.

When using the Cisco MDS 9000 Series in conjunction with Cisco UCS, organizations can go well beyond basic interoperability and enjoy the benefits of both product lines and their combined value.

Designed specifically for Fibre Channel storage networking, Cisco MDS 9000 Series of products have a reputation for performance, reliability, and ease of use. Their success comes because of several factors.

Largest Fibre Channel portfolio with 64-Gbps capability

Cisco has technologically leaped ahead of the competition with its refreshed offering. Three modular directors offer support from 2-Gbps and up to 64-Gbps Fibre Channel speed, with port counts from 192 and up to 768. At the same time multiple fabric switches provide 32-Gbps connectivity from 8 to 96 ports, with a 64-Gbps offering in the works. Cisco’s offering benefits from the latest and greatest innovations in ASIC design and architectural approaches, leading to improved performance, higher availability, better security, exceptional scalability, deep flow-level monitoring, and simplified operations.

The Cisco MDS 9000 Series of switches is broad and differentiated, so that every customer’s needs can be accommodated in the best possible way. With fixed-configuration switches, multiservice appliances, and mission-critical directors, the Cisco MDS 9000 Series product line has an excellent combination of price, performance, and flexibility for all data centers. The currently available Cisco MDS 9000 Series portfolio includes (Figure 2):

Cisco MDS 9000 Series

● Cisco MDS 9148S 16G Fabric Switch: Fixed-configuration entry-level fabric switch, from 12 to 48 16-Gbps Fibre Channel ports at wire speed

● Cisco MDS 9250i Multiservice Fabric Switch: Multiservice node with wire-speed performance on all ports, up to 40 16-Gbps Fibre Channel ports, 8 FCoE ports, and 2 1/10-Gbps Fibre Channel over IP (FCIP) ports

● Cisco MDS 9132T 32G Fabric Switch: Fixed-configuration fabric switch, from 8 to 32 32-Gbps Fibre Channel ports at wire speed

● Cisco MDS 9148T 32G Fabric Switch: Fixed-configuration fabric switch, from 24 to 48 32-Gbps Fibre Channel ports at wire speed

● Cisco MDS 9396T 32G Fabric Switch: Fixed-configuration fabric switch, from 48 to 96 32-Gbps Fibre Channel ports at wire speed

● Cisco MDS 9220i Multiprotocol Fabric Switch: Multiprotocol node with wire-speed performance on all ports, up to 12 32-Gbps Fibre Channel ports and 4 1/10-Gbps or 2 25-Gbps or 1 40-Gbps Fibre Channel over IP (FCIP) ports

● Cisco MDS 9706 Multilayer Director: Mission-critical director-class device with up to 192 64-Gbps Fibre Channel wire-speed ports, optionally capable of FCIP connectivity

● Cisco MDS 9710 Multilayer Director: Mission-critical director-class device with up to 384 64-Gbps Fibre Channel wire-speed ports, optionally capable of FCIP connectivity

● Cisco MDS 9718 Multilayer Director: Mission-critical director-class device with up to 768 64-Gbps Fibre Channel wire-speed ports, optionally capable of FCIP connectivity

The advanced functionalities of the Cisco MDS 9000 Series switches and their future-ready architecture make them an ideal choice for organizations undergoing a Storage Area Network (SAN) refresh cycle. Adoption of 32-Gbps arrays and flash-memory storage has led to an increase in performance requirements for the storage network, so that in excess of 90 percent of the Fibre Channel networking sold today supports a speed of 32 Gbps.

The Cisco storage networking portfolio of Fibre Channel and FCoE switches is further enhanced by several products from the Cisco Nexus® portfolio.

Investment protection continues to be a primary consideration for customers. Cisco continues to respond with full backward compatibility with previous generations of the same Cisco MDS 9000 Series product line as well as adjacent product lines, including Cisco Nexus switches and Cisco UCS. Whereas competitors make programmed obsolescence their most apparent feature, Cisco keeps delivering on its investment-protection promise, with full appreciation from customers. The modular director-class Fibre Channel switching devices were designed with initial support for 16-Gbps Fibre Channel speed and with the capability to support higher bit rates through later generations of switching modules. As a result, the same chassis can be upgraded in place and in service to 32-Gbps and 64-Gbps Fibre Channel speeds. This helps reduce the Total Cost of Ownership (TCO) by reusing common parts, maintaining licenses, and avoiding re-cabling. In addition, Cisco fully supports the migration of SFP units from old switching modules to the more recent ones. The significance of these unique capabilities can be better appreciated when compared to competing offerings that require major equipment replacements to move from 16-Gbps to 32-Gbps and 64-Gbps Fibre Channel speeds. Competing offerings also prevent previous-generation SFP units from operating on their recent 64-Gbps switching modules, whereas Cisco MDS 9000 devices allow it.

Multiprotocol flexibility, application awareness, and programmability

The Cisco MDS 9000 Series delivers multiprotocol flexibility. Both SCSI and NVMe are fully qualified and supported, meeting both existing and future needs in terms of application performance. SAN extension is a critical capability for both business continuance and disaster recovery needs. The Cisco MDS 9000 Series offers a choice among 1/10/25/40G FCIP options, enabling remote data replication over long-distance WAN links with high throughput.

The Cisco MDS 9000 Series integrates with Cisco UCS and Cisco Nexus platforms as part of Cisco’s data center strategy. For customers adopting Cisco Application Centric Infrastructure (Cisco ACI®), the Cisco MDS 9000 Series product line is the default solution for Fibre Channel access to data. Cisco Smart Zoning and VMID Analytics are two examples of the application-centric innovation in the Cisco MDS 9000 Series. With Smart Zoning, you can create a single zone for all components of an application cluster. With VMID Analytics, you gain deep traffic visibility and FC I/O flow monitoring, down to the application level.

The Cisco MDS 9000 Series is well suited for cloud deployments, offering an embedded adapter for OpenStack Cinder. Programmability and interaction with open-source tools has also been enhanced. On every Cisco MDS 9000 Series device, there is a native onboard Python interpreter. The NX-API provides complete device interaction with an RPC-style API leveraging HTTP/S transport. Ansible modules have been developed and are freely available for use. A Python SDK can be downloaded from GitHub to shorten code development cycles and facilitate an object-oriented programming approach.
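As an illustration of the RPC-style interaction described above, the following minimal Python sketch builds (but does not send) a request in the JSON-RPC shape commonly documented for NX-API on Cisco NX-OS platforms. The `/ins` endpoint path, the `application/json-rpc` content type, the hostname, and the helper name `build_nxapi_request` are assumptions for illustration and should be checked against the NX-API documentation for the MDS release in use.

```python
import json


def build_nxapi_request(host, commands, use_https=True):
    """Return (url, headers, body) for a batch of CLI commands.

    Assumption: the switch exposes NX-API at /ins and accepts JSON-RPC
    requests whose method is "cli". Nothing is sent over the network here.
    """
    scheme = "https" if use_https else "http"
    url = f"{scheme}://{host}/ins"  # assumed endpoint path
    headers = {"content-type": "application/json-rpc"}  # assumed header
    body = [
        {
            "jsonrpc": "2.0",
            "method": "cli",
            "params": {"cmd": cmd, "version": 1},
            "id": request_id,
        }
        for request_id, cmd in enumerate(commands, start=1)
    ]
    return url, headers, json.dumps(body)


# Hypothetical hostname; the two show commands are read-only.
url, headers, body = build_nxapi_request(
    "mds-switch.example.com", ["show version", "show vsan"]
)
print(url)
print(body)
```

The returned tuple can be handed to any HTTP client together with the switch credentials. Keeping the payload construction separate from the transport makes the request easy to inspect and unit test before it touches a production fabric.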

The full performance of the new high-bit-rate switching modules can only be exploited when no congestion is present in the network. This starts with congestion-free switches, thanks to an exclusive arbitrated non-blocking architecture that virtually eliminates micro-burst congestion within switches. In addition, the Cisco MDS 9000 Series has long offered an industry-first hardware-enabled slow-drain detection and recovery mechanism at the fabric level. Now, starting with Cisco NX-OS Release 8.5(1), Cisco has introduced an ideal solution for SAN congestion at the fabric level.

Cisco Dynamic Ingress Rate Limiting (DIRL) is an innovative technology to bridge the performance gaps between the newer ultra-fast all-flash NVMe storage arrays (AFAs) and slower application servers. DIRL prevents the spreading of congestion caused by performance issues or slow-drain conditions in a SAN. DIRL enables slower and faster application servers to coexist in the same fabric without affecting each other, and this innovation allows them to drive the AFAs to their full potential. The best part is that DIRL is not dependent on the end-devices, doesn’t require any additional license, and is available on Cisco MDS 9000 Series Switches after a software-only upgrade.
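The general idea of dynamically limiting the ingress rate of a port, then relaxing the limit once congestion clears, can be sketched as a simple control loop. This is not Cisco's DIRL algorithm: the function name, the 50 percent reduction, the 25-point recovery step, and the 1 percent floor are all hypothetical values chosen only to make the behavior visible.

```python
def next_ingress_limit(current_pct, congested,
                       reduce_factor=0.5, recover_step_pct=25.0,
                       floor_pct=1.0):
    """Return the next ingress rate limit as a percentage of line rate.

    Hypothetical policy: cut the limit multiplicatively while congestion
    is seen, then raise it additively once congestion has cleared.
    """
    if congested:
        return max(floor_pct, current_pct * reduce_factor)
    return min(100.0, current_pct + recover_step_pct)


# Two polling intervals with congestion, followed by four without.
limit = 100.0
history = []
for congested in [True, True, False, False, False, False]:
    limit = next_ingress_limit(limit, congested)
    history.append(limit)

print(history)  # [50.0, 25.0, 50.0, 75.0, 100.0, 100.0]
```

Because the limit is applied on the switch port, a loop of this kind needs no cooperation from the attached server, which matches the end-device independence described above.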

The Cisco SAN Analytics solution offers end-to-end visibility into Fibre Channel block storage traffic. The solution is natively available from Cisco MDS 9000 Series devices due to its integrated-by-design architecture. Cisco SAN Analytics delivers deep visibility into I/O traffic between the compute and storage infrastructures. This information is in addition to the already-available visibility obtained from individual ports, switches, servers, virtual machines, and storage arrays. By enabling this industry-unique technology, Cisco MDS 9000 Series switches inspect the Fibre Channel and SCSI/NVMe headers, not the payload, of all input/output exchanges. By using dedicated hardware resources, the switch processes this data, builds a flow table, and calculates more than 70 metrics for every I/O flow. The collected information is finally sent out the management port by using a SAN Telemetry Streaming (STS) capability, based on the gRPC open-source API. The Cisco Nexus Dashboard Fabric Controller (NDFC), which is the evolution of Cisco Data Center Network Manager (DCNM), or third-party tools can receive that data, store it over long periods of time, and perform postprocessing and visualization. The SAN Analytics capability has been further enhanced on the 64G FC switching modules, which scale up to 1 billion IOPS and use a local streaming port, making the solution future ready.

Cisco Unified Computing System (Cisco UCS)

The Cisco UCS portfolio has been expanding over time and now includes a variety of systems like rack mount servers, modular servers, and blade servers. All solutions can support external connectivity through the appropriate switching elements.

A good percentage of Cisco UCS platforms are sold as part of integrated computing stacks, also known as converged architectures. Built using best-in-class products, these solutions include computing, networking, and storage resources in a single solution, often with a single management tool supporting a hybrid cloud model. VxBlock, FlexPod, FlashStack, VersaStack, and Adaptive Solutions for Converged Infrastructure with Hitachi are the commercial names of these solutions. The Cisco MDS 9000 Series is an optional element of these integrated systems and has contributed to their success.

Cisco UCS consists of several components that work together to provide a cohesive high-performance computing, networking, and storage environment with a robust feature set. The popular Cisco UCS blade solution consists of blade servers, network adapters (VICs), blade chassis, I/O Modules (IOMs), and fabric interconnects. Inclusion of GPU acceleration cards is an option to further strengthen the solution for specific workloads.

Welcome to the Cisco UCS X-Series Modular System

The Cisco UCS X-Series Modular System is the latest addition to the Cisco UCS portfolio. Powered by Cisco Intersight, it is an adaptable, future-ready system engineered to simplify IT and innovate at the speed of software. Moving beyond the concept of blade servers, it combines the serviceability of a blade system with the storage density of rack mount servers. The midplane-less design makes it ideal to support future capabilities, including storage nodes and GPU nodes. Intersight has been enhanced to offer a complete provisioning, monitoring, and reporting GUI for UCS X-Series. In this new computing offering, the Cisco UCS 6400 Series Fabric Interconnects, the same as with Cisco UCS, provide external connectivity. The UCS X-Series chassis features several enhancements compared to the UCS chassis, and the Intelligent Fabric Modules (IFMs) take the role of the I/O Modules (IOMs). Specialized GPU nodes and storage nodes offer a new possibility for growth and technology expansion leveraging the high-performance Compute Express Link (CXL) bus.

Because they are standards-based and suitable for adoption in a multivendor data center, Cisco UCS and the Cisco UCS X-Series provide the option to connect to both Cisco and third-party Fibre Channel SANs. This document describes the main benefits customers receive in addition to simple interoperability when connecting Cisco UCS to the Cisco MDS 9000 Series storage networking fabric.

This document focuses on Fibre Channel connectivity for the fabric interconnects and network adapters inside Cisco compute nodes. The same principles apply to Cisco UCS, Cisco rack mount servers (when connected to fabric interconnects), and Cisco UCS X-Series.

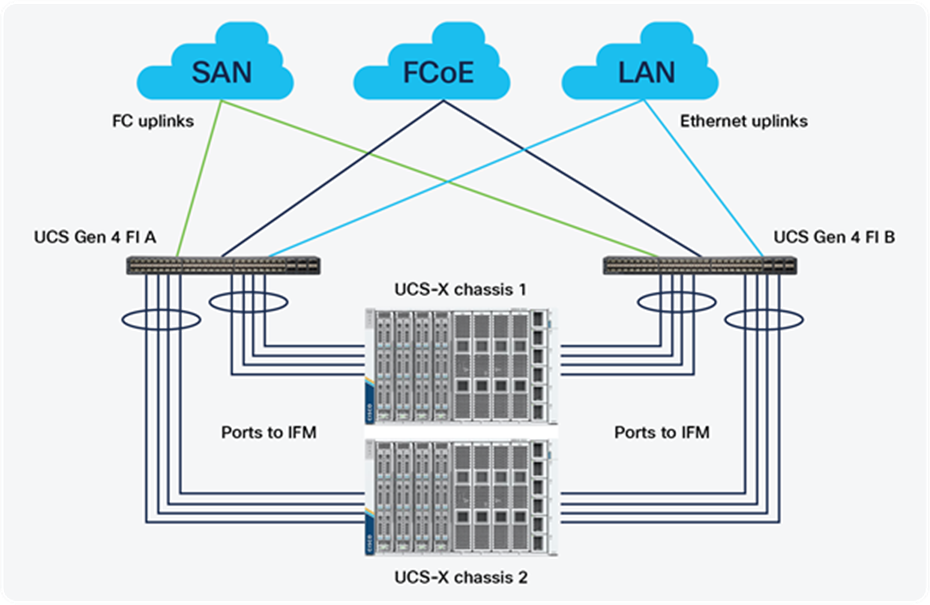

Let’s consider all Cisco compute deployments that include two fabric interconnects, namely Fabric Interconnect A (FI A) and Fabric Interconnect B (FI B), which correspond to Fibre Channel Fabric A and Fabric B respectively. Cisco Intersight provides the GUI for configuring and monitoring Cisco UCS. The fabric interconnects have server-facing ports, which provide the internal connectivity to the UCS blades and UCS X-Series compute nodes, and northbound-facing ports, which provide the external connectivity to the Ethernet and Fibre Channel networks and other optional direct-connect devices. The fabric interconnect uplink ports are unified and can be configured to support Ethernet, Fibre Channel, or FCoE connectivity. Physical connectivity from the compute resources to the block storage arrays is achieved by connecting a fabric interconnect Fibre Channel uplink port to an upstream SAN switch (Figure 3).

Figure 3. Cisco UCS X-Series and its external connectivity

The Cisco VIC is a Converged Network Adapter (CNA) that is installed in a Cisco UCS blade server or Cisco UCS X-Series compute node. It provides the capability to create virtual Ethernet (vEth) adapters and virtual Host Bus Adapters (vHBAs). vEths and vHBAs are recognized by operating systems as standard PCI devices.

Two deployment options exist for Cisco fabric interconnect devices when connected to the Fibre Channel SAN:

● End-host mode (default)

● Switch mode

Devices with FC forwarding capabilities can be configured to operate in switch mode or N-Port Virtualization (NPV) mode. The NPV mode is often used on edge devices to reduce the number of domain IDs in the fabric. A device in NPV mode must be connected to a core switch with the N-Port ID Virtualization (NPIV) feature turned on. The edge NPV mode and core NPIV features are complementary technologies, as the similarity in their names reflects. When a Fibre Channel switch is operated in NPV mode, the Fibre Channel services are running remotely on the NPIV-enabled core switch rather than locally on the edge NPV-mode switch. The NPV device looks like an end host with many ports to the NPIV-enabled switch, rather than another switch in the Fibre Channel SAN fabric. For this reason, on Cisco fabric interconnects, NPV mode is called end-host mode.

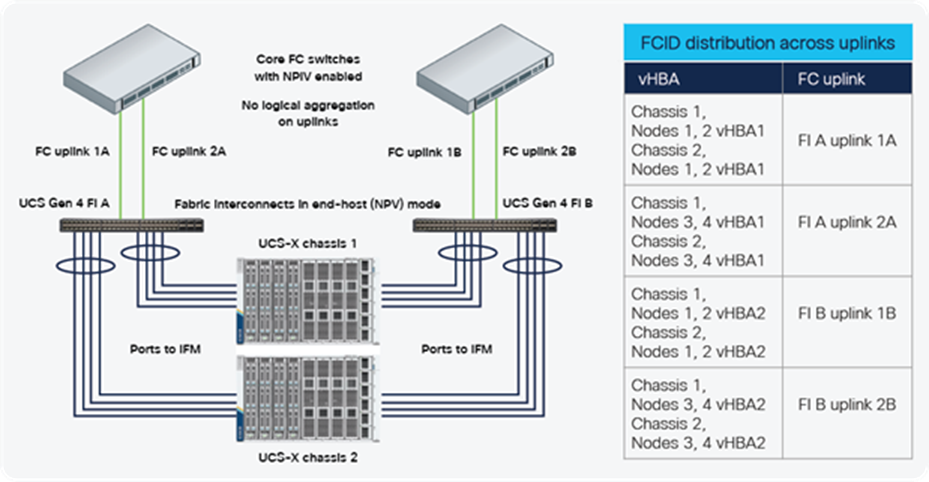

End-host mode (edge NPV) on Cisco fabric interconnects uses the N-Port Virtualization (NPV) technology to request multiple FCIDs from a single core switch port, instead of relying on the traditional one-to-one relationship between an N-port and its FCID. Technically, the FC uplink ports become NP-ports, and they operate as proxies for the multiple vHBA N-ports connected to the downlinks of the fabric interconnects. Connecting Cisco fabric interconnects to upstream SAN switches requires the N-Port ID Virtualization (NPIV) feature to be enabled on those FC switches. The NP-ports request the appropriate number of FCIDs from their peer NPIV-enabled FC switches.

Figure 4 offers a representative example and shows the Cisco UCS fabric interconnects in end-host mode connected to Cisco or third-party SAN switches. With uplinks being independently configured, the FCID distribution for the eight deployed compute nodes across the four available Fibre Channel uplinks happens on a combination of least-login and round-robin mechanisms. The implication is that even if the login number on each uplink is uniform, traffic load may be non-uniform. Depending on bandwidth requirements from the compute nodes, we might end up with some congested uplinks and some poorly utilized uplinks.

Figure 4. End-host mode connectivity and FCID distribution
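The effect described above, uniform login counts but non-uniform traffic, can be reproduced with a small Python sketch. It models only a least-login placement with ties broken in uplink order. The uplink names and the per-node bandwidth demands are hypothetical values chosen for illustration, not measurements.

```python
def assign_least_login(vhbas, uplinks):
    """Place each vHBA on the uplink that currently has the fewest logins.

    Ties go to the first uplink in list order, which gives a round-robin
    pattern when all uplinks start empty.
    """
    logins = {uplink: [] for uplink in uplinks}
    for vhba in vhbas:
        target = min(uplinks, key=lambda u: len(logins[u]))
        logins[target].append(vhba)
    return logins


# Hypothetical bandwidth demand per compute node, in Gbps.
demand_gbps = {"node1": 20, "node2": 2, "node3": 1, "node4": 3,
               "node5": 18, "node6": 1, "node7": 2, "node8": 1}
uplinks = ["fc1/1", "fc1/2", "fc1/3", "fc1/4"]

logins = assign_least_login(list(demand_gbps), uplinks)
load = {u: sum(demand_gbps[n] for n in nodes) for u, nodes in logins.items()}

for uplink in uplinks:
    print(uplink, logins[uplink], f"{load[uplink]} Gbps")
```

With these example values, every uplink carries exactly two logins, yet the first uplink is offered 38 Gbps while the others see 3 to 4 Gbps each. Balancing on login count alone says nothing about the traffic behind each login.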

To compensate for the uneven uplink utilization that might result from the default auto-assign traffic distribution settings, Cisco UCS offers the possibility to create pin groups and assign specific vHBAs to specific uplinks. When the traffic load for each vHBA is known, this manual approach to traffic distribution can lead to a somewhat uniform uplink utilization. However, this traffic engineering mechanism is administratively very heavy and not suitable for adoption at scale in a production setup. If one uplink fails, all vHBAs logged in to the SAN fabric through that uplink are logged out and must log in again through the remaining uplinks. Those vHBAs drop traffic for a couple of seconds, so fault tolerance cannot be claimed. Moreover, when the failed uplink is restored, the existing vHBAs continue to be assigned to the uplinks they moved to. No dynamic rebalancing happens, and the restored uplink stays unutilized. Where more bandwidth is needed on uplinks to satisfy the existing workloads, adding more uplinks will not provide the expected outcome, because there is no automatic and dynamic load balancing, leading to a waste of resources.
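The failure and restore behavior described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions: displaced vHBAs are placed on the surviving uplink with the fewest logins, and a restored uplink only becomes a candidate for future logins. The uplink and vHBA names are hypothetical.

```python
def fail_uplink(logins, active, failed):
    """Remove a failed uplink and re-login its vHBAs on the survivors.

    Returns the list of vHBAs that were logged out and had to log in again.
    """
    active.remove(failed)
    displaced = logins[failed]
    logins[failed] = []
    for vhba in displaced:
        target = min(active, key=lambda u: len(logins[u]))
        logins[target].append(vhba)
    return displaced


def restore_uplink(active, restored):
    """Bring an uplink back. Existing logins are deliberately not moved."""
    active.append(restored)


logins = {"fc1/1": ["vhba1", "vhba5"], "fc1/2": ["vhba2", "vhba6"],
          "fc1/3": ["vhba3", "vhba7"], "fc1/4": ["vhba4", "vhba8"]}
active = list(logins)

relogged = fail_uplink(logins, active, "fc1/2")
restore_uplink(active, "fc1/2")

print("re-login required for:", relogged)
print("logins after restore:", logins)
```

After the restore, `fc1/2` is active again but carries no logins, while two of the other uplinks each carry three. This is the unused-capacity situation the paragraph describes.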

Switch mode on Cisco fabric interconnect modules makes them behave as regular Fibre Channel switches. Due to interoperability constraints, they can only connect to Cisco MDS 9000 Series or Cisco Nexus switches. Cisco fabric interconnects will have a domain ID assigned, and the servers’ vHBAs will perform the fabric login (FLOGI) to the fabric interconnects, not to external switches. The FCID is allocated to vHBAs by the Cisco fabric interconnects.

The main merit of switch mode is that it allows direct connection of storage devices to the Cisco fabric interconnects. Any qualified storage array, hybrid or all-flash, can be directly connected to the Cisco fabric interconnects when operating in switch mode. Zoning on the fabric interconnects can be performed using the appropriate management tools within Intersight. For situations where storage arrays are not directly connected to UCS fabric interconnects, zoning can be performed on the Cisco MDS 9000 Series switch and then imported into Cisco UCS with the standard zone database propagation of FC technology. Table 1 illustrates the differences between end-host mode and switch mode.

Table 1. End-host mode compared to switch mode

| End-host mode | Switch mode |
| --- | --- |
| NPV on fabric interconnects and NPIV on upstream FC switches | No need for NPV/NPIV |
| Fabric interconnect uplinks present as “NP” or “TNP” ports | Fabric interconnect uplinks present as “E” or “TE” ports |
| FCID for vHBA provided by upstream FC switch | FCID for vHBA provided by fabric interconnects |
| No domain ID assigned to fabric interconnects | Domain ID assigned as per the FC fabric mechanism |
| Zoning done on the upstream FC switch | Zoning done on fabric interconnects or Cisco MDS |
| Direct attach of FC storage to fabric interconnects is not supported | Can directly attach qualified FC storage arrays to fabric interconnects |
| Interoperability with Cisco and third-party switches | Interoperates with Cisco switches only |

Advantages of using Cisco MDS 9000 Series with Cisco UCS

Many deployment options exist for Cisco UCS in combination with the Cisco MDS 9000 Series. In some cases, Cisco Nexus switching devices are used between Cisco computing and Cisco MDS 9000 Series networking. In other cases, Cisco computing is directly connected to Cisco MDS 9000 Series switches. According to the size of their deployment and their disaster recovery needs, customers can select the appropriate model in the Cisco MDS 9000 Series, from the cost-effective Cisco MDS 9148S fixed-configuration switch to the flexible Cisco MDS 9220i multiprotocol switch, or to the largest director, such as the Cisco MDS 9718 Multilayer Director.

Connecting Cisco fabric interconnects in end-host mode to Cisco MDS 9000 Series switches requires the N-Port ID Virtualization (NPIV) feature to be enabled on the Cisco MDS 9000 Series switches. To reduce administrative burden, and for an optimized support of modern NVMe/FC disk arrays, the NPIV feature is enabled by default globally in recent NX-OS releases.

Connecting Cisco UCS to the Cisco MDS 9000 Series provides multiple advantages. The main benefits of this powerful combination are:

● Advanced design options

● Easier management

● Better support

Advanced design options become possible with the combined solution, and this is one of the main benefits. End-to-end VSANs, VSAN trunking, and Inter-VSAN Routing (IVR) are additional benefits for those seeking multitenancy in the data center. Increased high availability and uniform uplink utilization are achieved with the help of the exclusive Fabric-port (F-port) PortChannel technology, avoiding host re-login in the event of a link failure. Provision for NVMe/FC is present within Cisco UCS and Cisco MDS 9000 Series, leading to better performance for application workloads.

Easier management is another major outcome. A common Cisco NX-OS operating system and common management tools, such as Cisco Nexus Dashboard Fabric Controller (NDFC, formerly Cisco Data Center Network Manager [DCNM]) and Intersight, create a uniform and homogeneous solution. IT administrators can use the same skills across computing, SAN, and LAN environments. Cisco Smart Zoning reduces administration overhead without sacrificing end-node control. Automated and multitenant hybrid clouds can be created from those building blocks.

Better support is also an evident advantage. Companies can eliminate concerns about feature compatibility and interoperability, while achieving simpler and faster troubleshooting and improving uptime with peace of mind. Responsibility assignment to multiple vendors is not required, because Cisco is the single point of contact to address performance issues.

The following sections discuss these benefits in more detail while preserving the logical order.

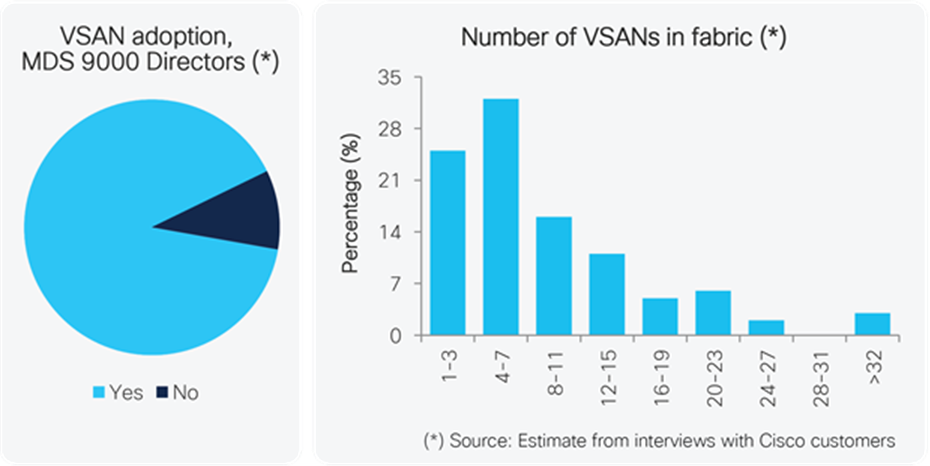

End-to-end VSANs (multitenancy), VSAN trunking, and inter-VSAN routing

VSAN technology provides the capability to partition a physical fabric into multiple logical fabrics. It is a virtualization technology that Cisco introduced in 2003 and that INCITS T11 standardized in 2004 under the name of Virtual Fabric Tagging (VFT). Any Cisco MDS 9000 Series switch port resides in one and only one VSAN. Despite being an optional feature, VSAN technology has experienced a massive adoption. According to data that Cisco collected from its install base, more than 90 percent of Cisco MDS 9000 Series customers use VSANs in their production environments (Figure 5). The average number of VSANs used is between four and seven, but some customers with large and complex deployments use more than 24 VSANs. The maximum tested and qualified number of VSANs for Cisco devices is 80 on directors and 32 on fabric switches. VSAN technology is free of charge, easy to configure, and extremely useful in a variety of use cases.

Figure 5. VSAN adoption and number of configured VSANs

To save ports when interconnecting Cisco MDS 9000 Series switches, each configured with more than one VSAN, organizations can transport multiple VSANs over the same link. This capability is described in the INCITS standard and made possible by tagging each frame with the relevant VSAN number. As a result, multiple frames from multiple VSANs can be carried over the same shared inter-switch link without being mixed up. This feature is called VSAN trunking and fully aligns with a similar feature in Ethernet environments.
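The tagging idea behind VSAN trunking can be illustrated with a short Python model. It is a conceptual sketch only: the `Frame` fields, the allowed-VSAN filter, and the function names are assumptions made for illustration and do not reproduce the on-the-wire header format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    vsan: int      # VSAN number carried as a tag with the frame
    src: str
    dst: str
    payload: str


def send_over_trunk(frames, allowed_vsans):
    """Carry frames from several VSANs over one shared link.

    Frames whose VSAN is not allowed on the trunk are not forwarded.
    """
    return [frame for frame in frames if frame.vsan in allowed_vsans]


def demultiplex(frames):
    """Use the tag to hand each frame back to its own logical fabric."""
    by_vsan = defaultdict(list)
    for frame in frames:
        by_vsan[frame.vsan].append(frame)
    return dict(by_vsan)


# Hypothetical traffic from three VSANs arriving at one trunking port.
frames = [
    Frame(10, "host-a", "array-a", "read"),
    Frame(20, "host-b", "array-b", "write"),
    Frame(10, "host-a", "array-a", "write"),
    Frame(30, "host-c", "array-c", "read"),
]

received = demultiplex(send_over_trunk(frames, allowed_vsans={10, 20}))
for vsan, vsan_frames in sorted(received.items()):
    print(vsan, [f.payload for f in vsan_frames])
```

Because every frame carries its VSAN number, the receiving side can separate the traffic again even though all frames shared one physical link.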

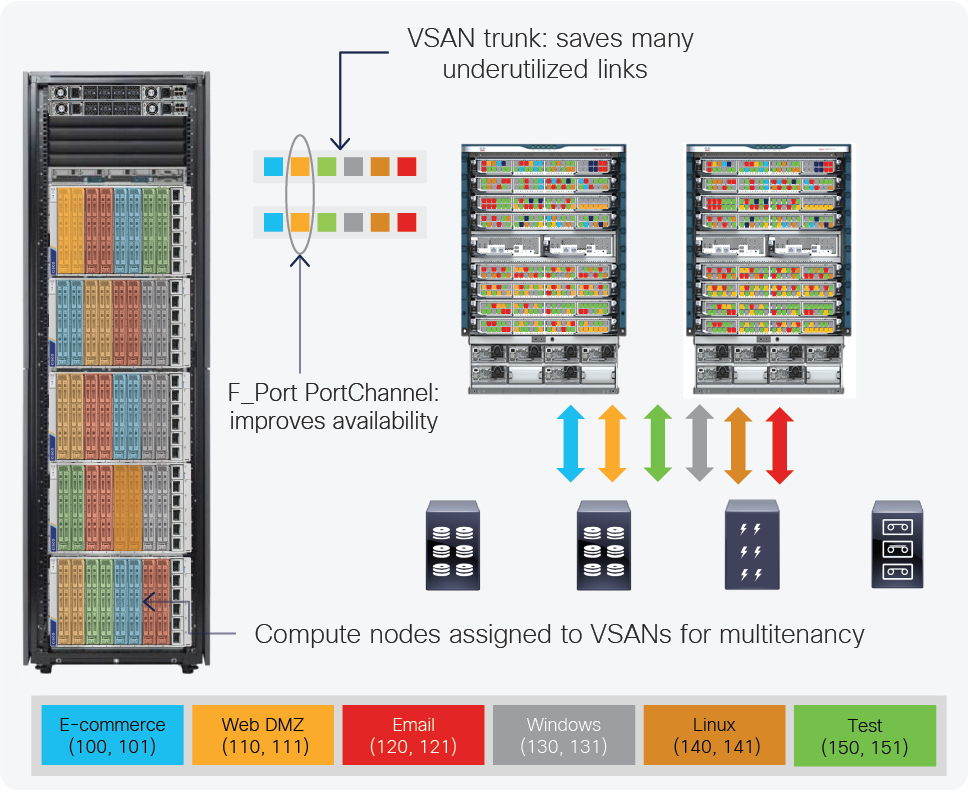

VSANs and VSAN trunking are also supported on the entire Cisco UCS portfolio when fabric interconnects are deployed. Therefore, organizations can implement an end-to-end VSAN solution. The use of VSAN trunking can save up to 85 percent of the uplink ports. VSANs and VSAN trunking can be used to enable multitenancy, which starts from within Cisco UCS and extends to the SAN fabric. Different UCS blades or UCS X-Series compute nodes can be assigned to different VSANs, and segmentation of storage traffic can be achieved with hardware enforcement. Figure 6 illustrates the advantages of VSANs and VSAN trunking when combined with Cisco UCS and MDS 9000 Series devices.

Figure 6. VSANs and VSAN trunking add value to Cisco MDS and Cisco UCS solutions
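The document does not state how the 85 percent figure was derived. One reading consistent with it, offered here only as an assumption, is a count of links: without trunking each VSAN needs its own uplink, while with trunking all VSANs share one. The sketch below ignores bandwidth and redundancy requirements, which in practice set a lower bound on the number of links.

```python
def uplink_savings(num_vsans, links_per_vsan=1, trunk_links=1):
    """Fraction of uplink ports saved by trunking.

    Assumption: without trunking, every VSAN needs `links_per_vsan`
    dedicated uplinks; with trunking, `trunk_links` shared uplinks
    carry all VSANs.
    """
    without_trunking = num_vsans * links_per_vsan
    return 1 - trunk_links / without_trunking


for num_vsans in (2, 4, 7):
    print(num_vsans, "VSANs:", f"{uplink_savings(num_vsans):.1%}")
# 2 VSANs: 50.0%
# 4 VSANs: 75.0%
# 7 VSANs: 85.7%
```

Under this simplified model, seven VSANs sharing a single trunk give a saving of about 85 percent, and the saving grows with the number of VSANs.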

The use of these advanced features is only possible when Cisco UCS is connected to a Cisco networking device in the Cisco Nexus 9000 Series or Cisco MDS 9000 Series. These features cannot be extended from end to end when third-party switches or third-party compute systems are used. When Cisco UCS is connected to third-party Fibre Channel switches, VSANs are not supported, nor is VSAN trunking, leading to suboptimal configurations and limited design options.

Under specific circumstances, a host device in one VSAN may need to talk to a target device in another VSAN. Normally, this behavior would be prevented by the logic of separation and confinement that is the basis of VSAN technology. However, by exception and for specific host-to-target communication, inter-VSAN communication can be enabled without merging those VSANs. This capability is achieved with Inter-VSAN Routing (IVR), which is implemented in hardware on the Cisco MDS 9000 Series switches. By connecting Cisco UCS to Cisco MDS 9000 Series switches, organizations can configure and exploit IVR across the complete solution.
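The relationship between VSAN confinement and the IVR exception can be modeled as a simple reachability check. The sketch below is a simplified model, not the switch implementation; the device names, VSAN numbers, and zone definitions are hypothetical.

```python
# Hypothetical fabric: device name -> VSAN it is attached to.
DEVICE_VSAN = {"host-a": 10, "host-b": 10, "array-x": 10, "array-y": 20}

# Regular zones are scoped to one VSAN; IVR zones list cross-VSAN exceptions.
ZONES = {10: [{"host-a", "array-x"}]}
IVR_ZONES = [{"host-b", "array-y"}]


def can_communicate(a: str, b: str) -> bool:
    """Return True if devices a and b are allowed to exchange traffic."""
    if DEVICE_VSAN[a] == DEVICE_VSAN[b]:
        # Same VSAN: ordinary zoning within that VSAN decides.
        return any({a, b} <= zone for zone in ZONES.get(DEVICE_VSAN[a], []))
    # Different VSANs: only an explicit IVR zone opens the path.
    return any({a, b} <= zone for zone in IVR_ZONES)


print(can_communicate("host-a", "array-x"))  # True: same VSAN, same zone
print(can_communicate("host-a", "array-y"))  # False: different VSANs, no IVR zone
print(can_communicate("host-b", "array-y"))  # True: explicit IVR exception
```

Only the listed host-to-target pair crosses the VSAN boundary; the two VSANs remain separate fabrics for everything else.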

High availability and uniform traffic load balancing with F-port PortChannels

Link aggregation is a general term describing the combination of multiple, individual physical links into a single, logical link with the goal of increasing bandwidth and link availability between switches. Cisco’s term for Fibre Channel link aggregation is SAN PortChannel. The PortChannel is a Cisco technology that bundles multiple Inter-Switch Links (ISLs) together into a single logical link. A recent internal survey shows that almost all customers of Cisco Fibre Channel and FCoE networking products use PortChannels in their production environments with satisfaction.

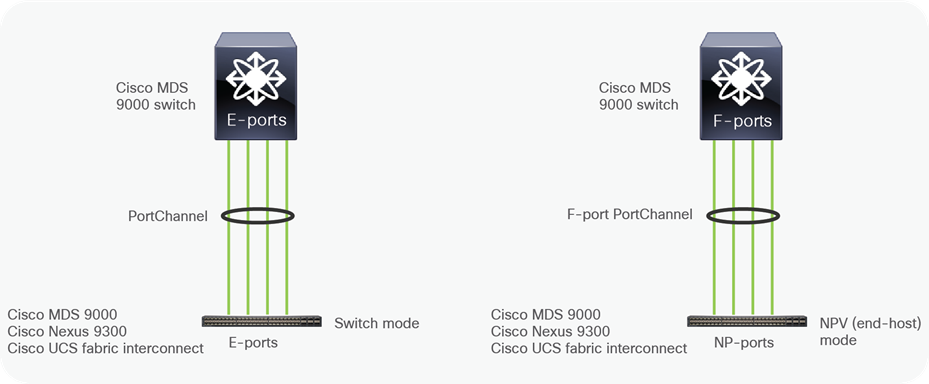

If PortChannels are groups of ISLs, F-port PortChannels are a similar technology for groups of F-ports connecting to the same switch in NPV mode (Figure 7).

PortChannels and F-port PortChannels

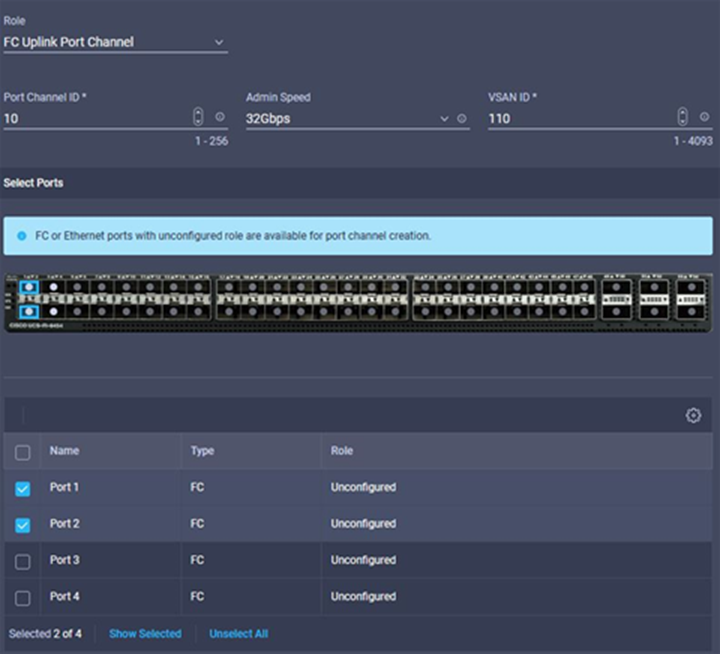

PortChannels and F-port PortChannels are also supported on Cisco UCS when the fabric interconnects are configured in switch mode or end-host mode, respectively. With these technologies, organizations can configure a logical aggregate of uplinks between Cisco fabric interconnects and Cisco MDS 9000 Series platforms, improving link bandwidth and link availability (Figure 8). The same is true for FCoE uplinks, by configuring vF-port PortChannels.

Configuring PortChannels from Intersight

These features are available only when Cisco UCS is connected to another Cisco networking device, such as a Cisco Nexus 9000 Series or Cisco MDS 9000 Series switch. These features are not supported when third-party switches are used. Consequently, when connecting Cisco UCS to third-party Fibre Channel switches, PortChannels and F-port PortChannels are not supported, limiting the traffic load balancing options or forcing the use of complex traffic engineering mechanisms such as pin groups. Table 2 lists some of the differences between individual links with pin groups and F-port PortChannels.

Table 2. Differences between individual links and F-port PortChannels

| Individual links | F-port PortChannels |
| --- | --- |
| No bandwidth aggregation | Bandwidth aggregation with multiple redundant links |
| No fault tolerance | High availability and fault tolerance |
| HBA re-login required during link failure | No re-login required when there is at least one surviving link in the PortChannel |
| No dynamic traffic load balancing across links | Uniform traffic load balancing across member links of a PortChannel |
| Cannot scale bandwidth of a single link | Additional links can be added to a PortChannel to increase its bandwidth |

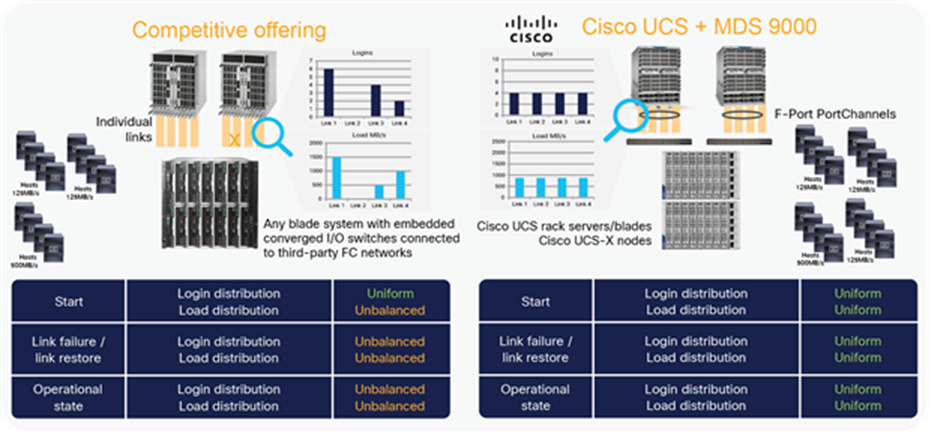

When PortChannels are used, individual vHBAs log in through the PortChannel itself, not through individual physical uplinks. Uplink utilization becomes higher and more uniform. In fact, PortChannels achieve optimal traffic distribution, with no wasted bandwidth, through a granular load-balancing mechanism based on the Source ID (SID), Destination ID (DID), and Originator Exchange ID (OXID) on a per-VSAN basis. PortChannels also help simplify operations because they do not require pre-planning: administrators can avoid the guesswork of identifying top talkers and the task of assigning them individually to specific uplinks with pin groups. The use of PortChannels has a major impact on the overall data center network design. They make it possible to achieve a uniform login and load distribution at initial provisioning, during and after uplink failures, and in the long-term operational state (Figure 9).
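Exchange-based load balancing can be illustrated with a small Python sketch. The hash actually used by the hardware is not described in this paper, so CRC32 stands in for it here purely as an assumption; the FC IDs, member names, and traffic pattern are likewise hypothetical.

```python
import zlib
from collections import Counter


def pick_member(sid: int, did: int, oxid: int, members: list[str]) -> str:
    """Map one exchange to a member link; the same exchange always maps alike."""
    # SID and DID are 24-bit FC IDs, OXID is a 16-bit exchange identifier.
    key = sid.to_bytes(3, "big") + did.to_bytes(3, "big") + oxid.to_bytes(2, "big")
    # CRC32 is an illustrative stand-in for the real (unspecified) hash.
    return members[zlib.crc32(key) % len(members)]


members = ["fc1/1", "fc1/2", "fc1/3", "fc1/4"]

# Hypothetical traffic: 8 initiators talking to one target, 500 exchanges each.
load = Counter(
    pick_member(0x010100 + host, 0x020200, oxid, members)
    for host in range(8)
    for oxid in range(500)
)
print(load)
```

Because the OXID is part of the key, a single busy initiator-target pair is spread over several member links instead of being pinned to one, which is what removes the need to identify top talkers in advance.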

Login and traffic load distribution: competitive offering vs. Cisco UCS + Cisco MDS 9000 Series

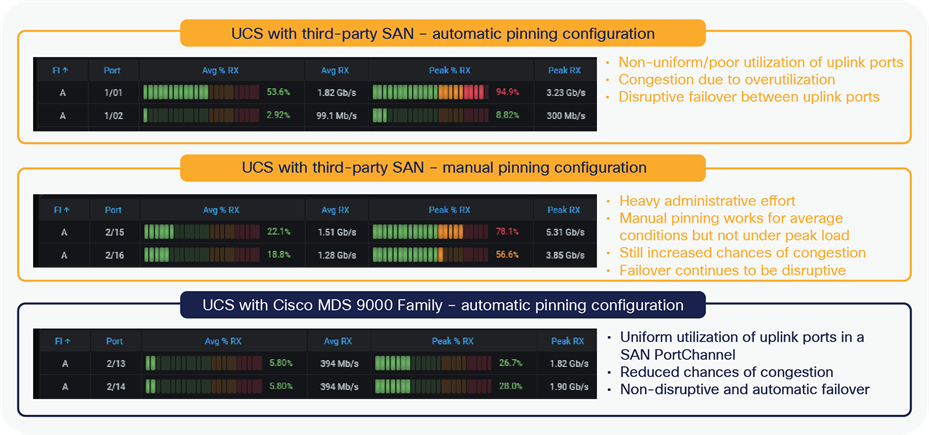

By using the UCS Traffic Monitoring tool, it is easy to see the great improvement that can be achieved by using PortChannels. Figure 10 shows the traffic distribution on UCS uplinks in three different scenarios. In the first one, third-party switches are used, and the default automatic load-balancing mechanism is adopted. In the second one, third-party switches are used, and some effort is put in place to manually distribute traffic load using pin groups. In the third one, Cisco MDS 9000 Series switches are used and F-port PortChannels configured so that an excellent uniform traffic distribution is automatically achieved.

Traffic distribution on fabric interconnect uplinks in three different scenarios

F-port PortChannels provide additional benefits over individual links and pin groups. If a single member link fails, the hosts using it remain logged into the fabric and no re-login is required. In the case of pin groups and individual links, the failure of an uplink would cause traffic to drop temporarily, and re-logins would occur, with some chances of unexpected problems. With F-port PortChannels, when the failed uplink is restored, automatic redistribution will occur for an optimal use of available resources. PortChannels are the only way to achieve fault tolerance.
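The difference in failure behavior can be expressed as a small model. This is a sketch of the logic described above, not of any Cisco software; the function name, vHBA names, and uplink names are hypothetical.

```python
def hosts_needing_relogin(pinning: dict[str, str], uplinks: list[str],
                          failed: str, port_channel: bool) -> list[str]:
    """Return the vHBAs that must log in to the fabric again after one uplink fails.

    pinning maps each vHBA to the uplink it is pinned to; it only determines
    the outcome when individual links (pin groups) are used.
    """
    surviving = [u for u in uplinks if u != failed]
    if port_channel:
        # vHBAs are logged in through the logical PortChannel interface, so
        # logins persist as long as at least one member link is still up.
        return [] if surviving else sorted(pinning)
    # Individual links: every vHBA pinned to the failed uplink is affected.
    return sorted(h for h, u in pinning.items() if u == failed)


uplinks = ["fc1/1", "fc1/2"]
pinning = {"vhba-a": "fc1/1", "vhba-b": "fc1/2", "vhba-c": "fc1/1"}

print(hosts_needing_relogin(pinning, uplinks, "fc1/1", port_channel=False))
# prints ['vhba-a', 'vhba-c']
print(hosts_needing_relogin(pinning, uplinks, "fc1/1", port_channel=True))
# prints []
```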

PortChannels also offer agile bandwidth scaling: if a PortChannel is overutilized, additional members can be added dynamically on UCS fabric interconnects, as shown in Figure 11. A similar action would be required on the Cisco MDS 9000 side. The hosts that are logged in can immediately use the additional bandwidth without any intervention.

Adding members to an existing PortChannel with Cisco Intersight

Performance boost with NVMe/FC

Non-Volatile Memory Express (NVMe) is an optimized, high-performance, scalable protocol designed from the ground up to work with current solid-state storage and ready for next-generation NVM technologies. This protocol is the natural replacement for the still prevalent, but now legacy, SCSI command set. The NVMe protocol can be used locally inside servers for local storage or extended across a fabric for shared storage arrays. NVMe/FC is defined by the INCITS T11 committee and fully supported on Cisco UCS, Cisco Nexus 9000, and Cisco MDS 9000 Series switches. Cisco NX-OS Release 8.1(1) or later is required on Cisco MDS 9000 Series switches, while Release 4.0(2) is the minimum for Cisco UCS. The Cisco UCS + Cisco MDS combination offers several unique advantages:

● Multiprotocol flexibility: Cisco UCS, Cisco MDS 9000 Series, and Cisco Nexus 9000 switches support NVMe and SCSI simultaneously over Fibre Channel or FCoE fabrics. Enterprises can continue to use their existing infrastructure and roll out new NVMe/FC-capable end devices in phases, sharing the same Fibre Channel or FCoE SAN.

● Seamless insertion: NVMe/FC support can be enabled on the entire solution through a nondisruptive upgrade of Cisco NX-OS Software and with recent Cisco UCS Manager or Intersight releases.

● Investment protection: No hardware changes are required. Support for NVMe/FC is possible on all 32G-capable Cisco MDS 9000 Series switches and Cisco UCS fourth-generation fabric interconnects and VIC adapters.

● Superior architecture: Large enterprises across the globe from various industry verticals trust Cisco MDS 9000 Series solutions for their mission-critical data centers due to their superior architecture. Redundant components, non-oversubscribed and nonblocking architecture, automatic isolation of failure domains, and exceptional capability to detect and automatically recover from SAN congestion are a few of the top attributes that make these switches an ideal choice for high-demand storage infrastructures that support NVMe-capable workloads. Cisco UCS is also an excellent choice for NVMe/FC initiators, thanks to optimized network drivers, wide operating-system compatibility, and support for the Fabric Device Management Interface (FDMI). FDMI enables the management of devices such as HBAs through the fabric and complements the mechanisms already provided by Fibre Channel name server and management server functions. More importantly, from an operational management perspective, FDMI provides a wealth of information to the fabric regarding the attached devices.

● Integrated storage traffic visibility and analytics: The 32-Gbps products in the Cisco MDS 9000 Series offer Cisco SAN Analytics and Telemetry Streaming. This solution provides line-rate, native, on-switch storage traffic visibility to help you to make data-driven decisions to increase the utilization of your infrastructure and remove bottlenecks. Cisco SAN Analytics and Telemetry Streaming is based on a programmable network processing unit that makes it capable of both SCSI and NVMe traffic inspection.
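The minimum software releases for NVMe/FC quoted at the start of this section lend themselves to a simple pre-upgrade check. The sketch below assumes release strings follow the `major.minor(maintenance)` pattern, optionally with a trailing letter, as in `8.1(1)`; other release string formats would need additional handling. The function names and the `MINIMUM` table keys are hypothetical.

```python
import re

# Minimum releases for NVMe/FC as stated in this section.
MINIMUM = {"mds": "8.1(1)", "ucs": "4.0(2)"}

# Assumed format: major.minor(maintenance) with an optional letter suffix.
_RELEASE = re.compile(r"^(\d+)\.(\d+)\((\d+)([a-z]*)\)$")


def parse_release(text: str) -> tuple[int, int, int, str]:
    """Split a release such as '8.4(2c)' into comparable parts."""
    match = _RELEASE.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognized release format: {text!r}")
    major, minor, maint, patch = match.groups()
    return int(major), int(minor), int(maint), patch


def supports_nvme_fc(platform: str, release: str) -> bool:
    """True if the release is at or above the stated minimum for the platform."""
    return parse_release(release) >= parse_release(MINIMUM[platform])


print(supports_nvme_fc("mds", "8.4(2c)"))  # True
print(supports_nvme_fc("ucs", "3.2(3)"))   # False
```

A check like this covers only the software release; the hardware requirements listed under investment protection still apply.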

Common Cisco NX-OS operating system and management tools

Cisco fabric interconnects, Cisco Nexus 9000 Series and Cisco MDS 9000 Series products all support NX-OS as the foundational operating system, making feature commonality easy to achieve. The same design concepts apply to all Cisco data center products discussed in this document, and feature commonality allows organizations to get the best from the overall architecture. The use of the same operating system and hence the same structure for the Command-Line Interface (CLI) allows administrators to more easily design scripts to collect information from the devices. Scripts prepared for Cisco UCS can be easily adapted to be used on Cisco MDS 9000 Series switches by using similar CLI commands.

Common management tools are available for all these products, specifically Cisco Nexus Dashboard Fabric Controller (NDFC), and Cisco Intersight, the innovative cloud operations platform pioneering management as a service. Since the Cisco MDS 9000 Series and Cisco UCS use a common Operating System (NX-OS) and common management tools, server and storage administrators can use their skills across computing, SAN and LAN environments. These capabilities help administrators implement, maintain, manage, and operate these environments without the need to learn new tools.

Cisco UCS visibility using Nexus Dashboard Fabric Controller

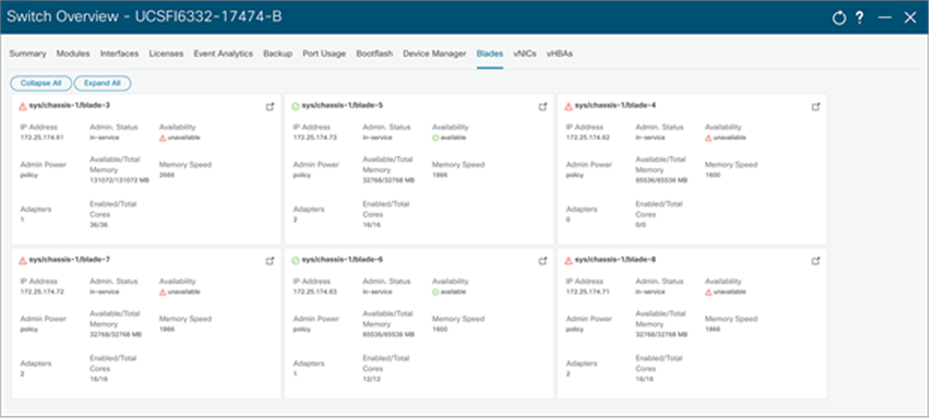

Cisco NDFC acts as a single dashboard for all networking elements in a Cisco data center architecture. Even Cisco UCS fabric interconnects are detected and shown on Cisco NDFC topology maps, and statistics about Cisco UCS uplinks and server vNICs and vHBAs can be collected and viewed. Cisco NDFC can also expose the assignment of service profiles to UCS blades and inform the administrator of their operational status (Figure 12).

Nexus Dashboard Fabric Controller view of Cisco UCS service profiles

With the additional capability to discover and report about disk arrays in the fabric and the use of virtual machine managers, Cisco NDFC can effectively provide an end-to-end view of all communication within the data center. For customers adopting a combination of bare-metal and virtualized servers, the capability of Cisco NDFC to provide visibility into the network, servers, and storage resources puts this tool in high demand, helping provide full control of application Service-Level Agreements (SLAs) and metrics beyond simple host and virtual machine monitoring.

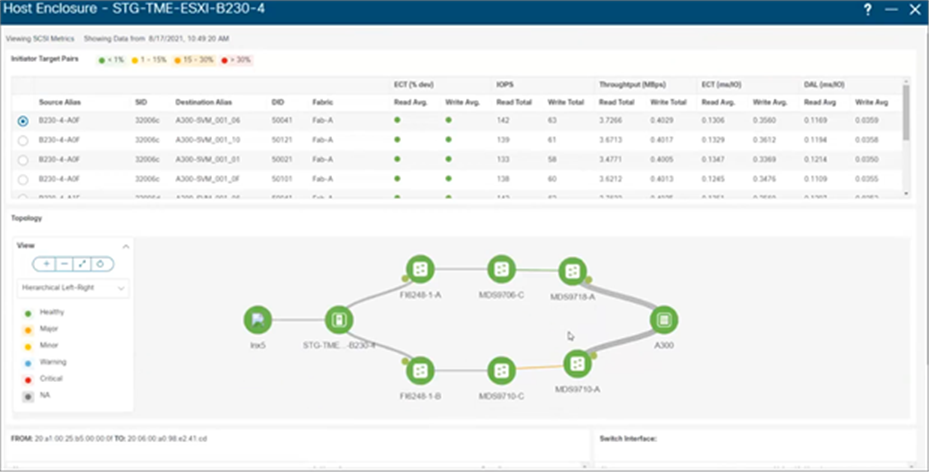

NDFC can show a wealth of data points from Cisco UCS, such as inventory, service profile assignments, and vHBA and vNIC traffic. When used with SAN Insights, NDFC also shows enhanced metrics at the vHBA level, such as Exchange Completion Time (ECT), I/O operations per second (IOPS), and Data Access Latency (DAL) (Figure 13).

NDFC SAN Insights view of the end-to-end topology and SCSI metrics for Cisco UCS servers

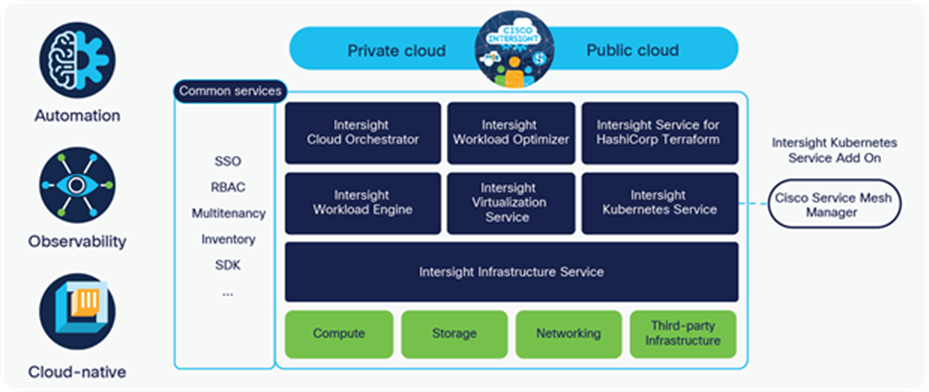

Cisco Intersight is a cloud-operations platform that can be augmented by optional, modular capabilities of advanced infrastructure, workload optimization, orchestration, and Kubernetes services. This Software-as-a-Service (SaaS) management platform is hosted in the cloud (Figure 14); this way, organizations can use it without installing and maintaining any software on premises. For customers who want better control of data exported by their on-premises infrastructure or are challenged by lack of continuous connectivity to the cloud, Cisco Intersight can also be adopted in the form of a connected virtual appliance or a private virtual appliance.

Cisco Intersight infrastructure services include the deployment, monitoring, management, and support of your physical and virtual infrastructure. You can connect to Intersight from anywhere and manage infrastructure through a browser or mobile app. Cisco Intersight’s secure connector provides lifecycle management of Cisco UCS servers, while Cisco Nexus 9000 and Cisco MDS 9000 devices can be currently discovered by using the Intersight virtual assist appliance. The same approach can be used for third-party devices that are located on premises, such as storage arrays or virtual machine managers.

Cisco Intersight software-as-a-service management platform

Intersight services streamline daily activities by automating many manual tasks. The recommendation engine provides predictive analytics, security advisories, hardware compatibility alerts, and additional functions. The integration with Cisco Technical Assistance Center (TAC) enables advanced support and proactive resolution of issues in distributed computing environments from the core to the edge.

Simplified orchestration for a consistent cloud-like experience across your hybrid environment

The infrastructure and operations teams of any organization are challenged by the complexity of modern solutions, where cloud and on-premises resources need to be managed efficiently, balancing risk and accelerating the delivery of IT services. Cisco Intersight Cloud Orchestrator (ICO) is a powerful automation tool that enables IT operations teams to move at the speed of the business, standardize the deployment process while reducing risk across all domains, boost productivity with a selection of validated blueprints, and achieve a consistent cloud-like experience for users. With Cisco ICO, organizations can do things faster and accommodate the needs of development teams, line-of-business stakeholders, and other IT teams focused on a specific technology domain.

With one-off solutions leveraging product programmability options, organizations can possibly solve domain-specific needs. But to scale and deliver at the speed of the business, IT teams need a more thoughtful and systematic approach to automation. With Cisco ICO, they can easily design and build workflows that match the need for speed, but do not compromise on control. Cisco ICO coordinates all available resources and provisions them within minutes. Resource provisioning occurs through device adapters that are available for numerous Cisco products but also for some third-party products, such as disk arrays. For products lacking native support, a generic HTTP web API can be used, and a custom library of tasks must be built. Overall, Cisco ICO reduces the amount of time needed to deploy new applications, makes IT more responsive, and reduces risk through automation.

Both Cisco UCS and the Cisco MDS 9000 Series switches are supported by Cisco ICO, based on a library of multi-domain tasks, curated through Cisco solutions, that can be extended with custom user-developed tasks. Using the library of tasks, users can create workflows, quickly and easily, without being coding experts. This enables quick and easy automation and deployment of any infrastructure resource, from servers to VMs and network and storage arrays, taking away some of the complexity of operating your hybrid IT environment. Risk mitigation is achieved by enforcing policies using rules for what can be orchestrated and who can access workflows and tasks.

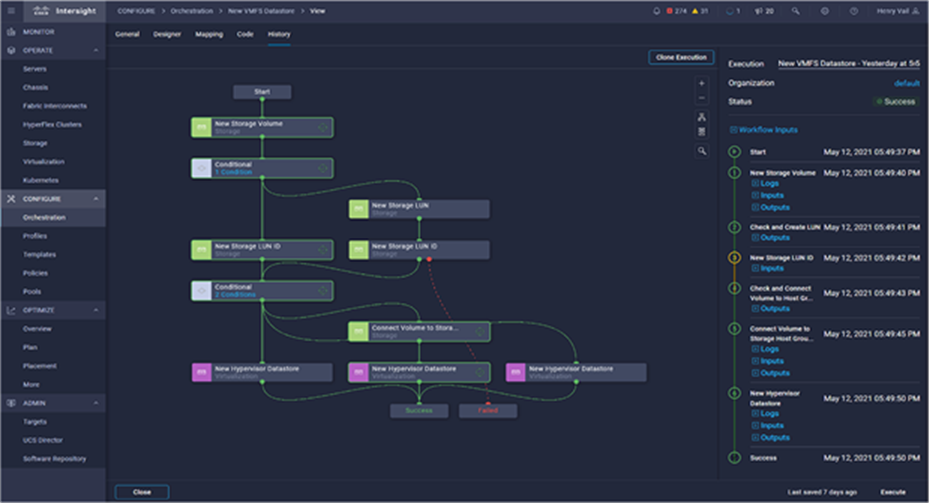

The Cisco ICO workflow designer provides low/no-code workflow creation with a modern, drag-and-drop user experience and flow-control support. The workflow designer includes policy-based, built-in tasks for Cisco UCS, virtualization, and other Cisco devices. A Software Development Kit (SDK) enables Cisco technology partners to build their own ICO tasks to develop custom solutions. Rollback capabilities allow for selectively undoing a workflow’s tasks in the event of failure, or quickly deprovisioning infrastructure. Simply put, Cisco Intersight Cloud Orchestrator enables organizations to truly evolve their automation strategy to provide a consistent experience across on-premises resources and public clouds (Figure 15).
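The rollback behavior described above follows a common orchestration pattern: run tasks in order and, on failure, undo the completed ones in reverse. The sketch below illustrates that pattern in plain Python. It is not the Intersight API or the ICO SDK; the `Task` type, the `run_workflow` function, and the task names are hypothetical.

```python
from typing import Callable, NamedTuple


class Task(NamedTuple):
    """One workflow step with its forward action and its undo action."""
    name: str
    run: Callable[[], None]
    undo: Callable[[], None]


def run_workflow(tasks: list[Task]) -> bool:
    """Run tasks in order; on failure, undo completed tasks in reverse order."""
    done: list[Task] = []
    for task in tasks:
        try:
            task.run()
        except Exception as exc:
            print(f"{task.name} failed ({exc}); rolling back")
            for finished in reversed(done):
                finished.undo()
            return False
        done.append(task)
    return True


log: list[str] = []


def fail() -> None:
    """Simulate a task that cannot complete."""
    raise RuntimeError("array unreachable")


workflow = [
    Task("create-vsan", lambda: log.append("vsan+"), lambda: log.append("vsan-")),
    Task("create-zone", lambda: log.append("zone+"), lambda: log.append("zone-")),
    Task("map-lun", fail, lambda: log.append("lun-")),
]
ok = run_workflow(workflow)
print(ok, log)
# prints False ['vsan+', 'zone+', 'zone-', 'vsan-']
```

Undoing in reverse order matters because later tasks usually depend on earlier ones: the zone is removed before the VSAN that contains it.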

The low/no code workflow designer of Cisco ICO

Zoning is the security mechanism embedded in Fibre Channel fabrics. It allows communication among initiators (hosts) and targets (disk arrays) by selectively making them part of the same zone. Devices can talk to each other only when they are part of the same zone. On Cisco MDS 9000 Series switches, zoning is configured on a per-VSAN basis. The creation of large zones with multiple members has always been possible. However, to improve fabric security and stability and reduce possible side effects of too many Registered State-Change Notification (RSCN) messages, zones should be kept as small as possible. The Single Initiator and Single Target (SIST) approach was designed to help meet this need. The SIST approach also reduces the number of Access Control List (ACL) entries created in the switch TCAM table, so that a higher number of zones can be configured. The downside of this approach is the additional administrative time. For any new host joining the fabric, one or more zones need to be created, depending on the number of target ports to which the host needs to talk. Large enterprises normally have some IT staff members devoted just to zoning, and up to 40 additions or changes may be required every single day. Zoning maintenance clearly requires a big effort.

To address this challenge, Cisco introduced Smart Zoning. With Smart Zoning, customers can simplify their zoning activities, reduce administration overhead, and preserve the same fundamental secure mode offered by one-to-one zoning, including the ACL count. Smart Zoning creates large zones in which Multiple Initiators and Multiple Targets (MIMTs) coexist. The smart capability of Smart Zoning is the implicit and automatic pruning of undesired communications so that initiators cannot talk to each other, nor can targets. Only initiator-to-target communication is allowed, offering essentially the same implementation as one-to-one zoning. Table 3 presents the advantages of Smart Zoning over traditional zoning methods.
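The pruning performed by Smart Zoning can be illustrated with a short sketch that lists which device pairs may talk within one zone. This is a conceptual model only; the function name, role labels, and device names are hypothetical.

```python
from itertools import combinations


def allowed_pairs(members: dict[str, str], smart: bool) -> set[frozenset[str]]:
    """Return the device pairs allowed to talk within a single zone.

    members maps a device name to its role, "init" or "target".
    """
    pairs = set()
    for a, b in combinations(sorted(members), 2):
        if smart and members[a] == members[b]:
            continue  # pruned: initiator-to-initiator or target-to-target
        pairs.add(frozenset((a, b)))
    return pairs


zone = {"host1": "init", "host2": "init", "array1": "target", "array2": "target"}
print(len(allowed_pairs(zone, smart=False)))  # 6 pairs in a plain large zone
print(len(allowed_pairs(zone, smart=True)))   # 4 initiator-to-target pairs
```

With Smart Zoning enabled, the large zone permits exactly the pairs that four separate one-to-one zones would permit.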

Table 3. Advantages of Smart Zoning over traditional zoning

| Operation | Today: one-to-one zoning | | | Today: many-to-many zoning | | | Smart Zoning | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| | Zones | Commands | ACL entries | Zones | Commands | ACL entries | Zones | Commands | ACL entries |
| Create zones | 32 | 96 | 64 | 1 | 13 | 132 | 1 | 13 | 64 |
| Add an initiator | +4 | +12 | +8 | | +1 | +24 | | +1 | +8 |
| Add a target | +8 | +24 | +16 | | +1 | +24 | | +1 | +16 |
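The figures in Table 3 are consistent with a fabric of 8 initiators and 4 targets, although the table does not state those numbers; that starting point is inferred here. Under that assumption, and assuming one command to create a zone plus one command per member, the following sketch reproduces every row of the table.

```python
def one_to_one(initiators: int, targets: int) -> tuple[int, int, int]:
    """(zones, commands, ACL entries) with one zone per initiator-target pair."""
    zones = initiators * targets
    # Assumed: 1 command to create each zone + 1 per member (2 members).
    # Each zone permits one pair in both directions: 2 ACL entries.
    return zones, 3 * zones, 2 * zones


def many_to_many(initiators: int, targets: int) -> tuple[int, int, int]:
    """(zones, commands, ACL entries) for one large zone without pruning."""
    members = initiators + targets
    # Every member is allowed to reach every other member.
    return 1, 1 + members, members * (members - 1)


def smart_zoning(initiators: int, targets: int) -> tuple[int, int, int]:
    """(zones, commands, ACL entries) for one Smart Zone."""
    members = initiators + targets
    # Only initiator-to-target pairs are programmed, in both directions.
    return 1, 1 + members, 2 * initiators * targets


# Baseline (8 initiators, 4 targets), then one more initiator, then one more target.
for model in (one_to_one, many_to_many, smart_zoning):
    print(model.__name__, model(8, 4), model(9, 4), model(8, 5))
# prints:
# one_to_one (32, 96, 64) (36, 108, 72) (40, 120, 80)
# many_to_many (1, 13, 132) (1, 14, 156) (1, 14, 156)
# smart_zoning (1, 13, 64) (1, 14, 72) (1, 14, 80)
```

Subtracting the baseline from the other two results gives the increments shown in the "Add an initiator" and "Add a target" rows: Smart Zoning keeps the command count of many-to-many zoning and the ACL count of one-to-one zoning.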

In a typical deployment scenario, Cisco UCS is configured in end-host mode, and the NPIV feature is enabled on the Cisco MDS 9000 Series switch. Thanks to Smart Zoning, IT administrators can easily configure large zones with 16 or 32 members (with a maximum limit of 250 members). Typically, you would associate one Smart Zone with a specific application cluster or group of virtual servers. This new capability for Fibre Channel zoning has sometimes been dubbed application awareness. For customers who prefer a graphical tool, Smart Zoning can be configured from Cisco NDFC.

Interoperability and feature compatibility

Any time that devices are interconnected, interoperability needs to be verified. Verification is particularly important in the storage environment. Every vendor publishes its own interoperability matrices (also known as hardware and software compatibility lists). Cisco UCS is no different in this respect. Of course, full interoperability is much easier to achieve with products from the same vendor because they come from the same engineering organization and are readily available for internal testing. That is why the Cisco interoperability matrix clearly states that Cisco UCS and Cisco MDS 9000 Series products are always interoperable, provided they meet some specified minimum software release requirements.

Cisco UCS can also be connected to third-party Fibre Channel switches, and it has been proven to work in numerous production installations; however, customers need to be more careful about the software release in use.

Interoperability is tested, and therefore guaranteed, only for specific software releases. Moreover, customers should consult not only the Cisco interoperability matrix but also the matrices published by their storage vendors. Because of the time required to conduct all testing, some delay may occur between the introduction of a new software release for Cisco UCS and the official listing on the interoperability matrix.

Cisco UCS in end-host mode and switch mode is supported with all models and versions of Cisco MDS 9000 Series and Cisco Nexus 9000 Series switches. However, Cisco UCS can interoperate with third-party Fibre Channel switches only when it is configured in end-host mode. The lack of interoperability with third-party switches applies to FCoE as well.

The Cisco UCS hardware compatibility tool and the Cisco MDS 9000 Series interoperability matrix can be found at the following links:

● Cisco UCS Hardware and Software Interoperability Matrix

● Cisco MDS and Nexus Interoperability Matrix

Note that even when interoperability is possible and certified, not all features may be available. For example, as already explained, the F-port PortChannels, VSANs, and VSAN trunking features are not available when third-party Fibre Channel switches are used because these switches do not support them. Even when third-party servers are connected to third-party Fibre Channel switches, features such as F-port PortChannels, VSANs, and VSAN trunking cannot be used. This limitation in design options is one important reason making a data center built on all Cisco technology the best choice for customers.

Single vendor and solution level support

When multiple vendors contribute to a complete solution, identifying the misbehaving product can be a slow and challenging task, adding complexity to any troubleshooting activity. Customers have tried to solve this problem by reducing the number of vendors they use or by adopting pre-validated converged systems for which a cooperative multivendor support agreement is in place. Ideally, customers want a single point of contact for any problem they may experience. This is exactly what they get when they invest in Cisco UCS for computing and the Cisco MDS 9000 Series for Fibre Channel networking. No other vendor offers both computing and Fibre Channel networking. Also, Cisco post-sale support services are well known to be among the best. They have won several industry awards for effectiveness, time to resolution, and competence. Customers trust the Cisco Technical Assistance Center (TAC) for any support they may require, from information requests to troubleshooting and remediation. Cisco TAC operates 24 hours a day, every day, throughout the world. Customers adopting both Cisco UCS and Cisco MDS 9000 Series platforms experience faster time to resolution.

Cisco TAC staff members have expertise on products such as Cisco UCS, Cisco MDS 9000 Series, and Cisco Nexus Series devices, and more. With the partner support ecosystem that covers converged infrastructures, Cisco TAC can also hand over the support case to storage array vendors, simplifying the support process with coordinated and effective troubleshooting. Cisco TAC can also go beyond this and provide support for products from technology partners with the Cisco Solution Support Service for Critical Infrastructure. You can connect with data center experts to manage problems across a broad range of more than 80 technology groups at Cisco and our partners. When you entrust your solution to Cisco, we stand accountable for problem resolution within your data center. And we stay with you to coordinate all necessary actions and keep your case open until your problem is resolved. For more information about our solution partners, see Cisco Data Center Solution Support for Critical Infrastructure.

Cisco Data Center Solution Support Service for Critical Infrastructure helps you resolve data center problems more quickly by adding a solution-level perspective to your device-level service contracts. This will reduce risk through Cisco end-to-end support. Benefits include:

● Faster problem resolution: We accelerate problem resolution by taking ownership of your problem.

● Reduced costs: Our staff has the specialized knowledge needed to assist you when devices from different vendors do not work together properly. With less need for this specialized knowledge in house, you can staff your organization more cost effectively.

● Flexibility: Solution support gives you the flexibility to solve problems your way. If you call us first, we will promote resolution, including working with third-party products. If you start with a product support team, you can still call us, and we’ll take over from there.

A data center solution that makes use of both Cisco MDS 9000 Series and Cisco UCS platforms brings together best-in-class products for better performance, greater reliability, easier management, and easier maintenance. A solution using the Cisco MDS 9000 Series with Cisco UCS provides the following advantages over alternative solutions:

● Multiprotocol flexibility allows organizations to deploy Fibre Channel and FCIP on a single chassis and more easily benefit from the advantages of both technologies. Support for SCSI, NVMe, and FICON protocols is also available.

● VSANs can logically segregate storage traffic and create multitenancy, and they are supported in the Fibre Channel fabric and in Cisco UCS.

● VSAN trunking provides options to allow multiple VSAN traffic over the same links, reducing the need for multiple links while segregating traffic.

● F-port PortChannels provide link aggregation, fault tolerance, and uniform traffic load balancing.

● NVMe/FC can boost application performance by reducing latency and minimizing CPU usage for data transfer activities.

● Common OS and management tools ease network implementation, maintenance, and troubleshooting by relying on the same skill set across SAN, LAN, and computing environments.

● Cisco UCS visibility from Nexus Dashboard Fabric Controller

● Cisco Intersight integration

● Cisco Intersight Cloud Orchestrator can be used to automate different technology domains with an easy-to-use and low-code workflow designer, enabling IT operations teams to move at the speed of the business.

● Smart Zoning reduces the need to implement and maintain large zone databases and eases management and implementation tasks.

● Assured interoperability and feature compatibility

● Organizations can interact with a single vendor when troubleshooting problems across computing and networking environments.

● A variety of support models are available across data center solutions to efficiently involve and coordinate partners and solve problems as needed.

The Cisco MDS 9000 Series provides superior performance, high availability, and intelligent storage networking for Cisco UCS environments in small, mid-size, and large organizations. The combination of Cisco UCS servers and Cisco MDS 9000 Series storage networking devices provides abundant benefits when organizations decide to invest in this direction.

● Cisco Unified Computing Servers

● Cisco UCS X-Series Modular System

● Cisco Data Center Validated Design Guides