Table of Contents

About Cisco Validated Design (CVD) Program

FlexPod with Microsoft Hyper-V Windows Server 2012 Deployment Guide

Nexus 5548UP Deployment Procedure

Initial Cisco Nexus 5548UP Switch Configuration

Enable Appropriate Cisco Nexus Features

Add Individual Port Descriptions for Troubleshooting

Create Necessary Port Channels

Add Port Channel Configurations

Configure Virtual Port Channels

Link into Existing Network Infrastructure

NetApp FAS3240A Deployment Procedure - Part 1

Complete the Configuration Worksheet

Assign Controller Disk Ownership and Initialize Storage

Upgrade the Service Processor on Each Node to the Latest Release

Storage Controller Active-Active Configuration

Joining a Windows Domain (optional)

Install Remaining Required Licenses and Enable MultiStore

Cisco Unified Computing System Deployment Procedure

Perform Initial Setup of the Cisco UCS 6248 Fabric Interconnects

Add a Block of IP Addresses for KVM Access

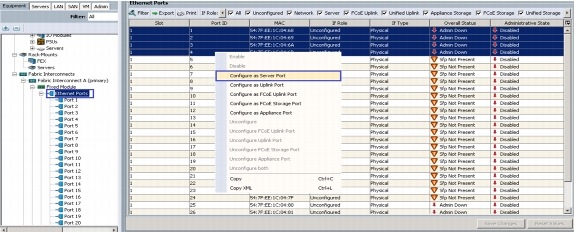

Enable Server and Uplink Ports

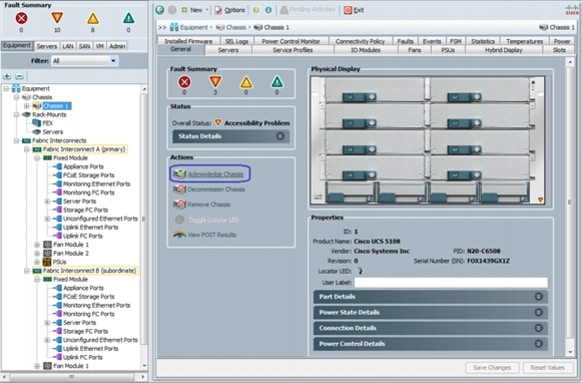

Acknowledge the Cisco UCS Chassis

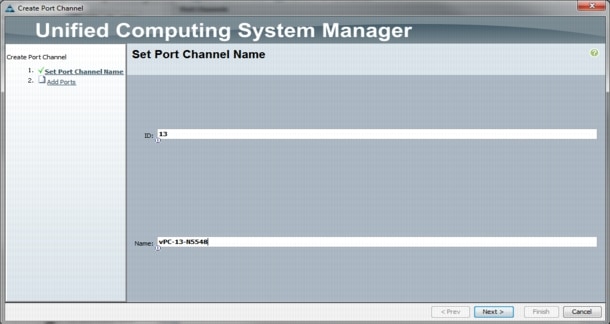

Create Uplink Port Channels to the Cisco Nexus 5548 Switches

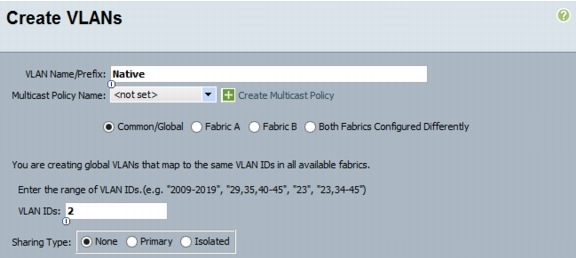

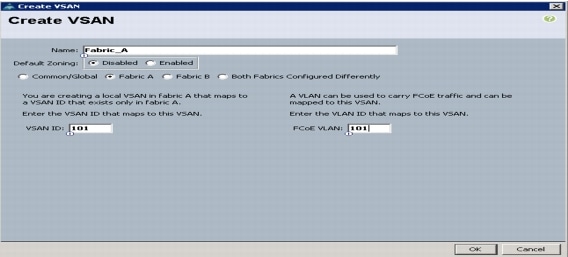

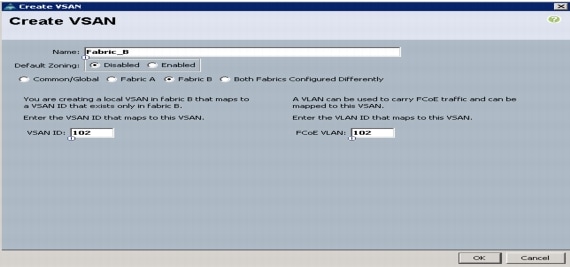

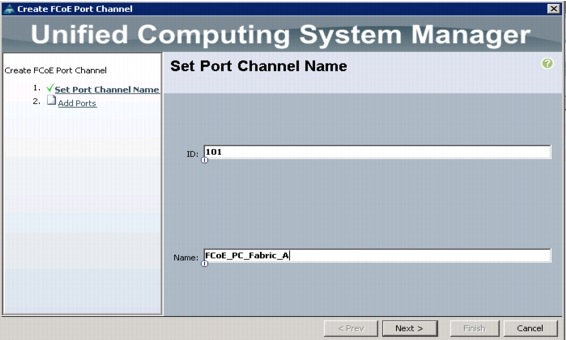

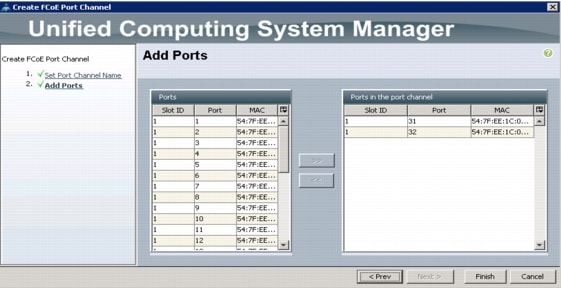

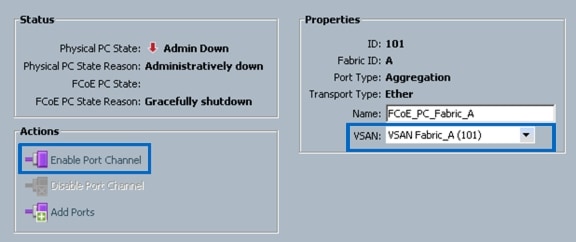

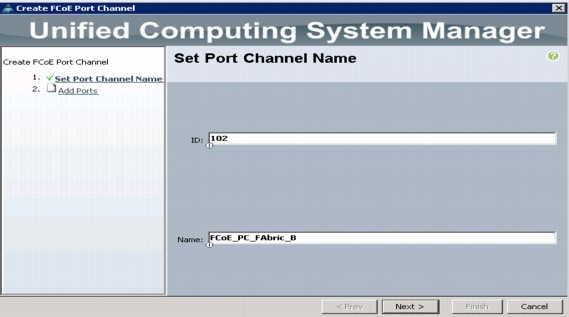

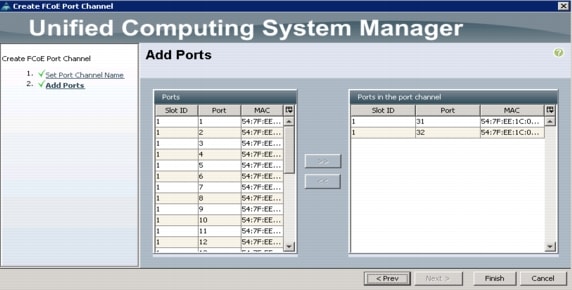

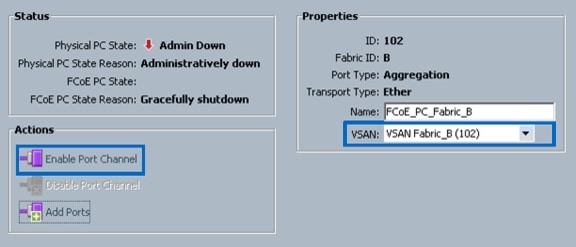

Create VSANs and FCoE Port Channels

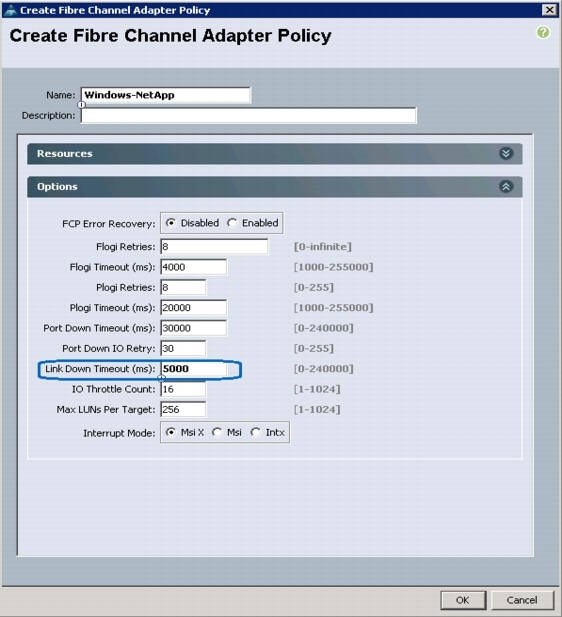

Create an FC Adapter Policy for NetApp Storage Arrays

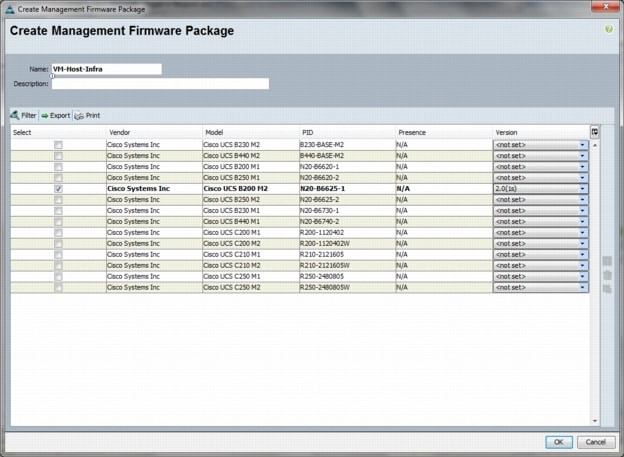

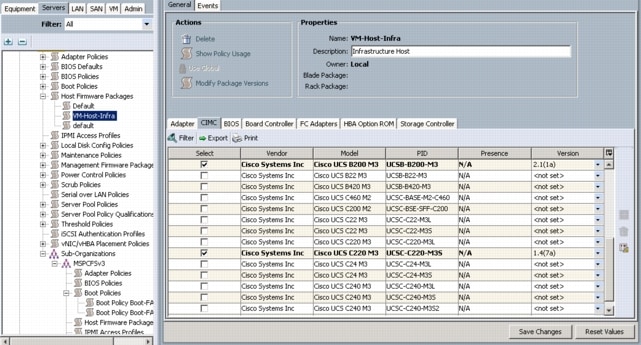

Create a Firmware Management Package

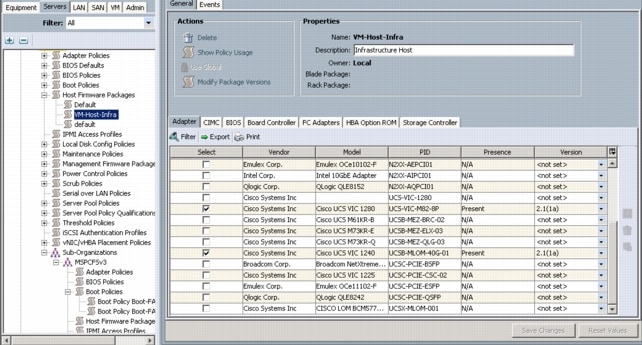

Create Firmware Package Policy

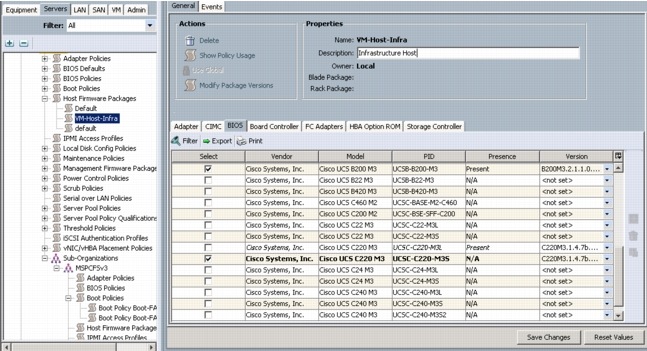

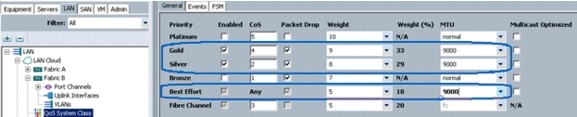

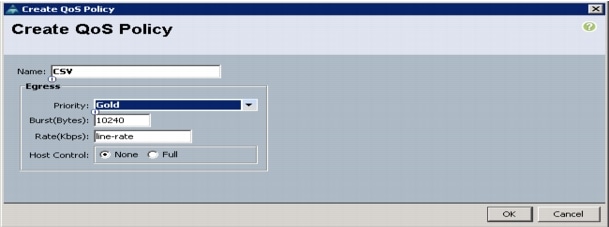

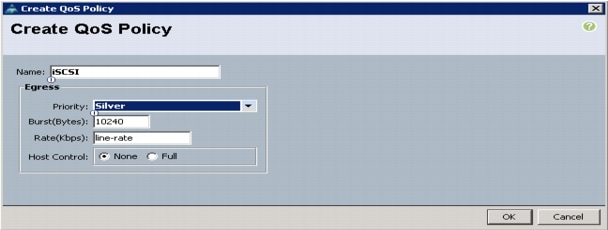

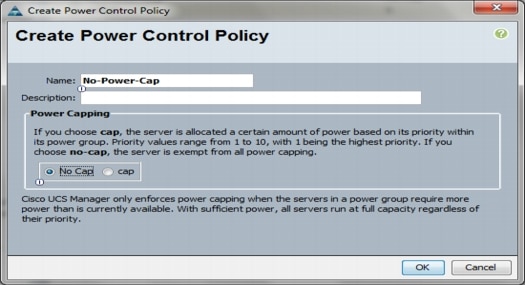

Set Jumbo Frames and Enable Quality of Service in Cisco UCS Fabric

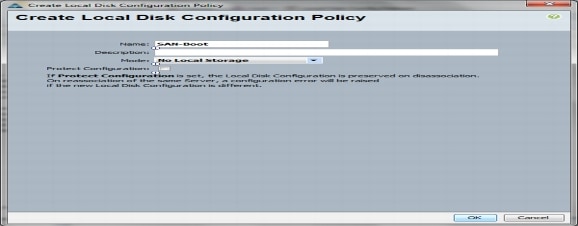

Create a Local Disk Configuration Policy

Create a Server Pool Qualification Policy

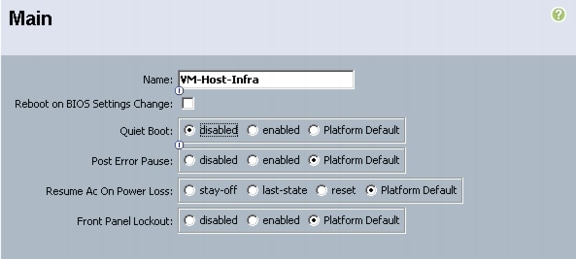

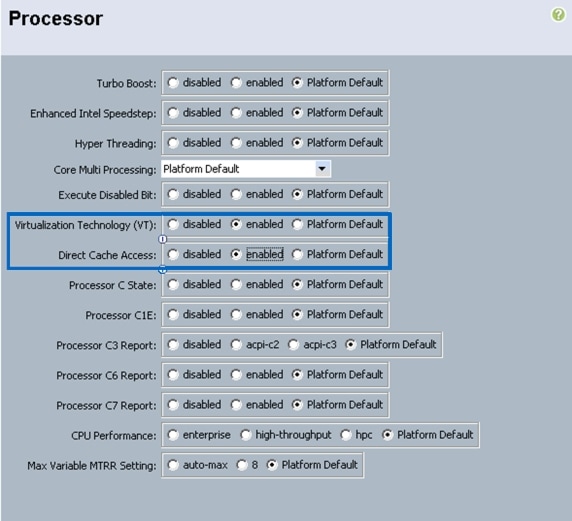

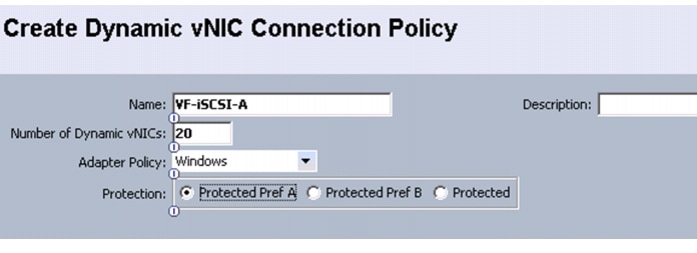

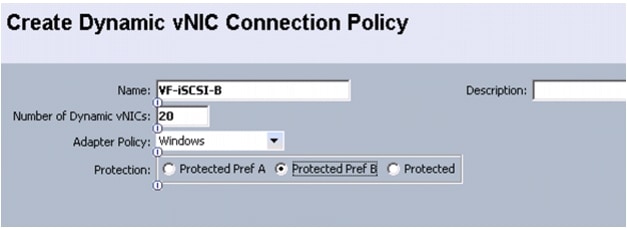

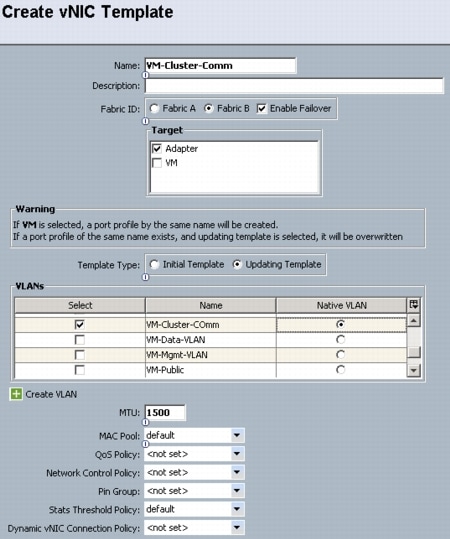

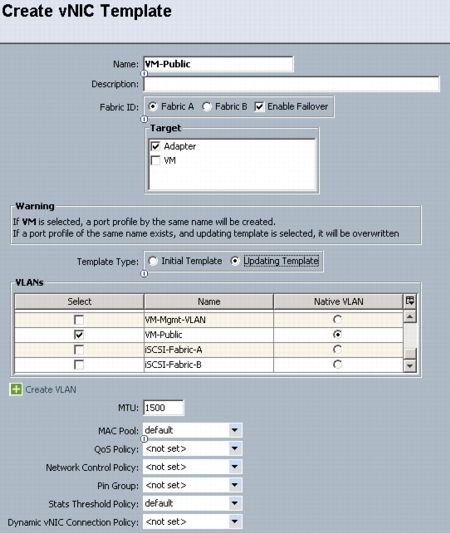

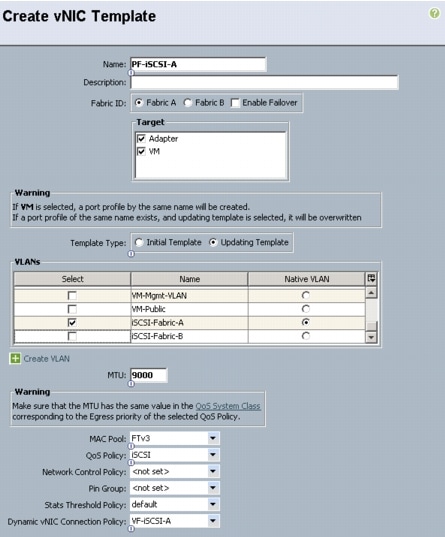

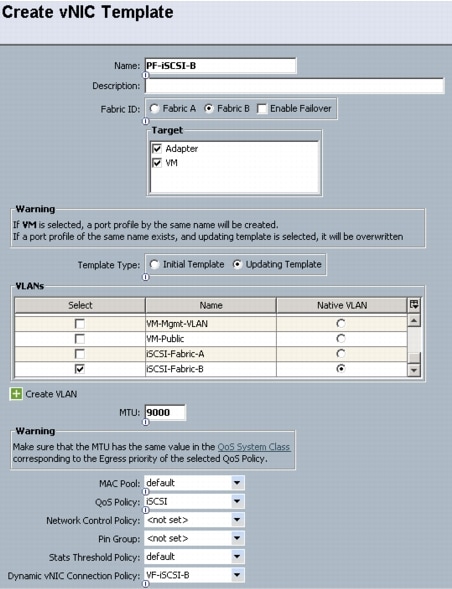

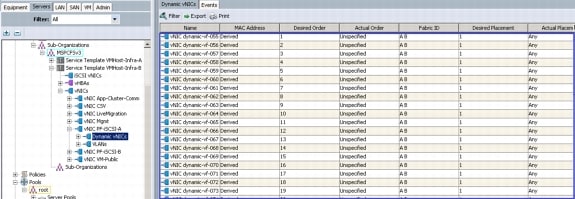

Create Dynamic vNIC Connection Policy for VM-FEX (SR-IOV)

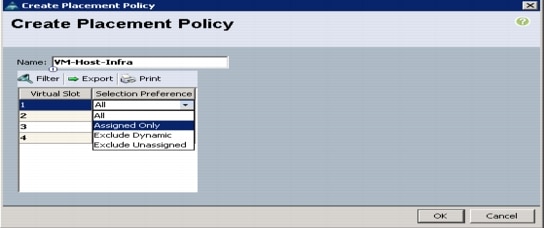

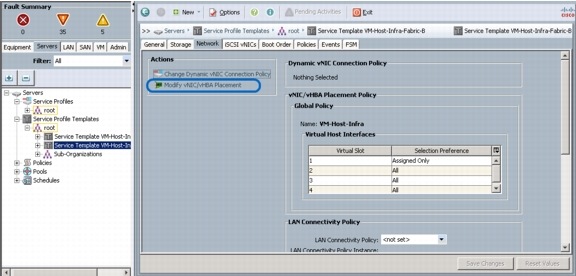

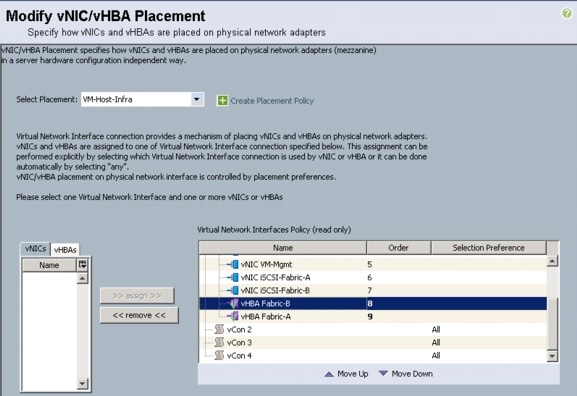

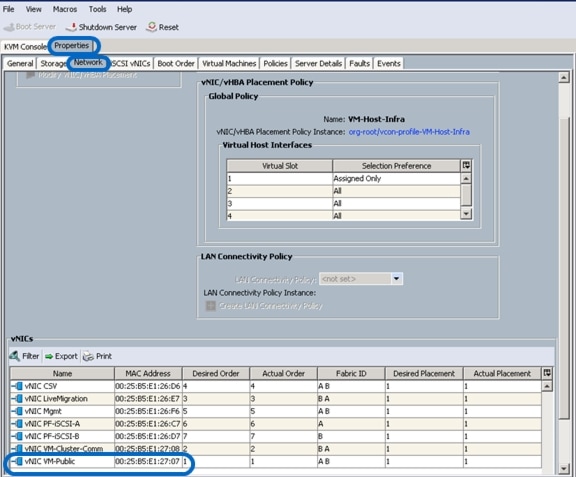

Create vNIC/HBA Placement Policy for Virtual Machine Infrastructure Hosts

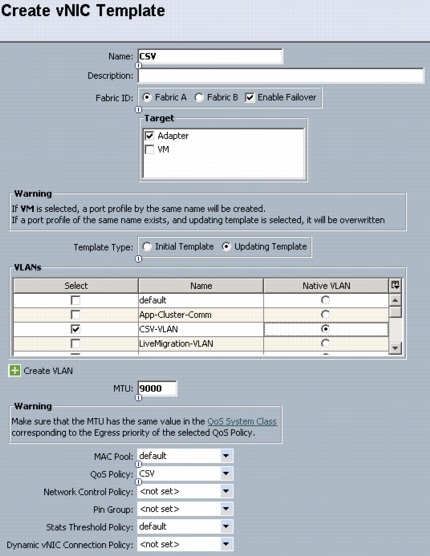

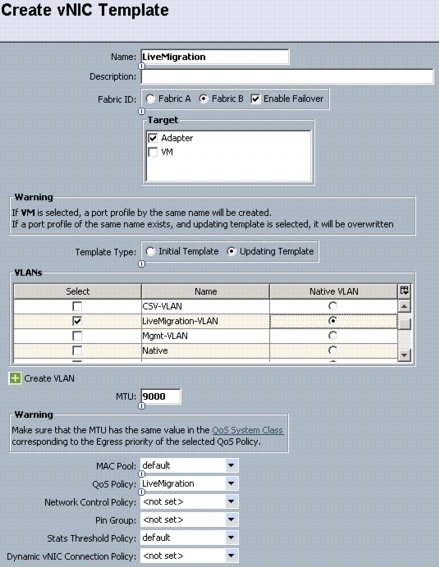

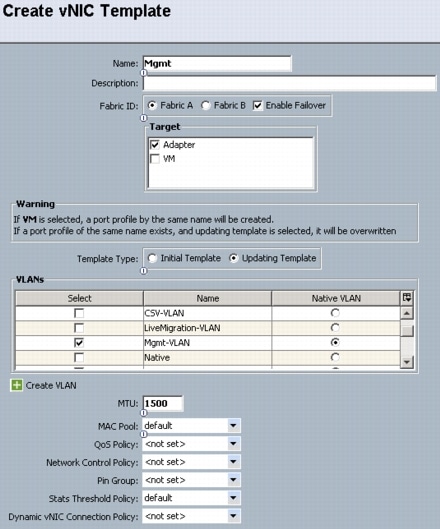

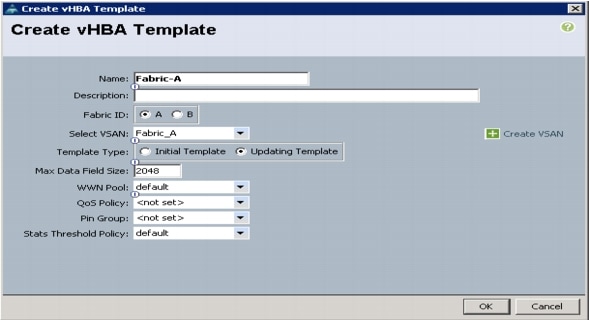

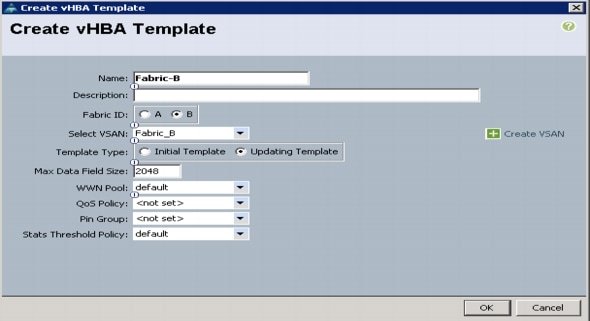

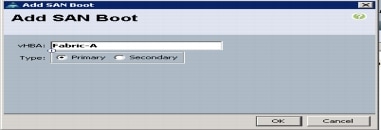

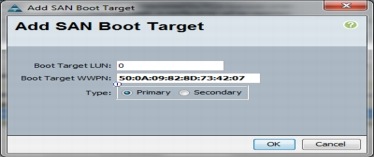

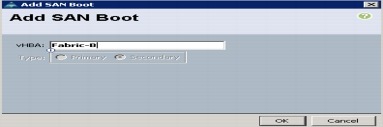

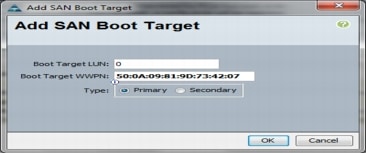

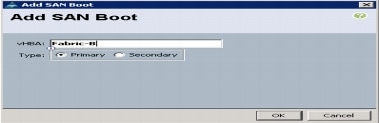

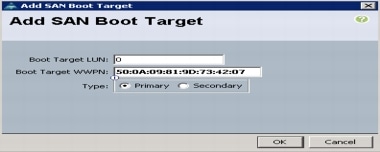

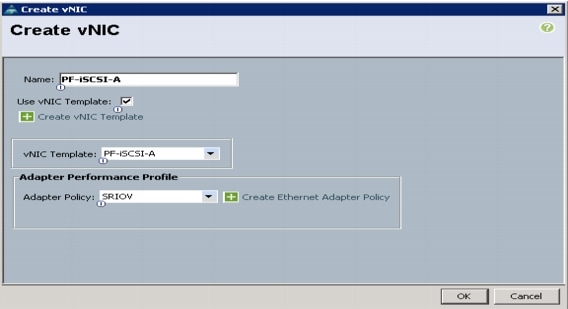

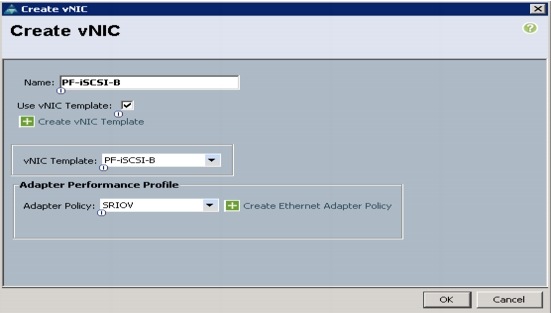

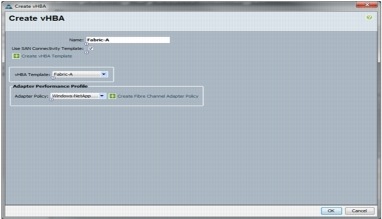

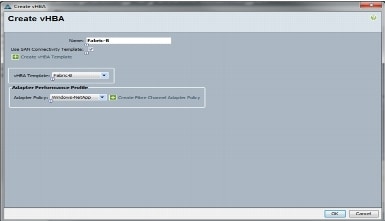

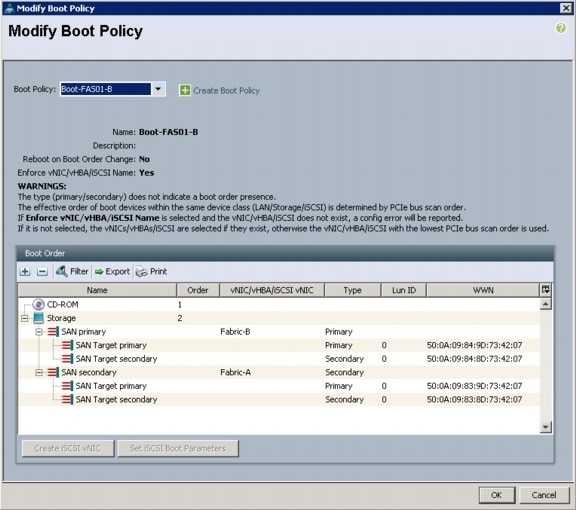

Create vHBA Templates for Fabric A and B

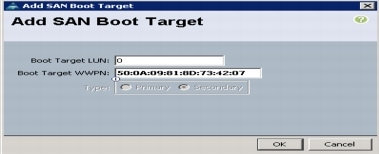

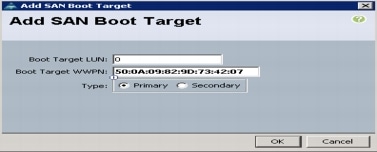

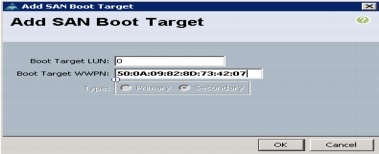

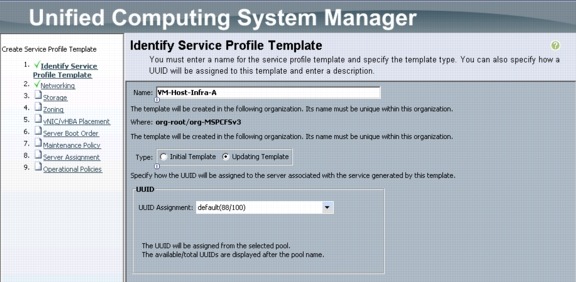

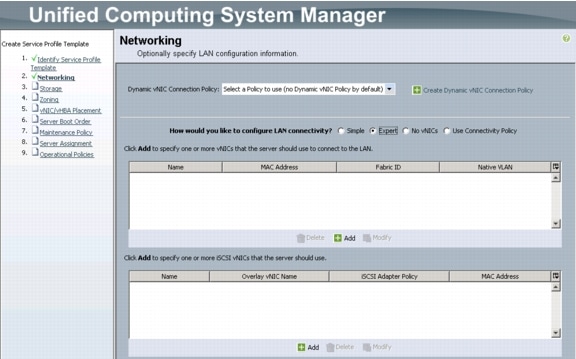

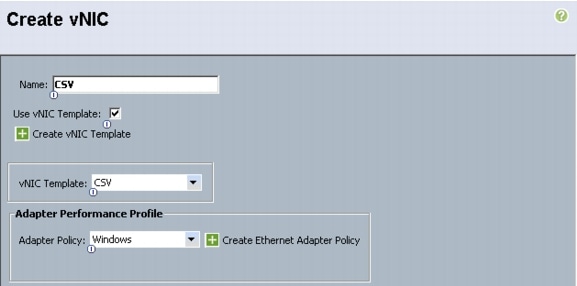

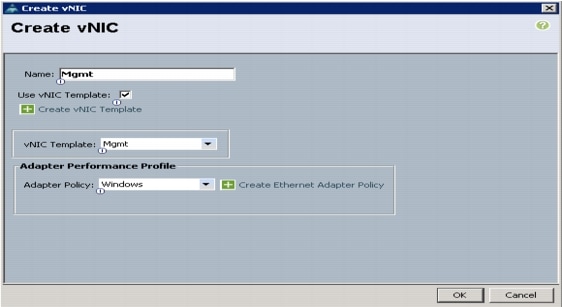

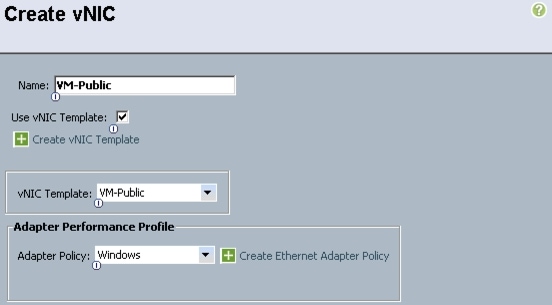

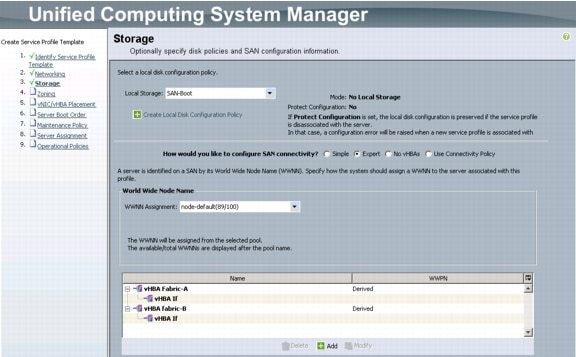

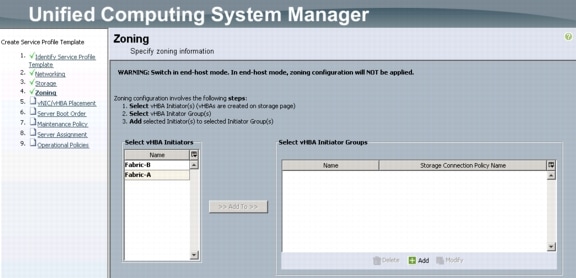

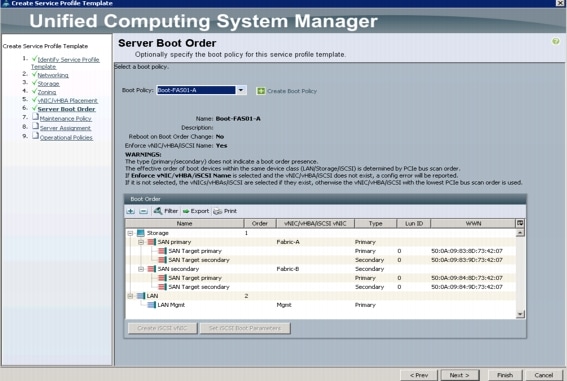

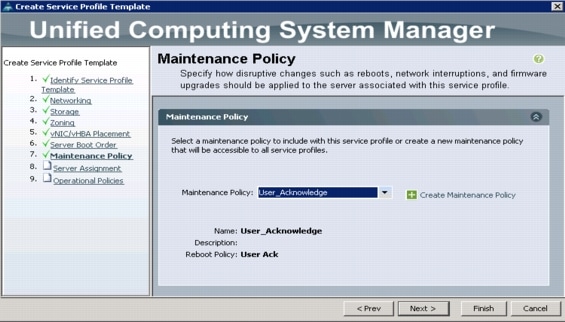

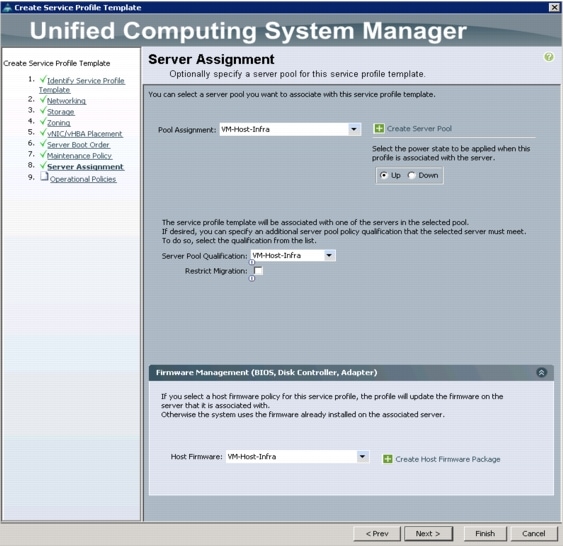

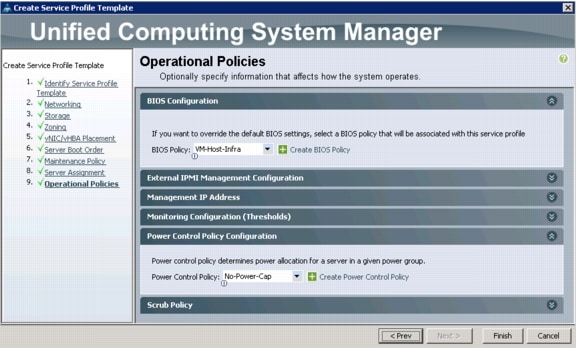

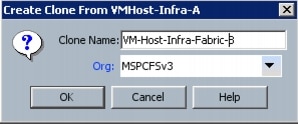

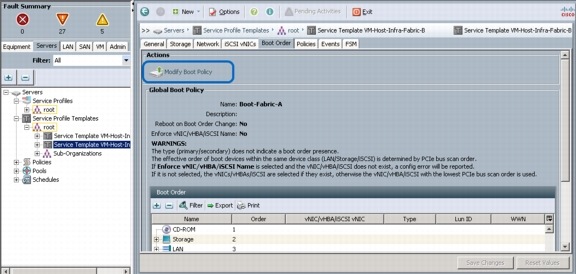

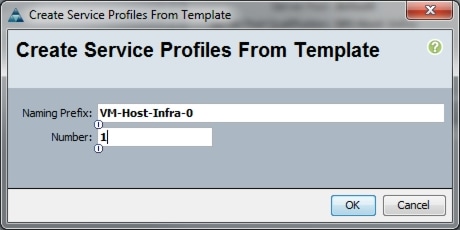

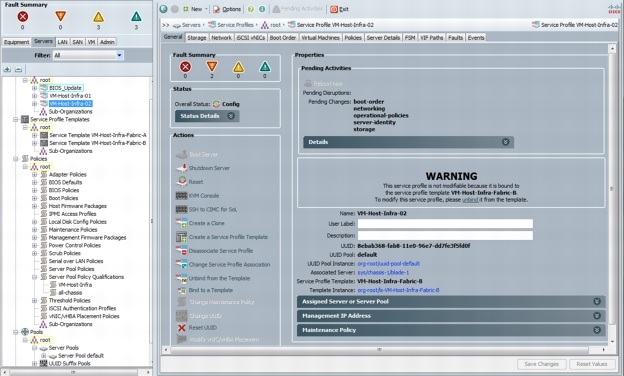

Create Service Profile Templates

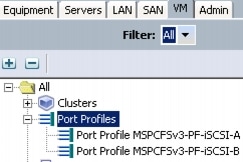

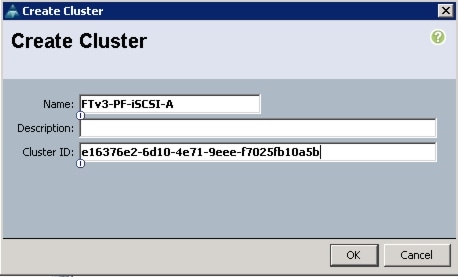

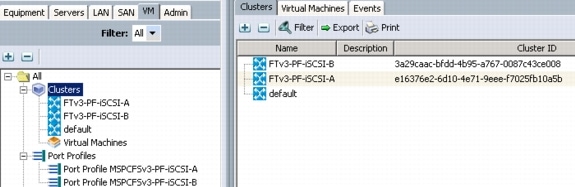

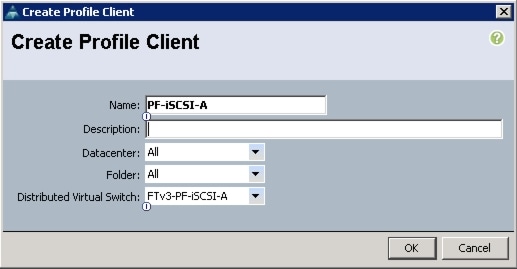

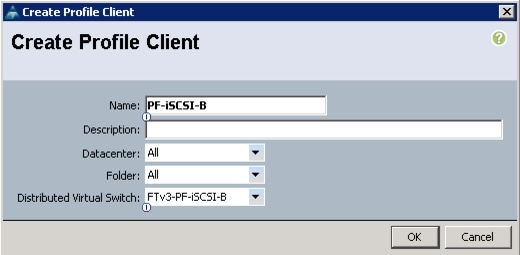

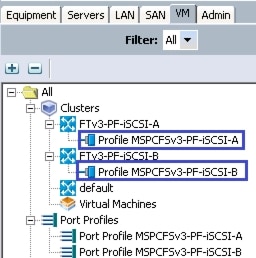

Create VM-FEX Port Profiles and Virtual Switch Clusters

Add More Server Blades to the FlexPod Unit

Create Zones for Each Service Profile (Part 1)

NetApp FAS3240A Deployment Procedure - Part 2

Microsoft Windows Server 2012 Hyper-V Deployment Procedure

Set Up the Windows Server 2012 Installation

Install Windows Roles and Features

Configure Windows Networking for FlexPod

Create Zones for Each Service Profile (Part 2)

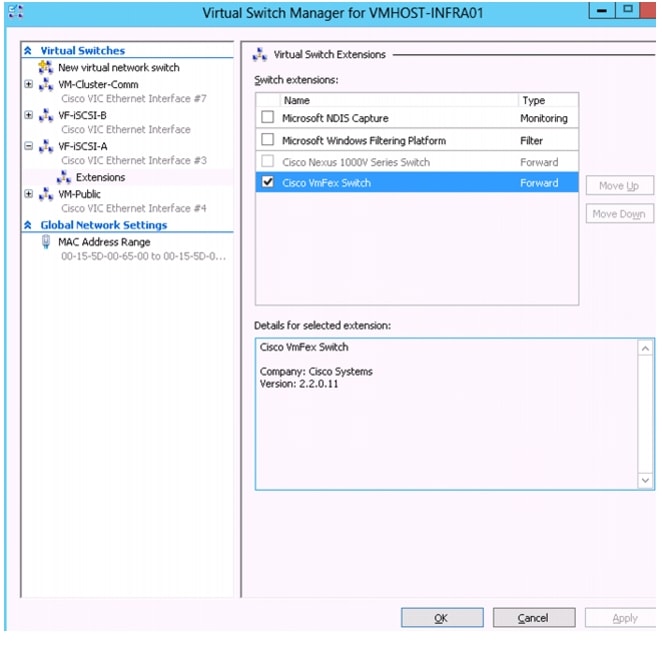

Install Cisco Virtual Switch Forwarding Extensions for Hyper-V

Create Hyper-V Virtual Network Switches

Domain Controller Virtual Machines

Install NetApp SnapManager for Hyper-V

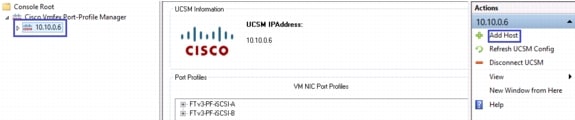

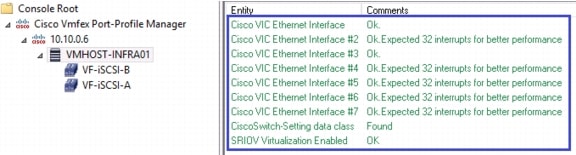

Install VM-FEX Port Profile Management Utilities in Hyper-V

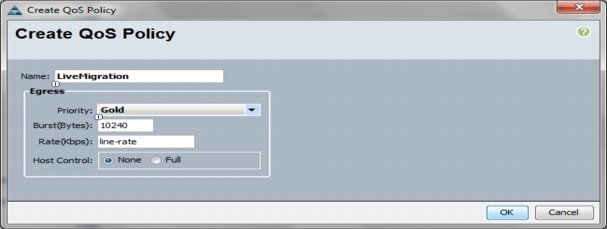

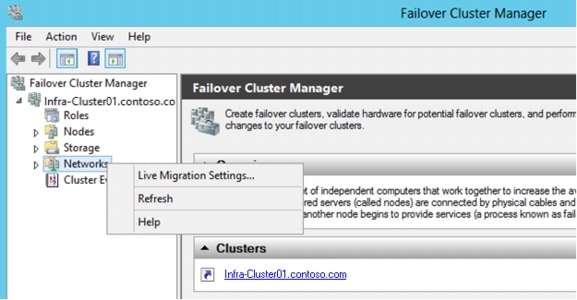

Configure Live Migration Network

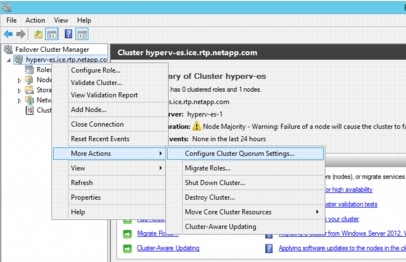

Change the Cluster to Use a Quorum Disk

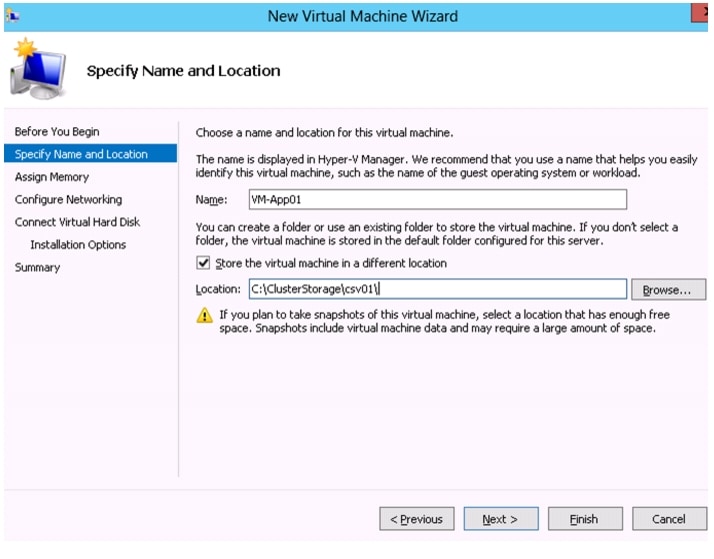

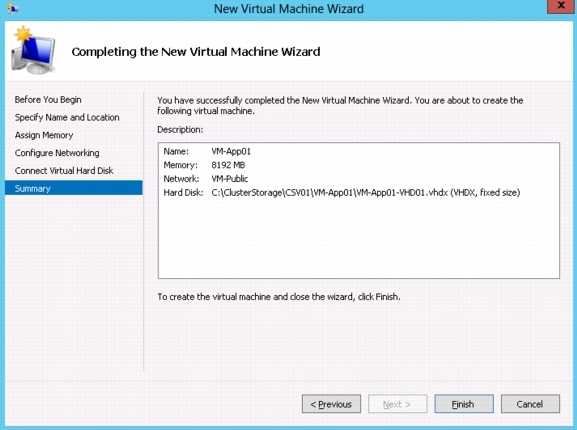

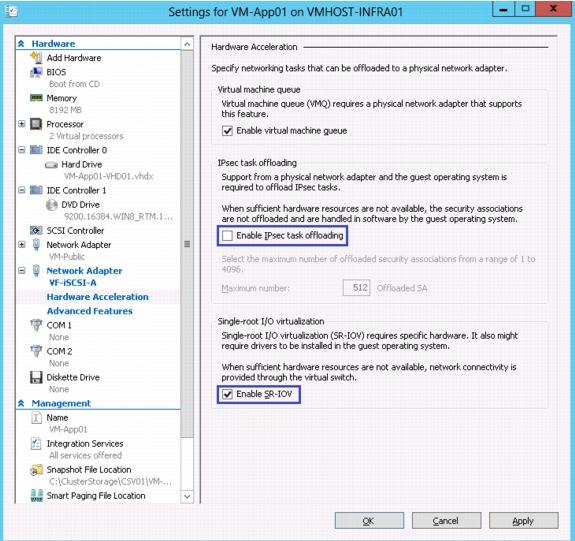

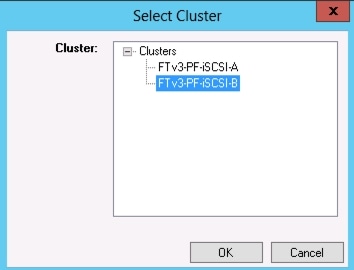

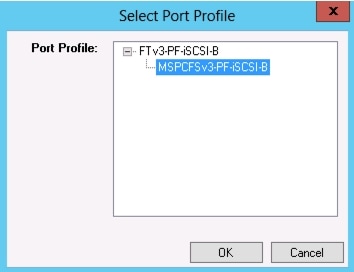

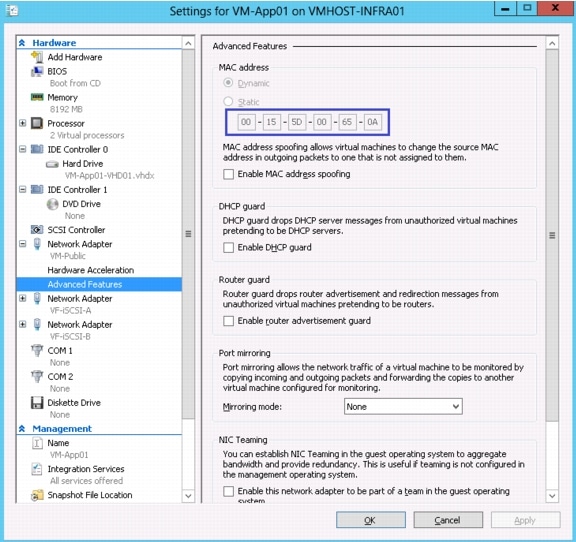

Deploying a Virtual Machine with VM-FEX

Attach Port Profile to the Virtual Machine

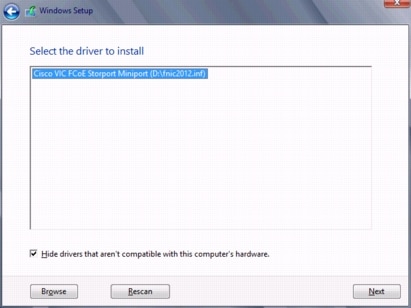

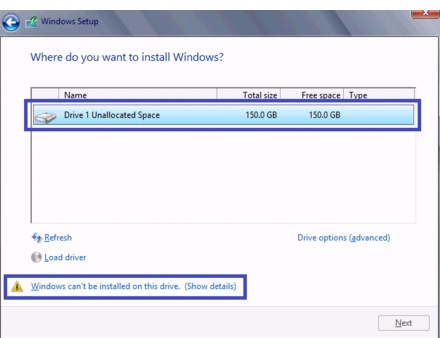

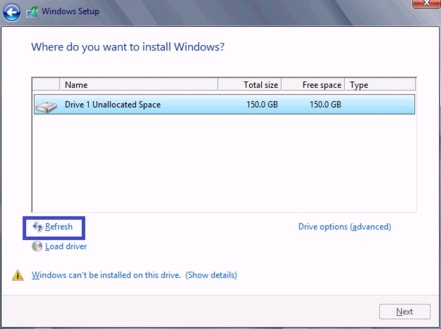

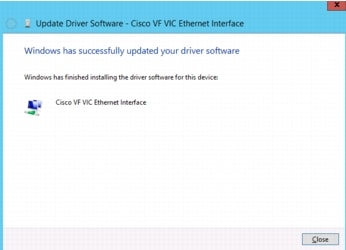

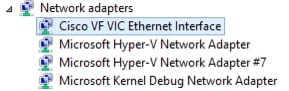

Install Windows and Cisco VF VIC Driver

Install Windows Features in the Virtual Machine

Configure Windows Host iSCSI Initiator

Install NetApp Utilities in the Virtual Machine

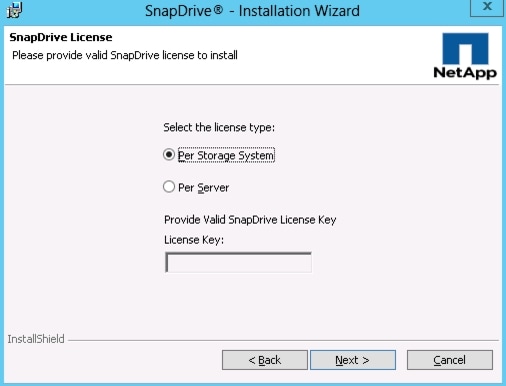

Create and Map iSCSI LUNs using SnapDrive

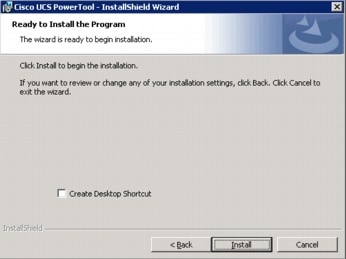

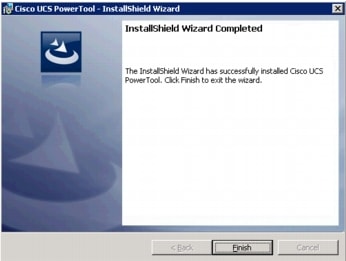

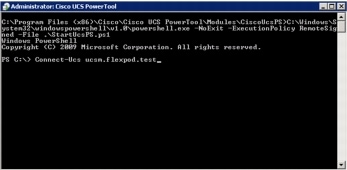

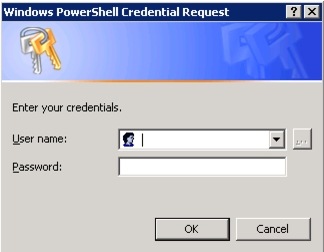

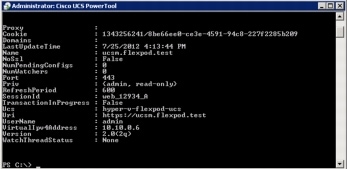

Installing Cisco UCS PowerTool

Appendix B: Installing the Data ONTAP PowerShell Toolkit

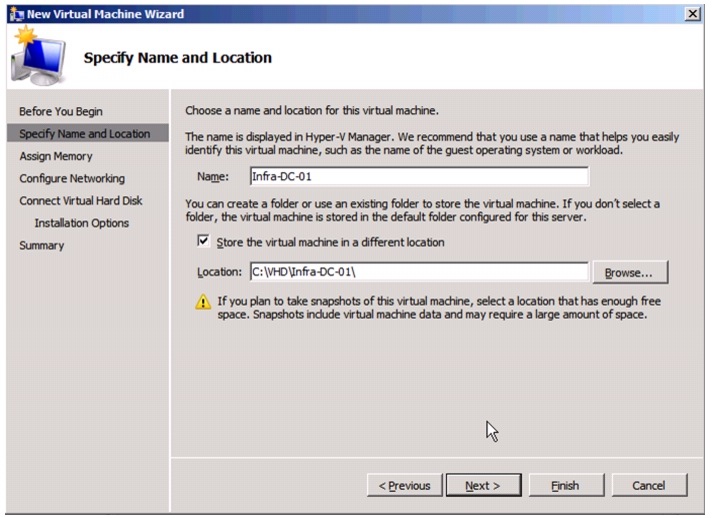

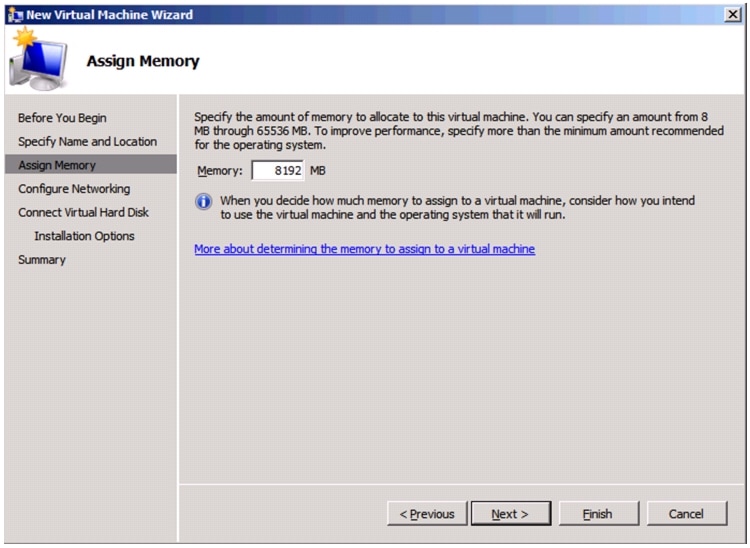

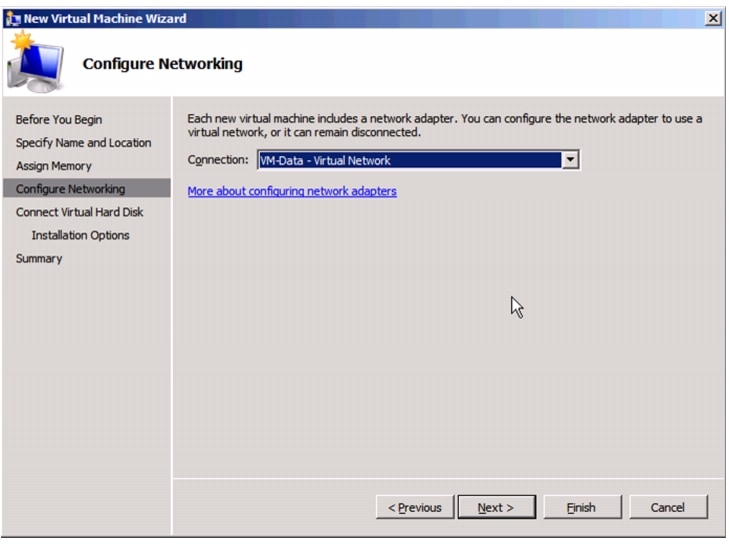

Appendix C: Creating Domain Controller Virtual Machine (Optional)

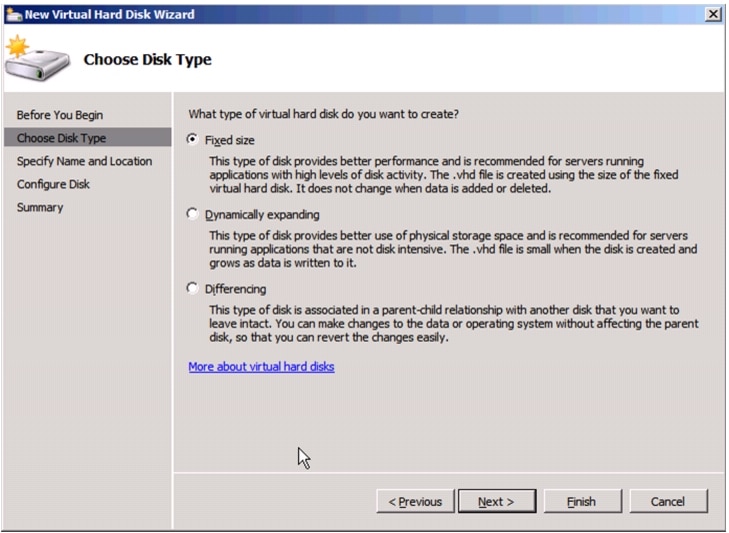

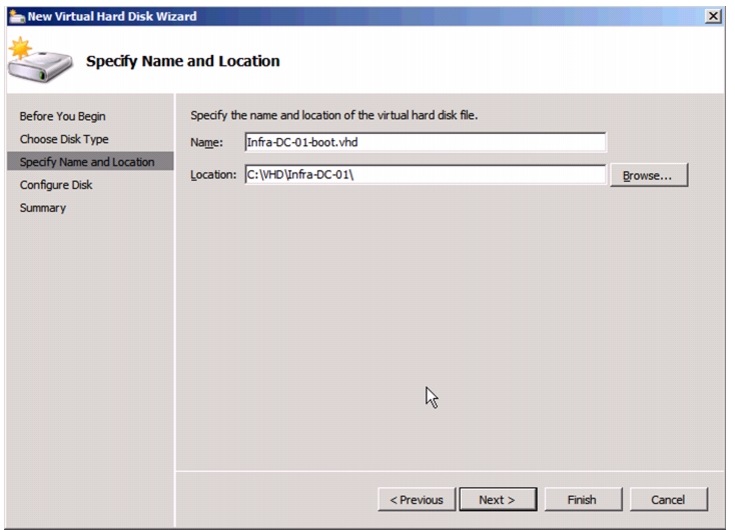

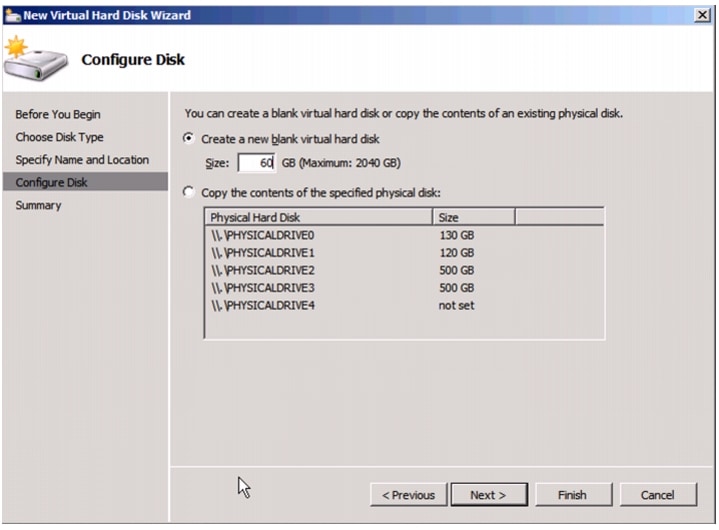

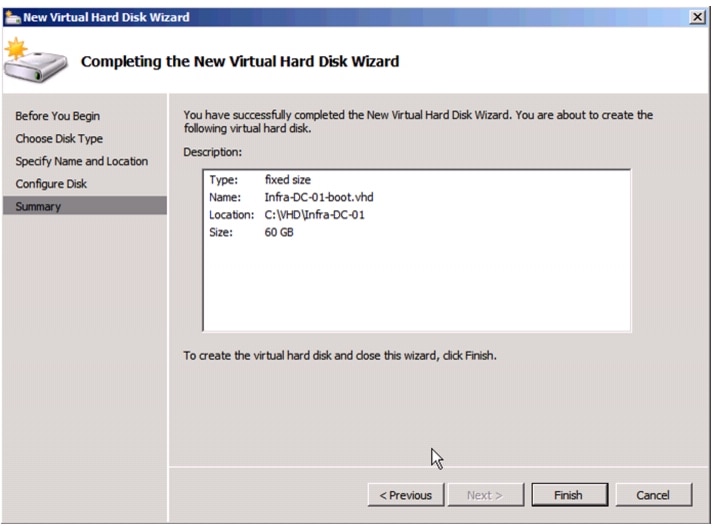

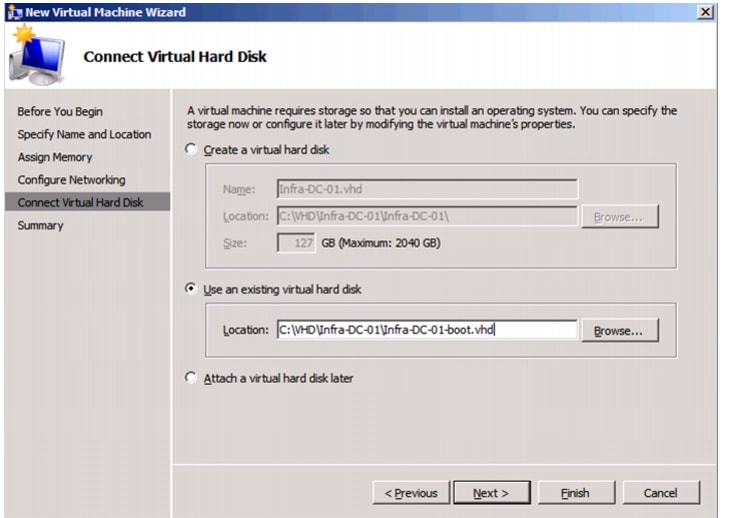

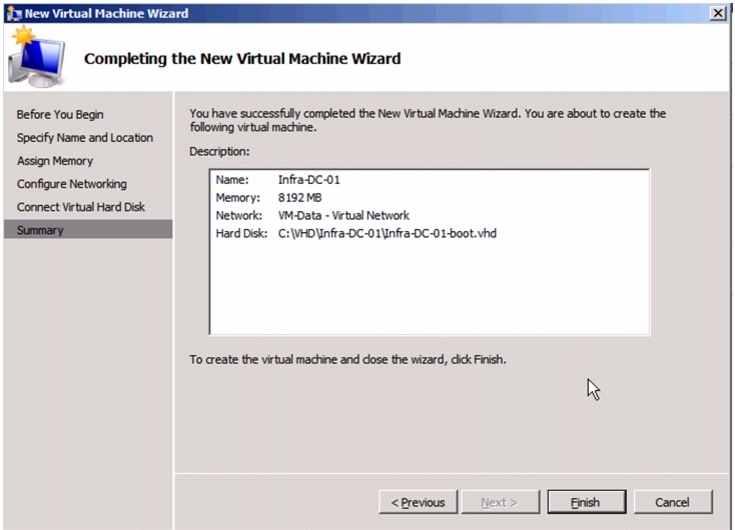

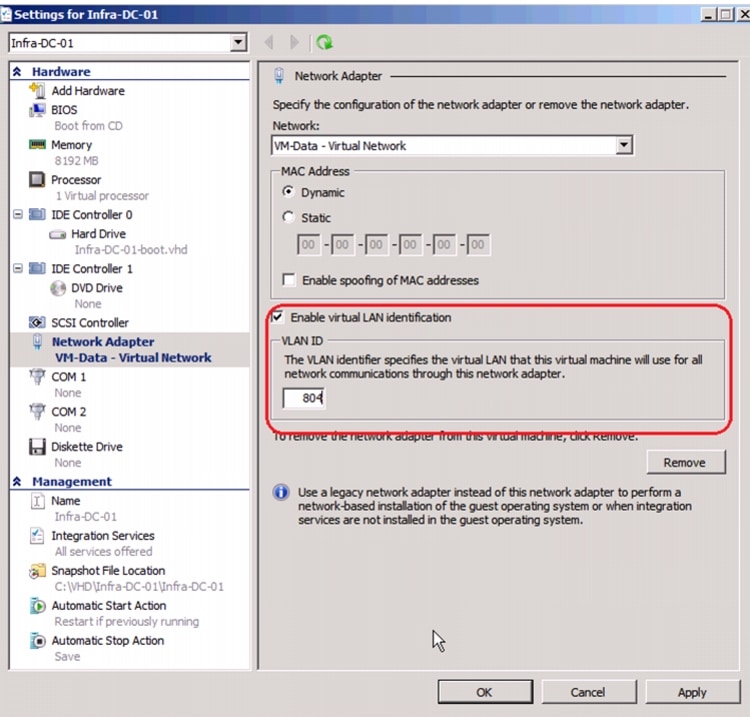

Create VHD for Domain Controller Virtual Machine

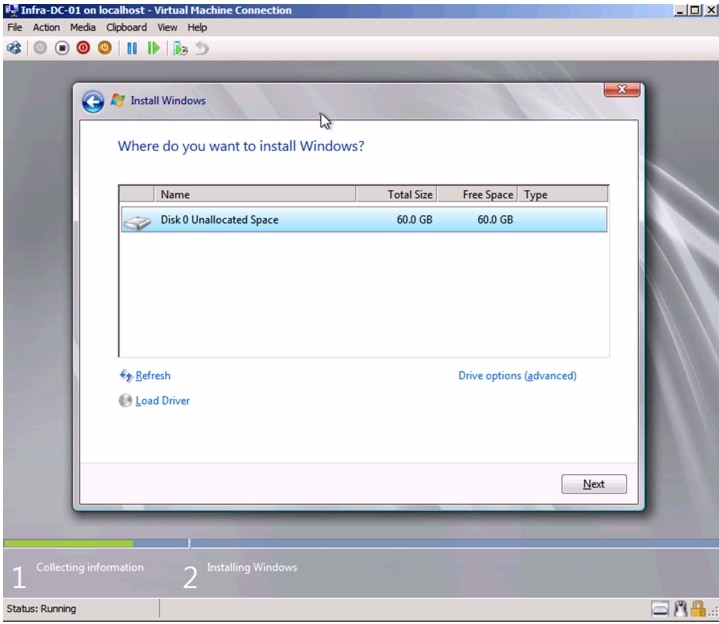

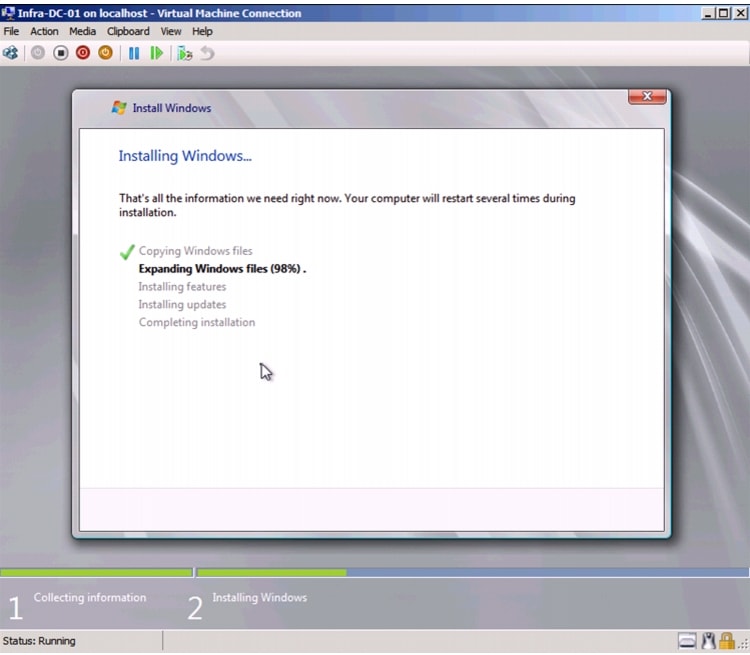

Install Windows in a Domain Controller Virtual Machine

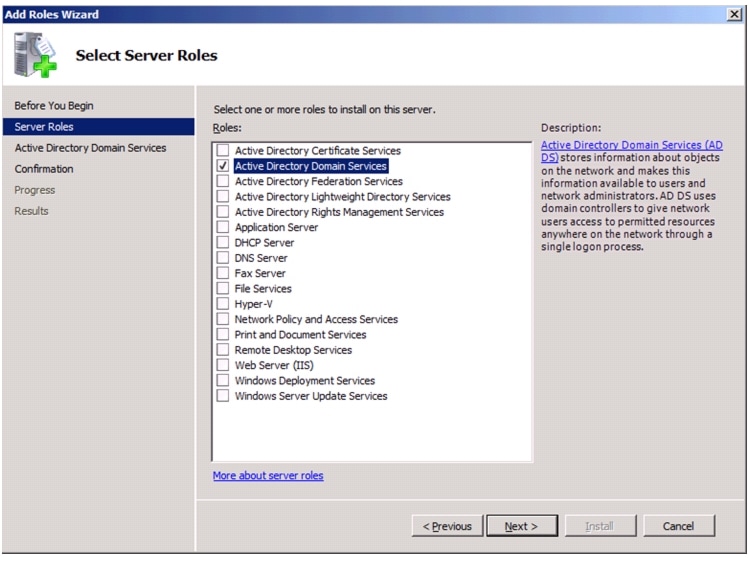

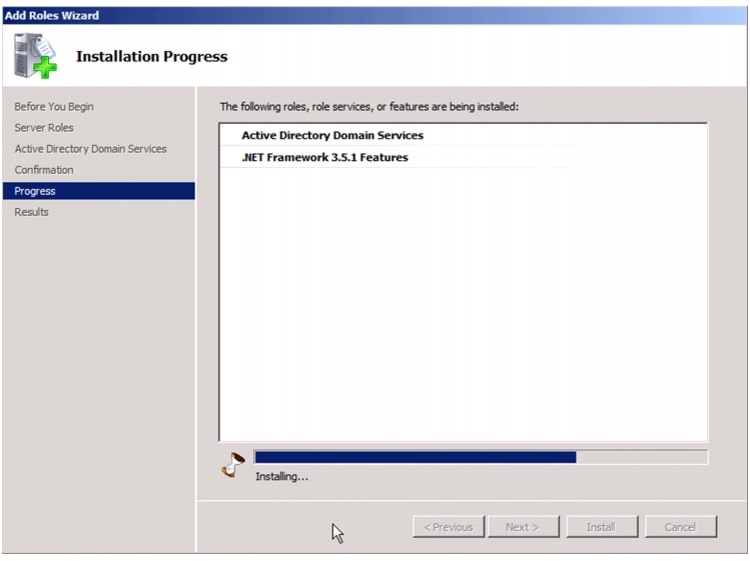

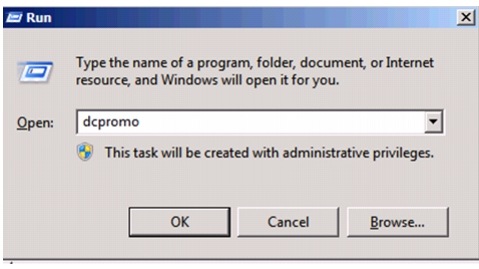

Install Active Directory Services

FlexPod Data Center with Microsoft Hyper-V Windows Server 2012 with 7-Mode

Deployment Guide for FlexPod with Microsoft Hyper-V Windows Server 2012 with Data ONTAP 8.1.2 Operating in 7-Mode

Last Updated: November 22, 2013

Building Architectures to Solve Business Problems

About the Authors

Mike Mankovsky, Technical Leader, Cisco Systems

Mike Mankovsky is a Cisco Unified Computing System architect, focusing on Microsoft solutions with extensive experience in Hyper-V, storage systems, and Microsoft Exchange Server. He has expert product knowledge in Microsoft Windows storage technologies and data protection technologies.

Glenn Sizemore, Technical Marketing Engineer, NetApp

Glenn Sizemore is a Technical Marketing Engineer in the Microsoft Solutions Group at NetApp, where he specializes in Cloud and Automation. Since joining NetApp, Glenn has delivered a variety of Microsoft-based solutions ranging from general best practice guidance to co-authoring the NetApp Hyper-V Cloud Fast Track with Cisco reference architecture.

About Cisco Validated Design (CVD) Program

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information visit:

http://www.cisco.com/go/designzone

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB's public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California.

Cisco and the Cisco Logo are trademarks of Cisco Systems, Inc. and/or its affiliates in the U.S. and other countries. A listing of Cisco's trademarks can be found at http://www.cisco.com/go/trademarks. Third party trademarks mentioned are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (1005R)

Any Internet Protocol (IP) addresses and phone numbers used in this document are not intended to be actual addresses and phone numbers. Any examples, command display output, network topology diagrams, and other figures included in the document are shown for illustrative purposes only. Any use of actual IP addresses or phone numbers in illustrative content is unintentional and coincidental.

© 2013 Cisco Systems, Inc. All rights reserved.

FlexPod with Microsoft Hyper-V Windows Server 2012 Deployment Guide

Reference Architecture

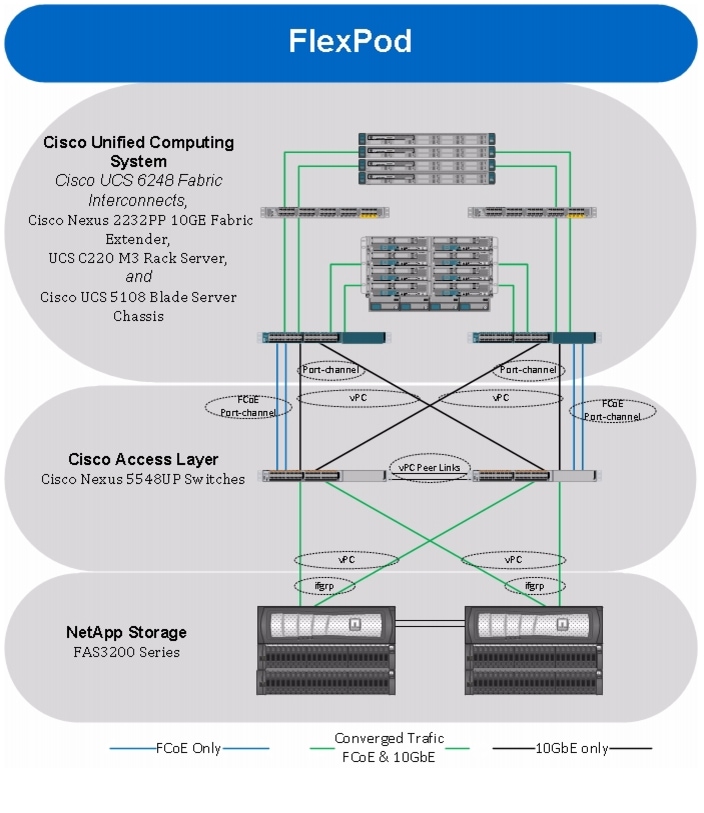

FlexPod architecture is highly modular, or pod-like. Although each customer's FlexPod unit might vary in its exact configuration, after a FlexPod unit is built, it can easily be scaled as requirements and demands change. This includes both scaling up (adding additional resources within a FlexPod unit) and scaling out (adding additional FlexPod units).

Specifically, FlexPod is a defined set of hardware and software that serves as an integrated foundation for virtualization solutions. FlexPod validated with Microsoft Windows Server 2012 Hyper-V includes NetApp® FAS3200 Series storage, Cisco Nexus® 5500 Series network switches, the Cisco Unified Computing System™ (Cisco UCS™) platforms, and Microsoft virtualization software in a single package. The computing and storage can fit in one data center rack, with networking residing in a separate rack or deployed according to a customer's data center design. Because of their port density, the networking components can accommodate multiple configurations of this kind.

Figure 1 Architecture overview

The reference configuration shown in Figure 1 includes:

•

Two Cisco Nexus 5548UP Switches

•

Two Cisco UCS 6248UP Fabric Interconnects

•

Two Cisco Nexus 2232PP Fabric Extenders

•

One chassis of Cisco UCS blades with two fabric extenders per chassis

•

Four Cisco UCS C220 M3 Servers

•

One FAS3240A (HA pair)

Storage is provided by a NetApp FAS3240A with accompanying disk shelves. All systems and fabric links feature redundancy and provide end-to-end high availability. For server virtualization, the deployment includes Hyper-V. Although this is the base design, each of the components can be scaled flexibly to support specific business requirements. For example, more (or different) blades and chassis could be deployed to increase compute capacity, additional disk shelves could be deployed to improve I/O capacity and throughput, or additional hardware or software could be added to introduce new capabilities.

For more information on FlexPod for Windows Server 2012 Hyper-V design choices and deployment best practices, see the FlexPod for Windows Server 2012 Hyper-V Design Guide:

http://www.cisco.com/en/US/solutions/collateral/ns340/ns517/ns224/ns944/whitepaper__c07-727095.html

Note

This is a sample bill of materials (BoM) only. This solution is certified for use with any configuration that meets the FlexPod Technical Specification rather than for a specific model. FlexPod and Fast Track programs allow customers to choose from within a model family to make sure that each FlexPod for Microsoft Windows Server 2012 Hyper-V solution meets the customers' requirements.

The remainder of this document guides you through the low-level steps for deploying the base architecture, as shown in Figure 1. This includes everything from physical cabling, to compute and storage configuration, to configuring virtualization with Hyper-V.

Configuration Guidelines

This document provides details for configuring a fully redundant, highly available configuration. Therefore, each step indicates which component is being configured, A or B. For example, Controller A and Controller B identify the two NetApp storage controllers that are provisioned with this document, while Nexus A and Nexus B identify the pair of Cisco Nexus switches that are configured. The Cisco UCS Fabric Interconnects are identified in the same way. Additionally, this document details steps for provisioning multiple Cisco UCS hosts, and these are identified sequentially as VM-Host-Infra-01, VM-Host-Infra-02, and so on. Finally, to indicate that the reader should include information about their environment in a given step, a placeholder such as <management VLAN ID> appears as part of the command structure. The following example shows the VLAN creation command:

controller A> vlan create

Usage:

vlan create [-g {on|off}] <ifname> <vlanid_list>

vlan add <ifname> <vlanid_list>

vlan delete -q <ifname> [<vlanid_list>]

vlan modify -g {on|off} <ifname>

vlan stat <ifname> [<vlanid_list>]

Example:

controller A> vlan create vif0 <management VLAN ID>

This document is intended to allow readers to fully configure their environment. In this process, various steps require the reader to insert customer-specific naming conventions, IP addresses, and VLAN schemes, as well as to record the appropriate WWPN, WWNN, or MAC addresses. Table 2 provides the list of VLANs necessary for deployment as outlined in this guide.

Note

In this document the VM-Data VLAN is used for virtual machine management interfaces.

The VM-Mgmt VLAN is used for management interfaces of the Microsoft Hyper-V hosts. A Layer-3 route must exist between the VM-Mgmt and VM-Data VLANs.

Deployment

This document details the necessary steps to deploy base infrastructure components as well as to provision Microsoft Hyper-V as the foundation for virtualized workloads. At the end of these deployment steps, you will be prepared to provision applications on top of a Microsoft Hyper-V virtualized infrastructure. The outlined procedure includes:

•

Initial NetApp Controller configuration

•

Initial Cisco UCS configuration

•

Initial Cisco Nexus configuration

•

Creation of necessary VLANs and VSANs for management, basic functionality, and virtualized infrastructure specific to Microsoft Hyper-V

•

Creation of necessary vPCs to provide HA among devices

•

Creation of necessary service profile pools such as World Wide Port Name (WWPN), World Wide Node Name (WWNN), MAC, server, and so on.

•

Creation of necessary service profile policies such as adapter policy, boot policy, and so on.

•

Creation of two service profile templates from the created pools and policies, one each for fabric A and B

•

Provisioning of four servers from the created service profiles in preparation for OS installation

•

Initial configuration of the infrastructure components residing on the NetApp Controller

•

Installation of Microsoft Windows Server 2012 Datacenter Edition

•

Enabling Microsoft Hyper-V Role

•

Configuring VM-FEX and SR-IOV adapters

The FlexPod Validated with Microsoft Private Cloud architecture is flexible; therefore, the configuration details provided in this section might vary for customer implementations depending on specific requirements. Although customer implementations might deviate from these details, the best practices, features, and configurations listed in this section can still be used as a reference for building a customized FlexPod, validated with Microsoft Private Cloud architecture.

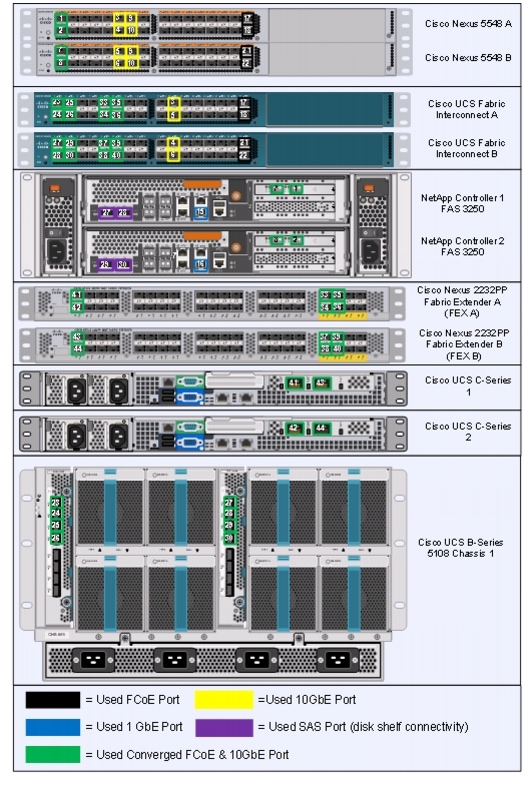

Cabling Information

The following information is provided as a reference for cabling the physical equipment in a FlexPod environment. The tables include both local and remote device and port locations in order to simplify cabling requirements.

Table 2, Table 3, Table 4, Table 5, and Table 6 contain details for the prescribed and supported configuration of the NetApp FAS3240 running Data ONTAP 8.1.2. This configuration uses a dual-port 10 Gigabit Ethernet adapter as well as the native FC target ports and the onboard SAS ports for disk shelf connectivity. For any modifications of this prescribed architecture, consult the currently available NetApp Interoperability Matrix Tool (IMT) at:

This document assumes that out-of-band management ports are plugged into an existing management infrastructure at the deployment site.

Be sure to follow the cabling directions in this section. Failure to do so will require changes to the deployment procedures that follow, because specific port locations are referenced.

It is possible to order a FAS3240A system in a configuration different from the one prescribed in the tables in this section. Before starting, make sure that the configuration matches what is described in the tables and diagrams in this section.

Figure 2 shows a FlexPod cabling diagram. The labels indicate connections to end points rather than port numbers on the physical device. For example, connection 1 is an FCoE target port connected from NetApp controller A to Nexus 5548 A. SAS connections 23, 24, 25, and 26 as well as ACP connections 27 and 28 should be connected to the NetApp storage controller and disk shelves according to best practices for the specific storage controller and disk shelf quantity.

Figure 2 FlexPod Cabling Diagram

Nexus 5548UP Deployment Procedure

The following section provides a detailed procedure for configuring the Cisco Nexus 5548 switches for use in a FlexPod environment. Follow these steps precisely because failure to do so could result in an improper configuration.

Note

The configuration steps detailed in this section provide guidance for configuring the Nexus 5548UP running release 5.2(1)N1(3). This configuration also uses the native VLAN on the trunk ports to discard untagged packets: the native VLAN is set on each Port Channel but is not included in that Port Channel's list of allowed VLANs.

Initial Cisco Nexus 5548UP Switch Configuration

These steps provide details for the initial Cisco Nexus 5548 Switch setup.

Nexus 5548 A

On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start.

1.

Enter yes to enforce secure password standards.

2.

Enter the password for the admin user.

3.

Enter the password a second time to commit the password.

4.

Enter yes to enter the basic configuration dialog.

5.

Create another login account (yes/no) [n]: Enter.

6.

Configure read-only SNMP community string (yes/no) [n]: Enter.

7.

Configure read-write SNMP community string (yes/no) [n]: Enter.

8.

Enter the switch name: <Nexus A Switch name> Enter.

9.

Continue with out-of-band (mgmt0) management configuration? (yes/no) [y]: Enter.

10.

Mgmt0 IPv4 address: <Nexus A mgmt0 IP> Enter.

11.

Mgmt0 IPv4 netmask: <Nexus A mgmt0 netmask> Enter.

12.

Configure the default gateway? (yes/no) [y]: Enter.

13.

IPv4 address of the default gateway: <Nexus A mgmt0 gateway> Enter.

14.

Enable the telnet service? (yes/no) [n]: Enter.

15.

Enable the ssh service? (yes/no) [y]: Enter.

16.

Type of ssh key you would like to generate (dsa/rsa):rsa.

17.

Number of key bits <768-2048>:1024 Enter.

18.

Configure the ntp server? (yes/no) [y]: Enter.

19.

NTP server IPv4 address: <NTP Server IP> Enter.

20.

Enter basic FC configurations (yes/no) [n]: Enter.

21.

Would you like to edit the configuration? (yes/no) [n]: Enter.

Note

Be sure to review the configuration summary before enabling it.

22.

Use this configuration and save it? (yes/no) [y]: Enter.

23.

Configuration may be continued from the console or by using SSH. To use SSH, connect to the mgmt0 address of Nexus A.

24.

Log in as user admin with the password previously entered.
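For reference, the answers given in the setup dialog for Nexus A correspond roughly to the configuration below. This is an illustrative sketch, assuming NX-OS 5.2(1)N1(3) on a Nexus 5548UP. The switch name n5k-a and all addresses in the 192.168.1.0/24 range are hypothetical stand-ins for <Nexus A Switch name>, <Nexus A mgmt0 IP>, <Nexus A mgmt0 gateway>, and <NTP Server IP>. The exact lines the dialog commits can differ by release, so treat the configuration summary printed by the dialog as authoritative.

```text
! Hypothetical values; substitute your own name and addresses
password strength-check
switchname n5k-a
interface mgmt0
  ip address 192.168.1.11/24
  no shutdown
! The default gateway for mgmt0 lives in the management VRF
vrf context management
  ip route 0.0.0.0/0 192.168.1.1
no feature telnet
ssh key rsa 1024 force
feature ssh
ntp server 192.168.1.5 use-vrf management
```

After the switch saves the configuration, show running-config can be used to confirm these settings. Nexus B follows the same pattern with its own name and addresses.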

Nexus 5548 B

On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start.

1.

Enter yes to enforce secure password standards.

2.

Enter the password for the admin user.

3.

Enter the password a second time to commit the password.

4.

Enter yes to enter the basic configuration dialog.

5.

Create another login account (yes/no) [n]: Enter.

6.

Configure read-only SNMP community string (yes/no) [n]: Enter.

7.

Configure read-write SNMP community string (yes/no) [n]: Enter.

8.

Enter the switch name: <Nexus B Switch name> Enter.

9.

Continue with out-of-band (mgmt0) management configuration? (yes/no) [y]: Enter.

10.

Mgmt0 IPv4 address: <Nexus B mgmt0 IP> Enter.

11.

Mgmt0 IPv4 netmask: <Nexus B mgmt0 netmask> Enter.

12.

Configure the default gateway? (yes/no) [y]: Enter.

13.

IPv4 address of the default gateway: <Nexus B mgmt0 gateway> Enter.

14.

Enable the telnet service? (yes/no) [n]: Enter.

15.

Enable the ssh service? (yes/no) [y]: Enter.

16.

Type of ssh key you would like to generate (dsa/rsa):rsa.

17.

Number of key bits <768-2048>:1024 Enter.

18.

Configure the ntp server? (yes/no) [y]: Enter.

19.

NTP server IPv4 address: <NTP Server IP> Enter.

20.

Enter basic FC configurations (yes/no) [n]: Enter.

21.

Would you like to edit the configuration? (yes/no) [n]: Enter.

Note

Be sure to review the configuration summary before enabling it.

22.

Use this configuration and save it? (yes/no) [y]: Enter.

23.

Configuration may be continued from the console or by using SSH. To use SSH, connect to the mgmt0 address of Nexus B.

24.

Log in as user admin with the password previously entered.

Enable Appropriate Cisco Nexus Features

These steps provide details for enabling the appropriate Cisco Nexus features.

Nexus A and Nexus B

1.

Type config t to enter the global configuration mode.

2.

Type feature lacp.

3.

Type feature fcoe.

4.

Type feature npiv.

5.

Type feature vpc.

6.

Type feature fport-channel-trunk.
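The feature steps above can also be entered as a single block on each switch and then checked. This is a minimal sketch that assumes the session starts in EXEC mode. show feature lists every feature together with its state.

```text
configure terminal
feature lacp
feature fcoe
feature npiv
feature vpc
feature fport-channel-trunk
end
! Each of the five features above should now be listed as enabled
show feature
```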

Set Global Configurations

These steps provide details for setting global configurations.

Nexus A and Nexus B

1.

From the global configuration mode, type spanning-tree port type network default to make sure that, by default, ports are treated as network ports with regard to spanning tree.

2.

Type spanning-tree port type edge bpduguard default to enable bpduguard on all edge ports by default.

3.

Type spanning-tree port type edge bpdufilter default to enable bpdufilter on all edge ports by default.

4.

Type ip access-list classify_Silver.

5.

Type 10 permit ip <iSCSI-A net address> any.

Note

<iSCSI-A net address> is the network address of the iSCSI-A VLAN in CIDR notation (for example, 192.168.102.0/24). <iSCSI-B net address> is the corresponding network address of the iSCSI-B VLAN.

6.

Type 20 permit ip any <iSCSI-A net address>.

7.

Type 30 permit ip <iSCSI-B net address> any.

8.

Type 40 permit ip any <iSCSI-B net address>.

9.

Type exit.

10.

Type class-map type qos match-all class-gold.

11.

Type match cos 4.

12.

Type exit.

13.

Type class-map type qos match-all class-silver.

14.

Type match cos 2.

15.

Type match access-group name classify_Silver.

16.

Type exit.

17.

Type class-map type queuing class-gold.

18.

Type match qos-group 3.

19.

Type exit.

20.

Type class-map type queuing class-silver.

21.

Type match qos-group 4.

22.

Type exit.

23.

Type policy-map type qos system_qos_policy.

24.

Type class class-gold.

25.

Type set qos-group 3.

26.

Type class class-silver.

27.

Type set qos-group 4.

28.

Type class class-fcoe.

29.

Type set qos-group 1.

30.

Type exit.

31.

Type exit.

32.

Type policy-map type queuing system_q_in_policy.

33.

Type class type queuing class-fcoe.

34.

Type bandwidth percent 20.

35.

Type class type queuing class-gold.

36.

Type bandwidth percent 33.

37.

Type class type queuing class-silver.

38.

Type bandwidth percent 29.

39.

Type class type queuing class-default.

40.

Type bandwidth percent 18.

41.

Type exit.

42.

Type exit.

43.

Type policy-map type queuing system_q_out_policy.

44.

Type class type queuing class-fcoe.

45.

Type bandwidth percent 20.

46.

Type class type queuing class-gold.

47.

Type bandwidth percent 33.

48.

Type class type queuing class-silver.

49.

Type bandwidth percent 29.

50.

Type class type queuing class-default.

51.

Type bandwidth percent 18.

52.

Type exit.

53.

Type exit.

54.

Type class-map type network-qos class-gold.

55.

Type match qos-group 3.

56.

Type exit.

57.

Type class-map type network-qos class-silver.

58.

Type match qos-group 4.

59.

Type exit.

60.

Type policy-map type network-qos system_nq_policy.

61.

Type class type network-qos class-gold.

62.

Type set cos 4.

63.

Type mtu 9000.

64.

Type class type network-qos class-fcoe.

65.

Type pause no-drop.

66.

Type mtu 2158.

67.

Type class type network-qos class-silver.

68.

Type set cos 2.

69.

Type mtu 9000.

70.

Type class type network-qos class-default.

71.

Type mtu 9000.

72.

Type exit.

73.

Type system qos.

74.

Type service-policy type qos input system_qos_policy.

75.

Type service-policy type queuing input system_q_in_policy.

76.

Type service-policy type queuing output system_q_out_policy.

77.

Type service-policy type network-qos system_nq_policy.

78.

Type exit.

79.

Type copy run start.

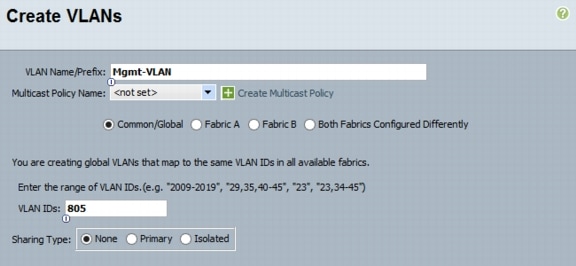

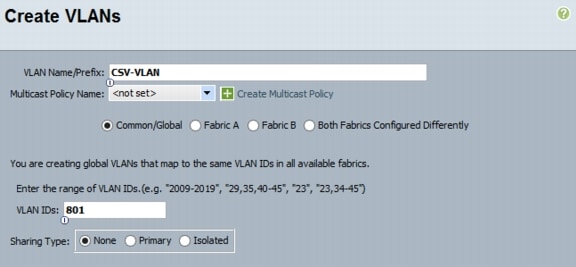

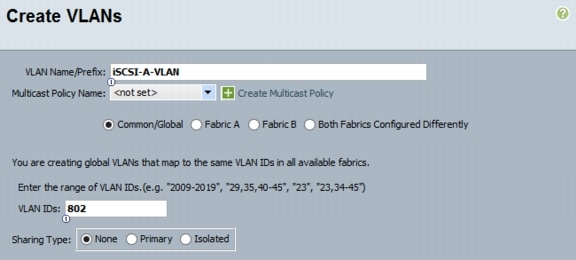
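Taken together, the global configuration steps above produce a running configuration similar to the one below on each switch. This is an illustrative sketch, not captured switch output. The iSCSI subnets (192.168.102.0/24 for iSCSI-A and 192.168.103.0/24 for iSCSI-B) are hypothetical, and class-fcoe and class-default are the system-defined classes on the Nexus 5500 platform. In each queuing policy, the four bandwidth shares add up to 100 percent (20 + 33 + 29 + 18).

```text
! Spanning-tree defaults
spanning-tree port type network default
spanning-tree port type edge bpduguard default
spanning-tree port type edge bpdufilter default

! Match iSCSI traffic by subnet (hypothetical iSCSI-A and iSCSI-B subnets)
ip access-list classify_Silver
  10 permit ip 192.168.102.0/24 any
  20 permit ip any 192.168.102.0/24
  30 permit ip 192.168.103.0/24 any
  40 permit ip any 192.168.103.0/24

! Ingress classification
class-map type qos match-all class-gold
  match cos 4
class-map type qos match-all class-silver
  match cos 2
  match access-group name classify_Silver

! Queuing classes keyed on the internal qos-group
class-map type queuing class-gold
  match qos-group 3
class-map type queuing class-silver
  match qos-group 4

policy-map type qos system_qos_policy
  class class-gold
    set qos-group 3
  class class-silver
    set qos-group 4
  class class-fcoe
    set qos-group 1

! Bandwidth shares: 20 + 33 + 29 + 18 = 100 percent
policy-map type queuing system_q_in_policy
  class type queuing class-fcoe
    bandwidth percent 20
  class type queuing class-gold
    bandwidth percent 33
  class type queuing class-silver
    bandwidth percent 29
  class type queuing class-default
    bandwidth percent 18
policy-map type queuing system_q_out_policy
  class type queuing class-fcoe
    bandwidth percent 20
  class type queuing class-gold
    bandwidth percent 33
  class type queuing class-silver
    bandwidth percent 29
  class type queuing class-default
    bandwidth percent 18

! Per-class CoS marking, MTU, and lossless (no-drop) handling for FCoE
class-map type network-qos class-gold
  match qos-group 3
class-map type network-qos class-silver
  match qos-group 4
policy-map type network-qos system_nq_policy
  class type network-qos class-gold
    set cos 4
    mtu 9000
  class type network-qos class-fcoe
    pause no-drop
    mtu 2158
  class type network-qos class-silver
    set cos 2
    mtu 9000
  class type network-qos class-default
    mtu 9000

! Apply all four policies system-wide
system qos
  service-policy type qos input system_qos_policy
  service-policy type queuing input system_q_in_policy
  service-policy type queuing output system_q_out_policy
  service-policy type network-qos system_nq_policy
```

After saving, show policy-map system displays the policies attached under system qos. On the Nexus 5500 platform, the MTU reported by show interface generally does not reflect the network-qos MTU. The per-class MTU is typically checked with show queuing interface instead (for example, show queuing interface ethernet 1/1).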

Create Necessary VLANs

These steps provide details for creating the necessary VLANs.

Nexus A

1.

Type vlan <<Fabric_A_FCoE_VLAN ID>>.

2.

Type name FCoE_Fabric_A.

3.

Type exit.

Nexus B

1.

Type vlan <<Fabric_B_FCoE_VLAN ID>>.

2.

Type name FCoE_Fabric_B.

3.

Type exit.

Nexus A and Nexus B

1.

Type vlan <<Native VLAN ID>>.

2.

Type name Native-VLAN.

3.

Type exit.

4.

Type vlan <<CSV VLAN ID>>.

5.

Type name CSV-VLAN.

6.

Type exit.

7.

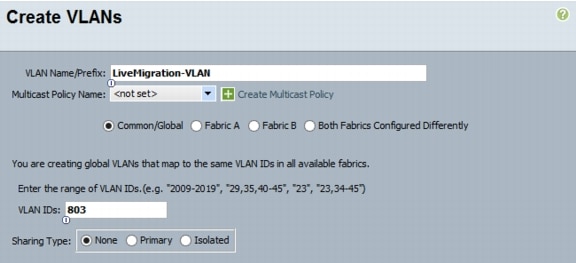

Type vlan <<Live Migration VLAN ID>>.

8.

Type name Live-Migration-VLAN.

9.

Type exit.

10.

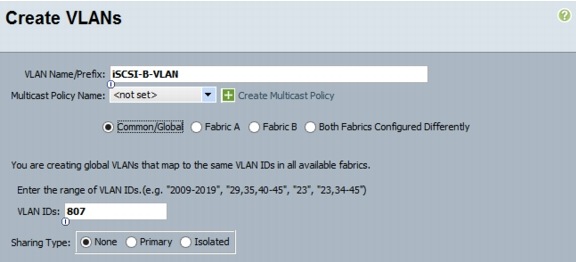

Type vlan <<iSCSI A VLAN ID>>.

11.

Type name iSCSI-A-VLAN.

12.

Type exit.

13.

Type vlan <<iSCSI B VLAN ID>>.

14.

Type name iSCSI-B-VLAN.

15.

Type exit.

16.

Type vlan <<MGMT VLAN ID>>.

17.

Type name Mgmt-VLAN.

18.

Type exit.

19.

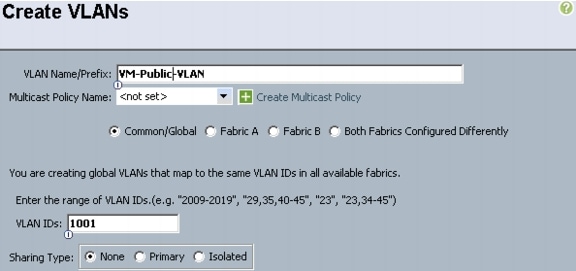

Type vlan <<VM Data VLAN ID>>.

20.

Type name VM-Public-VLAN.

21.

Type exit.

22.

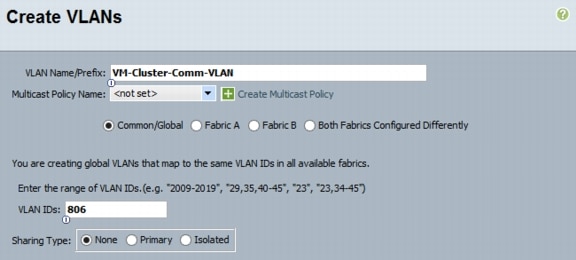

Type vlan <<VM Cluster Comm VLAN ID>>.

23.

Type name VM-Cluster-Comm-VLAN.

24.

Type exit.
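On Nexus A, the VLAN steps result in a configuration like the one below. The VLAN IDs are hypothetical and chosen only for illustration (native 2, Mgmt 10, CSV 20, Live Migration 30, iSCSI-A 40, iSCSI-B 41, VM Data 50, VM Cluster Comm 60, Fabric A FCoE 101). Substitute the IDs from your VLAN table. Nexus B is identical except that it creates FCoE_Fabric_B on the Fabric B FCoE VLAN instead of FCoE_Fabric_A.

```text
! Hypothetical VLAN IDs; replace with the values from your VLAN table
vlan 2
  name Native-VLAN
vlan 10
  name Mgmt-VLAN
vlan 20
  name CSV-VLAN
vlan 30
  name Live-Migration-VLAN
vlan 40
  name iSCSI-A-VLAN
vlan 41
  name iSCSI-B-VLAN
vlan 50
  name VM-Public-VLAN
vlan 60
  name VM-Cluster-Comm-VLAN
vlan 101
  name FCoE_Fabric_A
```

show vlan brief lists the VLANs and their names, which is a quick way to catch a mistyped ID before the VLANs are added to trunks.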

Add Individual Port Descriptions for Troubleshooting

These steps provide details for adding individual port descriptions for troubleshooting activity and verification.

Nexus 5548 A

1.

Make sure that you are in global configuration mode.

2.

Type interface Eth1/1.

3.

Type description <Controller A:e2a>.

4.

Type exit.

5.

Type interface Eth1/2.

6.

Type description <Controller B:e2a>.

7.

Type exit.

8.

Type interface Eth1/3.

9.

Type description <UCSM A:Eth1/19>.

10.

Type exit.

11.

Type interface Eth1/4.

12.

Type description <UCSM B:Eth1/19>.

13.

Type exit.

14.

Type interface Eth1/5.

15.

Type description <Nexus B:Eth1/5>.

16.

Type exit.

17.

Type interface Eth1/6.

18.

Type description <Nexus B:Eth1/6>.

19.

Type exit.

Nexus 5548 B

1.

Make sure that you are in global configuration mode.

2.

Type interface Eth1/1.

3.

Type description <Controller A:e2b>.

4.

Type exit.

5.

Type interface Eth1/2.

6.

Type description <Controller B:e2b>.

7.

Type exit.

8.

Type interface Eth1/3.

9.

Type description <UCSM A:Eth1/20>.

10.

Type exit.

11.

Type interface Eth1/4.

12.

Type description <UCSM B:Eth1/20>.

13.

Type exit.

14.

Type interface Eth1/5.

15.

Type description <Nexus A:Eth1/5>.

16.

Type exit.

17.

Type interface Eth1/6.

18.

Type description <Nexus A:Eth1/6>.

19.

Type exit.
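On Nexus A, the description steps produce the interface configuration below. The angle brackets in the steps mark names to replace with your own device names. Here the generic names are kept literally for illustration only.

```text
interface Ethernet1/1
  description Controller A:e2a
interface Ethernet1/2
  description Controller B:e2a
interface Ethernet1/3
  description UCSM A:Eth1/19
interface Ethernet1/4
  description UCSM B:Eth1/19
interface Ethernet1/5
  description Nexus B:Eth1/5
interface Ethernet1/6
  description Nexus B:Eth1/6
```

show interface description lists every port with its description, which makes cabling errors easier to spot.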

Create Necessary Port Channels

These steps provide details for creating the necessary Port Channels between devices.

Nexus 5548 A

1.

Make sure that you are in global configuration mode.

2.

Type interface Po10.

3.

Type description vPC peer-link.

4.

Type exit.

5.

Type interface Eth1/5-6.

6.

Type channel-group 10 mode active.

7.

Type no shutdown.

8.

Type exit.

9.

Type interface Po11.

10.

Type description <Controller A>.

11.

Type exit.

12.

Type interface Eth1/1.

13.

Type channel-group 11 mode active.

14.

Type no shutdown.

15.

Type exit.

16.

Type interface Po12.

17.

Type description <Controller B>.

18.

Type exit.

19.

Type interface Eth1/2.

20.

Type channel-group 12 mode active.

21.

Type no shutdown.

22.

Type exit.

23.

Type interface Po13.

24.

Type description <UCSM A>.

25.

Type exit.

26.

Type interface Eth1/3.

27.

Type channel-group 13 mode active.

28.

Type no shutdown.

29.

Type exit.

30.

Type interface Po14.

31.

Type description <UCSM B>.

32.

Type exit.

33.

Type interface Eth1/4.

34.

Type channel-group 14 mode active.

35.

Type no shutdown.

36.

Type exit.

37.

Type interface eth1/31.

38.

Type description <UCSM A:eth1/31>.

39.

Type exit.

40.

Type interface eth1/32.

41.

Type description <UCSM A:eth1/32>.

42.

Type exit.

43.

Type interface Eth1/31-32.

44.

Type channel-group 15 mode active.

45.

Type no shutdown.

46.

Type copy run start.
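On Nexus A, the Port Channel steps above amount to the following configuration. It is shown here as a consolidated sketch. channel-group N mode active runs LACP in active mode, which relies on the lacp feature enabled earlier and requires the device at the other end of each link to run LACP as well. On Nexus B, the same structure applies, except that the Eth1/31 and Eth1/32 descriptions refer to UCSM B and those two ports join channel-group 16.

```text
interface port-channel10
  description vPC peer-link
interface Ethernet1/5-6
  channel-group 10 mode active
  no shutdown
interface port-channel11
  description Controller A
interface Ethernet1/1
  channel-group 11 mode active
  no shutdown
interface port-channel12
  description Controller B
interface Ethernet1/2
  channel-group 12 mode active
  no shutdown
interface port-channel13
  description UCSM A
interface Ethernet1/3
  channel-group 13 mode active
  no shutdown
interface port-channel14
  description UCSM B
interface Ethernet1/4
  channel-group 14 mode active
  no shutdown
! FCoE uplinks to fabric interconnect A
interface Ethernet1/31
  description UCSM A:eth1/31
interface Ethernet1/32
  description UCSM A:eth1/32
interface Ethernet1/31-32
  channel-group 15 mode active
  no shutdown
```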

Nexus 5548 B

1.

From the global configuration mode, type interface Po10.

2.

Type description vPC peer-link.

3.

Type exit.

4.

Type interface Eth1/5-6.

5.

Type channel-group 10 mode active.

6.

Type no shutdown.

7.

Type exit.

8.

Type interface Po11.

9.

Type description <Controller A>.

10.

Type exit.

11.

Type interface Eth1/1.

12.

Type channel-group 11 mode active.

13.

Type no shutdown.

14.

Type exit.

15.

Type interface Po12.

16.

Type description <Controller B>.

17.

Type exit.

18.

Type interface Eth1/2.

19.

Type channel-group 12 mode active.

20.

Type no shutdown.

21.

Type exit.

22.

Type interface Po13.

23.

Type description <UCSM A>.

24.

Type exit.

25.

Type interface Eth1/3.

26.

Type channel-group 13 mode active.

27.

Type no shutdown.

28.

Type exit.

29.

Type interface Po14.

30.

Type description <UCSM B>.

31.

Type exit.

32.

Type interface Eth1/4.

33.

Type channel-group 14 mode active.

34.

Type no shutdown.

35.

Type exit.

36.

Type interface eth1/31.

37.

Type description <UCSM B:eth1/31>.

38.

Type exit.

39.

Type interface eth1/32.

40.

Type description <UCSM B:eth1/32>.

41.

Type exit.

42.

Type interface eth1/31-32.

43.

Type channel-group 16 mode active.

44.

Type no shutdown.

45.

Type copy run start.
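LACP negotiates each bundle with the device at the far end, so a Port Channel comes up only after both sides are configured. The peer link (Po10), for example, forms only once both Nexus switches are done. After the switches, storage controllers, and fabric interconnects are configured, the standard NX-OS show commands below are one way to check the bundles. This is a suggested verification pass, not a step from the validated procedure.

```text
! All port channels with their member ports; "P" marks a member that is up in the port channel
show port-channel summary
! LACP partner details learned on each member port
show lacp neighbor
! Detailed status of the vPC peer-link port channel
show interface port-channel 10
```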

Add Port Channel Configurations

These steps provide details for adding Port Channel configurations.

Nexus 5548 A

1.

Make sure that you are in global configuration mode.

2.

Type interface Po10.

3.

Type switchport mode trunk.

4.

Type switchport trunk native vlan <<Native VLAN ID>>.

5.

Type switchport trunk allowed vlan <<MGMT VLAN ID>>, <<CSV VLAN ID>>, <<iSCSI A VLAN ID>>, <<iSCSI B VLAN ID>>, <<Live Migration VLAN ID>>, <<VM Data VLAN ID>>, <<VM Cluster Comm VLAN ID>>, <<Fabric A FCoE VLAN ID>>.

6.

Type spanning-tree port type network.

7.

Type no shutdown.

8.

Type exit.

9.

Type interface Po11.

10.

Type switchport mode trunk.

11.

Type switchport trunk native vlan <<Native VLAN ID>>.

12.

Type switchport trunk allowed vlan <<MGMT VLAN ID>>, <<iSCSI A VLAN ID>>, <<iSCSI B VLAN ID>>, <<Fabric A FCoE VLAN ID>>.

13.

Type spanning-tree port type edge trunk.

14.

Type no shut.

15.

Type exit.

16.

Type interface Po12.

17.

Type switchport mode trunk.

18.

Type switchport trunk native vlan <<Native VLAN ID>>.

19.

Type switchport trunk allowed vlan <<MGMT VLAN ID>>, <<iSCSI A VLAN ID>>, <<iSCSI B VLAN ID>>, <<Fabric A FCoE VLAN ID>>.

20.

Type spanning-tree port type edge trunk.

21.

Type no shut.

22.

Type exit.

23.

Type interface Po13.

24.

Type switchport mode trunk.

25.

Type switchport trunk native vlan <<Native VLAN ID>>.

26.

Type switchport trunk allowed vlan <<MGMT VLAN ID>>, <<CSV VLAN ID>>, <<iSCSI A VLAN ID>>, <<iSCSI B VLAN ID>>, <<Live Migration VLAN ID>>, <<VM Data VLAN ID>>, <<VM Cluster Comm VLAN ID>>, <<Fabric A FCoE VLAN ID>>.

27.

Type spanning-tree port type edge trunk.

28.

Type no shut.

29.

Type exit.

30.

Type interface Po14.

31.

Type switchport mode trunk.

32.

Type switchport trunk native vlan <<Native VLAN ID>>.

33.

Type switchport trunk allowed vlan <<MGMT VLAN ID>>, <<CSV VLAN ID>>, <<iSCSI A VLAN ID>>, <<iSCSI B VLAN ID>>, <<Live Migration VLAN ID>>, <<VM Data VLAN ID>>, <<VM Cluster Comm VLAN ID>>, <<Fabric A FCoE VLAN ID>>.

34.

Type spanning-tree port type edge trunk.

35.

Type no shutdown.

36.

Type exit.

37.

Type interface Po15.

38.

Type switchport mode trunk.

39.

Type switchport trunk allowed vlan <<Fabric A FCoE VLAN ID>>.

40.

Type no shutdown.

41.

Type exit.

42.

Type copy run start.

Nexus 5548 B

1.

Make sure you are in global configuration mode.

2.

Type interface Po10.

3.

Type switchport mode trunk.

4.

Type switchport trunk native vlan <<Native VLAN ID>>.

5.

Type switchport trunk allowed vlan <<MGMT VLAN ID>>, <<CSV VLAN ID>>, <<iSCSI A VLAN ID>>, <<iSCSI B VLAN ID>>, <<Live Migration VLAN ID>>, <<VM Data VLAN ID>>, <<Fabric B FCoE VLAN ID>>.

6.

Type spanning-tree port type network.

7.

Type no shutdown.

8.

Type exit.

9.

Type interface Po11.

10.

Type switchport mode trunk.

11.

Type switchport trunk native vlan <<Native VLAN ID>>.

12.

Type switchport trunk allowed vlan <<MGMT VLAN ID>>, <<iSCSI A VLAN ID>>, <<iSCSI B VLAN ID>>, <<Fabric B FCoE VLAN ID>>.

13.

Type spanning-tree port type edge trunk.

14.

Type no shut.

15.

Type exit.

16.

Type interface Po12.

17.

Type switchport mode trunk.

18.

Type switchport trunk native vlan <<Native VLAN ID>>.

19.

Type switchport trunk allowed vlan <<MGMT VLAN ID>>, <<iSCSI A VLAN ID>>, <<iSCSI B VLAN ID>>, <<Fabric B FCoE VLAN ID>>.

20.

Type spanning-tree port type edge trunk.

21.

Type no shut.

22.

Type exit.

23.

Type interface Po13.

24.

Type switchport mode trunk.

25.

Type switchport trunk native vlan <<Native VLAN ID>>.

26.

Type switchport trunk allowed vlan <<MGMT VLAN ID>>, <<CSV VLAN ID>>, <<iSCSI A VLAN ID>>, <<iSCSI B VLAN ID>>, <<Live Migration VLAN ID>>, <<VM Data VLAN ID>>, <<Fabric B FCoE VLAN ID>>.

Type spanning-tree port type edge trunk.

27.

Type no shut.

28.

Type exit.

29.

Type interface Po14.

30.

Type switchport mode trunk.

31.

Type switchport trunk native vlan <<Native VLAN ID>>.

32.

Type switchport trunk allowed vlan <<MGMT VLAN ID>>, <<CSV VLAN ID>>, <<iSCSI A VLAN ID>>, <<iSCSI B VLAN ID>>, <<Live Migration VLAN ID>>, <<VM Data VLAN ID>>, <<Fabric B FCoE VLAN ID>>.

Type spanning-tree port type edge trunk.

33.

Type no shut.

34.

Type exit.

35.

Type interface Po16.

36.

Type switchport mode trunk.

37.

Type switchport trunk allowed vlan <<Fabric B FCoE VLAN ID>>.

38.

Type no shutdown.

39.

Type exit.

40.

Type copy run start.
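Every port channel above receives the same trunk template: trunk mode, an optional native VLAN, an allowed-VLAN list, a spanning-tree port type (network for the Po10 peer link, edge trunk for host-facing links), and no shutdown. The sketch below renders that template in Python. The function name and the numeric VLAN IDs in the example are hypothetical placeholders for the <<... VLAN ID>> values in your worksheet.

```python
def render_trunk(po_id, vlans, native_vlan=None, stp_type="edge trunk"):
    """Render the NX-OS trunk settings for one port channel.

    stp_type is "network" for the vPC peer link, "edge trunk" for host-facing
    links, or None to omit the command (as for the FCoE port channels Po15/Po16).
    """
    # Deduplicate and sort the IDs so each list is stable and easy to compare.
    allowed = ",".join(str(v) for v in sorted(set(vlans)))
    lines = [f"interface Po{po_id}", "  switchport mode trunk"]
    if native_vlan is not None:
        lines.append(f"  switchport trunk native vlan {native_vlan}")
    lines.append(f"  switchport trunk allowed vlan {allowed}")
    if stp_type is not None:
        lines.append(f"  spanning-tree port type {stp_type}")
    lines += ["  no shutdown", "exit"]
    return lines


if __name__ == "__main__":
    # Hypothetical IDs: native 2, MGMT 3170, iSCSI A 3171, iSCSI B 3172, Fabric A FCoE 101.
    print("\n".join(render_trunk(11, [3170, 3171, 3172, 101], native_vlan=2)))
    print("\n".join(render_trunk(15, [101], stp_type=None)))
```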

Configure Virtual Port Channels

These steps provide details for configuring virtual Port Channels (vPCs).

Nexus 5548 A

1.

Make sure you are in global configuration mode.

2.

Type vpc domain <Nexus vPC domain ID>.

3.

Type role priority 10.

4.

Type peer-keepalive destination <Nexus B mgmt0 IP> source <Nexus A mgmt0 IP>.

5.

Type exit.

6.

Type interface Po10.

7.

Type vpc peer-link.

8.

Type exit.

9.

Type interface Po11.

10.

Type vpc 11.

11.

Type exit.

12.

Type interface Po12.

13.

Type vpc 12.

14.

Type exit.

15.

Type interface Po13.

16.

Type vpc 13.

17.

Type exit.

18.

Type interface Po14.

19.

Type vpc 14.

20.

Type exit.

21.

Type copy run start.

Nexus 5548 B

1.

From the global configuration mode, type vpc domain <Nexus vPC domain ID>.

2.

Type role priority 20.

3.

Type peer-keepalive destination <Nexus A mgmt0 IP> source <Nexus B mgmt0 IP>.

4.

Type exit.

5.

Type interface Po10.

6.

Type vpc peer-link.

7.

Type exit.

8.

Type interface Po11.

9.

Type vpc 11.

10.

Type exit.

11.

Type interface Po12.

12.

Type vpc 12.

13.

Type exit.

14.

Type interface Po13.

15.

Type vpc 13.

16.

Type exit.

17.

Type interface Po14.

18.

Type vpc 14.

19.

Type exit.

20.

Type copy run start.
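The two vPC configurations are mirror images: both switches use the same vPC domain ID and the same vPC numbers, while the role priority differs (10 on A, 20 on B) and the peer-keepalive source and destination are swapped. This Python sketch derives both sides from one set of inputs so the swap cannot be done inconsistently. The function names, the domain ID 23, and the 192.0.2.x documentation addresses are hypothetical.

```python
def render_vpc(domain_id, role_priority, local_mgmt0, peer_mgmt0, vpc_ids):
    """Render the NX-OS vPC commands for one switch of the pair."""
    lines = [
        f"vpc domain {domain_id}",
        f"  role priority {role_priority}",
        # The keepalive destination is always the peer switch's mgmt0 address.
        f"  peer-keepalive destination {peer_mgmt0} source {local_mgmt0}",
        "exit",
        "interface Po10",
        "  vpc peer-link",
        "exit",
    ]
    for vpc_id in vpc_ids:
        # In this design the vPC number matches the port-channel number.
        lines += [f"interface Po{vpc_id}", f"  vpc {vpc_id}", "exit"]
    lines.append("copy run start")
    return lines


def render_vpc_pair(domain_id, mgmt0_a, mgmt0_b, vpc_ids=(11, 12, 13, 14)):
    """Return (Nexus A commands, Nexus B commands) with swapped keepalive addresses."""
    nexus_a = render_vpc(domain_id, 10, mgmt0_a, mgmt0_b, vpc_ids)
    nexus_b = render_vpc(domain_id, 20, mgmt0_b, mgmt0_a, vpc_ids)
    return nexus_a, nexus_b


if __name__ == "__main__":
    nexus_a, nexus_b = render_vpc_pair(23, "192.0.2.11", "192.0.2.12")
    print("\n".join(nexus_a))
```

The lower role priority value makes Nexus A the preferred vPC primary.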

Configure FCoE Fabric

These steps provide details for configuring the Fibre Channel over Ethernet (FCoE) fabric.

Nexus 5548 A

1.

Type interface vfc11.

2.

Type bind interface po11.

3.

Type no shutdown.

4.

Type exit.

5.

Type interface vfc12.

6.

Type bind interface po12.

7.

Type no shutdown.

8.

Type exit.

9.

Type interface vfc15.

10.

Type bind interface po15.

11.

Type no shutdown.

12.

Type exit.

13.

Type vsan database.

14.

Type vsan <VSAN A ID>.

15.

Type vsan <VSAN A ID> name Fabric_A.

16.

Type vsan <VSAN A ID> interface vfc11.

17.

Type vsan <VSAN A ID> interface vfc12.

18.

Type exit.

19.

Type vlan <<Fabric A FCoE VLAN ID>>.

20.

Type fcoe vsan <VSAN A ID>.

21.

Type exit.

22.

Type copy run start.

Nexus 5548 B

1.

Type interface vfc11.

2.

Type bind interface po11.

3.

Type no shutdown.

4.

Type exit.

5.

Type interface vfc12.

6.

Type bind interface po12.

7.

Type no shutdown.

8.

Type exit.

9.

Type interface vfc16.

10.

Type bind interface po16.

11.

Type no shutdown.

12.

Type exit.

13.

Type vsan database.

14.

Type vsan <VSAN B ID>.

15.

Type vsan <VSAN B ID> name Fabric_B.

16.

Type vsan <VSAN B ID> interface vfc11.

17.

Type vsan <VSAN B ID> interface vfc12.

18.

Type exit.

19.

Type vlan <<Fabric B FCoE VLAN ID>>.

20.

Type fcoe vsan <VSAN B ID>.

21.

Type exit.

22.

Type copy run start.
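Each fabric's FCoE configuration ties three things together: vfc interfaces bound to port channels, VSAN membership for those vfc interfaces, and a VLAN mapped to the VSAN with fcoe vsan. The Python sketch below renders the Nexus 5548 B sequence from those inputs. The helper name and the numeric VSAN and VLAN ID 102 are hypothetical stand-ins for <VSAN B ID> and <<Fabric B FCoE VLAN ID>>.

```python
def render_fcoe(vsan_id, vsan_name, fcoe_vlan, bindings, vsan_members):
    """Render NX-OS FCoE commands for one fabric.

    bindings maps a vfc number to the port-channel number it binds to;
    vsan_members lists the vfc numbers placed in the VSAN.
    """
    lines = []
    for vfc, po in bindings.items():
        lines += [f"interface vfc{vfc}", f"  bind interface po{po}", "  no shutdown", "exit"]
    lines += ["vsan database", f"  vsan {vsan_id}", f"  vsan {vsan_id} name {vsan_name}"]
    lines += [f"  vsan {vsan_id} interface vfc{vfc}" for vfc in vsan_members]
    # Map the FCoE VLAN to the VSAN so FCoE traffic on that VLAN joins the fabric.
    lines += ["exit", f"vlan {fcoe_vlan}", f"  fcoe vsan {vsan_id}", "exit", "copy run start"]
    return lines


if __name__ == "__main__":
    cmds = render_fcoe(102, "Fabric_B", 102, {11: 11, 12: 12, 16: 16}, [11, 12])
    print("\n".join(cmds))
```

As in the steps above, vfc16 is bound to Po16 but is not added to the VSAN database in this example.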

Link into Existing Network Infrastructure

Depending on the available network infrastructure, several methods and features can be used to uplink the FlexPod environment. If an existing Cisco Nexus environment is present, NetApp recommends using vPCs to uplink the Cisco Nexus 5548 switches included in the FlexPod environment into the infrastructure. The previously described procedures can be used to create an uplink vPC to the existing environment.

NetApp FAS3240A Deployment Procedure - Part 1

Complete the Configuration Worksheet

Before running the setup script, complete the Configuration worksheet from the product manual.

Assign Controller Disk Ownership and initialize storage

These steps provide details for assigning disk ownership and for initializing and verifying the disks.

Note

Typical best practices should be followed when determining the number of disks to assign to each controller head. You may choose to assign a disproportionate number of disks to a given storage controller in an HA pair, depending on the intended workload.

In this reference architecture, half the total number of disks in the environment is assigned to one controller and the remainder to its partner.
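The even split described above is simple arithmetic. A minimal Python sketch that turns a total disk count into the disk assign -n value for each controller is shown below. The function name is hypothetical, and giving any odd disk to Controller A is an arbitrary assumption of this sketch.

```python
def disk_assign_commands(total_disks):
    """Split disks between the two controllers of the HA pair (A gets any odd disk)."""
    controller_a = (total_disks + 1) // 2
    controller_b = total_disks - controller_a
    return {
        "Controller A": f"disk assign -n {controller_a}",
        "Controller B": f"disk assign -n {controller_b}",
    }


if __name__ == "__main__":
    for name, command in disk_assign_commands(48).items():
        print(f"{name}: {command}")
```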

Controller A

1.

Connect to the storage system console port. You should see a LOADER-A prompt. However, if the storage system is in a reboot loop, press Ctrl-C to exit the autoboot loop when you see this message:

Starting AUTOBOOT press Ctrl-C to abort...

2.

If the system is at the LOADER prompt, enter the following command to boot Data ONTAP:

autoboot

3.

During system boot, press Ctrl-C when prompted for the Boot Menu:

Press Ctrl-C for Boot Menu...

Note

If Data ONTAP 8.1.2 is not the version of software being booted, complete the following steps to install new software. If 8.1.2 is the version being booted, skip to step 14 (Maintenance mode boot).

4.

To install new software, first select option 7.

7

5.

Type y indicating yes to perform a nondisruptive upgrade.

y

6.

Select e0M for the network port you want to use for the download.

e0M

7.

Type y indicating yes to reboot now.

y

8.

Enter the IP address, netmask, and default gateway for e0M in their respective places.

<<var_controller1_e0m_ip>> <<var_controller1_mask>> <<var_controller1_mgmt_gateway>>

9.

Enter the URL where the software can be found.

Note

The web server must be pingable from the storage controller.

<<var_url_boot_software>>

10.

Press Enter for the user name, indicating no user name.

Enter

11.

Type y indicating yes to set the newly installed software as the default to be used for subsequent reboots.

y

12.

Type y indicating yes to reboot the node.

y

13.

When you see "Press Ctrl-C for Boot Menu", press:

Ctrl-C

14.

To enter Maintenance mode boot, select option 5.

5

15.

When you see the question "Continue to Boot?", type y for yes.

y

16.

To verify the HA status of your environment, enter:

ha-config show

Note

If either component is not in HA mode, use the ha-config modify command to put the components in HA mode.

17.

To see how many disks are unowned, enter:

disk show -a

Note

No disks should be owned in this list.

18.

Assign disks.

disk assign -n <<var_#_of_disks>>

Note

This reference architecture allocates half the disks to each controller. However, workload design could dictate different percentages.

19.

Halt the controller to return to the LOADER prompt.

halt

20.

At the LOADER-A prompt, enter:

autoboot

21.

Press Ctrl-C for the Boot Menu when prompted.

Ctrl-C

22.

Select option 4 for Clean configuration and initialize all disks.

4

23.

Enter y indicating yes to zero disks, reset config, and install a new file system.

y

24.

Type y indicating yes to erase all the data on the disks.

y

Note

The initialization and creation of the root volume can take 75 minutes or more to complete, depending on the number of disks attached. When initialization is complete, the storage system reboots. You can continue with the Controller B configuration while the disks for Controller A are zeroing.

Controller B

1.

Connect to the storage system console port. You should see a LOADER-B prompt. However, if the storage system is in a reboot loop, press Ctrl-C to exit the autoboot loop when you see this message:

Starting AUTOBOOT press Ctrl-C to abort...

2.

If the system is at the LOADER prompt, enter the following command to boot Data ONTAP:

autoboot

3.

During system boot, press Ctrl-C when prompted for the Boot Menu:

Press Ctrl-C for Boot Menu...

Note

If Data ONTAP 8.1.2 is not the version of software being booted, complete the following steps to install new software. If 8.1.2 is the version being booted, skip to step 14 (Maintenance mode boot).

4.

To install new software, first select option 7.

7

5.

Type y indicating yes to perform a nondisruptive upgrade.

y

6.

Select e0M for the network port you want to use for the download.

e0M

7.

Type y indicating yes to reboot now.

y

8.

Enter the IP address, netmask, and default gateway for e0M in their respective places.

<<var_controller2_e0m_ip>> <<var_controller2_mask>> <<var_controller2_mgmt_gateway>>

9.

Enter the URL where the software can be found.

Note

The web server must be pingable from the storage controller.

<<var_url_boot_software>>

10.

Press Enter for the user name, indicating no user name.

Enter

11.

Type y indicating yes to set the newly installed software as the default to be used for subsequent reboots.

y

12.

Type y indicating yes to reboot the node.

y

13.

When you see "Press Ctrl-C for Boot Menu", press:

Ctrl-C

14.

To enter Maintenance mode boot, select option 5.

5

15.

If you see the question "Continue to Boot?", type y for yes.

y

16.

To verify the HA status of your environment, enter:

ha-config show

Note

If either component is not in HA mode, use the ha-config modify command to put the components in HA mode.

17.

To see how many disks are unowned, enter:

disk show -a

Note

The remaining disks should be shown.

18.

Assign disks by entering:

disk assign -n <<var_#_of_disks>>

Note

This reference architecture allocates half the disks to each controller. However, workload design could dictate different percentages.

19.

Halt the controller to return to the LOADER prompt.

halt

20.

At the LOADER-B prompt, enter:

autoboot

21.

Press Ctrl-C for the Boot Menu when prompted.

Ctrl-C

22.

Select option 4 for a Clean configuration and initialize all disks.

4

23.

Type y indicating yes to zero disks, reset config, and install a new file system.

y

24.

Type y indicating yes to erase all the data on the disks.

y

Note

The initialization and creation of the root volume can take 75 minutes or more to complete, depending on the number of disks attached. When initialization is complete, the storage system reboots.

Run the Setup Process

When Data ONTAP is installed on your new storage system, the following files are not populated:

•

/etc/rc

•

/etc/exports

•

/etc/hosts

•

/etc/hosts.equiv

Controller A

1.

Enter the configuration values the first time you power on the new system. The configuration values populate these files and configure the installed functionality of the system.

2.

Enter the following information:

Please enter the new hostname []: <<var_controller1>>
Do you want to enable IPv6? [n]: Enter
Do you want to configure interface groups? [n]: Enter
Please enter the IP address for Network Interface e0a []: Enter

Note

Press Enter to accept the blank IP address.

Should interface e0a take over a partner IP address during failover? [n]: Enter
Please enter the IP address for the Network Interface e0b []: Enter
Should interface e0b take over a partner IP address during failover? [n]: Enter
Please enter the IP address for the Network Interface e1a []: Enter
Should interface e1a take over a partner IP address during failover? [n]: Enter
Please enter the IP address for the Network Interface e1b []: Enter
Should interface e1b take over a partner IP address during failover? [n]: Enter
Please enter the IP address for Network Interface e0M []: <<var_controller1_e0m_ip>>
Please enter the netmask for the Network Interface e0M [255.255.255.0]: <<var_controller1_mask>>
Should interface e0M take over a partner IP address during failover? [n]: y
Please enter the IPv4 address or interface name to be taken over by e0M []: e0M
Please enter flow control for e0M {none, receive, send, full} [full]: Enter

3.

Enter the following information:

Please enter the name or IP address of the IPv4 default gateway: <<var_controller1_mgmt_gateway>>
The administration host is given root access to the storage system's /etc files for system administration. To allow /etc root access to all NFS clients, enter RETURN below.
Please enter the name or IP address for administrative host: <<var_adminhost_ip>>
Please enter timezone [GMT]: <<var_timezone>>

Note

Example time zone: America/New_York.

Where is the filer located? <<var_location>>
Enter the root directory for HTTP files [home/http]: Enter
Do you want to run DNS resolver? [n]: y
Please enter DNS domain name []: <<var_dns_domain_name>>
Please enter the IP address for first nameserver []: <<var_nameserver_ip>>
Do you want another nameserver? [n]:

Note

Optionally enter up to three name server IP addresses.

Do you want to run NIS client? [n]: Enter
Press the Return key to continue through the AutoSupport message.
Would you like to configure SP LAN interface [y]: Enter
Would you like to enable DHCP on the SP LAN interface [y]: n
Please enter the IP address for the SP: <<var_sp_ip>>
Please enter the netmask for the SP []: <<var_sp_mask>>
Please enter the IP address for the SP gateway: <<var_sp_gateway>>
Please enter the name or IP address of the mail host [mailhost]: <<var_mailhost>>
Please enter the IP address for <<var_mailhost>> []: <<var_mailhost_ip>>
New password: <<var_password>>
Retype new password: <<var_password>>

4.

Enter the admin password to log in to Controller A.

Controller B

1.

Enter the configuration values the first time you power on the new system. The configuration values populate these files and configure the installed functionality of the system.

2.

Enter the following information:

Please enter the new hostname []: <<var_controller2>>
Do you want to enable IPv6? [n]: Enter
Do you want to configure interface groups? [n]: Enter
Please enter the IP address for Network Interface e0a []: Enter

Note

Press Enter to accept the blank IP address.

Should interface e0a take over a partner IP address during failover? [n]: Enter
Please enter the IP address for the Network Interface e0b []: Enter
Should interface e0b take over a partner IP address during failover? [n]: Enter
Please enter the IP address for the Network Interface e1a []: Enter
Should interface e1a take over a partner IP address during failover? [n]: Enter
Please enter the IP address for the Network Interface e1b []: Enter
Should interface e1b take over a partner IP address during failover? [n]: Enter
Please enter the IP address for Network Interface e0M []: <<var_controller2_e0m_ip>>
Please enter the netmask for the Network Interface e0M [255.255.255.0]: <<var_controller2_mask>>
Should interface e0M take over a partner IP address during failover? [n]: y
Please enter the IPv4 address or interface name to be taken over by e0M []: e0M
Please enter flow control for e0M {none, receive, send, full} [full]: Enter

3.

Enter the following information:

Please enter the name or IP address of the IPv4 default gateway: <<var_controller2_mgmt_gateway>>
The administration host is given root access to the storage system's /etc files for system administration. To allow /etc root access to all NFS clients, enter RETURN below.
Please enter the name or IP address for administrative host: <<var_adminhost_ip>>
Please enter timezone [GMT]: <<var_timezone>>

Note

Example time zone: America/New_York.

Where is the filer located? <<var_location>>
Enter the root directory for HTTP files [home/http]: Enter
Do you want to run DNS resolver? [n]: y
Please enter DNS domain name []: <<var_dns_domain_name>>
Please enter the IP address for first nameserver []: <<var_nameserver_ip>>
Do you want another nameserver? [n]:

Note

Optionally enter up to three name server IP addresses.

Do you want to run NIS client? [n]: Enter
Press the Return key to continue through the AutoSupport message.
Would you like to configure SP LAN interface [y]: Enter
Would you like to enable DHCP on the SP LAN interface [y]: n
Please enter the IP address for the SP: <<var_sp_ip>>
Please enter the netmask for the SP []: <<var_sp_mask>>
Please enter the IP address for the SP gateway: <<var_sp_gateway>>
Please enter the name or IP address of the mail host [mailhost]: <<var_mailhost>>
Please enter the IP address for <<var_mailhost>> []: <<var_mailhost_ip>>
New password: <<var_admin_passwd>>
Retype new password: <<var_admin_passwd>>

4.

Enter the admin password to log in to Controller B.

Upgrade the Service Processor on Each Node to the Latest Release

With Data ONTAP 8.1.2, you must upgrade to the latest Service Processor (SP) firmware to take advantage of the latest updates available for the remote management device.

1.

Using a web browser, go to http://support.netapp.com/NOW/cgi-bin/fw.

2.

Navigate to the Service Processor Image for installation from the Data ONTAP prompt page for your storage platform.

3.

Proceed to the Download page for the latest release of the SP Firmware for your storage platform.

4.

Follow the instructions on this page to update the SPs on both controllers. You must download the .zip file to a web server that is reachable from the management interfaces of the controllers.

64-Bit Aggregates

Note

A 64-bit aggregate containing the root volume is created during the Data ONTAP setup process. To create additional 64-bit aggregates, determine the aggregate name, the node on which it will be created, and how many disks it should contain. Calculate the RAID group size to allow for roughly balanced (same size) RAID groups of 12 through 20 disks (for SAS disks) within the aggregate. For example, if 52 disks are assigned to the aggregate, select a RAID group size of 18, which yields two 18-disk RAID groups and one 16-disk RAID group. Remember that the default RAID group size is 16 disks, and that the larger the RAID group size, the longer the disk rebuild time in case of a failure.
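The RAID group sizing rule in the note above can be expressed as a short calculation: use the fewest RAID groups that keep each group at or below 20 disks, then size the groups as evenly as possible. The following Python sketch implements that reading of the rule for SAS disks and reproduces the 52-disk example. The function names are hypothetical, and the sketch does not reserve spare disks, so exclude spares from the count first.

```python
import math


def raid_group_size(num_disks, min_size=12, max_size=20):
    """Pick a RAID group size that gives roughly balanced groups of min_size..max_size disks."""
    if num_disks < min_size:
        raise ValueError(f"{num_disks} disks is fewer than one {min_size}-disk group")
    groups = math.ceil(num_disks / max_size)  # fewest groups that respect max_size
    size = math.ceil(num_disks / groups)      # spread the disks as evenly as possible
    if size < min_size:
        raise ValueError(f"{num_disks} disks cannot form balanced {min_size}-{max_size} disk groups")
    return size


def raid_group_layout(num_disks, size):
    """Groups are filled to `size`; the last group holds the remainder."""
    full, remainder = divmod(num_disks, size)
    return [size] * full + ([remainder] if remainder else [])


if __name__ == "__main__":
    disks = 52
    size = raid_group_size(disks)
    print(raid_group_layout(disks, size))                # [18, 18, 16]
    print(f"aggr create aggr1 -B 64 -r {size} {disks}")  # aggr create aggr1 -B 64 -r 18 52
```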

Controller A

1.

Execute the following command to create a new aggregate:

aggr create aggr1 -B 64 -r <<var_raidsize>> <<var_num_disks>>

Note

Leave at least one disk (select the largest disk) in the configuration as a spare. A best practice is to have at least one spare for each disk type and size.

Controller B

1.

Execute the following command to create a new aggregate:

aggr create aggr1 -B 64 -r <<var_raidsize>> <<var_num_disks>>

Note

Leave at least one disk (select the largest disk) in the configuration as a spare. A best practice is to have at least one spare for each disk type and size.

Flash Cache

Controller A and Controller B

1.

Execute the following commands to enable Flash Cache:

options flexscale.enable on
options flexscale.lopri_blocks off
options flexscale.normal_data_blocks on

Note

For directions on how to configure Flash Cache in metadata mode or low-priority data caching mode, refer to TR-3832: Flash Cache and PAM Best Practices Guide. Before customizing the settings, determine whether the custom settings are required or whether the default settings are sufficient.

IFGRP LACP

Since this type of interface group requires two or more Ethernet interfaces and a switch that supports Link Aggregation Control Protocol (LACP), make sure that the switch is configured properly.

Controller A and Controller B

1.

Run the following command on the command line and also add it to the /etc/rc file, so it is activated upon boot:

ifgrp create lacp ifgrp0 -b ip e1a e1b
wrfile -a /etc/rc "ifgrp create lacp ifgrp0 -b ip e1a e1b"

Note

All interfaces must be in down status before being added to an interface group.
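Commands typed at the Data ONTAP 7-Mode command line take effect immediately, but only the commands in /etc/rc are replayed at boot, which is why each ifgrp, vlan, and ifconfig command in this section is also appended to /etc/rc with wrfile -a. Generating both lines from a single string keeps the live command and the persisted copy identical. The following Python helper is a hypothetical sketch of that idea; it only builds the text and does not connect to a controller.

```python
def with_rc_persistence(command):
    """Return [live command, wrfile line that appends the same command to /etc/rc]."""
    if '"' in command:
        # wrfile receives the command wrapped in double quotes, so it must not contain any.
        raise ValueError("command must not contain double quotes")
    return [command, f'wrfile -a /etc/rc "{command}"']


if __name__ == "__main__":
    for line in with_rc_persistence("ifgrp create lacp ifgrp0 -b ip e1a e1b"):
        print(line)
```

Passing each vlan create and ifconfig command through the same helper produces matching pairs of lines.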

VLAN

Controller A and Controller B

1.

Run the following commands to create the VLAN interfaces for iSCSI data traffic and add the same command to the /etc/rc file.

vlan create ifgrp0 <<var_iscsi_a_vlan_id>> <<var_iscsi_b_vlan_id>>
wrfile -a /etc/rc "vlan create ifgrp0 <<var_iscsi_a_vlan_id>> <<var_iscsi_b_vlan_id>>"

IP Config

Controller A and Controller B

1.

Run the following commands to configure the iSCSI VLAN interfaces with an MTU of 9000 and add the same commands to the /etc/rc file.

ifconfig ifgrp0-<<var_iscsi_a_vlan_id>> <<var_iscsi_a_ip>> netmask <<var_iscsi_a_mask>> mtusize 9000 partner ifgrp0-<<var_iscsi_a_vlan_id>>
ifconfig ifgrp0-<<var_iscsi_b_vlan_id>> <<var_iscsi_b_ip>> netmask <<var_iscsi_b_mask>> mtusize 9000 partner ifgrp0-<<var_iscsi_b_vlan_id>>
wrfile -a /etc/rc "ifconfig ifgrp0-<<var_iscsi_a_vlan_id>> <<var_iscsi_a_ip>> netmask <<var_iscsi_a_mask>> mtusize 9000 partner ifgrp0-<<var_iscsi_a_vlan_id>>"
wrfile -a /etc/rc "ifconfig ifgrp0-<<var_iscsi_b_vlan_id>> <<var_iscsi_b_ip>> netmask <<var_iscsi_b_mask>> mtusize 9000 partner ifgrp0-<<var_iscsi_b_vlan_id>>"

Storage Controller Active-Active Configuration

Controller A and Controller B

To configure the two storage controllers as an active-active pair, complete the following steps:

1.

Enter the cluster license on both nodes.

license add <<var_cf_license>>

2.

Reboot both storage controllers.

reboot

3.

Log back in to both controllers.

Controller A

1.

Enable failover on Controller A, if it is not enabled already.

cf enable

NTP

The following commands configure and enable time synchronization on the storage controller. You need the name or IP address of either a publicly available NTP server or your company's standard NTP server.

Controller A and Controller B

1.

Run the following commands to configure and enable the NTP server:

date <<var_date>>

2.

Enter the current date in the format [[[[CC]yy]mm]dd]hhmm[.ss].

For example, date 201208311436 sets the date to August 31, 2012, at 14:36.

options timed.servers <<var_global_ntp_server_ip>>
options timed.enable on

Joining a Windows Domain (optional)

Use the following commands to join the NetApp controllers to the existing domain.

Controller A and Controller B

Add the controller to the domain by running cifs setup.

cifs setup
Do you want to make the system visible via WINS? [N] n
Choose (2) NTFS-only filer [2] 2
Enter the password for the root user []: <<var_root_password>>
Reenter the password: <<var_root_password>>
Would you like to change this name? [n]: Enter
Choose (1) Active Directory Domain Authentication: 1 Enter
Configure the DNS Resolver Service? [y]: y
What is the filer's DNS domain name? []: <<var_dnsdomain>>
What are the IPv4 addresses of your authoritative DNS servers? []: <<var_ip_DNSserver>>
What is the name of the Active Directory Domain Controller? : <<var_dnsdomain>>
Would you like to configure time services? [y]: [Enter]
Enter the time server host []: <<var_dnsdomain>>
Enter the name of the Windows user [administrator@<<var_fas3240_dnsdomain>>] [Enter]
Password for <<var_domainAccountUsed>>: <<password>>
Choose (1) Create the filer's machine account in the "computers" container: 1
Do you want to configure a <<var_ntap_hostname>>/administrator account [Y]: [Enter]
Password for the <<var_ntap_hostname>>/Administrator: <<var_password>> [Enter]
Would you like to specify a user or group that can administer CIFS [n]: [Enter]

iSCSI

Controller A and Controller B

1.

Add a license for iSCSI.

license add <<var_iscsi_license>>

2.

Start the iSCSI service.

iscsi start

FCP

Controller A and Controller B

1.

License FCP.

license add <<var_fc_license>>

2.

Start the FCP service.

fcp start

3.

Record the WWPN or FC port name for later use.

fcp show adapters

4.

If you are using FC instead of FCoE between the storage and the network, run the following command to display the current FC port configuration. If necessary, make ports 0c and 0d target ports in the next step.

fcadmin config

5.

Make an FC port into a target.

Note

Only FC ports that are configured as targets can be used to connect to initiator hosts on the SAN.

For example, make a port called <<var_fctarget01>> into a target port by running the following command:

fcadmin config -t target <<var_fctarget01>>

Note

If an initiator port is made into a target port, a reboot is required. NetApp recommends rebooting after completing the entire configuration because other configuration steps might also require a reboot.

Data ONTAP SecureAdmin

Secure API access to the storage controller must be configured.

Controller A

1.

Execute the following as a one-time command to generate the certificates used by the Web services for the API.

secureadmin setup ssl
SSL Setup has already been done before. Do you want to proceed? [no] y
Country Name (2 letter code) [US]: <<var_country_code>>
State or Province Name (full name) [California]: <<var_state>>
Locality Name (city, town, etc.) [Santa Clara]: <<var_city>>
Organization Name (company) [Your Company]: <<var_org>>
Organization Unit Name (division): <<var_unit>>
Common Name (fully qualified domain name) [<<var_controller1_fqdn>>]: Enter
Administrator email: <<var_admin_email>>
Days until expires [5475] : Enter
Key length (bits) [512] : <<var_key_length>>

Note

NetApp recommends a key length of 1024 bits.

After the initialization, the CSR is available in the following file:

/etc/keymgr/csr/secureadmin_tmp.pem

2.

Configure and enable SSL and HTTPS for API access using the following options.

options httpd.access none
options httpd.admin.enable off
options httpd.admin.ssl.enable on
options ssl.enable on

Controller B

1.

Execute the following as a one-time command to generate the certificates used by the Web services for the API.

secureadmin setup ssl
SSL Setup has already been done before. Do you want to proceed? [no] y
Country Name (2 letter code) [US]: <<var_country_code>>
State or Province Name (full name) [California]: <<var_state>>
Locality Name (city, town, etc.) [Santa Clara]: <<var_city>>
Organization Name (company) [Your Company]: <<var_org>>
Organization Unit Name (division): <<var_unit>>
Common Name (fully qualified domain name) [<<var_controller2_fqdn>>]: Enter
Administrator email: <<var_admin_email>>
Days until expires [5475] : Enter
Key length (bits) [512] : <<var_key_length>>

Note

NetApp recommends a key length of 1024 bits.

After the initialization, the CSR is available in the following file:

/etc/keymgr/csr/secureadmin_tmp.pem

2.

Configure and enable SSL and HTTPS for API access using the following options.

options httpd.access none
options httpd.admin.enable off
options httpd.admin.ssl.enable on
options ssl.enable on

Secure Shell

SSH must be configured and enabled.

Controller A and Controller B

1.

Execute the following one-time command to generate host keys.

secureadmin disable ssh
secureadmin setup -f -q ssh 768 512 1024

2.

Use the following options to configure and enable SSH.

options ssh.idle.timeout 60
options autologout.telnet.timeout 5

SNMP

Controller A and Controller B

1.

Run the following commands to configure SNMP basics, such as the location and contact information. When polled, this information displays as the sysLocation and sysContact variables in SNMP.

snmp contact "<<var_admin_email>>"
snmp location "<<var_location>>"
snmp init 1
options snmp.enable on

2.

Configure SNMP traps to be sent to remote hosts, such as a DFM server or another fault management system.

snmp traphost add <<var_oncommand_server_fqdn>>

SNMPv1

Controller A and Controller B

1.

Set the shared secret plain-text password, which is called a community.

snmp community delete all
snmp community add ro <<var_snmp_community>>

Note

Use the delete all command with caution. If community strings are used for other monitoring products, the delete all command will remove them.

SNMPv3

SNMPv3 requires a user to be defined and configured for authentication.

Controller A and Controller B

1.

Create a user called snmpv3user.

useradmin role add snmp_requests -a login-snmp
useradmin group add snmp_managers -r snmp_requests
useradmin user add snmpv3user -g snmp_managers
New Password: <<var_password>>
Retype new password: <<var_password>>

AutoSupport HTTPS

AutoSupport™ sends support summary information to NetApp through HTTPS.

Controller A and Controller B

1.

Execute the following command to configure AutoSupport.

options autosupport.noteto <<var_admin_email>>

Security Best Practices

Note

Apply the following commands according to local security policies.

Controller A and Controller B

1.

Run the following commands to enhance security on the storage controller:

options rsh.access none
options webdav.enable off
options security.passwd.rules.maximum 14
options security.passwd.rules.minimum.symbol 1
options security.passwd.lockout.numtries 6
options autologout.console.timeout 5

Install Remaining Required Licenses and Enable MultiStore

Controller A and Controller B

1.

Install the following licenses to enable SnapRestore® and FlexClone®, and then enable MultiStore.

license add <<var_snaprestore_license>>
license add <<var_flex_clone_license>>
options licensed_feature.multistore.enable on

Enable NDMP

Run the following command to enable NDMP.

Controller A and Controller B

options ndmpd.enable on

Add Infrastructure Volumes

Controller A

1.

Create FlexVol® volumes in aggr1 to host the UCS boot LUNs and the cluster quorum.

vol create ucs_boot -s none aggr1 500g
vol create hyperv_quorum -s none aggr1 10g

2.

Configure volume deduplication.

sis config -s auto /vol/ucs_boot
sis config -s auto /vol/hyperv_quorum
sis on /vol/ucs_boot
sis on /vol/hyperv_quorum
sis start -s /vol/ucs_boot
sis start -s /vol/hyperv_quorum

Controller B

1.

Create FlexVol volumes in aggr1: a 500GB volume to host the UCS boot LUNs and a 5TB volume to host the management infrastructure virtual machines.

vol create ucs_boot -s none aggr1 500g
vol create fabric_mgmt_csv -s none aggr1 5t

2.

Configure volume deduplication.

sis config -s auto /vol/fabric_mgmt_csv
sis config -s auto /vol/ucs_boot
sis on /vol/ucs_boot
sis on /vol/fabric_mgmt_csv
sis start -s /vol/ucs_boot
sis start -s /vol/fabric_mgmt_csv

Install SnapManager licenses

Controller A and Controller B

1.

Add a license for SnapManager® for Hyper-V.

license add <<var_snapmanager_hyperv_license>>

2.

Add a license for SnapDrive® for Windows.

license add <<var_snapdrive_windows_license>>

Cisco Unified Computing System Deployment Procedure

The following section provides a detailed procedure for configuring the Cisco Unified Computing System for use in a FlexPod environment. These steps should be followed precisely because a failure to do so could result in an improper configuration.

Perform Initial Setup of the Cisco UCS 6248 Fabric Interconnects

These steps provide details for initial setup of the Cisco UCS 6248 Fabric Interconnects.

Cisco UCS 6248 A

1.

Connect to the console port on the first Cisco UCS 6248 Fabric Interconnect.

2.

At the prompt to enter the configuration method, enter console to continue.

3.

If asked to either do a new setup or restore from backup, enter setup to continue.

4.

Enter y to continue to set up a new fabric interconnect.

5.

Enter y to enforce strong passwords.

6.

Enter the password for the admin user.

7.

Enter the same password again to confirm the password for the admin user.

8.

When asked if this fabric interconnect is part of a cluster, answer y to continue.

9.

Enter A for the switch fabric.

10.

Enter the cluster name as the system name.

11.

Enter the Mgmt0 IPv4 address.

12.

Enter the Mgmt0 IPv4 netmask.

13.

Enter the IPv4 address of the default gateway.

14.

Enter the cluster IPv4 address.

15.

To configure DNS, answer y.

16.

Enter the DNS IPv4 address.

17.

Answer y to set up the default domain name.

18.

Enter the default domain name.

19.

Review the settings that were printed to the console, and if they are correct, answer yes to save the configuration.

20.

Wait for the login prompt to make sure the configuration has been saved.

Cisco UCS 6248 B

1.

Connect to the console port on the second Cisco UCS 6248 Fabric Interconnect.

2.

When prompted to enter the configuration method, enter console to continue.

3.

The installer detects the presence of the partner fabric interconnect and adds this fabric interconnect to the cluster. Enter y to continue the installation.

4.

Enter the admin password for the first fabric interconnect.

5.

Enter the Mgmt0 IPv4 address.

6.

Answer yes to save the configuration.

7.

Wait for the login prompt to confirm that the configuration has been saved.
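The addresses entered in the two setup dialogs have to agree with one another. The following Python sketch is not part of the Cisco procedure; it is a minimal pre-check that assumes the Mgmt0 addresses of both fabric interconnects, the cluster address, and the default gateway all sit in one IPv4 management subnet. The function name `validate_fi_setup` and the 192.0.2.0/24 documentation addresses are hypothetical placeholders for the values on your worksheet.

```python
import ipaddress


def validate_fi_setup(mgmt0_a, mgmt0_b, cluster_ip, netmask, gateway):
    """Check the addresses entered in the fabric interconnect setup dialogs.

    Hypothetical helper: returns a list of problems; an empty list means the
    values are consistent with one shared IPv4 management subnet.
    """
    # Derive the management subnet from fabric A's Mgmt0 address and netmask.
    subnet = ipaddress.ip_interface(f"{mgmt0_a}/{netmask}").network
    addresses = {
        "Mgmt0 (A)": ipaddress.ip_address(mgmt0_a),
        "Mgmt0 (B)": ipaddress.ip_address(mgmt0_b),
        "cluster": ipaddress.ip_address(cluster_ip),
        "gateway": ipaddress.ip_address(gateway),
    }
    problems = []
    seen = {}
    for role, addr in addresses.items():
        # Every address must be a usable host address in the shared subnet.
        if addr not in subnet or addr in (subnet.network_address, subnet.broadcast_address):
            problems.append(f"{role} {addr} is not a usable host in {subnet}")
        # No two roles may share an address.
        if addr in seen:
            problems.append(f"{role} duplicates {seen[addr]} ({addr})")
        else:
            seen[addr] = role
    return problems


print(validate_fi_setup("192.0.2.11", "192.0.2.12", "192.0.2.10",
                        "255.255.255.0", "192.0.2.1"))  # prints []
```

Running the check before the console session catches a mistyped cluster address or a reused Mgmt0 address, which would otherwise surface only after both fabric interconnects are configured.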

Log in to Cisco UCS Manager

These steps provide details for logging in to the Cisco UCS environment.

1.

Open a Web browser and navigate to the Cisco UCS 6248 Fabric Interconnect cluster address.

2.

Select the Launch link to download the Cisco UCS Manager software.

3.

If prompted to accept security certificates, accept as necessary.

4.

When prompted, enter admin as the username, enter the administrative password, and click Login to log in to the Cisco UCS Manager software.

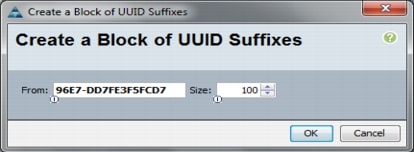

Add a Block of IP Addresses for KVM Access

These steps provide details for creating a block of KVM IP addresses for server access in the Cisco UCS environment.

1.

Select the Admin tab at the top of the left window.

2.

Select All > Communication Management.

3.

Right-click Management IP Pool.

4.

Select Create Block of IP Addresses.

5.

Enter the starting IP address of the block, the number of IP addresses needed, and the subnet mask and default gateway.

6.

Click OK to create the IP block.

7.

Click OK in the message box.
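UCS Manager defines the KVM block by a starting address and a size, so it is easy to run past the end of the subnet or onto an address already in use. The Python sketch below expands such a block and checks it; the helper name `kvm_block`, the example addresses, and the choice of which addresses to reserve (the gateway plus the fabric interconnect Mgmt0 and cluster addresses) are assumptions for illustration, as is sizing the block at one address per server.

```python
import ipaddress


def kvm_block(start, count, netmask, gateway, reserved=()):
    """Expand a KVM management IP block (start address plus size, as entered in
    UCS Manager) and check it against the subnet. Hypothetical helper."""
    subnet = ipaddress.ip_interface(f"{start}/{netmask}").network
    first = ipaddress.ip_address(start)
    # Addresses the block must not overlap, such as the gateway and the
    # fabric interconnect Mgmt0 and cluster addresses.
    taken = {ipaddress.ip_address(a) for a in (gateway, *reserved)}
    block = [first + offset for offset in range(count)]
    for addr in block:
        if addr not in subnet or addr in (subnet.network_address, subnet.broadcast_address):
            raise ValueError(f"{addr} is not a usable host address in {subnet}")
        if addr in taken:
            raise ValueError(f"{addr} overlaps the gateway or a reserved address")
    return block


pool = kvm_block("192.0.2.100", 8, "255.255.255.0", "192.0.2.1",
                 reserved=["192.0.2.10", "192.0.2.11", "192.0.2.12"])
print(pool[0], "-", pool[-1])  # prints 192.0.2.100 - 192.0.2.107
```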

Synchronize Cisco UCS to NTP

These steps provide details for synchronizing the Cisco UCS environment to the NTP server.

1.

Select the Admin tab at the top of the left window.

2.

Select All > Timezone Management.

3.

Right-click Timezone Management.

4.

In the right pane, select the appropriate timezone in the Timezone drop-down menu.

5.

Click Save Changes and then OK.

6.

Click Add NTP Server.

7.

Enter the NTP server IP address and click OK.

Configure Unified Ports

These steps provide details for converting unconfigured Ethernet ports into FC uplink ports in the Cisco UCS environment.

Note

Modification of the unified ports leads to a reboot of the fabric interconnect in question. This reboot can take up to 10 minutes.

1.

Navigate to the Equipment tab in the left pane.

2.

Select Fabric Interconnect A.

3.

In the right pane, select the General tab.

4.

Select Configure Unified Ports.

5.

Click Yes to launch the wizard.

6.

Use the slider tool and move one position to the left to configure the last two ports (31 and 32) as FC uplink ports.

7.

Ports 31 and 32 now display the "B" indicator, which shows that they have been reconfigured as FC uplink ports.

8.

Click Finish.

9.

Click OK.

10.

The Cisco UCS Manager GUI closes while the primary fabric interconnect reboots.

11.

Upon successful reboot, open a Web browser and navigate to the Cisco UCS 6248 Fabric Interconnect cluster address.

12.

When prompted, enter admin as the username, enter the administrative password, and click Login to log in to the Cisco UCS Manager software.

13.

Navigate to the Equipment tab in the left pane.

14.

Select Fabric Interconnect B.

15.

In the right pane, click the General tab.

16.

Select Configure Unified Ports.

17.

Click Yes to launch the wizard.

18.

Use the slider tool and move one position to the left to configure the last two ports (31 and 32) as FC uplink ports.

19.

Ports 31 and 32 now display the "B" indicator, which shows that they have been reconfigured as FC uplink ports.

20.

Click Finish.

21.

Click OK.

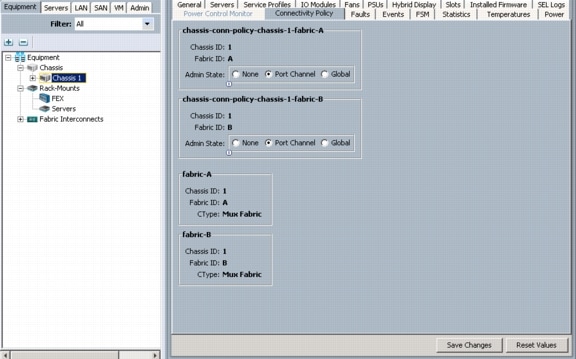

Chassis Discovery Policy

These steps provide details for modifying the chassis discovery policy, because the base architecture includes two uplinks from each fabric extender installed in the Cisco UCS chassis.

1.

Navigate to the Equipment tab in the left pane.

2.

In the right pane, click the Policies tab.

3.

Under Global Policies, change the Chassis Discovery Policy to 4-link or set it to match the number of uplink ports that are cabled between the chassis or fabric extenders (FEXes) and the fabric interconnects.

4.

Keep Link Grouping Preference set to None.

5.

Click Save Changes.
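Step 3 ties the policy to the number of links actually cabled between each fabric extender and its fabric interconnect. The Python sketch below expresses that rule; it assumes the policy offers 1-, 2-, 4-, and 8-link settings (this guide itself names only 4-link), and the helper `discovery_policy` is hypothetical. For a link count that is not one of those values, it picks the largest setting that does not exceed the cabled count.

```python
# Link counts assumed to be selectable in the Chassis Discovery Policy; this guide
# itself only names the "4-link" setting.
POLICY_LINKS = (1, 2, 4, 8)


def discovery_policy(links_per_fex):
    """Return the discovery policy matching the links cabled from each fabric
    extender (IOM) to its fabric interconnect. Hypothetical helper."""
    if links_per_fex < 1:
        raise ValueError("at least one link per fabric extender is required")
    # Use the largest policy value that does not exceed the cabled link count,
    # so the policy never asks for more links than are physically present.
    return f"{max(n for n in POLICY_LINKS if n <= links_per_fex)}-link"


for links in (2, 3, 4):
    print(links, "->", discovery_policy(links))
```

With four links cabled per fabric extender this returns "4-link", the value used in step 3.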

Enable Server and Uplink Ports

These steps provide details for enabling Fibre Channel, server, and uplink ports.

1.

Select the Equipment tab on the top left of the window.

2.

Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary) > Fixed Module.

3.

Expand the Ethernet Ports object.

4.

Select the ports that are connected to the chassis or to the Cisco 2232 FEX (four per FEX), right-click them, and select Configure as Server Port.

5.

Click Yes to confirm the server ports, and then click OK.

6.

The ports connected to the chassis or to the Cisco 2232 FEX are now configured as server ports.

7.

A prompt displays asking if this is what you want to do. Click Yes, then OK to continue.

8.

Select ports 19 and 20 that are connected to the Cisco Nexus 5548 switches, right-click them, and select Configure as Uplink Port.

9.

A prompt displays asking if this is what you want to do. Click Yes, then OK to continue.

10.

Select Equipment > Fabric Interconnects > Fabric Interconnect B (subordinate) > Fixed Module.

11.

Expand the Ethernet Ports object.

12.

Select the ports that are connected to the Cisco UCS chassis (four per chassis), right-click them, and select Configure as Server Port.

13.

A prompt displays asking if this is what you want to do. Click Yes, then OK to continue.

14.

Select ports 19 and 20 that are connected to the Cisco Nexus 5548 switches, right-click them, and select Configure as Uplink Port.

15.

A prompt displays asking if this is what you want to do. Click Yes, then OK to continue.

16.

At the prompt, click Yes to confirm the uplink ports, and then click OK.

17.

If using the 2208 or 2204 FEX or the external 2232 FEX, navigate to each device by selecting Equipment > Chassis > Rack-Mounts > FEX > <FEX #>.

18.

Select the Connectivity Policy tab in the right pane and change the administrative state of each fabric to Port Channel.

19.

Click Save Changes, click Yes, and then click OK.
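By the end of this procedure each fabric interconnect carries three port roles on its fixed module: server ports, Ethernet uplinks (ports 19 and 20), and FC uplinks (ports 31 and 32, from the unified port steps). The Python sketch below checks that a planned layout gives every port at most one role. The helper `check_port_plan` and the server-port numbers 1 through 4 are hypothetical placeholders; substitute the ports actually cabled to your chassis or FEX.

```python
# The Cisco UCS 6248UP fixed module has 32 unified ports.
FIXED_PORTS = range(1, 33)


def check_port_plan(plan):
    """Map each fixed-module port to exactly one role; raise on conflicts.
    Hypothetical helper for one fabric interconnect."""
    owner = {}
    for role, ports in plan.items():
        for port in ports:
            if port not in FIXED_PORTS:
                raise ValueError(f"port {port} ({role}) is not on the fixed module")
            if port in owner:
                raise ValueError(f"port {port} is assigned to both {owner[port]} and {role}")
            owner[port] = role
    return owner


# Server-port numbers are placeholders; ports 19-20 (uplinks) and 31-32 (FC)
# follow the steps in this guide.
fabric_a = {"server": [1, 2, 3, 4], "uplink": [19, 20], "fc-uplink": [31, 32]}
print(sorted(check_port_plan(fabric_a).items()))
```

The same plan applies to Fabric Interconnect B, since each fabric interconnect has its own set of 32 ports.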

Acknowledge the Cisco UCS Chassis

The connected chassis needs to be acknowledged before it can be managed by Cisco UCS Manager.

1.

Select Chassis 1 in the left pane.

2.

Click Acknowledge Chassis.

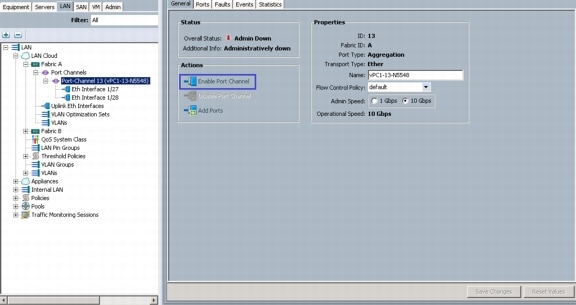

Create Uplink Port Channels to the Cisco Nexus 5548 Switches

These steps provide details for configuring the necessary Port Channels out of the Cisco UCS environment.
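The steps below build one uplink Port Channel per fabric: ID 13 on Fabric A and ID 14 on Fabric B, each with ports 19 and 20 of slot 1, named vPC-13-N5548 and vPC-14-N5548. The Python sketch that follows records that plan as data so it can be reviewed before clicking through the wizard. The `UplinkPortChannel` class and `validate` function are hypothetical and do not call any Cisco UCS Manager API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UplinkPortChannel:
    fabric: str     # "A" or "B"
    pc_id: int      # Port Channel ID entered in UCS Manager
    members: tuple  # (slot ID, port) pairs added to the Port Channel

    @property
    def name(self):
        # Naming pattern used in this guide, for example vPC-13-N5548.
        return f"vPC-{self.pc_id}-N5548"


# Each fabric interconnect has its own ports 1/19 and 1/20, so both Port
# Channels list the same slot/port pairs.
PLAN = (
    UplinkPortChannel("A", 13, ((1, 19), (1, 20))),
    UplinkPortChannel("B", 14, ((1, 19), (1, 20))),
)


def validate(plan):
    """Check for one uplink Port Channel per fabric with no repeated members."""
    if sorted(pc.fabric for pc in plan) != ["A", "B"]:
        raise ValueError("expected exactly one uplink Port Channel per fabric")
    for pc in plan:
        if len(set(pc.members)) != len(pc.members):
            raise ValueError(f"{pc.name} lists a member port more than once")


validate(PLAN)
for pc in PLAN:
    print(pc.fabric, pc.pc_id, pc.name, pc.members)
```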

1.

Select the LAN tab on the left of the window.

Note

Two Port Channels are created, one from fabric A to both Cisco Nexus 5548 switches and one from fabric B to both Cisco Nexus 5548 switches.

2.

Under LAN Cloud, expand the Fabric A tree.

3.

Right-click Port Channels.

4.

Select Create Port Channel.

5.

Enter 13 as the unique ID of the Port Channel.

6.

Enter vPC-13-N5548 as the name of the Port Channel.

7.

Click Next.

8.

Select ports 19 and 20 (both slot ID 1) to add to the Port Channel.

9.

Click >> to add the ports to the Port Channel.

10.

Click Finish to create the Port Channel.

11.

Check the Show navigator for Port-Channel 13 (Fabric A) checkbox.

12.

Click OK to continue.

13.

Under Actions, click Enable Port Channel.

14.

In the pop-up box, click Yes, then OK to enable.

15.

Wait until the overall status of the Port Channel is up.

16.

Click OK to close the Navigator.

17.

Under LAN Cloud, expand the Fabric B tree.

18.

Right-click Port Channels.

19.

Select Create Port Channel.

20.

Enter 14 as the unique ID of the Port Channel.

21.

Enter vPC-14-N5548 as the name of the Port Channel.

22.

Click Next.

23.

Select ports 19 and 20 (both slot ID 1) to add to the Port Channel.

24.

Click >> to add the ports to the Port Channel.

25.

Click Finish to create the Port Channel.

26.

Check the Show navigator for Port-Channel 14 (Fabric B) checkbox.

27.

Click OK to continue.

28.

Under Actions, select Enable Port Channel.

29.

In the pop-up box, click Yes, then OK to enable.

30.