FlexPod Datacenter for SAP Solution with Cisco ACI on Cisco UCS M5 Servers with SLES 12 SP3 and RHEL 7.4

Design and Deployment Guide for FlexPod Datacenter for SAP Solution with IP-Based Storage using NetApp AFF A-Series, Cisco UCS Manager 3.2, and Cisco Application Centric Infrastructure 3.2

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2018 Cisco Systems, Inc. All rights reserved.

Table of Contents

Cisco Unified Computing System

Cisco UCS 6332UP Fabric Interconnect

Cisco UCS 2304XP Fabric Extender

Cisco UCS B480 M5 Blade Server

Cisco UCS C480 M5 Rack Servers

Cisco UCS C240 M5 Rack Servers

Cisco I/O Adapters for Blade and Rack-Mount Servers

Cisco VIC 1340 Virtual Interface Card

Cisco VIC 1380 Virtual Interface Card

Cisco VIC 1385 Virtual Interface Card

Cisco Application Centric Infrastructure (ACI)

Cisco Application Policy Infrastructure Controller

NetApp All Flash FAS and ONTAP

FlexPod with Cisco ACI—Components

Validated System Hardware Components

Hardware and Software Components

SAP HANA Solution Implementations

SAP HANA System on a Single Host - Scale-Up

SAP HANA System on Multiple Hosts Scale-Out

Infrastructure Requirements for the SAP HANA Database

Network Configuration for Management Pod

Dual-Homed FEX Topology (Active/Active FEX Topology)

Cisco Nexus 9000 Series Switches - Network Initial Configuration Setup

Enable Appropriate Cisco Nexus 9000 Series Switches - Features and Settings

Create VLANs for Management Traffic

Configure Virtual Port Channel Domain

Configure Network Interfaces for the VPC Peer Links

Configure Network Interfaces to Cisco UCS C220 Management Server

Direct Connection of Management Pod to FlexPod Infrastructure

Uplink into Existing Network Infrastructure

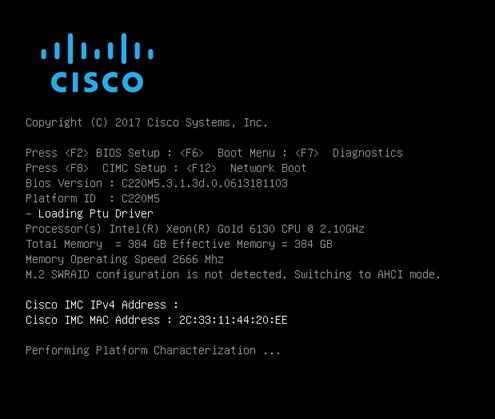

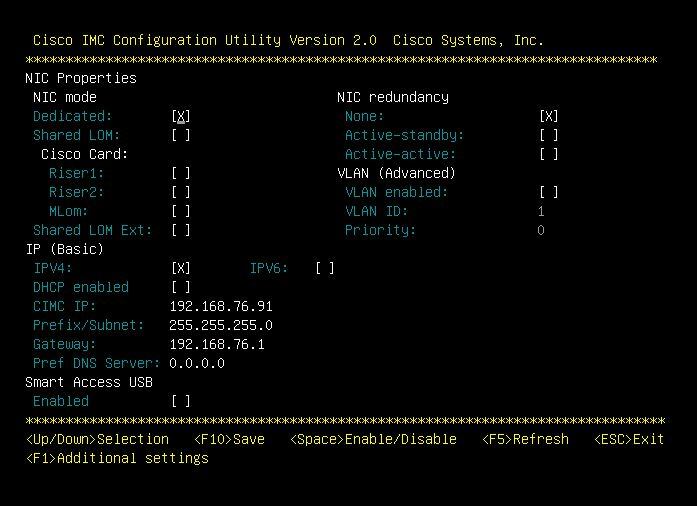

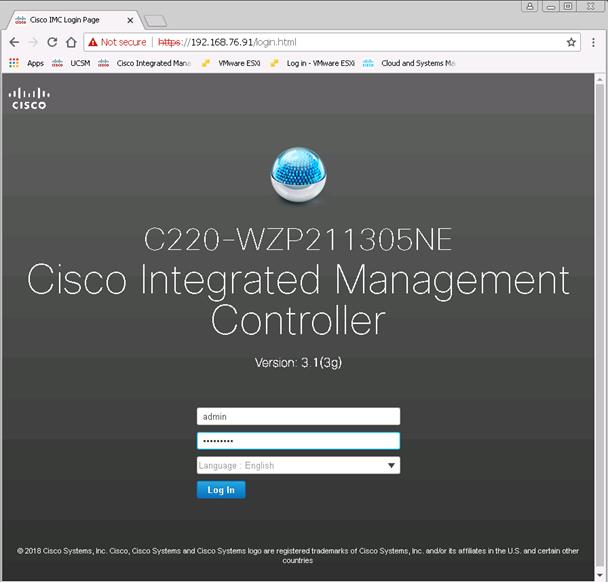

Management Server Installation

Set Up Management Networking for ESXi Hosts

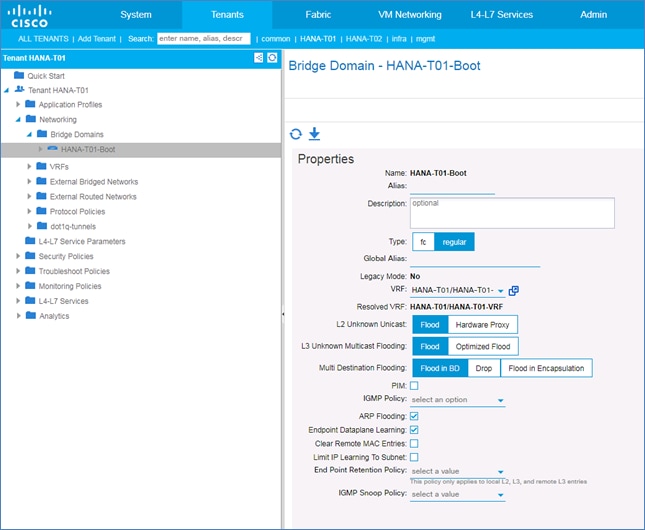

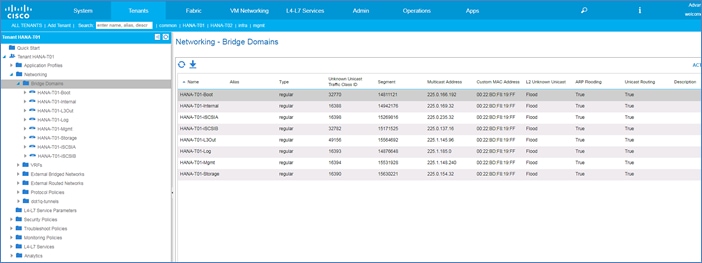

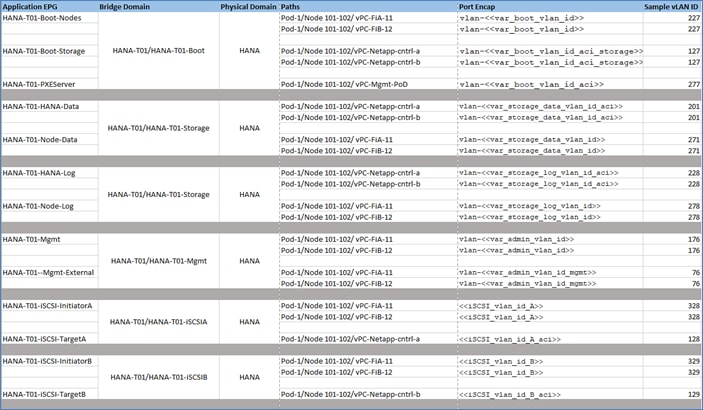

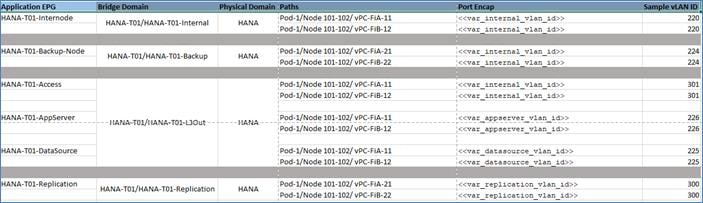

FlexPod Cisco ACI Network Configuration for SAP HANA

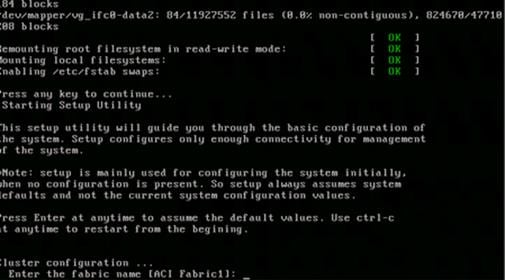

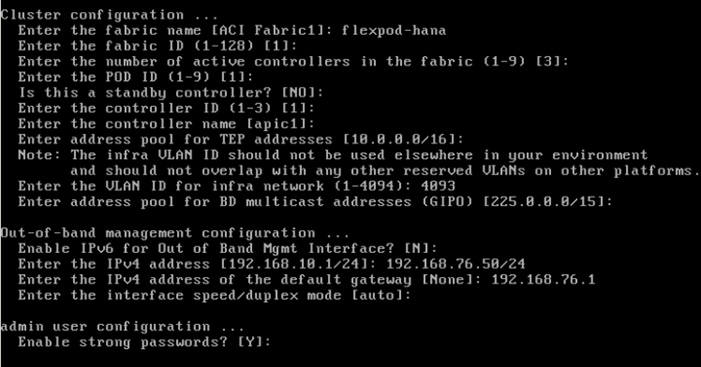

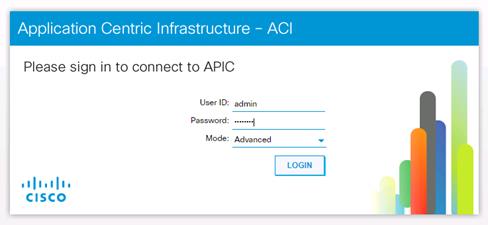

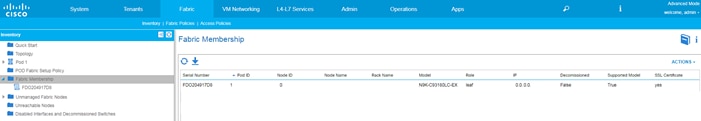

Cisco APIC Initial Configuration

Configure Cisco APIC Power State

Cisco APIC Initial Configuration Setup

Configure NTP for Cisco ACI Fabric

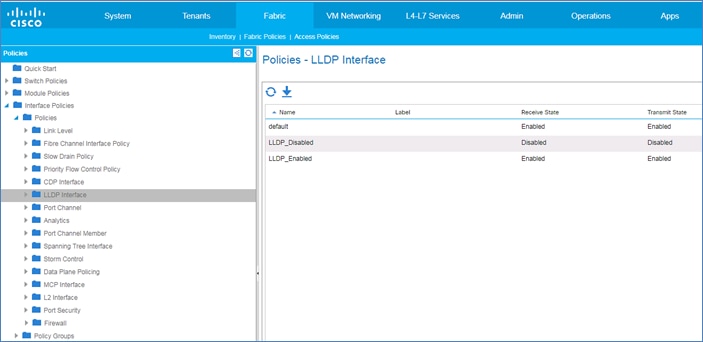

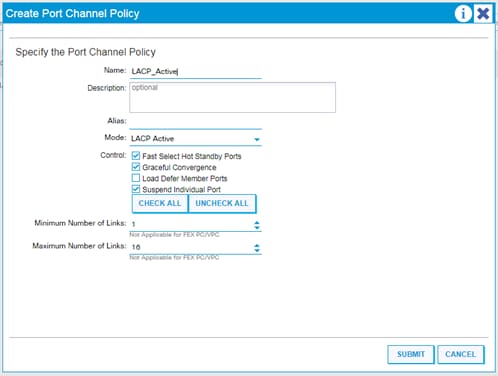

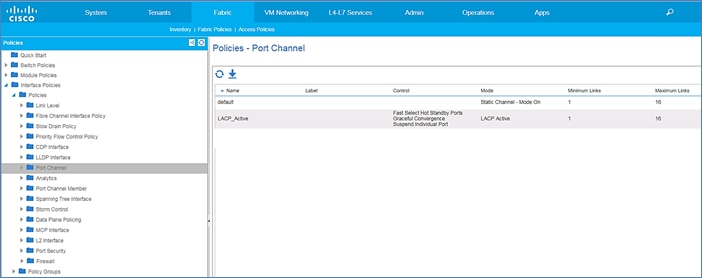

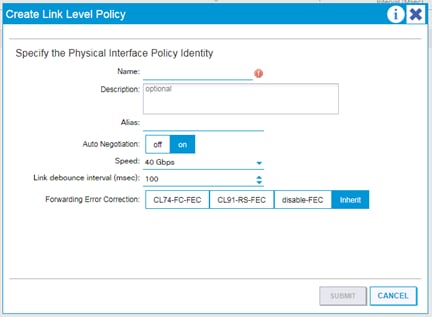

Attachable Access Entity Profile Configuration

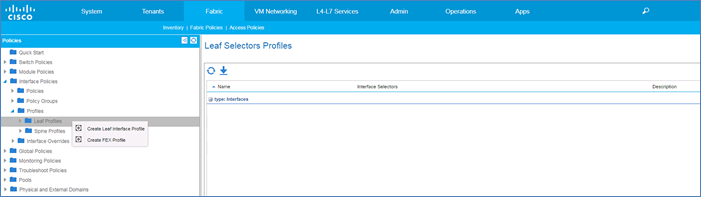

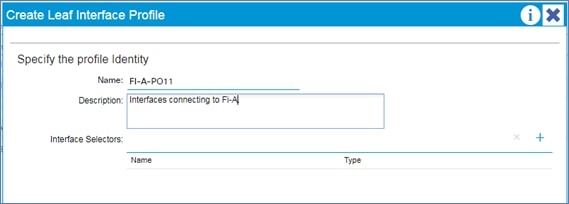

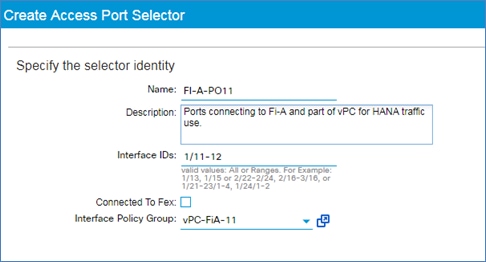

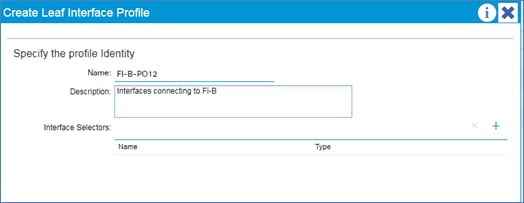

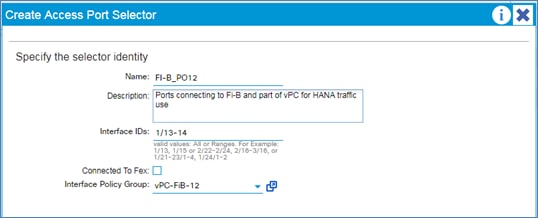

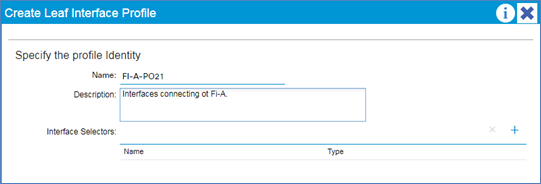

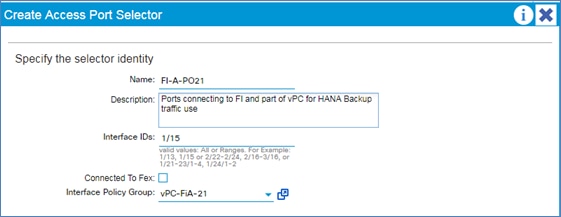

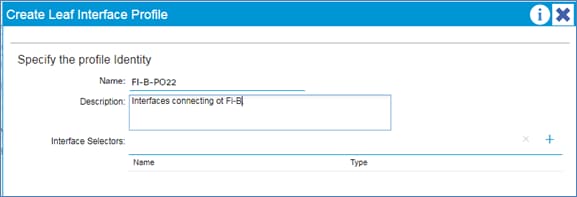

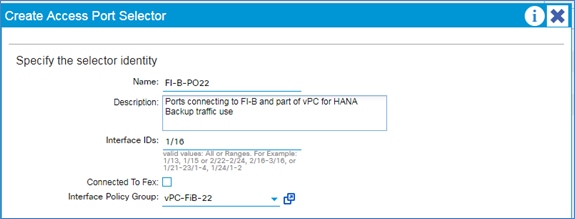

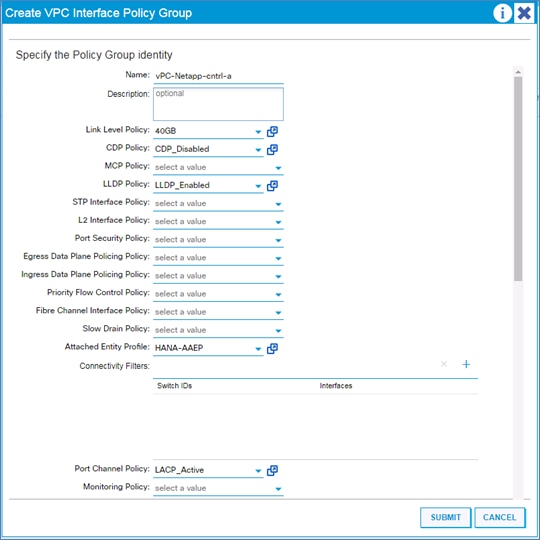

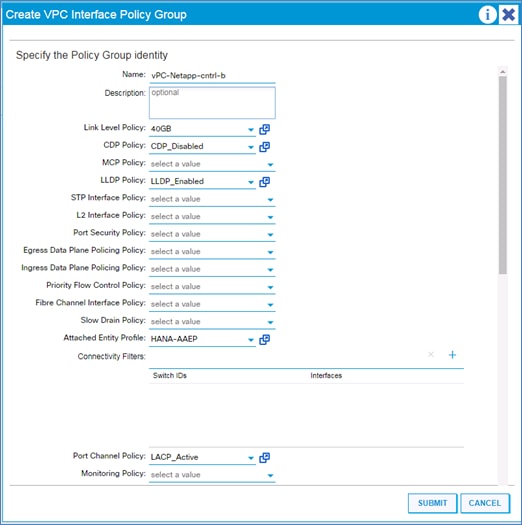

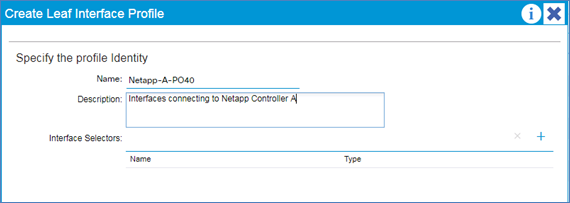

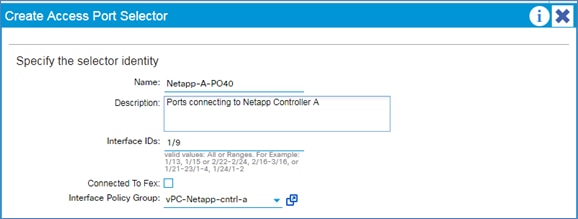

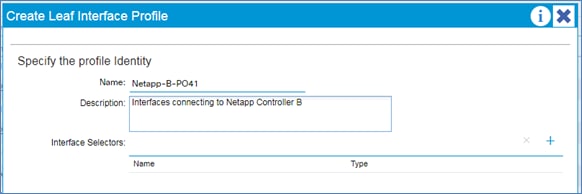

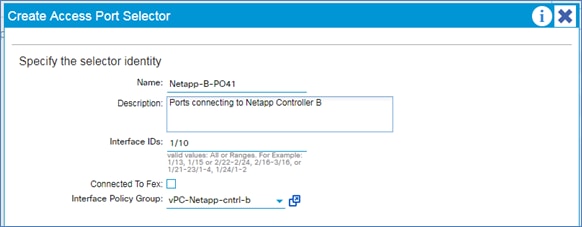

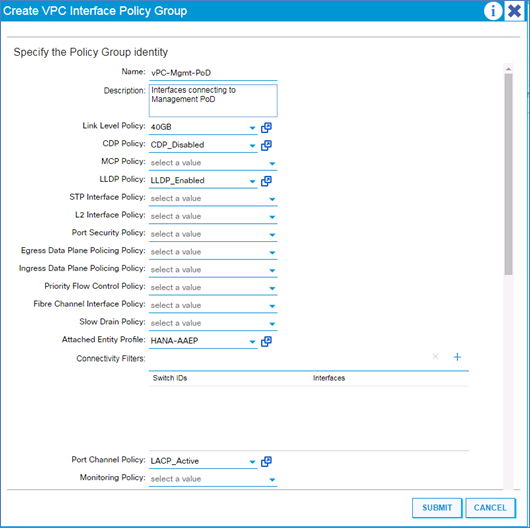

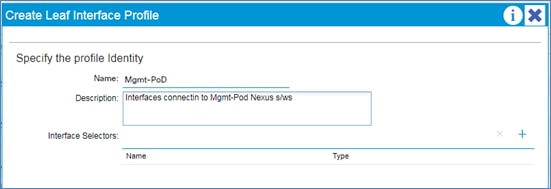

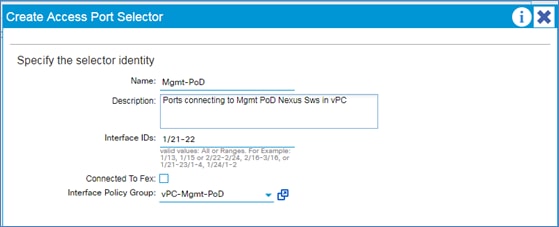

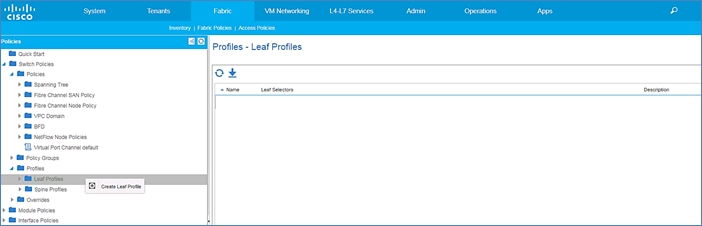

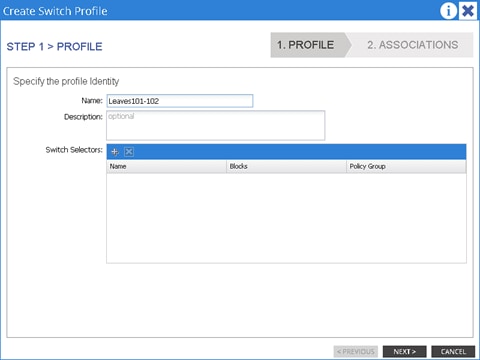

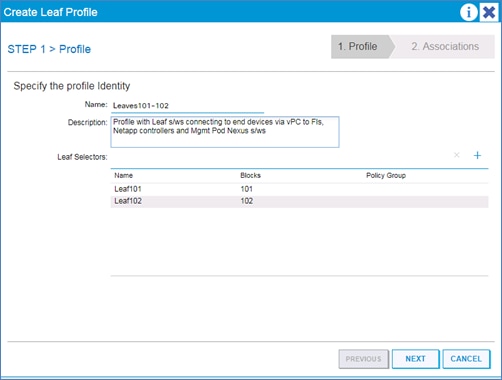

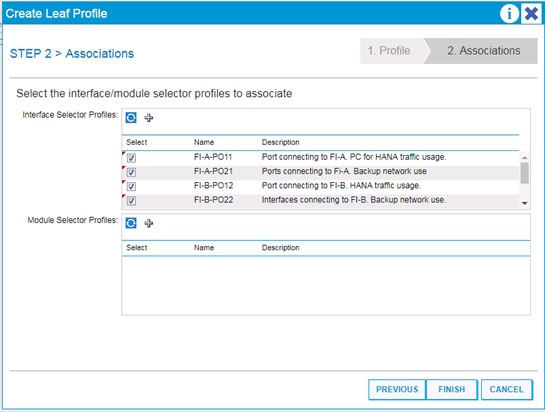

Interface Profile Configuration

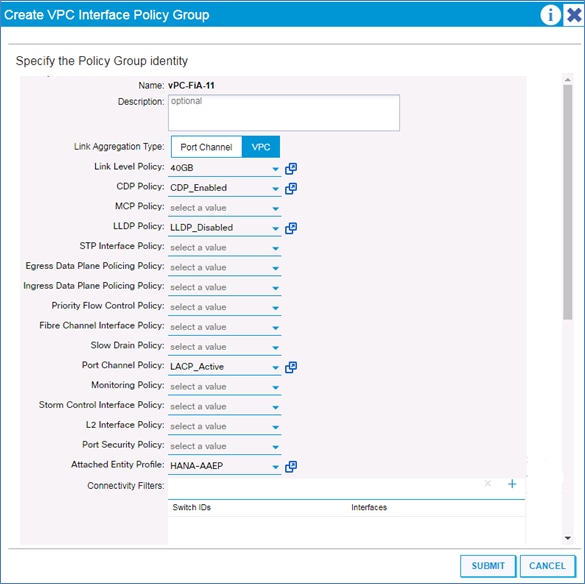

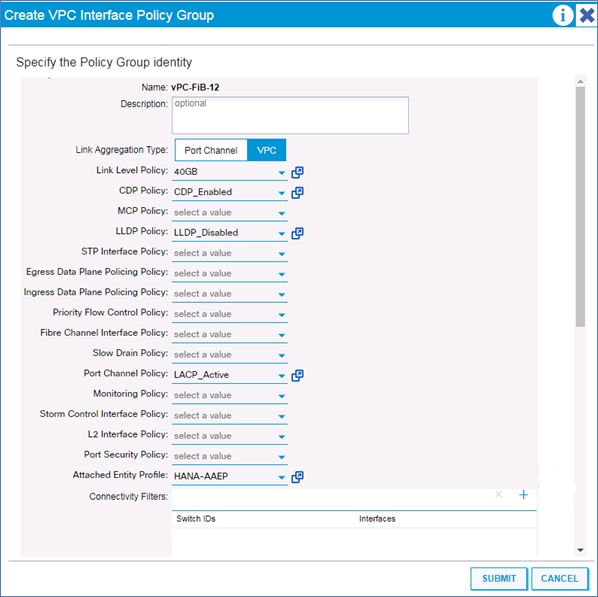

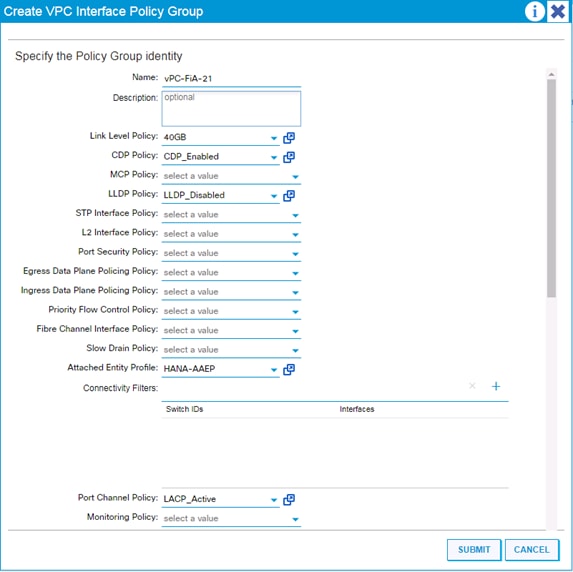

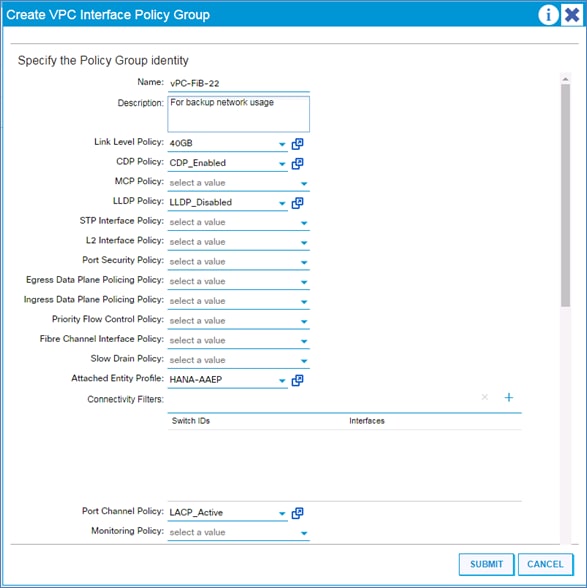

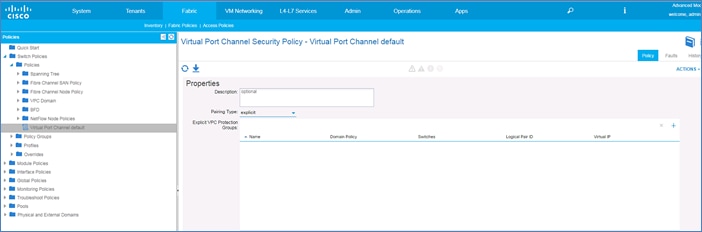

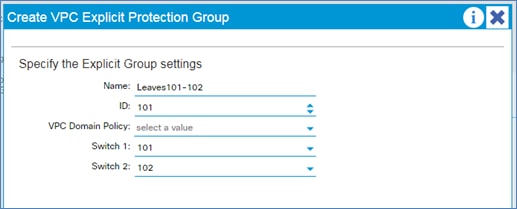

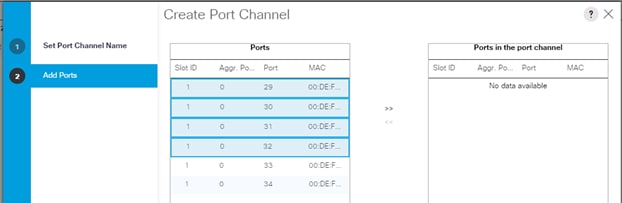

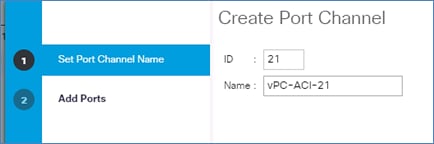

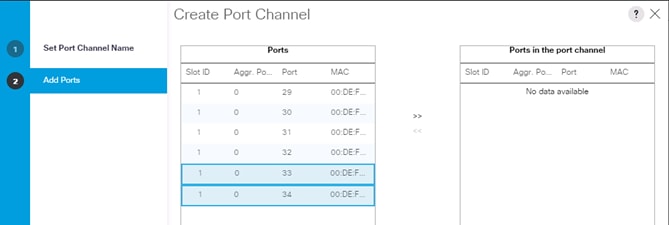

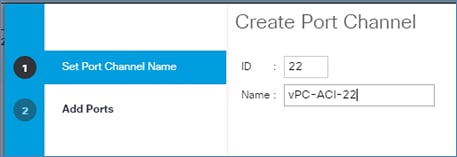

VPC Configuration for NetApp Storage

Cisco UCS Solution for SAP HANA TDI

Cisco UCS Server Configuration

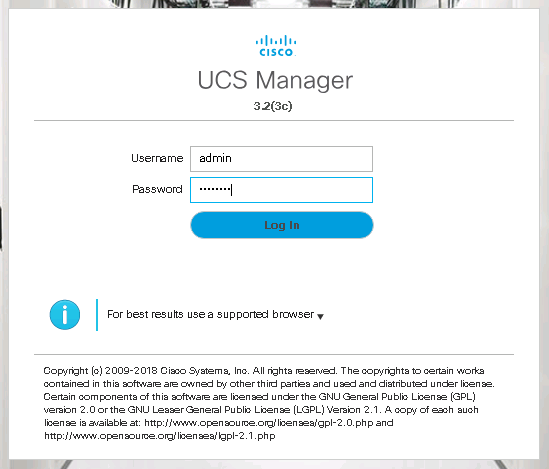

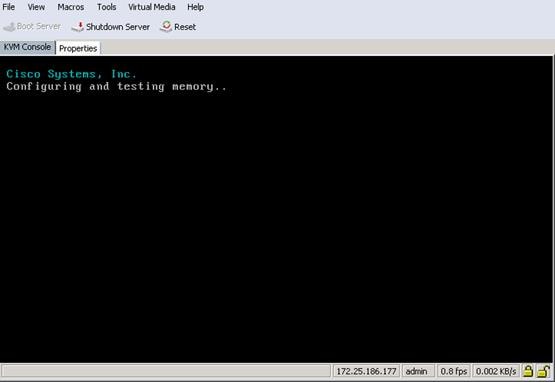

Initial Setup of Cisco UCS 6332 Fabric Interconnect

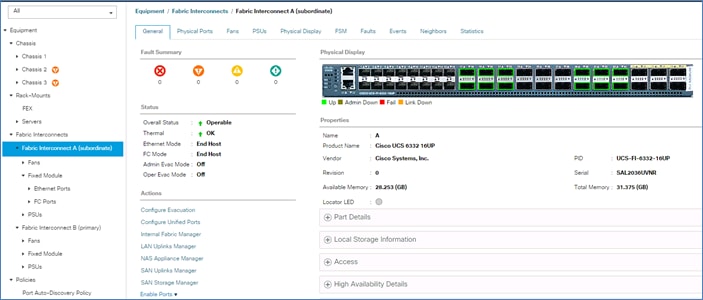

Cisco UCS 6332 Fabric Interconnect A

Cisco UCS 6332 Fabric Interconnect B

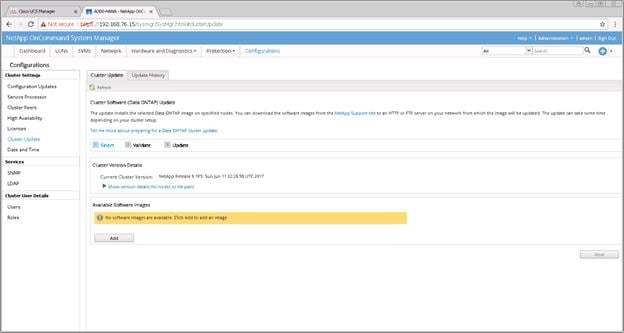

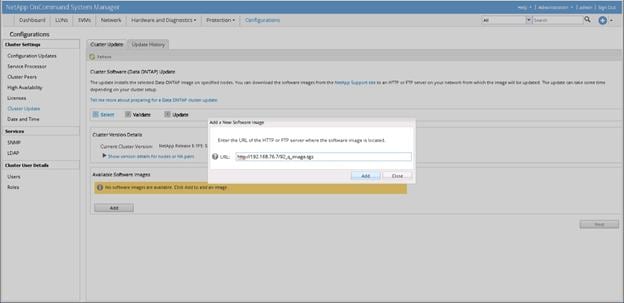

Upgrade Cisco UCS Manager Software to Version 3.2(3d)

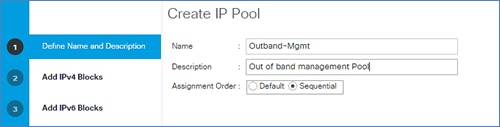

Add Block of IP Addresses for KVM Access

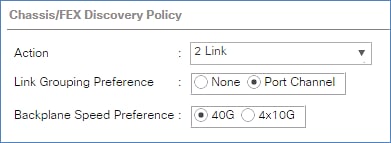

Cisco UCS Blade Chassis Connection Options

Enable Server and Uplink Ports

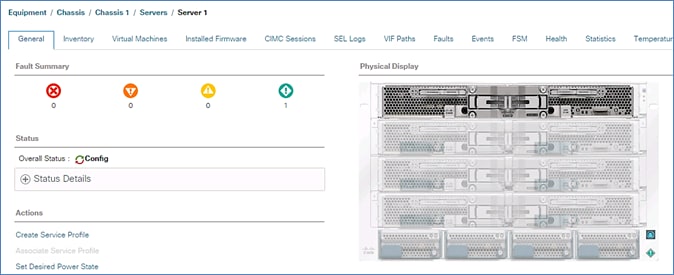

Acknowledge Cisco UCS Chassis and Rack-Mount Servers

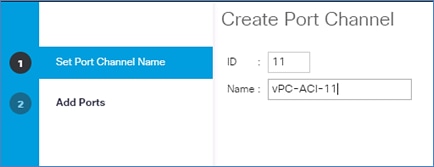

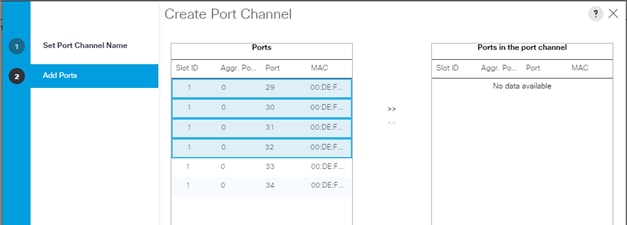

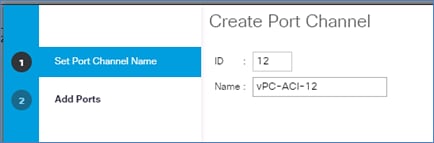

Create Uplink Port Channels to Cisco ACI Leaf Switches

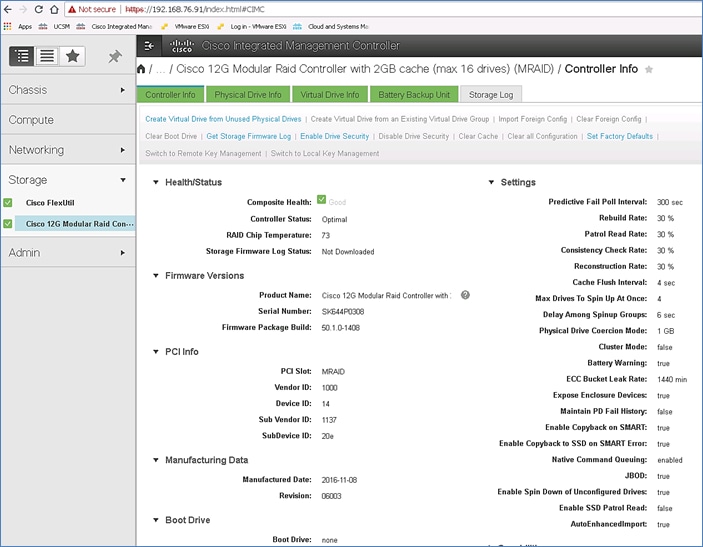

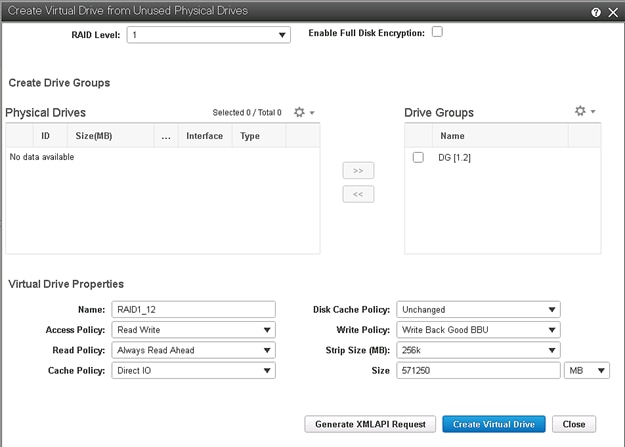

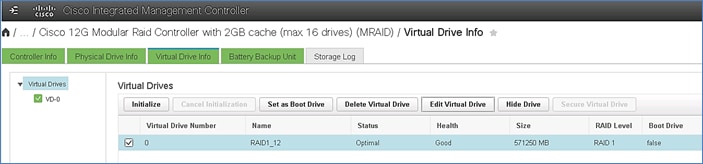

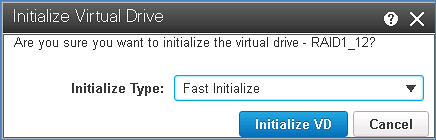

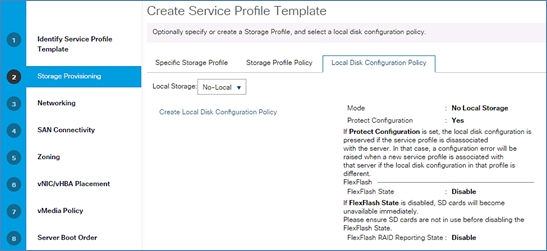

Create Local Disk Configuration Policy (Optional)

Update Default Maintenance Policy

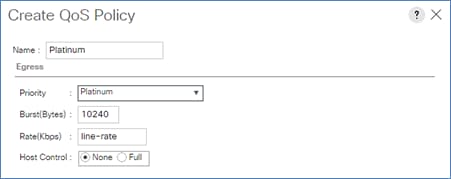

Set Jumbo Frames in Cisco UCS Fabric

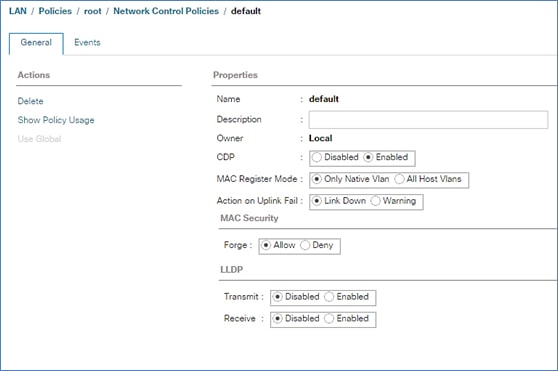

Update Default Network Control Policy to Enable CDP

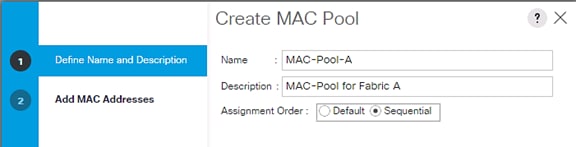

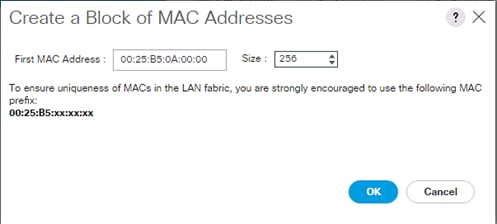

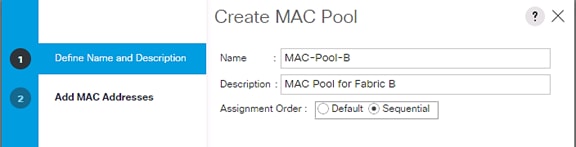

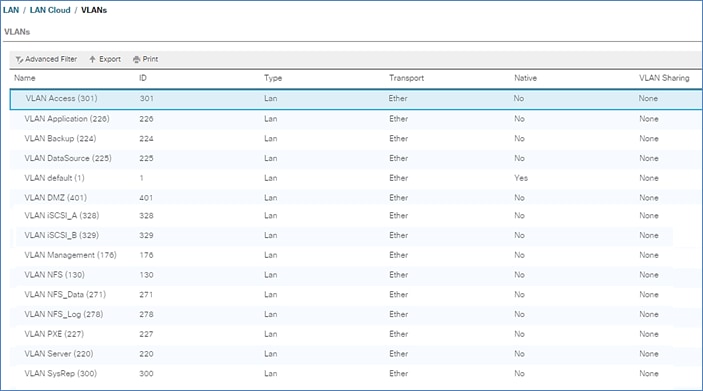

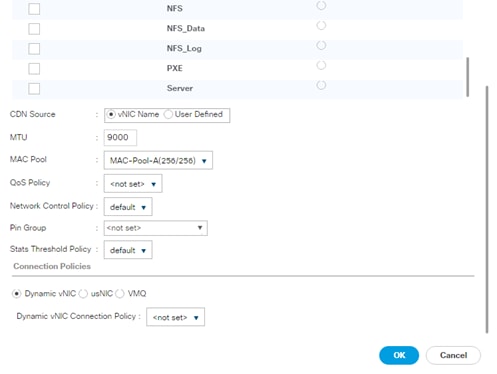

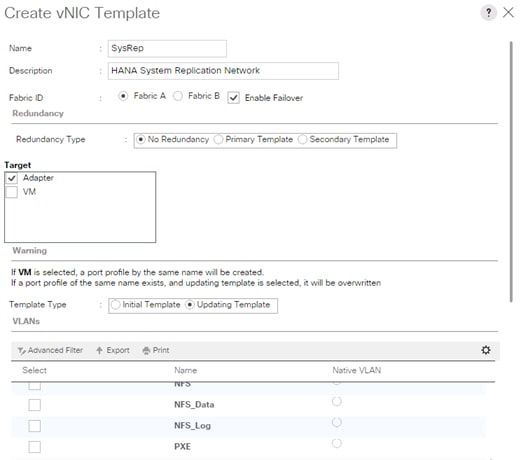

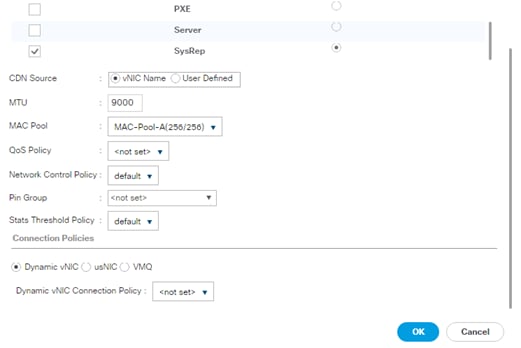

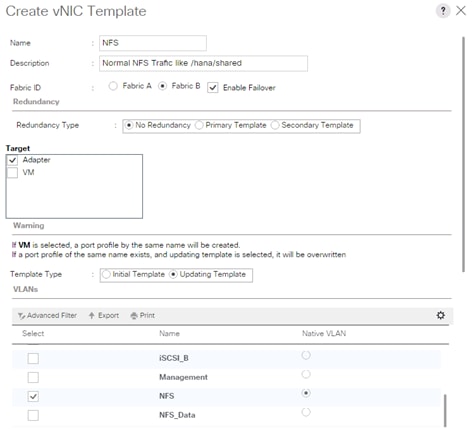

Assigning Port Channels to VLANs

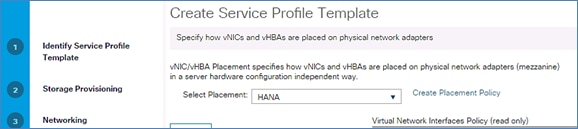

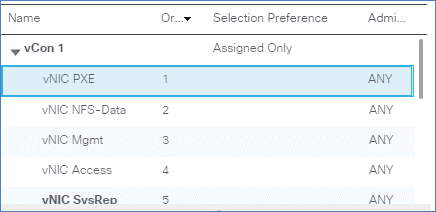

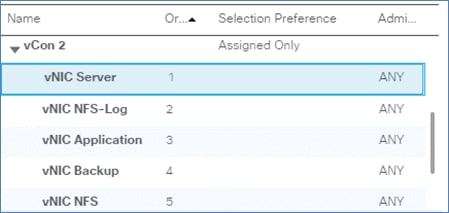

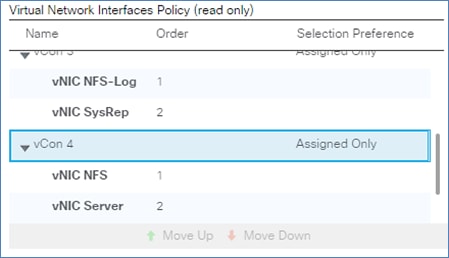

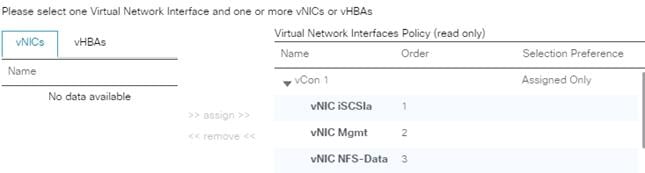

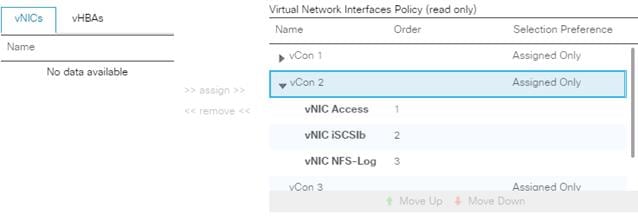

Create vNIC/vHBA Placement Policy

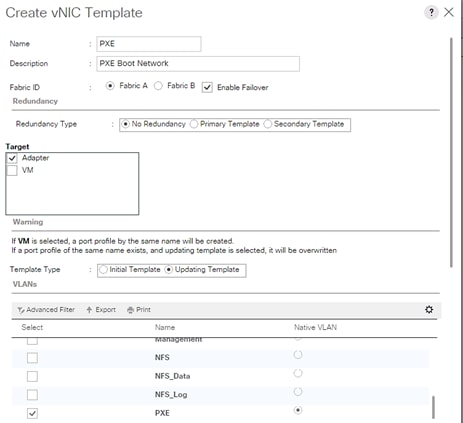

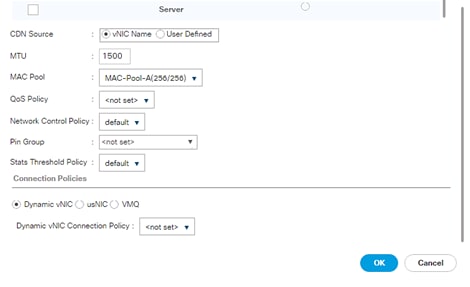

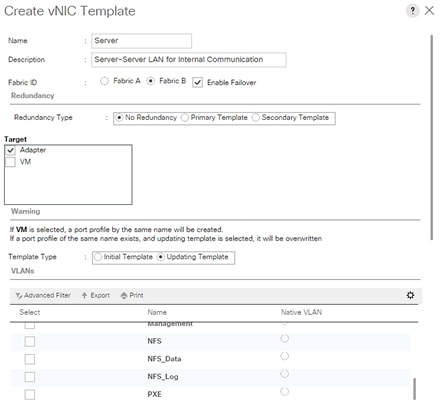

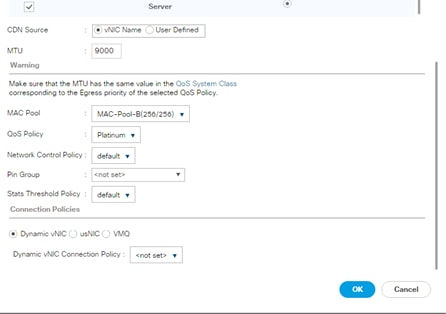

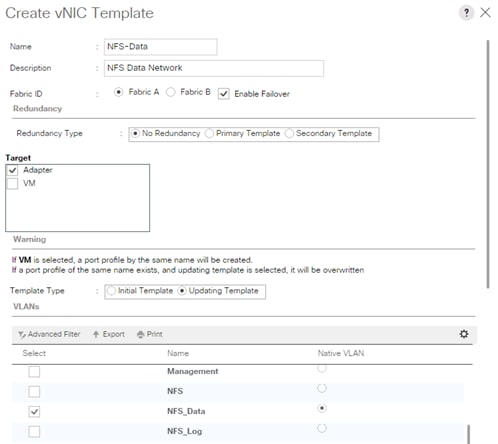

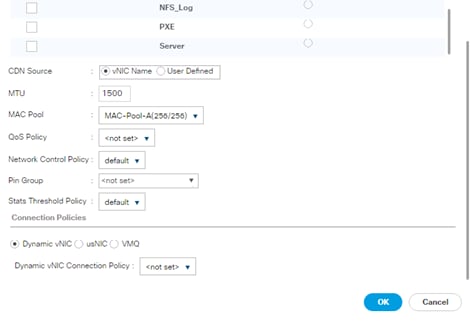

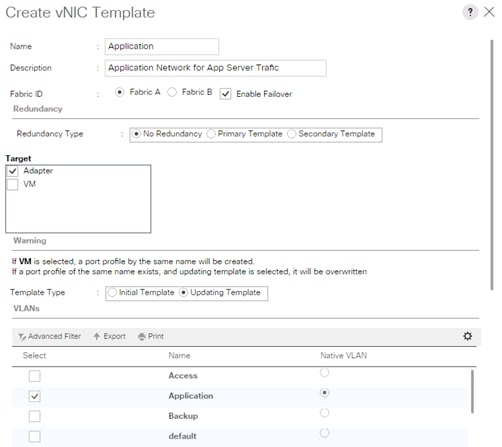

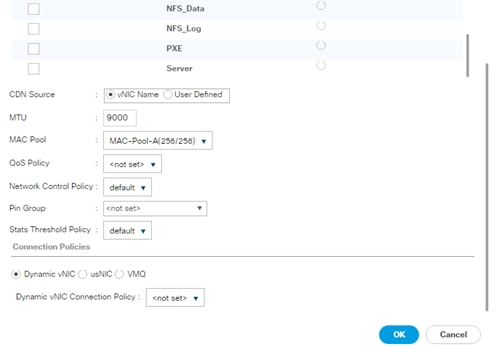

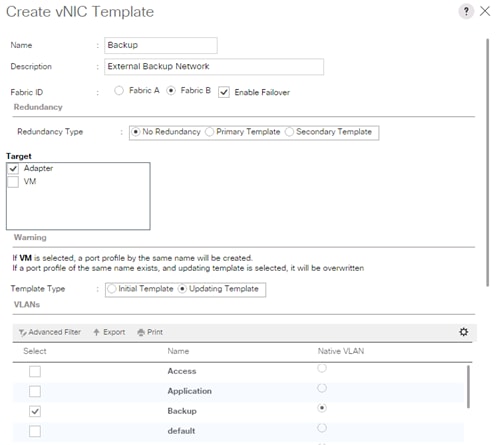

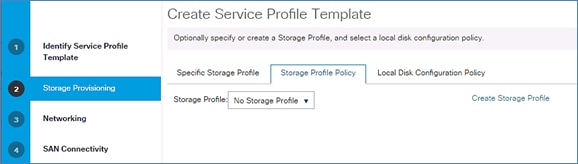

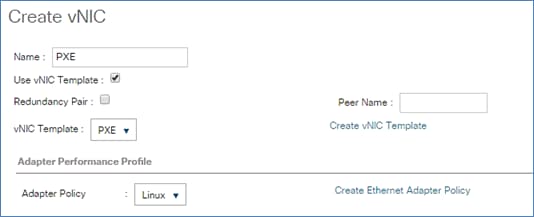

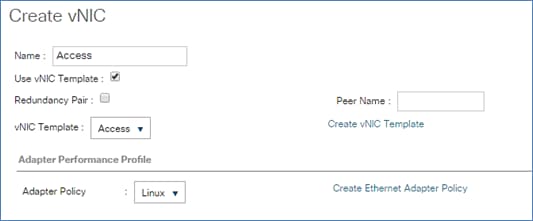

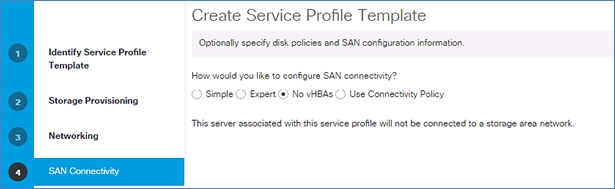

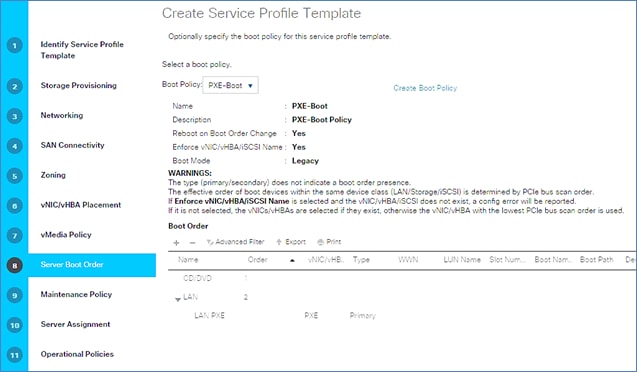

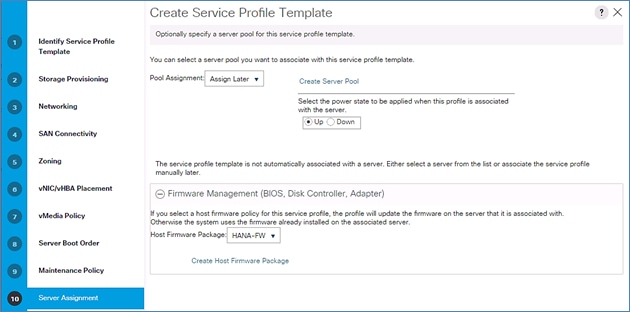

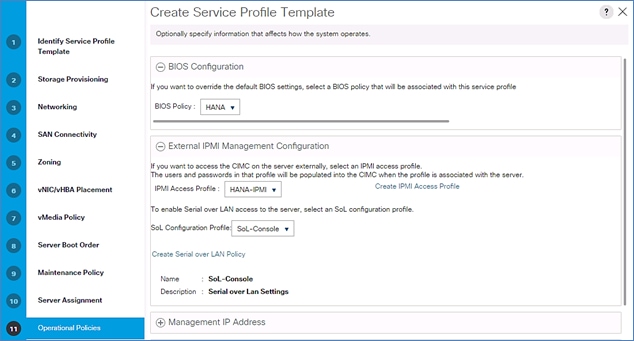

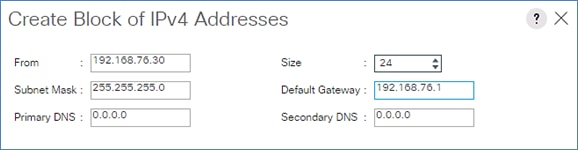

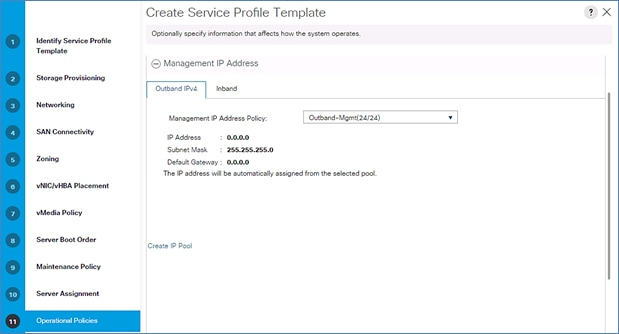

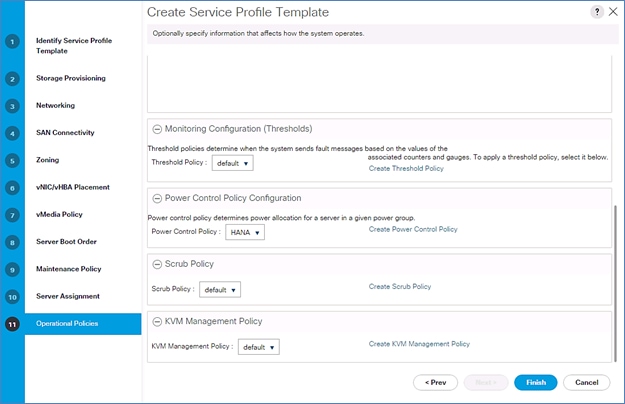

Create Service Profile Templates SAP HANA Scale-Out

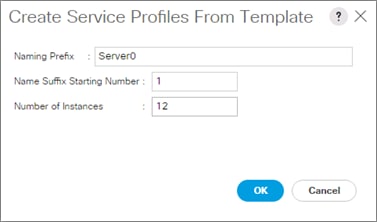

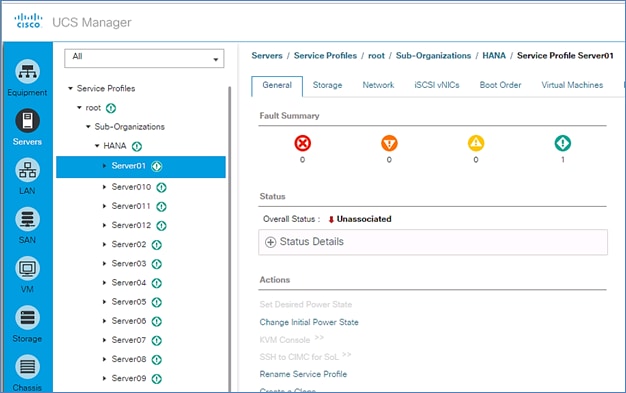

Create Service Profile from the Template

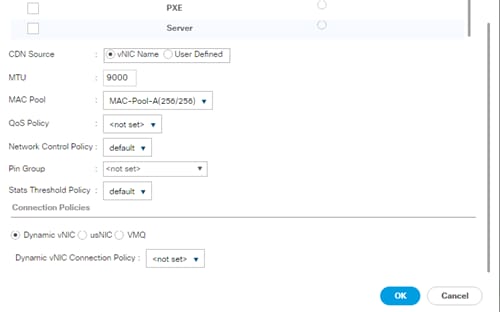

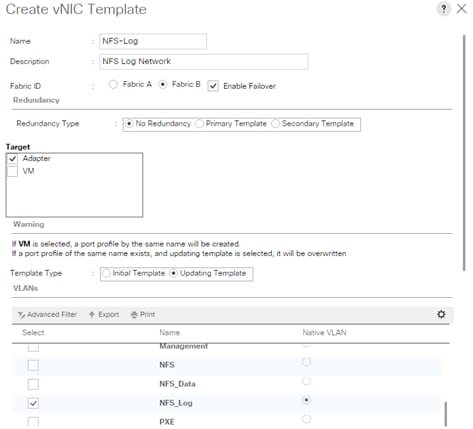

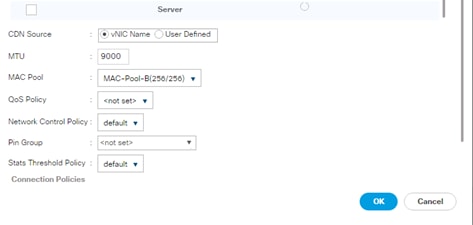

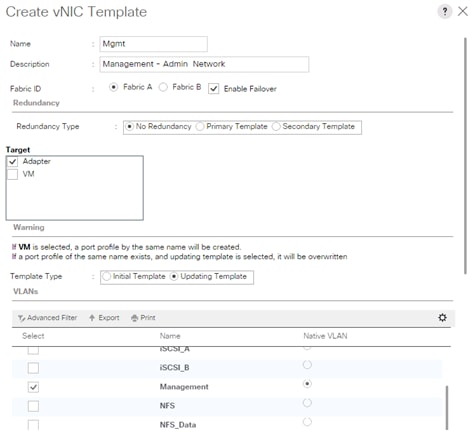

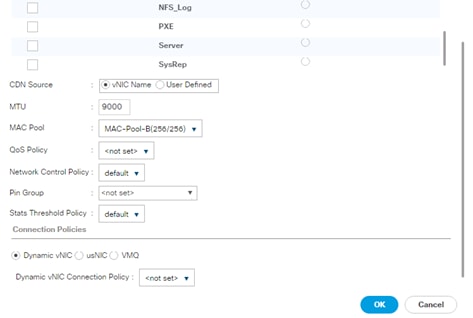

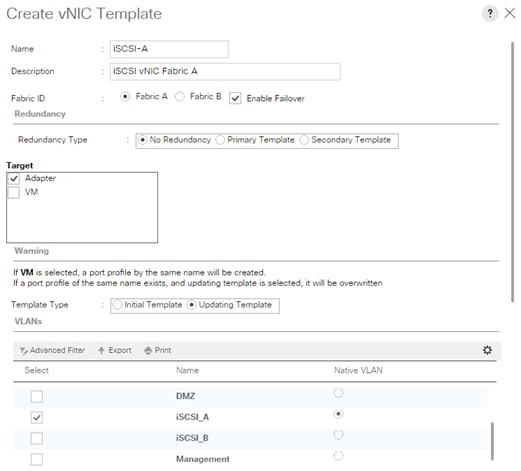

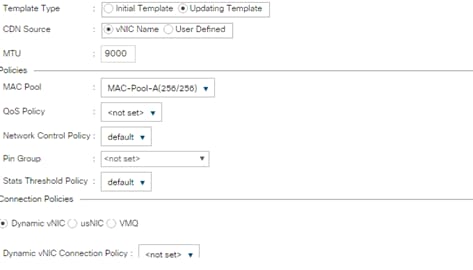

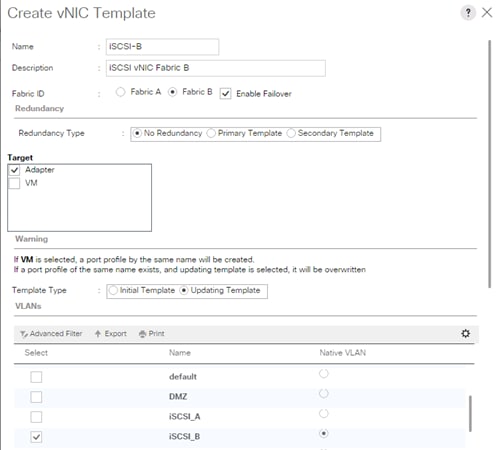

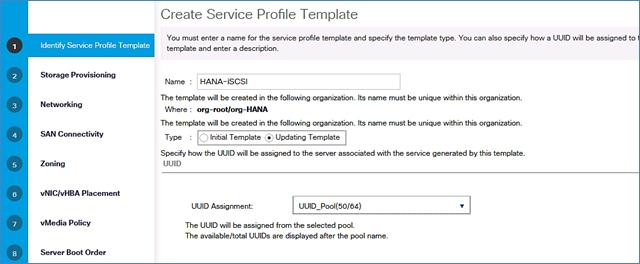

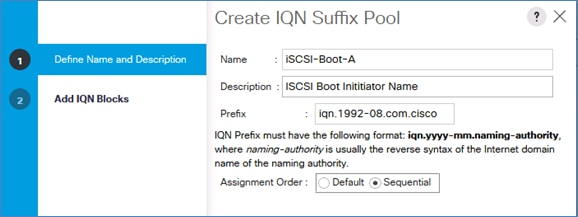

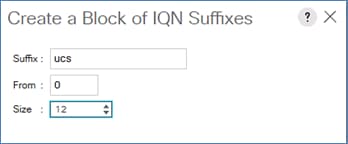

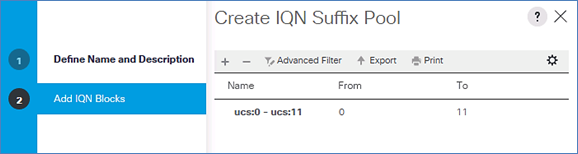

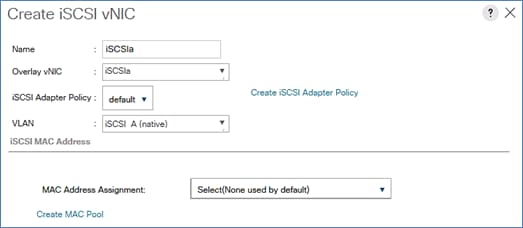

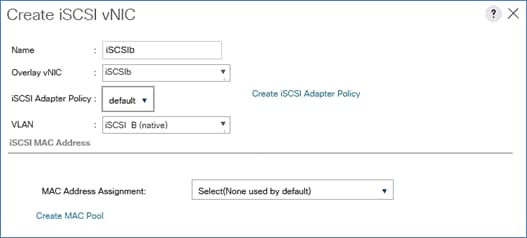

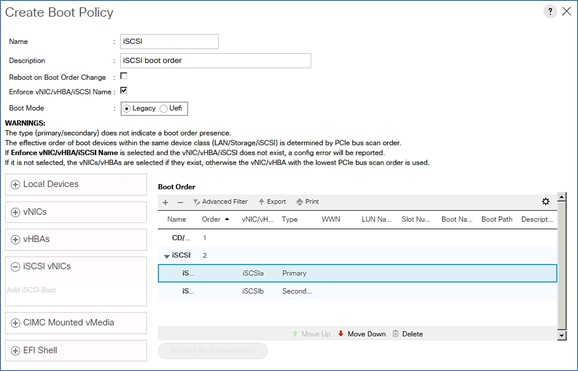

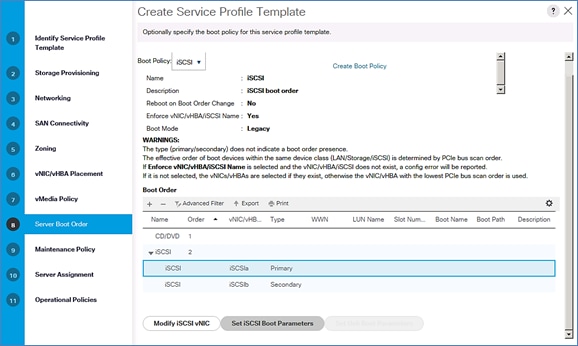

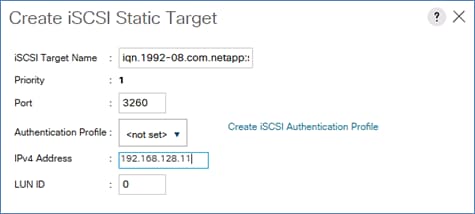

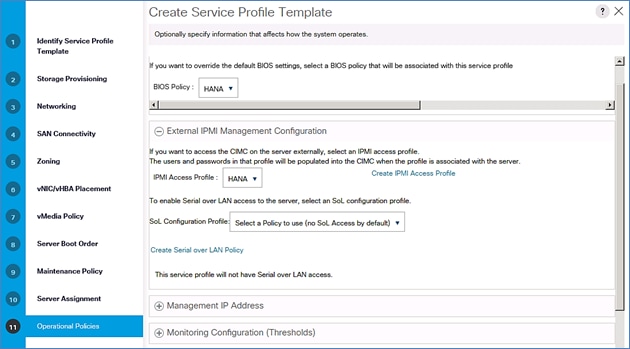

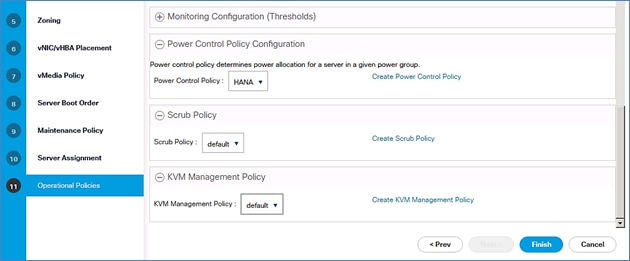

Create Service Profile Templates SAP HANA iSCSI

Create Service Profile Templates for SAP HANA Scale-Up

Complete Configuration Worksheet

Set Auto-Revert on Cluster Management

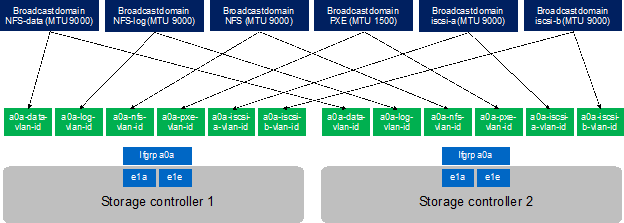

Set Up Management Broadcast Domain

Set Up Service Processor Network Interface

Disable Flow Control on 40GbE Ports

Configure Network Time Protocol

Configure Simple Network Management Protocol

Enable Cisco Discovery Protocol

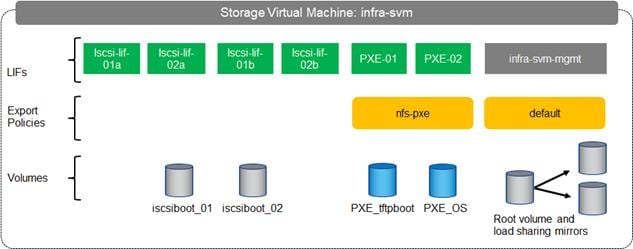

Configure SVM for the Infrastructure

Create SVM for the Infrastructure

Create Export Policies for the Root Volumes

Add Infrastructure SVM Administrator

Create Export Policies for the Infrastructure SVM

Create Block Protocol (iSCSI) Service

Create Export Policies for the Root Volumes

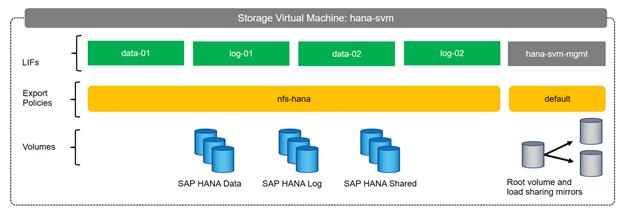

Create Export Policies for the HANA SVM

Create NFS LIF for SAP HANA Data

Create NFS LIF for SAP HANA Log

Preparation of PXE Boot Environment

Installing SLES 12 SP3 based PXE Server VM on the Management Servers

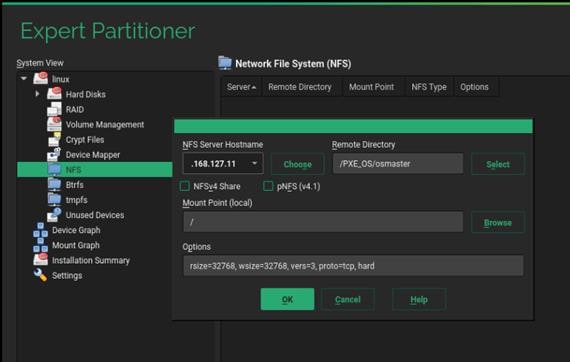

Mount Volume for PXE Boot Configuration

Update Packages for PXE Server VM

Installing RHEL 7.4 based PXE Server VM on the Management Servers

Update Packages for PXE Server VM

Mount Volume for PXE Boot Configuration

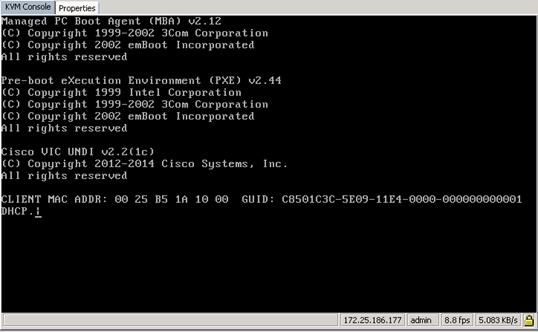

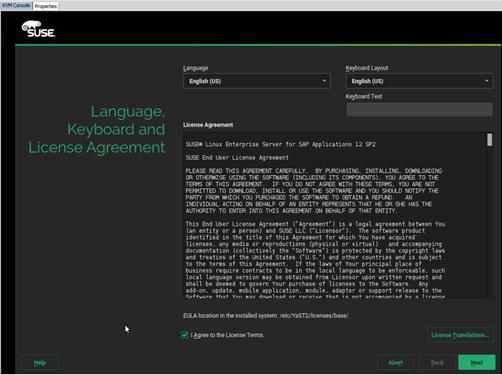

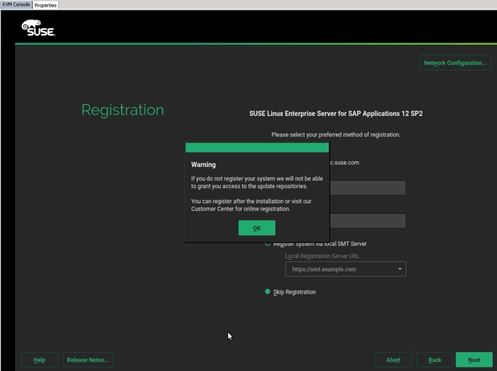

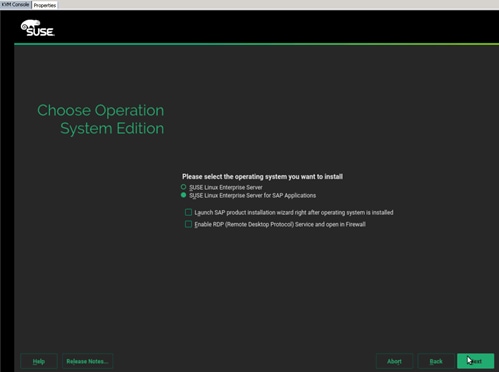

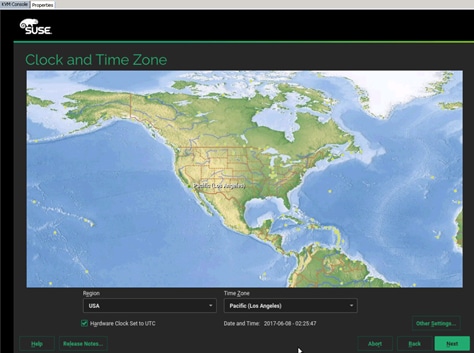

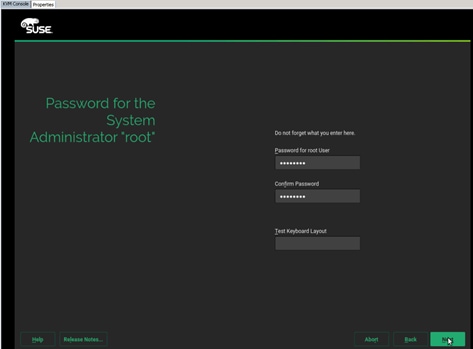

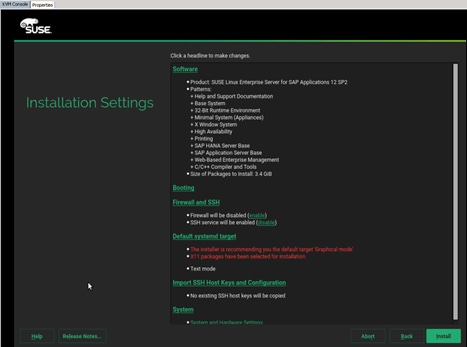

Operating System Installation SUSE SLES12SP3

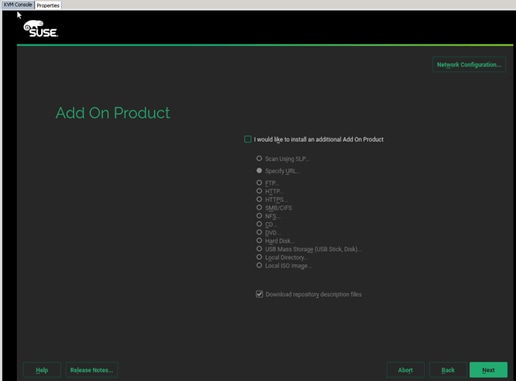

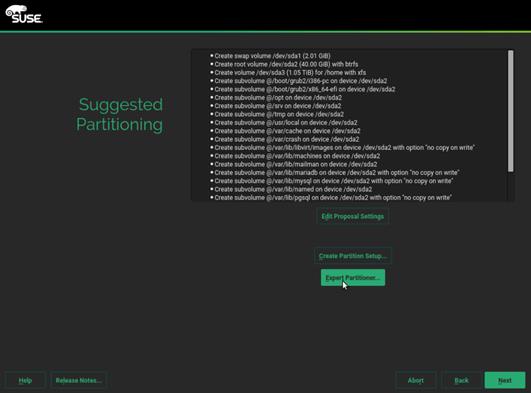

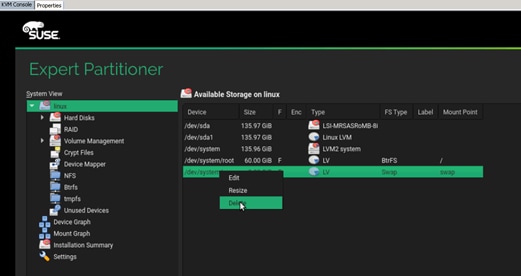

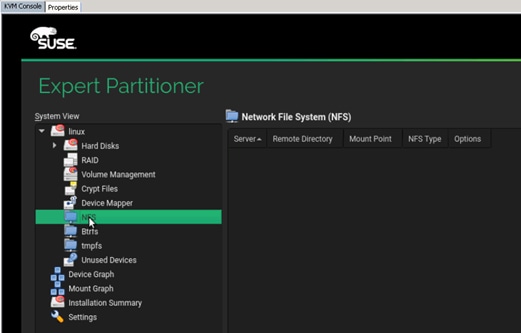

PXE Boot Preparation for SUSE OS Installation

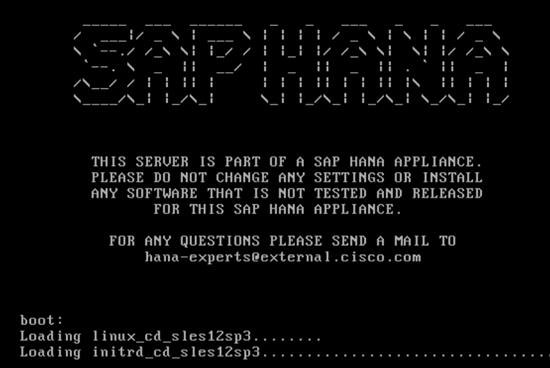

PXE booting with SUSE Linux Enterprise Server 12 SP3

Create Swap Partition in a File

Operating System Optimization for SAP HANA

Post Installation OS Customization

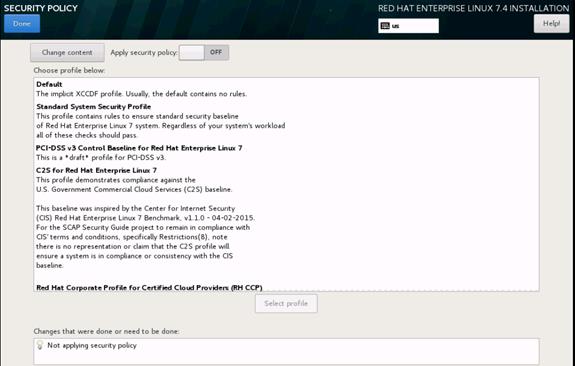

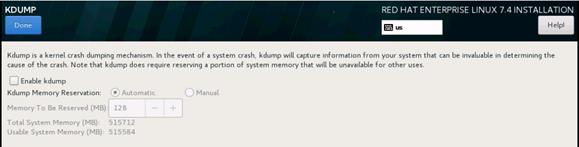

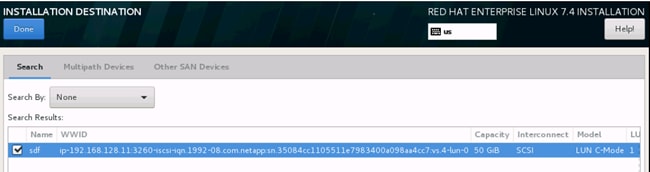

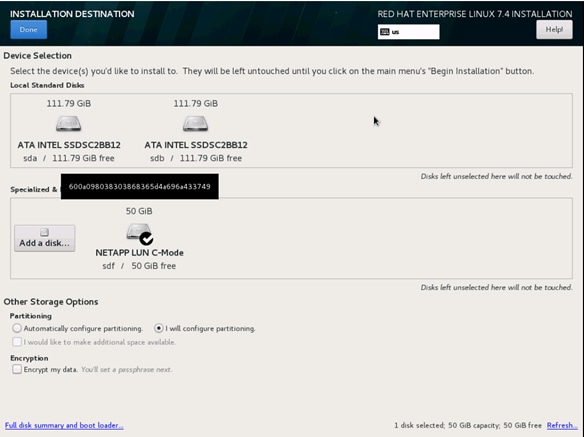

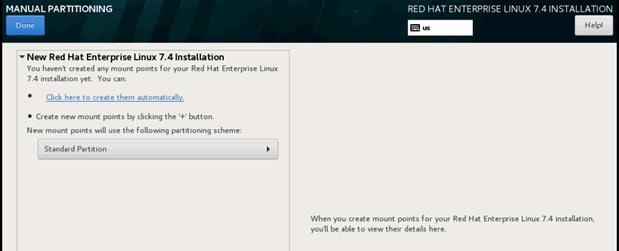

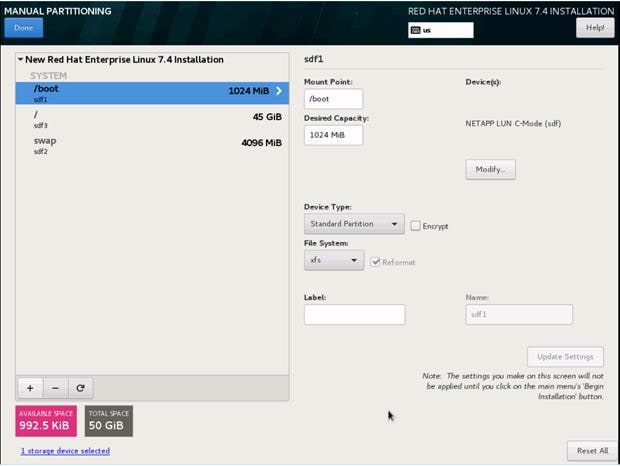

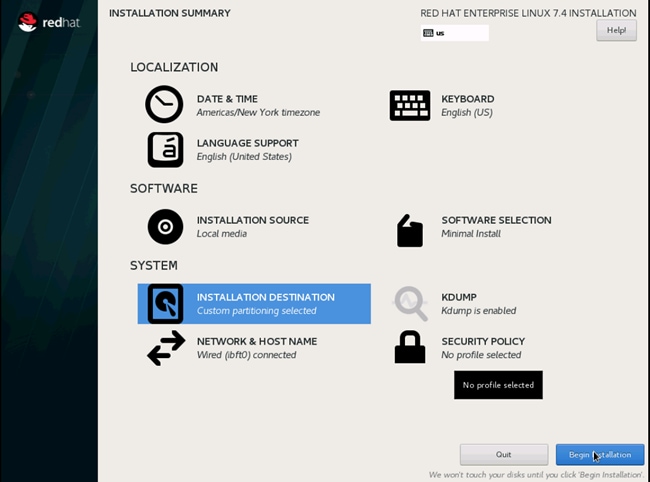

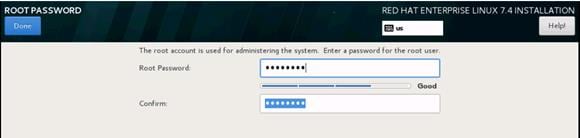

Operating System Installation Red Hat Enterprise Linux 7.4

Post Installation OS Customization

Storage Provisioning for SAP HANA

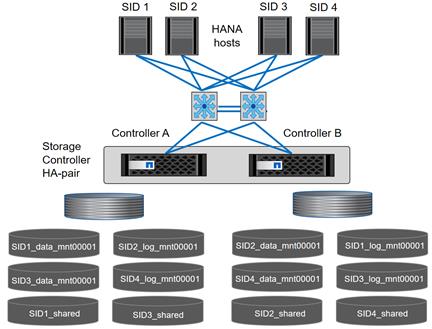

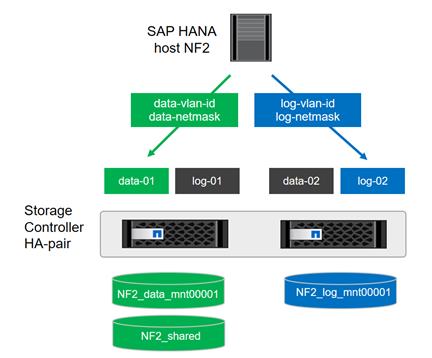

Configuring SAP HANA Single-Host Systems

Configuration Example for a SAP HANA Single-Host System

Create Data Volume and Adjust Volume Options

Create a Log Volume and Adjust the Volume Options

Create a HANA Shared Volume and Qtrees and Adjust the Volume Options

Update the Load-Sharing Mirror Relation

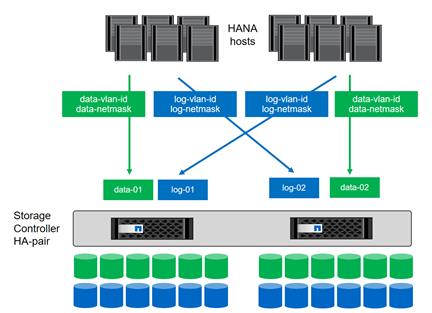

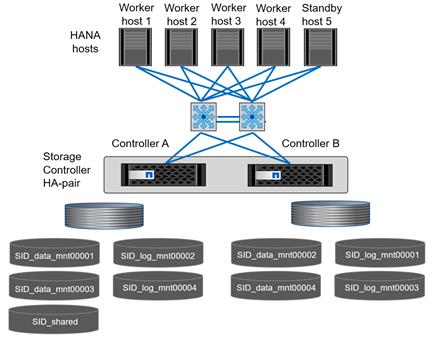

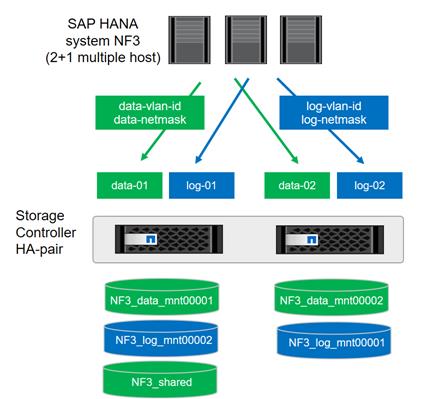

Configuration for SAP HANA Multiple-Host Systems

Configuration Example for a SAP HANA Multiple-Host Systems

Create Data Volumes and Adjust Volume Options

Create Log Volume and Adjust Volume Options

Create HANA Shared Volume and Qtrees and Adjust Volume Options

Update Load-Sharing Mirror Relation

High-Availability (HA) Configuration for Scale-Out

High-Availability Configuration

Enable the SAP HANA Storage Connector API

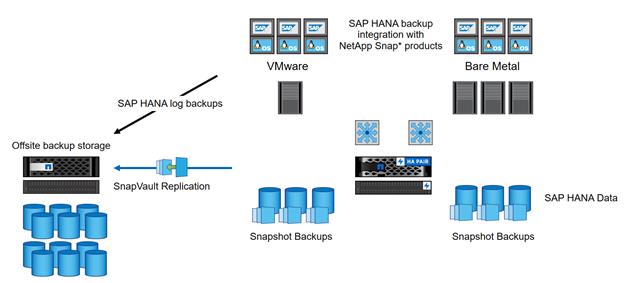

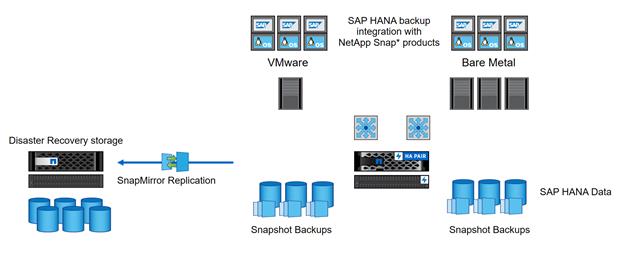

SAP HANA Disaster Recovery with Asynchronous Storage Replication

Cisco® Validated Designs include systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of customers. Cisco and NetApp have partnered to deliver FlexPod, which serves as the foundation for a variety of workloads and enables efficient architectural designs that are based on customer requirements. A FlexPod solution is a validated approach for deploying Cisco and NetApp technologies as a shared infrastructure.

This document describes the architecture and deployment procedures for the SAP HANA Tailored Datacenter Integration (TDI) option on a FlexPod infrastructure composed of Cisco® compute and switching products that leverage Cisco Application Centric Infrastructure (ACI), the industry-leading software-defined networking (SDN) solution, along with NetApp® AFF A-Series arrays. The intent of this document is to show the design principles together with the detailed configuration steps for an SAP HANA deployment.

Introduction

FlexPod is a defined set of hardware and software that serves as an integrated foundation for virtualized and non-virtualized data center solutions. It provides a pre-validated, ready-to-deploy infrastructure that reduces the time and complexity involved in configuring and validating a traditional data center deployment. The FlexPod Datacenter solution for SAP HANA includes NetApp storage, NetApp ONTAP, Cisco Nexus® networking, the Cisco Unified Computing System (Cisco UCS), and VMware vSphere software in a single package.

The design is flexible enough that the networking, computing, and storage can fit in one data center rack and can be deployed according to a customer's data center design. A key benefit of the FlexPod architecture is the ability to customize or "flex" the environment to suit a customer's requirements. A FlexPod can easily be scaled as requirements and demand change. The unit can be scaled both up (adding resources to a FlexPod unit) and out (adding more FlexPod units).

The reference architecture detailed in this document highlights the resiliency, cost benefit, and ease of deployment of an IP-based storage solution. A storage system capable of serving multiple protocols across a single interface allows for customer choice and investment protection because it is truly a wire-once architecture. The solution is designed to host scalable SAP HANA workloads.

SAP HANA is SAP SE’s implementation of in-memory database technology. An SAP HANA database takes advantage of low-cost main memory (RAM), the data-processing capabilities of multicore processors, and faster data access to provide better performance for analytical and transactional applications. SAP HANA offers a multi-engine query-processing environment that supports relational data with both row-oriented and column-oriented physical representations in a hybrid engine. It also offers graph and text processing for semi-structured and unstructured data management within the same system.

With the introduction of SAP HANA TDI for shared infrastructure, the FlexPod solution gives you the advantage of having the compute, storage, and network stack integrated with the programmability of Cisco UCS. SAP HANA TDI enables organizations to run multiple SAP HANA production systems in one FlexPod solution. It also enables customers to run the SAP application servers and the SAP HANA database on the same infrastructure.

Audience

The intended audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers deploying the FlexPod Datacenter Solution for SAP HANA with NetApp clustered Data ONTAP®. External references are provided wherever applicable, but readers are expected to be familiar with the technology, infrastructure, and database security policies of the customer installation.

Purpose of this Document

This document describes the steps required to deploy and configure a FlexPod Datacenter Solution for SAP HANA with Cisco ACI. Cisco’s validation provides further confirmation with regard to component compatibility, connectivity and correct operation of the entire integrated stack. This document showcases one of the variants of cloud architecture for SAP HANA. While readers of this document are expected to have sufficient knowledge to install and configure the products used, configuration details that are important to the deployment of this solution are provided in this CVD.

The FlexPod Datacenter Solution for SAP HANA with Cisco ACI is composed of Cisco UCS servers, Cisco Nexus switches and NetApp AFF storage. This section describes the main features of these different solution components.

Cisco Unified Computing System

Cisco Unified Computing System™ (Cisco UCS®) is an integrated computing infrastructure with embedded management to automate and accelerate deployment of all your applications, including virtualization and cloud computing, scale-out and bare-metal workloads, and in-memory analytics, as well as edge computing that supports remote and branch locations and massive amounts of data from the Internet of Things (IoT). The main components of Cisco UCS are unified fabric, unified management, and unified computing resources.

The Cisco Unified Computing System is the first integrated data center platform that combines industry-standard, x86-architecture servers with networking and storage access into a single unified system. The system is smart infrastructure that uses integrated, model-based management to simplify and accelerate deployment of enterprise-class applications and services running in bare-metal, virtualized, and cloud computing environments. Employing Cisco’s innovative SingleConnect technology, the system’s unified I/O infrastructure uses a unified fabric to support both network and storage I/O. The Cisco fabric extender architecture extends the fabric directly to servers and virtual machines for increased performance, security, and manageability. Cisco UCS helps change the way that IT organizations do business, offering the following benefits:

· Increased IT staff productivity and business agility through just-in-time provisioning and equal support for both virtualized and bare-metal environments

· Reduced TCO at the platform, site, and organization levels through infrastructure consolidation

· A unified, integrated system that is managed, serviced, and tested as a whole

· Scalability through a design for up to 160 discrete servers and thousands of virtual machines, the capability to scale I/O bandwidth to match demand, the low infrastructure cost per server, and the capability to manage up to 6000 servers with Cisco UCS Central Software

· Open industry standards supported by a partner ecosystem of industry leaders

· A system that scales to meet future data center needs for computing power, memory footprint, and I/O bandwidth; it is poised to help you move to 40 Gigabit Ethernet with the new Cisco UCS 6300 Series Fabric Interconnects

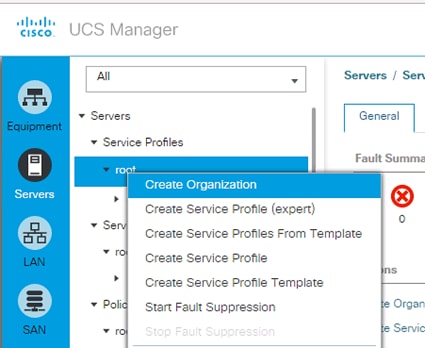

Cisco UCS Manager

Cisco UCS Manager (UCSM) provides unified, embedded management of all software and hardware components of the Cisco Unified Computing System™ (Cisco UCS) and Cisco HyperFlex™ Systems across multiple chassis and rack servers and thousands of virtual machines. It supports all Cisco UCS product models, including Cisco UCS B-Series Blade Servers and C-Series Rack Servers, Cisco UCS Mini, and Cisco HyperFlex hyperconverged infrastructure, as well as the associated storage resources and networks. Cisco UCS Manager is embedded on a pair of Cisco UCS 6300 or 6200 Series Fabric Interconnects using a clustered, active-standby configuration for high availability. The manager participates in server provisioning, device discovery, inventory, configuration, diagnostics, monitoring, fault detection, auditing, and statistics collection.

An instance of Cisco UCS Manager with all Cisco UCS components managed by it forms a Cisco UCS domain, which can include up to 160 servers. In addition to provisioning Cisco UCS resources, this infrastructure management software provides a model-based foundation for simplifying the day-to-day processes of updating, monitoring, and managing computing resources, local storage, storage connections, and network connections. By enabling better automation of processes, Cisco UCS Manager allows IT organizations to achieve greater agility and scale in their infrastructure operations while reducing complexity and risk. The manager provides flexible role- and policy-based management using service profiles and templates.

Cisco UCS Manager manages Cisco UCS systems through an intuitive HTML 5 or Java user interface and a command-line interface (CLI). It can register with Cisco UCS Central Software in a multi-domain Cisco UCS environment, enabling centralized management of distributed systems scaling to thousands of servers. The manager can be integrated with Cisco UCS Director to facilitate orchestration and to provide support for converged infrastructure and Infrastructure as a Service (IaaS).

The Cisco UCS API provides comprehensive access to all Cisco UCS Manager functions. The unified API provides Cisco UCS system visibility to higher-level systems management tools from independent software vendors (ISVs) such as VMware, Microsoft, and Splunk as well as tools from BMC, CA, HP, IBM, and others. ISVs and in-house developers can use the API to enhance the value of the Cisco UCS platform according to their unique requirements. Cisco UCS PowerTool for Cisco UCS Manager and the Python Software Development Kit (SDK) help automate and manage configurations in Cisco UCS Manager.
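As an illustration of the Python SDK mentioned above, the following minimal sketch logs in to Cisco UCS Manager and counts the discovered blade servers per model. It assumes the `ucsmsdk` package is installed; the helper names `connect` and `blade_models`, and the address and credentials in the usage comment, are placeholders introduced here for illustration and are not part of this validated design.

```python
from collections import Counter


def connect(hostname, username, password):
    """Open a session to Cisco UCS Manager (assumes `pip install ucsmsdk`)."""
    # Imported lazily so blade_models() can be exercised without the SDK installed.
    from ucsmsdk.ucshandle import UcsHandle

    handle = UcsHandle(hostname, username, password)
    handle.login()
    return handle


def blade_models(handle):
    """Count discovered blade servers per model by querying the ComputeBlade class."""
    blades = handle.query_classid(class_id="ComputeBlade")
    return Counter(blade.model for blade in blades)


# Example usage (placeholder address and credentials, not values from this guide):
#   ucs = connect("192.0.2.10", "admin", "password")
#   try:
#       for model, count in sorted(blade_models(ucs).items()):
#           print(f"{model}: {count}")
#   finally:
#       ucs.logout()
```

The query helper takes an already opened handle, so the same function can be reused for other read-only inventory checks within one session.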

Cisco UCS Fabric Interconnect

The Cisco UCS 6300 Series Fabric Interconnects are a core part of Cisco UCS, providing both network connectivity and management capabilities for the system. The Cisco UCS 6300 Series offers line-rate, low-latency, lossless 10 and 40 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE), and Fibre Channel functions. The Cisco UCS 6300 Series provides the management and communication backbone for the Cisco UCS B-Series Blade Servers, 5100 Series Blade Server Chassis, and C-Series Rack Servers managed by Cisco UCS. All servers attached to the fabric interconnects become part of a single, highly available management domain. In addition, by supporting unified fabric, the Cisco UCS 6300 Series provides both LAN and SAN connectivity for all servers within its domain.

From a networking perspective, the Cisco UCS 6300 Series uses a cut-through architecture, supporting deterministic, low-latency, line-rate 10 and 40 Gigabit Ethernet ports, switching capacity of 2.56 terabits per second (Tbps), and 320 Gbps of bandwidth per chassis, independent of packet size and enabled services. The product family supports Cisco® low-latency, lossless 10 and 40 Gigabit Ethernet unified network fabric capabilities, which increase the reliability, efficiency, and scalability of Ethernet networks. The fabric interconnect supports multiple traffic classes over a lossless Ethernet fabric from the server through the fabric interconnect. Significant TCO savings can be achieved with an FCoE optimized server design in which network interface cards (NICs), host bus adapters (HBAs), cables, and switches can be consolidated.

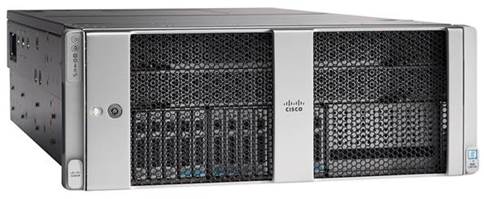

Cisco UCS 6332UP Fabric Interconnect

The Cisco UCS 6332 Fabric Interconnect is the management and communication backbone for Cisco UCS B-Series Blade Servers, C-Series Rack Servers, and 5100 Series Blade Server Chassis. All servers attached to 6332 Fabric Interconnects become part of one highly available management domain. The Cisco UCS 6332UP 32-Port Fabric Interconnect is a 1-rack-unit 40 Gigabit Ethernet, FCoE, and Fibre Channel switch offering up to 2.56 Tbps throughput and up to 32 ports. The switch has 32 fixed 40-Gbps Ethernet and FCoE ports. The Cisco UCS 6332UP 32-Port Fabric Interconnect has ports that can be configured for the breakout feature, which supports connectivity between 40 Gigabit Ethernet ports and 10 Gigabit Ethernet ports. This feature provides backward compatibility with existing hardware that supports 10 Gigabit Ethernet. A 40 Gigabit Ethernet port can be used as four 10 Gigabit Ethernet ports. Using a 40 Gigabit Ethernet SFP, these ports on a Cisco UCS 6300 Series Fabric Interconnect can connect to another fabric interconnect that has four 10 Gigabit Ethernet SFPs.
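The throughput and breakout figures quoted above can be checked with a short calculation. The sketch below assumes that the 2.56 Tbps figure counts each of the 32 ports in both directions (full duplex); that accounting is an assumption made here, and the function names are illustrative only.

```python
PORT_COUNT = 32          # fixed 40-Gbps Ethernet and FCoE ports, from the text
PORT_SPEED_GBPS = 40
BREAKOUT_LANES = 4       # one 40-Gbps port can be used as four 10-Gbps ports


def aggregate_throughput_tbps(ports=PORT_COUNT, speed_gbps=PORT_SPEED_GBPS):
    """Aggregate throughput in Tbps, assuming every port sends and receives at line rate."""
    return ports * speed_gbps * 2 / 1000


def breakout_interfaces(ports_in_breakout):
    """Number of 10-Gbps interfaces obtained by breaking out the given 40-Gbps ports."""
    if not 0 <= ports_in_breakout <= PORT_COUNT:
        raise ValueError("ports_in_breakout must be between 0 and PORT_COUNT")
    return ports_in_breakout * BREAKOUT_LANES


print(aggregate_throughput_tbps())   # 2.56
print(breakout_interfaces(2))        # 8
```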

Figure 1 Cisco UCS 6332 UP Fabric Interconnect

Cisco UCS 2304XP Fabric Extender

The Cisco UCS 2304 Fabric Extender has four 40 Gigabit Ethernet, FCoE-capable, Quad Small Form-Factor Pluggable (QSFP+) ports that connect the blade chassis to the fabric interconnect. Each Cisco UCS 2304 has four 40 Gigabit Ethernet ports connected through the midplane to each half-width slot in the chassis. Typically configured in pairs for redundancy, two fabric extenders provide up to 320 Gbps of I/O to the chassis.
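The 320 Gbps figure follows from the port counts given above: two fabric extenders, each with four 40 Gigabit Ethernet uplinks. A minimal sketch of that arithmetic, with a hypothetical `uplinks_in_use` parameter to show how chassis bandwidth changes when fewer uplinks are cabled:

```python
UPLINKS_PER_FEX = 4      # 40 Gigabit Ethernet QSFP+ ports per Cisco UCS 2304
UPLINK_SPEED_GBPS = 40


def chassis_io_gbps(fex_count=2, uplinks_in_use=UPLINKS_PER_FEX):
    """Total uplink bandwidth from one chassis to the fabric interconnects, in Gbps."""
    if not 1 <= uplinks_in_use <= UPLINKS_PER_FEX:
        raise ValueError("uplinks_in_use must be between 1 and UPLINKS_PER_FEX")
    return fex_count * uplinks_in_use * UPLINK_SPEED_GBPS


print(chassis_io_gbps())                   # 320 (two FEXs, all four uplinks each)
print(chassis_io_gbps(uplinks_in_use=2))   # 160 (two FEXs, two uplinks each)
```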

Figure 2 Cisco UCS 2304 XP

Cisco UCS Blade Chassis

The Cisco UCS 5100 Series Blade Server Chassis is a crucial building block of the Cisco Unified Computing System, delivering a scalable and flexible blade server.

The Cisco UCS 5108 Blade Server Chassis is six rack units (6RU) high and can mount in an industry standard 19-inch rack. A single chassis can house up to eight half-width Cisco UCS B-Series Blade Servers and can accommodate both half-width and full-width blade form factors. Four hot-swappable power supplies are accessible from the front of the chassis, and single-phase AC, –48V DC, and 200 to 380V DC power supplies and chassis are available. These power supplies are up to 94 percent efficient and meet the requirements for the 80 Plus Platinum rating. The power subsystem can be configured to support nonredundant, N+1 redundant, and grid-redundant configurations. The rear of the chassis contains eight hot-swappable fans, four power connectors (one per power supply), and two I/O bays that can support either Cisco UCS 2000 Series Fabric Extenders or the Cisco UCS 6324 Fabric Interconnect. A passive midplane provides up to 80 Gbps of I/O bandwidth per server slot and up to 160 Gbps of I/O bandwidth for two slots.

The Cisco UCS Blade Server Chassis is shown in Figure 3.

Figure 3 Cisco Blade Server Chassis (front and back view)

Cisco UCS B480 M5 Blade Server

The enterprise-class Cisco UCS B480 M5 Blade Server delivers market-leading performance, versatility, and density without compromise for memory-intensive mission-critical enterprise applications and virtualized workloads, among others. With the Cisco UCS B480 M5, you can quickly deploy stateless physical and virtual workloads with the programmability that Cisco UCS Manager and Cisco® SingleConnect technology enable.

The Cisco UCS B480 M5 is a full-width blade server supported by the Cisco UCS 5108 Blade Server Chassis. The Cisco UCS 5108 chassis and the Cisco UCS B-Series Blade Servers provide inherent architectural advantages:

· Eliminates the need to power, cool, manage, and purchase excess switches (management, storage, and networking), Host Bus Adapters (HBAs), and Network Interface Cards (NICs) in each blade chassis

· Reduces the Total Cost of Ownership (TCO) by removing management modules from the chassis, making the chassis stateless

· Provides a single, highly available Cisco Unified Computing System™ (Cisco UCS) management domain for all system chassis and rack servers, reducing administrative tasks

The Cisco UCS B480 M5 Blade Server offers:

· Four Intel® Xeon® Scalable CPUs (up to 28 cores per socket)

· 2666-MHz DDR4 memory and 48 DIMM slots with up to 6 TB using 128-GB DIMMs

· Cisco FlexStorage® storage subsystem

· Five mezzanine adapters and support for up to four GPUs

· Cisco UCS Virtual Interface Card (VIC) 1340 modular LAN on Motherboard (mLOM) and upcoming fourth-generation VIC mLOM

· Internal Secure Digital (SD) and M.2 boot options
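The memory figure in the list above is the product of the DIMM slot count and the DIMM size. The following sketch reproduces it and shows how the total changes with smaller DIMMs; the 64-GB case and the per-socket slot count are derived here for illustration and are not taken from this guide.

```python
def max_memory_tb(dimm_slots=48, dimm_size_gb=128):
    """Total memory in TB when every DIMM slot holds a DIMM of the given size."""
    return dimm_slots * dimm_size_gb / 1024


def slots_per_socket(dimm_slots=48, sockets=4):
    """DIMM slots available to each CPU socket, assuming an even split across sockets."""
    return dimm_slots // sockets


print(max_memory_tb())                  # 6.0 (48 x 128 GB)
print(max_memory_tb(dimm_size_gb=64))   # 3.0 (48 x 64 GB, illustrative)
print(slots_per_socket())               # 12
```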

Figure 4 Cisco UCS B480 M5 Blade Server

Cisco UCS C480 M5 Rack Servers

The Cisco UCS C480 M5 Rack Server is a storage and I/O optimized enterprise-class rack server that delivers industry-leading performance for in-memory databases, big data analytics, virtualization, Virtual Desktop Infrastructure (VDI), and bare-metal applications. The Cisco UCS C480 M5 delivers outstanding levels of expandability and performance for standalone or Cisco Unified Computing System™ (Cisco UCS) managed environments in a 4RU form-factor, and because of its modular design, you pay for only what you need. It offers these capabilities:

· Latest Intel® Xeon® Scalable processors with up to 28 cores per socket and support for two or four processor configurations

· 2666-MHz DDR4 memory and 48 DIMM slots for up to 6 terabytes (TB) of total memory

· 12 PCI Express (PCIe) 3.0 slots

- Six x8 full-height, full-length slots

- Six x16 full-height, full-length slots

· Flexible storage options with support for up to 24 Small-Form-Factor (SFF) 2.5-inch SAS, SATA, and PCIe NVMe disk drives

· Cisco® 12-Gbps SAS Modular RAID Controller in a dedicated slot

· Internal Secure Digital (SD) and M.2 boot options

· Dual embedded 10 Gigabit Ethernet LAN-On-Motherboard (LOM) ports

Cisco UCS C480 M5 servers can be deployed as standalone servers or in a Cisco UCS managed environment. When used in combination with Cisco UCS Manager, the Cisco UCS C480 M5 brings the power and automation of unified computing to enterprise applications, including Cisco® SingleConnect technology, drastically reducing switching and cabling requirements. Cisco UCS Manager uses service profiles, templates, and policy-based management to enable rapid deployment and help ensure deployment consistency. It also enables end-to-end server visibility, management, and control in both virtualized and bare-metal environments.

Figure 5 Cisco UCS C480 M5 Rack Server

Cisco UCS C240 M5 Rack Servers

The Cisco UCS C240 M5 Rack Server is a 2-socket, 2-Rack-Unit (2RU) rack server offering industry-leading performance and expandability. It supports a wide range of storage and I/O-intensive infrastructure workloads, from big data and analytics to collaboration. Cisco UCS C-Series Rack Servers can be deployed as standalone servers or as part of a Cisco Unified Computing System™ (Cisco UCS) managed environment to take advantage of Cisco’s standards-based unified computing innovations that help reduce customers’ Total Cost of Ownership (TCO) and increase their business agility.

In response to ever-increasing computing and data-intensive real-time workloads, the enterprise-class Cisco UCS C240 M5 server extends the capabilities of the Cisco UCS portfolio in a 2RU form factor. It incorporates the Intel® Xeon® Scalable processors, supporting up to 20 percent more cores per socket, twice the memory capacity, and five times more Non-Volatile Memory Express (NVMe) PCI Express (PCIe) Solid-State Disks (SSDs) compared to the previous generation of servers. These improvements deliver significant performance and efficiency gains that will improve your application performance. The Cisco UCS C240 M5 delivers outstanding levels of storage expandability with exceptional performance, along with the following:

· Latest Intel Xeon Scalable CPUs with up to 28 cores per socket

· Up to 24 DDR4 DIMMs for improved performance

· Up to 26 hot-swappable Small-Form-Factor (SFF) 2.5-inch drives, including 2 rear hot-swappable SFF drives (up to 10 support NVMe PCIe SSDs on the NVMe-optimized chassis version), or 12 Large-Form-Factor (LFF) 3.5-inch drives plus 2 rear hot-swappable SFF drives

· Support for 12-Gbps SAS modular RAID controller in a dedicated slot, leaving the remaining PCIe Generation 3.0 slots available for other expansion cards

· Modular LAN-On-Motherboard (mLOM) slot that can be used to install a Cisco UCS Virtual Interface Card (VIC) without consuming a PCIe slot, supporting dual 10- or 40-Gbps network connectivity

· Dual embedded Intel x550 10GBASE-T LAN-On-Motherboard (LOM) ports

· Modular M.2 or Secure Digital (SD) cards that can be used for boot

Cisco UCS C240 M5 servers can be deployed as standalone servers or in a Cisco UCS managed environment. When used in combination with Cisco UCS Manager, the Cisco UCS C240 M5 brings the power and automation of unified computing to enterprise applications, including Cisco® SingleConnect technology, drastically reducing switching and cabling requirements. Cisco UCS Manager uses service profiles, templates, and policy-based management to enable rapid deployment and help ensure deployment consistency. It also enables end-to-end server visibility, management, and control in both virtualized and bare-metal environments.

Figure 6 Cisco UCS C240 M5 Rack Server

Cisco I/O Adapters for Blade and Rack-Mount Servers

This section discusses the Cisco I/O Adapters used in this solution.

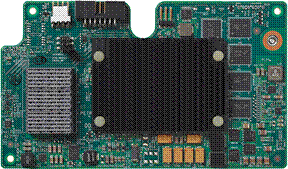

Cisco VIC 1340 Virtual Interface Card

The Cisco UCS blade server has various Converged Network Adapters (CNA) options.

The Cisco UCS Virtual Interface Card (VIC) 1340 is a 2-port 40-Gbps Ethernet or dual 4 x 10-Gbps Ethernet, Fibre Channel over Ethernet (FCoE)-capable modular LAN on motherboard (mLOM) designed exclusively for the Cisco UCS B-Series Blade Servers. When used in combination with an optional port expander, the Cisco UCS VIC 1340 is enabled for two ports of 40-Gbps Ethernet.

Figure 7 Cisco UCS 1340 VIC Card

The Cisco UCS VIC 1340 enables a policy-based, stateless, agile server infrastructure that can present over 256 PCIe standards-compliant interfaces to the host that can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs). In addition, the Cisco UCS VIC 1340 supports Cisco® Data Center Virtual Machine Fabric Extender (VM-FEX) technology, which extends the Cisco UCS fabric interconnect ports to virtual machines, simplifying server virtualization deployment and management.

Cisco VIC 1380 Virtual Interface Card

The Cisco UCS Virtual Interface Card (VIC) 1380 is a dual-port 40-Gbps Ethernet or dual 4 x 10-Gbps Ethernet, Fibre Channel over Ethernet (FCoE)-capable mezzanine card designed exclusively for the M5 generation of Cisco UCS B-Series Blade Servers. The card enables a policy-based, stateless, agile server infrastructure that can present over 256 PCIe standards-compliant interfaces to the host that can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs). In addition, the Cisco UCS VIC 1380 supports Cisco® Data Center Virtual Machine Fabric Extender (VM-FEX) technology, which extends the Cisco UCS fabric interconnect ports to virtual machines, simplifying server virtualization deployment and management.

Figure 8 Cisco UCS 1380 VIC Card

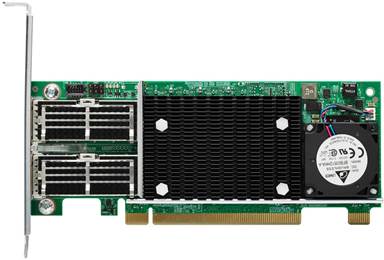

Cisco VIC 1385 Virtual Interface Card

The Cisco UCS Virtual Interface Card (VIC) 1385 is a Cisco® innovation. It provides a policy-based, stateless, agile server infrastructure for your data center. This dual-port Enhanced Quad Small Form-Factor Pluggable (QSFP) half-height PCI Express (PCIe) card is designed exclusively for Cisco UCS C-Series Rack Servers. The card supports 40 Gigabit Ethernet and Fibre Channel over Ethernet (FCoE). It incorporates Cisco’s next-generation converged network adapter (CNA) technology and offers a comprehensive feature set, providing investment protection for future feature software releases. The card can present more than 256 PCIe standards-compliant interfaces to the host, and these can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs). In addition, the VIC supports Cisco Data Center Virtual Machine Fabric Extender (VM-FEX) technology. This technology extends the Cisco UCS Fabric Interconnect ports to virtual machines, simplifying server virtualization deployment.

Figure 9 Cisco UCS 1385 VIC Card

Cisco UCS Differentiators

Cisco Unified Computing System is revolutionizing the way servers are managed in the data center. The following are the unique differentiators of Cisco Unified Computing System and Cisco UCS Manager:

· Embedded management: In Cisco Unified Computing System, the servers are managed by the embedded firmware in the Fabric Interconnects, eliminating the need for any external physical or virtual devices to manage the servers. Also, a pair of FIs can manage up to 40 chassis, each containing 8 blade servers. This gives enormous scaling on the management plane.

· Unified fabric: In Cisco Unified Computing System, from blade server chassis or rack server fabric extender to FI, there is a single Ethernet cable used for LAN, SAN and management traffic. This converged I/O results in fewer cables, SFPs and adapters, reducing the capital and operational expenses of the overall solution.

· Auto discovery: By simply inserting the blade server in the chassis or connecting the rack server to the fabric extender, discovery and inventory of the compute resource occurs automatically without any management intervention. The combination of unified fabric and auto-discovery enables the wire-once architecture of Cisco Unified Computing System, where the compute capability of Cisco Unified Computing System can be extended easily while keeping the existing external connectivity to LAN, SAN and management networks.

· Policy based resource classification: When a compute resource is discovered by Cisco UCS Manager, it can be automatically classified to a given resource pool based on policies defined. This capability is useful in multi-tenant cloud computing. This CVD focuses on the policy-based resource classification of Cisco UCS Manager.

· Combined Rack and Blade server management: Cisco UCS Manager can manage Cisco UCS B-Series Blade Servers and Cisco UCS C-Series Rack Servers under the same Cisco UCS domain. This feature, along with stateless computing, makes compute resources truly hardware form factor agnostic. This CVD focuses on the combination of B-Series and C-Series Servers to demonstrate stateless and form factor independent computing workloads.

· Model-based management architecture: The Cisco UCS Manager architecture and management database is model based and data driven. An open, standards-based XML API is provided to operate on the management model. This enables easy and scalable integration of Cisco UCS Manager with other management systems, such as VMware vCloud Director, Microsoft System Center, and Citrix CloudPlatform.

· Policies, Pools, Templates: The management approach in Cisco UCS Manager is based on defining policies, pools and templates instead of cluttered configuration, which enables a simple, loosely coupled, data-driven approach to managing compute, network and storage resources.

· Loose referential integrity: In Cisco UCS Manager, a service profile, port profile or policies can refer to other policies or logical resources with loose referential integrity. A referred policy need not exist at the time of authoring the referring policy, and a referred policy can be deleted even though other policies are referring to it. This allows different subject matter experts to work independently of each other, and provides great flexibility where experts from different domains, such as network, storage, security, server and virtualization, work together to accomplish a complex task.

· Policy resolution: In Cisco UCS Manager, a tree structure of organizational unit hierarchy can be created that mimics the real-life tenants and/or organization relationships. Various policies, pools and templates can be defined at different levels of the organization hierarchy. A policy referring to another policy by name is resolved in the org hierarchy with the closest policy match. If no policy with the specific name is found in the hierarchy up to the root org, then the special policy named “default” is searched. This policy resolution practice enables automation-friendly management APIs and provides great flexibility to owners of different orgs.

· Service profiles and stateless computing: A service profile is a logical representation of a server, carrying its various identities and policies. This logical server can be assigned to any physical compute resource as long as it meets the resource requirements. Stateless computing enables procurement of a server within minutes, which used to take days in legacy server management systems.

· Built-in multi-tenancy support: The combination of policies, pools and templates, loose referential integrity, policy resolution in the org hierarchy and a service profile based approach to compute resources makes Cisco UCS Manager inherently friendly to the multi-tenant environments typically observed in private and public clouds.

· Virtualization aware network: VM-FEX technology makes the access layer of the network aware of host virtualization. This prevents pollution of the compute and network domains with virtualization when the virtual network is managed by port profiles defined by the network administrators’ team. VM-FEX also offloads the hypervisor CPU by performing switching in the hardware, thus allowing the hypervisor CPU to do more virtualization-related tasks. VM-FEX technology is well integrated with VMware vCenter, Linux KVM and Hyper-V SR-IOV to simplify cloud management.

· Simplified QoS: Even though fibre-channel and Ethernet are converged in Cisco UCS fabric, built-in support for QoS and lossless Ethernet makes it seamless. Network Quality of Service (QoS) is simplified in Cisco UCS Manager by representing all system classes in one GUI panel.
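The policy resolution behavior described in the list above can be illustrated with a minimal Python sketch. This is not Cisco UCS Manager code: the `Org` class, the org names and the policy names are hypothetical, and the sketch assumes that the fallback search for the policy named "default" also walks from the referring org toward the root.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Org:
    """Hypothetical model of an organizational unit holding named policies."""
    name: str
    parent: Optional["Org"] = None
    policies: dict = field(default_factory=dict)


def _find_upward(org, policy_name):
    """Walk from the given org toward the root and return the closest match."""
    current = org
    while current is not None:
        if policy_name in current.policies:
            return current.name, current.policies[policy_name]
        current = current.parent
    return None


def resolve_policy(org, policy_name):
    """Resolve by name first; if nothing matches, look for the 'default' policy."""
    match = _find_upward(org, policy_name)
    if match is None:
        # Assumption: the fallback search also proceeds toward the root org.
        match = _find_upward(org, "default")
    return match


# Illustrative hierarchy: root -> SAP -> HANA-Prod (names are made up).
root = Org("root", policies={"default": "root-default-bios",
                             "hana-bios": "root-hana-bios"})
tenant = Org("SAP", parent=root, policies={"hana-bios": "sap-hana-bios"})
team = Org("HANA-Prod", parent=tenant)

print(resolve_policy(team, "hana-bios"))  # closest match wins over the root copy
print(resolve_policy(team, "missing"))    # falls back to the policy named "default"
```

Because the referring org only stores a name, the referred policy may be added or removed later without touching the referrer, which is the loose referential integrity the list describes.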

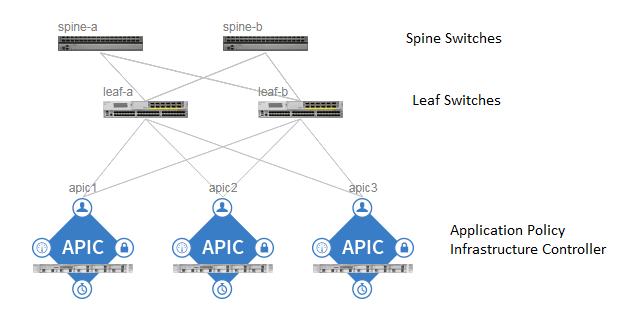

Cisco Application Centric Infrastructure (ACI)

The Cisco Nexus 9000 family of switches supports two modes of operation: NX-OS standalone mode and Application Centric Infrastructure (ACI) fabric mode. In standalone mode, the switch performs as a typical Nexus switch with increased port density, low latency and 40Gb connectivity. In fabric mode, the administrator can take advantage of Cisco ACI. The Cisco Nexus 9000 based FlexPod design with Cisco ACI consists of a Cisco Nexus 9500 and 9300 based spine/leaf switching architecture controlled using a cluster of three Application Policy Infrastructure Controllers (APICs).

Cisco ACI delivers a resilient fabric to satisfy today's dynamic applications. ACI leverages a network fabric that employs industry proven protocols coupled with innovative technologies to create a flexible, scalable, and highly available architecture of low-latency, high-bandwidth links. This fabric delivers application instantiations through the use of profiles that house the requisite characteristics to enable end-to-end connectivity.

The ACI fabric is designed to support the industry trends of management automation, programmatic policies, and dynamic workload provisioning. The ACI fabric accomplishes this with a combination of hardware, policy-based control systems, and closely coupled software to provide advantages not possible in other architectures.

The Cisco ACI fabric consists of three major components:

· The Application Policy Infrastructure Controller (APIC)

· Spine switches

· Leaf switches

The ACI switching architecture uses a leaf-and-spine topology, in which each leaf switch is connected to every spine switch in the network, with no interconnection between leaf switches or spine switches. Each leaf and spine switch is connected with one or more 40 Gigabit Ethernet links or with 100 Gigabit links. Each APIC appliance should connect to two leaf switches for resiliency.
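The leaf-and-spine wiring rule can be expressed as a small check. The following Python sketch is illustrative only; the switch names are placeholders, and it models a single link per leaf-spine pair even though the text allows one or more.

```python
from itertools import product


def leaf_spine_links(leaves, spines):
    """Return one link for every (leaf, spine) pair: a full leaf-to-spine mesh."""
    return list(product(leaves, spines))


def is_valid_fabric(links, leaves, spines):
    """Check that every leaf reaches every spine and no same-tier link exists."""
    leaf_set, spine_set = set(leaves), set(spines)
    cross_tier_only = all(a in leaf_set and b in spine_set for a, b in links)
    full_mesh = set(links) == set(product(leaves, spines))
    return cross_tier_only and full_mesh


# Two leaves and two spines, matching the switch counts validated in this design.
leaves = ["leaf-1", "leaf-2"]
spines = ["spine-1", "spine-2"]
links = leaf_spine_links(leaves, spines)
print(len(links), is_valid_fabric(links, leaves, spines))
```

Adding a leaf therefore adds one link per spine, and a leaf-to-leaf cable would make the check fail.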

Figure 10 Cisco ACI Fabric Architecture

ACI Components

Cisco Application Policy Infrastructure Controller

The Cisco Application Policy Infrastructure Controller (APIC) is the unifying point of automation and management for the ACI fabric. The Cisco APIC provides centralized access to all fabric information, optimizes the application lifecycle for scale and performance, and supports flexible application provisioning across physical and virtual resources. Some of the key benefits of Cisco APIC are:

· Centralized application-level policy engine for physical, virtual, and cloud infrastructures

· Detailed visibility, telemetry, and health scores by application and by tenant

· Designed around open standards and open APIs

· Robust implementation of multi-tenant security, quality of service (QoS), and high availability

· Integration with management systems such as VMware, Microsoft, and OpenStack

The software controller, APIC, is delivered as an appliance and three or more such appliances form a cluster for high availability and enhanced performance. The controller is a physical appliance based on a Cisco UCS® rack server with two 10 Gigabit Ethernet interfaces for connectivity to the leaf switches. The APIC is also equipped with 1 Gigabit Ethernet interfaces for out-of-band management. Controllers can be configured with 10GBASE-T or SFP+ Network Interface Cards (NICs), and this configuration must match the physical format supported by the leaf. In other words, if controllers are configured with 10GBASE-T, they have to be connected to a Cisco ACI leaf with 10GBASE-T ports.

APIC is responsible for all tasks enabling traffic transport including:

· Fabric activation

· Switch firmware management

· Network policy configuration and instantiation

Although the APIC acts as the centralized point of configuration for policy and network connectivity, it is never in line with the data path or the forwarding topology. The fabric can still forward traffic even when communication with the APIC is lost.

APIC provides both a command-line interface (CLI) and a graphical user interface (GUI) to configure and control the ACI fabric. APIC also provides a northbound API through XML and JavaScript Object Notation (JSON) and an open source southbound API.
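As a rough illustration of the northbound API, the following Python sketch builds (but does not send) a login request and a class query URL. The host name and credentials are placeholders, and the endpoint paths and payload shape reflect the commonly documented APIC REST conventions; verify them against the APIC REST API documentation for the release in use.

```python
import json


def login_request(apic_host, username, password):
    """Build the URL and JSON body for an APIC login request (nothing is sent)."""
    url = f"https://{apic_host}/api/aaaLogin.json"
    body = {"aaaUser": {"attributes": {"name": username, "pwd": password}}}
    return url, json.dumps(body)


def class_query_url(apic_host, class_name, fmt="json"):
    """Build a class-level query URL; the payload format is XML or JSON."""
    if fmt not in ("json", "xml"):
        raise ValueError("format must be 'json' or 'xml'")
    return f"https://{apic_host}/api/class/{class_name}.{fmt}"


# Placeholder values for illustration only.
url, body = login_request("apic.example.com", "admin", "secret")
print(url)
print(class_query_url("apic.example.com", "fvTenant"))
```

A client would POST the login body, keep the returned session token, and then issue queries such as the tenant class query shown here.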

For more information on Cisco APIC, refer to:

Leaf Switches

In Cisco ACI, all workloads connect to leaf switches. A leaf switch is typically a fixed form factor Nexus 9300 series or a modular Nexus 9500 series switch that provides physical server and storage connectivity and enforces ACI policies. The latest Cisco ACI fixed form factor leaf nodes allow connectivity up to 25 and 40 Gbps to the server and uplinks of 100 Gbps to the spine. There are a number of leaf switch choices that differ based on functions such as port speed, medium type, multicast routing support, and scale of endpoints.

For a summary of the leaf switch options available, refer to the Cisco ACI Best Practices Guide: https://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/1-x/ACI_Best_Practices/b_ACI_Best_Practices/b_ACI_Best_Practices_chapter_0111.html

Spine Switches

The Cisco ACI fabric forwards traffic primarily based on host lookups. A mapping database stores the information about the leaf switch on which each IP address resides. This information is stored in the fabric cards of the spine switches. All known endpoints in the fabric are programmed in the spine switches. The spine models also differ in the number of endpoints supported in the mapping database, which depends on the type and number of fabric modules installed.

For a summary of the spine switch options available, refer to the Cisco ACI Best Practices Guide: https://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/1-x/ACI_Best_Practices/b_ACI_Best_Practices/b_ACI_Best_Practices_chapter_0111.html

NetApp All Flash FAS and ONTAP

NetApp All Flash FAS (AFF) systems address enterprise storage requirements with high performance, superior flexibility, and best-in-class data management. Built on NetApp ONTAP data management software, AFF systems speed up business without compromising on the efficiency, reliability, or flexibility of IT operations. As an enterprise-grade all-flash array, AFF accelerates, manages, and protects business-critical data and enables an easy and risk-free transition to flash for your data center.

Designed specifically for flash, the NetApp AFF A series all-flash systems deliver industry-leading performance, capacity density, scalability, security, and network connectivity in dense form factors. At up to 7M IOPS per cluster with submillisecond latency, they are the fastest all-flash arrays built on a true unified scale-out architecture. As the industry’s first all-flash arrays to provide both 40 Gigabit Ethernet (40GbE) and 32Gb Fibre Channel connectivity, AFF A series systems eliminate the bandwidth bottlenecks that are increasingly moved to the network from storage as flash becomes faster and faster.

AFF comes with a full suite of acclaimed NetApp integrated data protection software. Key capabilities and benefits include the following:

· Native space efficiency with cloning and NetApp Snapshot® copies, which reduces storage costs and minimizes performance effects.

· Application-consistent backup and recovery, which simplifies application management.

· NetApp SnapMirror® replication software, which replicates to any type of FAS/AFF system—all flash, hybrid, or HDD and on the premises or in the cloud— and reduces overall system costs.

AFF systems are built with innovative inline data reduction technologies:

· Inline data compaction technology uses an innovative approach to place multiple logical data blocks from the same volume into a single 4KB block.

· Inline compression has a near-zero performance effect. Incompressible data detection eliminates wasted cycles.

· Enhanced inline deduplication increases space savings by eliminating redundant blocks.

This version of FlexPod introduces the NetApp AFF A300 series unified scale-out storage system. This controller provides the high-performance benefits of 40GbE and all-flash SSDs and occupies only 3U of rack space. Combined with a disk shelf containing 3.8TB disks, this solution provides ample horsepower and over 90TB of raw capacity while taking up only 5U of valuable rack space. The AFF A300 features a multiprocessor Intel chipset and leverages high-performance memory modules, NVRAM to accelerate and optimize writes, and an I/O-tuned PCIe gen3 architecture that maximizes application throughput. The AFF A300 series comes with integrated unified target adapter (UTA2) ports that support 16Gb Fibre Channel, 10GbE, and FCoE. In addition, 40GbE add-on cards are available.
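The raw capacity and rack space figures can be checked with simple arithmetic. The sketch below assumes the single 24-drive DS224C shelf with 3.8TB SSDs listed in the reference architecture of this document; the variable names are illustrative.

```python
from fractions import Fraction

CONTROLLER_RU = 3          # AFF A300 controller chassis, per the text
TOTAL_RU = 5               # controller plus one disk shelf, per the text
SSD_COUNT = 24             # one DS224C shelf, as listed in the reference architecture
SSD_TB = Fraction("3.8")   # exact decimal value avoids float rounding noise

raw_tb = SSD_COUNT * SSD_TB            # total raw capacity in TB
shelf_ru = TOTAL_RU - CONTROLLER_RU    # rack units left for the disk shelf

print(float(raw_tb), shelf_ru)
```

Raw capacity is before RAID, spares and storage efficiency features, so usable capacity will differ.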

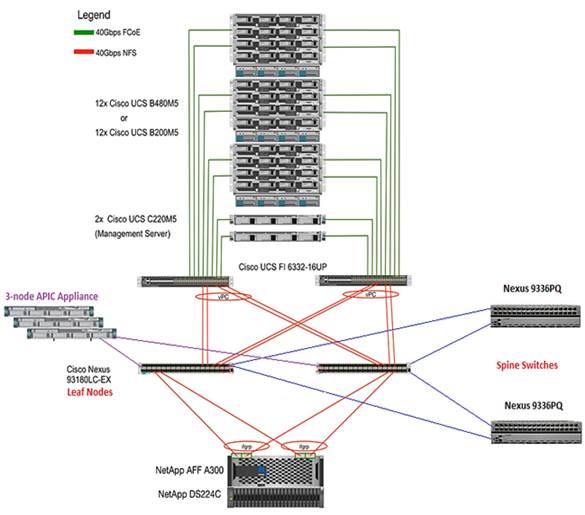

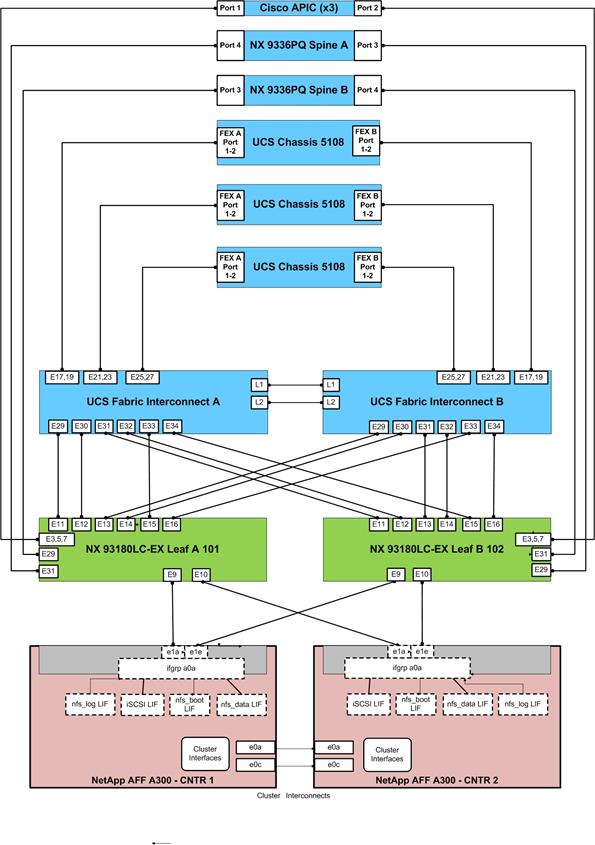

FlexPod with Cisco ACI—Components

FlexPod with ACI is designed to be fully redundant in the compute, network, and storage layers. There is no single point of failure from a device or traffic path perspective. Figure 11 shows how the various elements are connected together.

Figure 11 FlexPod Design with Cisco ACI

Fabric: As in the previous designs of FlexPod, link aggregation technologies play an important role in FlexPod with ACI, providing improved aggregate bandwidth and link resiliency across the solution stack. The NetApp storage controllers, Cisco Unified Computing System, and Cisco Nexus 9000 platforms support active port channeling using the 802.3ad standard Link Aggregation Control Protocol (LACP). In addition, the Cisco Nexus 9000 series features virtual Port Channel (vPC) capabilities. vPC allows links that are physically connected to two different Cisco Nexus 9000 Series devices to appear as a single "logical" port channel to a third device, essentially offering device fault tolerance. Note in Figure 11 that vPC peer links are no longer needed for leaf switches. The peer link is handled in the leaf-to-spine connections, and any two leaves in an ACI fabric can be paired in a vPC. The Cisco UCS Fabric Interconnects and NetApp FAS controllers benefit from the Cisco Nexus vPC abstraction, gaining link and device resiliency as well as full utilization of a non-blocking Ethernet fabric.

Compute: Each Fabric Interconnect (FI) is connected to both the leaf switches and the links provide a robust 40GbE connection between the Cisco Unified Computing System and ACI fabric. Figure 12 illustrates the use of vPC enabled 40GbE uplinks between the Cisco Nexus 9000 leaf switches and Cisco UCS 3rd Gen FIs. Additional ports can be easily added to the design for increased bandwidth as needed. Each Cisco UCS 5108 chassis is connected to the FIs using a pair of ports from each IO Module for a combined 40G uplink. FlexPod design supports direct attaching the Cisco UCS C-Series servers into the FIs. FlexPod designs mandate Cisco UCS C-Series management using Cisco UCS Manager to provide a uniform look and feel across blade and standalone servers.

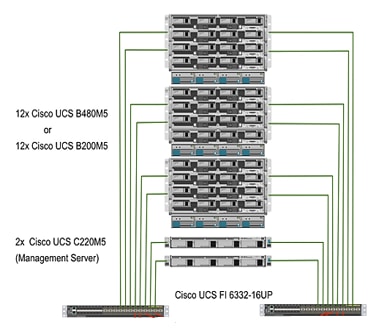

Figure 12 Compute Connectivity

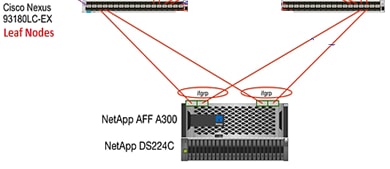

Storage: The ACI-based FlexPod design is an end-to-end IP-based storage solution that supports SAN access by using iSCSI. The solution provides a 40GbE fabric that is defined by Ethernet uplinks from NetApp storage controllers connected to the Cisco Nexus switches.

Figure 13 Storage Connectivity to ACI Leaf Switches

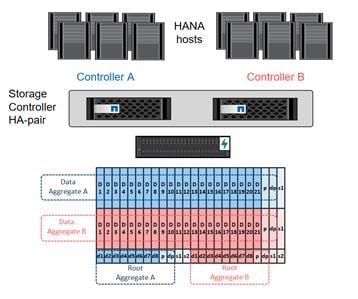

Figure 13 shows the initial storage configuration of this solution as a two-node high availability (HA) pair running clustered Data ONTAP in a switchless cluster configuration. Storage system scalability is easily achieved by adding storage capacity (disks and shelves) to an existing HA pair, or by adding more HA pairs to the cluster or storage domain.

Validated System Hardware Components

The following components were used to validate this Cisco Nexus 9000 ACI design:

· Cisco Unified Computing System 3.2 (3d)

· Cisco Nexus 2304 Fabric Extenders

· Cisco UCS B480 M5 servers

· Cisco UCS C220 M5 servers

· Cisco Nexus 93180LC-EX Series Leaf Switch

· Cisco Nexus 9336PQ Spine Switch

· Cisco Application Policy Infrastructure Controller (APIC) 2.2(3s)

· NetApp All-Flash FAS Unified Storage

The FlexPod Datacenter ACI solution for SAP HANA with NetApp All Flash FAS storage provides an end-to-end architecture with Cisco and NetApp technologies that demonstrate support for multiple SAP and SAP HANA workloads with high availability and server redundancy. The architecture uses Cisco UCS Manager with combined Cisco UCS B-Series blade servers and C-Series rack servers with NetApp AFF A300 series storage attached to the Cisco Nexus 93180LC-EX ACI leaf switches. The Cisco UCS C-Series Rack Servers are connected directly to Cisco UCS Fabric Interconnect with single-wire management feature. This infrastructure provides PXE and iSCSI boot options for hosts with file-level and block-level access to shared storage. The reference architecture reinforces the “wire-once” strategy, because when the additional storage is added to the architecture, no re-cabling is required from hosts to the Cisco UCS Fabric Interconnect.

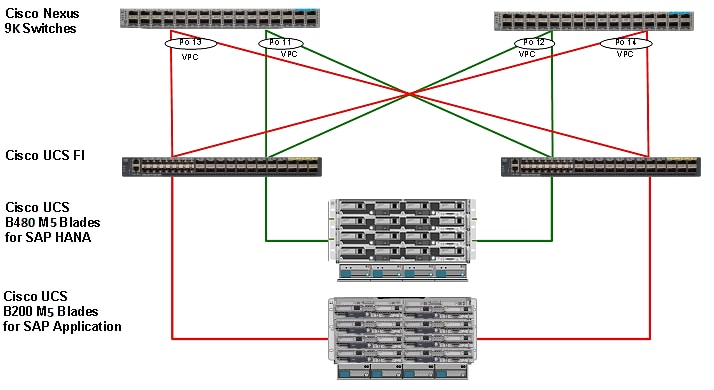

Figure 14 shows the FlexPod Datacenter reference architecture for SAP HANA workloads described in this Cisco Validated Design. It highlights the FlexPod hardware components and the network connections for a configuration with IP-based storage.

Figure 14 FlexPod ACI Solution for SAP HANA - Reference Architecture

Figure 14 includes the following:

· Cisco Unified Computing System

- 2 x Cisco UCS 6332 Fabric Interconnects (32 x 40Gb/s ports)

- 3 x Cisco UCS 5108 Blade Chassis with 2 x Cisco UCS 2304 Fabric Extenders with 4x 40 Gigabit Ethernet interfaces

- 12 x Cisco UCS B480 M5 High-Performance Blade Servers with 2x Cisco UCS Virtual Interface Card (VIC) 1380 and 2x Cisco UCS Virtual Interface Card (VIC) 1340

Or

- 12 x Cisco UCS C480 M5 High-Performance Rack-Mount Servers with 2x Cisco UCS Virtual Interface Card (VIC) 1385.

Or

- 12 x Cisco UCS B200 M5 High-Performance Blade Servers with Cisco UCS Virtual Interface Card (VIC) 1340

- 2 x Cisco UCS C220 M5 High-Performance Rack Servers with Cisco UCS Virtual Interface Card (VIC) 1385

· Cisco ACI

- 2 x Cisco Nexus 93180LC-EX Switch for 40/100 Gigabit Ethernet for the ACI Leafs

- 2 x Cisco Nexus 9336PQ Switch for ACI Spines

- 3 node APIC cluster appliance

· NetApp AFF A300 Storage

- NetApp AFF A300 Storage system using ONTAP 9.3

- 1 x NetApp Disk Shelf DS224C with 24x 3.8TB SSD

Although this is the base design, each of the components can be scaled easily to support specific business requirements. Additional servers or even blade chassis can be deployed to increase compute capacity without additional network components. Two Cisco UCS 6332 Fabric Interconnects can support up to:

· 20 Cisco UCS B480 M5 Servers

· 20 Cisco UCS C480 M5 Servers

· 40 Cisco UCS C220 M5/C240 M5 Servers

For every twelve Cisco UCS servers, one NetApp AFF A300 HA pair with ONTAP is required to meet the SAP HANA storage performance. When adding compute and storage for scaling, the network bandwidth between the Cisco UCS Fabric Interconnects and the Cisco Nexus 9000 switches must also be increased. Each NetApp storage system requires two additional 40GbE connections from each Cisco UCS Fabric Interconnect to the Cisco Nexus 9000 switches.

The number of Cisco UCS C-Series or Cisco UCS B-Series Servers and the NetApp FAS storage type depends on the number of SAP HANA instances. SAP specifies the storage performance for SAP HANA, based on a per server rule independent of the server size. In other words, the maximum number of servers per storage remains the same if you use Cisco UCS B200 M5 with 192GB physical memory or Cisco UCS B480 M5 with 6TB physical memory.
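The sizing rule above can be sketched as a small calculation. This Python example is illustrative rather than an official sizing tool: it assumes one HA pair per started group of twelve servers, and it counts two 40GbE uplinks per HA pair from each of the two Fabric Interconnects, including for the first HA pair.

```python
import math

SERVERS_PER_HA_PAIR = 12        # per-server rule, independent of server size
UPLINKS_PER_HA_PAIR_PER_FI = 2  # assumption: applied to every HA pair, including the first
FABRIC_INTERCONNECTS = 2


def storage_scaling(server_count):
    """Estimate HA pairs and FI-to-leaf 40GbE uplinks for a given server count."""
    ha_pairs = math.ceil(server_count / SERVERS_PER_HA_PAIR)
    uplinks_per_fi = ha_pairs * UPLINKS_PER_HA_PAIR_PER_FI
    return {
        "ha_pairs": ha_pairs,
        "uplinks_per_fi": uplinks_per_fi,
        "total_uplinks": uplinks_per_fi * FABRIC_INTERCONNECTS,
    }


for servers in (12, 13, 24):
    print(servers, storage_scaling(servers))
```

Because the rule is per server, the result is the same whether the servers are Cisco UCS B200 M5 or Cisco UCS B480 M5 systems.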

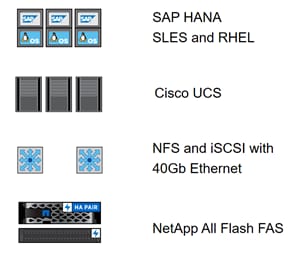

Hardware and Software Components

This architecture is based on and supports the following hardware and software components:

· SAP HANA

- SAP Business Suite on HANA or SAP Business Warehouse on HANA

- S/4HANA or BW/4HANA

- SAP HANA single-host or multiple-host configurations

· Operating System

- SUSE Linux Enterprise for SAP (SLES for SAP)

- Red Hat Enterprise Linux

· Cisco UCS Server

- Bare Metal

· Network

- 40GbE end-to-end

- NFS for SAP HANA data access

- PXE NFS or iSCSI for OS boot

· Storage

- NetApp AFF A-series array

Figure 15 shows an overview of the hardware and software components.

Figure 15 Hardware and Software Component Overview

Operating System Provisioning

All operating system images are provisioned from the external NetApp storage, leveraging either:

· PXE boot and NFS root file system

· iSCSI boot

Figure 16 shows an overview of the different operating system provisioning methods.

Figure 16 Overview Operating System Provisioning

SAP HANA Database Volumes

All SAP HANA database volumes (data, log, and shared) are mounted with NFS from the central storage.

Figure 17 shows an overview of the SAP HANA database volumes.

Figure 17 SAP HANA Database Volumes

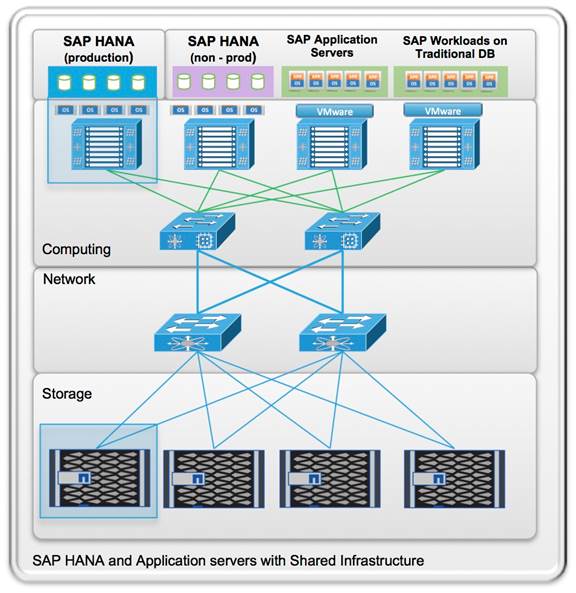

Figure 18 shows a block diagram of a complete SAP landscape built using the FlexPod architecture. It comprises multiple SAP HANA systems and SAP applications with shared infrastructure, as illustrated in the figure. The FlexPod Datacenter reference architecture for SAP solutions supports SAP HANA systems in both Scale-Up mode and Scale-Out mode with multiple servers on the shared infrastructure.

The FlexPod datacenter solution manages the communication between the application server and the SAP HANA database. This approach enhances system performance by improving bandwidth and latency. It also improves system reliability by including the application server in the disaster-tolerance solution with the SAP HANA database.

Figure 18 Shared Infrastructure Block Diagram

The FlexPod architecture for SAP HANA TDI can run other workloads on the same infrastructure, as long as the rules for workload isolation are considered.

You can run the following workloads on the FlexPod architecture:

1. Production SAP HANA databases

2. SAP application servers

3. Non-production SAP HANA databases

4. Production and non-production SAP systems on traditional databases

5. Non-SAP workloads

To make sure that the storage KPIs for SAP HANA production databases are fulfilled, the SAP HANA production databases must have a dedicated storage controller of a NetApp FAS Storage HA pair. SAP application servers could share the same storage controller with the production SAP HANA databases.

This document describes in detail the procedure for the reference design and outlines the network, compute and storage configurations and deployment process for running SAP HANA on FlexPod platform.

This document does not describe the procedure for deploying SAP applications.

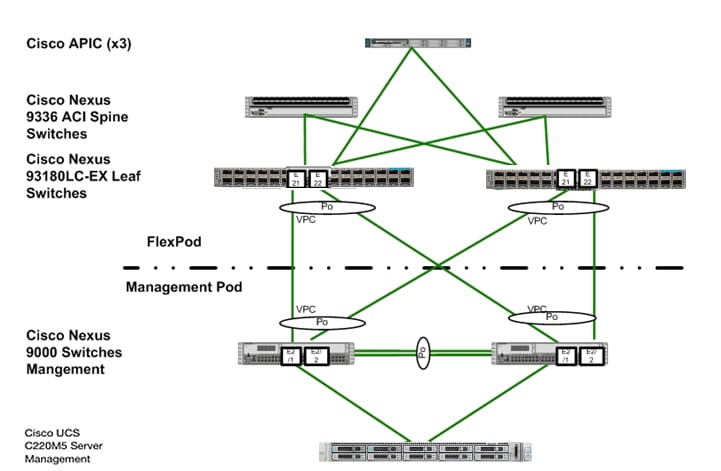

Management Pod

Comprehensive management is an important element for a FlexPod environment running SAP HANA, especially in a system involving multiple FlexPod platforms; the Management Pod was built to handle this efficiently. Building a dedicated management environment is optional; you can use your existing management environment for the same functionality. The Management Pod includes (but is not limited to) a pair of Cisco Nexus 9000 Series switches in standalone mode (readily available Cisco Nexus 9396PX switches were used in the validation Pod) and a pair of Cisco UCS C220 M5 Rack-Mount Servers. The Cisco Nexus 9000 Series switches provide the out-of-band management network through a dual-homed Nexus 2K switch providing 1GbE connections. The Cisco UCS C220 M5 Rack-Mount Servers run ESXi hosting the PXE boot server, vCenter, and additional management and monitoring virtual machines.

It is recommended to use additional NetApp FAS Storage in the Management Pod for redundancy and failure scenarios.

Management Pod switches can connect directly to FlexPod switches or to your existing network infrastructure. If your existing network infrastructure is used, the uplinks from the FlexPod switches are connected to the same pair of switches as the uplinks from the Management Pod switches, as shown in Figure 19.

The LAN switch must allow all the necessary VLANs for managing the FlexPod environment.

Figure 19 Management Pod Using Customer Existing Network

The dedicated Management Pod can directly connect to each FlexPod environment as shown in Figure 20. In this topology, the switches are configured as port-channels for unified management. This CVD describes the procedure for the direct connection option.

Figure 20 Direct Connection of Management Pod to FlexPod

This section describes the various implementation options and their requirements for a SAP HANA system.

SAP HANA System on a Single Host - Scale-Up

A single-host system is the simplest of the installation types. It is possible to run an SAP HANA system entirely on one host and then scale the system up as needed. All data and processes are located on the same server and can be accessed locally. The network requirements for this option are modest: a minimum of one 1-Gb Ethernet (access) network and one 10/40-Gb Ethernet storage network is sufficient to run SAP HANA scale-up.

With the SAP HANA TDI option, multiple SAP HANA scale-up systems can be built on a shared infrastructure.

SAP HANA System on Multiple Hosts Scale-Out

The SAP HANA Scale-Out option is used if the SAP HANA system does not fit into the main memory of a single server based on the rules defined by SAP. In this method, multiple independent servers are combined to form one system and the load is distributed among multiple servers. In a distributed system, each index server is usually assigned to its own host to achieve maximum performance. It is possible to assign different tables to different hosts (partitioning the database), or a single table can be split across hosts (partitioning of tables). SAP HANA Scale-Out supports failover scenarios and high availability. Individual hosts in a distributed system have different roles (master, worker, slave, standby) depending on the task.

Some use cases are not supported on SAP HANA Scale-Out configuration and it is recommended to check with SAP whether a use case can be deployed as a Scale-Out solution.

The network requirements for this option are higher than for Scale-Up systems. In addition to the client and application access network and the storage access network, a node-to-node network is necessary. One 10 Gigabit Ethernet (access) network, one 10 Gigabit Ethernet (node-to-node) network, and one 10 Gigabit Ethernet storage network are required to run an SAP HANA Scale-Out system. Additional network bandwidth is required to support system replication or backup capability.

Based on the SAP HANA TDI option for shared storage and shared network, multiple SAP HANA Scale-Out systems can be built on a shared infrastructure.

Additional information is available at: http://saphana.com.

This document does not cover the updated information published by SAP after Q1/2017.

CPU

SAP HANA 2.0 (TDI) supports servers equipped with Intel Xeon processor E7-8880v3, E7-8890v3, E7-8880v4, E7-8890v4, and all Skylake CPUs with more than 8 cores. In addition, the Intel Xeon processor E5-26xx v4 is supported for scale-up systems with the SAP HANA TDI option.

Memory

SAP HANA is supported in the following memory configurations:

· Homogeneous symmetric assembly of dual in-line memory modules (DIMMs); for example, DIMM size or speed should not be mixed

· Maximum use of all available memory channels

· SAP HANA 2.0 Memory per socket up to 1024 GB for SAP NetWeaver Business Warehouse (BW) and DataMart

· SAP HANA 2.0 Memory per socket up to 1536 GB for SAP Business Suite on SAP HANA (SoH) on 2- or 4-socket server
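
As a rough illustration of the per-socket limits in the list above, the following Python sketch multiplies the stated limit by the number of sockets. The helper name `max_memory_gb` and the workload labels `"BW"` and `"SoH"` are assumptions introduced for this example only; the SoH limit is applied only to 2- or 4-socket servers, as stated in the list.

```python
# Per-socket memory limits in GB for SAP HANA 2.0, taken from the list above.
PER_SOCKET_LIMIT_GB = {"BW": 1024, "SoH": 1536}


def max_memory_gb(sockets: int, workload: str) -> int:
    """Return the upper memory bound in GB for a server (hypothetical helper)."""
    if sockets < 1:
        raise ValueError("sockets must be a positive integer")
    if workload not in PER_SOCKET_LIMIT_GB:
        raise ValueError(f"unknown workload: {workload!r}")
    # The 1536 GB per-socket figure is stated only for 2- or 4-socket servers.
    if workload == "SoH" and sockets not in (2, 4):
        raise ValueError("SoH limit is stated only for 2- or 4-socket servers")
    return sockets * PER_SOCKET_LIMIT_GB[workload]


if __name__ == "__main__":
    for sockets in (2, 4):
        print(sockets, "sockets:",
              max_memory_gb(sockets, "BW"), "GB (BW),",
              max_memory_gb(sockets, "SoH"), "GB (SoH)")
```

For a 2-socket server this yields 2048 GB for BW, which is consistent with the 2 TB upper bound listed for the 2-socket servers in Table 1.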

CPU and Memory Combinations

SAP HANA allows for a specific set of CPU and memory combinations. Table 1 describes the list of certified Cisco UCS servers for SAP HANA with supported Memory and CPU configuration for different use cases.

Table 1 List of Cisco UCS Servers Defined in FlexPod Datacenter Solution for SAP

| Cisco UCS Server | CPU | Supported Memory | Scale-Up/Suite on HANA | Scale-Out |
|---|---|---|---|---|
| Cisco UCS B200 M5 | 2 x Intel Xeon | 128 GB to 2 TB for BW | Supported | Not supported |
| Cisco UCS C220 M5 | 2 x Intel Xeon | 128 GB to 2 TB for BW | Supported | Not supported |
| Cisco UCS C240 M5 | 2 x Intel Xeon | 128 GB to 2 TB for BW | Supported | Not supported |
| Cisco UCS B480 M5 | 4 x Intel Xeon | 256 GB to 4 TB for BW | Supported | Supported |
| Cisco UCS C480 M5 | 4 x Intel Xeon | 256 GB to 4 TB for BW | Supported | Supported |
| Cisco C880 M5 | 8 x Intel Xeon | 2 TB to 6 TB for BW | Supported | Supported |

Network

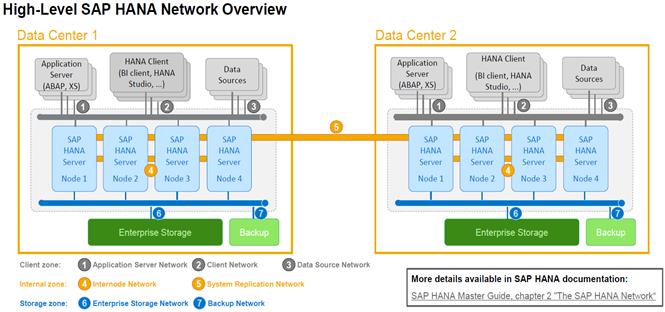

A SAP HANA data center deployment can range from a database running on a single host to a complex distributed system. Distributed systems can become complex, with multiple hosts located at a primary site that has one or more secondary sites, supporting a distributed multi-terabyte database with full fault and disaster recovery.

SAP HANA has different types of network communication channels to support the different SAP HANA scenarios and setups:

· Client zone: Channels used for external access to SAP HANA functions by end-user clients, administration clients, and application servers, and for data provisioning through SQL or HTTP

· Internal zone: Channels used for SAP HANA internal communication within the database or, in a distributed scenario, for communication between hosts

· Storage zone: Channels used for storage access (data persistence) and for backup and restore procedures

Table 2 lists all the networks defined by SAP or Cisco or requested by customers.

Table 2 List of Known Networks

| Name | Use Case | Solutions | Bandwidth requirements |
|---|---|---|---|
| Client Zone Networks | | | |
| Application Server Network | SAP Application Server to DB communication | All | 10 or 40 GbE |
| Client Network | User / Client Application to DB communication | All | 10 or 40 GbE |
| Data Source Network | Data import and external data integration | Optional for all SAP HANA systems | 10 or 40 GbE |
| Internal Zone Networks | | | |
| Inter-Node Network | Node to node communication within a scale-out configuration | Scale-Out | 40 GbE |
| System Replication Network | | For SAP HANA Disaster Tolerance | TBD with Customer |
| Storage Zone Networks | | | |
| Backup Network | Data Backup | Optional for all SAP HANA systems | 10 or 40 GbE |
| Storage Network | Node to Storage communication | All | 40 GbE |
| Infrastructure Related Networks | | | |
| Administration Network | Infrastructure and SAP HANA administration | Optional for all SAP HANA systems | 1 GbE |
| Boot Network | Boot the Operating Systems via PXE/NFS or iSCSI | Optional for all SAP HANA systems | 40 GbE |

Details about the network requirements for SAP HANA are available in the white paper from SAP SE at: http://www.saphana.com/docs/DOC-4805.

The network needs to be properly segmented and must be connected to the same core/backbone switch, as shown in Figure 21, based on your customer’s high-availability and redundancy requirements for the different SAP HANA network segments.

Figure 21 High-Level SAP HANA Network Overview

Based on the listed network requirements, every server for scale-up systems must be equipped with 2 x 10 Gigabit Ethernet interfaces to establish communication with the application or user (Client Zone) and a 10 GbE interface for storage access.

For Scale-Out solutions, an additional redundant network for SAP HANA node to node communication with 10 GbE is required (Internal Zone).

For more information on SAP HANA Network security, refer to the SAP HANA Security Guide.

Storage

As an in-memory database, SAP HANA uses storage devices to save a copy of the data for the purpose of startup and fault recovery without data loss. The choice of the specific storage technology is driven by various requirements such as size, performance, and high availability. To use a storage system with the Tailored Datacenter Integration (TDI) option, the storage must be certified for the SAP HANA TDI option at: https://www.sap.com/dmc/exp/2014-09-02-hana-hardware/enEN/enterprise-storage.html.

All relevant information about storage requirements is documented in this white paper: https://www.sap.com/documents/2015/03/74cdb554-5a7c-0010-82c7-eda71af511fa.html.

SAP can only support performance related SAP HANA topics if the installed solution has passed the validation test successfully.

Refer to section 2.8, Hardware Checks for Tailored Datacenter Integration, of the SAP HANA Administration Guide for the hardware check test tool and the related documentation.

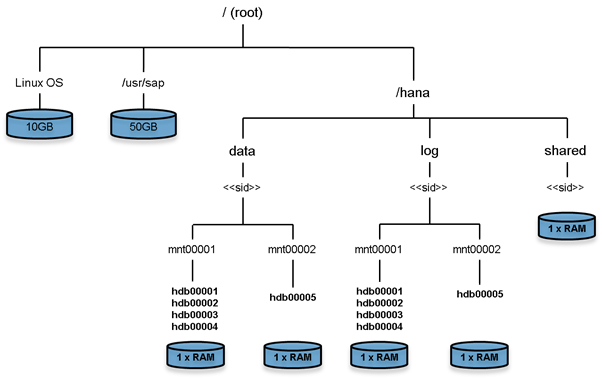

Filesystem Layout

Figure 22 shows the file system layout and the required storage sizes to install and operate SAP HANA. For the Linux OS installation (/root), 10 GB of disk size is recommended. Additionally, 50 GB must be provided for /usr/sap, since this volume is used for the SAP software that supports SAP HANA.

While installing SAP HANA on a host, specify the mount point for the installation binaries (/hana/shared/<sid>), data files (/hana/data/<sid>) and log files (/hana/log/<sid>), where sid is the instance identifier of the SAP HANA installation.

Figure 22 File System Layout for 2 Node Scale-Out System

The storage sizing for filesystem is based on the amount of memory equipped on the SAP HANA host.

Below is a sample filesystem size for a single system appliance configuration:

Root-FS: 10 GB

/usr/sap: 50 GB

/hana/shared: 1x RAM or 1TB whichever is less

/hana/data: 1 x RAM

/hana/log: ½ of the RAM size for systems <= 256GB RAM and min ½ TB for all other systems
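
The sample sizes above can be expressed as a small calculation. The following Python sketch is illustrative only: the function name `single_host_layout_gb` is hypothetical, 1 TB is taken as 1024 GB, and the "min ½ TB" wording is read as a 512 GB log volume. Both readings are assumptions of this example rather than statements from SAP.

```python
def single_host_layout_gb(ram_gb: float) -> dict:
    """Sample filesystem sizes in GB for a single-system configuration.

    Hypothetical helper that mirrors the sample list above.
    """
    if ram_gb <= 0:
        raise ValueError("ram_gb must be positive")
    return {
        "Root-FS": 10,
        "/usr/sap": 50,
        # 1 x RAM or 1 TB, whichever is less (1 TB assumed to be 1024 GB).
        "/hana/shared": min(ram_gb, 1024),
        # 1 x RAM.
        "/hana/data": ram_gb,
        # Half of RAM up to 256 GB RAM; otherwise the 1/2 TB minimum (512 GB).
        "/hana/log": ram_gb / 2 if ram_gb <= 256 else 512,
    }


if __name__ == "__main__":
    for ram in (256, 1536):
        print(ram, "GB RAM:", single_host_layout_gb(ram))
```

Treat the result as a starting point for planning and confirm the final sizes against the current SAP storage requirements.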

With a distributed installation of SAP HANA Scale-Out, each server will have the following:

Root-FS: 10 GB

/usr/sap: 50 GB

The installation binaries, trace files, and configuration files are stored on a shared filesystem, which should be accessible to all hosts in the distributed installation. The size of the shared filesystem should be 1 x RAM of a worker node for every 4 nodes in the cluster. For example, in a distributed installation with three hosts with 512 GB of memory each, the shared file system should be 1 x 512 GB = 512 GB; for five hosts with 512 GB of memory each, the shared file system should be 2 x 512 GB = 1024 GB.
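
The two examples in the preceding paragraph are consistent with rounding the host count up to the next multiple of four. A minimal Python sketch under that assumption follows; the function name `shared_fs_size_gb` is hypothetical.

```python
def shared_fs_size_gb(hosts: int, worker_ram_gb: int) -> int:
    """Shared filesystem size in GB: 1 x worker RAM per started group of 4 hosts."""
    if hosts < 1 or worker_ram_gb <= 0:
        raise ValueError("hosts and worker_ram_gb must be positive")
    groups_of_four = (hosts + 3) // 4  # integer ceiling of hosts / 4
    return groups_of_four * worker_ram_gb


if __name__ == "__main__":
    print(shared_fs_size_gb(3, 512))  # three hosts with 512 GB each
    print(shared_fs_size_gb(5, 512))  # five hosts with 512 GB each
```

With the figures from the text, three hosts give 512 GB and five hosts give 1024 GB.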

For each SAP HANA host there should be a mount point for data and log volume. The size of the file system for data volume with TDI option is one times the host memory:

/hana/data/<sid>/mntXXXXX: 1x RAM

For solutions based on Intel Skylake 81XX CPU the size of the Log volume must be as follows:

· Half of the server RAM size for systems with ≤ 512 GB RAM

· 512 GB for systems with > 512 GB RAM
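
The per-host data and log volume rules above can be sketched in Python as follows. The function name `per_host_volumes_gb` is hypothetical, and the log rule shown is the one stated for solutions based on Intel Skylake 81XX CPUs.

```python
def per_host_volumes_gb(ram_gb: float) -> dict:
    """Data and log volume sizes in GB for one SAP HANA host (TDI option)."""
    if ram_gb <= 0:
        raise ValueError("ram_gb must be positive")
    return {
        # Data volume: one times the host memory.
        "data": ram_gb,
        # Log volume: half of RAM up to and including 512 GB RAM, else 512 GB.
        "log": ram_gb / 2 if ram_gb <= 512 else 512,
    }


if __name__ == "__main__":
    for ram in (256, 512, 1024):
        print(ram, "GB RAM:", per_host_volumes_gb(ram))
```

Each host in a Scale-Out system needs its own pair of volumes, so the totals scale with the number of hosts that hold data.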

Operating System

The supported operating systems for SAP HANA are as follows:

· SUSE Linux Enterprise Server for SAP Applications

· Red Hat Enterprise Linux for SAP HANA

High Availability

· Internal storage: A RAID-based configuration is preferred

· External storage: Redundant data paths, dual controllers, and a RAID-based configuration are required

· Ethernet switches: Two or more independent switches should be used

SAP HANA Scale-Out comes with an integrated high-availability function. If an SAP HANA system is configured with a stand-by node, a failed part of SAP HANA will start on the stand-by node automatically. For automatic host failover, the storage connector API must be properly configured for the implementation and operation of SAP HANA.

For detailed information from SAP see: http://saphana.com or http://service.sap.com/notes.

Table 3 details the software revisions used for validating various components of the FlexPod Datacenter Reference Architecture for SAP HANA.

| Component | | Software version | Count |
|---|---|---|---|
| Network | Nexus 93180LC-EX [ACI Leaf] | 3.2(2l) [build 13.2(2l)] | 2 |
| | Nexus 9336PQ [ACI Spine] | 3.2(2l) [build 13.2(2l)] | 2 |
| | 3 node Cluster APIC appliance | 3.2(2l) | 1 unit |
| Compute | Cisco UCS Fabric Interconnect 6332-16UP | 3.2(3d) | 2 |
| | Cisco UCS B480 M5 w/1340/1380 VIC | 3.3(3d)B | 12 |
| | Cisco UCS C220 M5 w/1385 VIC [Management Servers] | 3.1(3g) | 2 |
| Storage | NetApp AFF A300 | ONTAP 9.3 | 1 |
| | NetApp DS224C Flash disk shelf | | 1 |
| Software OS | SLES4SAP | 12 SP3 | |
| | Red Hat | 7.4 | |

This document provides details for configuring a fully redundant, highly available configuration for a FlexPod unit with ONTAP storage. Therefore, reference is made to which component is being configured with each step, either 01 or 02. For example, node01 and node02 are used to identify the two NetApp storage controllers that are provisioned with this document, and Cisco Nexus A and Cisco Nexus B identify the pair of Cisco Nexus switches that are configured.

The Cisco UCS Fabric Interconnects are similarly configured. Additionally, this document details the steps for provisioning multiple Cisco UCS hosts, and these are identified sequentially: HANA-Server01, HANA-Server02, and so on. Finally, to indicate that you should include information pertinent to your environment in a given step, <text> appears as part of the command structure. Review the following example for the network port vlan create command:

Usage:

network port vlan create ?

[-node] <nodename> Node

{ [-vlan-name] {<netport>|<ifgrp>} VLAN Name

| -port {<netport>|<ifgrp>} Associated Network Port

[-vlan-id] <integer> } Network Switch VLAN Identifier

Example:

network port vlan create -node <node01> -vlan-name i0a-<vlan id>
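
To show how the `<text>` placeholders are meant to be replaced, the following Python sketch fills a command template with site-specific values. It is not part of the validated procedure: the helper `fill_placeholders`, the node name `example-node-01`, and the VLAN ID `100` are all hypothetical, and the template is modeled on the example above.

```python
import re

# Matches single-bracket placeholders such as <node01> or <vlan id>.
PLACEHOLDER = re.compile(r"<([^<>]+)>")


def fill_placeholders(template: str, values: dict) -> str:
    """Replace every <name> in the template with values[name] (hypothetical helper)."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            # Fail loudly instead of sending an incomplete command to a device.
            raise KeyError(f"no value supplied for <{name}>")
        return str(values[name])

    return PLACEHOLDER.sub(substitute, template)


# Template modeled on the example above; the values are placeholders for a real site.
template = "network port vlan create -node <node01> -vlan-name i0a-<vlan id>"
site_values = {"node01": "example-node-01", "vlan id": 100}

command = fill_placeholders(template, site_values)
print(command)
```

Raising an error for a missing value is a deliberate choice in this sketch, so that a placeholder is never left unresolved in a generated command.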

This document is intended to enable you to fully configure the customer environment. In this process, various steps require you to insert customer-specific naming conventions, IP addresses, and VLAN schemes, etc. Table 4 lists the configuration variables that are used throughout this document. This table can be completed based on the specific site variables and used in implementing the document configuration steps.

Table 4 Configuration Variables

| Variable | Description | Customer Implementation Value |
|---|---|---|
| <<var_nexus_mgmt_A_hostname>> | Cisco Nexus Management A host name | |
| <<var_nexus_mgmt_A_mgmt0_ip>> | Out-of-band Cisco Nexus Management A management IP address | |
| <<var_nexus_mgmt_A_mgmt0_netmask>> | Out-of-band management network netmask | |
| <<var_nexus_mgmt_A_mgmt0_gw>> | Out-of-band management network default gateway | |
| <<var_nexus_mgmt_B_hostname>> | Cisco Nexus Management B host name | |
| <<var_nexus_mgmt_B_mgmt0_ip>> | Out-of-band Cisco Nexus Management B management IP address | |
| <<var_nexus_mgmt_B_mgmt0_netmask>> | Out-of-band management network netmask | |
| <<var_nexus_mgmt_B_mgmt0_gw>> | Out-of-band management network default gateway | |
| <<var_global_ntp_server_ip>> | NTP server IP address | |
| <<var_oob_vlan_id>> | Out-of-band management network VLAN ID | |
| <<var_admin_vlan_id_mgmt>> | Mgmt PoD - Admin Network VLAN | |
| <<var_admin_vlan_id>> | Admin network VLAN ID – UCS | |
| <<var_boot_vlan_id>> | PXE boot network VLAN ID – UCS | |
| <<var_boot_vlan_id_aci>> | PXE boot network VLAN ID – PXEserver Mgmt Pod | |
| <<var_boot_vlan_id_aci_storage>> | PXE boot network VLAN ID Storage | |
| <<var_nexus_vpc_domain_mgmt_id>> | Unique Cisco Nexus switch VPC domain ID for Management Switch | |
| <<var_nexus_vpc_domain_id>> | Unique Cisco Nexus switch VPC domain ID for Management Switch | |
| <<var_vm_host_mgmt_01_ip>> | ESXi Server 01 for Management Server IP Address | |
| <<var_vm_host_mgmt_02_ip>> | ESXi Server 02 for Management Server IP Address | |
| <<var_nexus_A_hostname>> | Cisco Nexus Mgmt-A host name | |
| <<var_nexus_A_mgmt0_ip>> | Out-of-band Cisco Nexus Mgmt-A management IP address | |
| <<var_nexus_A_mgmt0_netmask>> | Out-of-band management network netmask | |
| <<var_nexus_A_mgmt0_gw>> | Out-of-band management network default gateway | |
| <<var_nexus_B_hostname>> | Cisco Nexus Mgmt-B host name | |
| <<var_nexus_B_mgmt0_ip>> | Out-of-band Cisco Nexus Mgmt-B management IP address | |
| <<var_nexus_B_mgmt0_netmask>> | Out-of-band management network netmask | |
| <<var_nexus_B_mgmt0_gw>> | Out-of-band management network default gateway | |
| <<var_storage_data_vlan_id>> | Storage network for HANA Data VLAN ID – UCS | |
| <<var_storage_data_vlan_id_aci>> | Storage HANA Data Network VLAN ID – ACI | |
| <<var_storage_log_vlan_id>> | Storage network for HANA Log VLAN ID – UCS | |
| <<var_storage_log_vlan_id_aci>> | Storage HANA Log Network VLAN ID – ACI | |
| <<var_internal_vlan_id>> | Node to Node Network for HANA Data/log VLAN ID | |
| <<var_backup_vlan_id>> | Backup Network for HANA Data/log VLAN ID | |
| <<var_client_vlan_id>> | Client Network for HANA Data/log VLAN ID | |
| <<var_appserver_vlan_id>> | Application Server Network for HANA Data/log VLAN ID | |
| <<var_datasource_vlan_id>> | Data source Network for HANA Data/log VLAN ID | |
| <<var_replication_vlan_id>> | Replication Network for HANA Data/log VLAN ID | |
| <<iSCSI_vlan_id_A>> | iSCSI-A VLAN ID initiator UCS | |
| <<iSCSI_vlan_id_B>> | iSCSI-B VLAN ID initiator UCS | |
| <<iSCSI_vlan_id_A_aci>> | iSCSI-A VLAN ID Target ACI | |
| <<iSCSI_vlan_id_B_aci>> | iSCSI-B VLAN ID Target ACI | |

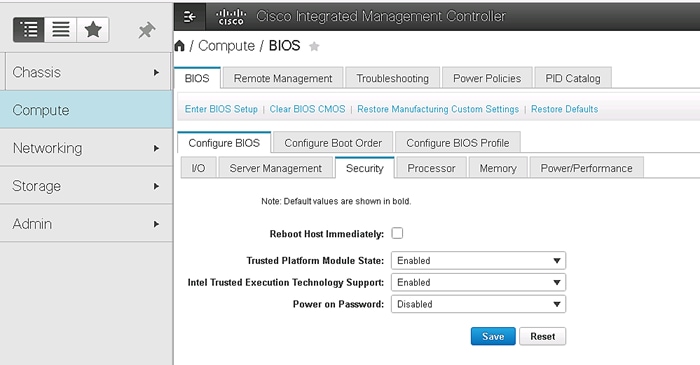

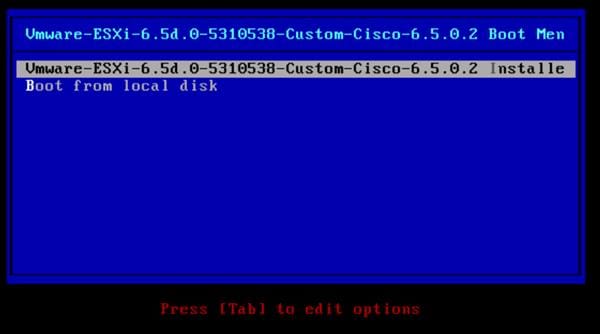

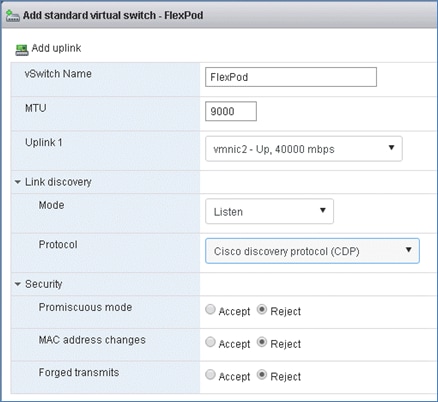

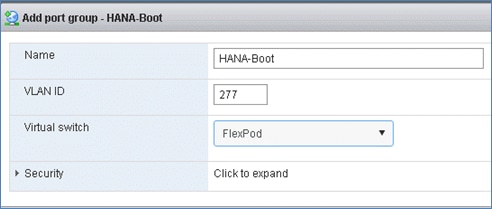

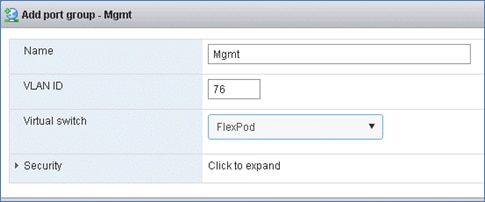

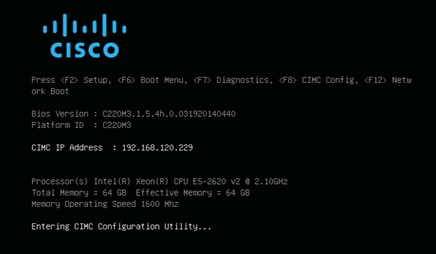

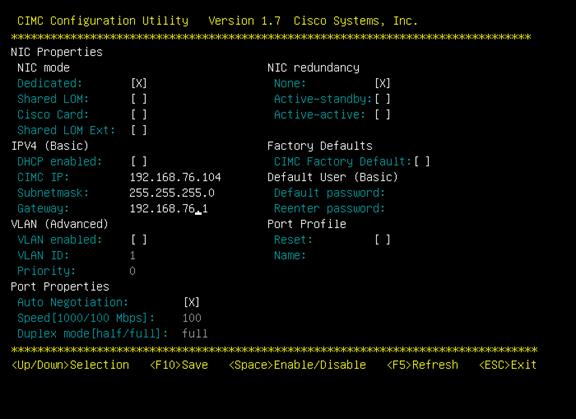

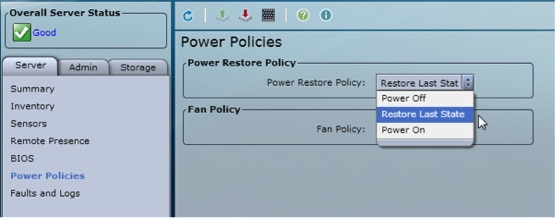

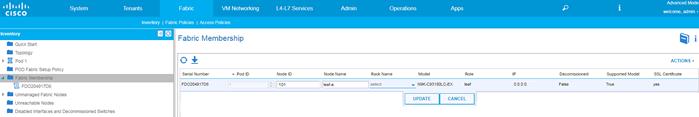

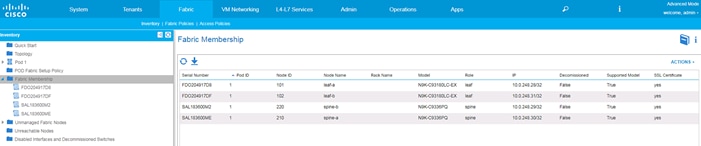

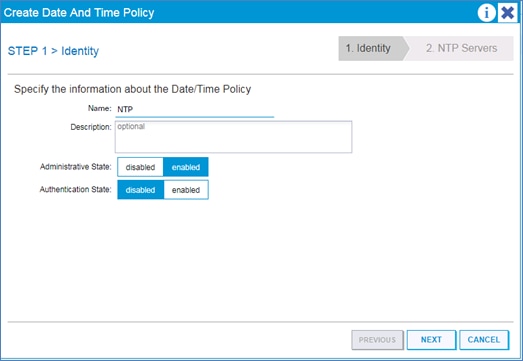

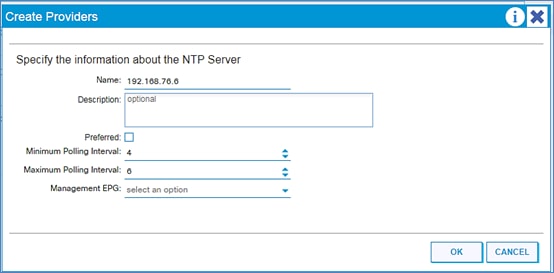

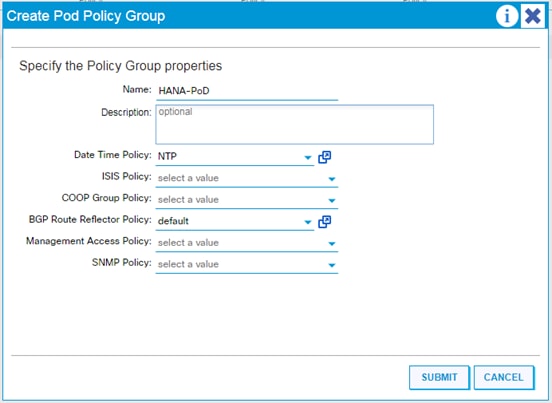

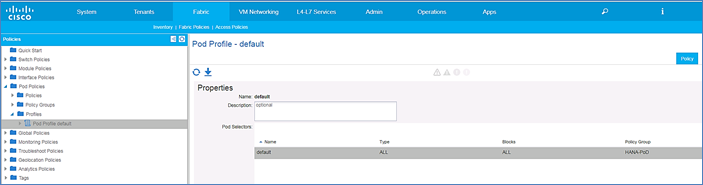

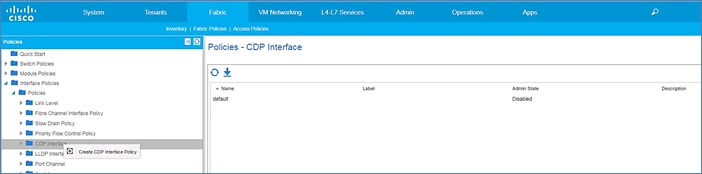

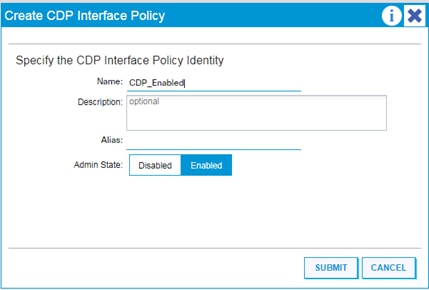

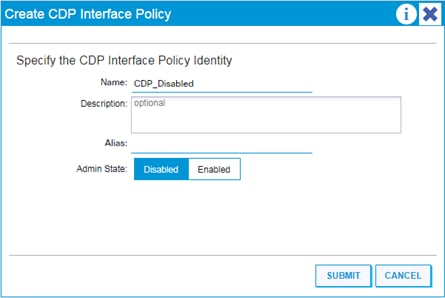

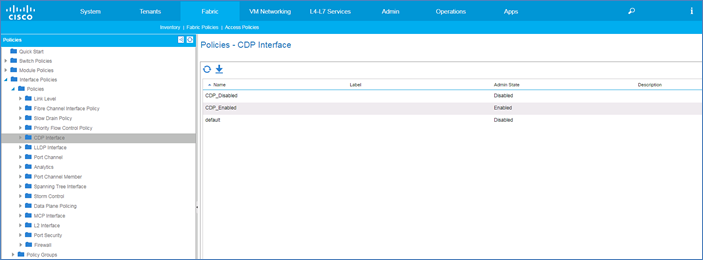

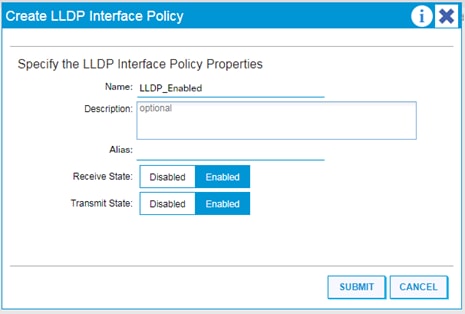

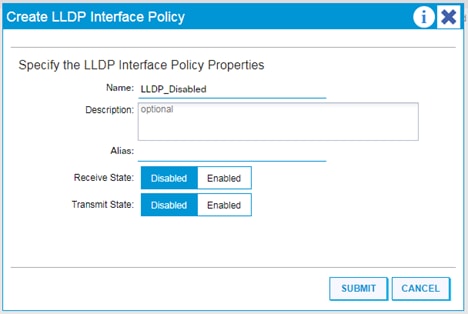

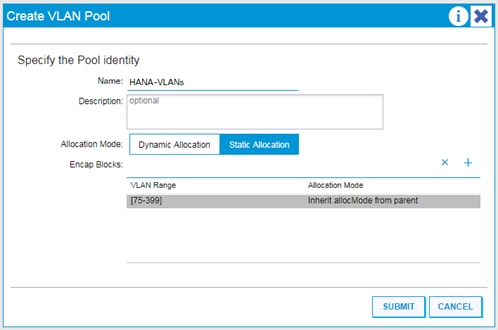

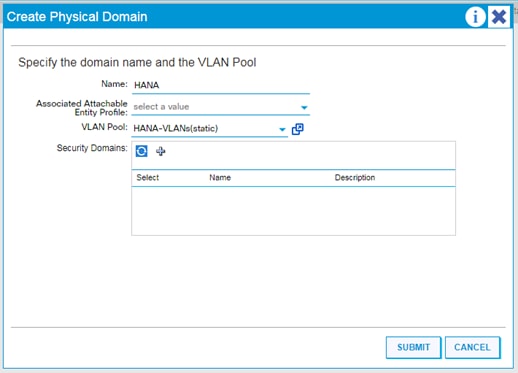

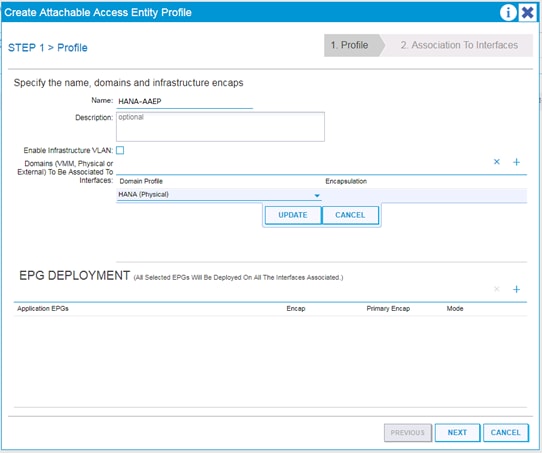

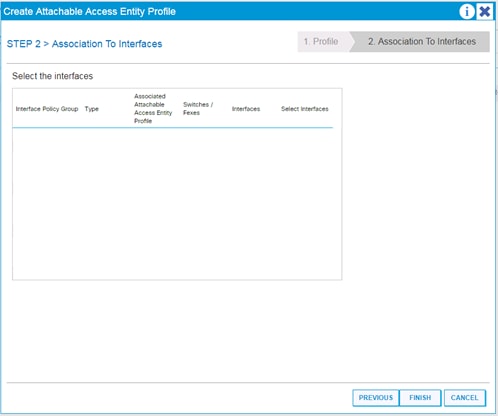

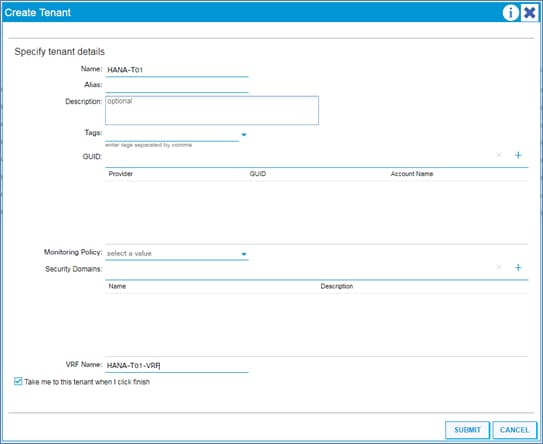

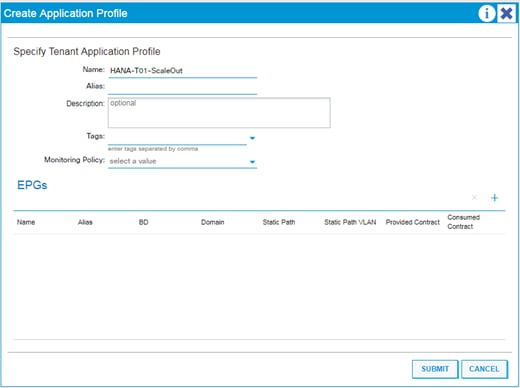

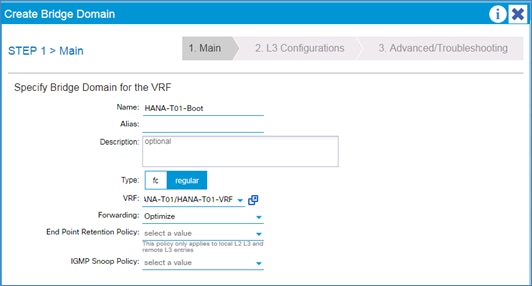

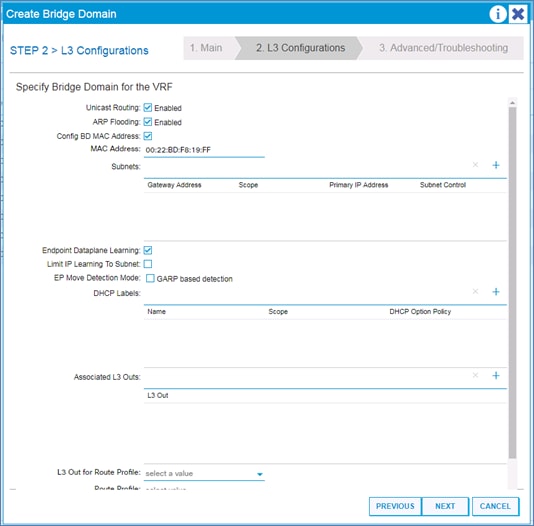

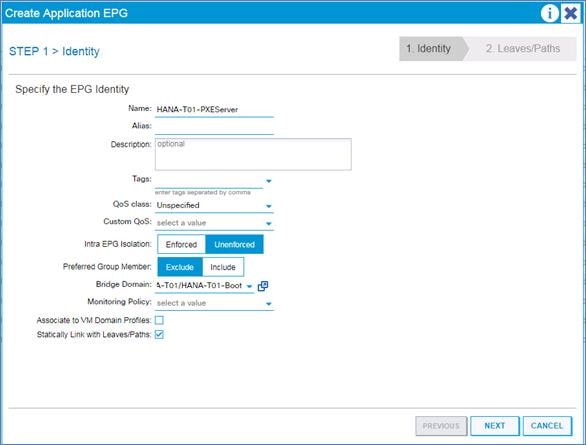

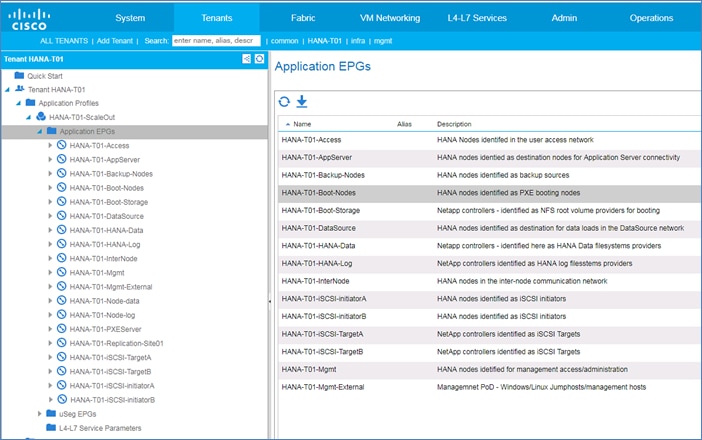

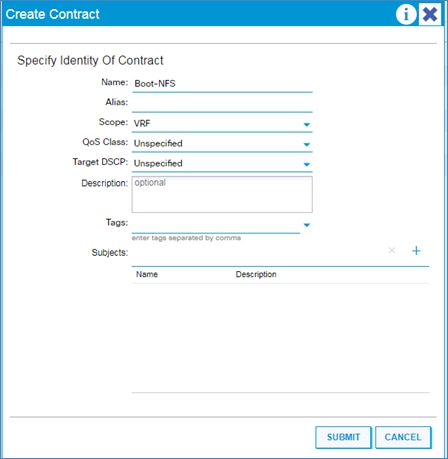

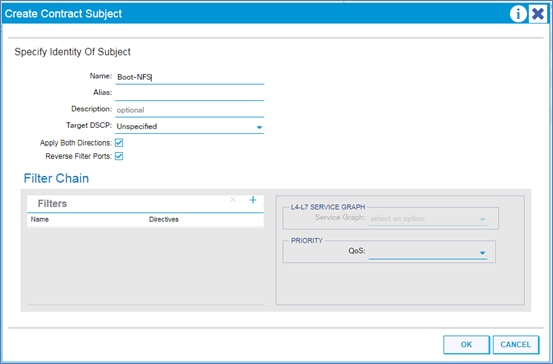

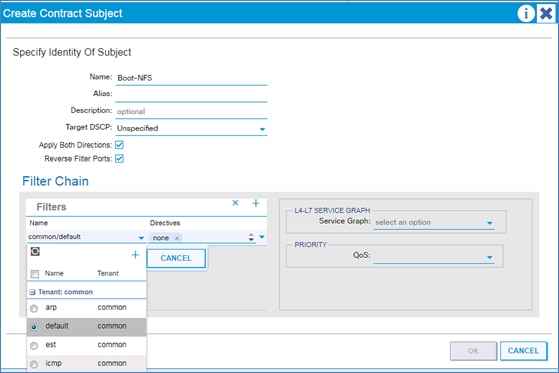

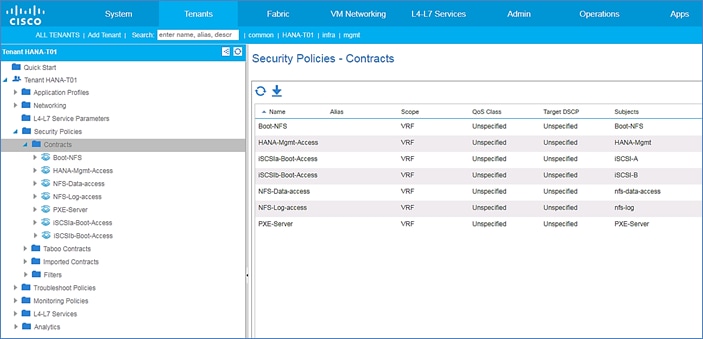

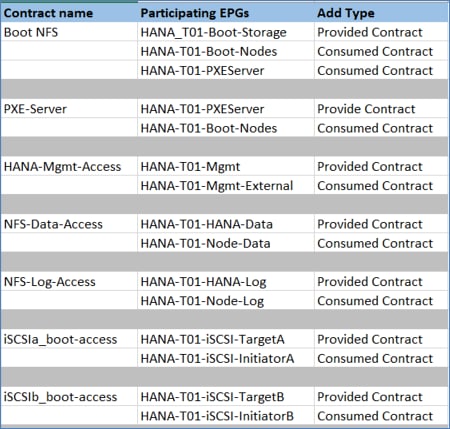

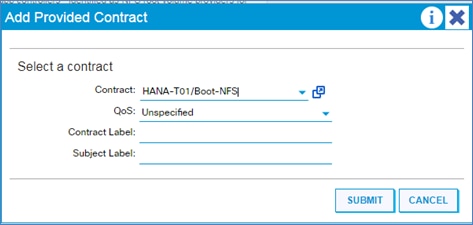

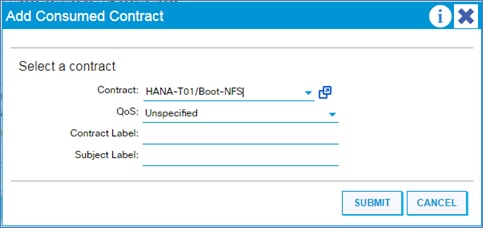

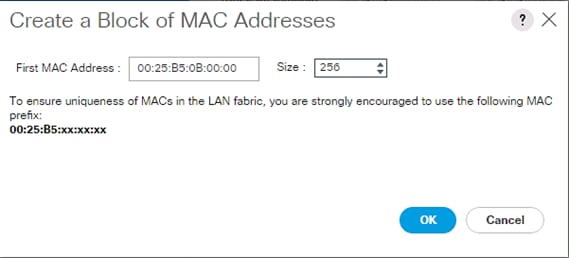

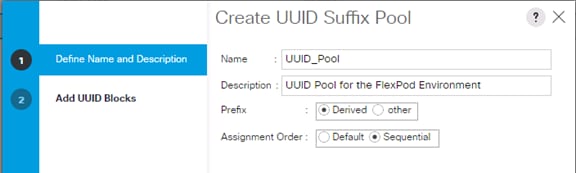

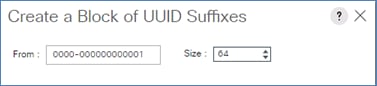

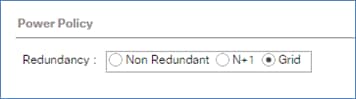

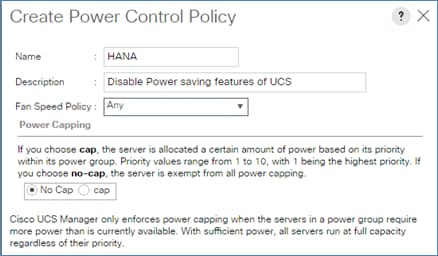

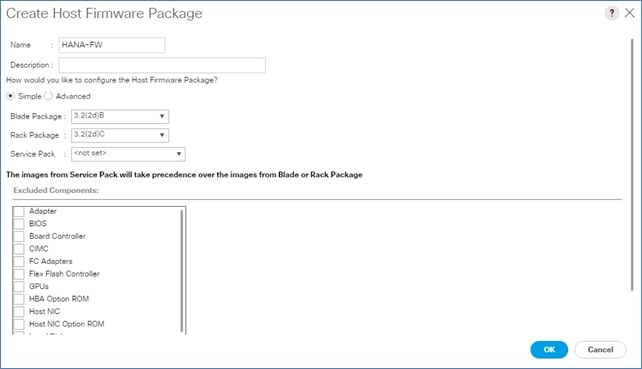

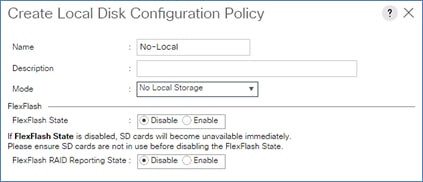

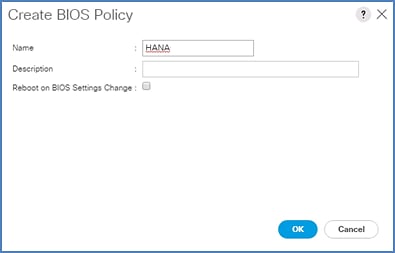

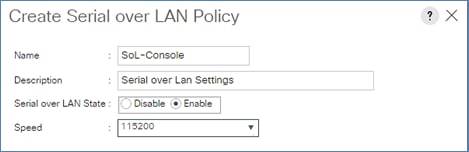

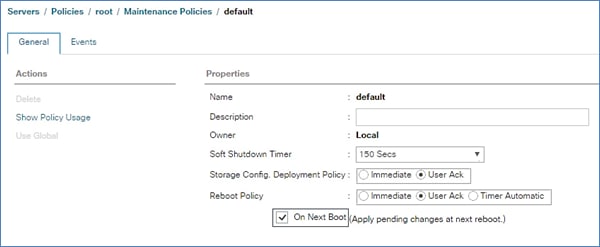

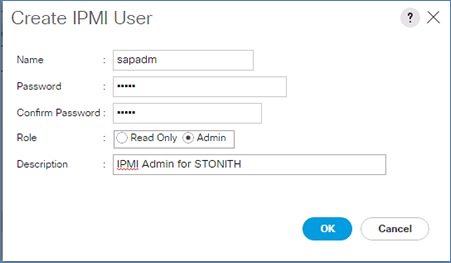

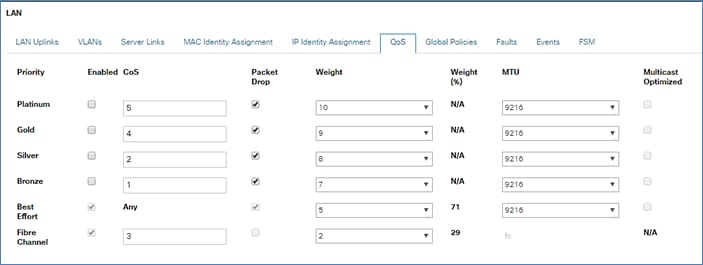

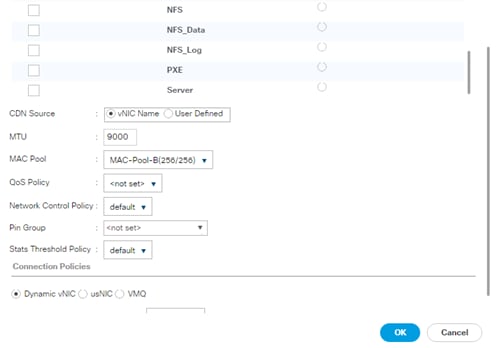

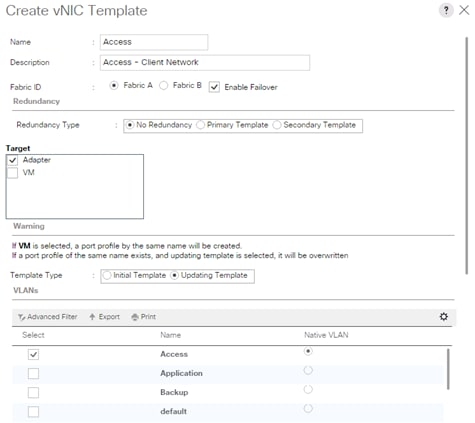

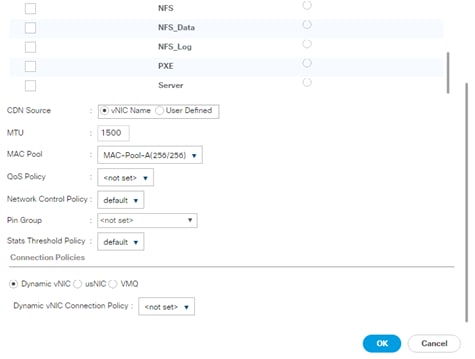

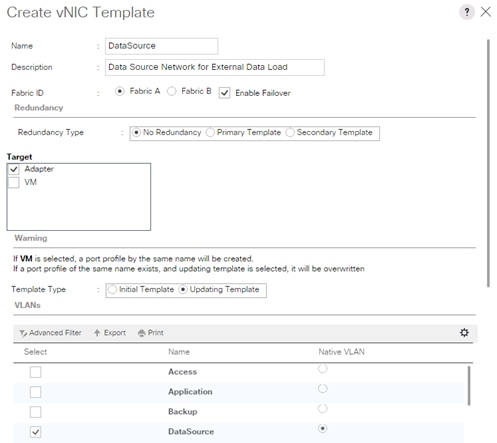

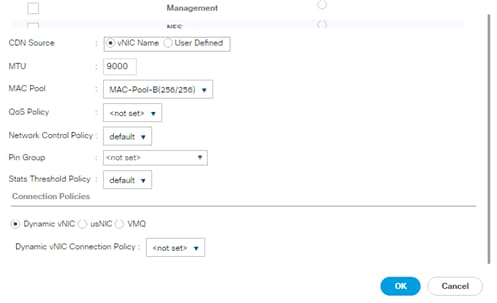

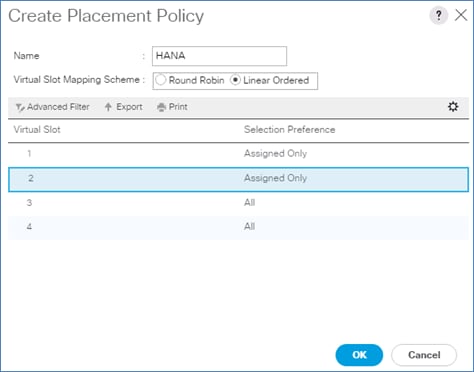

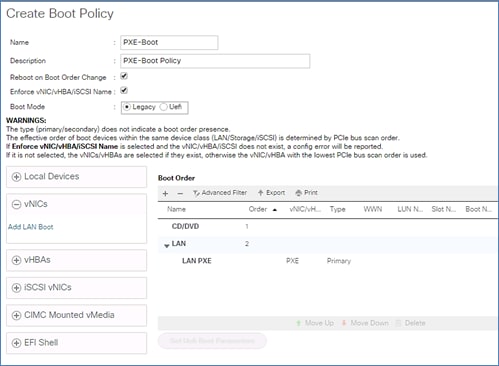

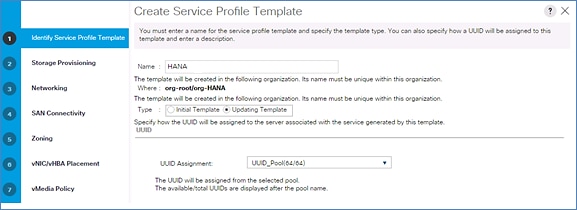

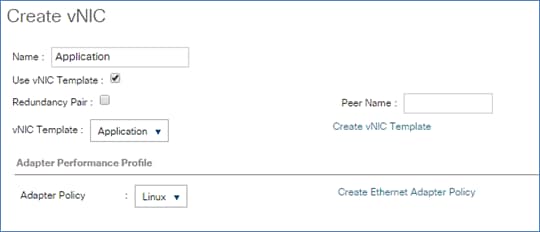

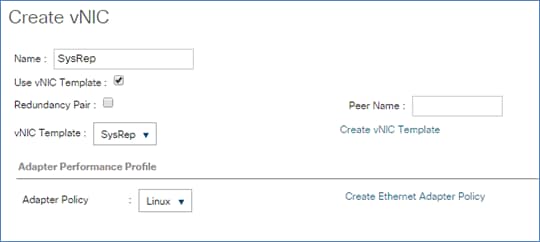

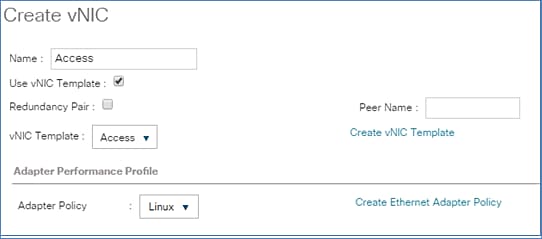

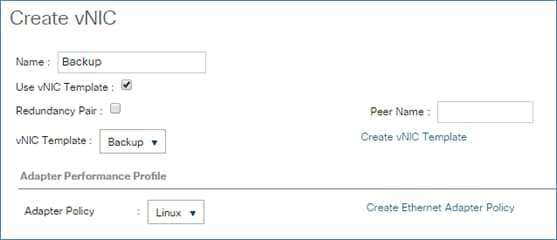

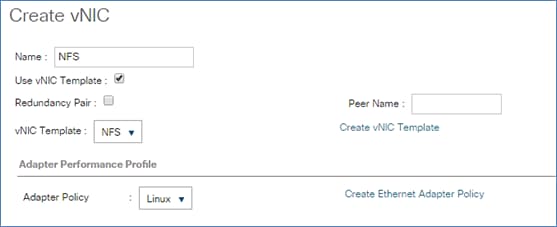

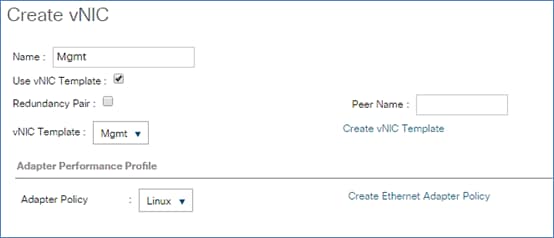

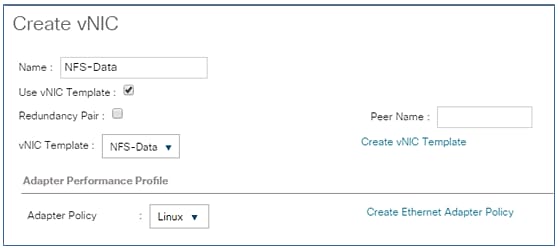

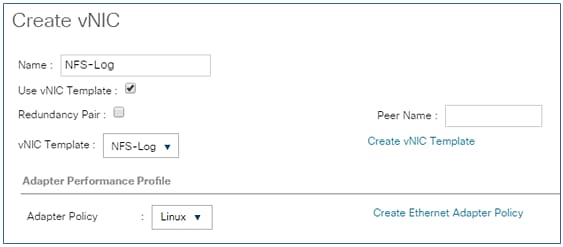

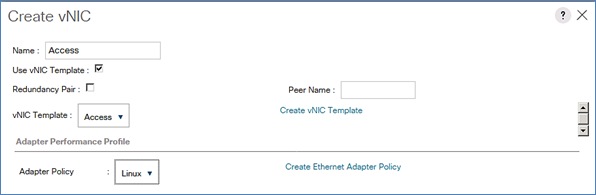

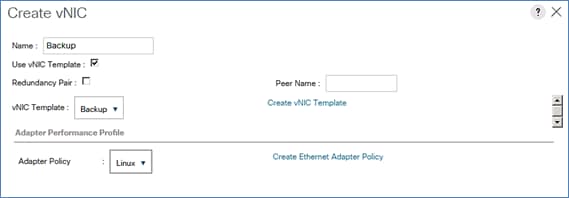

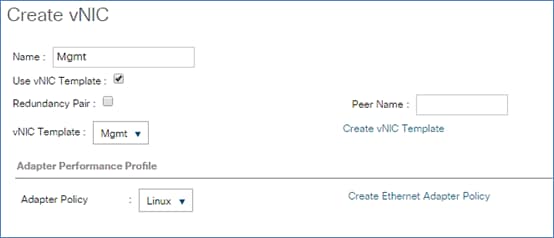

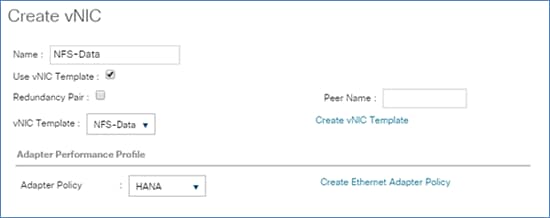

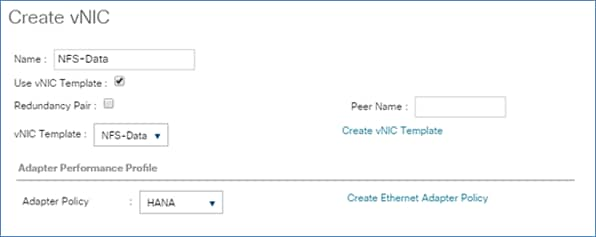

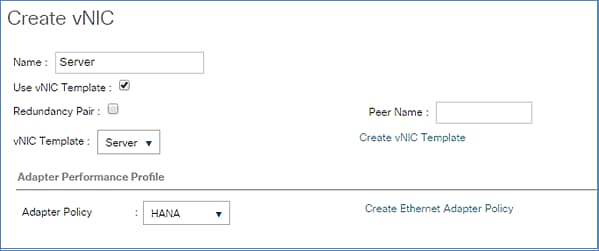

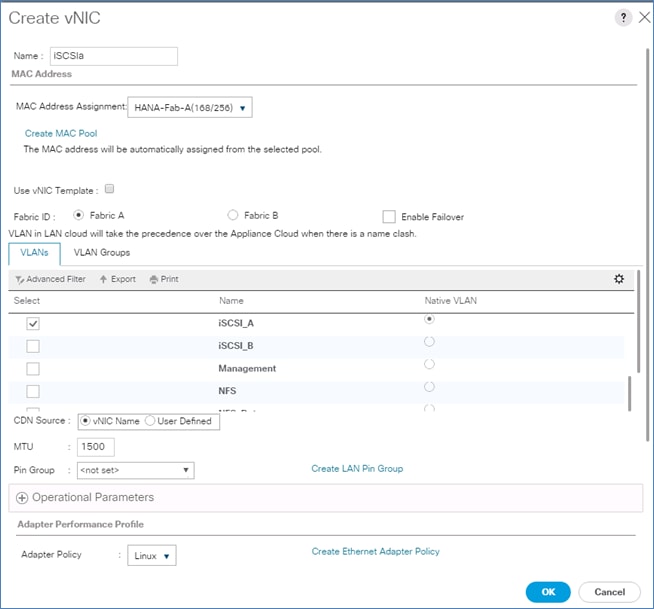

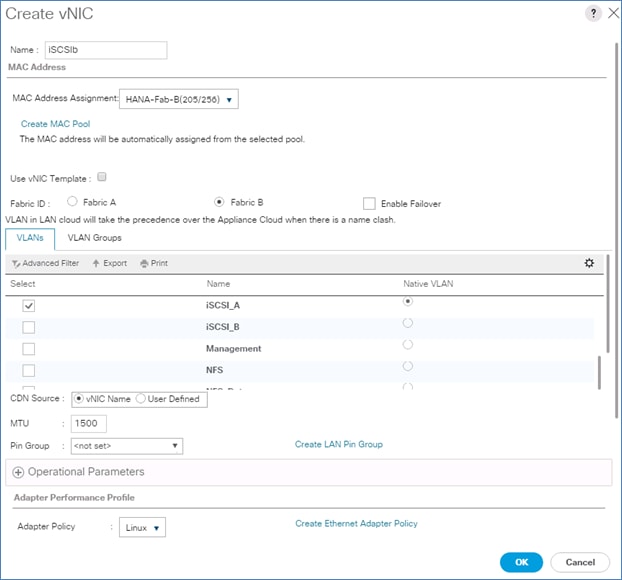

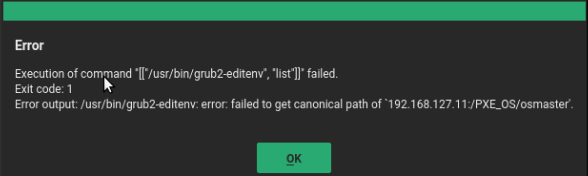

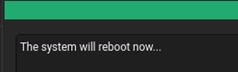

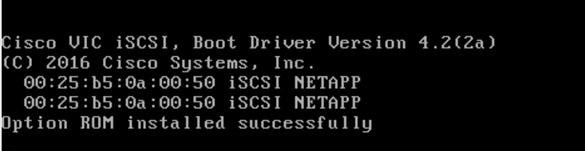

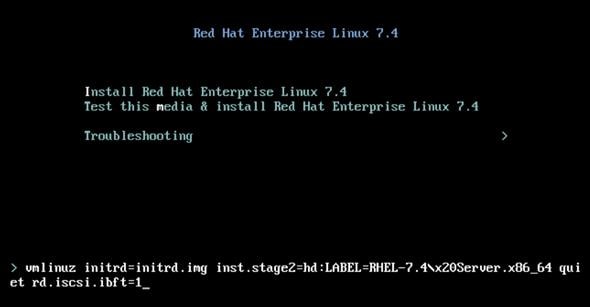

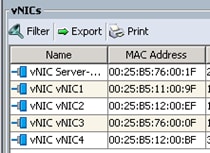

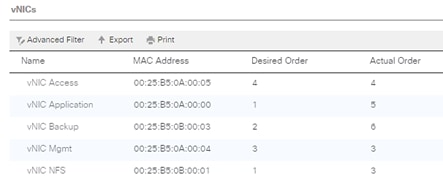

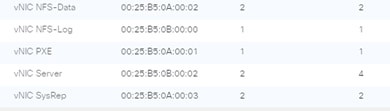

| <<var_ucs_clustername>> |