Cisco UCS Integrated Infrastructure for Big Data and Analytics with Hortonworks Data Platform 3.0

Design and Deployment Guide for Cisco Integrated Infrastructure for Big Data with Hortonworks Data Platform 3.0 and Cisco UCS C480 ML M5 Platform

Last Updated: October 21, 2019

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2019 Cisco Systems, Inc. All rights reserved.

Table of Contents

Cisco UCS Integrated Infrastructure for Big Data and Analytics

Cisco Unified Computing System

Cisco UCS 6300 Series Fabric Interconnects

Cisco UCS C-Series Rack-Mount Servers

Cisco UCS Virtual Interface Cards

Software Distributions and Versions

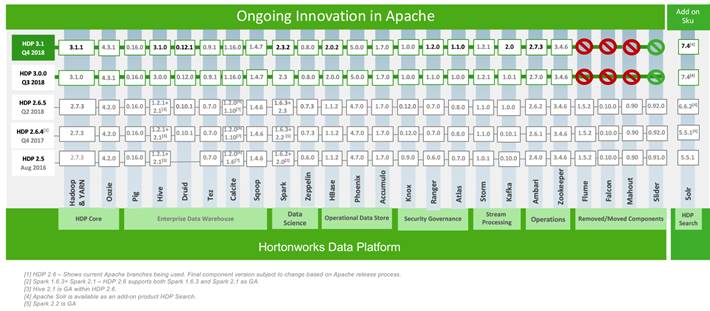

Hortonworks Data Platform (HDP 3.0.1)

Red Hat Enterprise Linux (RHEL)

Perform Initial Setup of Cisco UCS 6332 Fabric Interconnects

Configure Fabric Interconnect A

Configure Fabric Interconnect B

Upgrade Cisco UCS Manager Software to Version 4.0(2a)

Add a Block of IP Addresses for KVM Access

Create Pools for Service Profile Templates

Create Policies for Service Profile Templates

Create Host Firmware Package Policy

Create the Local Disk Configuration Policy

Create Service Profile Template

Configure the Storage Provisioning for the Template

Configure Network Settings for the Template

Configure the vMedia Policy for the Template

Configure the Server Boot Order for the Template

Configure the Server Assignment for the Template

Configure the Operational Policies for the Template

Install Red Hat Enterprise Linux 7.5

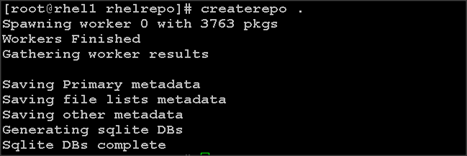

Create the Red Hat Enterprise Linux (RHEL) 7.5 Local Repository

Create the Red Hat Repository Database

Set Up All Nodes to Use the RHEL Repository

Upgrade the Cisco Network Driver for VIC1387

Disable Transparent Huge Pages

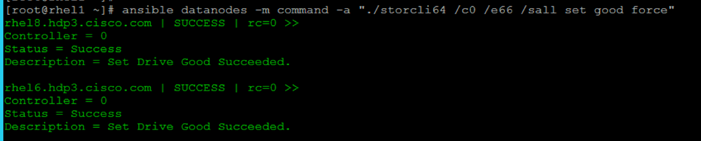

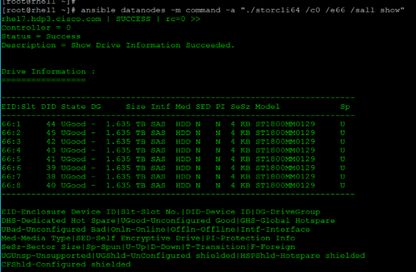

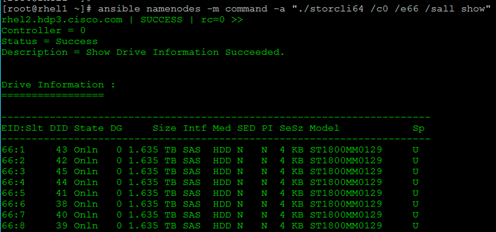

Configure Data Drives on Name Node and Other Management Nodes

Configure Data Drives on Data Nodes

Configure the Filesystem for NameNodes and Datanodes

Prerequisites for HDP Installation

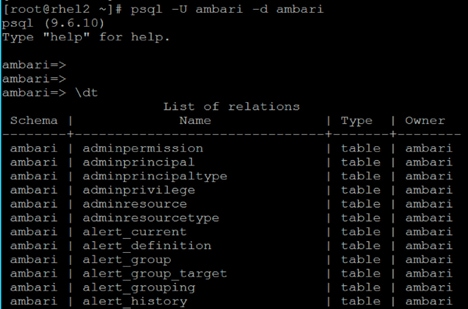

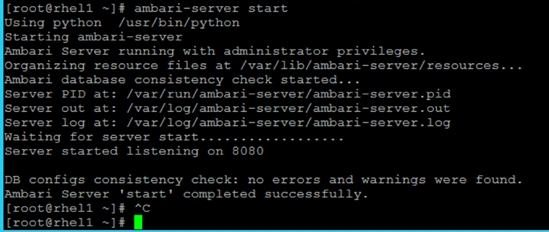

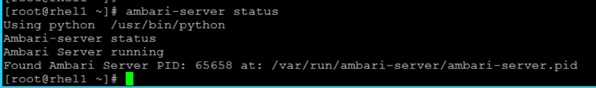

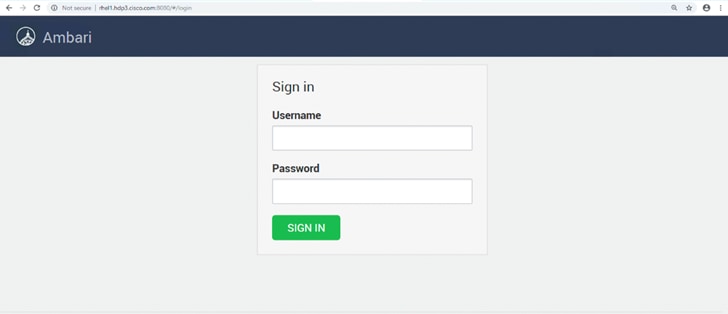

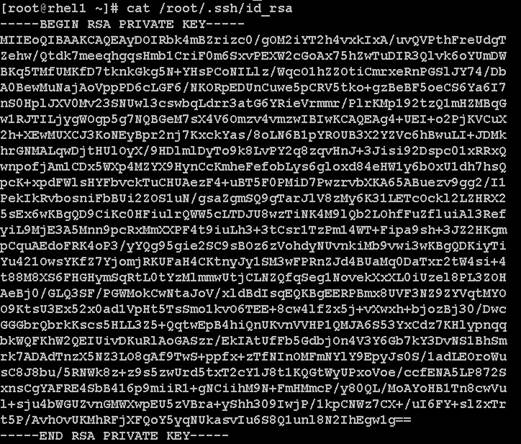

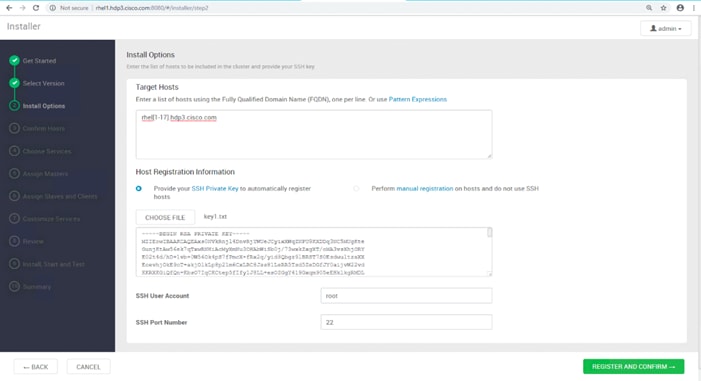

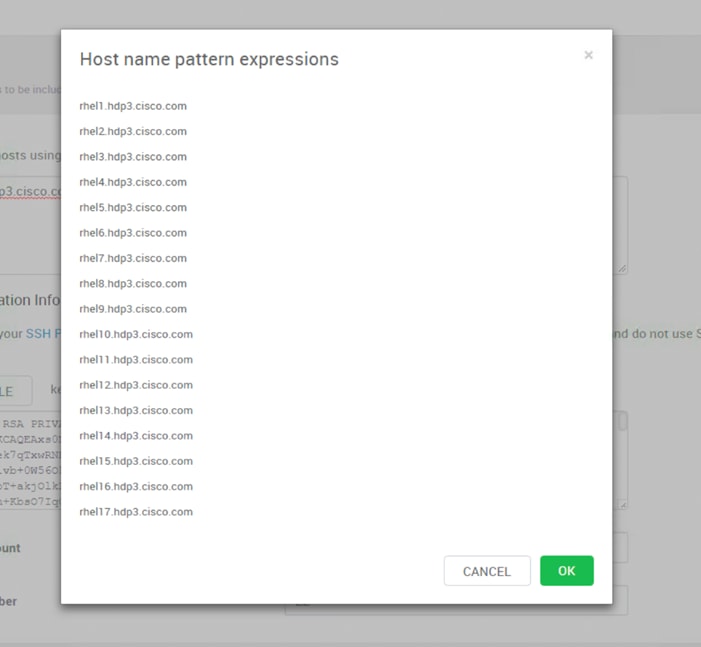

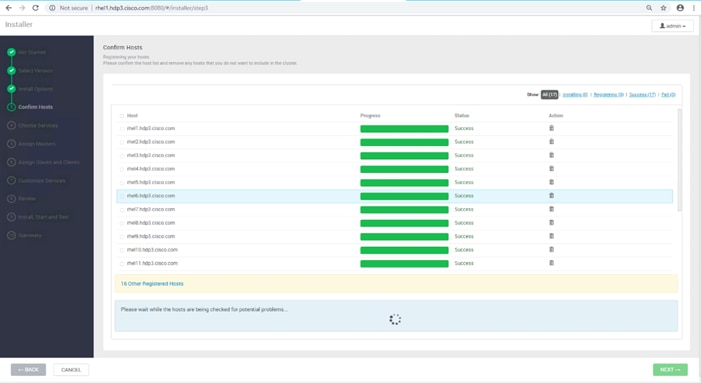

Install and Setup Ambari Server on rhel1

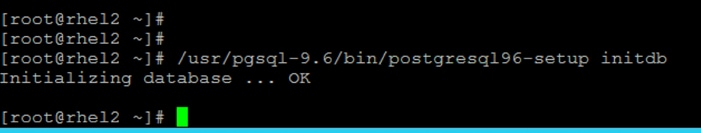

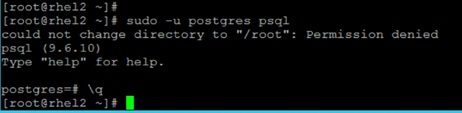

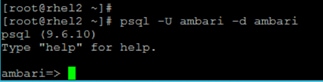

Setup Ambari Server On Admin Node(Rhel1)

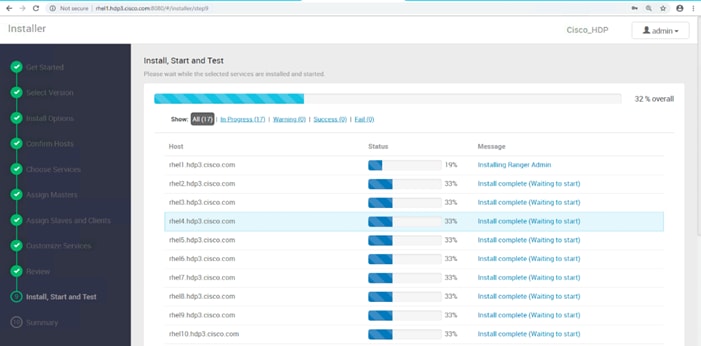

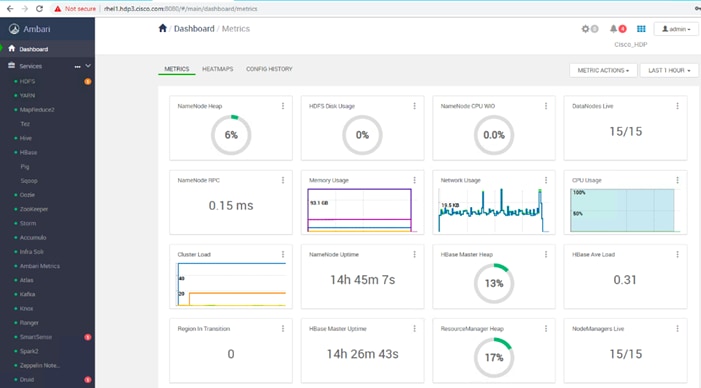

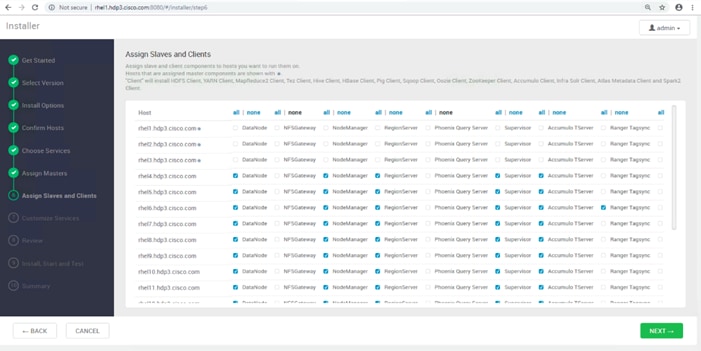

Summary of the Installation Process

High Availability for HDFS NameNode and YARN ResourceManager

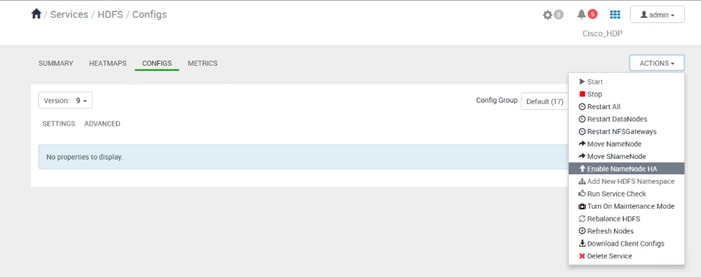

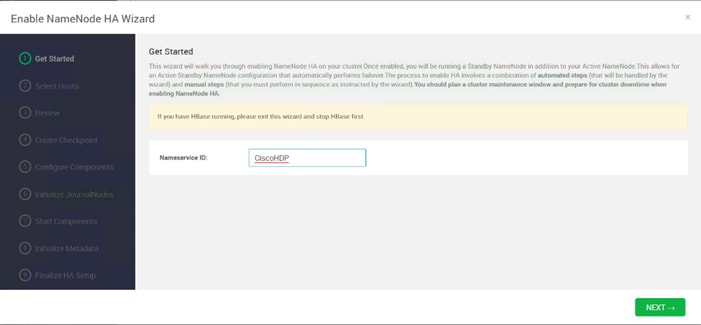

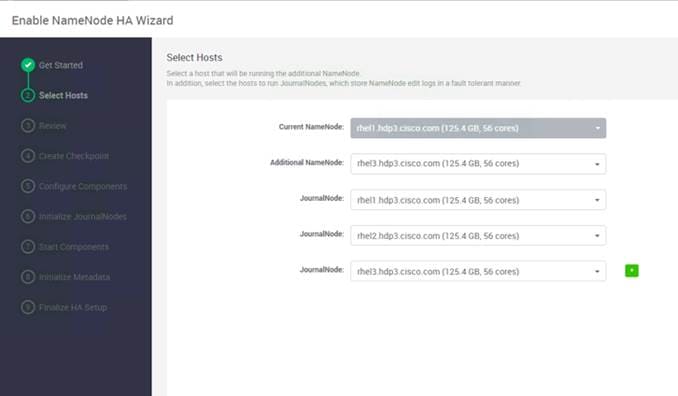

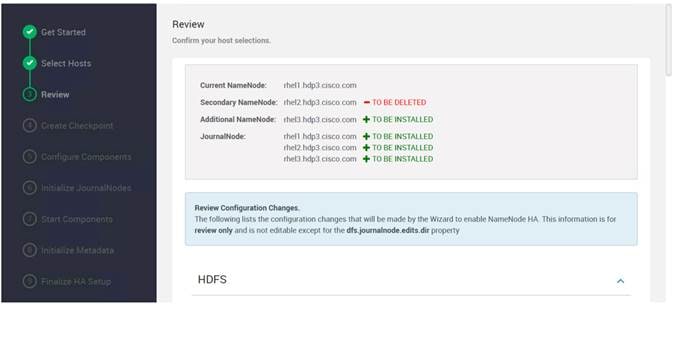

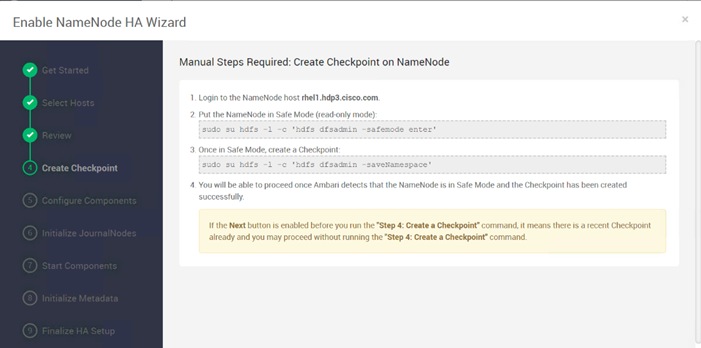

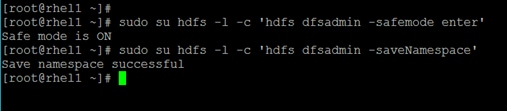

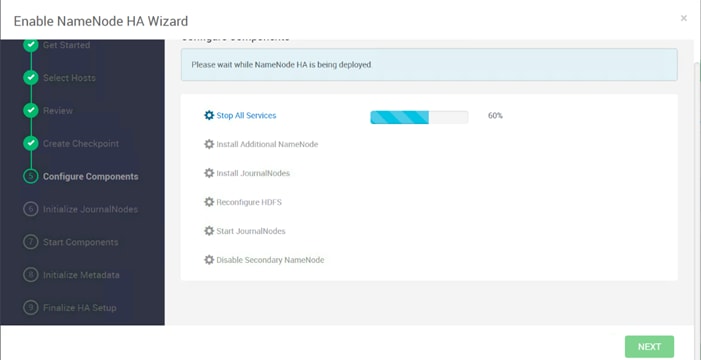

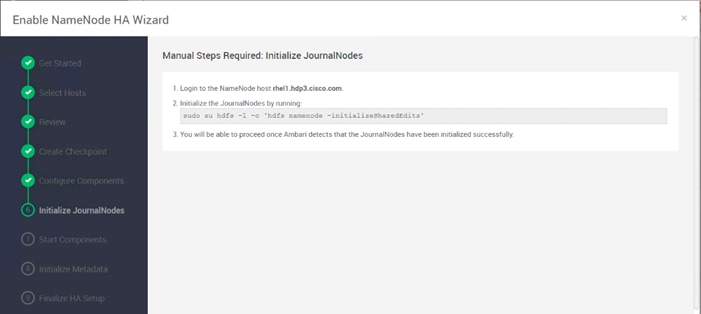

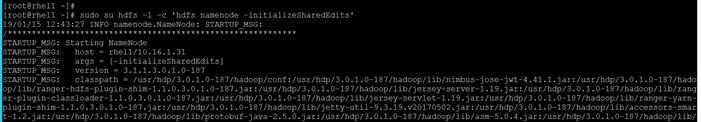

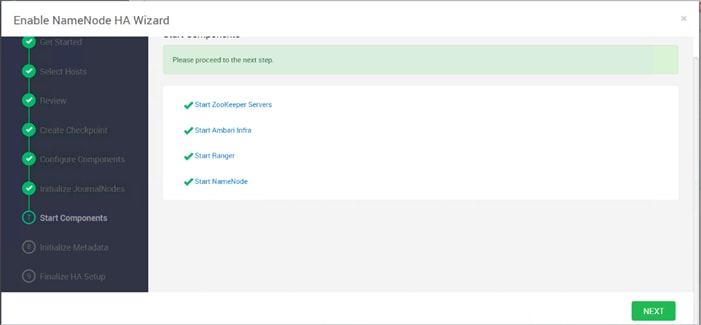

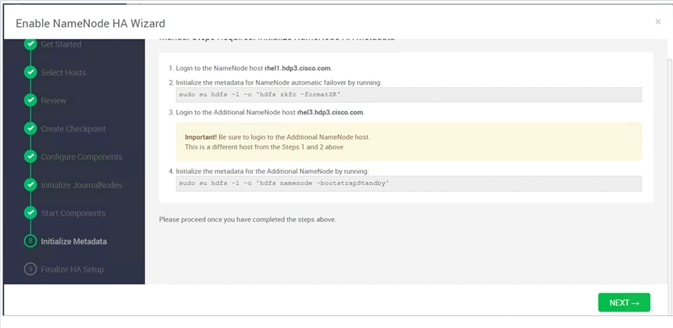

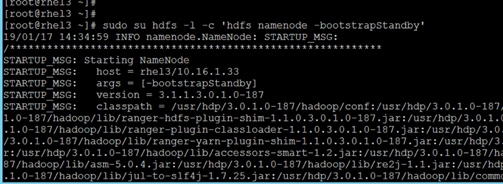

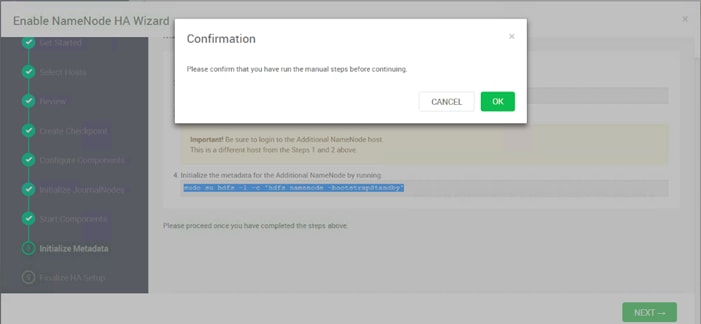

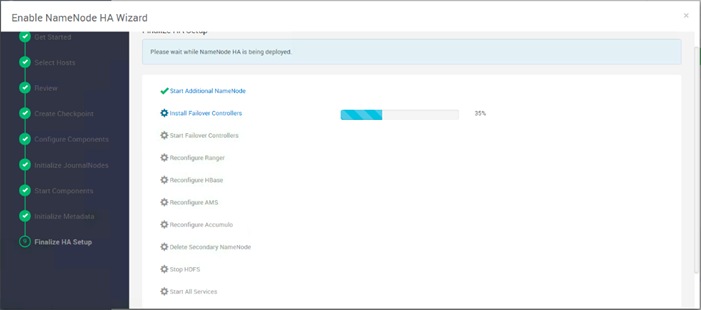

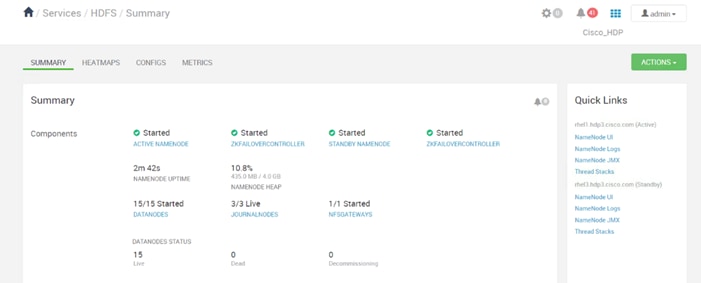

Configure the HDFS NameNode High Availability

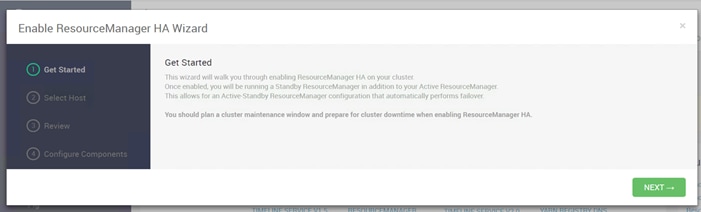

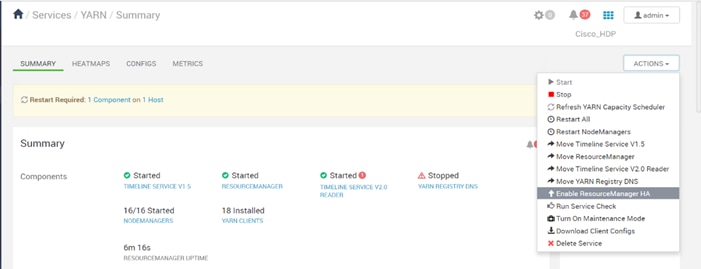

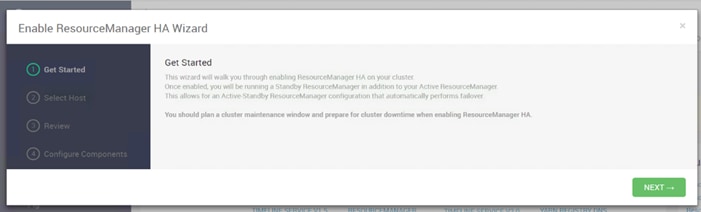

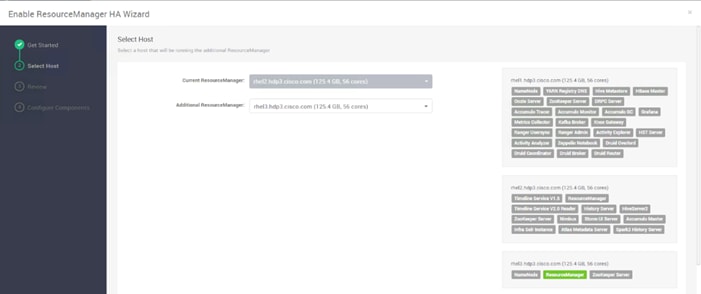

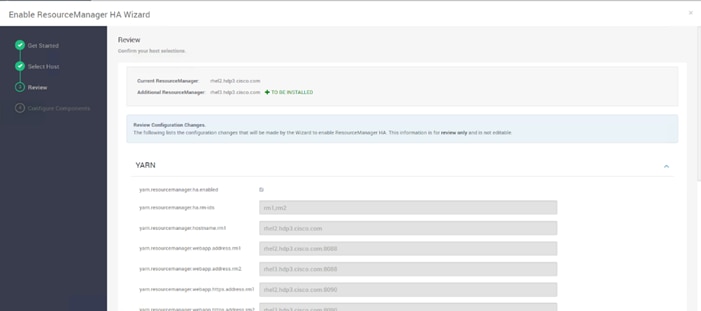

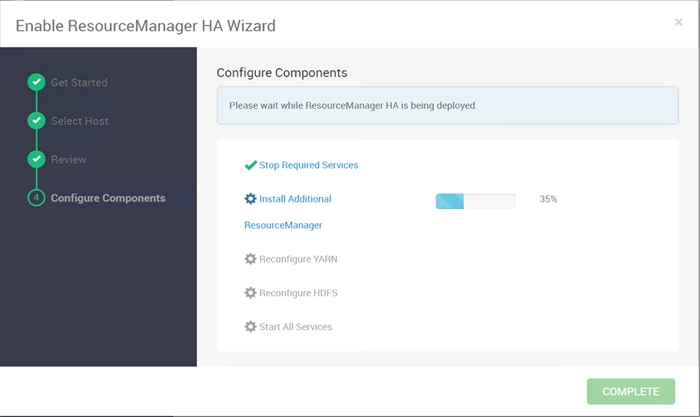

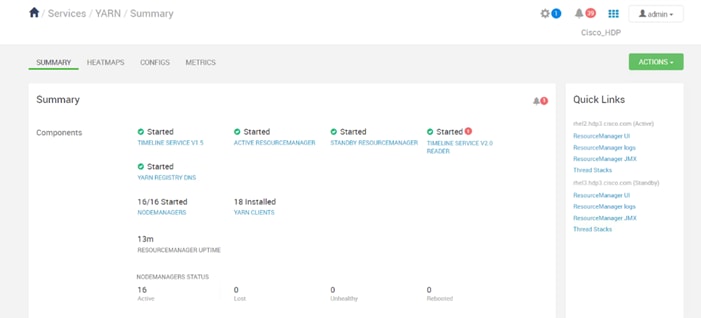

Configure the YARN ResourceManger HA

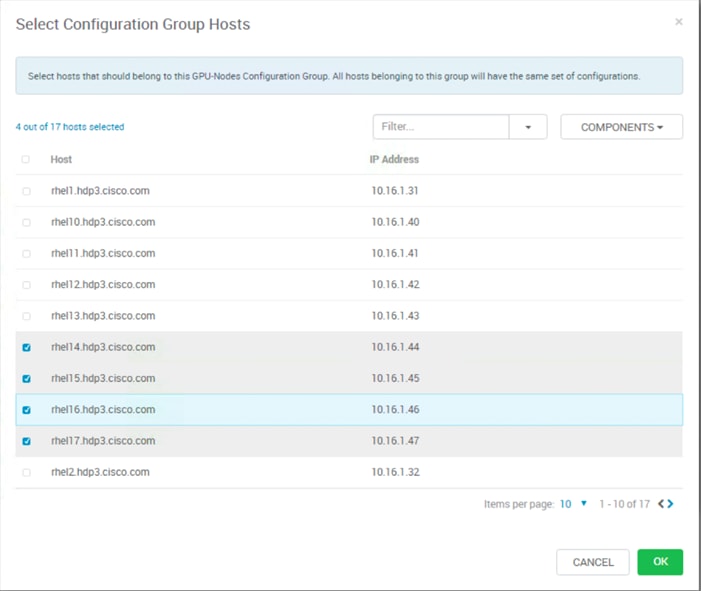

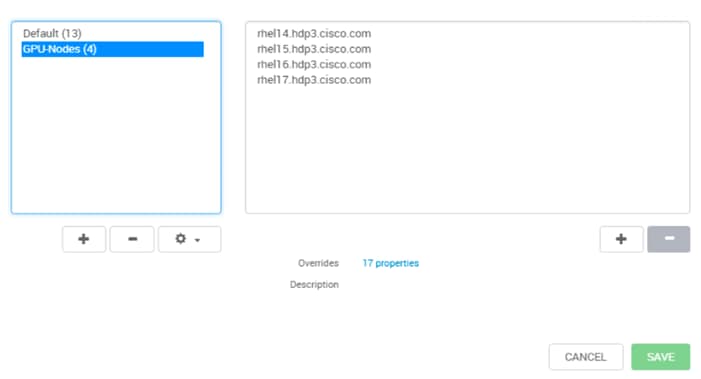

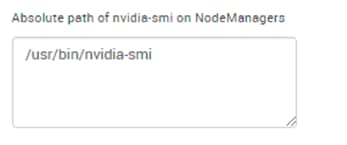

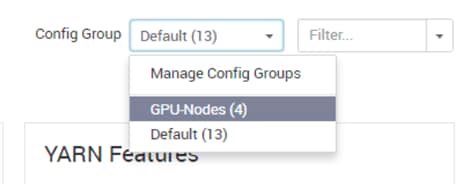

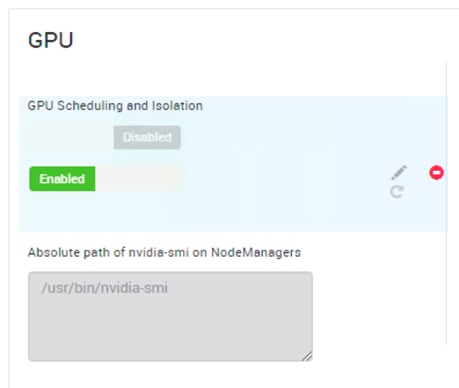

HDP Post OS Deployment – Enable GPU Isolation and Scheduling

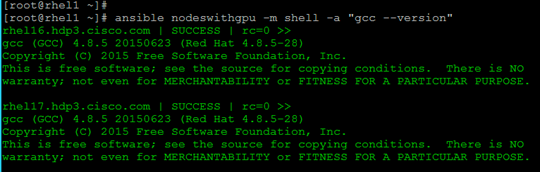

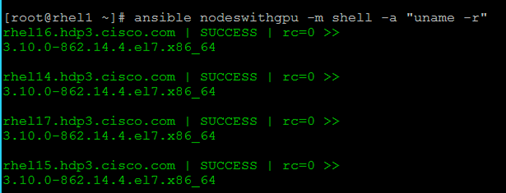

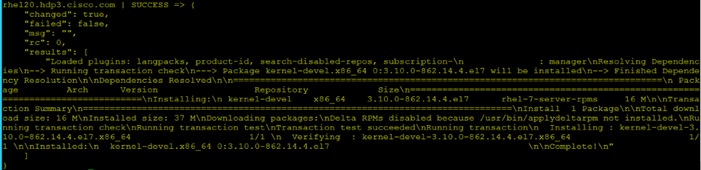

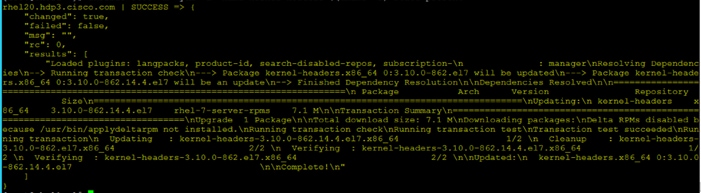

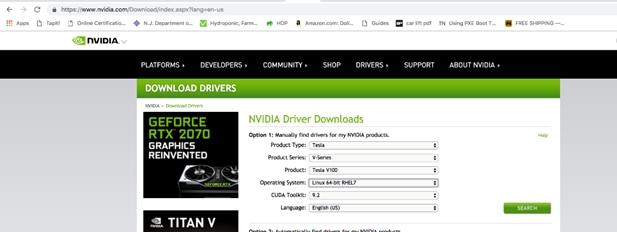

Install the Prerequisites for CUDA

Download and Setup NVIDIA CUDA Deep Neural Network library (cuDNN)

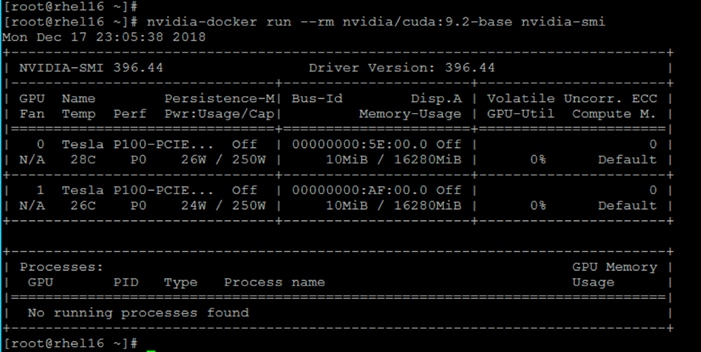

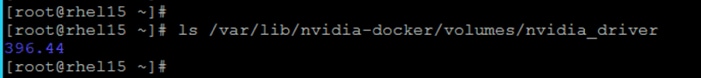

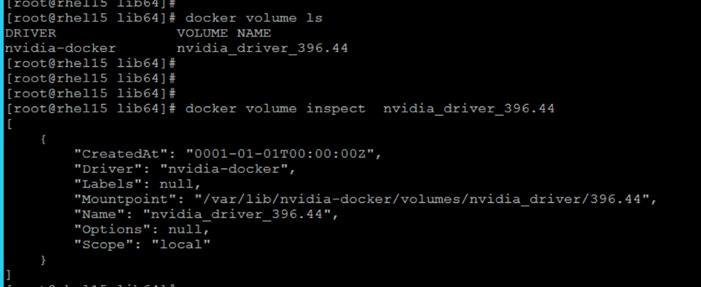

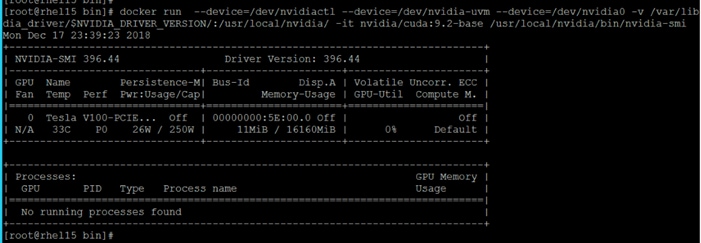

Install nvidia-docker in GPU Nodes

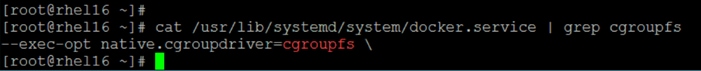

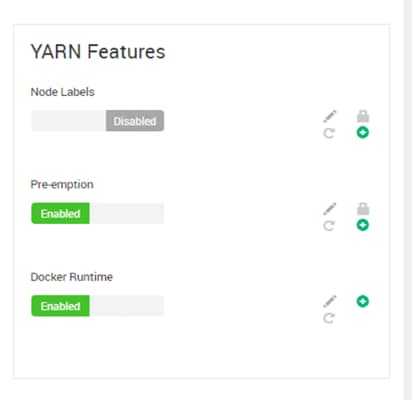

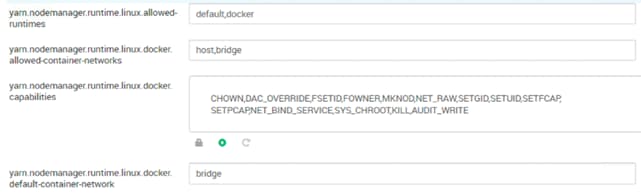

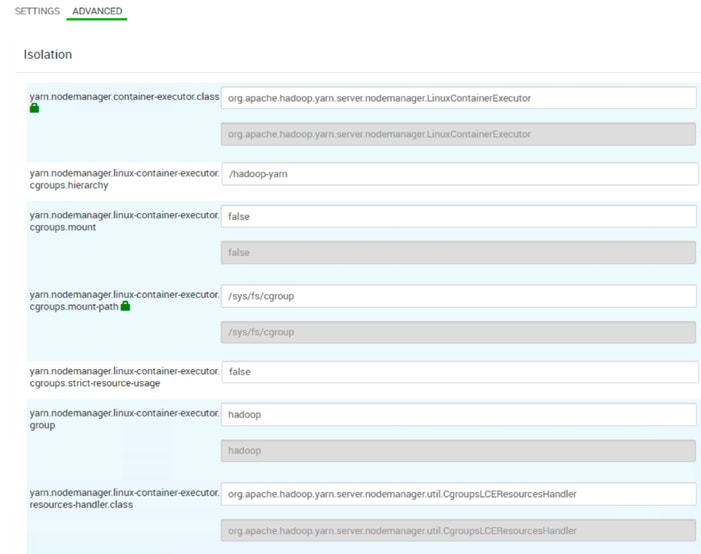

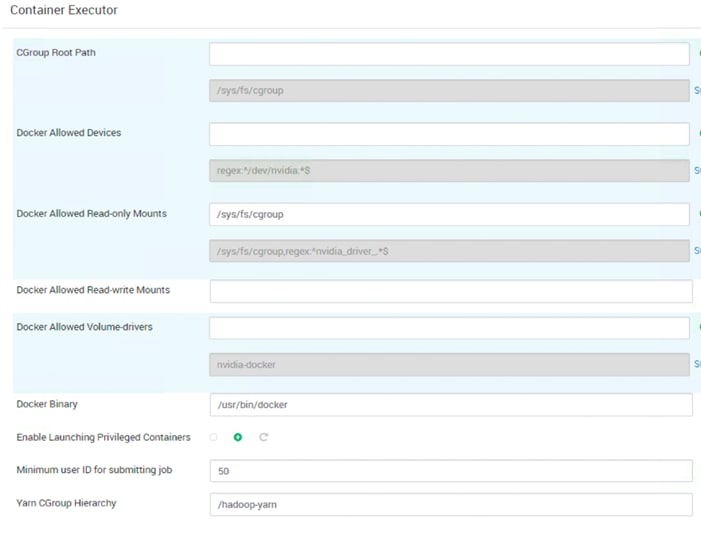

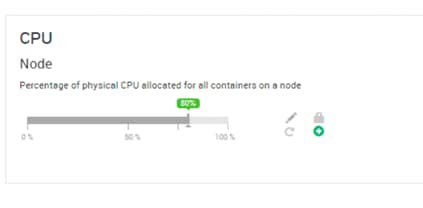

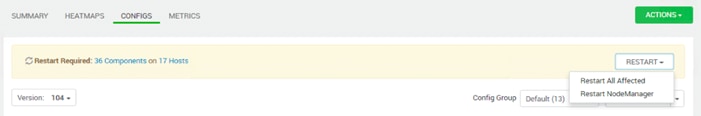

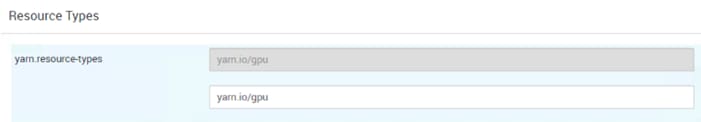

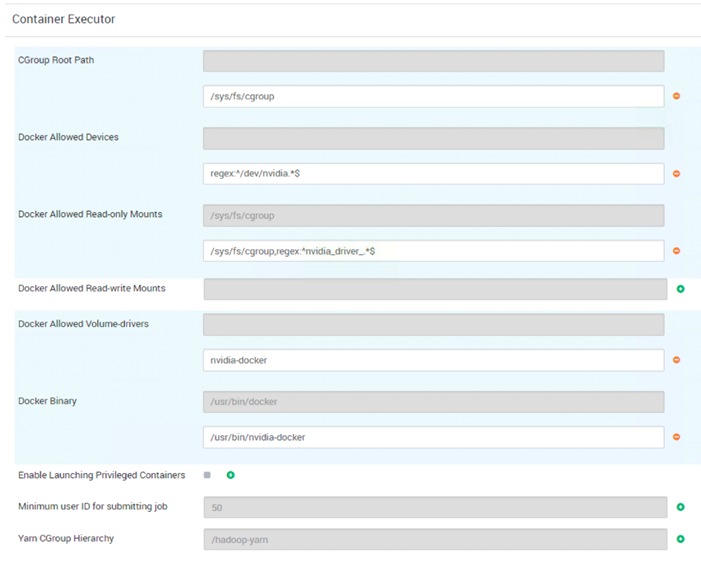

Configure YARN to Running Docker Containers

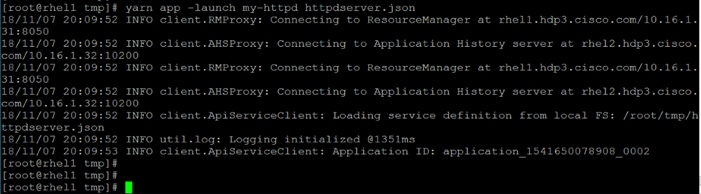

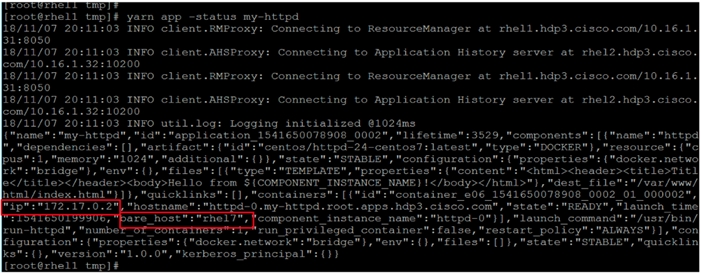

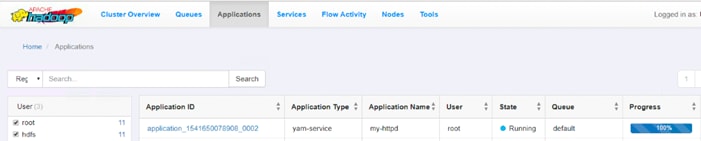

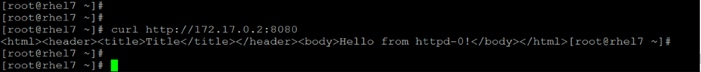

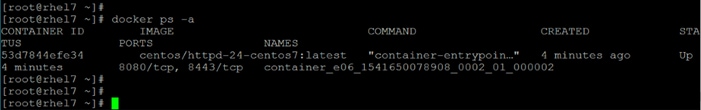

Run Docker on YARN Using the YARN Service API

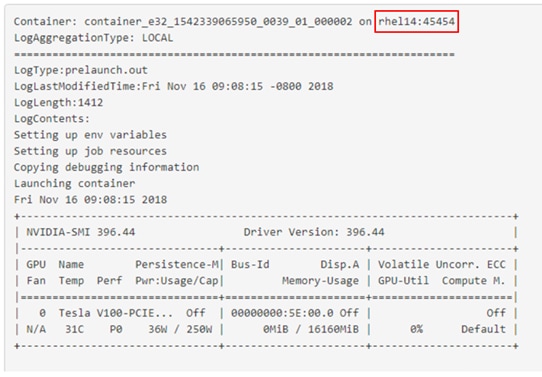

Run Docker Container with GPU on YARN

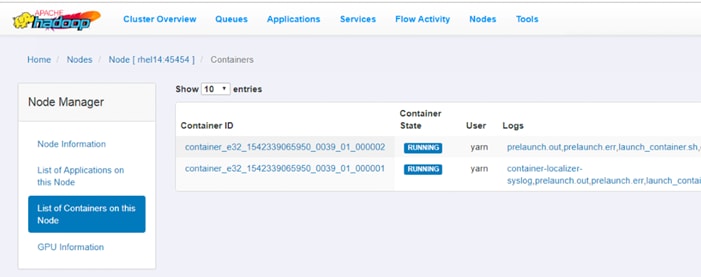

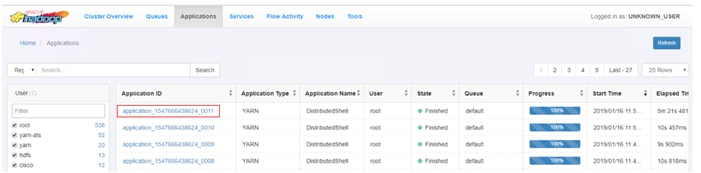

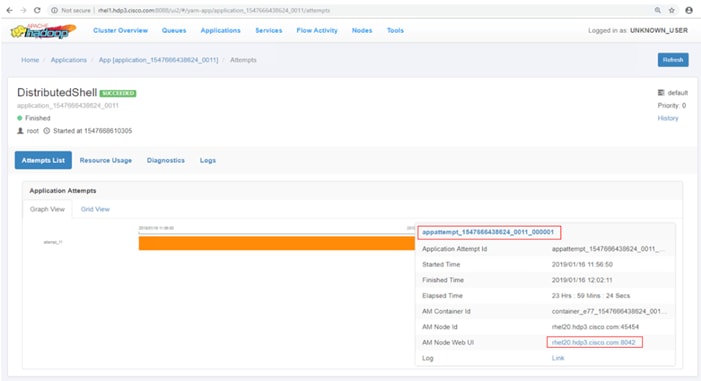

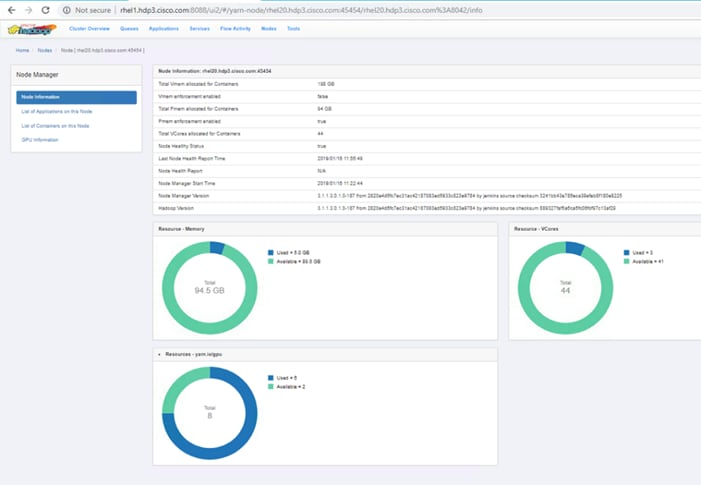

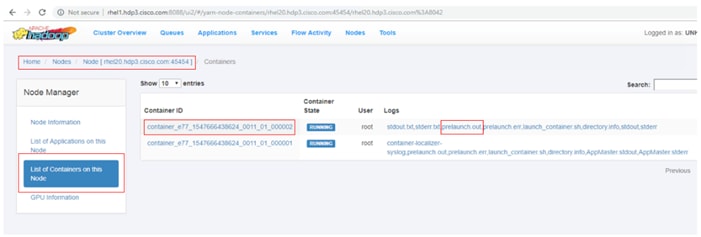

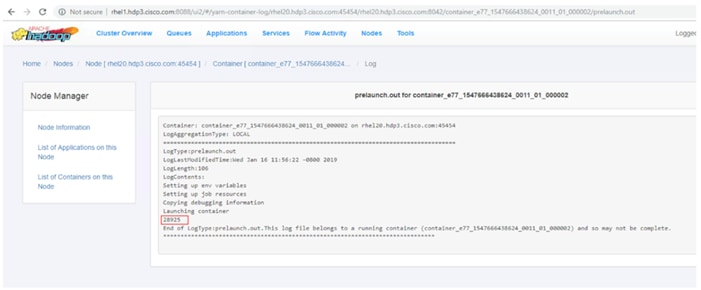

Apache Hadoop YARN Distributed Shell

Run TensorFlow Container Using YARN Distributed Shell

Years ago, most enterprises relied on batch processing to unlock the value of big data and gain business intelligence. As data volumes and data sources grew, demand rose for real-time analysis to support business-critical decisions, a need addressed by Spark and Spark Streaming. Today, sensors, Internet of Things (IoT) devices, social media, and online transactions generate enormous amounts of data, and companies are struggling to process this data effectively to generate insights and drive innovation. This has driven the next generation of Hadoop, which enables Artificial Intelligence and Machine Learning (AI/ML) by supporting deep learning frameworks and faster compute with CPUs and GPUs.

The emerging trend of Artificial Intelligence requires large amounts of data for training, and Hadoop is a natural fit for storing and retrieving that data. Many machine learning tasks, especially deep learning, require GPUs: specialized, very high-performance processors that are massively parallel in nature. This solution focuses on Hadoop accelerating AI natively, where GPUs are part of the Hadoop cluster and are natively scheduled by Hadoop schedulers to process massive amounts of data stored in the same cluster.

Building a next-generation big data architecture requires simplified and centralized management, high performance, and a linearly scaling infrastructure and software platform. Cisco UCS Integrated Infrastructure for Big Data and Analytics with Hortonworks Data Platform (HDP) is an optimal choice where world-class performance and reliability are base requirements. It is the strong foundation upon which solutions are built. The Cisco UCS reference architecture has been designed to scale from a small starting solution to thousands of servers and hundreds of petabytes of storage with ease, all managed from a single pane of glass. The Cisco UCS reference architecture also provides the flexibility that AI workloads demand, offering a choice of servers with 2, 4, 6, or 8 GPUs in a single server to meet different AI compute requirements.

The Hortonworks Data Platform (HDP) is an enterprise-ready, open-source Apache Hadoop framework for distributed storage and processing of large datasets. HDP 3.0 brings the capability of managing and scheduling GPUs and Docker containers into Hadoop, enabling AI workloads natively in Hadoop. HDP also enables agile application deployment in a containerized microservices architecture. Containerization makes it possible to run multiple versions of applications for AI/ML/DL workloads. Furthermore, HDP supports third-party applications in Docker containers and native YARN containers.

Introduction

Both big data and machine learning technology have progressed to the point where they are being implemented in production systems running 24x7. There exists a very clear need for a proven, dependable, high-performance platform for the ingestion, processing, storage and analysis of the data, as well as the seamless dissemination of the output, results and insights of the analysis.

This solution implements the Cisco UCS Integrated Infrastructure for Big Data and Analytics, a world-class platform specifically designed for demanding workloads that is both easy to scale and easy to manage, even as the requirements grow to thousands of servers and petabytes of storage; and Hortonworks Data Platform, an integrated set of tools designed to enable flexible, fast access to the entire data store, while enabling AI workloads on servers with GPUs.

This CVD implements the following:

· Deploy Hortonworks Data Platform 3.0 on Cisco UCS Integrated Infrastructure for Big Data and Analytics

· Install and enable Docker for use by YARN 2.0

· Enable CUDA for the GPUs

· Expose GPUs as a resource to Docker containers through NVIDIA-docker v1

· Enable GPU isolation and scheduling (with Docker containers) through YARN 2.0

· Download a TensorFlow image from NVIDIA GPU Cloud (NGC)

· Add trusted Docker registries for YARN 2.0

· Execute a sample TensorFlow job that accesses data from Hadoop and runs in a Docker container with a GPU as a resource scheduled by YARN 2.0

· Install and set up all of the above through Apache Ambari
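As a rough illustration of the end state the list above describes, the following sketch builds a YARN Services specification for a single Docker container that requests one GPU. It is a minimal sketch under stated assumptions, not the configuration validated in this CVD: the service name, component name, launch command, memory size, and image tag are hypothetical placeholders, and the field layout follows the Apache Hadoop YARN Services API as generally documented, so it should be verified against the Hadoop version shipped with your HDP release.

```python
import json


def build_gpu_service_spec(name, image, command, gpus=1, cpus=1, memory_mb=4096):
    """Return a YARN Services spec (as a dict) for one Docker component.

    All argument values are illustrative; none are taken from this CVD.
    """
    return {
        "name": name,
        "version": "1.0.0",
        "components": [
            {
                "name": "worker",  # hypothetical component name
                "number_of_containers": 1,  # standalone container only
                "artifact": {"id": image, "type": "DOCKER"},
                "launch_command": command,
                "resource": {
                    "cpus": cpus,
                    "memory": str(memory_mb),
                    # GPUs are requested as an additional YARN resource type.
                    "additional": {"yarn.io/gpu": {"value": gpus, "unit": ""}},
                },
            }
        ],
    }


if __name__ == "__main__":
    spec = build_gpu_service_spec(
        name="tf-gpu-demo",                       # hypothetical service name
        image="nvcr.io/nvidia/tensorflow:<tag>",  # replace <tag> with a real NGC tag
        command="nvidia-smi",                     # placeholder command
    )
    print(json.dumps(spec, indent=2))
```

The printed JSON is the kind of document that could be submitted to YARN once Docker support, trusted registries, and GPU scheduling have been enabled on the cluster.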

Caveats and Limitations

The following items are beyond the scope of this CVD and are therefore not addressed.

Docker Networking

YARN does not manage Docker networks or Docker multi-host networking. In the implementation of this CVD, container(s) in one host cannot communicate with container(s) in another host.

· Docker Swarm and other Docker container orchestrators, such as Kubernetes, are not supported by HDP because they might compete with YARN

· This document considers TensorFlow and other AI applications only in standalone containers spawned and scheduled through YARN 2.0. Support for distributed TensorFlow and other frameworks is expected in a later release and will be added as an addendum

NVIDIA-Docker

· As of this release of Hortonworks and this CVD, only nvidia-docker v1 is supported with HDP 3.0

Many companies, recognizing the immense potential of big data and AI/ML technology, are gearing up to leverage these new capabilities by building out departments and increasing hiring. However, these efforts face a new set of challenges:

· Making the data available to the diverse set of people who need it

· Enabling access to high-performance computing resources, GPUs, that also scale with data growth

· Allowing people to work with the data using the environments with which they are familiar

· Publishing their results so the organization can make use of them

· Enabling the automated production of those results

· Managing the data for compliance and governance

· Scaling the system as the data grows

· Managing and administering the system in an efficient, cost-effective way

This solution is based on the Cisco UCS Integrated Infrastructure for Big Data and Analytics and includes computing, storage, connectivity, and unified management capabilities to help companies manage the immense amount of data being collected. It is built on the Cisco Unified Computing System (Cisco UCS) infrastructure using Cisco UCS 6332 Series Fabric Interconnects and Cisco UCS C-Series Rack Servers. This architecture is specifically designed for performance and linear scalability for big data and machine learning workloads.

Audience

The intended audience of this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to deploy the Hortonworks Data Platform (HDP 3.0) on Cisco UCS Integrated Infrastructure for Big Data and Analytics. Readers are assumed to have an intermediate level of knowledge of Apache Hadoop and Cisco UCS based scale-out infrastructure.

Purpose of this Document

This document describes the architecture and provides step-by-step deployment procedures for Hortonworks Data Platform (HDP) 3.0.1 on a 28-node Cisco UCS C240 M5 cluster based on Cisco UCS Integrated Infrastructure for Big Data and Analytics.

Solution Summary

This CVD describes in detail the process for installing Hortonworks Data Platform 3.0.1 with Apache Spark and Docker containers, including the configuration details of the cluster. The current version of Cisco UCS Integrated Infrastructure for Big Data and Analytics offers the following configurations, depending on compute and storage requirements, as shown in Table 1.

Table 1 Cisco UCS Integrated Infrastructure for Big Data and Analytics Configuration Options

|

|

Performance (UCS-SP-C240M5-A2) |

Capacity (UCS-SPC240M5L-S1) |

High Capacity (UCS-SP-S3260-BV) |

| Servers |

16 x Cisco UCS C240 M5 Rack Servers with SFF drives |

16 x Cisco UCS C240 M5 Rack Servers with LFF drives |

8 x Cisco UCS S3260 Storage Servers |

| CPU |

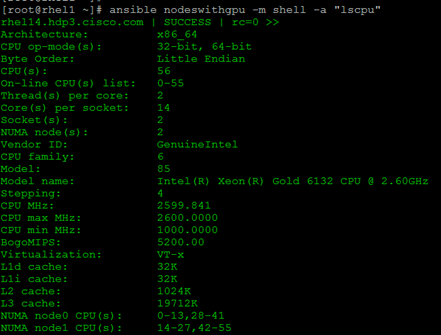

2 x Intel Xeon Processor Scalable Family 6132 (2 x 14 cores, 2.6 GHz) |

2 x Intel Xeon Processor Scalable Family 4110 (2 x 8 cores, 2.1 GHz) |

2 x Intel Xeon Processor Scalable Family 6132 (2 x 14 cores, 2.6 GHz) |

| Memory |

6 x 32 GB 2666 MHz (192 GB) |

6 x 32 GB 2666 MHz (192 GB) |

6 x 32 GB 2666 MHz (192 GB) |

| Boot |

M.2 with 2 x 240-GB SSDs |

M.2 with 2 x 240-GB SSDs |

M.2 with 2 x 240-GB SSDs |

| Storage |

24 x 2.4 TB 10K rpm SFF SAS HDDs or 12 x 1.6 TB Enterprise Value SATA SSDs |

12 x 8 TB 7.2K rpm LFF SAS HDDs + 2 SFF rear hot-swappable 1.6 TB Enterprise Value SATA SSDs |

24 x 6 TB 7.2K rpm LFF SAS HDDs |

| VIC |

40 Gigabit Ethernet (Cisco UCS VIC 1387) |

40 Gigabit Ethernet (Cisco UCS VIC 1387) |

40 Gigabit Ethernet (Cisco UCS VIC 1387) |

| Storage Controller |

Cisco 12-Gbps SAS Modular RAID Controller with 4-GB flash-based write cache (FBWC) or Cisco 12-Gbps Modular SAS Host Bus Adapter (HBA) |

Cisco 12-Gbps SAS Modular RAID Controller with 2-GB flash-based write cache (FBWC) or Cisco 12-Gbps Modular SAS Host Bus Adapter (HBA) |

Cisco 12-Gbps SAS Modular RAID Controller with 4-GB flash-based write cache (FBWC) |

| Network Connectivity |

Cisco UCS 6332 Fabric Interconnect |

Cisco UCS 6332 Fabric Interconnect |

Cisco UCS 6332 Fabric Interconnect |

| GPU (Optional) |

2 x NVIDIA TESLA V100 with 32G memory each |

2 x NVIDIA TESLA V100 with 32G memory each |

|
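To compare the Table 1 bundles on storage, the raw capacity of each can be worked out from the server and drive counts. The sketch below does this arithmetic and estimates usable HDFS capacity. It assumes the HDD option for the Performance bundle, ignores the rear SSDs in the Capacity bundle, and assumes the HDFS default replication factor of 3; the class and function names are hypothetical, and real usable capacity will be lower once filesystem overhead and reserved space are accounted for.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bundle:
    name: str
    servers: int
    drives_per_server: int
    drive_tb: float

    def raw_tb(self) -> float:
        """Raw data-drive capacity of the whole bundle in TB."""
        return self.servers * self.drives_per_server * self.drive_tb


# Server and drive counts are taken from Table 1 (HDD data drives only).
BUNDLES = [
    Bundle("Performance (HDD option)", 16, 24, 2.4),
    Bundle("Capacity", 16, 12, 8.0),
    Bundle("High Capacity", 8, 24, 6.0),
]

HDFS_REPLICATION = 3  # assumption: HDFS default replication factor


def usable_tb(bundle: Bundle, replication: int = HDFS_REPLICATION) -> float:
    """Approximate HDFS capacity after replication, ignoring other overhead."""
    return bundle.raw_tb() / replication


if __name__ == "__main__":
    for b in BUNDLES:
        print(f"{b.name}: raw {b.raw_tb():.1f} TB, "
              f"~{usable_tb(b):.1f} TB usable at {HDFS_REPLICATION}x replication")
```

Under these assumptions the raw capacities come to 921.6 TB, 1,536 TB, and 1,152 TB respectively, so the Capacity bundle offers the most storage per 16-server rack.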

Table 2 High Density GPU Nodes for Data Nodes

| | Starter | High Performance |
| --- | --- | --- |
| Servers | 4 x Cisco UCS C480 M5 Rack Servers | 4 x Cisco UCS C480 ML M5 Rack Servers |
| CPU | 2 x Intel Xeon Processor Scalable Family 6142 (2 x 16 cores, 2.6 GHz) | 2 x Intel Xeon Processor Scalable Family 6142 (2 x 16 cores, 2.6 GHz) |
| Memory | 12 x 32 GB DDR4 (384 GB) | 12 x 32 GB DDR4 (384 GB) |
| Boot | M.2 with 2 x 960-GB SSDs | M.2 with 2 x 960-GB SSDs |
| Storage | 24 x 1.8 TB 10K rpm SFF SAS HDDs or 12 x 1.6 TB Enterprise Value SATA SSDs | 24 x 1.8 TB 10K rpm SFF SAS HDDs or 12 x 1.6 TB Enterprise Value SATA SSDs |
| VIC | 40 Gigabit Ethernet (Cisco UCS VIC 1387) | 40 Gigabit Ethernet (Cisco UCS VIC 1387) |
| Storage Controller | Cisco 12-Gbps SAS Modular RAID Controller with 4-GB flash-based write cache (FBWC) or Cisco 12-Gbps Modular SAS Host Bus Adapter (HBA) | Cisco 12-Gbps SAS Modular RAID Controller with 4-GB flash-based write cache (FBWC) or Cisco 12-Gbps Modular SAS Host Bus Adapter (HBA) |
| Network Connectivity | Cisco UCS 6332 Fabric Interconnect | Cisco UCS 6332 Fabric Interconnect |
| GPU | 4 x NVIDIA TESLA V100 with 32G memory each | 8 x NVIDIA TESLA V100 with 32G memory each and with NVlink |
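Because this CVD runs AI applications only in standalone containers, a container cannot span servers, so the number of GPUs per server limits how large a single GPU request can be. The following sketch uses the Table 2 server and GPU counts to estimate how many containers of a given GPU size each option could host at once. The function names are hypothetical, and the calculation considers GPUs only; in practice CPU, memory, and scheduler settings would also constrain placement.

```python
# Server and GPU counts are taken from Table 2.
GPU_NODE_OPTIONS = {
    "Starter": {"servers": 4, "gpus_per_server": 4},
    "High Performance": {"servers": 4, "gpus_per_server": 8},
}


def total_gpus(option: str) -> int:
    """Total GPUs across all servers in the chosen option."""
    cfg = GPU_NODE_OPTIONS[option]
    return cfg["servers"] * cfg["gpus_per_server"]


def max_containers(option: str, gpus_per_container: int) -> int:
    """Upper bound on concurrent containers, each confined to one server."""
    if gpus_per_container < 1:
        raise ValueError("gpus_per_container must be at least 1")
    cfg = GPU_NODE_OPTIONS[option]
    per_server = cfg["gpus_per_server"] // gpus_per_container
    return cfg["servers"] * per_server


if __name__ == "__main__":
    for option in GPU_NODE_OPTIONS:
        print(option, "total GPUs:", total_gpus(option))
        for request in (1, 4, 8):
            print(f"  {request}-GPU containers:", max_containers(option, request))
```

For example, an 8-GPU container fits only on the High Performance option, because no Starter server has more than four GPUs.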

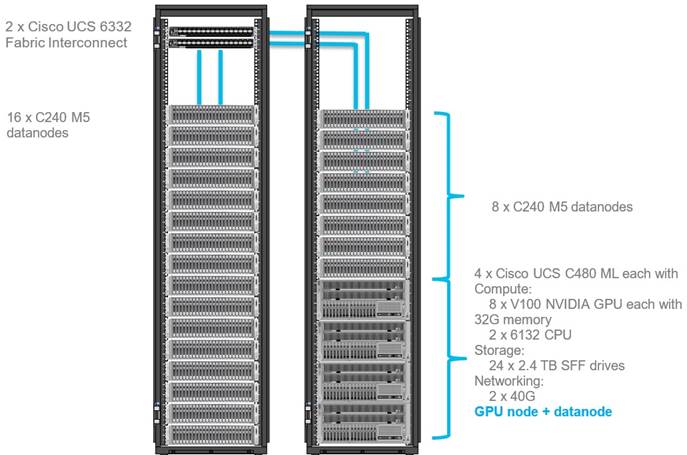

Figure 1 illustrates a 16-node starter cluster. The first rack (left) has 16 Cisco UCS C240 M5 servers. Each link in the figure represents a 40 Gigabit Ethernet link from each of the 16 servers directly connected to a Fabric Interconnect. The second rack (right) has 8 x Cisco UCS C240 M5 servers and 4 x Cisco UCS C480 ML M5 Servers. Every server is connected to both Fabric Interconnects.

High-density GPU servers have larger M.2 OS drives to accommodate the Docker volumes that are stored on the OS drives.

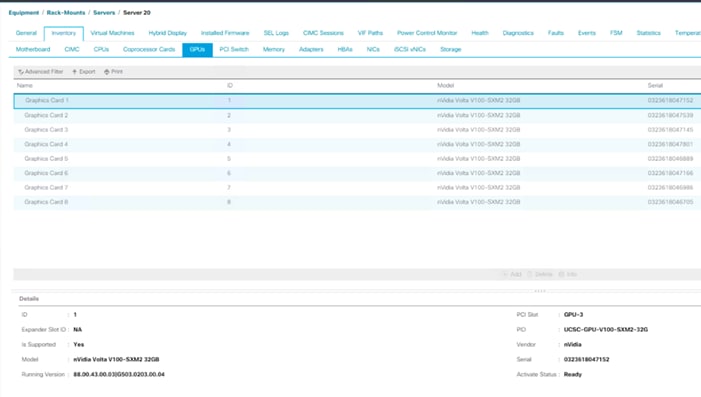

Each Cisco UCS C480 ML M5 has 8 x NVIDIA SXM2 V100 32GB modules with NVLink interconnect. Each Cisco UCS C240 M5 supports up to two PCIe GPU adapters with NVIDIA Tesla V100. For more information about Cisco UCS C240 M5 Server installation and GPU card configuration rules, go to https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/c/hw/C240M5/install/C240M5/C240M5_appendix_0101.html

Power requirements per rack must be calculated since the exact values will change based on the power needs of the GPUs.

2 x Cisco UCS 6454 Fabric Interconnects can also be used in this reference design. For more information about Cisco UCS 6454 FI, go to https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/datasheet-c78-741116.html. Cisco UCS 6332 series FI supports 40 Gb end-to-end and is a good choice for higher bandwidth and faster connections. Cisco UCS 6454 can be considered, if you prefer to use 10/25Gb connections and get faster 40/100 Gb uplinks or move to 25Gb in the future.

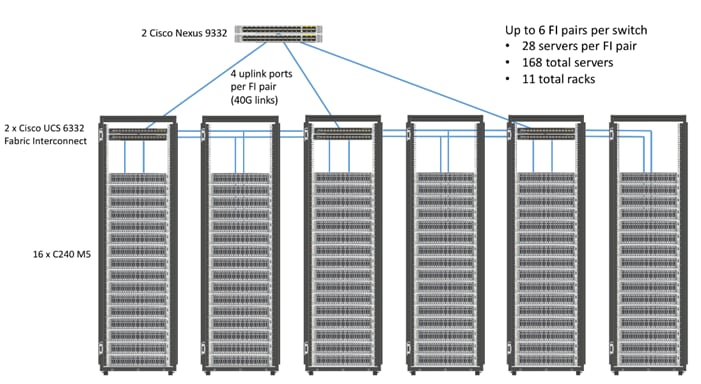

Scaling the Solution

Figure 2 illustrates how to scale the solution. Each pair of Cisco UCS 6332 Fabric Interconnects has 28 Cisco UCS C240 M5 servers connected to it. This allows for four uplinks from each Fabric Interconnect to the Cisco Nexus 9332 switch. Six pairs of 6332 FIs can connect to a single switch with four uplink ports each. With 28 servers per FI pair, a total of 168 servers can be supported. Additionally, the solution can scale to thousands of nodes with the Cisco Nexus 9500 series family of switches.
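The port arithmetic behind these scaling figures can be sketched as follows. The 32-port count for the Cisco UCS 6332 and the server and FI-pair counts come from this document; the constant and function names are hypothetical, and the sketch models only port counts, not bandwidth or oversubscription.

```python
FI_PORTS = 32              # ports on one Cisco UCS 6332 Fabric Interconnect
SERVERS_PER_FI_PAIR = 28   # every server connects to both FIs in the pair
FI_PAIRS_PER_SWITCH = 6    # FI pairs attached to one Cisco Nexus 9332


def uplinks_per_fi(ports: int = FI_PORTS,
                   servers: int = SERVERS_PER_FI_PAIR) -> int:
    """Ports left for uplinks after each server takes one port on the FI."""
    if servers > ports:
        raise ValueError("more servers than Fabric Interconnect ports")
    return ports - servers


def max_servers(fi_pairs: int = FI_PAIRS_PER_SWITCH,
                servers_per_pair: int = SERVERS_PER_FI_PAIR) -> int:
    """Total servers reachable through one aggregation switch."""
    return fi_pairs * servers_per_pair


if __name__ == "__main__":
    print("Uplinks per FI:", uplinks_per_fi())
    print("Servers per switch:", max_servers())
```

With the defaults this yields four uplinks per Fabric Interconnect and 168 servers, matching the figures above; changing the constants shows how a different server count per domain trades server ports against uplinks.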

Cisco UCS Integrated Infrastructure for Big Data and Analytics

The Cisco UCS Integrated Infrastructure for Big Data and Analytics solution for Hortonworks Data Platform is a highly scalable architecture designed to meet a variety of scale-out application demands, with seamless data integration and management integration capabilities, built using the components described in this section.

Cisco Unified Computing System

Cisco Unified Computing System is a next-generation solution for blade and rack server computing. Cisco UCS integrates a low-latency, lossless 10 and 40 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. Cisco UCS is an integrated, scalable, multi-chassis platform in which all resources participate in a unified management domain. Cisco UCS accelerates the delivery of new services simply, reliably, and securely through end-to-end provisioning and migration support for both virtualized and non-virtualized systems. Cisco UCS fuses access layer networking and servers. This high-performance, next-generation server system provides a data center with a high degree of workload agility and scalability.

Cisco UCS 6300 Series Fabric Interconnects

Cisco UCS 6300 Series Fabric Interconnects provide high-bandwidth, low-latency connectivity for servers, with integrated, unified management provided for all connected devices by Cisco UCS Manager (UCSM). Deployed in redundant pairs, Cisco fabric interconnects offer the full active-active redundancy, performance, and exceptional scalability needed to support the large number of nodes that are typical in clusters serving big data applications. Cisco UCS Manager enables rapid and consistent server configuration using service profiles, automating ongoing system maintenance activities such as firmware updates across the entire cluster as a single operation. Cisco UCS Manager also offers advanced monitoring with options to raise alarms and send notifications about the health of the entire cluster.

The Cisco UCS 6300 Series Fabric Interconnects are a core part of Cisco UCS, providing low-latency, lossless 10 and 40 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE), and Fibre Channel functions with management capabilities for the entire system. All servers attached to the Fabric Interconnects become part of a single, highly available management domain.

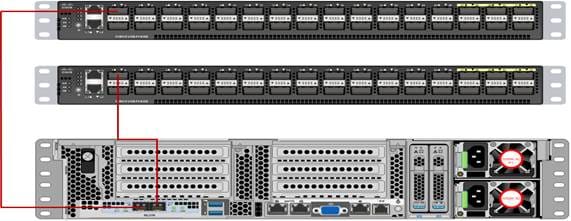

Figure 3 Cisco UCS 6332 UP 32-Port Fabric Interconnect

Cisco UCS C-Series Rack-Mount Servers

Cisco UCS C-Series Rack-Mount Servers keep pace with Intel Xeon processor innovation by offering the latest processors with increased processor frequency and improved security and availability features. With the increased performance provided by the Intel Xeon Scalable Family Processors, Cisco UCS C-Series servers offer an improved price-to-performance ratio. They also extend Cisco UCS innovations to an industry-standard rack-mount form factor, including a standards-based unified network fabric, Cisco VN-Link virtualization support, and Cisco Extended Memory Technology.

Designed to operate both in standalone environments and as part of a Cisco UCS managed configuration, these servers enable organizations to deploy systems incrementally, using as many or as few servers as needed, on a schedule that best meets the organization’s timing and budget. Cisco UCS C-Series servers offer investment protection through the capability to deploy them either as standalone servers or as part of Cisco UCS. One compelling reason that many organizations prefer rack-mount servers is the wide range of I/O options available in the form of PCIe adapters. C-Series servers support a broad range of I/O options, including interfaces supported by Cisco and adapters from third parties.

Cisco UCS C240 M5 Rack-Mount Server

The Cisco UCS C240 M5 Rack-Mount Server (Figure 4) is a 2-socket, 2-Rack-Unit (2RU) rack server offering industry-leading performance and expandability. It supports a wide range of storage and I/O-intensive infrastructure workloads, from big data and analytics to collaboration. Cisco UCS C-Series Rack Servers can be deployed as standalone servers or as part of a Cisco Unified Computing System managed environment to take advantage of Cisco’s standards-based unified computing innovations that help reduce customers’ Total Cost of Ownership (TCO) and increase their business agility.

In response to ever-increasing computing and data-intensive real-time workloads, the enterprise-class Cisco UCS C240 M5 server extends the capabilities of the Cisco UCS portfolio in a 2RU form factor. It incorporates the Intel Xeon Scalable processors, supporting up to 20 percent more cores per socket, twice the memory capacity, and five times more Non-Volatile Memory Express (NVMe) PCI Express (PCIe) Solid-State Disks (SSDs) compared to the previous generation of servers. These improvements deliver significant performance and efficiency gains that will improve your application performance. The Cisco UCS C240 M5 delivers outstanding levels of storage expandability with exceptional performance, along with the following:

· Latest Intel Xeon Scalable CPUs with up to 28 cores per socket

· Up to 24 DDR4 DIMMs for improved performance

· Up to 26 hot-swappable Small-Form-Factor (SFF) 2.5-inch drives, including 2 rear hot-swappable SFF drives (up to 10 support NVMe PCIe SSDs on the NVMe-optimized chassis version), or 12 Large-Form-Factor (LFF) 3.5-inch drives plus 2 rear hot-swappable SFF drives

· Support for 12-Gbps SAS modular RAID controller in a dedicated slot, leaving the remaining PCIe Generation 3.0 slots available for other expansion cards

· Modular LAN-On-Motherboard (mLOM) slot that can be used to install a Cisco UCS Virtual Interface Card (VIC) without consuming a PCIe slot, supporting dual 10- or 40-Gbps network connectivity

· Dual embedded Intel x550 10GBASE-T LAN-On-Motherboard (LOM) ports

· Modular M.2 or Secure Digital (SD) cards that can be used for boot

Figure 4 Cisco UCS C240 M5 Rack-Mount Server – Front View

Figure 5 Cisco UCS C240 M5 Rack-Mount Server – Rear View

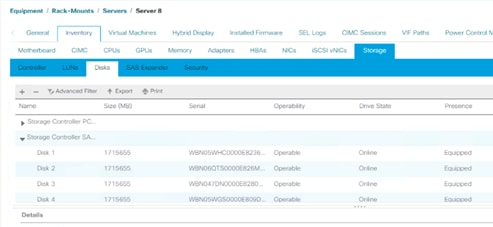

Cisco UCS C480 M5 Rack-Mount Server

The Cisco UCS C480 M5 Rack-Mount Server is a storage and I/O-optimized enterprise-class rack-mount server that delivers industry-leading performance for in-memory databases, big data analytics, virtualization, Virtual Desktop Infrastructure (VDI), and bare-metal applications. The Cisco UCS C480 M5 (Figure 6) delivers outstanding levels of expandability and performance for standalone or Cisco Unified Computing System managed environments in a 4RU form-factor. Because of its modular design, you pay for only what you need. It offers these capabilities:

· Latest Intel Xeon Scalable processors with up to 28 cores per socket and support for two- or four-processor configurations

· 2666-MHz DDR4 memory and 48 DIMM slots for up to 6 Terabytes (TB) of total memory

· 12 PCI Express (PCIe) 3.0 slots

- Six x8 full-height, full-length slots

- Six x16 full-height, full-length slots

· Flexible storage options with support for up to 32 Small-Form-Factor (SFF) 2.5-inch, SAS, SATA, and PCIe NVMe disk drives

· Cisco 12-Gbps SAS Modular RAID Controller in a dedicated slot

· Internal Secure Digital (SD) and M.2 boot options

· Dual embedded 10 Gigabit Ethernet LAN-On-Motherboard (LOM) ports

Figure 6 Cisco UCS C480 M5 Rack-Mount Server – Front View

Figure 7 Cisco UCS C480 M5 Rack-Mount Server – Rear View

For more information about Cisco UCS C480 M5 Rack Server, go to: https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs-c-series-rack-servers/datasheet-c78-739291.html

Cisco UCS C480 ML M5 Rack Server

The Cisco UCS C480 ML M5 Rack Server is a purpose-built server for deep learning. It is storage and I/O optimized to deliver industry-leading performance for training models. The Cisco UCS C480 ML M5 delivers outstanding levels of storage expandability and performance options for standalone or Cisco Unified Computing System managed environments in a 4RU form factor. Because of its modular design, you pay for only what you need. It offers these capabilities:

· 8 NVIDIA SXM2 V100 32G modules with NVLink interconnect

· Latest Intel Xeon Scalable processors with up to 28 cores per socket and support for two-processor configurations

· 2666-MHz DDR4 memory and 24 DIMM slots for up to 3 terabytes (TB) of total memory

· 4 PCI Express (PCIe) 3.0 slots for 100G UCS VIC 1495

· Flexible storage options with support for up to 24 Small-Form-Factor (SFF) 2.5-inch, SAS/SATA Solid-State Disks (SSDs) and Hard-Disk Drives (HDDs)

· Up to 6 PCIe NVMe disk drives

· Cisco 12-Gbps SAS Modular RAID Controller in a dedicated slot

· M.2 boot options

· Dual embedded 10 Gigabit Ethernet LAN-On-Motherboard (LOM) ports

Figure 8 Cisco UCS C480 ML M5 Purpose Built Deep Learning Server – Front View

Figure 9 Cisco UCS C480 ML M5 Purpose Built Deep Learning Server – Rear View

For more information about Cisco UCS C480 ML M5 Server, go to: https://www.cisco.com/c/dam/en/us/products/collateral/servers-unified-computing/ucs-c-series-rack-servers/c480m5-specsheet-ml-m5-server.pdf

Table 3 lists the features and benefits of Cisco UCS C480 ML M5 Server.

Table 3 Feature and Benefits for Cisco UCS C480 ML M5 Server

| Feature | Benefits |
| --- | --- |
| 8 x NVIDIA SXM2 V100 32GB modules with NVLink interconnect | Fast Deep Learning model training |
| Modular storage support with up to 24 front accessible hot-swappable Hard Disk Drives (HDDs) and Solid-State Disks (SSDs) | Modularity to right-size storage options to match training requirements; flexibility to expand as storage needs increase |
| High-capacity memory support of up to 3 TB using 128-GB DIMMs | Large memory footprint to deliver performance and capacity for large model training |
| Up to 6 PCIe NVMe drives | Up to 6 Gen3 x4 lanes NVMe drives for extreme I/O performance for faster model training |
| Support for up to 4 PCIe Generation 3.0 slots | Support for up to four 10/25 or 40/100G Cisco VICs |
| Hot-swappable, redundant power supplies | Increased high availability |
| Integrated dual 10-Gbps Ethernet | Increased network I/O performance and additional network options |

Cisco UCS Virtual Interface Cards

Cisco UCS Virtual Interface Cards (VICs) are unique to Cisco. Cisco UCS Virtual Interface Cards incorporate next-generation converged network adapter (CNA) technology from Cisco and offer dual 10- and 40-Gbps ports designed for use with Cisco UCS servers. Optimized for virtualized networking, these cards deliver high performance and bandwidth utilization, and support up to 256 virtual devices.

The Cisco UCS Virtual Interface Card 1387 offers dual-port Enhanced Quad Small Form-Factor Pluggable (QSFP+) 40 Gigabit Ethernet and Fibre Channel over Ethernet (FCoE) in a modular-LAN-on-motherboard (mLOM) form factor. The mLOM slot can be used to install a Cisco VIC without consuming a PCIe slot, which provides greater I/O expandability.

Figure 10 Cisco UCS VIC 1387

For more information about Cisco UCS Adapters, go to: https://www.cisco.com/c/en/us/products/interfaces-modules/unified-computing-system-adapters/index.html

Cisco UCS Manager

Cisco UCS Manager (UCSM) resides within the Cisco UCS 6300 Series Fabric Interconnect. It makes the system self-aware and self-integrating, managing all of the system components as a single logical entity. Cisco UCS Manager can be accessed through an intuitive GUI, a CLI, or an XML API. Cisco UCS Manager uses service profiles to define the personality, configuration, and connectivity of all resources within Cisco UCS, radically simplifying provisioning of resources so that the process takes minutes instead of days. This simplification allows IT departments to shift their focus from constant maintenance to strategic business initiatives.
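As an illustration of the XML API access path mentioned above, the following minimal sketch builds two request documents: an `aaaLogin` request that opens a session and a `configResolveClass` query that lists rack servers. The host name and password are hypothetical placeholders, and the sketch only constructs the XML bodies; posting them to the `/nuova` endpoint and handling the returned session cookie are left out.

```python
import xml.etree.ElementTree as ET

# Hypothetical cluster address; UCS Manager serves its XML API at the /nuova path.
UCSM_URL = "https://ucsm.example.com/nuova"


def login_request(username: str, password: str) -> str:
    """Build the aaaLogin document that opens an XML API session."""
    elem = ET.Element("aaaLogin", {"inName": username, "inPassword": password})
    return ET.tostring(elem, encoding="unicode")


def resolve_class_request(cookie: str, class_id: str) -> str:
    """Build a configResolveClass query that returns every object of one class."""
    elem = ET.Element(
        "configResolveClass",
        {"cookie": cookie, "classId": class_id, "inHierarchical": "false"},
    )
    return ET.tostring(elem, encoding="unicode")


if __name__ == "__main__":
    # Placeholder credentials and cookie, for illustration only.
    print(login_request("admin", "example-password"))
    print(resolve_class_request("cookie-from-login", "computeRackUnit"))
```

The cookie passed to the second request would come from the `outCookie` attribute of the login response.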

For more information about Cisco UCS Manager, go to: https://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-manager/index.html

NVIDIA GPU

Graphics Processing Units, or GPUs, are specialized processors designed to render images, animation, and video for computer displays. They perform this task by running many operations simultaneously. While the number and kinds of operations they can do are limited, they make up for it by being able to run many thousands in parallel. As the graphics capabilities of GPUs increased, it soon became apparent that the massive parallelism of GPUs could be put to other uses besides rendering graphics.

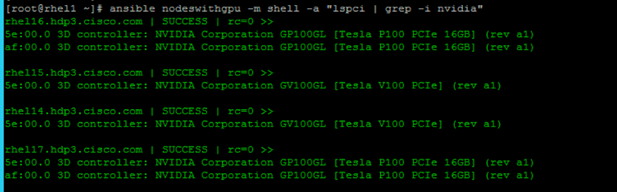

The NVIDIA GPU used in this document, the NVIDIA Tesla V100, is an advanced data center GPU built to accelerate AI, HPC, and graphics. It is powered by the NVIDIA Volta architecture and comes in 16 GB and 32 GB configurations.

NVIDIA GPUs bring two key advantages to the table. First, they make possible solutions that were simply not computationally possible before. Second, by providing the same processing power as scores of traditional CPUs they reduce the requirements for rack space, power, networking and cooling in the data center.

NVIDIA CUDA

GPUs are very good at running the same operation on different data simultaneously. This is often referred to as single instruction, multiple data, or SIMD. This is exactly what’s needed to render graphics but many other computing problems can benefit from this approach. As a result, NVIDIA created CUDA. CUDA is a parallel computing platform and programming model that makes it possible to use a GPU for many general-purpose computing tasks via commonly used programming languages like C and C++.

In addition to the general-purpose computing capabilities that CUDA enables there is also a special CUDA library for deep learning called the CUDA Deep Neural Network library, or cuDNN. cuDNN makes it easier to implement deep machine learning architectures that take full advantage of the GPU’s capabilities.

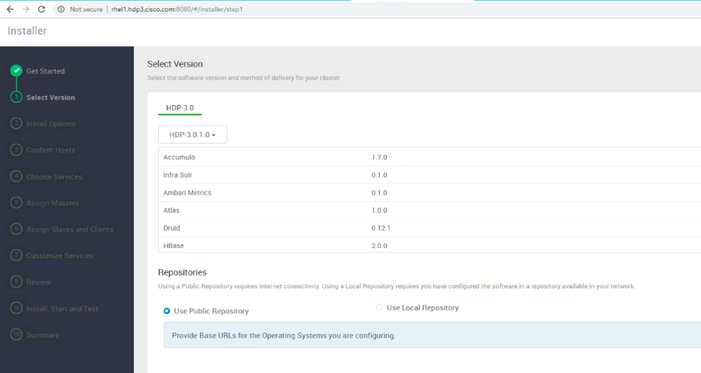

Hortonworks Data Platform

The Hortonworks Data Platform (HDP 3.0.1) delivers essential capabilities in a completely open, integrated and tested platform that is ready for enterprise usage. With Hadoop YARN at its core, HDP provides flexible enterprise data processing across a range of data processing engines, paired with comprehensive enterprise capabilities for governance, security and operations.

All the integration of the entire solution is thoroughly tested and fully documented. By taking the guesswork out of building out a Hadoop deployment, HDP gives a streamlined path to success in solving real business problems.

Hortonworks Data Platform (HDP) 3.0 delivers significant new features, including the ability to launch apps in a matter of minutes and address new use cases for high-performance deep learning and machine learning apps. In addition, this new version of HDP enables enterprises to gain value from their data faster, smarter, in a hybrid environment.

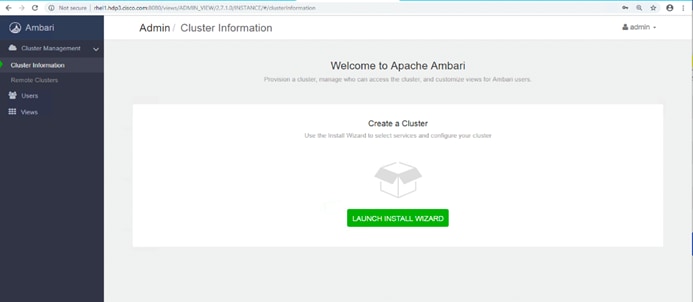

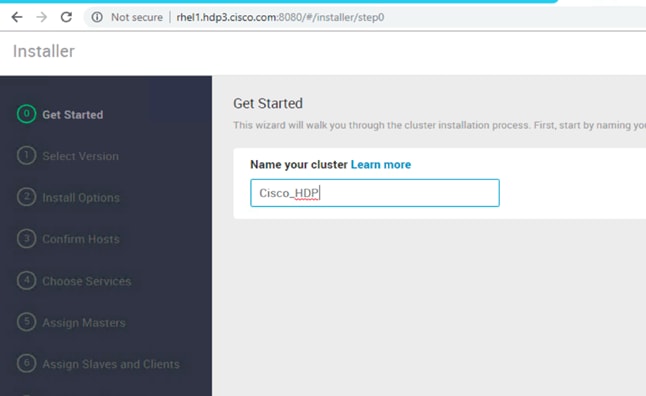

Apache Ambari

Apache Ambari is a completely open source management platform. It provisions, manages, secures, and monitors Apache Hadoop clusters. Apache Ambari is part of Hortonworks Data Platform and allows enterprises to plan and deploy HDP clusters. It also provides ongoing cluster maintenance and management.

Ambari provides an intuitive Web UI as well as an extensive REST API framework which is very useful for automating cluster operations.
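To illustrate how the REST API can be used for automation, the following minimal sketch prepares an authenticated request against the `/api/v1` resource tree. The host name, port, and credentials are hypothetical placeholders, and the helper name `ambari_request` is introduced here only for illustration. The request is built but not sent, so the sketch runs without a cluster.

```python
import base64
import urllib.request


def ambari_request(base_url: str, path: str, user: str, password: str) -> urllib.request.Request:
    """Prepare a GET request for an Ambari REST resource under /api/v1."""
    url = f"{base_url.rstrip('/')}/api/v1/{path.lstrip('/')}"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(url)
    request.add_header("Authorization", f"Basic {token}")
    # Ambari expects this header on modifying calls; it is harmless on reads.
    request.add_header("X-Requested-By", "ambari")
    return request


if __name__ == "__main__":
    # Hypothetical server and credentials, for illustration only.
    req = ambari_request("http://ambari.example.com:8080", "clusters", "admin", "example-password")
    print(req.get_method(), req.full_url)
    # To send it against a live server: urllib.request.urlopen(req)
```

Listing `clusters` is a read-only call, which makes it a reasonable first check before scripting any change through the API.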

Below are the core benefits that Hadoop operators get with Ambari:

· Simplified Installation, Configuration and Management. Easily and efficiently create, manage and monitor clusters at scale. Takes the guesswork out of configuration with Smart Configs and Cluster Recommendations. Enables repeatable, automated cluster creation with Ambari Blueprints.

· Centralized Security Setup. Reduce the complexity to administer and configure cluster security across the entire platform. Helps automate the setup and configuration of advanced cluster security capabilities such as Kerberos and Apache Ranger.

· Full Visibility into Cluster Health. Ensure your cluster is healthy and available with a holistic approach to monitoring. Configures predefined alerts — based on operational best practices — for cluster monitoring. Captures and visualizes critical operational metrics — using Grafana — for analysis and troubleshooting. Integrated with Hortonworks SmartSense for proactive issue prevention and resolution.

· Highly Extensible and Customizable. Fit Hadoop seamlessly into your enterprise environment. Highly extensible with Ambari Stacks for bringing custom services under management, and with Ambari Views for customizing the Ambari Web UI.

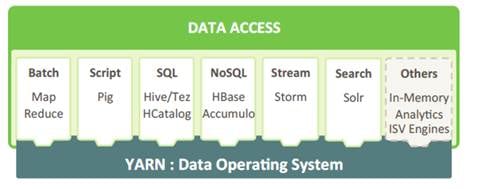

HDP for Data Access

With YARN at its foundation, HDP provides a range of processing engines that allow users to interact with data in multiple and parallel ways, without the need to stand up individual clusters for each data set/application. Some applications require batch while others require interactive SQL or low-latency access with NoSQL. Other applications require search, streaming or in-memory analytics. Apache Solr, Storm and Spark fulfill those needs respectively.

To function as a true data platform, the YARN-based architecture of HDP enables the widest possible range of access methods to coexist within the same cluster avoiding unnecessary and costly data silos.

As shown in Figure 11, HDP Enterprise natively provides for the following data access types:

· Batch – Apache MapReduce has served as the default Hadoop processing engine for years. It is tested and relied upon by many existing applications.

· Interactive SQL Query - Apache Hive is the de facto standard for SQL interactions at petabyte scale within Hadoop. Hive delivers interactive and batch SQL querying across the broadest set of SQL semantics.

· Search - HDP integrates Apache Solr to provide high-speed indexing and sub-second search times across all your HDFS data.

· Scripting - Apache Pig is a scripting language for Hadoop that can run on MapReduce or Apache Tez, allowing you to aggregate, join and sort data.

· Low-latency access via NoSQL - Apache HBase provides extremely fast access to data as a columnar format, NoSQL database. Apache Accumulo also provides high-performance storage and retrieval, but with fine-grained access control to the data.

· Streaming - Apache Storm processes streams of data in real time and can analyze and take action on data as it flows into HDFS.

Docker Containerization

Hortonworks Data Platform (HDP 3.0) makes use of container technology. Containers are conceptually similar to virtual machines, but instead of virtualizing the hardware, a container virtualizes the operating system. With a VM, there is an entire operating system sitting on top of the hypervisor. Containers dispense with this time-consuming and resource-hungry requirement by sharing the host system’s kernel. As a result, a container is far smaller, and its lightweight nature means it can be instantiated quickly. In fact, containers can be instantiated so quickly that new application architectures are possible.

Docker is an open-source project that performs operating-system-level virtualization, also known as "containerization." It uses Linux kernel features like namespaces and control groups to create containers. These features are not new, but Docker has taken these concepts and improved them in the following ways:

· Ease of use: Docker makes it easier for anyone (developers, systems admins, architects, and others) to take advantage of containers in order to quickly build and test portable applications. It allows anyone to package an application on their development system, which can then run unmodified on any cloud or bare metal server. The basic idea is to create a “build once, run anywhere” system.

· Speed: Docker containers are very fast with a small footprint. Ultimately, containers are just sandboxed environments running on the kernel, so they take up few resources. You can create and run a Docker container in seconds. Compare this to a VM, which takes much longer because it has to boot up a full virtual operating system every time.

· Modularity: Docker makes it easy to take an application and break its functionality into separate individual containers. These containers can then be spun up and run as needed. This is particularly useful for cases where an application needs to hold and lock a particular resource, like a GPU, and then release it once it’s done using it. Modularity also enables each component (that is, each container) to be updated independently.

· Scalability: Modularity enables scalability. With different parts of the system running in different containers, it becomes possible, and with Docker easy, to connect these containers together to create an application, which can then be scaled out as needed.

YARN Support For Docker

Containerization provides YARN support for Docker containers, which makes it easier to bundle libraries and dependencies along with their application, allowing third-party applications to run on Apache Hadoop (for example, containerized applications), enabling:

· Faster time to deployment by enabling third-party apps.

· The ability to run multiple versions of an application, enabling users to rapidly create features by developing and testing new versions of services without disrupting old ones.

· Improved resource utilization and increased task throughput for containers, yielding faster time to market for services.

· Orchestration of stateless distributed applications.

· Packaging libraries for Spark applications, eliminating the need for operations to deploy those libraries cluster wide.

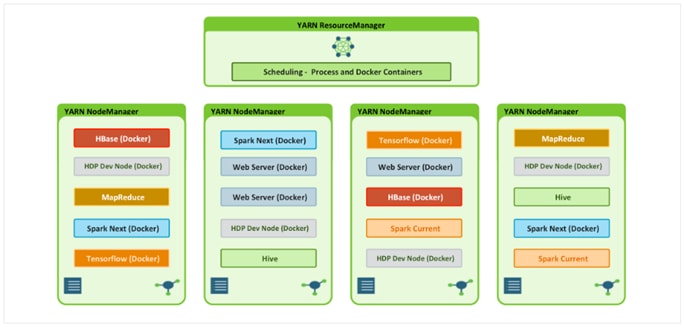

Figure 12 Containerized Application on Apache Hadoop YARN 3.1

As shown in Figure 12, with the YARN Services Framework combined with Docker containerization, it is now possible to run both existing Hadoop frameworks, such as Hive and Spark, and new containerized workloads on the same underlying infrastructure. Apache Hadoop 3.1 further improved these capabilities to enable advanced use cases such as TensorFlow and HBase.

NVIDIA Docker

Docker containers are not only platform-agnostic but also hardware-agnostic. This presents a problem when using specialized hardware such as NVIDIA GPUs, which require kernel modules and user-level libraries to operate. As a result, Docker does not natively support NVIDIA GPUs within containers.

One of the early workarounds to this problem was to fully install the NVIDIA drivers inside the container and map in the character devices corresponding to the NVIDIA GPUs (for example, /dev/nvidia0) on launch. This solution is brittle because the version of the host driver must exactly match the version of the driver installed in the container. This requirement drastically reduced the portability of these early containers, undermining one of Docker’s more important features.

To enable portability in Docker images that leverage NVIDIA GPUs, NVIDIA developed nvidia-docker, an open-source project hosted on GitHub that provides the two critical components needed for portable GPU-based containers:

· Driver-agnostic CUDA images

· A Docker command line wrapper that mounts the user mode components of the driver and the GPUs (character devices) into the container at launch

nvidia-docker is essentially a wrapper around the docker command that transparently provisions a container with the necessary components to execute code on the GPU.

As of the publishing of this CVD, Hortonworks only supports nvidia-docker version 1.
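The wrapper behavior described above can be illustrated with a minimal sketch that assembles an nvidia-docker version 1 invocation. It assumes the commonly used smoke test of running `nvidia-smi` in the `nvidia/cuda` image and the `NV_GPU` environment variable that version 1 uses to restrict which GPUs are exposed. The helper name `nvidia_docker_command` is hypothetical, and the sketch only builds the command; it does not execute it.

```python
import shlex
from typing import Dict, List, Optional, Sequence, Tuple


def nvidia_docker_command(
    image: str,
    command: Sequence[str],
    gpu_ids: Optional[Sequence[int]] = None,
) -> Tuple[Dict[str, str], List[str]]:
    """Return (extra environment, argv) for an nvidia-docker v1 run."""
    argv = ["nvidia-docker", "run", "--rm", image, *command]
    env: Dict[str, str] = {}
    if gpu_ids is not None:
        # nvidia-docker v1 reads NV_GPU to limit the GPUs mapped into the container.
        env["NV_GPU"] = ",".join(str(i) for i in gpu_ids)
    return env, argv


if __name__ == "__main__":
    env, argv = nvidia_docker_command("nvidia/cuda", ["nvidia-smi"], gpu_ids=[0, 1])
    prefix = " ".join(f"{key}={value}" for key, value in env.items())
    print(prefix, shlex.join(argv))
    # To run it on a GPU host: subprocess.run(argv, env={**os.environ, **env}, check=True)
```

If the container prints the same GPU list as `nvidia-smi` on the host, the driver components were mounted correctly.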

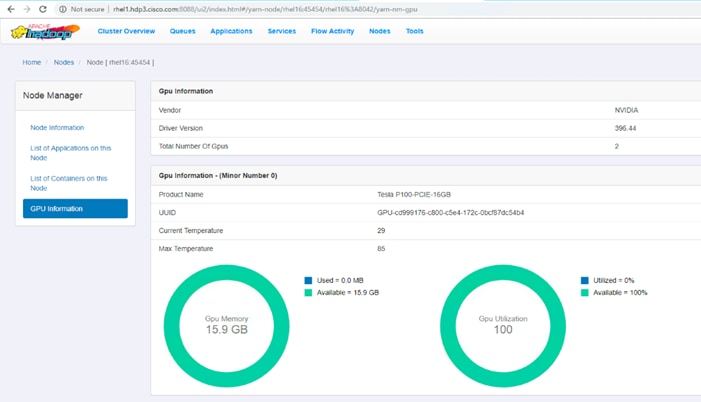

GPU Pooling and Isolation

GPU pooling and isolation allow GPUs to be treated as a first-class resource type in Hadoop, making it easier for customers to run machine learning and deep learning workloads.

· Compute-intensive analytics require not only a large compute pool, but also a fast and expensive processing pool with GPUs in tandem

· Customers can share cluster-wide GPU resources without having to dedicate a GPU node to a single tenant or workload

· GPU isolation dedicates a GPU to an application so that no other application has access to that GPU

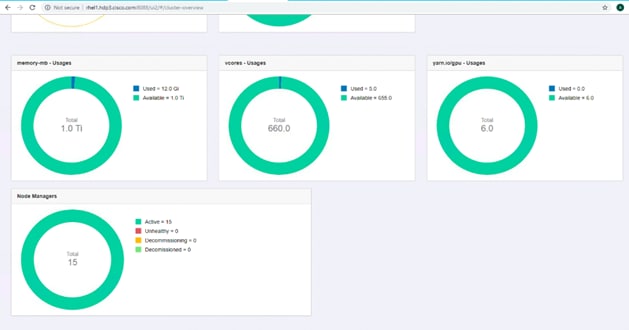

When it comes to resource scheduling, it is important to recognize GPU as a resource. YARN extends the resource model to a more flexible one, which makes it easier to add new countable resource types. When GPU is added as a resource type, YARN can schedule applications on GPU machines. Furthermore, when a container request specifies the number of GPUs it needs, YARN can find machines with enough available GPUs to satisfy the request.
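The idea of treating GPUs as a countable resource can be sketched with a toy placement routine. This is not YARN's scheduler; it is a simplified first-fit illustration under the assumption that each node advertises a free GPU count and each application requests a fixed number of GPUs. All node and application names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    free_gpus: int


def place(requests: Dict[str, int], nodes: List[Node]) -> Dict[str, Optional[str]]:
    """Assign each application to a node that can satisfy its GPU count."""
    placement: Dict[str, Optional[str]] = {}
    for app, gpus in requests.items():
        chosen: Optional[Node] = None
        if gpus == 0:
            # Prefer the node with the fewest free GPUs so GPU capacity stays available.
            ordered = sorted(nodes, key=lambda n: n.free_gpus)
            chosen = ordered[0] if ordered else None
        else:
            # First fit: take the first node with enough free GPUs.
            for node in nodes:
                if node.free_gpus >= gpus:
                    chosen = node
                    break
        if chosen is None:
            placement[app] = None  # request cannot be satisfied right now
        else:
            chosen.free_gpus -= gpus
            placement[app] = chosen.name
    return placement


if __name__ == "__main__":
    cluster = [Node("c240m5-01", 0), Node("c480ml-01", 8)]
    print(place({"tez": 0, "tensorflow": 4, "caffe": 8}, cluster))
```

In this toy run, the request for 8 GPUs stays unplaced because only 4 remain free after the first GPU application is assigned, which mirrors how a countable resource limits concurrent allocations.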

Figure 13 YARN Scheduling for GPU/Non-GPU Applications

When GPU scheduling is enabled, YARN can schedule non-GPU applications, such as LLAP and Tez, to servers without GPUs. Moreover, YARN can allocate GPU applications, such as TensorFlow, Caffe, and MXNet, to servers with GPUs.

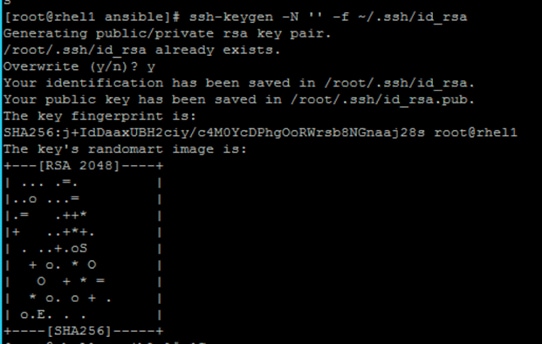

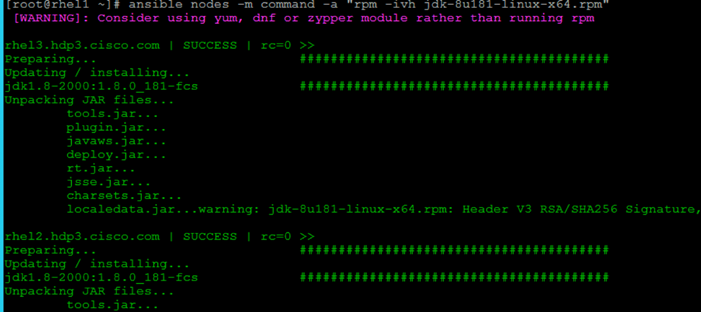

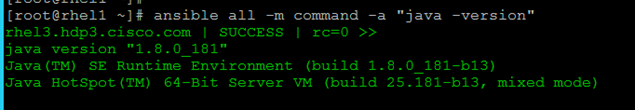

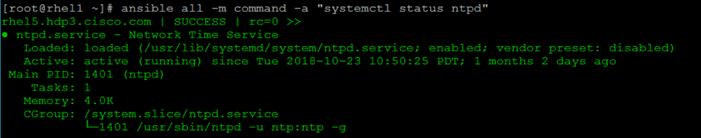

Red Hat Ansible Automation

Red Hat Ansible Automation is a powerful IT automation tool. It is capable of provisioning numerous types of resources and deploying applications. It can configure and manage devices and operating system components. Due to its simplicity, extensibility, and portability, this solution extensively utilizes Ansible for performing repetitive deployment steps across the nodes.

For more information about Ansible, go to: https://www.redhat.com/en/technologies/management/ansible

Requirements

This CVD describes the architecture and deployment procedures for Hortonworks Data Platform (HDP) 3.0.1 on a 28-node cluster (24 Cisco UCS C240 M5 and 4 Cisco UCS C480 ML M5 servers) based on Cisco UCS Integrated Infrastructure for Big Data and Analytics. It details the configuration of HDP 3.0.1 on this infrastructure, as well as the configuration of Hortonworks DataFlow for various use cases.

The cluster configuration consists of the following:

· 2 Cisco UCS 6332UP Fabric Interconnects

· 24 Cisco UCS C240 M5 Rack-Mount servers

· 4 Cisco UCS C480 ML M5 Rack-Mount servers

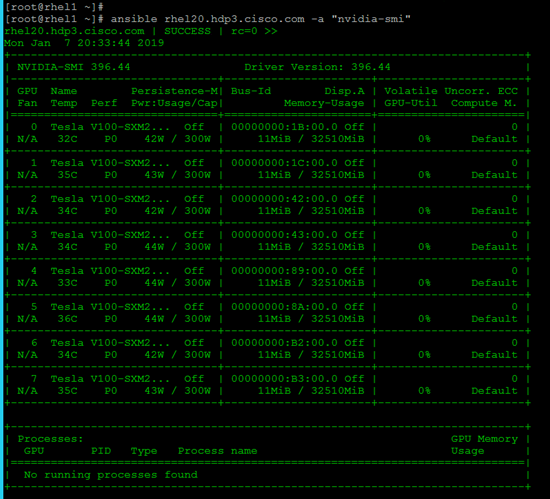

· 8 NVIDIA GPUs in each Cisco UCS C480 ML M5

· 2 Cisco R42610 standard racks

· 4 vertical power distribution units (PDUs) (country specific), two per rack

Rack and PDU Configuration

Each rack contains two vertical PDUs. The first rack contains two Cisco UCS 6332UP Fabric Interconnects and 16 Cisco UCS C240 M5 Rack Servers, each connected to both vertical PDUs for redundancy, which maintains availability if a power source fails. The second rack contains 8 Cisco UCS C240 M5 Servers and 4 Cisco UCS C480 ML M5 Servers, connected to both vertical PDUs in the same way.

Please contact your Cisco representative for country specific information.

Table 4 Port Configuration on Fabric Interconnects

| Port Type | Port Number |
| --- | --- |
| Network | 29-32 |
| Server | 1-28 |
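As a quick sanity check on the port plan in Table 4, the following sketch confirms that the 28 servers in the cluster configuration fit into server ports 1-28 on a Fabric Interconnect without touching the network ports. The host names and the one-port-per-server, in-order assignment are assumptions made for illustration; the actual cabling order is a site decision.

```python
# Port ranges taken from Table 4.
SERVER_PORTS = range(1, 29)    # ports 1-28
NETWORK_PORTS = range(29, 33)  # ports 29-32

# Hypothetical host names: 24 C240 M5 servers followed by 4 C480 ML M5 servers.
SERVERS = [f"c240m5-{i:02d}" for i in range(1, 25)] + [
    f"c480ml-{i:02d}" for i in range(1, 5)
]


def assign_server_ports(hosts, ports=SERVER_PORTS):
    """Map one server port per host, in order, on a single Fabric Interconnect."""
    hosts = list(hosts)
    if len(hosts) > len(ports):
        raise ValueError(f"{len(hosts)} hosts do not fit in {len(ports)} server ports")
    return dict(zip(ports, hosts))


if __name__ == "__main__":
    plan = assign_server_ports(SERVERS)
    for port, host in plan.items():
        print(f"port {port:2d} -> {host}")
```

The same mapping would apply to both Fabric Interconnects if each server connects one VIC port to each fabric.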

Cabling for Cisco UCS C240 M5

The Cisco UCS C240 M5 rack server is equipped with 2 x Intel Xeon Processor Scalable Family 6132 (2 x 14 cores, 2.6 GHz), 192 GB of memory, Cisco UCS Virtual Interface Card 1387, Cisco 12-Gbps SAS Modular RAID Controller with 4-GB FBWC, 26 x 1.8 TB 10K rpm SFF SAS HDDs or 12 x 1.6 TB Enterprise Value SATA SSDs, and M.2 with 2 x 240-GB SSDs for boot.

Figure 14 illustrates the port connectivity between the Fabric Interconnect and the Cisco UCS C240 M5 server. Sixteen Cisco UCS C240 M5 servers are used in the master rack configuration.

Figure 14 Cisco UCS C240 M5 and 6300 Series Fabric Interconnect Port Connectivity

For information about physical connectivity and single-wire management go to:

For more information about physical connectivity illustrations and cluster setup, go to:

Software Distributions and Versions

The required software distributions and versions are listed below.

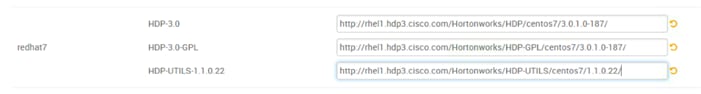

Hortonworks Data Platform (HDP 3.0.1)

The Hortonworks Data Platform supported is HDP 3.0.1. For more information, go to: http://www.hortonworks.com.

Red Hat Enterprise Linux (RHEL)

The operating system supported is Red Hat Enterprise Linux 7.5. For more information, go to: http://www.redhat.com.

Software Versions

The software versions tested and validated in this document are shown in Table 5.

| Layer |

Component |

Version or Release |

| Compute |

Cisco UCS C240 M5 |

C240M5.4.0.2a |

| Cisco UCS C480 ML M5 |

|

|

| Network |

Cisco UCS 6332 |

UCS 4.0(2a) |

| Cisco UCS VIC1387 Firmware |

4.3(2a) |

|

| Cisco UCS VIC1387 Driver |

3.1.137.5 |

|

| Storage |

SAS Expander |

65.02.15.00 |

| Cisco 12G Modular Raid controller |

50.6.0-1952 |

|

| Software |

Red Hat Enterprise Linux Server |

7.5 |

| Cisco UCS Manager |

4.0(2a) |

|

| HDP |

3.0.1 |

|

| Docker |

1.13.1 |

|

| Ansible |

2.4.6.0 |

|

| Nvidia-docker |

1.0.1 |

|

| GPU |

CUDA |

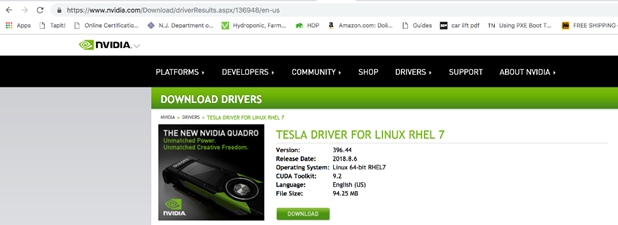

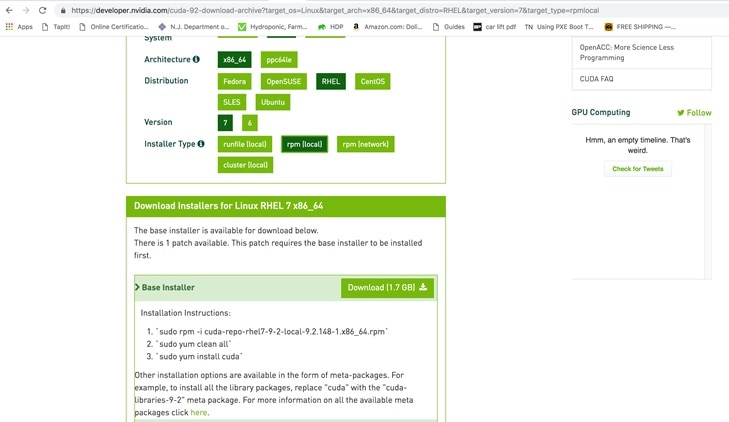

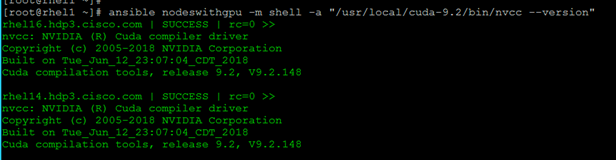

9.2 |

| NVIDIA GPU Driver |

396.44 |

The latest drivers can be downloaded from this link:

https://software.cisco.com/download/home/283862063/type/283853158/release/3.1%25283%2529.

The latest supported RAID controller driver is already included with the RHEL 7.5 operating system.

Cisco UCS C240 M5 Rack Servers with Intel Scalable Processor Family CPUs are supported from Cisco UCS firmware 3.2 onwards.

Fabric Configuration

This section provides the details to configure a fully redundant, highly available Cisco UCS 6332 fabric configuration. The following is the high-level workflow to set up Cisco UCS:

· Initial setup of the Fabric Interconnect A and B

· Connect to Cisco UCS Manager using the virtual IP address in a web browser

· Launch Cisco UCS Manager

· Enable server and uplink ports

· Start discovery process

· Create pools and policies for service profile template

· Create Service Profile template

· Create service profile for each server from service profile template

· Associate Service Profiles to servers

Perform Initial Setup of Cisco UCS 6332 Fabric Interconnects

This section describes the initial setup of the Cisco UCS 6332 Fabric Interconnects A and B.

Configure Fabric Interconnect A

To configure Fabric Interconnect A, follow these steps:

1. Connect to the console port on the first Cisco UCS 6332 Fabric Interconnect.

2. At the prompt to enter the configuration method, enter console to continue.

3. If asked to either perform a new setup or restore from backup, enter setup to continue.

4. Enter y to continue to set up a new Fabric Interconnect.

5. Enter y to enforce strong passwords.

6. Enter the password for the admin user.

7. Enter the same password again to confirm the password for the admin user.

8. When asked if this fabric interconnect is part of a cluster, answer y to continue.

9. Enter A for the switch fabric.

10. Enter the cluster name for the system name.

11. Enter the Mgmt0 IPv4 address.

12. Enter the Mgmt0 IPv4 netmask.

13. Enter the IPv4 address of the default gateway.

14. Enter the cluster IPv4 address.

15. To configure DNS, answer y.

16. Enter the DNS IPv4 address.

17. Answer y to set up the default domain name.

18. Enter the default domain name.

19. Review the settings that were printed to the console, and if they are correct, answer yes to save the configuration.

20. Wait for the login prompt to make sure the configuration has been saved.

Configure Fabric Interconnect B

To configure Fabric Interconnect B, follow these steps:

1. Connect to the console port on the second Cisco UCS 6332 Fabric Interconnect.

2. When prompted to enter the configuration method, enter console to continue.

3. The installer detects the presence of the partner Fabric Interconnect and adds this fabric interconnect to the cluster. Enter y to continue the installation.

4. Enter the admin password that was configured for the first Fabric Interconnect.

5. Enter the Mgmt0 IPv4 address.

6. Answer yes to save the configuration.

7. Wait for the login prompt to confirm that the configuration has been saved.
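Before entering the values at the console prompts, it can help to check that the planned addresses are consistent. The following minimal sketch assumes that the two Mgmt0 addresses, the cluster (virtual) IP address, and the default gateway all belong to the same management subnet and that the three Fabric Interconnect addresses are distinct. The sample addresses are hypothetical.

```python
import ipaddress
from typing import List


def check_fi_addressing(
    mgmt_a: str, mgmt_b: str, cluster_ip: str, netmask: str, gateway: str
) -> List[str]:
    """Return a list of problems found in the planned management addressing."""
    network = ipaddress.ip_network(f"{mgmt_a}/{netmask}", strict=False)
    planned = {
        "mgmt_a": mgmt_a,
        "mgmt_b": mgmt_b,
        "cluster_ip": cluster_ip,
        "gateway": gateway,
    }
    problems = []
    for label, value in planned.items():
        if ipaddress.ip_address(value) not in network:
            problems.append(f"{label} {value} is outside {network}")
    if len({mgmt_a, mgmt_b, cluster_ip}) != 3:
        problems.append("mgmt_a, mgmt_b, and cluster_ip must be distinct")
    return problems


if __name__ == "__main__":
    # Hypothetical addressing plan, for illustration only.
    issues = check_fi_addressing(
        "10.13.1.11", "10.13.1.12", "10.13.1.10", "255.255.255.0", "10.13.1.1"
    )
    print(issues or "addressing plan looks consistent")
```

An empty result means the plan passes these basic checks; it does not verify that the addresses are unused on the network.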

For more information about configuring Cisco UCS 6332 Series Fabric Interconnect, go to:

Log Into Cisco UCS Manager

To log into Cisco UCS Manager, follow these steps:

1. Open a Web browser and navigate to the Cisco UCS 6332 Fabric Interconnect cluster address.

2. Click the Launch link to download the Cisco UCS Manager software.

3. If prompted to accept security certificates, accept as necessary.

4. When prompted, enter admin for the username and enter the administrative password.

5. Click Login to log in to the Cisco UCS Manager.

Upgrade Cisco UCS Manager Software to Version 4.0(2a)

This document assumes the use of Cisco UCS 4.0(2a). Refer to the Cisco UCS 4.0 release documentation to upgrade the Cisco UCS Manager software and the Cisco UCS 6332 Fabric Interconnect software to version 4.0(2a). Also, make sure the Cisco UCS C-Series version 4.0(2a) software bundles are installed on the Fabric Interconnects.

Upgrading Cisco UCS firmware is beyond the scope of this document. However, for complete Cisco UCS Install and Upgrade Guides, go to: https://www.cisco.com/c/en/us/support/servers-unified-computing/ucs-manager/products-installation-guides-list.html

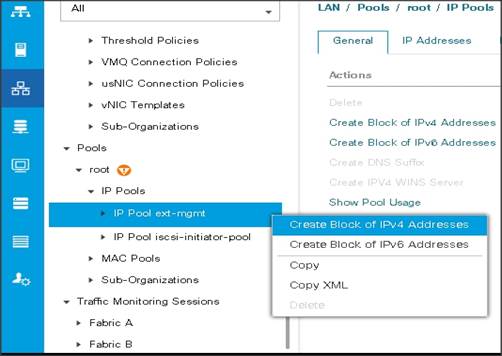

Add a Block of IP Addresses for KVM Access

To create a block of KVM IP addresses for server access in the Cisco UCS environment, follow these steps:

1. Select the LAN tab at the top of the left window.

2. Select Pools > root > IP Pools > IP Pool ext-mgmt.

3. Right-click IP Pool ext-mgmt.

4. Select Create Block of IPv4 Addresses.

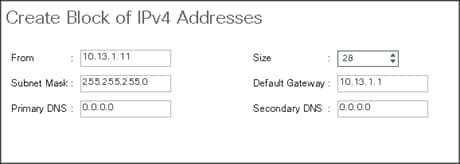

Figure 15 Adding a Block of IPv4 Addresses for KVM Access Part 1

5. Enter the starting IP address of the block and number of IPs needed, as well as the subnet and gateway information.

Figure 16 Adding Block of IPv4 Addresses for KVM Access Part 2

6. Click OK to create the IP block.

7. Click OK in the message box.
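The block parameters entered in step 5 can be checked ahead of time. The following minimal sketch computes the last address of a block from its starting address and size, and verifies that the block stays inside the subnet and does not include the gateway. The sample values are hypothetical; the size of 28 assumes one KVM address per server in this cluster.

```python
import ipaddress
from typing import Tuple


def kvm_ip_block(first_ip: str, size: int, netmask: str, gateway: str) -> Tuple[str, str]:
    """Return (first, last) address of the block after basic validation."""
    if size < 1:
        raise ValueError("size must be at least 1")
    network = ipaddress.ip_network(f"{first_ip}/{netmask}", strict=False)
    first = ipaddress.ip_address(first_ip)
    last = first + (size - 1)
    if last not in network or last == network.broadcast_address:
        raise ValueError(f"block of {size} starting at {first} does not fit in {network}")
    if first <= ipaddress.ip_address(gateway) <= last:
        raise ValueError("gateway address falls inside the block")
    return str(first), str(last)


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(kvm_ip_block("10.13.1.101", 28, "255.255.255.0", "10.13.1.1"))
```

Choosing a block slightly larger than the current server count leaves room for nodes added later.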

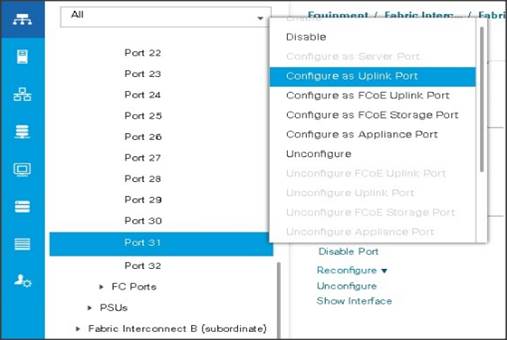

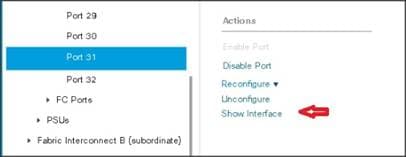

Enable Uplink Ports

To enable uplink ports, follow these steps:

1. Select the Equipment tab on the top left of the window.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary) > Fixed Module.

3. Expand the Unconfigured Ethernet Ports section.

4. Select ports 29-32, which are connected to the uplink switch, right-click, then select Reconfigure > Configure as Uplink Port.

5. Select Show Interface and select 40GB for Uplink Connection.

6. A pop-up window appears to confirm your selection. Click Yes then OK to continue.

7. Select Equipment > Fabric Interconnects > Fabric Interconnect B (subordinate) > Fixed Module.

8. Expand the Unconfigured Ethernet Ports section.

9. Select ports 29-32, which are connected to the uplink switch, right-click, then select Reconfigure > Configure as Uplink Port.

10. Select Show Interface and select 40GB for Uplink Connection.

11. A pop-up window appears to confirm your selection. Click Yes then OK to continue.

Figure 17 Enabling Uplink Ports Part 1

Figure 17 Enabling Uplink Ports Part 2

Figure 18 Enabling Uplink Ports Part 3

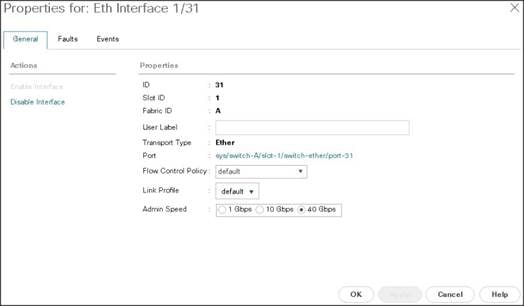

Configure VLANs

VLANs are configured as shown in Table 6.

| VLAN | NIC Port | Function |
| --- | --- | --- |
| VLAN13 | eth0 | Data |

The NIC will carry the data traffic from VLAN13. A single vNIC is used in this configuration and the Fabric Failover feature in Fabric Interconnects will take care of any physical port down issues. It will be a seamless transition from an application perspective.

To configure VLANs in the Cisco UCS Manager GUI, follow these steps:

1. Select the LAN tab in the left pane in the UCSM GUI.

2. Select LAN > LAN Cloud > VLANs.

3. Right-click the VLANs under the root organization.

4. Select Create VLANs to create the VLAN.

Figure 19 Creating a VLAN

5. Enter vlan13 for the VLAN Name.

6. Keep multicast policy as <not set>.

7. Select Common/Global for vlan13.

8. Enter 13 in the VLAN IDs field for the Create VLAN IDs.

9. Click OK and then click Finish.

10. Click OK in the success message box.

Figure 20 Creating VLAN for Data

11. Click OK and then click Finish.

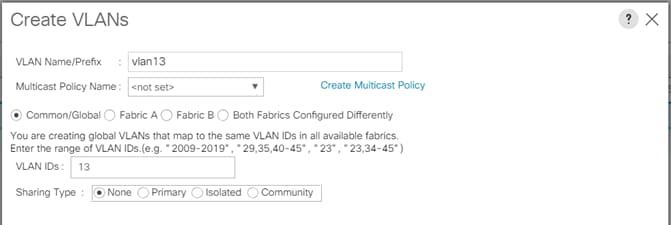

Enable Server Ports

To enable server ports, follow these steps:

1. Select the Equipment tab on the top left of the window.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary) > Fixed Module.

3. Expand the Unconfigured Ethernet Ports section.

4. Select all the ports that are connected to the servers, right-click them, and select Reconfigure > Configure as a Server Port.

5. A pop-up window appears to confirm your selection. Click Yes then OK to continue.

6. Select Equipment > Fabric Interconnects > Fabric Interconnect B (subordinate) > Fixed Module.

7. Expand the Unconfigured Ethernet Ports section.

8. Select all the ports that are connected to the servers, right-click them, and select Reconfigure > Configure as a Server Port.

9. A pop-up window appears to confirm your selection. Click Yes, then OK to continue.

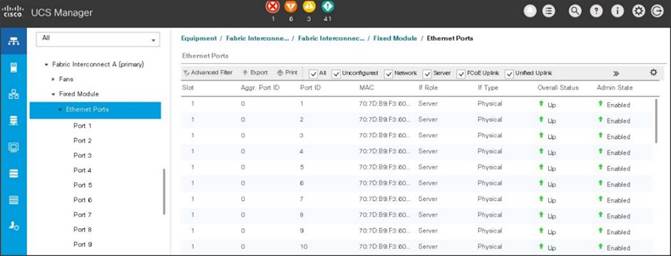

Figure 21 Enabling Server Ports

After server discovery, ports 29-32 are network ports and ports 1-28 are server ports.

Figure 22 Ports Status after the Server Discovery

Create Pools for Service Profile Templates

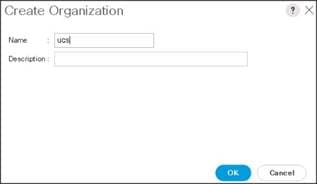

Create an Organization

Organizations are used as a means to arrange and restrict access to various groups within the IT organization, thereby enabling multi-tenancy of the compute resources. This document does not assume the use of Organizations; however, the necessary steps are provided for future reference.

To configure an organization within the Cisco UCS Manager GUI, follow these steps:

1. Click the Quick Action icon in the top right corner of the right pane in the Cisco UCS Manager GUI.

2. Select Create Organization from the options.

3. Enter a name for the organization.

4. (Optional) Enter a description for the organization.

5. Click OK.

6. Click OK in the success message box.

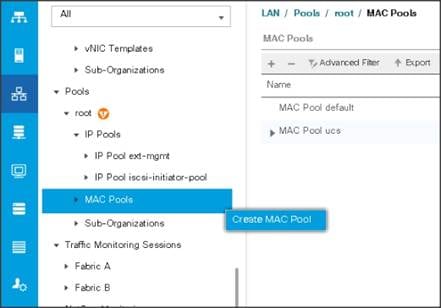

Create MAC Address Pools

To create MAC address pools, follow these steps:

1. Select the LAN tab on the left of the window.

2. Select Pools > root > MAC Pools.

3. Right-click MAC Pools under the root organization.

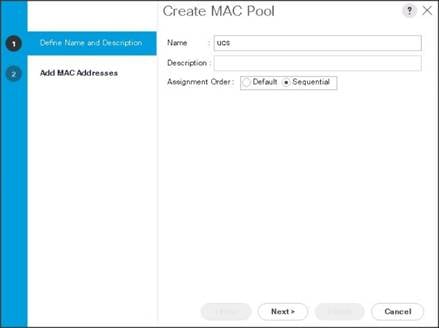

4. Select Create MAC Pool to create the MAC address pool. Enter ucs for the name of the MAC pool.

5. (Optional) Enter a description of the MAC pool.

6. Select Assignment Order Sequential.

7. Click Next.

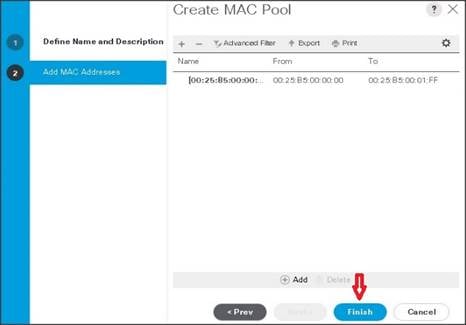

8. Click Add.

9. Specify a starting MAC address.

10. Specify a size of the MAC address pool, which is sufficient to support the available server resources.

11. Click OK.

Figure 23 Specifying first MAC Address and Size

12. Click Finish.

13. When the message box displays, click OK.
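When choosing the starting MAC address and pool size in steps 9 and 10, it can be useful to see the resulting address range. The following minimal sketch computes the first and last address of a pool. The starting address uses the 00:25:B5 prefix only as an example value, and the size of 256 is a hypothetical choice.

```python
from typing import Tuple


def mac_pool_range(first_mac: str, size: int) -> Tuple[str, str]:
    """Return the (first, last) MAC address of a pool of the given size."""
    if size < 1:
        raise ValueError("size must be at least 1")
    first = int(first_mac.replace(":", ""), 16)
    last = first + size - 1
    if last > 0xFFFFFFFFFFFF:
        raise ValueError("pool runs past the end of the MAC address space")

    def fmt(value: int) -> str:
        raw = f"{value:012X}"
        return ":".join(raw[i:i + 2] for i in range(0, 12, 2))

    return fmt(first), fmt(last)


if __name__ == "__main__":
    # Example starting address and size, for illustration only.
    print(mac_pool_range("00:25:B5:00:00:00", 256))
```

Each vNIC consumes one address from the pool, so the size should cover all vNICs across the servers that will use it.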

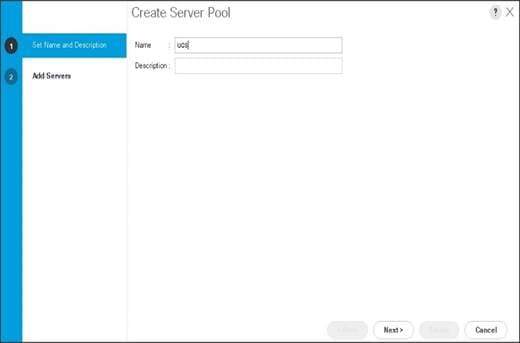

Create a Server Pool

A server pool contains a set of servers. These servers typically share the same characteristics. Those characteristics can be their location in the chassis, or an attribute such as server type, amount of memory, local storage, type of CPU, or local drive configuration. You can manually assign a server to a server pool or use server pool policies and server pool policy qualifications to automate the assignment.

To configure the server pool within the Cisco UCS Manager GUI, follow these steps:

1. Select the Servers tab in the left pane in the Cisco UCS Manager GUI.

2. Select Pools > root.

3. Right-click the Server Pools.

4. Select Create Server Pool.

5. Enter your required name (ucs) for the Server Pool in the name text box.

6. (Optional) Enter a description for the server pool.

7. Click Next > to add the servers.

8. Select all the Cisco UCS C240 M5 servers to be added to the server pool that was previously created (ucs), then click >> to add them to the pool.

9. Click Finish.

10. Click OK and then click Finish.

Create Policies for Service Profile Templates

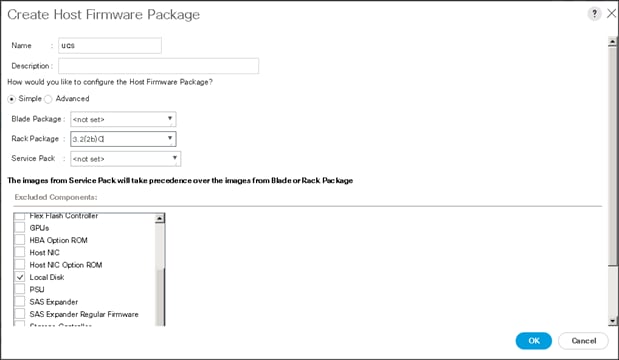

Create Host Firmware Package Policy

Firmware management policies allow the administrator to select the corresponding packages for a given server configuration. These include adapters, BIOS, board controllers, FC adapters, HBA options, and storage controller properties as applicable.

To create a firmware management policy for a given server configuration using the Cisco UCS Manager GUI, follow these steps:

1. Select the Servers tab in the left pane in the UCS Manager GUI.

2. Select Policies > root.

3. Right-click Host Firmware Packages.

4. Select Create Host Firmware Package.

5. Enter the required Host Firmware package name (ucs).

6. Select Simple radio button to configure the Host Firmware package.

7. Select the appropriate Rack package that has been installed.

8. Click OK to complete creating the management firmware package.

9. Click OK.

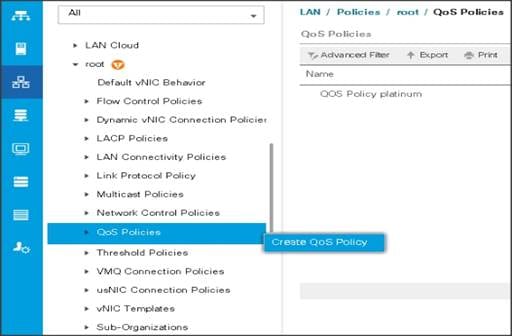

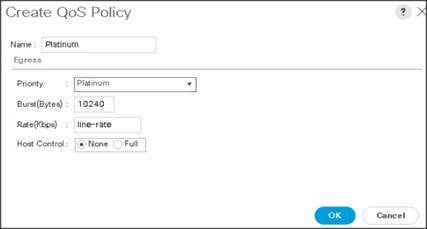

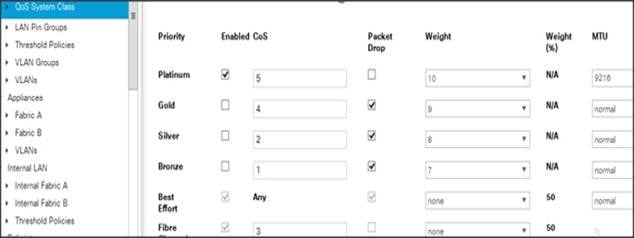

Create QoS Policies

To create the QoS policy for a given server configuration using the Cisco UCS Manager GUI, follow these steps:

Platinum Policy

1. Select the LAN tab in the left pane in the Cisco UCS Manager GUI.

2. Select Policies > root.

3. Right-click QoS Policies.

4. Select Create QoS Policy.

5. Enter Platinum as the name of the policy.

6. Select Platinum from the drop-down list.

7. Keep the Burst(Bytes) field set to default (10240).

8. Keep the Rate(Kbps) field set to default (line-rate).

9. Keep the Host Control radio button set to default (none).

10. When the pop-up window appears, click OK to complete the creation of the Policy.

Set Jumbo Frames

To set Jumbo frames and enable QoS, follow these steps:

1. Select the LAN tab in the left pane in the Cisco UCS Manager GUI.

2. Select LAN Cloud > QoS System Class.

3. In the right pane, select the General tab.

4. In the Platinum row, enter 9216 for MTU.

5. Check the Enabled check box next to Platinum.

6. In the Best Effort row, select none for weight.

7. In the Fibre Channel row, select none for weight.

8. Click Save Changes.

9. Click OK.
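The MTU entered here interacts with the vNIC MTU chosen later in the service profile template (9000 for eth0 in this guide): a vNIC MTU larger than the MTU of its QoS system class can lead to dropped packets. The Python sketch below only illustrates that comparison. The function name and the dictionary are hypothetical and are not part of Cisco UCS Manager.

```python
# Hypothetical helper: compare a vNIC MTU against the MTU of its QoS system class.
# Values come from this guide: Platinum system class MTU 9216, vNIC eth0 MTU 9000.

QOS_SYSTEM_CLASS_MTU = {"Platinum": 9216}


def vnic_mtu_fits(vnic_mtu: int, qos_class: str) -> bool:
    """Return True if the vNIC MTU does not exceed the system class MTU."""
    return vnic_mtu <= QOS_SYSTEM_CLASS_MTU[qos_class]


if __name__ == "__main__":
    # eth0 in this guide uses MTU 9000 with the Platinum QoS policy.
    print(vnic_mtu_fits(9000, "Platinum"))
```

The same comparison applies to any other system class that is enabled; only the Platinum value is taken from this document.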

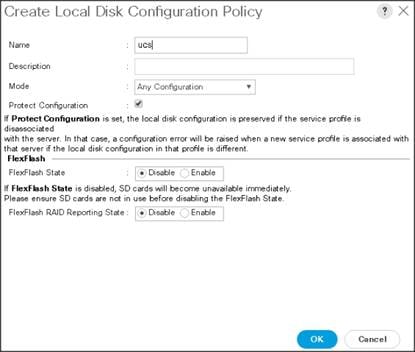

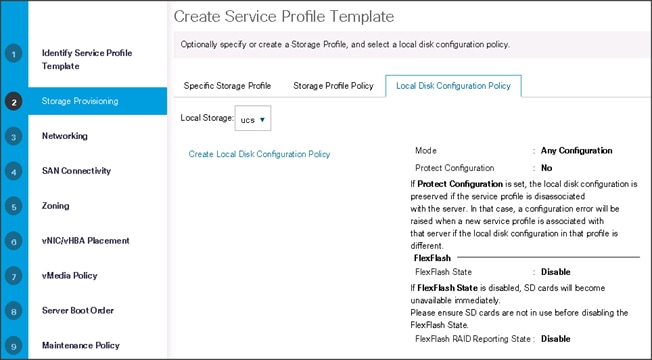

Create the Local Disk Configuration Policy

To create local disk configuration in the Cisco UCS Manager GUI, follow these steps:

1. Select the Servers tab on the left pane in the Cisco UCS Manager GUI.

2. Go to Policies > root.

3. Right-click Local Disk Config Policies.

4. Select Create Local Disk Configuration Policy.

5. Enter ucs as the local disk configuration policy name.

6. Change the Mode to Any Configuration. Check the Protect Configuration box.

7. Keep the FlexFlash State field as default (Disable).

8. Keep the FlexFlash RAID Reporting State field as default (Disable).

9. Click OK to complete the creation of the Local Disk Configuration Policy.

10. Click OK.

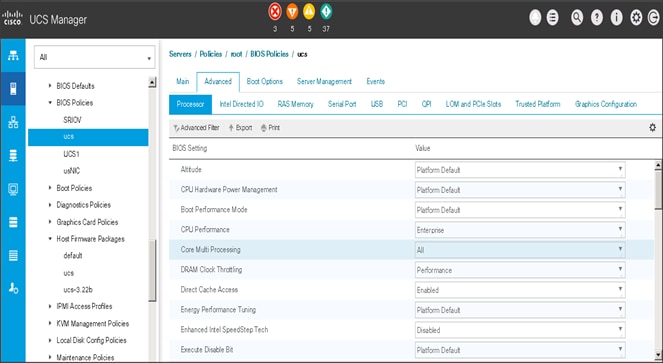

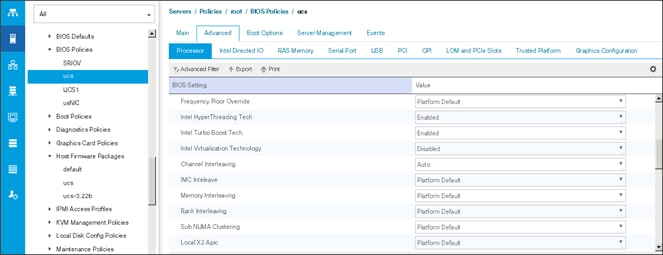

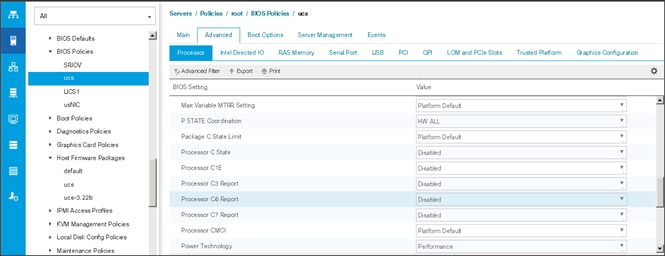

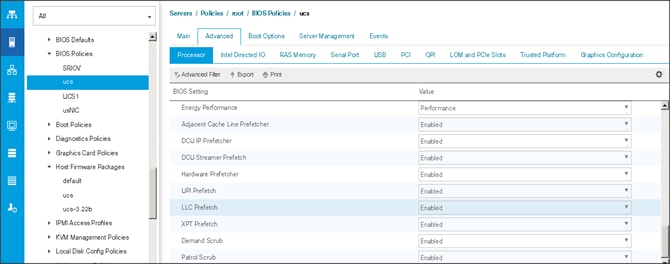

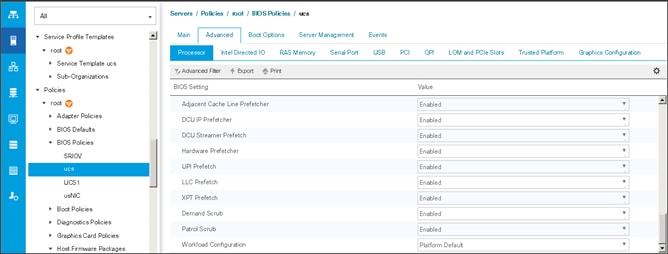

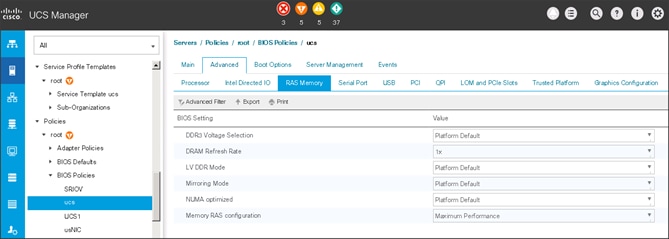

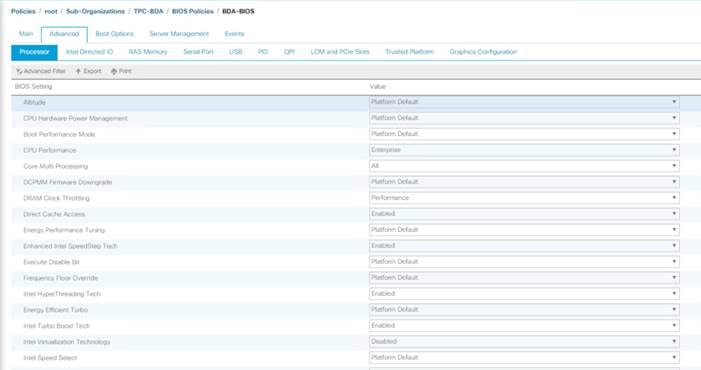

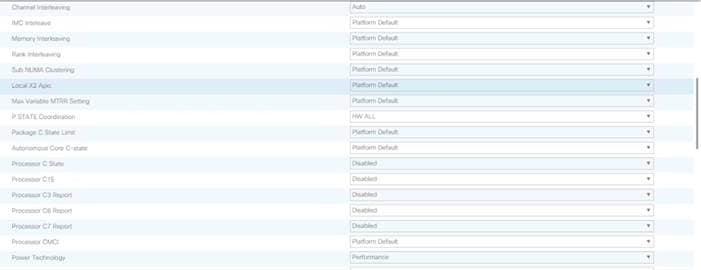

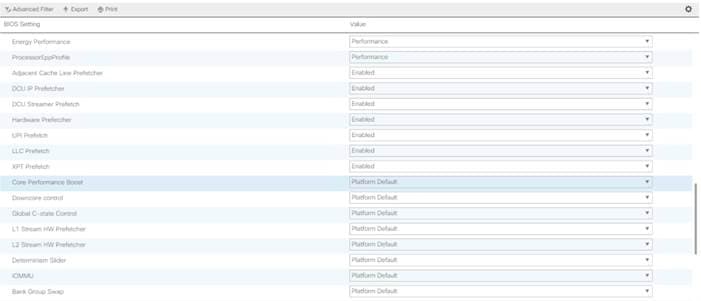

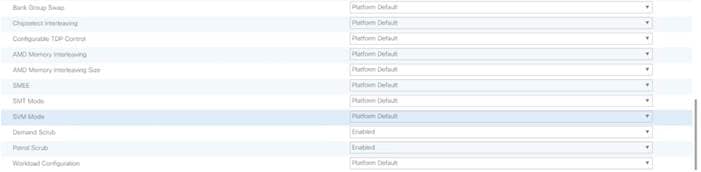

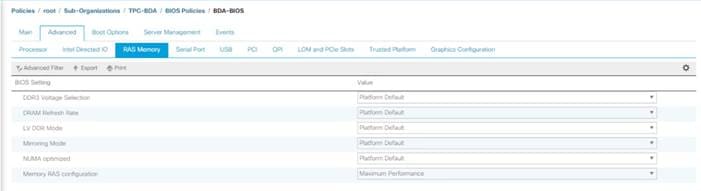

Create the Server BIOS Policy

The BIOS policy feature in Cisco UCS automates the BIOS configuration process. The traditional method of setting the BIOS is manual and often error-prone. Creating a BIOS policy and assigning it to a server or group of servers makes the BIOS settings configuration consistent and transparent.

BIOS settings can have a significant performance impact, depending on the workload and the applications. The BIOS settings listed in this section are optimized for best performance and can be adjusted based on the application, performance, and energy efficiency requirements.

To create a server BIOS policy using the Cisco UCS Manager GUI, follow these steps:

1. Select the Servers tab in the left pane in the UCS Manager GUI.

2. Select Policies > root.

3. Right-click BIOS Policies.

4. Select Create BIOS Policy.

5. Enter your preferred BIOS policy name (ucs).

6. Change the BIOS settings as shown in the following figures.

7. Change only the Processor and RAS Memory settings; no other changes are needed.

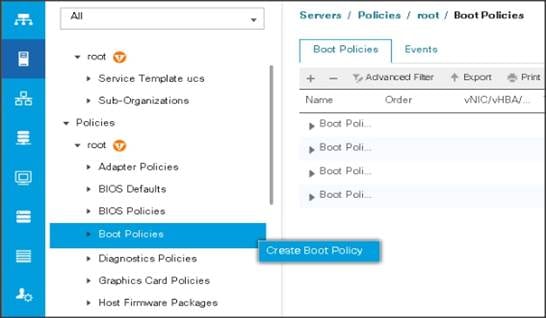

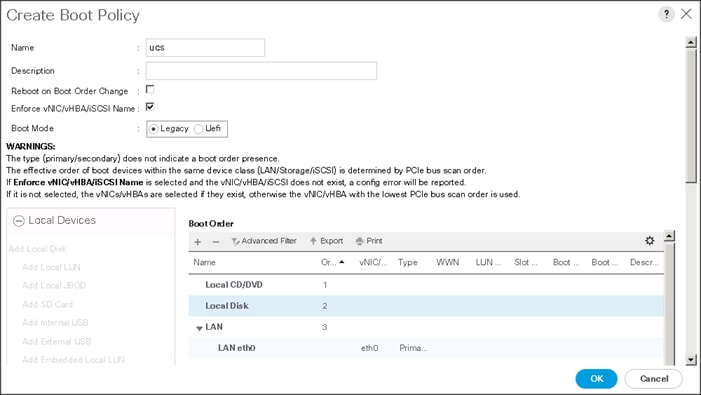

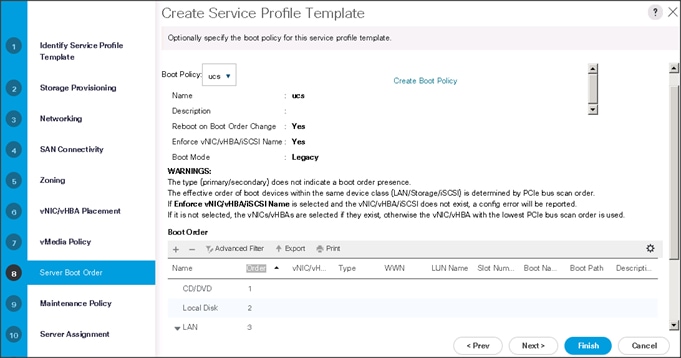

Create the Boot Policy

To create boot policies within the Cisco UCS Manager GUI, follow these steps:

1. Select the Servers tab in the left pane in the UCS Manager GUI.

2. Select Policies > root.

3. Right-click Boot Policies.

4. Select Create Boot Policy.

5. Enter ucs as the boot policy name.

6. (Optional) Enter a description for the boot policy.

7. Keep the Reboot on Boot Order Change check box unchecked.

8. Keep the Enforce vNIC/vHBA/iSCSI Name check box checked.

9. Keep the Boot Mode at the default (Legacy).

10. Expand Local Devices > Add CD/DVD and select Add Local CD/DVD.

11. Expand Local Devices and select Add Local Disk.

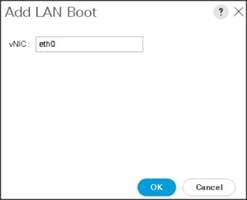

12. Expand vNICs and select Add LAN Boot and enter eth0.

13. Click OK to add the Boot Policy.

14. Click OK.

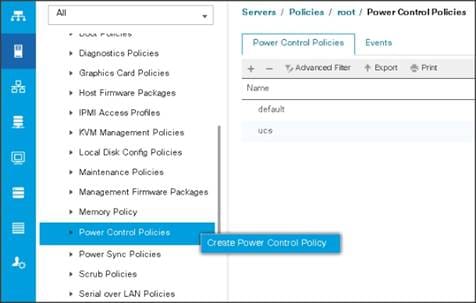

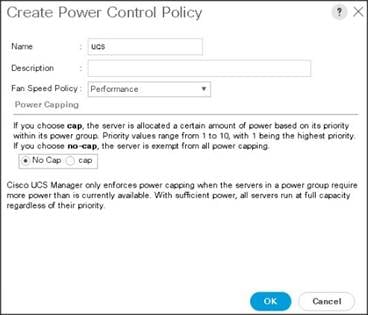

Create Power Control Policy

To create Power Control policies within the Cisco UCS Manager GUI, follow these steps:

1. Select the Servers tab in the left pane in the Cisco UCS Manager GUI.

2. Select Policies > root.

3. Right-click Power Control Policies.

4. Select Create Power Control Policy.

5. Enter ucs as the Power Control policy name.

6. (Optional) Enter a description for the power control policy.

7. Select Performance for Fan Speed Policy.

8. Select No cap for Power Capping selection.

9. Click OK to create the Power Control Policy.

10. Click OK.

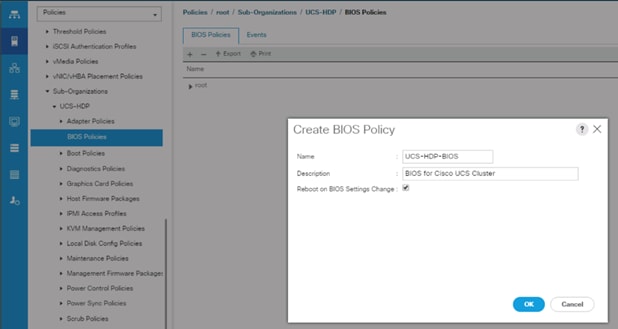

Create Server BIOS Policy

To create a server BIOS policy for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Sub-Organization > UCS-HDP > BIOS Policies.

3. Right-click BIOS Policies.

4. Select Create BIOS Policy.

5. Enter C240M5-BIOS as the BIOS policy name.

Figure 24 BIOS Configuration

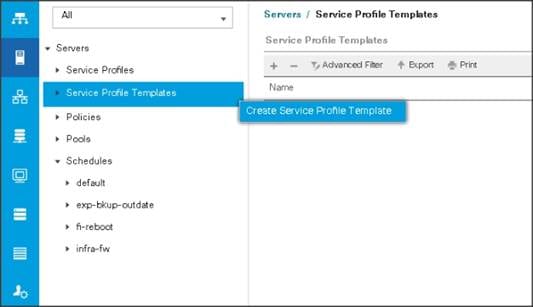

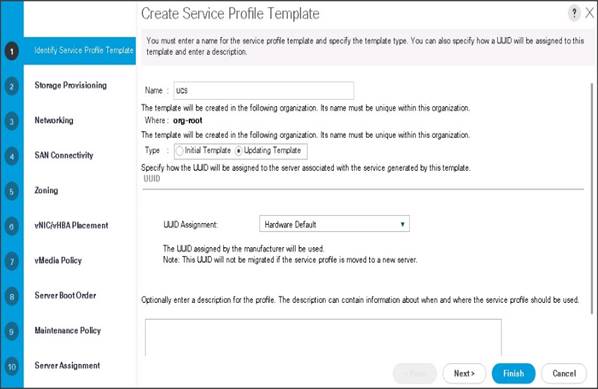

Create Service Profile Template

To create the Service Profile Template, follow these steps:

1. Select the Servers tab in the left pane in the Cisco UCS Manager GUI.

2. Right-click Service Profile Templates.

3. Select Create Service Profile Template.

The Create Service Profile Template window appears.

To identify the service profile template, follow these steps:

1. Name the service profile template as ucs. Select the Updating Template radio button.

2. In the UUID section, select Hardware Default as the UUID pool.

3. Click Next to continue to the next section.

Configure the Storage Provisioning for the Template

To configure storage policies, follow these steps:

1. Go to the Local Disk Configuration Policy tab and select ucs for the Local Storage.

2. Click Next to continue to the next section.

3. Click Next once the Networking window appears to go to the next section.

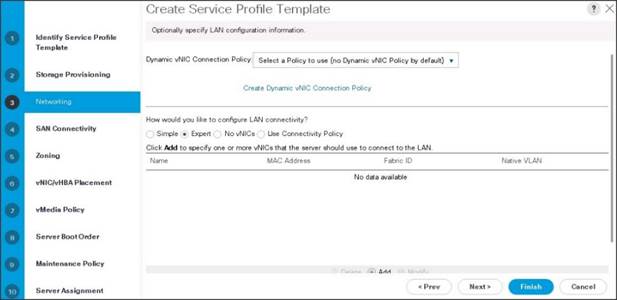

Configure Network Settings for the Template

To configure the network settings for the templates, follow these steps:

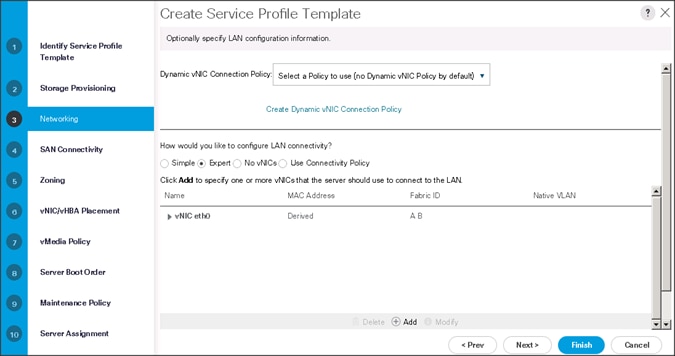

1. Keep the Dynamic vNIC Connection Policy field at the default.

2. Select the Expert radio button for the option "How would you like to configure LAN connectivity?"

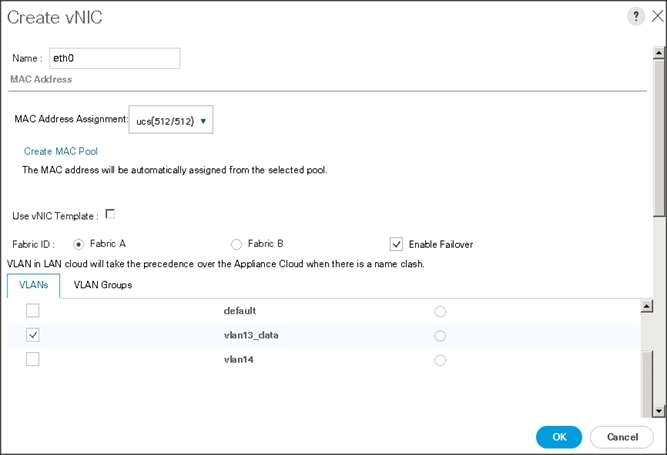

3. Click Add to add a vNIC to the template.

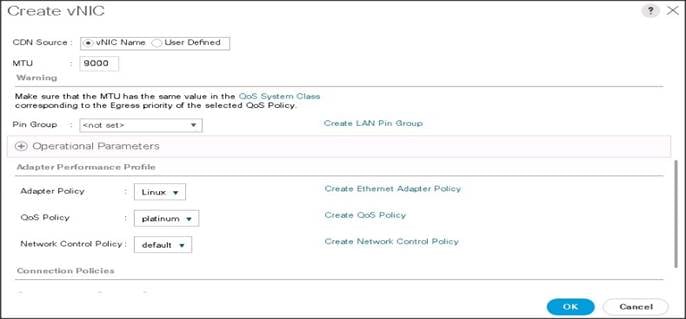

4. The Create vNIC window displays. Name the vNIC as eth0.

5. Select ucs in the MAC Address Assignment pool.

6. Select the Fabric A radio button and check the Enable failover check box for the Fabric ID.

7. Check the VLAN13 check box for VLANs and select the Native VLAN radio button.

8. Select MTU size as 9000.

9. Select adapter policy as Linux.

10. Select QoS Policy as Platinum.

11. Keep the Network Control Policy as Default.

12. Click OK.

Optionally, network bonding can be set up on the vNICs of each host for redundancy as well as for increased throughput.

13. Click Next to continue with SAN Connectivity.

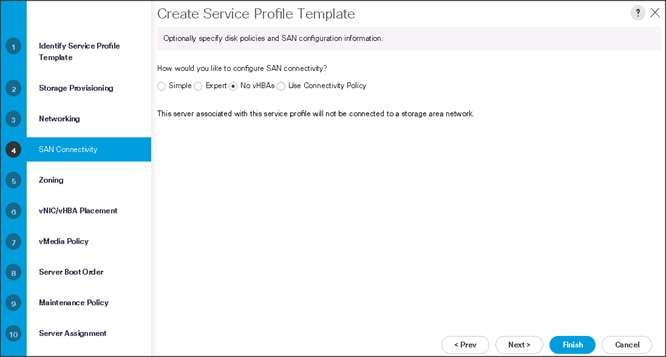

14. Select No vHBAs for the option "How would you like to configure SAN connectivity?"

15. Click Next to continue with Zoning.

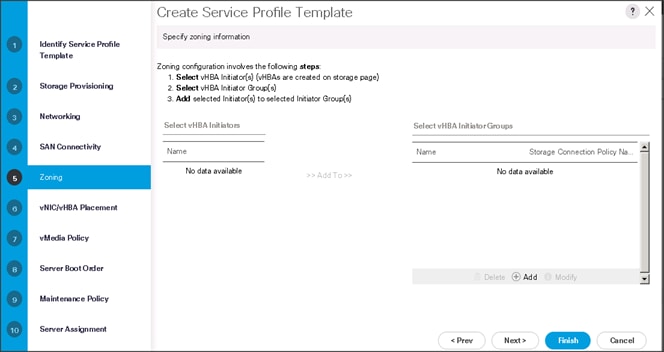

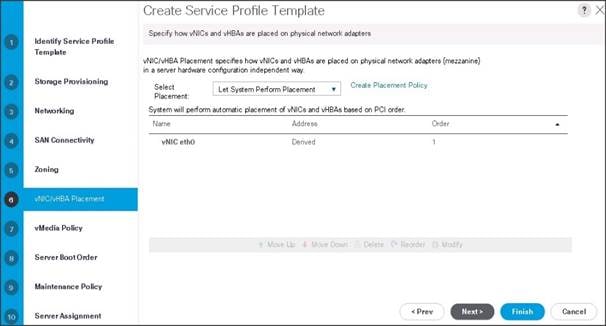

16. Click Next to continue with vNIC/vHBA placement.

17. Click Next to configure vMedia Policy.

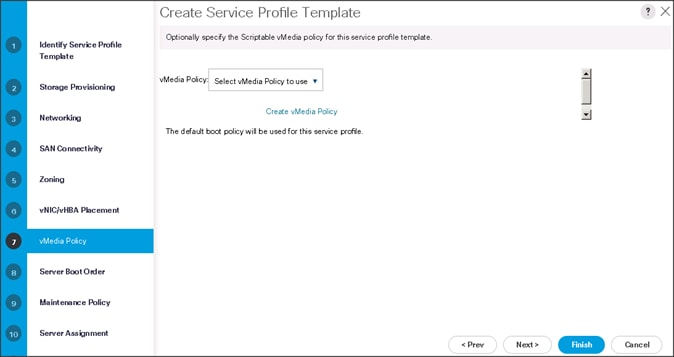

Configure the vMedia Policy for the Template

To configure the vMedia policy for the template, follow these steps:

1. Click Next once the vMedia Policy window appears to go to the next section.

Configure the Server Boot Order for the Template

To set the boot order for the servers, follow these steps:

1. Select ucs in the Boot Policy name field.

2. Review to make sure that all of the boot devices were created and identified.

3. Verify that the boot devices are in the correct boot sequence.

4. Click OK.

5. Click Next to continue to the next section.

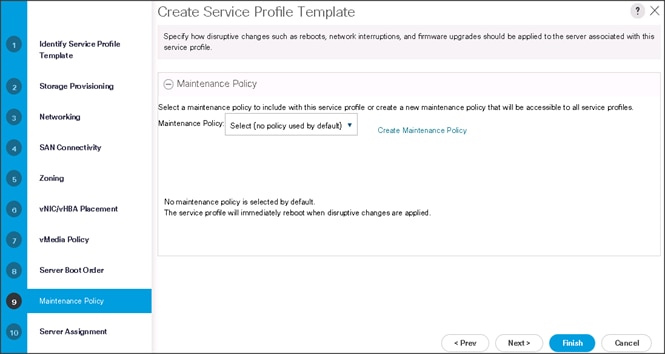

6. In the Maintenance Policy window, apply the maintenance policy.

7. Keep the Maintenance Policy at the default (no policy used). Click Next to continue to the next section.

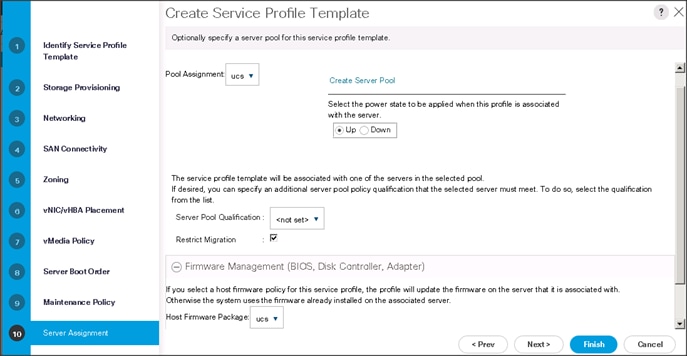

Configure the Server Assignment for the Template

To assign the servers to the pool, in the Server Assignment window, follow these steps:

1. Select ucs for the Pool Assignment field.

2. Select the power state to be Up.

3. Keep the Server Pool Qualification field set to <not set>.

4. Check the Restrict Migration check box.

5. Select ucs in Host Firmware Package.

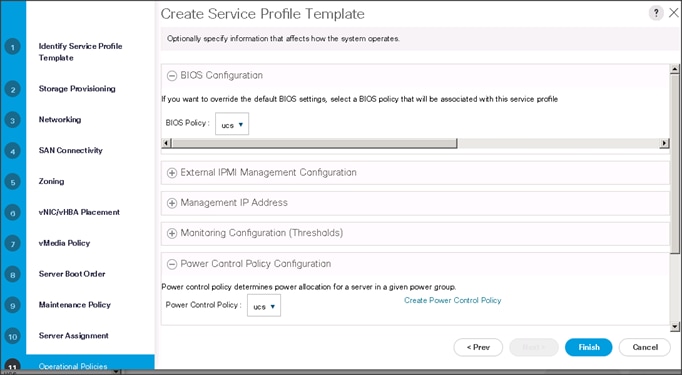

Configure the Operational Policies for the Template

To configure the operational policies for the template, in the Operational Policies window, follow these steps:

1. Select ucs in the BIOS Policy field.

2. Select ucs in the Power Control Policy field.

3. Click Finish to create the Service Profile template.

4. Click OK in the pop-up window to proceed.

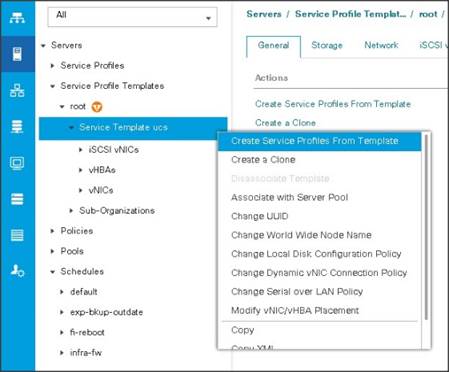

5. Select the Servers tab in the left pane of the Cisco UCS Manager GUI.

6. Go to Service Profile Templates > root.

7. Right-click Service Profile Templates ucs.

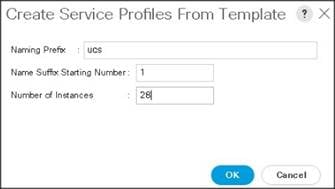

8. Select Create Service Profiles From Template.

The Create Service Profiles from Template window appears.

Association of the Service Profiles will take place automatically.

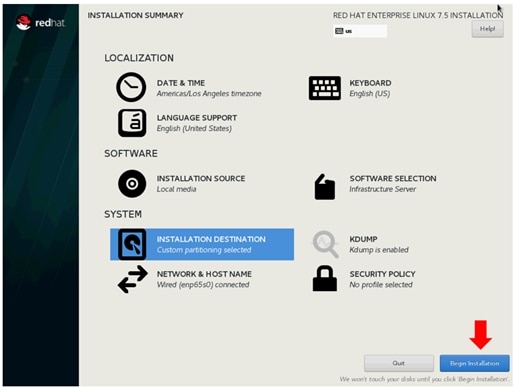
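The service profile template ties together the objects created in the preceding steps, so a mistyped or missing name only surfaces when the wizard is run. As a rough planning aid, the Python sketch below records which objects this section creates and which names the template selects, then reports any reference that does not resolve. The dictionary keys and the function name are hypothetical labels chosen for illustration; only the object names (ucs, Platinum) come from this guide, and the sketch does not talk to Cisco UCS Manager.

```python
# Objects created in this section, grouped by kind (names taken from the steps).
CREATED = {
    "server_pool": {"ucs"},
    "host_firmware_package": {"ucs"},
    "qos_policy": {"Platinum"},
    "local_disk_config_policy": {"ucs"},
    "bios_policy": {"ucs"},
    "boot_policy": {"ucs"},
    "power_control_policy": {"ucs"},
}

# Names the service profile template "ucs" selects on each wizard page.
TEMPLATE_REFS = {
    "local_disk_config_policy": "ucs",
    "qos_policy": "Platinum",
    "boot_policy": "ucs",
    "server_pool": "ucs",
    "host_firmware_package": "ucs",
    "bios_policy": "ucs",
    "power_control_policy": "ucs",
}


def missing_references(refs, created):
    """Return the kinds whose selected name was never created, sorted by kind."""
    return sorted(
        kind for kind, name in refs.items() if name not in created.get(kind, set())
    )


if __name__ == "__main__":
    print(missing_references(TEMPLATE_REFS, CREATED))
```

Pools defined elsewhere in this guide, such as the MAC address pool selected for eth0, are left out of the sketch and would need their own entries.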

Install Red Hat Enterprise Linux 7.5

This section provides detailed procedures to install Red Hat Enterprise Linux 7.5 using software RAID (OS-based mirroring) on Cisco UCS C240 M5 servers. There are multiple ways to install the Red Hat Linux operating system. The installation procedure described in this deployment guide uses the KVM console and virtual media from Cisco UCS Manager.

This installation requires RHEL 7.5 DVD/ISO.

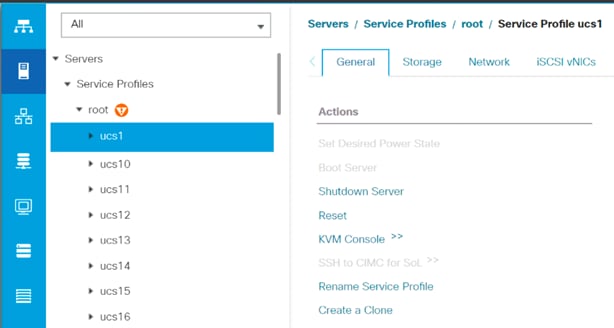

To install the Red Hat Linux 7.5 operating system, follow these steps:

1. Log in to the Cisco UCS 6332 Fabric Interconnect and launch the Cisco UCS Manager application.

2. Select the Equipment tab.

3. In the navigation pane, expand Rack-Mounts and then Servers.

4. In the right pane, click the KVM Console >>.

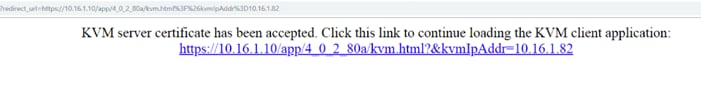

5. Click OK on the KVM Console – Select IP address pop-up window.

6. Click the link to launch the KVM console.

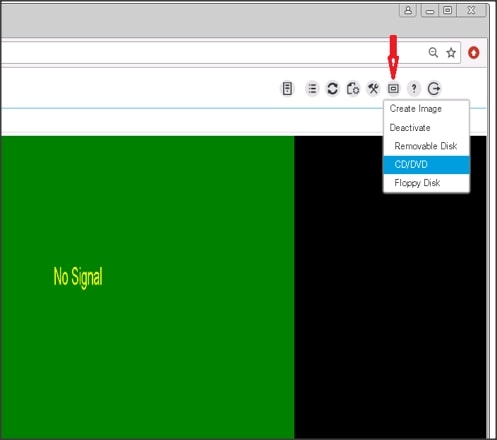

7. Move the cursor to the top right corner and select the Virtual Media tab.

8. Click Activate Virtual Devices in the Virtual Media tab.

9. Click the Virtual Media tab again and select CD/DVD.

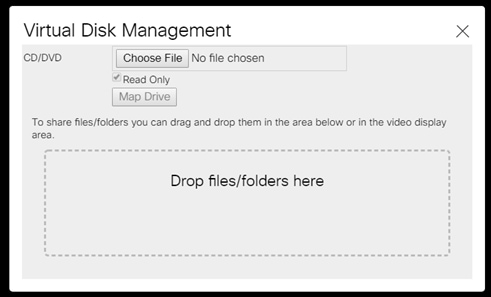

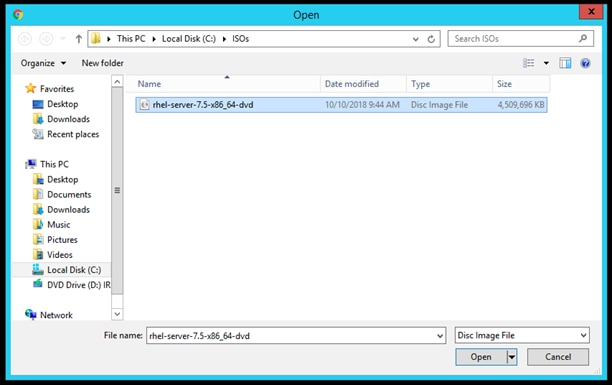

10. Select Choose File in the Virtual Disk Management window.

11. Browse to the Red Hat Enterprise Linux Server 7.5 installer ISO image file.

The Red Hat Enterprise Linux 7.5 DVD is assumed to be on the client machine.

12. Click Open to add the image to the list of virtual media.

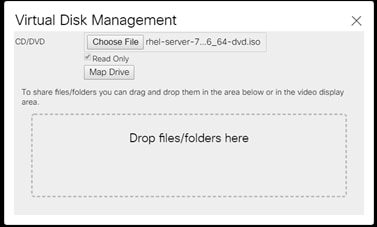

13. Click Map Drive after selecting the .iso file.

14. In the KVM window, select the KVM tab to monitor during boot.

15. In the KVM window, select the Macros > Static Macros > Ctrl-Alt-Del button in the upper left corner.

16. Click OK.

17. Click OK to reboot the system.

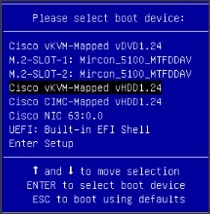

18. Press the F6 key on the keyboard to select the install media.

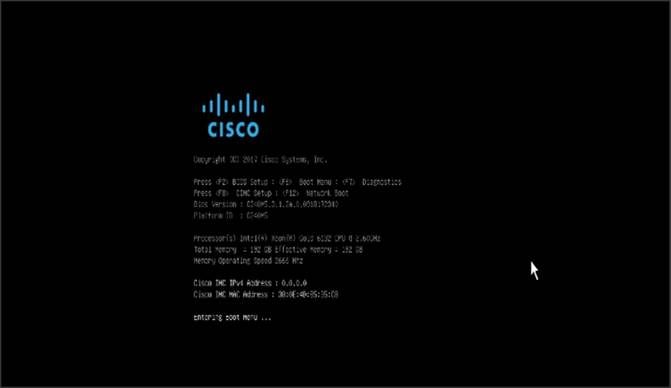

Press F6 on your keyboard as soon as the screen below appears, to prevent the server from rebooting again.

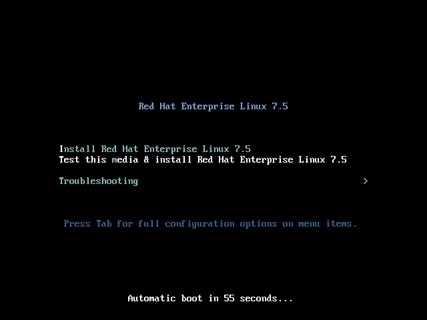

19. On reboot, the machine detects the presence of the Red Hat Enterprise Linux Server 7.5 install media.

20. Select Install Red Hat Enterprise Linux 7.5.

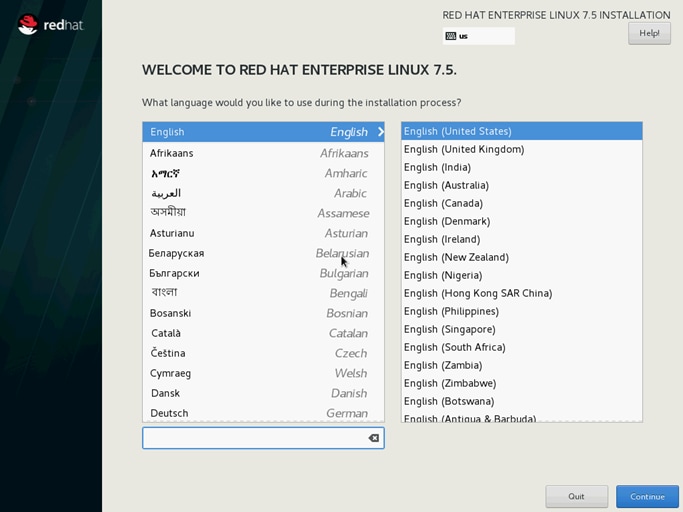

21. Skip the media test and start the installation. Select the installation language and click Continue.

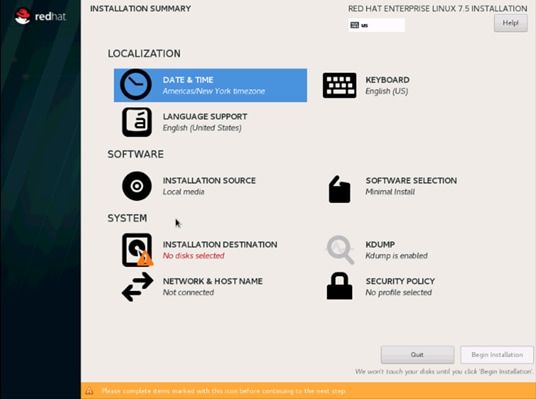

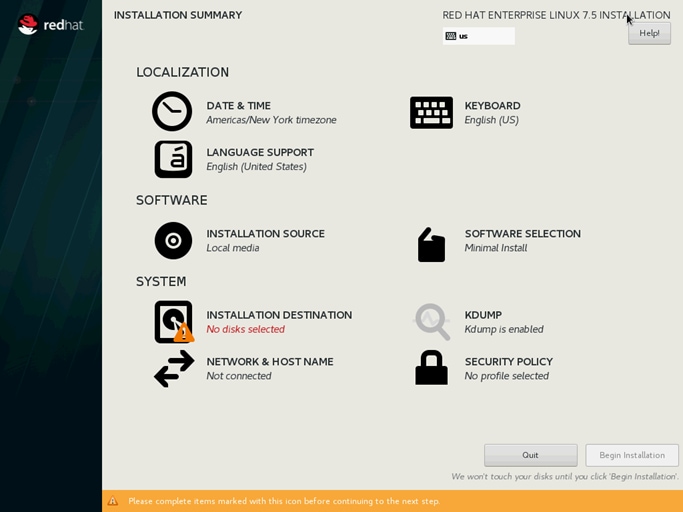

22. Select Date and time, which opens another window as shown below.

23. Select the location on the map, set the time, and click Done.

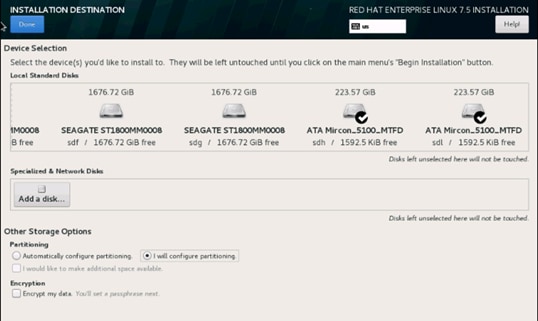

24. Click INSTALLATION DESTINATION.

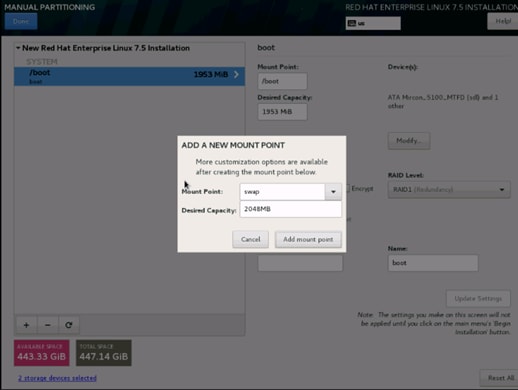

25. A new window opens showing the boot disks. Select the boot disks, choose I will configure partitioning, and click Done.

26. A new window opens for creating the partitions. Click the + sign to add a new partition as shown below: a boot partition of size 2048 MB.

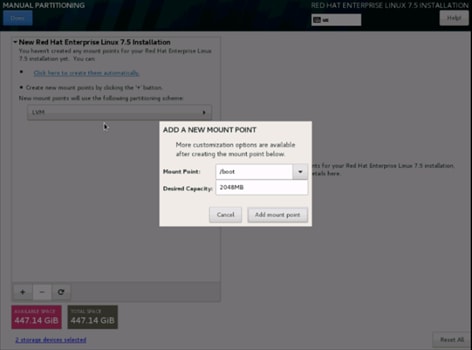

27. Click Add mount point to add the partition.

28. Change the Device type to RAID and make sure the RAID Level is RAID1 (Redundancy) and click Update Settings to save the changes.

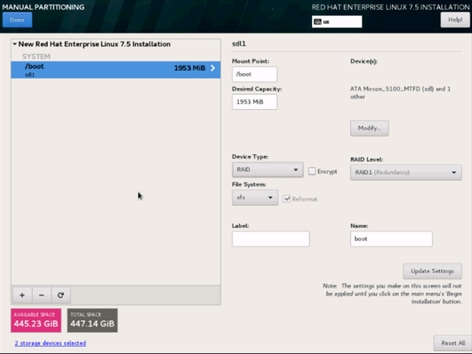

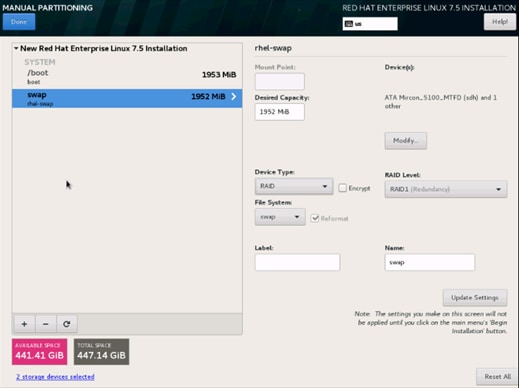

29. Click the + sign to create the swap partition of size 2048 MB as shown below.

30. Change the Device type to RAID and RAID level to RAID1 (Redundancy) and click Update Settings.

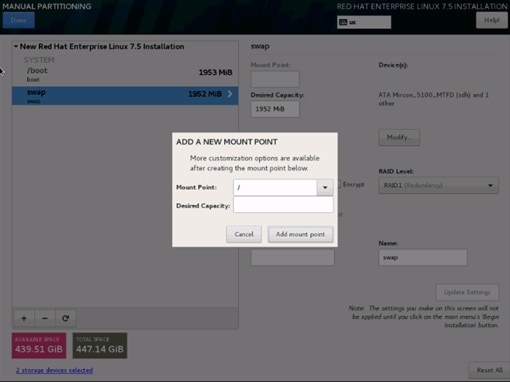

31. Click + to add the / partition. Leave the size empty so that it uses the remaining capacity, then click Add mount point.

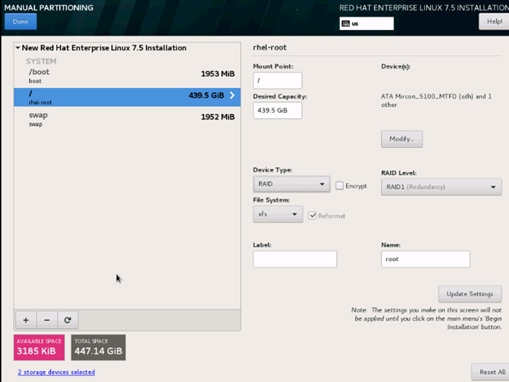

32. Change the Device type to RAID and RAID level to RAID1 (Redundancy). Click Update Settings.

33. Click Done to return to the main screen and continue the Installation.

34. Click SOFTWARE SELECTION.

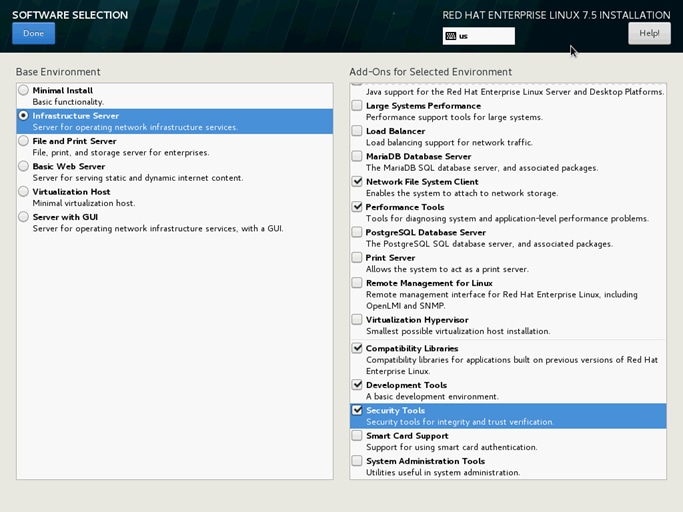

35. Select Infrastructure Server and select the Add-Ons as noted below. Click Done.

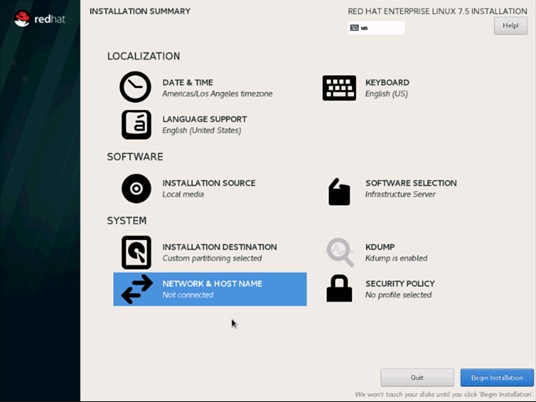

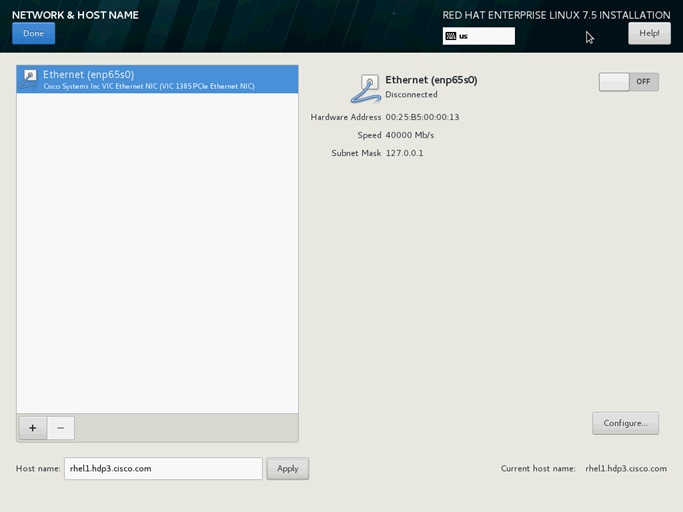

36. Click NETWORK & HOSTNAME and configure Hostname and Networking for the Host.

37. Type in the hostname as shown below.

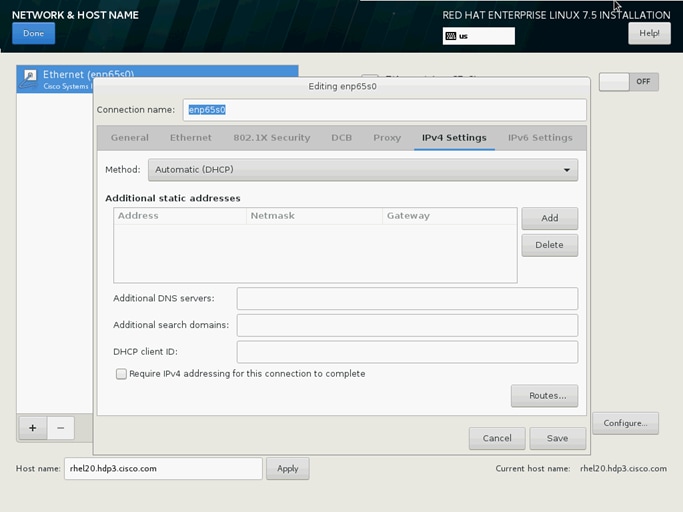

38. Click Configure to open the Network Connectivity window. Click IPv4 Settings.

39. Change the Method to Manual and click Add to enter the IP Address, Netmask, and Gateway details.

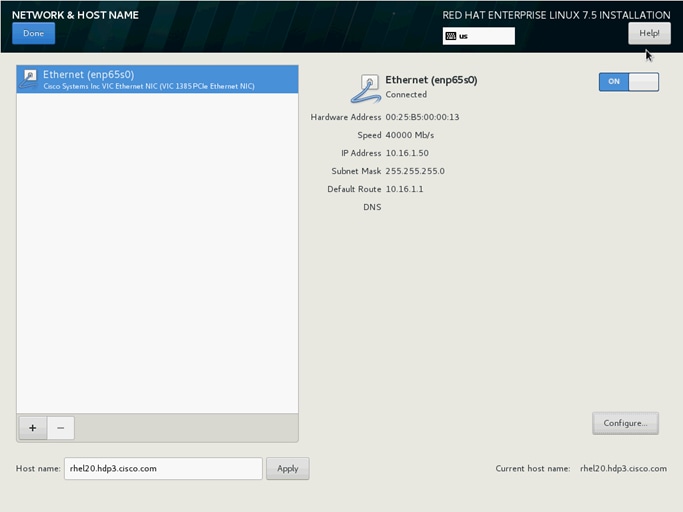

40. Click Save, update the hostname, and turn Ethernet ON. Click Done to return to the main menu.

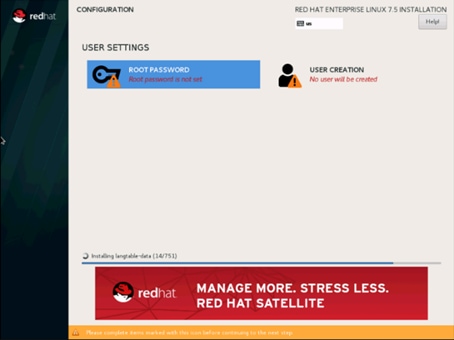

41. Click Begin Installation on the Main menu.

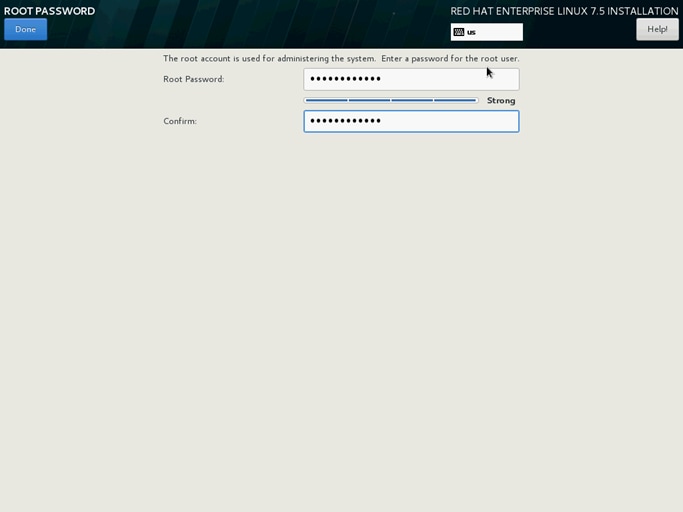

42. Select Root Password in the User Settings.

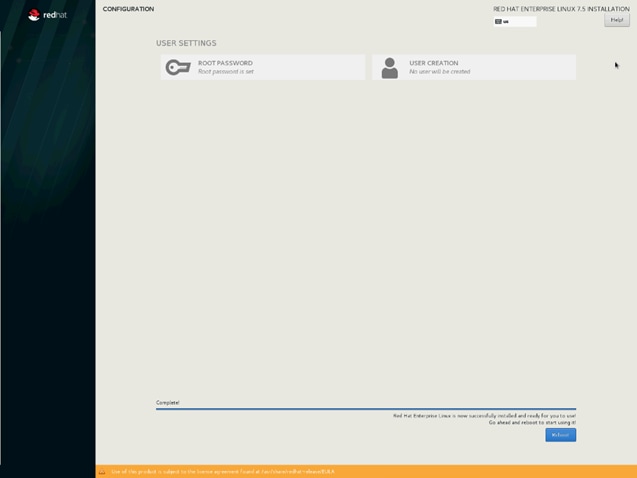

43. Enter the Root Password and click Done.

44. When the installation is complete, reboot the system.

45. Repeat the steps to install Red Hat Enterprise Linux 7.5 on the remaining servers.

The OS installation and configuration of the nodes described above can be automated through PXE boot or third-party tools.
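If the installation is automated, the manual partitioning steps above (a 2048 MB boot partition, a 2048 MB swap partition, and a / partition that takes the remaining space, each as RAID1) could be expressed as kickstart storage directives. The Python sketch below prints one possible set of such lines using the kickstart `part` and `raid` commands. It is an illustration under assumptions that do not come from this guide: the two boot drives are named sda and sdb, the file system is xfs, and the RAID device names are boot, swap, and root. The function name is hypothetical, and the output should be reviewed against the actual hardware before use.

```python
# Sketch: emit kickstart storage lines for the software RAID1 layout described
# in the installation steps. Nothing here touches any disk; it only builds text.

DISKS = ("sda", "sdb")   # ASSUMPTION: names of the two mirrored boot drives
FSTYPE = "xfs"           # ASSUMPTION: file system type, not stated in the guide

# (mount point, size in MB or None to use the remaining space, RAID device name)
LAYOUT = [
    ("/boot", 2048, "boot"),
    ("swap", 2048, "swap"),
    ("/", None, "root"),
]


def kickstart_raid_lines(layout=LAYOUT, disks=DISKS, fstype=FSTYPE):
    """Return kickstart 'part' and 'raid' lines, one RAID1 array per entry."""
    lines = []
    for index, (mount, size_mb, device) in enumerate(layout, start=1):
        members = []
        for disk_no, disk in enumerate(disks, start=1):
            member = f"raid.{index}{disk_no}"
            members.append(member)
            # A partition without a fixed size grows to fill the disk.
            size_opts = "--size=1 --grow" if size_mb is None else f"--size={size_mb}"
            lines.append(f"part {member} {size_opts} --ondisk={disk}")
        fs = "swap" if mount == "swap" else fstype
        lines.append(
            f"raid {mount} --level=1 --device={device} --fstype={fs} "
            + " ".join(members)
        )
    return lines


if __name__ == "__main__":
    print("\n".join(kickstart_raid_lines()))
```

Each entry produces one `part` line per disk followed by a `raid` line that mirrors the two members, which corresponds to choosing RAID1 (Redundancy) for every mount point in the installer.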

The hostnames and their corresponding IP addresses are shown in Table 7.

Table 7 Hostnames and IP Addresses

| Hostname | eth0 |
|---|---|
| rhel1 | 10.16.1.31 |
| rhel2 | 10.16.1.32 |
| rhel3 | 10.16.1.33 |
| rhel4 | 10.16.1.34 |
| rhel5 | 10.16.1.35 |
| rhel6 | 10.16.1.36 |
| rhel7 | 10.16.1.37 |
| rhel8 | 10.16.1.38 |
| rhel9 | 10.16.1.39 |
| rhel10 | 10.16.1.40 |
| rhel11 | 10.16.1.41 |
| rhel12 | 10.16.1.42 |
| rhel13 | 10.16.1.43 |
| rhel14 | 10.16.1.44 |
| rhel15 | 10.16.1.45 |
| rhel16 | 10.16.1.46 |
| … | … |
| rhel24 | 10.16.1.54 |
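The addressing in Table 7 follows a simple pattern: node rhelN receives the Nth address counting from 10.16.1.31, up to rhel24 at 10.16.1.54. The Python sketch below reproduces that plan with the standard `ipaddress` module, which can help when preparing host entries for all 24 nodes. The function name and its parameters are hypothetical; the starting address, node count, and hostname prefix are taken from the table.

```python
import ipaddress

FIRST_IP = ipaddress.IPv4Address("10.16.1.31")  # rhel1 in Table 7
NODE_COUNT = 24                                  # rhel1 through rhel24


def host_plan(first_ip=FIRST_IP, count=NODE_COUNT, prefix="rhel"):
    """Return (hostname, IPv4 address string) pairs in Table 7 order."""
    return [(f"{prefix}{n}", str(first_ip + (n - 1))) for n in range(1, count + 1)]


if __name__ == "__main__":
    for hostname, ip in host_plan():
        # Address first, then name: the column order used by /etc/hosts.
        print(f"{ip} {hostname}")
```

Changing `count` or `first_ip` adapts the plan to a cluster of a different size or subnet; the values shown only mirror this design.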

Multi-homing configuration is not recommended in this design, so please assign only one network interface on each host.

For simplicity, outbound NAT is configured for internet access when needed, for example to reach public repositories or the Red Hat Content Delivery Network. However, configuring outbound NAT is beyond the scope of this document.

Post OS Install Configuration

Choose one of the nodes of the cluster or a separate node as the Admin Node for management tasks such as HDP installation, running Ansible, and creating a local Red Hat repository. In this document, rhel1 has been used for this purpose.

Configure /etc/hosts

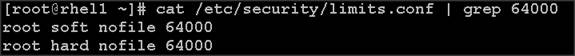

Set up /etc/hosts on the Admin node; this is a preliminary step for setting up DNS, as shown in the next section.