Cisco ACI Policy Model Guide

About the Cisco Application Centric Infrastructure

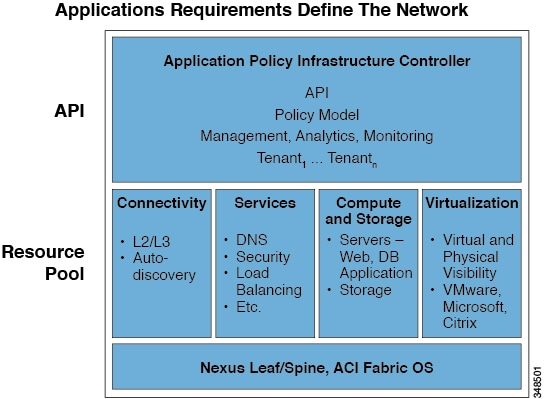

The Cisco Application Centric Infrastructure (ACI) allows application requirements to define the network. This architecture simplifies, optimizes, and accelerates the entire application deployment life cycle.

About the Cisco Application Policy Infrastructure Controller

The Cisco Application Policy Infrastructure Controller (APIC) API enables applications to directly connect with a secure, shared, high-performance resource pool that includes network, compute, and storage capabilities. The following figure provides an overview of the APIC.

APIC Overview

The APIC manages the scalable ACI multitenant fabric. The APIC provides a unified point of automation and management, policy programming, application deployment, and health monitoring for the fabric. The APIC, which is implemented as a replicated synchronized clustered controller, optimizes performance, supports any application anywhere, and provides unified operation of the physical and virtual infrastructure. The APIC enables network administrators to easily define the optimal network for applications. Data center operators can clearly see how applications consume network resources, easily isolate and troubleshoot application and infrastructure problems, and monitor and profile resource usage patterns.

Cisco Application Centric Infrastructure Fabric Overview

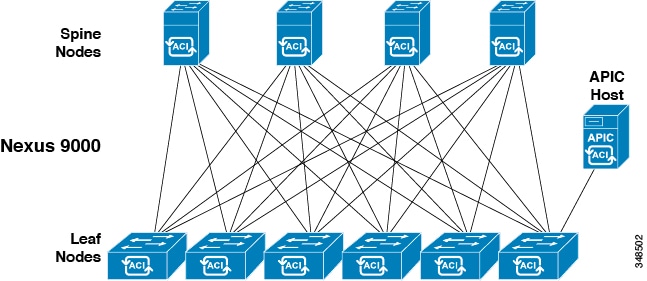

The Cisco Application Centric Infrastructure (ACI) fabric includes Cisco Nexus 9000 Series switches with the APIC to run in the leaf/spine ACI fabric mode. These switches form a “fat-tree” network by connecting each leaf node to each spine node; all other devices connect to the leaf nodes. The APIC manages the ACI fabric. The recommended minimum configuration for the APIC is a cluster of three replicated hosts. The APIC fabric management functions do not operate in the data path of the fabric. The following figure shows an overview of the leaf/spine ACI fabric.

ACI Fabric Overview

The ACI fabric provides consistent low-latency forwarding across high-bandwidth links (40 Gbps, with a 100-Gbps future capability). Traffic with the source and destination on the same leaf switch is handled locally, and all other traffic travels from the ingress leaf to the egress leaf through a spine switch. Although this architecture appears as two hops from a physical perspective, it is actually a single Layer 3 hop because the fabric operates as a single Layer 3 switch.

The ACI fabric object-oriented operating system (OS) runs on each Cisco Nexus 9000 Series node. It enables programming of objects for each configurable element of the system.

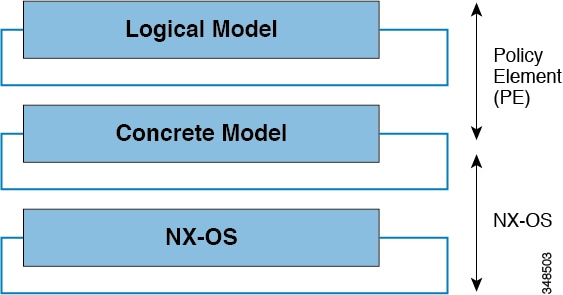

The ACI fabric OS renders policies from the APIC into a concrete model that runs in the physical infrastructure. The concrete model is analogous to compiled software; it is the form of the model that the switch operating system can execute. The figure below shows the relationship of the logical model to the concrete model and the switch OS.

Logical Model Rendered into a Concrete Model

All the switch nodes contain a complete copy of the concrete model. When an administrator creates a policy in the APIC that represents a configuration, the APIC updates the logical model. The APIC then performs the intermediate step of creating a fully elaborated policy that it pushes into all the switch nodes where the concrete model is updated.

The APIC is responsible for fabric activation, switch firmware management, network policy configuration, and instantiation. While the APIC acts as the centralized policy and network management engine for the fabric, it is completely removed from the data path, including the forwarding topology. Therefore, the fabric can still forward traffic even when communication with the APIC is lost.

The Cisco Nexus 9000 Series switches offer modular and fixed 1-, 10-, and 40-Gigabit Ethernet switch configurations that operate in either Cisco NX-OS stand-alone mode for compatibility and consistency with the current Cisco Nexus switches or in ACI mode to take full advantage of the APIC's application policy-driven services and infrastructure automation features.

The ACI Policy Model Overview

The ACI policy model enables the specification of application requirements policies. The APIC automatically renders policies in the fabric infrastructure. When a user or process initiates an administrative change to an object in the fabric, the APIC first applies that change to the policy model. This policy model change then triggers a change to the actual managed endpoint. This approach is called a model-driven framework.

Policy Model Key Characteristics

Key characteristics of the policy model include the following:

- As a model-driven architecture, the software maintains a complete representation of the administrative and operational state of the system (the model). The model applies uniformly to the fabric, services, system behaviors, and virtual and physical devices attached to the network.

- The logical and concrete domains are separated; the logical configurations are rendered into concrete configurations by applying the policies in relation to the available physical resources. No configuration is carried out against concrete entities. Concrete entities are configured implicitly as a side effect of the changes to the APIC policy model. Concrete entities can be, but do not have to be, physical (such as a virtual machine or a VLAN).

- The system prohibits communications with newly connected devices until the policy model is updated to include the new device.

- Network administrators do not configure logical and physical system resources directly but rather define logical (hardware independent) configurations and APIC policies that control different aspects of the system behavior.

Managed object manipulation in the model relieves engineers from the task of administering isolated, individual component configurations. These characteristics enable automation and flexible workload provisioning that can locate any workload anywhere in the infrastructure. Network-attached services can be easily deployed, and the APIC provides an automation framework to manage the life cycle of those network-attached services.

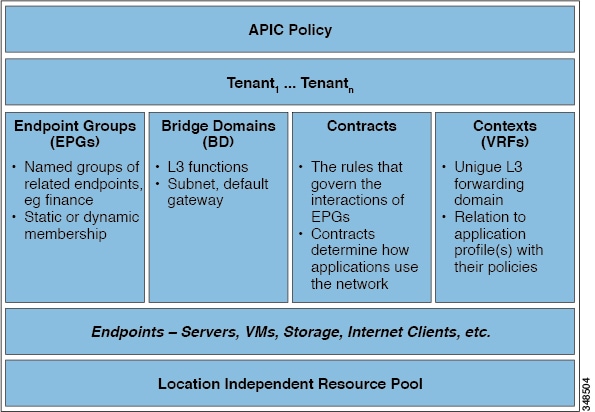

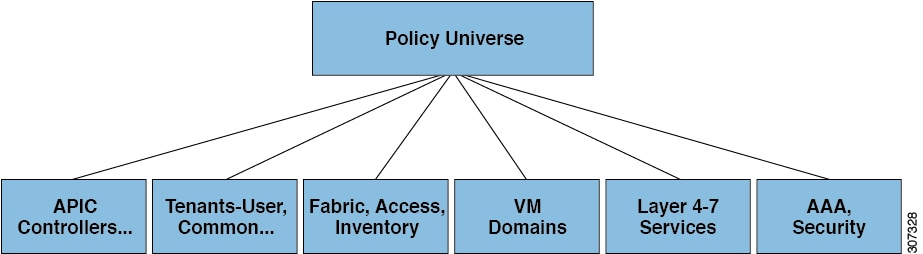

Logical Constructs

The policy model manages the entire fabric, including the infrastructure, authentication, security, services, applications, and diagnostics. Logical constructs in the policy model define how the fabric meets the needs of any of the functions of the fabric. The following figure provides an overview of the ACI policy model logical constructs.

ACI Policy Model Logical Constructs Overview

Fabric-wide or tenant administrators create predefined policies that contain application or shared resource requirements. These policies automate the provisioning of applications, network-attached services, security policies, and tenant subnets, which puts administrators in the position of approaching the resource pool in terms of applications rather than infrastructure building blocks. The application needs to drive the networking behavior, not the other way around.

Every aspect of ACI functionality is encompassed within the object model. Any configuration that can be made on the fabric can be made programmatically using the REST API. Likewise, any monitoring, statistics, or fault information can be programmatically read from the APIC using the REST API.

ACI Utilities for Developers

Cisco ACI includes utilities that enable developers to effectively use the ACI REST APIs.

APIC API Inspector

The API Inspector is included in the APIC GUI. It provides a real-time display of REST API commands that the APIC processes to perform GUI interactions. The APIC user login drop-down menu includes the API Inspector option which opens the window shown in the figure below.

The API Inspector dynamically displays REST commands issued by the APIC. All operations that are performed in the GUI invoke REST calls to fetch and commit the information being accessed. The API Inspector further simplifies the process of examining what is taking place on the REST interface as the GUI is navigated by displaying in real time the URIs and payloads. When a new configuration is committed, the API Inspector displays the resulting POST requests, and when information is displayed on the GUI, the GET request is displayed.

After the API Inspector is brought up, time stamps will appear along with the REST method, URIs, and payloads. There may also be occasional updates in the list as the GUI refreshes subscriptions to data being shown on the screen.

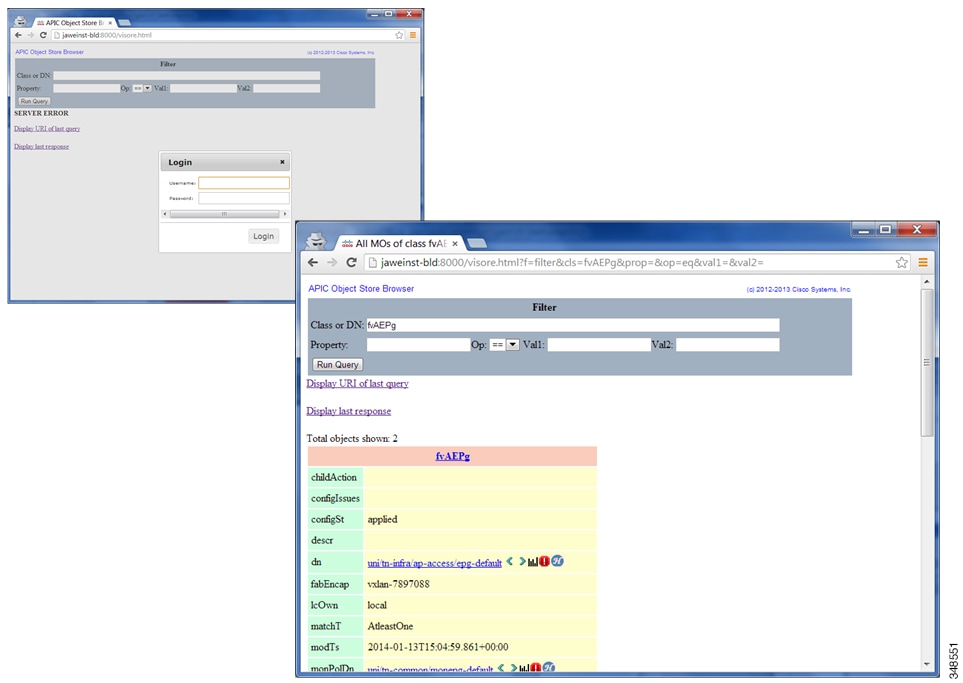

Visore Managed Object Viewer

Visore is a read-only management information tree (MIT) browser as shown in the figure below. It enables distinguished name (DN) and class queries with optional filters.

Visore MO Viewer

The Visore managed object viewer is provided with the APIC. It is at this location: http(s)://host[:port]/visore.html

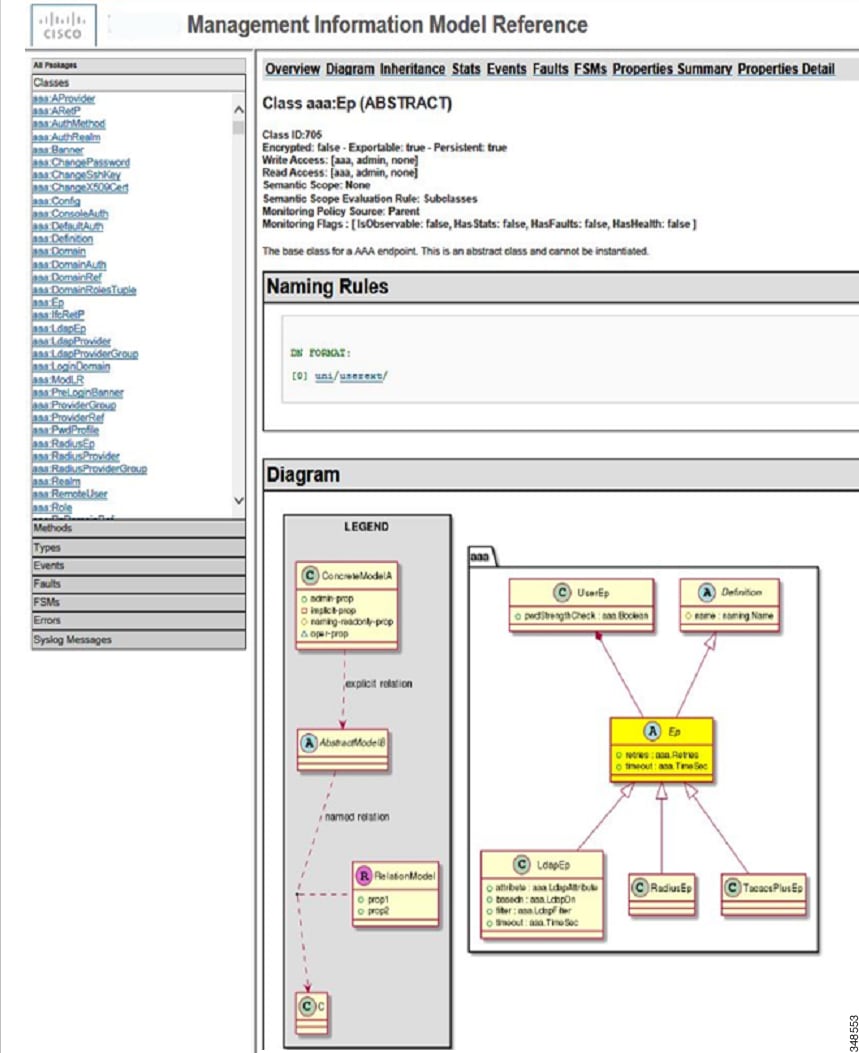

Management Information Model Reference

The Management Information Model (MIM) contains all of the managed objects in the system and their properties. For details, see the Cisco APIC Management Information Model Reference Guide.

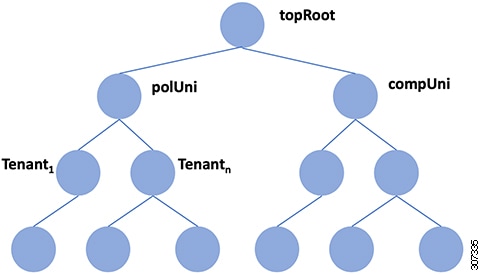

The ACI object model is represented in the Management Information Tree (MIT), which is an object-oriented database. Every branch represents a functional area, and every node is a managed object that has a class and a globally unique distinguished name formed from its parent's name and its own relative name.

Top levels of the policy model in the ACI Management Information Model Tree

polUni is the top level class for all policy definition/resolution objects, and compUni is the top level class for all compute related objects.

The following figure provides an example of how an administrator can use the MIM to research an object in the MIT.

MIM Reference

About the REST API

The Application Policy Infrastructure Controller (APIC) Representational State Transfer (REST) API is a programmatic interface that uses the RESTful services architecture. The API accepts and returns HTTP (not enabled by default) or HTTPS messages that contain JavaScript Object Notation (JSON) or Extensible Markup Language (XML) documents. You can use any programming language to generate the messages and the JSON or XML documents that contain the API methods or Managed Object (MO) descriptions.

The REST API is the interface into the management information tree (MIT) and allows manipulation of the object model state. The same REST interface is used by the APIC CLI, GUI, and SDK, so that whenever information is displayed, it is read through the REST API, and when configuration changes are made, they are written through the REST API. The REST API also provides an interface through which other information can be retrieved, including statistics, faults, and audit events. It even provides a means of subscribing to push-based event notification, so that when a change occurs in the MIT, an event can be sent through a web socket.

Postman (http://www.getpostman.com) is a popular utility that can be used to interact with the APIC REST interface to both send and receive data, which may represent configuration, actions, policy, and operational state data.

Standard REST methods are supported in the ACI API, which include POST, GET, and DELETE operations through HTTP.

| Method | Action | Behavior |
|---|---|---|
| GET | Read | Nullipotent |
| POST | Create / Update | Idempotent |
| DELETE | Delete | Idempotent |

The POST and DELETE methods are idempotent, meaning that there is no additional effect if they are called more than once with the same input parameters. The GET method is nullipotent, meaning that it can be called zero or more times without making any changes (or that it is a read-only operation).

Payloads to and from the REST interface can be encapsulated through either XML or JSON encoding. In the case of XML, the encoding operation is simple: the element tag is the name of the package and class, and any properties of that object are specified as attributes of that element. Containment is defined by creating child elements.
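The XML rule above (class name as the element tag, properties as attributes, containment as child elements) can be sketched with Python's standard `xml.etree.ElementTree` module. The class names `fvTenant` and `fvAp` and all attribute values are illustrative assumptions rather than values taken from this guide.

```python
import xml.etree.ElementTree as ET


def build_tenant_xml(tenant_name, app_name, epg_name):
    """Encode a small object hierarchy as XML.

    Element tag = package and class name, XML attributes = object
    properties, child elements = contained objects.
    """
    tenant = ET.Element("fvTenant", {"name": tenant_name})
    app = ET.SubElement(tenant, "fvAp", {"name": app_name})
    ET.SubElement(app, "fvAEPg", {"name": epg_name})
    return ET.tostring(tenant, encoding="unicode")


# Hypothetical names, used only to show the nesting.
xml_payload = build_tenant_xml("solar", "web-app", "frontend")
print(xml_payload)
```

The printed document nests the endpoint group element inside the application profile element, which in turn sits inside the tenant element.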

For JSON, encoding requires definition of certain entities to reflect the tree-based hierarchy; however, the definition is repeated at all levels of the tree, so it is fairly simple to implement after it is initially understood.

- All objects are described as JSON dictionaries, in which the key is the name of the package and class. The value is another nested dictionary with two keys: attributes and children.

- The attributes key contains a further nested dictionary describing key-value pairs that define attributes on the object.

- The children key contains a list that defines all the child objects. The children in this list are dictionaries containing any nested objects, which are defined as described here.
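Because the same JSON shape repeats at every level of the tree, a single recursive helper is enough to build it. The sketch below is a minimal illustration; the helper name `mo` is hypothetical, the key is spelled `attributes` as in the login example in this guide, and the class names and values are example assumptions.

```python
import json


def mo(class_name, attributes=None, children=None):
    """Return one managed object in the nested-dictionary JSON form.

    The outer key is the package and class name; the value holds an
    'attributes' dictionary and, when present, a 'children' list whose
    items have exactly the same shape.
    """
    body = {"attributes": dict(attributes or {})}
    if children:
        body["children"] = list(children)
    return {class_name: body}


# Hypothetical tenant with one application profile and one endpoint group.
payload = mo(
    "fvTenant",
    {"name": "solar"},
    [mo("fvAp", {"name": "web-app"}, [mo("fvAEPg", {"name": "frontend"})])],
)
print(json.dumps(payload, indent=2))
```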

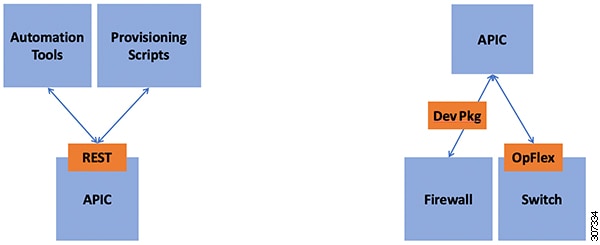

The REST API has both northbound and southbound programmatic interfaces. The northbound REST API accepts configuration and provides access to management functions of the APIC. This interface provides access for automation tools, provisioning scripts, and third-party monitoring and management tools.

ACI Northbound and Southbound APIs

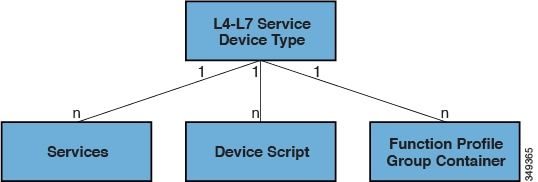

Southbound interfaces on the APIC allow for the declarative model of intent to be extended beyond the fabric, into subordinate devices. This is a key aspect to the openness of the ACI fabric, in that policy can be programmed once via the APIC and then pushed out to hypervisors, L4-7 devices and more, without the need to individually configure those devices. This southbound extension is realized through L4-7 Device Packages and the OpFlex protocol.

The L4-7 device package interface allows for ACI to apply policy to existing L4-7 devices that do not have an implicit knowledge of ACI policy. These devices can be from any vendor, so long as the device has some form of interface which is accessible via IP. The implementation of device packages is done via Python scripts that run on the APIC in a contained execution environment. The scripts can access the device through their native configuration interfaces, be they REST, CLI, SOAP or others. As a user makes changes to service graphs or EPG policy, the device package translates the APIC policy into API calls on the L4-7 device.

OpFlex is designed to allow the exchange of data for managed objects that are part of an informational model. The protocol supports XML and JSON, as well as the binary encoding used in some scenarios, and uses standard remote procedure call (RPC) mechanisms such as JSON-RPC over TCP. In ACI, OpFlex extends policy to the Application Virtual Switch as well as to Group Based Policy in OpenStack.

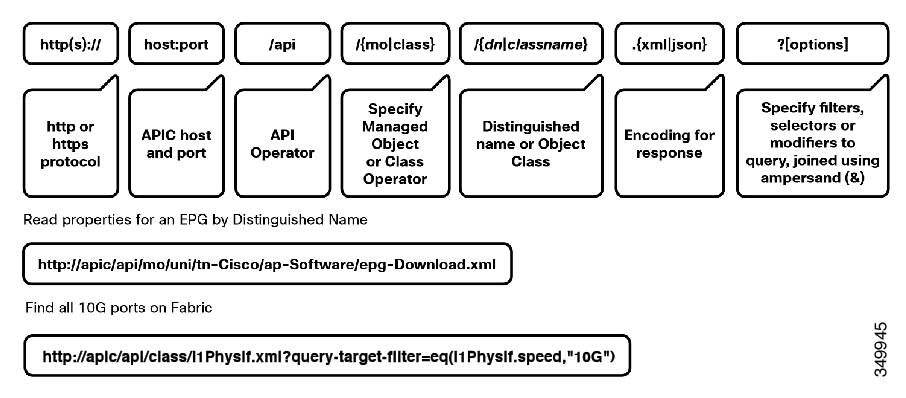

Read Operations

After the object payloads are properly encoded as XML or JSON, they can be used in create, read, update, or delete operations. The following diagram shows the syntax for an ACI REST API read operation.

REST Syntax

A uniform resource identifier (URI) provides access to a target resource. The first two sections of the request URI specify the protocol and access details of the APIC. The literal string /api indicates that the API is to be invoked. The next section specifies whether the operation is for an MO or a class. Next, either the fully qualified DN for object-based queries or the package and class name for class-based queries is specified. The final mandatory part of the request URI is the encoding format: either .xml or .json. This is the only method by which the payload format is defined (the APIC ignores Content-Type and other headers).

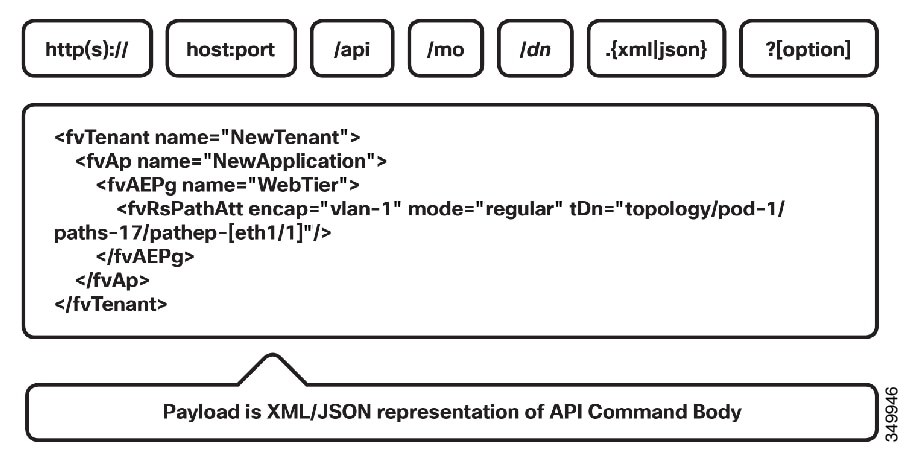

Write Operations

Create and update operations in the REST API are both implemented using the POST method. If an object does not already exist, it will be created, and if it does already exist, it will be updated to reflect any changes between its existing state and desired state.
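The create-or-update behavior can be modeled with a small in-memory sketch. This is not how the APIC is implemented; the dictionary store, the function name `post`, and the returned labels are assumptions chosen only to show that repeating the same POST has no further effect.

```python
def post(store, dn, attributes):
    """Create the object at dn if it is missing, otherwise apply only the
    differences between its existing state and the desired state."""
    existing = store.get(dn)
    if existing is None:
        store[dn] = dict(attributes)
        return "created"
    changed = {k: v for k, v in attributes.items() if existing.get(k) != v}
    existing.update(changed)
    return "modified" if changed else "unchanged"


store = {}
first = post(store, "uni/tn-solar", {"name": "solar", "descr": "demo"})
second = post(store, "uni/tn-solar", {"name": "solar", "descr": "demo"})
third = post(store, "uni/tn-solar", {"descr": "updated"})
print(first, second, third)
```

The second call sends the same desired state as the first, so the stored object is left untouched; only the third call changes it.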

REST Payload

Both create and update operations can contain complex object hierarchies, so that a complete tree can be defined in a single command so long as all objects are within the same context root and are under the 1MB limit for data payloads for the REST API. This limit guarantees performance and protects the system when it is under high load.

Create and update operations use the same syntax as read operations, except that they are always targeted at an object level, because you cannot make changes to every object of a specific class (nor would you want to). The literal string /mo indicates that the DN of the managed object is provided, followed by the actual DN. Filter strings can also be applied to POST operations. For example, to retrieve the results of a POST operation in the response, pass the rsp-subtree=modified query string to indicate that the response is to include any objects that have been modified by the POST operation.

The payload of the POST operation contains the XML or JSON encoded data representing the managed object that defines the Cisco API command body.
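A write request along these lines could be assembled with Python's standard `urllib.request` module, as in the hedged sketch below. The host name, token value, and tenant payload are placeholders, and interpreting the 1MB limit as 1024 * 1024 bytes is an assumption. The request is only constructed here, not sent.

```python
import json
import urllib.request

# Assumption: the guide's "1MB" limit is read as 1024 * 1024 bytes.
MAX_PAYLOAD_BYTES = 1024 * 1024


def build_post(host, dn, payload, token):
    """Build (but do not send) a POST that targets one managed object."""
    body = json.dumps(payload).encode("utf-8")
    if len(body) > MAX_PAYLOAD_BYTES:
        raise ValueError("payload exceeds the REST API size limit")
    # /mo is followed by the DN; rsp-subtree=modified asks for the
    # modified objects to be included in the response.
    url = f"https://{host}/api/mo/{dn}.json?rsp-subtree=modified"
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Cookie": f"APIC-cookie={token}"},
    )


# Placeholder host, token, and payload for illustration only.
request = build_post(
    "apic.example.com",
    "uni/tn-solar",
    {"fvTenant": {"attributes": {"name": "solar"}}},
    "TOKEN",
)
print(request.get_method(), request.full_url)
```

Passing the object to `urllib.request.urlopen` would send it; that step is left out so the sketch runs without a controller.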

Filters

The REST API supports a wide range of flexible filters, useful for narrowing the scope of your search to allow information to be located more quickly. The filters themselves are appended as query URI options, starting with a question mark (?) and concatenated with an ampersand (&). Multiple conditions can be joined together to form complex filters.
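As a small sketch of how such options are appended, the helper below joins them with `?` and `&` using the standard `urllib.parse.urlencode` function. The helper name and host are hypothetical; the option names are the ones that appear in the query examples elsewhere in this guide.

```python
from urllib.parse import urlencode


def with_options(base_url, options):
    """Append query options: '?' before the first, '&' between the rest."""
    if not options:
        return base_url
    # Assumption: parentheses, commas, and double quotes used by filter
    # expressions may stay unescaped, as in the guide's example URLs.
    return base_url + "?" + urlencode(options, safe='(),"')


url = with_options(
    "https://apic.example.com/api/mo/uni/tn-solar.xml",
    {
        "query-target": "subtree",
        "target-subtree-class": "fvAEPg",
        "rsp-subtree-include": "faults",
    },
)
print(url)
```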

Authentication

REST API username- and password-based authentication uses a special subset of request Uniform Resource Identifiers (URIs), including aaaLogin, aaaLogout, and aaaRefresh as the DN targets of a POST operation. Their payloads contain a simple XML or JSON document with the MO representation of an aaaUser object, whose name and pwd attributes define the username and password: for example, <aaaUser name='admin' pwd='password'/>. The response to the POST operation contains an authentication token both as a Set-Cookie header and as an attribute named token on the aaaLogin object in the response, for which the XPath is /imdata/aaaLogin/@token if the encoding is XML. Subsequent operations on the REST API can use this token value as a cookie named APIC-cookie to authenticate future requests.

The following example authenticates a local APIC user to the APIC.

    POST https://<host>/api/aaaLogin.json

    {
      "aaaUser" : {
        "attributes" : {
          "name" : "admin",
          "pwd" : "Pa$$w0rd"
        }
      }
    }
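The login exchange can be sketched in Python with the standard library. The sketch builds the request and parses a response without contacting a controller. The host, credentials, and sample response are placeholders, and the JSON response shape (an `imdata` list holding an `aaaLogin` object) is an assumption inferred from the XPath given above.

```python
import json
import urllib.request


def build_login_request(host, username, password):
    """Build (but do not send) the aaaLogin POST."""
    payload = {"aaaUser": {"attributes": {"name": username, "pwd": password}}}
    return urllib.request.Request(
        f"https://{host}/api/aaaLogin.json",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
    )


def extract_token(response_text):
    """Read the token attribute of the aaaLogin object in the response."""
    doc = json.loads(response_text)
    return doc["imdata"][0]["aaaLogin"]["attributes"]["token"]


def auth_header(token):
    """Cookie header that carries the token on subsequent requests."""
    return {"Cookie": f"APIC-cookie={token}"}


login = build_login_request("apic.example.com", "admin", "secret")
# Hypothetical response body used in place of a live controller reply.
sample_response = '{"imdata": [{"aaaLogin": {"attributes": {"token": "abc123"}}}]}'
token = extract_token(sample_response)
print(login.full_url, auth_header(token))
```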

Subscription

The REST API supports the subscription to one or more MOs during your active API session. When any MO is created, changed, or deleted because of a user- or system-initiated action, an event is generated. If the event changes the data on any of the active subscribed queries, the APIC will send out a notification to the API client that created the subscription.
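On the client side, incoming notifications need to be routed back to whichever code created the subscription. The sketch below shows one possible routing scheme. The class name and handler interface are hypothetical, and the message shape (a `subscriptionId` list alongside an `imdata` list) is an assumption about the notification format that should be checked against the APIC REST API documentation.

```python
import json
from collections import defaultdict


class SubscriptionRouter:
    """Route notification messages to handlers keyed by subscription ID."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, subscription_id, handler):
        self._handlers[subscription_id].append(handler)

    def dispatch(self, message_text):
        """Deliver each changed object to every matching handler and
        return the number of deliveries made."""
        message = json.loads(message_text)
        delivered = 0
        for sub_id in message.get("subscriptionId", []):
            for handler in self._handlers.get(sub_id, []):
                for changed in message.get("imdata", []):
                    handler(changed)
                    delivered += 1
        return delivered


router = SubscriptionRouter()
seen = []
router.subscribe("1001", seen.append)  # "1001" is a made-up subscription ID

# Hypothetical notification for a modified tenant.
message = json.dumps({
    "subscriptionId": ["1001"],
    "imdata": [{"fvTenant": {"attributes": {"dn": "uni/tn-solar", "status": "modified"}}}],
})
count = router.dispatch(message)
print(count, seen)
```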

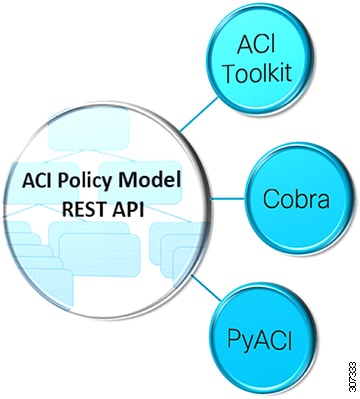

ACI Development Environment

The ACI object model represents network configuration with application-based semantics that can be consumed and posted against using the REST API. ACI provides a variety of access methods to read and manipulate this data.

Common ACI Development Libraries

The three libraries described below (ACI Toolkit, Cobra, and PyACI) are simply wrappers for accessing the REST API. Choosing among them is largely a tradeoff between personal preference, need for completeness, comfort with the ACI object model, and the requirement for formal support beyond an active community.

APIC Toolkit

ACI Toolkit attempts to make life easier for the developer. It is a set of Python libraries that allows you to quickly get the most common ACI workflows up and running. You can use the REST API directly along with ACI Toolkit, Cobra, and PyACI in the same script. The complete Cisco Application Centric Infrastructure (ACI) object model contains many entities, which may be daunting for a user being first introduced to network programmability. The ACI Toolkit makes available a simplified subset of the model that can act as an introduction to the concepts in ACI, and give users a way to quickly bring up common tasks and workflows. Various applications that are now products or features of ACI have been built in the ACI Toolkit. Examples include Multi-Site, Endpoint Tracker, ACI Lint, ACI Configuration Snapshot and Rollback, and many more.

While the ACI Toolkit provides some useful tools for an operator to immediately use, the real value is in the ability to take these examples as a starting point, and modify or extend these samples to suit your particular needs.

Cobra

Cobra provides complete access to the object model but may be more difficult for beginning developers. The ACI engineering team uses Cobra. Much of the CLI on the ACI switches was developed with Cobra.

PyACI

PyACI provides alternative Python bindings for the ACI REST API, with the option to use XML or JSON payloads. It facilitates authoring concise scripts and provides better logging.

APIC Sandbox Environment

DevNet Sandbox makes a range of Cisco technology freely available to developers and engineers through packaged labs called Sandboxes. There are two types of sandboxes: Always-On and Reservation. Each sandbox typically highlights one Cisco product (for example, CallManager or APIC). Sandboxes can be used for development, testing APIs, learning how to configure a product, training, hackathons, and more.

ARYA

The process of building a request can be time consuming, because you must represent the object data payload as Python code reflecting the object changes that you want to make. Because the Cobra SDK is directly modeled on the Cisco ACI object model, you should be able to generate code directly from what resides in the object model. You can do so with the Cisco APIC REST to Python Adapter, known as Arya.

Ansible

The Ansible ACI modules provide a user-friendly interface for managing your ACI environment using Ansible playbooks. For instance, the following Ansible task uses the aci_tenant module to ensure that a specific tenant exists:

    - name: Ensure tenant customer-xyz exists
      aci_tenant:
        host: my-apic-1
        username: admin
        password: my-password
        tenant: customer-xyz
        description: Customer XYZ
        state: present

A complete list of existing ACI modules is available for the latest stable release on the list of network modules. You can also view the current development version. If you want to learn how to write your own ACI modules to contribute, look at the Developing Cisco ACI modules section.

OpenStack

OpenStack is an open source infrastructure as a service (IaaS) initiative for creating and managing large groups of virtual private servers in a data center. Cisco OpenStack plugins enable OpenStack instances to leverage the ACI fabric as a software defined networking (SDN) platform. This enables dynamic creation of networking constructs that are driven directly from OpenStack, while providing additional visibility and control through the ACI APIC controller. OpenStack supports interoperability between cloud services and allows businesses to build AWS-like cloud services in their own data centers.

Puppet

Puppet is a configuration management tool from Puppet Labs, Inc. Although Puppet was originally designed for large scale server management, many datacenter operators would like to consolidate server and network device provisioning using the same tool.

Cisco Puppet Module

An APIC controller does not run an embedded Puppet agent. Instead, Cisco provides a Puppet module ("ciscoacipuppet"), which uses a Cisco ACI-specific Puppet device to relay configuration management requests to the APIC controller. The ciscoacipuppet module interprets change information in the received Puppet manifest and translates the change requests into APIC REST API messages to implement configuration changes in the ACI fabric.

For details on the installation, setup, and usage of the ciscoacipuppet module, refer to the module documentation on GitHub and Puppet Forge.

Only a subset of APIC managed objects can be provisioned using the ciscoacipuppet Puppet module. To understand the level of support and the limitations, refer to the ciscoacipuppet module documentation on GitHub and Puppet Forge.

GitHub

GitHub provides both free and paid hosting services that allow individuals to collaborate with millions of other GitHub users. GitHub also provides techniques for tracking issues, securing access to projects, and built-in project documentation. The combination of all of these features makes GitHub a very common place for members of the community to share code with one another, build on each other's work, and contribute their efforts back into larger projects.

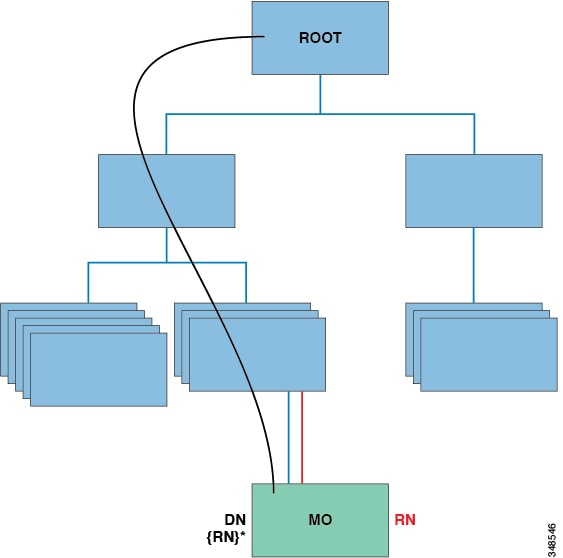

Locating Policy Model Objects

The Cisco ACI uses an information-model-based architecture (management information tree [MIT]) in which the model describes all the information that can be controlled by a management process. Object instances are referred to as managed objects (MOs).

The following figure shows the distinguished name, which uniquely represents any given MO instance, and the relative name, which represents the MO locally underneath its parent MO. All objects in the MIT exist under the root object.

MO Distinguished and Relative Names

Every MO in the system can be identified by a unique distinguished name (DN). This approach allows the object to be referred to globally. In addition to its distinguished name, each object can be referred to by its relative name (RN). The relative name identifies an object relative to its parent object. Any given object's distinguished name is derived from its own relative name that is appended to its parent object's distinguished name.

The distinguished name enables you to unambiguously identify a specific target object. The relative name distinguishes an object from its siblings within the context of its parent object. The distinguished name contains a sequence of relative names.

dn = {rn}/{rn}/{rn}/{rn}

dn = "sys/ch/lcslot-1/lc/leafport-1"
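The relationship between relative names and distinguished names can be sketched with a few string helpers. The function names are hypothetical. Treating a `/` inside square brackets as part of a single relative name is an assumption about how bracketed RN values are written.

```python
def join_dn(relative_names):
    """Build a distinguished name from an ordered list of relative names."""
    return "/".join(relative_names)


def split_dn(dn):
    """Split a DN into RNs, ignoring any '/' inside square brackets."""
    parts, current, depth = [], [], 0
    for ch in dn:
        if ch == "[":
            depth += 1
        elif ch == "]":
            depth -= 1
        if ch == "/" and depth == 0:
            parts.append("".join(current))
            current = []
        else:
            current.append(ch)
    parts.append("".join(current))
    return parts


def parent_dn(dn):
    """The parent's DN is the object's DN without its last RN."""
    return join_dn(split_dn(dn)[:-1])


dn = "sys/ch/lcslot-1/lc/leafport-1"
print(split_dn(dn))
print(parent_dn(dn))
```

Appending an object's relative name to `parent_dn(dn)` gives back the original DN, which mirrors the derivation rule described above.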

Distinguished names are directly mapped to URLs. Either the relative name or the distinguished name can be used to access an object, depending on the current location in the MIT.

Because of the hierarchical nature of the tree and the attribute system used to identify object classes, the tree can be queried in several ways for obtaining managed object information. Queries can be performed on an object itself through its distinguished name, on a class of objects such as a switch chassis, or on a tree-level to discover all members of an object.

Examples of query types are below:

- To get an MO and everything under it: rsp-subtree=full

- To get just the configurable items: rsp-prop-include=config-only

- To get just a particular class from an MO: target-subtree-class=

- To get just a particular class: filter class = vzFilter

- To get just a particular entry: filter class = vzEntry

- To get just contracts: filter class = vzBrCP

For all MIT queries, an administrator can optionally return the entire subtree or a partial subtree. Additionally, the role-based access control (RBAC) mechanism in the system dictates which objects are returned; only the objects that the user has rights to view will ever be returned.

Tree-Level Queries

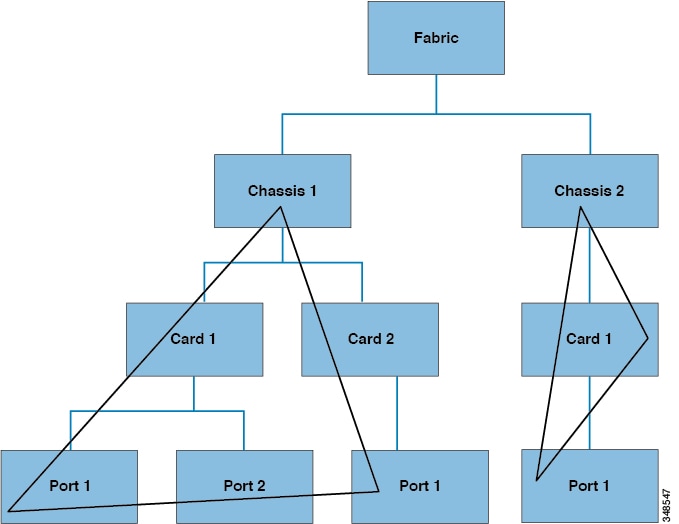

The following figure shows two chassis that are queried at the tree level.

Tree-Level Queries

Both queries return the referenced object and its child objects. This approach is useful for discovering the components of a larger system. In this example, the query discovers the cards and ports of a given switch chassis.

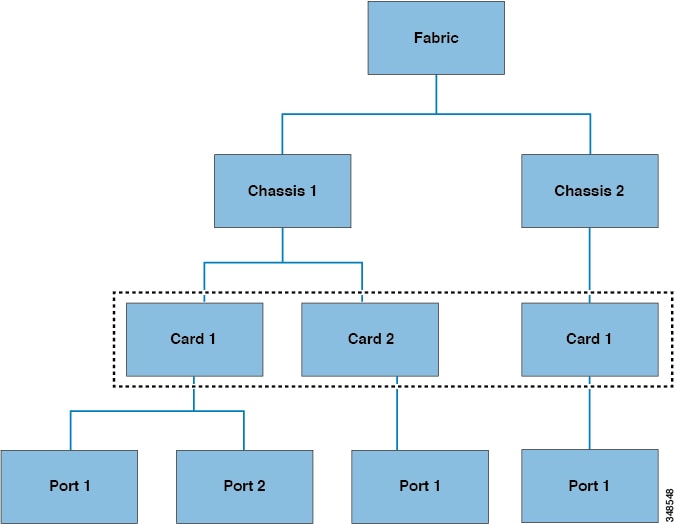

Class-Level Queries

The following figure shows the second query type: the class-level query.

Class-Level Queries

Class-level queries return all the objects of a given class. This approach is useful for discovering all the objects of a certain type that are available in the MIT. In this example, the class used is Cards, which returns all the objects of type Cards.

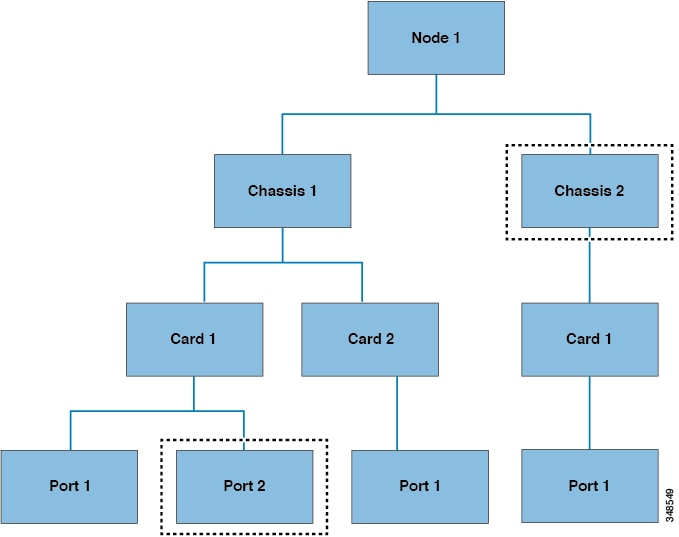

Object-Level Queries

The third query type is an object-level query. In an object-level query, a distinguished name is used to return a specific object. The figure below shows two object-level queries: one for Node 1 in Chassis 2, and one for Node 1 in Chassis 1 in Card 1 in Port 2.

Object-Level Queries

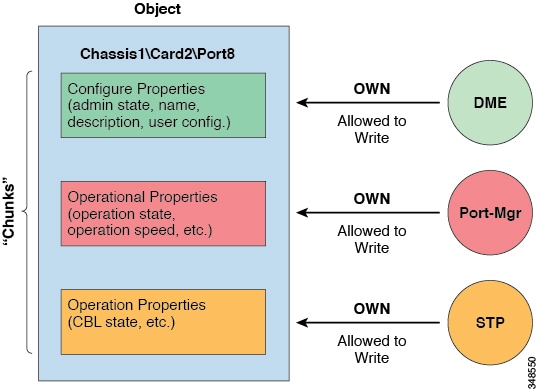
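The three query types can be compared side by side on a toy tree. The sketch below models the MIT as a dictionary that maps each distinguished name to a class name. The object names, class names, and function names are hypothetical and only loosely follow the chassis, card, and port examples in the figures.

```python
# Toy MIT: distinguished name -> class name (all values are made up).
MIT = {
    "chassis-1": "Chassis",
    "chassis-1/card-1": "Cards",
    "chassis-1/card-1/port-1": "Ports",
    "chassis-1/card-1/port-2": "Ports",
    "chassis-2": "Chassis",
    "chassis-2/card-1": "Cards",
}


def tree_query(mit, dn):
    """Tree-level: the referenced object plus everything beneath it."""
    prefix = dn + "/"
    return sorted(d for d in mit if d == dn or d.startswith(prefix))


def class_query(mit, class_name):
    """Class-level: every object of the given class, wherever it lives."""
    return sorted(d for d, cls in mit.items() if cls == class_name)


def object_query(mit, dn):
    """Object-level: exactly one object, addressed by its DN."""
    return {dn: mit[dn]} if dn in mit else {}


print(tree_query(MIT, "chassis-1"))
print(class_query(MIT, "Cards"))
print(object_query(MIT, "chassis-1/card-1/port-2"))
```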

Managed Object Properties

Managed objects contain properties that define the managed object. Properties in a managed object are divided into chunks that are managed by processes in the operating system. Any object can have several processes that access it. All these properties together are compiled at runtime and are presented to the user as a single object. The following figure shows an example of this relationship.

Managed Object Properties

The example object has three processes that write to property chunks that are in the object. The data management engine (DME), which is the interface between the Cisco APIC (the user) and the object, the port manager, which handles port configuration, and the spanning tree protocol (STP) all interact with chunks of this object. The APIC presents the object to the user as a single entity compiled at runtime.
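One way to picture the runtime compilation is as a merge of per-process dictionaries into a single view. The sketch below uses `collections.ChainMap` from the Python standard library. The property names and values are invented for illustration and are not taken from the ACI object model.

```python
from collections import ChainMap

# Each process owns one chunk of the object's properties (made-up names).
dme_chunk = {"dn": "sys/ch/lcslot-1/lc/leafport-1", "adminState": "up"}
port_manager_chunk = {"speed": "40G", "operState": "up"}
stp_chunk = {"stpState": "forwarding"}


def compile_object(*chunks):
    """Present several property chunks as one flat object.

    If two chunks defined the same property, the earlier chunk would win;
    in this sketch the chunks do not overlap.
    """
    return dict(ChainMap(*chunks))


port = compile_object(dme_chunk, port_manager_chunk, stp_chunk)
print(sorted(port))
```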

Accessing the Object Data Through REST Interfaces

REST is a software architecture style for distributed systems such as the World Wide Web. REST has increasingly displaced other design models such as Simple Object Access Protocol (SOAP) and Web Services Description Language (WSDL) due to its simpler style. The Cisco APIC supports REST interfaces for programmatic access to the entire Cisco ACI solution.

The object-based information model of Cisco ACI makes it a very good fit for REST interfaces: URLs and URIs map directly to distinguished names that identify objects on the MIT, and any data on the MIT can be described as a self-contained structured text tree document that is encoded in XML or JSON. The objects have parent-child relationships that are identified using distinguished names and properties, which are read and modified by a set of create, read, update, and delete (CRUD) operations.

Objects can be accessed at their well-defined address, their REST URLs, using standard HTTP commands for retrieval and manipulation of Cisco APIC object data. The URL format used can be represented as follows:

<system>/api/[mo|class]/[dn|class][:method].[xml|json]?{options}

The various building blocks of the preceding URL are as follows:

- system: System identifier; an IP address or DNS-resolvable hostname

- mo | class: Indication of whether this is a MO in the MIT, or class-level query

- class: MO class (as specified in the information model) of the objects queried; the class name is represented as <pkgName><ManagedObjectClassName>

- dn: Distinguished name (unique hierarchical name of the object in the MIT) of the object queried

- method: Optional indication of the method being invoked on the object; applies only to HTTP POST requests

- xml | json: Encoding format

- options: Query options, filters, and arguments

With the capability to address and access an individual object or a class of objects with the REST URL, one can achieve complete programmatic access to the entire object tree and to the entire system.
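The URL format can be assembled mechanically from its building blocks, as in the following sketch. The function name and the `scheme` parameter are assumptions added for illustration, and the optional method segment and query options are left out to keep the example short.

```python
def build_uri(system, target, scope="mo", encoding="xml", scheme="https"):
    """Assemble <system>/api/[mo|class]/[dn|class].[xml|json].

    'target' is a distinguished name when scope is 'mo' and a
    <pkgName><ManagedObjectClassName> string when scope is 'class'.
    """
    if scope not in ("mo", "class"):
        raise ValueError("scope must be 'mo' or 'class'")
    if encoding not in ("xml", "json"):
        raise ValueError("encoding must be 'xml' or 'json'")
    return f"{scheme}://{system}/api/{scope}/{target}.{encoding}"


object_uri = build_uri("192.168.10.1:7580", "uni/tn-solar", scheme="http")
class_uri = build_uri("192.168.10.1:7580", "fvAEPg", scope="class", scheme="http")
print(object_uri)
print(class_uri)
```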

The following are examples of REST queries:

- Find all EPGs and their faults under tenant solar.

  http://192.168.10.1:7580/api/mo/uni/tn-solar.xml?query-target=subtree&target-subtree-class=fvAEPg&rsp-subtree-include=faults

- Filtered EPG query

  http://192.168.10.1:7580/api/class/fvAEPg.xml?query-target-filter=eq(fvAEPg.fabEncap,%20"vxlan-12780288")

The Cisco ACI Policy Management Information Model

The fabric comprises the physical and logical components as recorded in the Management Information Model (MIM), which can be represented in a hierarchical management information tree (MIT). The information model is stored and managed by processes that run on the APIC. Similar to the OSI Common Management Information Protocol (CMIP) and other X.500 variants, the APIC enables the control of managed resources by presenting their manageable characteristics as object properties that can be inherited according to the location of the object within the hierarchical structure of the MIT.

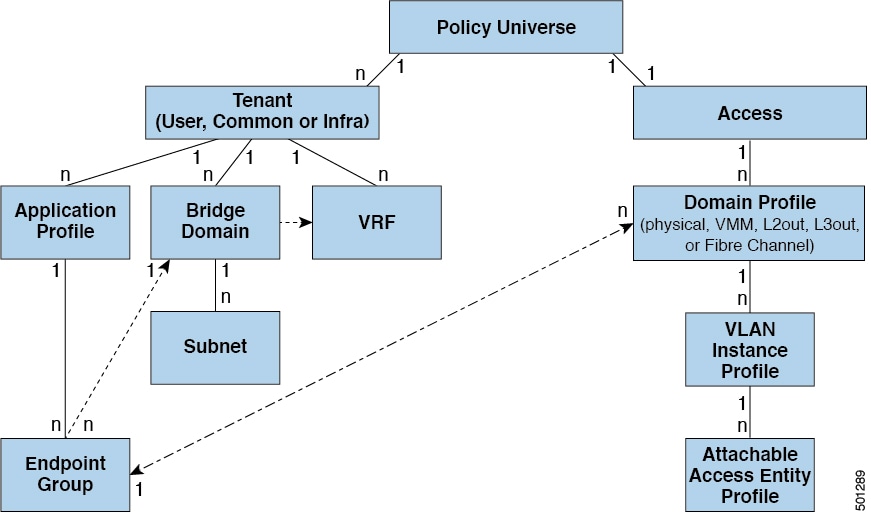

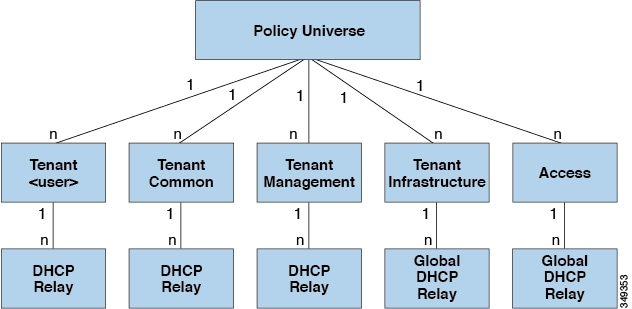

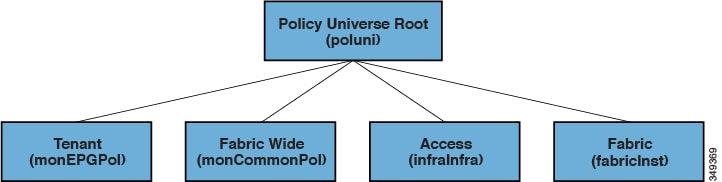

Each node in the tree represents a managed object (MO) or group of objects. MOs are abstractions of fabric resources. An MO can represent a concrete object, such as a switch or adapter, or a logical object, such as an application profile, endpoint group, or fault. The following figure provides an overview of the MIT.

Cisco ACI Management Information Model Policy Universe Overview

The hierarchical structure starts with the policy universe under the top (Root) and contains parent and child nodes. Each node in the tree is an MO and each object in the fabric has a unique distinguished name (DN) that describes the object and locates its place in the tree. The following managed objects contain the policies that govern the operation of the system:

-

APIC controllers comprise a replicated synchronized clustered controller that provides management, policy programming, application deployment, and health monitoring for the multitenant fabric.

-

A tenant is a container for policies that enable an administrator to exercise domain-based access control. The system provides the following four kinds of tenants:

-

User tenants contain policies that govern the operation of resources such as applications, databases, web servers, network-attached storage, virtual machines, and so on.

-

The common tenant is provided by the system but can be configured by the fabric administrator. It contains policies that govern the operation of resources accessible to all tenants, such as firewalls, load balancers, Layer 4 to Layer 7 services, intrusion detection appliances, and so on.

-

The infrastructure tenant is provided by the system but can be configured by the fabric administrator. It contains policies that govern the operation of infrastructure resources such as the fabric VXLAN overlay. It also enables a fabric provider to selectively deploy resources to one or more user tenants. Infrastructure tenant polices are configurable by the fabric administrator.

-

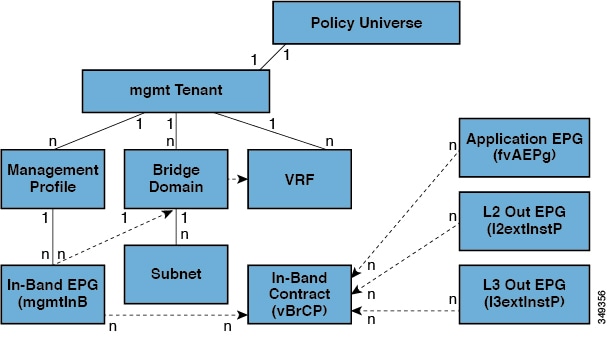

The management tenant is provided by the system but can be configured by the fabric administrator. It contains policies that govern the operation of fabric management functions used for in-band and out-of-band configuration of fabric nodes. The management tenant contains a private out-of-bound address space for the APIC/fabric internal communications that is outside the fabric data path that provides access through the management port of the switches. The management tenant enables discovery and automation of communications with virtual machine controllers.

-

-

Access policies govern the operation of switch access ports that provide connectivity to resources such as storage, compute, Layer 2 and Layer 3 (bridged and routed) connectivity, virtual machine hypervisors, Layer 4 to Layer 7 devices, and so on. If a tenant requires interface configurations other than those provided in the default link, Cisco Discovery Protocol (CDP), Link Layer Discovery Protocol (LLDP), Link Aggregation Control Protocol (LACP), or Spanning Tree, an administrator must configure access policies to enable such configurations on the access ports of the leaf switches.

-

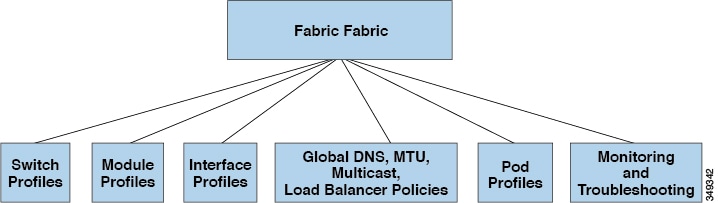

Fabric policies govern the operation of the switch fabric ports, including such functions as Network Time Protocol (NTP) server synchronization, Intermediate System-to-Intermediate System Protocol (IS-IS), Border Gateway Protocol (BGP) route reflectors, Domain Name System (DNS) and so on. The fabric MO contains objects such as power supplies, fans, chassis, and so on.

-

Virtual Machine (VM) domains group VM controllers with similar networking policy requirements. VM controllers can share VLAN or Virtual Extensible Local Area Network (VXLAN) space and application endpoint groups (EPGs). The APIC communicates with the VM controller to publish network configurations such as port groups that are then applied to the virtual workloads.

-

Layer 4 to Layer 7 service integration life cycle automation framework enables the system to dynamically respond when a service comes online or goes offline. Policies provide service device package and inventory management functions.

-

Access, authentication, and accounting (AAA) policies govern user privileges, roles, and security domains of the Cisco ACI fabric.

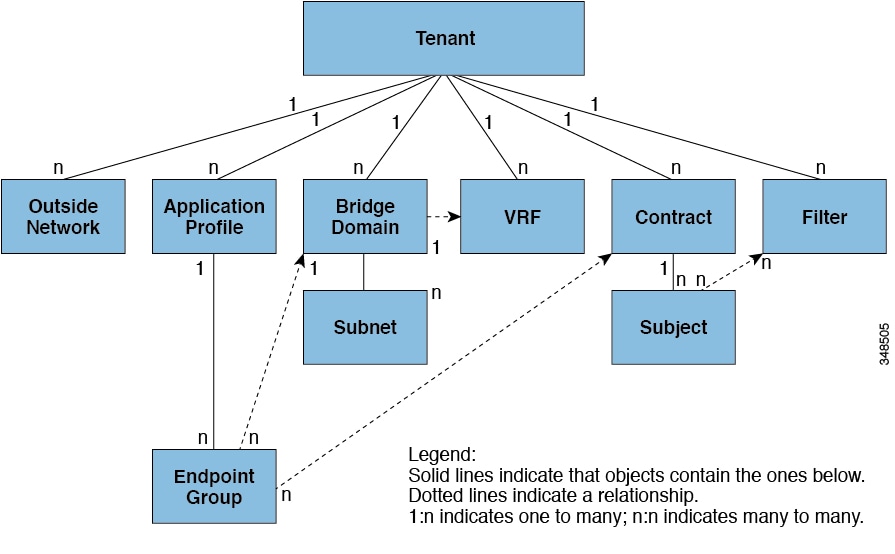

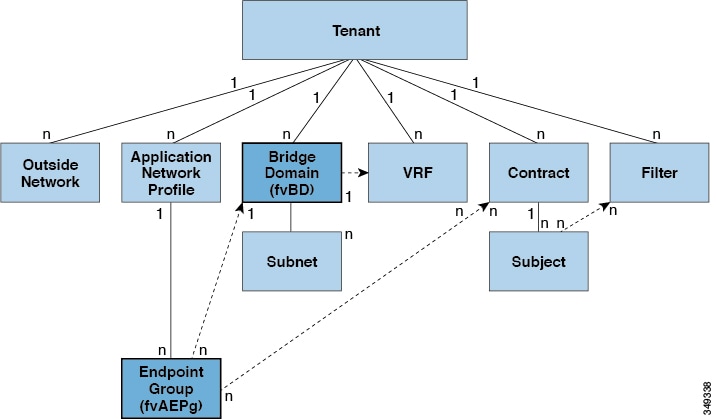

Tenants

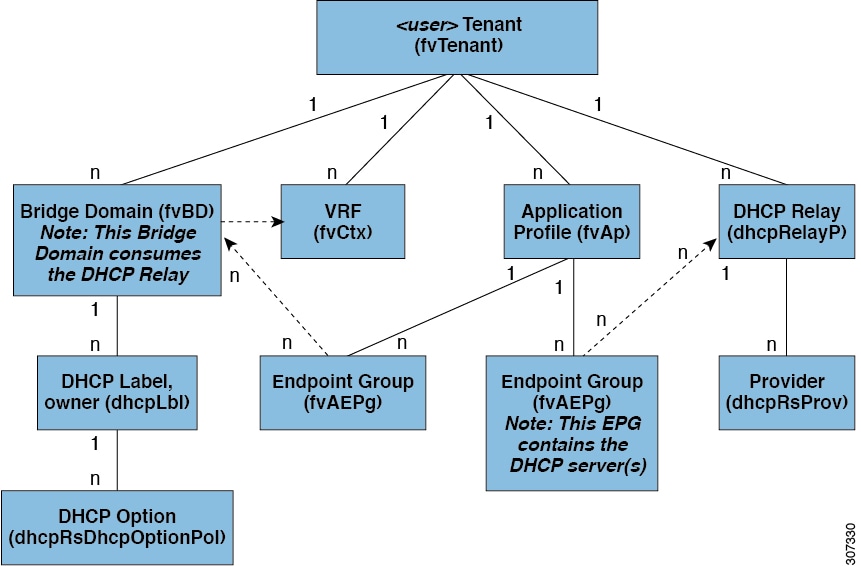

A tenant (fvTenant) is a logical container for application policies that enable an administrator to exercise domain-based access control. A tenant represents a unit of isolation from a policy perspective, but it does not represent a private network. Tenants can represent a customer in a service provider setting, an organization or domain in an enterprise setting, or just a convenient grouping of policies. The following figure provides an overview of the tenant portion of the management information tree (MIT).

Tenants

Tenants can be isolated from one another or can share resources. The primary elements that the tenant contains are filters, contracts, outside networks, bridge domains, Virtual Routing and Forwarding (VRF) instances, and application profiles that contain endpoint groups (EPGs). Entities in the tenant inherit its policies. VRFs are also known as contexts; each VRF can be associated with multiple bridge domains.

Tenants are logical containers for application policies. The fabric can contain multiple tenants. You must configure a tenant before you can deploy any Layer 4 to Layer 7 services. The ACI fabric supports IPv4, IPv6, and dual-stack configurations for tenant networking.

Endpoint Groups

An EPG is a managed object that is a named logical entity that contains a collection of endpoints. Endpoints are devices that are connected to the network directly or indirectly. They have an address (identity), a location, attributes (such as version or patch level), and can be physical or virtual. Knowing the address of an endpoint also enables access to all its other identity details. EPGs are fully decoupled from the physical and logical topology. Endpoint examples include servers, virtual machines, network-attached storage, or clients on the Internet. Endpoint membership in an EPG can be dynamic or static.

The ACI fabric can contain the following types of EPGs:

- Application endpoint group (fvAEPg)
- Layer 2 external outside network instance endpoint group (l2extInstP)
- Layer 3 external outside network instance endpoint group (l3extInstP)
- Management endpoint groups for out-of-band (mgmtOoB) or in-band (mgmtInB) access

EPGs contain endpoints that have common policy requirements such as security, virtual machine mobility (VMM), QoS, or Layer 4 to Layer 7 services. Rather than being configured and managed individually, endpoints are placed in an EPG and managed as a group.

Policies apply to EPGs, never to individual endpoints. An EPG can be statically configured by an administrator in the APIC, or dynamically configured by an automated system such as vCenter or OpenStack.

Regardless of how an EPG is configured, EPG policies are applied to the endpoints they contain.

WAN router connectivity to the fabric is an example of a configuration that uses a static EPG. To configure WAN router connectivity to the fabric, an administrator configures an l3extInstP EPG that includes any endpoints within an associated WAN subnet. The fabric learns of the EPG endpoints through a discovery process as the endpoints progress through their connectivity life cycle. Upon learning of the endpoint, the fabric applies the l3extInstP EPG policies accordingly. For example, when a WAN-connected client initiates a TCP session with a server within an application (fvAEPg) EPG, the l3extInstP EPG applies its policies to that client endpoint before the communication with the fvAEPg EPG web server begins. When the client-server TCP session ends and communication between the client and server terminates, that endpoint no longer exists in the fabric.

Virtual machine management connectivity to VMware vCenter is an example of a configuration that uses a dynamic EPG. Once the virtual machine management domain is configured in the fabric, vCenter triggers the dynamic configuration of EPGs that enable virtual machine endpoints to start up, move, and shut down as needed.

Bridge Domains and Subnets

A bridge domain (fvBD) represents a Layer 2 forwarding construct within the fabric. The following figure shows the location of bridge domains (BDs) in the management information tree (MIT) and their relation to other objects in the tenant.

A BD must be linked to a VRF (also known as a context or private network). It must have at least one subnet (fvSubnet) associated with it. The BD defines the unique Layer 2 MAC address space and a Layer 2 flood domain if such flooding is enabled.

While a VRF defines a unique IP address space, that address space can consist of multiple subnets. Those subnets are defined in one or more BDs that reference the corresponding VRF.

The options for a subnet under a BD or under an EPG are as follows:

- Public: the subnet can be exported to a routed connection.
- Private: the subnet applies only within its tenant.
- Shared: the subnet can be shared with and exported to multiple VRFs in the same tenant or across tenants as part of a shared service. An example of a shared service is a routed connection to an EPG present in another VRF in a different tenant. This enables traffic to pass in both directions across VRFs. An EPG that provides a shared service must have its subnet configured under that EPG (not under a BD), and its scope must be set to advertised externally and shared between VRFs.
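The subnet options above can be sketched as an fvSubnet element generated in Python. This is a minimal illustration under stated assumptions: the `scope` attribute and its comma-separated `public`, `private`, and `shared` values are not shown in this guide and should be checked against the APIC object model reference, and the rule that `public` and `private` cannot be combined is likewise an assumption. The helper name `subnet_element` is hypothetical.

```python
import xml.etree.ElementTree as ET

# Assumed scope keywords; verify against the APIC object model before use.
VALID_SCOPES = {"public", "private", "shared"}


def subnet_element(ip, scopes):
    """Return an fvSubnet element carrying the requested scope options."""
    scopes = set(scopes)
    unknown = scopes - VALID_SCOPES
    if unknown:
        raise ValueError(f"unknown scope(s): {sorted(unknown)}")
    if {"public", "private"} <= scopes:
        # Assumption: a subnet is either exported (public) or kept internal (private).
        raise ValueError("public and private cannot be combined")
    return ET.Element("fvSubnet", ip=ip, scope=",".join(sorted(scopes)))


# A shared-service subnet that is both advertised externally and shared between VRFs.
shared_subnet = subnet_element("10.1.2.1/24", ["shared", "public"])
shared_subnet_xml = ET.tostring(shared_subnet, encoding="unicode")
```

Sorting the scope keywords makes the generated attribute deterministic, which helps when comparing configurations.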

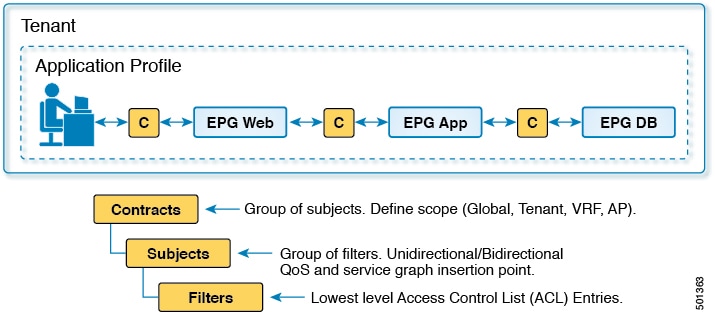

Labels, Filters, Aliases, and Subjects Govern EPG Communications

Label, subject, alias and filter managed-objects enable mixing and matching among EPGs and contracts so as to satisfy various applications or service delivery requirements. The following figure shows the location of application subjects and filters in the management information tree (MIT) and their relation to other objects in the tenant.

Contracts can contain multiple communication rules and multiple EPGs can both consume and provide multiple contracts. Labels control which rules apply when communicating between a specific pair of EPGs. A policy designer can compactly represent complex communication policies and re-use these policies across multiple instances of an application. For example, the sample policy in the Cisco Application Centric Infrastructure Fundamentals "Contract Scope Examples" chapter shows how the same contract uses labels, subjects, and filters to differentiate how communications occur among different EPGs that require HTTP or HTTPS.

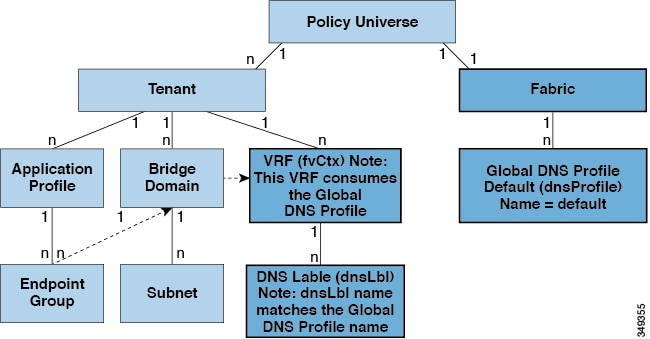

Labels, subjects, aliases and filters define EPG communications according to the following options:

-

Labels are managed objects with only one property: a name. Labels enable classifying which objects can and cannot communicate with one another. Label matching is done first. If the labels do not match, no other contract or filter information is processed. The label match attribute can be one of these values: at least one (the default), all, none, or exactly one. The Cisco Application Centric Infrastructure Fundamentals "Label Matching" chapter shows simple examples of all the label match types and their results.

Note

Labels can be applied to a variety of provider and consumer managed objects, including EPGs, contracts, bridge domains, DHCP relay policies, and DNS policies. Labels do not apply across object types; a label on an application EPG has no relevance to a label on a bridge domain.

-

Labels determine which EPG consumers and EPG providers can communicate with one another. Label matching determines which subjects of a contract are used with a given EPG provider or EPG consumer of that contract.

-

The two types of labels are as follows:

-

Subject labels that are applied to EPGs. Subject label matching enables EPGs to choose a subset of the subjects in a contract.

-

Provider/consumer labels that are applied to EPGs. Provider/consumer label matching enables consumer EPGs to choose their provider EPGs and vice versa.

-

-

Aliases are alternative names you can apply to objects, which can be changed, unlike the name.

-

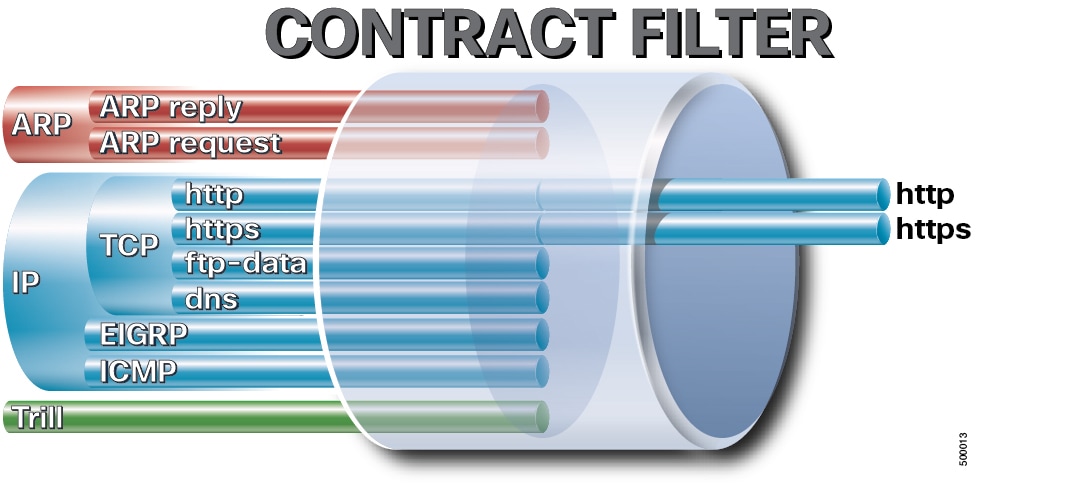

Filters are Layer 2 to Layer 4 fields, TCP/IP header fields such as Layer 3 protocol type, Layer 4 ports, and so forth. According to its related contract, an EPG provider dictates the protocols and ports in both the in and out directions. Contract subjects contain associations to the filters (and their directions) that are applied between EPGs that produce and consume the contract.

-

Subjects are contained in contracts. One or more subjects within a contract use filters to specify the type of traffic that can be communicated and how it occurs. For example, for HTTPS messages, the subject specifies the direction and the filters that specify the IP address type (for example, IPv4), the HTTP protocol, and the ports allowed. Subjects determine if filters are unidirectional or bidirectional. A unidirectional filter is used in one direction. Unidirectional filters define in or out communications but not the same for both. Bidirectional filters are the same for both; they define both in and out communications.
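The four label match types named above can be illustrated with a simplified set-based reading. This Python sketch is an interpretation for illustration only, not the APIC matching algorithm: the function name `labels_match`, the keyword strings, and the exact semantics of each type (in particular "all") are assumptions. The "Label Matching" chapter referenced above remains the authority on actual behavior.

```python
def labels_match(required, offered, match_type="at_least_one"):
    """Compare two label sets under a simplified reading of the match types.

    required: labels on the object doing the selecting
    offered:  labels on the candidate object
    """
    required, offered = set(required), set(offered)
    shared = required & offered
    if match_type == "at_least_one":
        return len(shared) >= 1
    if match_type == "all":
        # Assumed meaning: every required label is present on the other side.
        return required <= offered
    if match_type == "none":
        return not shared
    if match_type == "exactly_one":
        return len(shared) == 1
    raise ValueError(f"unknown match type: {match_type}")


# A consumer labeled "green" selects a provider labeled "green", not one labeled "red".
green_pair = labels_match({"green"}, {"green"})
mixed_pair = labels_match({"green"}, {"red"})
```

Because label matching is evaluated first, a False result here would mean that no subject or filter information is considered for that pair.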

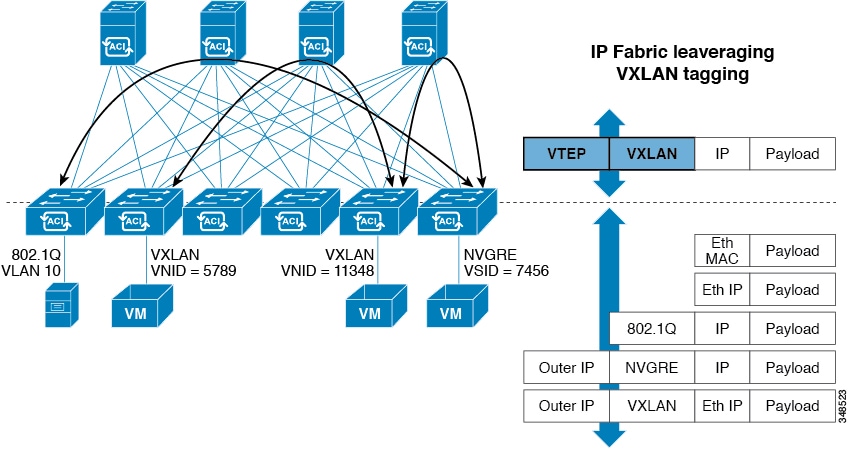

VXLAN in ACI

VXLAN is an industry-standard protocol that extends Layer 2 segments over Layer 3 infrastructure to build Layer 2 overlay logical networks. The ACI infrastructure Layer 2 domains reside in the overlay, with isolated broadcast and failure bridge domains. This approach allows the data center network to grow without risking creation of too large a failure domain.

All traffic in the ACI fabric is normalized as VXLAN packets. At ingress, ACI encapsulates external VLAN/VXLAN/NVGRE packets in a VXLAN packet. The following figure illustrates ACI encapsulation normalization.

ACI Encapsulation Normalization

Forwarding in the ACI fabric is not limited to or constrained by the encapsulation type or encapsulation overlay network. An ACI bridge domain forwarding policy can be defined to provide standard VLAN behavior where required.

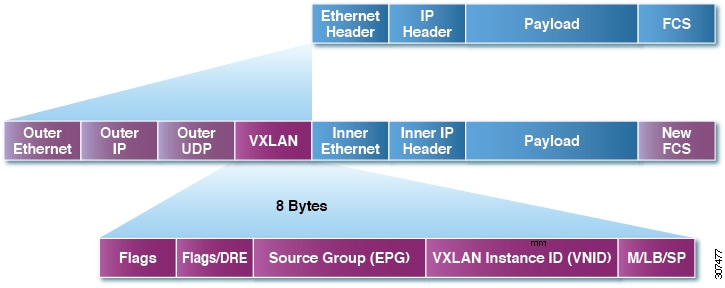

Because every packet in the fabric carries ACI policy attributes, ACI can consistently enforce policy in a fully distributed manner. ACI decouples application policy EPG identity from forwarding. The following figure illustrates how the ACI VXLAN header identifies application policy within the fabric.

ACI VXLAN Packet Format

The ACI VXLAN packet contains both Layer 2 MAC address and Layer 3 IP address source and destination fields, which enables highly efficient and scalable forwarding within the fabric. The ACI VXLAN packet header source group field identifies the application policy endpoint group (EPG) to which the packet belongs. The VXLAN Instance ID (VNID) enables forwarding of the packet through tenant virtual routing and forwarding (VRF) domains within the fabric. The 24-bit VNID field in the VXLAN header provides an expanded address space for up to 16 million unique Layer 2 segments in the same network. This expanded address space gives IT departments and cloud providers greater flexibility as they build large multitenant data centers.

VXLAN enables ACI to deploy Layer 2 virtual networks at scale across the fabric underlay Layer 3 infrastructure. Application endpoint hosts can be flexibly placed in the data center network without concern for the Layer 3 boundary of the underlay infrastructure, while maintaining Layer 2 adjacency in a VXLAN overlay network.
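The "16 million" figure follows from the 24-bit VNID: 2^24 = 16,777,216 distinct identifiers. The following Python sketch packs and unpacks the standard 8-byte VXLAN header defined in RFC 7348 to show where those 24 bits sit. It is illustrative only: it does not model the ACI-specific header fields described above, such as the source group, and the function names are hypothetical.

```python
import struct

VNI_BITS = 24
MAX_SEGMENTS = 1 << VNI_BITS  # 16,777,216 possible segment identifiers


def pack_vxlan_header(vni):
    """Pack a standard VXLAN header: 8 flag bits, 24 reserved, 24-bit VNI, 8 reserved."""
    if not 0 <= vni < MAX_SEGMENTS:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # I flag set: the VNI field is valid
    return struct.pack("!II", flags << 24, vni << 8)


def unpack_vni(header):
    """Extract the 24-bit VNI from an 8-byte VXLAN header."""
    _, second_word = struct.unpack("!II", header)
    return second_word >> 8


header = pack_vxlan_header(12780288)
recovered_vni = unpack_vni(header)
```

The value 12780288 is the VXLAN encapsulation number used in the filtered EPG query example earlier in this guide.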

In the leaf switches, policy configuration translates into zoning-rules. Each zoning-rule has a rule id, a primary key that is composed of scope (VRF VNID), srcEPG, dstEPG, and scope filterId. Actions can be permit, deny, redir, copy, or log. There is also a rule priority.

Zoning-rules are programmed in leaf switch policy TCAM (ternary content-addressable memory) hardware entries. TCAM is a specialized type of high-speed memory that searches its entire contents in a single clock cycle. The priority of each TCAM entry is based on the rule priority, the filter priority, and whether no-stats is set (that is, whether compression is enabled). The priority fields are laid out as follows:

| 8 bits | 1 bit | 1 bit | 6 bits |
|---|---|---|---|
| Rule priority | Low Priority (if set) | No-stats (if set) | Entry priority |
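The field widths in the table add up to 16 bits. As a rough illustration, the following Python sketch packs the four fields into one integer. It assumes that the table lists fields from most significant to least significant bit; the source does not state the bit order, and the function names are hypothetical.

```python
def pack_tcam_priority(rule_priority, low_priority, no_stats, entry_priority):
    """Combine the four fields into a 16-bit value (assumed MSB-first layout)."""
    if not 0 <= rule_priority < (1 << 8):
        raise ValueError("rule priority must fit in 8 bits")
    if not 0 <= entry_priority < (1 << 6):
        raise ValueError("entry priority must fit in 6 bits")
    return (
        (rule_priority << 8)
        | (int(bool(low_priority)) << 7)
        | (int(bool(no_stats)) << 6)
        | entry_priority
    )


def unpack_tcam_priority(value):
    """Split a 16-bit value back into (rule, low_priority, no_stats, entry)."""
    return (value >> 8) & 0xFF, bool(value & 0x80), bool(value & 0x40), value & 0x3F


packed = pack_tcam_priority(rule_priority=9, low_priority=False, no_stats=True, entry_priority=5)
fields = unpack_tcam_priority(packed)
```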

When the priority is the same, a rule with a deny action has a higher priority than a permit action.

When a VRF is in unenforced mode, there is an any-any permit rule that allows all traffic in the VRF scope by default.

When a VRF is in enforced mode, there is an any-any deny rule that disallows traffic by default. Contracts between EPGs must be configured to allow traffic that matches the specified filters.

By default, intra-EPG traffic is permitted but microsegmentation can be configured.
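The rule selection described above can be sketched as a small lookup. This Python model is a simplification for illustration: the `ZoningRule` class and `evaluate` function are hypothetical, only the permit and deny actions are modeled, and the convention that a smaller priority number takes precedence is an assumption not stated in this guide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ZoningRule:
    src_epg: str
    dst_epg: str
    filter_id: str
    action: str    # "permit" or "deny"
    priority: int  # assumption: a smaller number means higher precedence


def evaluate(rules, src_epg, dst_epg, filter_id, vrf_enforced=True):
    """Return the action applied to traffic from src_epg to dst_epg."""
    if not vrf_enforced:
        # Unenforced VRF: the any-any permit rule allows all traffic in scope.
        return "permit"
    matches = [
        rule for rule in rules
        if (rule.src_epg, rule.dst_epg, rule.filter_id) == (src_epg, dst_epg, filter_id)
    ]
    if not matches:
        # Enforced VRF: the any-any deny rule applies when no contract rule matches.
        return "deny"
    # At equal priority, a deny rule sorts ahead of a permit rule.
    best = min(matches, key=lambda rule: (rule.priority, rule.action != "deny"))
    return best.action


rules = [
    ZoningRule("web", "db", "https", "permit", 10),
    ZoningRule("web", "db", "https", "deny", 10),
    ZoningRule("app", "db", "sql", "permit", 10),
]
tie_result = evaluate(rules, "web", "db", "https")
```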

Contracts

In addition to EPGs, contracts (vzBrCP) are key objects in the policy model. EPGs can only communicate with other EPGs according to contract rules. The following figure shows the location of contracts in the management information tree and their relation to other objects in the tenant.

An administrator uses a contract to select the type(s) of traffic that can pass between EPGs, including the protocols and ports allowed. If there is no contract, inter-EPG communication is disabled by default. No contract is required for intra-EPG communication; intra-EPG communication is implicitly allowed by default.

You can also configure contract preferred groups that enable greater control of communication between EPGs in a VRF. If most of the EPGs in the VRF should have open communication, but a few should only have limited communication with the other EPGs, you can configure a combination of a contract preferred group and contracts with filters to control communication precisely.

Contracts govern the following types of endpoint group communications:

- Between ACI fabric application EPGs (fvAEPg), both intra-tenant and inter-tenant
- Between ACI fabric application EPGs and Layer 2 external outside network instance EPGs (l2extInstP)
- Between ACI fabric application EPGs and Layer 3 external outside network instance EPGs (l3extInstP)
- Between ACI fabric out-of-band (mgmtOoB) or in-band (mgmtInB) management EPGs

Contracts govern the communication between EPGs that are labeled providers, consumers, or both. EPG providers expose contracts with which a would-be consumer EPG must comply. The relationship between an EPG and a contract can be either a provider or consumer. When an EPG provides a contract, communication with that EPG can be initiated from other EPGs as long as the communication complies with the provided contract. When an EPG consumes a contract, the endpoints in the consuming EPG may initiate communication with any endpoint in an EPG that is providing that contract. An EPG can both provide and consume the same contract. An EPG can also provide and consume multiple contracts simultaneously.

Inheritance

To streamline associating contracts to new EPGs, you can enable an EPG to inherit all the (provided and consumed) contracts associated directly to another EPG in the same tenant. Contract inheritance can be configured for application, microsegmented, L2Out, and L3Out EPGs.

You can also configure contract inheritance for Inter-EPG contracts, both provided and consumed. You can enable an EPG to inherit all the contracts associated directly to another EPG.

Preferred Groups

There are two types of policy enforcements available for EPGs in a VRF with a contract preferred group configured:

- Included EPGs: EPGs can freely communicate with each other without contracts, if they have membership in a contract preferred group. This is based on the source-any-destination-any-permit default rule.
- Excluded EPGs: EPGs that are not members of preferred groups require contracts to communicate with each other. Otherwise, the default source-any-destination-any-deny rule applies.

The contract preferred group feature enables greater control of communication between EPGs in a VRF. If most of the EPGs in the VRF should have open communication, but a few should have limited communication with the other EPGs, you can configure a combination of a contract preferred group and contracts with filters to control inter-EPG communication precisely.
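The included and excluded cases above reduce to a simple decision. The following Python sketch is a conceptual model only: the function name, the EPG names, and the representation of contracts as unordered EPG pairs are all hypothetical simplifications that ignore filters and the provider and consumer direction.

```python
def can_communicate(epg_a, epg_b, preferred_group, contracts):
    """Decide whether two EPGs in one VRF may communicate.

    preferred_group: set of EPG names that are preferred group members
    contracts:       set of frozenset({epg, epg}) pairs joined by a contract
    """
    if epg_a in preferred_group and epg_b in preferred_group:
        # Included EPGs: the any-any permit default rule applies.
        return True
    # Excluded EPGs need a contract; otherwise the default deny rule applies.
    return frozenset((epg_a, epg_b)) in contracts


preferred = {"web", "app", "cache"}
contracts = {frozenset(("app", "db"))}

web_to_app = can_communicate("web", "app", preferred, contracts)  # both included
app_to_db = can_communicate("app", "db", preferred, contracts)    # excluded, has a contract
web_to_db = can_communicate("web", "db", preferred, contracts)    # excluded, no contract
```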

Optimize Contract Performance

You can configure bidirectional contracts that support more efficient hardware TCAM storage of contract data. With optimization enabled, contract statistics for both directions are aggregated.

TCAM Optimization is supported on the Cisco Nexus 9000 Series top of rack (TOR) switches with names ending with EX and FX, and later (for example, N9K-C93180LC-EX or N9K-C93180YC-FX).

To configure efficient TCAM contract data storage, you enable the following options:

- Mark the contracts to be applied in both directions between the provider and consumer.
- For filters with IP TCP or UDP protocols, enable the reverse port option.
- When configuring the contract subjects, enable the no stats directive.

About Copy Services

Unlike SPAN that duplicates all of the traffic, the Cisco Application Centric Infrastructure (ACI) copy services feature enables selectively copying portions of the traffic between endpoint groups, according to the specifications of the contract. Broadcast, unknown unicast and multicast (BUM), and control plane traffic that are not covered by the contract are not copied. In contrast, SPAN copies everything out of endpoint groups, access ports or uplink ports. Unlike SPAN, copy services do not add headers to the copied traffic. Copy service traffic is managed internally in the switch to minimize impact on normal traffic forwarding.

A copy service is configured as part of a Layer 4 to Layer 7 service graph template that specifies a copy cluster as the destination for the copied traffic. A copy service can tap into different hops within a service graph. For example, a copy service could select traffic between a consumer endpoint group and a firewall provider endpoint group, or between a server load balancer and a firewall. Copy clusters can be shared across tenants.

Copy services require you to do the following tasks:

- Identify the source and destination endpoint groups.
- Configure the contract that specifies what to copy according to the subject and what is allowed in the contract filter.
- Configure Layer 4 to Layer 7 copy devices that identify the target devices and specify the ports where they attach.
- Use the copy service as part of a Layer 4 to Layer 7 service graph template.
- Configure a device selection policy that specifies which device will receive the traffic from the service graph. When you configure the device selection policy, you specify the contract, service graph, copy cluster, and cluster logical interface that is in the copy device.

vzAny

The vzAny managed object provides a convenient way of associating all endpoint groups (EPGs) in a Virtual Routing and Forwarding (VRF) instance to one or more contracts (vzBrCP), instead of creating a separate contract relation for each EPG.

In the Cisco ACI fabric, EPGs can only communicate with other EPGs according to contract rules. A relationship between an EPG and a contract specifies whether the EPG provides the communications defined by the contract rules, consumes them, or both. By dynamically applying contract rules to all EPGs in a VRF, vzAny automates the process of configuring EPG contract relationships. Whenever a new EPG is added to a VRF, vzAny contract rules automatically apply. The vzAny one-to-all EPG relationship is the most efficient way of applying contract rules to all EPGs in a VRF.
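As a rough sketch of what a vzAny configuration might look like, the following Python code generates a VRF element with a vzAny child that provides and consumes contracts. The relation class names `vzRsAnyToProv` and `vzRsAnyToCons` are not shown in this guide; they are recalled from the APIC object model and should be verified there before use. The helper name `vzany_vrf` and the contract name `allow-dns` are hypothetical.

```python
import xml.etree.ElementTree as ET


def vzany_vrf(vrf_name, provided=(), consumed=()):
    """Build an fvCtx element whose vzAny child relates all EPGs to contracts."""
    ctx = ET.Element("fvCtx", name=vrf_name)
    vz_any = ET.SubElement(ctx, "vzAny")
    for contract in provided:
        # Assumed class name for "all EPGs in the VRF provide this contract".
        ET.SubElement(vz_any, "vzRsAnyToProv", tnVzBrCPName=contract)
    for contract in consumed:
        # Assumed class name for "all EPGs in the VRF consume this contract".
        ET.SubElement(vz_any, "vzRsAnyToCons", tnVzBrCPName=contract)
    return ctx


# One relation on vzAny replaces one relation per EPG in the VRF.
vrf = vzany_vrf("solarctx1", consumed=["allow-dns"])
vrf_xml = ET.tostring(vrf, encoding="unicode")
```

With this approach the number of contract relations stays constant as EPGs are added to the VRF, which is the efficiency the text describes.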

Taboos

While the normal processes for ensuring security still apply, the ACI policy model aids in assuring the integrity of whatever security practices are employed. In the ACI policy model approach, all communications must conform to these conditions:

- Communication is allowed only based on contracts, which are managed objects in the model. If there is no contract, inter-EPG communication is disabled by default.
- No direct access to the hardware; all interaction is managed through the policy model.

Taboo contracts can be used to deny specific traffic that is otherwise allowed by contracts. The traffic to be dropped matches a pattern (such as any EPG, a specific EPG, or traffic matching a filter). Taboo rules are unidirectional, denying any matching traffic coming toward an EPG that provides the contract.

With Cisco APIC Release 3.2(x) and switches with names that end in EX or FX, you can alternatively use a subject Deny action or Contract or Subject Exception in a standard contract to block traffic with specified patterns.

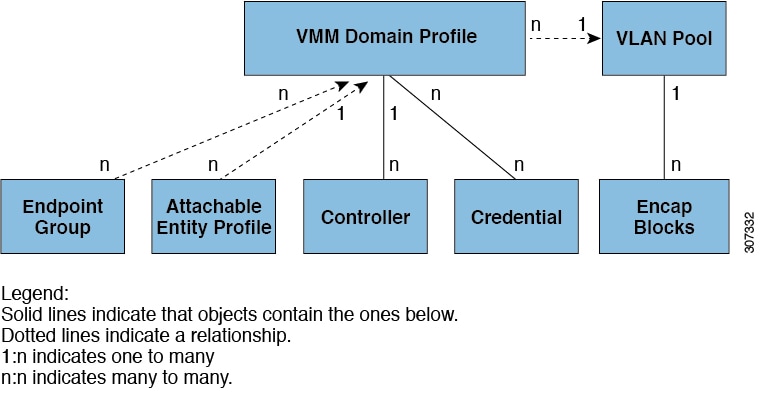

Attachable Entity Profiles Automate Assigning VLANs to EPGs

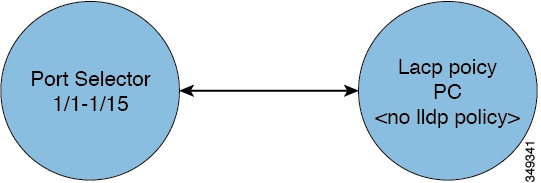

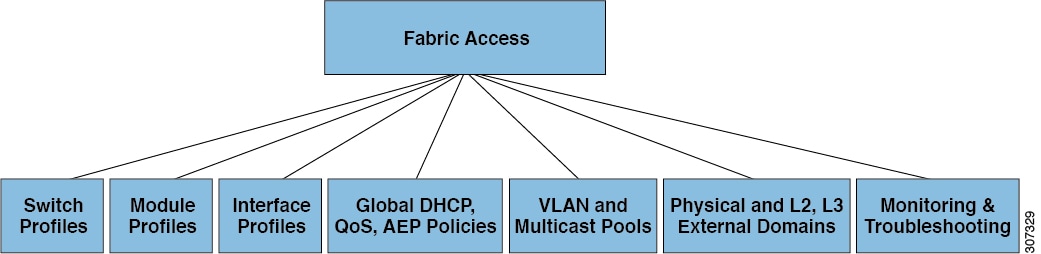

While tenant network policies are configured separately from fabric access policies, tenant policies are not activated unless their underlying access policies are in place. Fabric access external-facing interfaces connect to external devices such as virtual machine controllers and hypervisors, hosts, routers, or Fabric Extenders (FEXs). Access policies enable an administrator to configure port channels and virtual port channels, protocols such as LLDP, CDP, or LACP, and features such as monitoring or diagnostics.

Association of Endpoint Groups with Access Policies

In the policy model, EPGs are tightly coupled with VLANs. For traffic to flow, an EPG must be deployed on a leaf port with a VLAN in a physical, VMM, L2Out, L3Out, or Fibre Channel domain.

In the policy model, the domain profile associated to the EPG contains the VLAN instance profile. The domain profile contains both the VLAN instance profile (VLAN pool) and the attachable Access Entity Profile (AEP), which are associated directly with application EPGs. The AEP deploys the associated application EPGs to all the ports to which it is attached, and automates the task of assigning VLANs. While a large data center could easily have thousands of active virtual machines provisioned on hundreds of VLANs, the ACI fabric can automatically assign VLAN IDs from VLAN pools. This saves a tremendous amount of time, compared with trunking down VLANs in a traditional data center.

The ACI fabric provides multiple attachment points that connect through leaf ports to various external entities such as bare metal servers, virtual machine hypervisors, Layer 2 switches (for example, the Cisco UCS fabric interconnect), or Layer 3 routers (for example Cisco Nexus 7000 Series switches). These attachment points can be physical ports, FEX ports, port channels, or a virtual port channel (vPC) on leaf switches.

An Attachable Entity Profile (AEP) represents a group of external entities with similar infrastructure policy requirements. The infrastructure policies consist of physical interface policies that configure various protocol options, such as Cisco Discovery Protocol (CDP), Link Layer Discovery Protocol (LLDP), or Link Aggregation Control Protocol (LACP).

An AEP is required to deploy VLAN pools on leaf switches. Encapsulation blocks (and associated VLANs) are reusable across leaf switches. An AEP implicitly provides the scope of the VLAN pool to the physical infrastructure.

A virtual machine manager (VMM) domain automatically derives physical interface policies from the interface policy groups of an AEP.

An override policy at the AEP can be used to specify a different physical interface policy for a VMM domain. This policy is useful in scenarios where a VM controller is connected to the leaf switch through an intermediate Layer 2 node, and a different policy is desired at the leaf switch and VM controller physical ports. For example, you can configure LACP between a leaf switch and a Layer 2 node. At the same time, you can disable LACP between the VM controller and the Layer 2 switch by disabling LACP under the AEP override policy.

Microsegmentation

Microsegmentation associates endpoints from multiple EPGs into a microsegmented EPG according to virtual machine attributes, IP address, or MAC address. Virtual machine attributes include: VNic domain name, VM identifier, VM name, hypervisor identifier, VMM domain, datacenter, operating system, or custom attribute.

For any EPG, the ACI fabric ingress leaf switch classifies packets into an EPG according to the policies associated with the ingress port. Microsegmented EPGs apply policies to individual virtual or physical endpoints that are derived based on the VM attribute, MAC address, or IP address specified in the microsegmented EPG policy.

Intra-EPG Endpoint Isolation

Intra-EPG endpoint isolation policies provide full isolation for virtual or physical endpoints; no communication is allowed between endpoints in an EPG that is operating with isolation enforced. Isolation enforced EPGs reduce the number of EPG encapsulations required when many clients access a common service but are not allowed to communicate with each other.

An EPG is isolation enforced for all ACI network domains or none. While the ACI fabric implements isolation directly to connected endpoints, switches connected to the fabric are made aware of isolation rules according to a private VLAN (PVLAN) tag.

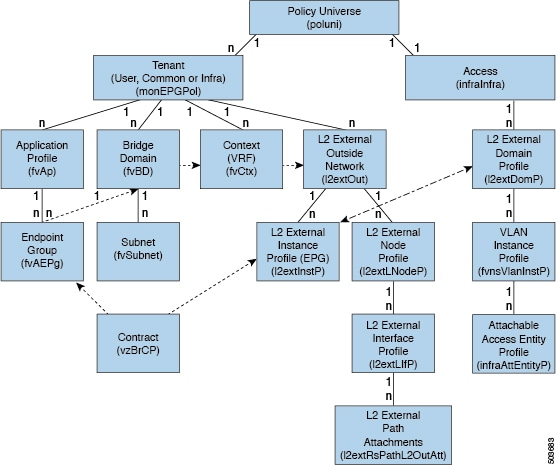

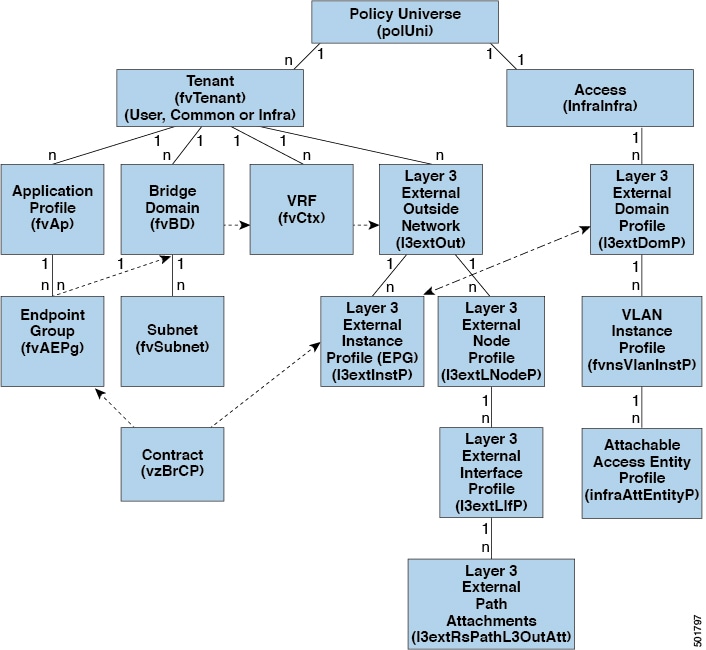

Outside Networks

Outside network policies control connectivity to the outside. A tenant can contain multiple outside network objects. The following figure shows the location of outside networks in the management information tree (MIT) and their relation to other objects in the tenant.

Outside network policies specify the relevant Layer 2 (l2extOut) or Layer 3 (l3extOut) properties that control communications between an outside public or private network and the ACI fabric. External devices, such as routers that connect to the WAN and enterprise core, or existing Layer 2 switches, connect to the front panel interface of a leaf switch. The leaf switch that provides such connectivity is known as a border leaf. The border leaf switch interface that connects to an external device can be configured as either a bridged or routed interface. In the case of a routed interface, static or dynamic routing can be used. The border leaf switch can also perform all the functions of a normal leaf switch.

Tenant Policy Example XML Code

The table below lists commonly used ACI policy model object and class prefixes and property IDs:

| Common Name | Prefix-Property | Module | Class | Parent Class | Example |
|---|---|---|---|---|---|
| Tenant | tn-name | fv | Tenant | Uni | tn-Cisco |
| Context/VRF | ctx-name | fv | Ctx | Tenant | ctx-CiscoVRF |
| Bridge Domain | BD-name | fv | BD | Tenant | BD-Cisco |
| Subnet | subnet-ip | fv | Subnet | BD | subnet-10.1.2.1/24 |
| App Profile | ap-name | fv | Ap | Tenant | ap-IT-test |
| EPG | epg-name | fv | AEPg | Ap | epg-Database |
| Client Endpoint | cep-name | fv | CEp | AEPg | cep-0000.1111.2222 |
| IP Address | ip-addr | fv | Ip | CEp | ip-10.1.2.20 |
| L3 External | out-name | l3ext | Out | Tenant | out-Corporate |
| Filter | flt-name | vz | Filter | Tenant | flt-HTTP |
| Contract | brc-name | vz | BrCP | Tenant | brc-Web_Services |
| Contract Subject | subj-name | vz | Subj | BrCP | subj-HTTP |
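The prefixes in the table combine with the parent and child relationships to form distinguished names. The following Python sketch joins prefix and name pairs under the policy universe root `uni`, which appears in the REST query examples earlier in this guide. The helper names and the `PREFIXES` mapping are hypothetical, and the sketch deliberately leaves out subnets, whose names contain a slash and would need special handling.

```python
# Prefixes taken from the table above, keyed by a hypothetical short label.
PREFIXES = {
    "tenant": "tn",
    "vrf": "ctx",
    "bridge_domain": "BD",
    "app_profile": "ap",
    "epg": "epg",
    "filter": "flt",
    "contract": "brc",
    "subject": "subj",
}


def rn(kind, name):
    """Build one relative name, for example rn('tenant', 'Cisco') -> 'tn-Cisco'."""
    return f"{PREFIXES[kind]}-{name}"


def build_dn(*relative_names):
    """Join relative names under the policy universe root."""
    return "/".join(("uni",) + relative_names)


epg_dn = build_dn(rn("tenant", "Cisco"), rn("app_profile", "IT-test"), rn("epg", "Database"))
subject_dn = build_dn(rn("tenant", "Cisco"), rn("contract", "Web_Services"), rn("subject", "HTTP"))
```

A DN built this way can be used as the `dn` portion of an `/api/mo/` query URL.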

The example code for a basic ACI tenant configuration is below.

<polUni>

<fvTenant name="solar">

<vzFilter name="Http">

<vzEntry name="e1" etherT="ipv4" prot="tcp" dFromPort="80" dToPort="80" />

</vzFilter>

<vzFilter name="Https">

<vzEntry name="e1" etherT="ipv4" prot="tcp" dFromPort="443" dToPort="443" />

</vzFilter>

<vzBrCP name="webCtrct">

<vzSubj name="http" revFltPorts="true" provmatchT="All">

<vzRsSubjFiltAtt tnVzFilterName="Http" />

<vzRsSubjGraphAtt graphName="G1" termNodeName="TProv" />

<vzProvSubjLbl name="openProv" />

<vzConsSubjLbl name="openCons" />

</vzSubj>

<vzSubj name="https" revFltPorts="true" provmatchT="All">

<vzProvSubjLbl name="secureProv" />

<vzConsSubjLbl name="secureCons" />

<vzRsSubjFiltAtt tnVzFilterName="Https" />

<vzRsOutTermGraphAtt graphName="G2" termNodeName="TProv" />

</vzSubj>

</vzBrCP>

<fvCtx name="solarctx1" />

<fvBD name="solarBD1">

<fvRsCtx tnFvCtxName="solarctx1" />

<fvSubnet ip="11.22.22.20/24">

<fvRsBDSubnetToProfile tnL3extOutName="rout1" tnRtctrlProfileName="profExport" />

</fvSubnet>

<fvSubnet ip="11.22.23.211/24">

<fvRsBDSubnetToProfile tnL3extOutName="rout1" tnRtctrlProfileName="profExport" />

</fvSubnet>

</fvBD>

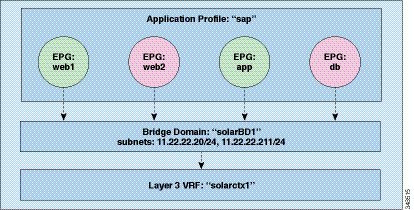

<fvAp name="sap">

<fvAEPg name="web1">

<fvRsBd tnFvBDName="solarBD1" />

<fvRsDomAtt tDn="uni/vmmp-VMware/dom-mininet" />

<fvRsProv tnVzBrCPName="webCtrct" matchT="All">

<vzProvSubjLbl name="openProv" />

<vzProvSubjLbl name="secureProv" />

<vzProvLbl name="green" />

</fvRsProv>

</fvAEPg>

<fvAEPg name="web2">

<fvRsBd tnFvBDName="solarBD1" />

<fvRsDomAtt tDn="uni/vmmp-VMware/dom-mininet" />

<fvRsProv tnVzBrCPName="webCtrct" matchT="All">

<vzProvSubjLbl name="secureProv" />

<vzProvLbl name="red" />

</fvRsProv>

</fvAEPg>

<fvAEPg name="app">

<fvRsBd tnFvBDName="solarBD1" />

<fvRsDomAtt tDn="uni/vmmp-VMware/dom-mininet" />

<fvRsCons tnVzBrCPName="webCtrct">

<vzConsSubjLbl name="openCons" />

<vzConsSubjLbl name="secureCons" />

<vzConsLbl name="green" />

</fvRsCons>

</fvAEPg>

<fvAEPg name="db">

<fvRsBd tnFvBDName="solarBD1" />

<fvRsDomAtt tDn="uni/vmmp-VMware/dom-mininet" />

<fvRsCons tnVzBrCPName="webCtrct">

<vzConsSubjLbl name="secureCons" />

<vzConsLbl name="red" />

</fvRsCons>

</fvAEPg>

</fvAp>

</fvTenant>

</polUni>
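To see at a glance which EPGs provide and consume the contract, the example can be read with a standard XML parser. The sketch below uses Python's `xml.etree.ElementTree` on a trimmed copy of the tenant (filters, labels, and graph references are omitted for brevity). The `summarize_epgs` function name and the shape of its result are illustrative assumptions, not part of any APIC API.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the tenant example above; only EPG relations are kept.
TENANT_XML = """
<polUni>
  <fvTenant name="solar">
    <fvAp name="sap">
      <fvAEPg name="web1">
        <fvRsBd tnFvBDName="solarBD1" />
        <fvRsProv tnVzBrCPName="webCtrct" matchT="All" />
      </fvAEPg>
      <fvAEPg name="web2">
        <fvRsBd tnFvBDName="solarBD1" />
        <fvRsProv tnVzBrCPName="webCtrct" matchT="All" />
      </fvAEPg>
      <fvAEPg name="app">
        <fvRsBd tnFvBDName="solarBD1" />
        <fvRsCons tnVzBrCPName="webCtrct" />
      </fvAEPg>
      <fvAEPg name="db">
        <fvRsBd tnFvBDName="solarBD1" />
        <fvRsCons tnVzBrCPName="webCtrct" />
      </fvAEPg>
    </fvAp>
  </fvTenant>
</polUni>
"""


def summarize_epgs(xml_text):
    """Map each EPG name to its bridge domain and provided/consumed contracts."""
    root = ET.fromstring(xml_text.strip())
    summary = {}
    for epg in root.iter("fvAEPg"):
        bd = epg.find("fvRsBd")
        summary[epg.get("name")] = {
            "bd": bd.get("tnFvBDName") if bd is not None else None,
            "provides": [p.get("tnVzBrCPName") for p in epg.findall("fvRsProv")],
            "consumes": [c.get("tnVzBrCPName") for c in epg.findall("fvRsCons")],
        }
    return summary


epg_summary = summarize_epgs(TENANT_XML)
for name, info in epg_summary.items():
    print(name, info)
```

In this example, web1 and web2 provide webCtrct while app and db consume it; whether a given provider and consumer can actually communicate also depends on the labels that the trimmed copy leaves out.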

Tenant Policy Example XML Code Explained

This section contains a detailed explanation of the tenant policy example.
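One detail in the walkthrough that follows is that the ip attribute of an fvSubnet element carries both the default gateway address and the prefix length. As a rough sketch of that compact notation, Python's standard `ipaddress` module can split such a value into its parts and check two subnets for overlap. The `decode_subnet` helper is an illustrative name, and the two addresses are the gateway values that the walkthrough gives for its two subnets.

```python
import ipaddress


def decode_subnet(ip_attr):
    """Split an fvSubnet ip value into gateway, network address, and netmask."""
    iface = ipaddress.ip_interface(ip_attr)
    return {
        "gateway": str(iface.ip),
        "network": str(iface.network.network_address),
        "netmask": str(iface.network.netmask),
    }


first = decode_subnet("11.22.22.20/24")
print(first)
# {'gateway': '11.22.22.20', 'network': '11.22.22.0', 'netmask': '255.255.255.0'}

# Subnets in the same context must not overlap; these two do not.
net_a = ipaddress.ip_interface("11.22.22.20/24").network
net_b = ipaddress.ip_interface("11.22.23.211/24").network
subnets_overlap = net_a.overlaps(net_b)
print(subnets_overlap)  # False
```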

<polUni>

<!-- polUni contains all the tenant-managed objects where the policy for each tenant is defined. -->

<fvTenant name="solar">

<!-- Tenant. The <fvTenant> tag identifies the beginning of the tenant element. The tenant name must be unique in the system. The primary elements that a tenant contains are filters, contracts, outside networks, bridge domains, and application profiles that contain EPGs. -->

<vzFilter name="Http">

<!-- Filter. The filter element starts with a <vzFilter> tag and contains entries that are indicated with a <vzEntry> tag. This example defines the "Http" and "Https" filters. The first attribute of the filter is its name; the value of the name attribute is a string that is unique within the tenant, so the same names can be reused in different tenants. These filters are used in the subject elements within contracts later in the example. -->

<vzEntry name="e1"

etherT="ipv4"

prot="tcp"

dFromPort="80"

dToPort="80"/>

</vzFilter>

<vzFilter name="Https">

<vzEntry

name="e1"

etherT="ipv4"

prot="tcp"

dFromPort="443"

dToPort="443"/>

</vzFilter>

<vzBrCP name="webCtrct">

<vzSubj name="http" revFltPorts="true" provmatchT="All">

<vzRsSubjFiltAtt tnVzFilterName="Http"/>

<vzRsSubjGraphAtt graphName="G1" termNodeName="TProv"/>

<vzProvSubjLbl name="openProv"/>

<vzConsSubjLbl name="openCons"/>

</vzSubj>

<!-- Contract. The contract element is tagged vzBrCP and it has a name attribute. Contracts are the policy elements between EPGs. They contain all of the filters that are applied between EPGs that provide and consume the contract. Refer to the object model reference documentation for other attributes that can be used in the contract element. This example has one contract named webCtrct. The contract contains multiple subject elements, where each subject contains a set of filters. In this example, the two subjects are http and https. -->

<vzSubj name="https" revFltPorts="true" provmatchT="All">

<vzProvSubjLbl name="secureProv"/>

<vzConsSubjLbl name="secureCons"/>

<vzRsSubjFiltAtt tnVzFilterName="Https"/>

<vzRsOutTermGraphAtt graphName="G2" termNodeName="TProv"/>

</vzSubj>

</vzBrCP>

<!-- Subject. The subject element starts with the tag vzSubj and has three attributes: name, revFltPorts, and matchT (written as provmatchT in this example). The name is simply the ASCII name of the subject. revFltPorts is a flag that indicates that the Layer 4 source and destination ports in the filters of this subject should be used as specified in the filter description in the forward direction (that is, from the consumer EPG to the provider EPG), and should be swapped for the reverse direction. In this example, the “http” subject contains the “Http” filter, which defines TCP destination port 80 and does not specify the source port. Because the revFltPorts flag is set to true, the policy is TCP destination port 80 and any source port for traffic from the consumer to the provider, and TCP source port 80 and any destination port for traffic from the provider to the consumer. The assumption is that the consumer initiates the TCP connection to the provider (the consumer is the client and the provider is the server).

The default value for the revFltPorts attribute is false if it is not specified. -->

<fvCtx name="solarctx1"/>

<!-- VRF (context). The context (VRF) is identified by the fvCtx tag and contains a name attribute. A tenant can contain multiple contexts. For this example, the tenant uses one context named “solarctx1”. The name must be unique within the tenant. The context defines a Layer 3 address domain. All of the endpoints within the Layer 3 domain must have unique IPv4 or IPv6 addresses because it is possible to directly forward packets between these devices if the policy allows it. A context is equivalent to a virtual routing and forwarding (VRF) instance in the networking world. While a context defines a unique IP address space, the corresponding subnets are defined within bridge domains. Each bridge domain is then associated with a context. -->

<fvBD name="solarBD1">

<fvRsCtx tnFvCtxName="solarctx1" />

<fvSubnet ip="11.22.22.20/24">

<fvRsBDSubnetToProfile tnL3extOutName="rout1" tnRtctrlProfileName="profExport"/>

</fvSubnet>

<fvSubnet ip="11.22.23.211/24">

<fvRsBDSubnetToProfile tnL3extOutName="rout1" tnRtctrlProfileName="profExport"/>

</fvSubnet>

</fvBD>

<!-- Bridge Domain. The bridge domain element is identified with the fvBD tag and has a name attribute. Within the bridge domain element, subnets are defined and a reference is made to the corresponding Layer 3 context. Each bridge domain must be linked to a context and have at least one subnet. This example uses one bridge domain named “solarBD1”. The “solarctx1” context is referenced by using the element tagged fvRsCtx, whose tnFvCtxName attribute is given the value “solarctx1”. This name comes from the context defined above.

The subnets are contained within the bridge domain, and a bridge domain can contain multiple subnets. This example defines two subnets. All of the addresses used within a bridge domain must fall into one of the address ranges defined by the subnets. However, a subnet can also be a supernet, which is a very large subnet that includes many addresses that might never be used. Specifying one giant subnet that covers all current and future addresses can simplify the bridge domain specification. However, different subnets must not overlap within a bridge domain or with subnets defined in other bridge domains that are associated with the same context. Subnets can overlap with subnets that are associated with other contexts.

The subnets in this example are 11.22.22.xx/24 and 11.22.23.xx/24. The full 32 bits of the address are given even though the mask indicates that only 24 are used, because the ip attribute also specifies the IP address of the router (default gateway) for that subnet. For the first subnet, the default gateway is 11.22.22.20; for the second subnet, it is 11.22.23.211.

The entry 11.22.22.20/24 is a compact form of the following:

- Subnet: 11.22.22.0

- Subnet Mask: 255.255.255.0

- Default gateway: 11.22.22.20

-->

<fvAp name="sap">

<!-- Application Profile. The start of the application profile is indicated by the fvAp tag, which has a name attribute. The application profile is a container that holds the EPGs. EPGs can communicate with other EPGs in the same application profile and with EPGs in other application profiles. The application profile is simply a convenient container that is used to hold multiple EPGs that are logically related to one another. They can be organized by the application they provide such as “sap”, by the function they provide such as “infrastructure”, by where they are in the structure of the data center such as “DMZ”, or by whatever organizing principle the administrator chooses to use. The primary object that the application profile contains is an endpoint group (EPG). In this example, the “sap” application profile contains four EPGs: web1, web2, app, and db. -->

<fvAEPg name="web1">

<!-- EPGs. EPGs begin with the tag fvAEPg and have a name attribute. The EPG object is where labels are defined that govern what policies are applied and which other EPGs can communicate with this EPG. It also contains a reference to the bridge domain that the endpoints within the EPG are associated with, as well as the virtual machine manager (VMM) domain they are associated with. VMM allows virtual machine mobility between two VM servers instantaneously with no application downtime. -->

<fvRsBd tnFvBDName="solarBD1" />

<fvRsDomAtt tDn="uni/vmmp-VMware/dom-mininet" />

<!-- EPGs. The fvRsBd element within the EPG specifies the bridge domain with which it is associated. The bridge domain is identified by the value of the tnFvBDName attribute. This EPG is associated with the “solarBD1” bridge domain named in the “Bridge Domain” section above. The binding to the bridge domain is used by the system to specify what the default gateway address should be for the endpoints in this EPG. It does not imply that the endpoints are all in the same subnet or that they can only communicate through bridging. Whether an endpoint’s packets are bridged or routed is determined by whether the source endpoint sends the packet to its default gateway or directly to the final destination. If it sends the packet to the default gateway, the packet is routed.

The VMM domain used by this EPG is identified by the fvRsDomAtt tag. This element references the VMM domain object defined elsewhere. The VMM domain object is identified by its tDn attribute. This example shows only one VMM domain, called “uni/vmmp-VMware/dom-mininet”. -->

<fvRsProv tnVzBrCPName="webCtrct" matchT="All">

<vzProvSubjLbl name="openProv"/>

<vzProvSubjLbl name="secureProv"/>

<vzProvLbl name="green"/>

</fvRsProv>

</fvAEPg>

<!-- EPGs. The next element in the “web1” EPG defines which contract this EPG provides and is identified by the fvRsProv tag. If “web1” were to provide multiple contracts, there would be multiple fvRsProv elements. Similarly, if it were to consume one or more contracts, there would be fvRsCons elements as well. The fvRsProv element has a required attribute that is the name of the contract that is being provided. “web1” is providing the contract “webCtrct”.

The next attribute is the matchT attribute, which has the same semantics for matching provider or consumer labels as it did in the contract for subject labels (it can take on the values of All, AtLeastOne, or None). This criterion applies to the provider labels as they are compared to the corresponding consumer labels. A match of the labels implies that the consumer and provider can communicate if the contract between them allows it. In other words, the contract has to allow communication, and the consumer and provider labels have to match using the match criteria specified at the provider. The consumer has no corresponding match criteria. The match type used is always determined by the provider.

In the “web1” EPG, two provider subject labels, openProv and secureProv, are specified to match with the “http” and “https” subjects of the “webCtrct” contract. One provider label, “green”, is specified with a match criterion of All; it matches the same label in the “app” EPG. -->

<fvAEPg name="web2">

<fvRsBd tnFvBDName="solarBD1" />

<fvRsDomAtt tDn="uni/vmmp-VMware/dom-mininet" />

<fvRsProv tnVzBrCPName="webCtrct" matchT="All">

<vzProvSubjLbl name="secureProv"/>

<vzProvLbl name="red"/>

</fvRsProv>

</fvAEPg>

<!-- EPGs. “web2” is very similar to “web1” except that there is only one vzProvSubjLbl and the labels themselves are different. -->

<!-- Labels. Inside the provider element, fvRsProv, an administrator needs to specify the labels that are to be used. There are two kinds of labels: provider labels and provider subject labels. The provider labels, vzProvLbl, are used to match consumer labels in other EPGs that use the matchT criteria described earlier. The provider subject labels, vzProvSubjLbl, are used to match the subject labels that are specified in the contract. The only attribute of the label is its name attribute. -->

<fvAEPg name="app">

<fvRsBd tnFvBDName="solarBD1" />

<fvRsDomAtt tDn="uni/vmmp-VMware/dom-mininet" />

<fvRsCons tnVzBrCPName="webCtrct">

<vzConsSubjLbl name="openCons"/>

<vzConsSubjLbl name="secureCons"/>