Table Of Contents

About Cisco Validated Design (CVD) Program

Cisco Solution for EMC VSPEX End User Computing for 500 Citrix XenDesktop 5.6 Users

1.1 Solution Components Benefits

1.1.1 Benefits of Cisco Unified Computing System

1.1.2 Benefits of Nexus Switching

1.1.3 Benefits of EMC VNX Family of Storage Controllers

1.1.4 Benefits of VMware ESXi 5.0

1.1.5 Benefits of Citrix XenDesktop and Provisioning Server

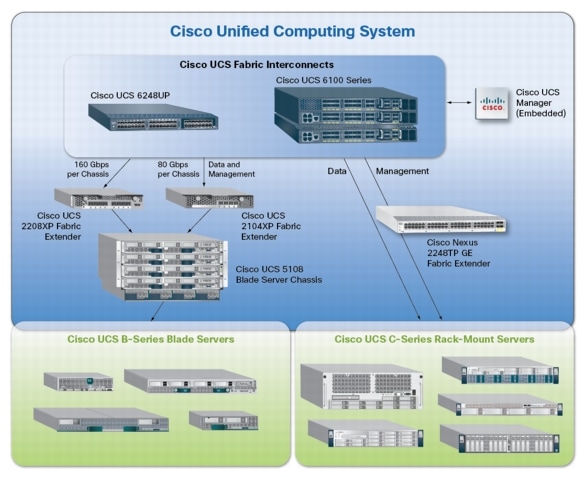

4.1 Cisco Unified Computing System (UCS)

4.1.1 Cisco Unified Computing System Components

4.1.1 Cisco Fabric Interconnects (Managed FC Variant Only)

4.1.2 Cisco UCS C220 M3 Rack-Mount Server

4.1.3 Cisco UCS Virtual Interface Card (VIC) Converged Network Adapter

4.2.1 Enhancements in Citrix XenDesktop 5.6 Feature Pack 1

4.2.3 High-Definition User Experience Technology

4.2.4 Citrix XenDesktop Hosted VM Overview

4.2.5 Citrix XenDesktop Hosted Shared Desktop Overview

4.2.6 Citrix Machine Creation Services

4.3.1 EMC VNX5300 Used in Testing

4.5 Modular Virtual Desktop Infrastructure Technical Overview

4.5.2 Understanding Desktop User Groups

4.5.3 Understanding Applications and Data

4.5.4 Project Planning and Solution Sizing Sample Questions

4.5.6 The Solution: A Unified, Pre-Tested and Validated Infrastructure

4.6 Cisco Networking Infrastructure

4.6.1 Cisco Nexus 5548UP Switch (Unmanaged FC Variant Only)

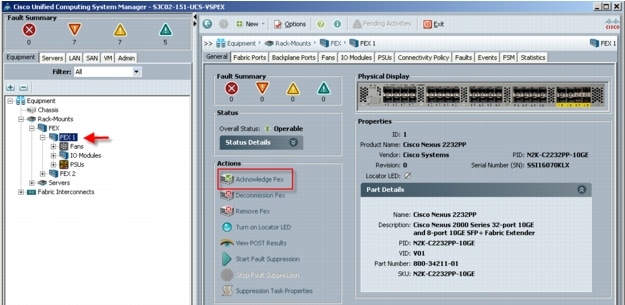

4.6.2 Cisco Nexus 2232PP Fabric Extender (Managed FC Variant Only)

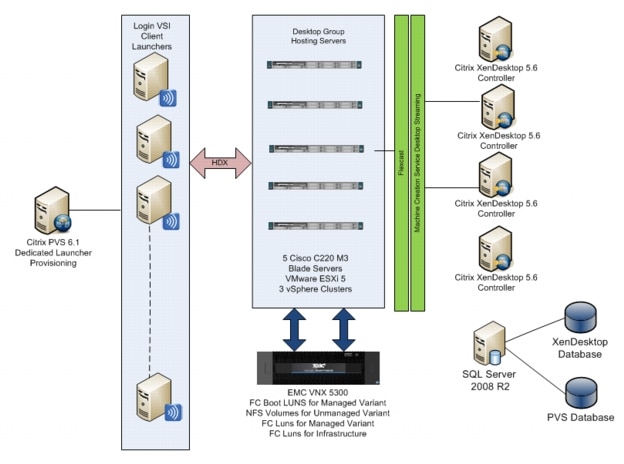

5 Architecture and Design of XenDesktop 5.6 on Cisco Unified Computing System and EMC VNX Storage

5.2 Hosted VDI Design Fundamentals

5.2.2 XenDesktop 5.6 Desktop Broker

5.3 Designing a Citrix XenDesktop 5.6 Deployment

5.4 Storage Architecture Design

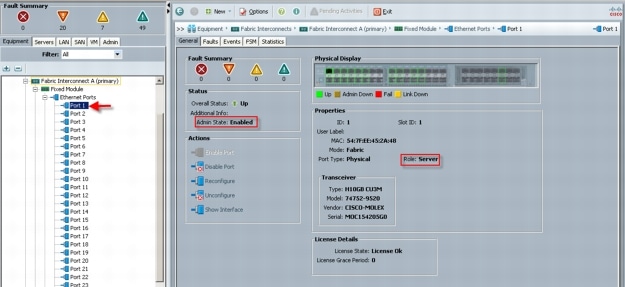

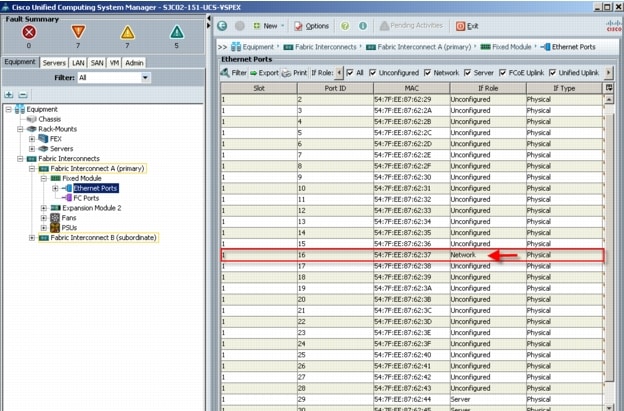

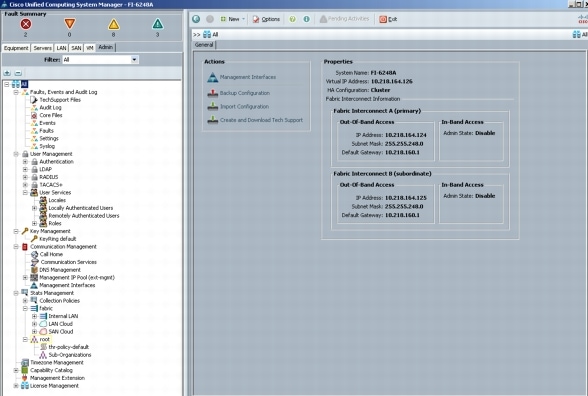

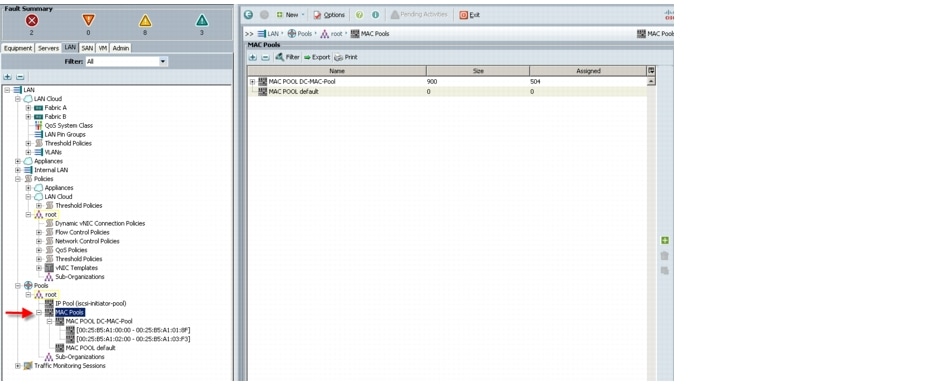

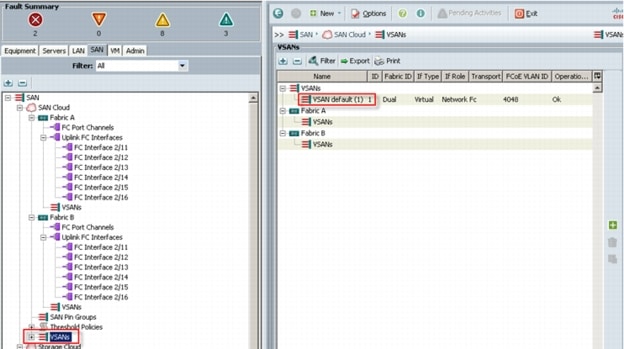

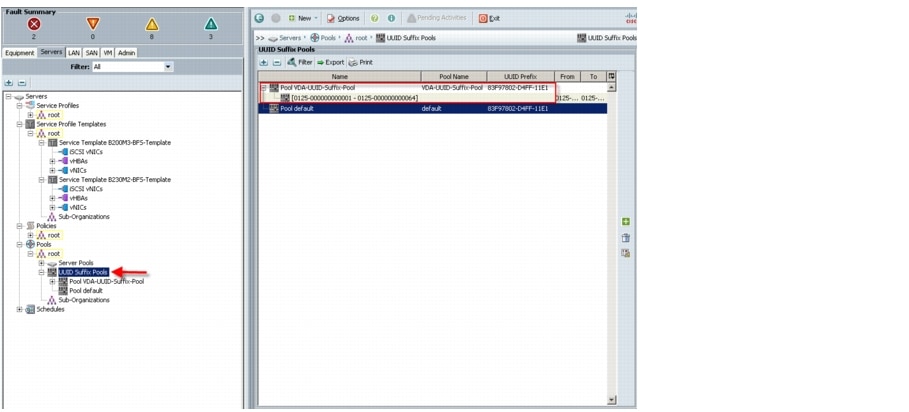

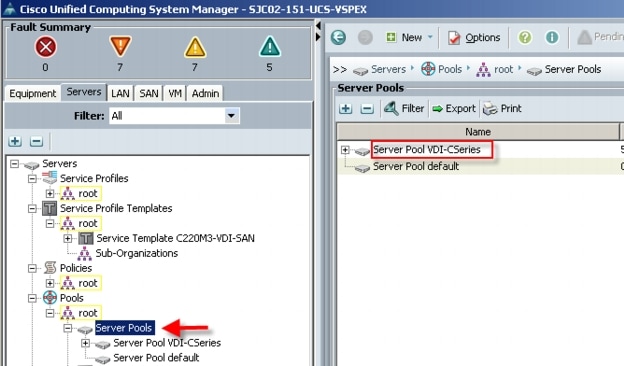

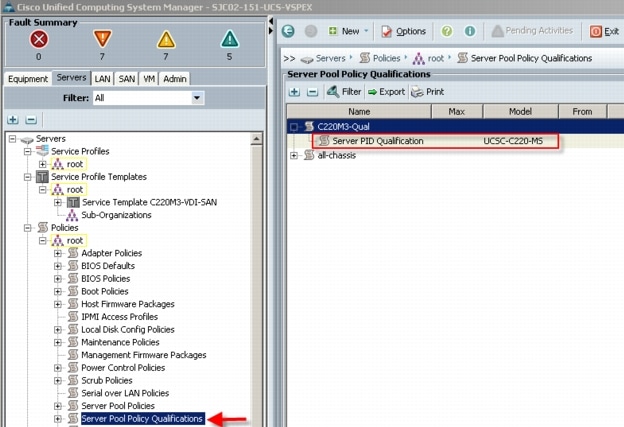

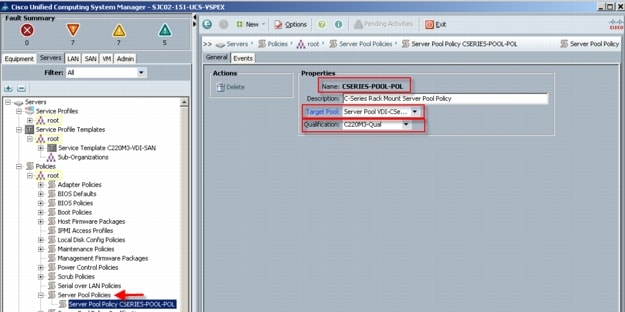

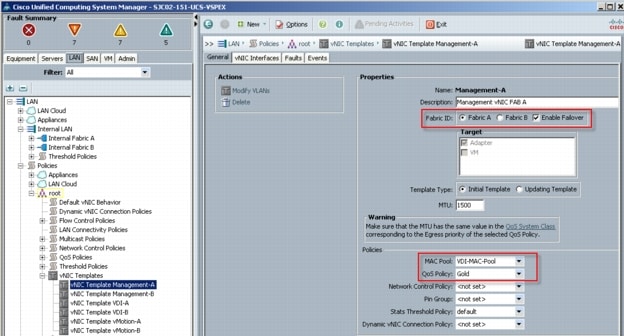

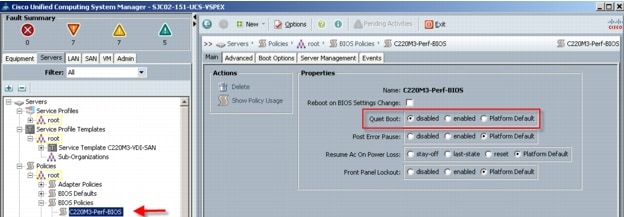

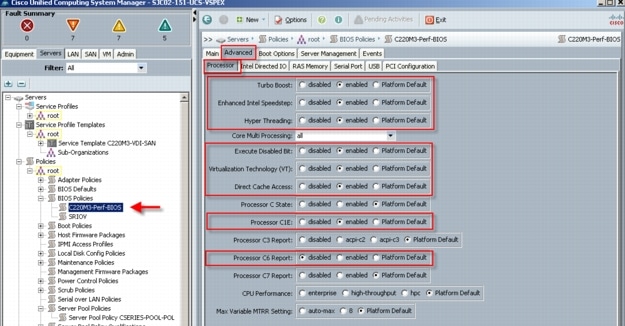

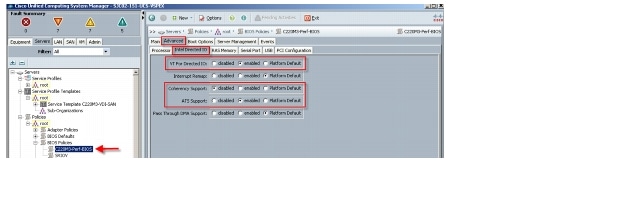

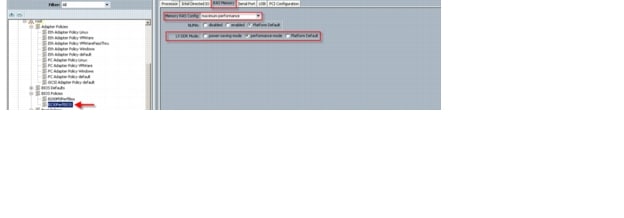

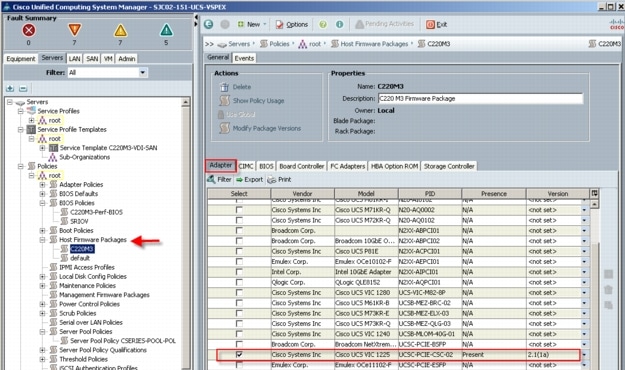

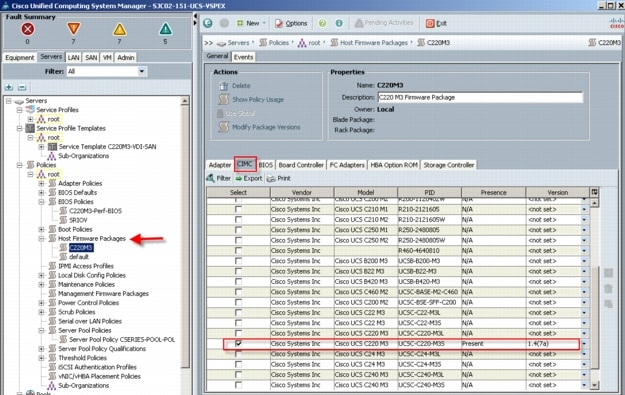

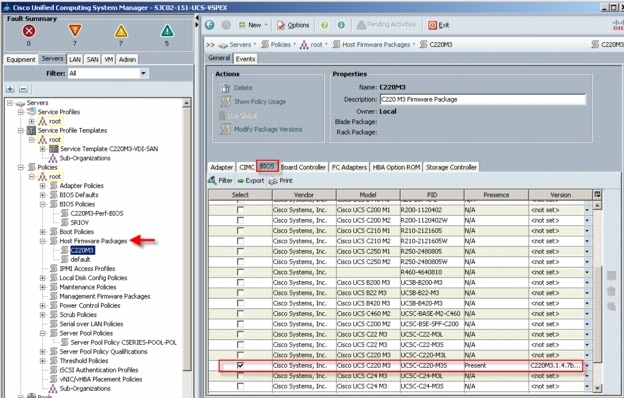

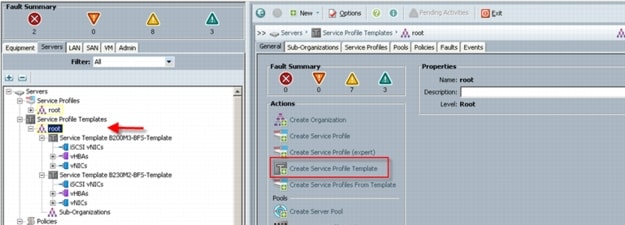

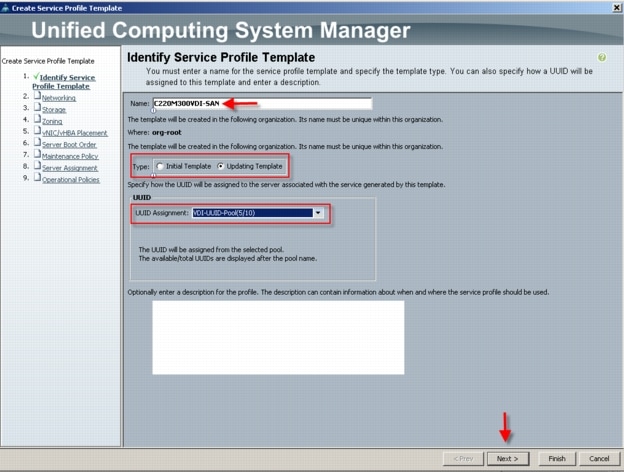

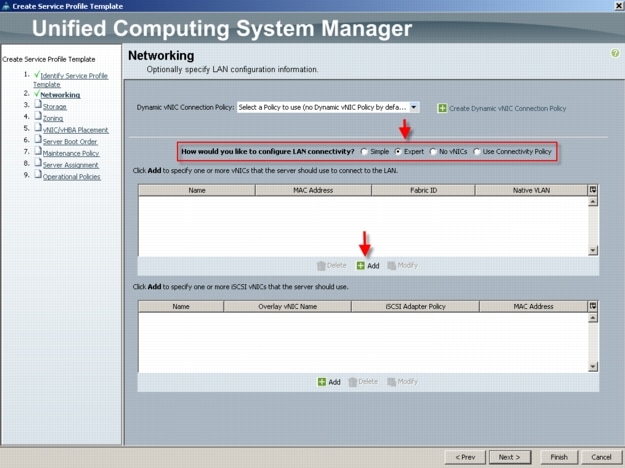

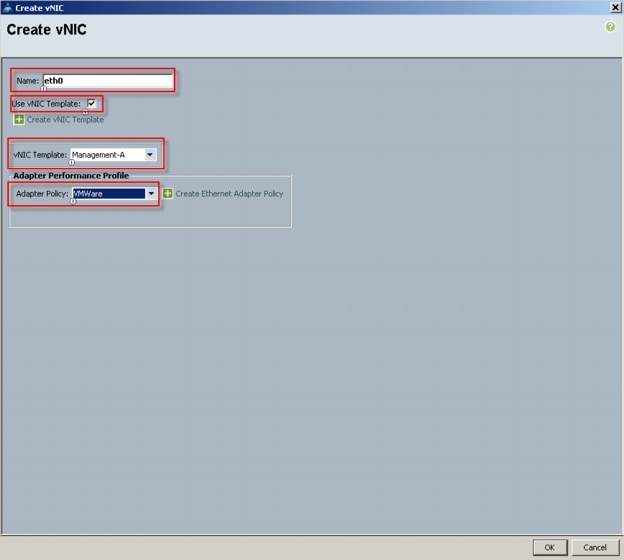

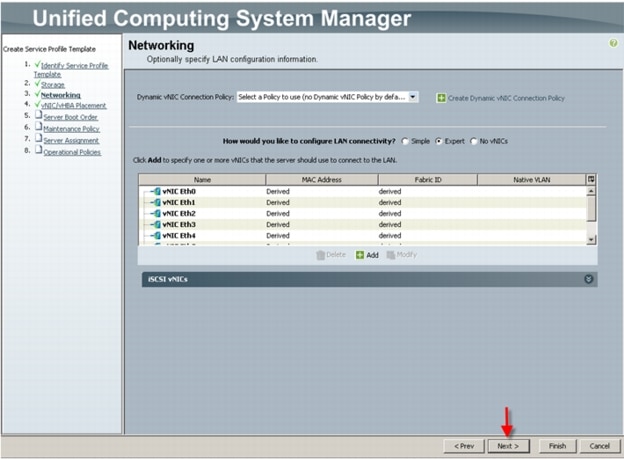

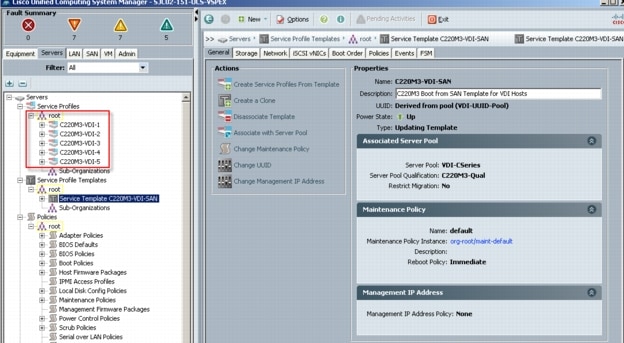

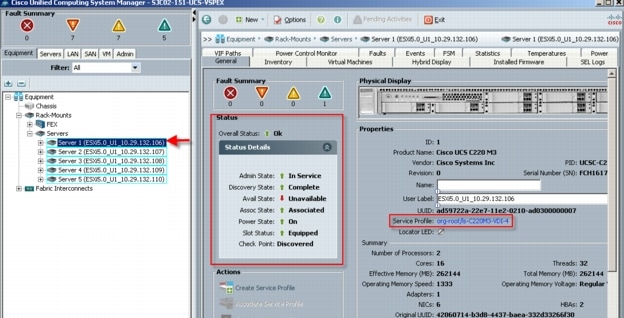

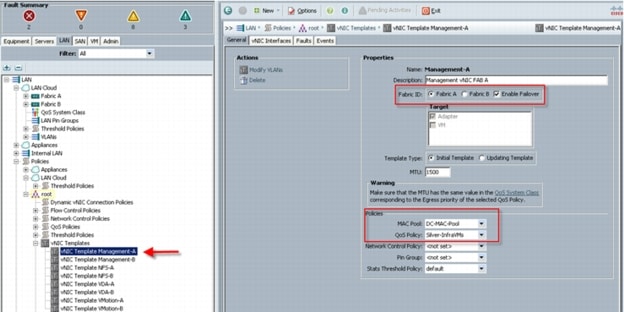

6.2 Cisco Unified Computing System Configuration (Managed FC Variant Only)

6.2.1 Base Cisco UCS System Configuration

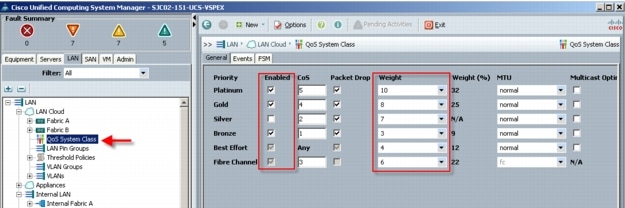

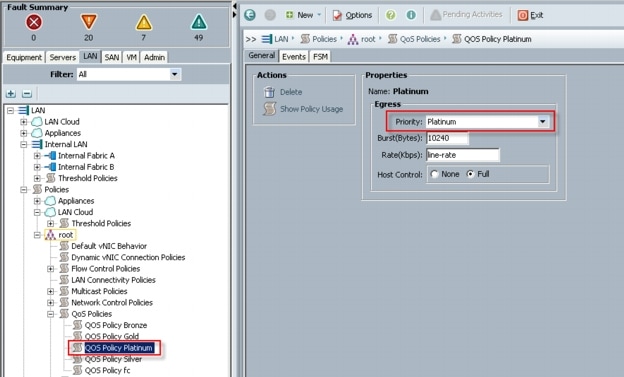

6.2.2 QoS and CoS in Cisco Unified Computing System

6.2.3 System Class Configuration

6.2.4 Cisco UCS System Class Configuration

6.2.5 Steps to Enable QOS on the Cisco Unified Computing System

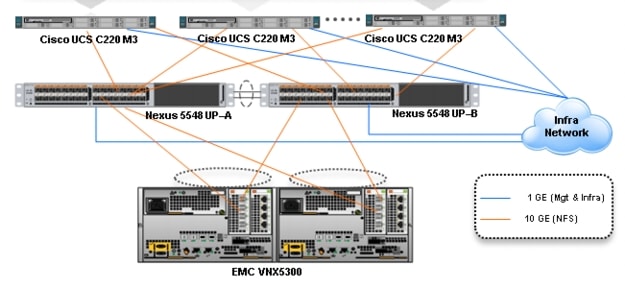

6.3.1 Nexus 5548UP and VNX5300 Connectivity (Unmanaged NFS Variant)

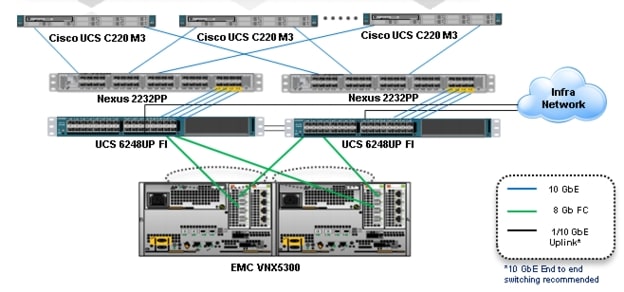

6.3.2 Cisco UCS Fabric Interconnect 6248UP and VNX5300 Connectivity (Managed FC Variant)

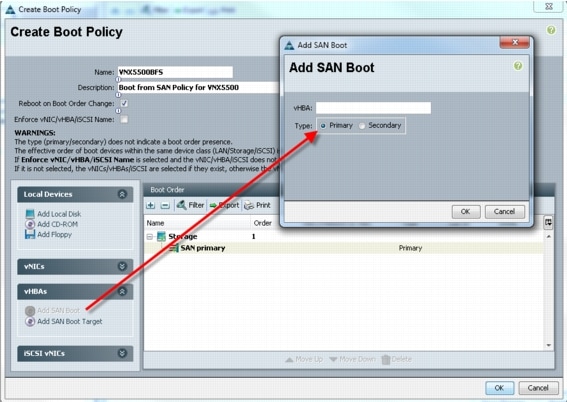

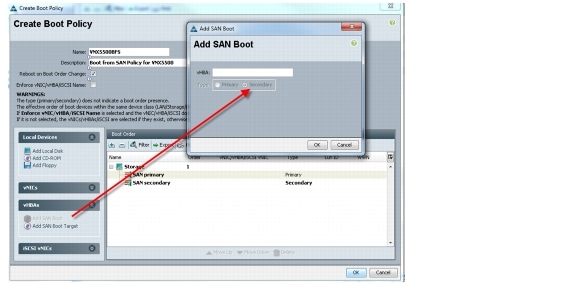

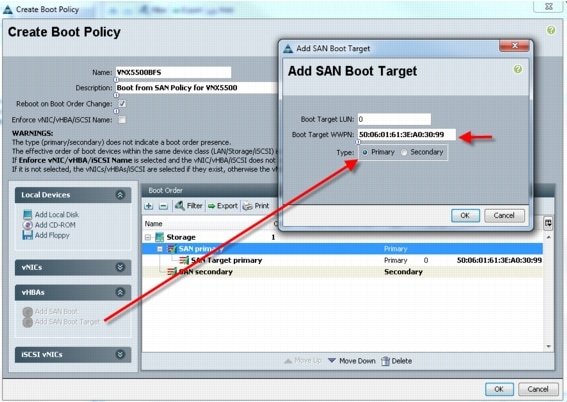

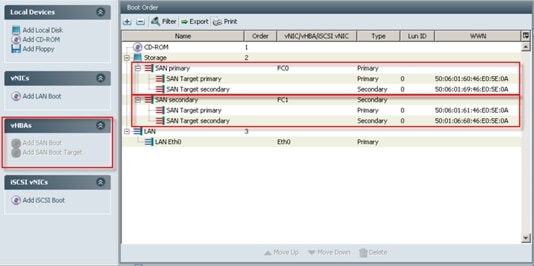

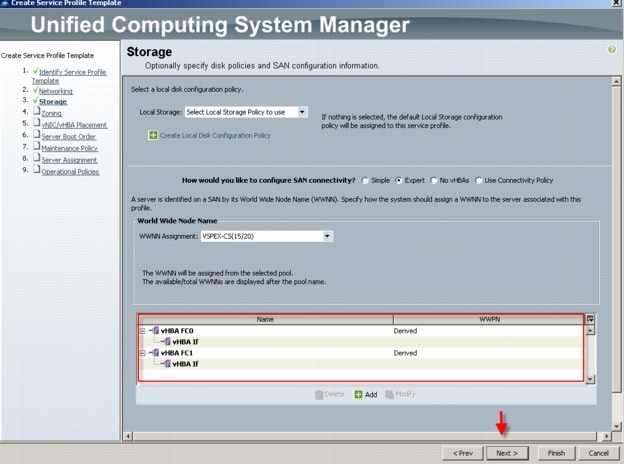

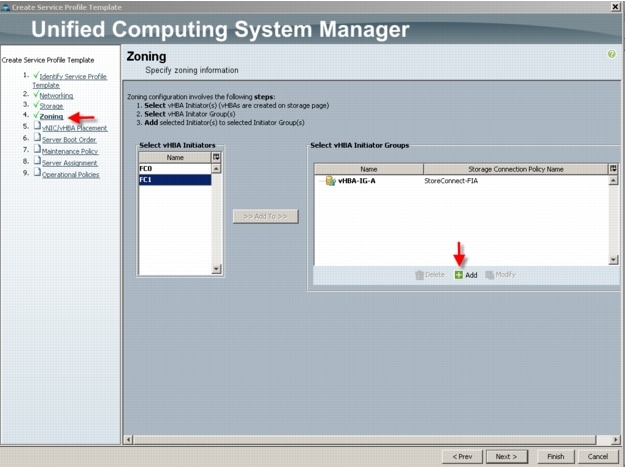

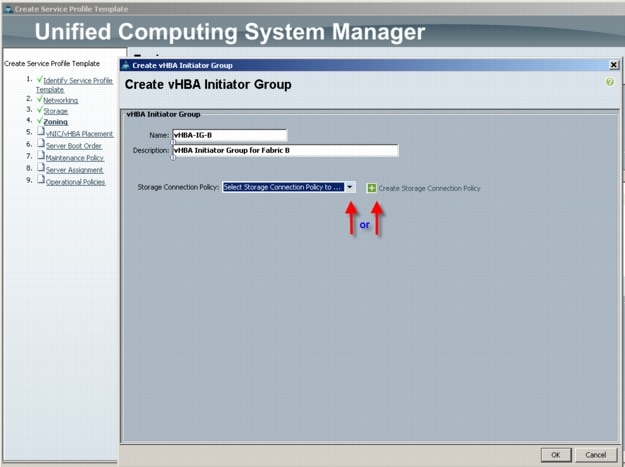

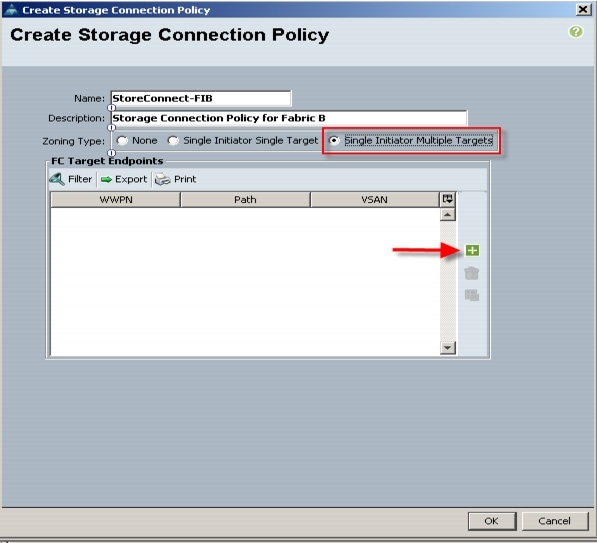

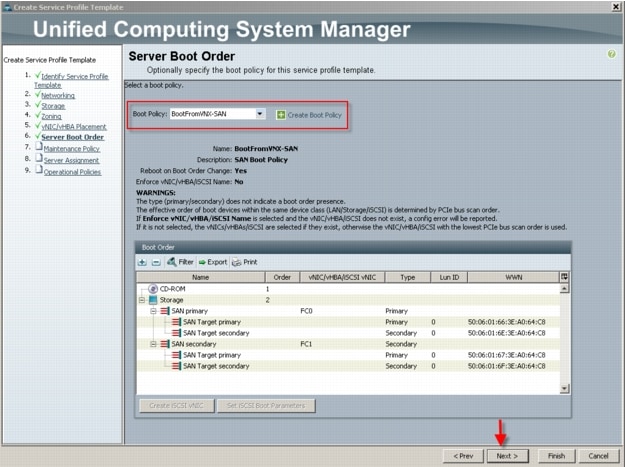

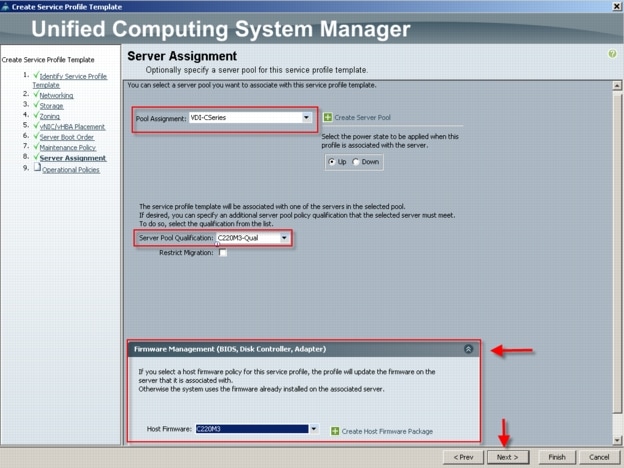

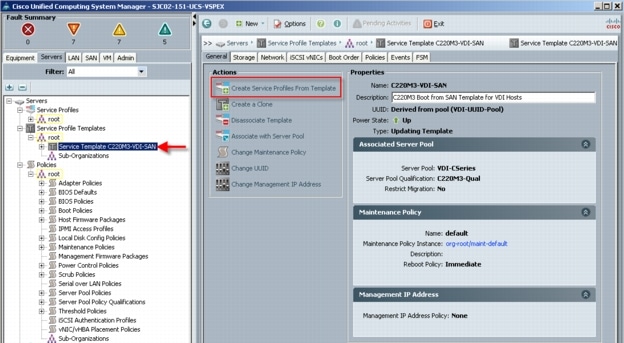

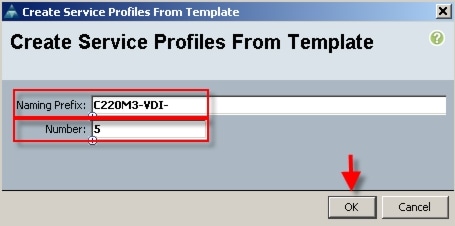

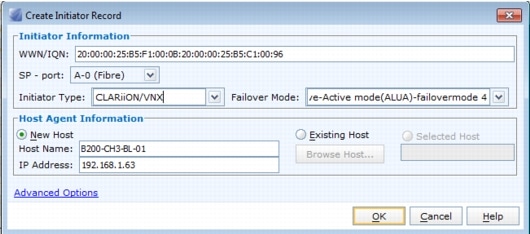

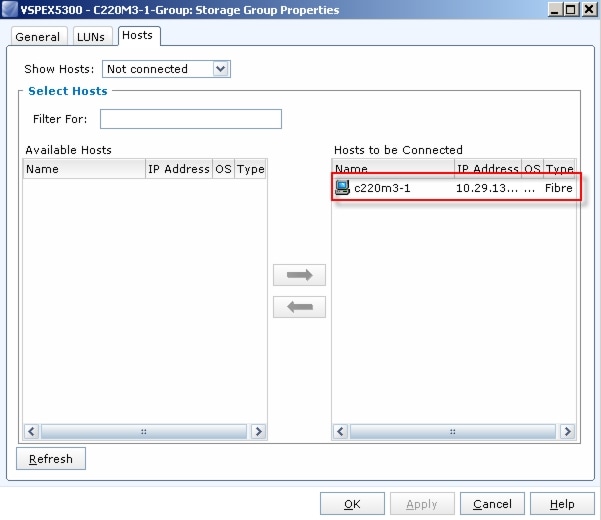

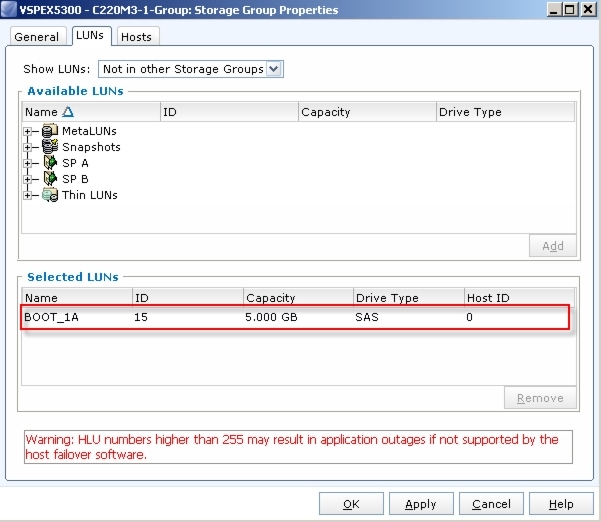

6.4.2 Configuring Boot from SAN Overview

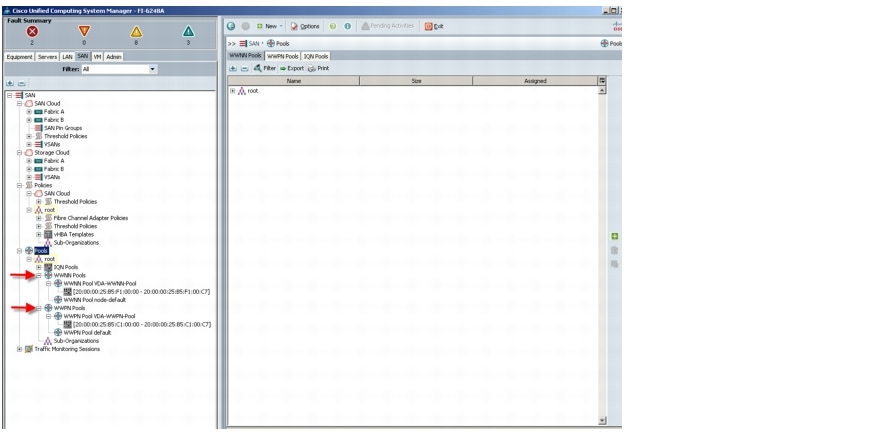

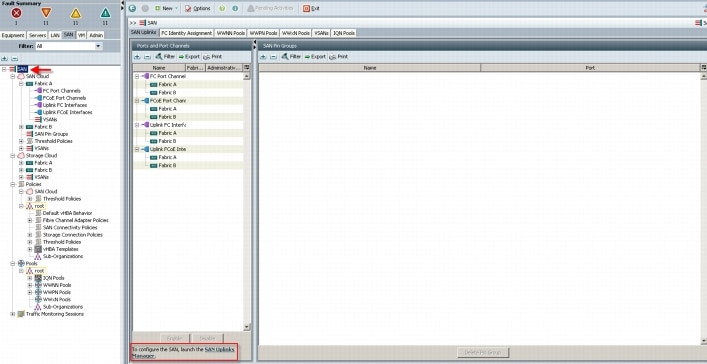

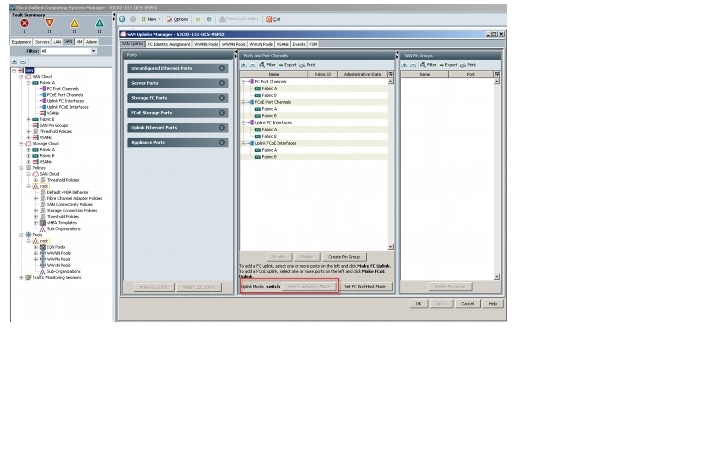

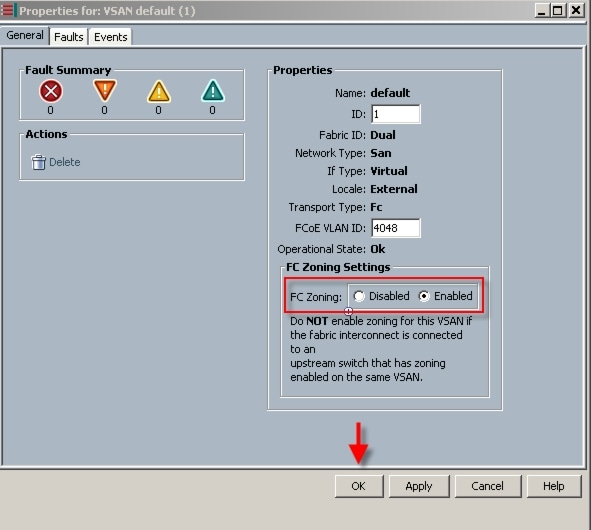

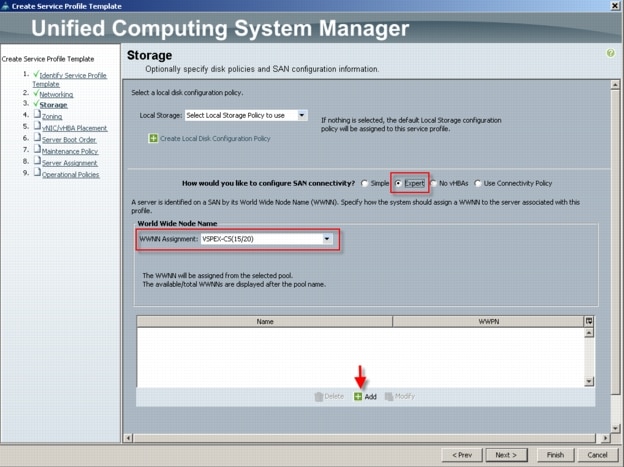

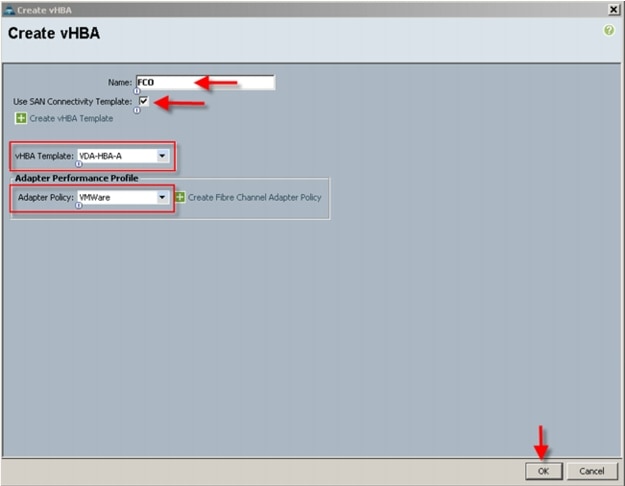

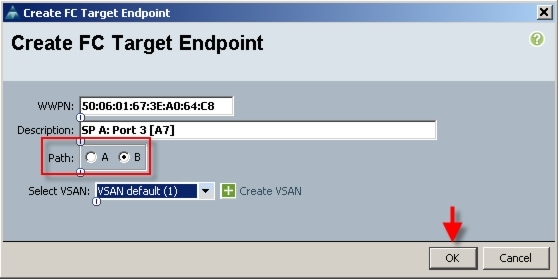

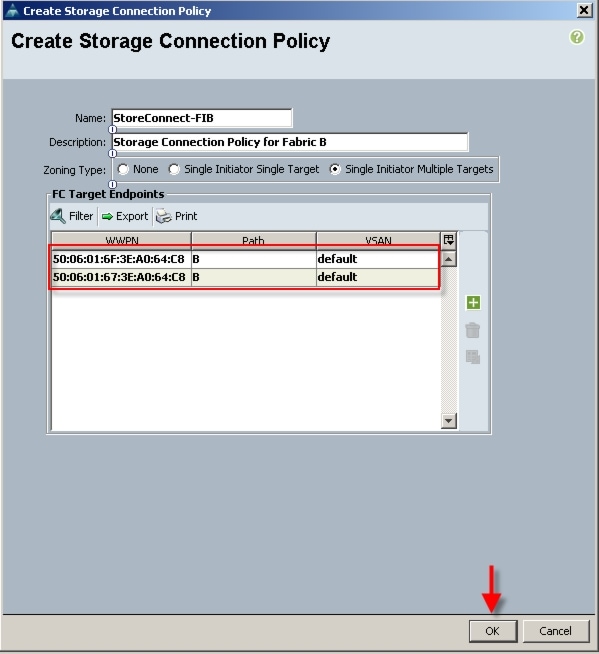

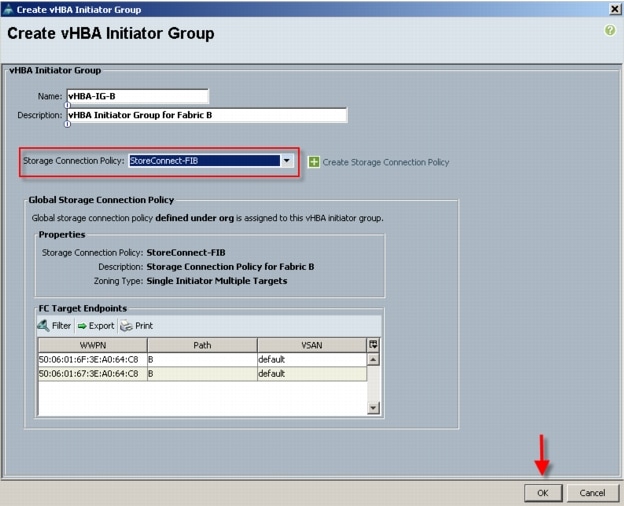

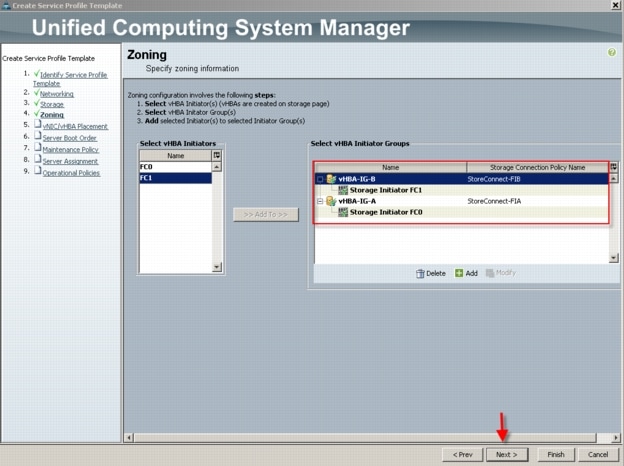

6.4.3 SAN Configuration on Cisco UCS Manager

6.4.4 Configuring Boot from SAN on EMC VNX

6.4.5 SAN Configuration on Cisco UCS Manager

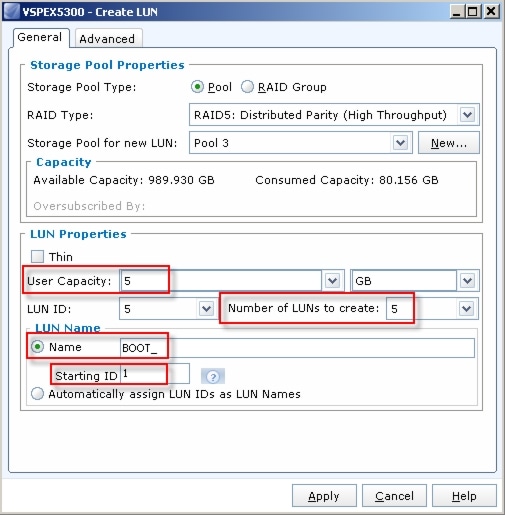

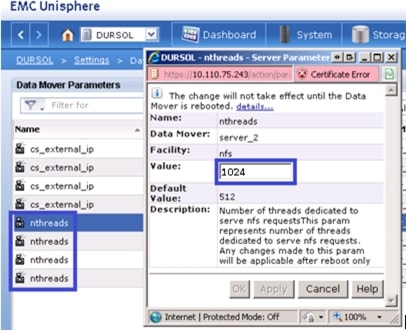

6.5 EMC VNX5300 Storage Configuration

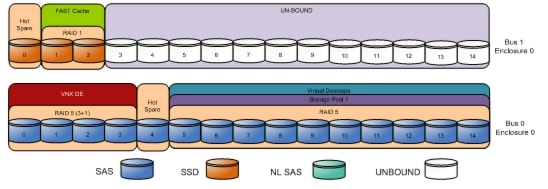

6.5.1 Physical and Logical Storage Layout for Managed FC and Unmanaged NFS Variants

6.5.2 EMC Storage Configuration for VMware ESXi 5.0 Infrastructure Servers

6.5.3 EMC FAST Cache in Practice

6.6 Installing and Configuring ESXi 5.0 Update 1

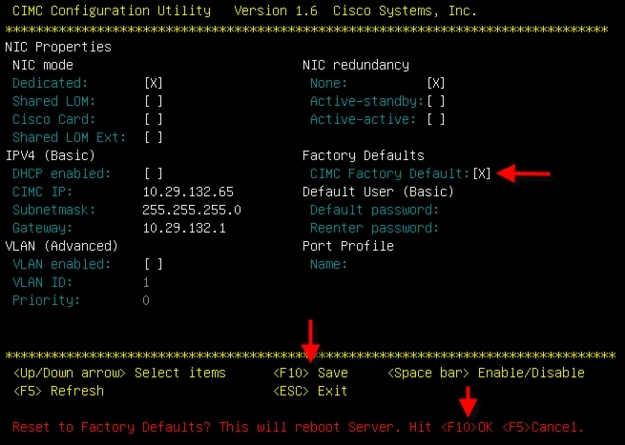

6.6.1 Install VMware ESXi 5.0 Update 1

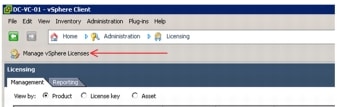

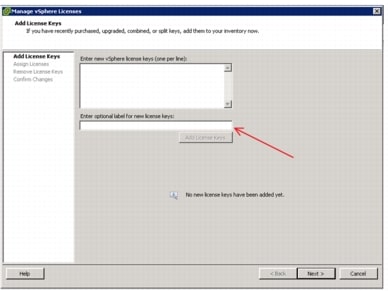

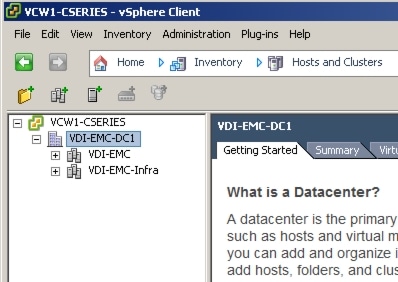

6.6.2 Install and Configure vCenter

6.6.4 ESXi 5.0 Update 1 Cluster Configuration

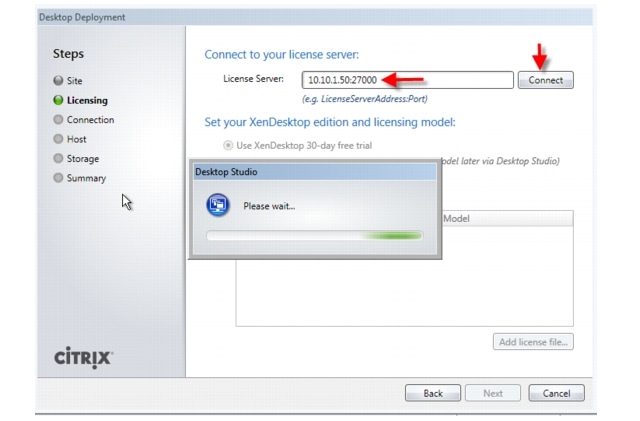

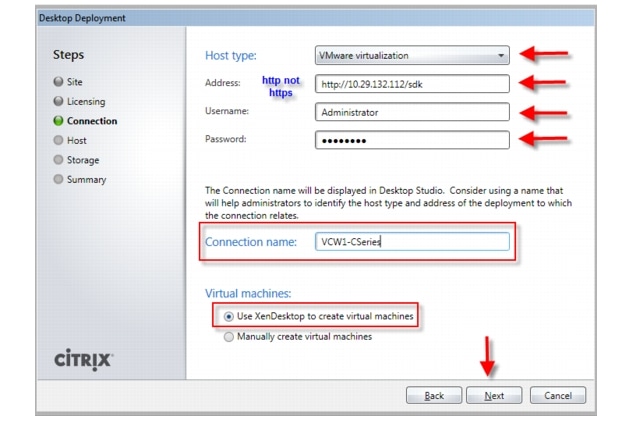

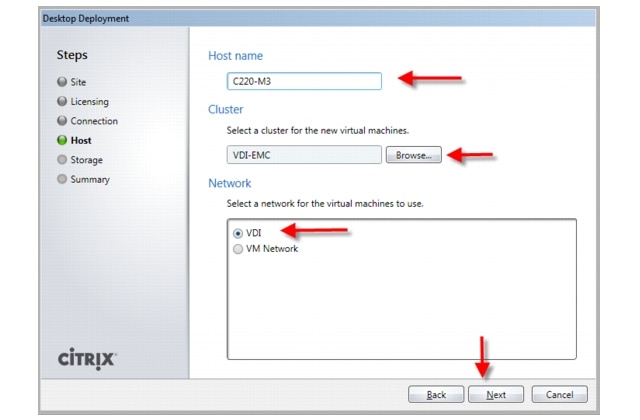

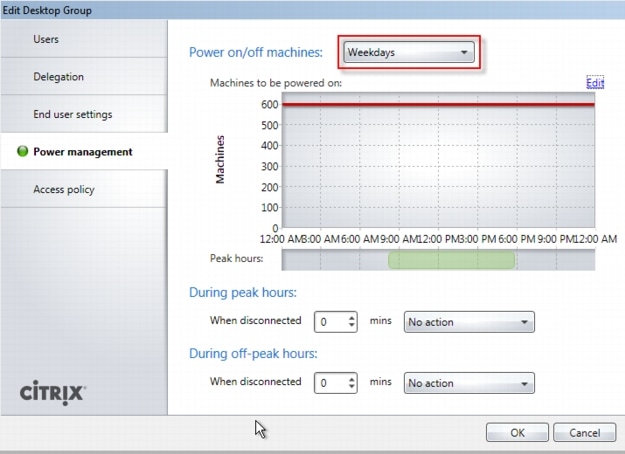

6.7 Installing and Configuring Citrix XenDesktop 5.6

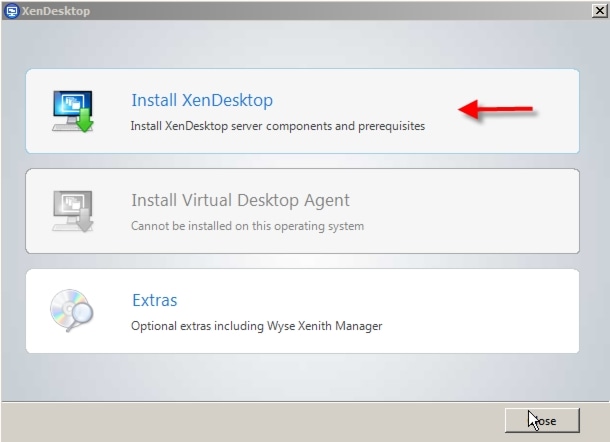

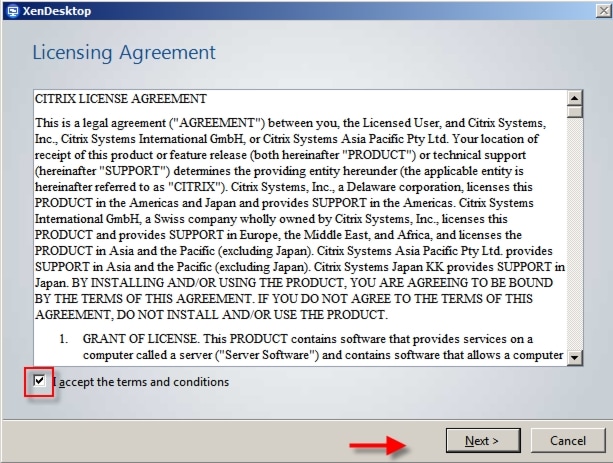

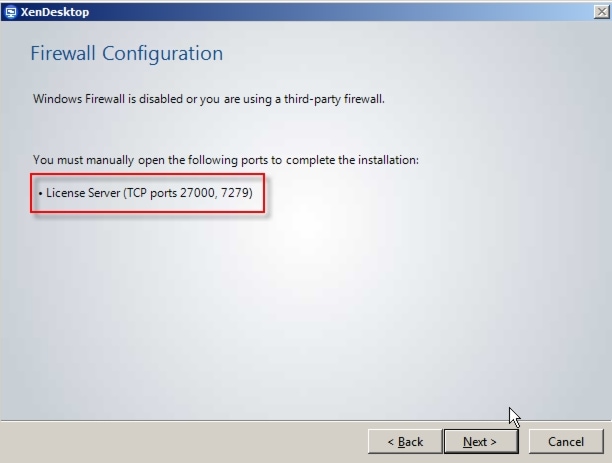

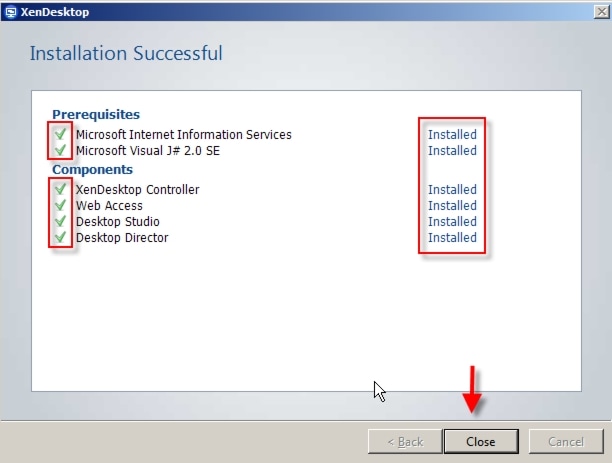

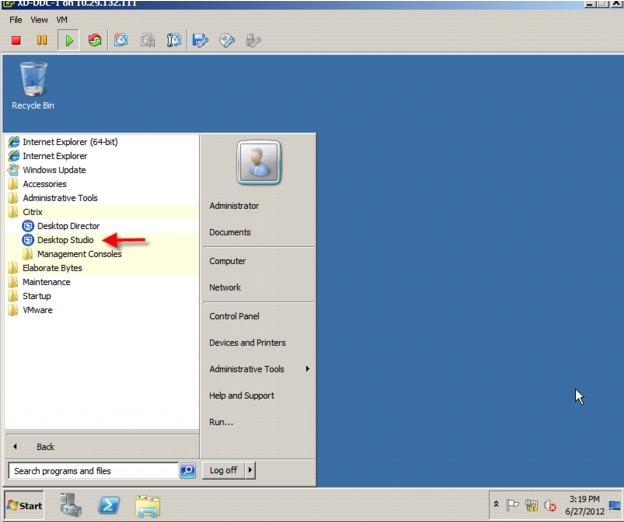

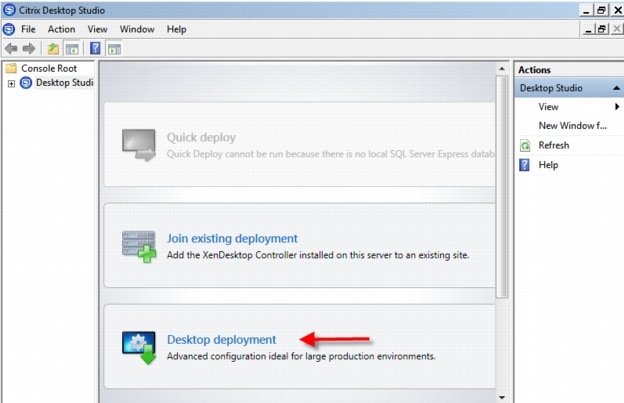

6.7.2 Install Citrix XenDesktop, Web Interface, Citrix XenDesktop Studio, and Optional Components

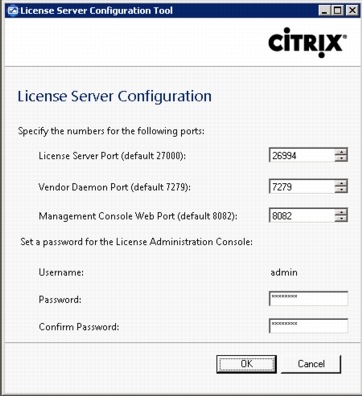

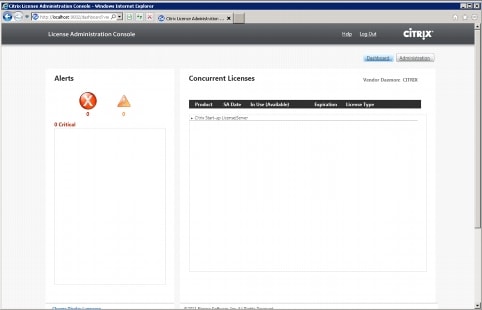

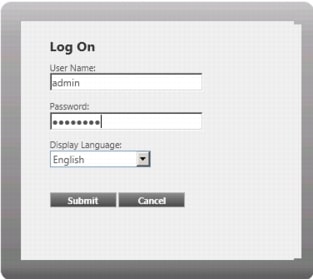

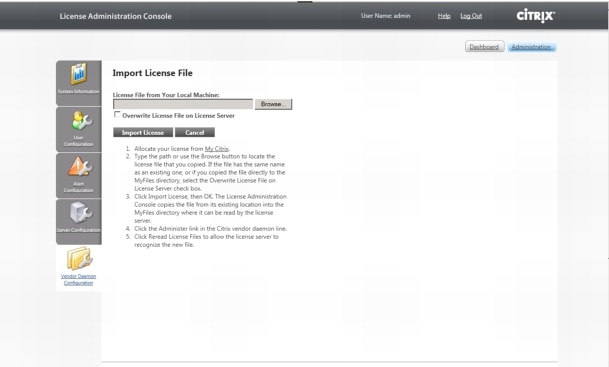

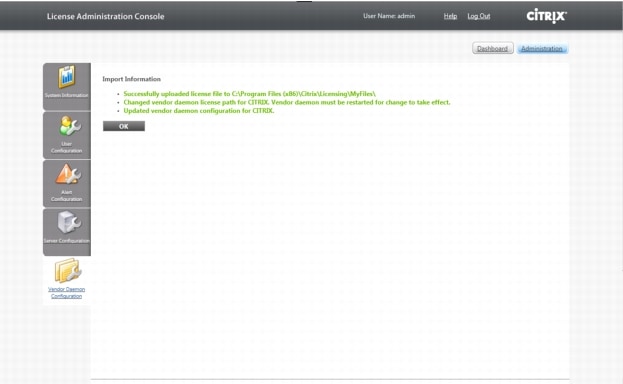

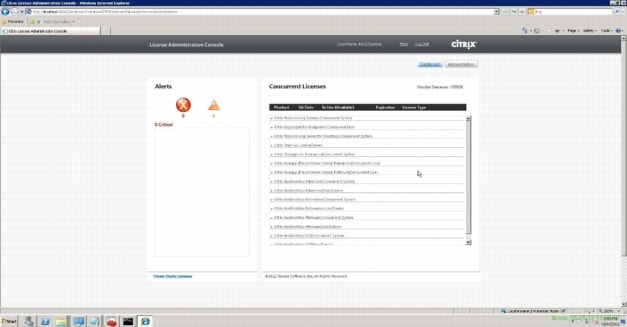

6.7.3 Configuring the License Server

6.7.4 Create SQL Database for Citrix XenDesktop

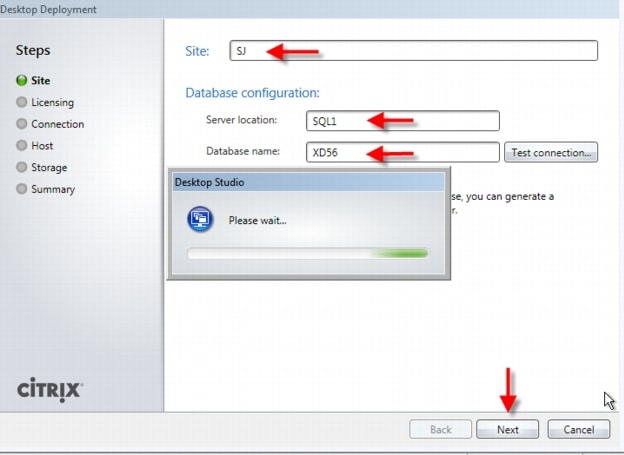

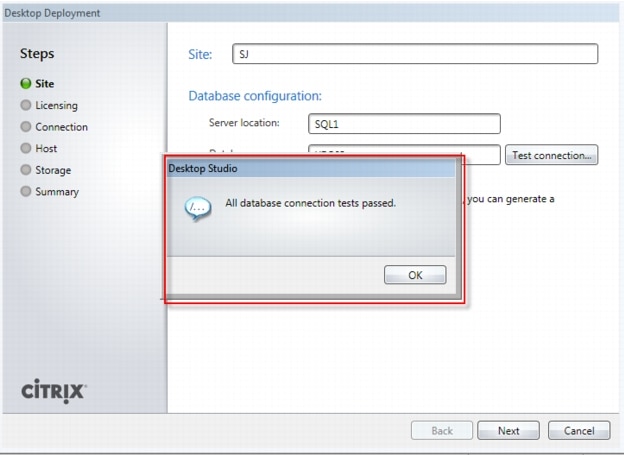

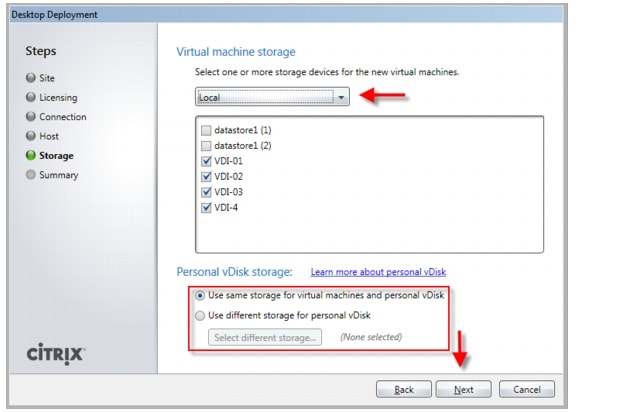

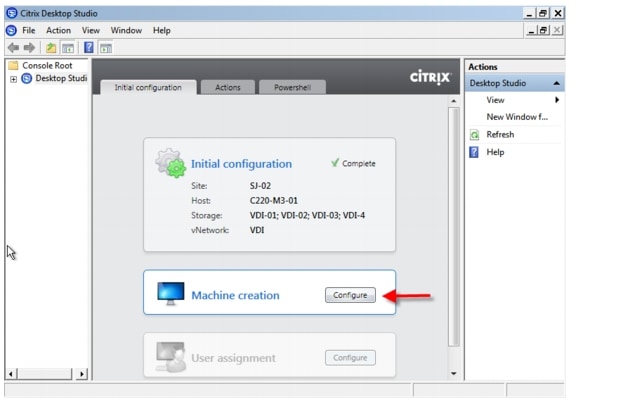

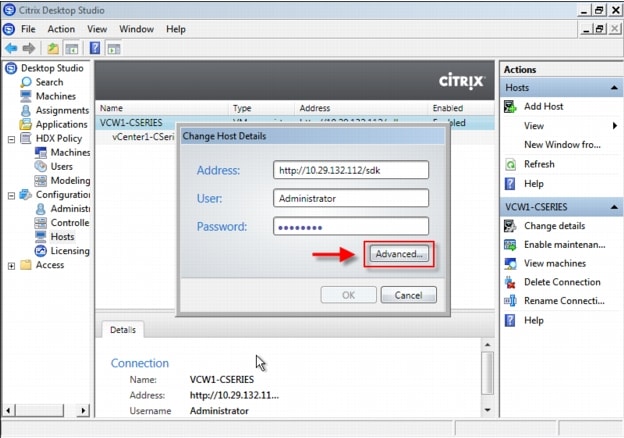

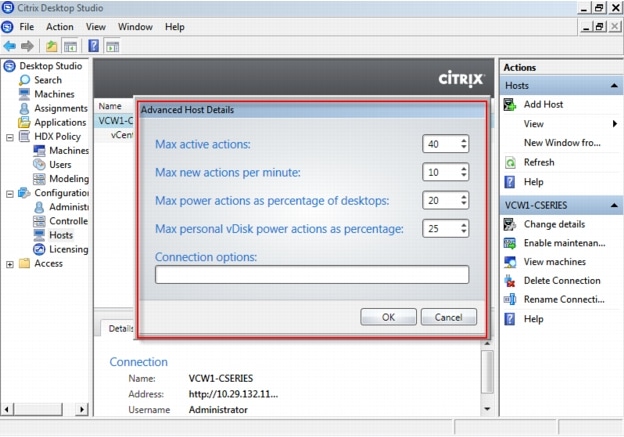

6.7.5 Configure the Citrix XenDesktop Site Hosts and Storage

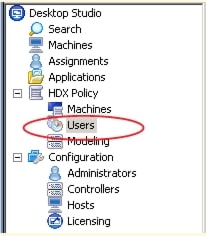

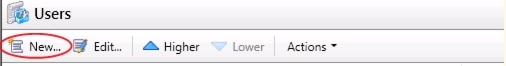

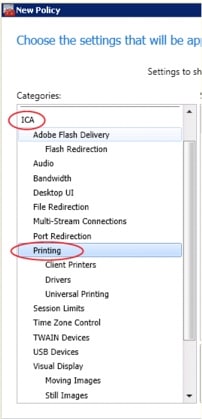

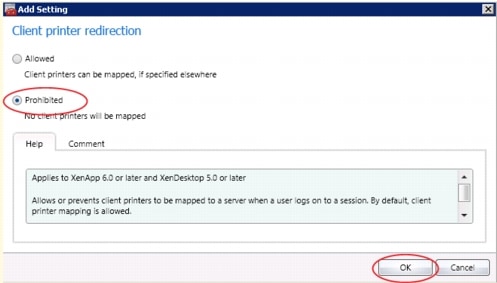

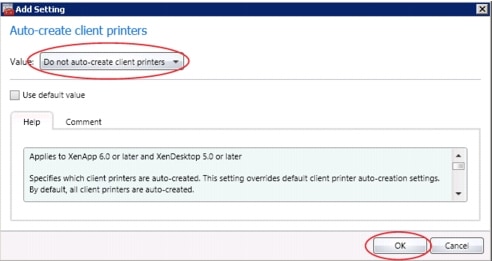

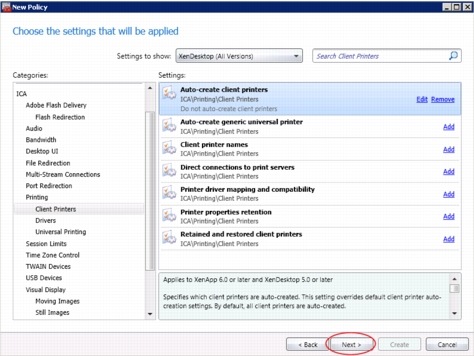

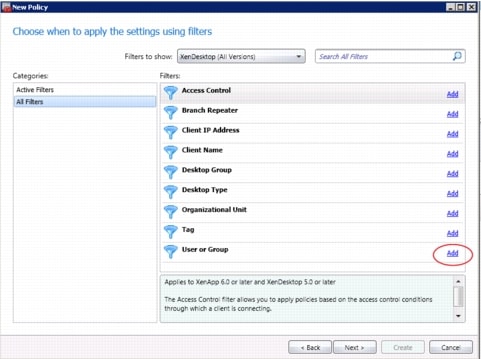

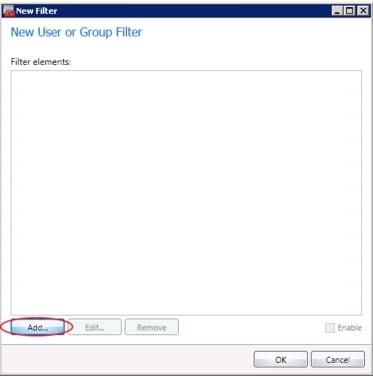

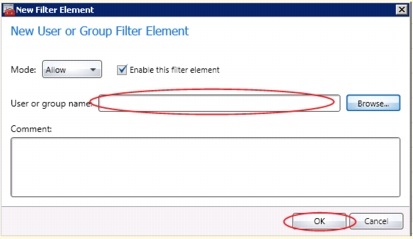

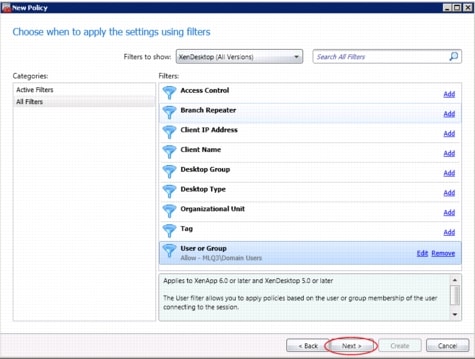

6.7.6 Configure Citrix XenDesktop HDX Policies

7 Desktop Delivery Infrastructure and Golden Image Creation

7.1 Overview of Solution Components

7.2 File Servers for User Profile Management and Login VSI Share

7.3 Microsoft Windows 7 Golden Image Creation

7.3.1 Create Base Windows 7 SP1 32bit Virtual Machine

7.3.2 Add Citrix XenDesktop 5.6 Virtual Desktop Agent

7.3.3 Add Login VSI Target Software (Login VSI Test Environments Only)

7.3.4 Citrix XenDesktop Optimizations

7.3.5 Perform additional PVS and XenDesktop Optimizations

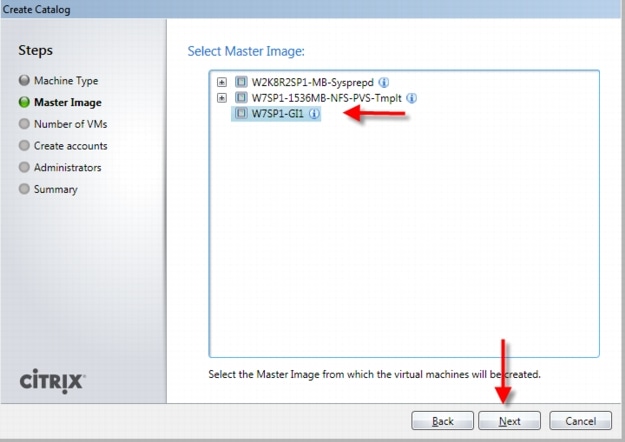

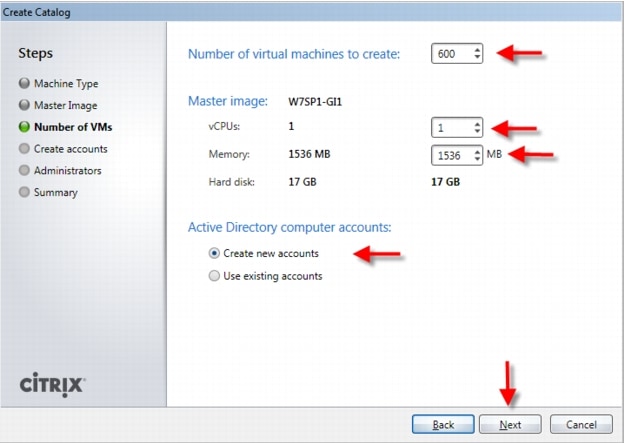

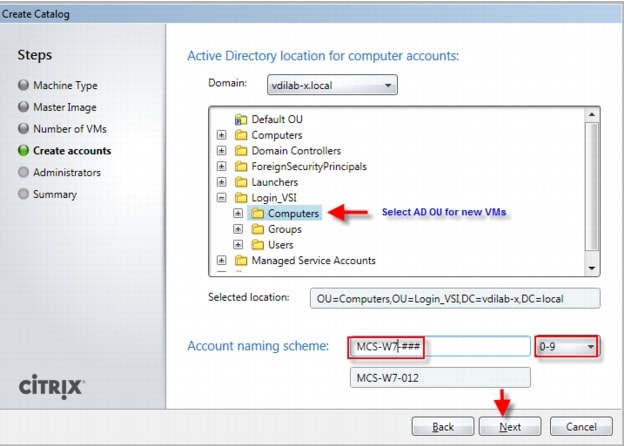

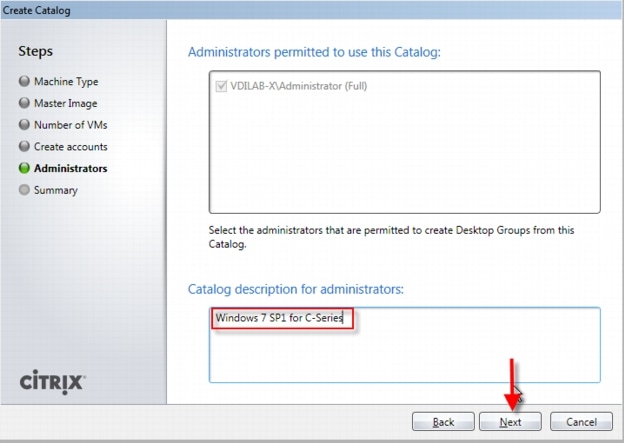

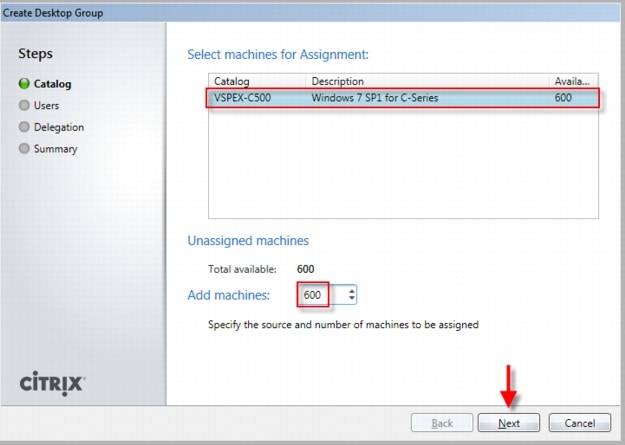

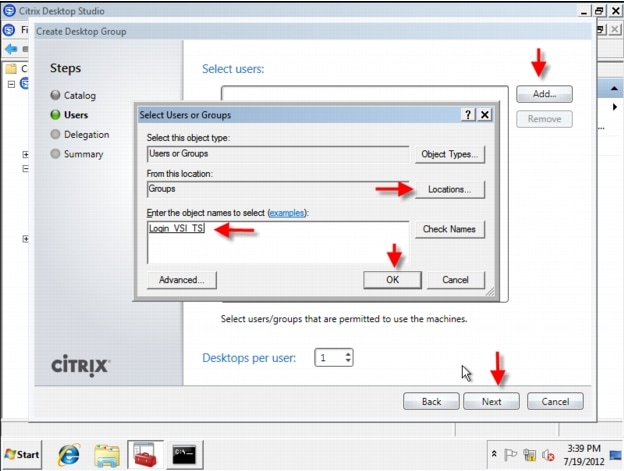

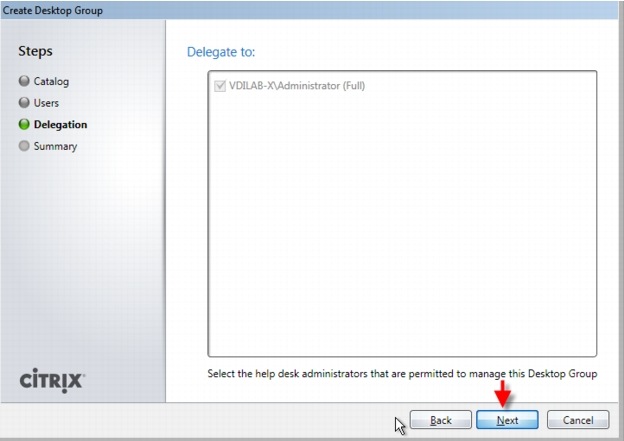

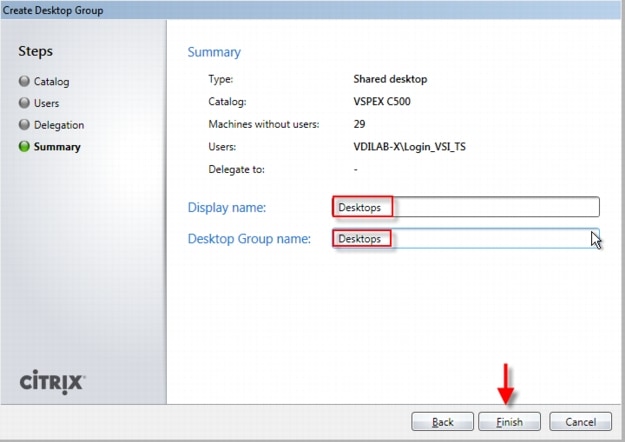

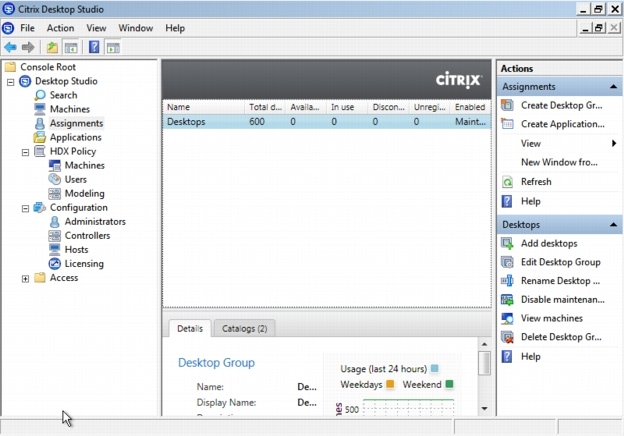

7.4 Virtual Machine Creation Using Citrix XenDesktop Machine Creation Services

8 Test Setup and Configurations

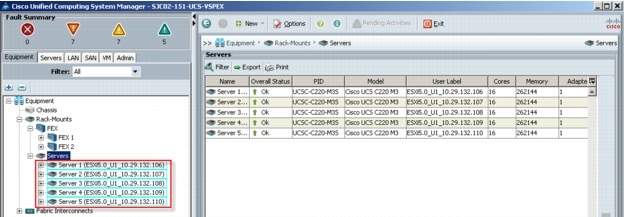

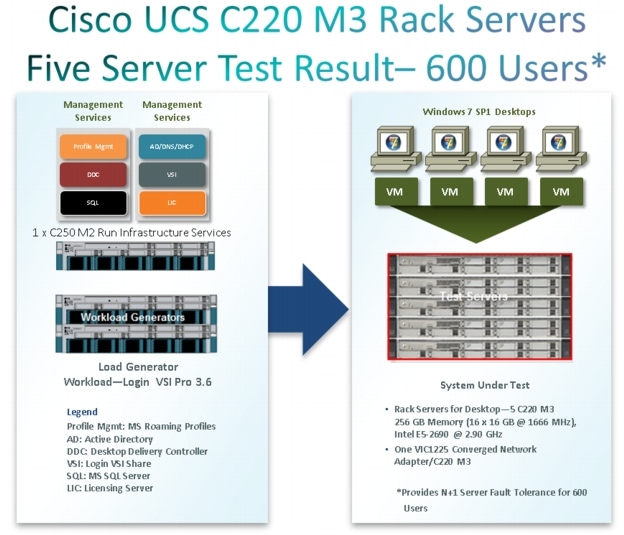

8.1 Cisco UCS Test Configuration for Single Server Scalability

8.2 Cisco UCS Configuration for Five Server Test

8.3 Testing Methodology and Success Criteria

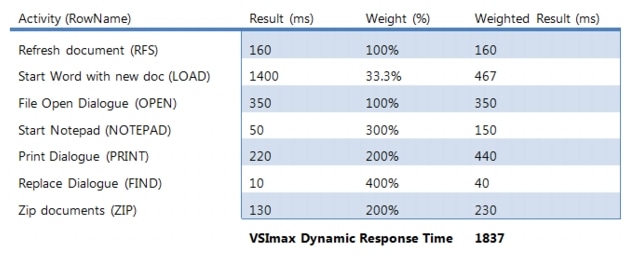

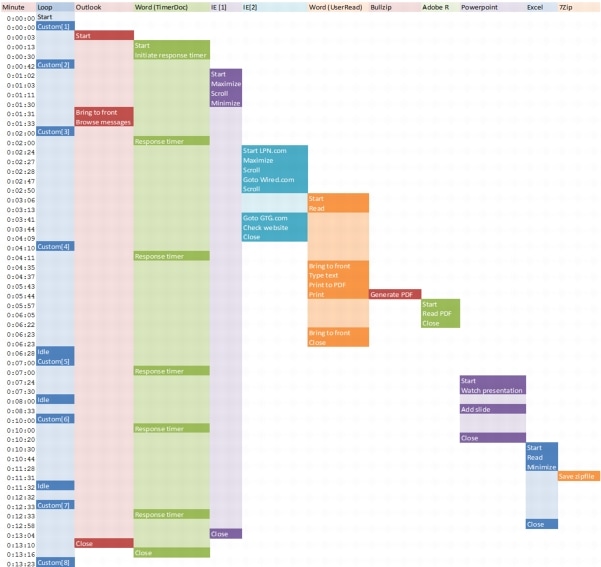

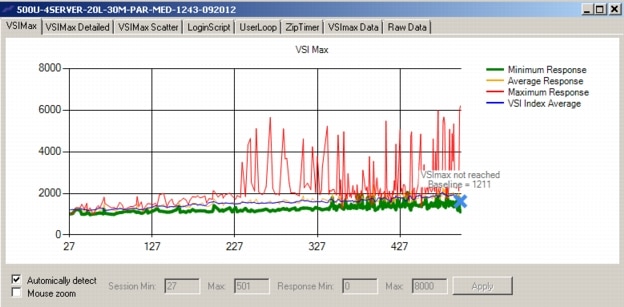

8.3.2 User Workload Simulation: Login VSI from Login Consultants

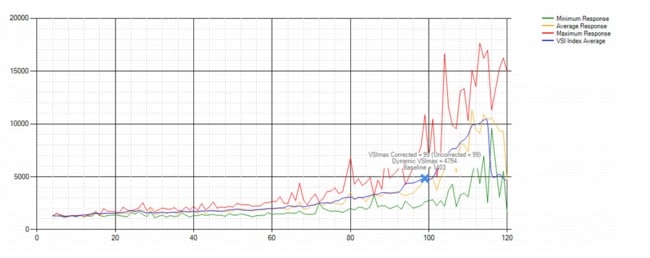

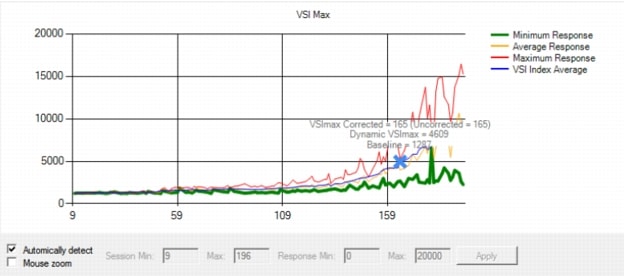

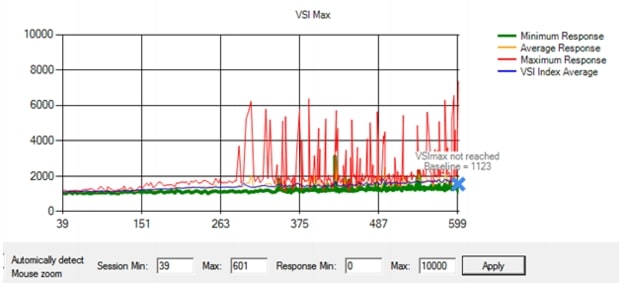

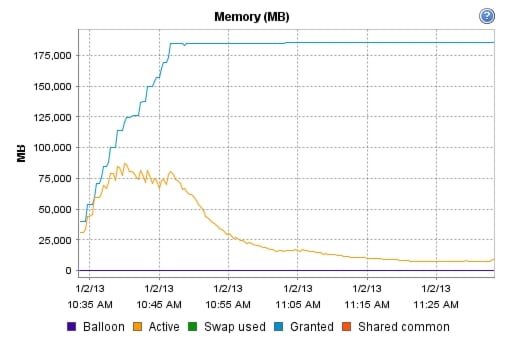

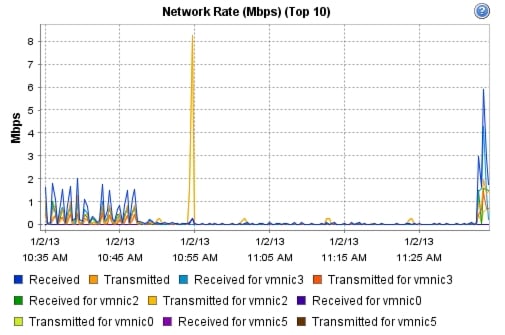

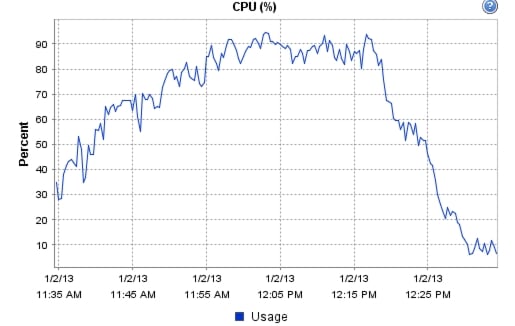

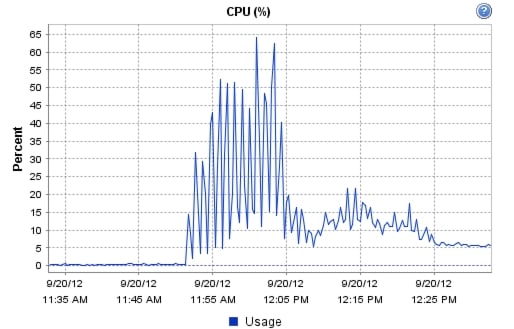

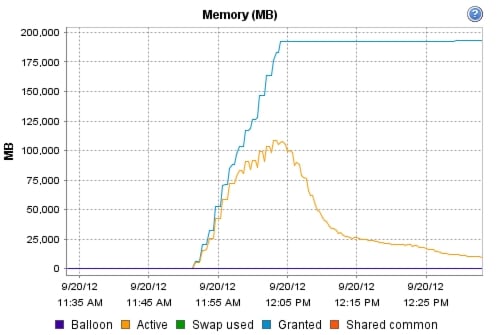

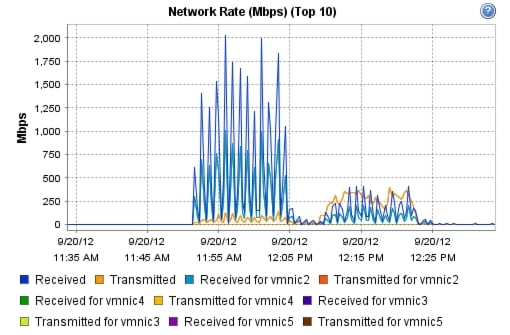

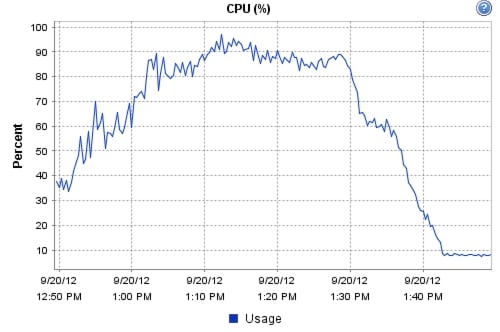

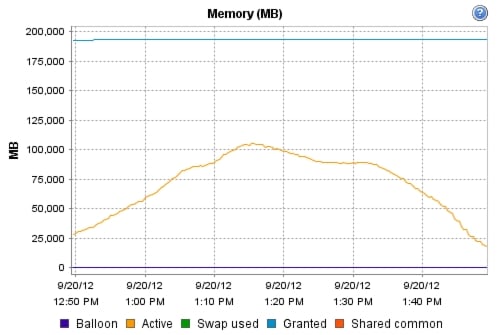

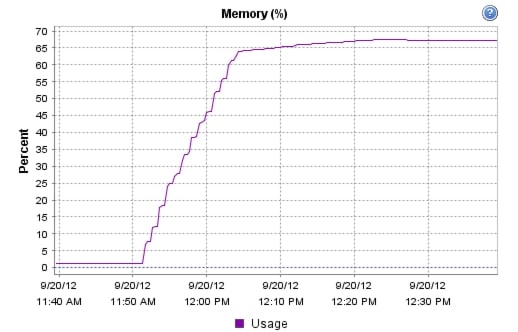

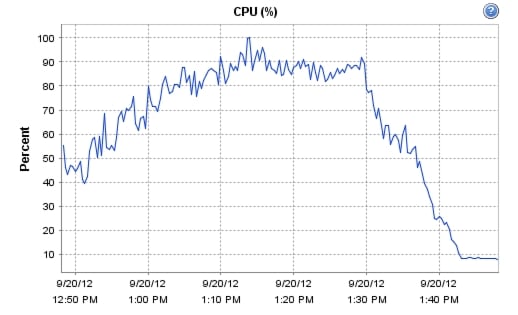

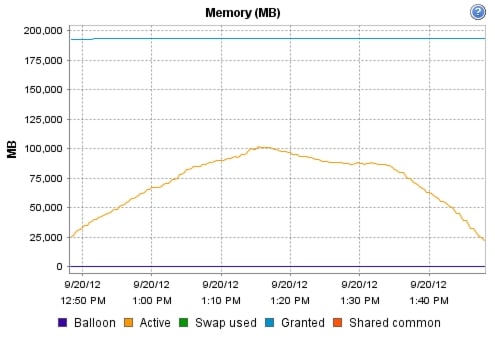

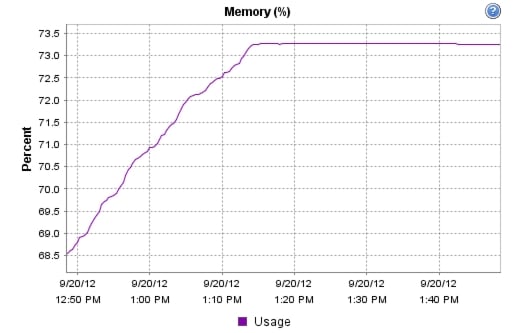

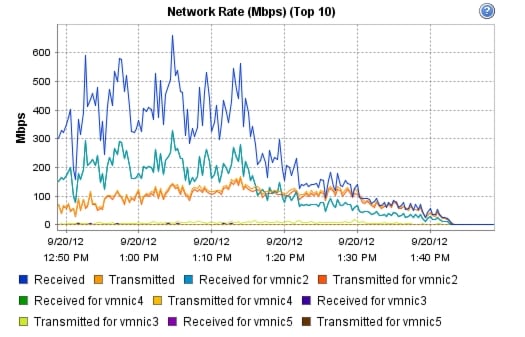

9.1 Cisco UCS Test Configuration for Single-Server Scalability Test Results

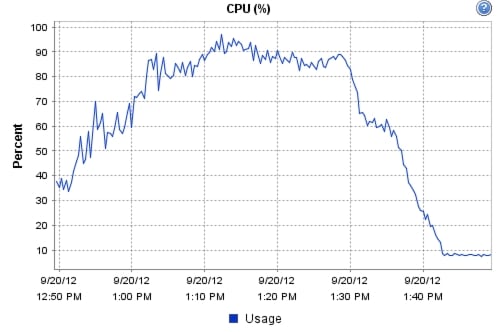

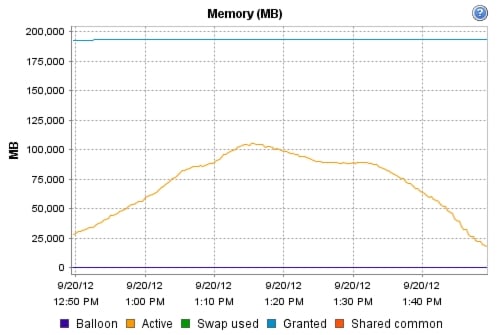

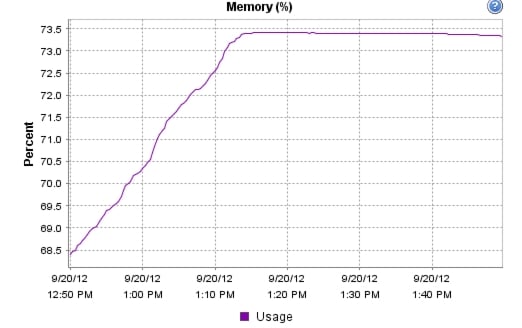

9.2 Cisco UCS Test Configuration for 600 Desktop Scalability Test Results

9.3 Cisco UCS Test Configuration for 500 Desktop Scalability Test Results

10 Scalability Considerations and Guidelines

10.1 Cisco UCS System Configuration

10.2 Citrix XenDesktop 5.6 Hosted VDI

10.3 EMC VNX Storage Guidelines for XenDesktop Virtual Machines

10.4 VMware ESXi 5 Guidelines for Virtual Desktop Infrastructure

11.1 Cisco Reference Documents

11.2 Citrix Reference Documents

11.4 VMware Reference Documents

Appendix A—Nexus 5548 Configuration (NFS Variant Only)

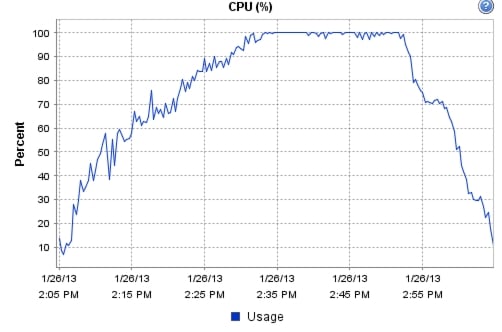

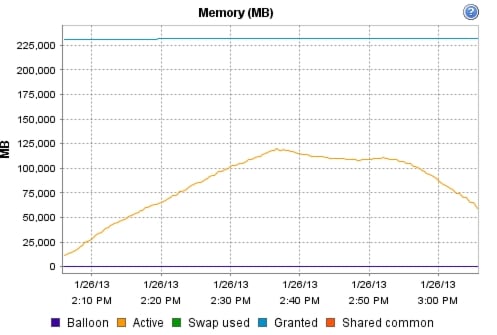

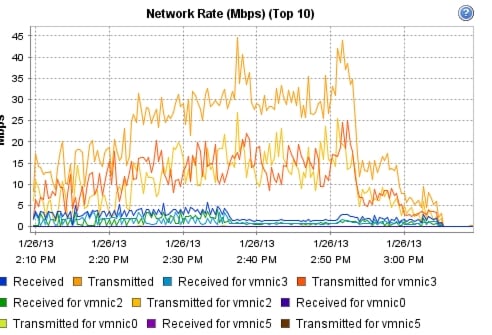

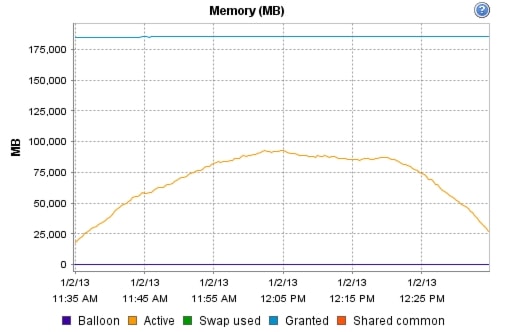

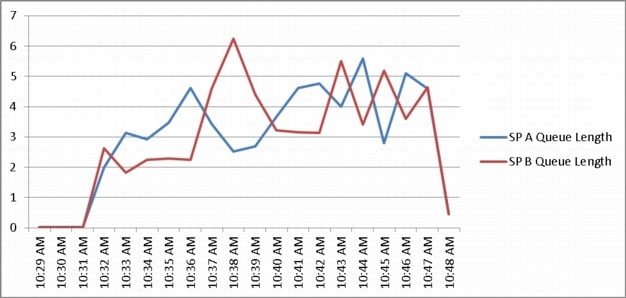

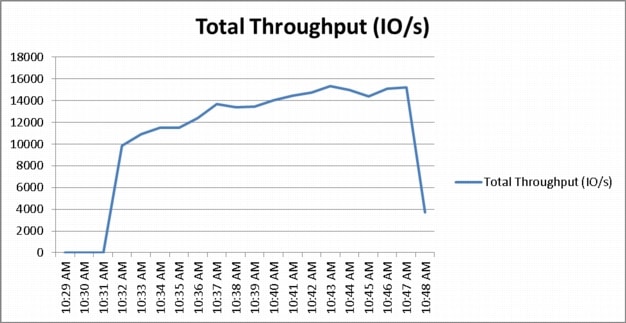

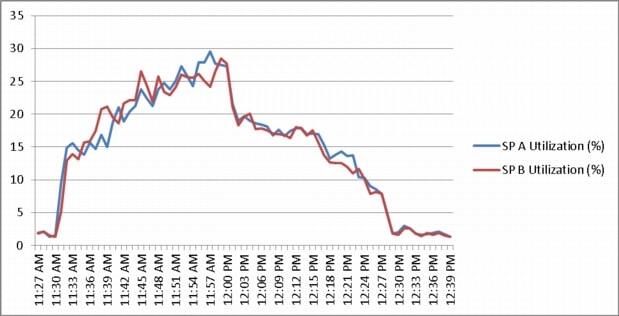

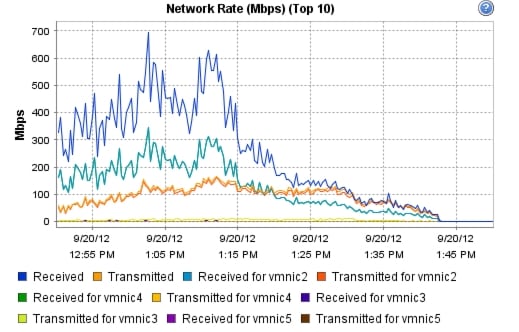

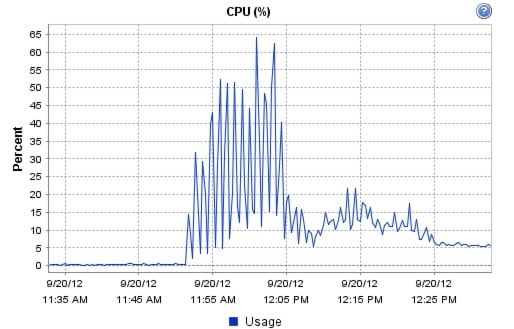

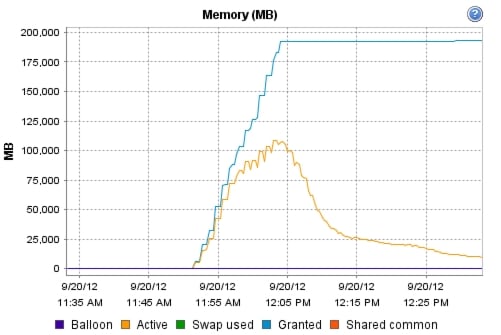

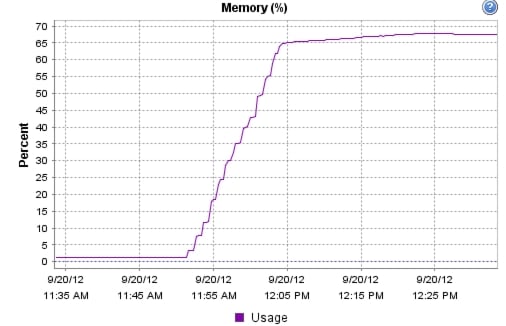

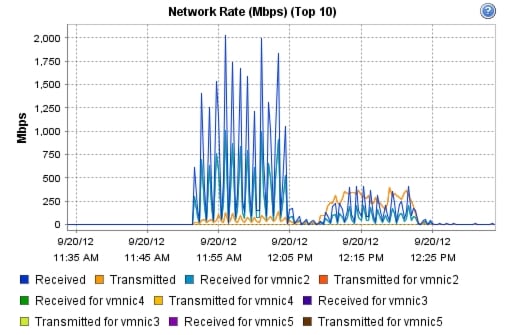

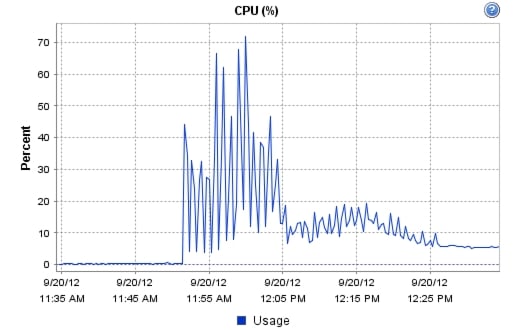

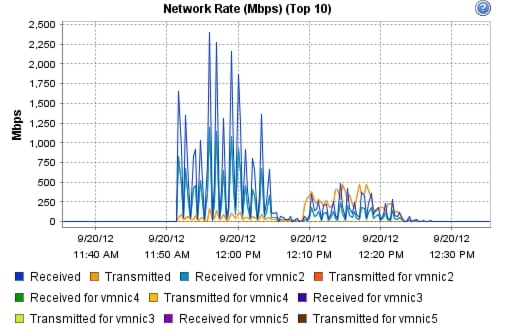

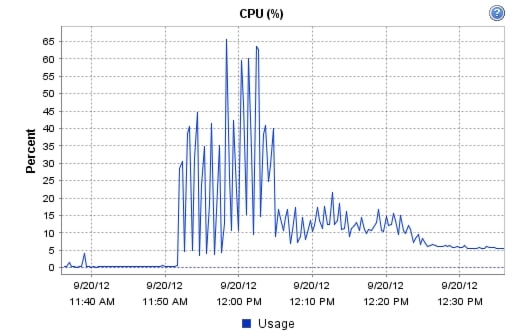

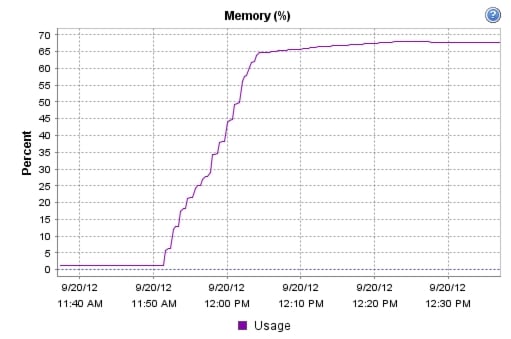

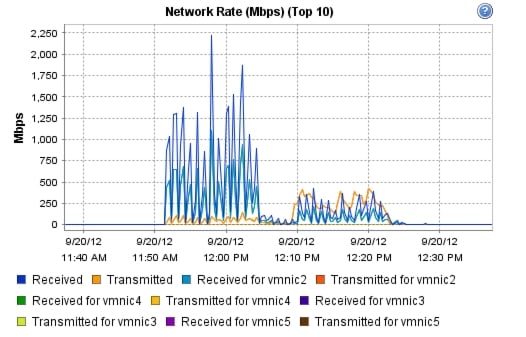

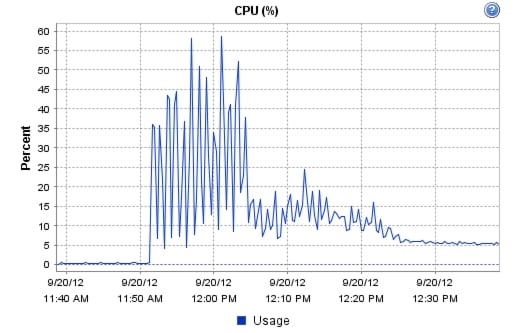

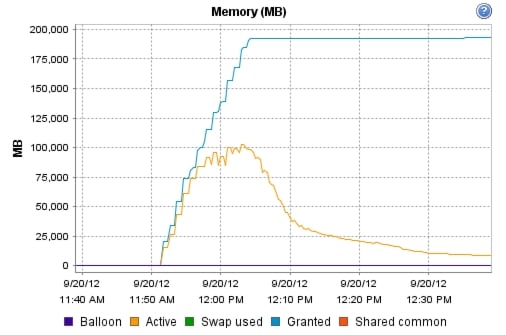

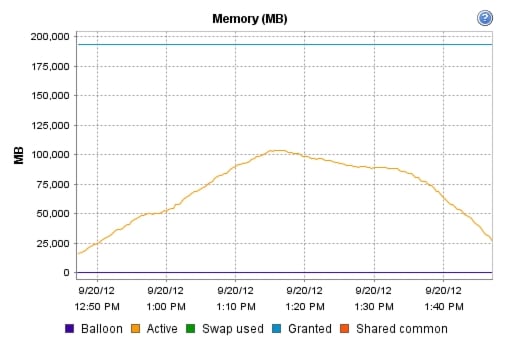

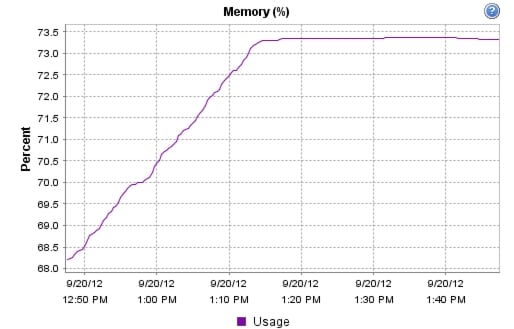

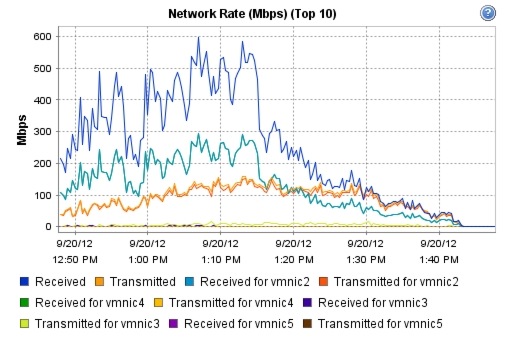

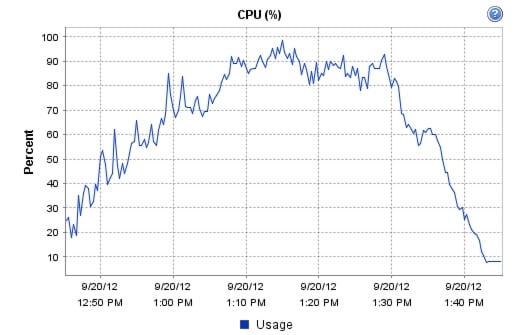

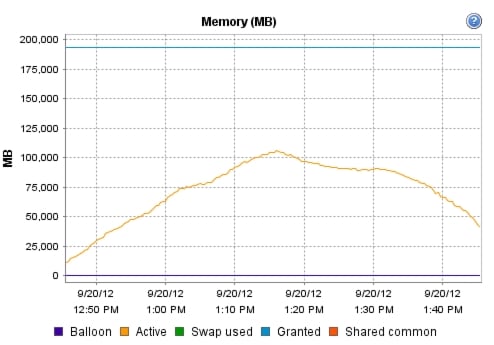

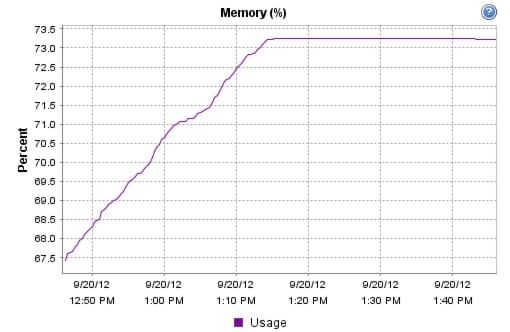

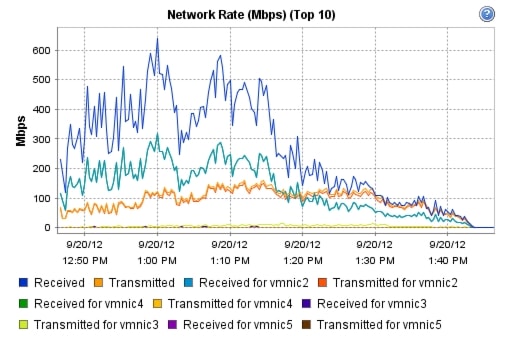

Appendix B—Server Performance Metrics for 500 Users on 4 Cisco UCS C220 M3 Servers

Cisco Solution for EMC VSPEX End User Computing for 500 Citrix XenDesktop 5.6 Users

Enabled by Cisco Unified Computing System C220 M3 Servers, Cisco Nexus Switching, VMware vSphere 5, and Direct Attached EMC VNX5300

Building Architectures to Solve Business Problems

About the Authors

Mike Brennan, Sr. Technical Marketing Engineer, VDI Performance and Solutions Team Lead, Cisco Systems

Mike Brennan is a Cisco Unified Computing System architect, focusing on Virtual Desktop Infrastructure solutions with extensive experience with EMC VNX, VMware ESX/ESXi, and VMware View. He has expert product knowledge in application and desktop virtualization across all three major hypervisor platforms, both major desktop brokers, Microsoft Windows Active Directory, User Profile Management, DNS, DHCP and Cisco networking technologies.

About Cisco Validated Design (CVD) Program

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information visit www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, IronPort, the IronPort logo, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2013 Cisco Systems, Inc. All rights reserved

Cisco Solution for EMC VSPEX End User Computing for 500 Citrix XenDesktop 5.6 Users

1 Overview

Industry trends indicate a vast data center transformation toward shared infrastructures. Enterprise customers are moving away from silos of information and toward shared infrastructures, to virtualized environments, and eventually to the cloud to increase agility, improve availability and reduce costs.

Five Cisco UCS C220 M3 Rack Servers were utilized in the design to provide N+1 fault tolerance for 500 Virtual Windows 7 desktops at the server level, guaranteeing the same end-user experience if just 4 C220 M3 servers are operational. In fact, the five server architecture can comfortably support 600 desktops with N+1 server fault tolerance. For that reason, the document architecture will refer to supporting a 600 desktop capacity with five Cisco UCS C220 M3 servers.

Alternatively, with just four Cisco UCS C220 M3 Rack Servers, we can effectively host 500 users with all servers online, or 450 users with three Cisco UCS C220 M3 servers running.
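The sizing figures above follow from simple N+1 arithmetic, which can be sketched as below. This is a minimal illustration, not a sizing tool: the per-server ceiling of 150 desktops is an assumption inferred from the numbers quoted here (600 desktops on four surviving servers, 450 on three), and the function names are hypothetical.

```python
import math

# Assumed density ceiling, inferred from the text: 600 / 4 = 450 / 3 = 150.
MAX_DESKTOPS_PER_SERVER = 150


def servers_needed(desktops: int, per_server: int, spares: int = 1) -> int:
    """Servers required to host `desktops`, plus `spares` hosts for fault tolerance."""
    return math.ceil(desktops / per_server) + spares


def capacity_after_failures(servers: int, per_server: int, failed: int = 1) -> int:
    """Desktops that still fit once `failed` servers are offline."""
    return max(servers - failed, 0) * per_server


# Five servers: 600 desktops remain hosted with one server down.
print(servers_needed(600, MAX_DESKTOPS_PER_SERVER))           # 5
print(capacity_after_failures(5, MAX_DESKTOPS_PER_SERVER))    # 600

# Four servers: 500 desktops with all online, 450 with one server down.
print(capacity_after_failures(4, MAX_DESKTOPS_PER_SERVER))    # 450
```

With all four servers online, 500 desktops works out to 125 per server, which leaves headroom below the assumed ceiling.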

This study was performed in conjunction with EMC's VSPEX program and aligns closely with the Cisco Solution for EMC VSPEX C500 Reference Architecture and Deployment Guide.

1.1 Solution Components Benefits

Each component of the overall solution materially contributes to the value of the functional design contained in this document.

1.1.1 Benefits of Cisco Unified Computing System

Cisco Unified Computing System™ is the first converged data center platform that combines industry-standard, x86-architecture servers with networking and storage access into a single converged system. The system is entirely programmable using unified, model-based management to simplify and speed deployment of enterprise-class applications and services running in bare-metal, virtualized, and cloud computing environments.

Benefits of the Unified Computing System include:

Architectural Flexibility

- Cisco UCS B-Series blade servers for infrastructure and virtual workload hosting
- Cisco UCS C-Series rack-mount servers for infrastructure and virtual workload hosting
- Cisco UCS 6200 Series second-generation fabric interconnects provide unified blade, network, and storage connectivity
- Cisco UCS 5108 Blade Chassis provide the perfect environment for multi-server type, multi-purpose workloads in a single containment

Infrastructure Simplicity

- Converged, simplified architecture drives increased IT productivity
- Cisco UCS management results in flexible, agile, high-performance, self-integrating information technology with faster ROI. With Cisco UCS Manager 2.1, introduced on November 21, 2012, Cisco UCS C-Series Servers and Fibre Channel (FC) SAN storage can be managed end-to-end by Cisco UCS 6200 Series Fabric Interconnects
- Fabric Extender technology reduces the number of system components to purchase, configure, and maintain
- Standards-based, high-bandwidth, low-latency, virtualization-aware unified fabric delivers high density and an excellent virtual desktop user experience

Business Agility

- Model-based management means faster deployment of new capacity for rapid and accurate scalability
- Scale up to 16 chassis and up to 128 blades in a single Cisco UCS management domain
- With Cisco UCS Manager 2.1 and Cisco UCS Central 1.0, the scope of management extends to many Cisco UCS domains
- Leverage Cisco UCS Management Packs for System Center 2012 for integrated management

1.1.2 Benefits of Nexus Switching

1.1.2.1 Cisco Nexus 5548 (NFS Variant)

The Cisco Nexus 5548UP Switch, used exclusively in the NFS variant of the EMC VSPEX C500 architecture, delivers innovative architectural flexibility, infrastructure simplicity, and business agility, with support for networking standards. For traditional, virtualized, unified, and high-performance computing (HPC) environments, it offers a long list of IT and business advantages, including:

Architectural Flexibility

- Unified ports that support traditional Ethernet, Fibre Channel (FC), and Fibre Channel over Ethernet (FCoE)
- Synchronizes system clocks with accuracy of less than one microsecond, based on IEEE 1588
- Offers converged Fabric extensibility, based on emerging standard IEEE 802.1BR, with Fabric Extender (FEX) Technology portfolio, including:
  - Nexus 1000V Virtual Distributed Switch
  - Cisco Nexus 2000 FEX
  - Adapter FEX
  - VM-FEX

Infrastructure Simplicity

- Common high-density, high-performance, data-center-class, fixed-form-factor platform
- Consolidates LAN and storage
- Supports any transport over an Ethernet-based fabric, including Layer 2 and Layer 3 traffic
- Supports storage traffic, including iSCSI, NAS, FC, RoE, and IBoE
- Reduces management points with FEX Technology

Business Agility

- Meets diverse data center deployments on one platform
- Provides rapid migration and transition for traditional and evolving technologies
- Offers performance and scalability to meet growing business needs

Specifications At-a-Glance

- A 1-rack-unit, 1/10 Gigabit Ethernet switch
- 32 fixed Unified Ports on base chassis and one expansion slot totaling 48 ports
- The slot can support any of the three modules: Unified Ports, 1/2/4/8 native Fibre Channel, and Ethernet or FCoE
- Throughput of up to 960 Gbps

1.1.2.2 Cisco Nexus 2232PP Fabric Extender (Fibre Channel Variant)

The Cisco Nexus 2232PP 10GE Fabric Extender provides 32 10 Gb Ethernet and Fibre Channel Over Ethernet (FCoE) Small Form-Factor Pluggable Plus (SFP+) server ports and eight 10 Gb Ethernet and FCoE SFP+ uplink ports in a compact 1 rack unit (1RU) form factor.

The Nexus 2232PP, in conjunction with VIC1225 converged network adapters in the Cisco UCS C220 M3 rack servers, provides fault-tolerant single-wire management of the rack servers through up to 8 uplink ports to Cisco Fabric Interconnects.
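The port counts above imply a worst-case oversubscription ratio between server-facing and uplink bandwidth. The sketch below works that out, assuming every port runs at 10 Gb/s and all ports are cabled; the function name is hypothetical, and the number of uplinks actually cabled in the study is not stated in this section.

```python
from fractions import Fraction


def oversubscription(server_ports: int, uplink_ports: int, port_gbps: int = 10) -> Fraction:
    """Ratio of server-facing bandwidth to uplink bandwidth."""
    downstream = server_ports * port_gbps
    upstream = uplink_ports * port_gbps
    return Fraction(downstream, upstream)


# All 32 server ports and all 8 uplinks in use.
full = oversubscription(32, 8)
print(f"{full.numerator}:{full.denominator}")    # 4:1

# Illustrative only: the same fabric extender with just 4 uplinks cabled.
half = oversubscription(32, 4)
print(f"{half.numerator}:{half.denominator}")    # 8:1
```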

Reduce TCO

- The innovative Fabric Extender approach reduces data center cabling costs and footprint with optimized inter-rack cabling
- Unified fabric and FCoE at the server access layer reduce capital expenditure and operating expenses

Simplify Operation

- Cisco UCS 6248UP or 6296UP Fabric Interconnects provide a single point of management and policy enforcement
- Plug-and-play management includes auto-configuration

Cisco Nexus 2232PPs were utilized in the FC variant of the study only.

1.1.3 Benefits of EMC VNX Family of Storage Controllers

The EMC VNX Family delivers industry leading innovation and enterprise capabilities for file, block, and object storage in a scalable, easy-to-use solution. This next-generation storage platform combines powerful and flexible hardware with advanced efficiency, management, and protection software to meet the demanding needs of today's enterprises.

All of this is available in a choice of systems ranging from affordable entry-level solutions to high-performance, petabyte-capacity configurations servicing the most demanding application requirements. The VNX family includes the VNXe Series, purpose-built for the IT generalist in smaller environments, and the VNX Series, designed to meet the high-performance, high-scalability requirements of midsize and large enterprises.

VNX Series - Simple, Efficient, Powerful

A robust platform for consolidation of legacy block storage, file servers, and direct-attached application storage, the VNX series enables organizations to dynamically grow, share, and cost-effectively manage multi-protocol file systems and multi-protocol block storage access. The VNX Operating Environment enables Microsoft Windows and Linux/UNIX clients to share files in multi-protocol (NFS and CIFS) environments. At the same time, it supports iSCSI, Fibre Channel, and FCoE access for high-bandwidth and latency-sensitive block applications. The combination of EMC Atmos Virtual Edition software and VNX storage supports object-based storage and enables customers to manage web applications from EMC Unisphere. The VNX series next-generation storage platform is powered by Intel quad-core Xeon 5600 series processors with a 6-Gb/s SAS drive back-end and delivers demonstrable performance improvements over the previous generation of mid-tier storage:

- Run Microsoft SQL and Oracle 3x to 10x faster
- Enable 2x system performance in less than 2 minutes, non-disruptively
- Provide up to 10 GB/s bandwidth for data warehouse applications

1.1.4 Benefits of VMware ESXi 5.0

As virtualization is now a critical component to an overall IT strategy, it is important to choose the right vendor. VMware is the leading business virtualization infrastructure provider, offering the most trusted and reliable platform for building private clouds and federating to public clouds.

Find out how only VMware delivers on the core requirements for a business virtualization infrastructure solution.

And best of all, VMware delivers while providing

1.1.5 Benefits of Citrix XenDesktop and Provisioning Server

XenDesktop is a comprehensive desktop virtualization solution that includes all the capabilities required to deliver desktops, apps and data securely to every user in an enterprise. Trusted by the world's largest organizations, XenDesktop has won numerous awards for its leading-edge technology and strategic approach to desktop virtualization.

XenDesktop helps businesses:

- Enable virtual workstyles to increase workforce productivity from anywhere
- Leverage the latest mobile devices to drive innovation throughout the business
- Rapidly adapt to change with fast, flexible desktop and app delivery for offshoring, M&A, branch expansion and other initiatives
- Transform desktop computing with centralized delivery, management and security

A complete line of XenDesktop editions lets you choose the ideal solution for your business needs and IT strategy. XenDesktop VDI edition, a scalable solution for delivering virtual desktops in a VDI scenario, includes Citrix HDX technology, provisioning services, and profile management. XenDesktop Enterprise edition is an enterprise-class desktop virtualization solution with FlexCast delivery technology that delivers the right type of virtual desktop with on-demand applications to any user, anywhere. The comprehensive Platinum edition includes advanced management, monitoring and security capabilities.

1.2 Audience

This document describes the architecture and deployment procedures of an infrastructure comprised of Cisco, EMC, VMware and Citrix virtualization. The intended audience of this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to deploy the solution described in this document.

2 Summary of Main Findings

The combination of technologies from Cisco Systems, Inc, Citrix Systems, Inc, VMware and EMC produced a highly efficient, robust and scalable Virtual Desktop Infrastructure (VDI) for a hosted virtual desktop deployment. Key components of the solution included:

•

The combined power of the Unified Computing System, Nexus switching and EMC storage hardware with VMware ESXi 5.0 Update 1, and Citrix XenDesktop 5.6 with Machine Creation Services software produces a high density per rack-server Virtual Desktop delivery system.

•

Cisco UCS C-220 M3 Rack-Mount Servers support 500-600 virtual desktops in N+1 server fault tolerance configuration based on the number of rack servers deployed.

•

The 500 seat design providing N+1 server fault tolerance for 450 users is based on four Cisco UCS C220 M3 1U rack-mount servers, each with dual 8-core processors, 256GB of 1600 MHz memory and a Cisco VIC1225 converged network adapter

•

The 600 seat design providing N+1 server fault tolerance for 600 users is based on five Cisco UCS C220 M3 1U rack-mount servers, each with dual 8-core processors, 256GB of 1600 MHz memory and a Cisco VIC1225 converged network adapter

•

We were able to boot the full complement of desktops in under 15 minutes without pegging the processor, exhausting memory or storage subsystems

•

We were able to ramp (log in and exercise workloads) up to steady state in thirty minutes without pegging the processor, exhausting memory or storage subsystems

•

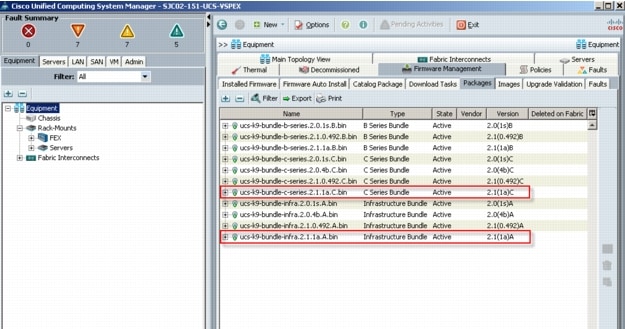

We maintain our industry leadership with our new Cisco UCS Manager 2.1(1a) software that makes scaling simple, consistency guaranteed and maintenance simple.

•

Our 10G unified fabric story gets additional validation on second generation 6200 Series Fabric Interconnects and second generation Nexus 5500 Series access switches and Nexus 2200 Series fabric extenders as we run more challenging workload testing, maintaining unsurpassed user response times.

•

For the Managed C-Series FC variant, utilizing Cisco UCS Manager 2.1 Service Templates and Service Profiles in conjunction with Nexus 2232PP Fabric Extenders, we were able fully configure all five Cisco UCS C220 M3 servers from cold start to ready to deploy VMware ESXi 5 boot from SAN in 30 minutes

•

For the Managed C-Series FC variant of the study, utilizing Cisco UCS Manager 2.1, we were able to connect and integrate the EMC VNX5300 via FC on our Cisco UCS 6248UP Fabric Interconnects, including FC zoning, eliminating the requirement for upstream access layer switching or fiber channel switches for that purpose

•

For the Unmanaged C-Series NFS variant of the study, we use a pair of Nexus 5548UP access layer switches to directly attach the unmanaged Cisco UCS C220 M3 servers and the EMC VNX5300

•

Pure Virtualization: We continue to present a validated design that is 100 percent virtualized on ESXi 5.0 Update 1. All of the Windows 7 SP1 virtual desktops and supporting infrastructure components, including Active Directory, Profile Servers, SQL Servers, and XenDesktop delivery controllers were hosted as virtual servers.

•

EMC's VNX5300 system provides storage consolidation and outstanding efficiency. Both block and NFS storage resources were provided by a single system, utilizing EMC Fast Cache technology.

•

Whether using the Managed C-Series FC or the Unmanaged C-Series NFS variant and the EMC VNX storage layout prescribed in this document, the same outstanding end user experience is achieved as measured by Login VSI 3.6 testing

•

Citrix HDX technology, extended in XenDesktop 5.6 Feature Pack 1 software, provides excellent performance with host-rendered flash video and other demanding applications.

3 Architecture

3.1 Hardware Deployed

The architecture deployed is highly modular. While each customer's environment might vary in its exact configuration, once the reference architecture contained in this document is built, it can easily be scaled as requirements and demands change. This includes scaling both up (adding additional resources within a UCS Domain) and out (adding additional Cisco UCS Domains and VNX Storage arrays).

The 500 User XenDesktop 5.6 solution, with N+1 fault tolerance for 450 users, includes Cisco networking, four Cisco UCS C220 M3 Rack-Mount Servers and an EMC VNX5300 storage system.

The 600 User XenDesktop 5.6 solution, with N+1 fault tolerance for 600 users, includes Cisco networking, five Cisco UCS C220 M3 Rack-Mount Servers and the same EMC VNX5300 storage system. The study illustrates this configuration of the solution.

The same VNX5300 configuration is used with the 500 and 600 user examples.

Two variants to the design are offered:

- Managed C-Series with Fibre Channel (FC) Storage
- Unmanaged C-Series with NFS Storage

This document details the deployment of Citrix XenDesktop 5.6 with Machine Creation Services on VMware ESXi 5.0 Update 1.

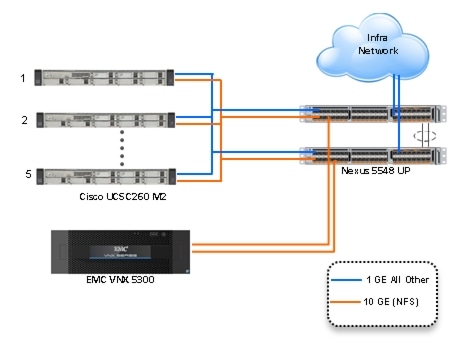

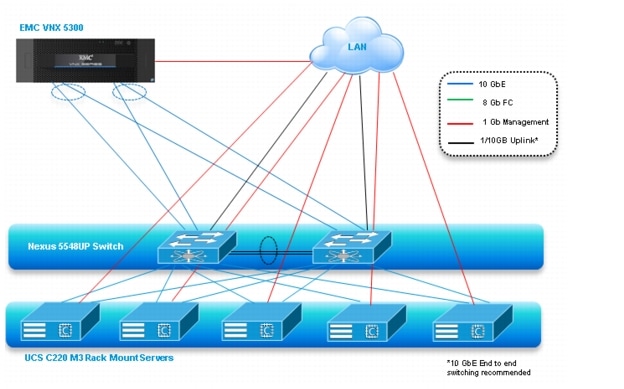

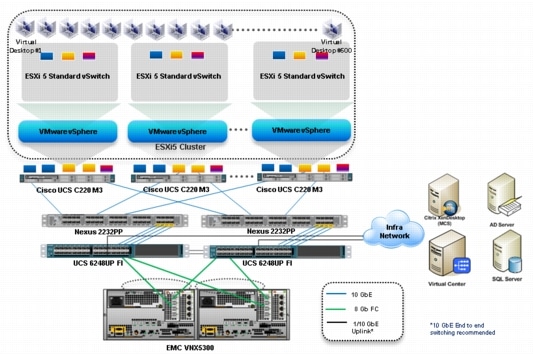

Figure 1 Citrix XenDesktop 5.6 600 User Hardware Components- Managed C220 M3 Rack-Mount Servers 8 Gb Fibre Channel Storage to Cisco UCS Connectivity

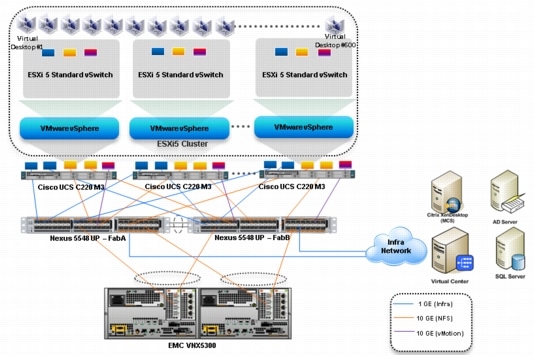

Figure 2 Citrix XenDesktop 5.6 600 User Hardware Components- Unmanaged C220 M3 Rack-Mount Servers 10 Gb Ethernet NFS Storage to Cisco UCS Connectivity

The reference configuration includes:

- Two Cisco Nexus 5548UP switches (Unmanaged C-Series NFS Variant only)
- Two Cisco UCS 6248UP Series Fabric Interconnects (Managed C-Series FC Variant only)
- Two Cisco Nexus 2232PP Fabric Extenders (Managed C-Series FC Variant only)
- Five Cisco UCS C220 M3 Rack-Mount Servers with Intel E5-2690 processors, 256 GB RAM, and VIC1225 CNAs for 600 VDI workloads with N+1 server fault tolerance for 600 desktops
- Four Cisco UCS C220 M3 Rack-Mount Servers with Intel E5-2690 processors, 256 GB RAM, and VIC1225 CNAs for 500 VDI workloads with N+1 server fault tolerance for 450 desktops
- One EMC VNX5300 dual-controller storage system for HA, with two Data Movers, 600 GB SAS drives and 200 GB SSD FAST Cache drives

The EMC VNX5300 disk shelf, disk and Fast Cache configurations are detailed in Section 5.4 Storage Architecture Design later in this document.
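Because the component list differs by variant and by desktop count, it can help to express it as data. The following is a rough sketch only: the dictionary keys, the variant labels `managed_fc` and `unmanaged_nfs`, and the function name are hypothetical, while the counts are copied from the reference configuration list above.

```python
# Components present in both variants.
SHARED = {"EMC VNX5300 storage system": 1}

# Components that appear in only one variant of the design.
VARIANT_COMPONENTS = {
    "managed_fc": {
        "Cisco UCS 6248UP Fabric Interconnect": 2,
        "Cisco Nexus 2232PP Fabric Extender": 2,
    },
    "unmanaged_nfs": {
        "Cisco Nexus 5548UP switch": 2,
    },
}

# Desktop target -> number of Cisco UCS C220 M3 servers in the design.
SERVERS_FOR_DESKTOPS = {500: 4, 600: 5}


def bill_of_materials(variant: str, desktops: int) -> dict:
    """Return component counts for one variant and desktop target."""
    if variant not in VARIANT_COMPONENTS:
        raise ValueError(f"unknown variant: {variant!r}")
    if desktops not in SERVERS_FOR_DESKTOPS:
        raise ValueError(f"no design listed for {desktops} desktops")
    bom = {"Cisco UCS C220 M3 rack-mount server": SERVERS_FOR_DESKTOPS[desktops]}
    bom.update(VARIANT_COMPONENTS[variant])
    bom.update(SHARED)
    return bom


for name, count in bill_of_materials("managed_fc", 600).items():
    print(f"{count} x {name}")
```

Keeping the variant-specific parts in a separate table makes it clear that the servers and the storage array are common to both designs, and only the switching layer changes.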

3.2 Software Revisions

Table 1 Software Used in this Deployment

3.3 Configuration Guidelines

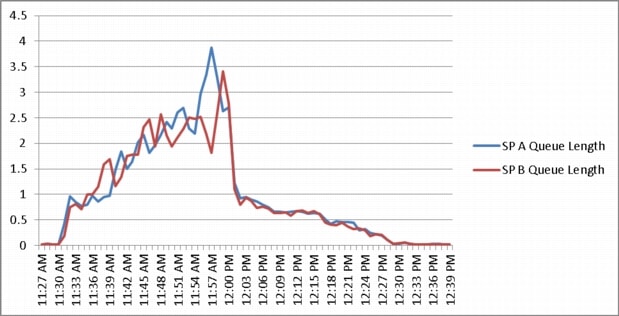

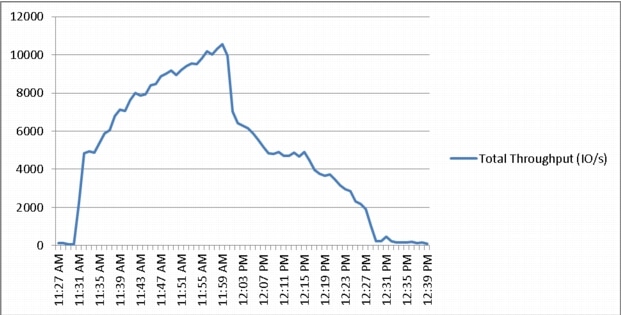

The 500-600 User XenDesktop 5.6 solution described in this document provides details for configuring a fully redundant, highly-available configuration. Configuration guidelines are provided that refer to which redundant component is being configured with each step, whether that be A or B. For example, SP A and SP B are used to identify the two EMC VNX storage controllers that are provisioned with this document while Nexus A and Nexus B identify the pair of Cisco Nexus switches that are configured. The Cisco UCS Fabric Interconnects are configured similarly.

This document is intended to allow the reader to configure the Citrix XenDesktop 5.6 with Machine Creation Services customer environment as a stand-alone solution.

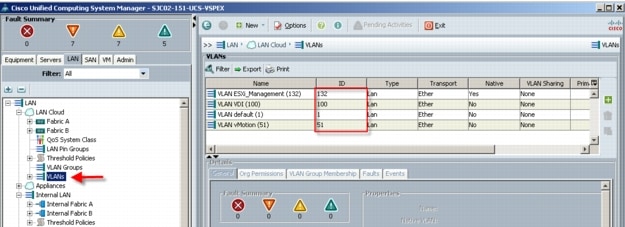

3.3.1 VLANs

For the 500 User XenDesktop 5.6 solution, we utilized VLANs to isolate and apply access strategies to various types of network traffic. Table 2 details the VLANs used in this study.

Table 2 VLANs

3.3.2 VMware Clusters

We utilized two VMware Clusters to support the solution and testing environment in both variants:

- Infrastructure Cluster (Active Directory, DNS, DHCP, SQL Server, File Shares for user profiles, XenDesktop controllers, etc.). This would likely be the customer's existing infrastructure cluster, adding a pair of XenDesktop 5.6 virtual machines and a SQL database to an existing SQL server to support them.
- VDA Cluster (Windows 7 SP1 32-bit pooled virtual desktops)

4 Infrastructure Components

This section describes all of the infrastructure components used in the solution outlined in this study.

4.1 Cisco Unified Computing System (UCS)

Cisco Unified Computing System is a set of pre-integrated data center components that comprises blade servers, adapters, fabric interconnects, and extenders that are integrated under a common embedded management system. This approach results in far fewer system components and much better manageability, operational efficiencies, and flexibility than comparable data center platforms.

4.1.1 Cisco Unified Computing System Components

Cisco UCS components are shown in Figure 3.

Figure 3 Cisco Unified Computing System Components

The Cisco Unified Computing System is designed from the ground up to be programmable and self-integrating. A server's entire hardware stack, ranging from server firmware and settings to network profiles, is configured through model-based management. With Cisco virtual interface cards, even the number and type of I/O interfaces is programmed dynamically, making every server ready to power any workload at any time.

With model-based management, administrators manipulate a model of a desired system configuration, associate a model's service profile with hardware resources and the system configures itself to match the model. This automation speeds provisioning and workload migration with accurate and rapid scalability. The result is increased IT staff productivity, improved compliance, and reduced risk of failures due to inconsistent configurations.
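As a rough illustration of the model-based idea described above, the following Python sketch treats a service profile as plain data and "associates" it with a server by applying the desired settings to it. The class names, fields, and the `associate` function are hypothetical and are not the Cisco UCS Manager API; they only mirror the describe-then-apply workflow.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ServiceProfile:
    """Desired configuration (hypothetical fields, not the UCS Manager schema)."""
    name: str
    firmware: str
    bios_settings: dict
    vnics: tuple  # names of the virtual NICs to present to the host
    vhbas: tuple  # names of the virtual HBAs to present to the host


@dataclass
class Server:
    """Physical server whose state is driven by the associated profile."""
    serial: str
    firmware: str = "unknown"
    bios_settings: dict = field(default_factory=dict)
    interfaces: list = field(default_factory=list)
    profile_name: str = ""


def associate(profile: ServiceProfile, server: Server) -> Server:
    """Make the server match the model; the same profile yields the same state."""
    server.firmware = profile.firmware
    server.bios_settings = dict(profile.bios_settings)  # copy, do not share
    server.interfaces = [*profile.vnics, *profile.vhbas]
    server.profile_name = profile.name
    return server


# Illustrative values only.
profile = ServiceProfile(
    name="vdi-host",
    firmware="example-fw-1",
    bios_settings={"hyperthreading": "enabled"},
    vnics=("eth0", "eth1"),
    vhbas=("fc0", "fc1"),
)
servers = [associate(profile, Server(serial=f"SRV{i}")) for i in range(3)]
```

Because every server is derived from the same model, configuration drift between hosts is avoided by construction, which is the point the paragraph above makes about consistent configurations.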

Cisco Fabric Extender technology reduces the number of system components to purchase, configure, manage, and maintain by condensing three network layers into one. It eliminates both blade server and hypervisor-based switches by connecting fabric interconnect ports directly to individual blade servers and virtual machines. Virtual networks are now managed exactly as physical networks are, but with massive scalability. This represents a radical simplification over traditional systems, reducing capital and operating costs while increasing business agility, simplifying and speeding deployment, and improving performance.

Note

Only the Cisco UCS C-Series Rack-Mount Servers, specifically the Cisco UCS C220 M3, were used in both the Managed FC variant and the Unmanaged NFS variant for this study. For the Managed FC variant, Cisco UCS 6248UP Fabric Interconnects and Nexus 2232PPs were used in conjunction with the Cisco UCS C220 M3s and the EMC VNX5300. For the Unmanaged NFS variant, Nexus 5548UPs were used in conjunction with the Cisco UCS C220 M3s and the EMC VNX5300.

The components of the Cisco Unified Computing System and Nexus switches that were used in the study are discussed below.

4.1.1 Cisco Fabric Interconnects (Managed FC Variant Only)

Cisco UCS Fabric Interconnects create a unified network, storage and management fabric throughout the Cisco UCS. They provide uniform access to both networks and storage, eliminating the barriers to deploying a fully virtualized environment based on a flexible, programmable pool of resources.

Cisco Fabric Interconnects comprise a family of line-rate, low-latency, lossless 10-GE, Cisco Data Center Ethernet, and FCoE interconnect switches. Based on the same switching technology as the Cisco Nexus 5000 Series, Cisco UCS 6000 Series Fabric Interconnects provide the additional features and management capabilities that make them the central nervous system of Cisco Unified Computing System.

The Cisco UCS Manager software runs inside the Cisco UCS Fabric Interconnects. The Cisco UCS 6000 Series Fabric Interconnects expand the Cisco UCS networking portfolio and offer higher capacity, higher port density, and lower power consumption. These interconnects provide the management and communication backbone for the Cisco UCS B-Series Blades and Cisco UCS Blade Server Chassis.

All chassis and all blades that are attached to the Fabric Interconnects are part of a single, highly available management domain. By supporting unified fabric, the Cisco UCS 6200 Series provides the flexibility to support LAN and SAN connectivity for all blades within its domain right at configuration time. Typically deployed in redundant pairs, the Cisco UCS Fabric Interconnect provides uniform access to both networks and storage, facilitating a fully virtualized environment.

The Cisco UCS Fabric Interconnect family currently comprises the Cisco 6100 Series and Cisco 6200 Series of Fabric Interconnects.

4.1.2.1 Cisco UCS 6248UP 48-Port Fabric Interconnect

The Cisco UCS 6248UP 48-Port Fabric Interconnect is a 1 RU, 10-GE, Cisco Data Center Ethernet, FCoE interconnect providing more than 1 Tbps throughput with low latency. It has 32 fixed ports of Fibre Channel, 10-GE, Cisco Data Center Ethernet, and FCoE SFP+ ports.

One expansion module slot can provide up to sixteen additional ports of Fibre Channel, 10-GE, Cisco Data Center Ethernet, and FCoE SFP+. The expansion module was not required for this study.

4.1.2 Cisco UCS C220 M3 Rack-Mount Server

Cisco Unified Computing System is the first truly unified data center platform that combines industry-standard, x86-architecture blade and rack servers with networking and storage access into a single system. Key innovations in the platform include a standards-based unified network fabric, Cisco Virtualized Interface Card (VIC) support, and Cisco UCS Manager Service Profile and Direct Storage Connection support. The system uses a wire-once architecture with a self-aware, self-integrating, intelligent infrastructure that eliminates the time-consuming, manual, error-prone assembly of components into systems.

Managed Cisco UCS C-Series Rack-Mount Servers reduce total cost of ownership (TCO) and increase business agility by extending Cisco Unified Computing System™ innovations to a rack-mount form factor. These servers:

•

Can be managed and provisioned centrally using Cisco UCS Service Profiles with Cisco UCS Manager 2.1(1a), Cisco UCS Fabric Interconnects, and Nexus 2232PP Fabric Extenders

•

Offer a form-factor-agnostic entry point into the Cisco Unified Computing System, which is a single converged system with configuration automated through integrated, model-based management

•

Simplify and speed deployment of applications

•

Increase customer choice with unique benefits in a familiar rack package

•

Offer investment protection through the capability to deploy them either as standalone servers or as part of the Cisco Unified Computing System

Note

This study highlights the use of Managed Cisco UCS C-Series Rack-Mount servers in the FC variant. The alternative NFS variant utilizes the Cisco UCS C220 M3 servers in stand-alone mode.

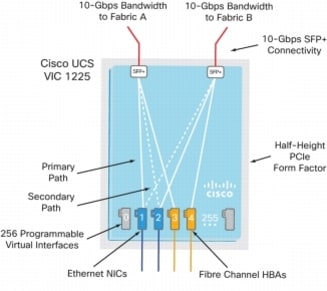

4.1.3 Cisco UCS Virtual Interface Card (VIC) Converged Network Adapter

A Cisco® innovation, the Cisco UCS Virtual Interface Card (VIC) 1225 (Figure 4) is a dual-port Enhanced Small Form-Factor Pluggable (SFP+) 10 Gigabit Ethernet and Fibre Channel over Ethernet (FCoE)-capable PCI Express (PCIe) card designed exclusively for Cisco UCS C-Series Rack Servers. With its half-height design, the card preserves full-height slots in servers for third-party adapters certified by Cisco. It incorporates next-generation converged network adapter (CNA) technology from Cisco, providing investment protection for future feature releases.

4.1.3.1 Cisco UCS Virtual Interface Card 1225

The card enables a policy-based, stateless, agile server infrastructure that can present up to 256 PCIe standards-compliant interfaces to the host that can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs). In addition, the Cisco UCS VIC 1225 supports Cisco Data Center Virtual Machine Fabric Extender (VM-FEX) technology, which extends the Cisco UCS fabric interconnect ports to virtual machines, simplifying server virtualization deployment.

Figure 4 Cisco UCS VIC M81KR Converged Network Adapter

Note

The Cisco UCS VIC 1225 virtual interface cards are deployed in the Cisco UCS C-Series C220 M3 rack-mount servers.

4.2 Citrix XenDesktop

Citrix XenDesktop is a desktop virtualization solution that delivers Windows desktops as an on-demand service to any user, anywhere. With FlexCast™ delivery technology, XenDesktop can quickly and securely deliver individual applications or complete desktops to the entire enterprise, whether users are task workers, knowledge workers or mobile workers. Users now have the flexibility to access their desktop on any device, anytime, with a high definition user experience. With XenDesktop, IT can manage single instances of each OS, application, and user profile and dynamically assemble them to increase business agility and greatly simplify desktop management. XenDesktop's open architecture enables customers to easily adopt desktop virtualization using any hypervisor, storage, or management infrastructure.

4.2.1 Enhancements in Citrix XenDesktop 5.6 Feature Pack 1

XenDesktop 5.6 Feature Pack 1 builds upon the themes of the last release: reducing cost and making desktop virtualization easier to implement. Below is an overview of the new or updated technologies and capabilities contained in Feature Pack 1:

•

Remote PC - Extends the FlexCast physical delivery model to include secure remote connections to office-based PCs with a high-definition user experience leveraging Receiver and HDX technologies. Simple auto-assignment setup is included so Remote PC can be easily provisioned to thousands of users. With this new FlexCast delivery feature, Citrix is simplifying desktop transformation by creating an easy on-ramp for desktop virtualization.

•

Universal Print Server - Combined with the previously available Universal Print Driver, administrators may now install a single driver in the virtual desktop image or application server to permit local or network printing from any device, including thin clients and tablets.

•

Optimized Unified Communications - A new connector from Citrix enables Microsoft Lync 2010 clients to create peer-to-peer connections for the ultimate user experience, while taking the load off datacenter processing and bandwidth resources. The Cisco Virtualization Experience Client (VXC), announced October 2011, was the first in the industry to provide peer-to-peer connection capability to deliver uncompromised user experience benefits to Citrix customers. Webcam Video Compression adds support for WebEx (in addition to Office Communicator, GoToMeeting HDFaces, Skype and Adobe Connect).

•

Mobility Pack for VDI - With the new Mobility Pack, XenDesktop dynamically transforms the user interfaces of Windows desktops and applications to look and feel like the native user interface of smartphones and tablets. Now, your existing Windows applications adapt to the way users interact with applications on smaller devices without any source code changes. Previously, this technology was available only for XenApp.

•

HDX 3D Pro - This HDX update provides breakthrough visual performance for high-end, graphics-intensive applications by producing much faster frame rates using NVIDIA's latest API and leveraging a new, ultra-efficient, deep compression codec.

•

XenClient Enterprise - XenClient 4.1 now supports 9x more PCs, has wider graphics support with NVIDIA graphics, and has broader server hypervisor support. Its backend management can now run on XenServer, vSphere, and Hyper-V. The release brings robust policy controls and role-based administration to the platform. XenClient 4.1 delivers enterprise-level scalability with support for up to 10,000 endpoints.

•

Simple License Service - This new license service automatically allocates and installs your XenDesktop and/or XenApp licenses directly from your license server, eliminating the need to go to My Citrix to fully allocate your licenses. For more details, reference Citrix edocs. Version 11.6.1 or higher of the License Server is required.

4.2.2 FlexCast Technology

Citrix XenDesktop with FlexCast is an intelligent delivery technology that recognizes the user, device, and network, and delivers the correct virtual desktop and applications specifically tailored to meet the performance, security, and flexibility requirements of the user scenario. FlexCast technology delivers any type of virtual desktop to any device and can change this mix at any time. FlexCast also includes on-demand applications to deliver any type of virtual applications to any desktop, physical or virtual.

The FlexCast delivery technologies can be broken down into the following categories:

•

Hosted Shared Desktops provide a locked down, streamlined and standardized environment with a core set of applications, ideally suited for task workers running a few lower-intensity applications with light personalization requirements

•

Hosted VM Desktops offer a personalized Windows desktop experience, typically needed by knowledge workers with higher application performance needs and high personalization requirements

•

Streamed Virtual Hard Disk (VHD) Desktops use the local processing power of rich clients while providing centralized single image management of the desktop. These types of desktops are often used in computer labs and training facilities and when users require local processing for certain applications or peripherals.

•

Local VM Desktops utilize XenClient to extend the benefits of centralized, single-instance management to mobile workers that need to use their laptops offline. When they are able to connect to a suitable network, changes to the OS, applications, and user data are automatically synchronized with the data center.

•

Physical Desktops utilize the Remote PC feature in XenDesktop to create secure remote connections to physical PCs on a LAN without having to build out a large scale XenDesktop infrastructure in the data center.

•

On-demand Applications allow any Windows® application to be centralized and managed in the data center, hosted either on multi-user terminal servers or VMs and instantly delivered as a service to physical and virtual desktops. Optimized for each user device, network, and location, applications are delivered through a high speed protocol for use while connected or streamed through Citrix application virtualization or Microsoft App-V directly to the endpoint for use when offline.

4.2.3 High-Definition User Experience Technology

Citrix High-Definition User Experience (HDX) technology is a set of capabilities that delivers a high definition desktop virtualization user experience to end users for any application, device, or network. These user experience enhancements balance performance with low bandwidth, whether it be plugging in a USB device, printing from a network printer or rendering real time video and audio. Citrix HDX technology provides network and application performance optimizations for a "like local PC" experience over LANs and a very usable experience over low bandwidth and high latency WAN connections.

4.2.4 Citrix XenDesktop Hosted VM Overview

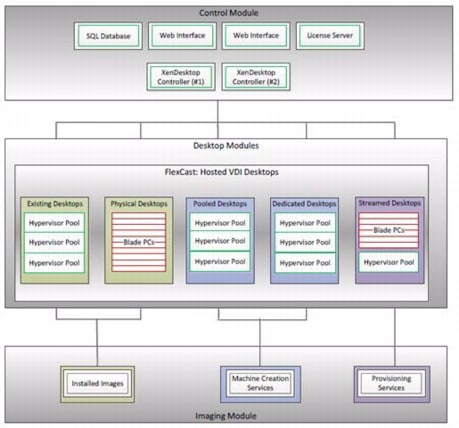

Hosted VM uses a hypervisor to host all the desktops in the data center. Hosted VM desktops can either be pooled or assigned. Pooled virtual desktops use Citrix Provisioning Services to stream a standard desktop image to each desktop instance upon boot-up. Therefore, the desktop is always returned to its clean, original state. Citrix Provisioning Services enables the streaming of a single desktop image to create multiple virtual desktops on one or more hypervisors in a data center. This feature greatly reduces the amount of storage required compared to other methods of creating virtual desktops. The high-level components of a Citrix XenDesktop architecture utilizing the Hosted VM model for desktop delivery are shown in Figure 5.

Figure 5 Citrix XenDesktop on VMware vSphere

Components of a Citrix XenDesktop architecture using Hosted VM include:

•

Virtual Desktop Agent: The Virtual Desktop Agent (VDA) is installed on the virtual desktops and enables direct Independent Computing Architecture (ICA) connections between the virtual desktop and user devices with the Citrix online plug-in.

•

Desktop Delivery Controller: The XenDesktop controllers are responsible for maintaining the proper level of idle desktops to allow for instantaneous connections, monitoring the state of online and connected virtual desktops, and shutting down virtual desktops as needed. The primary XenDesktop controller is configured as the farm master server. The farm master is able to focus on its role of managing the farm when an additional XenDesktop controller acts as a dedicated XML server. The XML server is responsible for user authentication, resource enumeration, and the desktop launching process. A failure in the XML broker service will result in users being unable to start their desktops, which is why multiple controllers per farm are recommended.

•

Citrix Receiver: Installed on user devices, Citrix Receiver enables direct HDX connections from user devices to virtual desktops. Receiver is a mobile workspace available on a range of platforms so users can connect to their Windows applications and desktops from devices of their choice. Receiver for Web is also available for devices that don't support a native Receiver. Receiver incorporates the Citrix® ICA® client engine and other technologies needed to communicate directly with backend resources, such as StoreFront.

•

Citrix XenApp: Citrix XenApp is an on-demand application delivery solution that enables any Windows application to be virtualized, centralized, managed in the data center, and instantly delivered as a service to users anywhere on any device. XenApp can be used to deliver both virtualized applications and virtualized desktops. In the Hosted VM model, XenApp is typically used for on-demand access to streamed and hosted applications.

•

Provisioning Services: PVS creates and provisions virtual desktops from a single desktop image (vDisk) on demand, optimizing storage utilization and providing a pristine virtual desktop to each user every time they log on. Desktop provisioning also simplifies desktop images, provides the best flexibility, and offers fewer points of desktop management for both applications and desktops. The Trivial File Transfer Protocol (TFTP) and Pre-boot eXecution Environment (PXE) services are required for the virtual desktop to boot off the network and download the bootstrap file which instructs the virtual desktop to connect to the PVS server for registration and vDisk access instructions.

•

Personal vDisk: Personal vDisk technology is a powerful new tool that provides the persistence and customization users want with the management flexibility IT needs in pooled VDI deployments. Personal vDisk technology gives these users the ability to have a personalized experience of their virtual desktop. Personal apps, data and settings are easily accessible each time they log on. This enables broader enterprise-wide deployments of pooled virtual desktops by storing a single copy of Windows centrally, and combining it with a personal vDisk for each employee, enhancing user personalization and reducing storage costs.

•

Hypervisor: XenDesktop has an open architecture that supports the use of XenServer, Microsoft Hyper-V, or VMware vSphere. For the purposes of the testing documented in this paper, VMware vSphere was the hypervisor of choice.

•

StoreFront: StoreFront is the next generation of Web Interface and provides the user interface to the XenDesktop environment. StoreFront brokers user authentication, enumerates the available desktops and, upon launch, delivers an .ica file to Citrix Receiver on the user's local device to initiate a connection. Because StoreFront is a critical component, redundant servers must be available to provide fault tolerance.

•

License Server: The Citrix License Server is responsible for managing the licenses for all of the components of XenDesktop. XenDesktop has a 90 day grace period which allows the system to function normally for 90 days if the license server becomes unavailable. This grace period offsets the complexity involved with building redundancy into the license server.

•

Data Store: Each XenDesktop farm requires a database called the data store. Citrix XenDesktop uses the data store to centralize configuration information for a farm in one location. The data store maintains all the static information about the XenDesktop environment.

•

Domain Controller: The Domain Controller hosts Active Directory, Dynamic Host Configuration Protocol (DHCP), and Domain Name System (DNS). Active Directory provides a common namespace and secure method of communication between all the servers and desktops in the environment. DNS provides IP Host name resolution for the core XenDesktop infrastructure components. DHCP is used by the virtual desktop to request and obtain an IP address from the DHCP service. DHCP uses Option 66 and 67 to specify the bootstrap file location and file name to a virtual desktop. The DHCP service receives requests on UDP port 67 and sends data to UDP port 68 on a virtual desktop. The virtual desktops then have the operating system streamed over the network utilizing Citrix Provisioning Services (PVS).
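The DHCP behavior described in the last item can be sketched in a few lines of Python. The example below decodes a DHCP options field, which is a sequence of code, length, and value entries, and extracts Option 66 (TFTP server name) and Option 67 (bootfile name). The host name and file name are placeholders chosen for illustration, not values from this study, and the parser is a minimal sketch rather than a complete DHCP implementation.

```python
DHCP_SERVER_PORT = 67  # UDP port on which the DHCP service receives requests
DHCP_CLIENT_PORT = 68  # UDP port on which the virtual desktop receives replies

OPT_PAD = 0            # single-byte padding, carries no length or value
OPT_TFTP_SERVER = 66   # bootstrap file location (TFTP server name)
OPT_BOOTFILE = 67      # bootstrap file name
OPT_END = 255          # marks the end of the options field


def parse_options(raw: bytes) -> dict:
    """Decode a DHCP options field into {option code: value bytes}."""
    options = {}
    i = 0
    while i < len(raw):
        code = raw[i]
        if code == OPT_END:
            break
        if code == OPT_PAD:
            i += 1
            continue
        if i + 1 >= len(raw):
            break  # truncated entry: stop rather than read past the buffer
        length = raw[i + 1]
        options[code] = raw[i + 2 : i + 2 + length]
        i += 2 + length
    return options


def encode_option(code: int, value: str) -> bytes:
    """Encode one option as code byte, length byte, then ASCII value."""
    data = value.encode("ascii")
    return bytes([code, len(data)]) + data


# Placeholder values for illustration only.
raw = (
    encode_option(OPT_TFTP_SERVER, "pvs01.example.local")
    + encode_option(OPT_BOOTFILE, "bootstrap.bin")
    + bytes([OPT_END])
)
opts = parse_options(raw)
boot_server = opts[OPT_TFTP_SERVER].decode("ascii")
boot_file = opts[OPT_BOOTFILE].decode("ascii")
```

A booting virtual desktop would use `boot_server` and `boot_file` to fetch the bootstrap file over TFTP before contacting the PVS server.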

All of the aforementioned components interact to provide a virtual desktop to an end user based on the FlexCast Hosted VM desktop delivery model leveraging the Provisioning Services feature of XenDesktop. This architecture provides the end user with a pristine desktop at each logon based on a centralized desktop image that is owned and managed by IT.

4.2.5 Citrix XenDesktop Hosted Shared Desktop Overview

In a typical large enterprise environment, IT will implement a mixture of FlexCast technologies to meet various workstyle needs. As in the test described in this document, hosted shared desktops can be deployed alongside hosted VM desktops.

Hosted shared desktops have been a proven Citrix offering for many years and are deployed in some of the largest enterprises today because of their ease of deployment, reliability, and scalability. Hosted shared desktops are appropriate for environments that have a standardized set of applications that do not deviate from one user to another. All users share the same desktop interface hosted on a Windows server in the backend datacenter. Hence, the level of desktop customization is limited compared to a Hosted VM desktop model.

If VM isolation is required and the ability to allocate resources to one user over another is important, the Hosted VM desktop should be the model of choice.

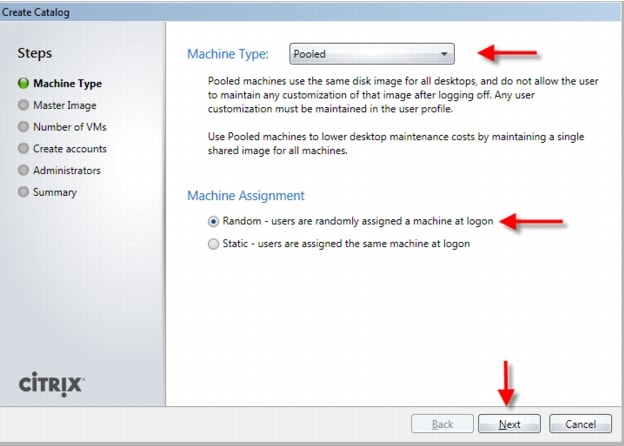

4.2.6 Citrix Machine Creation Services

Citrix Machine Creation Services (MCS) is the option for desktop image delivery used in this study. It uses the hypervisor APIs (XenServer, Hyper-V, and vSphere) to create, start, stop, and delete virtual machines. If you want to create a catalog of desktops with MCS, choose from the following:

•

Pooled-Random: Pooled desktops are assigned to random users. When a user logs off, the desktop is free for another user. When the desktop is rebooted, any changes made are destroyed.

•

Pooled-Static: Pooled desktops are permanently assigned to a single user. When a user logs off, only that user can use the desktop, regardless of whether the desktop is rebooted. During reboots, any changes made are destroyed.

•

Pooled-Personal vDisk: Retains the single image management of pooled desktops while allowing the statically assigned user to install apps and change their desktop settings. These changes are stored on the user's personal vDisk. The pooled desktop image is not altered when a personal vDisk is used.

•

Dedicated: Desktops are permanently assigned to a single user. When a user logs off, only that user can use the desktop, regardless of whether the desktop is rebooted. Any changes made persist across reboots and subsequent startups.

In this study, Pooled-Random desktops were created and managed by Citrix Machine Creation Services.
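The four catalog options differ along two axes: whether a desktop stays assigned to one user, and what happens to that user's changes on reboot. The Python sketch below encodes those rules as data so they can be compared side by side. The type and function names are hypothetical and do not correspond to any Citrix SDK; the behavior values are taken only from the descriptions above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class CatalogType(Enum):
    POOLED_RANDOM = "Pooled-Random"
    POOLED_STATIC = "Pooled-Static"
    POOLED_PERSONAL_VDISK = "Pooled-Personal vDisk"
    DEDICATED = "Dedicated"


@dataclass(frozen=True)
class CatalogBehavior:
    statically_assigned: bool    # desktop stays with one user after logoff
    changes_after_reboot: str    # what happens to user changes on reboot


BEHAVIOR = {
    CatalogType.POOLED_RANDOM: CatalogBehavior(False, "discarded"),
    CatalogType.POOLED_STATIC: CatalogBehavior(True, "discarded"),
    CatalogType.POOLED_PERSONAL_VDISK: CatalogBehavior(
        True, "kept on personal vDisk"
    ),
    CatalogType.DEDICATED: CatalogBehavior(True, "kept"),
}


def may_use(catalog: CatalogType, assigned_user: Optional[str], user: str) -> bool:
    """Return True if `user` may take a free desktop from this catalog type."""
    if not BEHAVIOR[catalog].statically_assigned:
        return True  # a free Pooled-Random desktop can go to any user
    return assigned_user == user


# The study used Pooled-Random desktops: any user may take a free desktop.
study_catalog = CatalogType.POOLED_RANDOM
```

Under these rules, `may_use(study_catalog, None, "alice")` is true for any user name, while a Pooled-Static or Dedicated desktop is only available to the user it was assigned to.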

4.3 EMC VNX Series

The VNX series delivers uncompromising scalability and flexibility for the mid-tier while providing market-leading simplicity and efficiency to minimize total cost of ownership. Customers can benefit from VNX features such as:

•

Next-generation unified storage, optimized for virtualized applications.

•

Extended cache by using Flash drives with Fully Automated Storage Tiering for Virtual Pools (FAST VP) and FAST Cache that can be optimized for the highest system performance and lowest storage cost simultaneously on both block and file.

•

Multiprotocol support for file, block, and object with object access through EMC Atmos™ Virtual Edition (Atmos VE).

•

Simplified management with EMC Unisphere™ for a single management framework for all NAS, SAN, and replication needs.

•

Up to three times improvement in performance with the latest Intel Xeon multicore processor technology, optimized for Flash.

•

6 Gb/s SAS back end with the latest drive technologies supported:

–

3.5" 100 GB and 200 GB Flash, 3.5" 300 GB, and 600 GB 15k or 10k rpm SAS, and 3.5" 1 TB, 2 TB and 3 TB 7.2k rpm NL-SAS

–

2.5" 100 GB and 200 GB Flash, 300 GB, 600 GB and 900 GB 10k rpm SAS

•

Expanded EMC UltraFlex™ I/O connectivity—Fibre Channel (FC), Internet Small Computer System Interface (iSCSI), Common Internet File System (CIFS), network file system (NFS) including parallel NFS (pNFS), Multi-Path File System (MPFS), and Fibre Channel over Ethernet (FCoE) connectivity for converged networking over Ethernet.

The VNX series includes five software suites and three software packs that make it easier and simpler to attain the maximum overall benefits.

Software suites available:

•

VNX FAST Suite—Automatically optimizes for the highest system performance and the lowest storage cost simultaneously

•

VNX Local Protection Suite—Practices safe data protection and repurposing.

•

VNX Remote Protection Suite—Protects data against localized failures, outages, and disasters.

•

VNX Application Protection Suite—Automates application copies and proves compliance.

•

VNX Security and Compliance Suite—Keeps data safe from changes, deletions, and malicious activity.

Software packs available:

•

VNX Total Efficiency Pack—Includes all five software suites (not available for VNX5100).

•

VNX Total Protection Pack—Includes local, remote, and application protection suites.

4.3.1 EMC VNX5300 Used in Testing

EMC VNX 5300 provides storage by using FC (SAN) or IP (NAS) connections for virtual desktops, and infrastructure virtual machines such as Citrix XenDesktop controllers, VMware vCenter Servers, Microsoft SQL Server databases, and other supporting services. Optionally, user profiles and home directories are redirected to CIFS network shares on the VNX5300.

4.4 VMware ESXi 5.0

VMware, Inc. provides virtualization software. VMware's enterprise software hypervisors for servers—VMware ESX, VMware ESXi, and vSphere—are bare-metal embedded hypervisors that run directly on server hardware without requiring an additional underlying operating system.

4.4.1 VMware on ESXi 5.0 Hypervisor

ESXi 5.0 is a "bare-metal" hypervisor, so it installs directly on top of the physical server and partitions it into multiple virtual machines that can run simultaneously, sharing the physical resources of the underlying server. VMware introduced ESXi in 2007 to deliver industry-leading performance and scalability while setting a new bar for reliability, security and hypervisor management efficiency.

Due to its ultra-thin architecture with less than 100MB of code-base disk footprint, ESXi delivers industry-leading performance and scalability plus:

•

Improved Reliability and Security — with fewer lines of code and independence from general purpose OS, ESXi drastically reduces the risk of bugs or security vulnerabilities and makes it easier to secure your hypervisor layer.

•

Streamlined Deployment and Configuration — ESXi has far fewer configuration items than ESX, greatly simplifying deployment and configuration and making it easier to maintain consistency.

•

Higher Management Efficiency — The API-based, partner integration model of ESXi eliminates the need to install and manage third party management agents. You can automate routine tasks by leveraging remote command line scripting environments such as vCLI or PowerCLI.

•

Simplified Hypervisor Patching and Updating — Due to its smaller size and fewer components, ESXi requires far fewer patches than ESX, shortening service windows and reducing security vulnerabilities.

4.5 Modular Virtual Desktop Infrastructure Technical Overview

4.5.1 Modular Architecture

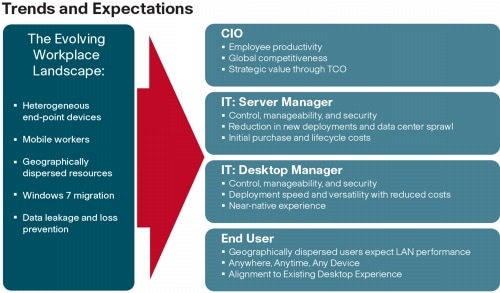

Today's IT departments are facing a rapidly-evolving workplace environment. The workforce is becoming increasingly diverse and geographically distributed and includes offshore contractors, distributed call center operations, knowledge and task workers, partners, consultants, and executives connecting from locations around the globe at all times.

An increasingly mobile workforce wants to use a growing array of client computing and mobile devices that they can choose based on personal preference. These trends are increasing pressure on IT to ensure protection of corporate data and to prevent data leakage or loss through any combination of user, endpoint device, and desktop access scenarios (Figure 6). These challenges are compounded by desktop refresh cycles to accommodate aging PCs and bounded local storage and migration to new operating systems, specifically Microsoft Windows 7.

Figure 6 The Evolving Workplace Landscape

Some of the key drivers for desktop virtualization are increased data security and reduced TCO through increased control and reduced management costs.

4.5.1.1 Cisco Data Center Infrastructure for Desktop Virtualization

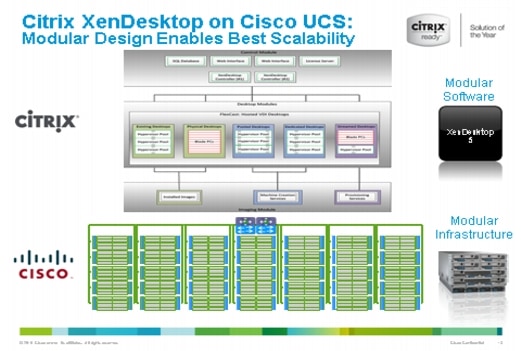

Cisco focuses on three key elements to deliver the best desktop virtualization data center infrastructure: simplification, security, and scalability. The software combined with platform modularity provides a simplified, secure, and scalable desktop virtualization platform (Figure 7).

Figure 7 Citrix XenDesktop on Cisco Unified Computing System

4.5.1.2 Simplified

Cisco UCS provides a radical new approach to industry standard computing and provides the heart of the data center infrastructure for desktop virtualization and the Cisco Virtualization Experience Infrastructure (VXI). Among the many features and benefits of Cisco UCS are the drastic reductions in the number of servers needed and number of cables per server and the ability to very quickly deploy or re-provision servers through Cisco UCS Service Profiles. With fewer servers and cables to manage and with streamlined server and virtual desktop provisioning, operations are significantly simplified. Thousands of desktops can be provisioned in minutes with Cisco Service Profiles and Cisco storage partners' storage-based cloning. This speeds time to productivity for end users, improves business agility, and allows IT resources to be allocated to other tasks.

IT tasks are further simplified through reduced management complexity, provided by the highly integrated Cisco UCS Manager, along with fewer servers, interfaces, and cables to manage and maintain. This is possible due to the industry-leading, highest virtual desktop density per blade of Cisco UCS along with the reduced cabling and port count due to the unified fabric and unified ports of Cisco UCS and desktop virtualization data center infrastructure.

Simplification also leads to improved and more rapid success of a desktop virtualization implementation. Cisco and its partners, Citrix (XenDesktop and Provisioning Server) and EMC, have developed integrated, validated architectures, including available pre-defined, validated infrastructure packages, known as Cisco Solutions for VSPEX.

4.5.1.3 Secure

While virtual desktops are inherently more secure than their physical world predecessors, they introduce new security considerations. Desktop virtualization significantly increases the need for virtual machine-level awareness of policy and security, especially given the dynamic and fluid nature of virtual machine mobility across an extended computing infrastructure. The ease with which new virtual desktops can proliferate magnifies the importance of a virtualization-aware network and security infrastructure. Cisco UCS and Nexus data center infrastructure for desktop virtualization provides stronger data center, network, and desktop security with comprehensive security from the desktop to the hypervisor. Security is enhanced with segmentation of virtual desktops, virtual machine-aware policies and administration, and network security across the LAN and WAN infrastructure.

4.5.1.4 Scalable

Growth of a desktop virtualization solution is all but inevitable, and it is critical to have a solution that can scale predictably with that growth. The Cisco solution supports more virtual desktops per server, and additional servers scale with near-linear performance. Cisco data center infrastructure provides a flexible platform for growth and improves business agility. Cisco UCS Service Profiles allow for on-demand desktop provisioning, making it easy to deploy dozens or thousands of additional desktops.

Each additional Cisco UCS blade server provides near-linear performance and utilizes Cisco's dense memory servers and unified fabric to avoid desktop virtualization bottlenecks. The high-performance, low-latency network supports high volumes of virtual desktop traffic, including high-resolution video and communications.

Cisco Unified Computing System and Nexus data center infrastructure is an ideal platform for growth, with transparent scaling of server, network, and storage resources to support desktop virtualization.

4.5.1.5 Savings and Success

As demonstrated above, the simplified, secure, scalable Cisco data center infrastructure solution for desktop virtualization will save time and cost. There will be faster payback, better ROI, and lower TCO with the industry's highest virtual desktop density per server, meaning there will be fewer servers needed, reducing both capital expenditures (CapEx) and operating expenditures (OpEx). There will also be much lower network infrastructure costs, with fewer cables per server and fewer ports required, via the Cisco UCS architecture and unified fabric.

The simplified deployment of Cisco Unified Computing System for desktop virtualization speeds up time to productivity and enhances business agility. IT staff and end users are more productive more quickly and the business can react to new opportunities by simply deploying virtual desktops whenever and wherever they are needed. The high performance Cisco systems and network deliver a near-native end-user experience, allowing users to be productive anytime, anywhere.

4.5.2 Understanding Desktop User Groups

There must be a considerable effort within the enterprise to identify desktop user groups and their memberships. The most broadly recognized, high level user groups are:

•

Task Workers: Groups of users working in highly specialized environments where the number of tasks performed by each worker is essentially identical. These users are typically located at a corporate facility (e.g., call center employees).

•

Knowledge/Office Workers: Groups of users who use a relatively diverse set of applications that are Web-based and installed and whose data is regularly accessed. They typically have several applications running simultaneously throughout their workday and a requirement to utilize Flash video for business purposes. This is not a singular group within an organization. These workers are typically located at a corporate office (e.g., workers in accounting groups).

•

Power Users: Groups of users who run high-end memory-, processor-, disk I/O-, and/or graphics-intensive applications, often simultaneously. These users have high requirements for reliability, speed, and real-time data access (e.g., design engineers).

•

Mobile Workers: Groups of users who may share common traits with Knowledge/Office Workers, with the added complexity of needing to access applications and data from wherever they are, whether at a remote corporate facility, a customer location, the airport, a coffee shop, or home, all in the same day (e.g., a company's outbound sales force).

•

Remote Workers: Groups of users who could fall into the Task Worker or Knowledge/Office Worker groups but whose experience is from a remote site that is not corporate owned, most often from the user's home. This scenario introduces several challenges in terms of the type, available bandwidth, latency, and reliability of the user's connectivity to the data center (for example, a work-from-home accounts payable representative).

•

Guest/Contract Workers: Groups of users who need access to a limited number of carefully controlled enterprise applications, data, and resources for short periods of time. These workers may need access from the corporate LAN or remote access (for example, a medical data transcriptionist).

There is good reason to search for and identify multiple sub-groups of the major groups listed above in the enterprise. Typically, each sub-group has different application and data requirements.

4.5.3 Understanding Applications and Data

When the desktop user groups and sub-groups have been identified, the next task is to catalog group application and data requirements. This can be one of the most time-consuming processes in the VDI planning exercise, but is essential for the VDI project's success. If the applications and data are not identified and co-located, performance will be negatively affected.

The process of analyzing the variety of application and data pairs for an organization will likely be complicated by the inclusion of cloud applications, like SalesForce.com. This application and data analysis is beyond the scope of this Cisco Validated Design, but should not be omitted from the planning process. There are a variety of third-party tools available to assist organizations with this crucial exercise.

4.5.4 Project Planning and Solution Sizing Sample Questions

Now that user groups, their applications and their data requirements are understood, some key project and solution sizing questions may be considered.

General project questions should be addressed at the outset, including:

•

Has a VDI pilot plan been created based on the business analysis of the desktop groups, applications and data?

•

Is there infrastructure and budget in place to run the pilot program?

•

Are the required skill sets to execute the VDI project available? Can we hire or contract for them?

•

Do we have end user experience performance metrics identified for each desktop sub-group?

•

How will we measure success or failure?

•

What is the future implication of success or failure?

Provided below is a short, non-exhaustive list of sizing questions that should be addressed for each user sub-group:

•

What desktop OS is planned: Windows 7 or Windows XP?

•

32 bit or 64 bit desktop OS?

•

How many virtual desktops will be deployed in the pilot? In production? All Windows 7?

•

How much memory is required per desktop in each target desktop group?

•

Are there any rich media, Flash, or graphics-intensive workloads?

•

What is the end point graphics processing capability?

•

Will XenApp be used for Hosted Shared Server Desktops or exclusively XenDesktop?

•

Are there XenApp hosted applications planned? Are they packaged or installed?

•

Will Provisioning Server or Machine Creation Services be used for virtual desktop deployment?

•

What is the hypervisor for the solution?

•

What is the storage configuration in the existing environment?

•

Are there sufficient IOPS available for the write-intensive VDI workload?

•

Will there be storage dedicated and tuned for VDI service?

•

Is there a voice component to the desktop?

•

Is anti-virus a part of the image?

•

Is user profile management (e.g., non-roaming profile based) part of the solution?

•

What is the fault tolerance, failover, and disaster recovery plan?

•

Are there additional desktop sub-group specific questions?
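Answers to several of the sizing questions above (desktop count, memory per desktop, IOPS) can be combined into a rough first-pass estimate. The following Python sketch is illustrative only: the class and function names, the per-desktop memory and IOPS figures, the usable host memory, and the spare-host count are all hypothetical placeholders, not values measured or recommended in this study.

```python
from dataclasses import dataclass
from math import ceil


@dataclass
class SubGroupSizing:
    """Answers to a few sizing questions for one desktop sub-group (hypothetical model)."""
    name: str
    desktops: int
    memory_gb_per_desktop: float
    steady_iops_per_desktop: float


def hosts_required(groups, usable_host_memory_gb, spare_hosts=1):
    """Hosts needed when memory is the limiting resource, plus spare hosts for failover."""
    total_memory_gb = sum(g.desktops * g.memory_gb_per_desktop for g in groups)
    return ceil(total_memory_gb / usable_host_memory_gb) + spare_hosts


def total_steady_iops(groups):
    """Aggregate steady-state IOPS the storage must sustain across all sub-groups."""
    return sum(g.desktops * g.steady_iops_per_desktop for g in groups)


# Placeholder inputs; replace with figures gathered during the pilot.
groups = [
    SubGroupSizing("task workers", desktops=300,
                   memory_gb_per_desktop=1.5, steady_iops_per_desktop=8),
    SubGroupSizing("knowledge workers", desktops=200,
                   memory_gb_per_desktop=2.0, steady_iops_per_desktop=10),
]

print("Hosts required:", hosts_required(groups, usable_host_memory_gb=192))
print("Steady-state IOPS:", total_steady_iops(groups))
```

The sketch assumes memory is the limiting host resource. In practice CPU, storage, and network limits should be checked in the same way and the most restrictive result used.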

4.5.5 Cisco Services

Cisco offers assistance for customers in the analysis, planning, implementation, and support phases of the VDI lifecycle. These services are provided by the Cisco Advanced Services group. Some examples of Cisco services include:

•

Cisco VXI Unified Solution Support

•

Cisco VXI Desktop Virtualization Strategy Service

•

Cisco VXI Desktop Virtualization Planning and Design Service

4.5.6 The Solution: A Unified, Pre-Tested and Validated Infrastructure

To meet the challenges of designing and implementing a modular desktop infrastructure, Cisco, Citrix, EMC and VMware have collaborated to create the data center solution for virtual desktops outlined in this document.

Key elements of the solution include:

•

A shared infrastructure that can scale easily

•

A shared infrastructure that can accommodate a variety of virtual desktop workloads

4.6 Cisco Networking Infrastructure

This section describes the Cisco networking infrastructure components used in the configuration.

4.6.1 Cisco Nexus 5548UP Switch (Unmanaged FC Variant Only)

Each of the two Cisco Nexus 5548UP access switches is a 1RU 10 Gigabit Ethernet, Fibre Channel, and FCoE switch offering up to 960 Gbps of throughput and up to 48 ports. The switch has 32 unified ports and one expansion slot. The Cisco Nexus 5500 platform can be equipped with an expansion module that can be used to increase the number of 10 Gigabit Ethernet and FCoE ports or to connect to Fibre Channel SANs with 8/4/2/1-Gbps Fibre Channel switch ports, or both. (Not required for this study.)

The switch has a single serial console port and a single out-of-band 10/100/1000-Mbps Ethernet management port. Two N+1 redundant, hot-pluggable power supplies and five N+1 redundant, hot-pluggable fan modules provide highly reliable front-to-back cooling.

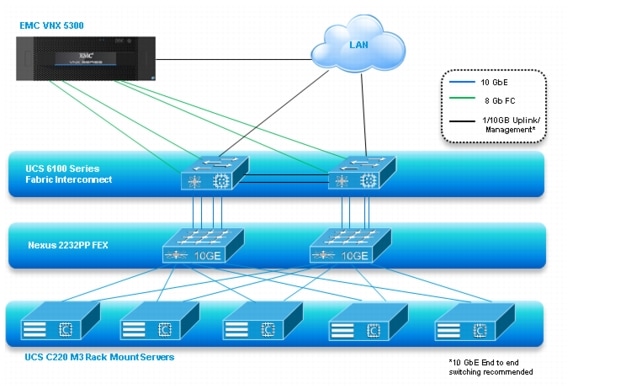
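The 960 Gbps throughput figure quoted above is consistent with 48 ports running at 10 Gbps in both directions at once. The short Python sketch below shows that arithmetic. The function name is hypothetical, and the full-duplex interpretation is an assumption about how the figure is counted rather than something stated in this document.

```python
def switching_capacity_gbps(ports, gbps_per_port=10, full_duplex=True):
    """Aggregate capacity if every port runs at line rate.

    Full duplex counts transmit and receive separately, doubling the total.
    """
    directions = 2 if full_duplex else 1
    return ports * gbps_per_port * directions


# 48 ports x 10 Gbps x 2 directions
print(switching_capacity_gbps(48))
```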

4.6.2 Cisco Nexus 2232PP Fabric Extender (Managed FC Variant Only)

The Cisco Nexus 2232PP 10G provides 32 10 Gb Ethernet and Fibre Channel Over Ethernet (FCoE) Small Form-Factor Pluggable Plus (SFP+) server ports and eight 10 Gb Ethernet and FCoE SFP+ uplink ports in a compact 1 rack unit (1RU) form factor.

Two Nexus 2232PP 10GE Fabric Extenders were deployed to provide cluster-mode single wire management to the Cisco UCS C220 M3 rack servers.

Four of eight available 10 GbE uplinks from each Nexus 2232 were utilized to provide 40 Gb of bandwidth between the UCS 6248UP Fabric Interconnects and the Cisco UCS C220 M3 rack servers.
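The uplink bandwidth stated above follows directly from the port counts. The Python sketch below reproduces the 40 Gb figure and also derives a worst-case oversubscription ratio. The function names are hypothetical, and the ratio assumes all 32 server ports are populated and driven at line rate, which this document does not claim for the tested configuration.

```python
def uplink_bandwidth_gbps(uplinks_used, gbps_per_uplink=10):
    """Total bandwidth of the fabric extender uplinks that are actually cabled."""
    return uplinks_used * gbps_per_uplink


def oversubscription_ratio(server_ports, uplinks_used, gbps_per_port=10):
    """Server-facing bandwidth divided by uplink bandwidth (worst case, all ports busy)."""
    server_bandwidth = server_ports * gbps_per_port
    return server_bandwidth / uplink_bandwidth_gbps(uplinks_used, gbps_per_port)


# Four of the eight 10 GbE uplinks on each Nexus 2232PP
print(uplink_bandwidth_gbps(4))
# All 32 server ports against four uplinks
print(oversubscription_ratio(32, 4))
```

Cabling all eight uplinks would halve the worst-case ratio, at the cost of additional fabric interconnect ports.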

5 Architecture and Design of XenDesktop 5.6 on Cisco Unified Computing System and EMC VNX Storage

5.1 Design Fundamentals

There are many reasons to consider a virtual desktop solution, such as an ever-growing and diverse base of user devices, complexity in management of traditional desktops, security, and even Bring Your Own Computer (BYOC) to work programs. The first step in designing a virtual desktop solution is to understand the user community and the types of tasks that users must perform to successfully execute their roles. The following user classifications are provided:

•

Knowledge Workers today do not just work in their offices all day - they attend meetings, visit branch offices, work from home, and even work from coffee shops. These anywhere workers expect access to all of their same applications and data wherever they are.

•

External Contractors are increasingly part of your everyday business. They need access to certain portions of your applications and data, yet administrators still have little control over the devices they use and the locations they work from. Consequently, IT is stuck making trade-offs on the cost of providing these workers a device vs. the security risk of allowing them access from their own devices.

•

Task Workers perform a set of well-defined tasks. These workers access a small set of applications and have limited requirements from their PCs. However, since these workers are interacting with your customers, partners, and employees, they have access to your most critical data.

•

Mobile Workers need access to their virtual desktop from everywhere, regardless of their ability to connect to a network. In addition, these workers expect the ability to personalize their PCs, by installing their own applications and storing their own data, such as photos and music, on these devices.

•

Shared Workstation users are often found in state-of-the-art university and business computer labs, conference rooms, or training centers. In shared workstation environments, the constant requirement to re-provision desktops with the latest operating systems and applications as the needs of the organization change tops the list.

After the user classifications have been identified and the business requirements for each user classification have been defined, it becomes essential to evaluate the types of virtual desktops that are needed based on user requirements. There are essentially five potential desktop environments for each user:

•

Traditional PC: A traditional PC is what "typically" constituted a desktop environment: a physical device with a locally installed operating system.

•

Hosted Shared Desktop: A hosted, server-based desktop is a desktop where the user interacts through a delivery protocol. With hosted, server-based desktops, a single installed instance of a server operating system, such as Microsoft Windows Server 2008 R2, is shared by multiple users simultaneously. Each user receives a desktop "session" and works in an isolated memory space. Changes made by one user could impact the other users.

•

Hosted Virtual Desktop: A hosted virtual desktop is a virtual desktop running either on a virtualization layer (XenServer, Hyper-V, or ESX) or on bare metal hardware. The user does not sit in front of the desktop and work with it directly, but instead interacts with it through a delivery protocol.

•

Streamed Applications: Streamed desktops and applications run entirely on the user's local client device and are sent from a server on demand. The user interacts with the application or desktop directly, but the resources may only be available while the device is connected to the network.

•

Local Virtual Desktop: A local virtual desktop is a desktop running entirely on the user's local device and continues to operate when disconnected from the network. In this case, the user's local device is used as a type 1 hypervisor and is synced with the data center when the device is connected to the network.

For the purposes of the validation represented in this document, only hosted virtual desktops were validated. Each of the following sections provides some fundamental design decisions for this environment.

5.2 Hosted VDI Design Fundamentals

Citrix XenDesktop 5.6 can be used to deliver a variety of virtual desktop configurations. When evaluating a Hosted VDI deployment, consider the following:

5.2.1 Hypervisor Selection

Citrix XenDesktop is hypervisor agnostic, so any of the following three hypervisors can be used to host VDI-based desktops:

•

Hyper-V: Microsoft Windows Server 2008 R2 Hyper-V builds on the architecture and functions of Windows Server 2008 Hyper-V by adding multiple new features that enhance product flexibility. Hyper-V is available in Standard, Server Core, and free Hyper-V Server 2008 R2 versions. More information on Hyper-V can be obtained at the company website.

•

vSphere: VMware vSphere consists of the management infrastructure, or vCenter Server software, and the hypervisor software that virtualizes the hardware resources on the servers. It offers features like Distributed Resource Scheduler, vMotion, HA, Storage vMotion, VMFS, and a multipathing storage layer. More information on vSphere can be obtained at the company website.

•

XenServer: Citrix® XenServer® is a complete, managed server virtualization platform built on the powerful Xen® hypervisor. Xen technology is widely acknowledged as the fastest and most secure virtualization software in the industry. XenServer is designed for efficient management of Windows® and Linux® virtual servers and delivers cost-effective server consolidation and business continuity. More information on XenServer can be obtained at the company website.

For this study, we utilized VMware ESXi 5.0 Update 1 and vCenter 5.0 Update 1.

5.2.2 XenDesktop 5.6 Desktop Broker

The Citrix XenDesktop 5.6 broker provides two methods of creating hosted virtual desktops:

•

Citrix Provisioning Services

•

Citrix Machine Creation Services

Citrix Machine Creation Services, which is integrated directly with the XenDesktop Studio console, was used for this study.

The XenDesktop Studio console provides the ability to create seven types of virtual machines with random or static (assigned) users:

•

Pooled

•

Pooled with personal vDisk

•

Dedicated

•

Existing

•

Physical

•

Streamed

•

Streamed with personal vDisk

5.3 Designing a Citrix XenDesktop 5.6 Deployment

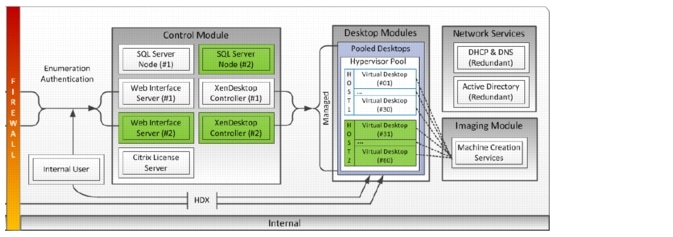

To implement our pooled desktop delivery model for this study, known as Hosted VDI Pooled Desktops, we followed the Citrix Reference Architecture for local desktop delivery.

Figure 8 Pooled Desktop Infrastructure

To read about Citrix's XenDesktop Reference Architecture - Pooled Desktops (Local and Remote) go to the following link:

http://support.citrix.com/article/CTX131049

To learn more about XenDesktop 5.6 Planning and Design go to the following link:

http://support.citrix.com/product/xd/v5.5/consulting/

5.4 Storage Architecture Design

Designing for this workload involves the deployment of many disks to handle brief periods of extreme I/O pressure, which is expensive to implement. This solution uses EMC VNX FAST Cache to reduce the number of disks required.
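The disk-count trade-off described above can be illustrated with simple arithmetic. The Python sketch below compares the number of disks needed when the array is sized for peak I/O with the number needed when a cache absorbs the bursts and the disks only have to sustain steady-state load. All figures (peak IOPS, steady-state IOPS, IOPS per disk) and the function name are hypothetical placeholders. The sketch ignores RAID write penalties and cache hit ratios, and it does not represent how EMC sizes FAST Cache.

```python
from math import ceil


def disks_for_iops(target_iops, iops_per_disk):
    """Smallest number of disks whose combined IOPS meets the target."""
    return ceil(target_iops / iops_per_disk)


# Hypothetical placeholder figures, not measurements from this study.
PEAK_IOPS = 12000      # brief bursts such as boot or login storms
STEADY_IOPS = 4000     # typical steady-state load
IOPS_PER_DISK = 180    # assumed capability of a single spinning disk

disks_sized_for_peak = disks_for_iops(PEAK_IOPS, IOPS_PER_DISK)
disks_sized_for_steady = disks_for_iops(STEADY_IOPS, IOPS_PER_DISK)

print("Disks if sized for peak:", disks_sized_for_peak)
print("Disks if a cache absorbs the peaks:", disks_sized_for_steady)
```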

VNX multi-protocol support enables use of either Fibre Channel SAN-connected block storage or 10-gigabit Ethernet (GbE) connected NFS for flexible, cost effective, and easily deployable storage for VMware-based desktop virtualization.