Table of Contents

About Cisco Validated Design (CVD) Program

SAP Applications Built on FlexPod

Business Challenges Facing the SAP Customer

SAP Applications Built on FlexPod

Investment Protection with Standardized, Flexible IT

Scalability for Any Cloud Solution

SAP Applications Built on FlexPod Architecture

Single-Managed Tenant Operation

Cisco Unified Computing System

VMware Network Distributed Switch

Examples of Storage Service Levels

Integrated Storage-Based Backup

Cisco Nexus 5000 Series Switches

Cisco Nexus 1000V Series Switches

Policy-Based Virtual Machine Connectivity

Mobility of Virtual Machine Security and Network Properties

Automated Provisioning of Infrastructure Components

Automated Space-Efficient SAP System Copies

Provisioning Fenced SAP Systems

Extending Additional Compute Resources

Adding a Second FlexPod Infrastructure

Overview of Solution Setup and Operation

Changes Compared to FlexPod Built on VMware

Additional Storage Configuration

Additional Network Configuration

Management Network for Physical Components

Central Software Share Network

Example ACLs for SAP Applications Built on FlexPod

Cisco UCS Configuration for Bare Metal Operating System

Create Service Profile Template for Bare Metal Linux Installations

Additional Steps for Adding a Second FlexPod Infrastructure

Additional Software Components

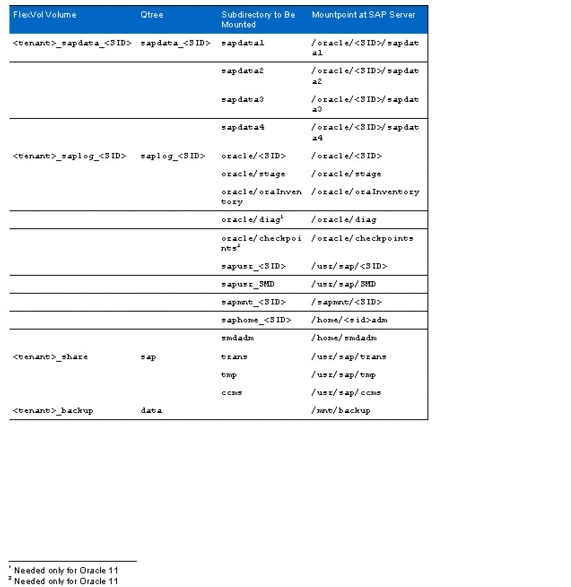

Central Volumes in the Tenant Infrastructure

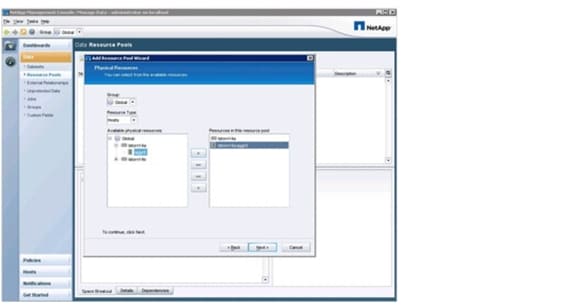

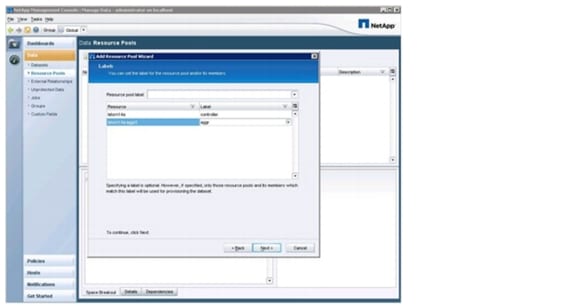

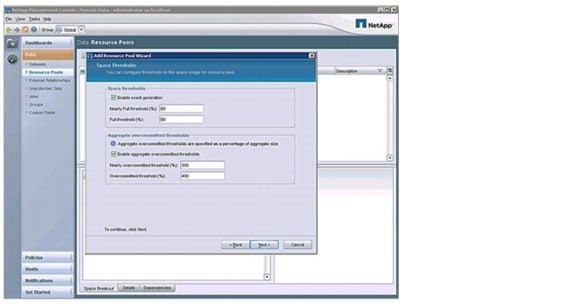

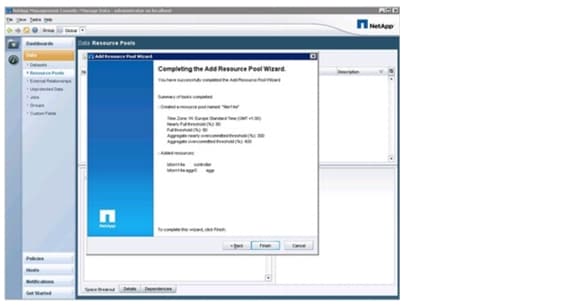

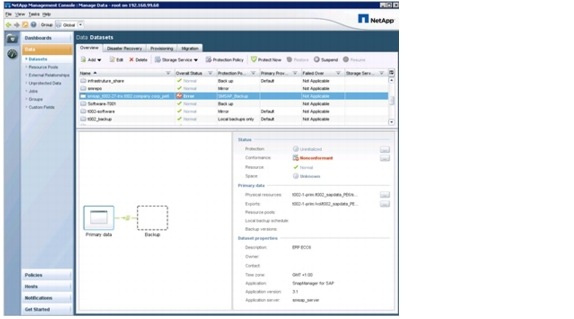

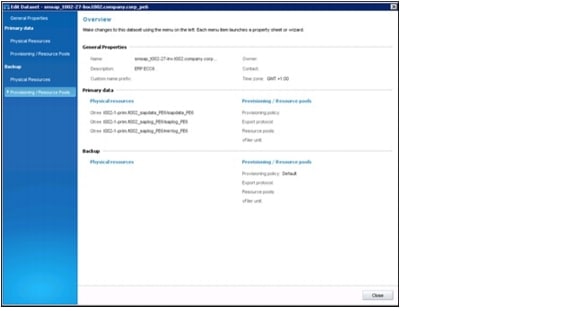

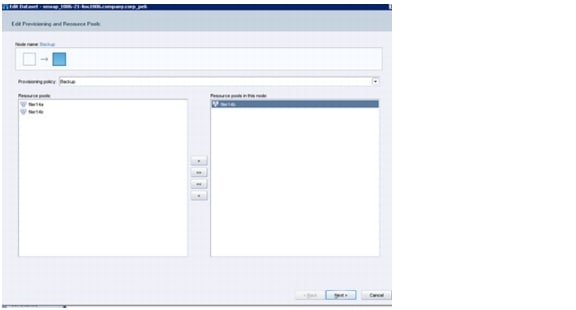

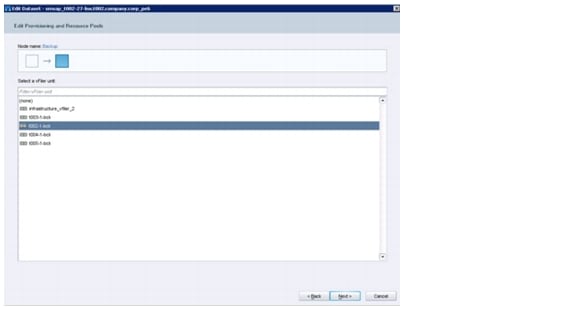

Configuration of Operations, Protection, and Provisioning Manager

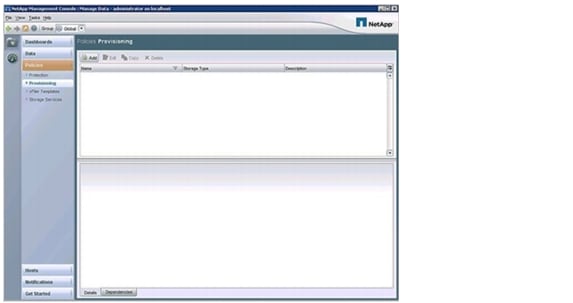

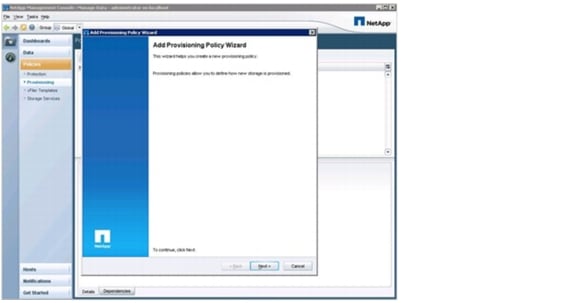

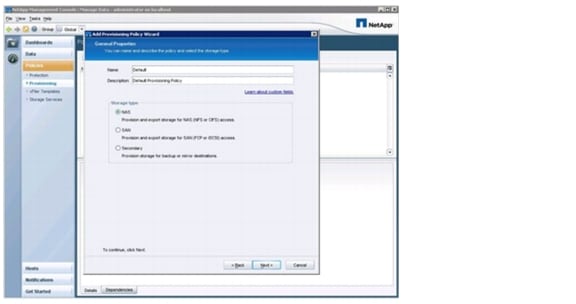

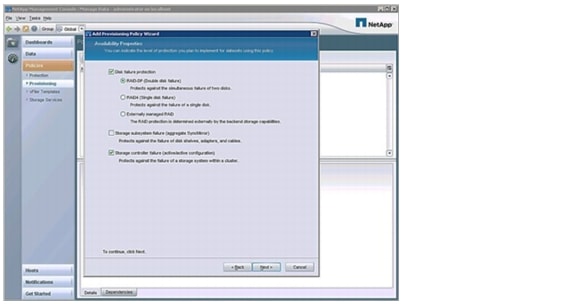

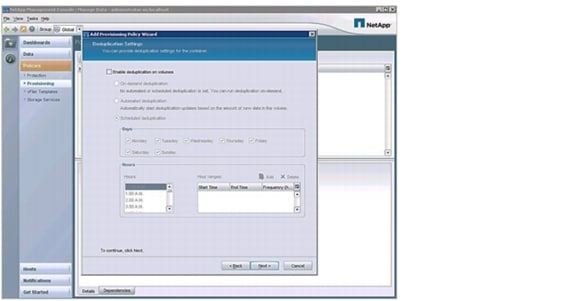

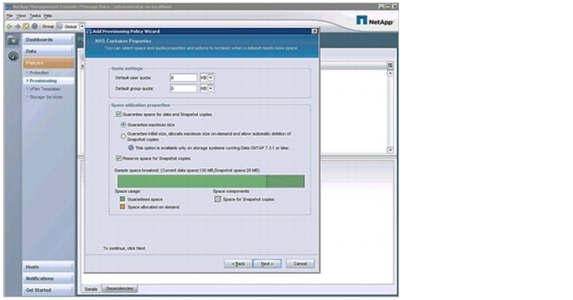

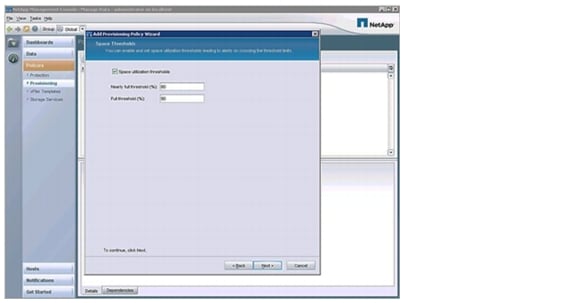

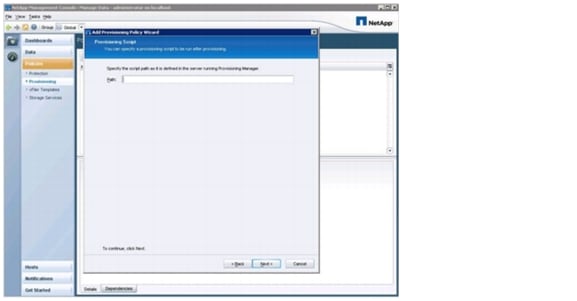

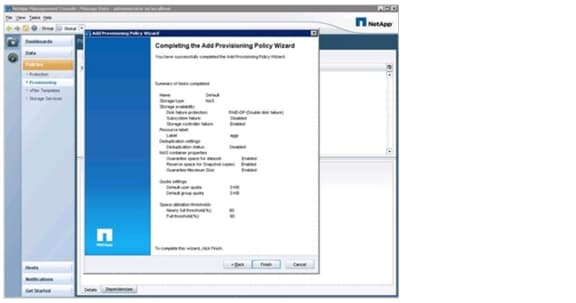

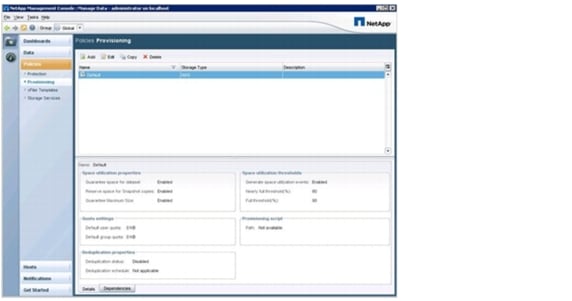

Defining Provisioning Policies

Setup of Infrastructure Volumes

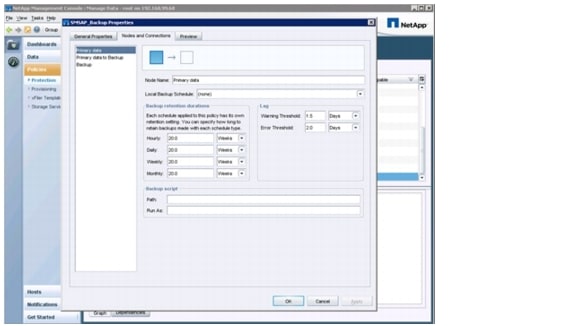

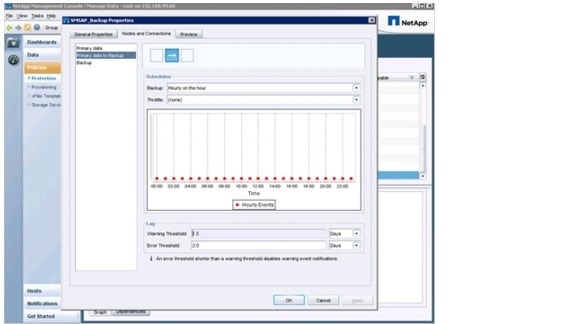

Back Up infrastructure_datastore

Back Up SAN Boot Volumes of ESXi Servers

SAP Landscape Virtualization Manager Setup

Back Up SMSAP Repository Database

Restore the SMSAP Repository Database

SMSAP Installation on the DFM Server

Infrastructure Tenant-Specific Services

Additional Steps for Handling a Second FlexPod Infrastructure

Creation of Additional VMware Datastores at Additional Storage Systems

Installation and Configuration of Operating System Images

Installation of Additional Software Components

Installation of SAP Host Agents

SnapDrive Installation and Configuration

SMSAP Installation and Configuration

SMSAP Post-Cloning Plug-In Configuration

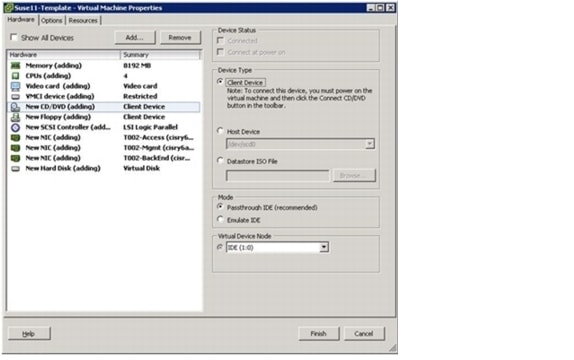

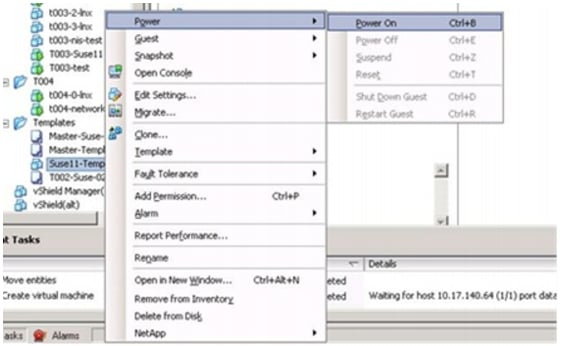

Converting the Virtual Machine to a Template

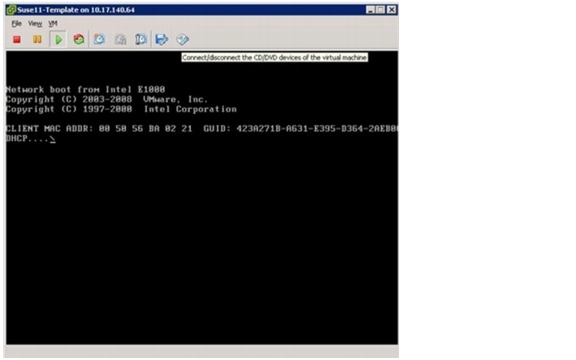

Start the Virtual Machine and Adapt the Software

Clean the Virtual Machine and Shut Down

Test and Release the New Template

Tenant-Specific Services Virtual Machine Template

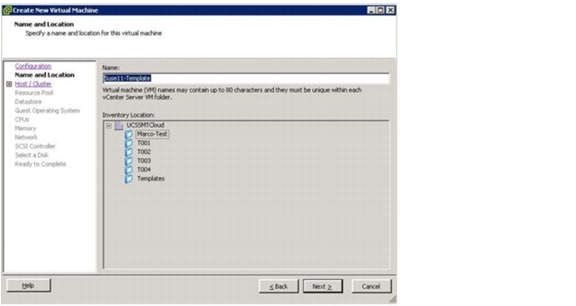

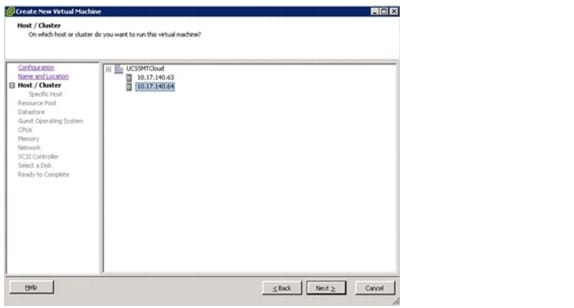

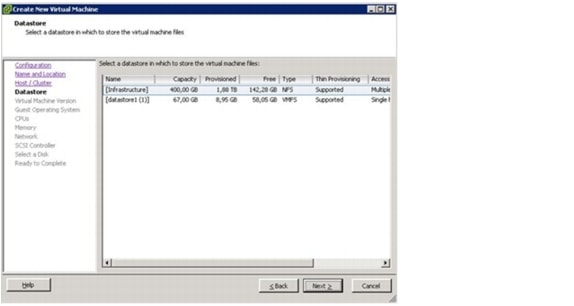

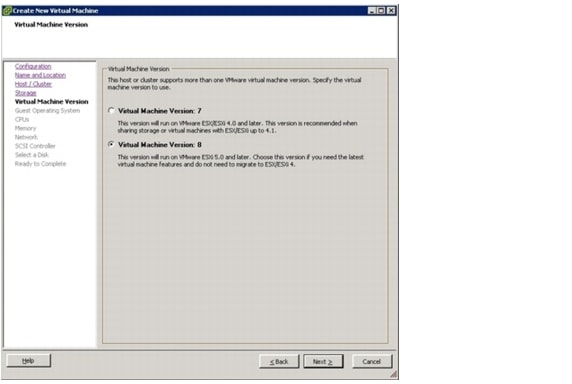

Converting the Virtual Machine to a VMware Template

Manual vFiler Unit Creation and Configuration

Additional Primary vFiler Unit Creation and Configuration

Directories for Volume TXXX_SHARE

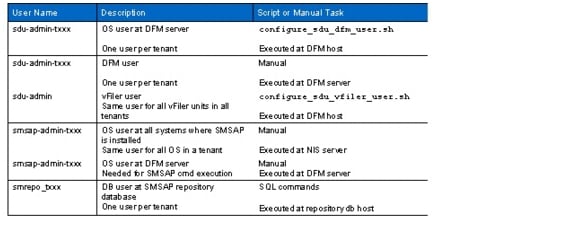

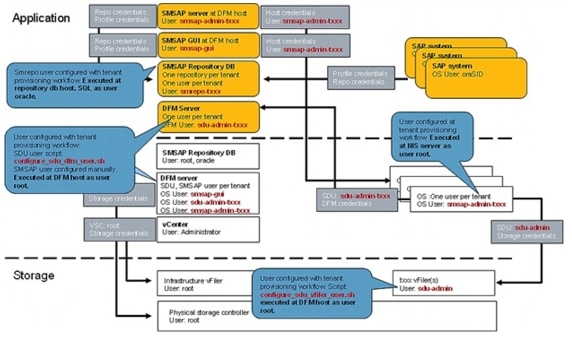

Tenant-Specific DFM, SDU, SMSAP, and Repository Users

Adding a vFiler Unit to a Tenant

Additional Steps Required to Work with a Second FlexPod Infrastructure

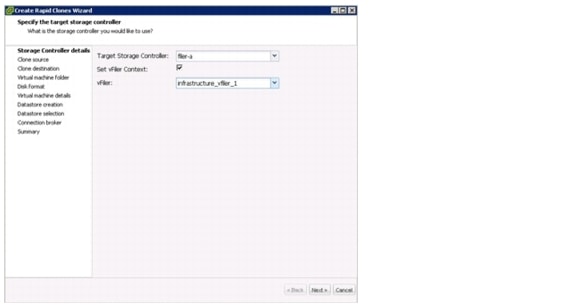

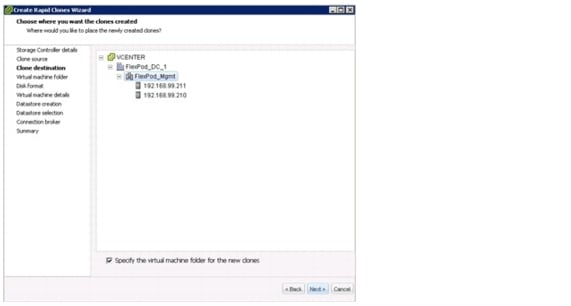

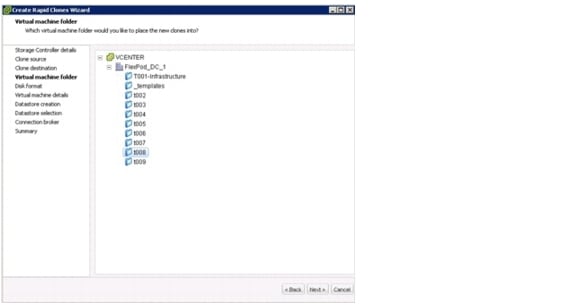

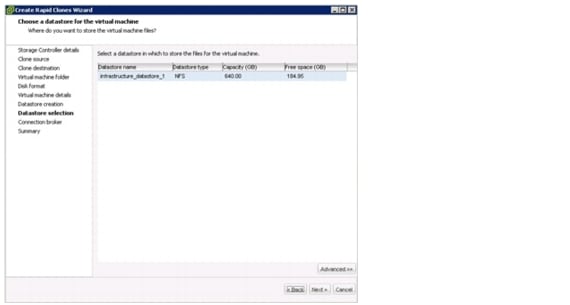

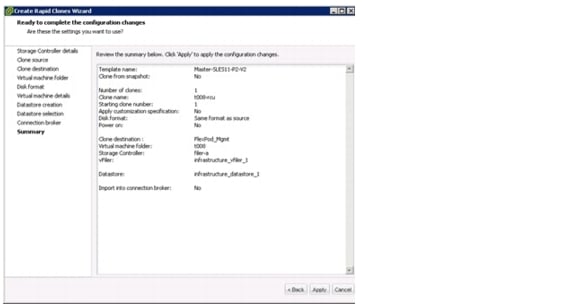

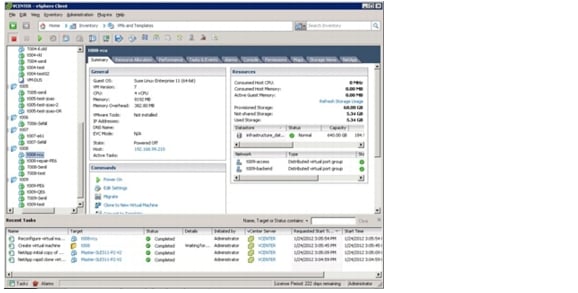

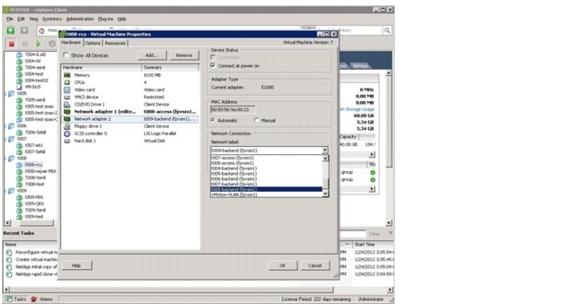

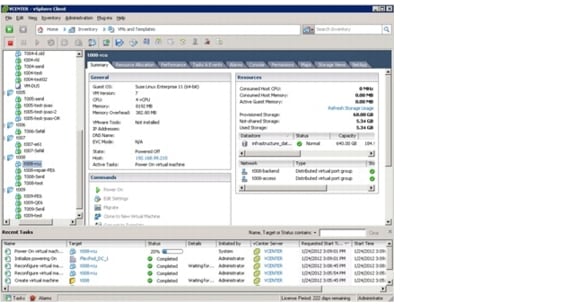

Manual Deployment Using the Rapid Cloning Utility

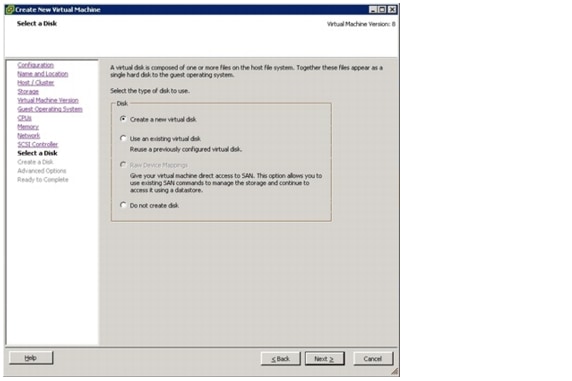

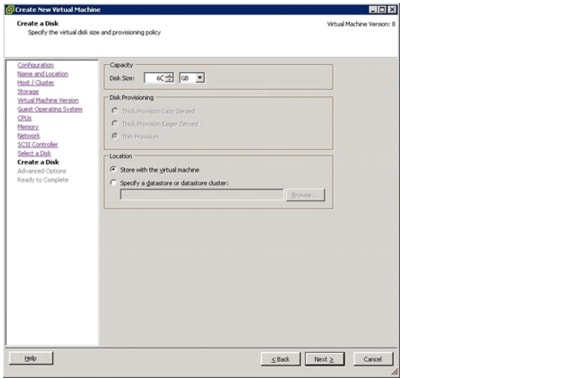

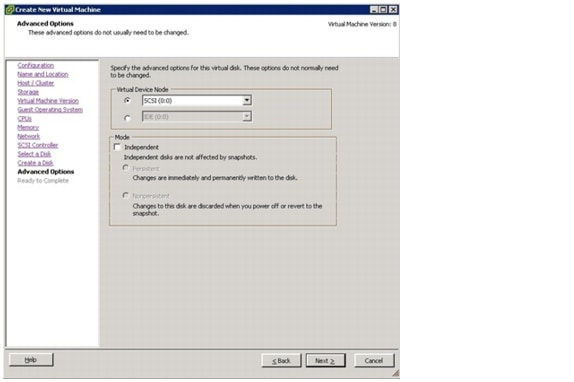

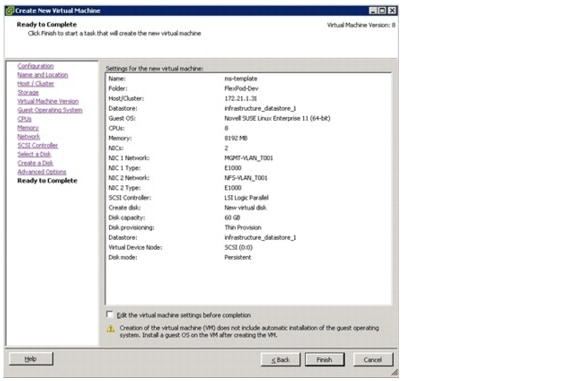

Manual Deployment Using the VMware Built-In Method

Deployment Using the Script DeployNewVm_w_rcu.ps1

Deployment Using the Script DeployNewVm.ps1

OS Provisioning SLES on Bare Metal

Create a Service Profile from a Service Profile Template

RHEL OS Provisioning on Bare Metal

Create a Service Profile from a Service Profile Template

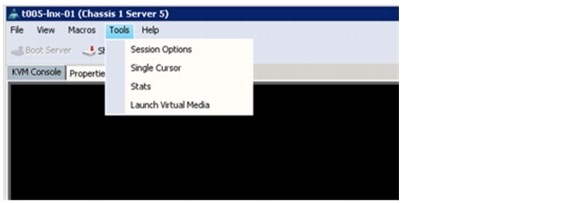

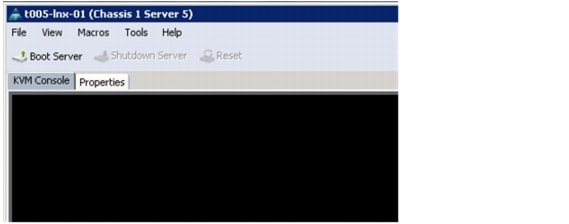

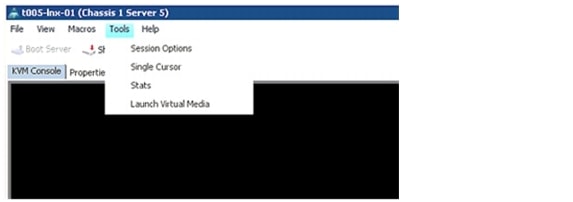

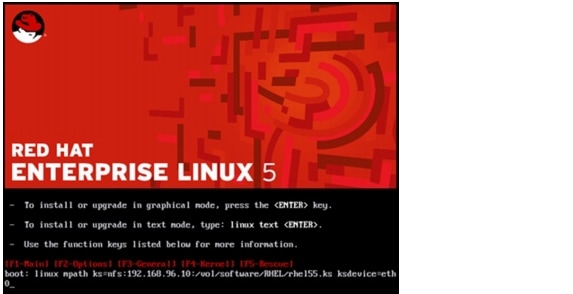

Install RHEL 5 with the Kickstart Option

Differences With a Second FlexPod Infrastructure

OS Provisioning SLES on Bare Metal

OS Provisioning RHEL on Bare Metal

OS Provisioning SLES Using PXE boot

Prepare the SID-Specific Configuration File

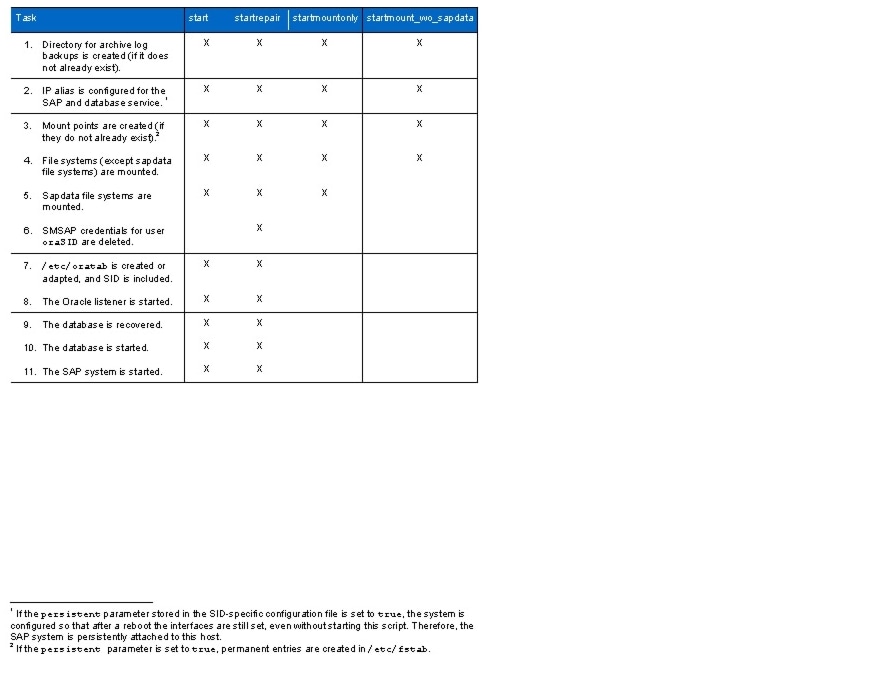

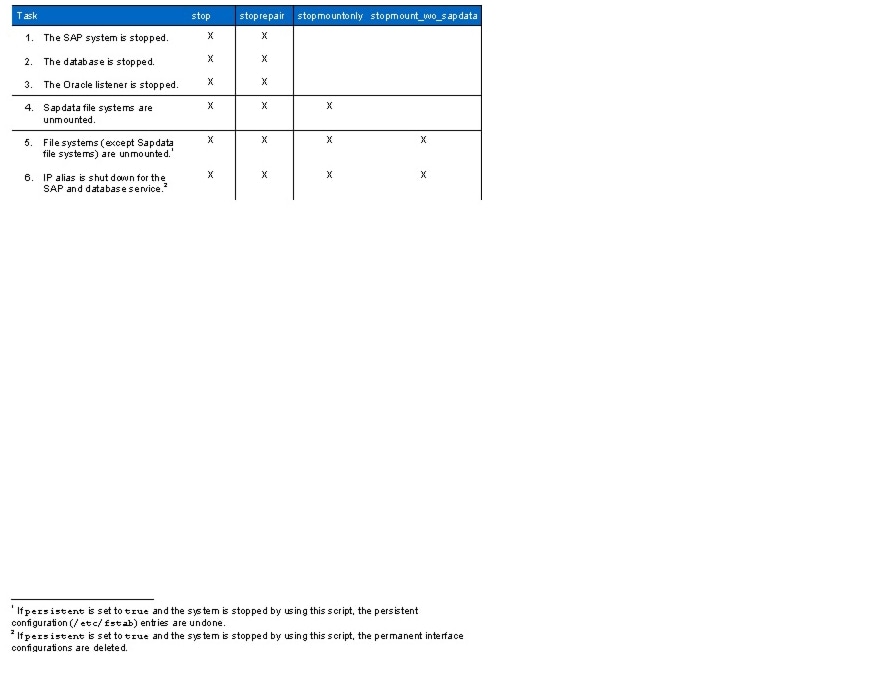

Mounting File Systems and Configuring the IP Alias

SAP System Installation with SAPinst

SAP System Post Installation Tasks

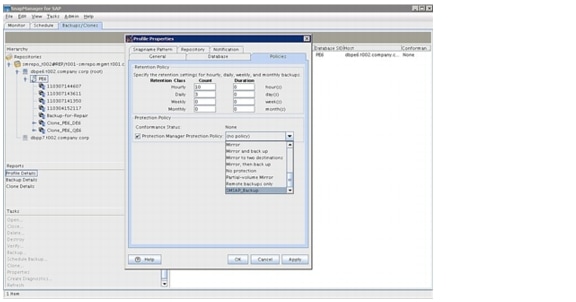

Configuring Backup Services for SAP Systems

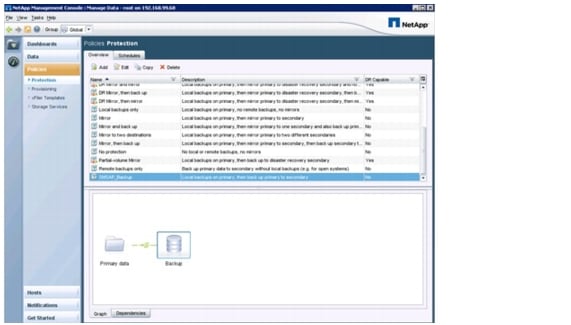

Protection Manager Protection Policies

Cloning From Secondary Storage

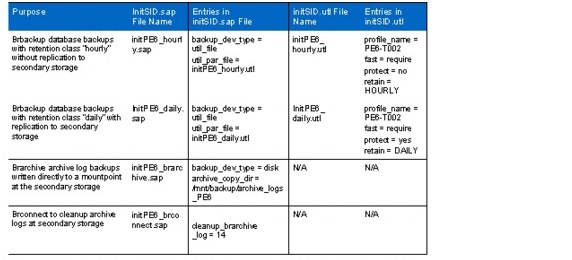

Brbackup and Brarchive Configuration

Naming Conventions and Prerequisites

Description of Configuration Files

Tenant-Specific Configuration File

SID-Specific Configuration File

SMSAP Cloning Specification File

Password File for SDU, SMSAP, DFM, and vFiler Credentials

Description of Example Scripts

Description of Workflow Example Scripts

Workaround for SMSAP and Virtual Host Names

SAP Applications Built on FlexPod

Last Updated: May 15, 2013

Building Architectures to Solve Business Problems

About the Authors

Ulrich Kleidon, Technical Leader Engineering, Cisco Unified Computing System Solutions and Performance Team, Cisco Systems

Ulrich is a Technical Leader Engineering for Cisco's Unified Computing System (UCS) and Performance team. He is currently focused on validating SAP application-ready infrastructure solutions for the SAP Business Suite and Business Intelligence applications. Over the past three years at Cisco, Ulrich has been in charge of SAP certification and of defining reference architectures, sizing rules, and best practices for SAP on the Cisco Unified Computing System. Ulrich is a certified SAP NetWeaver consultant and has more than 15 years of experience in data center and enterprise application solutions.

Matthias Schlarb, Technical Marketing Engineer, Cisco Systems

Matthias works as a Technical Marketing Engineer at the Cisco SAP Competence Center in Walldorf. His main focus is on SAP Solutions and Virtualization. In his previous roles at SAP alliances organizations, Matthias developed best practices and provided technical support for customers and field engineers.

Nils Bauer, SAP Competence Center Manager, NetApp

Nils Bauer has a technical marketing role in NetApp's SAP Solutions and Integrations team. Over the last 12 years at NetApp, his areas of responsibility have included the integration of NetApp storage solutions into SAP environments and the definition of reference architectures, sizing rules, and best practices for SAP on NetApp. Nils has also been involved in SAP technical presales support and the development of joint solutions with SAP and partners. Before joining NetApp, Nils worked at a consulting company where he focused on system and network management solutions and SAP Basis consulting.

Marco Schoen, Senior Technical Marketing Engineer, NetApp

Marco Schoen is a Technical Marketing Engineer at NetApp's SAP Competence Center. His main focus is developing NetApp storage-based solutions for SAP applications, including joint solutions with SAP and SAP partners. In addition, Marco defines SAP reference architectures and best-practice guides and provides SAP technical presales support. Prior to his 11 years at NetApp, Marco worked at a consulting company as an SAP Basis consultant.

Bernd Herth, CTO, GOPA IT Consultants

With more than 20 years of SAP background in all areas of SAP technology, Bernd is a leading authority on the SAP platform and infrastructure. Bernd focuses on ongoing R&D activities in partnership with leading technology partners, on optimizing IT infrastructure, and on creating the SAP data center of the future.

Tobias Brandl, Senior SAP Technology Consultant, GOPA IT Consultants

Tobias is a lead technical consultant in the area of SAP virtualization & infrastructure. He supports customers in optimizing their SAP architecture and operations using new technology concepts & virtualization. Before joining GOPA IT, he was a senior developer and SAP consultant at SAP in the areas of SAP ACC/LVM and SAP Business ByDesign, and he was part of the virtual appliance factory development team in the SAP Center of Excellence/Value Prototyping.

Shailendra Mruthunjaya, SAP Technology Consultant, GOPA IT Consultants

Shailendra is a technical consultant in the area of SAP technology, virtualization, and infrastructure. He helps customers design, optimize, and integrate SAP appliances in their environments. Shailendra designs and manages SAP landscapes in the cloud environment for demos, proofs of concept, and training.

About Cisco Validated Design (CVD) Program

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, IronPort, the IronPort logo, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2013 Cisco Systems, Inc. All rights reserved

SAP Applications Built on FlexPod

Introduction

The Challenge

Today's IT departments are increasingly challenged by the complexity of managing disparate components within their data centers. Rapidly proliferating silos of server, storage, and networking resources combined with numerous management tools and operational processes have led to crippling inefficiencies and costs.

Savvy organizations understand the financial and operational benefits of moving from infrastructure silos to a virtualized, shared environment. However, many of them are hesitant to make the transition because of potential short-term business disruptions and long-term architectural inflexibility, which can impede scalability and responsiveness to future business changes. Enterprises and service providers need a tested, cost-effective virtualization solution that can be easily implemented and managed within their existing infrastructures and that scales to meet their future cloud-computing objectives.

Business Challenges Facing the SAP Customer

Corporations deploying SAP software today are under pressure to reduce cost, minimize risk, and control change by accelerating deployments and increasing the availability of their SAP landscapes. Changing market conditions, restructuring activities, and mergers and acquisitions often result in the creation of new SAP landscapes based on the SAP NetWeaver® platform. Deployment of these business solutions usually exceeds a single production instance of SAP. Business process owners and project managers must coordinate with IT management to optimize the scheduling and availability of systems to support rapid prototyping and development, frequent parallel testing or troubleshooting, and appropriate levels of end-user training. The ability to access these systems as project schedules dictate, with current datasets and without affecting production operations, often determines whether SAP projects are delivered on time and within budget.

The Solution

To meet these challenges, NetApp, VMware, and Cisco have collaborated to create the SAP applications built on FlexPod data center solution. FlexPod is a proven long-term data center solution built on a flexible, shared infrastructure that can scale easily or be configured for secure multi-tenancy and cloud environments. FlexPod is a prevalidated configuration that delivers a virtualized data center in a rack composed of leading computing, networking, storage, and infrastructure software components.

SAP Applications Built on FlexPod

The SAP applications built on FlexPod data center solution differs from other virtualized infrastructure offerings by providing these advantages:

• Validated technologies from industry leaders in computing, storage, networking, and server virtualization
• A single platform, built from unified computing, fabric, and storage technologies, that lets you scale to meet the largest data center requirements without disruption or architectural changes in the future
• Integrated components that enable you to centrally manage all your infrastructure pools
• An open-design management framework that integrates with your existing third-party infrastructure management solutions
• Support for VMware vSphere and bare-metal servers
• Virtualization on all layers of the solution stack
• Secure multi-tenancy for operating fenced SAP systems or landscapes
• Application and data mobility
• Integrated storage-based backup
• Provisioning of infrastructure components; for example, tenants and operating systems
• Automated SAP system copies
• Provisioning of fenced SAP systems based on clones of production systems

Investment Protection with Standardized, Flexible IT

Together, NetApp, Cisco, and VMware provide a unified flexible architecture that is ready for virtualized environments today, yet is flexible enough to grow at your own pace to a fully private cloud. The Ethernet-based FlexPod framework fits right into your current infrastructure, eliminating the cost of replacing your existing technology.

FlexPod components are integrated and standardized to help you achieve timely, repeatable, consistent deployments and eliminate guesswork from the following areas:

• Resource procurement and planning
• Capacity and data center sizing
• Identification of operations and provisioning requirements

As a result, you can understand and better predict the exact power, floor space, usable capacity, performance, and cost for each FlexPod deployment.

Scalability for Any Cloud Solution

FlexPod configurations can be right-sized up or out and then duplicated in modular fashion to fit your organization's unique needs. For example, large enterprises or service providers with mature IT processes and rapid growth expectations can deploy and scale out one or more FlexPod configurations to meet the following requirements:

• Migration to a shared infrastructure with many SAP applications
• Improved agility to meet growth and key critical business initiatives
• Lower cost per user without sacrificing scalability
• Simplified operating skills and processes and reduced costs
• Evolution to operations that align with Information Technology Infrastructure Library (ITIL)-based standards

Medium-sized enterprises and customers with more moderate growth requirements can use FlexPod as a starting point for virtualization solutions. They can then scale up storage and compute pool capacity or performance within a FlexPod configuration while maintaining centralized management of the infrastructure solution.

SAP Applications Built on FlexPod Architecture

As the name implies, the FlexPod architecture is highly modular or "pod" like. Although each customer's FlexPod architecture might vary in its exact configuration, after a FlexPod architecture is built, it can easily be scaled as requirements and demand change. This includes scaling both up (adding additional resources within a FlexPod configuration) and out (adding additional FlexPod units).

Specifically, FlexPod is a defined set of hardware and software that serves as an integrated building block for all virtualization solutions. The SAP applications built on FlexPod data center solution includes NetApp® storage, Cisco® networking, the Cisco Unified Computing System™ (Cisco UCS), and VMware virtualization software in a single package in which the computing and storage fit in one data center rack, with the networking residing in a separate rack. Port density allows the networking components to accommodate multiple FlexPod configurations.

The infrastructure design of SAP applications built on FlexPod is based on the design of VMware vSphere built on FlexPod. In addition, the SAP applications built on FlexPod solution describes optional components and alternative configuration options.

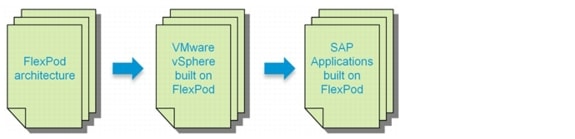

Figure 1 Infrastructure Design

Key Findings

The combination of technologies from Cisco Systems, NetApp, and VMware produced a highly efficient, robust, and scalable infrastructure for an SAP application deployment. Key findings from the solution include:

• The combined power of the Cisco Unified Computing System, Nexus switching, and NetApp storage hardware with VMware vSphere software produces a high density of SAP applications per blade.
• With the functionality of the SAP NetWeaver Landscape Virtualization Management software together with NetApp storage, we greatly accelerate the deployment of SAP applications.
• A predefined script framework facilitates SAP Basis operations such as creating fenced clones for use as repair systems or quick system refreshes of quality assurance systems.
• All SAP and non-SAP systems are integrated in a provisioning framework that implements predefined procedures for automated backup and restore to ensure safe, SLA-driven operation.
• We maintain our industry leadership with our new Cisco UCS Manager 2.1 software, which makes scaling efficient, consistency guaranteed, and maintenance simple.
• Our 10G unified fabric story gets additional validation on second-generation 6200 Series Fabric Interconnects and second-generation Nexus 5500 Series access switches as we run more challenging workload testing while maintaining unsurpassed user response times.
• We continue to present a validated design that is 100% virtualized on VMware vSphere 5.1. All of the management components, including DNS, provisioning services, Oracle database servers, and SAP application servers, were hosted as virtual machines.
• The NetApp FAS3200 series system provides storage consolidation and outstanding efficiency. Both block and NFS storage resources were provided by a single system, utilizing NetApp Data ONTAP technology.

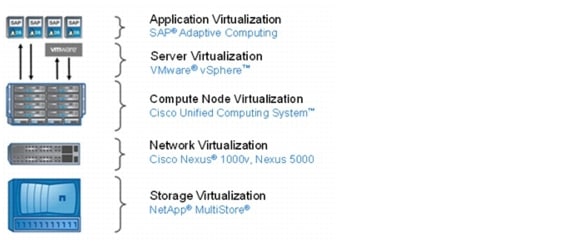

Architecture Overview

The SAP applications built on FlexPod data center solution introduces an infrastructure that is based on virtualization technologies on all layers of the solution stack within a pool of shared resources. SAP applications can be run on VMware virtual machines as well as on bare-metal servers. Figure 2 shows the components that are included.

Figure 2 Technology components of SAP applications built on FlexPod

The current version of the FlexPod data center solution supports SAP applications that run on SuSE Linux® or Red Hat Enterprise Linux using the Oracle® Database.

Management Components

SAP applications built on FlexPod includes the following management software components from the different partners:

• SAP application management:
  – SAP NetWeaver Landscape Virtualization Management (LVM)
• VMware management:
  – VMware vCenter Server
• Server management:
  – Cisco Unified Computing System Manager
• Network management
• NetApp storage management:
  – Operations Manager
  – Provisioning Manager
  – Protection Manager
  – SnapManager® for SAP (SMSAP)
  – Virtual Storage Console (VSC), including SnapManager for Virtual Infrastructure (SMVI) and Rapid Cloning Utility (RCU)

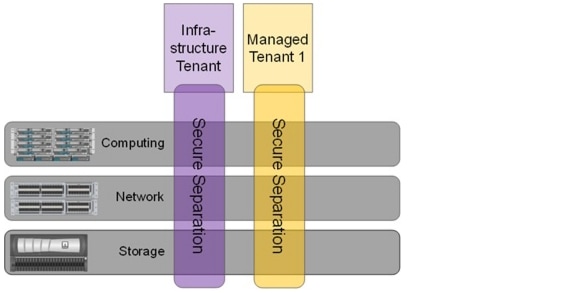

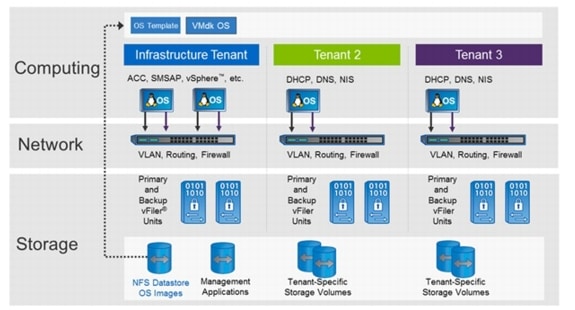

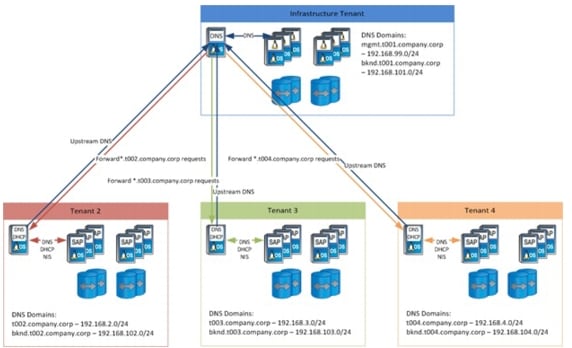

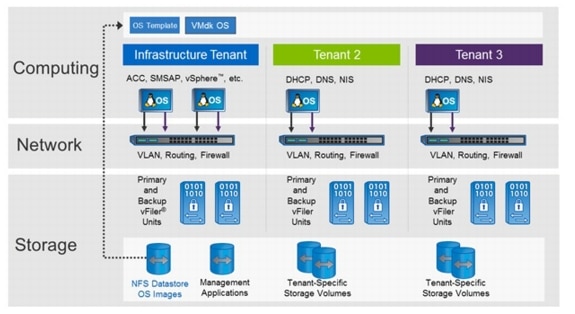

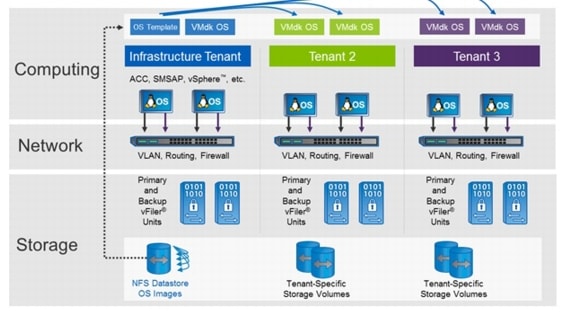

Tenant Operational Modes

SAP applications built on FlexPod is based on a multi-tenancy concept. A tenant is defined as a set of standardized, virtualized resources taken from a shared pool. Each tenant is isolated by VLAN technology on the network layer and by NetApp vFiler® technology on the storage layer. SAP applications built on FlexPod consists of at least two tenants: one infrastructure tenant and one tenant for all SAP applications. Additional tenants can be created based on multi-tenancy requirements; for example, isolation of subsidiaries or isolation of clients. Additional tenants are also used to cover specific use cases such as fenced clones of SAP systems or landscapes.

The infrastructure tenant is used to run all management components for the infrastructure and the application layer. All "managed tenants" are administered from the infrastructure tenant.
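The tenant concept can be illustrated with a small data model. The following Python sketch is not part of the solution's script framework; the class, the function, and the rule that no VLAN or vFiler unit is assigned to more than one tenant are simplifying assumptions made only to show the idea of one infrastructure tenant plus one or more isolated managed tenants.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tenant:
    """One tenant: resources isolated by VLANs and by vFiler units."""
    name: str
    role: str                # "infrastructure" or "managed"
    vlan_ids: frozenset      # hypothetical VLAN IDs, for illustration only
    vfiler_units: frozenset  # hypothetical vFiler unit names


def validate_landscape(tenants):
    """Return a list of problems; an empty list means the layout is consistent."""
    problems = []
    roles = [t.role for t in tenants]
    if roles.count("infrastructure") != 1:
        problems.append("exactly one infrastructure tenant is expected")
    if roles.count("managed") < 1:
        problems.append("at least one managed tenant is expected")
    # Assumed isolation rule: no VLAN and no vFiler unit is assigned to
    # two tenants at the same time.
    for i, a in enumerate(tenants):
        for b in tenants[i + 1:]:
            if a.vlan_ids & b.vlan_ids:
                problems.append(f"{a.name} and {b.name} share a VLAN")
            if a.vfiler_units & b.vfiler_units:
                problems.append(f"{a.name} and {b.name} share a vFiler unit")
    return problems
```

Calling `validate_landscape` with one infrastructure tenant and one managed tenant that use disjoint VLANs and vFiler units returns an empty list. Read-only access to shared resources, such as a central software share, is deliberately left out of this model.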

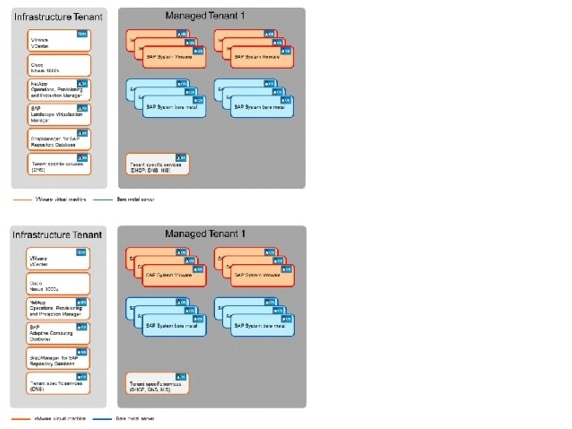

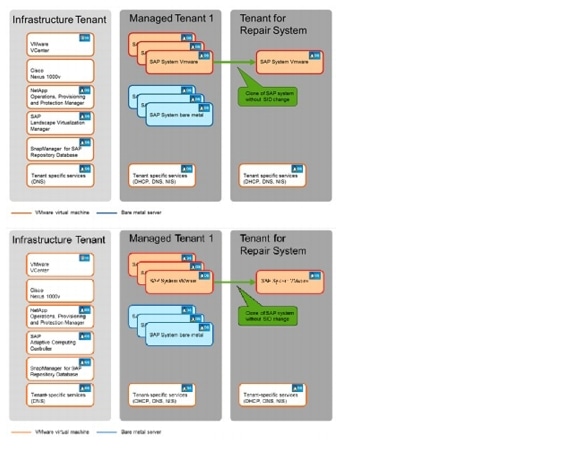

Figure 3 shows how an SAP landscape is deployed with a single managed tenant. All SAP systems are running within this tenant (Managed Tenant 1).

Figure 3 SAP applications built on FlexPod with a single tenant

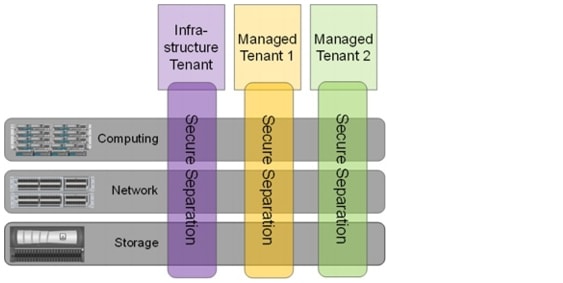

Figure 4 shows multiple tenants.

Figure 4 SAP applications built on FlexPod with multiple tenants

Single-Managed Tenant Operation

Figure 5 shows a single-tenant environment.

Figure 5 Single-tenant operation

The SAP systems can run on VMware virtual machines or on bare-metal servers. All management applications are installed within the infrastructure tenant and run on VMware virtual machines:

• VMware vCenter Server
• NetApp Operations Manager, Protection Manager, and Provisioning Manager
• NetApp SnapManager for SAP repository database
• Tenant-specific services providing Domain Name System (DNS) services
• SAP Landscape Virtualization Management (LVM)

All SAP applications run in the second tenant. The SAP systems can run either on VMware virtual machines or on bare-metal servers.

The "tenant-specific services" also run in a VMware virtual machine in the second tenant, providing Dynamic Host Configuration Protocol (DHCP), Domain Name System (DNS), and Network Information Service (NIS) for this tenant.

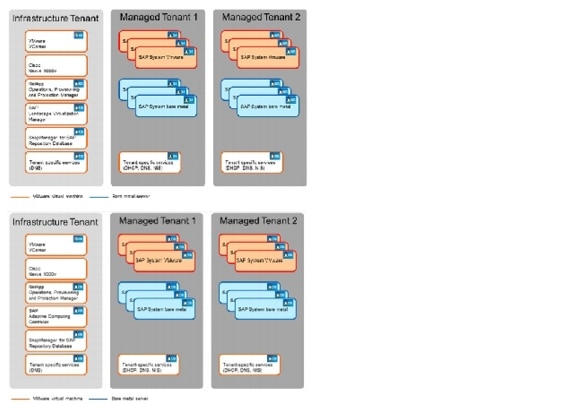

Multiple Managed Tenant Operation

Figure 6 shows how multiple tenants can be used to isolate different subsidiaries, clients, or specific SAP landscapes.

Figure 6 Multiple tenants

When multiple tenants are used, the "tenant-specific services" VM runs in every tenant.

Server Architecture

Cisco Unified Computing System

The Cisco Unified Computing System physical servers are completely stateless and interchangeable. All server, network, and storage configuration information is contained in predefined XML service profiles, stored in the central Cisco UCS Manager, which dynamically maps these service profiles to physical compute blades based on predefined resource pools and policies. SAP administrators can bring new computing resources online and replicate or repurpose them in a matter of minutes rather than the weeks or months it traditionally takes to procure and deploy new resources.
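The idea of stateless servers can be sketched in a few lines. The following Python example is a toy model, not the Cisco UCS Manager API: the class names, pool names, and blade names are hypothetical. It only shows that the identity lives in the service profile, so a profile can be released from one blade and bound to another blade from the same pool.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ServiceProfile:
    name: str
    pool: str                    # resource pool the profile draws blades from
    blade: Optional[str] = None  # None while the profile is unassociated


class BladeManager:
    """Toy stand-in for a central manager; not the Cisco UCS Manager API."""

    def __init__(self, pools: Dict[str, List[str]]):
        # Copy the lists so the caller's data stays untouched.
        self.free = {pool: list(blades) for pool, blades in pools.items()}

    def associate(self, profile: ServiceProfile) -> str:
        """Bind the profile to the next free blade of its pool."""
        if profile.blade is not None:
            raise ValueError(f"{profile.name} is already associated")
        blades = self.free.get(profile.pool, [])
        if not blades:
            raise RuntimeError(f"no free blade in pool {profile.pool!r}")
        profile.blade = blades.pop(0)
        return profile.blade

    def disassociate(self, profile: ServiceProfile) -> None:
        """Release the blade; the profile keeps its identity and can move."""
        if profile.blade is None:
            return
        self.free[profile.pool].append(profile.blade)
        profile.blade = None
```

In this sketch, repurposing a server amounts to calling `disassociate` and then `associate` again; the physical blade carries no configuration of its own.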

The main system components include:

Compute. The system is based on an entirely new class of computing system that incorporates blade servers based on Intel® Xeon® processors. The Cisco UCS blade servers offer patented Cisco Extended Memory Technology to support applications with large datasets and allow more virtual machines per server.

Network. The system is integrated onto a low-latency, lossless, 10Gb/sec unified network fabric. This network foundation consolidates what today are three separate networks: LANs, SANs, and high-performance computing networks. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables and by decreasing power and cooling requirements.

Virtualization. The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

Storage access. The system provides consolidated access to both SAN storage and network-attached storage (NAS) over the unified fabric. Unifying storage access means that the Cisco Unified Computing System can access storage over Ethernet, Fibre Channel, Fibre Channel over Ethernet (FCoE), and iSCSI, providing customers with choice and investment protection. In addition, administrators can preassign storage-access policies for system connectivity to storage resources, simplifying storage connectivity and management while helping increase productivity.

Management. The system uniquely integrates all the system components, enabling the entire solution to be managed as a single entity through the Cisco UCS Manager software. The Cisco UCS Manager provides an intuitive graphical user interface (GUI), a command line interface (CLI), and a robust application programming interface (API) to manage all system configuration and operations. The Cisco UCS Manager helps increase IT staff productivity, enabling storage, network, and server administrators to collaborate on defining service profiles for applications. Service profiles are logical representations of desired physical configurations and infrastructure policies. They help automate provisioning and increase business agility, allowing data center managers to provision resources in minutes instead of days.

Working as a single, cohesive system, these components unify technology in the data center. They represent a radical simplification in comparison to traditional systems, helping simplify data center operations while reducing power and cooling requirements. The system amplifies IT agility for improved business outcomes. The Cisco Unified Computing System components illustrated in Figure 7 include, from left to right, fabric interconnects, blade server chassis, blade servers, and in the foreground, fabric extenders and network adapters.

Figure 7 Cisco Unified Computing System

VMware Architecture

VMware vSphere

VMware vSphere provides a foundation for virtual environments, including clouds. In addition to the hypervisor itself, it provides tools, such as VMware VMotion®, to manage the virtual landscape, and it allows the creation of secure private landscapes. With VMotion, you can move a virtual machine from one physical compute node to another without service interruption.

The powerful VMware virtualization solution enables you to pool server and desktop resources and dynamically allocate them with service-level automation so you can deploy a private cloud and deliver IT as a service (ITaaS). VMware components provide a scalable approach to virtualization that delivers high availability and agility to meet your changing business requirements. VMware vSphere, the industry's most complete and robust virtualization platform, increases IT efficiency through consolidation and automation, dramatically reducing your capital and operating costs while giving you the freedom to choose your applications, OS, and hardware. VMware vCenter Server Standard offers proactive end-to-end centralized management of virtual environments, delivering the visibility and responsiveness you need for cloud-ready applications.

VMware Network Distributed Switch

VMware vNetwork Distributed Switch maintains network runtime state for VMs as they move across multiple hosts, enabling inline monitoring and centralized firewall services. It provides a framework for monitoring and maintaining the security of virtual machines as they move from physical server to physical server and enables the use of third-party virtual switches such as the Cisco Nexus 1000V to extend familiar physical network features and controls to virtual networks.

In combination with a Cisco Virtual Interface Card (VIC) such as the Cisco UCS M81KR Virtual Interface Card, the Cisco UCS Virtual Machine Fabric Extender (VM-FEX) can be used to connect and manage the VMware vNetwork Distributed Switch directly. Using VM-FEX instead of a Cisco Nexus 1000V shifts management from a "network device" to Cisco UCS Manager while keeping all the advantages of a distributed switch, as discussed in the section "Network Architecture."

Throughout this document, the Cisco Nexus 1000V is used for all examples. Appendix D discusses the configuration of a distributed switch based on VM-FEX.

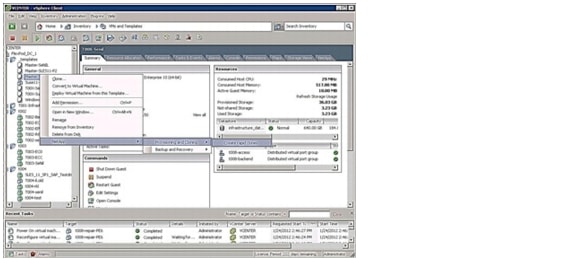

Figure 8 shows the VMware components and the plug-in architecture for VMware vCenter Server, which enables you to integrate additional plug-ins from NetApp and Cisco to manage integrated landscapes.

Figure 8 Overview of VMware components and management plug-ins

Storage Architecture

SAP applications built on FlexPod uses the NetApp MultiStore® software, which lets you quickly and easily create discrete and private logical partitions on a single storage system. Each virtual storage partition, a vFiler secure partition, maintains separation from every other storage partition, so you can enable multiple tenants to share the same storage resource without compromising privacy or security.

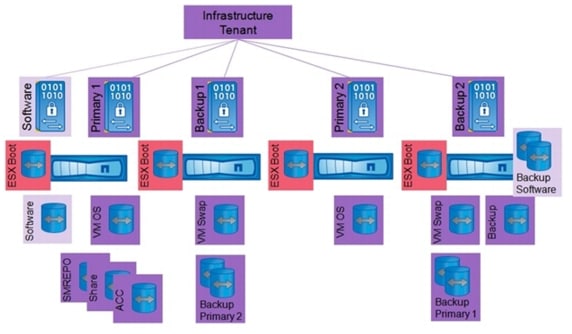

Infrastructure Tenant

The infrastructure tenant is used to run all management components for the infrastructure and the application layer. All "managed tenants" are administered from the infrastructure tenant. This tenant has at least two vFiler units, one primary and one backup. An additional software vFiler secure partition is available, which can be accessed read/write from the infrastructure tenant and read-only from managed tenants. The software vFiler unit is used to distribute software and scripts to the managed tenants for automation purposes.

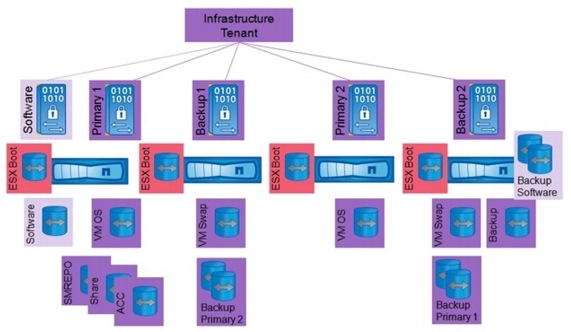

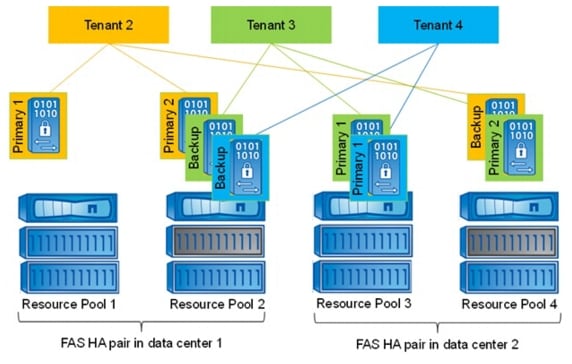

Figure 9 shows an example configuration with two FAS HA pairs located in different data centers. A FAS HA pair consists of two storage controllers in a high-availability configuration.

Each storage controller is assigned to a resource pool in NetApp Provisioning Manager, and vFiler units can be provisioned to each resource pool. In the example, resource pool 2 and resource pool 4 are resource pools that have SAS and SATA drives and are therefore used for both primary and backup vFiler units. The other resource pools are mainly used for primary vFiler units. The software vFiler unit is located at the first resource pool.

Figure 9 vFiler configuration infrastructure tenant

The infrastructure tenant has two central NetApp FlexVol® volumes that are shared with all systems running in this tenant:

•

The volume Share is assigned to vFiler unit Primary1 and is used for:

–

Central SAP transport directory

–

Tenant configuration files

–

Log files of scripts executed in the tenant

•

The volume Backup is assigned to vFiler unit Backup2 and is used for:

–

Backup of Oracle archive log files of SAP ACC database and SMSAP repository database

–

Backup of the DataFabric® Manager (DFM) database

The volumes Share and Backup are mounted by all systems.

In addition, several other volumes are part of the infrastructure tenant:

•

Volumes on vFiler unit Primary1:

–

Volume for the VM datastore

–

Volumes for the SAP ACC system

–

Volumes for the SMSAP repository database

•

Volumes on vFiler unit Backup1:

–

The datastore used as swap space for VMs

–

The backups of all second primary vFiler volumes

•

Volumes on vFiler unit Backup2:

–

Backup of all first primary vFiler volumes

–

Additional VM datastore used as swap space for VMs

–

The backup of the software volume is located at the same physical controller as the second backup vFiler unit

•

Volumes on vFiler unit Primary2:

–

Additional VM datastore

•

Volumes on vFiler software:

–

Volume software

The boot LUNs for the VMware ESXi and bare metal server must be configured on the physical controllers. All physical storage controllers can host volumes with boot LUNs. However, the LUNs should be located in the same data center as the servers that boot using these LUNs.

Figure 10 is an overview of the distribution of volumes to vFiler units and controllers.

Figure 10 Overview of infrastructure tenant volumes

Managed Tenants

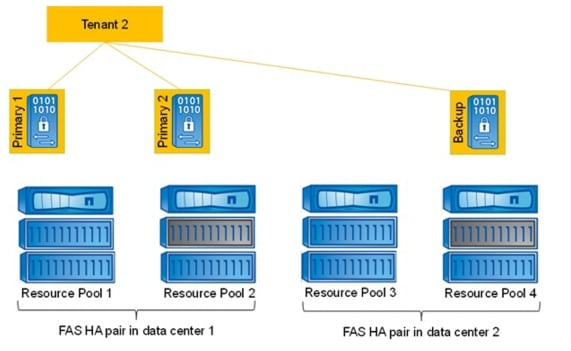

Figure 11 shows an example configuration with two FAS HA pairs located in different data centers. Each storage controller is assigned to a resource pool in NetApp Provisioning Manager, and vFiler units can be provisioned to each resource pool. In the example, resource pool 2 and resource pool 4 are resource pools that have SAS and SATA drives and are therefore used for primary and backup vFiler units. The other resource pools are used for primary vFiler units. With SAP applications built on FlexPod, the vFiler units are configured as follows:

•

Each managed tenant has at least:

–

One primary vFiler unit, Primary1

–

One backup vFiler unit, Backup, located in the second data center in this example

•

Additional primary vFiler units can be assigned to a tenant:

–

Additional primary vFiler units are on different physical storage controllers (resource pools)

–

A single tenant can scale to multiple physical storage controllers for performance reasons

Figure 11 vFiler unit configuration for a tenant
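The vFiler unit rules listed above can be expressed as a small consistency check. The following is a minimal sketch only: the `VFiler` class, the `validate_tenant` function, and the resource pool names are hypothetical and do not correspond to any NetApp tool or API.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class VFiler:
    name: str           # for example "Primary1" or "Backup"
    role: str           # "primary" or "backup"
    resource_pool: str  # one resource pool per physical controller

def validate_tenant(vfilers):
    """Return a list of rule violations for one managed tenant."""
    problems = []
    primaries = [v for v in vfilers if v.role == "primary"]
    backups = [v for v in vfilers if v.role == "backup"]
    if not primaries:
        problems.append("tenant needs at least one primary vFiler unit")
    if not backups:
        problems.append("tenant needs at least one backup vFiler unit")
    # Additional primary vFiler units belong on different controllers.
    pools = [v.resource_pool for v in primaries]
    if len(pools) != len(set(pools)):
        problems.append("primary vFiler units must use different resource pools")
    return problems
```

An empty result means the tenant layout satisfies the minimum requirements described above.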

Figure 12 shows an example configuration with three managed tenants. The primary vFiler units are distributed to different resource pools. The backup vFiler units are configured at the backup resource pool in the other data center so that backups of primary data are located in a different data center.

Figure 12 vFiler unit configuration for multiple tenants

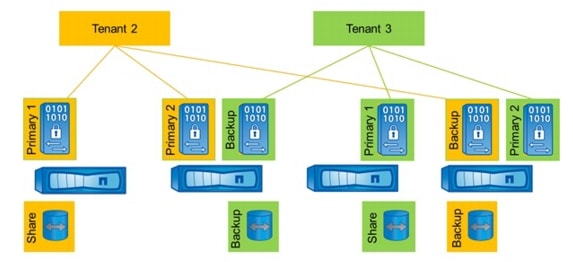

Each tenant has two central FlexVol volumes that are shared with all SAP systems running in that tenant.

•

The volume Share is used for:

–

Central SAP transport directory

–

Tenant configuration files

–

Log files of scripts executed in the tenant

•

The volume Share is assigned to vFiler unit Primary1 on the tenant

•

The volume Backup is used for:

–

Backup of Oracle archive log files with Brarchive

•

The volume Backup is assigned to vFiler unit Backup of the tenant

The volumes Share and Backup are mounted by all operating systems during system boot. Figure 13 shows the central volumes of a single tenant.

Figure 13 Central volumes of a tenant

An additional tenant necessitates an additional central volume Backup on the Backup vFiler unit of the tenant and an additional Share volume assigned to the Primary1 vFiler unit of that tenant. Figure 14 shows the central volume configuration with two managed tenants.

Figure 14 Central volumes with multiple tenants
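As an illustration of how the central volumes grow with the number of tenants, the sketch below builds the volume-to-vFiler assignment for a list of tenants. The function name `central_volumes` and the dictionary layout are assumptions made for this example.

```python
def central_volumes(tenants):
    """Map each tenant to its two central volumes and the hosting vFiler unit."""
    plan = {}
    for tenant in tenants:
        plan[tenant] = {
            "Share": "Primary1",  # transport directory, config files, script logs
            "Backup": "Backup",   # Oracle archive log backups made with Brarchive
        }
    return plan
```

Calling `central_volumes(["t002", "t003"])` yields one Share and one Backup volume per tenant, matching the two-tenant configuration described above.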

During the process of provisioning storage volumes for an SAP system, you can decide on which primary vFiler unit the SAP system should run. This allows different SAP systems to run on different storage hardware. Each SAP system consists of two volumes, the sapdata and the saplog volumes. Backups of SAP systems are controlled by SnapManager for SAP and NetApp OnCommand® Unified Manager. The backups are stored on the backup vFiler unit of each tenant. When an SAP system copy has finished using SnapManager for SAP, the clone of the sapdata volume can be either on the same primary vFiler unit as the source system or on the backup vFiler unit. Figure 15 shows an example distribution of SAP volumes.

Figure 15 SAP volumes of a tenant

With additional tenants, the FlexVol volumes for the SAP systems of the new tenant are assigned to the primary vFiler units of the tenant. Figure 16 shows an example configuration.

Figure 16 SAP volumes with multiple tenants

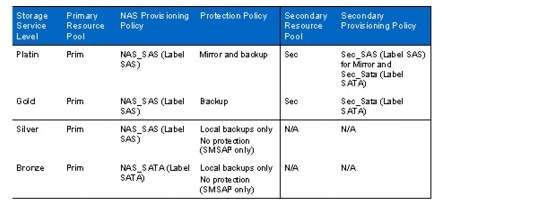

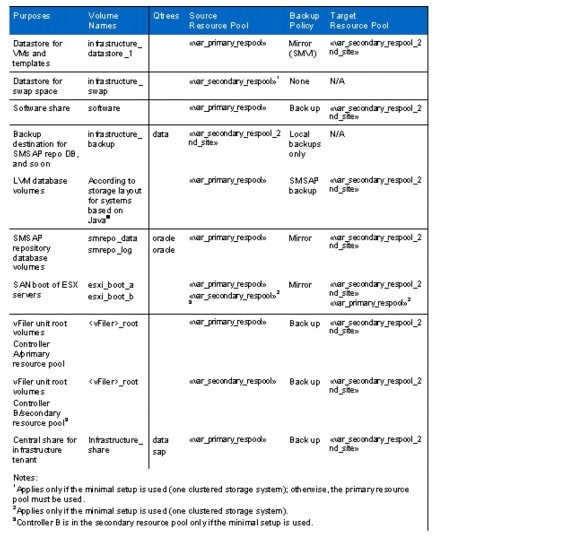

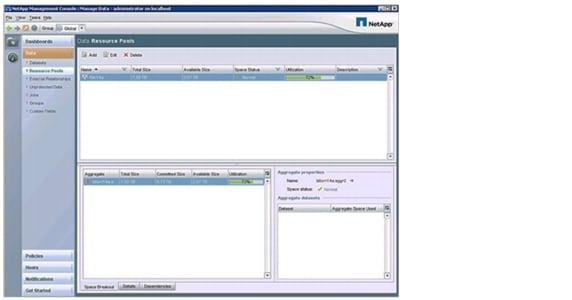

Examples of Storage Service Levels

Based on resource pools, provisioning policies, and protection policies, different storage service levels can be offered by NetApp Operations Manager, Protection Manager, and Provisioning Manager.

A resource pool can consist of several physical controllers and aggregates. However, with SAP applications built on FlexPod, each resource pool should contain only one controller. The controller and aggregates of one resource pool are used to provision storage units such as vFiler units, volumes, and qtrees.

A provisioning policy defines, based on several criteria, which physical storage is used for provisioning storage. The criteria include RAID protection levels, deduplication, HA configuration of the storage system, and other factors.

Two kinds of provisioning policies are used in FlexPod: NAS and secondary. A NAS provisioning policy is used for primary storage and can also be used for mirror destinations. A secondary provisioning policy is used for backup purposes and can also be used for mirror destinations.

A protection policy defines the way in which the primary data is protected. A mirror protection policy is used mainly for DR purposes (1:1 source:destination); a backup policy is used for backup purposes (1:N relationship).

Labels can be assigned to different physical storage objects like controllers and aggregates. These labels can be set in a provisioning policy, for example to make sure that the right aggregate is used.

Table 1 shows four different storage service levels: Platin, Gold, Silver, and Bronze. Each service level includes a resource pool, a NAS provisioning policy, and a protection policy. In addition, a secondary resource pool and secondary provisioning policy are needed for Platin and Gold levels.

Table 1 Storage service levels

Notes

•

Primary resource pools (Prim). All storage systems that are used for primary storage should belong to these resource pools. Different types of aggregates should receive appropriate labels when the resource pool is created. For example, an aggregate created from SAS or FCP disks would be assigned the resource label SAS; an aggregate created from SATA disks would be assigned the label SATA.

•

NAS provisioning policy. Create two provisioning profiles: NAS_SAS, using the label SAS; and NAS_SATA, using the label SATA.

•

Secondary resource pools (Sec). All storage systems that are used for secondary storage should belong to these resource pools. Different types of aggregates should receive appropriate labels when the resource pool is created. For example, an aggregate created from SAS or FCP disks would be assigned the label SAS; an aggregate created from SATA disks would be assigned the label SATA.

•

Backup provisioning policy. Create two provisioning profiles: SEC_SAS, using the label SAS; and SEC_SATA, using the label SATA.

•

The Platin service level provisions primary storage out of the Prim resource pool using SAS or FCP disks with the NAS_SAS provisioning policy. The data is mirrored to a second location provisioned out of the SEC resource pool using the SEC_SAS provisioning policy for disaster recovery. In addition, the primary data is backed up to a third location provisioned out of the SEC resource pool with the SEC_SATA provisioning policy. Therefore the Mirror and Backup protection policy is used.

•

The Gold service level provisions primary storage out of the Prim resource pool using SAS or FCP disks with the NAS_SAS provisioning policy. The data is backed up to a second location provisioned out of the SEC resource pool using the SEC_SATA provisioning policy with the Backup protection policy.

•

The Silver service level provisions primary storage out of the Prim resource pool using SAS or FCP disks with the NAS_SAS provisioning policy. The data is backed up using local NetApp Snapshot™ copies. Because local Snapshot copies are created by SMSAP, it is not necessary to assign a protection policy.

•

The Bronze service level provisions primary storage out of the Prim resource pool using SATA disks with the NAS_SATA provisioning policy. The data is backed up using local Snapshot copies. It is not necessary to assign a protection policy because local Snapshot copies are created by SMSAP.
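The four service levels described in these notes can be summarized as a lookup table. The following sketch uses the policy names from the notes; the `ServiceLevel` type and the `needs_secondary_pool` helper are hypothetical names introduced for illustration.

```python
from typing import NamedTuple, Optional

class ServiceLevel(NamedTuple):
    primary_policy: str               # NAS provisioning policy for primary storage
    protection_policy: Optional[str]  # None: only local Snapshot copies by SMSAP
    secondary_policies: tuple         # provisioning policies for secondary storage

SERVICE_LEVELS = {
    "Platin": ServiceLevel("NAS_SAS", "Mirror and Backup", ("SEC_SAS", "SEC_SATA")),
    "Gold": ServiceLevel("NAS_SAS", "Backup", ("SEC_SATA",)),
    "Silver": ServiceLevel("NAS_SAS", None, ()),
    "Bronze": ServiceLevel("NAS_SATA", None, ()),
}

def needs_secondary_pool(level):
    """True if the service level provisions storage from a secondary pool."""
    return bool(SERVICE_LEVELS[level].secondary_policies)
```

Only Platin and Gold return `True` here, which reflects that a secondary resource pool and secondary provisioning policy are needed for those two levels.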

For more information, refer to "Provisioning Manager and Protection Manager Guide to Common Workflows for Administrators for Use with DataFabric Manager Server 4.0."

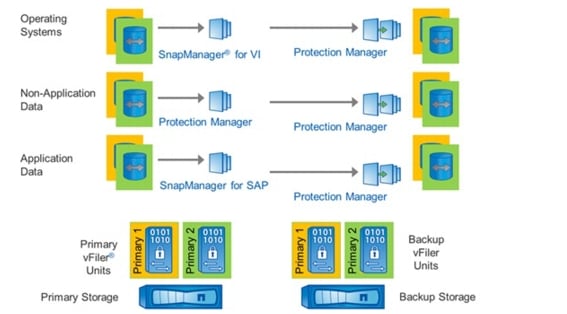

Integrated Storage-Based Backup

SAP applications built on FlexPod has an integrated backup solution for all infrastructure and application data. Nonapplication data is backed up by using Protection Manager. All storage volumes are provisioned with Provisioning Manager and are automatically assigned to a protection policy.

Virtual machines and templates are backed up with SnapManager for Virtual Infrastructure (SMVI), which is part of the NetApp Virtual Storage Console (VSC). SMVI integrates with VMware vSphere and provides consistent storage of Snapshot images of individual VMs or complete datastores. These consistent Snapshot images are replicated to a secondary storage system through Protection Manager.

SAP application data is backed up with SnapManager for SAP and Protection Manager, offering a Backint integration into SAP Br*tools.

The integrated storage-based backup offers these benefits:

•

Automated, application-integrated data protection for all data

•

Fast and space-efficient backups based on Snapshot technology

•

Efficient block-incremental replication to backup storage

•

Backup decoupled from the server layer

•

Restore in minutes

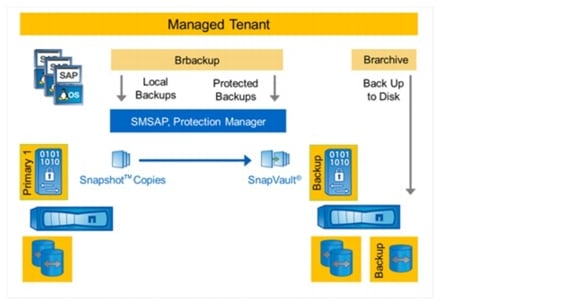

Figure 17 is an overview of the backup solution.

Figure 17 Overview of integrated backup

SnapManager for SAP offers Backint integration into SAP Br*tools. Backup and restore processes are executed with SAP Brbackup, Brarchive, Brrecover, and Brrestore.

Backups executed by Brbackup can be done as either local or protected backups.

•

A local backup creates a Snapshot copy of the SAP system on the primary vFiler unit.

•

A protected backup also initiates a block-incremental replication of the backup data to the backup vFiler unit.

Local backups are typically created more than once a day and are kept for 3 to 5 days at the primary storage. Protected backups are typically created once a day and are kept for 2 to 4 weeks at the secondary storage. Backup schedules and retention policies can be configured individually with the SAP database planning calendar, SnapManager for SAP, and Protection Manager.
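To make the two retention windows concrete, the sketch below flags backups that have exceeded their retention period. It is an illustration only: retention is actually configured in SnapManager for SAP and Protection Manager, and the values of 5 days and 4 weeks are assumptions picked from the typical ranges given above.

```python
from datetime import datetime, timedelta

# Assumed retention values chosen from the typical ranges in the text.
RETENTION = {
    "local": timedelta(days=5),       # kept at the primary storage
    "protected": timedelta(weeks=4),  # kept at the secondary storage
}

def expired_backups(backups, now):
    """Return the backups whose age exceeds the retention of their kind.

    `backups` is a list of (label, kind, created) tuples, where kind is a
    key of RETENTION and created is a datetime.
    """
    return [b for b in backups if now - b[2] > RETENTION[b[1]]]
```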

Archive log backups are made using Brarchive. Brarchive is configured to back up the archive logs directly to the backup volume on the secondary storage system. Figure 18 shows the backup process of SAP systems.

Figure 18 Integrated backup of SAP systems

Network Architecture

Cisco Nexus 5000 Series Switches

The Cisco Nexus 5000 Series switches, part of the Cisco Nexus family of data-center-class switches, deliver an innovative architecture to simplify data center transformation by enabling a high-performance, standards-based, Ethernet unified fabric. The platform consolidates separate LAN, SAN, and server cluster network environments into a single unified fabric. Backed by a broad system of industry-leading technology partners, the Cisco Nexus 5000 Series is designed to meet the challenges of next-generation data centers, including dense multisocket, multicore, virtual-machine-optimized services, in which infrastructure sprawl and increasingly demanding workloads are commonplace.

Cisco Nexus 1000V Series Switches

The Cisco Nexus 1000V Series provides a common management model for both physical and virtual network infrastructures through Cisco VN-Link technology, which includes policy-based virtual machine connectivity, mobility of virtual machine security and network properties, and a nondisruptive operational model.

Policy-Based Virtual Machine Connectivity

To facilitate easy creation and provisioning of virtual machines, the Cisco Nexus 1000V Series includes port profiles. Port profiles enable you to define virtual machine network policies for different types or classes of virtual machines and then apply the profiles through VMware vCenter. Port profiles are a scalable mechanism for configuring networks with large numbers of virtual machines. When the port profiles include QoS and security policies, they constitute a complete service-level agreement (SLA) for the virtual machine's traffic.

Mobility of Virtual Machine Security and Network Properties

Network and security policies defined in the port profile follow the virtual machine throughout its lifecycle, whether it is being migrated from one server to another, suspended, hibernated, or restarted. In addition to migrating the policy, the Cisco Nexus 1000V Series VSM moves the virtual machine's network state. Virtual machines participating in traffic-monitoring activities can continue these activities uninterrupted by VMware VMotion operations. When a specific port profile is updated, the Cisco Nexus 1000V Series automatically provides live updates to all the virtual ports that use that same port profile. The capability to migrate network and security policies through VMware VMotion makes regulatory compliance much easier to enforce with the Cisco Nexus 1000V Series because the security policy is defined in the same way as for physical servers and is constantly enforced by the switch.

Example Network Architecture

Putting it all together, this example shows how all the required physical components must be attached to the networking layer:

•

Cisco UCS fabric interconnect, 2 chassis with 2 blades each

•

Cisco Nexus 5000 Series switch

•

NetApp physical storage controller A/B

•

VMware ESX Server

•

Central switch (not included as part of this solution)

•

Physical management switch (not included as part of this solution)

In order to focus on the network essentials, this example does not include any FC or FCoE connectivity, nor does it detail HA setup on the Cisco Nexus Switch layer. It includes only one NetApp cluster; that is, two physical control nodes.

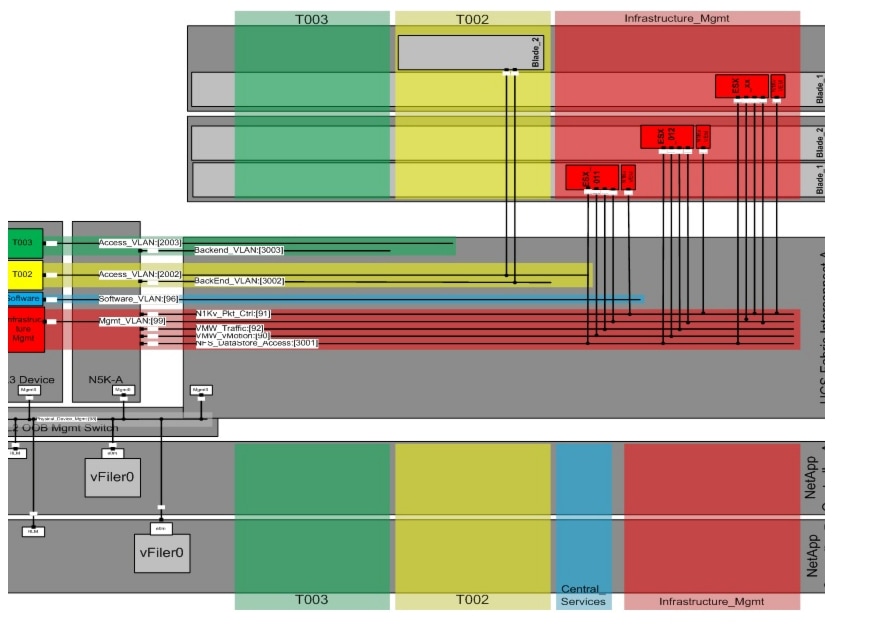

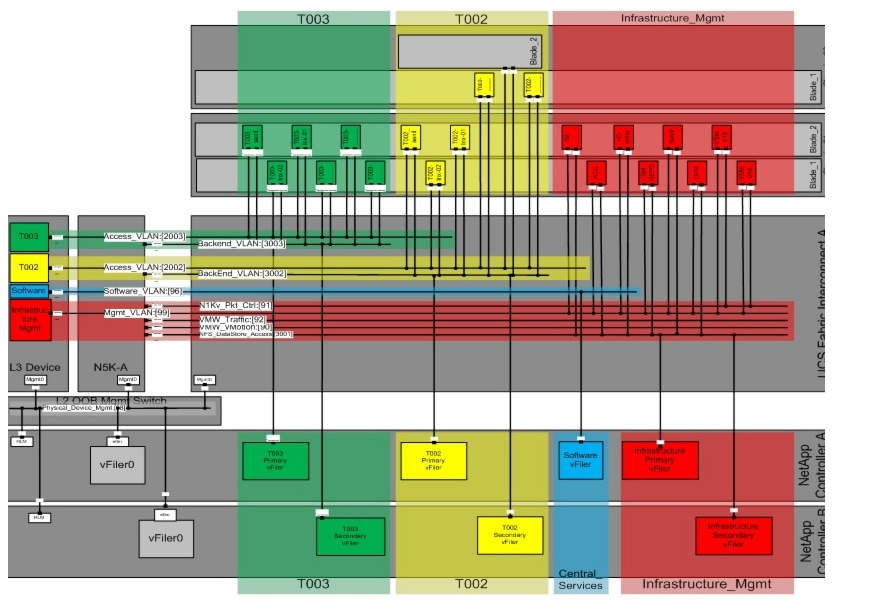

To illustrate the tenant-based structure, this example uses a total of three tenants: one infrastructure tenant and two tenants (t002, t003) to run independent SAP landscapes.

In this example, all physical components are connected to a physical management switch because this configuration is found in most customer data centers for the physical or console LAN.

As shown in Figure 19, four blades are used in such a way that:

•

Three servers are running ESXi as virtualization layer

•

One server is attached directly to tenant t002

Figure 19 Example network—physical layer

For each IP network, a VLAN is defined that separates the different networks on layer 2. The networks can be grouped into three logical segments:

•

Infrastructure management, with the following virtual LANs

–

Management VLAN (for example, VLAN ID 99)

–

NFS datastore VLAN (for example, VLAN ID 3001)

–

Cisco Nexus 1000V packet control (for example, VLAN ID 91)

–

VM traffic (for example, VLAN ID 92)

–

VMotion (for example, VLAN ID 90)

•

Tenant t002

–

Access LAN (VLAN ID 2002)

–

Back-end VLAN (VLAN ID 3002)

•

Tenant t003

–

Access LAN (VLAN ID 2003)

–

Back-end VLAN (VLAN ID 3003)

All the VLANs are connected to the Cisco Nexus 5000 Series switch and are defined at the UCS uplink; the physical storage controllers only define interfaces on the physical management LAN.
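The VLAN layout listed above can be captured in a small data structure. In the sketch below, the infrastructure VLAN IDs are the example values from the list; the `tenant_vlans` function and its 2000+n / 3000+n numbering scheme are an assumption inferred from the t002 and t003 examples, not a rule stated by this guide.

```python
# Example VLAN IDs for the infrastructure management segment.
INFRASTRUCTURE_VLANS = {
    "management": 99,
    "nfs-datastore": 3001,
    "n1kv-packet-control": 91,
    "vm-traffic": 92,
    "vmotion": 90,
}

def tenant_vlans(tenant_number):
    """Derive VLAN IDs for a managed tenant (assumed numbering scheme)."""
    return {
        "access": 2000 + tenant_number,   # client and tenant-external traffic
        "backend": 3000 + tenant_number,  # storage connection
    }
```

With this scheme, `tenant_vlans(2)` returns the access and back-end VLAN IDs used for tenant t002 in the example.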

Each virtual or physical host in a tenant, except for the special infrastructure tenant, is connected to two networks:

•

The access VLAN, for the client and tenant-external connectivity

•

The back-end VLAN, for the storage connection

As shown in Figure 20, the vFiler units are only connected to the back-end VLAN.

Routing

To reach the access VLAN from the customer network, and for all internal management connections between the infrastructure management VLAN and the tenant access VLANs, the solution requires that routing into these VLANs is possible.

The previous version of this guide describes the connection of the FlexPod infrastructure to a central switch to use the required L3 functionality to enable routing. Now, as an additional option, the L3 modules of both Cisco Nexus 5548 switches can be used to implement the required L3 functionality. All examples throughout this document use the central switch configuration; an alternative setup using the Cisco Nexus 5548 L3 modules is described in Appendix E.

For the tenant access VLANs and the infrastructure management VLAN only, a switch virtual interface (SVI) is defined on the central switch. The SVI offers basic layer 3 routing functionality on a layer 2 switch, implementing inter-VLAN routing without requiring specific routing protocols.

Layer 3 separation of the tenants is accomplished by defining ACLs on the central switch, so that all security and connectivity requirements can be adapted through standard or extended ACLs. ACLs must be defined so that communication between the individual tenants (t002, t003) is prohibited, while communication between the infrastructure tenant and the individual tenants must be possible in order to allow management functions to work.

ACLs can also be used to control the accessibility of the systems in a tenant from the client network. For more information about ACLs, see section 4, "FlexPod Built on VMware Setup."
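The tenant separation rule can be stated as a simple decision function. This is a sketch of the policy only, not switch configuration; the `is_allowed` function and the `"infrastructure"` label are hypothetical, and the treatment of traffic within a single tenant is an assumption.

```python
INFRASTRUCTURE = "infrastructure"

def is_allowed(src_tenant, dst_tenant):
    """Decide whether routed traffic between two tenants should be permitted."""
    if src_tenant == dst_tenant:
        # Assumption: traffic inside one tenant is not restricted by these ACLs.
        return True
    # The infrastructure tenant must reach every tenant for management;
    # traffic between two different managed tenants is prohibited.
    return INFRASTRUCTURE in (src_tenant, dst_tenant)
```

For example, `is_allowed("t002", "t003")` is `False`, while `is_allowed("infrastructure", "t002")` is `True`.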

Figure 20 Example network—virtual systems view

Application and Data Mobility

SAP applications built on FlexPod provides mobility on all layers of the solution stack:

•

Application mobility with SAP Adaptive Computing

•

Server mobility based on Cisco UCS technology

•

Live migration of VMs with VMware VMotion

•

Migration of NetApp vFiler units

These technologies are used for migrating applications and data with little or no downtime; they support the following use cases:

•

Changing performance requirements

–

Migration of data to a different physical storage system

–

Migration of VMs to a different ESX server

–

Migration of SAP systems or databases from VM to bare metal server or vice versa

•

Minimize planned downtime (hardware exchange)

–

Migration of vFiler units, data, VMs, or SAP applications to new hardware

•

Support requirements

–

Migration of the database from a VM to a bare metal server

Automated Provisioning of Infrastructure Components

SAP applications built on FlexPod offers the possibility of automatically provisioning infrastructure components, such as the creation of new tenants and the deployment of operating systems based on templates.

Tenant Provisioning

All managed tenants in the SAP applications built on FlexPod solution are based on the same components, combined with tenant-specific parameters. This standardization allows you to document and automate the workflow for the provisioning and deprovisioning of additional tenants.

A tenant is provisioned with the following configuration steps:

•

Network

–

VLAN, routing, and ACL configuration

•

Storage

–

Provisioning of vFiler units

–

Provisioning of central tenant-specific volumes

•

Tenant-specific services

–

Provisioning of a VM template, providing DHCP, DNS, and NIS services

–

DHCP, DNS, and NIS server configuration

•

Tenant-specific SMSAP configuration

–

Repository configuration

–

DFM, vFiler, SMSAP, and SDU user and credential configuration

Figure 21 shows an overview of tenant components.

Figure 21 Overview of tenant components
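Because every managed tenant is provisioned with the same steps, the workflow lends itself to a simple ordered step list. The sketch below is one possible way to model it; `provision_tenant` and the `run_step` callback are hypothetical names, and the actual scripts delivered with the solution may be structured differently.

```python
TENANT_PROVISIONING_STEPS = [
    ("network", "VLAN, routing, and ACL configuration"),
    ("storage", "provision vFiler units"),
    ("storage", "provision central tenant-specific volumes"),
    ("services", "provision VM template providing DHCP, DNS, and NIS"),
    ("services", "configure DHCP, DNS, and NIS servers"),
    ("smsap", "configure repository"),
    ("smsap", "configure DFM, vFiler, SMSAP, and SDU users and credentials"),
]

def provision_tenant(tenant, run_step):
    """Run every step in order and stop at the first failure.

    `run_step(tenant, area, description)` is a caller-supplied callable that
    returns True on success. Returns the descriptions of completed steps.
    """
    done = []
    for area, description in TENANT_PROVISIONING_STEPS:
        if not run_step(tenant, area, description):
            break
        done.append(description)
    return done
```

Stopping at the first failed step keeps later steps, such as the SMSAP configuration, from running against storage that was never provisioned.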

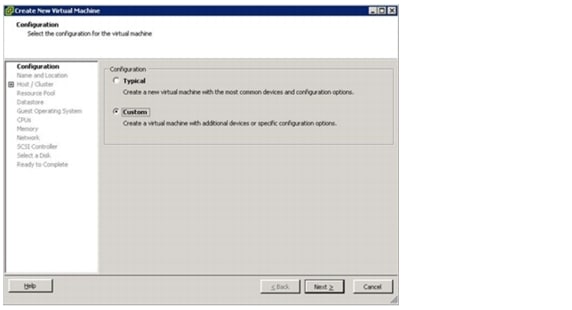

Operating System Deployment

The combination of NetApp cloning technology, templates of operating systems, and virtualized applications with SAP Adaptive Computing allows fast provisioning of operating systems for new servers and virtual machines and simplifies patching of operating systems.

New patches are applied and tested on a new golden image. The image is then cloned and, in combination with VMware vSphere and the Cisco UCS, provisioned to new servers (physical or virtual), and the SAP application is relocated to the new hosts.

All required services for operating an SAP system (for example, backup services) are preinstalled and are automatically configured during the operating system provisioning process. This reduces the amount of time and resources required to apply patches to operating systems, because only one operating system must be patched and tested and not the operating systems of all involved hosts.

Automated Space-Efficient SAP System Copies

SAP applications built on FlexPod leverages SnapManager for SAP to create space-efficient SAP system copies. Any backup that has been created with SMSAP can be used as a source for an SAP system copy. The target storage of the system copy can be either the primary or the backup vFiler unit. The source and the target SAP systems must be in the same tenant.

The following use cases are supported with SAP applications built on FlexPod:

•

New SAP test or QA system setup based on a clone of the production system. SMSAP is used in combination with SAPinst to install the new system and to provide the database data by using NetApp FlexClone® technology.

•

Refresh of an existing SAP test or QA system based on a clone of the production systems. SMSAP is used to refresh the database data by using FlexClone technology. This document describes only the refreshing of Advanced Business Application Programming (ABAP) based systems.

Figure 22 shows the process for automated space-efficient SAP system copies.

Figure 22 Automated SAP system copies

Provisioning Fenced SAP Systems

The built-in secure multi-tenancy functionality of SAP applications built on FlexPod is also used to cover specific use cases that require isolation of an SAP system.

A fenced SAP system is an identical 1:1 clone of an SAP source system and can therefore be created in a few minutes. The clone has the same SID as the source system. With the standard approach, the target system has a different IP address and a different fully qualified domain name (FQDN). Both attributes are tenant specific.

For example:

Source system: SID=PE6, source tenant=t002, hostname=cipe6.t002.company.corp, IP=192.168.2.100

Target system: SID=PE6, target tenant=t003, hostname=cipe6.t003.company.corp, IP=192.168.3.100
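The relationship between source and target system in this example can be sketched as follows. The naming pattern (`ci<sid>.t<nnn>.company.corp` and `192.168.<n>.100`) is inferred from this single example and is an assumption; `fenced_identity` is a hypothetical helper.

```python
def fenced_identity(sid, tenant_number):
    """Build the tenant-specific attributes of an SAP system.

    Assumes the pattern visible in the example: the SID stays the same,
    while the FQDN and IP address depend on the tenant.
    """
    tenant = f"t{tenant_number:03d}"
    return {
        "sid": sid,
        "tenant": tenant,
        "hostname": f"ci{sid.lower()}.{tenant}.company.corp",
        "ip": f"192.168.{tenant_number}.100",
    }
```

Calling the helper with the same SID for tenants 2 and 3 reproduces the source and target systems shown above.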

One use case, shown in Figure 23, is the creation of a repair system that can be used to address logical errors that occurred in the source system.

Figure 23 Multiple tenants including a repair system

SAP applications built on FlexPod includes a fully automated workflow for creating a repair system:

1.

An SMSAP backup is chosen. The time stamp of the repair system is very flexible, since any SMSAP backup that has been created at the source system can be used.

2.

A new OS image is provisioned into the repair tenant.

3.

The required users and services are configured within the tenant-specific services in the repair tenant.

4.

The source system is cloned using the chosen SMSAP backup.

5.

The clone is moved to the repair tenant.

6.

The database and the SAP system are started in the repair tenant.

Provisioning of complete fenced SAP landscapes requires additional steps, which are described in section, "Cloning a Complete System Landscape."

System Scalability

Over time, the application and business demands for a customer implementation may grow and additional storage or compute capabilities may be required. In a standard implementation, adding resources to an existing environment presents some challenges for the IT team that may not be easy to handle in a highly demanding and business-critical application stack such as SAP.

SAP applications built on FlexPod is designed to be as scalable as possible. This is true for the base components such as compute, network, and storage, and also for the design and related software solutions that support this scalability.

Scalability, in all its different directions, is a very complex and customer-specific process. However, this section outlines some basic principles that you can use to meet the demands of growth and scalability.

Extending Additional Storage

Additional storage may be necessary due to additional space requirements, which are often correlated with increased I/O demands on the storage controller.

The requirement for additional space can be easily solved by adding shelves to the existing controllers, and the requirement for additional I/O capacity can be solved by adding storage systems. SAP applications built on FlexPod is built with all equipment connected to Cisco Nexus 5000 switches, which typically provide more than enough bandwidth so that the new storage can easily be added by using spare ports.

Because all customer projects use vFiler units, these units can easily be moved to the new storage systems without a major impact on operations.

Extending Additional Compute Resources

Applications such as SAP often require additional compute resources. This need might be due to enhanced functionality added over time, or it might be due to an increase in the number of users to the system, which requires more CPU and memory. Because SAP is a highly flexible architecture, most of these demands can be met by simply adding application servers and thus making use of SAP's 3-tier architecture.

Other demands require additional memory and CPU for an existing SAP instance. Because SAP applications built on FlexPod uses VMware for virtualization and can also integrate physical blades as compute resources, these demands can typically be met by changing VMware's CPU and memory assignment to the virtual machine. The architecture and implementation of SAP applications built on FlexPod use adaptive-enabled SAP installations, so assigning additional resources is as simple as stopping an SAP instance on one system, such as a virtual machine, and restarting it on another system, such as a physical blade. After some modifications to the SAP profile, the relocated SAP instance uses the additional resources.

All of these scalability features are incorporated into the solution design. But at some point, you may need to physically add compute resources to the infrastructure in order to meet application demands. This is as easy as adding storage. For example, an additional chassis with slots for additional blades can easily be added to a Cisco UCS Fabric Interconnect. After configuring this new server, the added compute power can be used by applying the Cisco UCS service profiles, allowing you to move an existing server definition to the added blades and to use not only the additional hardware, but also the increased amount of memory or CPU power.

To implement all of these changes, only minimal modifications need to be made to the configuration, with only minimal disruption of the business applications, such as restarting an SAP system.

Adding a Second FlexPod Infrastructure

Some customers want to grow linearly by adding storage and compute power to an existing environment. Others consider adding a complete FlexPod infrastructure in a second server room and want to understand how to use these additional resources efficiently.

This setup is often discussed with additional requirements for high availability and disaster recovery. These topics will be covered in detail in a later release of SAP applications built on FlexPod; this subsection describes only a simple, generic application of this infrastructure. Typically, only a customer-specific project can describe in detail all requirements and demands.

One way to add a complete FlexPod infrastructure (storage, compute, Cisco UCS, and Cisco Nexus) and use it for application demands, while avoiding additional overhead due to duplicate management and/or infrastructure components, is to simply connect the additional components on a pure networking basis through a layer 2 connection to the central switch. There are many different ways of doing this, but at a high level the tasks are as follows:

•

Install and configure the new infrastructure according to the FlexPod Built on VMware guide, with the following exceptions:

–

Use different host names and IP addresses for the equipment so that it can be integrated into the existing networking structure.

–

There is no need to install infrastructure components such as Virtual Center, DFM, and so on. Instead, use the existing infrastructure services and management components directly.

•

Change the uplink to the central switch so that all required VLANs are connected to the central switch (not just the Access-LAN networks), including:

–

Access and back-end VLANs for all tenants, including infrastructure tenants

–

Software and N1Kv packet control VLAN

–

NDMP VLAN, for synchronization between storage systems

–

VMotion VLAN (only if VMotion should be enabled between the two sites)
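The VLAN requirements for the uplink can be turned into a checklist. The sketch below is illustrative: the function names and VLAN labels are hypothetical, and it assumes that "Software and N1Kv packet control VLAN" refers to two separate VLANs.

```python
def required_uplink_vlans(tenants, vmotion_between_sites=False):
    """Return the set of VLAN labels the uplink to the central switch must carry.

    `tenants` should include the infrastructure tenant as well as all
    managed tenants.
    """
    required = set()
    for tenant in tenants:
        required.add(f"{tenant}-access")
        required.add(f"{tenant}-backend")
    required.update({"software", "n1kv-packet-control", "ndmp"})
    if vmotion_between_sites:
        required.add("vmotion")  # only needed for VMotion between the two sites
    return required

def missing_uplink_vlans(required, trunked):
    """Return the required VLAN labels that are not yet carried on the uplink."""
    return sorted(set(required) - set(trunked))
```

Comparing the required set against the VLANs currently allowed on the uplink shows which ones still need to be added before the second infrastructure can be used.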

As a result of this setup, all the new infrastructure resources can be used almost nondisruptively while using the same management components. The design and the scripts are intended to work even in such an extended environment. Of course, the disadvantage of this example is that a lot of networking traffic is routed over the central switch.

Overview of Solution Setup and Operation

This section is an overview of the tasks and workflows for setting up and operating SAP applications built on FlexPod.

Infrastructure Setup Tasks

1.

VMware vSphere built on FlexPod setup

2.

Additional SAP applications built on FlexPod configuration steps

3.

Infrastructure tenant setup:

a.

NetApp Operations, Provisioning, and Protection Manager configuration

b.

Set up infrastructure volumes

c.

Backup configuration of infrastructure volumes

d.

SAP LVM installation

e.

SnapManager for SAP repository database installation

f.

SnapManager for SAP installation on the DataFabric Manager host

g.

Tenant-specific services configuration (DNS)

4.

Installation and configuration of the OS:

a.

OS template for VMware (SLES and/or RHEL)

b.

OS template and/or autoinstall framework for bare metal (SLES and/or RHEL)

5.

Provision one or more managed tenants:

a.

Network configuration

b.

Storage configuration

c.

Tenant-specific services configuration (DHCP, DNS, NIS)

Operational Tasks

1.

Provision additional managed tenants:

a.

Network configuration

b.

Storage configuration

c.

Tenant-specific services configuration (DHCP, DNS, NIS)

2.

OS provisioning into target tenants

3.

SAP system provisioning:

a.

Preparation

b.

System installation

4.

Configure backup services for SAP systems:

a.

Protection Manager protection policy

b.

Create SnapManager for SAP profile

c.

Configure data protection

d.

Configure Br*tools and Backint

5.

Configure SAP LVM for SAP systems

6.

Relocate SAP systems within a tenant

7.

Cloning-based SAP system copy within a tenant:

a.

New system setup

b.

System refresh

8.

Isolated clone of production SAP system

FlexPod Built on VMware Setup

The first step in setting up SAP Applications built on FlexPod is to set up FlexPod for VMware according to TR-3939: VMware vSphere Built on FlexPod Implementation Guide. The following sections describe only the differences and additional tasks required.

Changes Compared to FlexPod Built on VMware

Before setting up the system according to TR-3939, refer to the "SAP Applications built on FlexPod Technical Specifications" guide for the software version used.

NetApp Operations Manager (Version 5.1) is installed on a Linux VM instead of on a Windows® VM. The configuration steps are the same (refer to section 3.12 of TR-3939).

The NetApp Virtual Storage Console is installed on the Windows vCenter VM instead of on a new Windows VM (section 3.11 of TR-3939). You must add a second network card to the vCenter VM connected to the Network File System (NFS) network.

Because the Rapid Cloning Utility is used to deploy new VMs, do not execute step 3 ("Type sis on /vol/infrastructure") in TR-3939, section 3.2, subsection "Creating the necessary infrastructure volumes."

DNS must also be set up for the infrastructure vFiler® units. To do so, enter yes in step 6 of TR-3939, section 3.2, subsection "Creating the infrastructure vFiler units," on both controllers. Use the IP address of the infrastructure tenant DNS server and its DNS domain name for the back-end network.

Every application other than the management components runs in additional managed tenants, not in the infrastructure tenant.

Additional Storage Configuration

For additional configuration, log in to controller A and set the following option:

options vfiler.vol_clone_zapi_allow on

Also, set this option on controller B.

Create a new VLAN for NDMP traffic, and configure SnapVault® and SnapMirror® access on each controller.

Log in to controller A and execute the following commands:

vlan add vif0 "var_ndmp_vlan_id"

wrfile -a /etc/rc "vlan add vif0 "var_ndmp_vlan_id""

ifconfig vif0-"var_ndmp_vlan_id" mtusize 9000

wrfile -a /etc/rc "ifconfig vif0-"var_ndmp_vlan_id" mtusize 9000"

ifconfig vif0-"var_ndmp_vlan_id" partner vif0-"var_ndmp_vlan_id"

wrfile -a /etc/rc "ifconfig vif0-"var_ndmp_vlan_id" partner vif0-"var_ndmp_vlan_id""

ifconfig vif0-"var_ndmp_vlan_id" "var_ndmp_ip_contr_a" netmask "var_ndmp_netmask"

wrfile -a /etc/rc "ifconfig vif0-"var_ndmp_vlan_id" "var_ndmp_ip_contr_a" netmask "var_ndmp_netmask""

options ndmpd.preferred_interface vif0-"var_ndmp_vlan_id"

wrfile -a /etc/snapmirror.allow ""var_ntap_B_hostname""

wrfile -a /etc/snapmirror.allow ""var_ndmp_ip_contr_b""

options snapvault.access host="var_ntap_B_hostname","var_ndmp_ip_contr_b"

options snapvault.enable on

options snapmirror.enable on

ndmpd on

vfiler limit 65

Log in to controller B and execute the following commands:

vlan add vif0 "var_ndmp_vlan_id"

wrfile -a /etc/rc "vlan add vif0 "var_ndmp_vlan_id""

ifconfig vif0-"var_ndmp_vlan_id" mtusize 9000

wrfile -a /etc/rc "ifconfig vif0-"var_ndmp_vlan_id" mtusize 9000"

ifconfig vif0-"var_ndmp_vlan_id" partner vif0-"var_ndmp_vlan_id"

wrfile -a /etc/rc "ifconfig vif0-"var_ndmp_vlan_id" partner vif0-"var_ndmp_vlan_id""

ifconfig vif0-"var_ndmp_vlan_id" "var_ndmp_ip_contr_b" netmask "var_ndmp_netmask"

wrfile -a /etc/rc "ifconfig vif0-"var_ndmp_vlan_id" "var_ndmp_ip_contr_b" netmask "var_ndmp_netmask""

options ndmpd.preferred_interface vif0-"var_ndmp_vlan_id"

wrfile -a /etc/snapmirror.allow ""var_ntap_A_hostname""

wrfile -a /etc/snapmirror.allow ""var_ndmp_ip_contr_a""

options snapvault.access host="var_ntap_A_hostname","var_ndmp_ip_contr_a"

options snapvault.enable on

options snapmirror.enable on

ndmpd on

vfiler limit 65
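The two command lists above are identical except for the local NDMP address and the partner's host name and address. As a minimal sketch of that symmetry, the following Python helper generates the sequence for one controller. The function name and the sample VLAN ID, addresses, and host names are assumptions chosen for illustration; they are not part of the solution scripts.

```python
def ndmp_commands(vlan_id, local_ip, netmask, partner_hostname, partner_ip, vif="vif0"):
    """Return the console commands for one controller, in the order listed above."""
    iface = f"{vif}-{vlan_id}"
    # These commands are run once and also appended to /etc/rc so that
    # they are reapplied after a reboot.
    persistent = [
        f"vlan add {vif} {vlan_id}",
        f"ifconfig {iface} mtusize 9000",
        f"ifconfig {iface} partner {iface}",
        f"ifconfig {iface} {local_ip} netmask {netmask}",
    ]
    commands = []
    for cmd in persistent:
        commands.append(cmd)
        commands.append(f'wrfile -a /etc/rc "{cmd}"')
    # Remaining commands: NDMP interface, SnapMirror/SnapVault access for the partner.
    commands += [
        f"options ndmpd.preferred_interface {iface}",
        f'wrfile -a /etc/snapmirror.allow "{partner_hostname}"',
        f'wrfile -a /etc/snapmirror.allow "{partner_ip}"',
        f"options snapvault.access host={partner_hostname},{partner_ip}",
        "options snapvault.enable on",
        "options snapmirror.enable on",
        "ndmpd on",
        "vfiler limit 65",
    ]
    return commands


# Hypothetical sample values; replace them with the site-specific variables.
cmds_a = ndmp_commands(3001, "192.168.97.11", "255.255.255.0", "ntap-b", "192.168.97.12")
cmds_b = ndmp_commands(3001, "192.168.97.12", "255.255.255.0", "ntap-a", "192.168.97.11")

for line in cmds_a:
    print(line)
```

Generating both lists from one template makes it harder to mix up the local and partner values when the commands are entered on each controller.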

Additional Network Configuration

Management Network for Physical Components

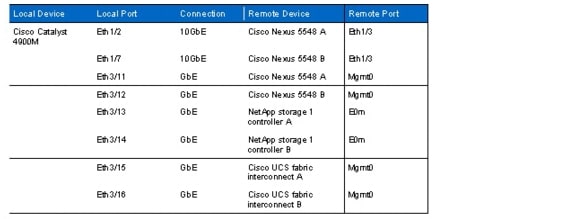

In the FlexPod built on VMware documentation, the management ports of the components are connected to port "any." For the SAP applications built on FlexPod data center solution, detailed documentation of the management ports and the routing between all components is required. Table 2 documents a sample configuration with an additional Cisco Catalyst® switch representing the customer data center network. The Cisco Catalyst switch is not part of the solution.
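One way to keep this documentation and the switch configuration in step is to hold the cabling as data and render the port configuration from it. The following Python sketch does this for the management access ports. The port-to-device mapping is read off the interface descriptions in the Catalyst commands that follow; the variable and function names are assumptions made for illustration.

```python
# Catalyst access port -> (connected device placeholder, device port).
MGMT_ACCESS_PORTS = {
    "GigabitEthernet3/11": ("var_nexus_A_hostname", "mgmt"),
    "GigabitEthernet3/12": ("var_nexus_B_hostname", "mgmt"),
    "GigabitEthernet3/13": ("var_ntap_A_hostname", "e0m"),
    "GigabitEthernet3/14": ("var_ntap_B_hostname", "e0m"),
    "GigabitEthernet3/15": ("var_ucsm_A_hostname", "mgmt"),
    "GigabitEthernet3/16": ("var_ucsm_B_hostname", "mgmt"),
}


def access_port_stanza(port, device, device_port, vlan):
    """Return the configuration lines for one management access port."""
    return [
        f"interface {port}",
        f"description {device}:{device_port}",
        f"switchport access vlan {vlan}",
        "switchport mode access",
        "no shutdown",
    ]


def render_access_ports(ports, vlan):
    """Render all access-port stanzas in the order the ports are listed."""
    lines = []
    for port, (device, device_port) in ports.items():
        lines.extend(access_port_stanza(port, device, device_port, vlan))
    return lines


config_lines = render_access_ports(MGMT_ACCESS_PORTS, "var_global_mgmt_vlan_id")
print("\n".join(config_lines))
```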

Table 2 Cisco Catalyst 4900M Ethernet cabling information

1.

To configure the network on the Cisco Catalyst 4900M, log in to the switch and execute the following commands:

Enable

Conf terminal

interface TenGigabitEthernet1/2

description "var_nexus_A_hostname":Eth1/3

switchport trunk native vlan "var_global_mgmt_vlan_id"

switchport trunk allowed vlan "var_global_mgmt_vlan_id","var_physmgmt_vlan_id"

switchport mode trunk

no shutdown

interface TenGigabitEthernet1/7

description "var_nexus_B_hostname":Eth1/3

switchport trunk native vlan "var_global_mgmt_vlan_id"

switchport trunk allowed vlan "var_global_mgmt_vlan_id","var_physmgmt_vlan_id"

switchport mode trunk

no shutdown

interface GigabitEthernet3/11

description "var_nexus_A_hostname":mgmt

switchport access vlan "var_global_mgmt_vlan_id"

switchport mode access

no shutdown

interface GigabitEthernet3/12

description "var_nexus_B_hostname":mgmt

switchport access vlan "var_global_mgmt_vlan_id"

switchport mode access

no shutdown

interface GigabitEthernet3/13

description "var_ntap_A_hostname":e0m

switchport access vlan "var_global_mgmt_vlan_id"

switchport mode access

no shutdown

interface GigabitEthernet3/14

description "var_ntap_B_hostname":e0m

switchport access vlan "var_global_mgmt_vlan_id"

switchport mode access

no shutdown

interface GigabitEthernet3/15

description "var_ucsm_A_hostname":mgmt

switchport access vlan "var_global_mgmt_vlan_id"

switchport mode access

no shutdown

interface GigabitEthernet3/16

description "var_ucsm_B_hostname":mgmt

switchport access vlan "var_global_mgmt_vlan_id"

switchport mode access

no shutdown

exit

exit

copy run start

exit

2.

Configure the network on the Cisco Nexus 5548 switches to enable the communication to the Cisco Catalyst 4900M. Log in to "var_nexus_A_hostname" and execute the following commands:

Conf terminal

interface port-channel100

description cat4900

switchport mode trunk

switchport trunk native vlan "var_global_mgmt_vlan_id"

switchport trunk allowed vlan "var_global_mgmt_vlan_id","var_physmgmt_vlan_id"

spanning-tree port type network

vpc 100

exit

interface Ethernet1/3

description cat4900:Eth1/2

channel-group 100 mode active

no shutdown

exit

copy run start

exit

3.

Log in to "var_nexus_B_hostname" and execute the following commands:

Conf terminal

interface port-channel100

description cat4900

switchport mode trunk

switchport trunk native vlan "var_global_mgmt_vlan_id"

switchport trunk allowed vlan "var_global_mgmt_vlan_id","var_physmgmt_vlan_id"

spanning-tree port type network

vpc 100

exit

interface Ethernet1/3

description cat4900:Eth1/7

channel-group 100 mode active

no shutdown

exit

copy run start

exit

NDMP-Traffic Network

The NDMP-traffic network is used for data transfer from NetApp storage to NetApp storage.

The NDMP-traffic VLAN is required only between the storage controllers within a FlexPod solution; therefore the VLAN ID is configured only on the Cisco Nexus 5548 and storage devices.

1.

Log in to "var_nexus_A_hostname" and "var_nexus_B_hostname" and execute the following commands:

Conf terminal

vlan "var_ndmp_vlan_id"

name NDMP-VLAN

exit

interface Vlan "var_ndmp_vlan_id"

no shutdown

ip address "var_ndmp_network" "var_ndmp_netmask"

exit

interface port-channel11

switchport trunk allowed vlan add "var_ndmp_vlan_id"

exit

interface port-channel12

switchport trunk allowed vlan add "var_ndmp_vlan_id"

exit

exit

copy run start

exit

The NDMP-traffic network is not routed in our configuration; therefore we do not configure inter-VLAN routing on the Cisco Catalyst 4900 and do not allow the VLAN ID on port channel 100.
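The rule that the unrouted NDMP VLAN must stay off the uplink to the Catalyst switch can be checked mechanically. The following Python sketch records only the trunk VLANs named in this section and reports any uplink that carries the NDMP VLAN. The VLAN IDs and all names in the code are hypothetical, and real trunks carry additional VLANs that are not modeled here.

```python
# Hypothetical VLAN IDs, chosen only for illustration.
GLOBAL_MGMT_VLAN = 10
PHYSMGMT_VLAN = 11
NDMP_VLAN = 12

# Trunk -> VLANs allowed on it, limited to the VLANs mentioned in this section.
TRUNK_VLANS = {
    "port-channel11": {NDMP_VLAN},
    "port-channel12": {NDMP_VLAN},
    "port-channel100": {GLOBAL_MGMT_VLAN, PHYSMGMT_VLAN},  # uplink to the Catalyst
}

# Trunks that lead to the routing switch.
UPLINKS = ["port-channel100"]


def unrouted_vlan_leaks(trunks, vlan_id, uplinks):
    """Return the uplink trunks that carry a VLAN that must not be routed."""
    return sorted(name for name in uplinks if vlan_id in trunks.get(name, set()))


leaks = unrouted_vlan_leaks(TRUNK_VLANS, NDMP_VLAN, UPLINKS)
print("NDMP VLAN found on uplinks:", leaks)
```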

Central Software Share Network

The central software repository is used to store configuration files, installation images, and additional software components. A dedicated network segment is used to access the central software share.

1.

Log in to "var_nexus_A_hostname" and "var_nexus_B_hostname" and execute the following commands:

Conf terminal

vlan "var_software_vlan_id"

name CentralSW

exit

interface Vlan "var_software_vlan_id"

no shutdown

exit

interface port-channel11

switchport trunk allowed vlan add "var_software_vlan_id"

exit

interface port-channel12

switchport trunk allowed vlan add "var_software_vlan_id"

exit

exit

copy run start

exit

2.

Configure the inter-VLAN routing function on the Cisco Catalyst 4900 switch. Log in to the Cisco Catalyst 4900 and execute the following commands:

Enable

Conf terminal

vlan "var_software_vlan_id"

name CentralSW

exit

interface Vlan "var_software_vlan_id"

ip address "var_software_gw_addr" "var_software_netmask"

no shutdown

exit

interface TenGigabitEthernet1/2

switchport trunk allowed vlan add "var_software_vlan_id"

exit

interface TenGigabitEthernet1/7

switchport trunk allowed vlan add "var_software_vlan_id"

exit

exit

copy run start

exit

Inter-VLAN Routing

The FlexPod environment requires predefined access rules in order to implement a strict separation of the different tenants, combined with specific access rights to the generally available services.

In the first step, standard access lists are implemented that match only on IP network prefixes; they separate the networks and define the basic access rights at a coarse level. In the next step, access lists are defined with a focus on the application layer, and therefore extended access lists must be used.

A VLAN is defined for each tenant IP network, which separates the different networks on layer 2. Each VLAN is configured on the central switch with a switch virtual interface (SVI). That interface represents a logical layer 3 interface on a switch and is bound to a specific VLAN. The SVI offers the capability of basic layer 3 routing functionality on a layer 2 switch without the requirement of specific routing protocols in order to implement inter-VLAN routing.

The following items define the access rights:

•

No global inter-VLAN routing is allowed.

•

Each tenant is allowed to ping its own SVI, the layer 3 interface dedicated to a specific VLAN and defined as a default gateway in each VLAN.

•

The central software tenant network ("var_software_network") must have access to each tenant and vice versa.

•

The global management tenant ("var_global_mgmt_network") must have access to each tenant and vice versa.
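The access rights listed above can be written down as a small decision function, which is useful for checking a planned ACL set against the intended policy. The following Python sketch is one possible model, not the switch configuration itself. The software share network follows the example numbering used later in this document; the global management network and the second tenant network are hypothetical values.

```python
import ipaddress

SOFTWARE_NET = ipaddress.ip_network("192.168.96.0/24")
GLOBAL_MGMT_NET = ipaddress.ip_network("192.168.98.0/24")  # hypothetical value
SHARED_NETS = (SOFTWARE_NET, GLOBAL_MGMT_NET)


def routing_allowed(src_net, dst_net):
    """Return True if traffic between the two networks is permitted."""
    src = ipaddress.ip_network(src_net)
    dst = ipaddress.ip_network(dst_net)
    if src == dst:
        # Traffic inside one VLAN, including a ping to the tenant's own SVI.
        return True
    # No global inter-VLAN routing: only the central software network and the
    # global management network may talk to other networks, in both directions.
    return src in SHARED_NETS or dst in SHARED_NETS


TENANT_002 = "192.168.2.0/24"
TENANT_003 = "192.168.3.0/24"  # hypothetical second tenant

print(routing_allowed(TENANT_002, TENANT_003))           # tenant to tenant
print(routing_allowed(TENANT_002, "192.168.96.0/24"))    # tenant to software share
print(routing_allowed("192.168.98.0/24", TENANT_002))    # global management to tenant
```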

In order to define the VLANs, connect the switches, and define the SVIs, the following generic command sequence must be followed.

On the central switch only, add the missing VLANs (the Nexus switches already have all required VLANs defined):

vlan VLANID

name VLANNAME

On all of the required switches, add the VLANs to the appropriate ports and port channels that interconnect the central switch with the Nexus 5548 switches by issuing the following commands for each channel:

interface PORTCHANNEL/PORT_NAME

switchport trunk allowed vlan add VLANID

On the central switch only, define the SVIs for all routed VLANs:

interface Vlan VLANID

ip address VLAN_GATEWAY_IP VLAN_NETMASK

no shutdown

exit

Access Lists Basics

Named access lists are defined on a router layer and can be applied to all networking interfaces where an IP address has been defined. In this case, this is on the VLAN interfaces defined on the layer 3 device (the Catalyst switch).

Access lists can be defined as standard or extended lists, depending on the filtering requirements. Standard lists match only on the source address of the traffic that should be permitted or denied; extended lists allow much more granular filtering, down to the protocol and port level.

Command syntax for a standard list:

access-list access-list-name

permit|deny {host|source source-wildcard|any}

Command syntax for an extended list:

ip access-list extended name

permit|deny [protocol] {source source-wildcard|any} {destination destination-wildcard|any} [precedence precedence] [tos tos] [established] [log|log-input] [operator destination-port|destination port]

For a detailed discussion of ACLs, consult the Cisco documentation.

Example ACLs for SAP Applications Built on FlexPod

The examples in this document focus on standard FlexPod communication needs between defined networks. Examples use the following numbering rules:

•

Tenant 002 uses VLAN 2002, network 192.168.2.0/24

•

Infrastructure tenant uses VLAN 99, network 192.168.99.0/24

•

Software share is available on VLAN 96, network 192.168.96.0/24

All examples are based on these numbers and must be adapted according to the user networks and additional customer requirements.
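Access list entries take a network address and a wildcard mask rather than a prefix length, so the example networks have to be converted when they are adapted. The following Python sketch derives the pair with the standard `ipaddress` module; the function name is an assumption made for illustration.

```python
import ipaddress


def acl_source(network):
    """Return (network address, wildcard mask) for use in an access list entry."""
    net = ipaddress.ip_network(network)
    # The wildcard mask is the inverse of the netmask (the host mask).
    return str(net.network_address), str(net.hostmask)


EXAMPLE_NETWORKS = {
    "Tenant 002": "192.168.2.0/24",
    "Infrastructure tenant": "192.168.99.0/24",
    "Software share": "192.168.96.0/24",
}

for name, network in EXAMPLE_NETWORKS.items():
    address, wildcard = acl_source(network)
    print(f"{name}: {address} {wildcard}")
# Tenant 002: 192.168.2.0 0.0.0.255
# Infrastructure tenant: 192.168.99.0 0.0.0.255
# Software share: 192.168.96.0 0.0.0.255
```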

Every tenant must have access to services in the infrastructure tenant and to the software share. For this, a standard access list can be used on the tenant access VLAN interface to allow only these networks to have access to the tenant. The command is: