Table Of Contents

About Cisco Validated Design (CVD) Program

VMware vSphere Built On FlexPod With IP-Based Storage

Benefits of Cisco Unified Computing System

Benefits of Cisco Nexus 5548UP

Benefits of the NetApp FAS Family of Storage Controllers

Benefits of OnCommand Unified Manager Software

Benefits of VMware vSphere with the NetApp Virtual Storage Console

NetApp FAS2240-2 Deployment Procedure: Part 1

Assign Controller Disk Ownership

Install Data ONTAP to Onboard Flash Storage

Harden Storage System Logins and Security

Enable Active-Active Controller Configuration Between Two Storage Systems

Set Up Storage System NTP Time Synchronization and CDP Enablement

Create an SNMP Requests Role and Assign SNMP Login Privileges

Create an SNMP Management Group and Assign an SNMP Request Role

Create an SNMP User and Assign It to an SNMP Management Group

Set Up SNMP v1 Communities on Storage Controllers

Set Up SNMP Contact Information for Each Storage Controller

Set SNMP Location Information for Each Storage Controller

Reinitialize SNMP on Storage Controllers

Initialize NDMP on the Storage Controllers

Set 10GbE Flow Control and Add VLAN Interfaces

Export NFS Infrastructure Volumes to ESXi Servers

Cisco Nexus 5548 Deployment Procedure

Set up Initial Cisco Nexus 5548 Switch

Enable Appropriate Cisco Nexus Features

Add Individual Port Descriptions for Troubleshooting

Add PortChannel Configurations

Configure Virtual PortChannels

Uplink Into Existing Network Infrastructure

Cisco Unified Computing System Deployment Procedure

Perform Initial Setup of Cisco UCS C-Series Servers

Perform Initial Setup of the Cisco UCS 6248 Fabric Interconnects

Upgrade Cisco UCS Manager Software to Version 2.0(2m)

Add a Block of IP Addresses for KVM Access

Edit the Chassis Discovery Policy

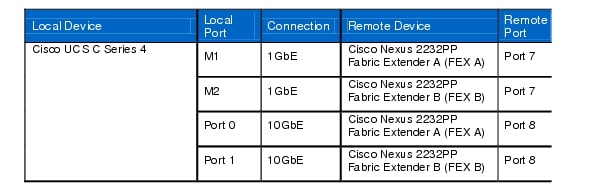

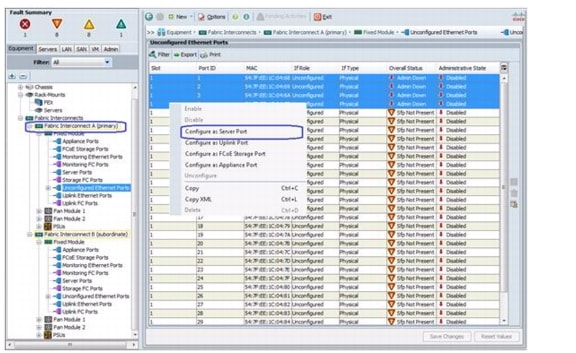

Enable Server and Uplink Ports

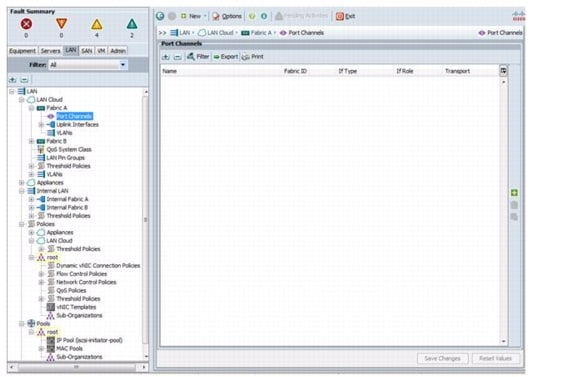

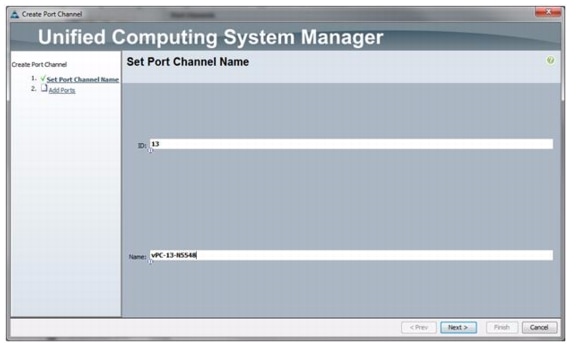

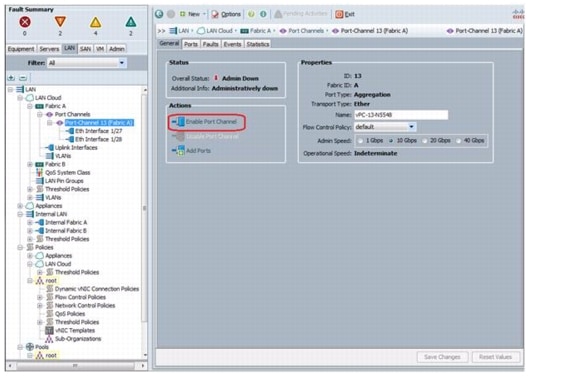

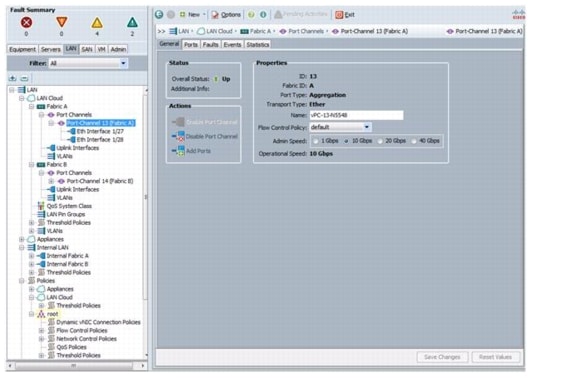

Create Uplink PortChannels to the Cisco Nexus 5548 Switches

Create IQN Pools for iSCSI Boot

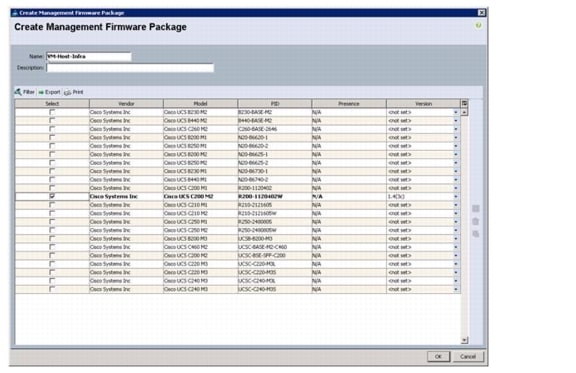

Create a Firmware Management Package

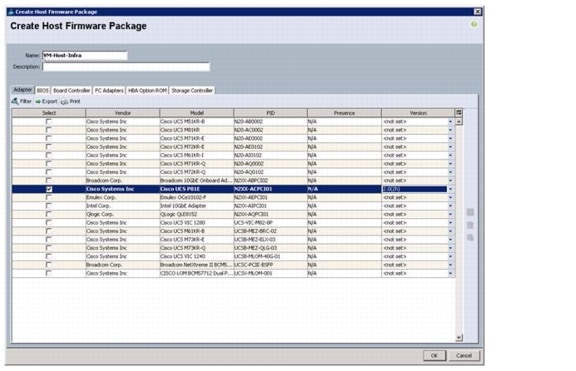

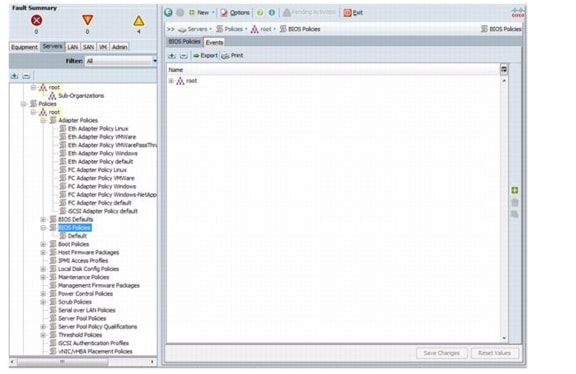

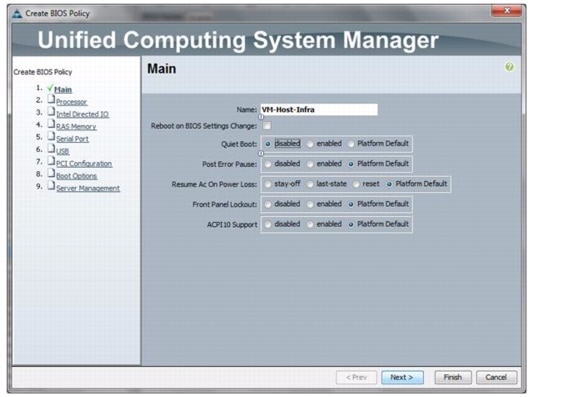

Create Host Firmware Package Policy

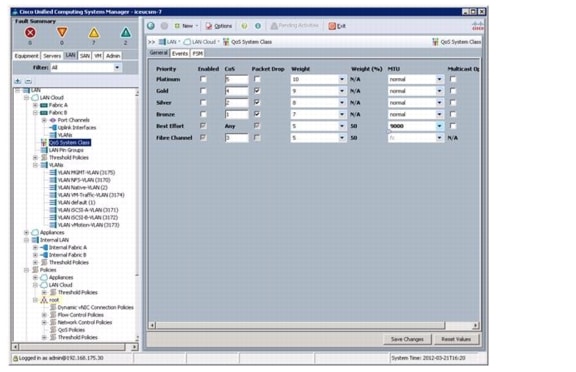

Set Jumbo Frames in Cisco UCS Fabric

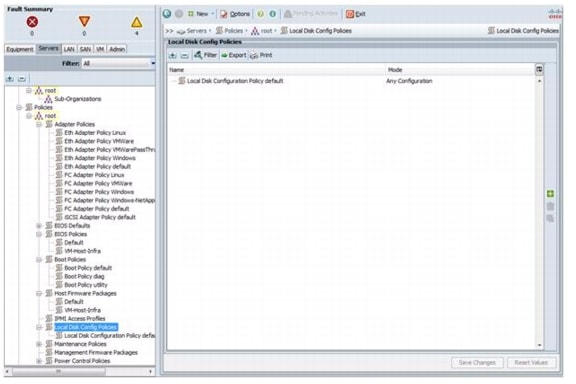

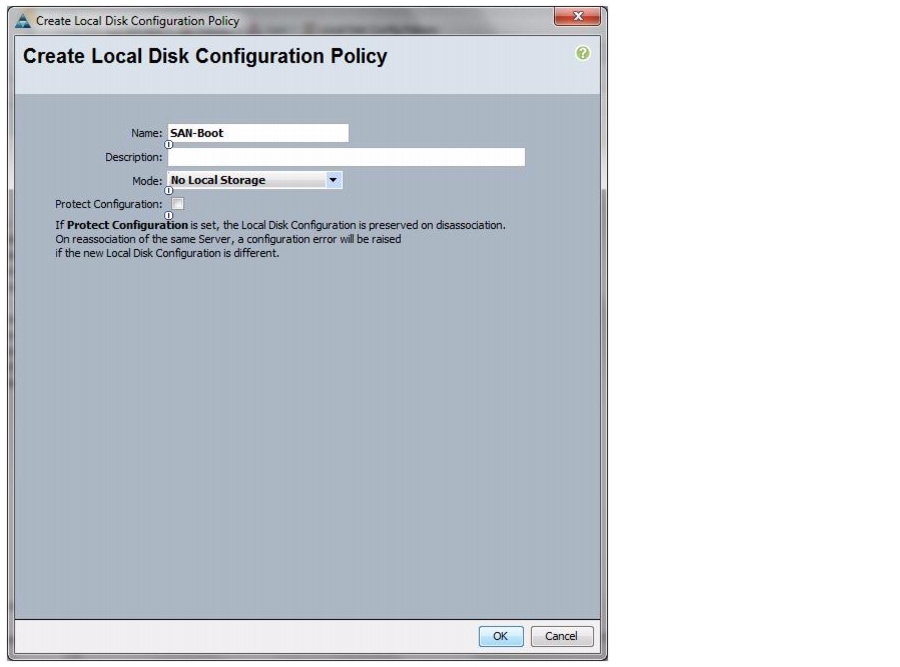

Create a Local Disk Configuration Policy

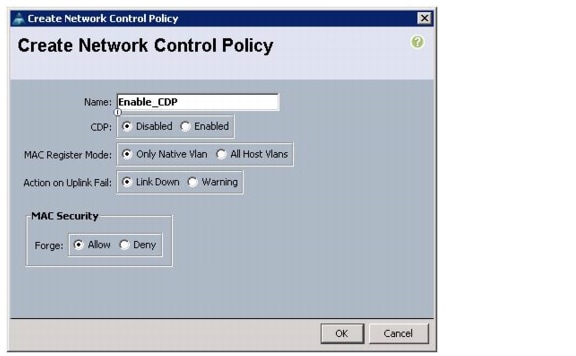

Create a Network Control Policy for Cisco Discovery Protocol (CDP)

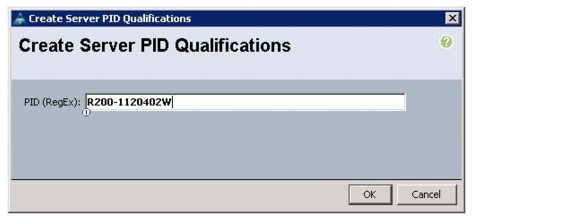

Create a Server Pool Qualification Policy

Create vNIC Placement Policy for Virtual Machine Infrastructure Hosts

Add More Servers to the FlexPod Unit

NetApp FAS2240-2 Deployment Procedure: Part 2

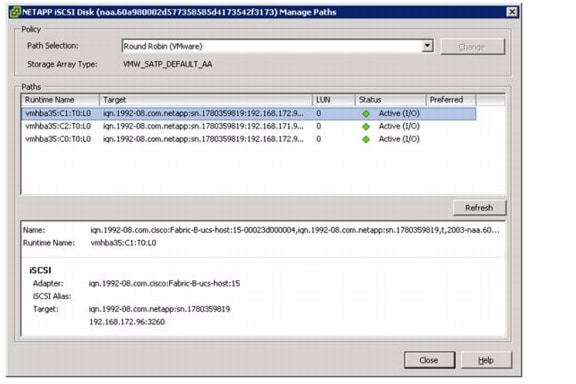

Add Infrastructure Host Boot LUNs

VMware ESXi 5.0 Deployment Procedure

Log Into the Cisco UCS 6200 Fabric Interconnects

Set Up the ESXi Hosts' Management Networking

Set Up Management Networking for Each ESXi Host

Download VMware vSphere Client and vSphere Remote Command Line

Log in to VMware ESXi Host Using the VMware vSphere Client

Change the iSCSI Boot Port MTU to Jumbo

Load Updated Cisco VIC enic Driver Version 2.1.2.22

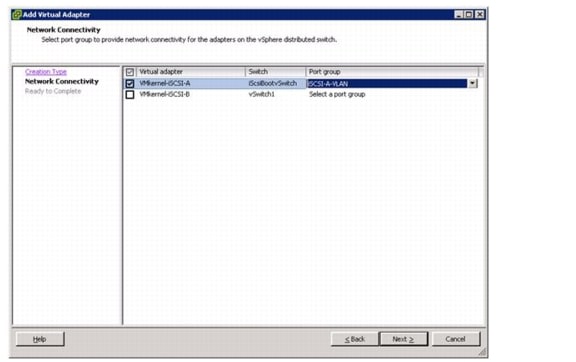

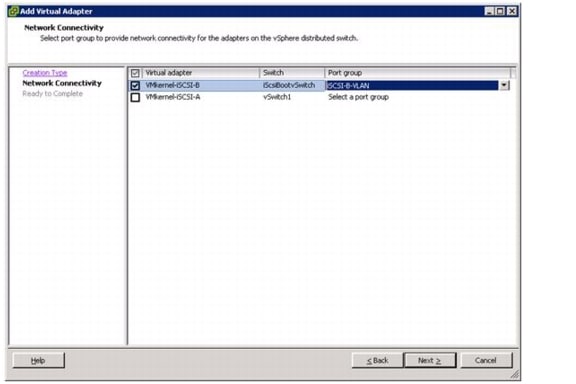

Set Up iSCSI Boot Ports on Virtual Switches

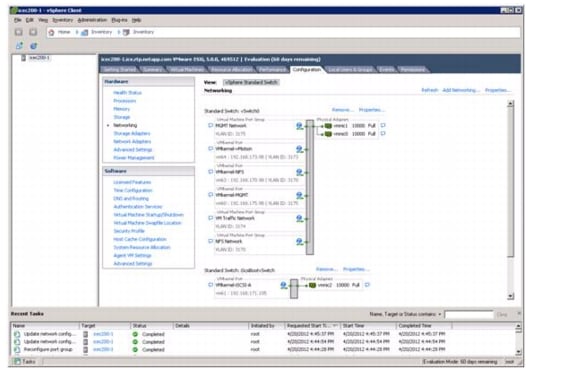

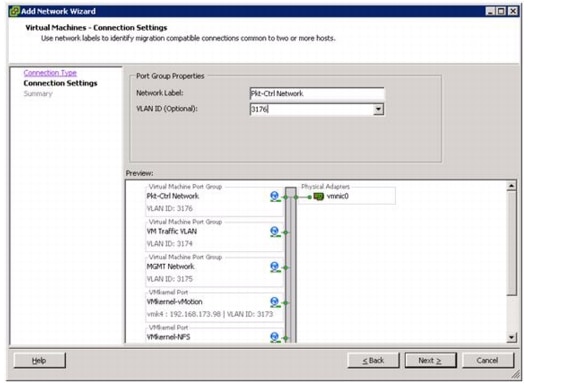

Set Up VMkernel Ports and Virtual Switch

Move the VM Swap File Location

VMware vCenter 5.0 Deployment Procedure

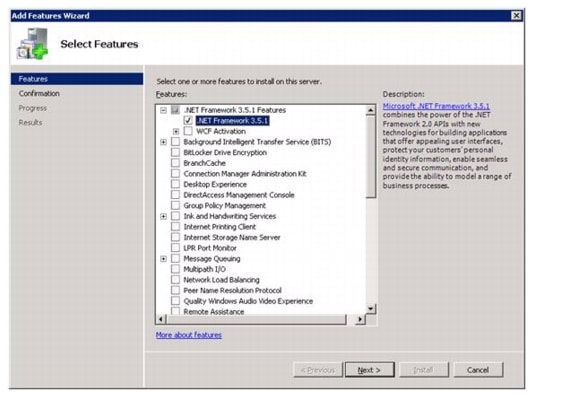

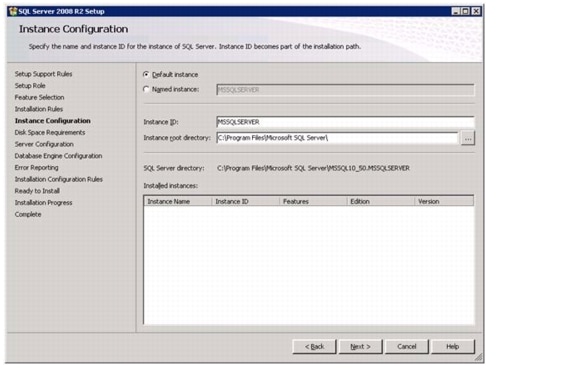

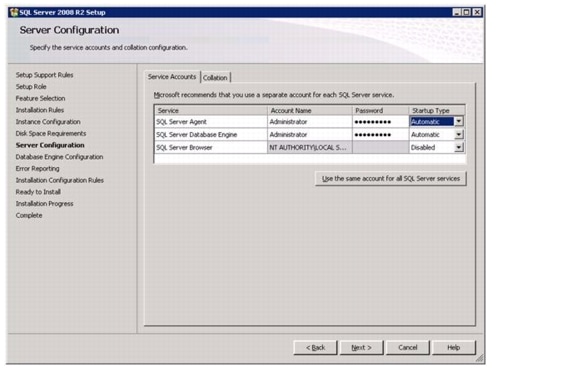

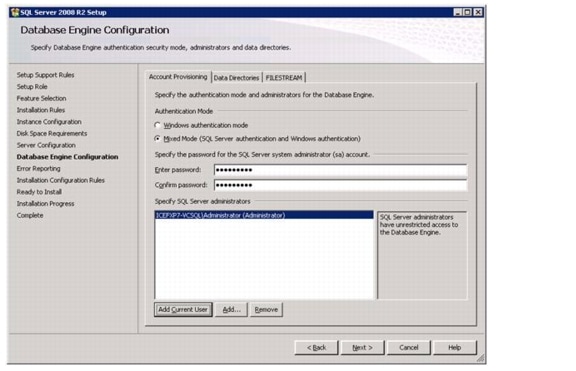

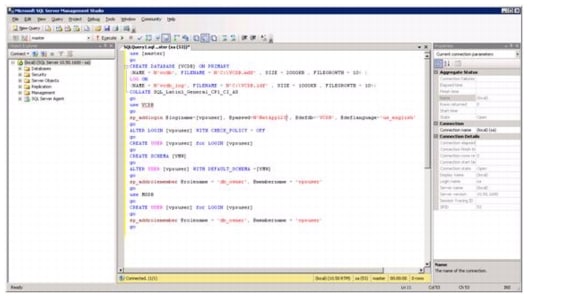

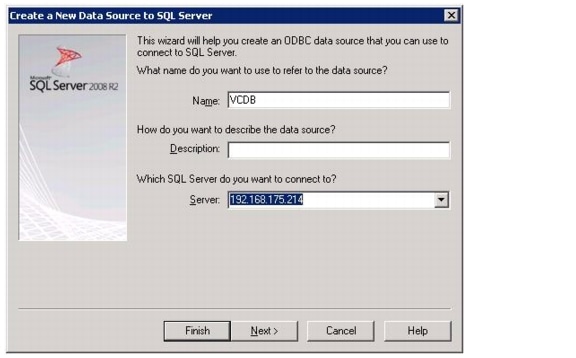

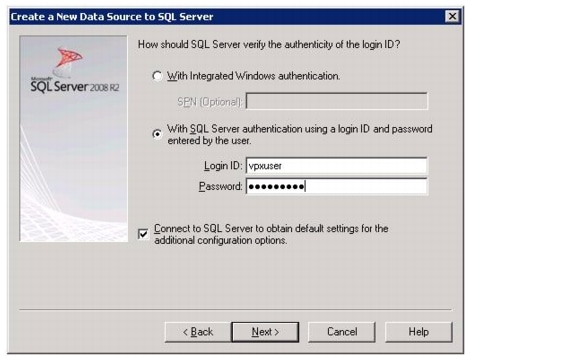

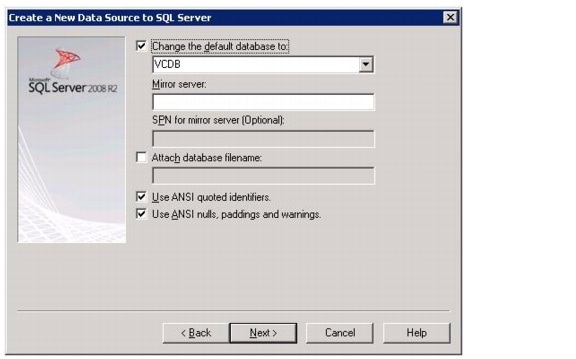

Build a Microsoft SQL Server Virtual Machine

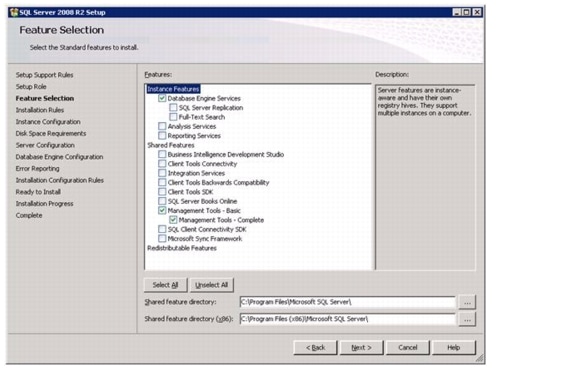

Install Microsoft SQL Server 2008 R2

Build a VMware vCenter Virtual Machine

NetApp Virtual Storage Console Deployment Procedure

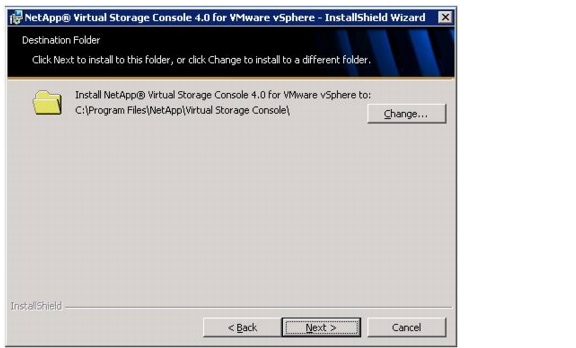

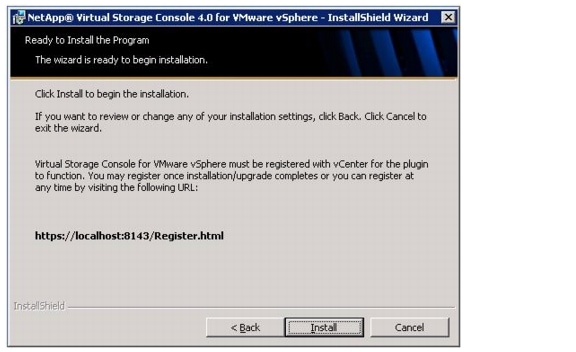

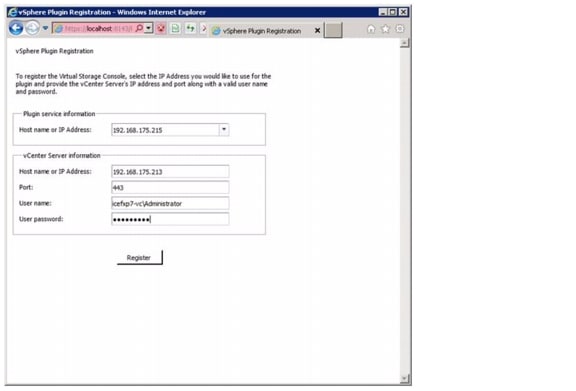

Installing NetApp Virtual Storage Console 4.0

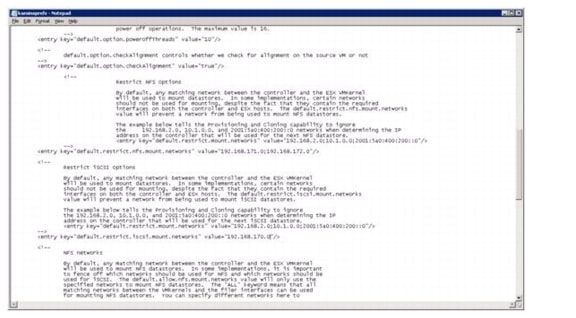

Optimal Storage Settings for ESXi Hosts

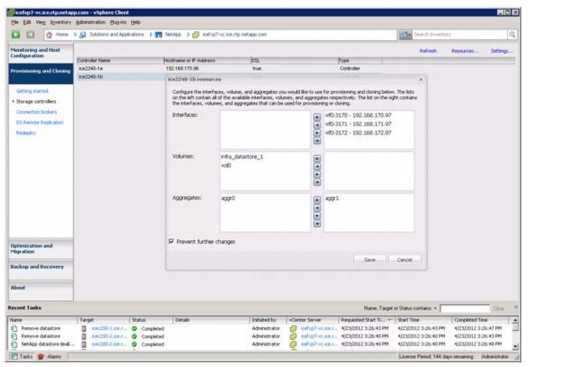

Provisioning and Cloning Setup

NetApp OnCommand Deployment Procedure

Manually Add Data Fabric Manager Storage Controllers

Run Diagnostics for Verifying Data Fabric Manager Communication

Configure Additional Operations Manager Alerts

Deploy the NetApp OnCommand Host Package

Set a Shared Lock Directory to Coordinate Mutually Exclusive Activities on Shared Resources

Install NetApp OnCommand Windows PowerShell Cmdlets

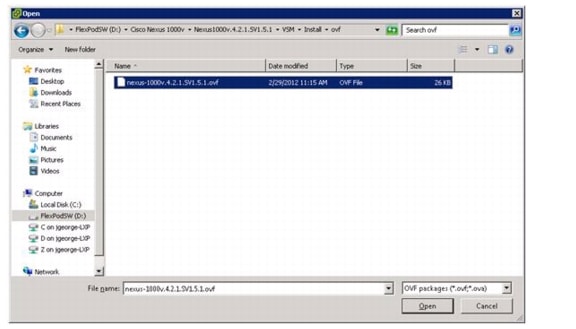

Cisco Nexus 1000v Deployment Procedure

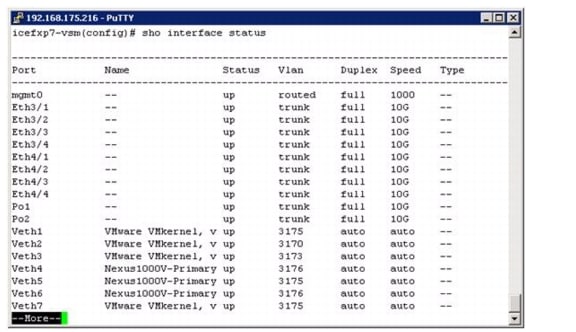

Log into Both Cisco Nexus 5548 Switches

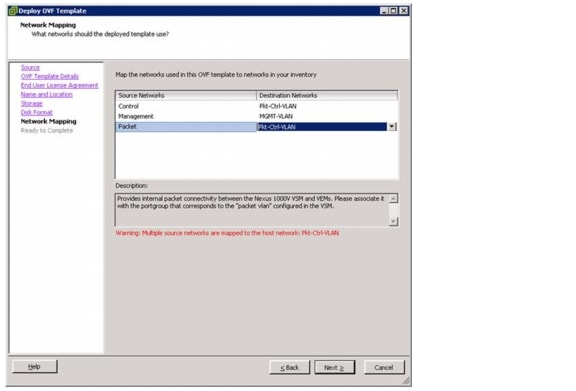

Add Packet-Control VLAN to Switch Trunk Ports

Add Packet-Control VLAN to Host Server vNICs

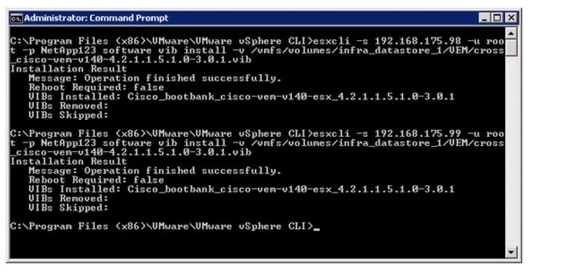

Install the Virtual Ethernet Module (VEM) on Each ESXi Host

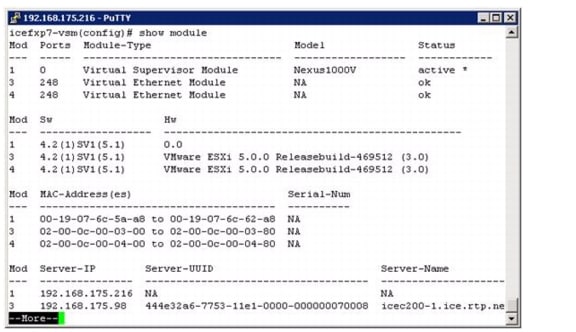

Base Configuration of the Primary VSM

Register the Nexus 1000v as a vCenter Plugin

Base Configuration of the Primary VSM

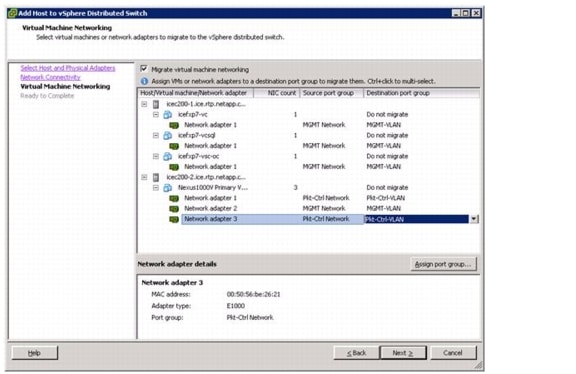

Migrate the ESXi Hosts' Networking to the Nexus 1000v

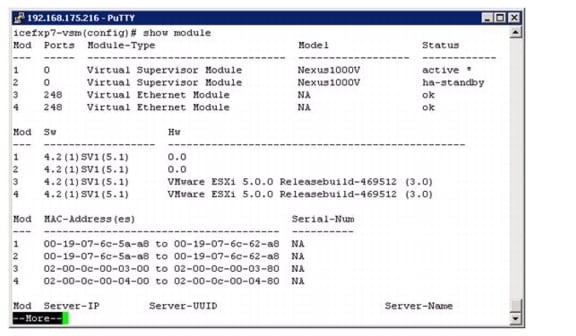

Base Configuration of the Secondary VSM

Nexus 5548 Reference Configurations

VMware vSphere Built On FlexPod With IP-Based Storage

Last Updated: November 29, 2012

Building Architectures to Solve Business Problems

About the Authors

John George, Reference Architect, Infrastructure and Cloud Engineering, NetApp

John George is a Reference Architect in the NetApp Infrastructure and Cloud Engineering team and is focused on developing, validating, and supporting cloud infrastructure solutions that include NetApp products. Before his current role, he supported and administered Nortel's worldwide training network and VPN infrastructure. John holds a Master's degree in computer engineering from Clemson University.

Ganesh Kamath, Technical Marketing Engineer, NetApp

Ganesh Kamath is a Technical Architect in the NetApp TSP solutions engineering team focused on architecting and validating solutions for TSPs based on NetApp products. Ganesh's diverse experiences at NetApp include working as a Technical Marketing Engineer as well as a member of the NetApp Rapid Response Engineering team qualifying specialized solutions for our most demanding customers.

John Kennedy, Technical Leader, Cisco

John Kennedy is a Technical Marketing Engineer in the Server Access and Virtualization Technology Group. Currently, John is focused on the validation of FlexPod architecture while contributing to future SAVTG products. John spent two years in the Systems Development Unit at Cisco, researching methods of implementing long distance vMotion for use in the Data Center Interconnect Cisco Validated Designs. Previously, John worked at VMware Inc. for eight and a half years as a Senior Systems Engineer supporting channel partners outside the US and serving on the HP Alliance team. He is a VMware Certified Professional on every version of VMware's ESX / ESXi, vCenter, and Virtual Infrastructure including vSphere 5. He has presented at various industry conferences in over 20 countries.

Chris Reno, Reference Architect, Infrastructure and Cloud Engineering, NetApp

Chris Reno is a Reference Architect in the NetApp Infrastructure and Cloud Engineering team and is focused on creating, validating, supporting, and evangelizing solutions based on NetApp products. Chris holds a Bachelor of Science degree in International Business and Finance and a Bachelor of Arts degree in Spanish from the University of North Carolina - Wilmington, as well as numerous industry certifications.

Lindsey Street, Systems Architect, Infrastructure and Cloud Engineering, NetApp

Lindsey Street is a systems architect in the NetApp Infrastructure and Cloud Engineering team. She focuses on the architecture, implementation, compatibility, and security of innovative vendor technologies to develop competitive and high-performance end-to-end cloud solutions for customers. Lindsey started her career in 2006 at Nortel as an interoperability test engineer, testing customer equipment interoperability for certification. Lindsey holds a Bachelor of Science degree in Computer Networking and a Master of Science degree in Information Security from East Carolina University.

NetApp, the NetApp logo, Go further, faster, AutoSupport, DataFabric, Data ONTAP, FlexClone, FlexPod, and OnCommand are trademarks or registered trademarks of NetApp, Inc. in the United States and/or other countries.

About Cisco Validated Design (CVD) Program

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB's public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California.

Cisco and the Cisco Logo are trademarks of Cisco Systems, Inc. and/or its affiliates in the U.S. and other countries. A listing of Cisco's trademarks can be found at http://www.cisco.com/go/trademarks. Third party trademarks mentioned are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (1005R)

Any Internet Protocol (IP) addresses and phone numbers used in this document are not intended to be actual addresses and phone numbers. Any examples, command display output, network topology diagrams, and other figures included in the document are shown for illustrative purposes only. Any use of actual IP addresses or phone numbers in illustrative content is unintentional and coincidental.

© 2012 Cisco Systems, Inc. All rights reserved.

VMware vSphere Built On FlexPod With IP-Based Storage

Overview

Industry trends indicate a vast data center transformation toward shared infrastructures. By leveraging virtualization, enterprise customers have embarked on the journey to the cloud by moving away from application silos and toward shared infrastructure, thereby increasing agility and reducing costs. NetApp and Cisco have partnered to deliver FlexPod, which serves as the foundation for a variety of workloads and enables efficient architectural designs that are based on customer requirements.

Audience

This Cisco Validated Design describes the architecture and deployment procedures of an infrastructure composed of Cisco, NetApp, and VMware virtualization that leverages IP-based storage protocols. The intended audience of this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineers, and customers who want to deploy the core FlexPod architecture.

Architecture

The FlexPod architecture is highly modular or "podlike." Although each customer's FlexPod unit varies in its exact configuration, once a FlexPod unit is built, it can easily be scaled as requirements and demand change. The unit can be scaled both up (adding resources to a FlexPod unit) and out (adding more FlexPod units).

Specifically, FlexPod is a defined set of hardware and software that serves as an integrated foundation for all virtualization solutions. VMware vSphere Built On FlexPod With IP-Based Storage includes NetApp storage, Cisco networking, the Cisco® Unified Computing System™ (Cisco UCS™), and VMware vSphere™ software in a single package. The computing and storage can fit in one data center rack, with the networking residing in a separate rack or deployed according to a customer's data center design. Port density enables the networking components to accommodate multiple configurations of this kind.

One benefit of the FlexPod architecture is the ability to customize or "flex" the environment to suit a customer's requirements. This is why the reference architecture detailed in this document highlights the resiliency, cost benefit, and ease of deployment of an IP-based storage solution. Ethernet storage systems are a steadily increasing source of network traffic, requiring design considerations that maximize the performance of servers to storage systems. This new concept is quite different from a network that caters to thousands of clients and servers connected across LAN and WAN networks. Correct Ethernet storage network designs can achieve the performance of Fibre Channel networks, provided that technologies such as jumbo frames, virtual interfaces (VIFs), virtual LANs (VLANs), IP multipathing (IPMP), Spanning Tree Protocol (STP), port channeling, and multilayer topologies are employed in the architecture of the system.
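As a rough illustration of why jumbo frames appear in the list above, the following sketch compares the share of on-wire bytes that carry payload at a standard 1500-byte MTU and at a 9000-byte jumbo MTU. The header sizes are the standard Ethernet, IPv4, and TCP values; the absence of VLAN tags and TCP/IP options, the 9000-byte jumbo MTU, and the function names are assumptions made only for this example.

```python
# Per-frame overhead for TCP over IPv4 over Ethernet.
# Assumptions for illustration: no VLAN tag, no IP or TCP options.
ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12   # preamble/SFD + header + FCS + inter-frame gap
IP_TCP_HEADERS = 20 + 20              # IPv4 header + TCP header


def payload_efficiency(mtu: int) -> float:
    """Fraction of on-wire bytes that carry application payload."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETH_WIRE_OVERHEAD
    return payload / on_wire


def frames_needed(total_bytes: int, mtu: int) -> int:
    """Number of frames needed to move total_bytes of payload."""
    payload = mtu - IP_TCP_HEADERS
    return -(-total_bytes // payload)  # ceiling division


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {payload_efficiency(mtu):.1%} payload, "
              f"{frames_needed(1_000_000, mtu)} frames per 1,000,000 bytes")
```

The efficiency gain per frame is modest; the larger effect is the reduction in frame count, which lowers the per-frame processing load on hosts and storage controllers.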

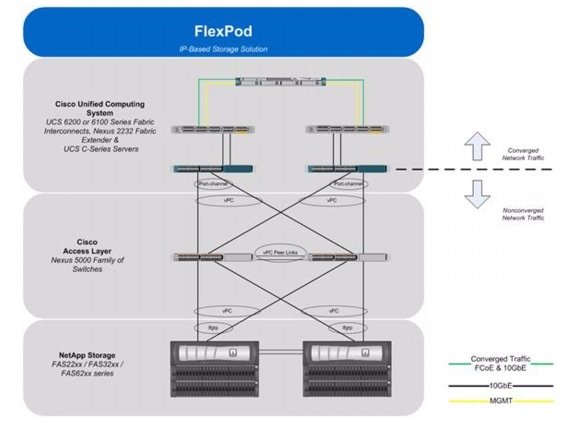

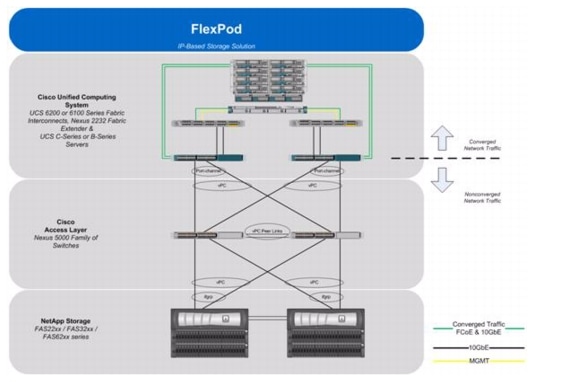

Figure 1 shows the VMware vSphere Built On FlexPod With IP-Based Storage components and the network connections for a configuration with IP-based storage. This design leverages the Cisco Nexus® 5548UP, Cisco Nexus 2232 FEX, Cisco UCS C-Series with the Cisco UCS virtual interface card (VIC), and the NetApp FAS family of storage controllers, which are all deployed to enable iSCSI-booted hosts with file- and block-level access to IP-based datastores. The reference architecture reinforces the "wire-once" strategy: as additional storage (FC, FCoE, or 10GbE) is added to the architecture, no recabling is required from the hosts to the UCS fabric interconnect. An alternate IP-based storage configuration with Cisco UCS B-Series is described in B-Series Deployment Procedure in the Appendix.

Figure 1 VMware vSphere Built On FlexPod With IP-Based Storage Components

The reference configuration includes:

• Two Cisco Nexus 5548UP switches

• Two Cisco Nexus 2232 fabric extenders

• Two Cisco UCS 6248UP fabric interconnects

• Support for 16 UCS C-Series servers without any additional networking components

• Support for hundreds of UCS C-Series servers by way of additional fabric extenders

• One NetApp FAS2240-2A (HA pair)

Storage is provided by a NetApp FAS2240-2A (HA configuration in a single chassis). All system and network links feature redundancy, providing end-to-end high availability (HA). For server virtualization, the deployment includes VMware vSphere. Although this is the base design, each of the components can be scaled flexibly to support specific business requirements. For example, more (or different) servers or even blade chassis can be deployed to increase compute capacity, additional disk shelves can be deployed to improve I/O capacity and throughput, and special hardware or software can be added to introduce new features.

The remainder of this document guides you through the low-level steps for deploying the base architecture, as shown in Figure 1. This includes everything from physical cabling, to compute and storage configuration, to configuring virtualization with VMware vSphere.

FlexPod Benefits

One of the founding design principles of the FlexPod architecture is flexibility. Previous FlexPod architectures have highlighted FCoE- or FC-based storage solutions in addition to showcasing a variety of application workloads. This particular FlexPod architecture is a predesigned configuration that is built on the Cisco Unified Computing System, the Cisco Nexus family of data center switches, NetApp FAS storage components, and VMware virtualization software. FlexPod is a base configuration, but it can scale up for greater performance and capacity, and it can scale out for environments that require consistent, multiple deployments. FlexPod has the flexibility to be sized and optimized to accommodate many different use cases. These use cases can be layered on an infrastructure that is architected based on performance, availability, and cost requirements.

FlexPod is a platform that can address current virtualization needs and simplify the evolution to an IT-as-a-service (ITaaS) infrastructure. The VMware vSphere Built On FlexPod With IP-Based Storage solution can help improve agility and responsiveness, reduce total cost of ownership (TCO), and increase business alignment and focus.

This document focuses on deploying an infrastructure that is capable of supporting VMware vSphere, VMware vCenter™ with NetApp plug-ins, and NetApp OnCommand™ as the foundation for virtualized infrastructure. Additionally, this document details a use case for those who want to design a potentially lower cost solution by leveraging IP storage protocols such as iSCSI, CIFS, and NFS, thereby avoiding the costs and complexities typically incurred with traditional FC SAN architectures. For a detailed study of several practical solutions deployed on FlexPod, refer to the NetApp Technical Report 3884, FlexPod Solutions Guide.

Benefits of Cisco Unified Computing System

Cisco Unified Computing System™ is the first converged data center platform that combines industry-standard, x86-architecture servers with networking and storage access into a single converged system. The system is entirely programmable using unified, model-based management to simplify and speed deployment of enterprise-class applications and services running in bare-metal, virtualized, and cloud computing environments.

The system's x86-architecture rack-mount and blade servers are powered by Intel® Xeon® processors. These industry-standard servers deliver world-record performance to power mission-critical workloads. Cisco servers, combined with a simplified, converged architecture, drive better IT productivity and superior price/performance for lower total cost of ownership (TCO). Building on Cisco's strength in enterprise networking, Cisco's Unified Computing System is integrated with a standards-based, high-bandwidth, low-latency, virtualization-aware unified fabric. The system is wired once to support the desired bandwidth and carries all Internet protocol, storage, inter-process communication, and virtual machine traffic with security isolation, visibility, and control equivalent to physical networks. The system meets the bandwidth demands of today's multicore processors, eliminates costly redundancy, and increases workload agility, reliability, and performance.

Cisco Unified Computing System is designed from the ground up to be programmable and self-integrating. A server's entire hardware stack, ranging from server firmware and settings to network profiles, is configured through model-based management. With Cisco virtual interface cards, even the number and type of I/O interfaces are programmed dynamically, making every server ready to power any workload at any time. With model-based management, administrators manipulate a model of a desired system configuration and associate the model's service profile with hardware resources, and the system configures itself to match the model. This automation speeds provisioning and workload migration with accurate and rapid scalability. The result is increased IT staff productivity, improved compliance, and reduced risk of failures due to inconsistent configurations.
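The idea of model-based management can be sketched in a few lines: a desired-state model is associated with a server, and the server is brought into line with it. The classes, field names, and values below are hypothetical and exist only for this illustration; they are not Cisco UCS Manager objects or its API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ServiceProfile:
    """Hypothetical desired-state model; not a Cisco UCS Manager object."""
    name: str
    firmware: str
    boot_target: str
    vnics: tuple = ()


@dataclass
class Server:
    """Hypothetical stand-in for the current state of a physical server."""
    serial: str
    firmware: str = "unknown"
    boot_target: str = "unknown"
    vnics: tuple = ()
    profile: Optional[str] = None


def associate(profile: ServiceProfile, server: Server) -> list:
    """Make the server match the profile; return the settings that changed."""
    changes = []
    for attr in ("firmware", "boot_target", "vnics"):
        desired = getattr(profile, attr)
        if getattr(server, attr) != desired:
            setattr(server, attr, desired)
            changes.append(attr)
    server.profile = profile.name
    return changes


# Illustrative values only.
profile = ServiceProfile("esxi-host", "example-fw", "iscsi", ("vNIC-A", "vNIC-B"))
server = Server(serial="SRV-001")
first_pass = associate(profile, server)    # every modeled setting is applied
second_pass = associate(profile, server)   # nothing left to change
```

Because the profile, not the server, holds the configuration, associating the same profile with a replacement server would reproduce the same settings, which is the basis of the stateless computing described in this section.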

Cisco Fabric Extender technology reduces the number of system components to purchase, configure, manage, and maintain by condensing three network layers into one. This represents a radical simplification over traditional systems, reducing capital and operating costs while increasing business agility, simplifying and speeding deployment, and improving performance.

Cisco Unified Computing System helps organizations go beyond efficiency: it helps them become more effective through technologies that breed simplicity rather than complexity. The result is flexible, agile, high-performance, self-integrating information technology, reduced staff costs with increased uptime through automation, and more rapid return on investment.

This reference architecture highlights the use of the Cisco UCS C200-M2 server, the Cisco UCS 6248UP, and the Nexus 2232 FEX to provide a resilient server platform balancing simplicity, performance, and density for production-level virtualization. Also highlighted in this architecture is the use of Cisco UCS service profiles that enable iSCSI boot of the native operating system. Coupling service profiles with unified storage delivers on-demand, stateless computing resources in a highly scalable architecture.

Recommended support documents include:

• Cisco Unified Computing System: http://www.cisco.com/en/US/products/ps10265/index.html

• Cisco Unified Computing System C-Series Servers: http://www.cisco.com/en/US/products/ps10493/index.html

• Cisco Unified Computing System B-Series Servers: http://www.cisco.com/en/US/products/ps10280/index.html

Benefits of Cisco Nexus 5548UP

The Cisco Nexus 5548UP Switch delivers innovative architectural flexibility, infrastructure simplicity, and business agility, with support for networking standards. For traditional, virtualized, unified, and high-performance computing (HPC) environments, it offers a long list of IT and business advantages, including:

Architectural Flexibility

• Unified ports that support traditional Ethernet, Fibre Channel (FC), and Fibre Channel over Ethernet (FCoE)

• Synchronizes system clocks with accuracy of less than one microsecond, based on IEEE 1588

• Offers converged fabric extensibility, based on the emerging IEEE 802.1BR standard, with the Fabric Extender (FEX) Technology portfolio, including:

– Cisco Nexus 2000 FEX

– Adapter FEX

– VM-FEX

Infrastructure Simplicity

• Common high-density, high-performance, data-center-class, fixed-form-factor platform

• Consolidates LAN and storage

• Supports any transport over an Ethernet-based fabric, including Layer 2 and Layer 3 traffic

• Supports storage traffic, including iSCSI, NAS, FC, RoE, and IBoE

• Reduces management points with FEX Technology

Business Agility

• Meets diverse data center deployments on one platform

• Provides rapid migration and transition for traditional and evolving technologies

• Offers performance and scalability to meet growing business needs

Specifications at a Glance

• A 1-rack-unit, 1/10 Gigabit Ethernet switch

• 32 fixed Unified Ports on base chassis and one expansion slot totaling 48 ports

• The slot can support any of the three modules: Unified Ports, 1/2/4/8-Gbps native Fibre Channel, and Ethernet or FCoE

• Throughput of up to 960 Gbps
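The port and throughput figures above are consistent with one another if every port runs at 10 Gbps and throughput is counted in both directions. That reading is an assumption made for this sketch rather than something the list states, and the variable names are illustrative.

```python
FIXED_PORTS = 32        # fixed Unified Ports on the base chassis (from the list above)
TOTAL_PORTS = 48        # total with the expansion slot populated (from the list above)
PORT_SPEED_GBPS = 10    # each port runs at up to 10 Gigabit Ethernet

# Ports contributed by the single expansion slot.
expansion_ports = TOTAL_PORTS - FIXED_PORTS

# Assumption: throughput counts both directions of every full-duplex port.
aggregate_gbps = TOTAL_PORTS * PORT_SPEED_GBPS * 2

print(f"Expansion slot ports: {expansion_ports}")
print(f"Aggregate throughput: {aggregate_gbps} Gbps")
```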

This reference architecture highlights the use of the Cisco Nexus 5548UP. As mentioned, this platform is capable of serving as the foundation for wire-once, unified fabric architectures. This document provides guidance for an architecture capable of delivering IP protocols including iSCSI, CIFS, and NFS. Ethernet architectures yield lower TCO because they are simpler, do not require FC SAN-trained professionals, and need fewer licenses to deliver the functionality associated with enterprise-class solutions.

Recommended support documents include:

•

Cisco Nexus 5000 Family of switches: http://www.cisco.com/en/US/products/ps9670/index.html

Benefits of the NetApp FAS Family of Storage Controllers

The NetApp Unified Storage Architecture offers customers an agile and scalable storage platform. All NetApp storage systems use the Data ONTAP® operating system to provide SAN (FCoE, FC, iSCSI), NAS (CIFS, NFS), and primary and secondary storage in a single unified platform so that all virtual desktop data components can be hosted on the same storage array.

A single process for activities such as installation, provisioning, mirroring, backup, and upgrading is used throughout the entire product line, from the entry level to enterprise-class controllers. Having a single set of software and processes simplifies even the most complex enterprise data management challenges. Unifying storage and data management software and processes streamlines data ownership, enables companies to adapt to their changing business needs without interruption, and reduces total cost of ownership.

This reference architecture focuses on the use case of leveraging IP-based storage to solve customers' challenges and to meet their needs in the data center. Specifically, this entails iSCSI boot of UCS hosts, provisioning of VM data stores by using NFS, and application access through iSCSI, CIFS, or NFS, all while leveraging NetApp unified storage.

In a shared infrastructure, the availability and performance of the storage infrastructure are critical because storage outages and performance issues can affect thousands of users. The storage architecture must provide a high level of availability and performance. NetApp and its technology partners have developed a variety of best practice documents that provide detailed guidance.

This reference architecture highlights the use of the NetApp FAS2000 product line, specifically the FAS2240-2A with the 10GbE mezzanine card and SAS storage. Available to support multiple protocols while allowing the customer to start smart, at a lower price point, the FAS2240-2 is an affordable and powerful choice for delivering shared infrastructure.

Recommended support documents include:

• NetApp storage systems: www.netapp.com/us/products/storage-systems/

• NetApp FAS2000 storage systems: http://www.netapp.com/us/products/storage-systems/fas2000/fas2000.html

• NetApp TR-3437: Storage Best Practices and Resiliency Guide

• NetApp TR-3450: Active-Active Controller Overview and Best Practices Guidelines

• NetApp TR-3749: NetApp and VMware vSphere Storage Best Practices

• NetApp TR-3884: FlexPod Solutions Guide

• NetApp TR-3824: MS Exchange 2010 Best Practices Guide

Benefits of OnCommand Unified Manager Software

NetApp OnCommand management software delivers efficiency savings by unifying storage operations, provisioning, and protection for both physical and virtual resources. The key product benefits that create this value include:

• Simplicity. A single unified approach and a single set of tools to manage both the physical world and the virtual world as you move to a services model to manage your service delivery. This makes NetApp the most effective storage for the virtualized data center. It has a single configuration repository for reporting, event logs, and audit logs.

• Efficiency. Automation and analytics capabilities deliver storage and service efficiency, reducing IT capex and opex spend by up to 50%.

• Flexibility. With tools that let you gain visibility and insight into your complex multiprotocol, multivendor environments and open APIs that let you integrate with third-party orchestration frameworks and hypervisors, OnCommand offers a flexible solution that helps you rapidly respond to changing demands.

OnCommand gives you visibility across your storage environment by continuously monitoring and analyzing its health. You get a view of what is deployed and how it is being used, enabling you to improve your storage capacity utilization and increase the productivity and efficiency of your IT administrators. The unified dashboard gives at-a-glance status and metrics, making it far more efficient than using multiple resource management tools.

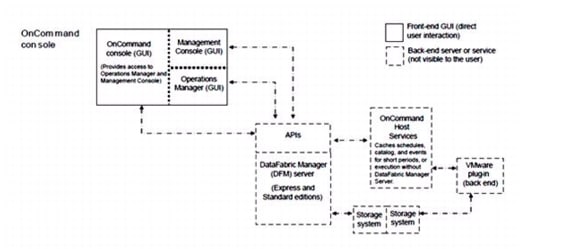

Figure 2 OnCommand Architecture

OnCommand Host Package

You can discover, manage, and protect virtual objects after installing the NetApp OnCommand Host Package software. The components that make up the OnCommand Host Package are:

• OnCommand host service VMware plug-in. A plug-in that receives and processes events in a VMware environment, including discovering, restoring, and backing up virtual objects such as virtual machines and datastores. This plug-in executes the events received from the host service.

• Host service. The host service software includes plug-ins that enable the NetApp DataFabric® Manager server to discover, back up, and restore virtual objects, such as virtual machines and datastores. The host service also enables you to view virtual objects in the OnCommand console. It enables the DataFabric Manager server to forward requests, such as the request for a restore operation, to the appropriate plug-in, and to send the final results of the specified job to that plug-in. When you make changes to the virtual infrastructure, automatic notification is sent from the host service to the DataFabric Manager server. You must register at least one host service with the DataFabric Manager server before you can back up or restore data.

• Host service Windows PowerShell cmdlets. Cmdlets that perform virtual object discovery, local restore operations, and host configuration when the DataFabric Manager server is unavailable.

Management tasks performed in the virtual environment by using the OnCommand console include:

• Create a dataset and then add virtual machines or datastores to the dataset for data protection.

• Assign local protection and, optionally, remote protection policies to the dataset.

• View storage details and space details for a virtual object.

• Perform an on-demand backup of a dataset.

• Mount existing backups onto an ESX™ server to support tasks such as backup verification, single file restore, and restoration of a virtual machine to an alternate location.

• Restore data from local and remote backups as well as restore data from backups made before the introduction of OnCommand management software.


Storage Service Catalog

The Storage Service Catalog, a component of OnCommand, is a key NetApp differentiator for service automation. It lets you integrate storage provisioning policies, data protection policies, and storage resource pools into a single service offering that administrators can choose when provisioning storage. This automates much of the provisioning process, and it also automates a variety of storage management tasks associated with the policies.

The Storage Service Catalog provides a layer of abstraction between the storage consumer and the details of the storage configuration, creating "storage as a service." The service levels defined with the Storage Service Catalog automatically specify and map policies to the attributes of your pooled storage infrastructure. This higher level of abstraction between service levels and physical storage lets you eliminate complex, manual work, encapsulating storage and operational processes together for optimal, flexible, and dynamic allocation of storage.
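The abstraction described above can be sketched as a lookup from a service-level name to the policies and resource pool it bundles. This is only an illustration of the concept: the class, the service levels, and the policy and pool names are hypothetical and do not come from OnCommand or its APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageService:
    """Hypothetical catalog entry; field names are illustrative only."""
    name: str
    provisioning_policy: str
    protection_policy: str
    resource_pool: str


# Hypothetical service levels that an administrator could choose from.
CATALOG = {
    "gold": StorageService("gold", "thin-provisioned-nfs", "local-and-remote", "pool-a"),
    "bronze": StorageService("bronze", "thin-provisioned-nfs", "local-only", "pool-b"),
}


def provision(service_level: str, size_gb: int) -> dict:
    """Expand a service-level name into the concrete settings it implies."""
    service = CATALOG[service_level]
    return {
        "size_gb": size_gb,
        "provisioning_policy": service.provisioning_policy,
        "protection_policy": service.protection_policy,
        "resource_pool": service.resource_pool,
    }


# The storage consumer names a service level and a size, nothing else.
request = provision("gold", 500)
```

The consumer never specifies policies or pools directly, so the mapping behind a service level can change without altering how storage is requested.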

The service catalog approach also incorporates the use of open APIs into other management suites, which leads to a strong ecosystem integration.

FlexPod Management Solutions

The FlexPod platform open APIs offer easy integration with a broad range of management tools. NetApp and Cisco work with trusted partners to provide a variety of management solutions.

Products designated as Validated FlexPod Management Solutions must pass extensive testing in Cisco and NetApp labs against a broad set of functional and design requirements. Validated solutions for automation and orchestration provide unified, turnkey functionality. Now you can deploy IT services in minutes instead of weeks by reducing complex processes that normally require multiple administrators to repeatable workflows that are easily adaptable. The following list names the current vendors for these solutions:

Note

Some of the following links are available only to partners and customers.

•

CA

–

http://solutionconnection.netapp.com/CA-Infrastructure-Provisioning-for-FlexPod.aspx

–

http://www.youtube.com/watch?v=mmkNUvVZY94

•

Cloupia

–

http://solutionconnection.netapp.com/cloupia-unified-infrastructure-controller.aspx

–

http://www.cloupia.com/en/flexpodtoclouds/videos/Cloupia-FlexPod-Solution-Overview.html

•

Gale Technologies

–

http://solutionconnection.netapp.com/galeforce-turnkey-cloud-solution.aspx

–

http://www.youtube.com/watch?v=ylf81zjfFF0

Products designated as FlexPod Management Solutions have demonstrated the basic ability to interact with all components of the FlexPod platform. Vendors for these solutions currently include BMC Software Business Service Management, Cisco Intelligent Automation for Cloud, DynamicOps, FireScope, Nimsoft, and Zenoss. Recommended documents include:

–

https://solutionconnection.netapp.com/flexpod.aspx

–

http://www.netapp.com/us/communities/tech-ontap/tot-building-a-cloud-on-flexpod-1203.html

Benefits of VMware vSphere with the NetApp Virtual Storage Console

VMware vSphere, coupled with the NetApp Virtual Storage Console (VSC), serves as the foundation for VMware virtualized infrastructures. vSphere 5.0 offers significant enhancements that can be employed to solve real customer problems. Virtualization reduces costs and maximizes IT efficiency, increases application availability and control, and empowers IT organizations with choice. VMware vSphere delivers these benefits as the trusted platform for virtualization, as demonstrated by its base of more than 300,000 customers worldwide.

VMware vCenter Server is the best way to manage and leverage the power of virtualization. A vCenter domain manages and provisions resources for all the ESX hosts in the given data center. The ability to license various features in vCenter at differing price points allows customers to choose the package that best serves their infrastructure needs.

The VSC is a vCenter plug-in that provides end-to-end virtual machine (VM) management and awareness for VMware vSphere environments running on top of NetApp storage. The following core capabilities make up the plug-in:

•

Storage and ESXi host configuration and monitoring by using Monitoring and Host Configuration

•

Datastore provisioning and VM cloning by using Provisioning and Cloning

•

Backup and recovery of VMs and datastores by using Backup and Recovery

•

Online alignment and single and group migrations of VMs into new or existing datastores by using Optimization and Migration

Because the VSC is a vCenter plug-in, all vSphere clients that connect to vCenter can access VSC. This availability is different from a client-side plug-in that must be installed on every vSphere client.

Software Revisions

It is important to note the software versions used in this document. Table 1 details the software revisions used throughout this document.

Table 1 Software Revisions

Configuration Guidelines

This document provides details for configuring a fully redundant, highly available configuration for a FlexPod unit with IP-based storage. Therefore, reference is made to which component is being configured with each step, either A or B. For example, controller A and controller B are used to identify the two NetApp storage controllers that are provisioned with this document, and Nexus A and Nexus B identify the pair of Cisco Nexus switches that are configured. The Cisco UCS fabric interconnects are similarly configured. Additionally, this document details steps for provisioning multiple Cisco UCS hosts, and these are identified sequentially: VM-Host-Infra-01, VM-Host-Infra-02, and so on. Finally, to indicate that you should include information pertinent to your environment in a given step, <text> appears as part of the command structure. See the following example for the vlan create command:

controller A> vlan create

Usage:

vlan create [-g {on|off}] <ifname> <vlanid_list>

vlan add <ifname> <vlanid_list>

vlan delete -q <ifname> [<vlanid_list>]

vlan modify -g {on|off} <ifname>

vlan stat <ifname> [<vlanid_list>]

Example:

controller A> vlan create vif0 <management VLAN ID>

This document is intended to enable you to fully configure the customer environment. In this process, various steps require you to insert customer-specific naming conventions, IP addresses, and VLAN schemes, as well as to record appropriate MAC addresses. Table 2 details the list of VLANs necessary for deployment as outlined in this guide. The VM-Mgmt VLAN is used for management interfaces of the VMware vSphere hosts. Table 3 lists the configuration variables that are used throughout this document. This table can be completed with site-specific values and referred to while working through the configuration steps.
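Once the configuration variables have been recorded, the <text> placeholder convention can be filled in mechanically. The following Python sketch is illustrative only: the render_command helper and the sample VLAN ID are hypothetical and are not part of the validated procedure.

```python
import re

# Matches the guide's <placeholder> convention, e.g. <management VLAN ID>.
PLACEHOLDER = re.compile(r"<([^<>]+)>")


def render_command(template, site_vars):
    """Replace every <name> in template with its value from site_vars.

    Raises KeyError if a placeholder has no recorded value, so an
    incomplete variable table is caught before a command is run.
    """
    def substitute(match):
        name = match.group(1).strip()
        if name not in site_vars:
            raise KeyError(f"no value recorded for <{name}>")
        return site_vars[name]

    return PLACEHOLDER.sub(substitute, template)


# Hypothetical site value; use the entries recorded for your environment.
site_vars = {"management VLAN ID": "3175"}
print(render_command("vlan create vif0 <management VLAN ID>", site_vars))
```

Failing on a missing variable, rather than leaving the placeholder in place, is a deliberate choice in this sketch: a command that still contains <text> should never reach a controller prompt.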

Note

If you are using separate in-band and out-of-band management VLANs, you must create a layer 3 route between these VLANs. For this validation, a common management VLAN was used.

Table 2 Necessary VLANs

Table 3 Configuration Variables

Note

In this document, management IPs and host names must be assigned for the following components:

•

NetApp storage controllers A and B

•

Cisco UCS Fabric Interconnects A and B and the UCS Cluster

•

Cisco Nexus 5548s A and B

•

VMware ESXi Hosts 1 and 2

•

VMware vCenter SQL Server Virtual Machine

•

VMware vCenter Virtual Machine

•

NetApp Virtual Storage Console or OnCommand virtual machine

For all host names except the virtual machine host names, the IP addresses must be preconfigured in the local DNS server. Additionally, the NFS IP addresses of the NetApp storage systems are used to monitor the storage systems from OnCommand DataFabric Manager. In this validation, a management host name was assigned to each storage controller (for example, ice2240-1a-m) and provisioned in DNS. A host name was also assigned for each controller in the NFS VLAN (for example, ice2240-1a) and provisioned in DNS. This NFS VLAN host name was then used when the storage system was added to OnCommand DataFabric Manager.
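Because the host names must resolve before deployment begins, a quick pre-check can save time. The following Python sketch is one possible approach, not part of the validated procedure: the unresolved_hosts helper, the stub resolver, and the sample address are hypothetical, and only the two host names come from this validation.

```python
import socket


def unresolved_hosts(host_names, resolve=socket.gethostbyname):
    """Return the host names that the resolver could not look up."""
    missing = []
    for name in host_names:
        try:
            resolve(name)
        except OSError:  # socket.gaierror is a subclass of OSError
            missing.append(name)
    return missing


# Stub resolver for illustration; omit the resolve argument to query real DNS.
known = {"ice2240-1a-m": "192.168.175.11"}  # hypothetical address


def stub_resolve(name):
    if name not in known:
        raise socket.gaierror(f"{name} not found")
    return known[name]


print(unresolved_hosts(["ice2240-1a-m", "ice2240-1a"], resolve=stub_resolve))
```

With the stub shown here, only ice2240-1a is reported as missing. Run against the site DNS server, an empty list indicates that every listed host name is provisioned.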

Deployment

This document describes the steps to deploy base infrastructure components as well as to provision VMware vSphere as the foundation for virtualized workloads. When you finish these deployment steps, you will be prepared to provision applications on top of a VMware virtualized infrastructure. The outlined procedure contains the following steps:

•

Initial NetApp controller configuration

•

Initial Cisco UCS configuration

•

Initial Cisco Nexus configuration

•

Creation of necessary VLANs for management, basic functionality, and virtualized infrastructure specific to VMware

•

Creation of necessary vPCs to provide high availability among devices

•

Creation of necessary service profile pools: MAC, UUID, server, and so forth

•

Creation of necessary service profile policies: adapter, boot, and so forth

•

Creation of two service profile templates from the created pools and policies: one each for fabric A and B

•

Provisioning of two servers from the created service profiles in preparation for OS installation

•

Initial configuration of the infrastructure components residing on the NetApp controller

•

Installation of VMware vSphere 5.0

•

Installation and configuration of VMware vCenter

•

Enabling of NetApp Virtual Storage Console (VSC)

•

Configuration of NetApp OnCommand

The VMware vSphere Built On FlexPod With IP-Based Storage architecture is flexible; therefore, the configuration detailed in this section can vary for customer implementations, depending on specific requirements. Although customer implementations can deviate from the following information, the best practices, features, and configurations described in this section should be used as a reference for building a customized VMware vSphere Built On FlexPod With IP-Based Storage solution.

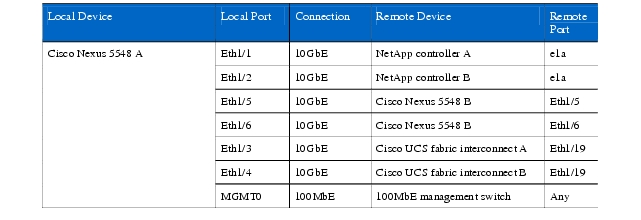

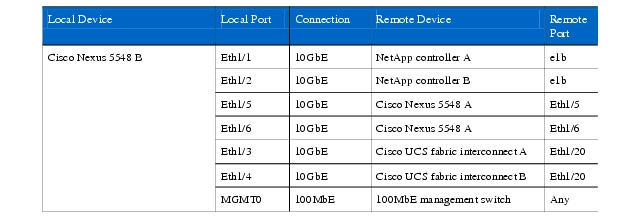

Cabling Information

The information in this section is provided as a reference for cabling the physical equipment in a FlexPod environment. To simplify cabling requirements, the tables include both local and remote device and port locations.

The tables in this section contain details for the prescribed and supported configuration of the NetApp FAS2240-2A running Data ONTAP 8.1. This configuration leverages a dual-port 10GbE adapter and the onboard SAS disk shelves with no additional external storage. For any modifications of this prescribed architecture, consult the NetApp Interoperability Matrix Tool (IMT).

This document assumes that out-of-band management ports are plugged into an existing management infrastructure at the deployment site.

Be sure to follow the cabling directions in this section. Deviating from them requires corresponding changes to the deployment procedures that follow, because those procedures reference specific port locations.

It is possible to order a FAS2240-2A system in a different configuration from what is prescribed in the tables in this section. Before starting, be sure that the configuration matches the descriptions in the tables and diagrams in this section.

Figure 3 shows a FlexPod cabling diagram. The labels indicate connections to end points rather than port numbers on the physical device. For example, connection 1 is an FCoE target port connected from NetApp controller A to Nexus 5548 A. SAS connections 23, 24, 25, and 26 as well as ACP connections 27 and 28 should be connected to the NetApp storage controller and disk shelves according to best practices for the specific storage controller and disk shelf quantity.

Note

For disk shelf cabling, refer to the Universal SAS and ACP Cabling Guide at https://library.netapp.com/ecm/ecm_get_file/ECMM1280392.

Figure 3 FlexPod Cabling Diagram

Table 4 Cisco Nexus 5548 A Ethernet Cabling Information

Note

For devices added at a later date requiring 1GbE connectivity, use the GbE Copper SFP+s (GLC-T=).

Table 5 Cisco Nexus 5548 B Ethernet Cabling Information

Note

For devices added at a later date requiring 1GbE connectivity, use the GbE Copper SFP+s (GLC-T=).

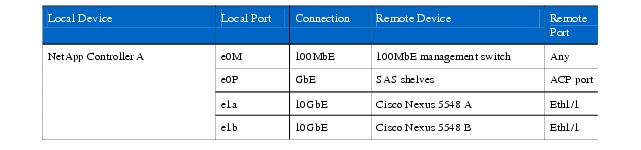

Table 6 NetApp Controller A Ethernet Cabling Information

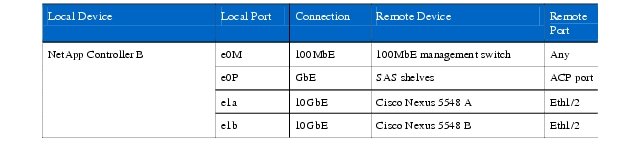

Table 7 NetApp Controller B Ethernet Cabling Information

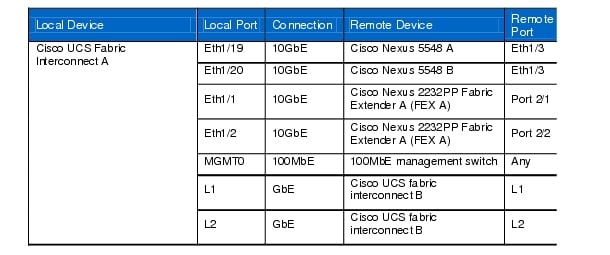

Table 8 Cisco UCS Fabric Interconnect A Ethernet Cabling Information

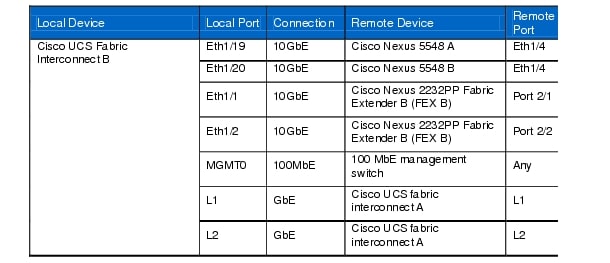

Table 9 Cisco UCS Fabric Interconnect B Ethernet Cabling Information

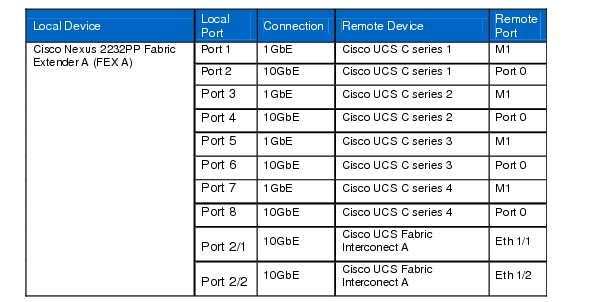

Table 10 Cisco Nexus 2232PP Fabric Extender A (FEX A)

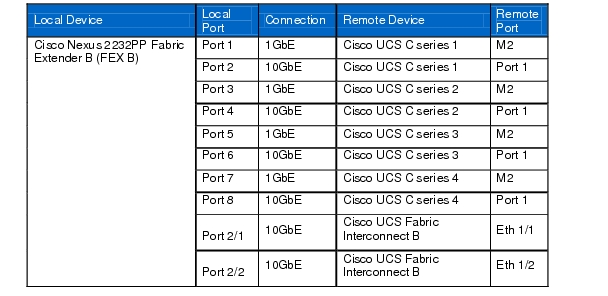

Table 11 Cisco Nexus 2232PP Fabric Extender B (FEX B)

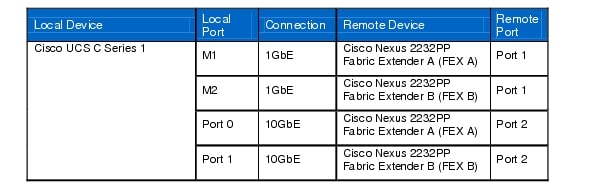

Table 12 Cisco UCS C Series 1

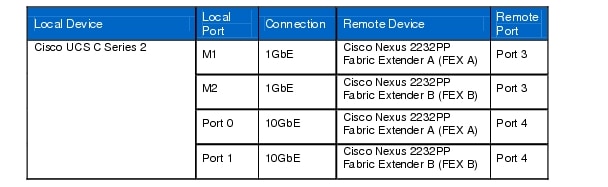

Table 13 Cisco UCS C Series 2

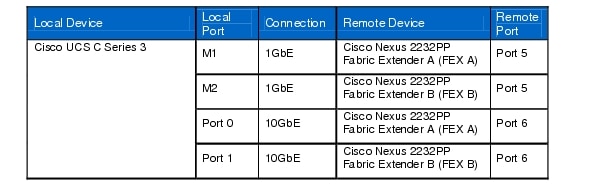

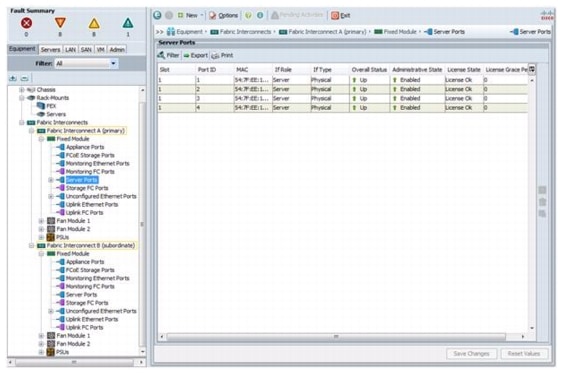

Table 14 Cisco UCS C Series 3

Table 15 Cisco UCS C Series 4

NetApp FAS2240-2 Deployment Procedure: Part 1

This section provides a detailed procedure for configuring the NetApp FAS2240-2 for use in a VMware vSphere Built On FlexPod With IP-Based Storage solution. These steps should be followed precisely. Failure to do so could result in an improper configuration.

Note

The configuration steps described in this section provide guidance for configuring the FAS2240-2 running Data ONTAP 8.1.

Assign Controller Disk Ownership

These steps provide details for assigning disk ownership and disk initialization and verification.

Note

Typical best practices should be followed when determining the number of disks to assign to each controller head. You may choose to assign a disproportionate number of disks to a given storage controller in an HA pair, depending on the intended workload.

Note

In this reference architecture, half the total number of disks in the environment is assigned to one controller and the remainder to its partner. Divide the number of disks in half and use the result in the following command for <# of disks>.
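The even split described in the note above is simple arithmetic. The following Python sketch shows it for an odd or even disk count; the split_disks helper and the example count of 24 are hypothetical, and giving the remainder to controller B is an assumption of this sketch.

```python
def split_disks(total_disks):
    """Return (controller_a, controller_b) disk counts for an even split.

    Controller A takes half, rounded down; controller B takes the
    remainder, so an odd total leaves one extra disk on controller B.
    """
    if total_disks < 0:
        raise ValueError("disk count cannot be negative")
    controller_a = total_disks // 2
    controller_b = total_disks - controller_a
    return controller_a, controller_b


# Hypothetical example: 24 disks reported by "disk show -a".
a, b = split_disks(24)
print(f"Controller A: disk assign -n {a}")
print(f"Controller B: disk assign -n {b}")
```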

Controller A

1.

If the controller is at a LOADER-A> prompt, enter autoboot to start Data ONTAP. During controller boot, when prompted for Boot Menu, press CTRL-C.

2.

At the menu prompt, select option 5 for Maintenance mode boot.

3.

If prompted with Continue to boot? enter Yes.

4.

Enter ha-config show to verify that the controller and chassis configuration is ha.

Note

If either component is not in HA mode, use ha-config modify to put the components in HA mode.

5.

Enter disk show. No disks should be assigned to the controller.

6.

To determine the total number of disks connected to the storage system, enter disk show -a.

7.

Enter disk assign -n <# of disks>. NetApp recommends assigning half the disks to each controller, although workload design could dictate a different split.

8.

Enter halt to reboot the controller.

9.

If the controller stops at a LOADER-A> prompt, enter autoboot to start Data ONTAP.

10.

During controller boot, when prompted, press CTRL-C.

11.

At the menu prompt, select option 4 for "Clean configuration and initialize all disks."

12.

The installer asks if you want to zero the disks and install a new file system. Enter y.

13.

A warning is displayed that this will erase all of the data on the disks. Enter y to confirm that this is what you want to do.

Note

The initialization and creation of the root volume can take 75 minutes or more to complete, depending on the number of disks attached. When initialization is complete, the storage system reboots.

Controller B

1.

If the controller is at a LOADER-B> prompt, enter autoboot to start Data ONTAP. During controller boot, when prompted, press CTRL-C for the special boot menu.

2.

At the menu prompt, select option 5 for Maintenance mode boot.

3.

If prompted with Continue to boot? enter Yes.

4.

Enter ha-config show to verify that the controller and chassis configuration is ha.

Note

If either component is not in HA mode, use ha-config modify to put the components in HA mode.

5.

Enter disk show. No disks should be assigned to this controller.

6.

To determine the total number of disks connected to the storage system, enter disk show -a. This will now show the number of remaining unassigned disks connected to the controller.

7.

Enter disk assign -n <# of disks> to assign the remaining disks to the controller.

8.

Enter halt to reboot the controller.

9.

If the controller stops at a LOADER-B> prompt, enter autoboot to start Data ONTAP.

10.

During controller boot, when prompted, press CTRL-C for the boot menu.

11.

At the menu prompt, select option 4 for "Clean configuration and initialize all disks."

12.

The installer asks if you want to zero the disks and install a new file system. Enter y.

13.

A warning is displayed that this will erase all of the data on the disks. Enter y to confirm that this is what you want to do.

Note

The initialization and creation of the root volume can take 75 minutes or more to complete, depending on the number of disks attached. When initialization is complete, the storage system reboots.

Set Up Data ONTAP 8.1

These steps provide details for setting up Data ONTAP 8.1.

Controller A and Controller B

1.

After the disk initialization and the creation of the root volume, Data ONTAP setup begins.

2.

Enter the host name of the storage system.

3.

Enter n for enabling IPv6.

4.

Enter y for configuring interface groups.

5.

Enter 1 for the number of interface groups to configure.

6.

Name the interface vif0.

7.

Enter l to specify the interface as LACP.

8.

Enter i to specify IP load balancing.

9.

Enter 2 for the number of links for vif0.

10.

Enter e1a for the name of the first link.

11.

Enter e1b for the name of the second link.

12.

Press Enter to accept the blank IP address for vif0.

13.

Enter n for interface group vif0 taking over a partner interface.

14.

Press Enter to accept the blank IP address for e0a.

15.

Enter n for interface e0a taking over a partner interface.

16.

Press Enter to accept the blank IP address for e0b.

17.

Enter n for interface e0b taking over a partner interface.

18.

Press Enter to accept the blank IP address for e0c.

19.

Enter n for interface e0c taking over a partner interface.

20.

Press Enter to accept the blank IP address for e0d.

21.

Enter n for interface e0d taking over a partner interface.

22.

Enter the IP address of the out-of-band management interface, e0M.

23.

Enter the net mask for e0M.

24.

Enter y for interface e0M taking over a partner IP address during failover.

25.

Enter e0M for the name of the interface to be taken over.

26.

Enter n to continue setup through the Web interface.

27.

Enter the IP address for the default gateway for the storage system.

28.

Enter the IP address for the administration host.

29.

Enter the local time zone (such as PST, MST, CST, or EST, or a Linux time zone format; for example, America/New_York).

30.

Enter the location for the storage system.

31.

Press Enter to accept the default root directory for HTTP files [/home/http].

32.

Enter y to enable DNS resolution.

33.

Enter the DNS domain name.

34.

Enter the IP address for the first nameserver.

35.

Enter n to finish entering DNS servers, or select y to add up to two more DNS servers.

36.

Enter n for running the NIS client.

37.

Press Enter to acknowledge the AutoSupport™ message.

38.

Enter y to configure the SP LAN interface.

39.

Enter n to skip setting up DHCP on the SP LAN interface.

40.

Enter the IP address for the SP LAN interface.

41.

Enter the net mask for the SP LAN interface.

42.

Enter the IP address for the default gateway for the SP LAN interface.

43.

Enter the fully qualified domain name for the mail host to receive SP messages and AutoSupport.

44.

Enter the IP address for the mail host to receive SP messages and AutoSupport.

Note

If you make a mistake during setup, press CTRL-C to get a command prompt, then enter setup to run the setup script again. Alternatively, complete the setup script and enter setup at the end to redo it.

Note

At the end of the setup script, the storage system must be rebooted for changes to take effect.

45.

Enter passwd to set the administrative (root) password.

46.

Enter the new administrative (root) password.

47.

Enter the new administrative (root) password again to confirm.

Install Data ONTAP to Onboard Flash Storage

The following steps describe installing Data ONTAP to the onboard flash storage.

Controller A and Controller B

1.

To install the Data ONTAP image to the onboard flash device, enter software install and indicate the http or https Web address of the NetApp Data ONTAP 8.1 flash image; for example, http://192.168.175.5/81_q_image.tgz.

2.

Enter download and press Enter to download the software to the flash device.

Harden Storage System Logins and Security

The following steps describe hardening the storage system logins and security.

Controller A and Controller B

1.

Enter secureadmin disable ssh.

2.

Enter secureadmin setup -f ssh to enable ssh on the storage controller.

3.

If prompted, enter yes to rerun ssh setup.

4.

Accept the default values for ssh1.x protocol.

5.

Enter 1024 for ssh2 protocol.

6.

If the information specified is correct, enter yes to create the ssh keys.

7.

Enter options telnet.enable off to disable telnet on the storage controller.

8.

Enter secureadmin setup ssl to enable ssl on the storage controller.

9.

If prompted, enter yes to rerun ssl setup.

10.

Enter the country name code, state or province name, locality name, organization name, and organization unit name.

11.

Enter the fully qualified domain name of the storage system.

12.

Enter the administrator's e-mail address.

13.

Accept the default for days until the certificate expires.

14.

Enter 1024 for the ssl key length.

15.

Enter options httpd.admin.enable off to disable http access to the storage system.

16.

Enter options httpd.admin.ssl.enable on to enable secure access to the storage system.

Install the Required Licenses

The following steps provide details about storage licenses that are used to enable features in this reference architecture. A variety of licenses come installed with the Data ONTAP 8.1 software.

Note

The following licenses are required to deploy this reference architecture:

•

cluster (cf): To configure storage controllers into an HA pair

•

iSCSI: To enable the iSCSI protocol

•

nfs: To enable the NFS protocol

•

flex_clone: To enable the provisioning of NetApp FlexClone® volumes and files

Controller A and Controller B

1.

Enter license add <necessary licenses> to add licenses to the storage system.

2.

Enter license to double-check the installed licenses.

3.

Enter reboot to reboot the storage controller.

4.

Log back in to the storage controller with the root password.

Enable Licensed Features

The following steps provide details for enabling licensed features.

Controller A and Controller B

1.

Enter options licensed_feature.multistore.enable on.

2.

Enter options licensed_feature.nearstore_option.enable on.

Enable Active-Active Controller Configuration Between Two Storage Systems

This step provides details for enabling active-active controller configuration between the two storage systems.

Controller A only

1.

Enter cf enable and press Enter to enable active-active controller configuration.

Start iSCSI

This step provides details for enabling the iSCSI protocol.

Controller A and Controller B

1.

Enter iscsi start.

Set Up Storage System NTP Time Synchronization and CDP Enablement

The following steps provide details for setting up storage system NTP time synchronization and enabling Cisco Discovery Protocol (CDP).

Controller A and Controller B

1.

To set the storage system time to the actual time, enter date CCyymmddhhmm, where CCyy is the four-digit year, the first mm is the two-digit month, dd is the two-digit day of the month, hh is the two-digit hour, and the second mm is the two-digit minute.

2.

Enter options timed.proto ntp to synchronize with an NTP server.

3.

Enter options timed.servers <NTP server IP> to add the NTP server to the storage system list.

4.

Enter options timed.enable on to enable NTP synchronization on the storage system.

5.

Enter options cdpd.enable on.
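The CCyymmddhhmm argument used in step 1 can be produced from a timestamp rather than typed by hand. The following Python sketch is a minimal illustration; the ontap_date_argument helper and the example timestamp are hypothetical.

```python
from datetime import datetime


def ontap_date_argument(moment):
    """Format a datetime as CCyymmddhhmm (year, month, day, hour, minute)."""
    return moment.strftime("%Y%m%d%H%M")


# Fixed example timestamp so the result is reproducible.
example = datetime(2012, 6, 15, 14, 30)
print("date " + ontap_date_argument(example))
```

For the example timestamp, this prints date 201206151430. Substituting datetime.now() for the fixed value would yield the current local time of the machine running the script, which might not match the time zone configured on the controller.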

Create Data Aggregate aggr1

The following step provides details for creating the data aggregate aggr1.

Note

In most cases, the following command finishes quickly, but depending on the state of each disk, it might be necessary to zero some or all of the disks in order to add them to the aggregate. This could take up to 60 minutes to complete.

Controller A

1.

Enter aggr create aggr1 -B 64 <# of disks for aggr1> to create aggr1 on the storage controller.

Controller B

1.

Enter aggr create aggr1 -B 64 <# of disks for aggr1> to create aggr1 on the storage controller.

Create an SNMP Requests Role and Assign SNMP Login Privileges

This step provides details for creating the SNMP request role and assigning SNMP login privileges to it.

Controller A and Controller B

1.

Run the following command: useradmin role add <ntap SNMP request role> -a login-snmp.

Create an SNMP Management Group and Assign an SNMP Request Role

This step provides details for creating an SNMP management group and assigning an SNMP request role to it.

Controller A and Controller B

1.

Run the following command: useradmin group add <ntap SNMP managers> -r <ntap SNMP request role>.

Create an SNMP User and Assign It to an SNMP Management Group

This step provides details for creating an SNMP user and assigning it to an SNMP management group.

Controller A and Controller B

1.

Run the following command: useradmin user add <ntap SNMP users> -g <ntap SNMP managers>.

Note

After the user is created, the system prompts for a password. Enter the SNMP password.

Set Up SNMP v1 Communities on Storage Controllers

These steps provide details for setting up SNMP v1 communities on the storage controllers so that OnCommand System Manager can be used.

Controller A and Controller B

1.

Run the following command: snmp community delete all.

2.

Run the following command: snmp community add ro <ntap SNMP community>.

Set Up SNMP Contact Information for Each Storage Controller

This step provides details for setting SNMP contact information for each of the storage controllers.

Controller A and Controller B

1.

Run the following command: snmp contact <ntap admin email address>.

Set SNMP Location Information for Each Storage Controller

This step provides details for setting SNMP location information for each of the storage controllers.

Controller A and Controller B

1.

Run the following command: snmp location <ntap SNMP site name>.

Reinitialize SNMP on Storage Controllers

This step provides details for reinitializing SNMP on the storage controllers.

Controller A and Controller B

1.

Run the following command: snmp init 1.
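The SNMP steps in the preceding sections form one ordered sequence that is identical on both controllers. The following Python sketch collects them into a list so that the same values are applied to controller A and controller B; the snmp_setup_commands helper and all sample values are hypothetical.

```python
def snmp_setup_commands(role, group, user, community, contact, location):
    """Return the SNMP commands from the preceding sections, in order."""
    return [
        f"useradmin role add {role} -a login-snmp",
        f"useradmin group add {group} -r {role}",
        f"useradmin user add {user} -g {group}",
        "snmp community delete all",
        f"snmp community add ro {community}",
        f"snmp contact {contact}",
        f"snmp location {location}",
        "snmp init 1",
    ]


# Hypothetical values; substitute the entries recorded for your site.
for command in snmp_setup_commands(
    role="snmp_req_role",
    group="snmp_managers",
    user="snmp_user",
    community="example_ro",
    contact="admin@example.com",
    location="example-site",
):
    print(command)
```

The useradmin user add command still prompts interactively for a password, so this list is a checklist to paste from rather than an unattended script.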

Initialize NDMP on the Storage Controllers

This step provides details for initializing NDMP.

Controller A and Controller B

1.

Run the following command: ndmpd on.

Set 10GbE Flow Control and Add VLAN Interfaces

These steps provide details for adding VLAN interfaces on the storage controllers.

Controller A

1.

Run the following command: ifconfig e1a flowcontrol none.

2.

Run the following command: wrfile -a /etc/rc ifconfig e1a flowcontrol none.

3.

Run the following command: ifconfig e1b flowcontrol none.

4.

Run the following command: wrfile -a /etc/rc ifconfig e1b flowcontrol none.

5.

Run the following command: vlan create vif0 <NFS VLAN ID>.

6.

Run the following command: wrfile -a /etc/rc vlan create vif0 <NFS VLAN ID>.

7.

Run the following command: ifconfig vif0-<NFS VLAN ID> <Controller A NFS IP> netmask <NFS Netmask> mtusize 9000 partner vif0-<NFS VLAN ID>.

8.

Run the following command: wrfile -a /etc/rc ifconfig vif0-<NFS VLAN ID> <Controller A NFS IP> netmask <NFS Netmask> mtusize 9000 partner vif0-<NFS VLAN ID>.

9.

Run the following command: vlan add vif0 <iSCSI-A VLAN ID>.

10.

Run the following command: wrfile -a /etc/rc vlan add vif0 <iSCSI-A VLAN ID>.

11.

Run the following command: ifconfig vif0-<iSCSI-A VLAN ID> <Controller A iSCSI-A IP> netmask <iSCSI-A Netmask> mtusize 9000 partner vif0-<iSCSI-A VLAN ID>.

12.

Run the following command: wrfile -a /etc/rc ifconfig vif0-<iSCSI-A VLAN ID> <Controller A iSCSI-A IP> netmask <iSCSI-A Netmask> mtusize 9000 partner vif0-<iSCSI-A VLAN ID>.

13.

Run the following command: vlan add vif0 <iSCSI-B VLAN ID>.

14.

Run the following command: wrfile -a /etc/rc vlan add vif0 <iSCSI-B VLAN ID>.

15.

Run the following command: ifconfig vif0-<iSCSI-B VLAN ID> <Controller A iSCSI-B IP> netmask <iSCSI-B Netmask> mtusize 9000 partner vif0-<iSCSI-B VLAN ID>.

16.

Run the following command: wrfile -a /etc/rc ifconfig vif0-<iSCSI-B VLAN ID> <Controller A iSCSI-B IP> netmask <iSCSI-B Netmask> mtusize 9000 partner vif0-<iSCSI-B VLAN ID>.

17.

Run the following command to verify additions to the /etc/rc file: rdfile /etc/rc.

Controller B

1.

Run the following command: ifconfig e1a flowcontrol none.

2.

Run the following command: wrfile -a /etc/rc ifconfig e1a flowcontrol none.

3.

Run the following command: ifconfig e1b flowcontrol none.

4.

Run the following command: wrfile -a /etc/rc ifconfig e1b flowcontrol none.

5.

Run the following command: vlan create vif0 <NFS VLAN ID>.

6.

Run the following command: wrfile -a /etc/rc vlan create vif0 <NFS VLAN ID>.

7.

Run the following command: ifconfig vif0-<NFS VLAN ID> <Controller B NFS IP> netmask <NFS Netmask> mtusize 9000 partner vif0-<NFS VLAN ID>.

8.

Run the following command: wrfile -a /etc/rc ifconfig vif0-<NFS VLAN ID> <Controller B NFS IP> netmask <NFS Netmask> mtusize 9000 partner vif0-<NFS VLAN ID>.

9.

Run the following command: vlan add vif0 <iSCSI-A VLAN ID>.

10.

Run the following command: wrfile -a /etc/rc vlan add vif0 <iSCSI-A VLAN ID>.

11.

Run the following command: ifconfig vif0-<iSCSI-A VLAN ID> <Controller B iSCSI-A IP> netmask <iSCSI-A Netmask> mtusize 9000 partner vif0-<iSCSI-A VLAN ID>.

12.

Run the following command: wrfile -a /etc/rc ifconfig vif0-<iSCSI-A VLAN ID> <Controller B iSCSI-A IP> netmask <iSCSI-A Netmask> mtusize 9000 partner vif0-<iSCSI-A VLAN ID>.

13.

Run the following command: vlan add vif0 <iSCSI-B VLAN ID>.

14.

Run the following command: wrfile -a /etc/rc vlan add vif0 <iSCSI-B VLAN ID>.

15.

Run the following command: ifconfig vif0-<iSCSI-B VLAN ID> <Controller B iSCSI-B IP> netmask <iSCSI-B Netmask> mtusize 9000 partner vif0-<iSCSI-B VLAN ID>.

16.

Run the following command: wrfile -a /etc/rc ifconfig vif0-<iSCSI-B VLAN ID> <Controller B iSCSI-B IP> netmask <iSCSI-B Netmask> mtusize 9000 partner vif0-<iSCSI-B VLAN ID>.

17.

Run the following command to verify additions to the /etc/rc file: rdfile /etc/rc.
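Each controller runs the same 17 steps with different addresses, and every command is entered twice: once live and once appended to /etc/rc. The following Python sketch generates that sequence from a list of VLANs so that the two copies cannot drift apart. The vlan_interface_commands helper and the sample VLAN IDs and addresses are hypothetical; the command forms are taken from the steps above.

```python
def vlan_interface_commands(vlans, ifgrp="vif0", ports=("e1a", "e1b")):
    """Build the command list used in this section for one controller.

    vlans is a list of (vlan_id, ip_address, netmask) tuples. The first VLAN
    is created with "vlan create"; later ones use "vlan add". Every command
    is followed by a "wrfile -a /etc/rc" line so it persists across reboots.
    """
    commands = []

    def run_and_persist(command):
        commands.append(command)
        commands.append(f"wrfile -a /etc/rc {command}")

    for port in ports:
        run_and_persist(f"ifconfig {port} flowcontrol none")

    for index, (vlan_id, ip_address, netmask) in enumerate(vlans):
        verb = "create" if index == 0 else "add"
        run_and_persist(f"vlan {verb} {ifgrp} {vlan_id}")
        interface = f"{ifgrp}-{vlan_id}"
        run_and_persist(
            f"ifconfig {interface} {ip_address} netmask {netmask} "
            f"mtusize 9000 partner {interface}"
        )

    commands.append("rdfile /etc/rc")
    return commands


# Hypothetical VLAN IDs and addresses, in NFS, iSCSI-A, iSCSI-B order.
example_vlans = [
    ("3170", "192.168.170.11", "255.255.255.0"),
    ("3171", "192.168.171.11", "255.255.255.0"),
    ("3172", "192.168.172.11", "255.255.255.0"),
]
for line in vlan_interface_commands(example_vlans):
    print(line)
```

With three VLANs and two ports, the list contains 17 commands, matching the numbered steps for each controller. Run it once with controller A addresses and once with controller B addresses.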

Add Infrastructure Volumes

The following steps describe adding volumes on the storage controller for SAN boot of the Cisco UCS hosts as well as virtual machine provisioning.

Note

In this reference architecture, controller A houses the boot LUNs for the VMware hypervisor in addition to the swap files, while controller B houses the first datastore for virtual machines.

Controller A

1.

Run the following command: vol create esxi_boot -s none aggr1 100g.

2.

Run the following command: sis on /vol/esxi_boot.

3.

Run the following command: vol create infra_swap -s none aggr1 100g.

4.

Run the following command: snap sched infra_swap 0 0 0.

5.

Run the following command: snap reserve infra_swap 0.

Controller B

1.

Run the following command: vol create infra_datastore_1 -s none aggr1 500g.

2.

Run the following command: sis on /vol/infra_datastore_1.

Export NFS Infrastructure Volumes to ESXi Servers

These steps provide details for setting up NFS exports of the infrastructure volumes to the VMware ESXi servers.

Controller A

1.

Run the following command: exportfs -p rw=<ESXi Host 1 NFS IP>:<ESXi Host 2 NFS IP>,root=<ESXi Host 1 NFS IP>:<ESXi Host 2 NFS IP> /vol/infra_swap.

2.

Run the following command: exportfs. Verify that the NFS exports are set up correctly.

Controller B

1.

Run the following command: exportfs -p rw=<ESXi Host 1 NFS IP>:<ESXi Host 2 NFS IP>,root=<ESXi Host 1 NFS IP>:<ESXi Host 2 NFS IP> /vol/infra_datastore_1.

2.

Run the following command: exportfs. Verify that the NFS exports are set up correctly.
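The export command repeats the same colon-separated host list for both the rw= and root= options, which makes it easy to mistype. The following Python sketch builds the command string from a list of addresses; the exportfs_command helper and the sample ESXi addresses are hypothetical.

```python
def exportfs_command(volume, host_ips):
    """Build the persistent export command shown in this section.

    host_ips are joined with ":" and granted both read-write (rw=) and
    root (root=) access, as in the steps above.
    """
    if not host_ips:
        raise ValueError("at least one ESXi NFS IP address is required")
    hosts = ":".join(host_ips)
    return f"exportfs -p rw={hosts},root={hosts} /vol/{volume}"


# Hypothetical ESXi NFS addresses.
esxi_nfs_ips = ["192.168.170.21", "192.168.170.22"]
print(exportfs_command("infra_swap", esxi_nfs_ips))         # controller A
print(exportfs_command("infra_datastore_1", esxi_nfs_ips))  # controller B
```

Adding a third ESXi host later then means extending one list, and both options stay in step.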

Cisco Nexus 5548 Deployment Procedure

The following section provides a detailed procedure for configuring the Cisco Nexus 5548 switches for use in a FlexPod environment. Follow these steps precisely because failure to do so could result in an improper configuration.

Note

The configuration steps detailed in this section provide guidance for configuring the Nexus 5548UP running release 5.1(3)N2(1). This configuration also uses the native VLAN on the trunk ports to discard untagged packets: the native VLAN is set on the PortChannel but is not included in the allowed VLANs on the PortChannel.

Set up Initial Cisco Nexus 5548 Switch

These steps provide details for the initial Cisco Nexus 5548 Switch setup.

Cisco Nexus 5548 A

On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start.

1.

Enter yes to enforce secure password standards.

2.

Enter the password for the admin user.

3.

Enter the password a second time to commit the password.

4.

Enter yes to enter the basic configuration dialog.

5.

Create another login account (yes/no) [n]: Enter.

6.

Configure read-only SNMP community string (yes/no) [n]: Enter.

7.

Configure read-write SNMP community string (yes/no) [n]: Enter.

8.

Enter the switch name: <Nexus A Switch name> Enter.

9.

Continue with out-of-band (mgmt0) management configuration? (yes/no) [y]: Enter.

10.

Mgmt0 IPv4 address: <Nexus A mgmt0 IP> Enter.

11.

Mgmt0 IPv4 netmask: <Nexus A mgmt0 netmask> Enter.

12.

Configure the default gateway? (yes/no) [y]: Enter.

13.

IPv4 address of the default gateway: <Nexus A mgmt0 gateway> Enter.

14.

Enable the telnet service? (yes/no) [n]: Enter.

15.

Enable the ssh service? (yes/no) [y]: Enter.

16.

Type of ssh key you would like to generate (dsa/rsa): rsa Enter.

17.

Number of key bits <768-2048>: 1024 Enter.

18.

Configure the ntp server? (yes/no) [y]: Enter.

19.

NTP server IPv4 address: <NTP Server IP> Enter.

20.

Enter basic FC configurations (yes/no) [n]: Enter.

21.

Would you like to edit the configuration? (yes/no) [n]: Enter.

22.

Be sure to review the configuration summary before enabling it.

23.

Use this configuration and save it? (yes/no) [y]: Enter.

24.

Configuration may be continued from the console or by using SSH. To use SSH, connect to the mgmt0 address of Nexus A. It is recommended to continue setup via the console or serial port.

25.

Log in as user admin with the password previously entered.

Cisco Nexus 5548 B

On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start.

1.

Enter yes to enforce secure password standards.

2.

Enter the password for the admin user.

3.

Enter the password a second time to commit the password.

4.

Enter yes to enter the basic configuration dialog.

5.

Create another login account (yes/no) [n]: Enter.

6.

Configure read-only SNMP community string (yes/no) [n]: Enter.

7.

Configure read-write SNMP community string (yes/no) [n]: Enter.

8.

Enter the switch name: <Nexus B Switch name> Enter.

9.

Continue with out-of-band (mgmt0) management configuration? (yes/no) [y]: Enter.

10.

Mgmt0 IPv4 address: <Nexus B mgmt0 IP> Enter.

11.

Mgmt0 IPv4 netmask: <Nexus B mgmt0 netmask> Enter.

12.

Configure the default gateway? (yes/no) [y]: Enter.

13.

IPv4 address of the default gateway: <Nexus B mgmt0 gateway> Enter.

14.

Enable the telnet service? (yes/no) [n]: Enter.

15.

Enable the ssh service? (yes/no) [y]: Enter.

16.

Type of ssh key you would like to generate (dsa/rsa): rsa Enter.

17.

Number of key bits <768-2048>: 1024 Enter.

18.

Configure the ntp server? (yes/no) [y]: Enter.

19.

NTP server IPv4 address: <NTP Server IP> Enter.

20.

Enter basic FC configurations (yes/no) [n]: Enter.

21.

Would you like to edit the configuration? (yes/no) [n]: Enter.

22.

Be sure to review the configuration summary before enabling it.

23.

Use this configuration and save it? (yes/no) [y]: Enter.

24.

Configuration may be continued from the console or by using SSH. To use SSH, connect to the mgmt0 address of Nexus B. It is recommended to continue setup via the console or serial port.

25.

Log in as user admin with the password previously entered.
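After logging in, it can be useful to confirm that the setup dialog produced the intended settings. The following is a minimal sketch of such a check, not part of the original procedure; it assumes the standard NX-OS show commands are available on this release, and the gateway placeholder is the one entered during setup (shown here for Nexus A).

```text
! Confirm the switch name and the mgmt0 address and netmask
show hostname
show interface mgmt0

! Confirm that SSH is enabled and Telnet is disabled
show ssh server
show telnet server

! Confirm that the NTP server was accepted
show ntp peers

! Confirm reachability of the management gateway over mgmt0
ping <Nexus A mgmt0 gateway> vrf management
```

Run the same checks on Nexus B with its own gateway value.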

Enable Appropriate Cisco Nexus Features

These steps provide details for enabling the appropriate Cisco Nexus features.

Nexus A and Nexus B

1.

Type config t to enter the global configuration mode.

2.

Type feature lacp.

3.

Type feature vpc.
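Taken together, the steps above amount to the following command sequence on each switch. The final show command is an optional check that is not part of the original steps; it lists all features and their state, so look for lacp and vpc in the output.

```text
config t
feature lacp
feature vpc

! Optional check: lacp and vpc should be listed as enabled
show feature
```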

Set Global Configurations

These steps provide details for setting global configurations.

Nexus A and Nexus B

1.

From the global configuration mode, type spanning-tree port type network default so that, by default, ports are treated as network ports by spanning tree.

2.

Type spanning-tree port type edge bpduguard default to enable BPDU Guard on all edge ports by default.

3.

Type spanning-tree port type edge bpdufilter default to enable BPDU Filter on all edge ports by default.

4.

Type policy-map type network-qos jumbo.

5.

Type class type network-qos class-default.

6.

Type mtu 9000.

7.

Type exit.

8.

Type exit.

9.

Type system qos.

10.

Type service-policy type network-qos jumbo.

11.

Type exit.

12.

Type copy run start.
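For reference, the steps above can be read as one configuration sequence, entered from the global configuration mode on both switches. The indentation is only a visual aid showing which commands are entered inside the policy-map and class sub-modes; the commands themselves are those listed in the steps.

```text
! Spanning-tree defaults
spanning-tree port type network default
spanning-tree port type edge bpduguard default
spanning-tree port type edge bpdufilter default

! Jumbo-frame network-qos policy
policy-map type network-qos jumbo
  class type network-qos class-default
    mtu 9000
    exit
  exit

! Apply the policy system-wide
system qos
  service-policy type network-qos jumbo
  exit

! Save the running configuration
copy run start
```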

Create Necessary VLANs

These steps provide details for creating the necessary VLANs.

Nexus A and Nexus B

1.

Type vlan <MGMT VLAN ID>.

2.

Type name MGMT-VLAN.

3.

Type exit.

4.

Type vlan <Native VLAN ID>.

5.

Type name Native-VLAN.

6.

Type exit.

7.

Type vlan <NFS VLAN ID>.

8.

Type name NFS-VLAN.

9.

Type exit.

10.

Type vlan <iSCSI-A VLAN ID>.

11.

Type name iSCSI-A-VLAN.

12.

Type exit.

13.

Type vlan <iSCSI-B VLAN ID>.

14.

Type name iSCSI-B-VLAN.

15.

Type exit.

16.

Type vlan <vMotion VLAN ID>.

17.

Type name vMotion-VLAN.

18.

Type exit.

19.

Type vlan <VM-Traffic VLAN ID>.

20.

Type name VM-Traffic-VLAN.

21.

Type exit.
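The VLAN steps above follow a single pattern (create the VLAN, name it, exit) repeated for each VLAN. As a sketch, the complete sequence on each switch looks like this, with the placeholders replaced by the VLAN IDs chosen for the environment. The closing show command is an optional check that is not part of the original steps.

```text
vlan <MGMT VLAN ID>
  name MGMT-VLAN
  exit
vlan <Native VLAN ID>
  name Native-VLAN
  exit
vlan <NFS VLAN ID>
  name NFS-VLAN
  exit
vlan <iSCSI-A VLAN ID>
  name iSCSI-A-VLAN
  exit
vlan <iSCSI-B VLAN ID>
  name iSCSI-B-VLAN
  exit
vlan <vMotion VLAN ID>
  name vMotion-VLAN
  exit
vlan <VM-Traffic VLAN ID>
  name VM-Traffic-VLAN
  exit

! Optional check: all seven VLANs should appear with their names
show vlan brief
```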

Add Individual Port Descriptions for Troubleshooting

These steps provide details for adding individual port descriptions for troubleshooting activity and verification.

Cisco Nexus 5548 A

1.

From the global configuration mode, type interface Eth1/1.

2.

Type description <Controller A:e1a>.

3.

Type exit.

4.

Type interface Eth1/2.

5.

Type description <Controller B:e1a>.

6.

Type exit.

7.

Type interface Eth1/5.

8.

Type description <Nexus B:Eth1/5>.

9.

Type exit.

10.

Type interface Eth1/6.

11.

Type description <Nexus B:Eth1/6>.

12.

Type exit.

13.

Type interface Eth1/3.

14.

Type description <UCSM A:Eth1/19>.

15.

Type exit.

16.

Type interface Eth1/4.

17.

Type description <UCSM B:Eth1/19>.

18.

Type exit.

Cisco Nexus 5548 B

1.

From the global configuration mode, type interface Eth1/1.

2.

Type description <Controller A:e1b>.

3.

Type exit.

4.

Type interface Eth1/2.

5.

Type description <Controller B:e1b>.

6.

Type exit.

7.

Type interface Eth1/5.

8.

Type description <Nexus A:Eth1/5>.

9.

Type exit.

10.

Type interface Eth1/6.

11.

Type description <Nexus A:Eth1/6>.

12.

Type exit.

13.

Type interface Eth1/3.

14.

Type description <UCSM A:Eth1/20>.

15.

Type exit.

16.

Type interface Eth1/4.

17.

Type description <UCSM B:Eth1/20>.

18.

Type exit.
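The two switches use the same port layout and differ only in the far-end port named in each description. The sketch below restates the steps above side by side to make that pattern easier to verify; the description strings are the placeholders from the steps, and the order of interfaces is rearranged numerically for readability.

```text
! Cisco Nexus 5548 A
interface Eth1/1
  description <Controller A:e1a>
interface Eth1/2
  description <Controller B:e1a>
interface Eth1/3
  description <UCSM A:Eth1/19>
interface Eth1/4
  description <UCSM B:Eth1/19>
interface Eth1/5
  description <Nexus B:Eth1/5>
interface Eth1/6
  description <Nexus B:Eth1/6>

! Cisco Nexus 5548 B
interface Eth1/1
  description <Controller A:e1b>
interface Eth1/2
  description <Controller B:e1b>
interface Eth1/3
  description <UCSM A:Eth1/20>
interface Eth1/4
  description <UCSM B:Eth1/20>
interface Eth1/5
  description <Nexus A:Eth1/5>
interface Eth1/6
  description <Nexus A:Eth1/6>
```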

Create Necessary PortChannels

These steps provide details for creating the necessary PortChannels between devices.

Cisco Nexus 5548 A

1.

From the global configuration mode, type interface Po10.

2.

Type description vPC peer-link.

3.

Type exit.

4.

Type interface Eth1/5-6.

5.

Type channel-group 10 mode active.

6.

Type no shutdown.

7.

Type exit.

8.

Type interface Po11.

9.

Type description <Controller A>.

10.

Type exit.

11.

Type interface Eth1/1.

12.

Type channel-group 11 mode active.

13.

Type no shutdown.

14.

Type exit.

15.

Type interface Po12.

16.

Type description <Controller B>.

17.

Type exit.

18.

Type interface Eth1/2.

19.

Type channel-group 12 mode active.

20.

Type no shutdown.

21.

Type exit.

22.

Type interface Po13.

23.

Type description <UCSM A>.

24.

Type exit.

25.

Type interface Eth1/3.

26.

Type channel-group 13 mode active.

27.

Type no shutdown.

28.

Type exit.

29.

Type interface Po14.

30.

Type description <UCSM B>.

31.

Type exit.

32.

Type interface Eth1/4.

33.

Type channel-group 14 mode active.

34.

Type no shutdown.

35.

Type exit.

36.

Type copy run start.

Cisco Nexus 5548 B

1.

From the global configuration mode, type interface Po10.

2.

Type description vPC peer-link.

3.

Type exit.

4.

Type interface Eth1/5-6.

5.

Type channel-group 10 mode active.

6.

Type no shutdown.

7.

Type exit.

8.

Type interface Po11.

9.

Type description <Controller A>.

10.

Type exit.

11.

Type interface Eth1/1.

12.

Type channel-group 11 mode active.

13.

Type no shutdown.

14.

Type exit.

15.

Type interface Po12.

16.

Type description <Controller B>.

17.

Type exit.

18.

Type interface Eth1/2.

19.

Type channel-group 12 mode active.

20.

Type no shutdown.

21.

Type exit.

22.

Type interface Po13.

23.

Type description <UCSM A>.

24.

Type exit.

25.

Type interface Eth1/3.

26.

Type channel-group 13 mode active.

27.

Type no shutdown.

28.

Type exit.

29.

Type interface Po14.

30.

Type description <UCSM B>.

31.

Type exit.

32.

Type interface Eth1/4.

33.

Type channel-group 14 mode active.

34.

Type no shutdown.

35.

Type exit.

36.

Type copy run start.
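The PortChannel steps are identical on Nexus A and Nexus B. As a sketch, they can be summarized as the following sequence, in which each PortChannel is described first and its member ports are then added in LACP active mode. The final show command is an optional check that is not part of the original steps; until the peer devices are configured, member ports may not yet show as bundled.

```text
! Po10: vPC peer-link between the two Nexus switches
interface Po10
  description vPC peer-link
  exit
interface Eth1/5-6
  channel-group 10 mode active
  no shutdown
  exit

! Po11 and Po12: storage controllers
interface Po11
  description <Controller A>
  exit
interface Eth1/1
  channel-group 11 mode active
  no shutdown
  exit
interface Po12
  description <Controller B>
  exit
interface Eth1/2
  channel-group 12 mode active
  no shutdown
  exit

! Po13 and Po14: Cisco UCS fabric interconnects
interface Po13
  description <UCSM A>
  exit
interface Eth1/3
  channel-group 13 mode active
  no shutdown
  exit
interface Po14
  description <UCSM B>
  exit
interface Eth1/4
  channel-group 14 mode active
  no shutdown
  exit

copy run start

! Optional check: list PortChannels and their member ports
show port-channel summary
```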

Add PortChannel Configurations

These steps provide details for adding PortChannel configurations.

Cisco Nexus 5548 A

1.

From the global configuration mode, type interface Po10.

2.

Type switchport mode trunk.

3.

Type switchport trunk native vlan <Native VLAN ID>.

4.

Type switchport trunk allowed vlan <MGMT VLAN ID, NFS VLAN ID, iSCSI-A VLAN ID, iSCSI-B VLAN ID, vMotion VLAN ID, VM-Traffic VLAN ID>.

5.

Type spanning-tree port type network.

6.

Type no shutdown.

7.

Type exit.

8.

Type interface Po11.

9.

Type switchport mode trunk.

10.

Type switchport trunk native vlan <Native VLAN ID>.

11.

Type switchport trunk allowed vlan <NFS VLAN ID, iSCSI-A VLAN ID, iSCSI-B VLAN ID>.

12.

Type spanning-tree port type edge trunk.

13.

Type no shutdown.

14.

Type exit.

15.

Type interface Po12.

16.

Type switchport mode trunk.

17.

Type switchport trunk native vlan <Native VLAN ID>.

18.

Type switchport trunk allowed vlan <NFS VLAN ID, iSCSI-A VLAN ID, iSCSI-B VLAN ID>.

19.

Type spanning-tree port type edge trunk.

20.

Type no shutdown.

21.

Type exit.

22.

Type interface Po13.

23.

Type switchport mode trunk.

24.

Type switchport trunk native vlan <Native VLAN ID>.

25.

Type switchport trunk allowed vlan <MGMT VLAN ID, NFS VLAN ID, iSCSI-A VLAN ID, vMotion VLAN ID, VM-Traffic VLAN ID>.

26.

Type spanning-tree port type edge trunk.

27.

Type no shutdown.

28.

Type exit.

29.

Type interface Po14.

30.

Type switchport mode trunk.

31.

Type switchport trunk native vlan <Native VLAN ID>.

32.

Type switchport trunk allowed vlan <MGMT VLAN ID, NFS VLAN ID, iSCSI-B VLAN ID, vMotion VLAN ID, VM-Traffic VLAN ID>.

33.

Type spanning-tree port type edge trunk.

34.

Type no shutdown.

35.

Type exit.

36.

Type copy run start.
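The Nexus A steps above can be summarized as the following sketch. Two details are worth noting: the native VLAN is set on every PortChannel but never appears in an allowed-VLAN list, which is how untagged frames are discarded, and Po13 carries only the iSCSI-A VLAN while Po14 carries only the iSCSI-B VLAN. The allowed-VLAN list is written here as comma-separated IDs without spaces, which is the form the command expects once the placeholders are replaced with numeric IDs.

```text
! Po10: vPC peer-link, carries every VLAN except the native VLAN
interface Po10
  switchport mode trunk
  switchport trunk native vlan <Native VLAN ID>
  switchport trunk allowed vlan <MGMT VLAN ID>,<NFS VLAN ID>,<iSCSI-A VLAN ID>,<iSCSI-B VLAN ID>,<vMotion VLAN ID>,<VM-Traffic VLAN ID>
  spanning-tree port type network
  no shutdown
  exit

! Po11 and Po12: storage controllers, storage VLANs only
interface Po11
  switchport mode trunk
  switchport trunk native vlan <Native VLAN ID>
  switchport trunk allowed vlan <NFS VLAN ID>,<iSCSI-A VLAN ID>,<iSCSI-B VLAN ID>
  spanning-tree port type edge trunk
  no shutdown
  exit
interface Po12
  switchport mode trunk
  switchport trunk native vlan <Native VLAN ID>
  switchport trunk allowed vlan <NFS VLAN ID>,<iSCSI-A VLAN ID>,<iSCSI-B VLAN ID>
  spanning-tree port type edge trunk
  no shutdown
  exit

! Po13: UCS fabric A, iSCSI-A only
interface Po13
  switchport mode trunk
  switchport trunk native vlan <Native VLAN ID>
  switchport trunk allowed vlan <MGMT VLAN ID>,<NFS VLAN ID>,<iSCSI-A VLAN ID>,<vMotion VLAN ID>,<VM-Traffic VLAN ID>
  spanning-tree port type edge trunk
  no shutdown
  exit

! Po14: UCS fabric B, iSCSI-B only
interface Po14
  switchport mode trunk
  switchport trunk native vlan <Native VLAN ID>
  switchport trunk allowed vlan <MGMT VLAN ID>,<NFS VLAN ID>,<iSCSI-B VLAN ID>,<vMotion VLAN ID>,<VM-Traffic VLAN ID>
  spanning-tree port type edge trunk
  no shutdown
  exit

copy run start
```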

Cisco Nexus 5548 B

1.

From the global configuration mode, type interface Po10.

2.

Type switchport mode trunk.

3.

Type switchport trunk native vlan <Native VLAN ID>.

4.

Type switchport trunk allowed vlan <MGMT VLAN ID, NFS VLAN ID, iSCSI-A VLAN ID, iSCSI-B VLAN ID, vMotion VLAN ID, VM-Traffic VLAN ID>.

5.

Type spanning-tree port type network.

6.

Type no shutdown.

7.

Type exit.

8.

Type interface Po11.

9.

Type switchport mode trunk.

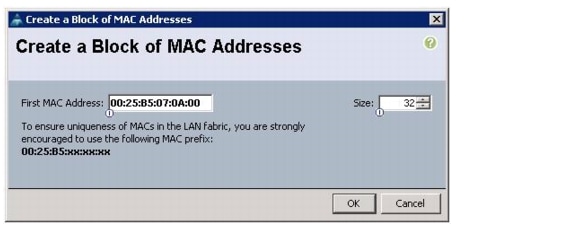

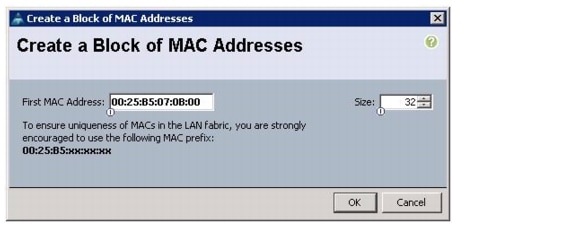

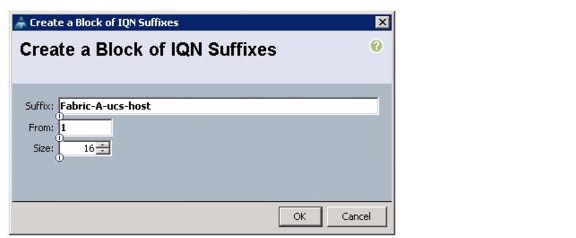

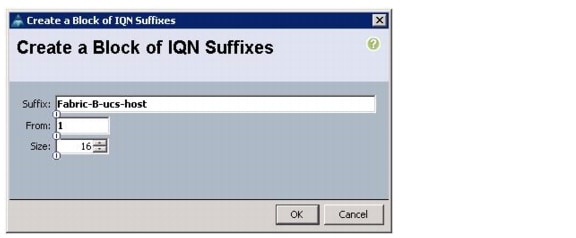

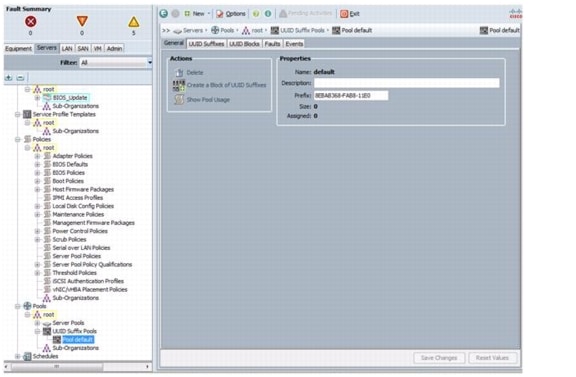

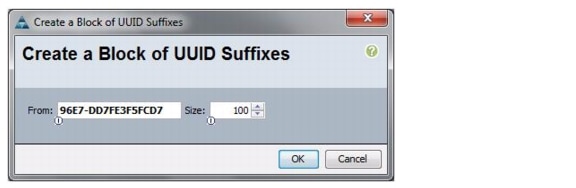

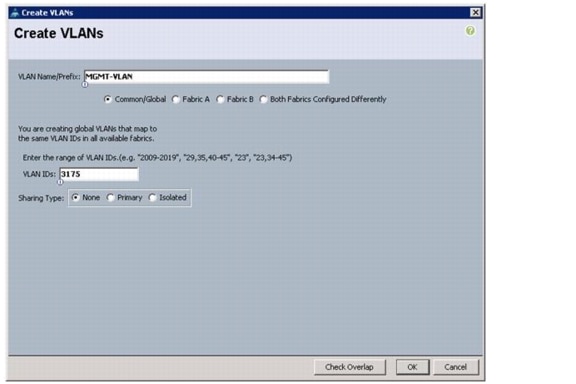

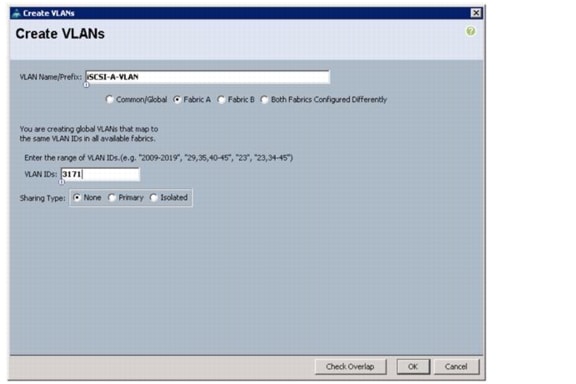

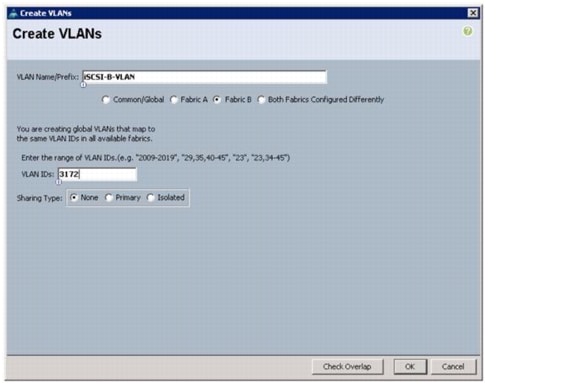

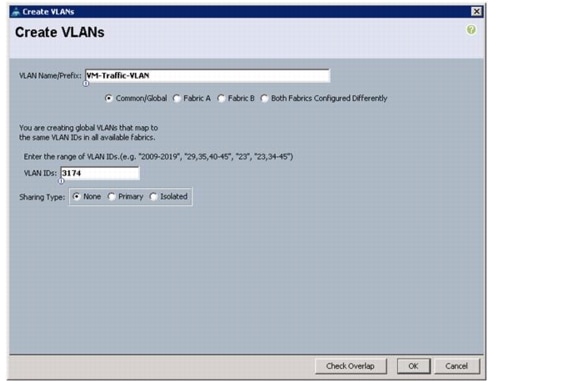

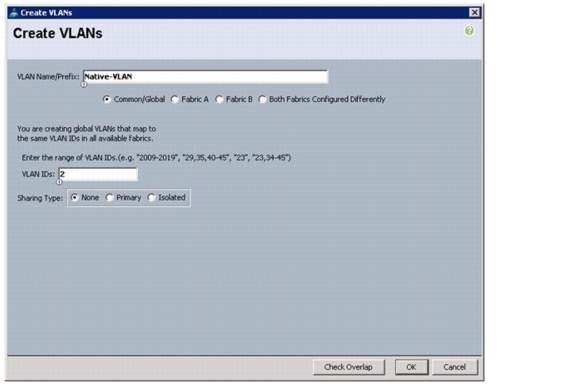

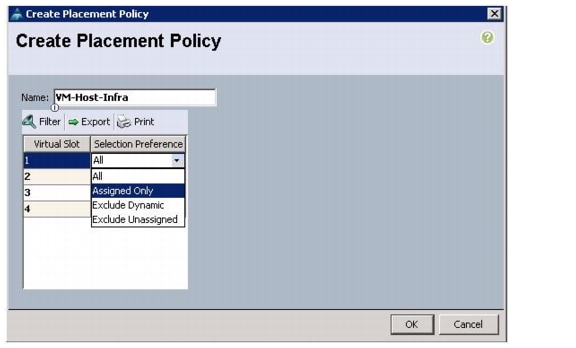

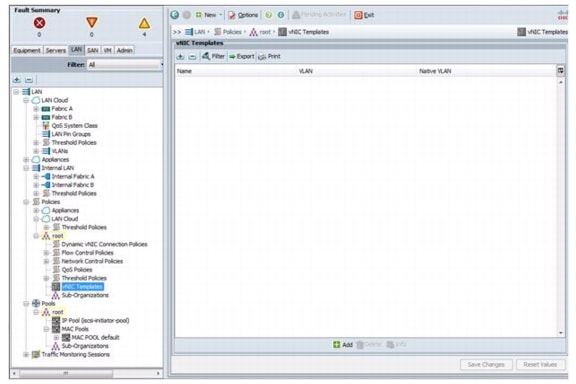

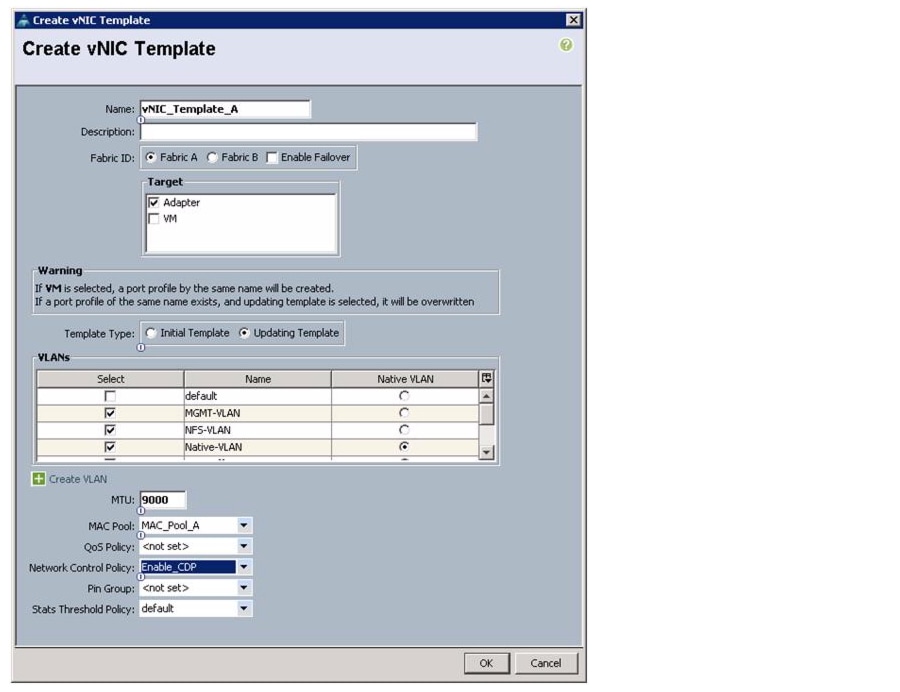

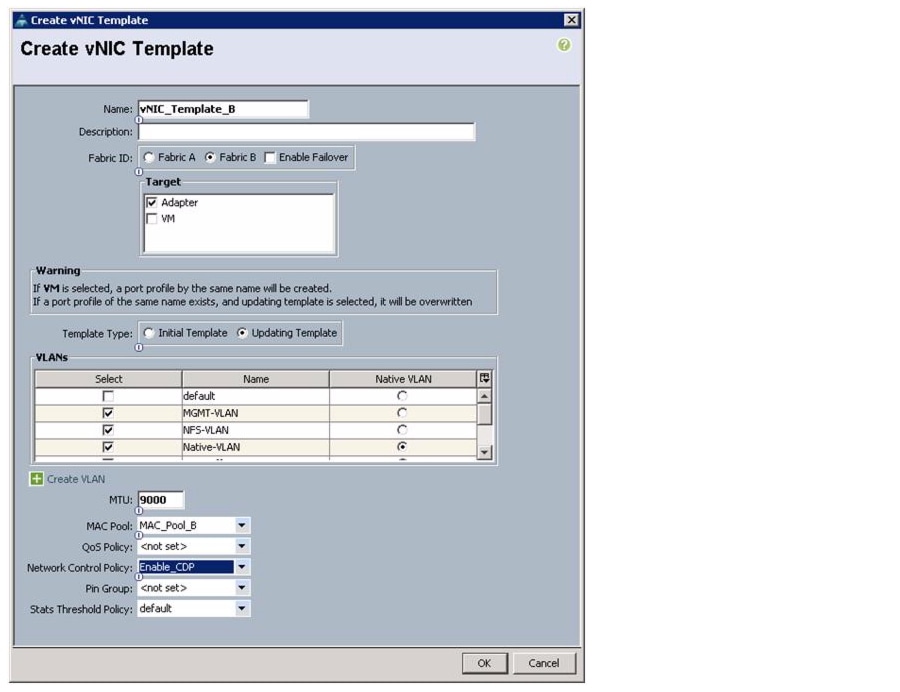

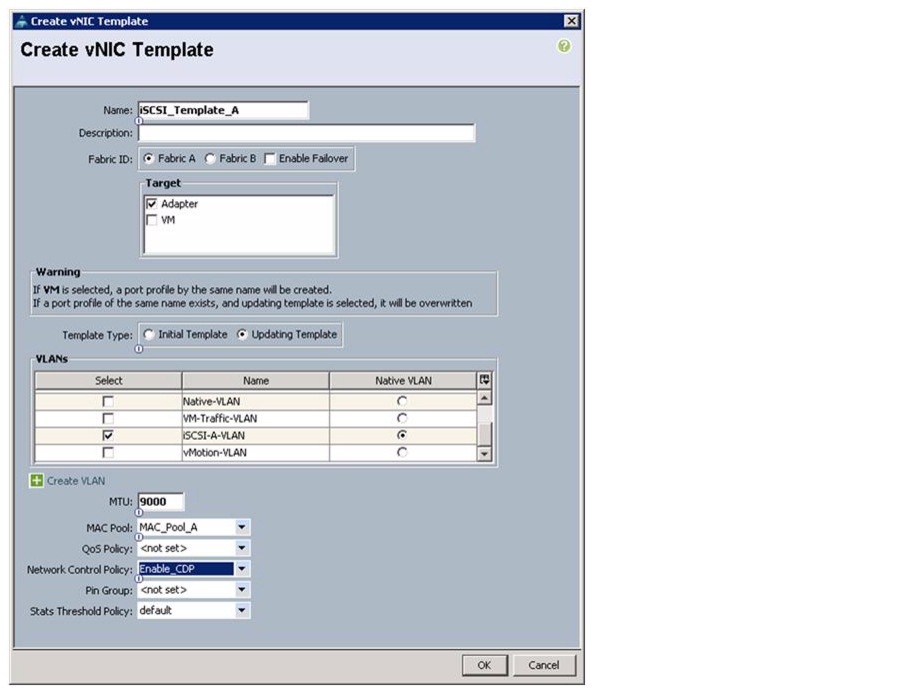

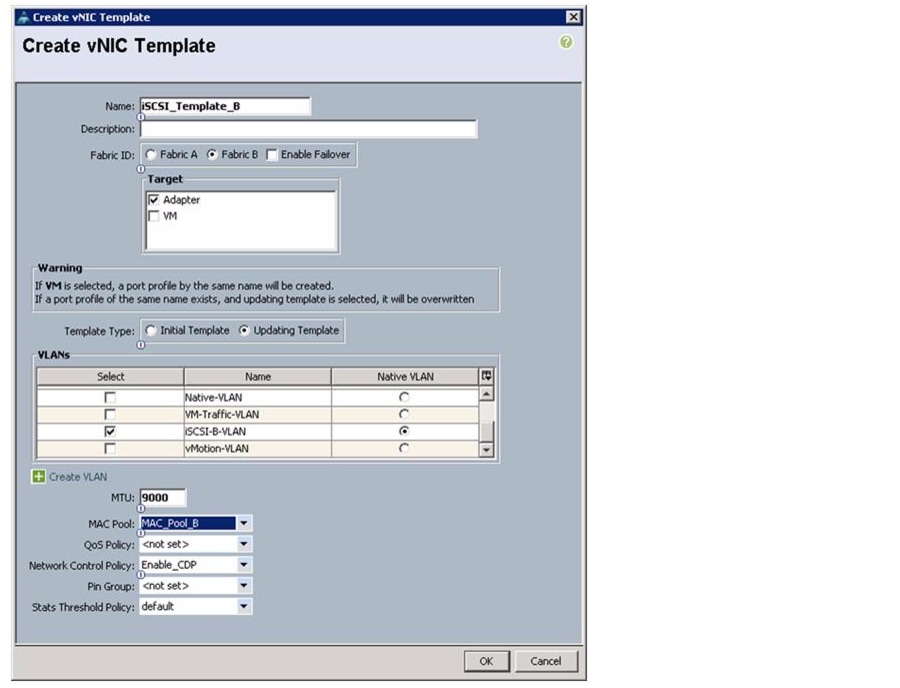

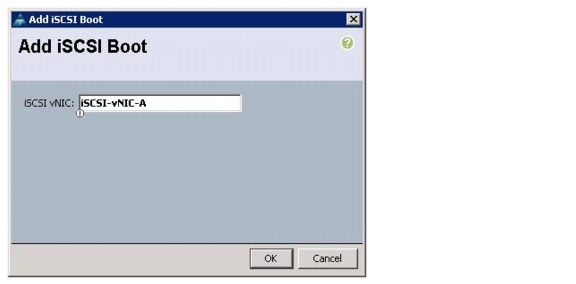

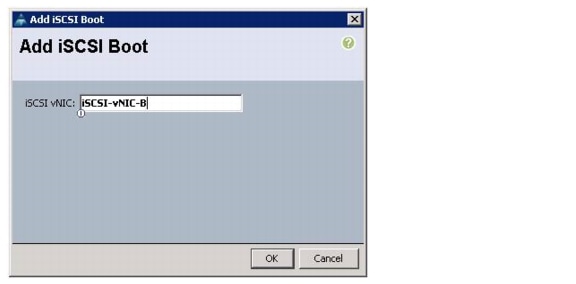

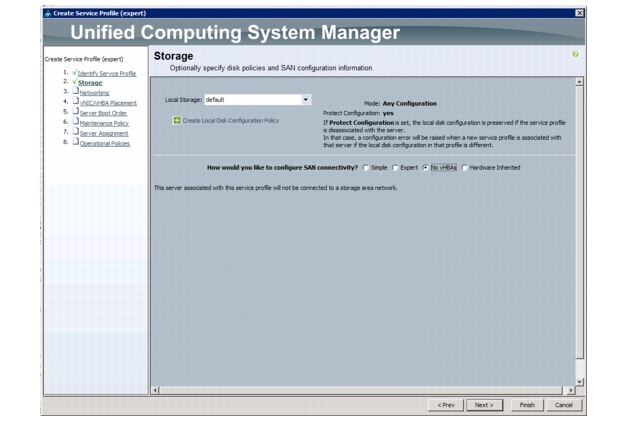

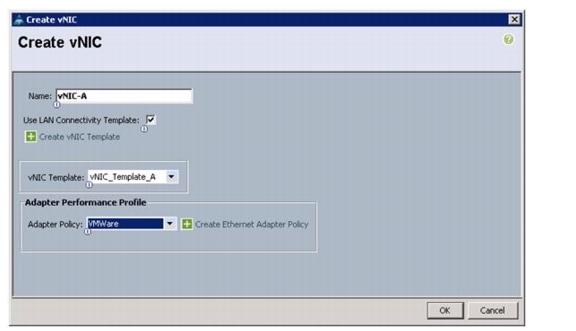

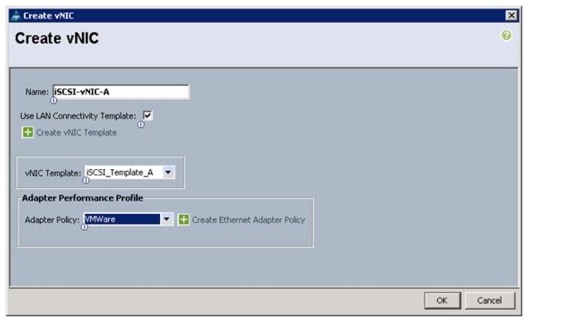

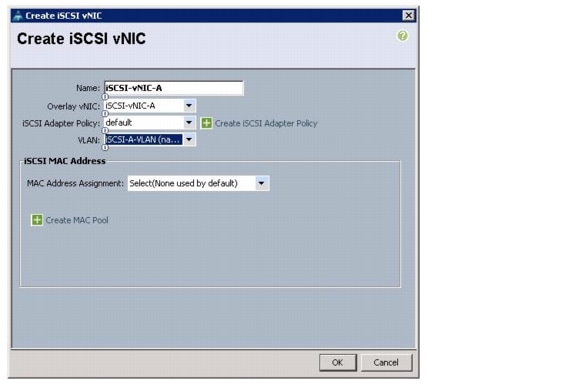

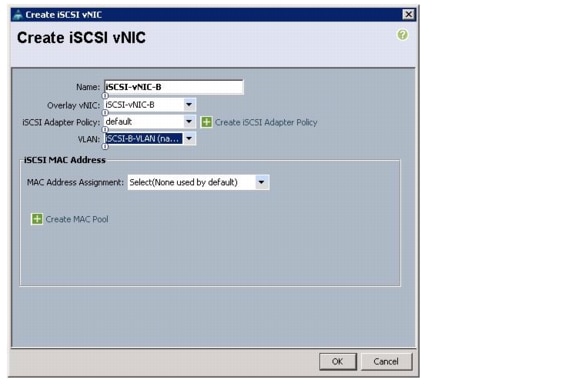

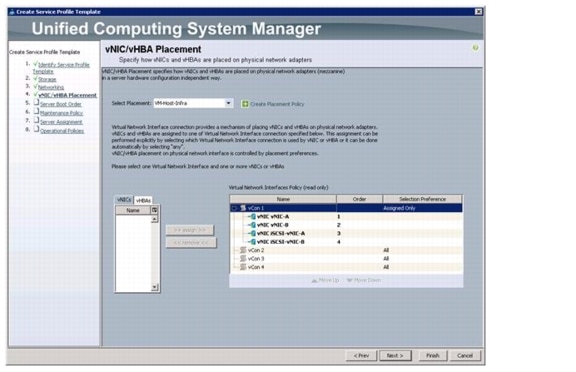

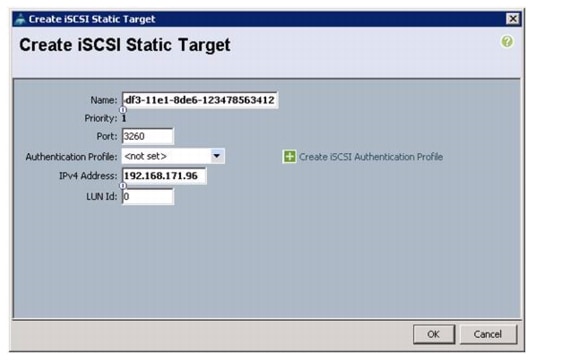

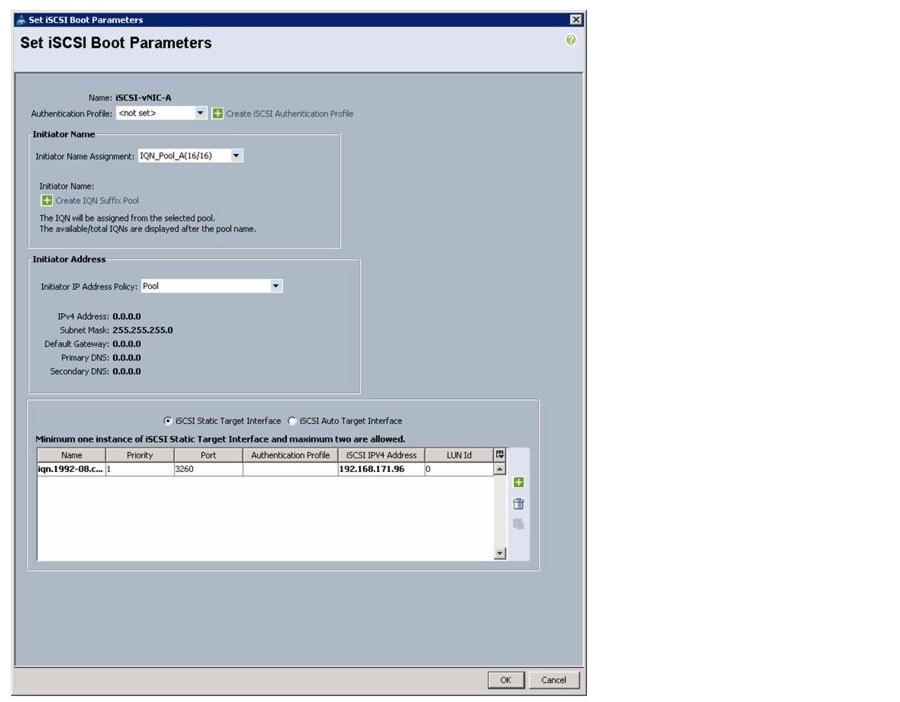

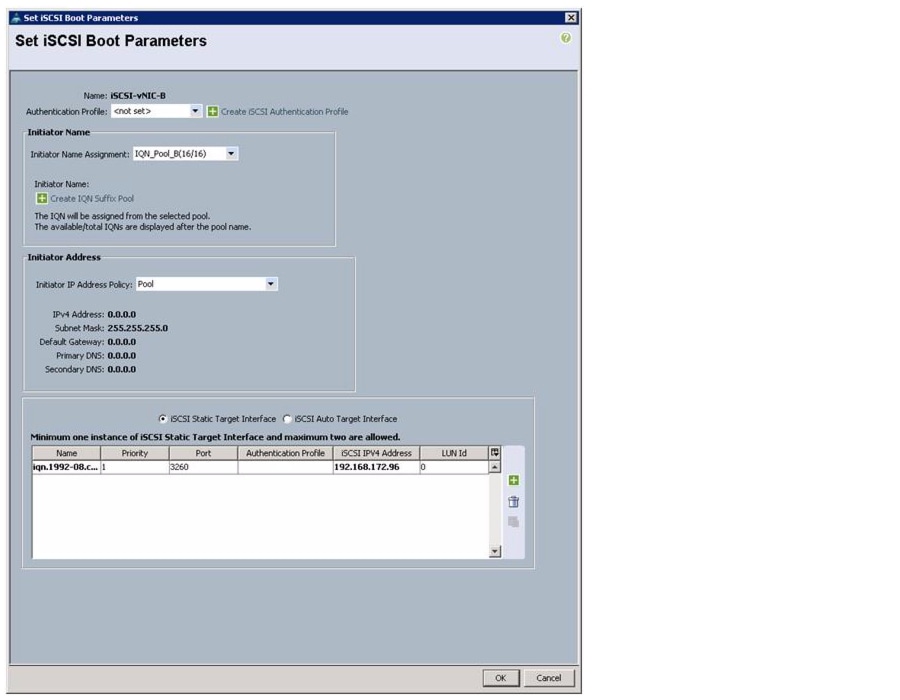

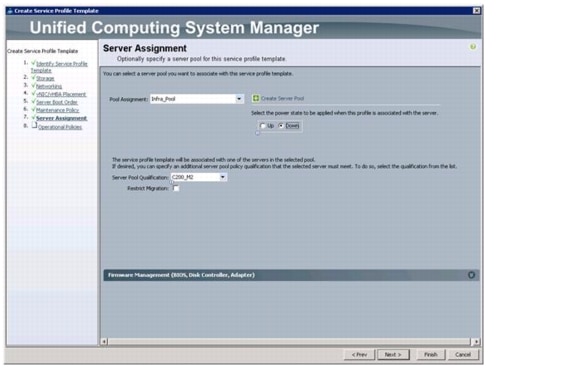

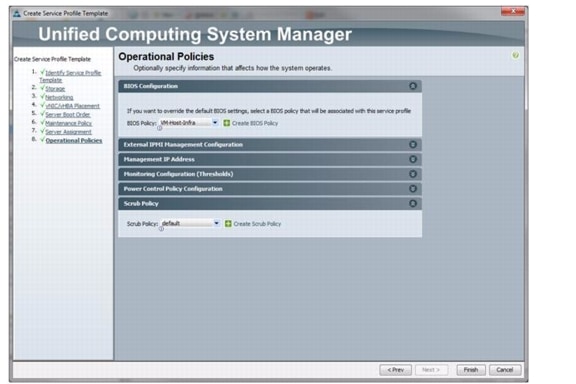

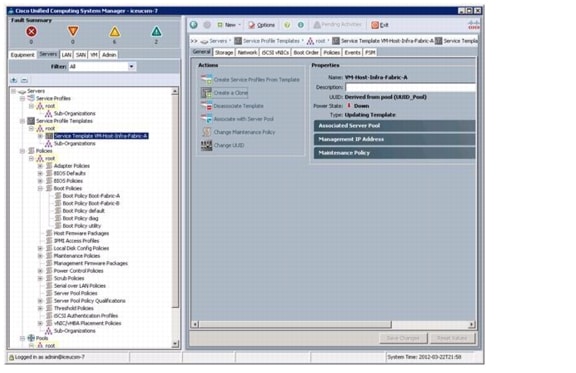

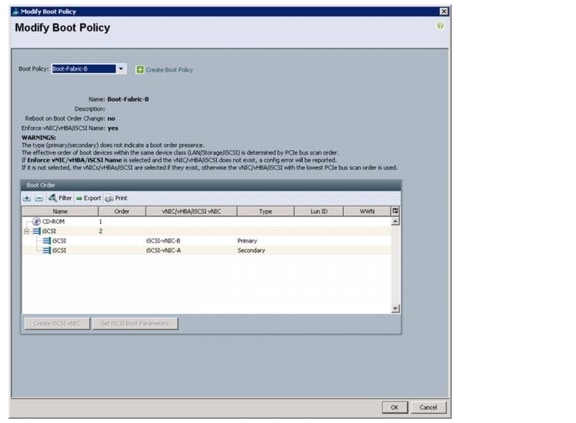

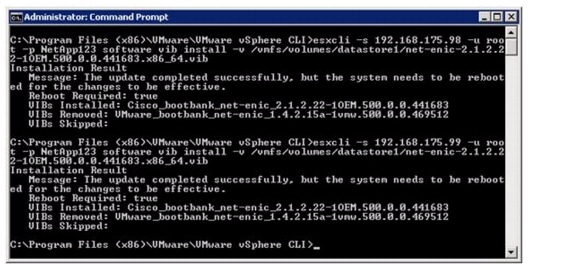

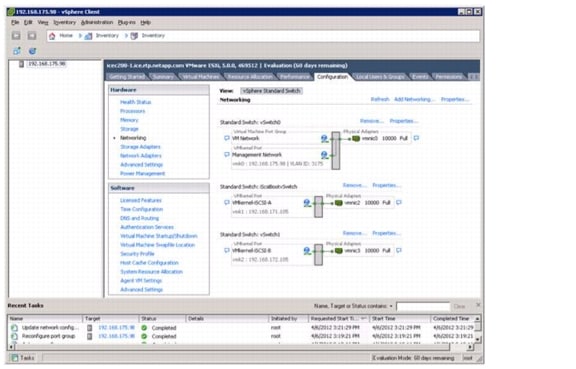

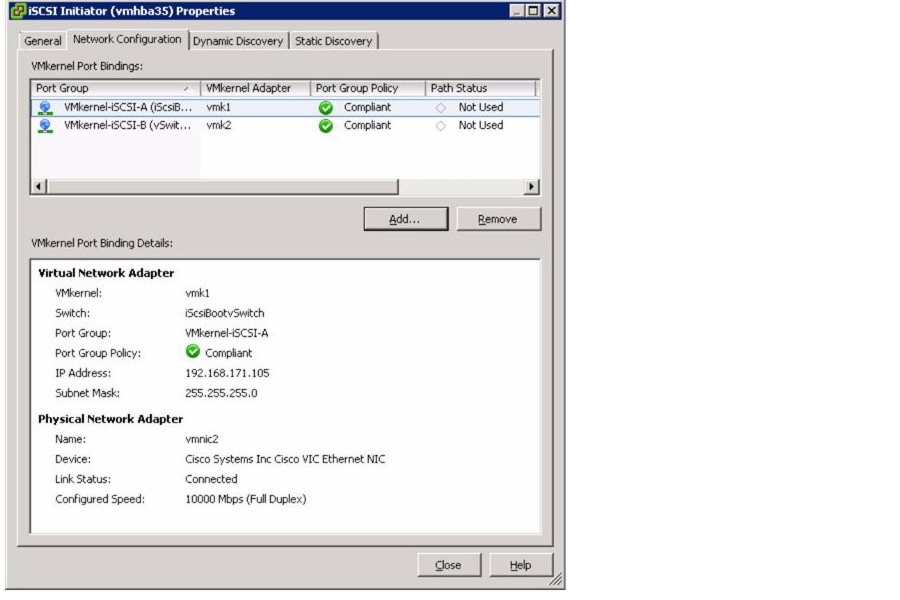

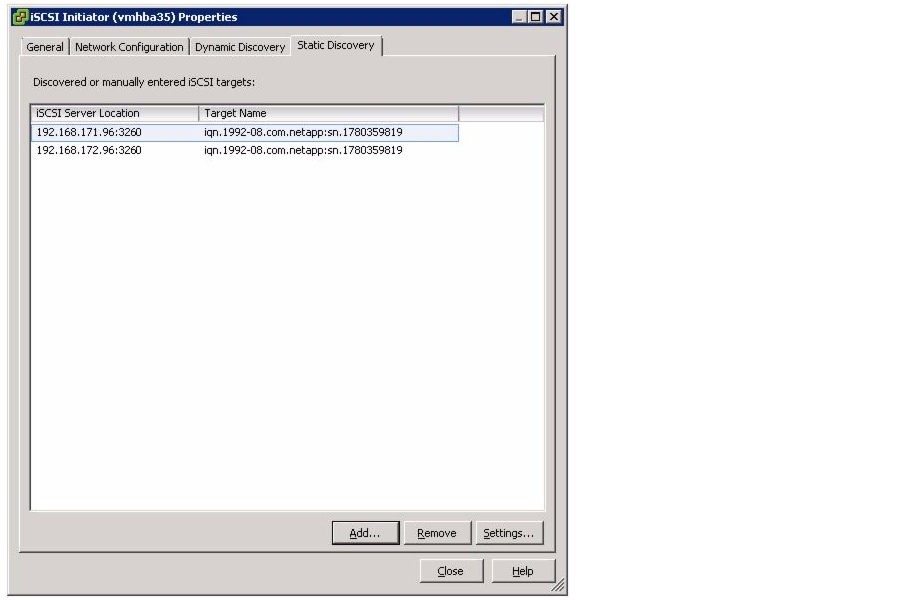

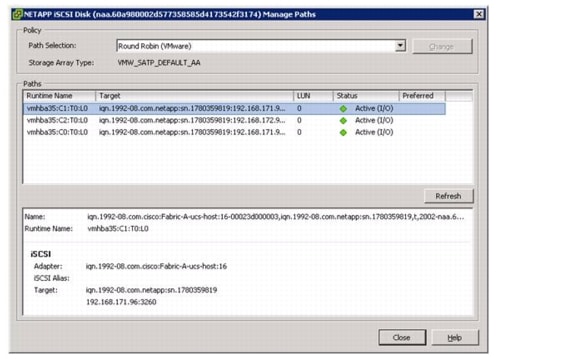

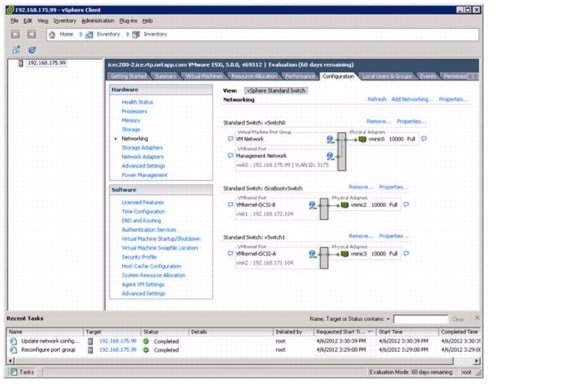

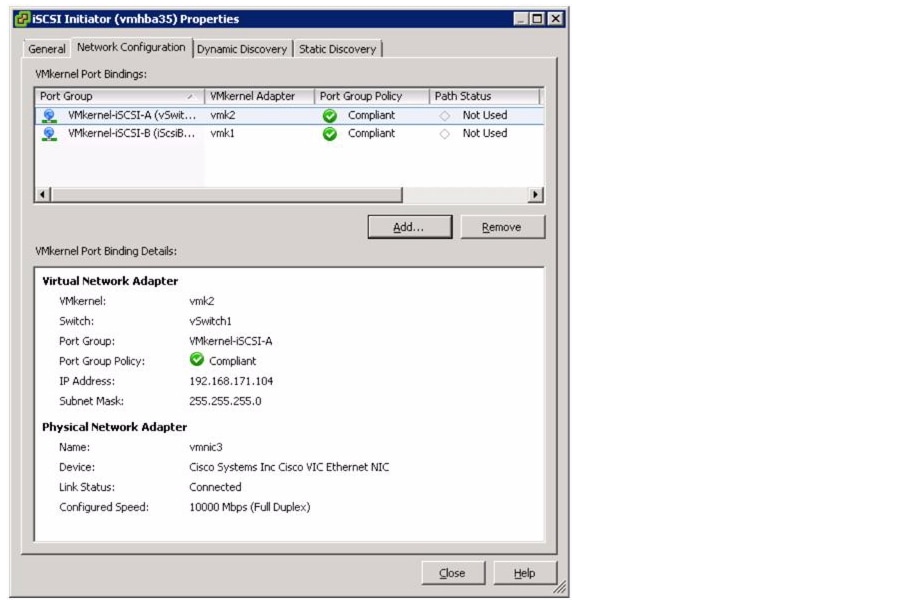

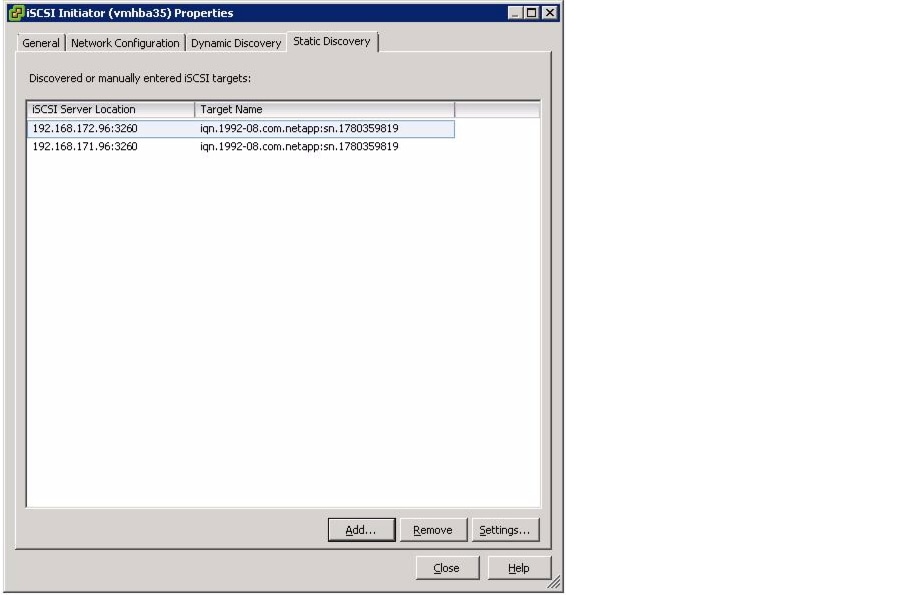

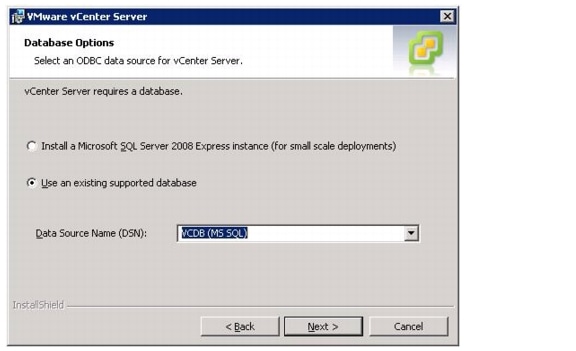

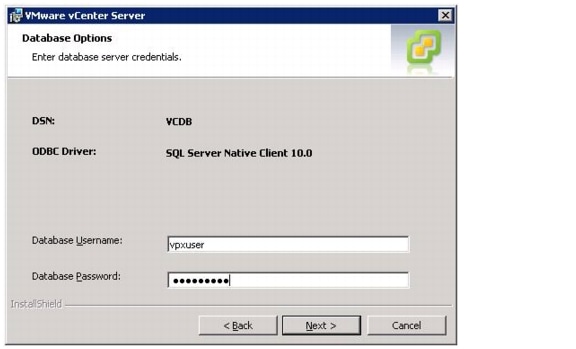

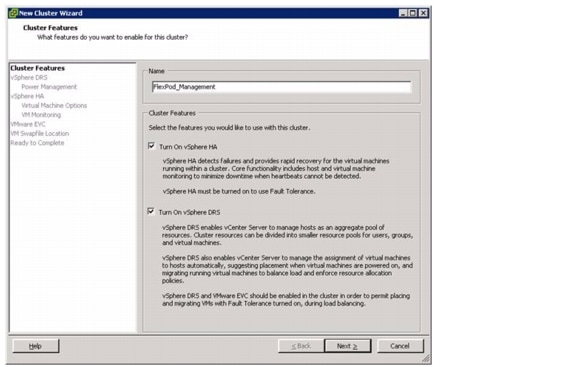

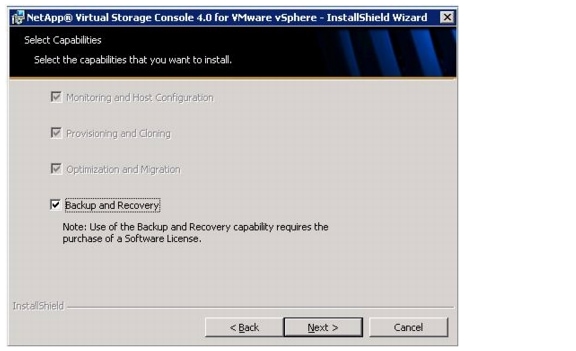

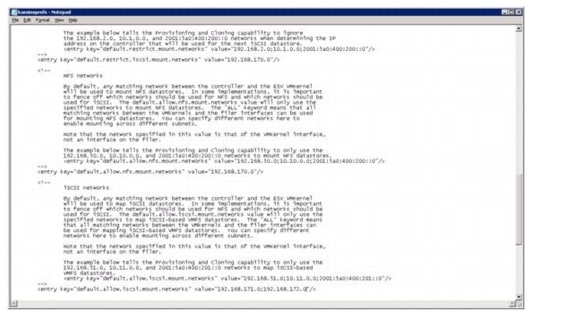

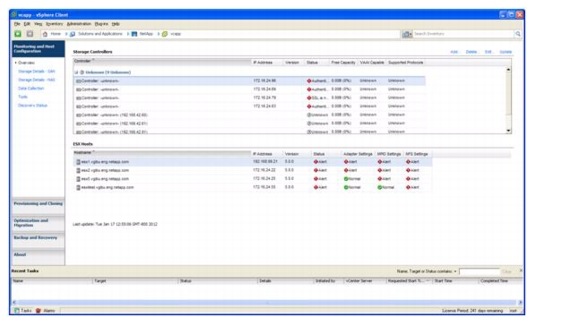

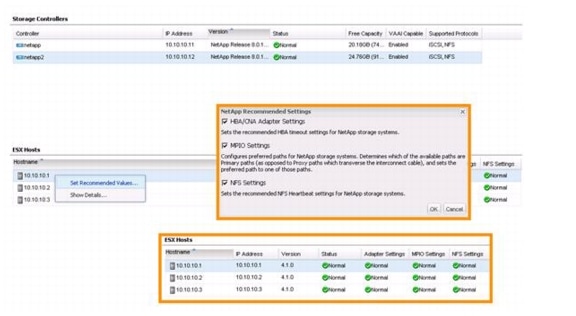

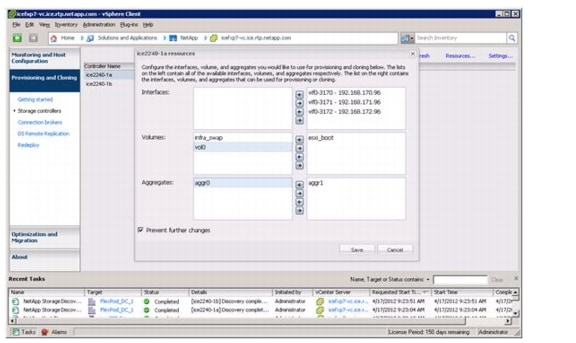

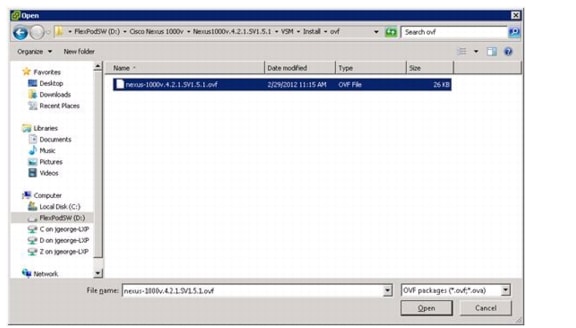

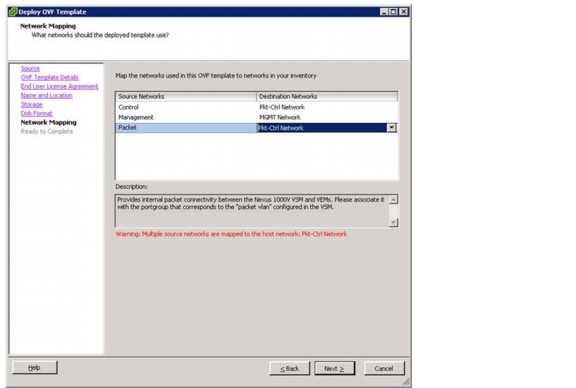

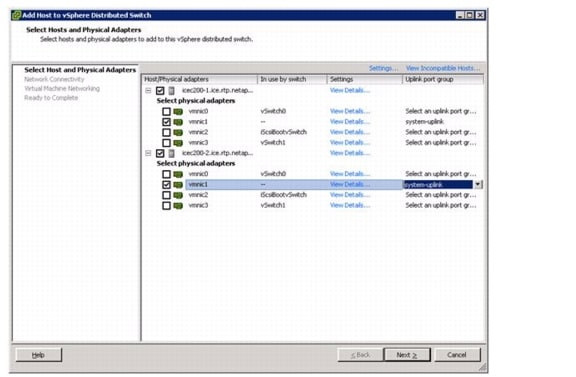

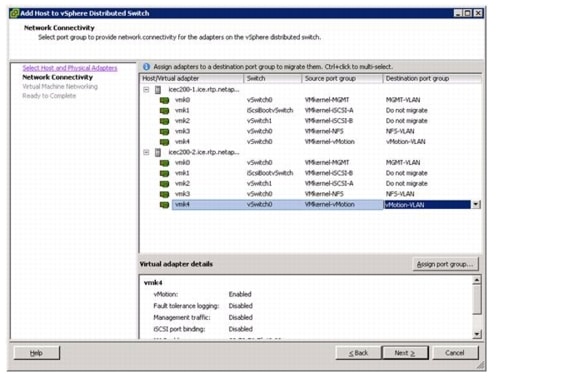

10.