System Overview

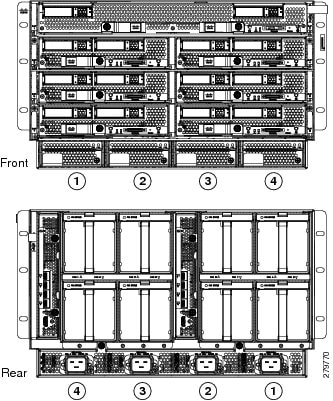

The Cisco UCS 5108 Server Chassis and its components are part of the Cisco Unified Computing System (UCS). The system combines the Cisco UCS 5108 server chassis, its two I/O modules, and the Cisco UCS Fabric Interconnects to provide advanced options and capabilities in server and data management. All servers are managed with Cisco UCS Manager through its GUI or CLI.

The Cisco UCS 5108 Server Chassis system consists of the following components:

-

Chassis versions:

-

Cisco UCS 5108 server chassis–AC version (UCSB-5108-AC2 or N20-C6508)

-

Cisco UCS 5108 server chassis–DC version (UCSB-5108-DC2 or UCSB-5108-DC)

-

-

I/O module (IOM) versions:

-

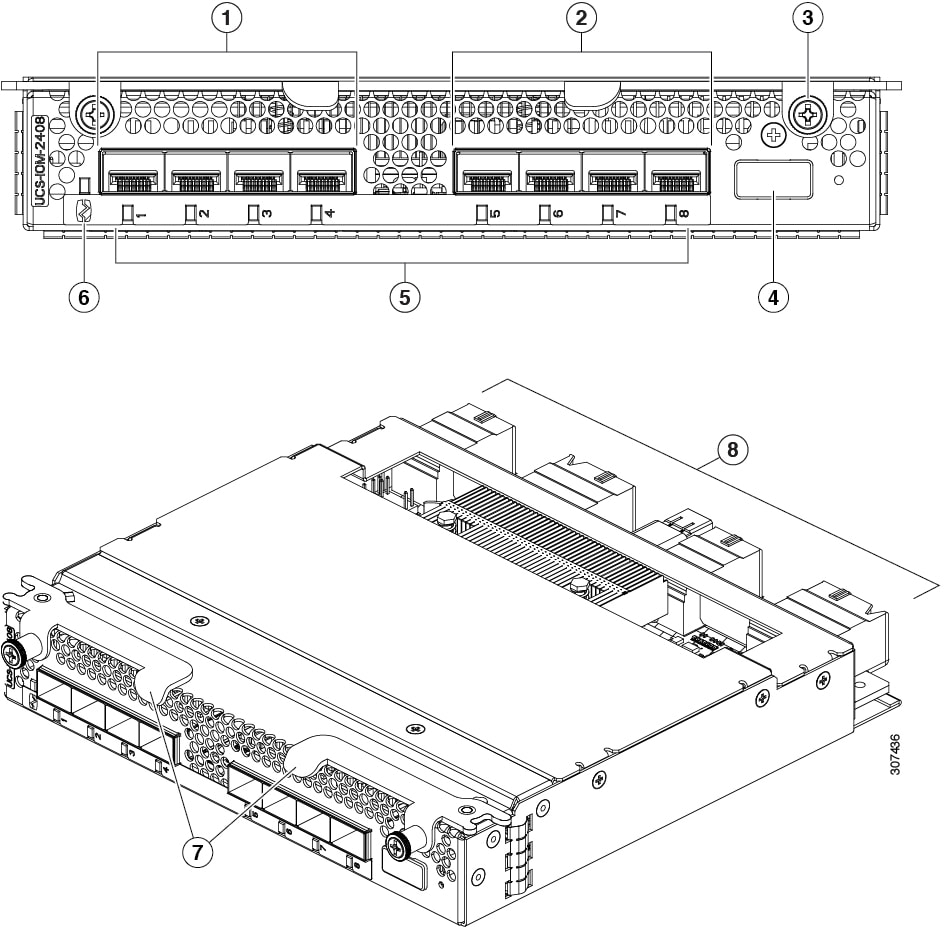

Cisco UCS 2408 I/O Module (UCS-IOM-2408)—Up to two I/O modules, each with 8 25-Gigabit SFP28 uplink ports and 32 10-Gigabit backplane ports

-

Cisco UCS 2304 I/O Module (UCS-IOM-2304V2 or UCS-IOM-2304)—Up to two I/O modules, each with 4 configurable 40-Gigabit uplink ports and 8 40-Gigabit backplane ports

Note

You cannot mix UCS-IOM-2304V2 and UCS-IOM-2304 in the same chassis. UCS-IOM-2304V2 requires Cisco UCS Manager 4.0(4) or later.

-

-

A number of SFP+ choices using copper or optical fiber

-

Power supplies (N20-PAC5-2500W, UCSB-PSU-2500ACPL, or UCSB-PSU-2500DC48)—Up to four 2500 Watt, hot-swappable power supplies

-

Fan modules (N20-FAN5)—Eight hot-swappable fan modules

-

UCS B-Series blade servers, including:

-

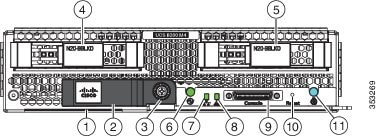

Cisco UCS B200 M5 blade servers (UCSB-B200-M5)—Up to eight half-width blade servers, each containing two CPUs and holding up to two hard drives capable of RAID 0 or 1

-

Cisco UCS B200 M4 blade servers (UCSB-B200-M4)—Up to eight half-width blade servers, each containing two CPUs and holding up to two hard drives capable of RAID 0 or 1

-

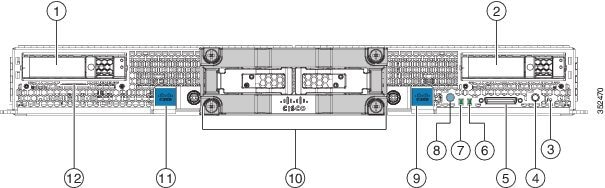

Cisco UCS B480 M5 blade servers (UCSB-B480-M5)—Up to four full-width blade servers, each containing four CPUs and holding up to four hard drives capable of RAID 0, 1, 5, and 6

-

Cisco UCS B260 M4 blade servers (UCSB-EX-M4-1 or UCSB-EX-M4-2)—Up to four full-width blade servers, each containing two CPUs and a SAS RAID controller

-

Cisco UCS B460 M4 blade servers (UCSB-EX-M4-1 or UCSB-EX-M4-2)—Up to two full-width blade servers, each containing four CPUs and SAS RAID controllers

-

Cisco UCS B420 M4 blade servers (UCSB-B420-M4)—Up to four full-width blade servers, each containing four CPUs and holding up to four hard drives capable of RAID 0, 1, 5, and 10

-
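The I/O module port counts listed above can be turned into raw per-module totals with simple arithmetic. The following Python sketch is illustrative only: the class and function names (`IomModel`, `check_iom_set`) are hypothetical, the figures are nominal port speed multiplied by port count rather than measured throughput, and the only compatibility rules encoded are the two stated in the list (at most two I/O modules per chassis, and no mixing of UCS-IOM-2304V2 with UCS-IOM-2304).

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class IomModel:
    """Port counts for one I/O module, taken from the component list."""
    pid: str
    uplink_ports: int
    uplink_gbps: int       # nominal speed of each uplink port
    backplane_ports: int
    backplane_gbps: int    # nominal speed of each backplane port

    @property
    def uplink_total_gbps(self) -> int:
        # Raw sum of nominal uplink port speeds, not measured throughput.
        return self.uplink_ports * self.uplink_gbps

    @property
    def backplane_total_gbps(self) -> int:
        # Raw sum of nominal backplane port speeds.
        return self.backplane_ports * self.backplane_gbps


IOM_2408 = IomModel("UCS-IOM-2408", 8, 25, 32, 10)
IOM_2304 = IomModel("UCS-IOM-2304", 4, 40, 8, 40)
IOM_2304V2 = IomModel("UCS-IOM-2304V2", 4, 40, 8, 40)


def check_iom_set(installed: List[IomModel]) -> List[str]:
    """Return the problems found in a planned set of I/O modules.

    Only rules stated in the component list are checked; any other
    compatibility constraints are outside the scope of this sketch.
    """
    problems = []
    if len(installed) > 2:
        problems.append("a chassis holds at most two I/O modules")
    pids = {iom.pid for iom in installed}
    if {"UCS-IOM-2304", "UCS-IOM-2304V2"} <= pids:
        problems.append(
            "UCS-IOM-2304V2 and UCS-IOM-2304 cannot be mixed in the same chassis"
        )
    return problems


if __name__ == "__main__":
    for iom in (IOM_2408, IOM_2304):
        print(iom.pid, iom.uplink_total_gbps, iom.backplane_total_gbps)
    print(check_iom_set([IOM_2304, IOM_2304V2]))
```

With these figures, one UCS-IOM-2408 totals 200 Gbps of nominal uplink capacity and 320 Gbps toward the backplane, while one UCS-IOM-2304 totals 160 Gbps and 320 Gbps respectively. A chassis with two modules doubles each number.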

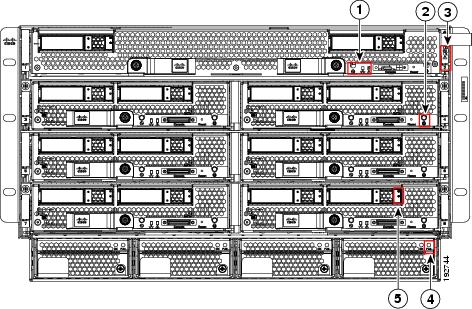

The Cisco UCS Mini Server Chassis, a smaller solution, consists of the following components:

-

Cisco UCS 5108 server chassis–AC version (UCSB-5108-AC2)

-

Cisco UCS 5108 server chassis–DC version (UCSB-5108-DC2)

-

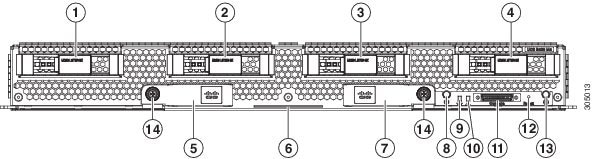

Cisco UCS 6324 Fabric Interconnect for the UCS Mini system (UCS-FI-M-6324)—Up to two integrated fabric interconnect modules, each providing four SFP+ ports of 10-Gigabit Ethernet and Fibre Channel over Ethernet (FCoE), and a QSFP+ port. This FI fits into the I/O module slot on the rear of the chassis.

-

A number of SFP+ choices using copper or optical fiber

-

Power supplies (UCSB-PSU-2500ACDV, UCSB-PSU-2500DC48, and UCSB-PSU-2500HVDC)—Up to four 2500 Watt, hot-swappable power supplies

-

Fan modules (N20-FAN5)—Eight hot-swappable fan modules

-

UCS B-Series blade servers, including the following:

-

Cisco UCS B200 M4 or M5 blade servers—Up to eight half-width blade servers, each containing two CPUs and holding up to two hard drives capable of RAID 0 or 1

-

-

UCS C-Series rack servers, including the following:

-

Cisco UCS C240 M4 or C240 M5 rack servers and Cisco UCS C220 M4 or C220 M5 rack servers—Up to seven rack servers, either C240 or C220, or a combination of the two.

-
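The component limits listed for the UCS Mini configuration can be checked mechanically when planning a deployment. The following Python sketch is a minimal illustration, not a Cisco tool: the names `MiniChassisPlan`, `MAX_COUNTS`, and `validate` are hypothetical, each limit is checked independently exactly as listed, and treating eight fan modules as the expected count is an assumption based on the listing saying "eight" rather than "up to eight".

```python
from dataclasses import dataclass
from typing import List

# Maximum counts as given in the UCS Mini component list.
MAX_COUNTS = {
    "fabric_interconnects": 2,  # UCS-FI-M-6324 modules
    "power_supplies": 4,
    "blade_servers": 8,         # half-width B200 M4 or M5
    "rack_servers": 7,          # C220 and C240, in any combination
}

# Assumption: the list names eight fan modules, so eight is treated
# as the expected count rather than an upper bound.
EXPECTED_FAN_MODULES = 8


@dataclass
class MiniChassisPlan:
    """Hypothetical planning record for one UCS Mini system."""
    fabric_interconnects: int
    power_supplies: int
    fan_modules: int
    blade_servers: int
    rack_servers: int


def validate(plan: MiniChassisPlan) -> List[str]:
    """Return a list of problems; an empty list means the plan fits the limits."""
    problems = []
    for name, limit in MAX_COUNTS.items():
        count = getattr(plan, name)
        if count < 0:
            problems.append(f"{name}: count cannot be negative")
        elif count > limit:
            problems.append(f"{name}: {count} exceeds the listed maximum of {limit}")
    if plan.fan_modules != EXPECTED_FAN_MODULES:
        problems.append(
            f"fan_modules: expected {EXPECTED_FAN_MODULES}, got {plan.fan_modules}"
        )
    return problems


if __name__ == "__main__":
    full = MiniChassisPlan(2, 4, 8, 8, 7)
    too_many_blades = MiniChassisPlan(2, 4, 8, 9, 7)
    print(validate(full))
    print(validate(too_many_blades))
```

A plan at the listed maximums returns an empty list, while a plan with nine blade servers returns a single message naming the exceeded limit.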
