Sustitución del servidor informático UCS C240 M4 - vEPC

Opciones de descarga

-

ePub (1.0 MB)

Visualice en diferentes aplicaciones en iPhone, iPad, Android, Sony Reader o Windows Phone -

Mobi (Kindle) (646.4 KB)

Visualice en dispositivo Kindle o aplicación Kindle en múltiples dispositivos

Lenguaje no discriminatorio

El conjunto de documentos para este producto aspira al uso de un lenguaje no discriminatorio. A los fines de esta documentación, "no discriminatorio" se refiere al lenguaje que no implica discriminación por motivos de edad, discapacidad, género, identidad de raza, identidad étnica, orientación sexual, nivel socioeconómico e interseccionalidad. Puede haber excepciones en la documentación debido al lenguaje que se encuentra ya en las interfaces de usuario del software del producto, el lenguaje utilizado en función de la documentación de la RFP o el lenguaje utilizado por un producto de terceros al que se hace referencia. Obtenga más información sobre cómo Cisco utiliza el lenguaje inclusivo.

Acerca de esta traducción

Cisco ha traducido este documento combinando la traducción automática y los recursos humanos a fin de ofrecer a nuestros usuarios en todo el mundo contenido en su propio idioma. Tenga en cuenta que incluso la mejor traducción automática podría no ser tan precisa como la proporcionada por un traductor profesional. Cisco Systems, Inc. no asume ninguna responsabilidad por la precisión de estas traducciones y recomienda remitirse siempre al documento original escrito en inglés (insertar vínculo URL).

Contenido

Introducción

Este documento describe los pasos necesarios para sustituir un servidor informático defectuoso en una configuración Ultra-M que aloja StarOS Virtual Network Functions (VNF).

Antecedentes

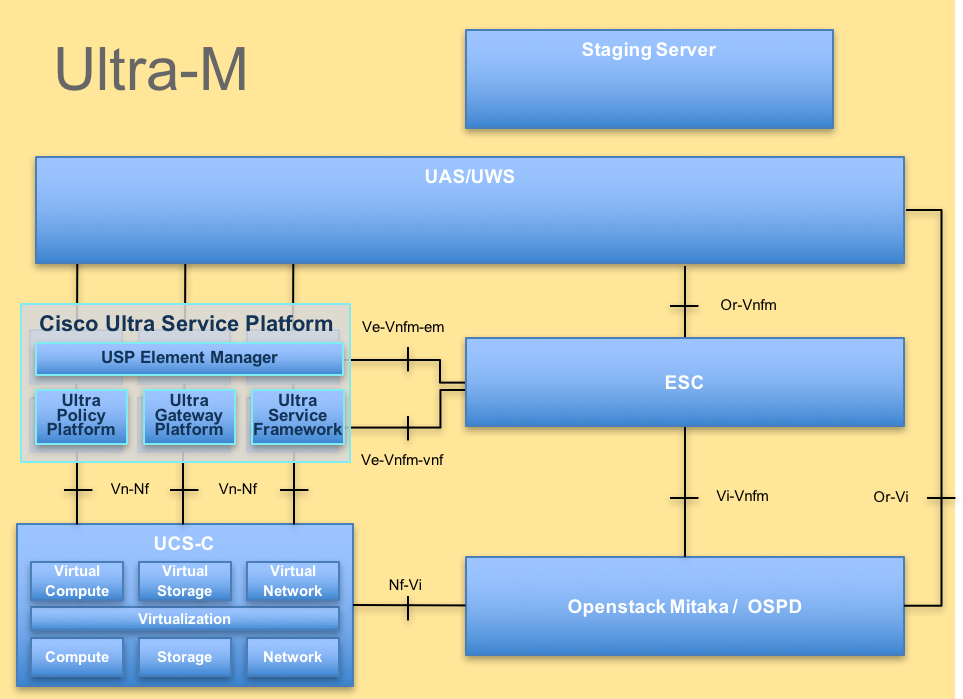

Ultra-M es una solución de núcleo de paquetes móviles virtualizada validada y empaquetada previamente diseñada para simplificar la implementación de VNF. OpenStack es el Virtualized Infrastructure Manager (VIM) para Ultra-M y consta de estos tipos de nodos:

- Informática

- Disco de almacenamiento de objetos - Compute (OSD - Compute)

- Controlador

- Plataforma OpenStack: Director (OSPD)

La arquitectura de alto nivel de Ultra-M y los componentes involucrados se ilustran en esta imagen:

Arquitectura UltraM

Arquitectura UltraM

Este documento está dirigido al personal de Cisco familiarizado con la plataforma Cisco Ultra-M y detalla los pasos necesarios para llevarse a cabo en el nivel VNF de OpenStack y StarOS en el momento de la sustitución del servidor informático.

Nota: Se considera la versión Ultra M 5.1.x para definir los procedimientos en este documento.

Abreviaturas

| VNF | Función de red virtual |

| CF | Función de control |

| SF | Función de servicio |

| ESC | Controlador de servicio elástico |

| MOP | Método de procedimiento |

| OSD | Discos de almacenamiento de objetos |

| HDD | Unidad de disco duro |

| SSD | Unidad de estado sólido |

| VIM | Administrador de infraestructura virtual |

| VM | Máquina virtual |

| EM | Administrador de elementos |

| UAS | Servicios de ultra automatización |

| UUID | Identificador único universal |

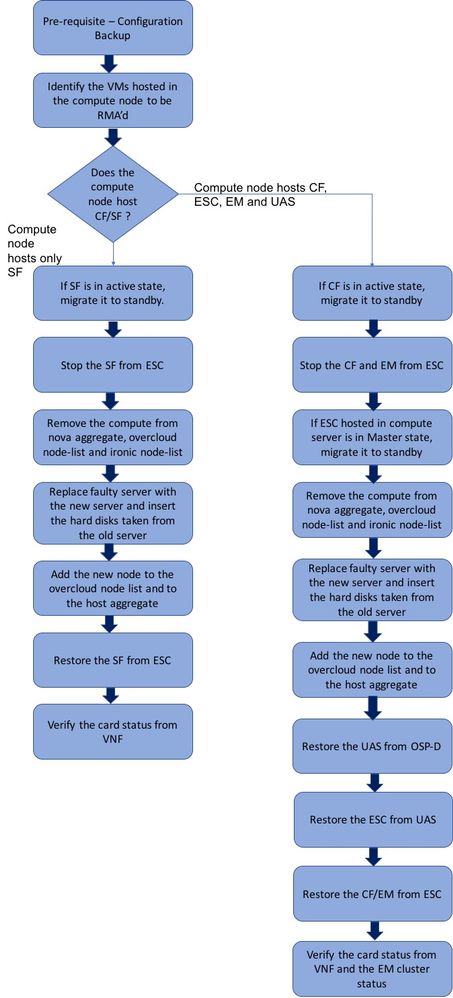

Flujo de trabajo del MoP

Flujo de trabajo de alto nivel del procedimiento de reemplazo

Flujo de trabajo de alto nivel del procedimiento de reemplazo

Prerequisites

Copia de seguridad

Antes de reemplazar un nodo de cálculo, es importante comprobar el estado actual de su entorno de Red Hat OpenStack Platform. Se recomienda que verifique el estado actual para evitar complicaciones cuando el proceso de reemplazo de Compute está activado. Se puede lograr con este flujo de reemplazo.

En caso de recuperación, Cisco recomienda realizar una copia de seguridad de la base de datos OSPD con estos pasos:

[root@director ~]# mysqldump --opt --all-databases > /root/undercloud-all-databases.sql

[root@director ~]# tar --xattrs -czf undercloud-backup-`date +%F`.tar.gz /root/undercloud-all-databases.sql

/etc/my.cnf.d/server.cnf /var/lib/glance/images /srv/node /home/stack

tar: Removing leading `/' from member names

Este proceso asegura que un nodo se pueda reemplazar sin afectar la disponibilidad de ninguna instancia. Además, se recomienda realizar una copia de seguridad de la configuración de StarOS, especialmente si el nodo informático que se va a sustituir aloja la máquina virtual (VM) de la función de control (CF).

Identificación de las VM alojadas en el nodo de informática

Identifique las VM alojadas en el servidor informático. Puede haber dos posibilidades:

- El servidor informático sólo contiene la VM Service Function (SF):

[stack@director ~]$ nova list --field name,host | grep compute-10

| 49ac5f22-469e-4b84-badc-031083db0533 | VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d |

pod1-compute-10.localdomain |

- El servidor informático contiene la combinación de VM Control Function (CF)/Elastic Services Controller (ESC)/Element Manager (EM)/Ultra Automation Services (UAS):

[stack@director ~]$ nova list --field name,host | grep compute-8

| 507d67c2-1d00-4321-b9d1-da879af524f8 | VNF2-DEPLOYM_XXXX_0_c8d98f0f-d874-45d0-af75-88a2d6fa82ea | pod1-compute-8.localdomain |

| f9c0763a-4a4f-4bbd-af51-bc7545774be2 | VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229 | pod1-compute-8.localdomain |

| 75528898-ef4b-4d68-b05d-882014708694 | VNF2-ESC-ESC-0 | pod1-compute-8.localdomain |

| f5bd7b9c-476a-4679-83e5-303f0aae9309 | VNF2-UAS-uas-0 | pod1-compute-8.localdomain |

Nota: En el resultado que se muestra aquí, la primera columna corresponde al identificador único universal (UUID), la segunda columna es el nombre de la máquina virtual y la tercera es el nombre de host donde está presente la máquina virtual. Los parámetros de este resultado se utilizarán en secciones posteriores.

Apagado Graceful

Caso 1. Compute Node Hosts solamente SF VM

Migración de la tarjeta SF al estado en espera

- Inicie sesión en StarOS VNF e identifique la tarjeta que corresponde a SF VM. Utilice el UUID de la VM SF identificada en la sección "Identifique las VM alojadas en el nodo de cómputo" e identifique la tarjeta que corresponde al UUID:

[local]VNF2# show card hardware

Tuesday might 08 16:49:42 UTC 2018

<snip>

Card 8:

Card Type : 4-Port Service Function Virtual Card

CPU Packages : 26 [#0, #1, #2, #3, #4, #5, #6, #7, #8, #9, #10, #11, #12, #13, #14, #15, #16, #17, #18, #19, #20, #21, #22, #23, #24, #25]

CPU Nodes : 2

CPU Cores/Threads : 26

Memory : 98304M (qvpc-di-large)

UUID/Serial Number : 49AC5F22-469E-4B84-BADC-031083DB0533

<snip>

- Compruebe el estado de la tarjeta:

[local]VNF2# show card table

Tuesday might 08 16:52:53 UTC 2018

Slot Card Type Oper State SPOF Attach

----------- -------------------------------------- ------------- ---- ------

1: CFC Control Function Virtual Card Active No

2: CFC Control Function Virtual Card Standby -

3: FC 4-Port Service Function Virtual Card Active No

4: FC 4-Port Service Function Virtual Card Active No

5: FC 4-Port Service Function Virtual Card Active No

6: FC 4-Port Service Function Virtual Card Active No

7: FC 4-Port Service Function Virtual Card Active No

8: FC 4-Port Service Function Virtual Card Active No

9: FC 4-Port Service Function Virtual Card Active No

10: FC 4-Port Service Function Virtual Card Standby -

- Si la tarjeta está en estado activo, mueva la tarjeta al estado en espera:

[local]VNF2# card migrate from 8 to 10

Apagar SF VM desde ESC

- Inicie sesión en el nodo ESC que corresponde al VNF y verifique el estado de SF VM:

[admin@VNF2-esc-esc-0 ~]$ cd /opt/cisco/esc/esc-confd/esc-cli

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli get esc_datamodel | egrep --color "<state>|<vm_name>|<vm_id>|<deployment_name>"

<snip>

<state>SERVICE_ACTIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229</vm_name>

<state>VM_ALIVE_STATE</state>

<vm_name> VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d</vm_name>

<state>VM_ALIVE_STATE</state>

<snip>

- Detenga la máquina virtual SF con el uso de su nombre de máquina virtual. (Nombre de VM indicado en la sección "Identificar las VM alojadas en el nodo de cálculo"):

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli vm-action STOP VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d

- Una vez detenido, la VM debe ingresar el estado SHUTOFF:

[admin@VNF2-esc-esc-0 ~]$ cd /opt/cisco/esc/esc-confd/esc-cli

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli get esc_datamodel | egrep --color "<state>|<vm_name>|<vm_id>|<deployment_name>"

<snip>

<state>SERVICE_ACTIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229</vm_name>

<state>VM_ALIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_c3_0_3e0db133-c13b-4e3d-ac14-

<state>VM_ALIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d</vm_name>

<state>VM_SHUTOFF_STATE</state>

<snip>

Eliminar el nodo de cálculo de la lista de agregación de Nova

- Enumere los agregados nova e identifique el agregado que corresponde al servidor informático basado en el VNF alojado por él. Normalmente, tendría el formato <VNFNAME>-SERVICE<X>:

[stack@director ~]$ nova aggregate-list

+----+-------------------+-------------------+

| Id | Name | Availability Zone |

+----+-------------------+-------------------+

| 29 | POD1-AUTOIT | mgmt |

| 57 | VNF1-SERVICE1 | - |

| 60 | VNF1-EM-MGMT1 | - |

| 63 | VNF1-CF-MGMT1 | - |

| 66 | VNF2-CF-MGMT2 | - |

| 69 | VNF2-EM-MGMT2 | - |

| 72 | VNF2-SERVICE2 | - |

| 75 | VNF3-CF-MGMT3 | - |

| 78 | VNF3-EM-MGMT3 | - |

| 81 | VNF3-SERVICE3 | - |

+----+-------------------+-------------------+

En este caso, el servidor informático que se va a reemplazar pertenece a VNF2. Por lo tanto, la lista de agregación correspondiente será VNF2-SERVICE2.

- Quite el nodo de cálculo del agregado identificado (elimine por nombre de host anotado en la sección "Identificación de las VM alojadas en el nodo de cálculo"):

nova aggregate-remove-host

[stack@director ~]$ nova aggregate-remove-host VNF2-SERVICE2 pod1-compute-10.localdomain

- Verifique si el nodo de cálculo se ha eliminado de los agregados. Ahora, el Host no debe aparecer en el agregado:

nova aggregate-show

[stack@director ~]$ nova aggregate-show VNF2-SERVICE2

Caso 2. Host de nodo de cálculo CF/ESC/EM/UAS

Migrar tarjeta CF al estado en espera

- Inicie sesión en el VNF de StarOS e identifique la tarjeta que corresponde a la VM CF. Utilice el UUID de la VM CF identificada en la sección "Identifique las VM alojadas en el nodo de cómputo" y busque la tarjeta que corresponda al UUID:

[local]VNF2# show card hardware

Tuesday might 08 16:49:42 UTC 2018

<snip>

Card 2:

Card Type : Control Function Virtual Card

CPU Packages : 8 [#0, #1, #2, #3, #4, #5, #6, #7]

CPU Nodes : 1

CPU Cores/Threads : 8

Memory : 16384M (qvpc-di-large)

UUID/Serial Number : F9C0763A-4A4F-4BBD-AF51-BC7545774BE2

<snip>

- Compruebe el estado de la tarjeta:

[local]VNF2# show card table

Tuesday might 08 16:52:53 UTC 2018

Slot Card Type Oper State SPOF Attach

----------- -------------------------------------- ------------- ---- ------

1: CFC Control Function Virtual Card Standby -

2: CFC Control Function Virtual Card Active No

3: FC 4-Port Service Function Virtual Card Active No

4: FC 4-Port Service Function Virtual Card Active No

5: FC 4-Port Service Function Virtual Card Active No

6: FC 4-Port Service Function Virtual Card Active No

7: FC 4-Port Service Function Virtual Card Active No

8: FC 4-Port Service Function Virtual Card Active No

9: FC 4-Port Service Function Virtual Card Active No

10: FC 4-Port Service Function Virtual Card Standby -

- Si la tarjeta está en estado activo, mueva la tarjeta al estado en espera:

[local]VNF2# card migrate from 2 to 1

Cierre CF y VM EM desde ESC

- Inicie sesión en el nodo ESC que corresponde al VNF y verifique el estado de las VM:

[admin@VNF2-esc-esc-0 ~]$ cd /opt/cisco/esc/esc-confd/esc-cli

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli get esc_datamodel | egrep --color "<state>|<vm_name>|<vm_id>|<deployment_name>"

<snip>

<state>SERVICE_ACTIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229</vm_name>

<state>VM_ALIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_c3_0_3e0db133-c13b-4e3d-ac14-

<state>VM_ALIVE_STATE</state>

<deployment_name>VNF2-DEPLOYMENT-em</deployment_name>

<vm_id>507d67c2-1d00-4321-b9d1-da879af524f8</vm_id>

<vm_id>dc168a6a-4aeb-4e81-abd9-91d7568b5f7c</vm_id>

<vm_id>9ffec58b-4b9d-4072-b944-5413bf7fcf07</vm_id>

<state>SERVICE_ACTIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_XXXX_0_c8d98f0f-d874-45d0-af75-88a2d6fa82ea</vm_name>

<state>VM_ALIVE_STATE</state>

<snip>

- Detenga la máquina virtual CF y EM una por una con el uso de su nombre de máquina virtual. (Nombre de máquina virtual indicado en la sección "Identificación de las VM alojadas en el nodo informático"):

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli vm-action STOP VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli vm-action STOP VNF2-DEPLOYM_XXXX_0_c8d98f0f-d874-45d0-af75-88a2d6fa82ea

- Después de que se detenga, las VM deben ingresar el estado SHUTOFF:

[admin@VNF2-esc-esc-0 ~]$ cd /opt/cisco/esc/esc-confd/esc-cli

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli get esc_datamodel | egrep --color "<state>|<vm_name>|<vm_id>|<deployment_name>"

<snip>

<state>SERVICE_ACTIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229</vm_name>

<state>VM_SHUTOFF_STATE</state>

<vm_name>VNF2-DEPLOYM_c3_0_3e0db133-c13b-4e3d-ac14-

<state>VM_ALIVE_STATE</state>

<deployment_name>VNF2-DEPLOYMENT-em</deployment_name>

<vm_id>507d67c2-1d00-4321-b9d1-da879af524f8</vm_id>

<vm_id>dc168a6a-4aeb-4e81-abd9-91d7568b5f7c</vm_id>

<vm_id>9ffec58b-4b9d-4072-b944-5413bf7fcf07</vm_id>

<state>SERVICE_ACTIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_XXXX_0_c8d98f0f-d874-45d0-af75-88a2d6fa82ea</vm_name>

VM_SHUTOFF_STATE

<snip>

Migrar ESC al modo en espera

- Inicie sesión en el ESC alojado en el nodo de cálculo y verifique si se encuentra en el estado principal. En caso afirmativo, cambie el modo ESC al modo de espera:

[admin@VNF2-esc-esc-0 esc-cli]$ escadm status

0 ESC status=0 ESC Master Healthy

[admin@VNF2-esc-esc-0 ~]$ sudo service keepalived stop

Stopping keepalived: [ OK ]

[admin@VNF2-esc-esc-0 ~]$ escadm status

1 ESC status=0 In SWITCHING_TO_STOP state. Please check status after a while.

[admin@VNF2-esc-esc-0 ~]$ sudo reboot

Broadcast message from admin@vnf1-esc-esc-0.novalocal

(/dev/pts/0) at 13:32 ...

The system is going down for reboot NOW!

Eliminar el nodo de cálculo de la lista de agregación de Nova

- Enumere los agregados nova e identifique el agregado que corresponde al servidor informático basado en el VNF alojado por él. Normalmente, tendría el formato <VNFNAME>-EM-MGMT<X> y <VNFNAME>-CF-MGMT<X>

[stack@director ~]$ nova aggregate-list

+----+-------------------+-------------------+

| Id | Name | Availability Zone |

+----+-------------------+-------------------+

| 29 | POD1-AUTOIT | mgmt |

| 57 | VNF1-SERVICE1 | - |

| 60 | VNF1-EM-MGMT1 | - |

| 63 | VNF1-CF-MGMT1 | - |

| 66 | VNF2-CF-MGMT2 | - |

| 69 | VNF2-EM-MGMT2 | - |

| 72 | VNF2-SERVICE2 | - |

| 75 | VNF3-CF-MGMT3 | - |

| 78 | VNF3-EM-MGMT3 | - |

| 81 | VNF3-SERVICE3 | - |

+----+-------------------+-------------------+

En nuestro caso, el servidor informático pertenece a VNF2. Por lo tanto, los agregados que corresponden serían VNF2-CF-MGMT2 y VNF2-EM-MGMT2.

- Quite el nodo de cálculo del agregado identificado:

nova aggregate-remove-host

[stack@director ~]$ nova aggregate-remove-host VNF2-CF-MGMT2 pod1-compute-8.localdomain

[stack@director ~]$ nova aggregate-remove-host VNF2-EM-MGMT2 pod1-compute-8.localdomain

- Verifique si el nodo de cálculo se ha eliminado de los agregados. Ahora, asegúrese de que el Host no aparezca en los agregados:

nova aggregate-show

[stack@director ~]$ nova aggregate-show VNF2-CF-MGMT2

[stack@director ~]$ nova aggregate-show VNF2-EM-MGMT2

Eliminación del nodo de cálculo

Los pasos mencionados en esta sección son comunes independientemente de las VM alojadas en el nodo informático.

Eliminar nodo de cálculo de la lista de servicios

- Elimine el servicio informático de la lista de servicios:

[stack@director ~]$ source corerc

[stack@director ~]$ openstack compute service list | grep compute-8

| 404 | nova-compute | pod1-compute-8.localdomain | nova | enabled | up | 2018-05-08T18:40:56.000000 |

openstack compute service delete

[stack@director ~]$ openstack compute service delete 404

Eliminar agentes neutrales

- Elimine el agente neutrón asociado antiguo y abra el agente vswitch para el servidor informático:

[stack@director ~]$ openstack network agent list | grep compute-8

| c3ee92ba-aa23-480c-ac81-d3d8d01dcc03 | Open vSwitch agent | pod1-compute-8.localdomain | None | False | UP | neutron-openvswitch-agent |

| ec19cb01-abbb-4773-8397-8739d9b0a349 | NIC Switch agent | pod1-compute-8.localdomain | None | False | UP | neutron-sriov-nic-agent |

openstack network agent delete

[stack@director ~]$ openstack network agent delete c3ee92ba-aa23-480c-ac81-d3d8d01dcc03

[stack@director ~]$ openstack network agent delete ec19cb01-abbb-4773-8397-8739d9b0a349

Eliminar de la base de datos irónica

- Elimine un nodo de la base de datos irónica y verifíquelo:

[stack@director ~]$ source stackrc

nova show| grep hypervisor

[stack@director ~]$ nova show pod1-compute-10 | grep hypervisor

| OS-EXT-SRV-ATTR:hypervisor_hostname | 4ab21917-32fa-43a6-9260-02538b5c7a5a

ironic node-delete

[stack@director ~]$ ironic node-delete 4ab21917-32fa-43a6-9260-02538b5c7a5a

[stack@director ~]$ ironic node-list (node delete must not be listed now)

Eliminar de Overcloud

- Cree un archivo de script denominado delete_node.sh con el contenido como se muestra. Asegúrese de que las plantillas mencionadas sean las mismas que las utilizadas en el script Deploy.sh utilizado para la implementación de la pila:

delete_node.sh

openstack overcloud node delete --templates -e /usr/share/openstack-tripleo-heat-templates/environments/puppet-pacemaker.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/storage-environment.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/neutron-sriov.yaml -e /home/stack/custom-templates/network.yaml -e /home/stack/custom-templates/ceph.yaml -e /home/stack/custom-templates/compute.yaml -e /home/stack/custom-templates/layout.yaml -e /home/stack/custom-templates/layout.yaml --stack

[stack@director ~]$ source stackrc

[stack@director ~]$ /bin/sh delete_node.sh

+ openstack overcloud node delete --templates -e /usr/share/openstack-tripleo-heat-templates/environments/puppet-pacemaker.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/storage-environment.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/neutron-sriov.yaml -e /home/stack/custom-templates/network.yaml -e /home/stack/custom-templates/ceph.yaml -e /home/stack/custom-templates/compute.yaml -e /home/stack/custom-templates/layout.yaml -e /home/stack/custom-templates/layout.yaml --stack pod1 49ac5f22-469e-4b84-badc-031083db0533

Deleting the following nodes from stack pod1:

- 49ac5f22-469e-4b84-badc-031083db0533

Started Mistral Workflow. Execution ID: 4ab4508a-c1d5-4e48-9b95-ad9a5baa20ae

real 0m52.078s

user 0m0.383s

sys 0m0.086s

- Espere a que la operación de pila OpenStack pase al estado COMPLETE:

[stack@director ~]$ openstack stack list

+--------------------------------------+------------+-----------------+----------------------+----------------------+

| ID | Stack Name | Stack Status | Creation Time | Updated Time |

+--------------------------------------+------------+-----------------+----------------------+----------------------+

| 5df68458-095d-43bd-a8c4-033e68ba79a0 | pod1 | UPDATE_COMPLETE | 2018-05-08T21:30:06Z | 2018-05-08T20:42:48Z |

+--------------------------------------+------------+-----------------+----------------------+----------------------+

Instalación del nuevo nodo informático

- Los pasos para instalar un nuevo servidor UCS C240 M4 y los pasos de configuración inicial se pueden consultar desde:

Guía de instalación y servicio del servidor Cisco UCS C240 M4

- Después de la instalación del servidor, inserte los discos duros en las ranuras respectivas como el servidor antiguo

- Inicie sesión en el servidor con el uso de CIMC IP

- Realice la actualización del BIOS si el firmware no se ajusta a la versión recomendada utilizada anteriormente. Los pasos para la actualización del BIOS se indican a continuación:

Guía de actualización del BIOS del servidor de montaje en bastidor Cisco UCS C-Series

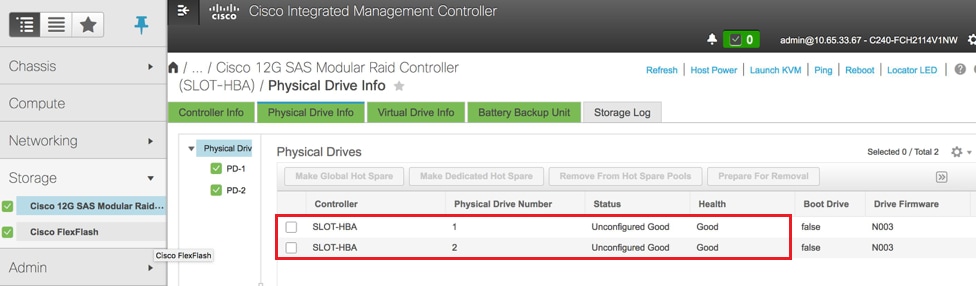

- Verifique el estado de las unidades físicas. Debe ser "Unconfigured Good":

Storage > Cisco 12G SAS Modular Raid Controller (SLOT-HBA) > Physical Drive Info (Información de unidad física)

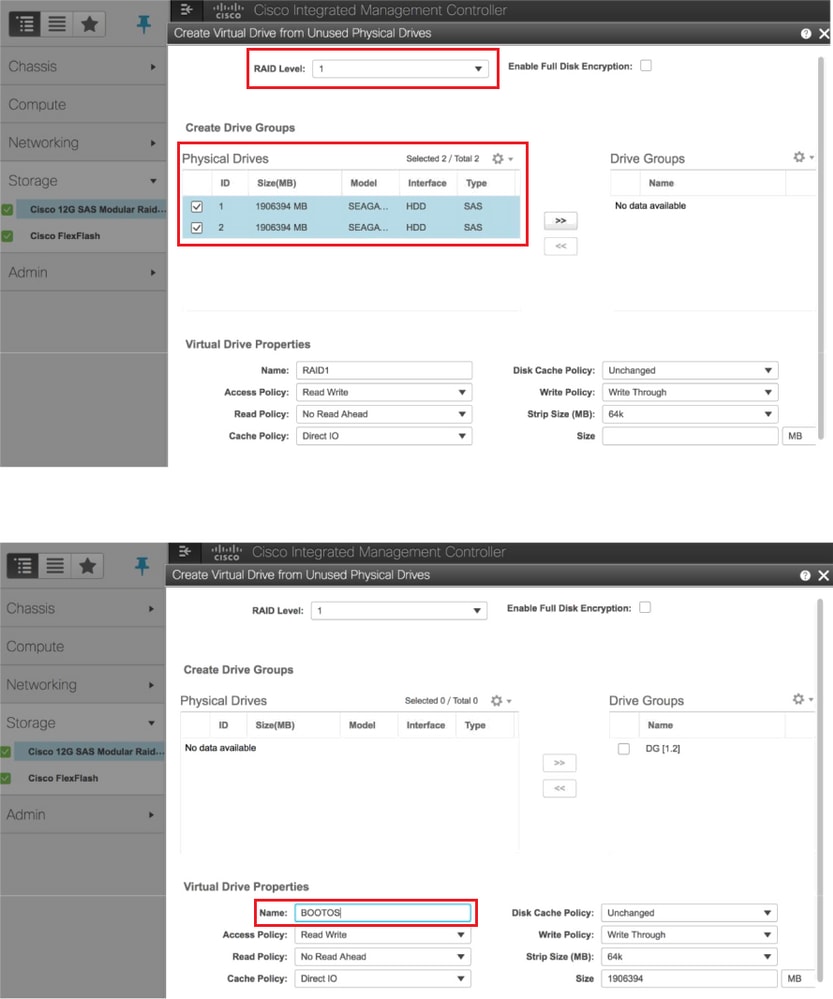

- Cree una unidad virtual desde las unidades físicas con RAID Nivel 1:

Storage > Cisco 12G SAS Modular Raid Controller (SLOT-HBA) > Controller Info > Create Virtual Drive from Unused Physical Drive

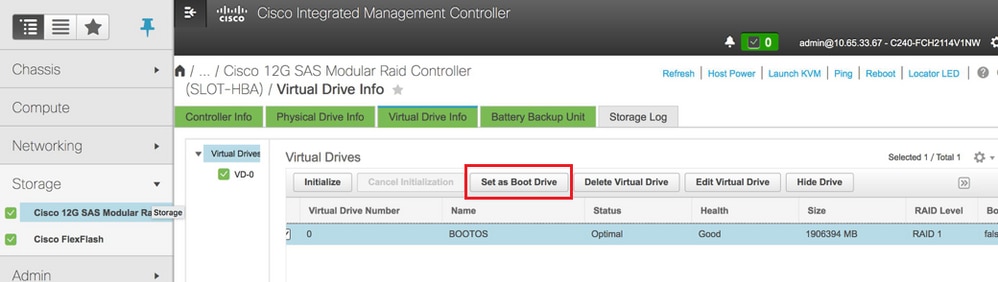

- Seleccione el VD y configure "Set as Boot Drive" (Establecer como unidad de arranque):

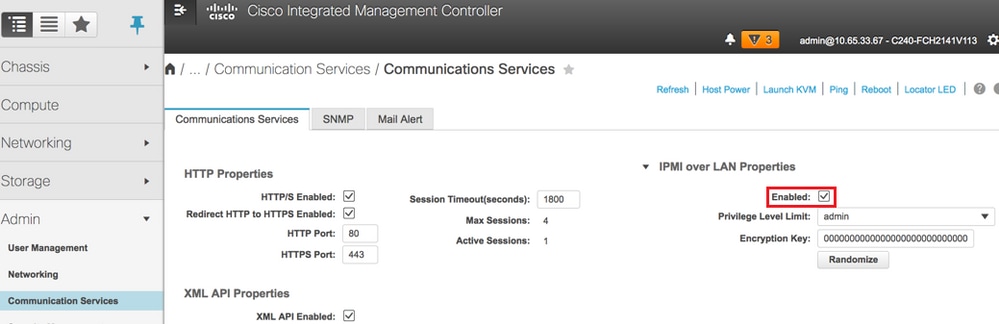

- Habilitar IPMI sobre LAN:

Admin > Communication Services > Communication Services

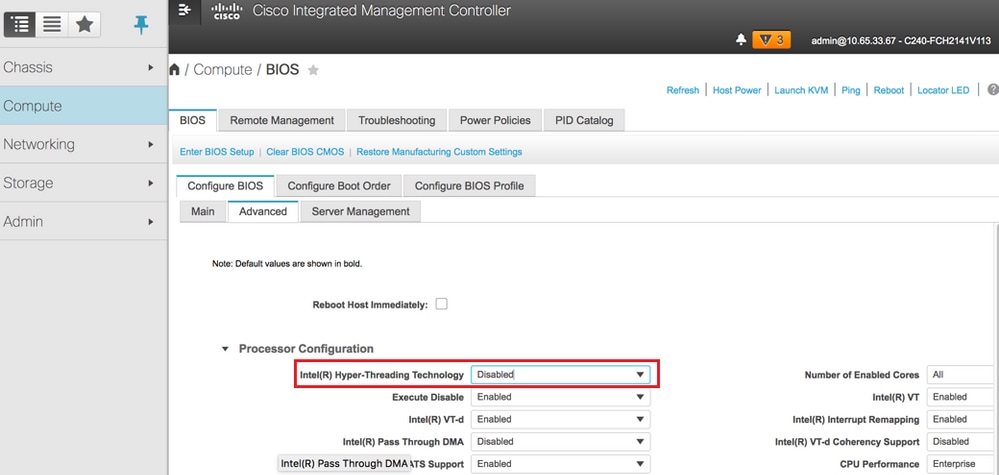

- Desactivar hiperHiperhilado:

Computar > BIOS > Configurar BIOS > Avanzado > Configuración del procesador

Nota: La imagen que se muestra aquí y los pasos de configuración mencionados en esta sección se refieren a la versión de firmware 3.0(3e) y puede haber ligeras variaciones si trabaja en otras versiones.

Agregar el nuevo nodo informático a la nube

Los pasos mencionados en esta sección son comunes independientemente de la máquina virtual alojada por el nodo informático.

- Agregar servidor informático con un índice diferente

Cree un archivo add_node.json con sólo los detalles del nuevo servidor informático que se agregará. Asegúrese de que el número de índice del nuevo servidor informático no se haya utilizado antes. Normalmente, aumente el siguiente valor de cálculo más alto.

Ejemplo: El más alto anterior era compute-17, por lo tanto, se creó compute-18 en el caso del sistema 2-vnf.

Nota: Tenga en cuenta el formato json.

[stack@director ~]$ cat add_node.json

{

"nodes":[

{

"mac":[

"<MAC_ADDRESS>"

],

"capabilities": "node:compute-18,boot_option:local",

"cpu":"24",

"memory":"256000",

"disk":"3000",

"arch":"x86_64",

"pm_type":"pxe_ipmitool",

"pm_user":"admin",

"pm_password":"<PASSWORD>",

"pm_addr":"192.100.0.5"

}

]

}

- Importe el archivo json:

[stack@director ~]$ openstack baremetal import --json add_node.json

Started Mistral Workflow. Execution ID: 78f3b22c-5c11-4d08-a00f-8553b09f497d

Successfully registered node UUID 7eddfa87-6ae6-4308-b1d2-78c98689a56e

Started Mistral Workflow. Execution ID: 33a68c16-c6fd-4f2a-9df9-926545f2127e

Successfully set all nodes to available.

- Ejecute la introspección del nodo con el uso del UUID observado desde el paso anterior:

[stack@director ~]$ openstack baremetal node manage 7eddfa87-6ae6-4308-b1d2-78c98689a56e

[stack@director ~]$ ironic node-list |grep 7eddfa87

| 7eddfa87-6ae6-4308-b1d2-78c98689a56e | None | None | power off | manageable | False |

[stack@director ~]$ openstack overcloud node introspect 7eddfa87-6ae6-4308-b1d2-78c98689a56e --provide

Started Mistral Workflow. Execution ID: e320298a-6562-42e3-8ba6-5ce6d8524e5c

Waiting for introspection to finish...

Successfully introspected all nodes.

Introspection completed.

Started Mistral Workflow. Execution ID: c4a90d7b-ebf2-4fcb-96bf-e3168aa69dc9

Successfully set all nodes to available.

[stack@director ~]$ ironic node-list |grep available

| 7eddfa87-6ae6-4308-b1d2-78c98689a56e | None | None | power off | available | False |

- Ejecute el script Deploy.sh que se utilizó anteriormente para implementar la pila, para agregar el nuevo nodo de cálculo a la pila de nubes superpuestas:

[stack@director ~]$ ./deploy.sh

++ openstack overcloud deploy --templates -r /home/stack/custom-templates/custom-roles.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/puppet-pacemaker.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/storage-environment.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/neutron-sriov.yaml -e /home/stack/custom-templates/network.yaml -e /home/stack/custom-templates/ceph.yaml -e /home/stack/custom-templates/compute.yaml -e /home/stack/custom-templates/layout.yaml --stack ADN-ultram --debug --log-file overcloudDeploy_11_06_17__16_39_26.log --ntp-server 172.24.167.109 --neutron-flat-networks phys_pcie1_0,phys_pcie1_1,phys_pcie4_0,phys_pcie4_1 --neutron-network-vlan-ranges datacentre:1001:1050 --neutron-disable-tunneling --verbose --timeout 180

…

Starting new HTTP connection (1): 192.200.0.1

"POST /v2/action_executions HTTP/1.1" 201 1695

HTTP POST http://192.200.0.1:8989/v2/action_executions 201

Overcloud Endpoint: http://10.1.2.5:5000/v2.0

Overcloud Deployed

clean_up DeployOvercloud:

END return value: 0

real 38m38.971s

user 0m3.605s

sys 0m0.466s

- Espere a que se complete el estado de pila de openstack:

[stack@director ~]$ openstack stack list

+--------------------------------------+------------+-----------------+----------------------+----------------------+

| ID | Stack Name | Stack Status | Creation Time | Updated Time |

+--------------------------------------+------------+-----------------+----------------------+----------------------+

| 5df68458-095d-43bd-a8c4-033e68ba79a0 | ADN-ultram | UPDATE_COMPLETE | 2017-11-02T21:30:06Z | 2017-11-06T21:40:58Z |

+--------------------------------------+------------+-----------------+----------------------+----------------------+

- Verifique que el nuevo nodo de cálculo se encuentre en el estado Activo:

[stack@director ~]$ source stackrc

[stack@director ~]$ nova list |grep compute-18

| 0f2d88cd-d2b9-4f28-b2ca-13e305ad49ea | pod1-compute-18 | ACTIVE | - | Running | ctlplane=192.200.0.117 |

[stack@director ~]$ source corerc

[stack@director ~]$ openstack hypervisor list |grep compute-18

| 63 | pod1-compute-18.localdomain |

Valores de configuración de reemplazo de servidor posterior

Después de agregar el servidor a la nube, consulte el siguiente enlace para aplicar la configuración que estaba presente anteriormente en el servidor antiguo:

Restauración de las VM

Caso 1. Compute Node Hosts solamente SF VM

Adición a la lista de agregación Nova

- Agregue el nodo de cálculo al host agregado y verifique si se ha agregado el host:

nova aggregate-add-host

[stack@director ~]$ nova aggregate-add-host VNF2-SERVICE2 pod1-compute-18.localdomain

nova aggregate-show

[stack@director ~]$ nova aggregate-show VNF2-SERVICE2

Recuperación de VM SF desde ESC

- La VM SF estaría en estado de error en la lista nova:

[stack@director ~]$ nova list |grep VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d

| 49ac5f22-469e-4b84-badc-031083db0533 | VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d | ERROR | - | NOSTATE |

- Recupere la VM SF del ESC:

[admin@VNF2-esc-esc-0 ~]$ sudo /opt/cisco/esc/esc-confd/esc-cli/esc_nc_cli recovery-vm-action DO VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d

[sudo] password for admin:

Recovery VM Action

/opt/cisco/esc/confd/bin/netconf-console --port=830 --host=127.0.0.1 --user=admin --privKeyFile=/root/.ssh/confd_id_dsa --privKeyType=dsa --rpc=/tmp/esc_nc_cli.ZpRCGiieuW

<?xml version="1.0" encoding="UTF-8"?>

<rpc-reply xmlns="urn:ietf:params:xml:ns:netconf:base:1.0" message-id="1">

<ok/>

</rpc-reply>

- Supervise el archivo yangesc.log:

admin@VNF2-esc-esc-0 ~]$ tail -f /var/log/esc/yangesc.log

…

14:59:50,112 07-Nov-2017 WARN Type: VM_RECOVERY_COMPLETE

14:59:50,112 07-Nov-2017 WARN Status: SUCCESS

14:59:50,112 07-Nov-2017 WARN Status Code: 200

14:59:50,112 07-Nov-2017 WARN Status Msg: Recovery: Successfully recovered VM [VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d].

- Asegúrese de que la tarjeta SF aparezca como SF en espera en el VNF

Caso 2. Calcular los hosts de nodos CF, ESC, EM y UAS

Adición a la lista de agregación Nova

Agregue el nodo de cómputo a los hosts agregados y verifique si se ha agregado el host. En este caso, el nodo de cálculo se debe agregar a los agregados de host CF y EM.

nova aggregate-add-host

[stack@director ~]$ nova aggregate-add-host VNF2-CF-MGMT2 pod1-compute-18.localdomain

[stack@director ~]$ nova aggregate-add-host VNF2-EM-MGMT2 pod1-compute-18.localdomain

nova aggregate-show

[stack@director ~]$ nova aggregate-show VNF2-CF-MGMT2

[stack@director ~]$ nova aggregate-show VNF2-EM-MGMT2

Recuperación de la VM UAS

- Verifique el estado de la VM UAS en la lista nova y elimínelo:

[stack@director ~]$ nova list | grep VNF2-UAS-uas-0

| 307a704c-a17c-4cdc-8e7a-3d6e7e4332fa | VNF2-UAS-uas-0 | ACTIVE | - | Running | VNF2-UAS-uas-orchestration=172.168.11.10; VNF2-UAS-uas-management=172.168.10.3

[stack@tb5-ospd ~]$ nova delete VNF2-UAS-uas-0

Request to delete server VNF2-UAS-uas-0 has been accepted.

- Para recuperar la VM autovnf-uas, ejecute el script uas-check para verificar el estado. Debe informar de un error. A continuación, vuelva a ejecutar con la opción —fix para volver a crear la VM UAS que falta:

[stack@director ~]$ cd /opt/cisco/usp/uas-installer/scripts/

[stack@director scripts]$ ./uas-check.py auto-vnf VNF2-UAS

2017-12-08 12:38:05,446 - INFO: Check of AutoVNF cluster started

2017-12-08 12:38:07,925 - INFO: Instance 'vnf1-UAS-uas-0' status is 'ERROR'

2017-12-08 12:38:07,925 - INFO: Check completed, AutoVNF cluster has recoverable errors

[stack@director scripts]$ ./uas-check.py auto-vnf VNF2-UAS --fix

2017-11-22 14:01:07,215 - INFO: Check of AutoVNF cluster started

2017-11-22 14:01:09,575 - INFO: Instance VNF2-UAS-uas-0' status is 'ERROR'

2017-11-22 14:01:09,575 - INFO: Check completed, AutoVNF cluster has recoverable errors

2017-11-22 14:01:09,778 - INFO: Removing instance VNF2-UAS-uas-0'

2017-11-22 14:01:13,568 - INFO: Removed instance VNF2-UAS-uas-0'

2017-11-22 14:01:13,568 - INFO: Creating instance VNF2-UAS-uas-0' and attaching volume ‘VNF2-UAS-uas-vol-0'

2017-11-22 14:01:49,525 - INFO: Created instance ‘VNF2-UAS-uas-0'

- Inicie sesión en autovnf-uas. Espere unos minutos y UAS debe volver al estado correcto:

VNF2-autovnf-uas-0#show uas

uas version 1.0.1-1

uas state ha-active

uas ha-vip 172.17.181.101

INSTANCE IP STATE ROLE

-----------------------------------

172.17.180.6 alive CONFD-SLAVE

172.17.180.7 alive CONFD-MASTER

172.17.180.9 alive NA

Nota: Si falla uas-check.py —fix, es posible que necesite copiar este archivo y ejecutarlo de nuevo.

[stack@director ~]$ mkdir –p /opt/cisco/usp/apps/auto-it/common/uas-deploy/

[stack@director ~]$ cp /opt/cisco/usp/uas-installer/common/uas-deploy/userdata-uas.txt /opt/cisco/usp/apps/auto-it/common/uas-deploy/

Recuperación de VM ESC

- Verifique el estado de la VM ESC de la lista nova y elimínelo:

stack@director scripts]$ nova list |grep ESC-1

| c566efbf-1274-4588-a2d8-0682e17b0d41 | VNF2-ESC-ESC-1 | ACTIVE | - | Running | VNF2-UAS-uas-orchestration=172.168.11.14; VNF2-UAS-uas-management=172.168.10.4 |

[stack@director scripts]$ nova delete VNF2-ESC-ESC-1

Request to delete server VNF2-ESC-ESC-1 has been accepted.

- En AutoVNF-UAS, busque la transacción de implementación ESC y en el registro de la transacción busque la línea de comandos boot_vm.py para crear la instancia ESC:

ubuntu@VNF2-uas-uas-0:~$ sudo -i

root@VNF2-uas-uas-0:~# confd_cli -u admin -C

Welcome to the ConfD CLI

admin connected from 127.0.0.1 using console on VNF2-uas-uas-0

VNF2-uas-uas-0#show transaction

TX ID TX TYPE DEPLOYMENT ID TIMESTAMP STATUS

-----------------------------------------------------------------------------------------------------------------------------

35eefc4a-d4a9-11e7-bb72-fa163ef8df2b vnf-deployment VNF2-DEPLOYMENT 2017-11-29T02:01:27.750692-00:00 deployment-success

73d9c540-d4a8-11e7-bb72-fa163ef8df2b vnfm-deployment VNF2-ESC 2017-11-29T01:56:02.133663-00:00 deployment-success

VNF2-uas-uas-0#show logs 73d9c540-d4a8-11e7-bb72-fa163ef8df2b | display xml

<config xmlns="http://tail-f.com/ns/config/1.0">

<logs xmlns="http://www.cisco.com/usp/nfv/usp-autovnf-oper">

<tx-id>73d9c540-d4a8-11e7-bb72-fa163ef8df2b</tx-id>

<log>2017-11-29 01:56:02,142 - VNFM Deployment RPC triggered for deployment: VNF2-ESC, deactivate: 0

2017-11-29 01:56:02,179 - Notify deployment

..

2017-11-29 01:57:30,385 - Creating VNFM 'VNF2-ESC-ESC-1' with [python //opt/cisco/vnf-staging/bootvm.py VNF2-ESC-ESC-1 --flavor VNF2-ESC-ESC-flavor --image 3fe6b197-961b-4651-af22-dfd910436689 --net VNF2-UAS-uas-management --gateway_ip 172.168.10.1 --net VNF2-UAS-uas-orchestration --os_auth_url http://10.1.2.5:5000/v2.0 --os_tenant_name core --os_username ****** --os_password ****** --bs_os_auth_url http://10.1.2.5:5000/v2.0 --bs_os_tenant_name core --bs_os_username ****** --bs_os_password ****** --esc_ui_startup false --esc_params_file /tmp/esc_params.cfg --encrypt_key ****** --user_pass ****** --user_confd_pass ****** --kad_vif eth0 --kad_vip 172.168.10.7 --ipaddr 172.168.10.6 dhcp --ha_node_list 172.168.10.3 172.168.10.6 --file root:0755:/opt/cisco/esc/esc-scripts/esc_volume_em_staging.sh:/opt/cisco/usp/uas/autovnf/vnfms/esc-scripts/esc_volume_em_staging.sh --file root:0755:/opt/cisco/esc/esc-scripts/esc_vpc_chassis_id.py:/opt/cisco/usp/uas/autovnf/vnfms/esc-scripts/esc_vpc_chassis_id.py --file root:0755:/opt/cisco/esc/esc-scripts/esc-vpc-di-internal-keys.sh:/opt/cisco/usp/uas/autovnf/vnfms/esc-scripts/esc-vpc-di-internal-keys.sh

Guarde la línea boot_vm.py en un archivo de secuencia de comandos de shell (esc.sh) y actualice todas las líneas de nombre de usuario ***** y contraseña ***** con la información correcta (normalmente core/<PASSWORD>). También debe quitar la opción -encrypt_key. Para user_pass y user_confd_pass, debe utilizar el formato - nombre de usuario: password (ejemplo - admin:<PASSWORD>).

- Busque la URL a bootvm.py desde running-config y obtenga el archivo bootvm.py a la VM autovnf-uas. En este caso, 10.1.2.3 es la IP de la VM Auto-IT:

root@VNF2-uas-uas-0:~# confd_cli -u admin -C

Welcome to the ConfD CLI

admin connected from 127.0.0.1 using console on VNF2-uas-uas-0

VNF2-uas-uas-0#show running-config autovnf-vnfm:vnfm

…

configs bootvm

value http:// 10.1.2.3:80/bundles/5.1.7-2007/vnfm-bundle/bootvm-2_3_2_155.py

!

root@VNF2-uas-uas-0:~# wget http://10.1.2.3:80/bundles/5.1.7-2007/vnfm-bundle/bootvm-2_3_2_155.py

--2017-12-01 20:25:52-- http://10.1.2.3 /bundles/5.1.7-2007/vnfm-bundle/bootvm-2_3_2_155.py

Connecting to 10.1.2.3:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 127771 (125K) [text/x-python]

Saving to: ‘bootvm-2_3_2_155.py’

100%[=====================================================================================>] 127,771 --.-K/s in 0.001s

2017-12-01 20:25:52 (173 MB/s) - ‘bootvm-2_3_2_155.py’ saved [127771/127771]

- Cree un archivo /tmp/esc_params.cfg:

root@VNF2-uas-uas-0:~# echo "openstack.endpoint=publicURL" > /tmp/esc_params.cfg

- Ejecute el script de shell para implementar ESC desde el nodo UAS:

root@VNF2-uas-uas-0:~# /bin/sh esc.sh

+ python ./bootvm.py VNF2-ESC-ESC-1 --flavor VNF2-ESC-ESC-flavor --image 3fe6b197-961b-4651-af22-dfd910436689

--net VNF2-UAS-uas-management --gateway_ip 172.168.10.1 --net VNF2-UAS-uas-orchestration --os_auth_url

http://10.1.2.5:5000/v2.0 --os_tenant_name core --os_username core --os_password <PASSWORD> --bs_os_auth_url

http://10.1.2.5:5000/v2.0 --bs_os_tenant_name core --bs_os_username core --bs_os_password <PASSWORD>

--esc_ui_startup false --esc_params_file /tmp/esc_params.cfg --user_pass admin:<PASSWORD> --user_confd_pass

admin:<PASSWORD> --kad_vif eth0 --kad_vip 172.168.10.7 --ipaddr 172.168.10.6 dhcp --ha_node_list 172.168.10.3

172.168.10.6 --file root:0755:/opt/cisco/esc/esc-scripts/esc_volume_em_staging.sh:/opt/cisco/usp/uas/autovnf/vnfms/esc-scripts/esc_volume_em_staging.sh

--file root:0755:/opt/cisco/esc/esc-scripts/esc_vpc_chassis_id.py:/opt/cisco/usp/uas/autovnf/vnfms/esc-scripts/esc_vpc_chassis_id.py

--file root:0755:/opt/cisco/esc/esc-scripts/esc-vpc-di-internal-keys.sh:/opt/cisco/usp/uas/autovnf/vnfms/esc-scripts/esc-vpc-di-internal-keys.sh

- Inicie sesión en el nuevo ESC y verifique el estado de la copia de seguridad:

ubuntu@VNF2-uas-uas-0:~$ ssh admin@172.168.11.14

…

####################################################################

# ESC on VNF2-esc-esc-1.novalocal is in BACKUP state.

####################################################################

[admin@VNF2-esc-esc-1 ~]$ escadm status

0 ESC status=0 ESC Backup Healthy

[admin@VNF2-esc-esc-1 ~]$ health.sh

============== ESC HA (BACKUP) ===================================================

ESC HEALTH PASSED

Recuperación de VM CF y EM de ESC

- Verifique el estado de las VM CF y EM de la lista nova. Deben estar en el estado ERROR

[stack@director ~]$ source corerc

[stack@director ~]$ nova list --field name,host,status |grep -i err

| 507d67c2-1d00-4321-b9d1-da879af524f8 | VNF2-DEPLOYM_XXXX_0_c8d98f0f-d874-45d0-af75-88a2d6fa82ea | None | ERROR|

| f9c0763a-4a4f-4bbd-af51-bc7545774be2 | VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229 |None | ERROR

- Inicie sesión en ESC Master, ejecute recovery-vm-action para cada EM y VM CF afectadas. Sé paciente. ESC programaría la acción de recuperación y podría no ocurrir durante unos minutos. Supervise el archivo yangesc.log:

sudo /opt/cisco/esc/esc-confd/esc-cli/esc_nc_cli recovery-vm-action DO

[admin@VNF2-esc-esc-0 ~]$ sudo /opt/cisco/esc/esc-confd/esc-cli/esc_nc_cli recovery-vm-action DO VNF2-DEPLOYMENT-_VNF2-D_0_a6843886-77b4-4f38-b941-74eb527113a8

[sudo] password for admin:

Recovery VM Action

/opt/cisco/esc/confd/bin/netconf-console --port=830 --host=127.0.0.1 --user=admin --privKeyFile=/root/.ssh/confd_id_dsa --privKeyType=dsa --rpc=/tmp/esc_nc_cli.ZpRCGiieuW

<?xml version="1.0" encoding="UTF-8"?>

<rpc-reply xmlns="urn:ietf:params:xml:ns:netconf:base:1.0" message-id="1">

<ok/>

</rpc-reply>

[admin@VNF2-esc-esc-0 ~]$ tail -f /var/log/esc/yangesc.log

…

14:59:50,112 07-Nov-2017 WARN Type: VM_RECOVERY_COMPLETE

14:59:50,112 07-Nov-2017 WARN Status: SUCCESS

14:59:50,112 07-Nov-2017 WARN Status Code: 200

14:59:50,112 07-Nov-2017 WARN Status Msg: Recovery: Successfully recovered VM [VNF2-DEPLOYMENT-_VNF2-D_0_a6843886-77b4-4f38-b941-74eb527113a8]

- Inicie sesión en el nuevo EM y verifique que el estado de EM esté activo:

ubuntu@VNF2vnfddeploymentem-1:~$ /opt/cisco/ncs/current/bin/ncs_cli -u admin -C

admin connected from 172.17.180.6 using ssh on VNF2vnfddeploymentem-1

admin@scm# show ems

EM VNFM

ID SLA SCM PROXY

---------------------

2 up up up

3 up up up

- Inicie sesión en el VNF de StarOS y verifique que la tarjeta CF esté en estado de espera

Controlar la falla de recuperación ESC

En los casos en los que ESC no puede iniciar la VM debido a un estado inesperado, Cisco recomienda realizar un switchover ESC reiniciando el ESC maestro. La conmutación ESC tardaría aproximadamente un minuto. Ejecute la secuencia de comandos "health.sh" en el nuevo Master ESC para comprobar si el estado está activo. Master ESC para iniciar la VM y corregir el estado de la VM. Esta tarea de recuperación tardaría hasta 5 minutos en completarse.

Puede supervisar /var/log/esc/yangesc.log y /var/log/esc/escmanager.log. Si NO ve que se recupera la máquina virtual después de 5-7 minutos, el usuario tendría que ir y realizar la recuperación manual de las máquinas virtuales afectadas.

Actualización de configuración de implementación automática

Desde la máquina virtual AutoDeploy, edite el archivo autoDeploy.cfg y reemplace el servidor informático antiguo por el nuevo. A continuación, cargue replace en confd_cli. Este paso es necesario para que la desactivación de la implementación se realice correctamente más adelante.

root@auto-deploy-iso-2007-uas-0:/home/ubuntu# confd_cli -u admin -C

Welcome to the ConfD CLI

admin connected from 127.0.0.1 using console on auto-deploy-iso-2007-uas-0

auto-deploy-iso-2007-uas-0#config

Entering configuration mode terminal

auto-deploy-iso-2007-uas-0(config)#load replace autodeploy.cfg

Loading. 14.63 KiB parsed in 0.42 sec (34.16 KiB/sec)

auto-deploy-iso-2007-uas-0(config)#commit

Commit complete.

auto-deploy-iso-2007-uas-0(config)#end

Reinicie uas-confd e implemente automáticamente los servicios después del cambio de configuración.

root@auto-deploy-iso-2007-uas-0:~# service uas-confd restart

uas-confd stop/waiting

uas-confd start/running, process 14078

root@auto-deploy-iso-2007-uas-0:~# service uas-confd status

uas-confd start/running, process 14078

root@auto-deploy-iso-2007-uas-0:~# service autodeploy restart

autodeploy stop/waiting

autodeploy start/running, process 14017

root@auto-deploy-iso-2007-uas-0:~# service autodeploy status

autodeploy start/running, process 14017

Habilitación de Syslogs

Para habilitar los registros del sistema para el servidor UCS, los componentes de Openstack y las VM recuperadas, siga las secciones

"Vuelva a habilitar syslog para los componentes UCS y Openstack" y "Habilitar syslog para los VNF" en el siguiente enlace:

Información Relacionada

- https://access.redhat.com/documentation/en-us/red_hat_openstack_platform/10/html/director_installation_and_usage/sect-Scaling_the_Overcloud - sect-Remove_Compute_Nodes

- https://access.redhat.com/documentation/en-us/red_hat_openstack_platform/10/html/director_installation_and_usage/sect-Scaling_the_Overcloud#sect-Adding_Compute_or_Ceph_Storage_Nodes

- Soporte Técnico y Documentación - Cisco Systems

Historial de revisiones

| Revisión | Fecha de publicación | Comentarios |

|---|---|---|

1.0 |

01-Jun-2018

|

Versión inicial |

Con la colaboración de ingenieros de Cisco

- Partheeban RajagopalCisco Advanced Services

- Padmaraj RamanoudjamCisco Advanced Services

Contacte a Cisco

- Abrir un caso de soporte

- (Requiere un Cisco Service Contract)

Comentarios

Comentarios