Extending the Cisco SD-WAN Fabric into Azure with Cisco Cloud onRamp for Multi-Cloud

Define – Cisco Cloud onRamp for Multi-Cloud Introduction

Deploy - Cisco Cloud onRamp for Multi-Cloud with Azure

Process: Deploy a Cloud Gateway with Cisco Cloud onRamp for Multi-Cloud

Process: Map Host vNets to the Cloud Gateway

Operate - Cisco Cloud onRamp for Multi-Cloud

Process: Modifying an Existing Cloud Gateway Deployment (Optional)

Process: Adding an Inbound Route Map to Filter BGP Routes from Azure (Optional)

Process: Monitor Cisco Cloud onRamp for Multi-Cloud

Appendix A: Changes from Previous Versions

Appendix B: Hardware and Software Used for Validation

Appendix C: Cisco Catalyst 8000v Router Configuration Template Summary

Appendix D: Cloud Gateway Catalyst 8000v SD-WAN Edge Router CLI Configurations

Appendix E: Azure Prerequisites

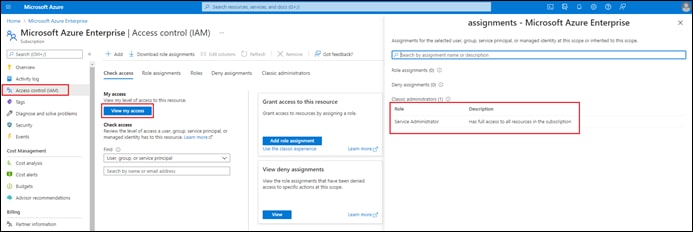

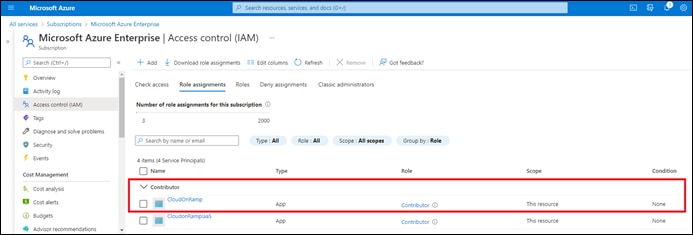

Process: Check Azure Subscription Permissions

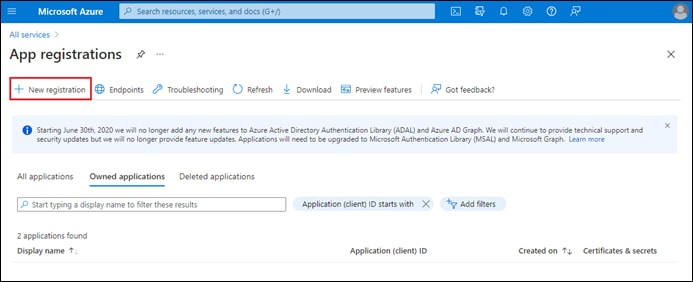

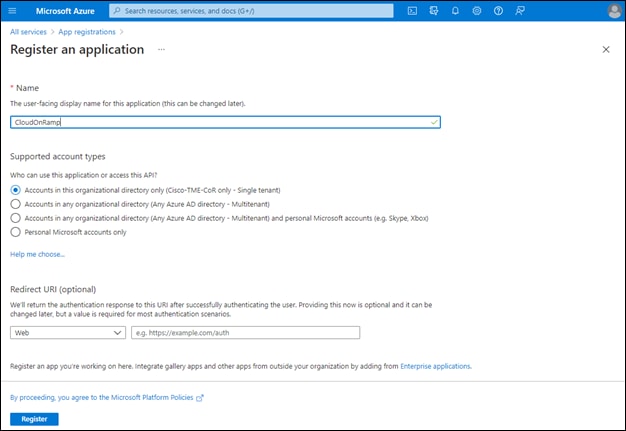

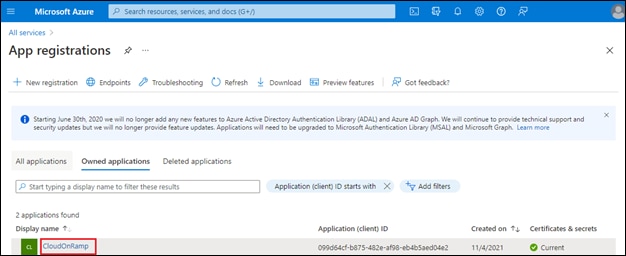

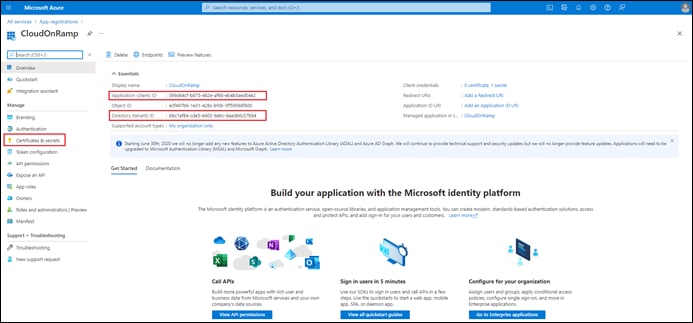

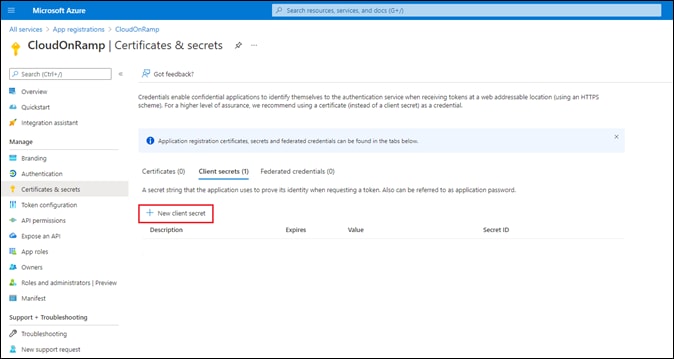

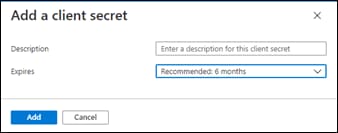

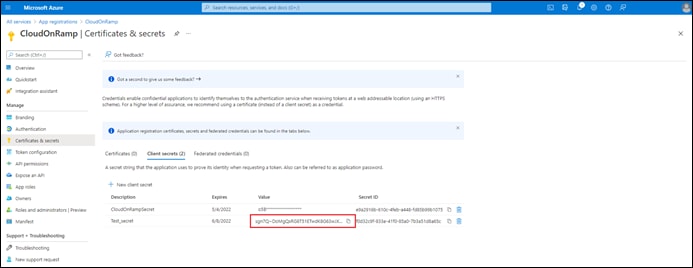

Process: Register an Application with Azure Active Directory (AD)

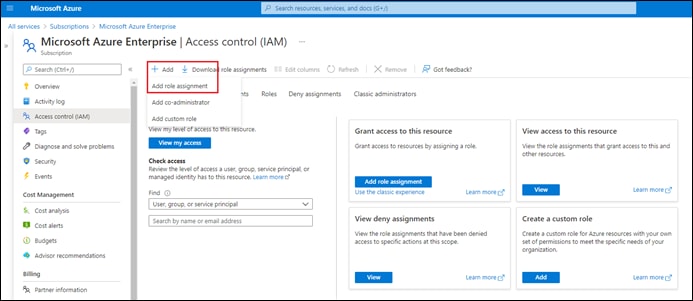

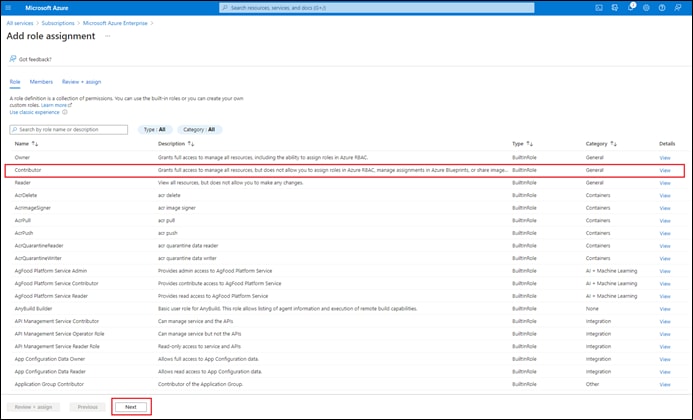

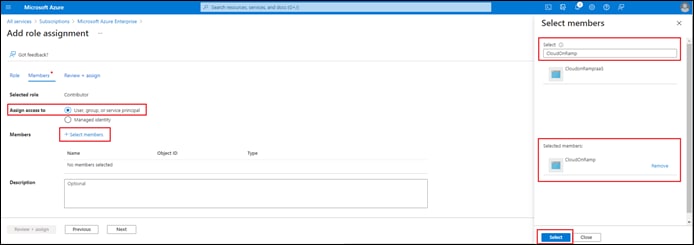

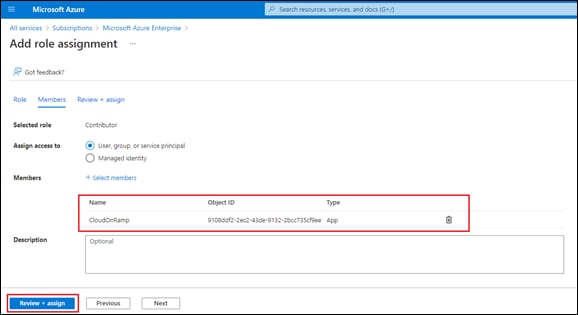

Process: Assign a Role to the Application

Appendix F: Integration with a Service vNet Hosting a Load-Balancer and Firewalls

About the Guide

The following IaaS public cloud providers are supported within the Cloud onRamp for Multi-Cloud feature within Cisco SD-WAN, as of Cisco vManage release 20.6.1:

● Amazon Web Services (AWS)

● Microsoft Azure

● Google Cloud Platform (GCP)

This guide is intended to provide technical guidance to design, deploy, and operate Cisco Cloud onRamp for Multi-Cloud with Azure. As of software release 17.6.1 / 20.6.1, Cisco Cloud onRamp for Multi-Cloud with Azure supports the instantiation of a single pair of Cisco Catalyst 8000v routers functioning as Network Virtual Appliances (NVAs) within a virtual hub (vHub) within an Azure virtual WAN (vWAN).

| Technical Note |

| Cisco Cloud onRamp for Multi-Cloud with Azure does not support the instantiation of Cisco CSR 1000v or Cisco vEdge Cloud routers within the Azure vHub. Only Cisco Catalyst 8000v routers are supported. |

Because this deployment guide focuses primarily on Cisco Cloud onRamp for Multi-Cloud, the following are presumed:

● Cisco SD-WAN controllers (vManage, vBond, and vSmart) are already deployed with valid certificates.

● Cisco SD-WAN Edge devices at other branch and campus locations, as well as the vSmart controllers, have configurations in place (feature templates defined and device templates attached) and are in vManage mode.

● Cisco SD-WAN Edge devices at other branch and campus locations have been onboarded, have established control connections to the Cisco SD-WAN controllers, and have established data tunnels to other SD-WAN Edge devices across all available transports.

For more information on SD-WAN controller design and deployment, please refer to the Cisco SD-WAN Design Guide and the Cisco SD-WAN End-to-End Deployment Guide at the following URLs:

https://www.cisco.com/c/en/us/td/docs/solutions/CVD/SDWAN/cisco-sdwan-design-guide.html

https://www.cisco.com/c/dam/en/us/td/docs/solutions/CVD/SDWAN/SD-WAN-End-to-End-Deployment-Guide.pdf

This document contains four major sections:

● The Define section introduces the Cisco Cloud onRamp for Multi-Cloud feature and explains the overall solution, along with the benefits of deploying it.

● The Design section describes the Microsoft Azure vHub design used by Cisco Cloud onRamp for Multi-Cloud.

● The Deploy section is divided into two parts. The first part provides information regarding the prerequisites necessary for deploying Cisco Cloud onRamp for Multi-Cloud using Microsoft Azure as the IaaS public cloud provider. The second part discusses the automated deployment workflow of Cisco Cloud onRamp for Multi-Cloud with Azure.

● The Operate section shows additional operations and monitoring capabilities of Cisco Cloud onRamp for Multi-Cloud available through the Cisco vManage web-based graphical user interface (GUI).

Audience

The audience for this document includes network design engineers, network operations personnel, and security operations personnel who wish to implement Cisco SD-WAN secure virtual private network (VPN) connectivity from their private networks to one or more Microsoft Azure virtual networks (vNets).

Define – Cisco Cloud onRamp for Multi-Cloud Introduction

About the Solution

In a multi-cloud world, organizations realize the benefits of cloud computing services such as infrastructure as a service (IaaS) and have transitioned to either a hybrid model – using both on-prem and cloud-based data centers, or a cloud-first approach. IaaS public cloud providers, such as Microsoft Azure, allow organizations to prototype and deploy new applications more rapidly and cost-effectively. Instead of procuring, installing, and managing hardware – which could take months to accomplish – organizations can easily use the on-demand and scalable compute services in Azure as needed. This allows your organization to focus its resources on applications rather than on managing the data center and physical infrastructure.

With the use of IaaS, expenses shift from fixed costs for hardware, software, and data center infrastructure to variable costs based on the usage of compute resources and the amount of data transferred between the private data center, campus, and branch locations, and the IaaS public cloud provider. Hence, visibility into the usage of such resources for cost tracking and/or internal billing purposes is critical.

This guide focuses on how to implement secure network connectivity from one or more private network campus and/or branch locations to one or more Microsoft Azure virtual networks (vNets) using the Cisco SD-WAN Cloud onRamp for Multi-Cloud feature within Cisco vManage version 20.6.1. A vNet is an on-demand virtual network, logically isolated from other virtual networks within the Azure IaaS public cloud.

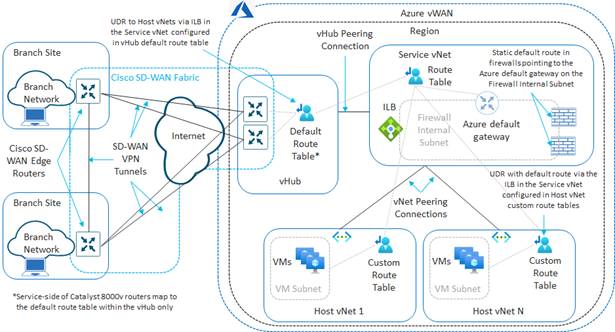

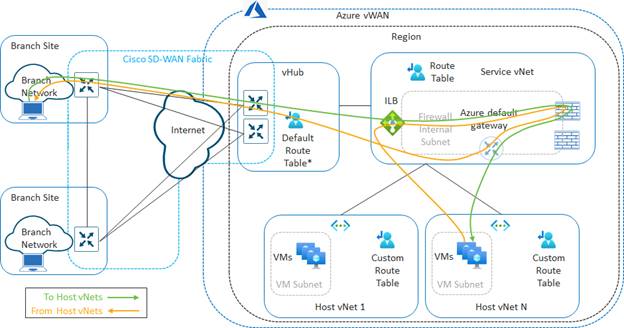

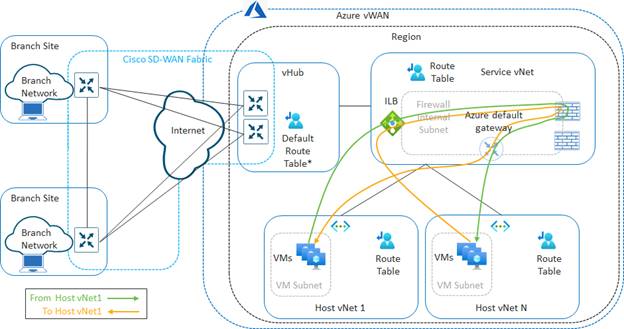

Design Overview

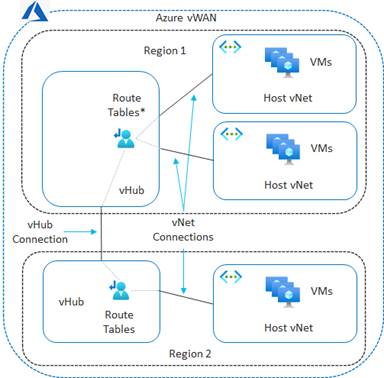

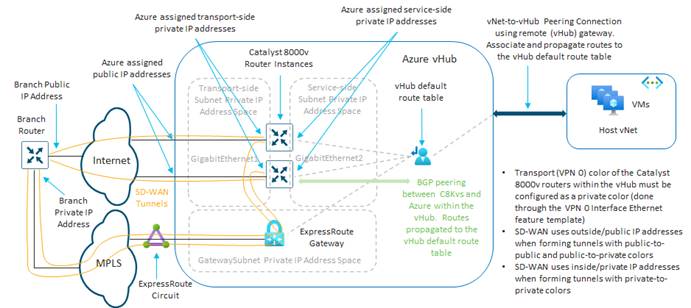

The Cisco Cloud onRamp for Multi-Cloud design extends the Cisco SD-WAN fabric to Azure through integration with a virtual WAN (vWAN). A vWAN is a global construct within Azure, representing the overall network deployment. Within the vWAN, there can be multiple virtual hubs (vHubs) per Azure region. A vHub is a special transit virtual network (vNet) managed by Azure. Since the vHub is completely managed by Azure, IP addresses are dynamically assigned by Azure based upon the address space specified when the vHub is instantiated. Azure automatically partitions the vHub IP address space for each of the subnets needed within the vHub itself.

Host vNets connect via vNet peering connections to a vHub within their Azure region. Host vNet connectivity between Azure regions can be provided through a combination of vHubs with vNet peering within each region, along with vHub-to-vHub peering between regions within the overall vWAN. The Azure vWAN design therefore provides for scalable connectivity between host vNets within an Azure region and between regions, as shown in the following figure.

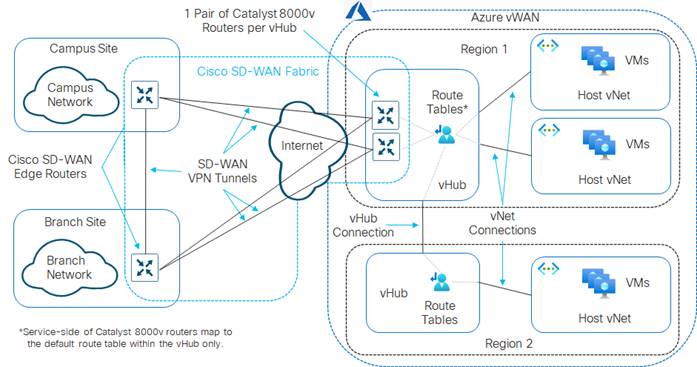

With the Cisco Cloud onRamp for Multi-Cloud design, Cisco has partnered with Microsoft to provide the ability to instantiate a pair of Cisco Catalyst 8000v virtual form-factor SD-WAN routers functioning as Network Virtual Appliances (NVAs) directly within an Azure vHub. The combination of the vHub with an instantiated pair of Cisco Catalyst 8000v SD-WAN routers is referred to as a Cloud Gateway within this document.

The Cisco Cloud onRamp for Multi-Cloud feature provides the following benefits:

● Automated creation of the vWAN and vHub within Azure if necessary.

● Automation of the instantiation of the Catalyst 8000v SD-WAN routers within the vHub.

● Automated connectivity of the Catalyst 8000v routers to the rest of the Cisco SD-WAN fabric (overlay network) through SD-WAN IPsec VPN tunnels established over the Internet functioning as the underlay connectivity.

● Automated connection of selected host vNets to the vHub, through vNet connections.

The Cisco Cloud onRamp for Multi-Cloud feature is flexible in terms of whether you have already provisioned a vWAN and/or a vHub within your Azure deployment. When you configure the Cloud Gateway within the vManage web-based GUI, you can choose to use an existing vWAN and/or vHub, or you can choose to allow Cloud onRamp for Multi-Cloud to create them for you.

As of software release 17.6.1 / 20.6.1, Cisco Cloud onRamp for Multi-Cloud supports a single Azure vWAN only. Multiple Azure cloud accounts (consisting of Subscription ID, Tenant ID, Application ID, and Secret Key) may be added to Cisco Cloud onRamp for Multi-Cloud. However, when the first Cloud Gateway is instantiated within Cisco Cloud onRamp for Multi-Cloud, the Azure Resource Group in which the vWAN is created (belonging to the Azure subscription under which the Cloud Gateway is created) must be used for all further Cloud Gateways within Cloud onRamp. Hence, the same vWAN must be used for all Cloud Gateways instantiated within Cisco Cloud onRamp for Multi-Cloud.

The pair of Cisco Catalyst 8000v virtual form-factor routers instantiated within the vHub provides the connectivity between the Cisco SD-WAN network and the Microsoft Azure vWAN, as shown in the following figure.

As of software release 17.6.1 / 20.6.1, only two Cisco Catalyst 8000v SD-WAN routers are provisioned by Cloud onRamp for Multi-Cloud within a vHub. The pair of Cisco Catalyst 8000v routers is instantiated within the vHub for resiliency. Throughput capacity of the SD-WAN connectivity is based upon the underlying Azure virtual machines (VMs) chosen to run the Catalyst 8000v router instances. Additional SD-WAN routers cannot be provisioned within the vHub via Cloud onRamp for Multi-Cloud if additional capacity is required.

As of software release 17.6.1 / 20.6.1 only a single Cloud Gateway – and more specifically a single pair of Catalyst 8000v routers – can be instantiated within a given Azure region. However, each Azure region may have its own vHub. You can create a Cloud Gateway in each Azure region within the vWAN to scale the throughput capacity across the deployment.
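The constraints described above (a single vWAN and resource group shared by all Cloud Gateways, and at most one Cloud Gateway per Azure region) can be checked against a deployment plan before starting the workflow. The following Python sketch is illustrative only: the `PlannedGateway` record, its field names, and the sample gateway, region, and resource names are hypothetical and are not part of vManage or Azure.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class PlannedGateway:
    """Hypothetical record describing one planned Cloud Gateway."""
    name: str
    region: str          # Azure region the vHub would live in
    resource_group: str  # Azure Resource Group that holds the vWAN
    vwan: str            # name of the Azure vWAN


def check_plan(gateways):
    """Return a list of human-readable problems found in the plan."""
    problems = []

    # At most one Cloud Gateway (one pair of Catalyst 8000v routers) per region.
    per_region = Counter(gw.region for gw in gateways)
    for region, count in sorted(per_region.items()):
        if count > 1:
            problems.append(
                f"region {region}: {count} Cloud Gateways planned, only 1 supported"
            )

    # Every Cloud Gateway must use the same resource group and vWAN.
    targets = {(gw.resource_group, gw.vwan) for gw in gateways}
    if len(targets) > 1:
        problems.append(
            f"{len(targets)} different resource group / vWAN pairs planned, only 1 supported"
        )

    return problems


plan = [
    PlannedGateway("cgw-west", "westus", "rg-sdwan", "vwan-sdwan"),
    PlannedGateway("cgw-east", "eastus", "rg-sdwan", "vwan-sdwan"),
    PlannedGateway("cgw-west-2", "westus", "rg-sdwan", "vwan-sdwan"),
]

for problem in check_plan(plan):
    print(problem)
```

In this sample plan, the third entry is flagged because a Cloud Gateway is already planned for the same region.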

Only two physical interfaces are created on each of the Cisco Catalyst 8000v SD-WAN routers when they are instantiated within the Azure vHub. The first physical interface (GigabitEthernet1) of each of the Catalyst 8000v routers is configured as a WAN transport (VPN 0) interface and attached to a subnet within the vHub. IP addresses are dynamically assigned from the subnet address space. Internet connectivity is provided through Azure public IP addresses, also assigned to the WAN interfaces. Hence, the WAN interfaces use the Internet for the SD-WAN fabric underlay connectivity. The SD-WAN fabric overlay is created via IPsec tunnels which use the Internet as the underlay.

| Technical Note |

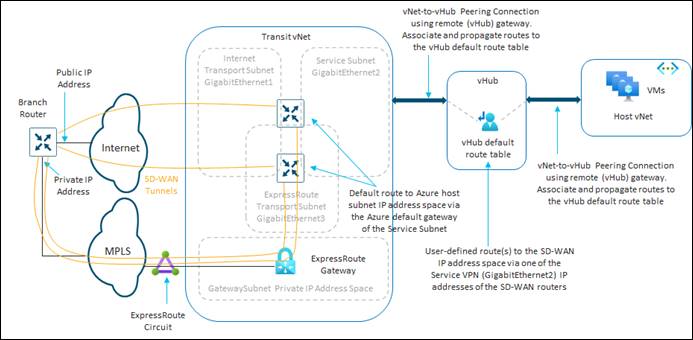

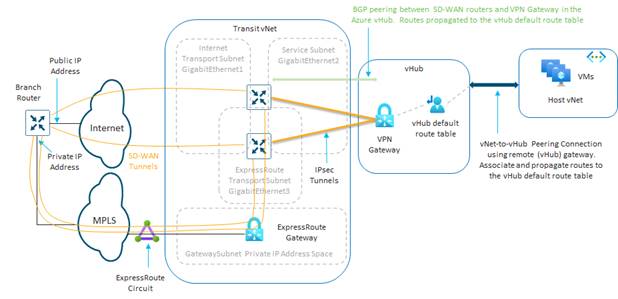

| As of software release 17.6.1 / 20.6.1, Cisco Cloud onRamp for Multi-Cloud does not automate any integration with Azure ExpressRoute as part of the SD-WAN underlay. However, Azure ExpressRoute circuits can be accommodated as part of the SD-WAN underlay through manual provisioning. This is discussed in the Alternative Azure Designs section. |

The second physical interface (GigabitEthernet2) of each of the Catalyst 8000v routers is configured as an SD-WAN service (LAN) interface and attached to a different subnet within the vHub. The LAN interfaces are dynamically assigned private IP addresses within the subnet IP address space within the Azure vHub. No Azure public IP addresses are assigned to the LAN interfaces.

The service VPN to which the LAN interfaces belong is dynamically configured when the first host vNet is mapped to the vHub within the Intent Management section of the Cloud onRamp for Multi-Cloud provisioning workflow. Since there is only a single LAN interface, and since Azure does not support sub-interfaces, only a single SD-WAN service VPN can currently be extended to Azure with Cisco Cloud onRamp for Multi-Cloud.

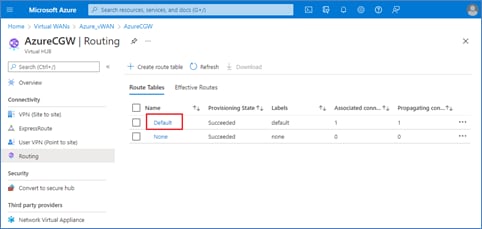

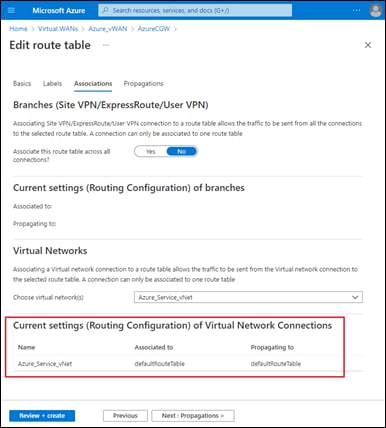

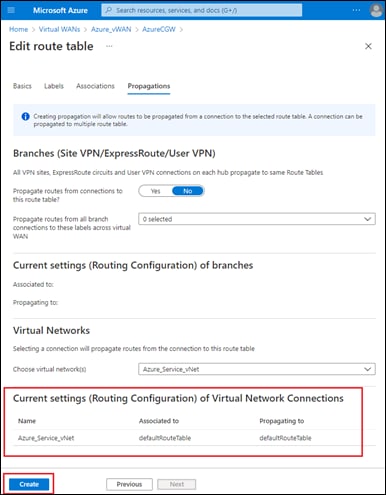

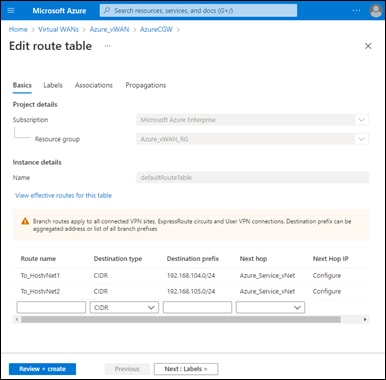

External BGP (eBGP) peering is established between the service-side physical LAN interfaces (GigabitEthernet2) of the Cisco Catalyst 8000v SD-WAN routers and the internal router/route server within the Azure vHub. The eBGP peering is dynamically configured on the Catalyst 8000v SD-WAN routers when the first host vNet is mapped to the vHub within the Intent Management section of the Cloud onRamp for Multi-Cloud provisioning workflow. Routes exchanged via the eBGP peering of the SD-WAN routers and the Azure vHub router/route server are mapped to the default route table of the vHub. Because only the default route table is used for the eBGP peering, the Cloud onRamp for Multi-Cloud workflow that automates the mapping of host vNets to the vHub also maps host vNets only to the default route table of the vHub. In other words, only the default route table of the vHub is used with the automation of Cisco Cloud onRamp for Multi-Cloud.

The Cisco Cloud onRamp for Multi-Cloud automation also configures propagation of routes between the host vNets such that the host vNets can communicate with each other. Within the Catalyst 8000v routers instantiated as NVAs within the vHub, BGP routes are redistributed into the Overlay Management Protocol (OMP) and vice-versa. This allows the host vNets to communicate with the rest of the SD-WAN fabric overlay – for the service VPN mapped within the Intent Management section of the Cloud onRamp for Multi-Cloud automation workflow.

Deploy - Cisco Cloud onRamp for Multi-Cloud with Azure

Cisco Cloud onRamp for Multi-Cloud with Azure uses APIs to automate the following:

● Deployment of a vWAN within your Azure subscription if you have not already deployed an Azure vWAN. Cisco Cloud onRamp for Multi-Cloud can also use an existing vWAN if you have already deployed one within your subscription.

● Deployment of a vHub within the Azure region that you specify within the vManage workflow during deployment. Since the vHub resource is managed by Azure, all you need to specify is an IP address range. Azure creates all the necessary subnets, route tables, security groups, etc. as necessary for the vHub. Cisco Cloud onRamp for Multi-Cloud can also use an existing vHub if you have already deployed one within the Azure region.

| Technical Note |

| The minimum IP address CIDR block supported for a vHub is specified by Azure as /24. |

● Deployment of a single pair of Catalyst 8000v SD-WAN routers functioning as Network Virtual Appliances (NVAs) within the vHub. Each of the Cisco Catalyst 8000v routers within the pair is instantiated within a different Azure availability zone for resiliency. The combination of the Catalyst 8000v routers and the vHub is referred to as the Cloud Gateway.

● Connectivity of the Catalyst 8000v routers to the rest of the Cisco SD-WAN fabric through IPsec VPN tunnels established over the Internet (using Azure public IP addresses) as the transport (VPN 0) interface.

| Technical Note |

| As of software release 17.6.1 / 20.6.1, Cisco Cloud onRamp for Multi-Cloud does not automate any integration with Azure ExpressRoute as part of the SD-WAN underlay. However, Azure ExpressRoute circuits can be accommodated as part of the SD-WAN underlay through manual provisioning. This is discussed in the Alternative Azure Designs section of this document. |

● Discovery and tagging of host vNets within your Azure subscription or within other Azure subscriptions. Tagging is used to facilitate the delivery of intent – which refers to the automated mapping of host vNets to the vHub.

● Delivery of intent within the vManage workflow during the deployment. Intent includes the following:

◦ Mapping of one or more tagged host vNets to the vHub through Azure vNet connections. Host vNets are mapped to the default route table of the vHub.

◦ Dynamic configuration of the selected service VPN onto the service-side interface of the pair of Cisco Catalyst 8000v SD-WAN routers functioning as NVAs within the Azure vHub.

◦ Provisioning of external BGP (eBGP) peering between the pair of Cisco Catalyst 8000v SD-WAN routers functioning as NVAs within the Azure vHub and the internal router/route server within the Azure vHub. eBGP peering is done through the service-side interface of the pair of Cisco Catalyst 8000v SD-WAN routers.

◦ Mapping of host vNet IP address spaces and IP addresses learned via eBGP peering, to the default route table of the vHub.

| Technical Note |

| Azure uses BGP autonomous system number (ASN) 65515 by default for the vHub. You must select a different BGP ASN for the Cisco Catalyst 8000v SD-WAN routers functioning as NVAs within the vHub to accomplish external BGP (eBGP) peering. |
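Two of the values entered during deployment are constrained by the technical notes above: the vHub address space must be a /24 or larger block, and the BGP ASN chosen for the Catalyst 8000v routers must differ from the vHub default of 65515. The following Python sketch shows one way to pre-check both values using only the standard library. The function name, the constant names, and the sample address space and ASN are hypothetical; the sketch does not cover any other restrictions that Azure or vManage may apply.

```python
import ipaddress

AZURE_VHUB_DEFAULT_ASN = 65515  # default vHub ASN, per the technical note above
MAX_VHUB_PREFIX_LENGTH = 24     # a /24 is the smallest supported vHub block


def check_vhub_inputs(address_space, nva_asn):
    """Return a list of problems with the planned vHub prefix and NVA ASN."""
    problems = []

    # strict=True raises ValueError if host bits are set (e.g. 10.50.0.1/24).
    network = ipaddress.ip_network(address_space, strict=True)
    if network.prefixlen > MAX_VHUB_PREFIX_LENGTH:
        problems.append(
            f"{network} is smaller than the /{MAX_VHUB_PREFIX_LENGTH} minimum"
        )

    # eBGP requires the two peers to be in different autonomous systems.
    if nva_asn == AZURE_VHUB_DEFAULT_ASN:
        problems.append(
            f"ASN {nva_asn} is the vHub default; choose a different ASN for the NVAs"
        )

    return problems


# Hypothetical sample values, chosen only for illustration.
for problem in check_vhub_inputs("10.50.0.0/25", 65515):
    print(problem)
```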

The delivery of intent completes the configuration which provides the connectivity from the Cisco SD-WAN fabric to one or more Azure host vNets through the service VPN configured on the Cisco Catalyst 8000v SD-WAN routers within the vHub. All host vNets are mapped to a single service VPN on the Cisco Catalyst 8000v SD-WAN routers within the Azure vHub. If traffic separation is desired, it may be possible, depending upon your requirements, to accomplish it through centralized policy that allows and/or prevents connectivity between prefixes. Centralized policy is outside the scope of this guide.

Host vNets can be automatically mapped to the vHub by Cisco Cloud onRamp for Multi-Cloud within the workflow either during the creation of the Cloud Gateway or added after the Cloud Gateway has been created. For the use case in this deployment guide, the host vNets are mapped to the vHub after the Cloud Gateway has been created.

Before configuring Cisco Cloud onRamp for Multi-Cloud with Azure, the following prerequisites must be met:

● Verify you meet the Azure prerequisites

● Verify you have available software tokens / licenses for two Cisco Catalyst 8000v routers in Cisco vManage

● Configure feature and device templates for the Cisco Catalyst 8000v routers that will be used within the Cloud Gateway

● Deploy the device template to the software tokens representing the Cisco Catalyst 8000v routers that will be used within the Cloud Gateway

The following procedures assist with validating and configuring the prerequisites for Cisco Cloud onRamp for Multi-Cloud with Azure. If you already meet the prerequisites, you can skip this section and move on to the Deploy a Cloud Gateway with Cisco Cloud onRamp for Multi-Cloud section.

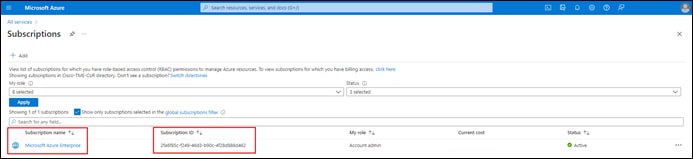

Procedure 1. Verify the Azure Prerequisites

The Azure prerequisites for deploying a Cloud Gateway with Cisco Cloud onRamp for Multi-Cloud are discussed in detail within Appendix E. At a high level, the requirements are summarized as follows:

● You must have a subscription within Azure marketplace to utilize Cisco Catalyst 8000v router images.

● You must ensure that your Azure subscription has the privileges to create the necessary resources within Azure.

● Your vManage must have Internet connectivity and the necessary firewall ports open for REST-based API calls between Cisco Cloud onRamp for Multi-Cloud and Azure.

Please refer to Appendix E for additional details on these requirements as necessary.

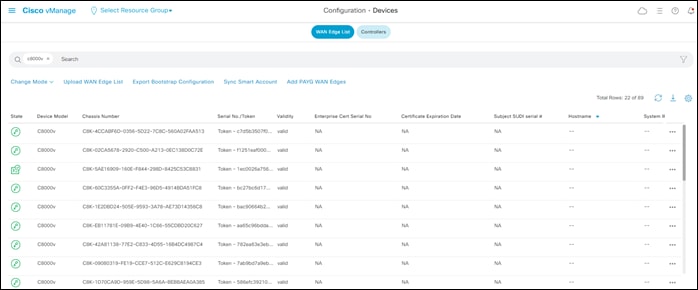

Procedure 2. Verify You Have at Least Two Unused Cisco Catalyst 8000v Routers within Cisco vManage

Cisco Cloud onRamp for Multi-Cloud with Azure supports the instantiation of Cisco Catalyst 8000v SD-WAN routers functioning as NVAs within the Azure vHub. Cisco CSR 1000v and vEdge Cloud routers are not supported with the Cisco Cloud onRamp for Multi-Cloud workflow with Azure.

The following are the steps for this procedure.

Step 1. Log into the Cisco vManage web console using the IP address or fully qualified domain name of your Cisco vManage instance.

For example: https://<Cisco_vManage_ipaddr_or_FQDN>:8443/

Step 2. In the navigation panel on the left side of the screen, select Configuration > Devices.

This will bring up the Devices screen. An example is shown in the figure below.

Step 3. Verify that you have at least two valid Cisco Catalyst 8000v routers that are not being used already. Valid unused devices should have the word “valid” under the Validity column. The Assigned Template, Device Status, Hostname, System IP, and Site ID columns should be blank.

Cisco Catalyst 8000v routers are sold as a software subscription license. Go to software.cisco.com and use the Plug and Play Connect portal to add tokens / licenses and sync or upload them to vManage if you have insufficient Cisco SD-WAN Edge software router tokens.
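The check in Step 3 can also be expressed as a simple filter over an exported device list. The following Python sketch assumes each device is represented as a dictionary whose keys mirror the column names on the Devices screen; those key names and the sample chassis identifiers are hypothetical, and the sketch does not call any vManage API.

```python
# Columns that should be blank for a device that is not yet in use.
BLANK_COLUMNS = ("assigned_template", "device_status", "hostname", "system_ip", "site_id")


def unused_valid_devices(devices):
    """Return devices marked valid whose assignment columns are all blank."""
    return [
        device
        for device in devices
        if device.get("validity", "").lower() == "valid"
        and all(not device.get(column) for column in BLANK_COLUMNS)
    ]


# Hypothetical sample rows, for illustration only.
devices = [
    {"chassis": "C8K-EXAMPLE-0001", "validity": "valid"},
    {"chassis": "C8K-EXAMPLE-0002", "validity": "valid", "hostname": "", "site_id": ""},
    {"chassis": "C8K-EXAMPLE-0003", "validity": "valid", "hostname": "Branch1", "site_id": "100"},
    {"chassis": "C8K-EXAMPLE-0004", "validity": "invalid"},
]

available = unused_valid_devices(devices)
if len(available) < 2:
    print("Fewer than two unused Catalyst 8000v routers are available")
else:
    print("Unused devices:", [device["chassis"] for device in available])
```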

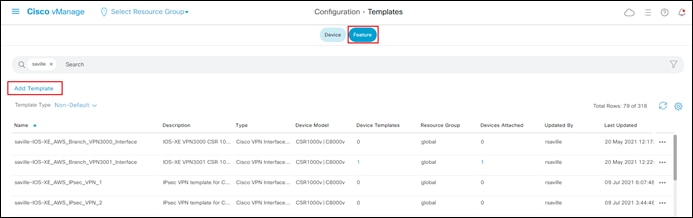

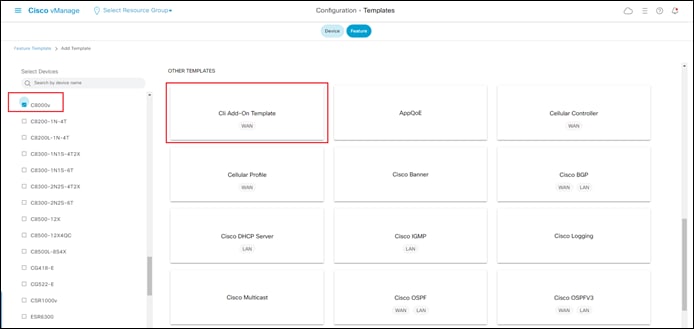

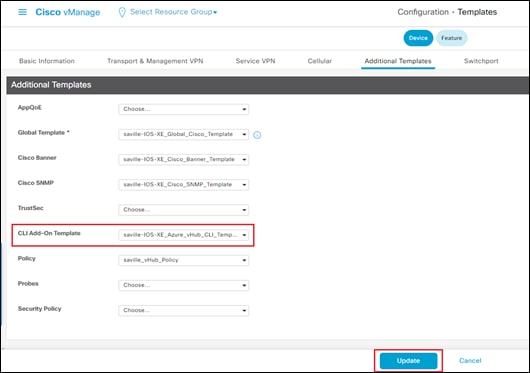

Procedure 3. Configure Feature & Device Templates for the Catalyst 8000v Routers used as NVAs within the Azure vHub

You must have at least a minimal device template assigned within Cisco vManage to the software tokens that represent the Cisco Catalyst 8000v routers that Cisco Cloud onRamp for Multi-Cloud provisions within the Azure vHub.

Cisco provides a minimum default device template named Default_Azure_vWAN_C8000V_Template_V01 to help you get started. This default device template consists of multiple default feature templates. If you choose, you can deploy the Cloud Gateway using these default device and feature templates.

It should be noted that you cannot modify a default template. However, you can change any values of device variables within the default templates that were manually entered while instantiating the SD-WAN routers, and then re-deploy the changes to the running SD-WAN routers. If you wish to change the template itself, you must first copy the template. Once you have a copy of the default template, you can then modify the copy of the template.

| Technical Note |

| When deploying a Cloud Gateway within Azure using vManage release 20.7 with Catalyst 8000v routers running software release 17.6, the default device template (Default_Azure_vWAN_C8000V_Template_V01) may result in Catalyst 8000v routers not establishing control plane connections with vManage. It is recommended that you try the following: delete the Cloud Gateway within vManage, copy the default device template (Default_Azure_vWAN_C8000V_Template_V01), edit the new template to remove the second Cisco VPN Interface Ethernet feature template (Default_Azure_vWAN_C8000V_VPN0_INTF_GE2_V01) from the transport VPN (VPN 0), and then re-deploy the Cloud Gateway using the new template with only one WAN transport interface. |

After you have implemented the Default_Azure_vWAN_C8000V_Template_V01 template within the Cloud Gateway, you can modify the configuration of the Catalyst 8000v SD-WAN routers within the Cloud Gateway by making copies of the device and feature templates, swapping out the necessary default templates with the copies as needed, and re-deploying to the running Catalyst 8000v SD-WAN routers within the Cloud Gateway. At a minimum it is recommended to modify the userid / password within the Cisco AAA template, in order to secure access to the Cisco Catalyst 8000v routers within the Cloud Gateway.

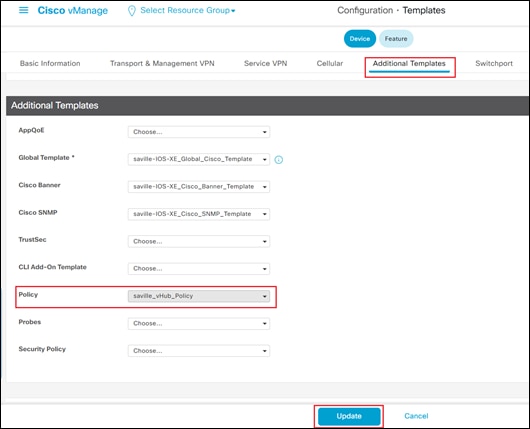

This deployment guide follows the strategy of deploying device and feature templates with a more complete configuration when the Cloud Gateway is instantiated. This template strategy is simply the choice of the author of this guide, based upon the concept that cloud infrastructure should be immutable as much as possible. The device template, saville-C8Kv-Azure-vHub, used for the Catalyst 8000v SD-WAN routers, as well as the various feature templates which make up the device template, are discussed in Appendix C.

| Technical Note |

| Please refer to the Cisco SD-WAN Deployment Guide located at the following URL, for step-by-step instructions as to how to create individual feature templates and device templates within Cisco vManage if necessary. https://www.cisco.com/c/dam/en/us/td/docs/solutions/CVD/SDWAN/SD-WAN-End-to-End-Deployment-Guide.pdf |

Procedure 4. Attach Device Templates to the Software Tokens Representing the Cisco Catalyst 8000v Routers used in the Azure Cloud Gateway

When you attach a device template to Cisco Catalyst 8000v routers, Cisco vManage builds the configurations based on the feature templates and then associates the configuration with the software tokens representing the Cisco Catalyst 8000v routers that will be used within the Cloud Gateway. For Cisco Catalyst 8000v routers, the configuration and a One-Time Password (OTP) that is unique to each device are included within the cloud-init file. The OTP is used by the Cisco Catalyst 8000v router to initially authenticate to the Cisco vBond and vManage controllers. The cloud-init files are automatically uploaded to Azure as Custom Data within the Cloud onRamp for Multi-Cloud automation when the Cisco Catalyst 8000v routers are instantiated.

However, before the configuration can be built and pushed out, you need to first define all variables within the feature templates attached to the device template. There are two ways to do this, either by entering in the values of the variables manually within the GUI, or by uploading a .csv file with a list of the variables and their values. Both methods are discussed within the Cisco SD-WAN Deployment Guide referenced earlier. This section of the deployment guide will only discuss entering values manually.

The following are the steps:

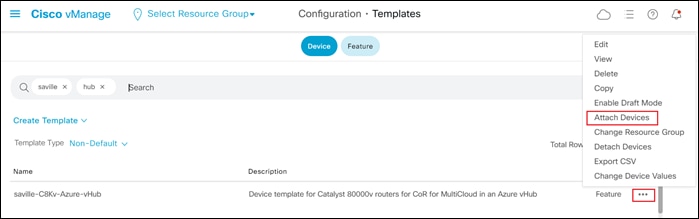

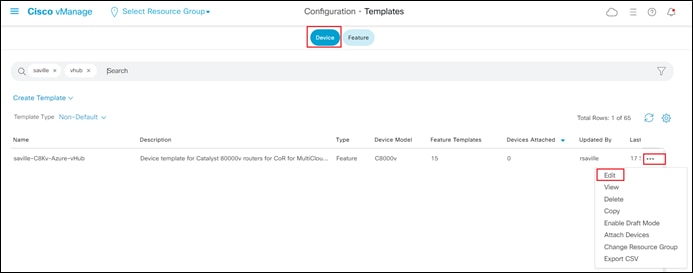

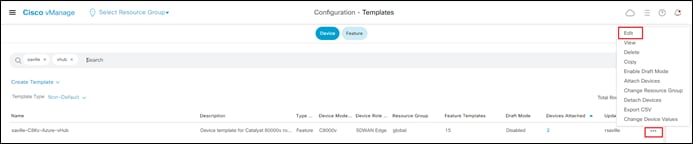

Step 1. Go to Configuration > Templates and select the Device tab.

Step 2. Find the desired device template.

The example within this section will highlight the steps for deploying the device template named saville-C8Kv-Azure-vHub to Cisco Catalyst 8000v routers.

Step 3. Select the … to the right of the template, and from the drop-down menu select Attach Devices.

An example is shown in the following figure.

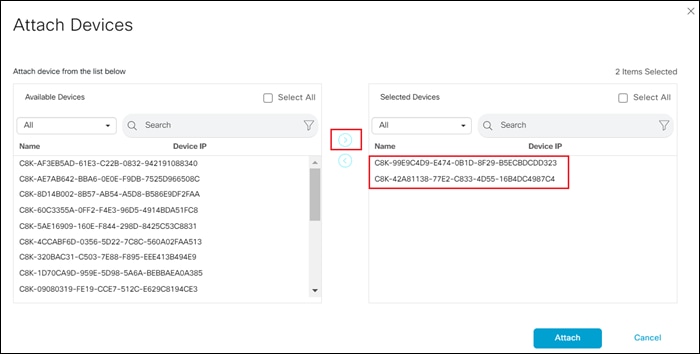

A pop-up window listing the available devices to be attached to this configuration will appear. The list of available devices will contain either the hostname and IP address of a device, if it is known through Cisco vManage; or the chassis serial number of a device, if it has not yet come up on the network and is unknown by Cisco vManage. Cisco Catalyst 8000v routers are assigned a chassis serial number although there is no physical chassis. The list contains only the device model that was defined when the template was created (for this deployment guide, Cisco Catalyst 8000v routers).

Step 4. Select the devices to which you wish to apply the configuration template and select the arrow to move the device from the Available Devices box to the Selected Devices box.

You can select multiple devices at one time by simply clicking each desired device.

Step 5. Click the Attach button.

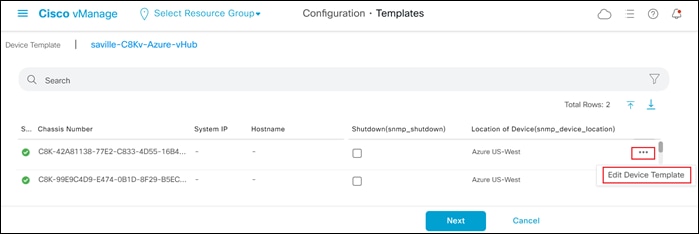

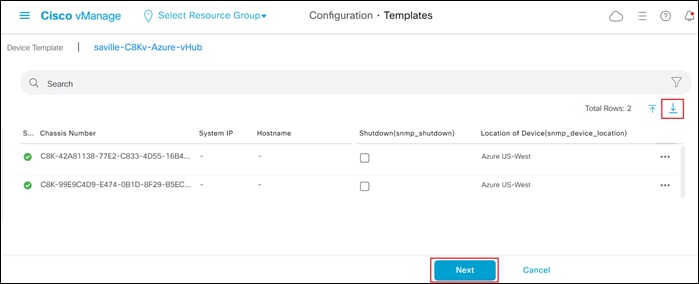

A new screen will appear, listing the devices that you have selected. An example is shown in the following figure.

Step 6. Find the first Cisco SD-WAN Edge device, select … to the far right of it, and from the drop-down menu select Edit Device Template.

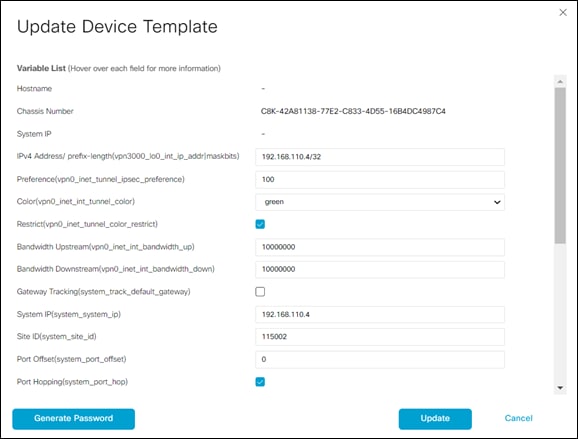

A pop-up screen will appear with a list of variables and empty text boxes. There may also be variables with check boxes to check/uncheck for on/off values. An example is shown in the figure below.

Step 7. Fill in the values of the variables in the text boxes.

All text fields must be filled in. If you leave a text field empty, the box around the text field will be highlighted red when you try to move to the next page. Check boxes can be left unchecked. For check boxes, checked means “Yes” and unchecked means “No”.

The following tables show the variables used when deploying the pair of Cisco Catalyst 8000v routers for this deployment guide.

Table 1. AzureC8Kv1 Device Template Variable Values

| Variable | Value |
| --- | --- |
| IPv4 Address/ prefix-length (vpn3001_lo0_int_ip_addr\|maskbits) | 192.168.110.4/32 |
| Preference (vpn0_inet_tunnel_ipsec_preference) | 100 |
| Color (vpn0_inet_int_tunnel_color) | green |
| Restrict (vpn0_inet_tunnel_color_restrict) | ✓ |
| QoS Map (qos_map) | 4Q_QoS_Map |
| Bandwidth Upstream (vpn0_inet_int_bandwidth_up) | 10000000 |
| Bandwidth Downstream (vpn0_inet_int_bandwidth_down) | 10000000 |
| Gateway Tracking (system_track_default_gateway) | □ |
| System IP (system_system_ip) | 192.168.110.4 |
| Site ID (system_site_id) | 115002 |
| Port Offset (system_port_offset) | 0 |
| Port Hopping (system_port_hop) | ✓ |
| Hostname (system_host_name) | AzureC8Kv1 |
| Longitude (system_longitude) | -121.9552 |
| Latitude (system_latitude) | 37.3541 |
| Device Groups (system_device_groups) | saville_Azure |
| Console Baud Rate (bps) (system_console_baud_rate) | 19200 |
| VPN_ID (snmp_trap_vpn_id) | 3001 |
| Source Interface (snmp_trap_source_interface) | Loopback0 |
| IP Address (snmp_trap_ip) | 192.168.101.102 |
| Shutdown (snmp_shutdown) | □ |
| Location of Device (snmp_device_location) | Azure US-West |
| Source Interface (https_client_source_interface) | GigabitEthernet1 |
| Hello Interval (milliseconds) (bfd_color_hello_interval) | 1000 |
| Color (bfd_color) | Green |
| Poll Interval (milliseconds) (bfd_poll_interval) | 600000 |
| Hostname/IP Address (vpn0_ntp_server_host) | time.nist.gov |
| VPN ID (logging_server_vpn) | 3001 |
| Hostname/IPv4 Address (logging_server_ip_addr) | 192.168.101.102 |

Table 2. AzureC8Kv2 Device Template Variable Values

| Variable | Value |
| --- | --- |
| IPv4 Address/ prefix-length (vpn3001_lo0_int_ip_addr\|maskbits) | 192.168.110.5/32 |
| Preference (vpn0_inet_tunnel_ipsec_preference) | 100 |
| Color (vpn0_inet_int_tunnel_color) | green |
| Restrict (vpn0_inet_tunnel_color_restrict) | ✓ |
| QoS Map (qos_map) | 4Q_QoS_Map |
| Bandwidth Upstream (vpn0_inet_int_bandwidth_up) | 10000000 |
| Bandwidth Downstream (vpn0_inet_int_bandwidth_down) | 10000000 |
| Gateway Tracking (system_track_default_gateway) | □ |
| System IP (system_system_ip) | 192.168.110.5 |
| Site ID (system_site_id) | 115002 |
| Port Offset (system_port_offset) | 0 |
| Port Hopping (system_port_hop) | ✓ |
| Hostname (system_host_name) | AzureC8Kv2 |
| Longitude (system_longitude) | -121.9552 |
| Latitude (system_latitude) | 37.3541 |
| Device Groups (system_device_groups) | saville_Azure |
| Console Baud Rate (bps) (system_console_baud_rate) | 19200 |
| VPN_ID (snmp_trap_vpn_id) | 3001 |
| Source Interface (snmp_trap_source_interface) | Loopback0 |
| IP Address (snmp_trap_ip) | 192.168.101.102 |
| Shutdown (snmp_shutdown) | □ |
| Location of Device (snmp_device_location) | Azure US-West |
| Source Interface (https_client_source_interface) | GigabitEthernet1 |
| Hello Interval (milliseconds) (bfd_color_hello_interval) | 1000 |
| Color (bfd_color) | Green |
| Poll Interval (milliseconds) (bfd_poll_interval) | 600000 |
| Hostname/IP Address (vpn0_ntp_server_host) | time.nist.gov |
| VPN ID (logging_server_vpn) | 3001 |
| Hostname/IPv4 Address (logging_server_ip_addr) | 192.168.101.102 |

Interface names for Cisco Catalyst 8000v routers start with GigabitEthernet1. Subsequent interfaces follow the standard conventions for Cisco IOS XE devices – GigabitEthernet2, GigabitEthernet3, etc.

Step 8. When you have filled in the values of the variables in the text boxes, select Update.

This will fill in all the variables for the template for the first Cisco Catalyst 8000v router.

Step 9. Repeat Steps 6 – 8 for the other Catalyst 8000v router.

Step 10. When you are finished filling out the variables and before moving further, download the .csv file by selecting the download arrow symbol in the upper right corner.

The .csv file is populated with the values you have entered so far. If you deploy the configuration and one of the input variables contains an error, the deployment may fail. When you return to this page, all the values will be gone, and you will need to enter them again.

If you downloaded the populated .csv file, just upload it by selecting the up arrow. Then you can select … to the right of the desired device and select Edit Device Template, and your latest values will be populated in the text boxes. You can then modify any input values and try to deploy again.
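
As a rough illustration of why keeping the downloaded .csv file is useful, the following Python sketch scans the contents of such a file for empty variable values before it is uploaded again. The column names and the one-row-per-device layout in the sample are assumptions made for illustration only; the actual header comes from the file that vManage generates.

```python
import csv
import io


def find_empty_variables(csv_text):
    """Return {data_row_number: [column names with empty values]}."""
    reader = csv.DictReader(io.StringIO(csv_text))
    problems = {}
    for row_number, row in enumerate(reader, start=1):
        empty = [
            name
            for name, value in row.items()
            # Skip the None key that DictReader uses for surplus fields.
            if name is not None and not (value or "").strip()
        ]
        if empty:
            problems[row_number] = empty
    return problems


# Hypothetical export: the header names and the second device are made up.
sample = (
    "hostname,system_ip,site_id\n"
    "AzureC8Kv2,192.168.110.5,115002\n"
    "example-router,,115002\n"
)

print(find_empty_variables(sample))
```

A check like this only catches missing values; it does not validate that a value is correct for its variable.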

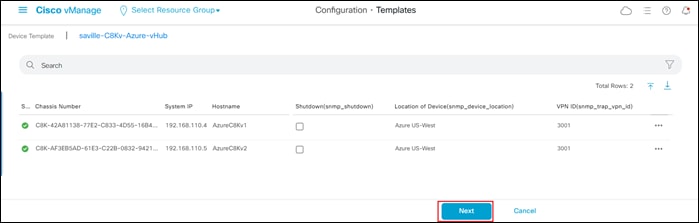

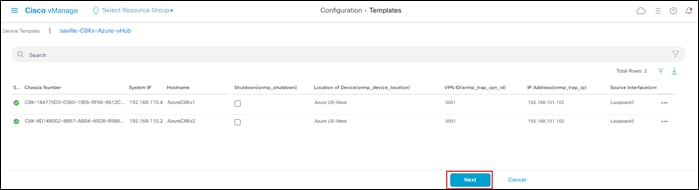

Step 11. When you are ready to deploy, select the Next button.

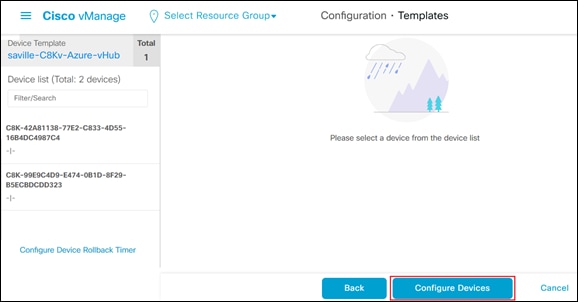

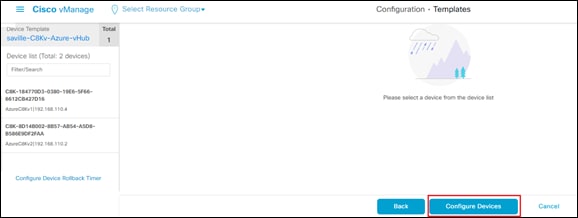

The next screen will indicate that the template (or templates if you used multiple device templates) will be applied to the devices. An example is shown in the figure below.

If you forget to add values for a device, you will get an error and you won't be able to move forward until that is corrected.

Selecting any device in the left-hand panel will show you the configuration that will be pushed to that Catalyst 8000v device (through the Config Preview tab).

Appendix D shows the configuration pushed to one of the Cisco Catalyst 8000v routers (AzureC8Kv1) from the configuration templates.

Step 12. Click on the Configure Devices button.

A pop-up window will appear, informing you that committing the changes will affect the configuration on the Cisco Catalyst 8000v devices, and asking you to confirm that you want to proceed.

Step 13. Check the box next to Confirm configuration changes on 2 devices and click on the OK button.

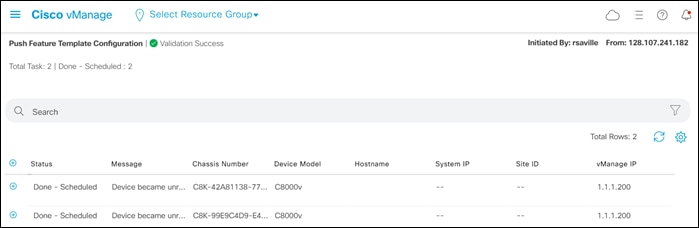

The Task View screen will then appear. After a few moments the status of the Cisco Catalyst 8000v SD-WAN Edge routers will appear as “Done – Scheduled” with a message indicating that the device is offline and that the template will be attached to the device when it comes online. An example is shown in the figure below.

The Cisco Catalyst 8000v SD-WAN Edge routers are now ready to be deployed within the Azure Cloud Gateway by Cisco Cloud onRamp for Multi-Cloud.

Process: Deploy a Cloud Gateway with Cisco Cloud onRamp for Multi-Cloud

This section discusses the procedures for deploying an Azure Cloud Gateway using Cisco Cloud onRamp for Multi-Cloud.

Procedure 1. Log in to Cisco vManage and Navigate to Cloud onRamp for Multi-Cloud

Step 1. Log in to the Cisco vManage web console using the IP address or fully qualified domain name of your Cisco vManage instance.

For example: https://Cisco_vManage_ip_addr_or_FQDN:8443/

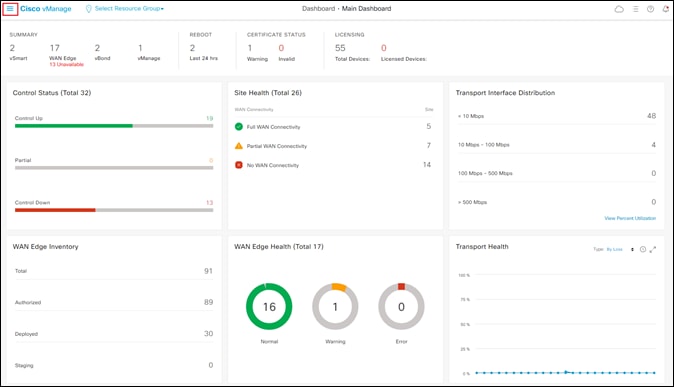

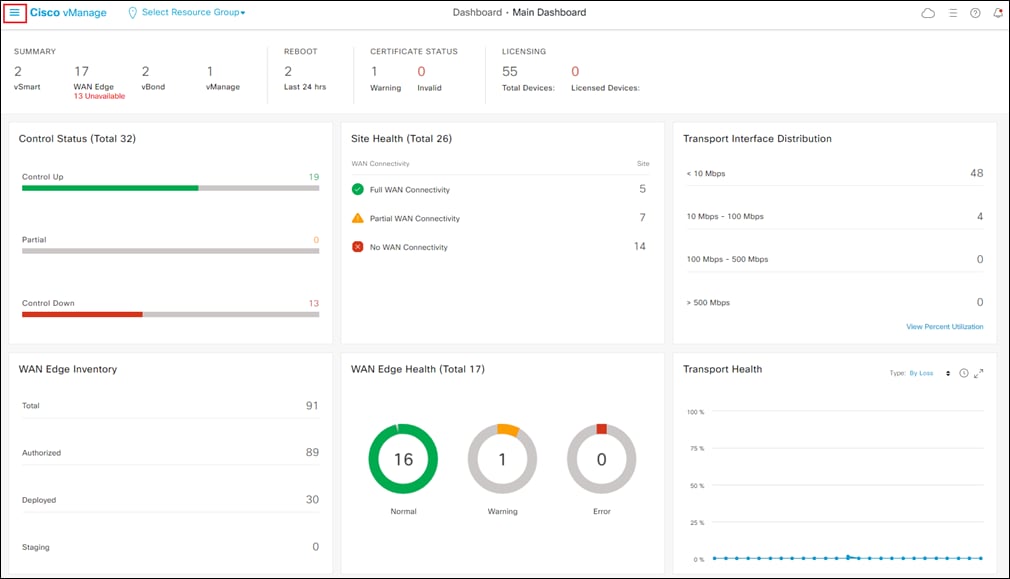

This will bring up the Cisco vManage dashboard, as shown in the following figure.

Step 2. Click the three bars in the upper left corner of the screen to bring up a navigation panel on the left side of the screen.

Step 3. In the navigation panel, select Configuration > Cloud onRamp for Multi-Cloud.

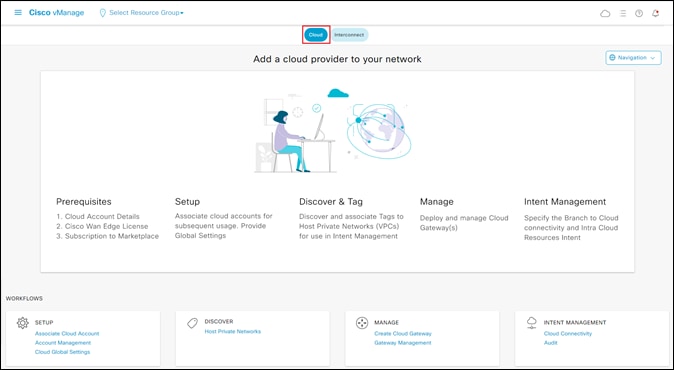

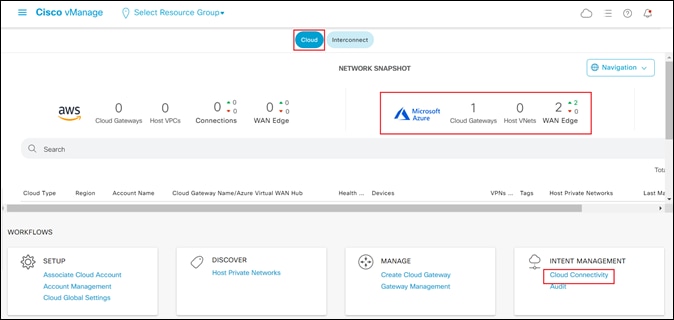

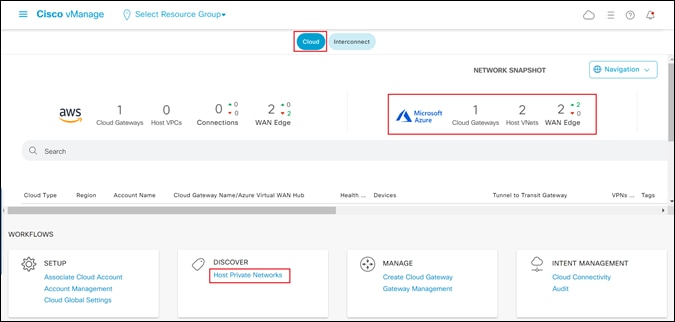

This will bring you to the initial Cisco Cloud onRamp for Multi-Cloud screen, as shown in the figure below.

Step 4. Make sure the Cloud button at the top of the screen is selected, as shown in the figure above.

Cloud onRamp for Multi-Cloud has four main workflows: Setup, Discover, Manage, and Intent Management - each with one or more actions that need to be performed. This guide will walk you through each of the actions within each of the workflows as a separate procedure.

Procedure 2. Setup – Associate Cloud Account

The Setup workflow allows you to do the following:

● Add new cloud accounts to Cisco Cloud onRamp for Multi-Cloud

● Manage (including delete) existing cloud accounts

● Configure global settings for the Catalyst 8000v routers instantiated within the Azure vHub.

Cloud accounts include the credentials necessary to access the public IaaS cloud provider (Azure for this deployment guide) through API calls. This procedure will discuss how to add (associate) a new cloud account to Cisco Cloud onRamp for Multi-Cloud.

Step 1. To add a new cloud instance, within Setup click on Associate Cloud Account.

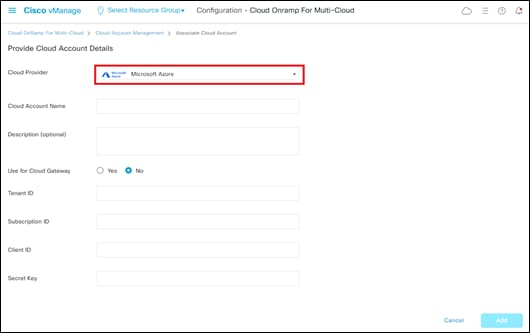

The Provide Cloud Account Details screen will appear.

Step 2. From the drop-down menu adjacent to Cloud Provider, select Microsoft Azure.

The fields within the screen will change to reflect the credentials necessary for Cisco Cloud onRamp for Multi-Cloud to access Microsoft Azure. An example is shown in the following figure.

Step 3. Fill out the required information within the Provide Cloud Account Details screen and click the Add button.

The following table provides a brief description of each of the fields.

Table 3. Provide Cloud Account Details Screen Field Descriptions

| Field | Description |
| --- | --- |
| Cloud Provider | Drop-down menu containing the IaaS public cloud providers supported by Cisco Cloud onRamp for Multi-Cloud. As of vManage release 20.6.1, Amazon Web Services, Microsoft Azure, and Google Cloud Platform are supported. |
| Cloud Account Name | Text field used to identify the cloud account within Cisco Cloud onRamp for Multi-Cloud. |
| Description (optional) | Optional text field used for a description of the cloud account. |
| Use for Cloud Gateway | Yes / No radio button to determine if the cloud account can be used to instantiate a Cloud Gateway within Azure. A Cloud Gateway consists of a pair of Catalyst 8000v routers instantiated within an Azure vHub. If set to No, the cloud account can still be used to map existing host vNets associated with the cloud account to a Cloud Gateway but cannot be used to instantiate a Cloud Gateway. |
| Tenant ID | Universally Unique Identifier (UUID) / Globally Unique Identifier (GUID) representing the Azure Active Directory (AD) tenant for the cloud account. Also called a Directory ID. |
| Subscription ID | UUID / GUID identifying the subscription within this tenant. |
| Client ID | The ID provided by Azure Active Directory (AD) when you registered your client application. Also called an Application ID. |
| Secret Key | The client secret used by the application / service principal to prove its identity when requesting a token. |

For this deployment guide the cloud account is used to instantiate Cloud Gateways. Make sure the Use for Cloud Gateway radio button is set to Yes.
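
Because the Tenant ID, Subscription ID, and Client ID are all UUID / GUID values, a quick format check before pasting them into vManage can catch copy-and-paste mistakes. The following Python sketch is one possible way to do that with the standard `uuid` module. The function names and the sample GUIDs are placeholders invented for this example, not values from this deployment.

```python
import uuid


def is_guid(value):
    """Return True if value is a canonical hyphenated UUID/GUID string."""
    try:
        return str(uuid.UUID(value)) == value.lower()
    except (ValueError, AttributeError, TypeError):
        return False


def check_account_ids(tenant_id, subscription_id, client_id):
    """Return the names of the fields that are not GUID-shaped."""
    fields = {
        "Tenant ID": tenant_id,
        "Subscription ID": subscription_id,
        "Client ID": client_id,
    }
    return [name for name, value in fields.items() if not is_guid(value)]


# Placeholder values only; substitute the IDs from your own Azure tenant.
bad_fields = check_account_ids(
    "11111111-2222-3333-4444-555555555555",
    "not-a-guid",
    "aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee",
)
print(bad_fields)
```

The check confirms only the shape of each value. It cannot tell whether the IDs belong to the intended tenant, subscription, or application.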

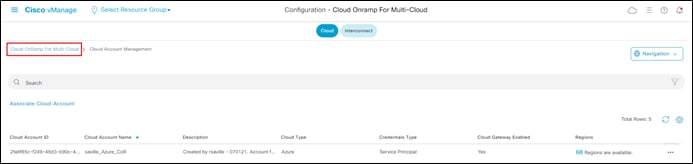

Once you have added the cloud account it should appear like the following figure.

Step 4. Click on the Cloud OnRamp for Multi-Cloud link in the upper right side of the screen (as shown in the figure above) to return to the initial Cloud OnRamp for Multi-Cloud screen.

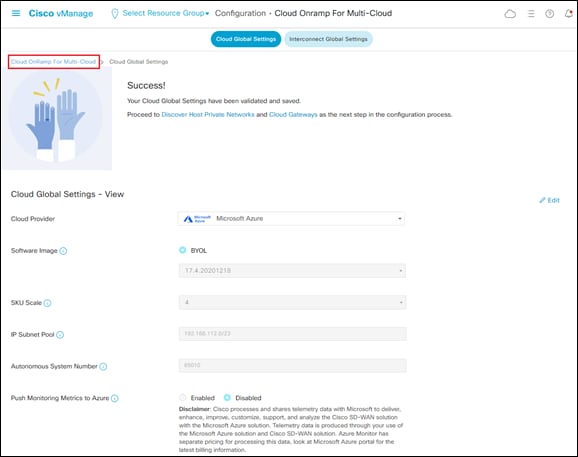

Procedure 3. Setup – Cloud Global Settings

This procedure will discuss how to configure global settings for the Cloud Gateways (Catalyst 8000v routers instantiated within the Azure vHub) within Cisco Cloud onRamp for Multi-Cloud. Global settings must be configured before you can create a Cloud Gateway. However, you can override many of the global settings when you instantiate a Cloud Gateway.

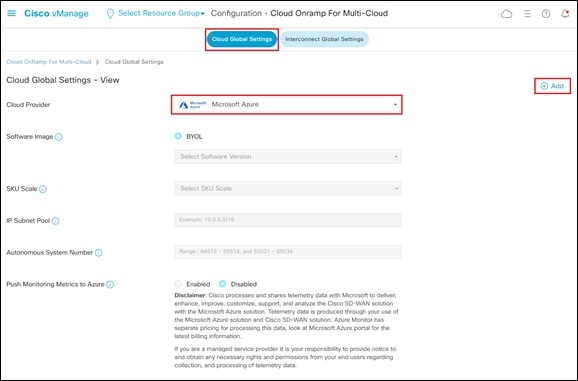

Step 1. From the initial Cisco Cloud onRamp for Multi-Cloud screen (as shown in Figure 13 above), within Setup click on Cloud Global Settings.

Step 2. Make sure the Cloud Global Settings button at the top of the screen is selected.

The Cloud Global Settings screen will appear.

Step 3. From the drop-down menu adjacent to Cloud Provider, select Microsoft Azure.

The fields within the Cloud Global Settings screen will change to reflect the global settings that Cisco Cloud onRamp for Multi-Cloud uses for Microsoft Azure. An example is shown in the following figure.

Step 4. Click on the + Add link in the upper right corner of the screen to move from View mode to Create mode. This will allow you to edit the fields.

Step 5. Fill out the required information within the Cloud Global Settings screen.

The following table provides a brief description of each of the fields as well as the values used within this deployment guide.

Table 4. Cloud Global Settings Screen Field Descriptions and Values Used in This Guide

| Field | Description | Values Used in This Deployment Guide |
| --- | --- | --- |
| Cloud Provider | Drop-down menu containing one of the three IaaS public cloud providers supported by Cisco Cloud onRamp for Multi-Cloud. As of vManage release 20.6.1, Amazon Web Services, Microsoft Azure, and Google Cloud Platform are supported. | Microsoft Azure |
| Software Image | Cisco SD-WAN software image which will run on the Catalyst 8000v routers functioning as Network Virtual Appliances (NVAs) within an Azure virtual hub (vHub). | 17.4.20201218 |
| SKU Scale | The SKU scale determines the throughput per instance for the Catalyst 8000v SD-WAN routers which are instantiated within the vHub. Each SKU represents approximately 0.5 Gbps of throughput. Hence a SKU of 2 represents approximately 1 Gbps, a SKU of 4 represents approximately 2 Gbps, and a SKU of 10 represents approximately 5 Gbps of performance. | 4 |
| IP Subnet Pool | Specifies the IP address range to be used when Microsoft Azure creates a vHub. The IPv4 CIDR block range must be between /16 and /24. Azure controls the partitioning of this IP address space for the various subnets created within the vHub. | 192.168.112.0/23 |
| Autonomous System Number | The BGP autonomous system number of the Catalyst 8000v routers functioning as NVAs within the Azure vHub. The Catalyst 8000v routers form eBGP peering with the Azure vHub. | 65010 |
| Push Monitoring Metrics to Azure | Radio button which enables / disables sharing of telemetry data with Microsoft. | Disabled |
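
The SKU Scale arithmetic described in the table can be expressed as a short Python sketch. The constant and function names are chosen for this example, and the 0.5 Gbps figure is the approximate per-SKU value stated above, so the results are estimates rather than guaranteed throughput.

```python
GBPS_PER_SKU_UNIT = 0.5  # approximate throughput per SKU unit, per the table


def approx_throughput_gbps(sku_scale):
    """Estimate per-instance throughput in Gbps for a given SKU scale."""
    if sku_scale <= 0:
        raise ValueError("SKU scale must be a positive number")
    return sku_scale * GBPS_PER_SKU_UNIT


for sku in (2, 4, 10):
    print(f"SKU {sku}: approximately {approx_throughput_gbps(sku)} Gbps")
```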

Step 6. When you have finished filling out the fields within the Cloud Global Settings screen click the Save button which appears at the bottom of the screen when you click the + Add link.

This will bring up a screen indicating you have successfully added global account settings for Microsoft Azure.

Step 7. Click on the Cloud OnRamp for Multi-Cloud link in the upper right side of the screen, as shown in the figure above, to return to the initial Cloud OnRamp for Multi-Cloud screen.

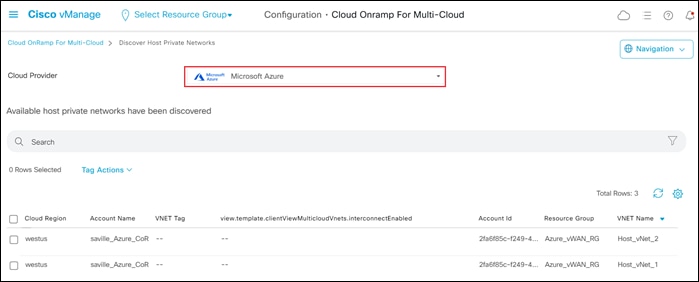

Procedure 4. Discover – Host Private Networks

This procedure can be done either before or after you create a Cloud Gateway. However, in keeping with the steps in the Cloud onRamp for Multi-Cloud workflow, it will be discussed here. You must have at least one existing host vNet in order to complete this procedure.

Within Cisco Cloud onRamp for Multi-Cloud, existing host vNets must first be discovered and tagged before they can be mapped to a Cloud Gateway. Tagging host vNets provides a flexible abstraction, making it visually easier to map one or more host vNets to a service VPN within the SD-WAN overlay.

The mapping of host vNets to a Cloud Gateway provides network connectivity from the SD-WAN overlay to the host vNets. Mapping is done within the Intent Management workflow (discussed in an upcoming procedure).

Step 1. From the initial Cisco Cloud onRamp for Multi-Cloud screen (shown in Figure 13), within Discover click on Host Private Networks.

This will bring up the Discover Host Private Networks screen.

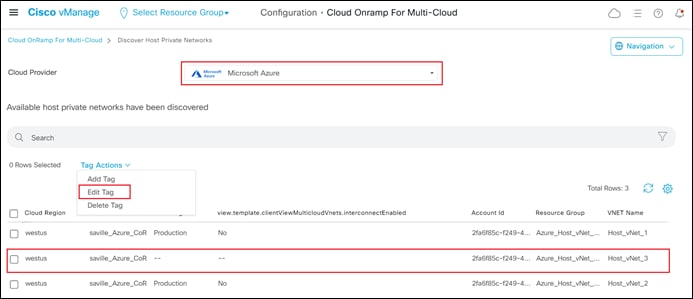

Step 2. From the drop-down menu adjacent to Cloud Provider, select Microsoft Azure.

This will cause Cisco Cloud onRamp for Multi-Cloud to discover and display all untagged host vNets associated with any of the Microsoft Azure cloud accounts added to Cloud onRamp for Multi-Cloud.

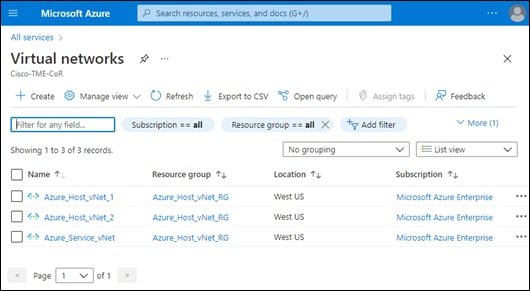

An example is shown in the following figure.

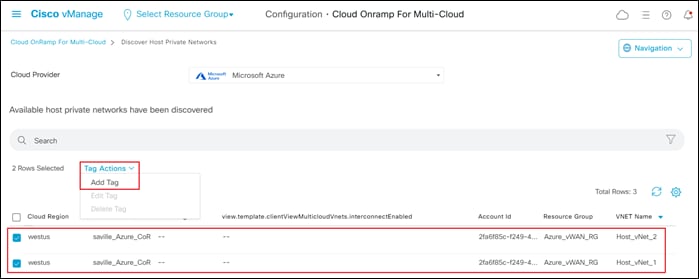

Step 3. Select one or more host vNets which you wish to tag, and from the Tag Actions drop-down menu, select Add Tag, as shown below.

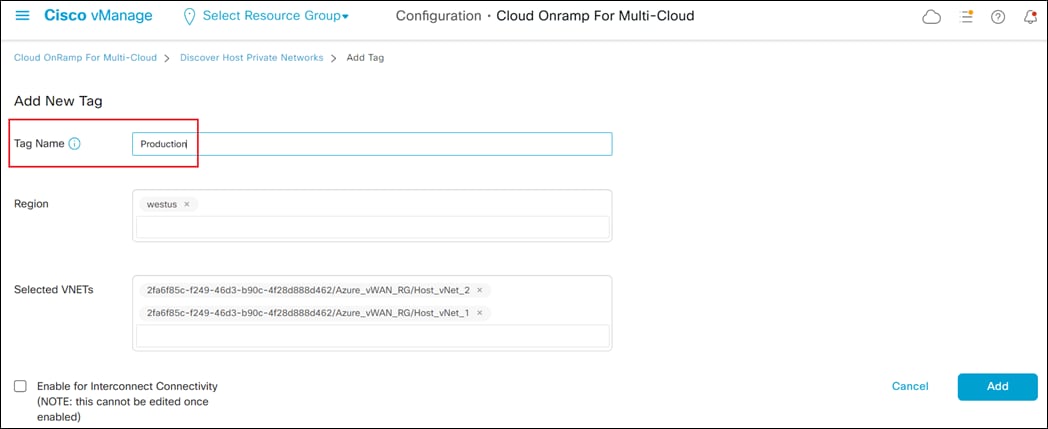

This will bring up the Add New Tag screen.

You can choose to use tags in various ways. For example, you can choose to tag multiple host vNets associated with a particular business function within your organization with a tag name indicating the business function – such as Production, Development, Test, etc. That allows you to map multiple host vNets all with the same business function to the Cloud Gateway at one time. Alternatively, you can simply choose to tag each host vNet with a tag name indicating the name of the host vNet. That allows you to individually select the host vNets you wish to map to the Cloud Gateway.

Step 4. In the open text field adjacent to Tag Name, type in the name of the tag which you wish to assign to the host vNet(s).

This design guide follows the model where both host vNets (Host_vNet_1 and Host_vNet_2) are assigned the business function tag name of Production, indicating the workloads within those vNets are production workloads.

The Region and Selected VNETs fields should be filled in automatically. Select the Enable for Interconnect Connectivity check box only if you are using the host vNet for Cisco SD-WAN interconnect connectivity (Megaport and/or Equinix). Interconnect connectivity is not covered in this deployment guide, so the check box is left unchecked.

| Technical Note |

| It should be noted that regardless of whether you assign host vNets individual tag names, or aggregate multiple host vNets with tag names indicating business function, you can only map host vNets to a single service VPN within the SD-WAN network, as of vManage release 20.6.1. The Catalyst 8000v routers functioning as NVAs within the Azure vHub support only one service-side interface (GigabitEthernet2). This service-side interface must be assigned to a single service VPN. eBGP peering is established from the service-side interface of each of the Catalyst 8000v SD-WAN routers to the internal router/route server within the vHub. Routes learned via the eBGP peering are automatically mapped to the default route table of the Azure vHub. Hence, for connectivity, all host vNets mapped to the Cloud Gateway are also assigned to the default route table of the Azure vHub. |

Step 5. Click the Add button to tag the host vNets.

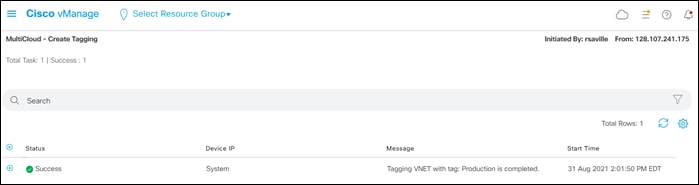

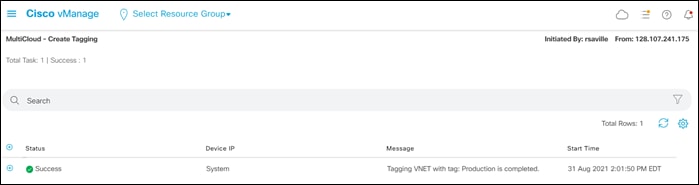

After a few moments Cisco Cloud onRamp for Multi-Cloud will display a screen indicating that the host vNets have been successfully tagged.

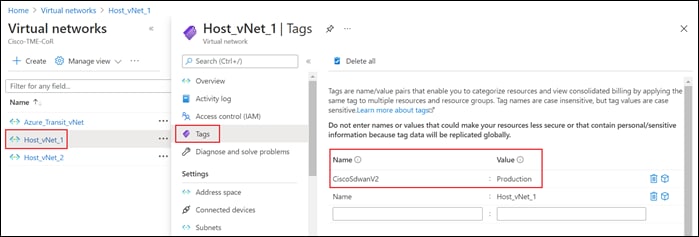

From a Microsoft Azure perspective, Cisco Cloud onRamp for Multi-Cloud uses API calls to add the following tag to the Host_vNet_1 and Host_vNet_2 vNets:

Name: CiscoSdwanV2

Value: Production

This can be seen within the Microsoft Azure portal for the individual host vNets as well.
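
To make the tagging model concrete, the following Python sketch selects host vNets by the value of the CiscoSdwanV2 tag. The list-of-dictionaries representation is a simplified stand-in invented for this example; it is not the format returned by the Azure APIs.

```python
SDWAN_TAG_NAME = "CiscoSdwanV2"  # tag name added by Cloud onRamp, per the text


def vnets_with_tag(vnets, tag_value):
    """Return the names of vNets whose CiscoSdwanV2 tag equals tag_value."""
    return [
        vnet["name"]
        for vnet in vnets
        if vnet.get("tags", {}).get(SDWAN_TAG_NAME) == tag_value
    ]


# Simplified, hypothetical inventory of host vNets and their Azure tags.
host_vnets = [
    {"name": "Host_vNet_1", "tags": {"CiscoSdwanV2": "Production"}},
    {"name": "Host_vNet_2", "tags": {"CiscoSdwanV2": "Production"}},
    {"name": "Host_vNet_3", "tags": {}},
]

print(vnets_with_tag(host_vnets, "Production"))
```

Grouping by tag value mirrors how a single tag such as Production lets several host vNets be mapped to the Cloud Gateway at one time.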

This completes the discovery and tagging of the host vNets. In the next procedure, the Cloud Gateway will be instantiated within the Azure vHub.

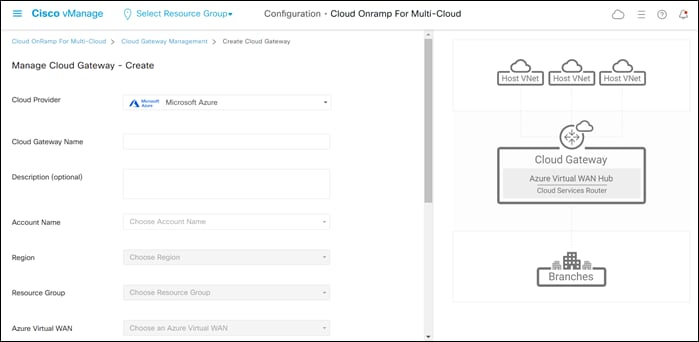

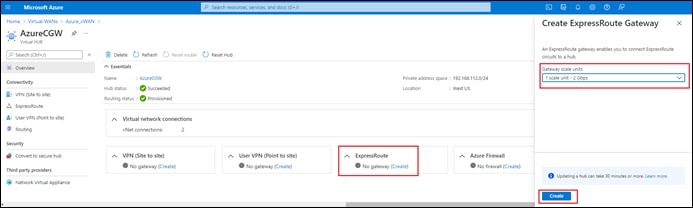

Procedure 5. Manage – Create Cloud Gateway

Step 1. From the initial Cisco Cloud onRamp for Multi-Cloud screen within Manage (shown in Figure 13), click on Create Cloud Gateway.

This will bring up the Manage Cloud Gateway - Create screen.

Step 2. From the drop-down menu adjacent to Cloud Provider, select Microsoft Azure.

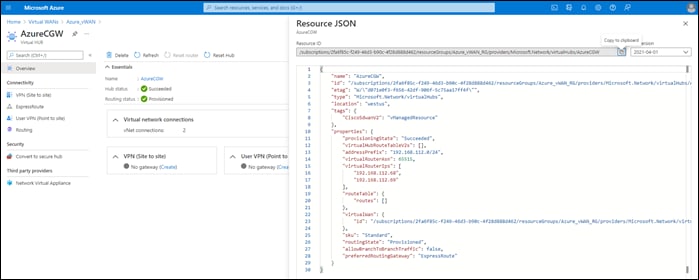

This will cause the screen to change to reflect the fields necessary to instantiate a Cloud Gateway within Azure. An example is shown in the following figure.

Step 3. Fill in the required fields.

The following table provides a brief description of each of the fields as well as the values used within this deployment guide.

Table 5. Manage Cloud Gateway - Create Screen Field Descriptions and Values Used in This Guide

| Field |

Description |

Values Used in This Deployment Guide |

| Cloud Provider |

Drop-down menu containing one of the three IaaS public cloud providers supported by Cisco Cloud onRamp for Multi-Cloud. As of vManage release 20.6.1 – Amazon Web Services, Microsoft Azure, and Google Cloud Platform are supported. |

Microsoft Azure |

| Cloud Gateway Name |

End user specified name for the Cloud Gateway instantiated within Azure. |

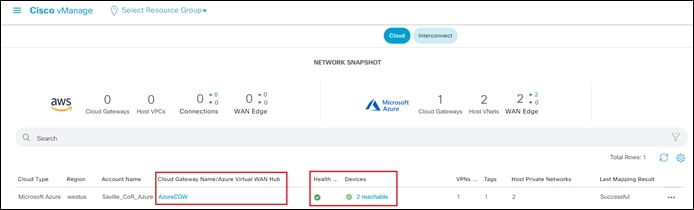

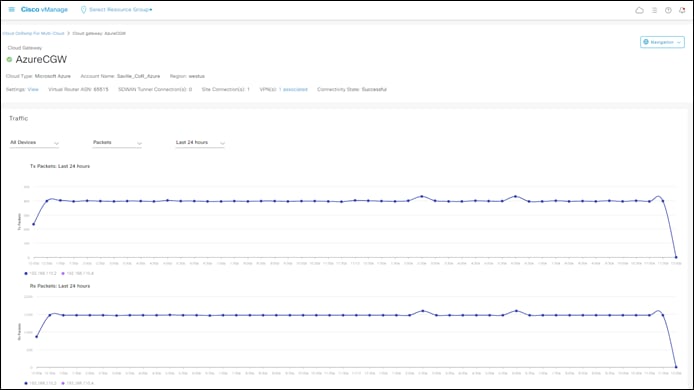

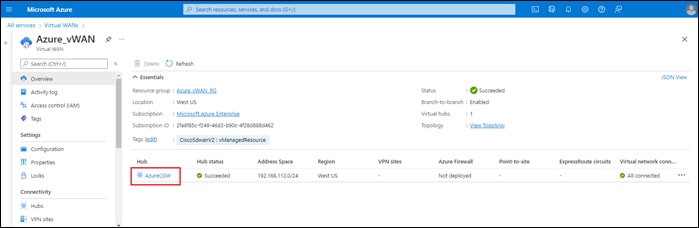

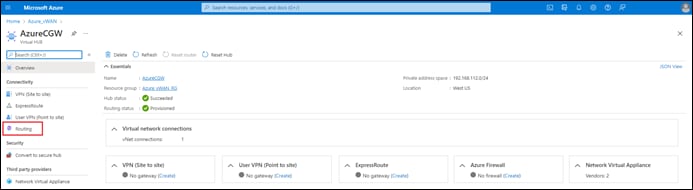

AzureCGW |

| Description (Optional) |

Optional end user specified description for the Cloud Gateway instantiated within Azure. |

None |

| Account Name |

Drop-down menu containing the list of cloud accounts that have been added to Cloud onRamp for Multi-Cloud and allowed to create a Cloud Gateway. Select the cloud account under which the Cloud Gateway will be instantiated. |

Saville_CoR_Azure |

| Region |

Drop-down menu containing the list of Azure regions. Select the region in which you wish to instantiate the Cloud Gateway. |

westus |

| Resource Group |

Drop-down menu containing the list of existing Azure resource groups within the cloud account specified within the Account Name drop-down menu above. Select the resource group within the cloud account in which you wish to instantiate the Cloud Gateway or select Create New… to create a new Azure resource group within the Azure subscription to which the cloud account belongs. |

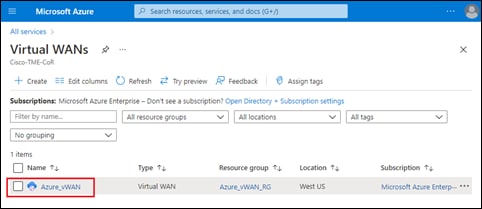

Azure_vWAN_RG |

| Azure Virtual WAN |

Drop-down menu containing the list of existing Azure vWANs within the cloud account specified within the Account Name drop-down menu above. Select the vWAN within the cloud account in which you wish to instantiate the Cloud Gateway or select Create New… to create a new vWAN within the Azure subscription to which the cloud account belongs. Note: If you do not see existing vWANs in your cloud account within the drop-down menu, check to ensure that your Azure credentials have not expired. |

Create New… -> Azure_vWAN |

| Azure Virtual WAN Hub |

Drop-down menu containing the list of existing Azure vHubs in the vWAN specified within the Azure Virtual WAN drop-down menu above. If you selected Create New… to create a new vWAN, this field will be automatically filled in for you with the following entry: Create New VHUB using Cloud Gateway Name. This indicates that a new vHub will be created using the same name as the Cloud Gateway specified within the Cloud Gateway Name field above. Note: If you do not see existing vHubs in your cloud account within the drop-down menu, check to ensure that your Azure credentials have not expired. |

Create New VHUB using Cloud Gateway Name |

| Settings -> Software Image |

Drop-down menu listing the Cisco SD-WAN software images available to run on the Catalyst 8000v routers functioning as NVAs within the Azure vHub. Select the image you wish to run on the Catalyst 8000v routers. BYOL – meaning bring your own license is the only option for licensing. This field is copied from the Cloud Global Settings discussed previously. However, it can be overridden during instantiation of a Cloud Gateway. Note: If you do not see any available software images within the drop-down menu, check to ensure that your Azure credentials have not expired. |

17.6.01 |

| Settings -> SKU Scale |

Drop-down menu listing the available SKU scales for Catalyst 8000v routers functioning as NVAs within the vHub. Select the SKU scale for the Catalyst 8000v routers you will be instantiating within the Cloud Gateway. The SKU scale determines the throughput per instance for the Catalyst 8000v SD-WAN routers which are instantiated within the vHub. Each SKU represents approximately 0.5 Gbps of throughput. Hence a SKU of 2 represents approximately 1 Gbps, a SKU of 4 represents approximately 2 Gbps, and a SKU of 10 represents approximately 5 Gbps of performance. This field is copied from the Cloud Global Settings discussed previously. However, it can be overridden during instantiation of a Cloud Gateway. |

2 |

| Settings -> IP Subnet Pool |

Specifies the IP address range to be used when Microsoft Azure creates a vHub. The IPv4 CIDR block range must be between /16 and /24. Azure controls the partitioning of this IP address space for the various subnets created within the vHub. This field is copied from the Cloud Global Settings discussed previously. However, it can be overridden during instantiation of a Cloud Gateway. |

192.168.112.0/23 |

| UUID (specify 2) |

Drop-down menu which lists the Catalyst 8000v UUIDs available for instantiation within the Cloud Gateway. For a UUID to appear, it has to have already been attached to a device template which can be used to deploy the Catalyst 8000v routers functioning as NVAs within the Azure vHub. |

C8K-42A81138-77E2-C833-4D55-16B4DC4987C4 C8K-99E9C4D9-E474-0B1D-8F29-B5ECBDCDD323 |
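
The /16 to /24 constraint on the IP Subnet Pool can be checked ahead of time. The sketch below uses the Python standard `ipaddress` module. The function name is an assumption for this example, and the check covers only the prefix-length rule stated in the table, not any overlap with address space already in use.

```python
import ipaddress


def check_subnet_pool(cidr):
    """Validate an IPv4 CIDR block against the /16 to /24 prefix rule."""
    # strict=True rejects blocks with host bits set, e.g. 192.168.112.1/23.
    network = ipaddress.ip_network(cidr, strict=True)
    if network.version != 4:
        raise ValueError("IP Subnet Pool must be an IPv4 CIDR block")
    if not 16 <= network.prefixlen <= 24:
        raise ValueError("prefix length must be between /16 and /24")
    return network


pool = check_subnet_pool("192.168.112.0/23")
print(pool, "contains", pool.num_addresses, "addresses")
```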

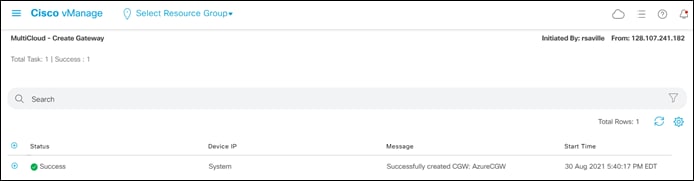

Step 4. Once you have filled in the required fields, click Add to create the Cloud Gateway.

Creation of the Cloud Gateway can take up to 40 minutes. When the Cloud Gateway has been successfully created, the Task View screen should appear as shown in the figure below.

In the next process, host vNets will be mapped to the Cloud Gateway.

Process: Map Host vNets to the Cloud Gateway

This section discusses the procedures for mapping tagged host vNets to an existing Cloud Gateway. This document assumes the host vNets are already created and have been tagged within the previous process. The creation of a host vNet is outside the scope of this document.

Procedure 1. Log in to Cisco vManage and Navigate to Cloud onRamp for Multi-Cloud

Step 1. Log in to the Cisco vManage web console using the IP address or fully qualified domain name of your Cisco vManage instance.

For example: https://Cisco_vManage_ip_addr_or_FQDN:8443/

This will bring up the Cisco vManage dashboard, as shown in the following figure.

Step 2. Click the three bars in the upper left corner of the screen to bring up a navigation panel on the left side of the screen.

Step 3. In the navigation panel, select Configuration > Cloud onRamp for Multi-Cloud.

This will bring you to the initial Cisco Cloud onRamp for Multi-Cloud screen, as shown in the figure below.

Step 4. Make sure the Cloud button at the top of the screen is selected, as shown in the figure above.

The Cloud Gateway which you provisioned in the previous process should appear in the upper right corner of the screen. The Cloud Gateway must be provisioned before you can map host vNets to it. Review the steps within the previous process if you need to create a Cloud Gateway.

Cloud onRamp for Multi-Cloud has four main workflows: Setup, Discover, Manage, and Intent Management - each with one or more actions that need to be performed. In the previous process, we covered the Setup, Discover, and Manage workflows. The next procedure will walk you through the Cloud Connectivity action within the Intent Management workflow, which is used to map host vNets to the Cloud Gateway.

Procedure 2. Intent Management – Cloud Connectivity

Step 1. From the initial Cisco Cloud onRamp for Multi-Cloud screen (shown in Figure 26 above), within Intent Management click on Cloud Connectivity.

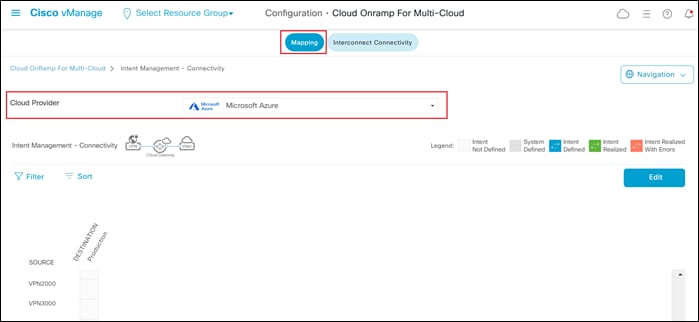

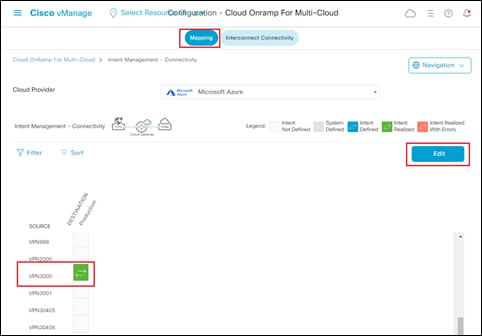

This will bring up the Intent Management - Connectivity screen.

Step 2. Make sure the Mapping tab at the top of the screen is selected.

Step 3. From the drop-down menu adjacent to Cloud Provider, select Microsoft Azure.

This will cause the screen to change to reflect the current mappings of tags (which represent host vNets) to service VPNs within the SD-WAN overlay for Microsoft Azure.

The service VPN rows listed under the Source heading should be automatically populated, based upon the service VPNs defined within the SD-WAN overlay network. Tags which were defined during the Discover workflow (discussed in the previous process of this guide) will appear as columns under the Destination heading.

The intersection of the Source service VPN rows with the Destination tag columns creates boxes. These boxes represent the end-user intent regarding the mapping of service VPNs to host vNets. The Legend at the right side of the screen above the Edit button describes the various possibilities for intent. If no host vNets are mapped to service VPNs, all boxes will be empty, as shown in the figure above.

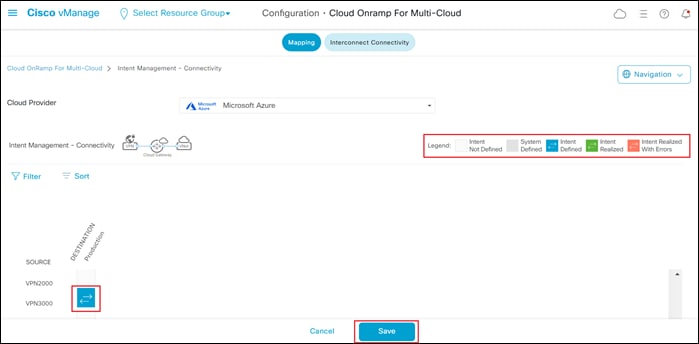

Step 4. Click the Edit button to change the intent by mapping a service VPN to one or more host vNets (represented by tags).

Step 5. Scroll down to the row which represents the service VPN that you wish to extend to Azure. Cisco Cloud onRamp for Multi-Cloud with Azure lets you extend only one service VPN to Azure.

Step 6. Within that row, move over to the column which represents the tag for the host vNet that you wish to map to the service VPN, and click on the empty box to select it.

The empty box should change to a blue color indicating Intent Defined, as shown in the Legend.

For this guide, the host vNets are mapped to service VPN 3000. An example is shown in the following figure.

You can select multiple tags to be mapped to the same service VPN – if you have multiple tags defined. However, as of software release 17.6.1 / 20.6.1, Cisco Cloud onRamp for Multi-Cloud with Azure only supports the mapping of host vNets to a single service VPN. Although you can select multiple service VPNs within the graphical user interface, DO NOT DO THIS! If you select multiple service VPNs within the intent (meaning boxes along multiple service VPN rows are highlighted in blue, indicating Intent Defined), when you try to execute that intent, nothing may be configured on the Catalyst 8000v SD-WAN routers within the Azure vHub – although the intent workflow may indicate success. Further, if you have an existing configuration on the Catalyst 8000v SD-WAN routers based upon previous intent with a single service VPN, and then you try to add a second service VPN – Cisco Cloud onRamp for Multi-Cloud may remove all intent from the routers. This can potentially result in an unscheduled loss of connectivity to Microsoft Azure and should be avoided. Always make sure that only a single service VPN is selected when configuring intent with Microsoft Azure.
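
The single-service-VPN rule above can be expressed as a simple pre-check. In the following Python sketch, the intent is modeled as a dictionary that maps each service VPN ID to the set of tags selected in its row. This data structure and the function names are assumptions made for illustration; vManage does not expose the intent in this form.

```python
def selected_service_vpns(intent):
    """Return the service VPN IDs that have at least one tag selected."""
    return sorted(vpn for vpn, tags in intent.items() if tags)


def validate_azure_intent(intent):
    """Return the single selected service VPN, or raise if more than one."""
    selected = selected_service_vpns(intent)
    if len(selected) > 1:
        raise ValueError(
            f"only one service VPN may be mapped for Azure, got {selected}"
        )
    return selected[0] if selected else None


# Hypothetical intent matrix: rows are service VPNs, values are selected tags.
intent = {3000: {"Production"}, 3001: set()}
print(validate_azure_intent(intent))
```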

Step 7. Click the Save button to execute the intent.

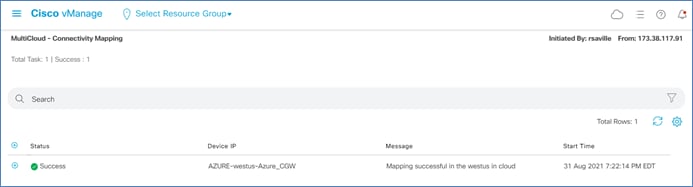

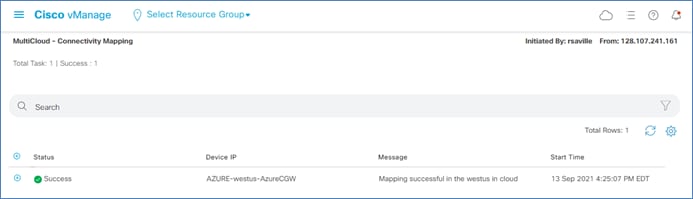

After a few minutes, the MultiCloud – Connectivity Mapping screen will appear as shown in the figure below.

Intent Management: What Happens on the Catalyst 8000v SD-WAN Routers within the vHub?

When you execute the intent, Cisco Cloud onRamp for Multi-Cloud will dynamically modify the configuration of each of the Catalyst 8000v SD-WAN routers functioning as NVAs within the Azure vHub in the following ways:

● A virtual routing and forwarding (VRF) instance corresponding to the service VPN mapped to the host vNets, as specified within the Intent Management workflow, is added to each of the Catalyst 8000v routers.

● The SD-WAN service-side interface (GigabitEthernet2) of each Catalyst 8000v router is configured for dynamic IP address assignment and configured to be in the service VPN.

● BGP routing is enabled on each of the Catalyst 8000v SD-WAN routers within the Cloud Gateway. Both Catalyst 8000v routers use the BGP AS number configured under Cloud Global Settings within the Setup workflow for Cloud onRamp for Multi-Cloud.

● Each Catalyst 8000v router establishes two external BGP (eBGP) peers to the Azure vHub, using two different IP addresses which were allocated by Azure within the vHub. (Note, the eBGP peers appear under the IPv4 unicast address family for the service VPN mapped to the host vNets). The Azure vHub uses BGP AS 65515.

● BGP routes are redistributed into OMP, and OMP routes are redistributed into BGP.

● A BGP AS route map filter named AZURE_CSR_NVA_ROUTE_POLICY is applied in the outbound direction against the BGP peers in the Azure vHub. The route map does the following:

◦ Blocks the sending of routes from AS 65535, the last autonomous system number of the 16-bit ASN range, which is reserved per IETF RFC 7300.

◦ Blocks routes from being sent that have passed through AS 65515. Since the Azure vHub uses BGP AS 65515, this essentially prevents sending routes to the vHub which have already passed through the vHub.

◦ Allows routes from any other ASNs.

● Static routes to the two BGP peers located in the Azure vHub are added within the service VPN. The static routes point to the Azure default gateway for the service-side (LAN) interfaces of the Catalyst 8000v SD-WAN routers within the vHub. This ensures the BGP sessions can be established between the Catalyst 8000v SD-WAN router service-side (LAN) interfaces and the Azure vHub.

To summarize, the dynamic configuration within the Intent Management workflow extends the service VPN through the SD-WAN fabric to Azure and establishes BGP peering to dynamically exchange IP routing information for that service VPN.
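
The outbound filtering behavior described for the AZURE_CSR_NVA_ROUTE_POLICY route map can be modeled in a few lines of Python. This is only a behavioral sketch: the actual route map is IOS XE configuration pushed by Cisco Cloud onRamp for Multi-Cloud, and treating both blocking rules as "the AS appears anywhere in the AS path" is an assumption made for this example.

```python
AZURE_VHUB_ASN = 65515  # BGP AS used by the Azure vHub, per the text
LAST_16BIT_ASN = 65535  # last ASN of the 16-bit range (IETF RFC 7300)


def outbound_action(as_path):
    """Return 'deny' or 'permit' for a route with the given AS path."""
    if LAST_16BIT_ASN in as_path:
        return "deny"  # route associated with the reserved last ASN
    if AZURE_VHUB_ASN in as_path:
        return "deny"  # route has already passed through the vHub
    return "permit"  # routes from any other ASNs are allowed


# Hypothetical AS paths; 64512 is an arbitrary private ASN for illustration.
for path in ([], [64512], [65515, 64512], [65535]):
    print(path, outbound_action(path))
```

The second rule is what prevents routes learned from the vHub from being advertised back to it.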

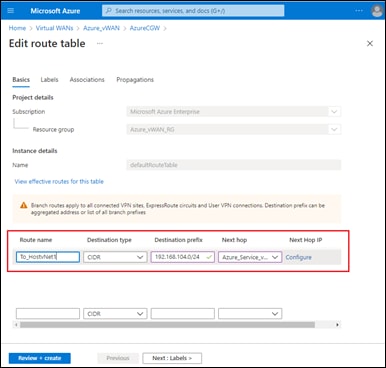

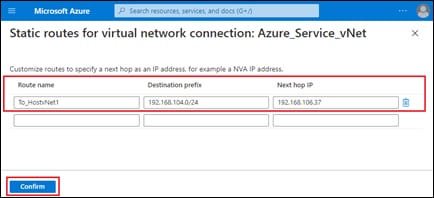

Intent Management: What Happens within the Azure vHub?

When you execute the intent, Cisco Cloud onRamp for Multi-Cloud uses API calls to dynamically modify the Azure vHub to host vNet peering as follows:

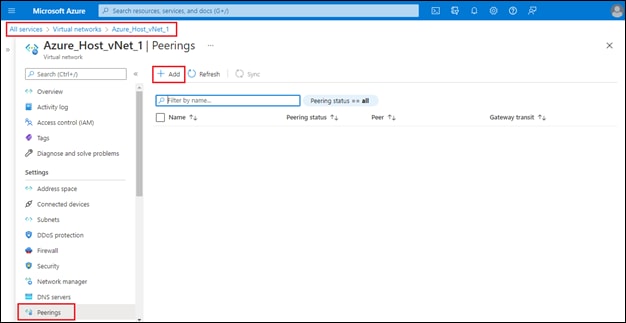

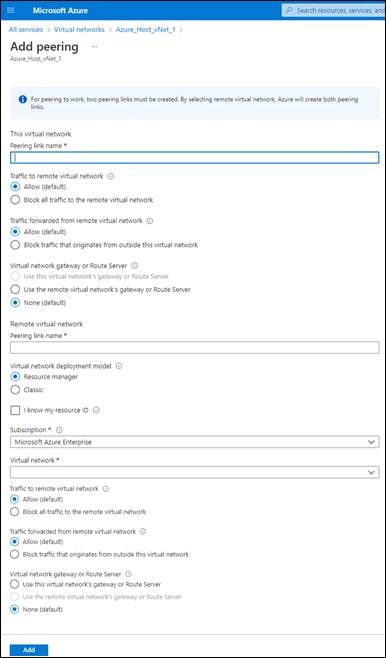

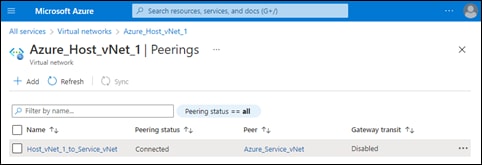

● vNet peering is established from the host vNet to the vHub. On the host vNet side, the peering is configured to use the remote VNG/route server – meaning the router/route server within the vHub. This is necessary because the IP address space of the host vNet must be propagated from the vHub to the Catalyst 8000v routers through the BGP peering between the vHub and the SD-WAN routers. To propagate the routes, the vHub router/route server must be aware of the host vNet address space. Likewise, to propagate routes learned from the Catalyst 8000v routers via BGP back to the host vNet, the host vNet peering must use the remote VNG/route server.

● On the Azure vHub, the routes learned via the BGP peering with the Catalyst 8000v routers are both associated to and propagated into the Default route table. Likewise, routes from the host vNet peering connections are both associated to and propagated to the Default route table. This ensures route visibility from the service VPN of the SD-WAN fabric through to the host vNets.

To summarize, the dynamic configuration within the Intent Management workflow uses API calls to establish the vNet connections between the host vNets and the vHub; and then associates and propagates routes to the Default route table.

Operate - Cisco Cloud onRamp for Multi-Cloud

This section of the guide discusses various processes for operating the Cisco Cloud onRamp for Multi-Cloud deployment.

Process: Modifying an Existing Cloud Gateway Deployment (Optional)

This section discusses various operations which can be performed after the deployment of Cisco Cloud onRamp for Multi-Cloud with Azure. The procedures within this process are optional, and primarily included for information purposes. The following procedures are discussed:

● Adding another host vNet to an existing tag that is mapped to a service VPN

● Removing a host vNet from an existing tag that is mapped to a service VPN

● Removing the mapping of a tag to a service VPN

● Deleting a tag when the tag is not mapped to a service VPN

Procedure 1. Adding Another Host vNet to a Tag that is Mapped to a Service VPN

When you add another host vNet to an existing tag that is mapped to a service VPN, the behavior is as follows:

● Cisco Cloud onRamp for Multi-Cloud uses API calls into Azure to add the tag to the host vNet

● Cisco Cloud onRamp for Multi-Cloud uses API calls to configure the host vNet-to-vHub peering of the newly tagged host vNet

Note that if the existing tag is not mapped to a service VPN, only the intent within the first bullet above (adding the tag to the host vNet) is performed.
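The behavior described above can be modeled with a few lines of Python. This is only a sketch of the decision logic, not the product's code. The dictionaries, the function name, and the service VPN number are hypothetical, and the returned strings stand in for the API calls into Azure.

```python
def add_vnet_to_tag(tag_to_vnets: dict, tag_to_vpn: dict, tag: str, vnet: str) -> list:
    """Return the ordered actions taken when a host vNet is added to an existing tag."""
    tag_to_vnets[tag].add(vnet)
    actions = [f"add tag {tag} to {vnet}"]
    # The host vNet-to-vHub peering is configured only when the tag is
    # already mapped to a service VPN.
    if tag in tag_to_vpn:
        actions.append(f"configure vHub peering for {vnet}")
    return actions


# Example: the Production tag is mapped to a (hypothetical) service VPN 1.
tags = {"Production": {"Host_vNet_1", "Host_vNet_2"}}
mappings = {"Production": 1}
result = add_vnet_to_tag(tags, mappings, "Production", "Host_vNet_3")
```

With the mapping in place, `result` holds both actions. With an empty `mappings` dictionary, only the tagging action would be returned.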

The following are the steps for adding another host vNet to an existing tag that is mapped to a service VPN.

Step 1. From any screen within vManage, click the three bars in the upper left corner of the screen to bring up the navigation panel on the left side of the screen.

Step 2. In the navigation panel, select Configuration > Cloud onRamp for Multi-Cloud.

This will bring you to the initial Cisco Cloud onRamp for Multi-Cloud screen, as shown in the figure below.

Step 3. Make sure the Cloud button at the top of the screen is selected.

You should see information regarding existing Cloud Gateways within the screen.

Step 4. Within the Discover workflow at the bottom of the screen, click on Host Private Networks.

This will bring up the Discover Host Private Networks screen.

Step 5. From the drop-down menu adjacent to Cloud Provider, select Microsoft Azure.

This will cause Cisco Cloud onRamp for Multi-Cloud to issue API calls to discover and display both tagged and untagged host vNets associated with any of the Microsoft Azure cloud accounts added to Cloud onRamp for Multi-Cloud. An example is shown in the figure below.

In the figure above, notice that Host_vNet_1 and Host_vNet_2 are both tagged with the Production tag, while Host_vNet_3 has no tag.

Step 6. From the drop-down menu under Tag Actions, select Edit Tag.

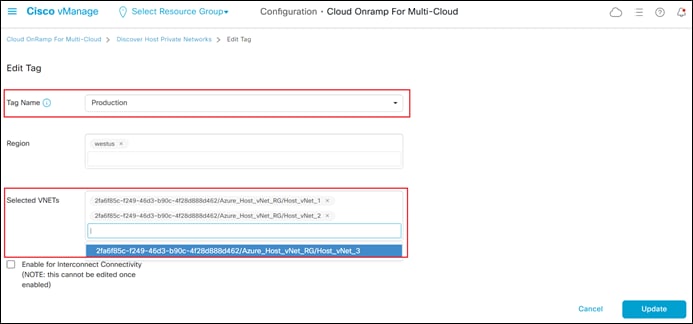

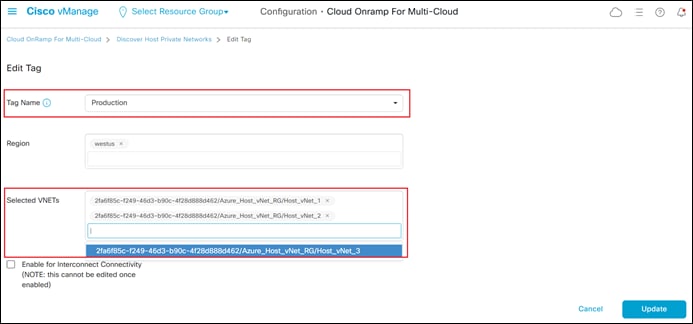

This will bring up the Edit Tag screen.

Step 7. From the drop-down menu adjacent to Tag Name, select the existing tag name to which you wish to add another host vNet.

The Selected VNETs field should automatically populate, showing you the host vNets already assigned to the tag. In the figure above you can see that once the Production tag is selected, both Host_vNet_1 and Host_vNet_2 show up as being already tagged.

Step 8. From the drop-down menu adjacent to Selected VNETs, choose the additional vNet(s) which you wish to add to the Production tag.

For this procedure, Host_vNet_3 is added to the Production tag, as shown in the figure above.

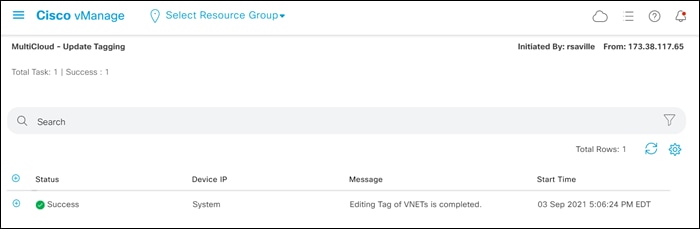

Step 9. Click the Update button to tag the additional host vNet.

After a few moments Cisco Cloud onRamp for Multi-Cloud will display a screen indicating that the host vNet(s) have been successfully tagged.

The next procedure discusses removing the tag from a host vNet when the tag is mapped to a service VPN.

Procedure 2. Removing the Tag from a Host vNet when the Tag is Mapped to a Service VPN

When a tag is applied to multiple host vNets and that tag is mapped to a service VPN, if you remove the tag from one of the host vNets the behavior is as follows:

● Cisco Cloud onRamp for Multi-Cloud uses API calls into Azure to remove the tag from the host vNet

● Cisco Cloud onRamp for Multi-Cloud uses API calls into Azure to remove the host vNet-to-vHub peering for the host vNet.

Note that if the existing tag is not mapped to a service VPN, only the intent within the first bullet above (removing the tag from the host vNet) is performed.

The steps for removing a tag that is currently mapped to a service VPN, from a host vNet, are similar to the steps for adding a host vNet to an existing tag that is currently mapped to a service VPN. The steps are as follows.

Step 1. From any screen within vManage, click the three bars in the upper left corner of the screen to bring up a navigation panel on the left side of the screen.

Step 2. In the navigation panel, select Configuration > Cloud onRamp for Multi-Cloud.

This will bring you to the initial Cisco Cloud onRamp for Multi-Cloud screen.

Step 3. Make sure the Cloud button at the top of the screen is selected.

You should see information regarding existing Cloud Gateways within the screen.

Step 4. Within the Discover workflow at the bottom of the screen, click on Host Private Networks.

This will bring up the Discover Host Private Networks screen.

Step 5. From the drop-down menu adjacent to Cloud Provider, select Microsoft Azure.

This will cause Cisco Cloud onRamp for Multi-Cloud to issue API calls to discover and display both tagged and untagged host vNets associated with any of the Microsoft Azure cloud accounts added to Cloud onRamp for Multi-Cloud.

Step 6. From the drop-down menu under Tag Actions, select Edit Tag.

This will bring up the Edit Tag screen.

Step 7. From the drop-down menu adjacent to Tag Name, select the existing tag name from which you wish to remove the host vNet.

The Selected VNETs field should automatically populate, showing you the vNets already assigned to the tag. In the figure above you can see that once the Production tag is selected, both Host_vNet_1 and Host_vNet_2 show up as being already tagged.

Step 8. Click the x to the right of one or more of the vNets already assigned to the tag within the Selected VNETs field, to delete the host vNet.

| Technical Note |

| You cannot delete the last host vNet associated with a tag through the Edit Tag option under Tag Actions. Instead, you must do one of the following: ● Select Delete Tag under Tag Actions to remove the tag from Cloud onRamp for Multi-Cloud. ● Remove the mapping of the tag to the service VPN within the Intent Management workflow. |

Step 9. Click the Update button to remove the tag from the host vNet.

After a few moments Cisco Cloud onRamp for Multi-Cloud will display a screen indicating that the host vNet has been successfully untagged.
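The behavior of this procedure, including the restriction in the Technical Note, can be sketched as follows. The exception class, function name, and data structures are hypothetical and exist only to illustrate the logic. The real workflow is driven from the vManage GUI.

```python
class LastVnetError(Exception):
    """Raised when the only remaining host vNet of a tag would be removed."""


def remove_vnet_from_tag(tag_to_vnets: dict, tag_to_vpn: dict, tag: str, vnet: str) -> list:
    """Return the ordered actions taken when a host vNet is removed from a tag."""
    vnets = tag_to_vnets[tag]
    if vnet not in vnets:
        raise KeyError(f"{vnet} is not tagged with {tag}")
    # Edit Tag cannot remove the last host vNet associated with a tag.
    if len(vnets) == 1:
        raise LastVnetError("use Delete Tag or remove the service VPN mapping instead")
    vnets.remove(vnet)
    actions = [f"remove tag {tag} from {vnet}"]
    # The peering is removed only when the tag is mapped to a service VPN.
    if tag in tag_to_vpn:
        actions.append(f"remove vHub peering for {vnet}")
    return actions
```

Raising an error for the last host vNet is a modeling choice that reflects the Technical Note. How vManage itself reports that condition is not described in this guide.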

The next procedure discusses removing the mapping of a tag to a service VPN within the Intent Management workflow.

Procedure 3. Removing the Mapping of a Tag to a Service VPN within the Intent Management Workflow

When you remove the mapping of a tag to a service VPN within the Intent Management workflow, and the tag is the only tag mapped to the service VPN, the behavior is as follows:

● Cisco Cloud onRamp for Multi-Cloud dynamically modifies the configuration of the Catalyst 8000v routers functioning as NVAs within the Cloud Gateway to remove the BGP peering with the vHub

● Cisco Cloud onRamp for Multi-Cloud uses API calls to remove the host vNet-to-vHub peerings within Azure for all of the host vNets to which the tag is applied

Note that the tag is not removed from the host vNets. This allows you to map the same tagged host vNets to a different service VPN without having to re-tag the host vNets.
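A minimal model of this un-mapping behavior is shown below. The function name and data structures are hypothetical. The sketch also assumes that the BGP peering is left in place when another tag is still mapped to the same service VPN, a case this guide does not spell out.

```python
def unmap_tag(tag_to_vnets: dict, tag_to_vpn: dict, tag: str) -> list:
    """Return the ordered actions taken when a tag is un-mapped from its service VPN."""
    vpn = tag_to_vpn.pop(tag)
    actions = []
    # Assumption: BGP peering with the vHub is removed only when no other
    # tag remains mapped to the same service VPN.
    if vpn not in tag_to_vpn.values():
        actions.append(f"remove BGP peering with vHub for service VPN {vpn}")
    # Peerings are removed for every host vNet carrying the tag.
    actions.extend(f"remove vHub peering for {v}" for v in sorted(tag_to_vnets[tag]))
    # tag_to_vnets is deliberately left untouched: the host vNets stay tagged.
    return actions
```

Because `tag_to_vnets` is not modified, the same tagged host vNets can later be mapped to a different service VPN without re-tagging, as noted above.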

The steps for removing the mapping of a tag to a service VPN within the Intent Management workflow are as follows:

Step 1. From any screen within vManage, click the three bars in the upper left corner of the screen to bring up a navigation panel on the left side of the screen.

Step 2. In the navigation panel, select Configuration > Cloud onRamp for Multi-Cloud.

This will bring you to the initial Cisco Cloud onRamp for Multi-Cloud screen.

Step 3. Make sure the Cloud button at the top of the screen is selected.

You should see information regarding existing Cloud Gateways within the screen.

Step 4. Within the Intent Management workflow at the bottom of the screen, click on Cloud Connectivity.

This will bring up the Intent Management - Connectivity screen.

Step 5. Make sure the Mapping button is selected.

Step 6. Click the Edit button to change the mapping.

Step 7. Scroll down to the green box indicating the mapping of the service VPN with the tag and click on it to de-select the mapping.

This will cause the box to become empty, indicating you have de-selected the mapping of the service VPN to the tag.

Step 8. Click the Save button at the bottom of the screen to implement your intent.

After several minutes Cisco Cloud onRamp for Multi-Cloud will display a screen indicating that the un-mapping of the service VPN to the tag has been successful.

The next procedure discusses deleting a tag from Cisco Cloud onRamp for Multi-Cloud, when the tag is not assigned to one or more host vNets which are mapped to a service VPN.

Procedure 4. Deleting a Tag when the Tag is not Assigned to One or More Host vNets Mapped to a Service VPN

You should only delete a tag within Cisco Cloud onRamp for Multi-Cloud after first removing the mapping of the tag to a service VPN within the Intent Management workflow, as discussed in the previous procedure. This ensures that the BGP peering between the Cisco Catalyst 8000v routers and the vHub, and the host vNet-to-vHub peering within Azure, are both removed before the tag is deleted. In other words, you should only delete a tag within Cisco Cloud onRamp for Multi-Cloud if the tag is not currently mapped to a service VPN within the Intent Management workflow.

When a tag is applied to one or more host vNets and that tag is not mapped to a service VPN, if you delete the tag from Cisco Cloud onRamp for Multi-Cloud the behavior is as follows:

● Cisco Cloud onRamp for Multi-Cloud uses API calls to Azure to remove the tag from all host vNets to which the tag was applied

● The tag is deleted from Cisco Cloud onRamp for Multi-Cloud
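The recommended ordering (un-map first, then delete) can be expressed as a simple guard. In this sketch the recommendation is modeled as a hard check. That is an assumption made for illustration, and the function and data structures are hypothetical.

```python
def delete_tag(tag_to_vnets: dict, tag_to_vpn: dict, tag: str) -> list:
    """Return the ordered actions taken when an un-mapped tag is deleted."""
    # Modeled as a hard guard; the guide states this as a recommendation.
    if tag in tag_to_vpn:
        raise ValueError(f"remove the mapping of {tag} to its service VPN first")
    vnets = tag_to_vnets.pop(tag)
    actions = [f"remove tag {tag} from {v}" for v in sorted(vnets)]
    actions.append(f"delete tag {tag}")
    return actions
```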

The steps for deleting a tag, when the tag is assigned to one or more host vNets which are not mapped to a service VPN, are as follows:

Step 1. From any screen within vManage, click the three bars in the upper left corner of the screen to bring up a navigation panel on the left side of the screen.

Step 2. In the navigation panel, select Configuration > Cloud onRamp for Multi-Cloud.

This will bring you to the initial Cisco Cloud onRamp for Multi-Cloud screen.

Step 3. Make sure the Cloud button at the top of the screen is selected.

You should see information regarding existing Cloud Gateways within the screen.

Step 4. Within the Discover workflow at the bottom of the screen, click on Host Private Networks.

This will bring up the Discover Host Private Networks screen.

Step 5. From the drop-down menu adjacent to Cloud Provider, select Microsoft Azure.

This will cause Cisco Cloud onRamp for Multi-Cloud to issue API calls to discover and display both tagged and untagged host vNets associated with any of the Microsoft Azure cloud accounts added to Cloud onRamp for Multi-Cloud.

Step 6. From the drop-down menu under Tag Actions, select Delete Tag.

This will bring up the Delete Tag screen.

Step 7. From the drop-down menu adjacent to Tag Name, select the existing tag name which you wish to delete.

The Selected VNETs field should automatically populate, showing you the vNets already assigned to the tag. In the figure above you can see that once the Production tag is selected, both Host_vNet_1 and Host_vNet_2 show up as being already tagged.

Step 8. Click the Delete button to delete the tag and remove the host vNet-to-vHub peering for the host vNets associated with the tag.

Step 9. Click OK to confirm in the confirmation pop-up window that appears.

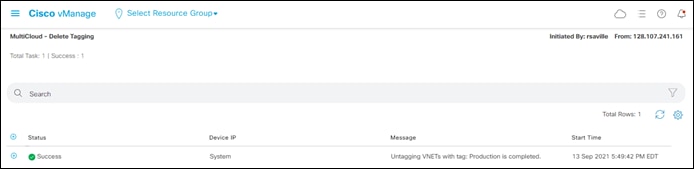

After a few moments Cisco Cloud onRamp for Multi-Cloud will display a screen indicating that the tag has been successfully deleted.

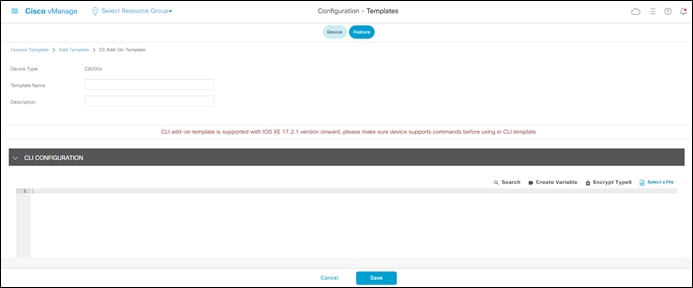

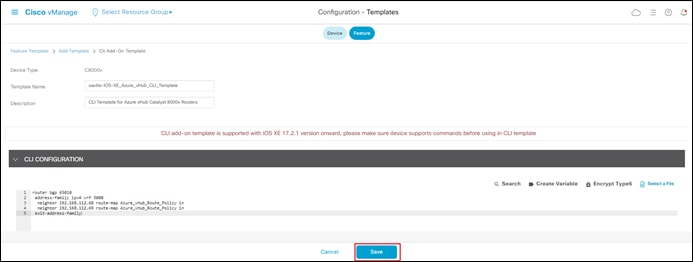

Process: Adding an Inbound Route Map to Filter BGP Routes from Azure (Optional)

As mentioned previously within this guide, Cisco Cloud onRamp for Multi-Cloud provisions a BGP AS route map filter named AZURE_CSR_NVA_ROUTE_POLICY in the outbound direction against the BGP peers in the Azure vHub. This route map filter does the following:

● Blocks routes from being sent from the last autonomous system number of the 16-bit ASN range, 65535, per IETF RFC 7300.

● Blocks routes from being sent that have passed through AS 65515. Since the Azure vHub uses BGP AS 65515, this essentially prevents sending routes to the vHub which have already passed through the vHub.

● Allows routes from any other ASNs.
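The effect of the three rules above can be approximated in Python. This sketch treats a route as blocked if either ASN appears anywhere in its AS path, which is a simplifying assumption. It is not the route map itself, and the constant and function names are invented for the example.

```python
from typing import Iterable

# ASNs whose presence in the AS path blocks a route from being advertised.
RESERVED_LAST_16BIT_ASN = 65535  # reserved per RFC 7300
AZURE_VHUB_ASN = 65515           # AS used by the Azure vHub
DENIED_ASNS = {RESERVED_LAST_16BIT_ASN, AZURE_VHUB_ASN}


def permit_outbound(as_path: Iterable[int]) -> bool:
    """Return True if a route with this AS path would be sent to the vHub."""
    return not any(asn in DENIED_ASNS for asn in as_path)


# Hypothetical AS paths used only for illustration.
print(permit_outbound([65001, 65002]))  # path avoids both denied ASNs
print(permit_outbound([65001, 65515]))  # path has passed through the vHub
```

The second call shows the loop-prevention case: a route that has already traversed AS 65515 is not sent back to the vHub.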

Some customers may desire inbound filtering of BGP routes – either via a BGP AS route map filter or by individual prefixes, or both. This process discusses how to do that through a combination of localized route policy and CLI templates.

| WARNING |

| Cisco Cloud onRamp for Multi-Cloud dynamically modifies the BGP configuration of the Cisco Catalyst 8000v routers that function as NVAs within the Cloud Gateway when a service VPN is mapped to a tag within the Intent Management workflow. Cisco Cloud onRamp for Multi-Cloud does not implement BGP configuration through a BGP feature template that is then applied to the service VPN within the device templates assigned to the Cisco Catalyst 8000v routers. Because of the dynamic nature of the BGP configuration with Cisco Cloud onRamp for Multi-Cloud, you must be very careful when modifying the BGP configuration through a CLI template to implement inbound filtering of BGP routes. Any mistake in the configuration could result in a failure to add (or later remove) the BGP inbound route filter, or in some circumstances could cause the overall application of intent within the Intent Management workflow to fail. Before you consider this approach, it is recommended that you first determine whether redistributing BGP routes into OMP on the Catalyst 8000v routers within the Cloud Gateway, and then implementing centralized policy to filter out unwanted routes, will meet your requirements. |

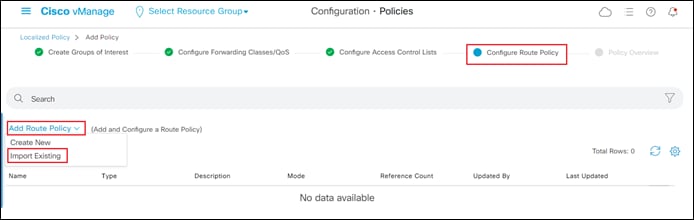

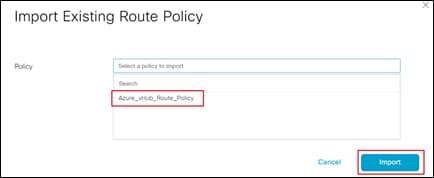

Since this process uses localized route policy to filter inbound BGP routes from Azure, using AS path lists, individual prefix lists, or both, you must first create a localized route policy. This is discussed in the next procedure.

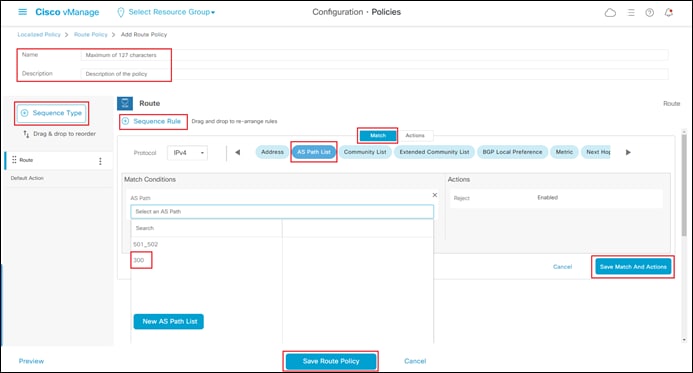

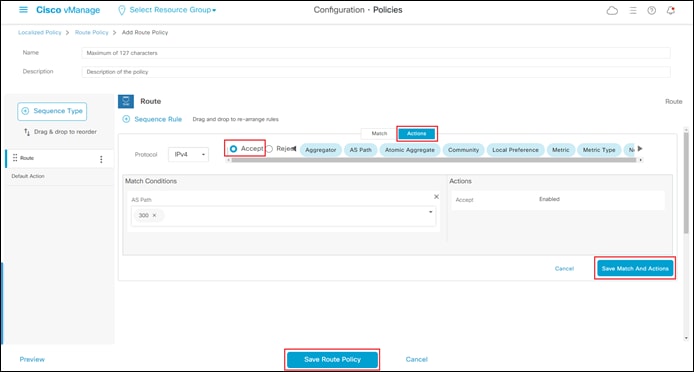

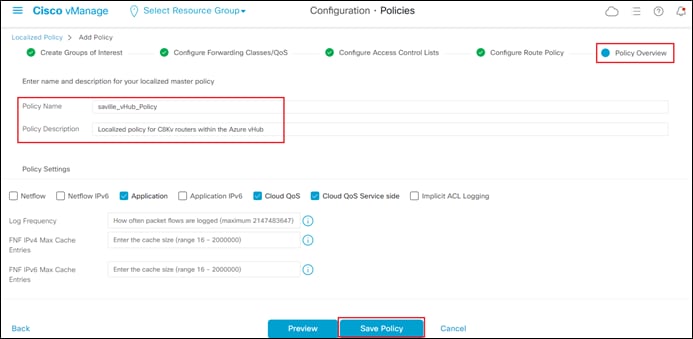

Procedure 1. Create a Localized Route Policy for Filtering BGP Routes

This procedure creates a localized route policy. This localized route policy will then be included within a localized policy in the next procedure. The following are the steps to create the localized route policy.

Step 1. From any screen within vManage, click the three bars in the upper left corner of the screen to bring up a navigation panel on the left side of the screen.

Step 2. In the navigation panel, select Configuration > Policies.

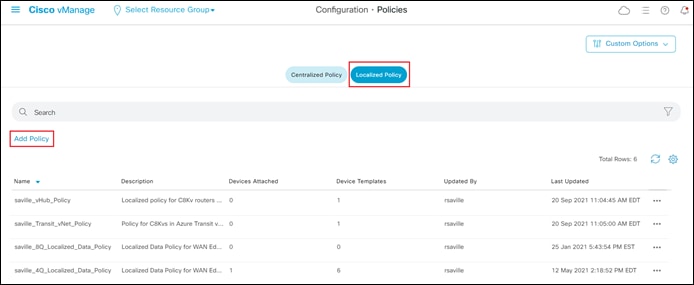

This will bring you to the initial Policies screen.

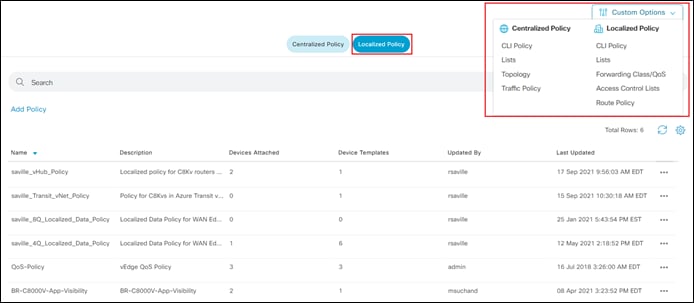

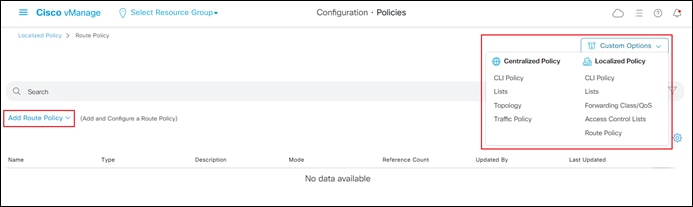

Step 3. Click on the Localized Policy button at the top center of the screen.

You should see information regarding existing localized policies defined within vManage.

The localized route policy used for this section will include both an AS path list and a prefix list. For this guide, both lists will be configured prior to creation of the route policy itself. In a real network you would normally choose to use either an AS path list filter or a prefix list filter.
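To make the combination of the two list types concrete, the sketch below models an inbound filter that accepts a route only if its originating AS is in an allowed set and its prefix falls within an allowed range. The ASN and prefix values are hypothetical examples, not values from this guide. Covering-prefix matching is also only one of several ways a prefix list can be written.

```python
import ipaddress
from typing import Sequence

# Hypothetical values chosen for illustration only.
ALLOWED_ORIGIN_ASNS = {65515}
ALLOWED_PREFIXES = [ipaddress.ip_network("10.20.0.0/16")]


def permit_inbound(prefix: str, as_path: Sequence[int]) -> bool:
    """Accept a route only if it passes both the AS path and the prefix check."""
    network = ipaddress.ip_network(prefix)
    # AS path check: the originating AS is the last entry in the path.
    as_ok = bool(as_path) and as_path[-1] in ALLOWED_ORIGIN_ASNS
    # Prefix check: the route must fall inside one of the allowed prefixes.
    prefix_ok = any(network.subnet_of(allowed) for allowed in ALLOWED_PREFIXES)
    return as_ok and prefix_ok
```

In a deployment that uses only one list type, the corresponding check would be applied alone.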

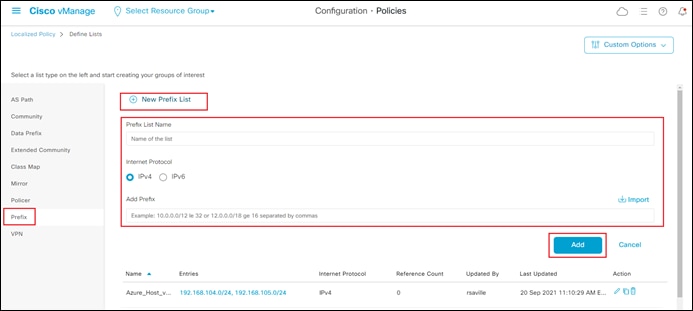

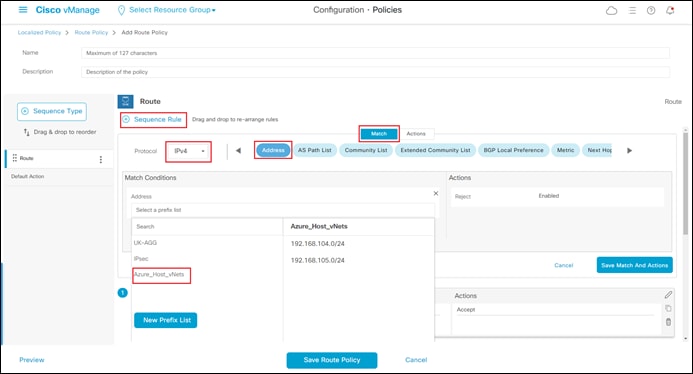

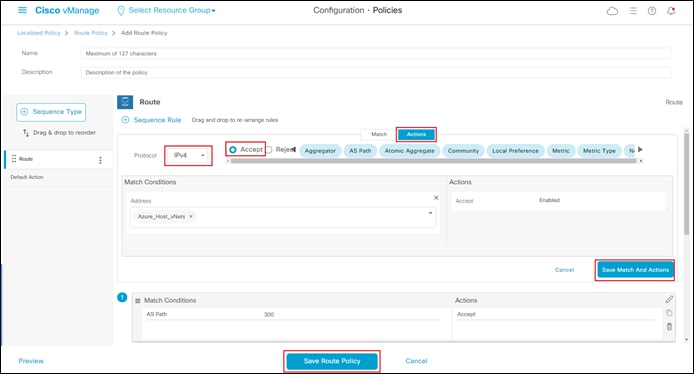

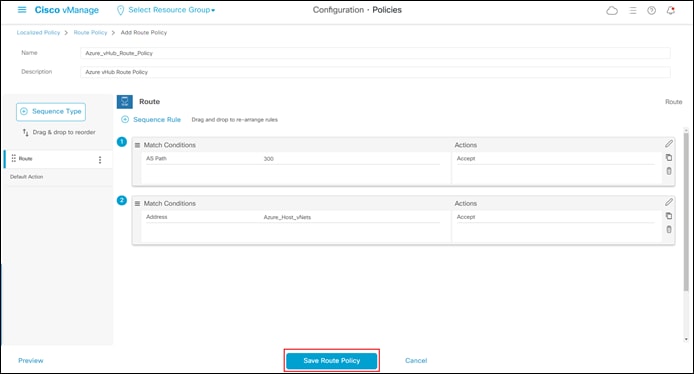

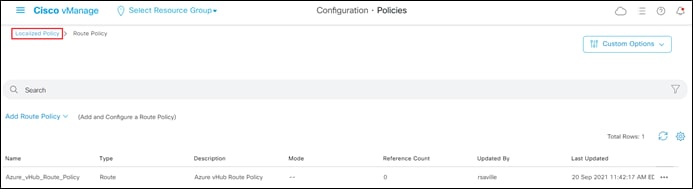

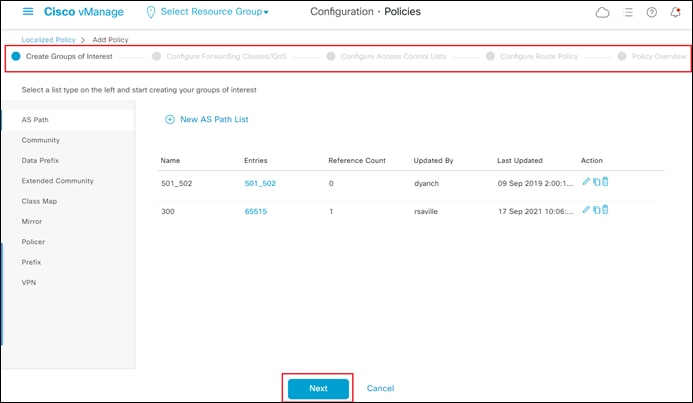

AS Path List

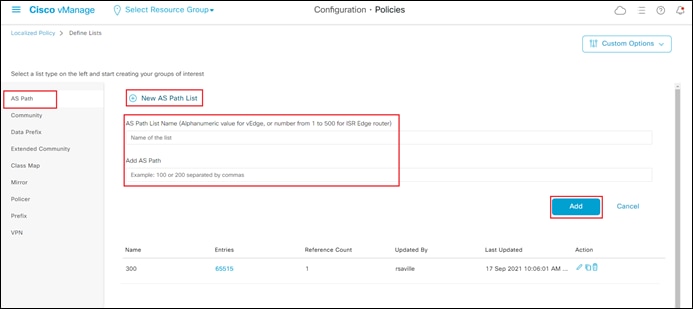

Step 4. From the drop-down menu under Custom Options, under Localized Policy, select Lists.

Step 5. From the navigation panel on the left side of the screen select AS Path.

This will bring up the existing AS path lists defined within vManage.