Understanding Cisco VTS

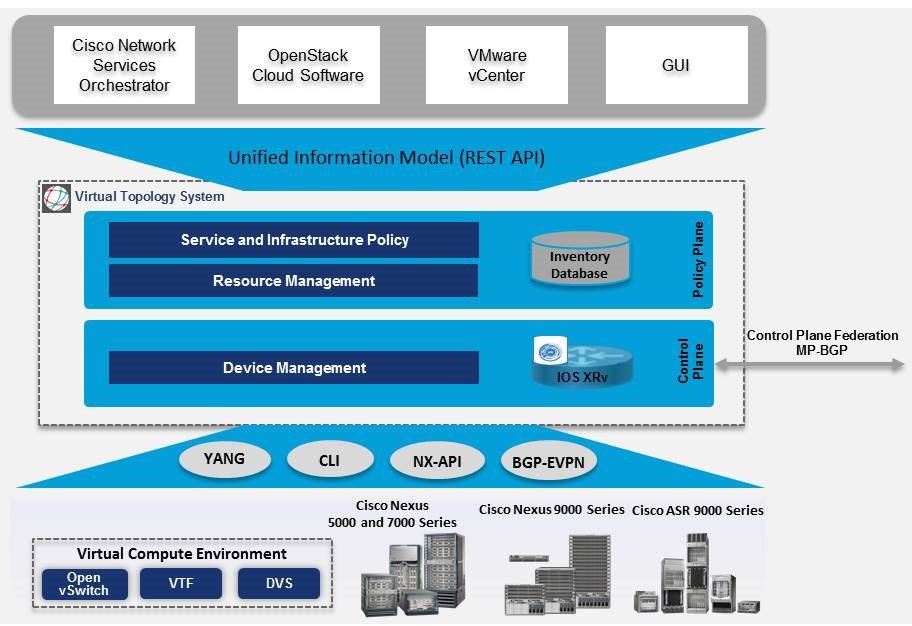

The Cisco Virtual Topology System (VTS) is a standards-based, open overlay management and provisioning system for data center (DC) networks. It automates DC overlay fabric provisioning for both physical and virtual workloads.

Cisco VTS provides a network virtualization architecture and software-defined networking (SDN) framework that meets the requirements of multitenant data centers for cloud services. It enables a policy-based approach for overlay provisioning.

Cisco VTS automates complex network overlay provisioning and management tasks through integration with cloud orchestration systems such as OpenStack and VMware vCenter. It reduces the complexity involved in managing heterogeneous network environments.

You can manage the solution in the following ways:

- Using the embedded Cisco VTS GUI
- Using a set of northbound Representational State Transfer (REST) APIs that can be consumed by orchestration and cloud management systems

Cisco VTS provides:

-

Fabric automation

-

Programmability

-

Open, scalable, standards-based solution

-

Cisco Nexus 2000, 3000, 5000, 7000, and 9000 Series Switches. For more information, see Supported Platforms in Cisco VTS 2.6 Installation Guide.

-

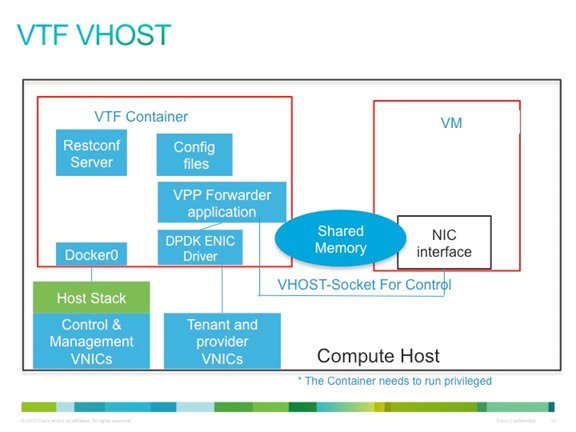

Software forwarder (Virtual Topology Forwarder [VTF])