Managing Cisco NFVI Pods

You can perform OpenStack management operations on Cisco NFVI pods, including adding and removing Cisco NFVI compute and Ceph nodes and replacing controller nodes. These actions are mutually exclusive: you can perform only one pod management action at a time. Before you perform a pod action, verify that the following requirements are met:

- The node is part of an existing pod.
- If the pod management task is to remove or replace a node, the node information exists in the setup_data.yaml file.
- If the pod management task is to add a node, the node information does not exist in the setup_data.yaml file.
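The setup_data.yaml prerequisites can be checked before you start an action. The following is a minimal bash sketch, not a Cisco VIM tool: the function names node_in_setup_data and pod_action_precheck are hypothetical, and the sketch assumes that each node appears as a key directly under a top-level SERVERS: section of setup_data.yaml. It is a line-based heuristic, not a YAML parser.

```bash
#!/usr/bin/env bash
# Hypothetical pre-check helpers; not part of Cisco VIM.
# Assumption: each node is a key directly under a top-level "SERVERS:" section.

# node_in_setup_data NODE FILE
# Succeeds if a line "  NODE:" appears inside the SERVERS: block of FILE.
# Line-based heuristic only: it does not parse YAML, and NODE is used as a regex.
node_in_setup_data() {
  awk -v node="$1" '
    # Any unindented, non-comment line starts a new top-level section.
    /^[^ \t#]/ { in_servers = ($0 ~ /^SERVERS:[ \t]*$/); next }
    in_servers && $0 ~ ("^[ \t]+" node ":[ \t]*$") { found = 1 }
    END { exit !found }
  ' "$2"
}

# pod_action_precheck ACTION NODE FILE
#   add:            NODE must NOT be defined in FILE yet
#   remove|replace: NODE must already be defined in FILE
pod_action_precheck() {
  local action="$1" node="$2" file="$3"
  if [ ! -r "$file" ]; then
    echo "cannot read $file" >&2
    return 2
  fi
  case "$action" in
    add)
      if node_in_setup_data "$node" "$file"; then
        echo "precheck failed: $node is already defined in $file" >&2
        return 1
      fi
      ;;
    remove|replace)
      if ! node_in_setup_data "$node" "$file"; then
        echo "precheck failed: $node is not defined in $file" >&2
        return 1
      fi
      ;;
    *)
      echo "unknown action: $action" >&2
      return 2
      ;;
  esac
}

# Example (hypothetical node name):
#   pod_action_precheck remove compute-3 /root/openstack-configs/setup_data.yaml
```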

To perform pod actions, see the Managing Hosts in Cisco VIM or NFVI Pods section.

General Guidelines for Pod Management

The setup_data.yaml file is the only user-generated configuration file that is used to install and manage the cloud. Although many pod management tasks involve changes to the setup_data.yaml file, the administrator never edits the system-generated setup_data.yaml file directly.

Note: To avoid translation errors, we recommend that you avoid copying and pasting commands from this document into the Linux CLI.

To update the setup_data.yaml file, do the following:

- Copy the setup data into a local directory:

[root@mgmt1 ~]# cd /root/

[root@mgmt1 ~]# mkdir MyDir

[root@mgmt1 ~]# cd MyDir

[root@mgmt1 MyDir]# cp /root/openstack-configs/setup_data.yaml <my_setup_data.yaml>

- Update the setup data manually, changing only the targeted fields:

[root@mgmt1 MyDir]# vi <my_setup_data.yaml>

- Run the pod management command with the updated file:

[root@mgmt1 MyDir]# ciscovim --setupfile ~/MyDir/<my_setup_data.yaml> <pod_management_action>
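The copy, edit, and run steps for updating setup_data.yaml can be wrapped in two small shell functions. This is a minimal bash sketch, not part of Cisco VIM: the function names stage_setup_data and run_pod_action, the fixed copy name my_setup_data.yaml, and the DRY_RUN variable are hypothetical. By default it only prints the ciscovim command so that you can review it before running it.

```bash
#!/usr/bin/env bash
# Hypothetical helpers that wrap the manual steps; not part of Cisco VIM.

# stage_setup_data [SRC] [WORKDIR]
# Copies the system-generated setup_data.yaml into WORKDIR and prints the copy's path.
stage_setup_data() {
  local src="${1:-/root/openstack-configs/setup_data.yaml}"
  local workdir="${2:-/root/MyDir}"
  local copy="$workdir/my_setup_data.yaml"
  mkdir -p -- "$workdir" || return 1
  # Work on a copy; the system-generated file is never modified.
  cp -- "$src" "$copy" || return 1
  printf '%s\n' "$copy"
}

# run_pod_action SETUPFILE ACTION
# With DRY_RUN=1 (the default) it only prints the ciscovim command line;
# set DRY_RUN=0 to actually execute it.
run_pod_action() {
  local cmd=(ciscovim --setupfile "$1" "$2")
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Example:
#   copy="$(stage_setup_data)"
#   vi "$copy"                                  # edit only the targeted fields
#   run_pod_action "$copy" <pod_management_action>
```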

In Cisco VIM, you can edit and enable a selected set of options in the setup_data.yaml file by using the reconfigure option. After installation, you can change the values of the feature parameters. Unless otherwise specified, Cisco VIM does not support unconfiguring a feature.

The following table lists the features that can be reconfigured after the pod is installed.

|

Features enabled after post-pod deployment |

Comments |

|---|---|

|

Optional OpenStack Services |

|

|

Pod Monitoring |

|

|

Export of EFK logs to External Syslog Server |

Reduces single point of failure on management node and provides data aggregation. |

|

NFS for Elasticsearch Snapshot |

NFS mount point for Elastic-search snapshot is used so that the disk on management node does not get full. |

|

Admin Source Networks |

White list filter for accessing management node admin service. |

|

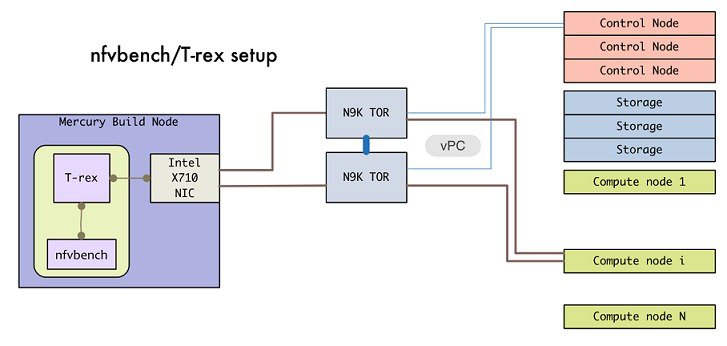

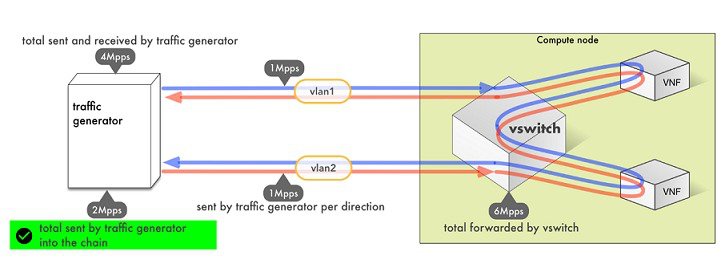

NFVBench |

Tool to help measure cloud performance. Management node needs a 10G Intel NIC (4x10G 710, or 2x10G 520 Intel NIC). |

|

EFK settings |

Enavles you to set EFK rotation frequency and size. |

|

OpenStack service password |

Implemented for security reasons, so that OpenStack passwords can be reset on-demand. |

| CIMC Password Reconfigure Post Install | Implemented for security reasons, so that CIMC passwords for C-series pod, can be reset on-demand. |

|

SwiftStack Post Install |

Integration with third-party Object-Store. The SwiftStack Post Install feature works only with Keystone v2. |

|

TENANT_VLAN_RANGES and PROVIDER_VLAN_RANGES |

Ability to increase the tenant and provider VLAN ranges on a pod that is up and running. It gives customers flexibility in network planning. |

|

Support of Multiple External Syslog Servers |

Ability to offload the OpenStack logs to an external Syslog server post-install. |

|

Replace of Failed APIC Hosts and add more leaf nodes |

Ability to replace Failed APIC Hosts, and add more leaf nodes to increase the fabric influence. |

|

Make Netapp block storage end point secure |

Ability to move the Netapp block storage endpoint from Clear to TLS post-deployment |

|

Auto-backup of Management Node |

Ability to enable/disable auto-backup of Management Node. It is possible to unconfigure the Management Node. |

|

VIM Admins |

Ability to configure non-root VIM Administrators. |

|

EXTERNAL_LB_VIP_FQDN |

Ability to enable TLS on external_vip through FQDN. |

|

EXTERNAL_LB_VIP_TLS |

Ability to enable TLS on external_vip through an IP address. |

|

http_proxy and/or https_proxy |

Ability to reconfigure http and/or https proxy servers. |

|

Admin Privileges for VNF Manager (ESC) from a tenant domain |

Ability to enable admin privileges for VNF Manager (ESC) from a tenant domain. |

|

SRIOV_CARD_TYPE |

Mechanism to go back and forth between 2-X520 and 2-XL710 as an SRIOV option in Cisco VIC NIC settings through reconfiguration. |

|

NETAPP |

Migrate NETAPP transport protocol from http to https. |

Identifying the Install Directory

To identify the installer directory, run the following commands:

[root@mgmt1 ~]# cd /root/

[root@mgmt1 ~]# ls -lrt | grep openstack-configs

lrwxrwxrwx. 1 root root 38 Mar 12 21:33 openstack-configs -> /root/installer-<tagid>/openstack-configs

The output shows that openstack-configs is a symbolic link into the installer directory, /root/installer-<tagid>.

Verify that the REST API server is running from the same installer directory by executing the following commands:

# cd installer-<tagid>/tools

# ./restapi.py -a status

Status of the REST API Server: active (running) since Thu 2016-08-18 09:15:39 UTC; 9h ago

REST API launch directory: /root/installer-<tagid>/
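The comparison between the openstack-configs link target and the REST API launch directory can be scripted. This is a minimal bash sketch, not part of Cisco VIM: the function names are hypothetical, and it assumes that the status output contains a line starting with `REST API launch directory:` exactly as shown above.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; not part of Cisco VIM.

# installer_dir [LINK]
# Prints the installer directory that the openstack-configs link points into,
# for example /root/installer-<tagid> for the link shown above.
installer_dir() {
  local link="${1:-/root/openstack-configs}" target
  target="$(readlink -f -- "$link")" || return 1
  [ -n "$target" ] || return 1
  dirname -- "$target"
}

# restapi_launch_dir
# Reads `./restapi.py -a status` output on stdin and prints the launch directory.
restapi_launch_dir() {
  sed -n 's/^REST API launch directory:[[:space:]]*//p'
}

# check_restapi_dir [LINK] < status-output
# Succeeds if the REST API server runs from the installer directory behind LINK.
check_restapi_dir() {
  local expected actual
  expected="$(installer_dir "${1:-/root/openstack-configs}")" || return 1
  actual="$(restapi_launch_dir)"
  actual="${actual%/}"            # the status output ends with a trailing slash
  if [ "$expected" != "$actual" ]; then
    echo "REST API runs from '$actual', expected '$expected'" >&2
    return 1
  fi
}

# Example, on the management node:
#   (cd /root/installer-<tagid>/tools && ./restapi.py -a status) | check_restapi_dir
```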

Managing Hosts in Cisco VIM or NFVI Pods

To perform actions on the pod, run the commands specified in the following table. If you log in as root, manually change to the /root/installer-<tagid> directory so that you run these Cisco NFVI pod commands from the correct working directory.

| Action | Steps | Restrictions |
|---|---|---|
| Remove block_storage or compute node | | You can remove multiple compute nodes but only one storage node at a time. The pod must have a minimum of one compute node and two storage nodes after the removal action. In Cisco VIM, the number of Ceph OSD nodes can vary from 3 to 20. You can remove one OSD node at a time as part of pod management. |
| Add block_storage or compute node | | You can add multiple compute nodes but only one storage node at a time. The pod must have a minimum of one compute node and two storage nodes before the addition action. In Cisco VIM, the number of Ceph OSD nodes can vary from 3 to 20. You can add one OSD node at a time as part of pod management. |
| Replace controller node | | You can replace only one controller node at a time. The pod can have a maximum of three controller nodes. In Cisco VIM, the replace controller node operation is supported in micro-pod. |

When you add a compute or storage node to a UCS C-Series pod, you can increase the management/provision address pool. Similarly, for a UCS B-Series pod, you can increase the Cisco IMC pool to provide routing space flexibility for pod networking. Along with server information, these are the only items you can change in the setup_data.yaml file after the pod is deployed. When you change the management or provisioning sections, or the CIMC network section (for UCS B-Series pods), you must not change the existing address block as defined on day 0. You can only add to the existing information by appending new address pool blocks, as shown in the following example:

    NETWORKING:
      :
      :
      networks:
        -
          vlan_id: 99
          subnet: 172.31.231.0/25
          gateway: 172.31.231.1
          ## 'pool' can be defined with a single IP address or a range of IP addresses
          pool:
            - 172.31.231.2 to 172.31.231.5     # IP address pool on day 0
            - 172.31.231.7 to 172.31.231.12    # IP address pool extension on day n
            - 172.31.231.20
          segments:
            ## CIMC IP allocation. Needs to be an external routable network
            - cimc
        -
          vlan_id: 2001
          subnet: 192.168.11.0/25
          gateway: 192.168.11.1
          ## 'pool' can be defined with a single IP address or a range of IP addresses
          pool:
            - 192.168.11.2 to 192.168.11.5     # IP address pool on day 0
            - 192.168.11.7 to 192.168.11.12    # IP address pool on day n
            - 192.168.11.20                    # IP address pool on day n
          segments:
            ## management and provision go together
            - management
            - provision
      :
      :

The IP address pool is the only change allowed in the networking space of the management/provision network and, for B-Series pods, the CIMC network. Because the existing address blocks cannot change, make sure on day 0 that the overall network has enough address space to accommodate future expansion. After you make the changes to the servers, roles, and corresponding address pools, run the add compute or add storage pod management command to add the new nodes to the pod.
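The two pool rules (keep every day-0 block unchanged, add only new blocks inside the existing subnet) can be checked before you run the pod action. This is a minimal bash sketch, not a Cisco VIM validator: all function names are hypothetical, and pool entries are passed as newline-separated strings in the `A.B.C.D` or `A.B.C.D to E.F.G.H` formats from the example, rather than read from setup_data.yaml.

```bash
#!/usr/bin/env bash
# Hypothetical pre-check for a day-n pool extension; not part of Cisco VIM.

# is_ipv4 ADDR: succeeds for a dotted-quad address with octets 0-255.
is_ipv4() {
  local IFS=. octet
  [[ $1 =~ ^[0-9]{1,3}(\.[0-9]{1,3}){3}$ ]] || return 1
  for octet in $1; do
    (( 10#$octet <= 255 )) || return 1
  done
}

# ip_to_int ADDR: prints the address as a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# in_subnet ADDR CIDR: succeeds if ADDR lies inside CIDR (e.g. 192.168.11.0/25).
in_subnet() {
  local net="${2%/*}" bits="${2#*/}" mask
  is_ipv4 "$net" && [[ $bits =~ ^[0-9]+$ ]] && (( bits <= 32 )) || return 1
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$net") & mask) ))
}

# check_pool_entry ENTRY CIDR: ENTRY is "A.B.C.D" or "A.B.C.D to E.F.G.H".
check_pool_entry() {
  local first="${1%% to *}" last="${1##* to }"
  is_ipv4 "$first" && is_ipv4 "$last" || return 1
  in_subnet "$first" "$2" && in_subnet "$last" "$2" || return 1
  (( $(ip_to_int "$first") <= $(ip_to_int "$last") ))
}

# check_pool_extension CIDR DAY0 NEW
# Every day-0 entry must still be present verbatim in NEW,
# and every entry in NEW must be well formed and inside CIDR.
check_pool_extension() {
  local cidr="$1" day0="$2" new="$3" entry
  while IFS= read -r entry; do
    [ -n "$entry" ] || continue
    if ! grep -Fxq -- "$entry" <<< "$new"; then
      echo "day-0 pool entry changed or removed: $entry" >&2
      return 1
    fi
  done <<< "$day0"
  while IFS= read -r entry; do
    [ -n "$entry" ] || continue
    if ! check_pool_entry "$entry" "$cidr"; then
      echo "malformed or outside $cidr: $entry" >&2
      return 1
    fi
  done <<< "$new"
  return 0
}

# Example, using the management/provision network from the example:
#   check_pool_extension 192.168.11.0/25 '192.168.11.2 to 192.168.11.5' \
#     $'192.168.11.2 to 192.168.11.5\n192.168.11.7 to 192.168.11.12\n192.168.11.20'
```

The check only compares entries as text, so rewriting a day-0 block in a different but equivalent form is also reported as a change, which errs on the side of caution.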

Recovering Cisco NFVI Pods

This section describes the recovery processes for the Cisco NFVI control node and for a pod that is installed through Cisco VIM. Recovery applies only to a pod on which a full Cisco VIM installation has previously completed, and it addresses the failure of one or more controller services, such as RabbitMQ, MariaDB, and other services. The management node must be up and running, and all the nodes must be accessible from the management node through SSH without passwords. You can also use this procedure to recover from a planned shutdown or an accidental power outage.

Cisco VIM supports the following control node recovery command:

# ciscovim cluster-recovery

The control node recovers after the network partition is resolved.

Note: Database sync between controller nodes can take time, which can cause cluster-recovery to fail. In that case, wait for the database sync to complete and then rerun cluster-recovery.
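The wait-and-rerun advice for cluster-recovery can be automated with a small retry loop. This is a minimal bash sketch, not part of Cisco VIM: the function name retry, the RETRIES and RETRY_WAIT variables, and their default values (3 attempts, 300 seconds) are hypothetical choices, not documented Cisco VIM timings.

```bash
#!/usr/bin/env bash
# Hypothetical retry helper; not part of Cisco VIM.

# retry CMD [ARGS...]
# Runs CMD up to RETRIES times (default 3), sleeping RETRY_WAIT seconds
# (default 300) between failed attempts. Returns 0 on the first success.
retry() {
  local attempts="${RETRIES:-3}" wait="${RETRY_WAIT:-300}" i
  for (( i = 1; i <= attempts; i++ )); do
    if "$@"; then
      return 0
    fi
    if (( i < attempts )); then
      echo "attempt $i/$attempts failed; retrying in ${wait}s" >&2
      sleep "$wait"
    fi
  done
  return 1
}

# Example: give the controller database sync time to finish between attempts.
#   RETRY_WAIT=600 retry ciscovim cluster-recovery
```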

To verify that the Nova services are healthy across the compute nodes, execute the following commands:

# source /root/openstack-configs/openrc

# nova service-list

To check the overall cloud status, execute the following commands:

# cd installer-<tagid>/tools

# ./cloud_sanity.py -c all

In case of a complete pod outage, you must follow a sequence of steps to bring the pod back. First, bring up the management node and check that the management node containers are up and running by using the docker ps -a command. Then bring up all the other pod nodes. Make sure that every node is reachable through password-less SSH from the management node, and verify that no network IP addresses have changed. You can get the node SSH IP access information from /root/openstack-configs/mercury_servers_info.
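Password-less SSH reachability of every node can be checked in one pass from the management node. This is a minimal bash sketch, not part of Cisco VIM: the function name check_passwordless_ssh is hypothetical, it assumes that nodes are reached as root, and it takes the node SSH IP addresses as arguments instead of parsing mercury_servers_info, whose format is not assumed here. `BatchMode=yes` and `ConnectTimeout` are standard OpenSSH client options.

```bash
#!/usr/bin/env bash
# Hypothetical reachability check; not part of Cisco VIM.
# Assumption: nodes are reached as root; pass the node SSH IPs as arguments.

# check_passwordless_ssh HOST...
# Prints OK/FAIL per host and returns non-zero if any host needs a password
# or cannot be reached.
check_passwordless_ssh() {
  local host failed=0
  for host in "$@"; do
    # BatchMode=yes makes ssh fail instead of prompting for a password;
    # </dev/null keeps ssh from reading the caller's stdin.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true </dev/null; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  return "$failed"
}

# Example (addresses are placeholders):
#   check_passwordless_ssh 10.0.0.11 10.0.0.12 10.0.0.13
```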

Execute the following command sequence:

- Check the setup_data.yaml file and runtime consistency on the management node:

# cd /root/installer-<tagid>/tools

# ciscovim run --perform 1,3 -y

- Execute the cloud sanity command:

# cd /root/installer-<tagid>/tools

# ./cloud_sanity.py -c all

- Check the status of the REST API server and the directory from which it is running:

# cd /root/installer-<tagid>/tools

# ./restapi.py -a status

Status of the REST API Server: active (running) since Thu 2016-08-18 09:15:39 UTC; 9h ago

REST API launch directory: /root/installer-<tagid>/

- If the REST API server is not running from the right installer directory, execute the following commands to run it from the correct directory:

# cd /root/installer-<tagid>/tools

# ./restapi.py -a setup

Check that the REST API server is running from the correct target directory:

# ./restapi.py -a status

Status of the REST API Server: active (running) since Thu 2016-08-18 09:15:39 UTC; 9h ago

REST API launch directory: /root/new-installer-<tagid>/

- Verify that the Nova services are healthy across the compute nodes by executing the following commands:

# source /root/openstack-configs/openrc

# nova service-list

If cloud-sanity fails, execute cluster-recovery (ciscovim cluster-recovery), and then rerun the cloud-sanity and nova service-list steps listed above.
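The check-recover-recheck sequence can be chained in one function. This is a minimal bash sketch, not part of Cisco VIM: the function names are hypothetical wrappers around the documented commands, it omits the REST API status step, and it must be run from /root/installer-<tagid>/tools because it calls ./cloud_sanity.py by relative path. Each step is its own function so that it can be replaced with a stub for a dry run.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the documented recovery checks; not part of Cisco VIM.
# Run it from /root/installer-<tagid>/tools.

validate_setup()   { ciscovim run --perform 1,3 -y; }
cloud_sanity()     { ./cloud_sanity.py -c all; }
cluster_recovery() { ciscovim cluster-recovery; }
# Subshell so that sourcing openrc does not change the caller's environment.
nova_services()    { ( source /root/openstack-configs/openrc && nova service-list ); }

# recover_and_verify
#   1. check setup_data.yaml and runtime consistency
#   2. run cloud sanity; if it fails, run cluster-recovery once and re-run it
#   3. list the Nova services
recover_and_verify() {
  validate_setup || return 1
  if ! cloud_sanity; then
    echo "cloud sanity failed; running cluster-recovery" >&2
    cluster_recovery || return 1
    cloud_sanity || return 1
  fi
  nova_services
}
```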

To recover compute and OSD nodes, restore their network connectivity and reboot them so that they can be accessed from the management node through SSH without a password.

To shut down the pod, bring it down in the following sequence:

- Shut down all VMs, then all the compute nodes
- Shut down all storage nodes serially
- Shut down all controllers one at a time
- Shut down the management node
- Shut down the networking gear

Bring the nodes up in the following order: networking gear, management node, storage nodes, controller nodes, and compute nodes. Make sure that each node type is completely booted up before you move on to the next node type.
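Waiting for one node type to finish booting before starting the next can be scripted as a polling loop with a timeout. This is a minimal bash sketch, not part of Cisco VIM: the function names, the POLL_INTERVAL variable, and the host names in the example are hypothetical, and it assumes that a node counts as booted once root can log in over key-based SSH. Powering the nodes on is outside its scope.

```bash
#!/usr/bin/env bash
# Hypothetical helper for a staged bring-up; not part of Cisco VIM.
# Assumption: a node counts as "booted" once root can log in over key-based SSH.

node_is_up() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$1" true </dev/null
}

# wait_for_group TIMEOUT_SECONDS HOST...
# Polls every POLL_INTERVAL seconds (default 15) until all HOSTs are up.
# Returns 1 and lists the hosts still down if TIMEOUT_SECONDS elapses.
wait_for_group() {
  local timeout="$1" host
  shift
  local deadline=$(( SECONDS + timeout ))
  local -a pending
  while :; do
    pending=()
    for host in "$@"; do
      node_is_up "$host" || pending+=("$host")
    done
    (( ${#pending[@]} == 0 )) && return 0
    if (( SECONDS >= deadline )); then
      echo "still down after ${timeout}s: ${pending[*]}" >&2
      return 1
    fi
    sleep "${POLL_INTERVAL:-15}"
  done
}

# Example, run on the management node once it is up, following the documented
# order (host names are placeholders):
#   wait_for_group 1800 storage-1 storage-2 storage-3 &&
#   wait_for_group 1800 controller-1 controller-2 controller-3 &&
#   wait_for_group 1800 compute-1 compute-2
```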

Validate the Cisco VIM API server by running the following command:

ciscovim run --perform 1,3 -y

Run the cluster-recovery command to bring up the pod after the power outage:

# help on sub-command

ciscovim help cluster-recovery

# execute cluster-recovery

ciscovim cluster-recovery

# execute docker cloudpulse check

# ensure all containers are up

cloudpulse run --name docker_check

Verify that all the VMs are up (not in the shutdown state). If any VMs are down, bring them up by using the Horizon dashboard.
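As a command-line alternative to the Horizon check, shut-off VMs can be listed and started with the OpenStack client. This is a minimal bash sketch, not part of Cisco VIM: the function names are hypothetical, and it assumes that the python-openstackclient `openstack` command is available where you run it and that admin credentials are loaded (for example, by sourcing /root/openstack-configs/openrc).

```bash
#!/usr/bin/env bash
# Hypothetical CLI alternative to the Horizon check; not part of Cisco VIM.
# Assumes the `openstack` client is installed and admin credentials are loaded.

# list_shutoff_vms: prints "ID NAME" for every instance in SHUTOFF state.
list_shutoff_vms() {
  openstack server list --all-projects --status SHUTOFF -f value -c ID -c Name
}

# start_shutoff_vms: starts every instance reported by list_shutoff_vms.
start_shutoff_vms() {
  local id name
  while read -r id name; do
    [ -n "$id" ] || continue
    echo "starting $id ($name)"
    # </dev/null keeps the client from consuming the list being read.
    openstack server start "$id" </dev/null || echo "failed to start $id" >&2
  done < <(list_shutoff_vms)
}
```

Run list_shutoff_vms first and review the output before starting anything, because some VMs may have been shut down intentionally.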
