Cisco MDS 9000 Family Fabric Manager Configuration Guide, Release 3.x

Table Of Contents

Monitoring System Processes and Logs

First and Last Core Verification

Online System Health Management

About Online System Health Management

Performing Internal Loopback Tests

Performing External Loopback Tests

Monitoring System Processes and Logs

This chapter provides details on monitoring the health of the switch. It includes the following sections:

• Online System Health Management

Displaying System Processes

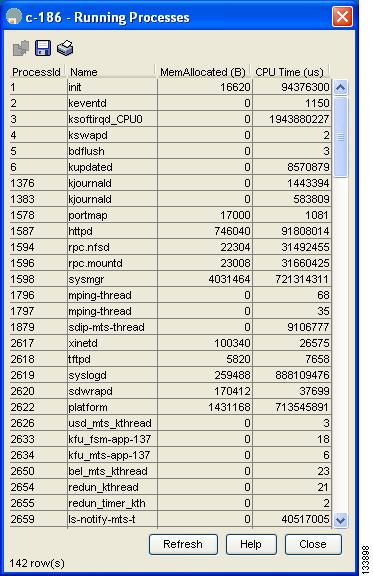

To obtain general information about all processes using Device Manager, follow these steps:

Step 1

Choose Admin > Running Processes.

You see the Running Processes dialog box shown in Figure 59-1.

Figure 59-1 Running Processes Dialog Box

Where:

• ProcessId = Process ID

• Name = Name of the process

• MemAllocated = Sum of all the dynamically allocated memory that this process has received from the system, including memory that may have been returned

• Runtime (ms) = CPU time the process has used, in milliseconds

Step 2

Click Close to close the dialog box.
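The columns described above map naturally onto a small record type. The following is a minimal sketch, assuming the dialog box contents have already been exported by some means; the `ProcessRow` class, the `top_by` helper, and all sample values are hypothetical and are not part of Device Manager.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessRow:
    """One row of the Running Processes dialog box (hypothetical model)."""
    process_id: int     # ProcessId column
    name: str           # Name column
    mem_allocated: int  # MemAllocated column
    runtime: int        # Runtime (ms) column


def top_by(rows, field, count=3):
    """Return the `count` rows with the largest value in `field`."""
    return sorted(rows, key=lambda r: getattr(r, field), reverse=True)[:count]


# Hypothetical sample values, not taken from a real switch.
rows = [
    ProcessRow(1, "init", 0, 120),
    ProcessRow(2430, "fspf", 524288, 4500),
    ProcessRow(2431, "zone", 1048576, 900),
]

busiest = top_by(rows, "runtime", count=1)
largest = top_by(rows, "mem_allocated", count=1)
print(busiest[0].name, largest[0].name)
```

Sorting on one column at a time mirrors how you would typically scan the dialog box for the process that uses the most CPU time or memory.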

Displaying System Status

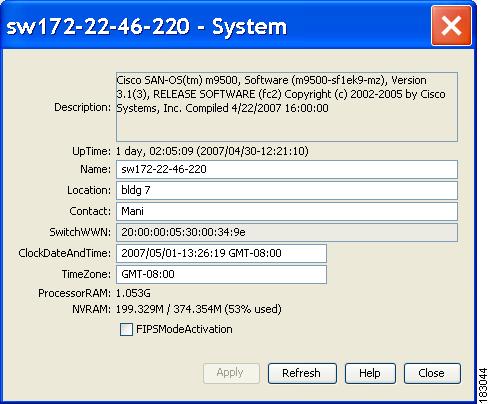

To display system status from Device Manager, follow these steps:

Step 1

Choose Physical > System.

You see the System dialog box shown in Figure 59-2.

Figure 59-2 System Dialog Box

Step 2

Click Close to close the dialog box.

Core and Log Files

For information on copying core and log files, refer to the Cisco MDS 9000 Family CLI Configuration Guide.

Displaying Core Status

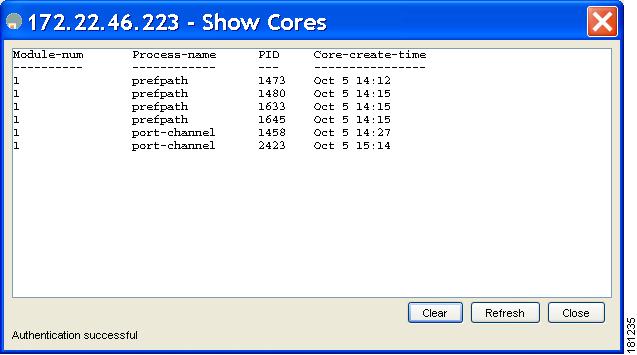

To display cores on a switch using Device Manager, follow these steps:

Note

Be sure SSH2 is enabled on this switch.

Step 1

Choose Admin > Show Cores.

You see the Show Cores dialog box shown in Figure 59-3.

Figure 59-3 Show Cores Dialog Box

Where:

Module-num shows the slot number on which the core was generated. In this example, the fspf core was generated on the active supervisor module (slot 5), fcc was generated on the standby supervisor module (slot 6), and acltcam and fib were generated on the switching module (slot 8).

Step 2

Click Close to close the dialog box.

Clearing the Core Directory

To clear the cores on a switch using Device Manager, follow these steps:

Note

Be sure SSH2 is enabled on this switch.

Step 1

Click Clear to clear the cores.

The software keeps the last few cores per service and per slot and clears all other cores present on the active supervisor module.

Step 2

Click Close to close the dialog box.

First and Last Core

The first and last core feature works within limited system resources and retains the most important core files. In general, the first core and the most recently generated core contain the most useful debugging information, so the feature tries to retain both.

If the core files are generated from the active supervisor module, the number of core files retained for a service is defined in the service.conf file. There is no upper limit on the total number of core files in the active supervisor module. The defined number of core files applies to every VDC.

To display the core files saved in the system, use the following commands:

First and Last Core Verification

You can view specific information about the saved core files. Example 59-1 and Example 59-2 provide further details on saved core files.

Example 59-1 Regular Service on Default-VDC on Local Node

For example, pixm crashes five times. The output of show cores vdc-all displays five core files. Three minutes later, the second oldest core file gets deleted to comply with the number of cores defined in the service.conf file.

    switch# show cores vdc-all
    VDC No Module-num Process-name PID  Core-create-time
    ------ ---------- ------------ ---  ----------------
    1      5          pixm         4103 Jan 29 01:30
    1      5          pixm         5105 Jan 29 01:32
    1      5          pixm         5106 Jan 29 01:32
    1      5          pixm         5107 Jan 29 01:33
    1      5          pixm         5108 Jan 29 01:40

    switch# show cores vdc-all
    VDC No Module-num Process-name PID  Core-create-time
    ------ ---------- ------------ ---  ----------------
    1      5          pixm         4103 Jan 29 01:30
    1      5          pixm         5106 Jan 29 01:32
    1      5          pixm         5107 Jan 29 01:33
    1      5          pixm         5108 Jan 29 01:40

Example 59-2 Regular Service on vdc 2 on Active Supervisor Module

For example, there are five radius core files from vdc2 on the active supervisor module. The second and third oldest files get deleted to comply with the number of core files defined in the service.conf file.

    switch# show cores vdc vdc2
    VDC No Module-num Process-name PID  Core-create-time
    ------ ---------- ------------ ---  ----------------
    2      5          radius       6100 Jan 29 01:47
    2      5          radius       6101 Jan 29 01:55
    2      5          radius       6102 Jan 29 01:55
    2      5          radius       6103 Jan 29 01:55
    2      5          radius       6104 Jan 29 01:57

    switch# show cores vdc vdc2
    VDC No Module-num Process-name PID  Core-create-time
    ------ ---------- ------------ ---  ----------------
    2      5          radius       6100 Jan 29 01:47
    2      5          radius       6103 Jan 29 01:55
    2      5          radius       6104 Jan 29 01:57
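The retention behavior in Example 59-1 and Example 59-2 can be sketched as follows: keep the oldest core, keep the newest cores, and drop files starting from the second oldest until the per-service limit is met. This is an illustration only, not the switch's implementation. The helper names `parse_show_cores` and `retain_first_and_last` are hypothetical, and the limits of four and three core files are inferred from the two examples, because the service.conf values are not shown.

```python
from typing import List, NamedTuple


class Core(NamedTuple):
    vdc: int
    module: int
    process: str
    pid: int
    created: str


def parse_show_cores(text: str) -> List[Core]:
    """Parse the data rows of `show cores` output, skipping prompt, header, and rule lines."""
    cores = []
    for line in text.splitlines():
        parts = line.split()
        # A data row has 7 tokens and starts with a numeric VDC number.
        if len(parts) == 7 and parts[0].isdigit():
            vdc, module, process, pid = parts[:4]
            cores.append(Core(int(vdc), int(module), process, int(pid), " ".join(parts[4:])))
    return cores


def retain_first_and_last(cores: List[Core], max_cores: int) -> List[Core]:
    """Keep the oldest core plus the newest (max_cores - 1) cores.

    `cores` must be ordered oldest first. `max_cores` stands in for the
    per-service limit from service.conf and is assumed to be at least 2.
    """
    if max_cores < 2:
        raise ValueError("this sketch assumes max_cores >= 2")
    if len(cores) <= max_cores:
        return list(cores)
    return [cores[0]] + list(cores[-(max_cores - 1):])


# First output block of Example 59-1.
EXAMPLE_59_1 = """\
switch# show cores vdc-all
VDC No Module-num Process-name PID Core-create-time
------ ---------- ------------ --- ----------------
1 5 pixm 4103 Jan 29 01:30
1 5 pixm 5105 Jan 29 01:32
1 5 pixm 5106 Jan 29 01:32
1 5 pixm 5107 Jan 29 01:33
1 5 pixm 5108 Jan 29 01:40
"""

before = parse_show_cores(EXAMPLE_59_1)
after = retain_first_and_last(before, max_cores=4)  # limit of 4 is an assumption
print([core.pid for core in after])
```

With an assumed limit of four, the sketch drops PID 5105, which matches the second output block of Example 59-1. With an assumed limit of three applied to the five radius cores, it would keep PIDs 6100, 6103, and 6104, which matches Example 59-2.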

Online System Health Management

The Online Health Management System (system health) is a hardware fault detection and recovery feature. It ensures the general health of switching, services, and supervisor modules in any switch in the Cisco MDS 9000 Family.

Note

For information on most Online Health Management System procedures, refer to the Cisco MDS 9000 Family CLI Configuration Guide.

This section includes the following topics:

• About Online System Health Management

• Performing Internal Loopback Tests

• Performing External Loopback Tests

About Online System Health Management

The Online Health Management System (OHMS) is a hardware fault detection and recovery feature. It runs on all Cisco MDS switching, services, and supervisor modules and ensures the general health of any switch in the Cisco MDS 9000 Family. The OHMS monitors system hardware in the following ways:

• The OHMS component running on the active supervisor maintains control over all other OHMS components running on the other modules in the switch.

• The system health application running in the standby supervisor module monitors only the standby supervisor module, and only if that module is available in HA standby mode. See the "HA Switchover Characteristics" section on page 17-2.

The OHMS application launches a daemon process in all modules and runs multiple tests on each module to test individual module components. The tests run at preconfigured intervals, cover all major fault points, and isolate any failing component in the MDS switch. The OHMS running on the active supervisor maintains control over all other OHMS components running on all other modules in the switch.

On detecting a fault, the system health application attempts the following recovery actions:

• Performs additional testing to isolate the faulty component.

• Attempts to reconfigure the component by retrieving its configuration information from persistent storage.

• If unable to recover, sends Call Home notifications, system messages, and exception logs, then shuts down and discontinues testing the failed module or component (such as an interface).

• Sends Call Home and system messages and exception logs as soon as it detects a failure.

• Shuts down the failing module or component (such as an interface).

• Isolates failed ports from further testing.

• Reports the failure to the appropriate software component.

• Switches to the standby supervisor module, if an error is detected on the active supervisor module and a standby supervisor module exists in the Cisco MDS switch. After the switchover, the new active supervisor module restarts the active supervisor tests.

• Reloads the switch if a standby supervisor module does not exist in the switch.

• Provides CLI support to view, test, and obtain test run statistics or change the system health test configuration on the switch.

• Performs tests to focus on the problem area.

Each module is configured to run the test relevant to that module. You can change the default parameters of the test in each module as required.
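The recovery actions listed above can be read as a decision flow. The following is a minimal sketch of one possible reading. The ordering of the actions, the `Fault` class, and the `recovery_actions` function are assumptions made for illustration and do not describe how OHMS is implemented.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Fault:
    """Hypothetical description of a detected hardware fault."""
    on_active_supervisor: bool  # fault was detected on the active supervisor module
    reconfigure_succeeds: bool  # reloading configuration from persistent storage clears the fault


def recovery_actions(fault: Fault, standby_available: bool) -> List[str]:
    """Return the recovery actions, in an assumed order, for one fault."""
    actions = [
        "send Call Home, system messages, and exception logs",
        "run additional tests to isolate the faulty component",
        "reconfigure the component from persistent storage",
    ]
    if fault.reconfigure_succeeds:
        return actions

    # Recovery failed: take the component out of service and report it.
    actions += [
        "shut down the failing module or component",
        "isolate failed ports from further testing",
        "report the failure to the appropriate software component",
    ]
    if fault.on_active_supervisor:
        if standby_available:
            actions.append("switch over to the standby supervisor module")
        else:
            actions.append("reload the switch")
    return actions


for step in recovery_actions(Fault(on_active_supervisor=True, reconfigure_succeeds=False),
                             standby_available=False):
    print(step)
```

The sketch makes the final branch explicit: a fault on the active supervisor module leads to a switchover when a standby supervisor module exists and to a switch reload when it does not.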

Performing Internal Loopback Tests

You can run manual loopback tests to identify hardware errors in the data path in the switching or services modules, and the control path in the supervisor modules. Internal loopback tests send and receive FC2 frames to and from the same ports and report the round-trip time in microseconds. These tests are available for Fibre Channel, IPS, and iSCSI interfaces.

Choose Interface > Diagnostics > Internal to perform an internal loopback test from Device Manager.

Performing External Loopback Tests

You can run manual loopback tests to identify hardware errors in the data path in the switching or services modules, and the control path in the supervisor modules. External loopback tests send and receive FC2 frames to/from the same port or between two ports.

You need to connect a cable (or a plug) to loop the Rx port to the Tx port before running the test. If you are testing to/from the same port, you need a special loop cable. If you are testing to/from different ports, you can use a regular cable. This test is only available for Fibre Channel interfaces.

Choose Interface > Diagnostics > External to perform an external loopback test from Device Manager.

Default Settings

Table 59-1 lists the default system health and log settings.

Table 59-1 Default System Health and Log Settings

| Parameters | Default |
| --- | --- |
| Kernel core generation | One module |
| System health | Enabled |
| Loopback frequency | 5 seconds |
| Failure action | Enabled |
