Table Of Contents

Microsoft Exchange 2010 with VMware VSphere on Cisco Unified Computing System with NetApp Storage

Exchange 2010 Deployment Scenario

Global Site Selector (GSS) and Application Control Engine (ACE)

Load Balancing for an Exchange 2010 Environment

Exchange 2010 MAPI Client Load Balancing Requirements

Configuring Static Port Mapping For RPC-Based Services

Exchange 2010 Outlook Web Access Load Balancing Requirements

Outlook Anywhere Load Balancing Requirements

Cisco Application Control Engine Overview

Cisco Unified Computing System Hardware

Cisco UCS 2100 Series Fabric Extenders

UCS 6100 XP Series Fabric Interconnect

Programmatically Deploying Server Resources

Dynamic Provisioning with Service Profiles

NAM Appliance for Virtual Network Monitoring

NetApp Storage Technologies and Benefits

NetApp Strategy for Storage Efficiency

Cisco Wide Area Application Services for Branch Users

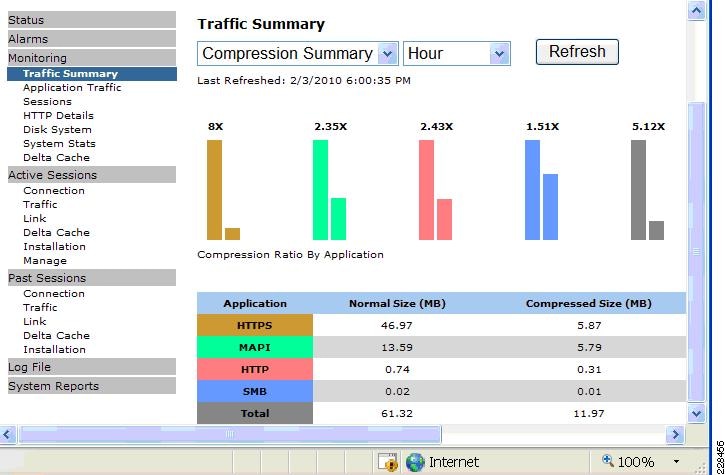

Advanced Compression Using DRE and LZ Compression

Messaging Application Programming Interface (MAPI) Protocol Acceleration

Secure Socket Layer (SSL) Protocol Acceleration

Advanced Data Transfer Compression

Application-Specific Acceleration

Solution Design and Deployment

Exchange 2010 Design Considerations

Processor Cores—Factors to Consider

Microsoft Exchange 2010 Calculator

Configuring CPU and Memory on Exchange VMs

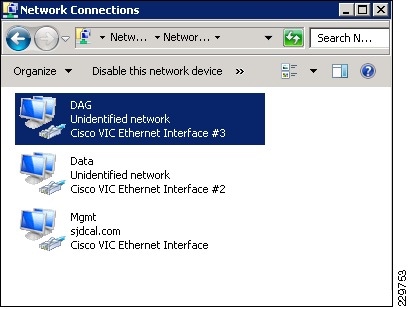

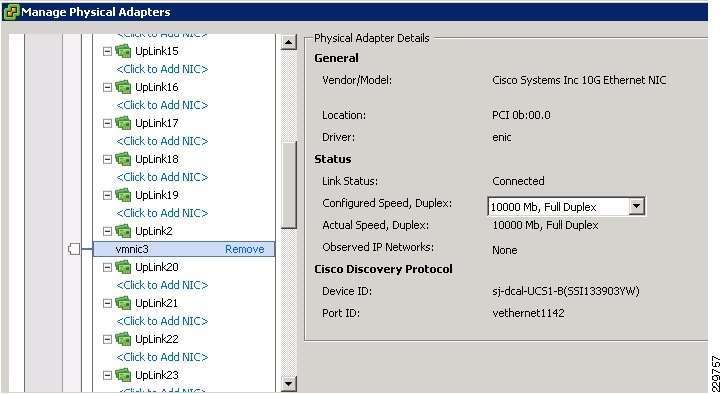

M81KR (Palo) Network Interface

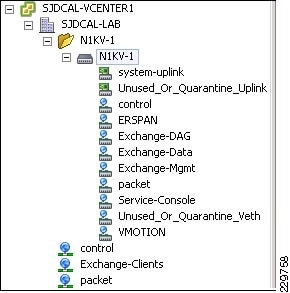

Nexus 1000V and Virtual Machine Networking

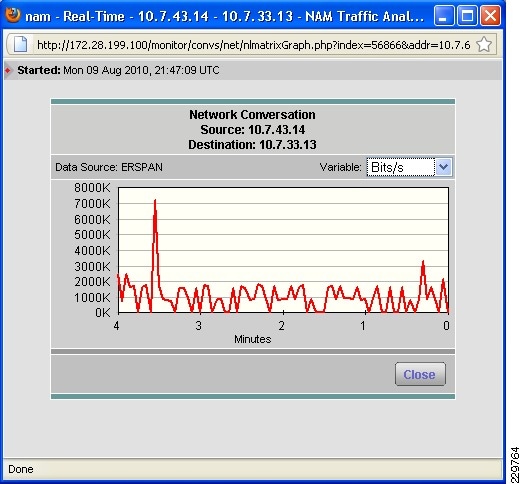

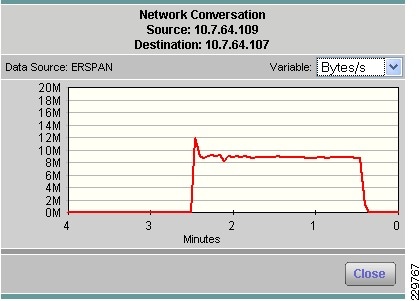

NAM Reporting of ERSPAN from Nexus 1000V

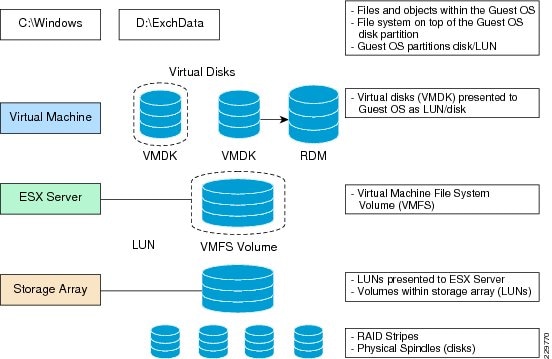

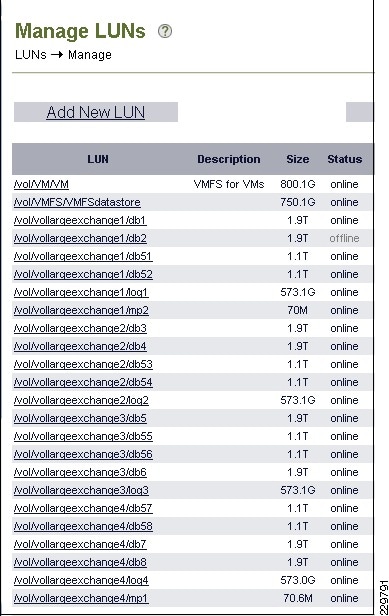

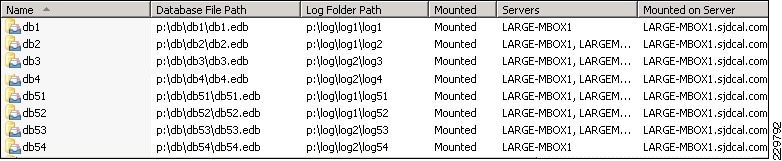

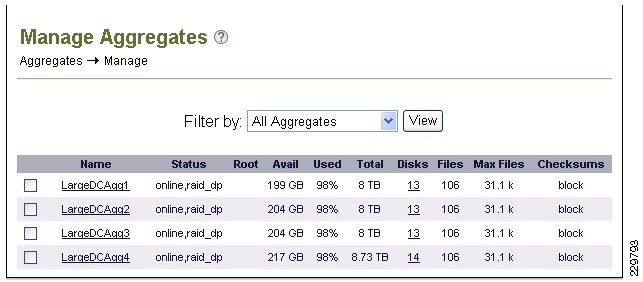

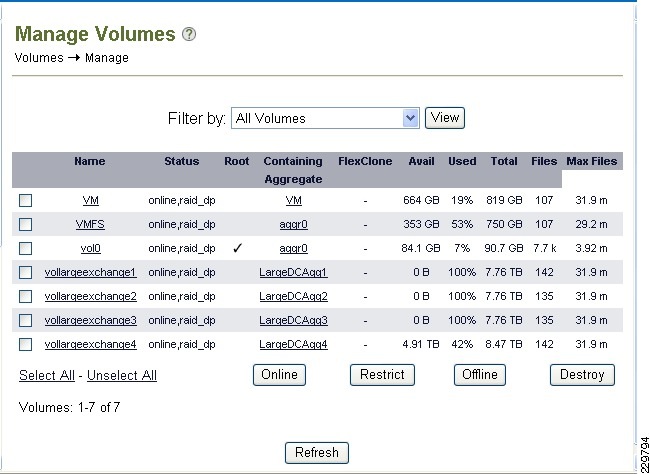

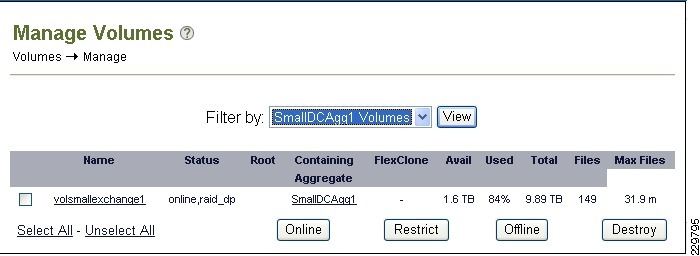

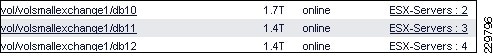

Storage Configuration for Exchange 2010

NetApp RAID-DP Storage Calculator for Exchange 2010

Aggregate, Volume, and LUN Sizing

NetApp Best Practices for Exchange 2010

VSphere Virtualization Best Practices for Exchange 2010

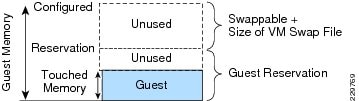

Memory Configuration Guidelines

Networking Configuration Guidelines

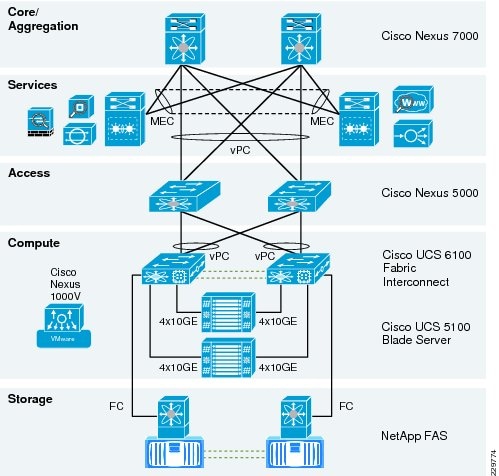

Data Center Network Architecture

Global Site Selector Configuration for Site Failover

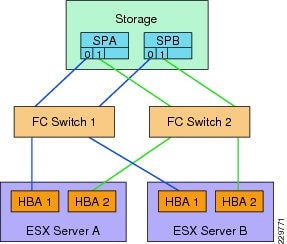

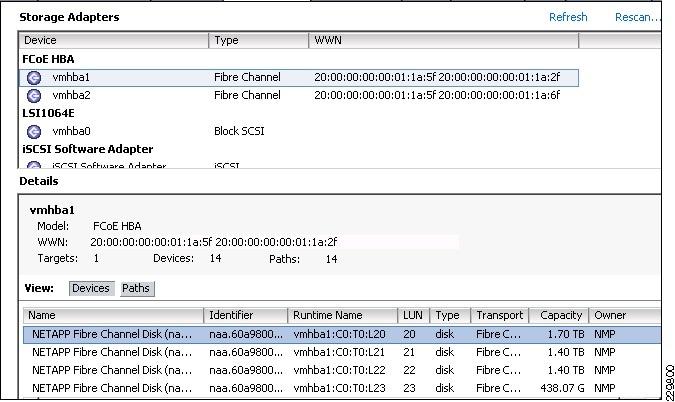

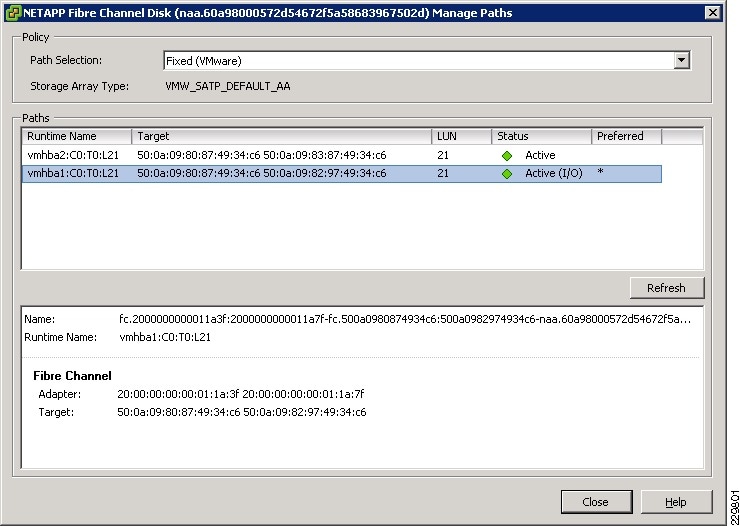

Fibre-Channel Storage Area Network

Validating Exchange Server Performance

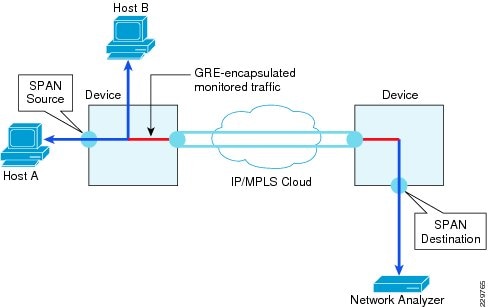

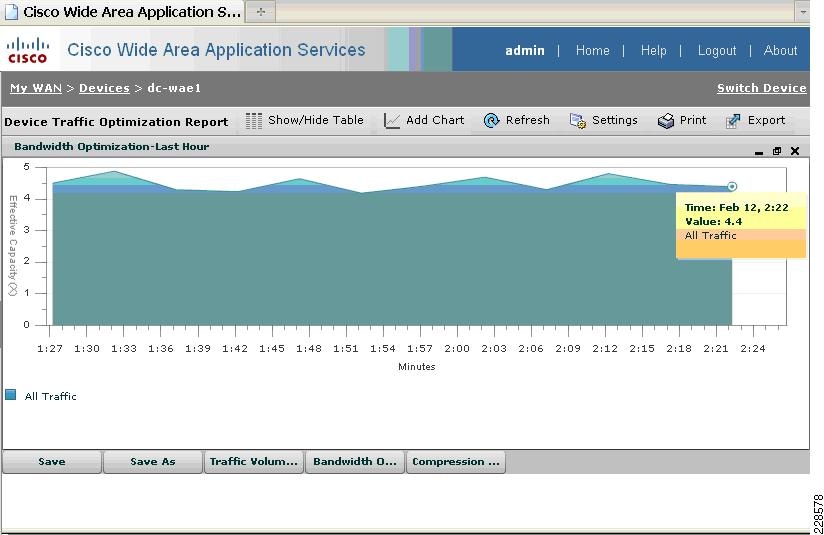

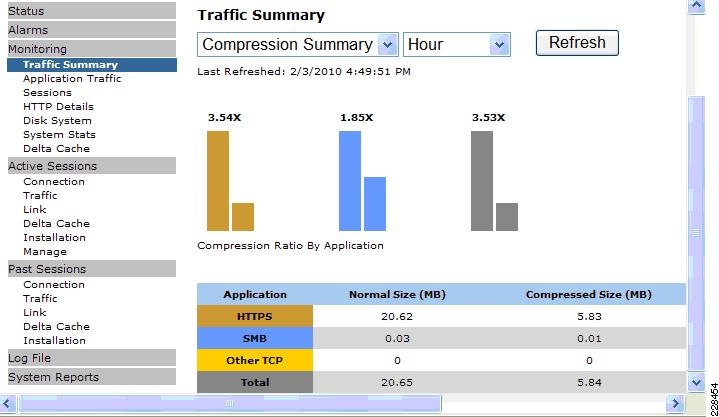

WAAS Setup Between Branch and Data Center

WAAS Setup for Remote User to Data Center

Appendix—ACE Exchange Context Configuration

About Cisco Validated Design (CVD) Program

Microsoft Exchange 2010 with VMware VSphere on Cisco Unified Computing System with NetApp Storage

Last Updated: February 7, 2011

Building Architectures to Solve Business Problems

About the Authors

Karen Chan, Solutions Architect, Systems Architecture and Strategy, Cisco Systems

Karen is currently a Solutions Architect in the Cisco Systems Architecture and Strategy group. Prior to this role, she was an Education Architect in the Industry Solutions Engineering team at Cisco. She has also worked as a technical marketing engineer in the Retail group on the Cisco PCI for Retail solution as well as in the Financial Services group on the Cisco Digital Image Management solution. Prior to Cisco, she spent 11 years in software development and testing, including leading various test teams at Citrix Systems, Orbital Data, and Packeteer, to validate software and hardware functions on their WAN optimization and application acceleration products. She holds a bachelor of science in electrical engineering and computer science from the University of California, Berkeley.

Alex Fontana, Technical Solutions Architect, VMware

Alex Fontana is a Technical Solutions Architect at VMware, with a focus on virtualizing Microsoft tier-1 applications. Alex has worked in the information technology industry for over 10 years, five of which have been spent designing and deploying Microsoft applications on VMware technologies. Alex specializes in Microsoft operating systems and applications with a focus on Active Directory, Exchange, and VMware vSphere. Alex is VMware, Microsoft, and ITIL certified.

Robert Quimbey, Microsoft Alliance Engineer, NetApp

Robert joined NetApp in 2007 after nearly 9 years as a member of the Microsoft Exchange product team, where he was responsible for storage and high availability. Since joining NetApp, his activities have continued to center around Exchange, including designing sizing tools, best practices, and reference architectures.

Microsoft Exchange 2010 with VMware VSphere on Cisco Unified Computing System with NetApp Storage

Introduction

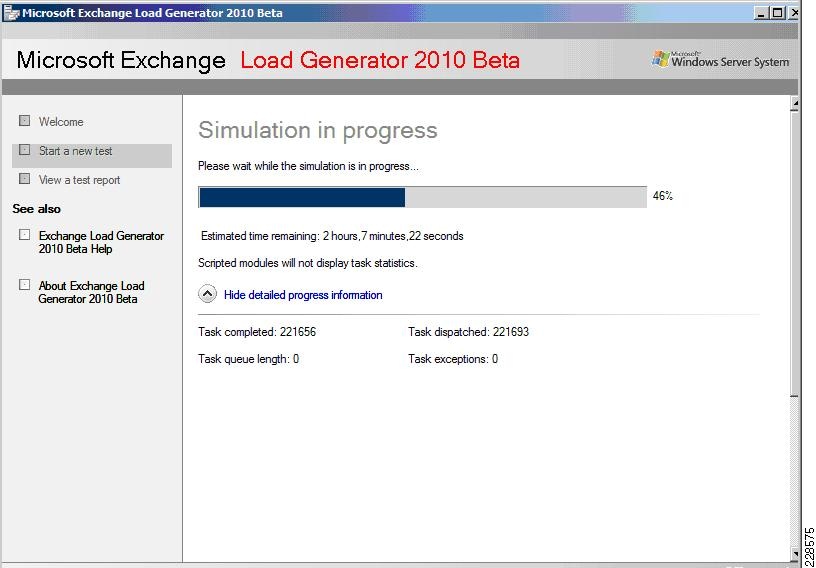

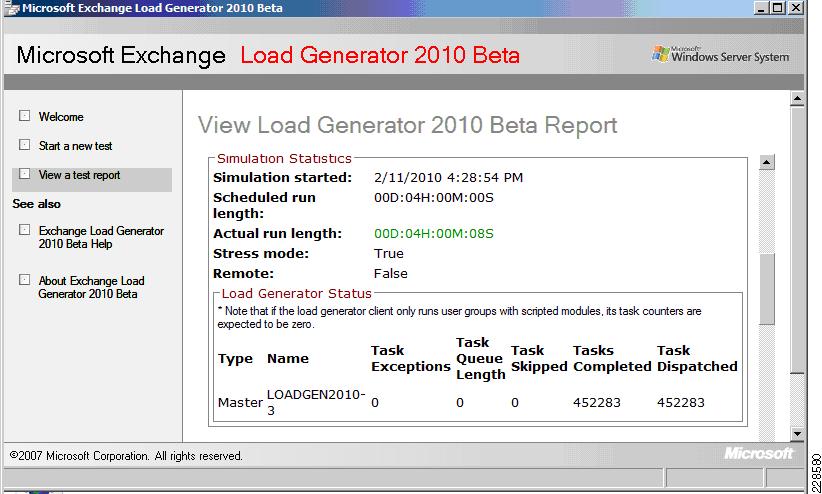

This design and deployment guide demonstrates how enterprises can apply best practices for VSphere 4.0, Microsoft Exchange 2010, and NetApp Fabric-Attached Storage (FAS) arrays to run virtualized and native installations of Exchange 2010 servers on the Cisco® Unified Computing System™ (UCS). VSphere and Exchange 2010 are deployed in a Cisco Data Center Business Advantage architecture that provides redundancy and high availability, network virtualization, network monitoring, and application services. The Cisco Nexus 1000V virtual switch is deployed as part of the VSphere infrastructure and integrated with the Cisco Network Analysis Module (NAM) appliance to provide visibility into, and monitoring of, the virtual server farm. This solution was validated in a multi-site data center scenario with a realistically sized Exchange deployment, using Microsoft's Exchange 2010 Load Generator (LoadGen) tool to simulate realistic user loads. The goal of the validation was to verify that the Cisco UCS, NetApp storage, and network link sizing were sufficient to accommodate the LoadGen user workloads. Cisco Global Site Selector (GSS) provides site failover in this multi-site Exchange environment by communicating securely with the Cisco ACE load balancer to determine application server health. User connections from branch office and remote sites are optimized across the WAN with Cisco Wide Area Application Services (WAAS).

Audience

This document is primarily intended for enterprises interested in virtualizing their Exchange 2010 servers using VMware VSphere on the Cisco Unified Computing System and planning to use NetApp FAS to store their virtual machines and Exchange mailboxes. This design guide also applies to those who want to understand how they can optimize application delivery to the end user through a combination of server load balancing and WAN optimization.

Solution Overview

This solution provides an end-to-end architecture with Cisco, VMware, Microsoft, and NetApp technologies that demonstrates how Microsoft Exchange 2010 servers can be virtualized to support around 10,000 user mailboxes and to provide high availability and redundancy for server and site failover. The design and deployment guidance covers the following solution components:

• Exchange 2010 application

• Unified Computing System server platform

• VSphere virtualization platform

• Nexus 1000V software switching for the virtual servers

• Data Center Business Advantage Architecture LAN and SAN architectures

• NetApp storage components

• Cisco GSS and ACE

• Cisco WAAS

• Cisco Network Analysis Module appliance

Exchange 2010 Deployment Scenario

This solution involves a multi-site data center deployment—a large data center, a small data center, and a data center for site disaster recovery geographically located between the large and the small data centers. The servers in the disaster recovery data center are all passive under normal operations. The large data center has two Mailbox servers, two Hub Transport servers, two Client Access Servers, and two Active Directory/DNS servers—all in an active-active configuration. A single server failure of any role causes failover to the secondary server in the local site. For a dual-server failure or local site failure, failover to the disaster recovery data center servers occurs. The small data center has one Exchange server that contains the Mailbox server, Hub Transport, and Client Access roles and it also has one Active Directory/DNS server. In the event of any single server failure or a local site failure, failover to the servers in the disaster recovery site occurs.

The server assignments to the UCS blade servers in the large data center are:

• Two UCS blade servers, each with a native (bare-metal) installation of a Mailbox server. These two Mailbox servers are members of one Database Availability Group (DAG) in this solution. There is a third node in this DAG that resides in the disaster recovery data center.

• Two other UCS blade servers, each installed with:

  – A virtual machine that has a combined Hub Transport Server and Client Access Server installation.

  – A second virtual machine that has the Active Directory and DNS servers.

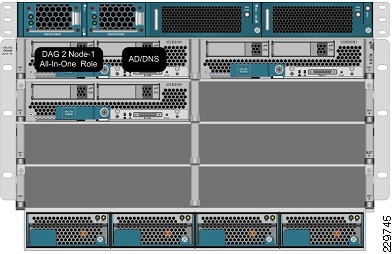

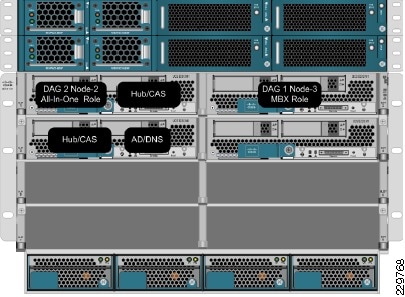

Figure 1 illustrates the assignment of the application servers to the UCS blades in the large data center.

Figure 1 Application Servers Assigned to UCS Blade in Large Data Center

The server assignments to the UCS blade servers in the small data center are:

• One UCS blade server supports a virtual machine that has all three Exchange roles installed (CAS, Hub Transport, and Mailbox). The Mailbox server on this virtual machine is one of the two nodes in a DAG that spans the small data center and the disaster recovery data center.

• On that same UCS blade server, a second virtual machine supports the Active Directory/DNS server (see Figure 2).

Figure 2 Application Server VMs Assigned to UCS Blade in Small Data Center

The server assignments to the UCS blade servers in the disaster recovery data center are:

• One UCS blade server is configured the same as the blade server in the small data center, supporting one virtual machine for all three Exchange server roles and one virtual machine for the Active Directory/DNS server. The Mailbox server is the second node of the DAG that spans the small data center and this disaster recovery data center. Any server failure at the small data center site results in failover to the appropriate virtual machine on this blade. See Table 1 for information on how many active and passive mailboxes are in the small and disaster recovery data centers under different failure scenarios.

• One UCS blade server supports a native (bare-metal) installation of a Mailbox server. This is the third node of the DAG that spans the large data center and this disaster recovery data center. This node is passive under normal operations and is activated only if both Mailbox servers in the large data center fail or the large data center undergoes a site failure. See Table 2 for information on how many active and passive mailboxes are in the large and disaster recovery data centers under different failure scenarios. A second UCS blade hosts a CAS/HT VM and an AD/DNS VM.
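The failover rules for the three sites can be summarized in a short Python sketch. It is only an illustration of the scenario described above; the function name `failover_target` and the returned labels are hypothetical and do not correspond to any Exchange or Cisco interface.

```python
def failover_target(site: str, failed_local_servers: int, site_down: bool = False) -> str:
    """Return where a server role runs after failures, per the scenario above.

    site: "large" or "small" (labels chosen for this sketch).
    failed_local_servers: failed servers of the same role at that site.
    """
    if site not in ("large", "small"):
        raise ValueError(f"unknown site: {site}")
    if failed_local_servers < 0:
        raise ValueError("failed_local_servers must not be negative")

    if site_down:
        # A site failure always moves the role to the disaster recovery site.
        return "disaster-recovery"

    if site == "large":
        # Two servers per role: a single failure is absorbed locally.
        if failed_local_servers == 0:
            return "local"
        if failed_local_servers == 1:
            return "local-secondary"
        return "disaster-recovery"

    # Small data center: one server per role, so any failure leaves the site.
    return "local" if failed_local_servers == 0 else "disaster-recovery"


if __name__ == "__main__":
    print(failover_target("large", 1))   # single failure in the large site
    print(failover_target("small", 1))   # single failure in the small site
```

The sketch treats every role the same way; in the actual design, Mailbox failover is handled by the DAG while client redirection is handled by GSS and ACE.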

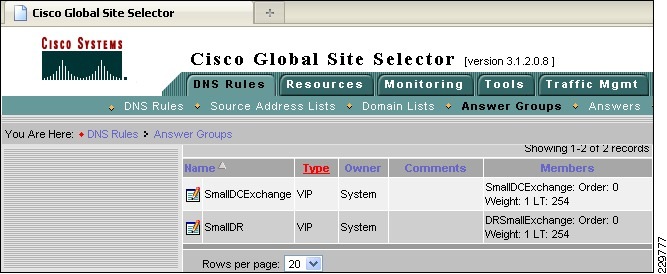

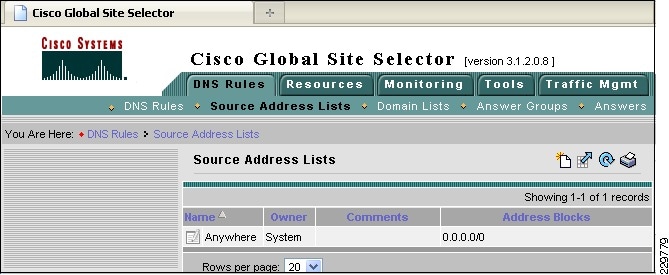

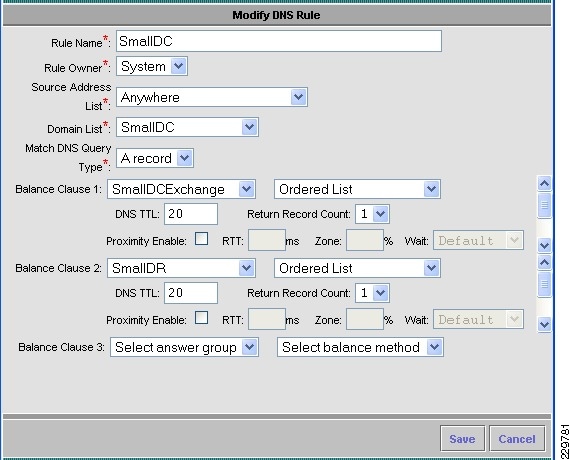

Global Site Selector (GSS) and Application Control Engine (ACE)

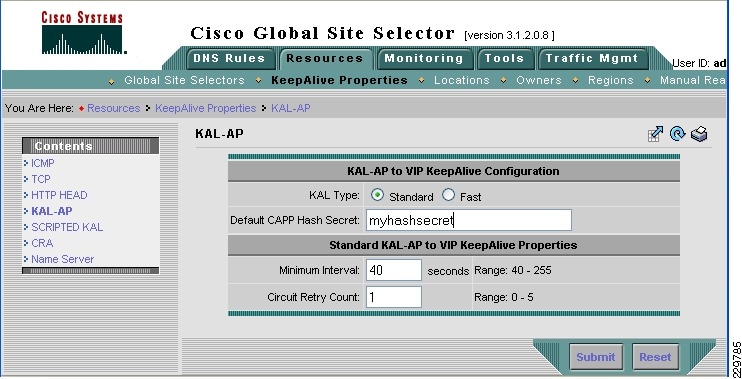

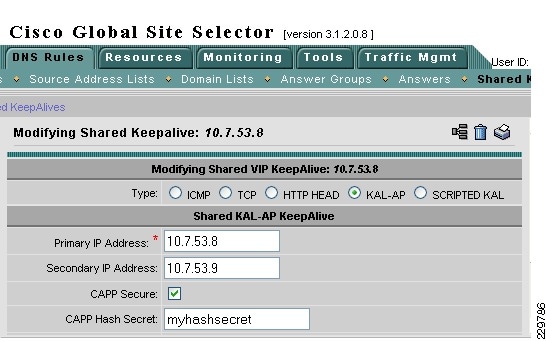

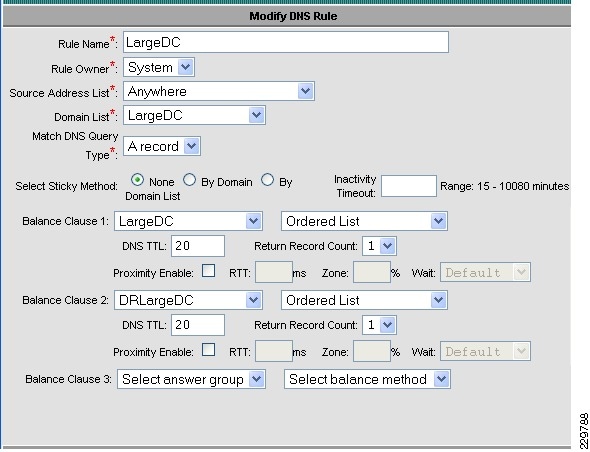

The Cisco Global Site Selector appliance is used in this solution to redirect incoming Exchange client requests to the appropriate data center servers, depending on the failover scenario. The Cisco GSS traffic management process continuously monitors the load and health of a device (such as the ACE or a particular server) within each data center. The Cisco GSS uses this information in conjunction with customer-controlled load balancing algorithms to select, in real time, the best data center or server destinations that are available and not overloaded per user-definable load conditions. In this manner, the Cisco GSS intelligently selects the best destination to ensure application availability and performance for any device that uses common DNS requests for access.

While the Cisco GSS can monitor health and performance of almost any server or load balancing/application delivery device using ICMP, TCP, HTTP-header, and SNMP probes, when used with the ACE load balancer, it has enhanced monitoring capabilities. To retrieve more granular monitoring data that is securely transmitted in a timely manner, the Cisco GSS can make use of the special KAL-AP monitoring interface built into the Cisco Application Control Engine (ACE) Modules and Cisco ACE 4710 appliances. For users this means:

• Higher application availability due to Cisco GSS providing failover across distributed data centers

• Better application performance due to Cisco GSS optimization of load growth to multiple servers across multiple data centers

For IT operators this means the Cisco GSS adds agility by automating reactions to changes in local and global networks to ensure application availability and performance. If a network outage occurs, the Cisco GSS can automatically or under administrative control direct clients to a disaster recovery site within seconds. The Cisco GSS also adds security and intelligence to the DNS process by offering cluster resiliency that can be managed as a single entity.

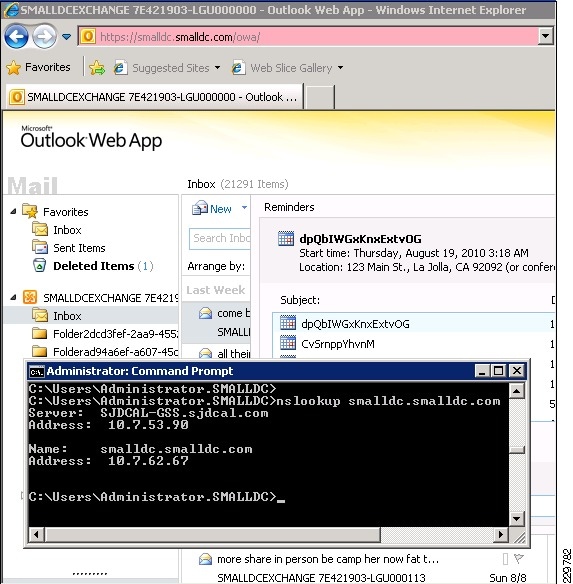

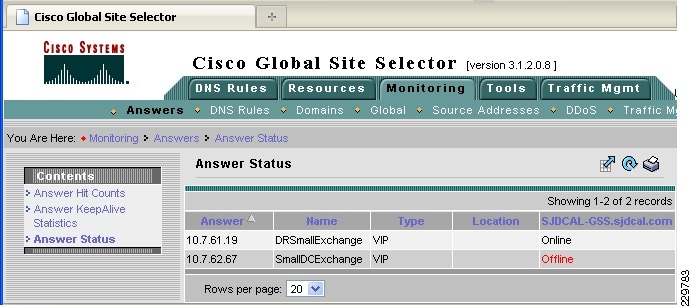

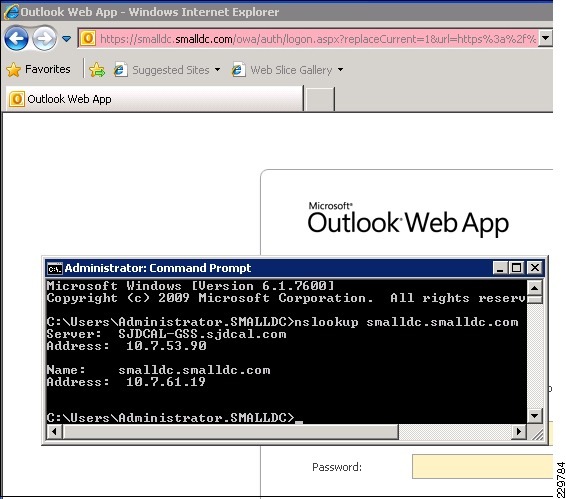

The following describes how the GSS combined with the ACE can intelligently route incoming Exchange client requests to the data center and Exchange server that is the most suitable based on availability and server load:

1. A user wants to check their corporate e-mail from their home office and connects to their corporate campus network through their VPN. When their Outlook client opens, it needs to access a corporate Exchange Client Access Server. The resolver (client) sends a query for the DNS name of the CAS server VIP (cas.mycompany.com) to the local client DNS server. This could be a server running Berkeley Internet Name Domain (BIND) services or a DNS server appliance from one of many available vendors.

2. After being referred by a root name server or an intermediate name server, the local client DNS server is eventually directed to query the authoritative name server for the zone. When the authoritative name server is queried, it replies with the IP address of the Cisco GSS. The local client DNS server then sends its final query to the Cisco GSS, which is authoritative for the zone and can provide an "A" record (i.e., IP address) response to the client query for the CAS server VIP. The Cisco GSS applies some intelligence to determine which IP address to respond with, i.e., which CAS server (in which data center) is the best one to return to the requesting client.

3. To determine the best server, the Cisco GSS applies the following types of intelligence, which are not supported by generic DNS servers. The Cisco GSS can:

  – Apply any of its ten global load balancing algorithms to direct users to the closest, least-loaded, or otherwise selected "best server". Cisco GSS will not send an IP address to the querying name server if the device is overloaded.

  – Automatically route users to an alternative data center if the primary data center/device becomes unavailable; Cisco GSS will not send an IP address to the querying name server if the device (Website, application server, etc.) is unavailable.

  – Determine the health of servers directly through different probes (e.g., ICMP, TCP, HTTP) to determine which servers are available and which are offline.

  – Determine the health of servers indirectly if those servers are sitting behind an ACE load balancer. The GSS can get server health information from the ACE through its KAL-AP interface. It can then reply to the query with the Virtual IP address of an ACE, thus taking advantage of local load balancing services.

4. Once the GSS has made the determination and returned its best IP address, the client can connect either to the ACE Virtual IP, to be load balanced to an available CAS server, or directly to a CAS server.
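The selection logic in step 3 can be illustrated with a minimal Python sketch. It assumes a least-loaded policy (only one of the possible balance methods), a hypothetical 0-255 load figure with a configurable overload threshold, and illustrative names such as `Vip` and `select_answer`. The addresses come from the 192.0.2.0/24 documentation range, and nothing here reflects the actual GSS implementation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Vip:
    address: str   # virtual IP that would be returned in the DNS "A" record
    site: str      # data center hosting the VIP
    healthy: bool  # outcome of a keepalive (for example KAL-AP, ICMP, TCP, HTTP)
    load: int      # hypothetical load figure, lower is better


def select_answer(vips: List[Vip], max_load: int = 200) -> Optional[str]:
    """Return the address of the least-loaded healthy VIP, or None.

    None models the behavior described above: no address is returned for
    devices that are unavailable or overloaded.
    """
    candidates = [v for v in vips if v.healthy and v.load <= max_load]
    if not candidates:
        return None
    return min(candidates, key=lambda v: v.load).address


if __name__ == "__main__":
    answers = [
        Vip("192.0.2.10", "large", True, 120),
        Vip("192.0.2.20", "disaster-recovery", True, 40),
        Vip("192.0.2.30", "small", False, 5),
    ]
    print(select_answer(answers))
```

The unhealthy VIP is excluded even though it reports the lowest load, which mirrors the rule that health is checked before any balancing decision.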

Table 3 shows the different protocols and ports used by the GSS appliance.

Table 3 GSS Service Ports

20-23 | TCP | FTP, SSH, and Telnet server services on the GSS

20-23 | Any | TCP | Return traffic of FTP and Telnet GSS CLI commands

Any | 53 | UDP, TCP | GSS DNS server traffic

53 | UDP | GSS software reverse lookup and "dnslookup" queries

123 | 123 | UDP | Network Time Protocol (NTP) updates

161 | UDP | Simple Network Management Protocol (SNMP) traffic

Any | 443 | TCP | Primary GSSM GUI

1304³ | 1304 | UDP | CRA keepalives

Any | 2000 | UDP | Inter-GSS periodic status reporting

Any | 2001-2005 | TCP | Inter-GSS communication

2001-2005 | Any | TCP | Inter-GSS communication

Any | 3002-3008 | TCP | Inter-GSS communication

3002-3008 | Any | TCP | Inter-GSS communication

3340 | Any | TCP | Sticky and Config Agent communication

3341 | Any | TCP | Sticky communication source

3342 | Any | TCP | Sticky and DNS processes communication

5002² | Any | UDP | KAL-AP keepalives

1974³ | 1974 | UDP | DRP protocol traffic

Any | 5001 | TCP | Global sticky mesh protocol traffic

5001 | Any | TCP | Global sticky mesh protocol traffic

1 http://www.cisco.com/en/US/docs/app_ntwk_services/data_center_app_services/gss4400series/v3.1.1/administration/guide/ACLs.html#wp999192

2 For communication between ACE and GSS.

3 For communication between GSS and other network device (neither ACE nor GSS).

Note: Line items in Table 3 not marked with footnotes 2 or 3 are for communications involving one or more GSS devices.

Exchange 2010 Servers

This solution involved implementing the following three Exchange 2010 server roles as VMware VSphere virtual machines and physical servers on the Cisco UCS:

• Hub Transport Server

• Client Access Server

• Mailbox Server

Since the Edge Transport role is an optional role for an Exchange deployment, it was not included in this solution. For guidance on deploying an Edge Transport server in a Data Center Business Advantage Architecture with ACE load balancing, see the following Cisco design guide: http://www.cisco.com/en/US/docs/solutions/Verticals/mstdcmsftex.html#wp623892.

Hub Transport Server Role

For those familiar with earlier versions of Exchange Server, the Hub Transport server role (introduced in Exchange Server 2007) replaces what was formerly known as the bridgehead server in Exchange Server 2003. The function of the Hub Transport server is to intelligently route messages within an Exchange Server 2010 environment. By default, SMTP transport is very inefficient at routing messages to multiple recipients because it takes a message and sends multiple copies throughout an organization. As an example, if a message with a 5-MB attachment is sent to 10 recipients in an SMTP network, typically at the sendmail routing server, the 10 recipients are identified from the directory, and 10 individual 5-MB messages are transmitted from the sendmail server to the mail recipients, even if all of the recipients' mailboxes reside on a single server.

The Hub Transport server takes a message destined for multiple recipients, identifies the most efficient route, and keeps the message intact until it reaches the most appropriate endpoint. So, if all of the recipients are on a single server in a remote location, only one copy of the 5-MB message is transmitted to the remote server. At that server, the message is then split, with a copy dropped into each recipient's mailbox.
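The arithmetic behind this example can be made explicit with a small Python sketch. The function name `megabytes_transmitted` and the server labels are hypothetical, and the model only counts message copies; it ignores protocol overhead and any local delivery cost.

```python
from typing import Sequence


def megabytes_transmitted(message_mb: float,
                          recipient_servers: Sequence[str],
                          one_copy_per_server: bool) -> float:
    """Estimate the data sent for one message with many recipients.

    recipient_servers lists the destination server of each recipient.
    one_copy_per_server=False models one copy per recipient (plain SMTP
    fan-out); True models one copy per destination server, which is then
    split at that server.
    """
    if one_copy_per_server:
        return message_mb * len(set(recipient_servers))
    return message_mb * len(recipient_servers)


if __name__ == "__main__":
    # 10 recipients whose mailboxes all reside on the same remote server.
    recipients = ["remote-mbx"] * 10
    print(megabytes_transmitted(5, recipients, one_copy_per_server=False))
    print(megabytes_transmitted(5, recipients, one_copy_per_server=True))
```

For the 5-MB message and 10 recipients used in the text, the per-recipient model sends 50 MB while the per-server model sends 5 MB.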

The Hub Transport server in Exchange Server 2010 does more than just intelligent bridgehead routing, though; it also acts as the policy compliance management server. Policies can be configured in Exchange Server 2010 so that after a message is filtered for spam and viruses, it goes to the policy server, which assesses whether the message falls under any regulated message policy and takes the appropriate action. The same is true for outbound messages: the messages go to the policy server, the content is analyzed, and if a message meets specific policy criteria, it can be routed unchanged, held, or modified based on the policy. As an example, an organization might want any communication that references a specific product code name, or that contains what looks like private health information (such as a Social Security number, date of birth, or health records of an individual), to be held or to have encryption enforced before it continues its route.

Client Access Server Role

The Client Access Server role in Exchange Server 2010 (as was also the case in Exchange Server 2007) performs many of the tasks that were formerly performed by the Exchange Server 2003 front-end server, such as providing a connecting point for client systems. A client system can be an Office Outlook client, a Windows Mobile handheld device, a connecting point for OWA, or a remote laptop user using Outlook Anywhere to perform an encrypted synchronization of their mailbox content.

Unlike a front-end server in Exchange Server 2003 that effectively just passed user communications on to the back-end Mailbox server, the CAS does intelligent assessment of where a user's mailbox resides and then provides the appropriate access and connectivity. This is because Exchange Server 2010 now has replicated mailbox technology where a user's mailbox can be active on a different server in the event of a primary mailbox server failure. By allowing the CAS server to redirect the user to the appropriate destination, there is more flexibility in providing redundancy and recoverability of mailbox access in the event of a system failure.

Mailbox Server Role

The Mailbox server role is merely a server that holds users' mailbox information. It is the server that has the Exchange Server EDB databases. However, rather than just being a database server, the Exchange Server 2010 Mailbox server role can be configured to perform several functions that keep the mailbox data online and replicated. For organizations that want to create high availability for Exchange Server data, the Mailbox server role systems would likely be clustered and not just a local cluster with a shared drive (and, thus, a single point of failure on the data), but rather one that uses the new Exchange Server 2010 Database Availability Groups. The Database Availability Group allows the Exchange Server to replicate data transactions between Mailbox servers within a single data center site or across several data center sites. In the event of a primary Mailbox server failure, the secondary data source can be activated on a redundant server with a second copy of the data intact. Downtime and loss of data can be drastically minimized, if not completely eliminated, with the ability to replicate mailbox data in real-time.

Microsoft eliminated single copy clusters, Local Continuous Replication, Clustered Continuous Replication (CCR), and Standby Continuous Replication in Exchange 2010 and substituted in their place Database Availability Group (DAG) replication technology. The DAG is effectively CCR, but instead of a single active and single passive copy of the database, DAG provides up to 16 copies of the database and provides a staging failover of data from primary to replica copies of the mail.

DAGs still use log shipping as the method of replication of information between servers. Log shipping means that the 1-MB log files that note the information written to an Exchange server are transferred to other servers and the logs are replayed on that server to build up the content of the replica system from data known to be accurate. If during a replication cycle a log file does not completely transfer to the remote system, individual log transactions are backed out of the replicated system and the information is re-sent. By contrast, with the bit-level transfers of data between source and destination used in Storage Area Networks (SANs) or most other Exchange Server database replication solutions, if a system fails, bits do not transfer and Exchange Server has no idea what the bits were, what to request for a resend of data, or how to notify an administrator what file or content the bits referenced. Microsoft's implementation of log shipping provides organizations with a clean method of knowing what was replicated and what was not. In addition, log shipping is done with small 1-MB log files to reduce the bandwidth consumption of Exchange Server 2010 replication traffic.

Other uses of the DAG include staging the replication of data so that a third or fourth copy of the replica resides "offline" in a remote data center; instead of having the data center actively be a failover destination, the remote location can be used simply as the point where data is backed up to tape or as a location where data can be recovered if a catastrophic enterprise environment failure occurs.
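The log shipping behavior described for DAGs can be sketched in Python as a deliberately simplified model. It assumes each log file carries a sequence number and a fixed 1-MB size, and that an incomplete or out-of-order file stops replay so that the file can be requested again. The names `replay_logs` and `LOG_SIZE` are hypothetical and this is not how Exchange is implemented internally.

```python
from typing import List, Optional, Tuple

LOG_SIZE = 1024 * 1024  # 1-MB log files, as described above


def replay_logs(shipped: List[Tuple[int, int]]) -> Tuple[int, Optional[int]]:
    """Replay shipped log files in order on a replica.

    shipped: (sequence_number, bytes_received) pairs in arrival order.
    Returns (last_replayed_sequence, sequence_to_resend or None).
    """
    last_replayed = 0
    for sequence, received in shipped:
        complete = received == LOG_SIZE
        in_order = sequence == last_replayed + 1
        if not (complete and in_order):
            # Nothing from a partial or missing log is applied; the replica
            # knows exactly which log file to ask for again.
            return last_replayed, last_replayed + 1
        last_replayed = sequence
    return last_replayed, None


if __name__ == "__main__":
    # The third log file arrived truncated, so it must be re-sent.
    print(replay_logs([(1, LOG_SIZE), (2, LOG_SIZE), (3, 500)]))
```

The point of the sketch is the contrast drawn in the text: because replication works on whole, numbered log files, the replica can always name what is missing.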

A major architecture change with Exchange Server 2010 is how Outlook clients connect to Exchange Server. In previous versions of Exchange Server, even Exchange Server 2007, RPC/HTTP and RPC/HTTPS clients would initially connect to the Exchange Server front end or Client Access Server to reach the Mailbox servers, while internal MAPI clients would connect directly to their Mailbox Server. With Exchange Server 2010, all communications (initial connection and ongoing MAPI communications) go through the Client Access Server, regardless of whether the user is internal or external. Therefore, architecturally, the Client Access Server in Exchange Server 2010 needs to be close to the Mailbox server, and a high-speed connection should exist between the servers for optimum performance.

Load Balancing for an Exchange 2010 Environment

Microsoft Exchange 2010 introduces architectural changes that increase the importance of load balancing. Although the Exchange 2010 architecture uses some software-based load balancing technologies by default, a hardware-based load balancer, such as the Cisco Application Control Engine (ACE), can be beneficial.

Two changes in Exchange 2010 that affect the Client Access Server (CAS) are the RPC Client Access Service and the Exchange Address Book Service. In previous versions, Outlook clients connected directly to the Mailbox server; in Exchange 2010, all client connections, internal and external, are terminated at the CAS or at a pool of CAS servers.

Windows Network Load Balancing (WNLB) is commonly used to load balance the different roles of an Exchange 2010 environment. A hardware load balancer such as ACE can be used in its place to overcome WNLB limitations in scalability and functionality. Microsoft suggests that a hardware load balancer is needed when a deployment has more than eight CAS servers; a hardware load balancer can also be used in some deployments with fewer than eight. One specific limitation of WNLB is that it supports session persistence based only on the client's IP address.

ACE can be used to offload CPU-intensive tasks such as SSL encryption and decryption processing and TCP session management, which greatly improves server efficiency. More complex deployments also dictate the use of a hardware load balancer, for example when the CAS server role is co-located on servers running the Mailbox server role in a Database Availability Group (DAG) configuration. This requirement is due to an incompatibility between Windows Failover Clustering and WNLB. For more information, see Microsoft Support Knowledge Base article 235305.

This document and its technology recommendations are intended for a pure Exchange 2010 deployment, although some may also work in Exchange 2007 or mixed deployments. Such deployments are beyond the scope of this document because they were not tested and validated. For more information on load balancing an Exchange 2007 deployment with ACE, see: http://www.cisco.com/en/US/docs/solutions/Verticals/mstdcmsftex.html.

Exchange 2010 MAPI Client Load Balancing Requirements

As previously mentioned, Exchange 2010 changes how Exchange clients communicate compared with Exchange 2007. Outlook 2010 clients use MAPI over RPC to reach the Client Access Servers, so IP-based load balancing persistence should be used. With RPC, however, the TCP port numbers are derived dynamically when the service starts, and configuring a large range of dynamically created ports is undesirable. For this reason, a catch-all can be used at the load balancer for any TCP-based traffic destined for the server farm.

Microsoft does provide a workaround, static port mapping, which simplifies load balancing. In this design, however, Exchange 2010 client testing was limited to the catch-all approach.
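The combination of a catch-all and source-IP persistence can be illustrated with a minimal Python sketch. It models a sticky table keyed only on the client IP address, with round-robin assignment for new clients. The class name `SourceIpSticky`, the server labels, and the addresses are hypothetical; this is not ACE configuration and omits sticky timeouts and health checks.

```python
import itertools
from typing import Dict, Sequence


class SourceIpSticky:
    """Catch-all balancing with persistence on the client source IP."""

    def __init__(self, servers: Sequence[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._round_robin = itertools.cycle(servers)
        self._sticky_table: Dict[str, str] = {}

    def route(self, client_ip: str, dest_port: int) -> str:
        # dest_port is deliberately ignored: every TCP port destined for the
        # farm is accepted, so dynamically assigned RPC ports need no
        # per-port configuration.
        if client_ip not in self._sticky_table:
            self._sticky_table[client_ip] = next(self._round_robin)
        return self._sticky_table[client_ip]


if __name__ == "__main__":
    lb = SourceIpSticky(["cas1", "cas2"])
    # Endpoint mapper (135) and a dynamic RPC port reach the same CAS.
    print(lb.route("10.0.0.5", 135), lb.route("10.0.0.5", 49152))
```

Because the sticky key ignores the port, the endpoint mapper request and the follow-up connection on a dynamic port always land on the same Client Access Server.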

Configuring Static Port Mapping For RPC-Based Services

If the ports for these services are statically mapped, then the traffic for these services is restricted to port 135 (used by the RPC portmapper) and the two specific ports that have been selected for these services.

The static port for the RPC Client Access Service is configured via the registry. The following registry key should be set on each Client Access Server to the value of the port that you wish to use for TCP connections for this service:

Key: HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\MSExchangeRPC\ParametersSystem

Value: TCP/IP Port

Type: DWORD
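As a hedged illustration, the registry setting above could be prepared as a .reg file and imported on each Client Access Server. The Python sketch below only generates the file text. The helper name `rpc_client_access_reg`, the example port 60000, and the accepted port range are assumptions made for this sketch, not values taken from this guide.

```python
def rpc_client_access_reg(port: int) -> str:
    """Build .reg file text that sets the static RPC Client Access port."""
    # Assumed sanity check for this sketch: stay out of the well-known range.
    if not 1024 <= port <= 65535:
        raise ValueError("choose a TCP port between 1024 and 65535")
    key = (r"HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services"
           r"\MSExchangeRPC\ParametersSystem")
    # DWORD values in .reg files are written as eight hexadecimal digits.
    return (
        "Windows Registry Editor Version 5.00\n"
        "\n"
        f"[{key}]\n"
        f'"TCP/IP Port"=dword:{port:08x}\n'
    )


if __name__ == "__main__":
    print(rpc_client_access_reg(60000))
```

The same port must be configured on every Client Access Server in the farm so that the load balancer can forward a single, known port.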

The static port for the two RPC endpoints maintained by the Exchange Address Book Service is set in the Microsoft.Exchange.AddressBook.Service.exe.config file, which can be found in the bin directory under the Exchange installation path on each Client Access Server. The "RpcTcpPort" value in the configuration file should be set to the port that you wish to use for TCP connections for this service. This port handles connections for both the Address Book Referral (RFR) interface and the Name Service Provider Interface (NSPI).
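A minimal Python sketch of updating that setting follows. It assumes the file uses the common .NET `appSettings` layout with an `add` element whose `key` is "RpcTcpPort"; the `SAMPLE` document, the helper name `set_rpc_tcp_port`, and the example port are illustrative assumptions and should be checked against the real file before use.

```python
import xml.etree.ElementTree as ET

# Assumed layout of the relevant part of the configuration file.
SAMPLE = """<configuration>
  <appSettings>
    <add key="RpcTcpPort" value="0" />
  </appSettings>
</configuration>"""


def set_rpc_tcp_port(config_xml: str, port: int) -> str:
    """Return the configuration text with RpcTcpPort set to the given port."""
    root = ET.fromstring(config_xml)
    for setting in root.iter("add"):
        if setting.get("key") == "RpcTcpPort":
            setting.set("value", str(port))
            return ET.tostring(root, encoding="unicode")
    raise KeyError("RpcTcpPort setting not found")


if __name__ == "__main__":
    print(set_rpc_tcp_port(SAMPLE, 60001))
```

Working on the text rather than on the file in place keeps the sketch side-effect free; back up the original file before writing any change.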

Note

This only affects "internal" connections via TCP and does not affect Outlook Anywhere connections that take advantage of RPC/HTTP tunneling. Outlook Anywhere connections to the RPC Client Access Service occur on port 6001 and this is not configurable.

Exchange 2010 Outlook Web Access Load Balancing Requirements

With regard to Outlook Web App (OWA), client session information is maintained on the CAS for the duration of a user's session. These Web sessions are terminated by either a timeout or a logout. Access permissions for sessions may be affected by client session information, depending on the authentication used. If the requests in an OWA client session are load balanced across multiple CAS servers, requests from the same session can land on different servers. This makes the application unusable because of frequent authentication requests.

IP-based session persistence is a reliable and simple method of persistence. However, it should not be used if the source of the session is masked at any point in the connection by something such as network address translation (NAT). Cookie-based persistence is the optimum choice for OWA sessions. It can be achieved either by having the load balancer insert a new cookie in the HTTP stream or by using the existing cookie in the HTTP stream that comes from the CAS server.

If forms-based authentication is being used, there is a timing concern that has to be addressed. SSL ID-based persistence as a fall back can be used to address this timing constraint.

The following scenario depicts the sequence of events that requires SSL ID-based persistence as a fallback:

1. Client sends credentials via an HTTP POST action to /owa/auth.owa.

2. Server responds to the authentication request from the client, redirects to /owa/, and sets two cookies, "cadata" and "sessionid".

3. Client follows the redirect and passes up the two new cookies.

4. Server sets the "UserContext" cookie.

It is critical that the server that issued the two cookies in step 2, "cadata" and "sessionid", is the same server that is accessed in step 3. By using SSL ID-based persistence for this part of the transaction, you can ensure that the requests are sent to the same server in both steps.
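The interaction between cookie persistence and the SSL ID fallback can be sketched with a toy load balancer in Python. This is an illustration under stated assumptions, not the ACE implementation: the class `OwaStickyBalancer`, its method names, the server names, and the cookie and SSL ID values are all hypothetical.

```python
from typing import Dict, List, Optional


class OwaStickyBalancer:
    """Toy load balancer: cookie persistence with SSL session ID as a fallback."""

    def __init__(self, servers: List[str]) -> None:
        self.servers = servers
        self.by_cookie: Dict[str, str] = {}
        self.by_ssl_id: Dict[str, str] = {}
        self._next = 0

    def _round_robin(self) -> str:
        server = self.servers[self._next % len(self.servers)]
        self._next += 1
        return server

    def pick(self, ssl_id: str, cookie: Optional[str] = None) -> str:
        if cookie is not None and cookie in self.by_cookie:
            server = self.by_cookie[cookie]      # known cookie wins
        elif ssl_id in self.by_ssl_id:
            server = self.by_ssl_id[ssl_id]      # fallback: same SSL session
        else:
            server = self._round_robin()         # brand-new session
        # Remember both keys so that later requests stay on this server.
        self.by_ssl_id[ssl_id] = server
        if cookie is not None:
            self.by_cookie[cookie] = server
        return server


lb = OwaStickyBalancer(["cas1", "cas2"])
first = lb.pick(ssl_id="ssl-A")                          # step 1: POST, no cookie yet
other = lb.pick(ssl_id="ssl-B")                          # a different client
follow = lb.pick(ssl_id="ssl-A", cookie="sessionid=42")  # step 3: cookie not yet learned
later = lb.pick(ssl_id="ssl-C", cookie="sessionid=42")   # new SSL ID, known cookie
print(first, other, follow, later)
```

In this sketch the request in step 3 carries a cookie that the load balancer has not yet learned, so only the SSL ID entry keeps it on the server that issued the cookies.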

It is also important to understand the browser process model in relation to OWA session workloads if SSL ID-based persistence is configured. With Internet Explorer 8 as an OWA client, some operations may open a new browser window or tab, such as opening a new message, browsing address lists, or creating a new message. When a new window or tab is launched, a new SSL ID is generated, and a new logon screen may appear because the session can be sent to a different CAS server that is unaware of the previous session. When client certificates are used for authentication, IE8 does not spawn new processes, so client traffic remains on the same CAS server.

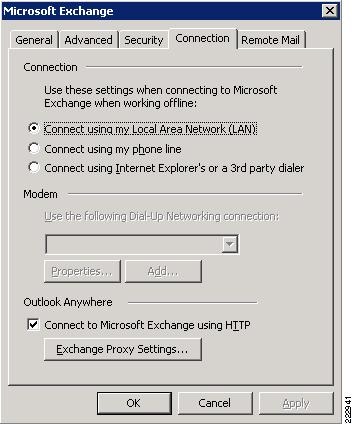

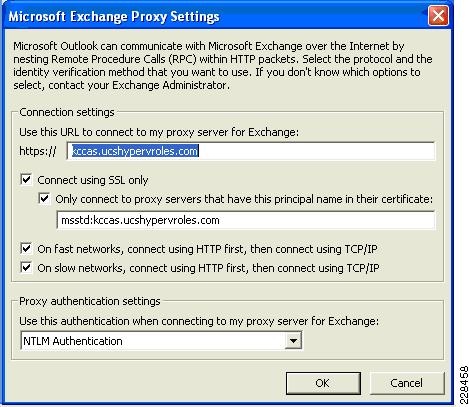

Outlook Anywhere Load Balancing Requirements

Outlook Anywhere clients use an RPC Proxy component to proxy RPC calls to the RPC Client Access Service and the Exchange Address Book Service. If the real client IP address is available to the CAS, IP-based persistence can be used with a load balancer for these connections. However, with remote clients the client IP address is commonly translated by NAT at one or more points in the network connection, so a more sophisticated persistence method is needed.

If basic authentication is being used, persistence can be based on the Authorization HTTP header. If your deployment is a pure Outlook 2010 environment using the Outlook 2010 client, you can also use a cookie with the value set to "OutlookSession" for persistence.
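The choice of persistence key described above can be sketched in Python as follows. The function names, the order of preference between the cookie and the Authorization header, and the hash-based server selection are assumptions made for illustration; a real load balancer would apply its own sticky configuration.

```python
import hashlib
from http.cookies import SimpleCookie
from typing import List, Mapping


def persistence_key(headers: Mapping[str, str], client_ip: str,
                    client_ip_is_real: bool = False) -> str:
    """Pick the value used to keep an Outlook Anywhere client on one CAS."""
    if client_ip_is_real:
        return "ip:" + client_ip                     # no NAT in the path
    cookies = SimpleCookie()
    cookies.load(headers.get("Cookie", ""))
    if "OutlookSession" in cookies:                  # Outlook 2010 clients
        return "cookie:" + cookies["OutlookSession"].value
    auth = headers.get("Authorization")
    if auth:                                         # basic authentication
        return "auth:" + hashlib.sha256(auth.encode("utf-8")).hexdigest()
    return "ip:" + client_ip                         # last resort


def choose_cas(key: str, servers: List[str]) -> str:
    """Map a persistence key to a server deterministically (illustrative)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:4], "big") % len(servers)]


basic = {"Authorization": "Basic dXNlcjpwYXNz"}
key_a = persistence_key(basic, "203.0.113.5")    # same user behind one NAT address
key_b = persistence_key(basic, "198.51.100.9")   # same user behind another
print(key_a == key_b, choose_cas(key_a, ["cas1", "cas2"]))
```

Because the key is derived from the Authorization header rather than the source address, the same user maps to the same server even when NAT changes the visible client IP address.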

Cisco Application Control Engine Overview

The Cisco ACE provides a highly available and scalable data center solution from which the Microsoft Exchange Server 2010 application environment can benefit. Currently, the Cisco ACE is available as an appliance or as an integrated service module in the Cisco Catalyst 6500 platform. The Cisco ACE features and benefits include:

• Device partitioning (up to 250 virtual ACE contexts)

• Load balancing services (up to 16 Gbps of throughput capacity and 325,000 Layer 4 connections/second)

• Security services via deep packet inspection, access control lists (ACLs), unicast reverse path forwarding (uRPF), Network Address Translation (NAT)/Port Address Translation (PAT) with fix-ups, syslog, etc.

• Centralized role-based management via Application Network Manager (ANM) GUI or CLI

• SSL-offload

• HTTPS (SSL) server redirection rewrite

• Support for redundant configurations (intra-chassis, inter-chassis, and inter-context)

ACE can be configured in the following modes of operation:

• Transparent

• Routed

• One-armed

The following sections describe some of the Cisco ACE features and functionalities used in the Microsoft Exchange Server 2010 application environment.

ACE One-Armed Mode Design

One-armed configurations are used when the device that makes the connection to the virtual IP address (VIP) enters the ACE on the same VLAN on which the servers reside. Return traffic from the servers must traverse back through the ACE before reaching the client. This is done with either source NAT or policy-based routing. Because the network design for this document has the ACE VIP on the same VLAN as the real servers being load balanced, one-armed mode was used.

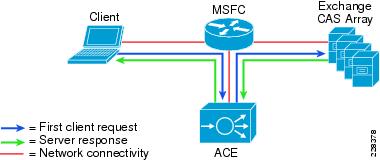

In the one-armed mode of operation, clients send application requests through the multilayer switch feature card (MSFC), which routes them to a virtual IP address (VIP) within the Application Control Engine (ACE). The ACE is configured with a single VLAN to handle client and server communication. Client requests arrive at the VIP, and the ACE picks the appropriate server. The ACE then uses destination Network Address Translation (NAT) to rewrite the destination IP address to that of the rserver and source NAT to rewrite the source IP address with one from the nat-pool. Once the client request is fully NATed, it is sent to the server over the same VLAN on which it was originally received. The server responds to the Cisco ACE based on the source IP address of the request. The Cisco ACE receives the response, changes the source IP address to the VIP, and routes the traffic to the MSFC.

The MSFC then forwards the response to the client. Figure 3 displays these transactions at a high level.

Figure 3 One-Armed Mode Design Transactions
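The address rewrites in the one-armed transaction can be traced with a short Python model. It is a sketch only: the IP addresses, the port-based flow table, and the class and method names are hypothetical and do not describe how the ACE tracks connections internally.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

VIP = "10.1.1.100"          # hypothetical virtual IP address
NAT_POOL_IP = "10.1.1.200"  # hypothetical address from the nat-pool
RSERVER_IP = "10.1.1.11"    # hypothetical real server (rserver)


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


class OneArmedAce:
    """Toy model of destination NAT plus source NAT on a single VLAN."""

    def __init__(self) -> None:
        self._next_port = 1024
        self._flows: Dict[int, Tuple[str, int]] = {}

    def client_to_server(self, pkt: Packet) -> Packet:
        if pkt.dst_ip != VIP:
            raise ValueError("request is not addressed to the VIP")
        nat_port = self._next_port
        self._next_port += 1
        self._flows[nat_port] = (pkt.src_ip, pkt.src_port)  # remember the client
        # Destination becomes the rserver, source becomes the nat-pool address.
        return Packet(NAT_POOL_IP, nat_port, RSERVER_IP, pkt.dst_port)

    def server_to_client(self, pkt: Packet) -> Packet:
        client_ip, client_port = self._flows[pkt.dst_port]
        # Source becomes the VIP again; destination is the original client.
        return Packet(VIP, pkt.src_port, client_ip, client_port)


ace = OneArmedAce()
request = Packet("192.0.2.10", 50000, VIP, 443)
to_server = ace.client_to_server(request)
reply = Packet(to_server.dst_ip, to_server.dst_port,
               to_server.src_ip, to_server.src_port)
to_client = ace.server_to_client(reply)
print(to_server)
print(to_client)
```

Because the server sees the nat-pool address as the source, its reply returns to the ACE on the shared VLAN instead of bypassing it on the way to the client.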

ACE Virtualization

Virtualization is a prevalent trend in the enterprise today. From virtual application containers to virtual machines, the ability to optimize the use of physical resources and provide logical isolation is gaining momentum. The advancement of virtualization technologies includes the enterprise network and the intelligent services it offers.

The Cisco ACE supports device partitioning where a single physical device may provide multiple logical devices. This virtualization functionality allows system administrators to assign a single virtual ACE device to a business unit or application to achieve application performance goals or service level agreements (SLAs). The flexibility of virtualization allows the system administrator to deploy network-based services according to the individual business requirements of the customer and technical requirements of the application. Service isolation is achieved without purchasing another dedicated appliance that consumes more space and power in the data center.

SSL Offload

The Cisco ACE is capable of providing secure transport services to applications residing in the data center. The Cisco ACE implements its own SSL stack and does not rely on any version of OpenSSL. The Cisco ACE supports TLS 1.0, SSLv3, and SSLv2/3 hybrid protocols. There are three SSL relevant deployment models available to each ACE virtual context:

• SSL termination: Allows for the secure transport of data between the client and the ACE virtual context. The Cisco ACE operates as an SSL proxy, negotiating and terminating secure connections with a client while using a non-secure or clear text connection to an application server in the data center. The advantage of this design is that it offloads the CPU and memory demands of SSL processing from the application server, while continuing to provide intelligent load balancing.

• SSL initiation: Provides secure transport between the Cisco ACE and the application server. The client initiates an unsecure HTTP connection with the ACE virtual context, and the Cisco ACE, acting as a client proxy, negotiates an SSL session to an SSL server.

• SSL end-to-end: Provides a secure transport path for all communications between a client and the SSL application server residing in the data center. The Cisco ACE uses SSL termination and SSL initiation techniques to support the encryption of data between client and server. Two completely separate SSL sessions are negotiated, one between the ACE context and the client and the other between the ACE context and the application server. In addition to the intelligent load balancing services the Cisco ACE provides in an end-to-end SSL model, the system administrator may choose to alter the strength of data encryption on either the front-end client connection or the back-end application server connection to reduce the SSL resource requirements on either entity.

SSL URL Rewrite Offload

Because the server is unaware of the encrypted traffic flowing between the client and the ACE, the server may return to the client a URL in the Location header of HTTP redirect responses (301: Moved Permanently or 302: Found) in the form http://www.cisco.com instead of https://www.cisco.com. In this case, the client makes a request to the unencrypted insecure URL, even though the original request was for a secure URL. Because the client connection changes to HTTP, the requested data may not be available from the server using a clear text connection.

To solve this problem, the ACE provides SSL URL rewrite, which changes the redirect URL from http:// to https:// in the Location response header from the server before sending the response to the client. By using URL rewrite, you can avoid insecure HTTP redirects. All client connections to the Web server will be SSL, ensuring the secure delivery of HTTPS content back to the client. The ACE uses regular expression matching to determine whether the URL needs rewriting. If a Location response header matches the specified regular expression, the ACE rewrites the URL. In addition, the ACE provides commands to add or change the SSL and the clear port numbers.
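The rewrite logic can be illustrated with a small Python function that applies a regular expression to the Location header. This is a sketch of the technique, not ACE configuration syntax; the function name, its parameters, and the default port values are assumptions for illustration.

```python
import re


def rewrite_location(status: int, location: str, host_pattern: str,
                     ssl_port: int = 443, clear_port: int = 80) -> str:
    """Rewrite http:// redirects to https:// when the host matches the pattern."""
    if status not in (301, 302):
        return location                      # only redirects are rewritten
    match = re.fullmatch(r"http://([^/:]+)(?::(\d+))?(/.*)?", location)
    if match is None:
        return location                      # already https or not absolute
    host, port, path = match.group(1), match.group(2), match.group(3) or ""
    if re.fullmatch(host_pattern, host) is None:
        return location                      # host is not covered by the rule
    if int(port or 80) != clear_port:
        return location                      # not the configured clear port
    suffix = "" if ssl_port == 443 else f":{ssl_port}"
    return f"https://{host}{suffix}{path}"


print(rewrite_location(302, "http://www.cisco.com/owa/", r"www\.cisco\.com"))
print(rewrite_location(302, "http://other.example.com/", r"www\.cisco\.com"))
```

Only redirect responses whose host matches the expression are changed, so redirects to unrelated sites pass through untouched.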

Session Persistence

Session persistence is the ability to forward client requests to the same server for the duration of a session. Microsoft supports session persistence for their Microsoft Exchange environment via the following methods:

• Source IP sticky

• Cookie sticky

The Cisco ACE supports each of these methods, but given the presence of proxy services in the enterprise, Cisco recommends using the cookie sticky method to guarantee load distribution across the server farm wherever possible as session-based cookies present unique values to use for load balancing.

In addition, the Cisco ACE supports the replication of sticky information between devices and their respective virtual contexts. This provides a highly available solution that maintains the integrity of each client's session.

Health Monitoring

The Cisco ACE device is capable of tracking the state of a server and determining its eligibility for processing connections in the server farm. The Cisco ACE uses a simple pass/fail verdict, but has many recovery and failure configuration options, including probe intervals, timeouts, and expected results. Each of these features contributes to an intelligent load-balancing decision by the ACE context.

The predefined probe types currently available on the ACE module are:

• ICMP

• TCP

• UDP

• Echo (TCP/UDP)

• Finger

• HTTP

• HTTPS (SSL Probes)

• FTP

• Telnet

• DNS

• SMTP

• IMAP

• POP

• RADIUS

• Scripted (TCL support)

Note that the potential probe possibilities available via scripting make the Cisco ACE an even more flexible and powerful application-aware device. In terms of scalability, the Cisco ACE module can support 1000 open probe sockets simultaneously.
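The pass/fail verdict combined with failure and recovery settings can be modeled as a small state machine. The Python sketch below is illustrative only; the class `ProbeState`, the threshold names, and their default values are hypothetical and are not ACE parameters.

```python
from dataclasses import dataclass


@dataclass
class ProbeState:
    """Tracks server eligibility from a stream of pass/fail probe results."""
    fail_threshold: int = 3   # hypothetical: consecutive failures before "down"
    pass_threshold: int = 2   # hypothetical: consecutive passes before "up"
    healthy: bool = True
    _fails: int = 0
    _passes: int = 0

    def record(self, passed: bool) -> bool:
        if passed:
            self._fails = 0
            self._passes += 1
            if not self.healthy and self._passes >= self.pass_threshold:
                self.healthy = True    # server returns to the farm
        else:
            self._passes = 0
            self._fails += 1
            if self.healthy and self._fails >= self.fail_threshold:
                self.healthy = False   # server is taken out of rotation
        return self.healthy


def http_probe_passed(status_code: int, expected: int = 200) -> bool:
    """Compare a probe result with the expected result."""
    return status_code == expected


state = ProbeState()
history = [state.record(http_probe_passed(code))
           for code in (503, 503, 503, 200, 200)]
print(history)
```

Requiring several consecutive results before changing state keeps a single lost probe from removing a healthy server from the farm.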

Cisco Unified Computing System Hardware

The UCS components included in this solution:

• UCS 5108 blade server chassis

• UCS B200-M1 half-slot two socket blade servers with the processor types and memory sizes in the different data centers as shown in Table 4.

I/O Adapters

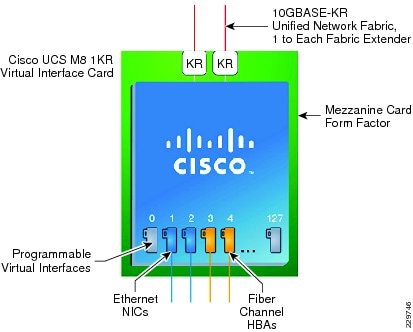

The blade server has various Converged Network Adapters (CNA) options. The following CNA option was used in this Cisco Validated Design:

• UCS M81KR Virtual Interface Card (VIC)

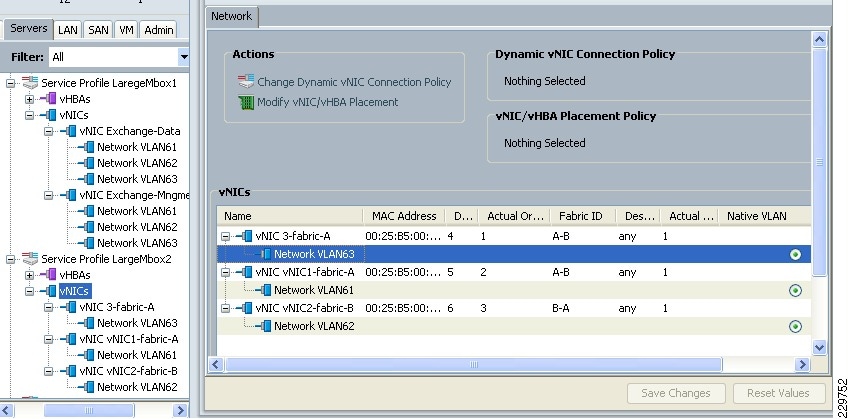

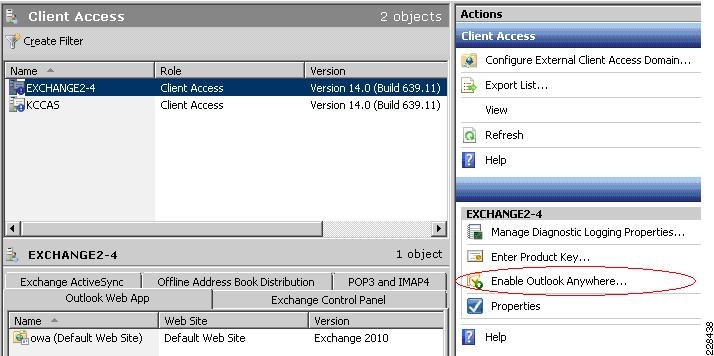

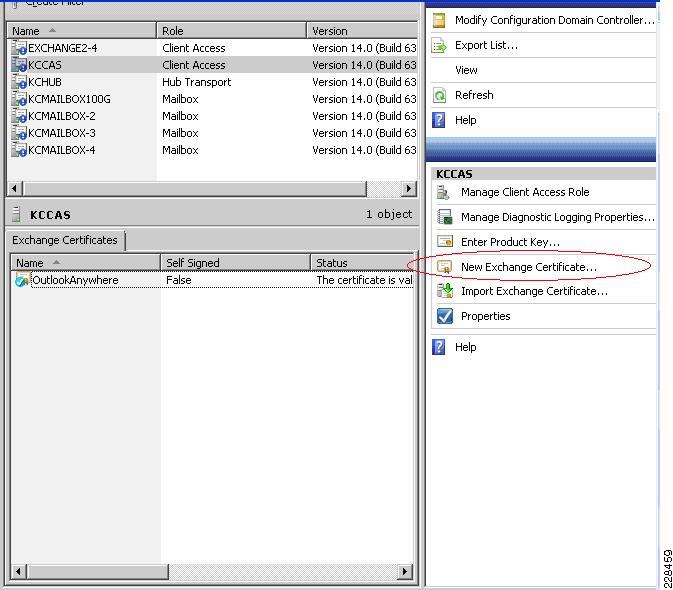

The UCS M81KR VIC allows multiple complete I/O configurations to be provisioned in virtualized or non-virtualized environments using just-in-time provisioning, providing tremendous system flexibility and allowing consolidation of multiple physical adapters. It delivers uncompromising virtualization support, including hardware-based implementation of Cisco VN-Link technology and pass-through switching. System security and manageability are improved by providing visibility and portability of network policies and security all the way to the virtual machine. Figure 4 shows the types of interfaces supported on the M81KR VIC.

Figure 4 FC and Ethernet Interfaces Supported on the M81KR VIC

The virtual interface card makes Cisco VN-Link connections to the parent fabric interconnects, which allows virtual links to connect virtual NICs in virtual machines to virtual interfaces in the interconnect. Virtual links then can be managed, network profiles applied, and interfaces dynamically reprovisioned as virtual machines move between servers in the system.

Cisco UCS 2100 Series Fabric Extenders

The Cisco UCS 2104XP Fabric Extender brings the I/O fabric into the blade server chassis and supports up to four 10-Gbps connections between blade servers and the parent fabric interconnect, simplifying diagnostics, cabling, and management. The fabric extender multiplexes and forwards all traffic using a cut-through architecture over one to four 10-Gbps unified fabric connections. All traffic is passed to the parent fabric interconnect, where network profiles are managed efficiently and effectively by the fabric interconnects. Each of up to two fabric extenders per blade server chassis has eight 10GBASE-KR connections to the blade chassis midplane, with one connection to each fabric extender from each of the chassis' eight half slots. This configuration gives each half-width blade server access to each of two 10-Gbps unified fabric connections for high throughput and redundancy.
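The bandwidth figures above can be worked through with a few lines of Python. The calculation assumes a fully populated chassis of eight half-width blades and treats every link as 10 Gbps, as stated in the text; the function names are illustrative.

```python
def fex_oversubscription(uplinks: int, blades: int = 8, link_gbps: int = 10) -> float:
    """Ratio of server-facing bandwidth to uplink bandwidth for one fabric extender."""
    if not 1 <= uplinks <= 4:
        raise ValueError("a fabric extender supports one to four uplinks")
    server_facing_gbps = blades * link_gbps   # one 10GBASE-KR link per half slot
    uplink_gbps = uplinks * link_gbps
    return server_facing_gbps / uplink_gbps


def blade_bandwidth_gbps(fabric_extenders: int = 2, link_gbps: int = 10) -> int:
    """Bandwidth available to one half-width blade across all fabric extenders."""
    return fabric_extenders * link_gbps


for n in (1, 2, 4):
    print(n, "uplinks:", fex_oversubscription(n), "to 1")
print("per blade:", blade_bandwidth_gbps(), "Gbps")
```

With all four uplinks cabled, eight blades share 40 Gbps per fabric extender (2:1); with a single uplink the ratio rises to 8:1, which is why uplinks can be added as workload demand grows.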

The benefits of the fabric extender design include:

•

Scalability—With up to four 10-Gbps uplinks per fabric extender, network connectivity can be scaled to meet increased workload demands simply by configuring more uplinks to carry the additional traffic.

•

High availability—Chassis configured with two fabric extenders can provide a highly available network environment.

•

Reliability—The fabric extender manages traffic flow from network adapters through the fabric extender and onto the unified fabric. The fabric extender helps create a lossless fabric from the adapter to the fabric interconnect by dynamically throttling the flow of traffic from network adapters into the network.

•

Manageability—The fabric extender model extends the access layer without increasing complexity or points of management, freeing administrative staff to focus more on strategic than tactical issues. Because the fabric extender also manages blade chassis components and monitors environmental conditions, fewer points of management are needed and cost is reduced.

•

Virtualization optimization—The fabric extender supports Cisco VN-Link architecture. Its integration with VN-Link features in other Cisco UCS components, such as the fabric interconnect and network adapters, enables virtualization-related benefits including virtual machine-based policy enforcement, mobility of network properties, better visibility, and easier problem diagnosis in virtualized environments.

•

Investment protection—The modular nature of the fabric extender allows future development of equivalent modules with different bandwidth or connectivity characteristics, protecting investments in blade server chassis.

•

Cost savings—The fabric extender technology allows the cost of the unified network to be accrued incrementally, helping reduce costs in times of limited budgets. The alternative is to implement and fund a large, fixed-configuration fabric infrastructure long before the capacity is required.

UCS 6100 XP Series Fabric Interconnect

The UCS 6100 XP fabric interconnect is based on the Nexus 5000 product line. However, unlike the Nexus 5000 products, it provides additional functionality of managing the UCS chassis with the embedded UCS manager. A single 6140 XP switch can support multiple UCS chassis with either half-slot or full-width blades.

Some of the salient features provided by the switch are:

• 10 Gigabit Ethernet, FCoE capable, SFP+ ports

• Fixed port versions with expansion slots for additional Fibre Channel and 10 Gigabit Ethernet connectivity

• Up to 1.04 Tb/s of throughput

• Hot pluggable fan and power supplies, with front to back cooling system

• Hardware based support for Cisco VN-Link technology

• Can be configured in a cluster for redundancy and failover capabilities

In this solution, two UCS 6120 Fabric Interconnects were configured in a cluster pair for redundancy and in End Host Mode. Because the Nexus 1000V switch is used in this solution, fabric failover did not have to be enabled in the Service Profiles of the ESX hosts. In a configuration where there is a bridge (Nexus 1000V or VMware VSwitch) downstream from the UCS 6120 fabric interconnects, MAC address learning on the switches takes care of failover without the fabric failover feature.

UCS Service Profiles

Programmatically Deploying Server Resources

Cisco UCS Manager provides centralized management capabilities, creates a unified management domain, and serves as the central nervous system of the Cisco UCS. Cisco UCS Manager is embedded device management software that manages the system from end-to-end as a single logical entity through an intuitive GUI, CLI, or XML API. Cisco UCS Manager implements role- and policy-based management using service profiles and templates. This construct improves IT productivity and business agility. Now infrastructure can be provisioned in minutes instead of days, shifting IT's focus from maintenance to strategic initiatives.

Dynamic Provisioning with Service Profiles

Cisco UCS resources are abstract in the sense that their identity, I/O configuration, MAC addresses and WWNs, firmware versions, BIOS boot order, and network attributes (including QoS settings, ACLs, pin groups, and threshold policies) all are programmable using a just-in-time deployment model. The manager stores this identity, connectivity, and configuration information in service profiles that reside on the Cisco UCS 6100 Series Fabric Interconnect. A service profile can be applied to any blade server to provision it with the characteristics required to support a specific software stack. A service profile allows server and network definitions to move within the management domain, enabling flexibility in the use of system resources. Service profile templates allow different classes of resources to be defined and applied to a number of resources, each with its own unique identities assigned from predetermined pools.
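The relationship between templates, identity pools, and blades can be sketched as a simple Python data model. This is a conceptual illustration and not the UCS Manager XML API: the class names, field names, pool values, and blade labels are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


class IdentityPool:
    """Hands out unique identities (for example MAC addresses or WWNs)."""

    def __init__(self, values: List[str]) -> None:
        self._free = list(values)

    def allocate(self) -> str:
        if not self._free:
            raise RuntimeError("identity pool exhausted")
        return self._free.pop(0)


@dataclass
class ServiceProfile:
    name: str
    mac: str
    wwn: str
    boot_order: List[str]
    blade: Optional[str] = None   # physical blade currently associated


def from_template(name: str, mac_pool: IdentityPool, wwn_pool: IdentityPool,
                  boot_order: List[str]) -> ServiceProfile:
    """Create a profile whose identities come from predetermined pools."""
    return ServiceProfile(name, mac_pool.allocate(), wwn_pool.allocate(),
                          list(boot_order))


# Hypothetical pool contents, for illustration only.
macs = IdentityPool(["00:25:B5:00:00:01", "00:25:B5:00:00:02"])
wwns = IdentityPool(["20:00:00:25:B5:00:00:01", "20:00:00:25:B5:00:00:02"])

mbx1 = from_template("exch-mbx-1", macs, wwns, ["san", "lan"])
mbx2 = from_template("exch-mbx-2", macs, wwns, ["san", "lan"])

mbx1.blade = "chassis-1/blade-1"   # initial association
mbx1.blade = "chassis-1/blade-5"   # re-associate; identity moves with the profile
print(mbx1)
```

The identity stays with the profile rather than with the hardware, so the replacement blade presents the same MAC address and WWN to the network and to the SAN.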

VMware VSphere 4.0

In 2009, VMware introduced its next-generation virtualization solution, VMware VSphere 4, which builds upon ESX 3.5 and provides greater levels of scalability, security, and availability to virtualized environments. In addition to improvements in performance and utilization of CPU, memory, and I/O, VSphere 4 also offers users the option to assign up to eight virtual CPUs to a virtual machine, giving system administrators more flexibility in their virtual server farms as processor-intensive workloads continue to increase.

VSphere 4 also provides the VMware VCenter Server that allows system administrators to manage their ESX hosts and virtual machines on a centralized management platform. With the Cisco Nexus 1000V Distributed Virtual Switch integrated into the VCenter Server, deploying and administering virtual machines is similar to deploying and administering physical servers. Network administrators can continue to own the responsibility for configuring and monitoring network resources for virtualized servers as they did with physical servers. System administrators can continue to "plug in" their virtual machines to network ports that have Layer 2 configurations, port access and security policies, monitoring features, etc., that have been pre-defined by their network administrators, in the same way they would plug in their physical servers to a previously configured access switch. In this virtualized environment, the system administrator has the added benefit of the network port configuration and policies moving with the virtual machine if it is ever migrated to different server hardware.

Nexus 1000V Virtual Switch

Operating inside the VMware ESX hypervisor, the Cisco Nexus 1000V distributed switch supports Cisco VN-Link server virtualization technology that allows virtual machines to be connected upstream through definitions of policies. These security and network policies follow the virtual machine when it is moved through VMotion onto different ESX hosts.

Because the Nexus 1000V runs NX-OS, network administrators can configure this virtual switch with the command line interface they are already familiar with and pre-define the network policies and connectivity options to match the configuration and policies in the upstream access switches. Server administrators can then assign these network policies to their new virtual machines using the VCenter Server GUI. This makes it easier and faster to deploy new servers, because server administrators do not have to wait for the network administrators to put the network configuration in place before bringing their servers online. This also has a significant positive impact for customers who regularly use the DRS functionality found in vSphere to balance guest load on ESX hosts. The network profile can now follow the guest as it is moved by vMotion between hosts. Table 5 points out how operationally the Nexus 1000V saves time for server administrators deploying virtual machines and allows network administrators to continue doing what they are used to with the tools with which they are already familiar.

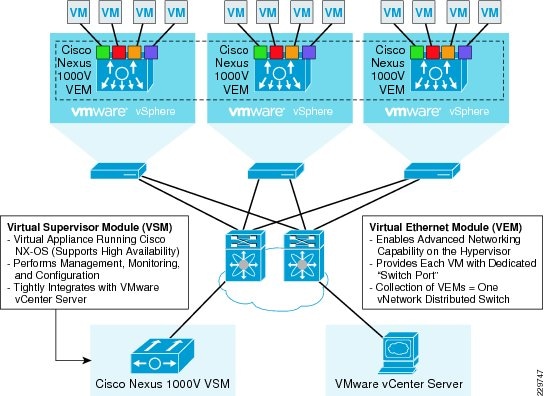

To better understand how the Nexus 1000V works, it is important to understand the software components of the Nexus 1000V: the Virtual Supervisor Module (VSM) and the Virtual Ethernet Module (VEM). It is also important to understand how the physical interfaces on a physical server map to virtual switches and virtual network interface cards (vNICs). The physical NICs on an ESX host are used as uplinks to the physical network infrastructure, e.g., the upstream access switch. Virtual network interfaces are the network interfaces on the virtual machines. Whatever guest operating system is installed on the virtual machine sees these vNICs as physical NICs, so the guest operating system can use standard network interface drivers (e.g., Intel ProSet). The Nexus 1000V, or the virtual switch, connects the virtual network interfaces to the physical NICs. Because the Nexus 1000V is a virtual distributed switch, it provides virtual switching across a cluster of ESX hosts through the installation of a VEM on each ESX host. The VSM is a virtual machine running NX-OS that is created upon the installation of the Nexus 1000V; it is the control plane of the virtual distributed switch.

Each time an ESX host is installed, it is also configured with a VEM that is a virtual line card providing the virtual network interfaces to the hosted VMs as well as the system interfaces on the ESX host for host management and for communicating with the VSM. Any time configuration changes are made through VCenter to the virtual networking, the VSM pushes these changes down to the affected VEMs on their ESX hosts. Since the switching functionality resides on the VEMs themselves, the Nexus 1000V distributed switch can continue providing switching functionality even if the VSM is offline. Figure 5 illustrates how each VM is connected to the overall Nexus 1000V distributed switch through VEMs and managed by the VSM through VCenter.

Figure 5 Network Connectivity of VMs Through Nexus 1000V to Data Center LAN

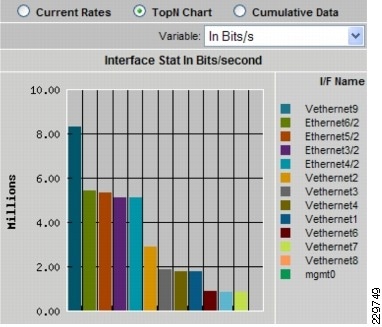

NAM Appliance for Virtual Network Monitoring

With NAM 4.2, the Cisco NAM Appliances extend into the virtual networking layer, simplifying manageability of Cisco Nexus 1000V switch environments by offering visibility into the VM network, including interactions across virtual machines and virtual interfaces. The Cisco NAM devices provide combined network and application performance analytics that are essential in addressing service delivery challenges in the virtualized data center. The Cisco NAM Appliances can:

• Monitor the network and VMs uninterrupted by VMotion operations

• Analyze network usage behavior by applications, hosts/VMs, conversations, and VLANs to identify bottlenecks that may impact performance and availability

• Allow administrators to preempt performance issues by providing proactive alerts that facilitate corrective action to minimize performance impact to end users

• Troubleshoot performance issues with extended visibility into VM-to-VM traffic, virtual interface statistics (see Figure 7), and transaction response times

• Assess impact on network behavior due to changes such as VM migration, dynamic resource allocation, and port profile updates

• Improve the efficiency of the virtual infrastructure and distributed application components with comprehensive traffic analysis

Figure 6 shows the NAM product family. The NAM 2200 appliance is used in this solution.

Figure 6 NAM Product Family

Integrated with the Cisco Network Analysis Module (NAM) appliance, ERSPAN can be configured on the Nexus 1000V to provide bandwidth statistics for each virtual machine. Reports on bandwidth utilization for various traffic types, such as Exchange user data, management, and DAG replication, can be generated to ensure that the overall network design can support the traffic levels generated by the application servers. For Exchange 2010 mailbox replication, for example, the bandwidth utilization by replication or database seeding traffic from any mailbox server configured in a DAG can be reported through the NAM appliance. Traffic generated by the VMotion of the Hub Transport/CAS or AD/DNS VMs can be monitored and reported real-time or historically.

Figure 7 Single-Screen View of Traffic Utilization from Both Physical and Virtual Interfaces

NetApp Storage Technologies and Benefits

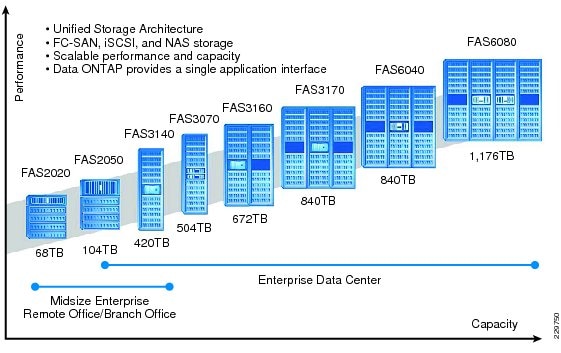

NetApp solutions begin with Data ONTAP® 7G, the fundamental software platform that runs on all NetApp storage systems. Data ONTAP 7G is a highly optimized, scalable operating system that supports mixed NAS and SAN environments and a range of protocols, including Fibre Channel, iSCSI, FCoE, NFS, and CIFS. It also includes a patented file system and storage virtualization capabilities. Leveraging the Data ONTAP 7G platform, the NetApp Unified Storage Architecture offers the flexibility to manage, support, and scale to business environments by using a single set of knowledge and tools. From the remote office to the data center, customers collect, distribute, and manage data from all locations and applications at the same time. This allows the investment to scale by standardizing processes, cutting management time, and increasing availability. Figure 8 shows the different NetApp Unified Storage Architecture platforms.

Figure 8 NetApp Unified Storage Architecture Platforms

The NetApp storage hardware platform used in this solution is the FAS3170. The FAS3100 series is an ideal platform for primary and secondary storage for a Microsoft Exchange deployment. An array of NetApp tools and enhancements are available to augment the storage platform. These tools assist in deployment, backup, recovery, replication, management, and data protection. This solution makes use of a subset of these tools and enhancements.

RAID-DP

RAID-DP® (http://www.netapp.com/us/products/platform-os/raid-dp.html) is NetApp's implementation of double-parity RAID 6, which is an extension of NetApp's original Data ONTAP WAFL® RAID 4 design. Unlike other RAID technologies, RAID-DP provides the ability to achieve a higher level of data protection without any performance effect while consuming a minimal amount of storage.

SATA

The performance acceleration provided by WAFL (http://blogs.netapp.com/extensible_netapp/2008/10/what-is-wafl-pa.html) and the double-disk protection provided by RAID-DP make economical and large-capacity SATA drives practical for production application use. In addition, to negate the read latencies associated with large drives, SATA drives can be used with NetApp Flash Cache (http://www.netapp.com/us/products/storage-systems/flash-cache/flash-cache.html), which significantly increases performance with large working set sizes. SATA drives are more susceptible to unrecoverable bit errors and operational failures. Without high-performance double-disk RAID protection such as RAID-DP, large SATA disk drives are more prone to failure, especially during the long RAID reconstruction time that follows a single drive failure. Table 6 compares RAID types and the probability of data loss in a five-year period.

For additional information about Flash Cache and Exchange Server 2010, see Using Flash Cache for Exchange 2010 (http://www.netapp.com/us/library/technical-reports/tr-3867.html).

For additional information on Exchange Server and RAID-DP, see http://media.netapp.com/documents/tr-3574.pdf.

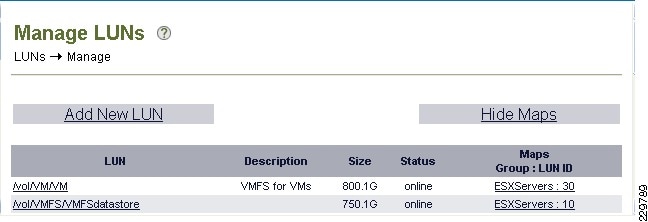

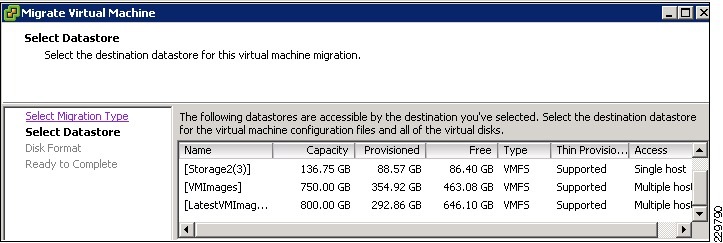

Thin Provisioning and FlexVol

Thin provisioning is a function of NetApp FlexVol®, which allows storage to be provisioned just like traditional storage. However, the storage is not consumed until the data is written (just-in-time storage). Use NetApp ApplianceWatch (http://blogs.netapp.com/storage_nuts_n_bolts/2010/06/netapp-appliancewatch-for-microsofts-scom-and-scvmmhyper-v.html) in Microsoft's SCOM to monitor thin provisioned LUNs, increasing disk efficiency. Microsoft recommends a 20% growth factor above the database size and 20% free disk space in the database LUN, which on disk amounts to over 45% free disk space.
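The sizing arithmetic can be worked through in Python. This sketch assumes one reading of the recommendation: the 20% growth factor is applied to the database size, and the result must then occupy no more than 80% of the LUN. The function name and the 500 GB example are illustrative assumptions.

```python
def database_lun_size_gb(database_gb: float, growth: float = 0.20,
                         free_space: float = 0.20) -> float:
    """LUN size that holds the grown database and still leaves the free space."""
    grown_gb = database_gb * (1 + growth)   # database plus growth factor
    return grown_gb / (1 - free_space)      # grown database fills 80% of the LUN


database_gb = 500.0                          # hypothetical database size
lun_gb = database_lun_size_gb(database_gb)
headroom = lun_gb / database_gb - 1          # extra capacity relative to the database
print(f"LUN size: {lun_gb:.0f} GB, headroom over database: {headroom:.0%}")
```

Under this reading the LUN is 1.2 / 0.8 = 1.5 times the database size, which is the capacity that thin provisioning avoids consuming until data is actually written.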

Deduplication

NetApp deduplication technology leverages NetApp WAFL block sharing to perform protocol-agnostic data-in-place deduplication as a property of the storage itself. With legacy versions of Exchange Server most customers saw 1-5% deduplication rates, while with Exchange Server 2010 customers are seeing 10-35% deduplication rates. In a virtualized environment, it is not uncommon to see 90% deduplication rates on the application and operating system data.
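The idea of block sharing can be illustrated with a toy Python calculation that counts identical fixed-size blocks. It is a simplified sketch and not the WAFL implementation: the use of SHA-256 fingerprints, the function name, and the sample data are assumptions for illustration.

```python
import hashlib

BLOCK_SIZE = 4096  # 4 KB blocks, used here for illustration


def dedup_savings(data: bytes) -> float:
    """Fraction of blocks that could be shared instead of stored again."""
    blocks = [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]
    if not blocks:
        return 0.0
    unique = {hashlib.sha256(block).digest() for block in blocks}
    return 1 - len(unique) / len(blocks)


# Hypothetical data: nine identical blocks (for example, common operating
# system files across virtual machines) and one block of unique data.
sample = b"A" * BLOCK_SIZE * 9 + b"B" * BLOCK_SIZE
print(f"{dedup_savings(sample):.0%} of blocks are duplicates")
```

Data sets with many identical blocks, such as the operating system images of virtual machines built from one template, are the kind of data for which the high rates quoted above would be expected.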

Snapshot

NetApp Snapshot™ technology provides zero-cost, near-instantaneous backup, point-in-time copies of the volume or LUN by preserving Data ONTAP WAFL consistency points (CPs). Creating Snapshot copies incurs minimal performance effect because data is never moved, as it is with other copy-out technologies. The cost for Snapshot copies is at the rate of block-level changes, not 100% for each backup as it is with mirror copies. Using Snapshot can result in savings in storage cost for backup and restore purposes and opens up a number of efficient data management possibilities. SnapManager for Microsoft Exchange is tightly coupled with Microsoft Exchange Server and integrates directly with the Microsoft Volume Shadow Copy Service (VSS). This allows consistent backups and also provides a fully supported solution from both NetApp and Microsoft.
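Why the cost of a Snapshot copy follows the rate of block-level change can be shown with a toy Python volume. This is a conceptual sketch, not Data ONTAP behavior in detail: the class `ToyVolume`, its methods, and the block counts are hypothetical.

```python
from typing import Dict, List


class ToyVolume:
    """Toy volume in which a snapshot records block pointers, not block data."""

    def __init__(self, blocks: List[bytes]) -> None:
        self.active: Dict[int, bytes] = dict(enumerate(blocks))
        self.snapshots: List[Dict[int, bytes]] = []

    def create_snapshot(self) -> int:
        # Copying the mapping shares the block contents; no data is moved.
        self.snapshots.append(dict(self.active))
        return len(self.snapshots) - 1

    def write(self, block_no: int, data: bytes) -> None:
        # New data goes to the active map; the snapshot keeps the old block.
        self.active[block_no] = data

    def blocks_held_by_snapshot(self, snap_id: int) -> int:
        """Blocks kept only because the snapshot still references them."""
        snap = self.snapshots[snap_id]
        return sum(1 for no, data in snap.items() if self.active.get(no) != data)


volume = ToyVolume([b"block-%d" % i for i in range(100)])
snap = volume.create_snapshot()
cost_at_creation = volume.blocks_held_by_snapshot(snap)
for i in range(5):
    volume.write(i, b"changed-%d" % i)
cost_after_writes = volume.blocks_held_by_snapshot(snap)
print(cost_at_creation, cost_after_writes)
```

The snapshot consumes nothing when it is created and five blocks after five blocks change, whereas a mirror copy of the same volume would consume all 100 blocks.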

Backup and Recovery

NetApp SnapManager for Microsoft Exchange decreases the time required to complete both backup and recovery operations for Microsoft Exchange Server. The SnapManager for Exchange software and the FAS3170 storage array allow the IT department to perform near-instantaneous backups and rapid restores. SnapManager for Microsoft Exchange is tightly coupled with Microsoft Exchange Server 2010 and integrates directly with the Microsoft Volume Shadow Copy Service (VSS). This allows consistent backups and also provides a fully-supported solution from both NetApp and Microsoft. SnapManager for Exchange provides the flexibility to schedule and automate the Exchange backup verification, with additional built-in capabilities for nondisruptive and concurrent verifications. Database verification is not a requirement when in a DAG configuration.

SnapManager for Exchange can be configured to initiate a copy backup (Snapshot copy) of another database with a retention policy of one Snapshot copy. This enables the administrator to perform a nearly instant reseed should one be required. SnapManager for Microsoft Exchange allows quick and easy restoration of Exchange data to any server, including both point-in-time and roll-forward restoration options. This helps to speed disaster recovery and facilitates testing of the Snapshot restore procedure before the actual need arises. NetApp Single Mailbox Recovery software delivers the ability to restore an individual mailbox or an individual e-mail message quickly and easily. It also enables the rapid recovery of Exchange data at any level of granularity (storage group, database, folder, single mailbox, or single message) and restores folders, messages, attachments, calendar notes, contacts, and tasks from any recent Snapshot copy. Single Mailbox Recovery can directly read the contents of SnapManager Snapshot copies without the assistance of Exchange Server and rapidly search archived Snapshot copies for deleted messages that are no longer in the current mailbox. Using the Advanced Find feature, it is possible to search across all mailboxes in an archive EDB file by keyword or other criteria and quickly find the desired item. For additional best practices on SnapManager for Exchange, see SnapManager 6.0 for Microsoft Exchange Best Practices Guide (http://www.netapp.com/us/library/technical-reports/tr-3845.html).

NetApp Strategy for Storage Efficiency

As seen in the previous section on technologies for storage efficiency, NetApp's strategy for storage efficiency is based on the built-in foundation of storage virtualization and unified storage provided by its core Data ONTAP operating system and the WAFL file system. Unlike its competitors' technologies, NetApp's technologies surrounding its FAS and V-Series product line have storage efficiency built into their core. Customers who already have other vendors' storage systems and disk shelves can still leverage all the storage saving features that come with the NetApp FAS system simply by using the NetApp V-Series product line. This is again in alignment with NetApp's philosophy of storage efficiency because customers can continue to use their existing third-party storage infrastructure and disk shelves, yet save more by leveraging NetApp's storage-efficient technologies.

NetApp Storage Provisioning

SnapDrive® for Windows®: Provisions storage resources and is the storage provider performing VSS backup.

Data ONTAP PowerShell Toolkit: A collection of PowerShell cmdlets that facilitates integration of Data ONTAP into Windows environments and management tools by providing easy-to-use cmdlets for low-level Data ONTAP APIs.

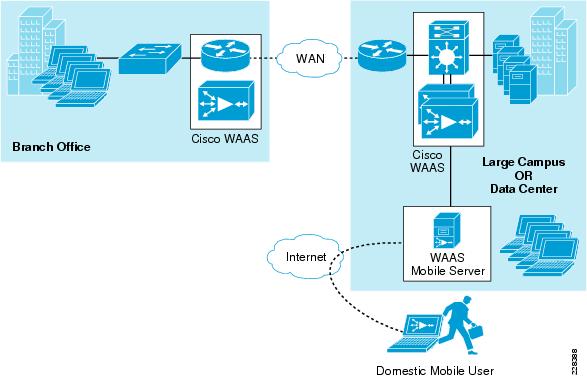

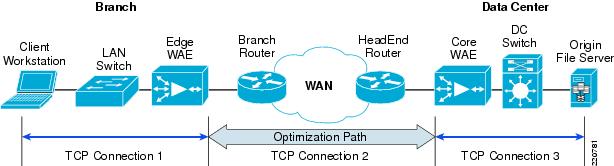

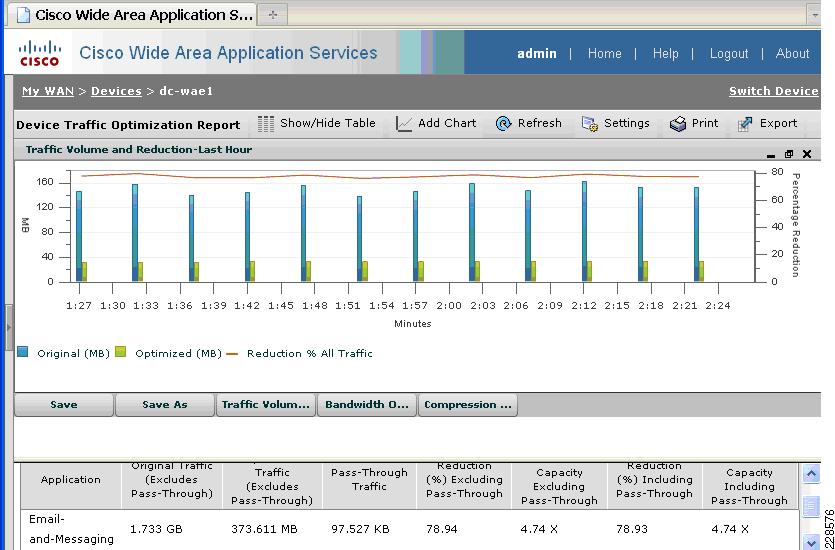

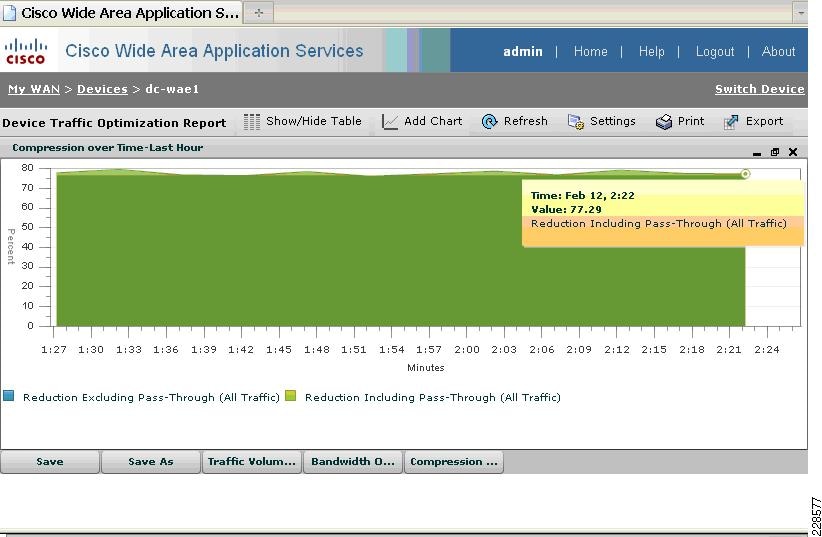

Cisco Wide Area Application Services for Branch Users

To provide optimization and acceleration services between the branch and data center, a Cisco WAAS appliance was deployed at the data center WAN aggregation tier in a one-armed deployment and a WAAS network module was deployed in the Integrated Services Router at the branch edge.

To appreciate how the Cisco Wide Area Application Services (Cisco WAAS) provides WAN optimization and application acceleration benefits to the enterprise, consider the basic types of centralized application messages that are transmitted between remote branches. For simplicity, two basic types are identified:

• Bulk transfer applications: Transfer of files and objects, such as FTP, HTTP, and IMAP. In these applications, the number of round-trip messages might be small, and each packet might carry a large payload. Examples include Web portal or thin client versions of Oracle, SAP, Microsoft (SharePoint, OWA) applications, E-mail applications (Microsoft Exchange, Lotus Notes), and other popular business applications.

• Transactional applications: High numbers of messages transmitted between endpoints. Chatty applications with many round-trips of application protocol messages that might or might not have small payloads. Examples include CIFS file transfers.

Cisco WAAS uses the technologies described in the following sections to optimize communication between Outlook clients in the branch office and Exchange servers in the data center by providing TFO optimization, LZ compression, DRE caching, MAPI acceleration, and SSL acceleration.

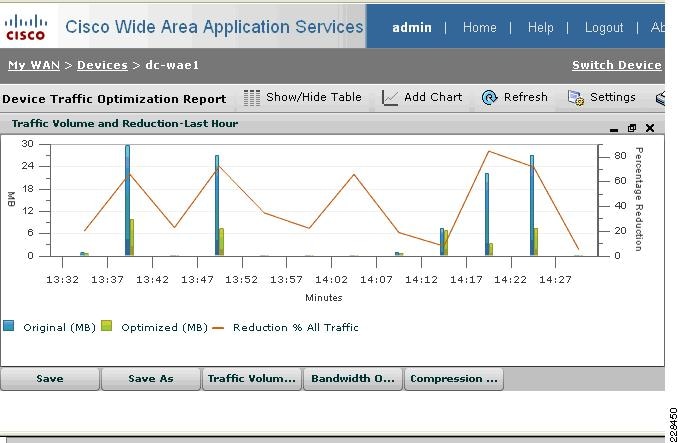

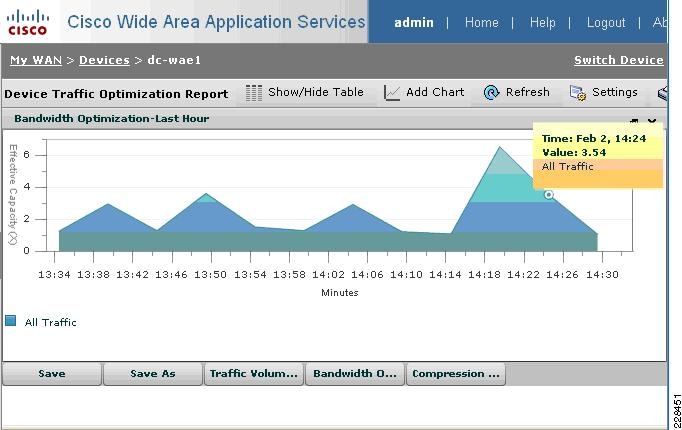

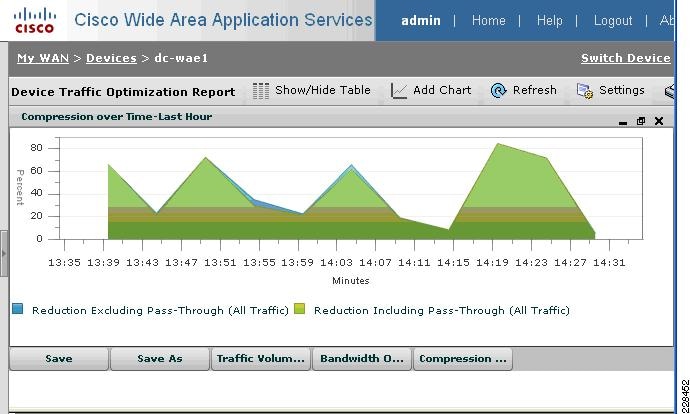

Advanced Compression Using DRE and LZ Compression

Data Redundancy Elimination (DRE) is an advanced form of network compression that allows the Cisco WAAS to maintain an application-independent history of previously-seen data from TCP byte streams. Lempel-Ziv (LZ) compression uses a standard compression algorithm for lossless storage. The combination of using DRE and LZ reduces the number of redundant packets that traverse the WAN, thereby conserving WAN bandwidth, improving application transaction performance, and significantly reducing the time for repeated bulk transfers of the same application.
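The interaction between a byte-stream history and a lossless compressor can be illustrated with a small sketch. This is not the Cisco WAAS implementation: the fixed 256-byte chunk size, the truncated SHA-256 signature, the one-byte "R"/"L" framing, and the class name DreSketch are all assumptions made for illustration, and zlib stands in for the LZ stage.

```python
import hashlib
import os
import zlib

CHUNK_SIZE = 256  # assumed chunk size; real DRE chunking is not described here


class DreSketch:
    """Toy redundancy eliminator. Use one instance on each side of the WAN."""

    def __init__(self):
        self.history = {}  # signature -> chunk bytes seen on earlier transfers

    def encode(self, data: bytes) -> bytes:
        out = bytearray()
        for i in range(0, len(data), CHUNK_SIZE):
            chunk = data[i:i + CHUNK_SIZE]
            sig = hashlib.sha256(chunk).digest()[:8]
            if sig in self.history:
                out += b"R" + sig  # reference to a chunk the peer already holds
            else:
                self.history[sig] = chunk
                out += b"L" + len(chunk).to_bytes(2, "big") + chunk  # literal
        return zlib.compress(bytes(out))  # LZ-family pass over what remains

    def decode(self, wire: bytes) -> bytes:
        buf = zlib.decompress(wire)
        out = bytearray()
        pos = 0
        while pos < len(buf):
            tag = buf[pos:pos + 1]
            pos += 1
            if tag == b"R":
                out += self.history[buf[pos:pos + 8]]
                pos += 8
            else:
                n = int.from_bytes(buf[pos:pos + 2], "big")
                pos += 2
                chunk = buf[pos:pos + n]
                pos += n
                # Learn the chunk so later references can be resolved.
                self.history[hashlib.sha256(chunk).digest()[:8]] = chunk
                out += chunk
        return bytes(out)


sender, receiver = DreSketch(), DreSketch()
attachment = os.urandom(64 * 1024)  # stands in for an already-compressed file
first = sender.encode(attachment)   # cold history: sent mostly as literals
second = sender.encode(attachment)  # warm history: sent mostly as references
assert receiver.decode(first) == attachment
assert receiver.decode(second) == attachment
print(len(attachment), len(first), len(second))
```

On the first transfer the compressor alone cannot shrink the random payload, while the repeated transfer is reduced to a list of short signatures. That difference is the effect the paragraph above attributes to repeated bulk transfers.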

TCP Flow Optimization

The Cisco WAAS TCP Flow Optimization (TFO) uses a robust TCP proxy to safely optimize TCP at the Cisco WAE device by applying TCP-compliant optimizations to shield the clients and servers from poor TCP behavior due to WAN conditions. The Cisco WAAS TFO improves throughput and reliability for clients and servers in WAN environments through larger TCP windows and window-scaling enhancements, as well as through congestion management and recovery techniques that ensure maximum throughput is restored in the event of packet loss. By default, Cisco WAAS provides only TFO for RDP. If RDP compression and encryption are disabled, then full optimization (TFO + DRE/LZ) can be enabled for RDP flows.
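Window sizing matters because a TCP sender can have at most one window of unacknowledged data in flight per round trip. The following sketch works through that arithmetic. The 45 Mbps link speed and 80 ms round-trip time are hypothetical values chosen for illustration, not measurements from this solution; the 65,535-byte limit and the maximum shift of 14 come from the TCP window scale option (RFC 7323).

```python
MAX_UNSCALED_WINDOW = 65_535  # largest TCP window without the window scale option
MAX_SCALE_SHIFT = 14          # upper bound on the window scale shift (RFC 7323)


def bdp_bytes(bandwidth_bps: int, rtt_ms: int) -> float:
    """Bandwidth-delay product: bytes that must be in flight to fill the link."""
    return bandwidth_bps * rtt_ms / 1000 / 8


def max_throughput_bps(window_bytes: int, rtt_ms: int) -> float:
    """Throughput ceiling of one TCP connection limited only by its window."""
    return window_bytes * 8 * 1000 / rtt_ms


def window_scale_shift(bdp: float) -> int:
    """Smallest scale shift whose maximum window covers the BDP."""
    shift = 0
    while (MAX_UNSCALED_WINDOW << shift) < bdp and shift < MAX_SCALE_SHIFT:
        shift += 1
    return shift


link_bps = 45_000_000  # hypothetical WAN link speed
rtt_ms = 80            # hypothetical branch-to-data-center round trip

bdp = bdp_bytes(link_bps, rtt_ms)
print("BDP (bytes):", bdp)                                    # 450000.0
print("Unscaled ceiling (bps):",
      max_throughput_bps(MAX_UNSCALED_WINDOW, rtt_ms))        # 6553500.0
print("Scale shift needed:", window_scale_shift(bdp))         # 3
```

With these assumed numbers, an unscaled connection tops out near 6.5 Mbps on a 45 Mbps link, which is the kind of gap that larger, scaled windows are meant to close.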

Messaging Application Programming Interface (MAPI) Protocol Acceleration

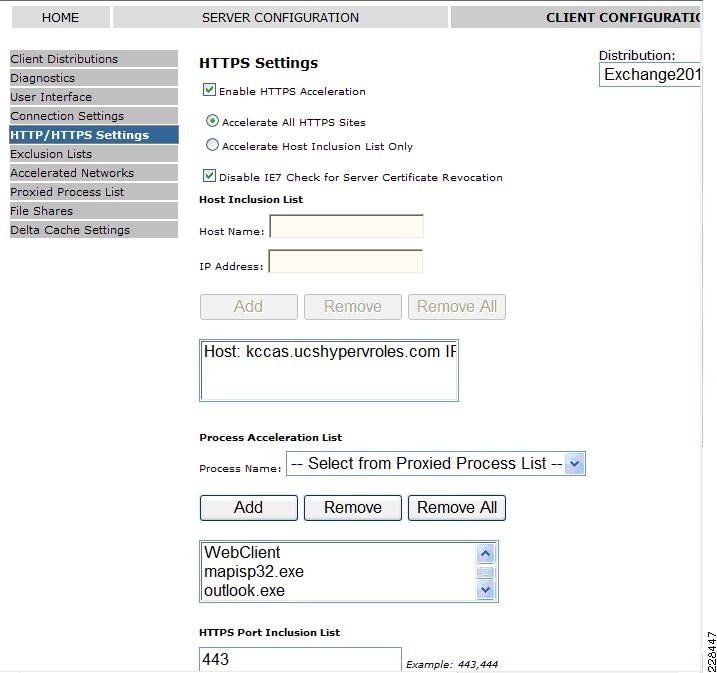

The MAPI application accelerator accelerates Microsoft Outlook and Exchange 2010 traffic that uses the Messaging Application Programming Interface (MAPI) protocol. Microsoft Outlook 2010 clients are supported. Clients can be configured with Outlook in cached or non-cached mode; both modes are accelerated. Secure connections that use message authentication (signing) or encryption, and Outlook Anywhere connections (MAPI over HTTP/HTTPS), are not accelerated by the MAPI application accelerator. Internal Outlook users can still benefit from acceleration of their Exchange traffic by using the Outlook Anywhere option to run MAPI over HTTPS, in which case SSL acceleration is applied to their E-mail traffic.

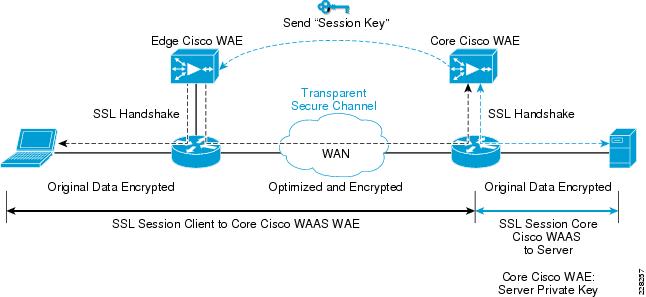

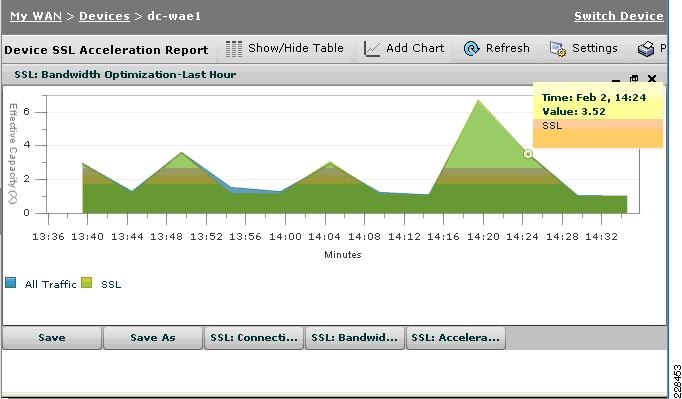

Secure Socket Layer (SSL) Protocol Acceleration

Cisco WAAS provides the widest set of customer use cases for deploying WAN optimization into SSL-secured environments. For example, many industries and organizations use Web proxy servers to front-end their key business process applications in the data center and encrypt with SSL from the proxy server to remote users in branch sites. Cisco WAAS provides optimized performance for delivering these applications while preserving the SSL encryption end-to-end, something that competing products' SSL implementations do not support. To maximize application traffic security, WAAS devices provide additional encryption for data stored at rest and can be deployed in an end-to-end SSL-optimized architecture with Cisco ACE application switches for SSL offload.

Cisco WAAS provides SSL optimization capabilities that integrate fully with existing data center key management and trust models and can be used by both WAN optimization and application acceleration components. Private keys and certificates are stored in a secure vault on the Cisco WAAS Central Manager. The private keys and certificates are distributed in a secure manner to the Cisco WAAS devices in the data center and stored in a secure vault, maintaining the trust boundaries of server private keys. SSL optimization through Cisco WAAS is fully transparent to end users and servers and requires no changes to the network environment.

Among the several cryptographic protocols used for encryption, SSL/TLS is one of the most important. SSL/TLS-secured applications represent a large and growing percentage of the traffic traversing WAN links today. Standard data redundancy elimination (DRE) techniques cannot optimize this WAN data because the encryption process generates an ever-changing stream of data, making even redundant data inherently non-reducible and eliminating the possibility of removing duplicate byte patterns. Without specific SSL optimization, Cisco WAAS can still provide general optimization for such encrypted traffic with transport flow optimization (TFO). Applying TFO to the encrypted secure data can be helpful in many situations in which the network has a high bandwidth delay product (BDP) and is unable to fill the pipe.

Termination of the SSL session and decryption of the traffic is required to apply specific SSL optimizations such as Cisco WAAS DRE and Lempel-Ziv (LZ) compression techniques to the data. Minimally, SSL optimization requires the capability to:

• Decrypt traffic at the near-side Cisco WAAS Wide Area Application Engine (WAE) and apply WAN optimization to the resulting clear text data.

• Re-encrypt the optimized traffic to preserve the security of the content for transport across the WAN.

• Decrypt the encrypted and optimized traffic on the far-side Cisco WAAS WAE and decode the WAN optimization.

• Re-encrypt the resulting original traffic and forward it to the destination origin server.
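The four capabilities listed above can be sketched as a toy pipeline. Everything here is a stand-in chosen for illustration: toy_cipher is an insecure XOR keystream that only mimics the effect of encryption on compressibility, zlib replaces the DRE and LZ stages, and the key names and function names are hypothetical. It is not how Cisco WAAS implements SSL optimization.

```python
import hashlib
import zlib


def toy_cipher(key: bytes, data: bytes) -> bytes:
    """XOR data with a SHA-256 counter keystream.

    Illustrative only and NOT secure. Applying it twice with the same key
    returns the original bytes, so it serves as both encrypt and decrypt.
    """
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(d ^ s for d, s in zip(data, stream))


def near_side_wae(ciphertext: bytes, client_key: bytes, peer_key: bytes) -> bytes:
    clear = toy_cipher(client_key, ciphertext)  # decrypt client traffic
    optimized = zlib.compress(clear)            # apply WAN optimization
    return toy_cipher(peer_key, optimized)      # re-encrypt for the WAN


def far_side_wae(wan_bytes: bytes, peer_key: bytes, server_key: bytes) -> bytes:
    optimized = toy_cipher(peer_key, wan_bytes)  # decrypt WAN traffic
    clear = zlib.decompress(optimized)           # decode the optimization
    return toy_cipher(server_key, clear)         # re-encrypt toward the server


message = b"Subject: weekly status report\r\n" * 500
client_key, peer_key, server_key = b"client-session", b"wae-peer", b"server-session"

from_client = toy_cipher(client_key, message)
on_wan = near_side_wae(from_client, client_key, peer_key)
to_server = far_side_wae(on_wan, peer_key, server_key)

assert toy_cipher(server_key, to_server) == message
print("original bytes:          ", len(message))
print("compressed ciphertext:   ", len(zlib.compress(from_client)))
print("bytes actually on the WAN:", len(on_wan))
```

Compressing the ciphertext directly yields no savings, while compressing after decryption reduces the repetitive message to a small fraction of its size. This is why decryption at the near-side device is a precondition for the optimization.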

The capability to terminate SSL sessions and apply WAN optimizations to encrypted data requires access to the server private keys. Further, the clear-text data received as a result of decryption must be stored on the disk for future reference to gain the full benefits of DRE. These requirements pose serious security challenges in an environment in which data security is paramount. Security by itself is the most important and sensitive aspect of any WAN optimization solution that offers SSL acceleration.

Figure 9 SSL Protocol Acceleration

During initial client SSL handshake, the core Cisco WAE in the data center participates in the conversation.

The connection between the Cisco WAEs is established securely using the Cisco WAE device certificates and the Cisco WAEs cross-authenticate each other:

• After the client SSL handshake occurs and the data center Cisco WAE has the session key, the data center Cisco WAE transmits the session key (which is temporary) over its secure link to the edge Cisco WAE so that it can start decrypting the client transmissions and apply DRE.

• The optimized traffic is then re-encrypted using the Cisco WAE peer session key and transmitted, in-band, over the current connection, maintaining full transparency, to the data center Cisco WAE.

• The core Cisco WAE then decrypts the optimized traffic, reassembles the original messages, and re-encrypts these messages using a separate session key negotiated between the server and the data center Cisco WAE.

• If the back-end SSL server asks the client to submit an SSL certificate, the core Cisco WAE requests one from the client. The core Cisco WAE authenticates the client by verifying the SSL certificate using a trusted Certificate Authority (CA) or an Online Certificate Status Protocol (OCSP) responder.

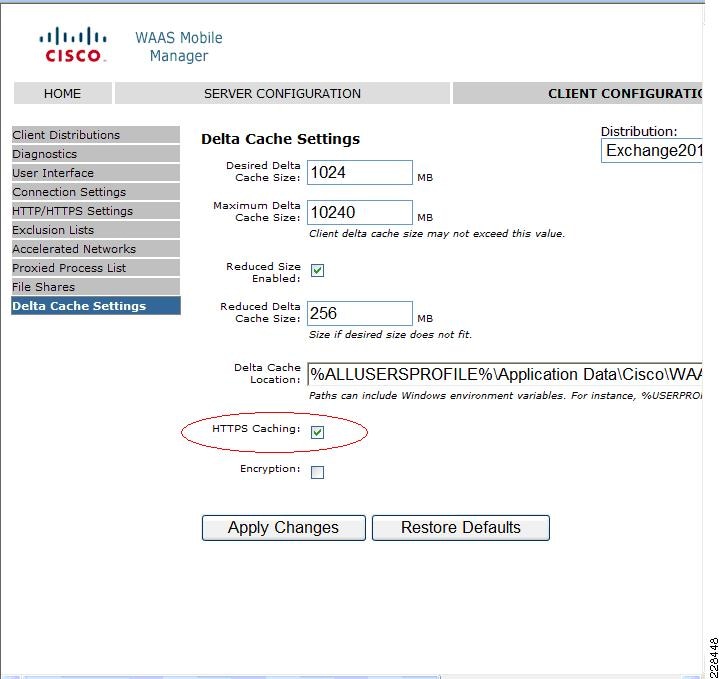

Cisco WAAS Mobile

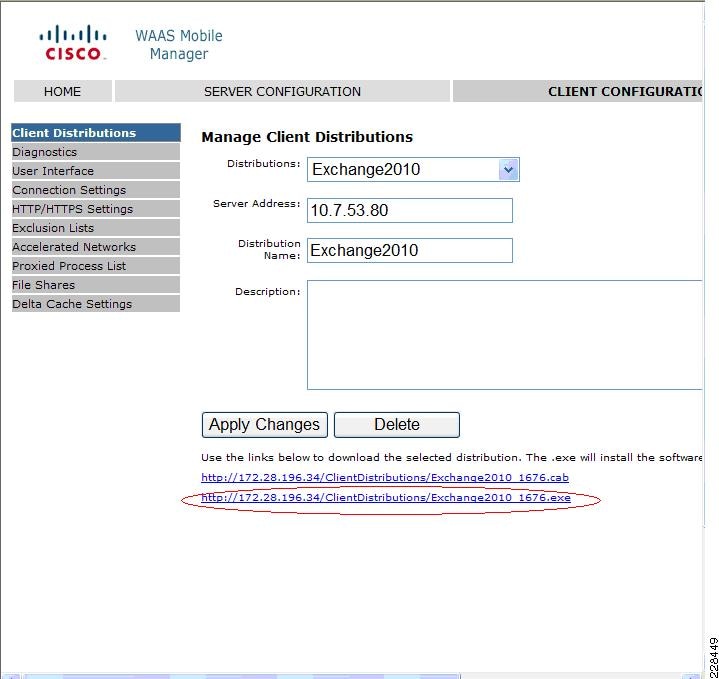

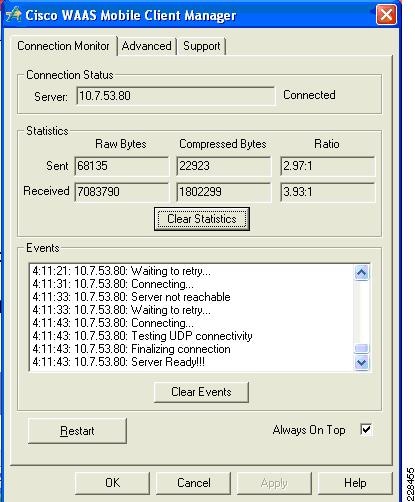

In addition to Cisco WAAS for branch optimization, Cisco offers Cisco WAAS Mobile for telecommuters, mobile users, and small branch and home office users who access corporate networks and need accelerated application performance. Cisco WAAS Mobile client is purpose-built for Microsoft Windows PCs and laptops. To provide WAAS Mobile services to remote users, a Windows 2008 WAAS server was deployed as a virtual machine on the UCS to support user connections into the data center Exchange server farm.

Advanced Data Transfer Compression

Cisco WAAS Mobile maintains a persistent and bi-directional history of data on both the mobile PC and the Cisco WAAS Mobile server. This history can be used in current and future transfers, across different VPN sessions, or after a reboot, to minimize bandwidth consumption and to improve performance. In addition, instead of using a single algorithm for all file types, Cisco WAAS Mobile uses file-format-specific compression to provide higher-density compression than generic compression for Microsoft Word, Excel, and PowerPoint files, Adobe Shockwave Flash (SWF) files, ZIP files, and JPEG, GIF, and PNG files.

Application-Specific Acceleration

Cisco WAAS Mobile reduces application-specific latency for a broad range of applications, including Microsoft Outlook Messaging Application Programming Interface (MAPI), Windows file servers or network-attached storage using CIFS, HTTP, HTTPS, and other TCP-based applications such as RDP.

Transport Optimization

Cisco WAAS Mobile extends Cisco WAAS technologies to handle the timing variations found in packet switched wireless networks, the significant bandwidth latency problems of broadband satellite links, and noisy Wi-Fi and digital subscriber line (DSL) connections. The result is significantly higher link resiliency.

Solution Design and Deployment

Exchange 2010 Design Considerations

Microsoft best practices are followed for the different Exchange server roles, both for the servers deployed virtually on vSphere and for the servers installed natively on the UCS blade servers. The following requirements, which are relevant to processor and memory allocation, are satisfied in this solution validation.

Large Data Center Scenario

• Mailbox servers are natively installed on UCS blade servers.

• 4000 unique mailboxes are actively hosted on each of the two mailbox servers in the Large DC. Each mailbox server also hosts a passive copy of the 4000 active mailboxes on the other server to support server failover in a DAG.

• A third natively installed mailbox server is located in a remote disaster recovery data center. It hosts all 8000 mailboxes passively, ready to be activated through the DAG if both servers in the Large DC are unavailable.

Small Data Center Scenario

• One mailbox server virtual machine is located in the Small DC, hosting 1350 active mailbox copies. It does not host any passive copies.

• One mailbox server virtual machine is located at a remote disaster recovery DC, hosting passive copies of all 1350 mailboxes, ready to be activated if the Small DC mailbox server is unavailable.
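The copy counts in the two scenarios above can be tallied with a short sketch, which is useful as a cross-check before sizing processors and memory. The server names are hypothetical labels, and the worst-case figure simply assumes that every passive copy on a server is activated at once.

```python
from dataclasses import dataclass


@dataclass
class MailboxServer:
    name: str     # hypothetical label, not a host name from this solution
    active: int   # mailboxes hosted as active copies
    passive: int  # mailboxes hosted as passive copies

    @property
    def copies(self) -> int:
        return self.active + self.passive


large_dc = [
    MailboxServer("large-dc-mbx1", active=4000, passive=4000),
    MailboxServer("large-dc-mbx2", active=4000, passive=4000),
    MailboxServer("large-dr-mbx", active=0, passive=8000),
]
small_dc = [
    MailboxServer("small-dc-mbx", active=1350, passive=0),
    MailboxServer("small-dr-mbx", active=0, passive=1350),
]


def summarize(servers):
    """Return (unique mailboxes, total copies, copies per mailbox)."""
    unique = sum(s.active for s in servers)
    total = sum(s.copies for s in servers)
    return unique, total, total // unique


def worst_case_active(server: MailboxServer) -> int:
    """Active mailboxes on a server if all of its passive copies are activated."""
    return server.copies


print("Large DC:", summarize(large_dc))  # (8000, 24000, 3)
print("Small DC:", summarize(small_dc))  # (1350, 2700, 2)
for s in large_dc + small_dc:
    print(s.name, "worst-case active mailboxes:", worst_case_active(s))
```

Under these assumptions each Large DC mailbox server must be sized to carry all 8000 mailboxes after a failover, not only the 4000 it normally hosts as active copies.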

Processor Cores—Factors to Consider

There are several factors to consider when determining how many processor cores are needed by your mailbox server, regardless of whether the server is physical or virtualized.

•

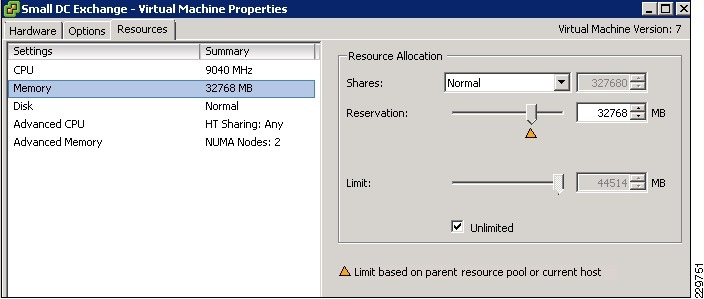

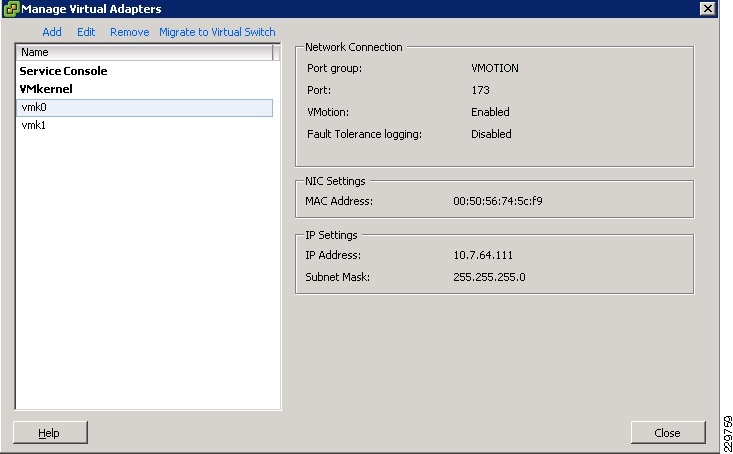

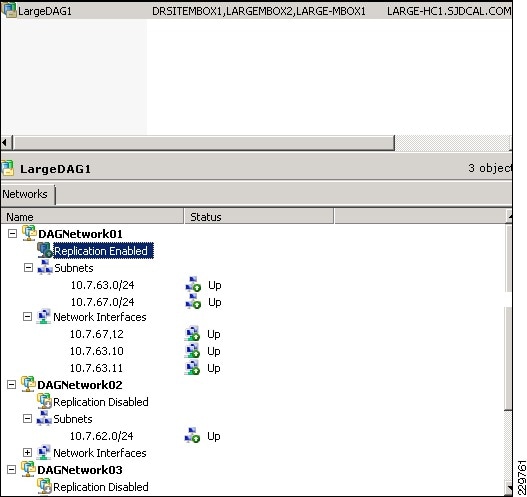

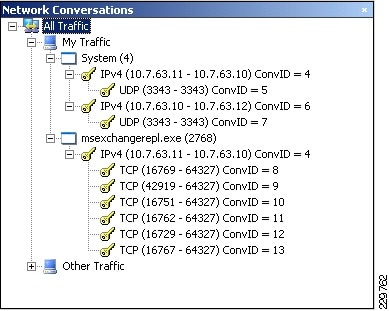

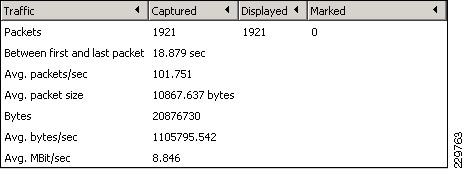

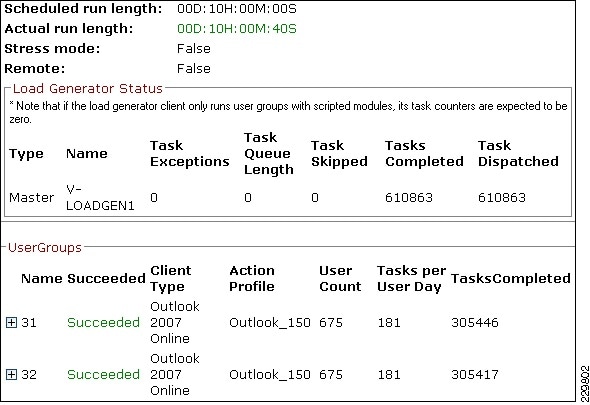

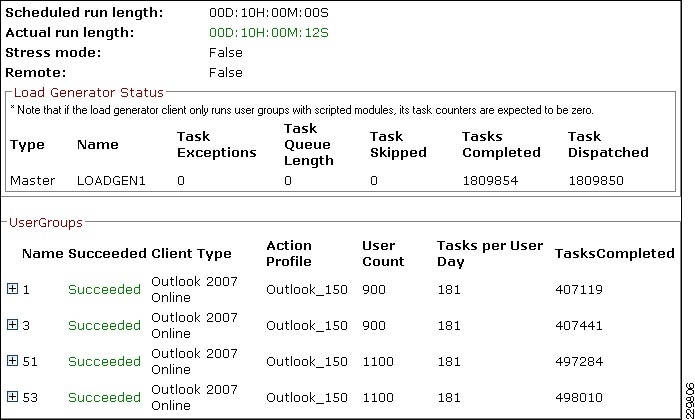

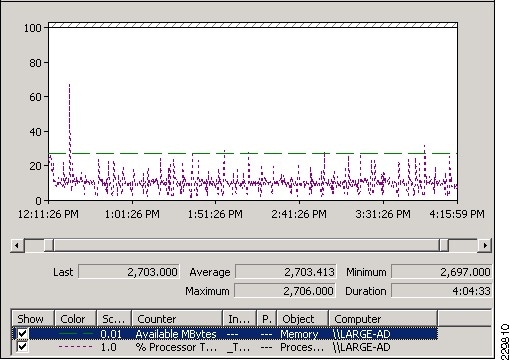

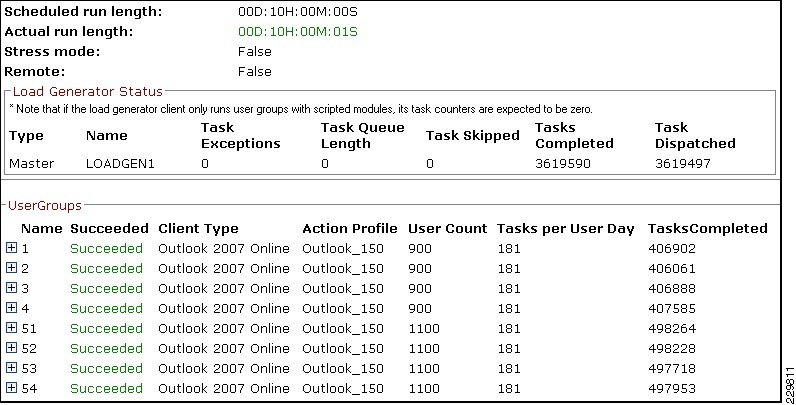

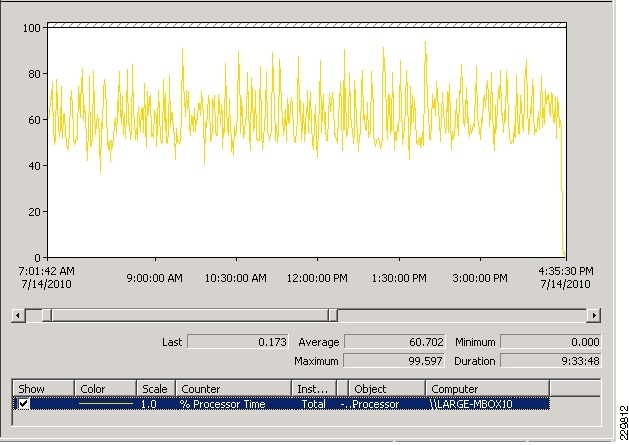

Standalone or Database Availability Group