UCS C-Series Rack Servers VIC Connectivity Options

Introduction

This document describes the connectivity options that are available for VMware ESX and Microsoft Windows Server Version 2008 or 2012 when you use Cisco Virtual Interface Card (VIC) adapters on Cisco Unified Computing System (UCS) C-Series rack servers.

Prerequisites

Requirements

Cisco recommends that you have knowledge of these topics:

- Cisco UCS C-Series rack servers

- Cisco Integrated Management Controller (CIMC) configuration

- Cisco VIC

- VMware ESX Versions 4.1 and later

- Microsoft Windows Server Version 2008 R2

- Microsoft Windows Server Version 2012

- Hyper-V Version 3.0

Components Used

The information in this document is based on these software and hardware versions:

- Cisco UCS C220 M3 server with a VIC 1225

- CIMC Version 1.5(4)

- VIC firmware Version 2.2(1b)

- Cisco Nexus 5548UP Series switches that run software Version 6.0(2)N1(2)

- VMware ESXi Version 5.1, Update 1

- Microsoft Windows Server Version 2008 R2 SP1

- Microsoft Windows Server Version 2012

The information in this document was created from the devices in a specific lab environment. All of the devices used in this document started with a cleared (default) configuration. If your network is live, make sure that you understand the potential impact of any command.

Cisco VIC Switching Basics

This section provides general information about VIC switching.

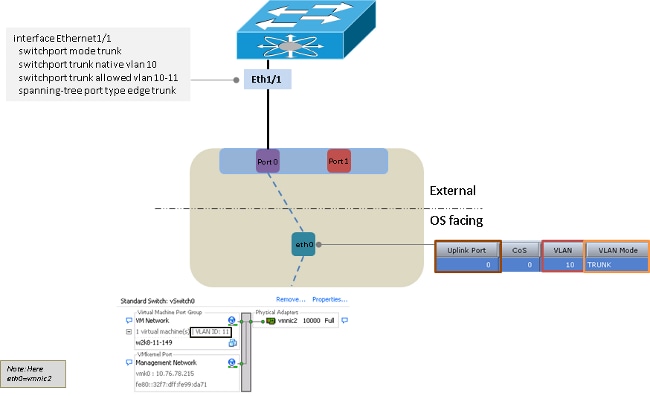

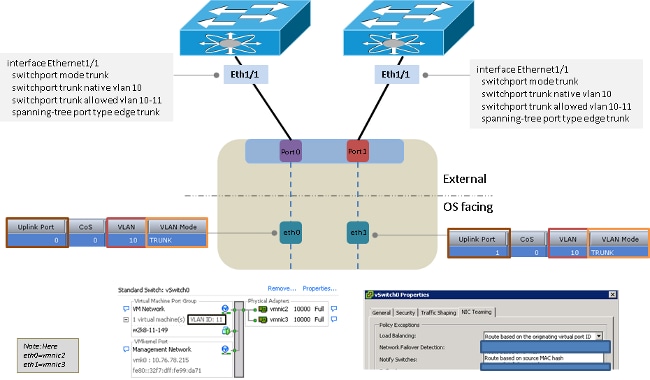

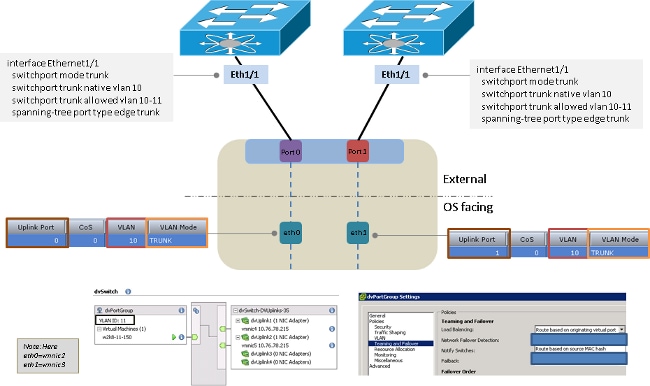

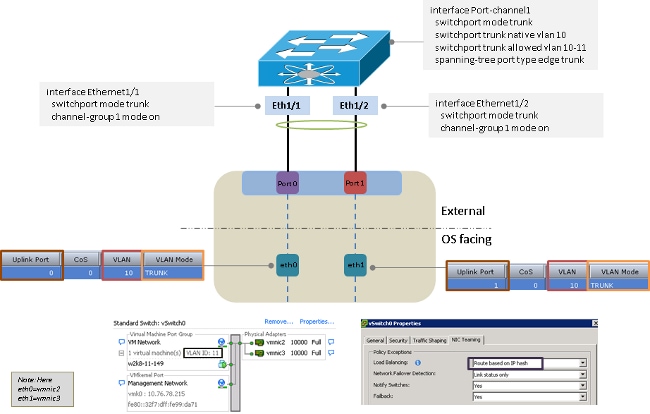

The VIC has only two external-facing ports. The Operating System (OS) that is installed on the server does not see these ports; they are used in order to connect to the upstream switches. The VIC always tags packets with an 802.1p header. Although the upstream switchport can be an access port, switch platforms differ in how they handle an 802.1p packet that is received without a VLAN tag. Therefore, Cisco recommends that you configure the upstream switchport as a trunk port.
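As an illustration of what an 802.1p packet without a VLAN tag looks like on the wire, here is a minimal sketch. It assumes that the 802.1p header follows the standard 802.1Q tag layout (a 16-bit TPID of 0x8100 followed by a 3-bit priority, a 1-bit drop-eligible indicator, and a 12-bit VLAN ID), and that a packet with priority but no VLAN carries a VLAN ID of 0. The function names are hypothetical and are not part of any Cisco tool.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q tag


def build_dot1q_tag(priority: int, vlan_id: int, dei: int = 0) -> bytes:
    """Return the 4-byte 802.1Q tag: TPID followed by the Tag Control Information."""
    if not 0 <= priority <= 7:
        raise ValueError("priority must fit in 3 bits (0-7)")
    if dei not in (0, 1):
        raise ValueError("dei must be 0 or 1")
    if not 0 <= vlan_id <= 4095:
        raise ValueError("vlan_id must fit in 12 bits (0-4095)")
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def is_priority_tagged(tag: bytes) -> bool:
    """True when the tag carries a priority but VLAN ID 0 (no VLAN membership)."""
    tpid, tci = struct.unpack("!HH", tag)
    return tpid == TPID_8021Q and (tci & 0x0FFF) == 0


# A frame with priority 5 and no VLAN versus a frame tagged for VLAN 100.
priority_only = build_dot1q_tag(priority=5, vlan_id=0)
vlan_100 = build_dot1q_tag(priority=0, vlan_id=100)
print(priority_only.hex(), is_priority_tagged(priority_only))
print(vlan_100.hex(), is_priority_tagged(vlan_100))
```

An access port must decide what to do with the first kind of frame, and that decision is platform-specific. A trunk port avoids the ambiguity.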

The Virtual Network Interface Cards (vNICs) that you create are presented to the OS that is installed on the server. Each vNIC can be configured as an access port or a trunk port. An access port removes the VLAN tag before it sends the packet to the OS. A trunk port sends the packet to the OS with the VLAN tag intact, so the OS on the server must have a trunking driver in order to interpret it. A trunk port removes the VLAN tag only for the default VLAN.
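The tag handling described above can be summarized in a small model. This is only a sketch of the rules stated in this section, not VIC firmware logic; the function name and the mode strings are hypothetical, and the model covers tag handling only, not which VLANs a vNIC is allowed to receive.

```python
from typing import Optional


def vlan_tag_seen_by_os(vnic_mode: str, frame_vlan: int, default_vlan: int) -> Optional[int]:
    """Return the VLAN tag that the OS sees on a received packet, or None if untagged."""
    if vnic_mode == "access":
        # An access vNIC always strips the tag before delivery to the OS.
        return None
    if vnic_mode == "trunk":
        # A trunk vNIC strips the tag only for its default VLAN.
        return None if frame_vlan == default_vlan else frame_vlan
    raise ValueError(f"unknown vNIC mode: {vnic_mode!r}")


# Example: default VLAN 10 on the vNIC.
print(vlan_tag_seen_by_os("access", 10, default_vlan=10))  # untagged
print(vlan_tag_seen_by_os("trunk", 10, default_vlan=10))   # untagged (default VLAN)
print(vlan_tag_seen_by_os("trunk", 20, default_vlan=10))   # tagged with VLAN 20
```

In the last case the OS receives a tagged packet, which is why a trunking driver is required on the server.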

VMware ESX

This section describes the connectivity options that are available for VMware ESX.

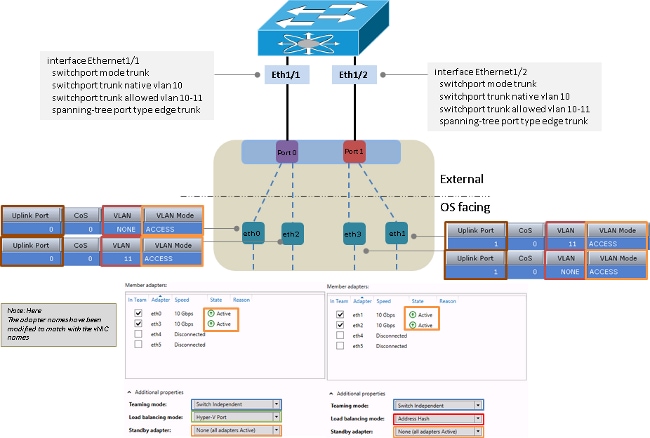

Upstream Switch-Independent Teaming

These examples show the connectivity options that are available for upstream switch-independent teaming.

One Uplink

Two Uplinks to Different Switches

Two Uplinks to Different Switches with a VMware-Distributed Virtual Switch

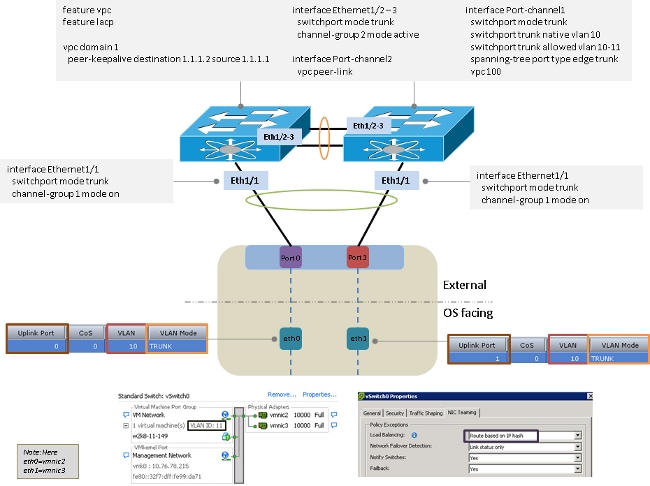

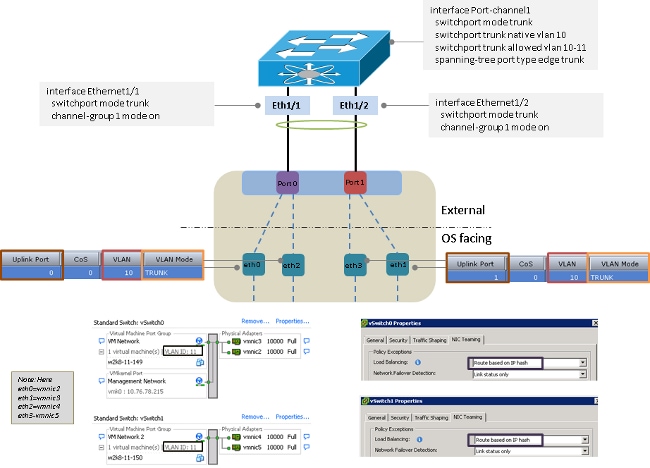

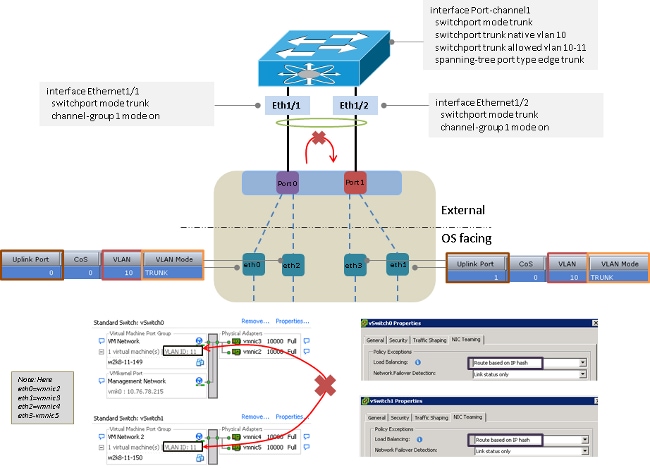

Upstream Switch-Dependent Teaming

These examples show the connectivity options that are available for upstream switch-dependent teaming.

Two Uplinks to the Same Switch

Two Uplinks to Different Switches

Two Uplinks to the Same Switch with Multiple VMware Standard Switches

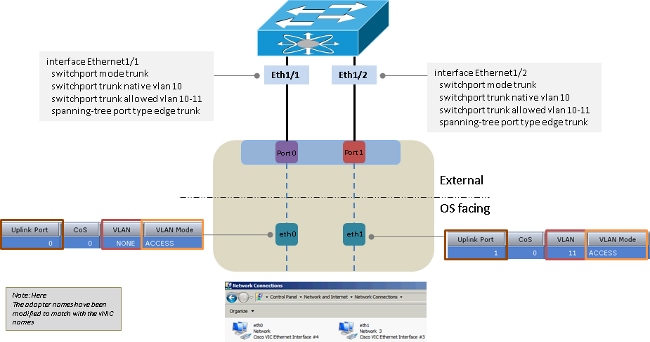

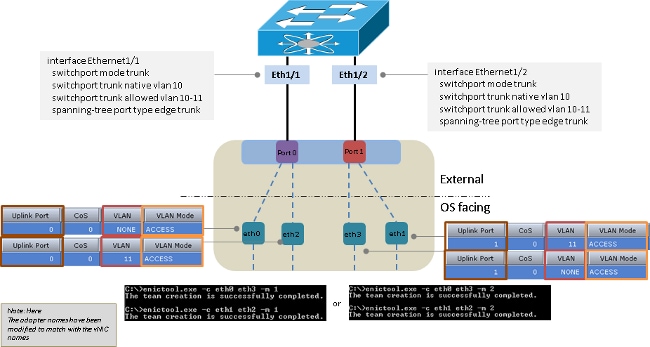

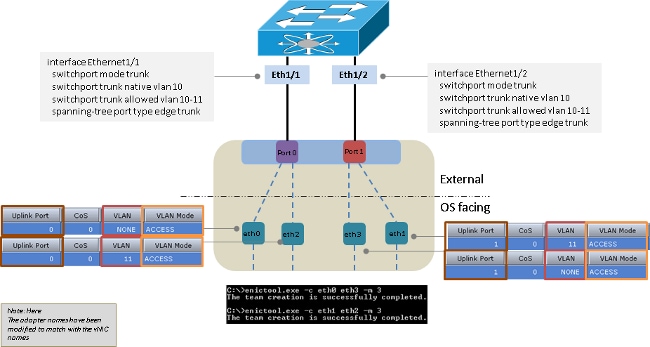

Microsoft Windows Server Version 2008

This section describes the connectivity options that are available for Microsoft Windows Server Version 2008.

Without NIC Teaming

Active-Backup and Active-Backup with Failback

Active-Active Transmit Load-Balancing

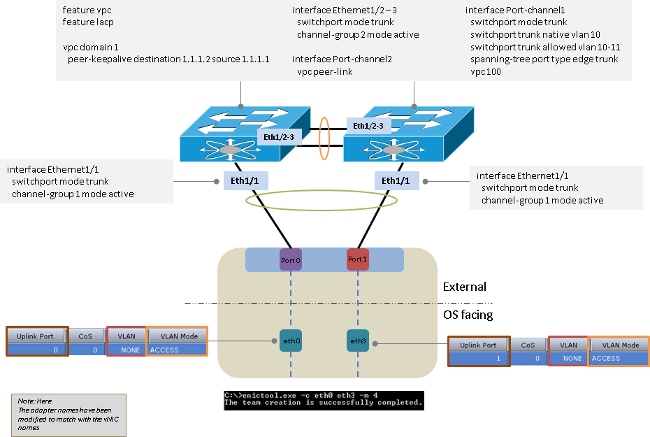

Active-Active with LACP

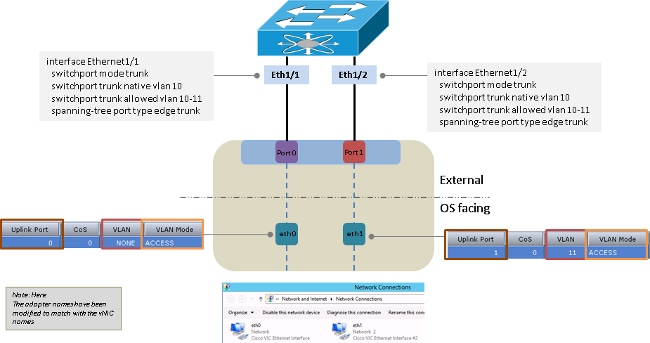

Microsoft Windows Server Version 2012

This section describes the connectivity options that are available for Microsoft Windows Server Version 2012.

Without NIC Teaming

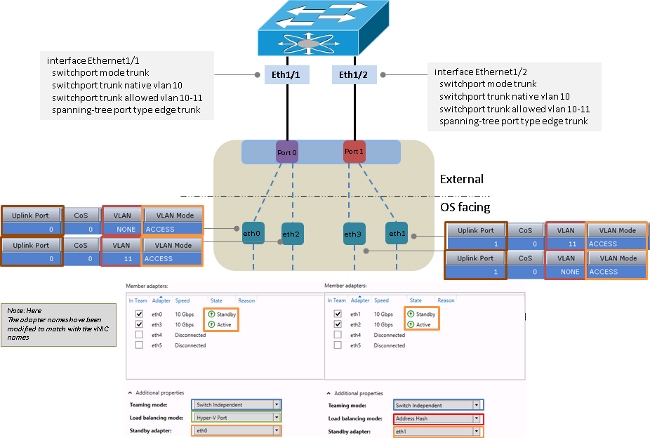

Upstream Switch-Independent Teaming

You can use either active-backup or active-active for upstream switch-independent teaming.

Active-Backup

The load balancing method can be either Hyper-V Port or Address Hash.

Active-Active

The load balancing method can be either Hyper-V Port or Address Hash. The Hyper-V Port method is the preferred option because it load balances among the available interfaces. The Address Hash method usually chooses only one interface in order to transmit the packets from the server.
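To make the Hyper-V Port behavior concrete, here is a simplified model in which each virtual switch port is pinned to one team member. The round-robin assignment and all names are assumptions made for illustration; the actual selection algorithm in the Windows teaming driver is not described in this document.

```python
from collections import Counter
from typing import Dict, List


def assign_hyperv_ports(vm_ports: List[str], team_members: List[str]) -> Dict[str, str]:
    """Pin each VM switch port to one team member (round-robin, illustrative only)."""
    if not team_members:
        raise ValueError("the team needs at least one member")
    return {
        port: team_members[index % len(team_members)]
        for index, port in enumerate(vm_ports)
    }


assignment = assign_hyperv_ports(["vm1", "vm2", "vm3", "vm4"], ["nic1", "nic2"])
print(assignment)
print(Counter(assignment.values()))  # number of VM ports per team member
```

Because each VM port maps to a single interface, the upstream switches always see a given VM MAC address on only one link, which suits switch-independent teaming.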

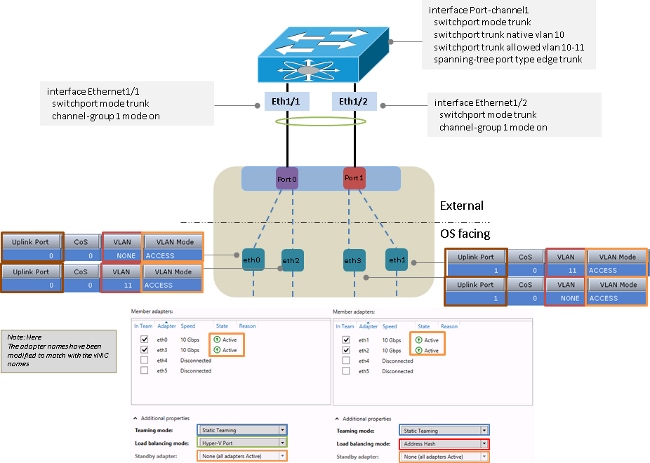

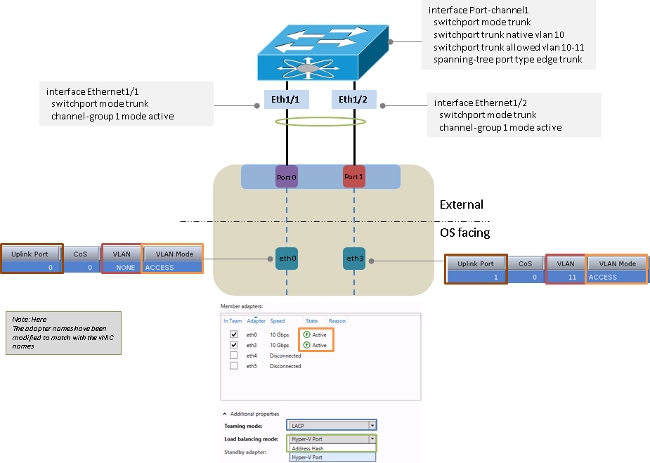

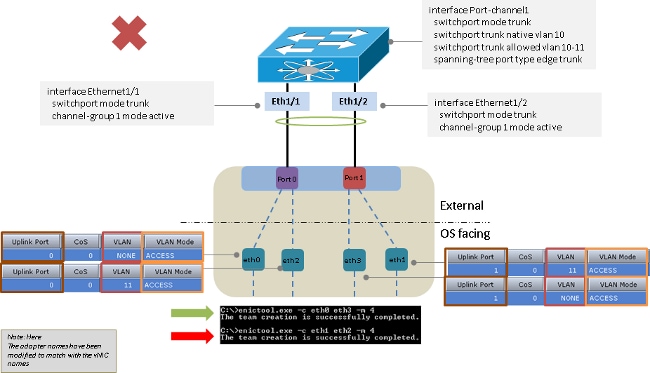

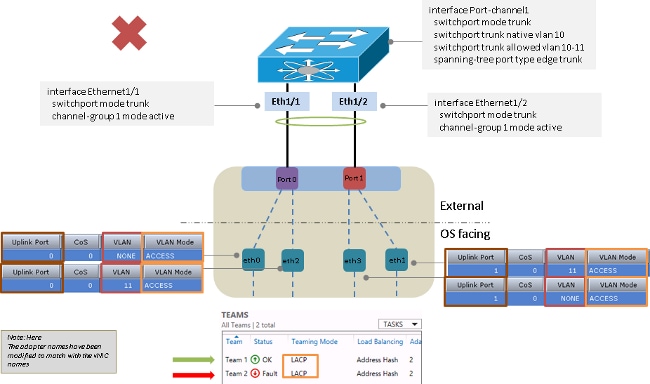

Upstream Switch-Dependent Teaming

You can use either static or dynamic teaming for upstream switch-dependent teaming.

Static Teaming

The load balancing method can be either Hyper-V Port or Address Hash.

Dynamic Teaming

For dynamic teaming, or Link Aggregation Control Protocol (LACP), the load balancing method can be either Hyper-V Port or Address Hash.

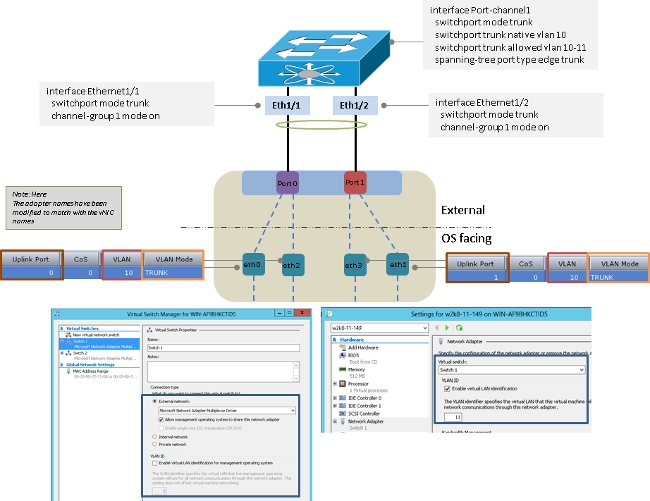

Hyper-V

When Hyper-V is used, the teamed NIC can be used inside the Hyper-V virtual switch. You can use any of the teaming methods previously described.

Failure Scenarios

This section describes the failure scenarios that you might encounter when switching is performed between two VMs on the same server and when two LACP teams are configured on the server side.

Switching Between Two VMs on the Same Server

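If the source and destination VMs are on the same host and the packet must be switched by the upstream switch, a failure occurs when the source and destination are connected to the same interface from the switch perspective. A switch does not forward a frame back out of the interface on which it was received, so the packet never reaches the destination VM.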
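This failure can be reproduced with a minimal model of the forwarding decision on the upstream switch. The sketch assumes standard Ethernet bridging behavior (a frame is never sent back out of its ingress interface) and that the destination MAC address is already learned; the function name, interface names, and MAC labels are hypothetical.

```python
from typing import Dict, Optional


def upstream_forward(mac_table: Dict[str, str], ingress: str, dst_mac: str) -> Optional[str]:
    """Return the egress interface for a frame, or None when the switch drops it."""
    egress = mac_table[dst_mac]  # raises KeyError if the destination is not learned
    if egress == ingress:
        # Source and destination sit behind the same interface: no hairpin forwarding.
        return None
    return egress


# vm-a and vm-b are learned on the same switch interface; vm-c is on another one.
mac_table = {"vm-a": "Eth1/1", "vm-b": "Eth1/1", "vm-c": "Eth1/2"}
print(upstream_forward(mac_table, ingress="Eth1/1", dst_mac="vm-b"))  # dropped
print(upstream_forward(mac_table, ingress="Eth1/1", dst_mac="vm-c"))  # forwarded
```

The same logic applies when the interface is a port channel: if both VMs are reached through the same port channel, the switch treats it as one interface and drops the frame.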

Two LACP Teams on the Server Side

There can be only one LACP team from the server towards the upstream switch. If there are multiple LACP teams on the server, LACP flaps on the upstream switch.

Known Caveats

Here are the known caveats for the information in this document:

- Cisco bug ID CSCuf65032 - NIC team - P81E / VIC 1225 - Accepts traffic only on single DCE port

- Cisco bug ID CSCuh63745 - Support for LACP and active-active modes with the Win teaming driver

Related Information

- Cisco Integrated Management Controller - Configuration Guides

- Cisco UCS C-Series Servers Integrated Management Controller GUI Configuration Guide, Release 1.5 - Managing Network Adapters

- Cisco Unified Computing System Adapters

- Cisco Nexus 5500 Series NX-OS Interfaces Configuration Guide, Release 7.x

- Cisco UCS Virtual Interface Card Drivers for Windows Installation Guide

- VLAN Tricks with NICs - Teaming & Hyper-V in Windows Server 2012

- Technical Support & Documentation - Cisco Systems
