Second-Generation Intel Xeon Scalable Processor Refresh Selection Guide for VDI on Cisco UCS with VMware Horizon 7


Intel recently announced the availability of the second-generation Intel® Xeon® Scalable Family processor refresh. Intel sees disruptive and emerging technology trends across business, industry, science, and entertainment in an evolving digital world. According to Intel, by 2020, the success of half of the world’s Global 2000 companies will depend on their ability to create digitally enhanced products and services.

Intel suggests that this global transformation is rapidly increasing the demand for flexible computing, storage, and networking resources. Future workloads will require infrastructure that can scale quickly in response to changing demands.

The 2nd Gen Intel Xeon Scalable Refresh platform provides a strong foundation for that evolution. This refreshed processor line, designated with an “R” after the processor model number, enables new capabilities across computing, memory, storage, network, and security resources.

The Intel Xeon Scalable Family processor line introduced a new class of persistent memory, Intel® Optane™ DC persistent memory, designed for data-centric environments. Individual persistent memory modules can be as large as 512 GB, allowing up to 36 TB of system-level memory when combined with traditional DRAM.

Cisco has evaluated the refreshed processor line for use in virtual desktop infrastructure (VDI) to provide our customers with guidance about which processors provide the best starting price-to-performance ratio for three key benchmark workloads. These workloads represent three user personas and delivery mechanisms.

The main benefits of the 2nd Gen Intel Xeon Scalable Refresh Gold processor for VDI include the following:

● Intel Xeon Gold 6200R and 5200R series processors support higher memory speeds (2933 MHz for the 6200 series), enhanced memory capacity, and 4-socket scalability.

● Intel Xeon Gold 6200R and 5200R series processors support advanced reliability and hardware-enhanced security.

● The 2nd Gen Refresh platform offers higher core counts and processor frequencies, enabling higher user densities.

● Lower-core-count, higher-frequency processors deliver enhanced performance for professional graphics applications when coupled with server graphics processing units (GPUs).

This document provides an overview of the fifth-generation Cisco Unified Computing System™ (Cisco UCS®) product line, an update on the latest VMware ESXi and Horizon products that support the 2nd Gen Intel Xeon Scalable Refresh processors, and an overview of our selection process and test methodology.

The document concludes with guidance for the starting configuration of 2nd Gen Intel Xeon Scalable processors by user persona and delivery type.

The document begins with an overview of the Cisco UCS and VMware products used in the testing discussed in this document.

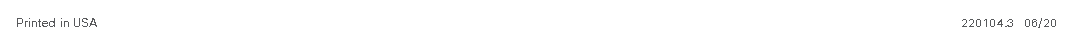

Cisco Unified Computing System

Cisco UCS is a next-generation data center platform that unites computing, networking, and storage access. The platform is optimized for virtual environments. It is designed using open industry-standard technologies and aims to reduce total cost of ownership (TCO) and increase business agility. The system integrates a low-latency, lossless 40 Gigabit Ethernet unified network fabric with enterprise-class x86-architecture servers. It is an integrated, scalable, multichassis platform in which all resources participate in a unified management domain (Figure 1).

Cisco UCS components

The main components of Cisco UCS are:

● Computing: The system is based on an entirely new class of computing system that incorporates blade servers and modular servers based on Intel processors.

● Network: The system is integrated on a low-latency, lossless, 40-Gbps unified network fabric. This network foundation consolidates LANs, SANs, and high-performance computing (HPC) networks, which are typically separate networks today. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables and by decreasing power and cooling requirements.

● Virtualization: The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

● Storage access: The system provides consolidated access to local storage, SAN storage, and network-attached storage (NAS) over the unified fabric. With storage access unified, Cisco UCS can access storage over Ethernet, Fibre Channel, Fibre Channel over Ethernet (FCoE), and Small Computer System Interface over IP (iSCSI) protocols. This capability provides customers with a choice for storage access and investment protection. In addition, server administrators can preassign storage-access policies for system connectivity to storage resources, simplifying storage connectivity and management and helping increase productivity.

● Management: Cisco UCS uniquely integrates all system components, enabling the entire solution to be managed as a single entity by Cisco UCS Manager. The manager has an intuitive GUI, a command-line interface (CLI), and a robust API for managing all system configuration processes and operations.

Cisco UCS is designed to deliver:

● Reduced TCO and increased business agility

● Increased IT staff productivity through just-in-time provisioning and mobility support

● A cohesive, integrated system that unifies the technology in the data center; the system is managed, serviced, and tested as a whole

● Scalability through a design for hundreds of discrete servers and thousands of virtual machines and the capability to scale I/O bandwidth to match demand

● Industry standards supported by a partner ecosystem of industry leaders

Cisco UCS Manager provides unified, embedded management of all software and hardware components of Cisco UCS through an intuitive GUI, a CLI, and an XML API. The manager provides a unified management domain with centralized management capabilities and can control multiple chassis and thousands of virtual machines. Tight integration between Cisco UCS Manager and NVIDIA GPU cards simplifies management of GPU firmware and graphics card configuration.

Cisco UCS 6454 Fabric Interconnect

The Cisco UCS 6454 Fabric Interconnect (Figure 2) is the management and communication backbone for Cisco UCS B-Series Blade Servers, C-Series Rack Servers, and 5100 Series Blade Server Chassis. All servers attached to 6454 Fabric Interconnects become part of one highly available management domain.

Because they support unified fabric, Cisco UCS 6400 Series Fabric Interconnects provide both LAN and SAN connectivity for all servers within their domains. For more details, see https://www.cisco.com/c/dam/en/us/products/collateral/servers-unified-computing/ucs-b-series-blade-servers/6400-specsheet.pdf.

The Cisco UCS 6454 Fabric Interconnect is a 1-rack-unit (1RU) top-of-rack (ToR) switch that mounts in a standard 19-inch rack such as the Cisco R Series rack. It provides these features and capabilities:

● Supports 10, 25, 40, and 100 Gigabit Ethernet

● Supports Fibre Channel over Ethernet (FCoE) and Fibre Channel, with up to 3.82 Tbps throughput and up to 54 ports

● Supports 16 unified ports (port numbers 1 to 16) that can operate as 10/25-Gbps SFP28 Ethernet ports or 8/16/32-Gbps Fibre Channel ports

● Supports 28 x 10/25-Gbps Ethernet SFP28 ports (port numbers 17 to 44)

● Supports 4 x 1/10/25-Gbps Ethernet SFP28 ports (port numbers 45 to 48)

● Supports 6 x 40/100-Gbps Ethernet QSFP28 uplink ports (port numbers 49 to 54)

● Supports FCoE on all Ethernet ports

● Provides ports capable of line-rate, low-latency, lossless 40 Gigabit Ethernet and FCoE

● Supports centralized unified management with Cisco UCS Manager

● Provides efficient cooling and serviceability

Figure 2. Cisco UCS 6454 Fabric Interconnect

For more information, see https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/datasheet-c78-741116.html.
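The port numbering in the feature list above can be cross-checked with a short sketch. The `PortGroup` class and the helper functions are hypothetical names used only for illustration; the ranges and speeds are transcribed from the feature list, and the sketch simply confirms that the four groups cover ports 1 to 54 without gaps or overlaps.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortGroup:
    """A contiguous range of front-panel ports on the 6454 (illustrative)."""
    first: int         # first port number in the group (inclusive)
    last: int          # last port number in the group (inclusive)
    description: str

    @property
    def count(self) -> int:
        return self.last - self.first + 1


# Port ranges and speeds transcribed from the feature list above.
PORT_GROUPS = [
    PortGroup(1, 16, "unified: 10/25-Gbps Ethernet or 8/16/32-Gbps Fibre Channel"),
    PortGroup(17, 44, "10/25-Gbps Ethernet SFP28"),
    PortGroup(45, 48, "1/10/25-Gbps Ethernet SFP28"),
    PortGroup(49, 54, "40/100-Gbps Ethernet QSFP28 uplink"),
]


def is_contiguous(groups: list[PortGroup]) -> bool:
    """True if the groups start at port 1 and have no gaps or overlaps."""
    expected = 1
    for group in groups:
        if group.first != expected:
            return False
        expected = group.last + 1
    return True


def group_for_port(port: int) -> PortGroup:
    """Return the group that a given port number belongs to."""
    for group in PORT_GROUPS:
        if group.first <= port <= group.last:
            return group
    raise ValueError(f"port {port} is outside the range 1-54")


total_ports = sum(group.count for group in PORT_GROUPS)
print(total_ports, is_contiguous(PORT_GROUPS))  # 54 True
print(group_for_port(47).description)           # 1/10/25-Gbps Ethernet SFP28
```

The total of 54 matches the "up to 54 ports" figure in the list.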

Cisco UCS B-Series Blade Servers

Cisco UCS B-Series Blade Servers are based on Intel Xeon processors. They work with virtualized and nonvirtualized applications to increase performance, energy efficiency, flexibility, and administrator productivity. Cisco UCS servers offer a balance between the need for servers with smaller form factors and the need for greater server density per rack. Cisco UCS blade servers deliver a high-performance virtual client computing end-user experience.

Cisco UCS B200 M5 Blade Server

Delivering performance, versatility, and density without compromise, the Cisco UCS B200 M5 Blade Server (Figure 3) addresses a broad set of workloads, from IT and web infrastructure to distributed databases. The enterprise-class B200 M5 Blade Server extends the capabilities of the Cisco UCS portfolio in a half-width blade form factor. The B200 M5 harnesses the power of the latest Intel Xeon Scalable CPUs with up to 3072 GB of RAM (using 128-GB DIMMs), two solid-state disks (SSDs) or hard-disk drives (HDDs), and connectivity with throughput of up to 80 Gbps.

The Cisco UCS B200 M5 server mounts in a Cisco UCS 5100 Series Blade Server Chassis or Cisco UCS Mini blade server chassis. It has 24 total slots for error-correcting code (ECC) registered DIMMs (RDIMMs) or load-reduced DIMMs (LR DIMMs). It supports one connector for the Cisco UCS Virtual Interface Card (VIC) 1340 adapter, which provides Ethernet and FCoE connectivity.

The B200 M5 has one rear mezzanine adapter slot, which can be configured with a Cisco UCS port expander card for additional connectivity bandwidth. The port expander enables an additional four ports of the VIC 1340, bringing the total capability of the VIC 1340 to a dual native 40-Gbps interface or a dual 4 x 10 Gigabit Ethernet port-channel interface. Alternatively, the same rear mezzanine adapter slot can be configured with an NVIDIA P6 GPU.

The B200 M5 also has one front mezzanine slot and can be ordered with or without a front mezzanine card. The front mezzanine card can accommodate a storage controller or an NVIDIA P6 GPU.

For more information, see https://www.cisco.com/c/dam/en/us/products/collateral/servers-unified-computing/ucs-b-series-blade-servers/b200m5-specsheet.pdf.
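As a quick sanity check on the B200 M5 maximums, the 3072-GB memory figure is 24 slots multiplied by 128-GB DIMMs, and the 80-Gbps figure appears to correspond to the VIC 1340 with the port expander (two 40-Gbps interfaces, or two 4 x 10-Gbps port channels). The sketch below assumes identical DIMMs in every slot; the function names are illustrative only.

```python
# Rough maximums for the B200 M5, using only figures quoted above.
DIMM_SLOTS = 24        # ECC RDIMM/LRDIMM slots
DIMM_SIZE_GB = 128     # DIMM size behind the 3072-GB figure


def max_memory_gb(slots: int, dimm_gb: int) -> int:
    """Capacity with every slot populated by identical DIMMs."""
    return slots * dimm_gb


def aggregate_gbps(links: int, link_speed_gbps: int) -> int:
    """Total throughput across a set of equal-speed links."""
    return links * link_speed_gbps


print(max_memory_gb(DIMM_SLOTS, DIMM_SIZE_GB))  # 3072
# VIC 1340 plus port expander: dual native 40-Gbps interfaces ...
print(aggregate_gbps(2, 40))                    # 80
# ... or two port channels of 4 x 10 Gigabit Ethernet each.
print(aggregate_gbps(2 * 4, 10))                # 80
```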

Figure 3. Cisco UCS B200 M5 Blade Server (front view)

Cisco UCS B480 M5 Blade Server

The enterprise-class Cisco UCS B480 M5 Blade Server (Figure 4) delivers market-leading performance, versatility, and density without compromise for memory-intensive mission-critical enterprise applications and virtualized workloads, among others.

With the B480 M5, you can quickly deploy stateless physical and virtual workloads with the programmability that Cisco UCS Manager and Cisco® SingleConnect technology enable. Customers gain support for Intel Xeon Scalable processors, new 2nd Gen Intel Xeon Scalable processors, higher-density 256-GB DDR4 DIMMs, and the new Intel Optane DC persistent memory. The B480 M5 offers up to 12 TB of DDR4 memory, or 18 TB using 24 x 256-GB DDR4 DIMMs and 24 x 512-GB Intel Optane DC persistent memory modules; four SAS, SATA, and Non-Volatile Memory Express (NVMe) drives; M.2 storage; up to four GPUs; and 160 Gigabit Ethernet connectivity. Together, these features provide the performance, flexibility, and I/O throughput to run your most demanding applications.

The B480 M5 is a full-width blade server supported by the Cisco UCS 5108 Blade Server Chassis. The 5108 chassis and the Cisco UCS B-Series Blade Servers provide inherent architectural advantages:

● Eliminates the need to power, cool, manage, and purchase excess switches (management, storage, and networking), host bus adapters (HBAs), and network interface cards (NICs) in each blade chassis

● Reduces TCO by removing management modules from the chassis, making the chassis stateless

● Provides a single, highly available Cisco UCS management domain for all system chassis and rack servers, reducing administrative tasks

The Cisco UCS B480 M5 Blade Server delivers flexibility, density, and expandability in a 4-socket, full-width form factor for enterprise and mission-critical applications. It offers:

● Four new 2nd Gen Intel Xeon Scalable CPUs (up to 28 cores per socket)

● Four first-generation Intel Xeon Scalable CPUs (up to 28 cores per socket)

● Support for higher-density DDR4 memory: from 6 TB (128-GB DDR4 DIMMs) to 12 TB (256-GB DDR4 DIMMs)

● Increased memory speeds: from 2666 MHz to 2933 MHz

● Intel Optane DC persistent memory modules (DCPMMs): 128, 256, and 512 GB

● Up to 18 TB using 24 x 256-GB DDR4 DIMMs and 24 x 512-GB Intel Optane DCPMMs

● Cisco FlexStorage storage subsystem

● Five mezzanine adapters and support for up to four NVIDIA GPUs

● Cisco UCS VIC 1340 modular LAN on motherboard (mLOM) and upcoming fourth-generation VIC mLOM

● Internal Secure Digital (SD) and M.2 boot options

Note: Each Intel Optane DCPMM requires a DDR4 DIMM for deployment (for example, 12 Intel Optane DCPMMs require 12 DDR4 DIMMs). All persistent memory modules must be the same size, and all DDR4 DIMMs must be the same size, but the persistent memory modules and the DIMMs can differ in size from each other.
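The population rules in the note can be expressed as a small validation function. This is a simplified sketch: `validate_pmem_config` is a hypothetical helper that checks only the three rules stated in the note (a DIMM for each DCPMM, one DIMM size, one DCPMM size) and ignores slot placement and any other platform-specific population rules, so it is no substitute for the B480 M5 spec sheet. It also reproduces the 18-TB maximum, treating 1 TB as 1024 GB.

```python
def validate_pmem_config(dimms_gb: list[int], pmems_gb: list[int]) -> int:
    """Apply the DCPMM population rules from the note; return total GB.

    Encoded rules: each DCPMM needs a DDR4 DIMM, all DDR4 DIMMs are one
    size, and all DCPMMs are one size (the two sizes may differ).
    Slot placement and other platform rules are NOT modeled.
    """
    if len(pmems_gb) > len(dimms_gb):
        raise ValueError("each DCPMM requires a DDR4 DIMM")
    if len(set(dimms_gb)) > 1:
        raise ValueError("all DDR4 DIMMs must be the same size")
    if len(set(pmems_gb)) > 1:
        raise ValueError("all DCPMMs must be the same size")
    return sum(dimms_gb) + sum(pmems_gb)


# B480 M5 maximum quoted above: 24 x 256-GB DIMMs + 24 x 512-GB DCPMMs.
total_gb = validate_pmem_config([256] * 24, [512] * 24)
print(total_gb, total_gb / 1024)  # 18432 18.0

# Twelve DCPMMs with only six DIMMs breaks the pairing rule.
try:
    validate_pmem_config([256] * 6, [512] * 12)
except ValueError as err:
    print(err)  # each DCPMM requires a DDR4 DIMM
```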

Figure 4. Cisco UCS B480 M5 Blade Server (front view)

Cisco UCS C-Series Rack Servers

Cisco UCS C-Series Rack Servers keep pace with Intel Xeon processor innovation by offering the latest processors with an increase in processor frequency and improved security and availability features. With the increased performance provided by the Intel Xeon Scalable processors, Cisco UCS C-Series servers offer an improved price-to-performance ratio. They also extend Cisco UCS innovations to an industry-standard rack-mount form factor, including a standards-based unified network fabric, Cisco VN-Link virtualization support, and Cisco Extended Memory Technology.

Designed to operate both in standalone environments and as part of a Cisco UCS managed configuration, these servers enable organizations to deploy systems incrementally—using as many or as few servers as needed—on a schedule that best meets the organization’s timing and budget. Cisco UCS C-Series servers offer investment protection through the capability to deploy them either as standalone servers or as part of Cisco UCS.

One compelling reason that many organizations prefer rack-mount servers is the wide range of I/O options available in the form of PCI Express (PCIe) adapters. Cisco UCS C-Series servers support a broad range of I/O options, including interfaces supported by Cisco as well as adapters from third parties.

The Cisco UCS C220 M5 Rack Server (Figures 5 and 6) is among the most versatile general-purpose enterprise infrastructure and application servers in the industry. It is a high-density 2-socket rack server that delivers industry-leading performance and efficiency for a wide range of workloads, including virtualization, collaboration, and bare-metal applications. The Cisco UCS C-Series Rack Servers can be deployed as standalone servers or as part of Cisco UCS to take advantage of Cisco’s standards-based unified computing innovations that help reduce customers’ TCO and increase their business agility.

The Cisco UCS C220 M5 server extends the capabilities of the Cisco UCS portfolio in a 1RU form factor. It incorporates the Intel Xeon Scalable processors, supporting up to 20 percent more cores per socket, twice the memory capacity, 20 percent greater storage density, and five times more PCIe NVMe SSDs compared to the previous generation of servers. These improvements deliver significant performance and efficiency gains for your applications. The C220 M5 delivers outstanding levels of expandability and performance in a compact package, with:

● The latest 2nd Gen Intel Xeon Scalable CPUs, with up to 28 cores per socket

● Support for the first generation of Intel Xeon Scalable CPUs, with up to 28 cores per socket

● Up to 24 DDR4 DIMMs for improved performance

● Support for Intel Optane DC persistent memory (128, 256, and 512 GB)

● Up to 10 small-form-factor (SFF) 2.5-inch drives or 4 large-form-factor (LFF) 3.5-inch drives (77 TB of storage capacity with all NVMe PCIe SSDs)

● Support for a 12-Gbps SAS modular RAID controller in a dedicated slot, leaving the remaining PCIe Generation 3.0 slots available for other expansion cards

● mLOM slot that can be used to install a Cisco UCS VIC without consuming a PCIe slot

● Dual embedded Intel X550 10GBASE-T LAN-on-motherboard (LOM) ports

Note: Each Intel Optane DCPMM requires a DDR4 DIMM for deployment (for example, 12 Intel Optane DCPMMs require 12 DDR4 DIMMs). All persistent memory modules must be the same size, and all DDR4 DIMMs must be the same size, but the persistent memory modules and the DIMMs can differ in size from each other.

Figure 5. Cisco UCS C220 M5 Rack Server

Figure 6. Cisco UCS C220 M5 Rack Server (rear view)

The server includes two PCIe 3.0 slots plus one dedicated 12-Gbps RAID controller slot and one dedicated mLOM slot for the Cisco VIC converged network adapter (CNA).

The Cisco UCS C240 M5 Rack Server (Figures 7 and 8 and Table 1) is designed for both performance and expandability over a wide range of storage-intensive infrastructure workloads, from big data to collaboration.

The C240 M5 SFF server extends the capabilities of the Cisco UCS portfolio in a 2RU form factor with the addition of the Intel Xeon Scalable processor family, 24 DIMM slots for 2666-MHz DDR4 DIMMs with capacities of up to 128 GB, up to 6 PCIe 3.0 slots, and up to 26 internal SFF drives. The C240 M5 SFF server also includes one dedicated internal slot for a 12-Gbps SAS storage controller card. The C240 M5 server includes a dedicated internal mLOM slot for installation of a Cisco VIC or third-party network interface card (NIC) without consuming a PCIe slot, in addition to two 10GBASE-T Intel X550 embedded (on the motherboard) LOM ports.

In addition, the C240 M5 offers outstanding levels of internal memory and storage expandability with exceptional performance. It delivers:

● Up to 24 DDR4 DIMMs at speeds up to 2666 MHz for improved performance and lower power consumption

● One or two Intel Xeon Scalable CPUs

● Up to six PCIe 3.0 slots (four full-height, full-length for GPU)

● Six hot-swappable fans for front-to-rear cooling

● Twenty-four SFF front-facing SAS/SATA HDDs or SAS/SATA SSDs

● Optionally, up to two front-facing SFF NVMe PCIe SSDs (replacing SAS/SATA drives); these drives must be placed in front drive bays 1 and 2 only and are controlled from Riser 2, Option C

● Optionally, up to two rear-facing SFF SAS/SATA HDDs or SSDs, or up to two rear-facing SFF NVMe PCIe SSDs

◦ Rear-facing SFF NVMe drives connected from Riser 2, Option B or C

◦ Support for 12-Gbps SAS drives

● Dedicated mLOM slot on the motherboard, which can flexibly accommodate the following cards:

◦ Cisco VICs

◦ Quad-port Intel i350 1 Gigabit Ethernet RJ-45 mLOM NIC

● Two 1 Gigabit Ethernet embedded LOM ports

● Support for up to two double-wide NVIDIA GPUs, providing a graphics-rich experience to more virtual users

● Excellent reliability, availability, and serviceability (RAS) features with tool-free CPU insertion, easy-to-use latching lid, and hot-swappable and hot-pluggable components

● One slot for a MicroSD card on PCIe Riser 1 (Options 1 and 1B)

◦ The MicroSD card serves as a dedicated local resource for utilities such as the Cisco Host Upgrade Utility (HUU).

◦ Images can be pulled from a file share (Network File System [NFS] or Common Internet File System [CIFS]) and uploaded to the cards for future use.

● A mini-storage module connector on the motherboard that supports either:

◦ SD card module with two SD card slots; mixing different-capacity SD cards is not supported

◦ M.2 module with two SATA M.2 SSD slots; mixing different-capacity M.2 modules is not supported

Note: SD cards and M.2 modules cannot be mixed. RAID 1 on M.2 modules is not supported with VMware; it is supported only with Microsoft Windows and Linux.

The C240 M5 also increases performance and customer choice over many types of storage-intensive applications such as:

● Collaboration

● Small and medium-sized business (SMB) databases

● Big data infrastructure

● Virtualization and consolidation

● Storage servers

● High-performance appliances

The C240 M5 can be deployed as a standalone server or as part of a Cisco UCS managed domain. Cisco UCS unifies computing, networking, management, virtualization, and storage access into a single integrated architecture that enables end-to-end server visibility, management, and control in both bare-metal and virtualized environments. Within a Cisco UCS deployment, the C240 M5 takes advantage of Cisco’s standards-based unified computing innovations, which significantly reduce customers’ TCO and increase business agility.

For more information about the Cisco UCS C240 M5 Rack Server, see https://www.cisco.com/c/dam/en/us/products/collateral/servers-unified-computing/ucs-c-series-rack-servers/c240m5-sff-specsheet.pdf.

Figure 7. Cisco UCS C240 M5 Rack Server

Figure 8. Cisco UCS C240 M5 Rack Server (rear view)

Table 1. Cisco UCS C240 M5 PCIe slots

| PCIe slot | Length | Lanes |
|---|---|---|
| 1 | Half | x8 |
| 2 | Full | x16 |
| 3 | Half | x8 |
| 4 | Half | x8 |
| 5 | Full | x16 |
| 6 | Full | x8 |
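To relate Table 1 to the GPU support listed for the C240 M5, the slots can be filtered by length and lane width. The filter assumes, for illustration only, that a double-wide GPU needs a full-length x16 slot; under that assumption it returns slots 2 and 5, which is consistent with the two-GPU maximum stated for the C240 M5. The function name is hypothetical; check the C240 M5 spec sheet for the supported riser and GPU combinations.

```python
# Table 1 transcribed as (slot, length, lanes).
PCIE_SLOTS = [
    (1, "Half", 8),
    (2, "Full", 16),
    (3, "Half", 8),
    (4, "Half", 8),
    (5, "Full", 16),
    (6, "Full", 8),
]


def gpu_candidate_slots(slots, min_lanes: int = 16) -> list[int]:
    """Slots that are full length and offer at least `min_lanes` lanes.

    Assumption (not from the table): a double-wide GPU needs a full-length
    x16 slot.
    """
    return [slot for slot, length, lanes in slots
            if length == "Full" and lanes >= min_lanes]


print(gpu_candidate_slots(PCIE_SLOTS))               # [2, 5]
print(sum(lanes for _, _, lanes in PCIE_SLOTS))      # 64
```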

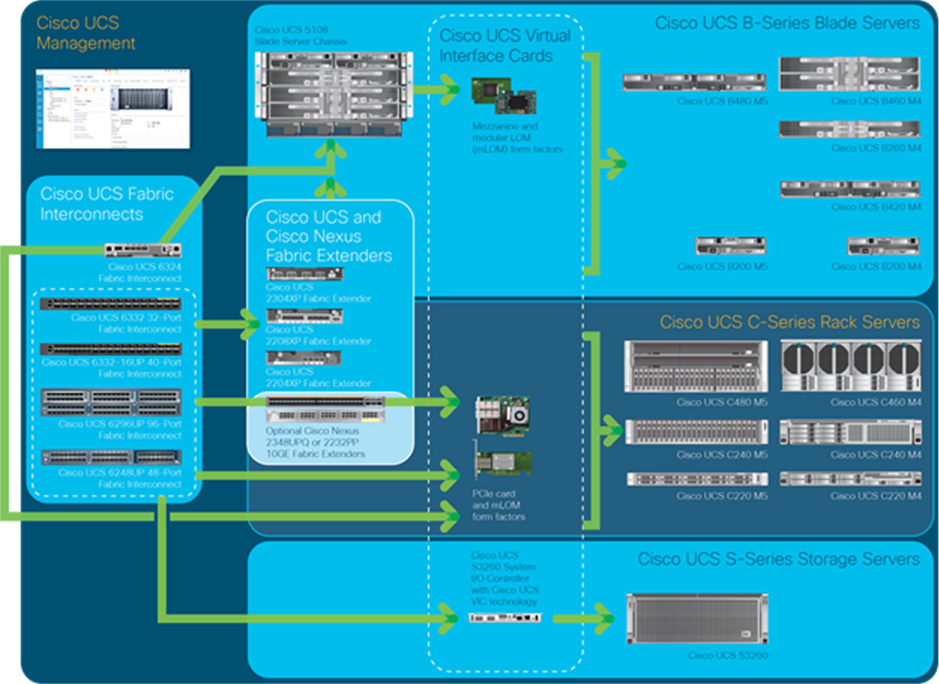

Cisco UCS virtual interface cards

Whether you are using blade, rack, or storage servers from Cisco, the Cisco UCS VIC (Figure 9) provides optimal connectivity.

Figure 9. Cisco VICs for Cisco UCS blade and rack servers

For more information, see https://www.cisco.com/c/en/us/products/interfaces-modules/unified-computing-system-adapters/models-comparison.html.

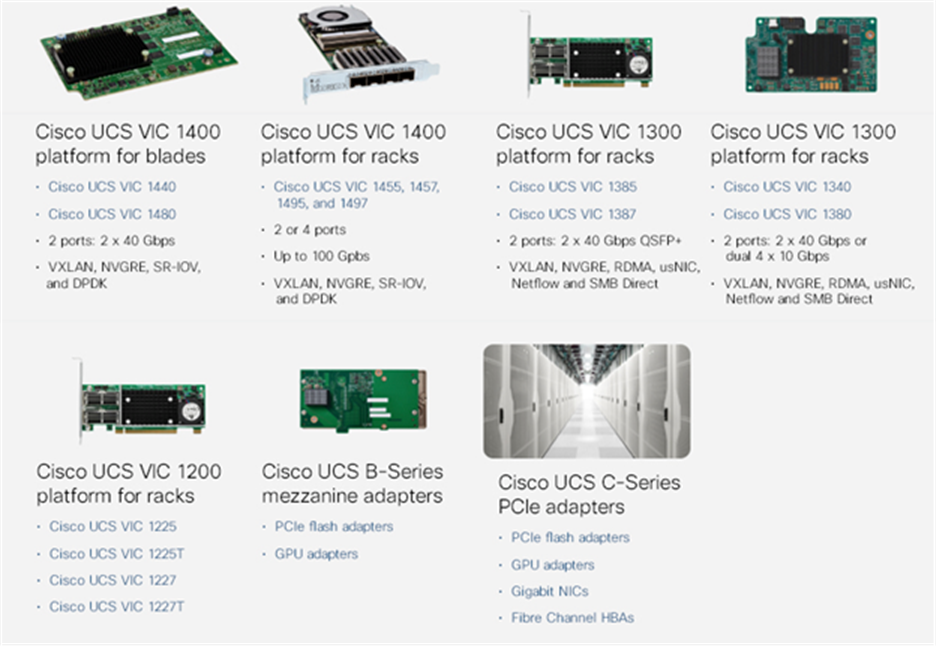

The Cisco UCS VIC 1340 (Figure 10) is a 2-port 40-Gbps Ethernet or dual 4 x 10-Gbps Ethernet, FCoE-capable mLOM designed exclusively for the M5 generation of Cisco UCS B-Series Blade Servers. When used in combination with an optional port expander, the VIC 1340 is enabled for two ports of 40-Gbps Ethernet. The VIC 1340 enables a policy-based, stateless, agile server infrastructure that can present more than 256 PCIe standards-compliant interfaces to the host that can be dynamically configured as either NICs or HBAs. In addition, the VIC 1340 supports Cisco Virtual Machine Fabric Extender (VM-FEX) technology, which extends the Cisco UCS fabric interconnect ports to virtual machines, simplifying server virtualization deployment and management.

For more information, see https://www.cisco.com/c/en/us/products/collateral/interfaces-modules/ucs-virtual-interface-card-1340/datasheet-c78-732517.html.

Figure 10. Cisco UCS VIC 1340

The Cisco UCS VIC 1387 (Figure 11) is a dual-port Enhanced Quad Small Form-Factor Pluggable (QSFP+) 40-Gbps Ethernet and FCoE-capable PCIe mLOM adapter installed in Cisco UCS C-Series Rack Servers. The mLOM slot can be used to install a Cisco VIC without consuming a PCIe slot, which provides greater I/O expandability. It incorporates next-generation CNA technology from Cisco, providing investment protection for future feature releases. The card enables a policy-based, stateless, agile server infrastructure that can present more than 256 PCIe standards-compliant interfaces to the host that can be dynamically configured as either NICs or HBAs. The personality of the card is determined dynamically at boot time using the service profile associated with the server. The number, type (NIC or HBA), identity (MAC address and World Wide Name [WWN]), failover policy, bandwidth, and quality-of-service (QoS) policies of the PCIe interfaces are all determined using the service profile.

For more information, see https://www.cisco.com/c/en/us/products/interfaces-modules/ucs-virtual-interface-card-1387/index.html.
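The idea that the card's personality comes from the service profile can be illustrated with a toy data model. `ServiceProfile` and `VirtualInterface` are hypothetical classes, not the Cisco UCS Manager XML API object model, and the MAC addresses and WWN are made-up sample values. The profile carries the type, identity, failover, bandwidth, and QoS settings of each interface, and the card's NIC and HBA mix is derived from it.

```python
from dataclasses import dataclass, field
from typing import Literal, Optional


@dataclass
class VirtualInterface:
    """One PCIe interface the VIC presents to the host (illustrative model)."""
    name: str
    kind: Literal["nic", "hba"]
    identity: str                     # MAC address for a NIC, WWN for an HBA
    fabric_failover: bool = False
    bandwidth_gbps: Optional[int] = None
    qos_policy: str = "default"


@dataclass
class ServiceProfile:
    """Hypothetical stand-in for a UCS service profile; not the UCS Manager API."""
    name: str
    interfaces: list[VirtualInterface] = field(default_factory=list)

    def personality(self) -> dict[str, int]:
        """Summarize how many NICs and HBAs the card would expose at boot."""
        summary = {"nic": 0, "hba": 0}
        for vif in self.interfaces:
            summary[vif.kind] += 1
        return summary


profile = ServiceProfile(
    name="vdi-host-01",
    interfaces=[
        VirtualInterface("eth0", "nic", "00:25:B5:00:00:01", fabric_failover=True),
        VirtualInterface("eth1", "nic", "00:25:B5:00:00:02", qos_policy="vmotion"),
        VirtualInterface("fc0", "hba", "20:00:00:25:B5:00:00:01"),
    ],
)
print(profile.personality())  # {'nic': 2, 'hba': 1}
```

Because the identity values live in the profile rather than in the adapter hardware, associating the same profile with a replacement server would present the same interfaces to the host, which is the stateless behavior described for the VIC.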

Figure 11. Cisco UCS VIC 1387

The Cisco UCS VIC 1457 (Figure 12) is a quad-port SFP28 mLOM card designed for the M5 generation of Cisco UCS C-Series Rack Servers. The card supports 10 and 25 Gigabit Ethernet and FCoE. The card can present PCIe standards-compliant interfaces to the host, and these can be dynamically configured as either NICs or HBAs.

The Cisco UCS VIC 1497 (Figure 13) is a dual-port QSFP28 mLOM card designed for the M5 generation of Cisco UCS C-Series Rack Servers. The card supports 40 and 100 Gigabit Ethernet and FCoE. The card can present PCIe standards-compliant interfaces to the host, and these can be dynamically configured as NICs or HBAs.

Figure 13. Cisco UCS VIC 1497

VMware vCenter Server 6.7 Update 3

VMware vCenter Server 6.7 Update 3 provides a number of new features:

● vCenter Server 6.7 Update 3 supports a dynamic relationship between the IP address settings of a vCenter Server Appliance and a DNS server by using the Dynamic Domain Name Service (DDNS). The DDNS client in the appliance automatically sends secure updates to DDNS servers at scheduled intervals.

● With vCenter Server 6.7 Update 3, you can configure virtual machines and templates with up to four NVIDIA virtual GPU (vGPU) devices to cover use cases requiring multiple GPU accelerators attached to a virtual machine. To use the VMware vMotion vGPU feature, you must set the vgpu.hotmigrate.enabled advanced setting to true and make sure that both your vCenter Server and ESXi hosts are running vSphere 6.7 Update 3.

● vMotion movement of multiple GPU-accelerated virtual machines may fail gracefully under heavy GPU workload due to the maximum switchover time of 100 seconds. To avoid this failure, either increase the maximum allowable switchover time or wait until the virtual machine is performing a less-intensive GPU workload.

● With vCenter Server 6.7 Update 3, you can change the primary network identifier (PNID) of your vCenter Server Appliance. You can change the vCenter Server Appliance fully qualified domain name (FQDN) or host name, and also modify the IP address configuration of the virtual machine management network (NIC 0). For more information, see this VMware blog post.

● With vCenter Server 6.7 Update 3, if the overall health status of a VMware vSAN cluster is red, APIs to configure or extend hyperconverged infrastructure (HCI) clusters throw InvalidState exceptions to prevent further configuration or extension. This fix aims to resolve situations in which mixed versions of ESXi hosts in an HCI cluster may cause vSAN network partitions.

● vCenter Server 6.7 Update 3 adds new Sandy Bridge microcode to the cpu-microcode vSphere Information Bundle (VIB) to bring Sandy Bridge security up to par with other CPUs and fix per–virtual machine Enhanced vMotion Compatibility (EVC) support. For more information, see VMware knowledge base article 1003212.

● With vCenter Server 6.7 Update 3, you can configure the property config.vpxd.macAllocScheme.method in the vCenter Server configuration file, vpxd.cfg, to allow sequential selection of MAC addresses from MAC address pools. The default option for random selection does not change. Modifying the MAC address allocation policy does not affect MAC addresses for existing virtual machines.

● vCenter Server 6.7 Update 3 adds a representational state transfer (REST) API that you can run from the vSphere Client to converge instances of the vCenter Server Appliance with an external VMware Platform Services Controller instance into a vCenter Server Appliance with an embedded Platform Services Controller connected in embedded linked mode. For more information, see the vCenter Server Installation and Setup guide.

● vCenter Server 6.7 Update 3 integrates the VMware Customer Experience Improvement Program (CEIP) into the converged utility.

● vCenter Server 6.7 Update 3 adds a Simple Object Access Protocol (SOAP) API to track the status of encryption keys. With the API, you can see whether the crypto key is available in a vCenter Server system or is used by virtual machines as a host key or by third-party programs.

● vCenter Server 6.7 Update 3 provides a precheck feature for when you are upgrading a vCenter Server system to help ensure upgrade compatibility of the VMware vCenter single sign-on (SSO) service registration endpoints. This check reports possible mismatches with the present machine vCenter SSO certificates before the start of an upgrade and prevents upgrade interruptions that require manual workarounds and cause downtime.

● vCenter Server 6.7 Update 3 improves VMware vCenter SSO auditing by adding events for the following operations: user management, login, group creation, identity source, and policy updates. The new feature is available only for the vCenter Server Appliance with an embedded Platform Services Controller and not for vCenter Server for Microsoft Windows or a vCenter Server Appliance with an external Platform Services Controller. Supported identity sources are vsphere.local, Integrated Windows Authentication (IWA), and Microsoft Active Directory over Lightweight Directory Access Protocol (LDAP).

● vCenter Server 6.7 Update 3 introduces Virtual Hardware Version 15, which adds support for creation of virtual machines with up to 256 virtual CPUs (vCPUs). For more information, see VMware knowledge base articles 1003746 and 2007240.

● The vCenter Server Appliance Management Interface in vCenter Server 6.7 Update 3 adds version details to the “Enter backup details” page that help you choose the correct build to restore the backup file, simplifying the restore process.

● With vCenter Server 6.7 Update 3, you can use the Network File System (NFS) and Server Message Block (SMB) protocols for file-based backup and restore operations on the vCenter Server Appliance. The use of NFS and SMB protocols for restore operations is supported only with the vCenter Server Appliance CLI installer.

● vCenter Server 6.7 Update 3 adds events for changes of permissions on tags and categories, vCenter Server objects, and global permissions. The events specify the user who initiated the changes.

● With vCenter Server 6.7 Update 3, you can create alarm definitions to monitor the backup status of your system. By setting a Backup Status alarm, you can receive email notifications, send Simple Network Management Protocol (SNMP) traps, and run scripts triggered by events such as “Backup job failed” and “Backup job finished successfully.” A “Backup job failed” event sets the alarm status to red, and “Backup job finished successfully” resets the alarm to green.

● With vCenter Server 6.7 Update 3, in clusters with the Enterprise edition of VMware vSphere remote office and branch office (ROBO) configured to support vSphere Distributed Resource Scheduler maintenance mode (DRS-MM), when an ESXi host enters maintenance mode, all virtual machines running on the host are moved to other hosts in the cluster. Automatic virtual machine and host affinity rules help ensure that the moved virtual machines return to the same ESXi hosts when they exit maintenance mode.

● With vCenter Server 6.7 Update 3, events related to adding, removing, or modifying user roles specify the user that initiated the changes.

● With vCenter Server 6.7 Update 3, you can publish your .vmtx templates directly from a published library to multiple subscribers in a single action instead of needing to perform a synchronization operation from each subscribed library individually. The published and subscribed libraries must be in the same linked vCenter Server system, regardless of whether they are on the premises, in the cloud, or in a hybrid environment. Workflow for other templates in content libraries does not change.

● vCenter Server 6.7 Update 3 adds an alert to specify the installer version in the “Enter backup details” step of a restore operation. If the installer and backup versions are not identical, you see a prompt that indicates which matching build to download: for example, “Launch the installer that corresponds with version 6.8.2 GA.”

● vCenter Server 6.7 Update 3 adds support for a Swedish keyboard in the vSphere Client and VMware Host Client. For known issues related to the keyboard mapping, see VMware knowledge base article 2149039.

● With vCenter Server 6.7 Update 3, the vSphere Client provides a check box “Check host health after installation” that allows you to opt out of VMware vSAN health checks during the upgrade of an ESXi host using the vSphere Update Manager. Before this option was introduced, if vSAN issues were detected during an upgrade, the entire cluster remediation failed, and the ESXi host that was upgraded remained in maintenance mode.

● vCenter Server 6.7 Update 3 raises an alarm in the vSphere Client when the vSphere health check detects a new issue in your environment and prompts you to resolve the issue. Health check results are now grouped into categories for better visibility.

● With vCenter Server 6.7 Update 3, you can publish virtual machine templates managed by a content library from a published library to multiple subscribers. You can trigger this action from the published library, which gives you greater control over the distribution of virtual machine templates. The published and subscribed libraries must be in the same linked vCenter Server system, regardless of whether they are on the premises, in the cloud, or in a hybrid environment. Workflow for other templates in content libraries does not change.

VMware Horizon 7.11 provides several new features and enhancements. This information is grouped here by installable component.

For information about the issues resolved in this release, see the “Resolved Issues” section of the VMware Horizon 7.11 release notes.

Horizon Connection Server offers these new features:

● Horizon Administrator

◦ To identify the Horizon 7 pod you are working with, Horizon Administrator displays the pod name in the Horizon Administrator header and in the Web browser tab.

◦ You can monitor the system health of VMware Unified Access Gateway Version 3.4 or later from Horizon Administrator.

◦ You can view the connected user when you verify user assignment for desktop pools.

● Horizon Console (HTML5-based Web interface)

◦ You can manage View Composer linked-clone desktop pools.

◦ You can manage manual desktop pools.

◦ You can manage persistent disks for linked-clone desktop pools in Horizon Console.

● Horizon Help Desk Tool

◦ You can end an application process running on a Remote Desktop Services (RDS) host for a specific user in the Horizon Help Desk Tool.

● VMware Cloud Pod Architecture

◦ When you create a global application entitlement, you can specify whether users can start multiple sessions of the same published application on different client devices. This feature is called multisession mode.

◦ When you create a shortcut for a global entitlement, you can configure up to four subfolders.

◦ You can use a single vCenter Server instance with multiple pods in a Cloud Pod Architecture environment.

● VMware published desktops and applications

◦ A farm can contain up to 500 RDS host servers.

◦ For published applications, users can configure the multisession mode to use multiple instances of the same published application on different client devices. For published desktop pools, users can initiate separate sessions from different client devices.

◦ You can set the RDS host in the drain mode state or in the drain mode until restart state. Horizon Agent communicates the status of the RDS host to the Horizon Connection Server. You can monitor the status of the RDS host in the Horizon Administrator.

◦ After you create an unauthenticated access user, you can enable hybrid logon for that user. Enabling hybrid logon gives unauthenticated access users domain access to network resources such as file shares and network printers without the need to enter credentials.

● vSphere support

◦ vSphere 6.7 Update 3 and vSAN 6.7 Update 3 are supported for virtual desktops.

◦ VMware vMotion support is provided for vGPU-enabled automated desktop pools that contain instant-clone, linked-clone, and full-clone virtual machines.

Horizon Agent for Linux offers these new features:

● Single sign-on support on additional platforms: SSO is now supported on SUSE Linux Enterprise Desktop (SLED) and SUSE Linux Enterprise Server (SLES) 12.0 SP1, SP2, and SP3 desktops.

● Audio-in support on additional platforms: Audio-in is now supported on SLED 11 SP4 x64 and SLED and SLES 12 SP3 x64 desktops.

● Instant-clone floating desktop pool support on additional platforms: Instant-clone floating desktop pool support is now available on SLED and SLES 11 and 12.0 or later desktops.

● Session collaboration: When the session collaboration feature is enabled for a remote Linux desktop, you can invite other users to join an existing remote desktop session or you can join a collaborative session when you receive an invitation from another user.

● Instant-clone offline domain join using Samba: Instant-cloned Linux desktops can perform an offline domain join operation with Active Directory using Samba. This feature is supported only on Ubuntu 14.04, 16.04, and 18.04; Red Hat Enterprise Linux (RHEL) 6.9 and 7.3; CentOS 6.9 and 7.3; and SLED 11 SP4 and SLED 12.

Horizon Agent offers these new features:

● Performance of client drive redirection over a VMware Virtual Channel (VVC) or BEAT side channel has improved when copying a large number of small files and when indexing a folder that contains a large number of files.

● Horizon 7.11 supports VMware Virtualization Pack for Skype for Business in an IPv6 environment.

● When client drive redirection is enabled, you can drag and drop files and folders between the client system and remote desktops and published applications.

● The VMware Virtual Print feature enables users to print to any printer available on their Microsoft Windows client computers. Virtual Print supports client printer redirection, location-based printing, and a persistent print setting.

● You can add a Virtual Trusted Platform Module (vTPM) device to instant-clone desktop pools and add or remove a vTPM during a push-image operation.

● Microsoft Windows Server 2019 is supported for RDS hosts and virtual desktops.

● The Virtual Print feature is supported for published desktops and published applications that are deployed on RDS hosts that are physical machines.

● The clipboard audit feature is supported for all Horizon Client platforms. With the clipboard audit feature, Horizon Agent records information about copy and paste activity between the client and agent in an event log on the agent machine. To enable the clipboard audit feature, you configure the VMware Blast or PC over IP (PCoIP) “Configure clipboard audit” group policy setting.

● Physical PCs and workstations with Microsoft Windows 10 Version 1803 Enterprise or later can be brokered through Horizon 7 using the Blast Extreme protocol.

Horizon Group Policy Object Bundle

The Horizon Group Policy Object (GPO) Bundle offers these new features:

● You can configure group policy settings for the VMware Virtual Print Redirection feature.

● You can configure group policy settings to specify the direction of the drag-and-drop feature.

For information about new features in Horizon Client 5.3, including HTML Access 5.3, see the Horizon Clients Documentation page.

New features in Horizon Client 5.3 include the following:

● Battery state redirection: When the Enable Battery State Redirection agent group policy setting is enabled, information about the Windows client system's battery is redirected to a Windows remote desktop. This setting is enabled by default. For more information, see the Configuring Remote Desktop Features in Horizon 7 document.

● Universal broker support: You can use Horizon Client for Windows in a cloud brokering environment.

● RADIUS authentication login page customization: Beginning with Horizon 7.11, an administrator can customize the labels on the RADIUS authentication login page that appears in Horizon Client. For more information, see the topics about two-factor authentication in the VMware Horizon Console Administration document.

● Save custom display resolution and display scaling settings on the server: By default, custom display resolution and display scaling settings are stored only on the local client system. An administrator can use the “Save resolution and DPI to server” client group policy setting to save these settings to the server so that they are always applied, regardless of the client system used to log in to the remote desktop. For more information, see General Settings for Client GPOs.

Horizon 7 Cloud Connector offers these new features:

● You can upgrade the Horizon 7 Cloud Connector virtual appliance.

● VMware Horizon Cloud Service integrates with Horizon 7 using Horizon 7 Cloud Connector for on-premises deployments and VMware Cloud on Amazon Web Services (AWS) deployments. With this integration, Horizon Cloud Service provides a unified view into health status and connectivity metrics for all your cloud-connected pods. For more information, see the Horizon Cloud Service documentation.

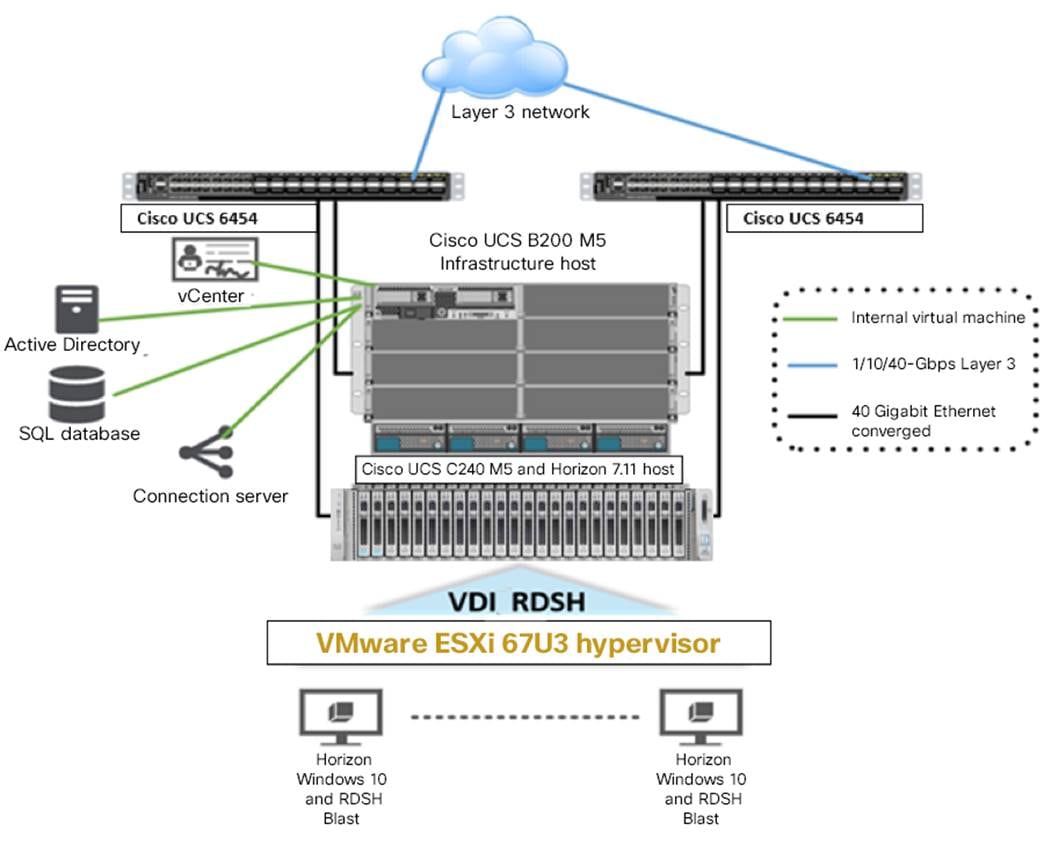

VMware Horizon test platform for 2nd Gen Intel Xeon Scalable processors

Figure 14 provides an overview of the test platform used to evaluate and select processors.

Reference architecture

The solution includes the following hardware components:

● Cisco UCS C240 M5 Rack Server for Horizon payloads, with two 2nd Gen Intel Xeon Scalable Refresh Gold processors and 768 GB of memory (12 x 64-GB DIMMs at 2666 to 2933 MHz), tested with each of the following processors:

◦ Intel Xeon Scalable processor Gold 5218R (20 cores at 2.1 GHz, 125 watts (W), and 2666-MHz memory)

◦ Intel Xeon Scalable processor Gold 5220R (24 cores at 2.2 GHz, 150 W, and 2666-MHz memory)

◦ Intel Xeon Scalable processor Gold 6240R (24 cores at 2.4 GHz, 165 W, and 2933-MHz memory)

● Cisco UCS B200 M5 Blade Server for Horizon infrastructure (two Intel Xeon Gold 5208 CPUs at 2.0 GHz) with 768 GB of memory (64 GB x 12 DIMMs at 2666 MHz)

● Cisco UCS VIC 1387 mLOM (Cisco UCS C240 M5 Rack Server)

● Cisco UCS VIC 1340 mLOM (Cisco UCS B200 M5 Blade Server)

● Two Cisco UCS 6454 Fabric Interconnects (fourth-generation fabric interconnects)

● Two Cisco Nexus® 93180YC-FX Switches (optional access switches)
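To compare the three payload processors at the server level, the following sketch derives per-server core counts, aggregate base clock, and rated watts per core from the specifications listed above. `Cpu` and `server_summary` are hypothetical names. Aggregate base GHz (cores multiplied by base frequency) is only a rough capacity indicator. It is an assumption of this sketch and ignores Turbo Boost, cache, and per-core architectural differences, so it is no substitute for the SPECrate and Login VSI data used in this document.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    model: str
    cores: int        # physical cores per socket
    base_ghz: float   # base clock frequency
    tdp_w: int        # rated power per socket, in watts
    mem_mhz: int      # maximum supported memory speed


# Specifications as listed for the Cisco UCS C240 M5 test server.
CPUS = [
    Cpu("Gold 5218R", 20, 2.1, 125, 2666),
    Cpu("Gold 5220R", 24, 2.2, 150, 2666),
    Cpu("Gold 6240R", 24, 2.4, 165, 2933),
]

SOCKETS = 2  # two processors per server


def server_summary(cpu: Cpu, sockets: int = SOCKETS) -> dict:
    """Per-server figures derived from per-socket specifications."""
    cores = cpu.cores * sockets
    return {
        "model": cpu.model,
        "cores": cores,
        "aggregate_base_ghz": round(cores * cpu.base_ghz, 1),
        "watts_per_core": round(cpu.tdp_w / cpu.cores, 2),
        "mem_mhz": cpu.mem_mhz,
    }


for cpu in CPUS:
    print(server_summary(cpu))
```

The two-socket core counts (40, 48, and 48) match the Cores column of the sizing table later in this document.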

The software components of the solution are as follows:

● Cisco UCS Firmware Release 4.0(4g)

● VMware ESXi 6.7 U3 for VDI hosts

● VMware Horizon 7.11

● Microsoft Windows 10 64-bit (1809)

● Microsoft Office 2016

To evaluate the 2nd Gen Intel Xeon Scalable processors, we created a strategy to test for the optimal price-to-performance ratio for the three mainstream user personas for virtual client computing (which VMware refers to as end-user computing). The personas are:

● Task workers

● Knowledge workers

● Power users

Windows 10 virtual desktops using Horizon Composer clones

We evaluated each processor against the combinations of Login VSI test workloads shown in Table 2, running in benchmark mode.

Table 2. User type and delivery mechanism combinations tested

| Delivery mechanism | Task worker | Knowledge worker | Power user |
|---|---|---|---|
| Windows 10 Horizon Composer full clone (VDI) | Tested | Tested | Tested |

We started with our knowledge of the performance of the first-generation Intel Xeon Scalable Gold processors and compared their benchmark performance to that of the second-generation processors to guide our initial selections for evaluation. Our plan was to identify a processor for each user type that delivered the best price-to-performance ratio for both the VDI and Remote Desktop Session Host (RDSH) delivery modalities for VMware Horizon.

For each user type and processor combination tested, we created a VMware Horizon virtual machine with specifications as shown in Table 3.

Table 3. Virtual machine configuration by user type

| Combination | Virtual CPU | Memory | Virtual NIC |
|---|---|---|---|
| Task worker: Windows 10 | 1 vCPU | 2 GB of memory | 1 x 40-GB vNIC |
| Knowledge worker: Windows 10 | 2 vCPUs | 4 GB of memory | 1 x 40-GB vNIC |
| Power user: Windows 10 | 4 vCPUs | 8 GB of memory | 1 x 40-GB vNIC |

For each user type, we selected one or more 2nd Gen Intel Xeon Scalable processors for evaluation. We used SPECrate2017_int_base and SPECrate2017_fp_base data generated by Cisco for 2nd Gen processors and 2nd Gen Refresh processors to identify candidates.

We installed the chosen processor candidates in a Cisco UCS C240 M5 server and ran Login VSI benchmark mode tests at calculated maximum user densities to determine the actual maximum user density per server. The maximum recommended user density is the highest number of users that can complete the Login VSI workload, with all attempted users active and then logged off, without triggering Login VSImax. In addition, host CPU utilization should not exceed 90 percent during the test.
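The acceptance criteria above can be expressed as a single predicate over a benchmark run. In this minimal sketch, `RunResult`, its field names, and both functions are hypothetical. The criteria themselves (no VSImax, all attempted users active and logged off, host CPU at or below 90 percent) are the ones stated above.

```python
from __future__ import annotations

from dataclasses import dataclass

CPU_LIMIT_PCT = 90.0  # host CPU ceiling stated in the test methodology


@dataclass
class RunResult:
    attempted_users: int
    active_users: int         # users active at the peak of the run
    logged_off_users: int     # users that logged off cleanly at the end
    vsimax_reached: bool
    peak_host_cpu_pct: float  # peak host CPU utilization during the run


def meets_recommended_density(r: RunResult) -> bool:
    """Return True if one run satisfies every acceptance criterion."""
    return (
        not r.vsimax_reached
        and r.active_users == r.attempted_users
        and r.logged_off_users == r.attempted_users
        and r.peak_host_cpu_pct <= CPU_LIMIT_PCT
    )


def max_recommended_density(runs: list[RunResult]) -> int:
    """Highest attempted user count among passing runs (0 if none pass)."""
    passing = [r.attempted_users for r in runs if meets_recommended_density(r)]
    return max(passing, default=0)
```

A run that stays below VSImax but peaks above 90 percent host CPU still fails under this predicate, so CPU headroom can cap the recommended density before Login VSI does.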

We used the maximum recommended user density to determine server loading in a server maintenance or failure scenario, typically N-1 (one host in the cluster out of service). We expect customers to run their environments at this load only in those cases.
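The N-1 planning rule above can be turned into simple cluster-sizing arithmetic. In this sketch (the function names are hypothetical), enough hosts are provisioned that all users still fit at the maximum recommended density when one host (or, more generally, `spares` hosts) is unavailable. Normal-operation load is then the user count spread across every host.

```python
import math


def hosts_for_n_minus_1(total_users: int, max_density: int, spares: int = 1) -> int:
    """Hosts needed so that total_users still fit at max_density per host
    when `spares` hosts are out of service (N-1 when spares == 1)."""
    if total_users <= 0 or max_density <= 0:
        raise ValueError("total_users and max_density must be positive")
    return math.ceil(total_users / max_density) + spares


def normal_load_per_host(total_users: int, hosts: int) -> float:
    """Average users per host when every host is in service."""
    return total_users / hosts
```

For a hypothetical population of 1,000 task workers at the 205-user maximum measured for the Gold 5218R, `hosts_for_n_minus_1(1000, 205)` returns 6 hosts, or about 167 users per host in normal operation.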

We compared performance and price per user at the maximum recommended user density to determine the best processor for the user type. We used Windows 10 across all processor testing.

This section presents the data from the test runs for the processor selected for each user type.

Task workers are individuals in an organization who use a limited number of applications to perform their duties. Examples of task workers are customer service agents, medical transcriptionists, accounts receivable and payable workers, and some enterprise resource planning (ERP) workers.

In many cases, these workers are well served by RDSH server sessions or published applications. In some cases, organizations provide a light-duty Windows 10 virtual desktop to these users.

We tested both use cases using the Login VSI Task Worker workload in benchmark mode. You can find additional information about Login VSI and all of the workloads we tested for this document on the Login VSI website.

In addition to using the Login VSI test suite, we measured host utilization by gathering data from ESXTOP. We also captured perfmon data from sample virtual machines during the full server load tests.
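Host utilization data of this kind is commonly captured with esxtop in batch mode (for example, `esxtop -b -d 15 -n 240 > capture.csv`), which writes perfmon-style CSV. The following sketch extracts one counter and reports its average and peak. The counter suffix `\Physical Cpu(_Total)\% Util Time` is an assumption; check it against the header row of your own capture. The function names are hypothetical.

```python
from __future__ import annotations

import csv
import io
from statistics import mean

# Assumed header suffix for host-wide CPU utilization in esxtop batch CSV.
CPU_COUNTER_SUFFIX = r"\Physical Cpu(_Total)\% Util Time"


def host_cpu_series(csv_text: str, suffix: str = CPU_COUNTER_SUFFIX) -> list[float]:
    """Return the samples of the first column whose header ends with suffix."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    try:
        col = next(i for i, name in enumerate(header) if name.endswith(suffix))
    except StopIteration:
        raise KeyError(f"no column ending with {suffix!r}") from None
    samples = []
    for row in reader:
        # Skip short or empty rows instead of failing on them.
        if len(row) > col and row[col].strip():
            samples.append(float(row[col]))
    return samples


def summarize(samples: list[float]) -> dict:
    """Average and peak of a utilization series."""
    return {"avg": mean(samples), "peak": max(samples)}
```

You can compare the peak value with the 90 percent host CPU ceiling used in the test methodology.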

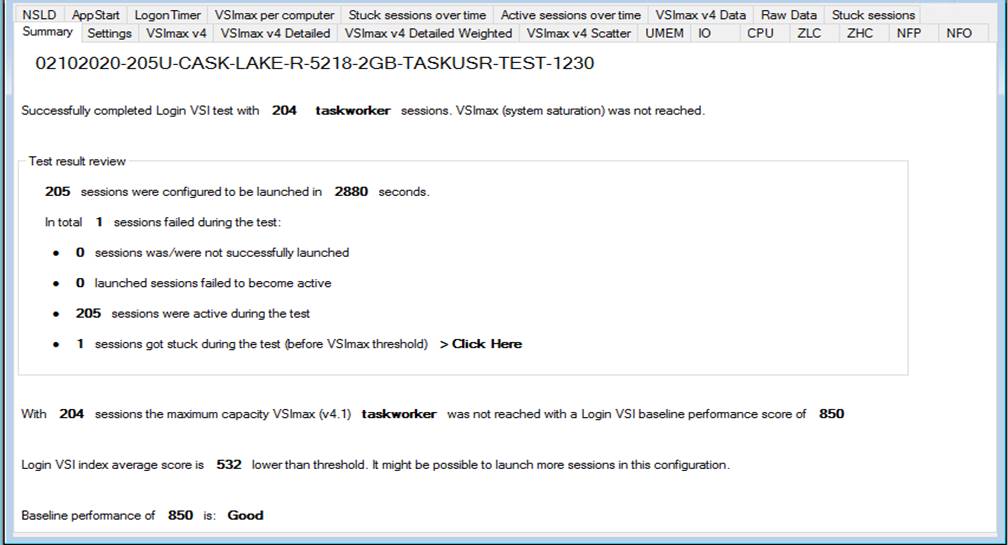

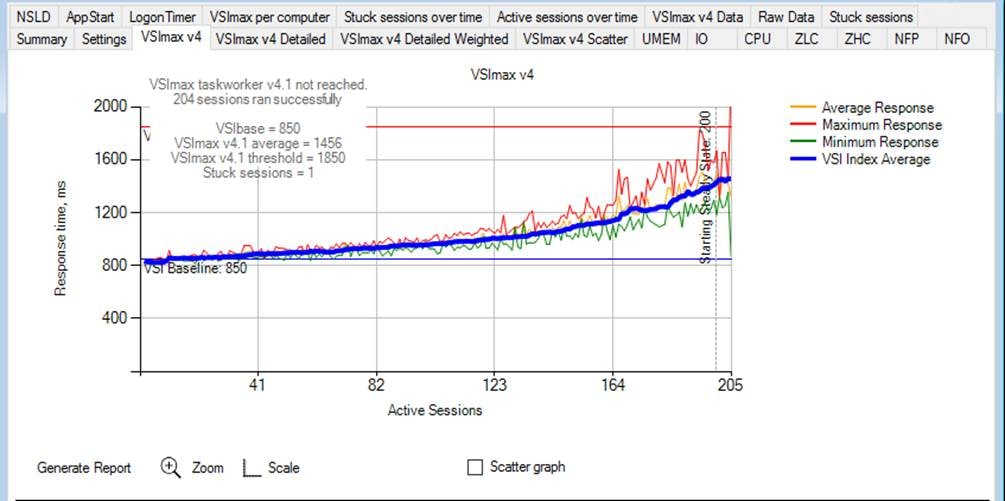

Windows 10 and Horizon 7.11 single-server synopsis: Intel Xeon Scalable Gold processor 5218R

The test results are summarized here and in Figures 15, 16, and 17.

● Operating system: Windows 10 64-bit (1809) with VMware optimizations

● 1 vCPU; 2 GB of RAM

● Number of users: 205 users running Login VSI Task Worker workload with Windows 10

● No VSImax; Login VSI baseline = 850 milliseconds (ms)
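To relate a reported baseline to the point at which VSImax would be triggered, the following sketch assumes the Login VSI 4.x convention that the VSImax threshold sits a fixed 1,000 ms above the baseline (VSIbase). This document does not state that rule, so treat it as an assumption and confirm it against the Login VSI documentation. Login VSI's own VSImax calculation is more involved than the single-sample check shown here, and the function names are hypothetical.

```python
# Assumption: Login VSI 4.x places the VSImax threshold a fixed
# 1,000 ms above the measured baseline (VSIbase).
VSIMAX_OFFSET_MS = 1000

# Baselines reported in this document's three single-server synopses.
BASELINES_MS = {
    "Gold 5218R / task worker": 850,
    "Gold 5220R / knowledge worker": 835,
    "Gold 6240R / power user": 747,
}


def vsimax_threshold(vsibase_ms: float, offset_ms: float = VSIMAX_OFFSET_MS) -> float:
    """Response-time ceiling implied by a baseline under the assumed rule."""
    return vsibase_ms + offset_ms


def exceeds_threshold(vsibase_ms: float, response_ms: float) -> bool:
    """Simplified single-sample check; only a rough indicator."""
    return response_ms > vsimax_threshold(vsibase_ms)


for name, base in BASELINES_MS.items():
    print(f"{name}: baseline {base} ms, assumed threshold {vsimax_threshold(base):.0f} ms")
```

Under this assumption, the 850-ms baseline above corresponds to a threshold of 1,850 ms.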

Login VSI end-user experience summary

Login VSI end-user experience performance chart

Knowledge workers are individuals in an organization who use a large number of applications to perform their duties. Examples of knowledge workers are sales and marketing professionals, business development managers, healthcare clinicians, and project managers.

In some cases, these workers can be served by RDSH server sessions or published applications. In most cases, organizations provide a medium-capability Windows 10 virtual desktop to these users.

We tested both use cases using the Login VSI Knowledge Worker workload in benchmark mode. You can find additional information about Login VSI and all the workloads we tested for this document on the Login VSI website.

In addition to the Login VSI test suite, we measured host utilization by gathering data from ESXTOP. We also captured perfmon data from sample RDSH server virtual machines during the full server load tests.

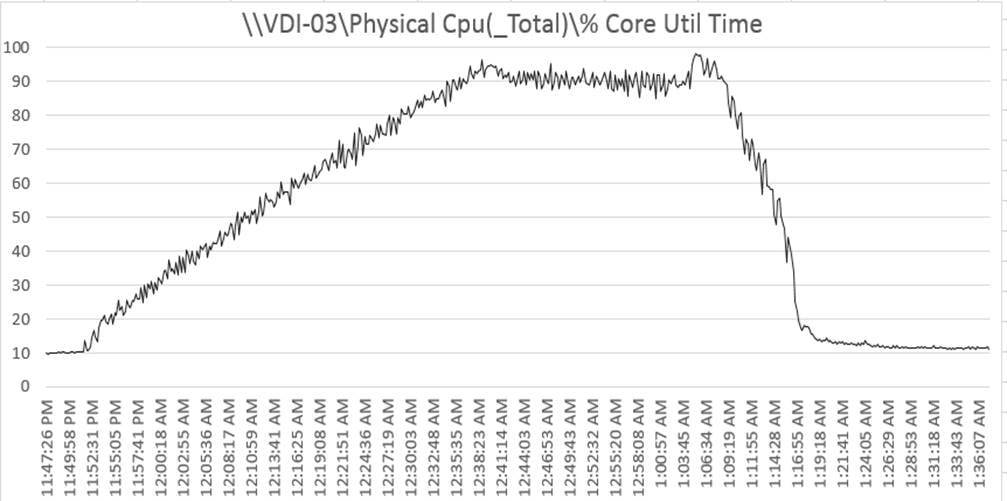

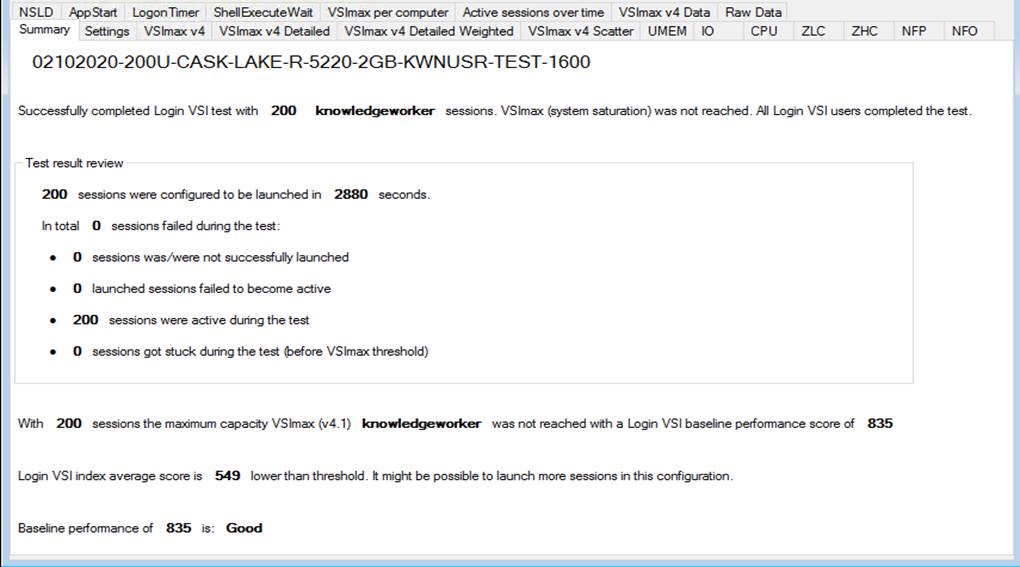

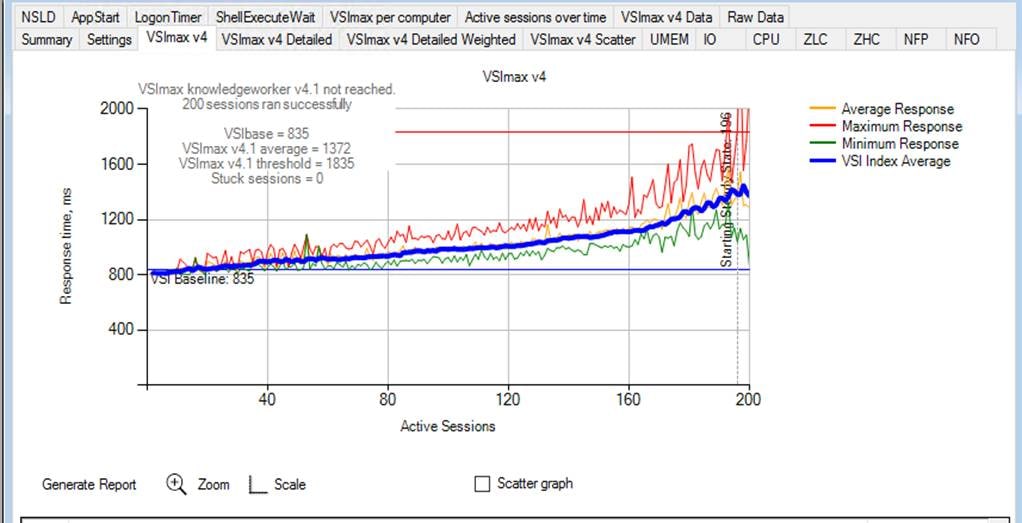

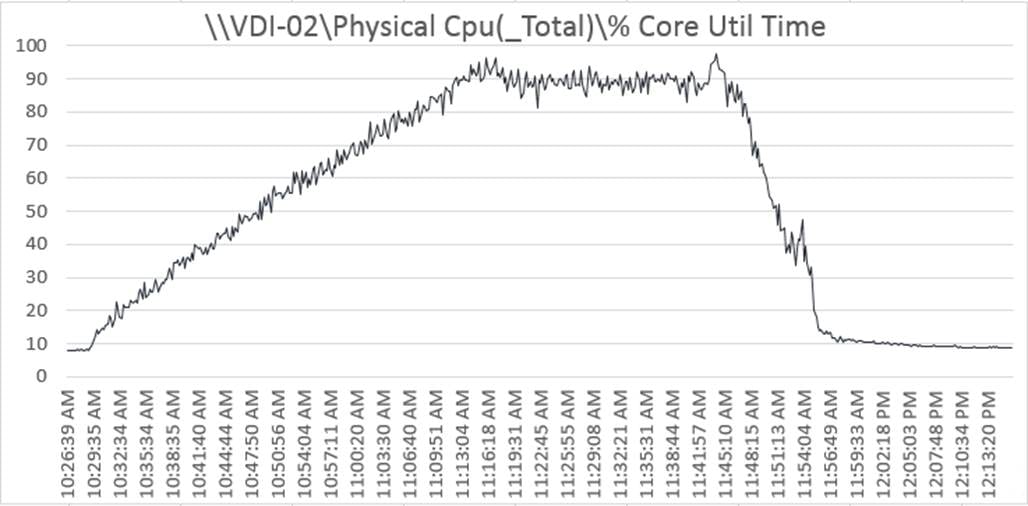

Windows 10 and Horizon 7.11 single-server synopsis: Intel Xeon Scalable Gold processor 5220R

The test results are summarized here and in Figures 18, 19, and 20.

● Operating system: Windows 10 64-bit (1809) with VMware optimizations

● 2 vCPUs; 4 GB of RAM

● Number of users: 200 users running Login VSI Knowledge Worker workload with Windows 10

● No VSImax; Login VSI baseline = 835 ms

Login VSI end-user experience summary

Login VSI end-user experience performance chart

VMware ESXi host CPU Util% during testing

Power users are individuals in an organization who use a large number of installed and web applications to perform their duties. These users typically have many applications open concurrently and perform more complex operations than other workers. Examples of power users are business analysts, strategic and tactical planners, manufacturing planners, operations planners, and financial analysts.

These workers typically cannot be served by RDSH server sessions or published applications. In almost all cases, organizations provide a highly capable Windows 10 virtual desktop to these users.

For that reason, we tested only the Windows 10 use case using the Login VSI Power User workload in benchmark mode. You can find additional information about Login VSI and all the workloads we tested for this document on the Login VSI website.

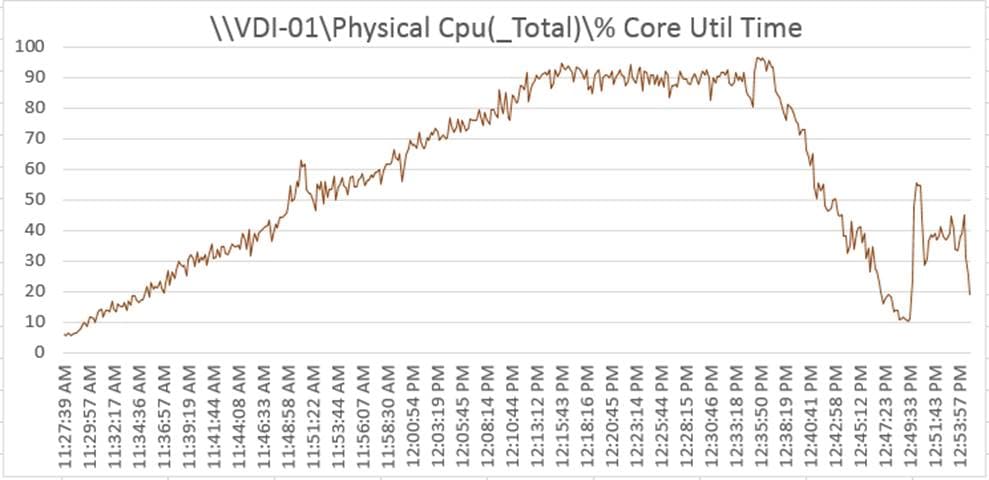

In addition to the Login VSI test suite, we measured host utilization by gathering data from ESXTOP.

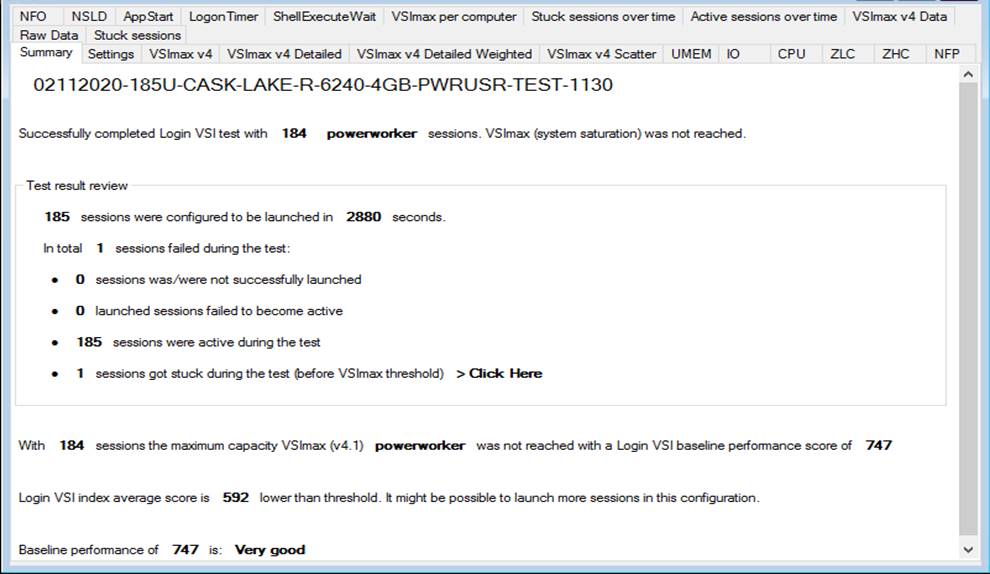

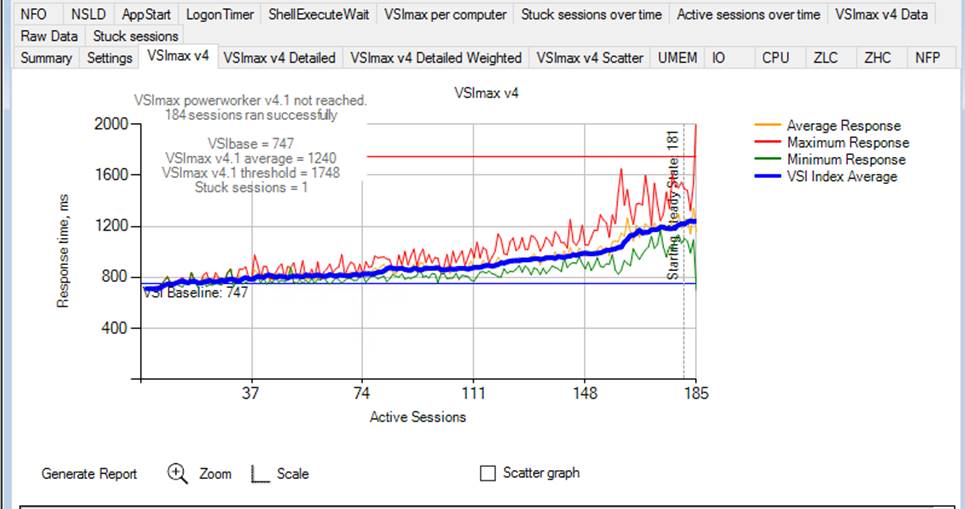

Windows 10 and Horizon 7.11 single-server synopsis: Intel Xeon Scalable Gold processor 6240R

The test results are summarized here and in Figures 21, 22, and 23.

● Operating system: Windows 10 64-bit (1809) with VMware optimizations

● 4 vCPUs; 8 GB of RAM

● Number of users: 185 users running Login VSI Power User workload with Windows 10

● No VSImax; Login VSI baseline = 747 ms

Login VSI end-user experience summary

Login VSI end-user experience performance chart

VMware ESXi host CPU Util% during testing

By carefully evaluating key processors in the 2nd Gen Intel Xeon Scalable Family, we identified three processors that have optimal price-to-performance characteristics for the three main user types (task workers, knowledge workers, and power users) for VMware Horizon 7.11 and Microsoft Windows 10 virtual desktops and RDSH hosted server sessions. Table 4 shows the user type configuration details.

Table 4. Configurations by user type

| Microsoft Windows 10 | Task worker | Knowledge worker | Power user |
|---|---|---|---|
| vCPU per virtual machine | 1 | 2 | 4 |
| Memory per virtual machine | 2 GB | 4 to 8 GB | 8 to 16 GB |
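To see what the per-virtual-machine configurations imply at the densities actually tested, the following sketch multiplies the user counts from the three single-server synopses by the vCPU and memory sizes used in testing (the lower memory values, as listed in Table 3). `TestPoint` and `consolidation` are hypothetical names. The results are nominal allocations, not measured consumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TestPoint:
    user_type: str
    cpu_model: str
    physical_cores: int  # two sockets per server
    users: int           # users in the single-server synopsis
    vcpus_per_vm: int
    gb_per_vm: int


POINTS = [
    TestPoint("Task worker", "Gold 5218R", 40, 205, 1, 2),
    TestPoint("Knowledge worker", "Gold 5220R", 48, 200, 2, 4),
    TestPoint("Power user", "Gold 6240R", 48, 185, 4, 8),
]


def consolidation(p: TestPoint) -> dict:
    """Nominal vCPU-to-core ratio and configured memory at one test point."""
    total_vcpus = p.users * p.vcpus_per_vm
    return {
        "user_type": p.user_type,
        "total_vcpus": total_vcpus,
        "vcpu_per_core": round(total_vcpus / p.physical_cores, 2),
        "configured_memory_gb": p.users * p.gb_per_vm,
    }


for point in POINTS:
    print(consolidation(point))
```

The configured-memory figure is the sum of virtual machine allocations (410, 800, and 1,480 GB). It is not the host memory actually consumed, so compare it with the host memory listed for each processor in Table 5 with that distinction in mind.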

We identified the maximum recommended workload for each processor and user type pair for Windows 10 virtual machines and for RDSH sessions for task workers and knowledge workers. The maximum recommended workload is used to plan for maintenance and failure scenarios. During normal operations, fewer virtual machines would run on the clusters supporting your users. Normal operation densities are shown in Table 5.

Because of the nature of power users’ workloads, for power users we focused solely on Windows 10 virtual machines and identified the maximum recommended workload.

Table 5 summarizes our starting-point recommendations (not the maximum recommended workloads) for each user type and delivery method.

Table 5. Microsoft Windows 10 (Build 1809) and Horizon 7.11 virtual desktops

| Processor part number and quantity | Cores | Memory | Task worker | Knowledge worker | Power user |
|---|---|---|---|---|---|
| UCS-CPU-I5218R x 2 | 40 | 768 GB (12 x 64 GB) | 170 to 190 | | |
| UCS-CPU-I5220R x 2 | 48 | 768 GB (12 x 64 GB) | | 90 to 185 | |
| UCS-CPU-I6240R x 2 | 48 | 1.5 TB (24 x 64 GB) | | | 90 to 170 |

Each customer’s environment and workloads are different. The recommended ranges shown in the tables here are starting points for your unique environment. They are not intended to be performance guarantees.

For graphics-intensive workloads and enhanced-experience Windows 10 workloads, you can add GPUs and select processors suited to that purpose.

For additional information, see the following:

● Cisco UCS C-Series Rack Servers and B-Series Blade Servers:

◦ http://www.cisco.com/en/US/products/ps10265/

● Cisco HyperFlex™ hyperconverged servers:

◦ https://www.cisco.com/c/en/us/products/hyperconverged-infrastructure/hyperflex-hx-series/index.html

● VMware Horizon 7.11:

◦ https://docs.vmware.com/en/VMware-Horizon-7/7.11/rn/horizon-711-view-release-notes.html

● VMware vSphere 6.7 Update 3:

◦ https://docs.vmware.com/en/VMware-vSphere/6.7/rn/vsphere-vcenter-server-67u3b-release-notes.html

◦ https://docs.vmware.com/en/VMware-vSphere/6.7/rn/vsphere-esxi-67u3-release-notes.html

● Microsoft Windows and Horizon optimization guides for virtual desktops:

● Login VSI

◦ https://www.loginvsi.com/products/login-vsi