Cisco HyperFlex Fabric Interconnect Hardware Live Migration Guide White Paper


Bias-Free Language

The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product. Learn more about how Cisco is using Inclusive Language.

| Document summary | Prepared for | Prepared by |
|---|---|---|
| Fabric Interconnect (FI) hardware online upgrade with HyperFlex™ cluster | Cisco field | Ganesh Kumar |

The audience for this document consists of system architects, system engineers, and any other technical staff who are responsible for planning or upgrading Fabric Interconnects with a Cisco HyperFlex cluster. Although every effort has been made to make this document appeal to the widest possible audience, the document assumes that readers have an understanding of Cisco UCS® hardware, terminology, and configurations.

All information in this document is provided in confidence and shall not be published or disclosed, wholly or in part, to any other party without Cisco’s written permission.

The Cisco 6400 Series Fabric Interconnects (FIs) are new Cisco UCS hardware platforms that use the latest Cisco® silicon Switch-on-Chip (SoC) ASIC technology. Because the hardware is new, you may need to change port and software configurations to complete the migration successfully. This document discusses the process for upgrading from Cisco UCS 6200 Series Fabric Interconnects to Cisco UCS 6454 Fabric Interconnects when Cisco HyperFlex clusters are connected to the fabric. The migration procedure is the same for the 64108 platform. After reading this document, you should understand the upgrade process and the precautions to consider for a successful migration.

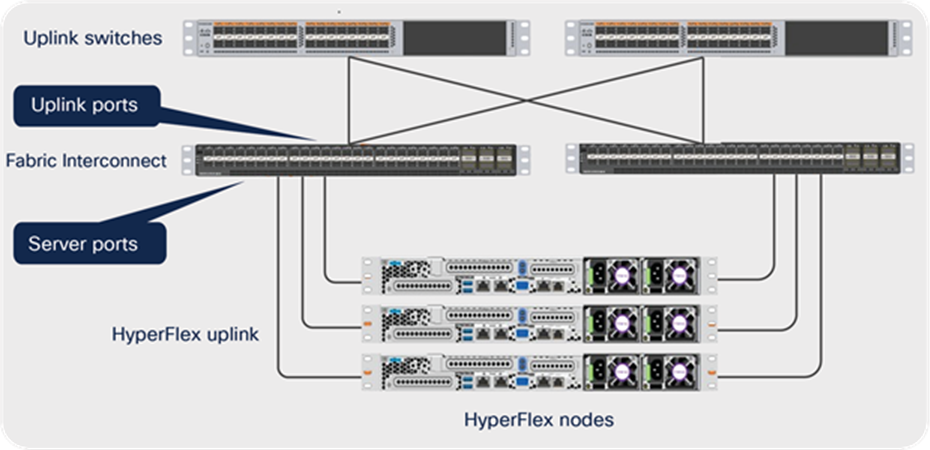

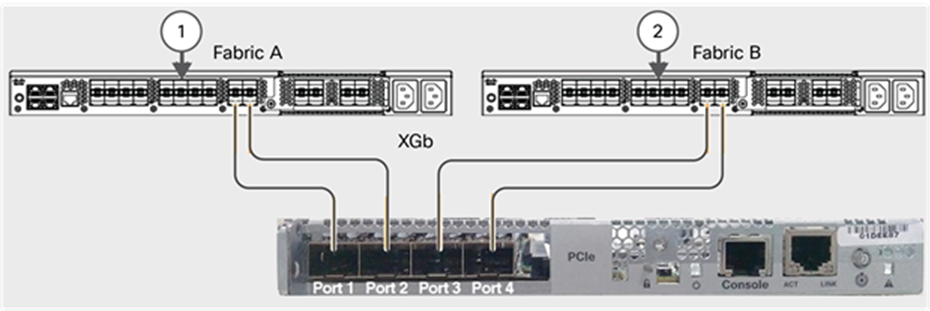

Cisco HyperFlex network connectivity

Cisco HyperFlex clusters in the data center are deployed with a pair of Cisco UCS Fabric Interconnects (see Figure 1) for server uplink connectivity. The Fabric Interconnects are always Active/Active for data traffic. The Cisco UCS Fabric Interconnects are connected to a pair of network uplink switches for northbound traffic. As Figure 1 shows, Cisco recommends that HyperFlex network connectivity always be redundant; because of this redundancy, the Fabric Interconnect migration can be performed completely online without any impact on data traffic.

We recommend reviewing the Cisco HyperFlex HX Data Platform release notes, installation guide, and user guide before proceeding with any configuration. Before you start the FI migration, the HyperFlex cluster should be healthy and ONLINE, and the UCS FI cluster state should be “HA READY.” Please contact Cisco support or your Cisco representative if you need assistance. Licenses from Cisco UCS 6200 Series Fabric Interconnects are not transferable to Cisco UCS 6400 Series Fabric Interconnects. If your available port licenses are insufficient to cover the migration, you must obtain licenses for the Cisco UCS 6400 Series Fabric Interconnects before you upgrade.

Cisco UCS Manager Release 4.1 is the minimum version that supports Cisco UCS 64108 Fabric Interconnects, and Release 4.0 is the minimum version that supports Cisco UCS 6454 Fabric Interconnects. To migrate from Cisco UCS 6200 Series Fabric Interconnects to Cisco UCS 6400 Series Fabric Interconnects:

● Cisco UCS 6200 Series Fabric Interconnects must be on Cisco UCS Manager Release 4.1(1) or a later release to migrate to Cisco UCS 64108 Fabric Interconnects, and Release 4.0(1) or a later release to migrate to Cisco UCS 6454 Fabric Interconnects.

● Cisco UCS 6400 Series Fabric Interconnects must be loaded with the same build version as the Cisco UCS 6200 Series Fabric Interconnects they will replace. To check the build on a new Fabric Interconnect:

◦ Power on the Fabric Interconnects independently and connect to the console.

◦ Configure the new Fabric Interconnect in “standalone” mode, and check the version by running the command show version.

● The UCS 6400 Series Fabric Interconnect is intended as a replacement for the UCS 6200 Series Fabric Interconnect, not for the higher-speed (40-Gbps) UCS 6332/6332-16UP Fabric Interconnects. Therefore, Cisco has not tested or published a plan to migrate from UCS 6332/6332-16UP Fabric Interconnects to UCS 6400 Series Fabric Interconnects. The high-density UCS 64108 Fabric Interconnect is an ideal upgrade from the high-density Cisco UCS 6296 Fabric Interconnect, while the UCS 6454 Fabric Interconnect is an ideal upgrade from the Cisco UCS 6248 Fabric Interconnect.

● HyperFlex stretched-cluster architecture requires the same Fabric Interconnect model at both sites. If you have a stretched cluster, make sure to migrate all four Fabric Interconnects to the UCS 6400 platform. Specific information about HyperFlex stretched-cluster Fabric Interconnect requirements and limitations can be found in the HyperFlex Stretched Cluster White Paper.

The fourth-generation Cisco UCS 6400 Series Fabric Interconnects provide both network connectivity and management capabilities for the data center HyperFlex system. The Cisco UCS 6400 Series offers line-rate, low-latency, lossless 10/25/40/100 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE), and Fibre Channel functions. From a networking perspective, the Cisco UCS 6400 Series uses a cut-through architecture, supporting deterministic, low-latency, line-rate 10/25/40/100 Gigabit Ethernet ports and a switching capacity of 3.82 Tbps for the 6454 model and 7.42 Tbps for the 64108 model.

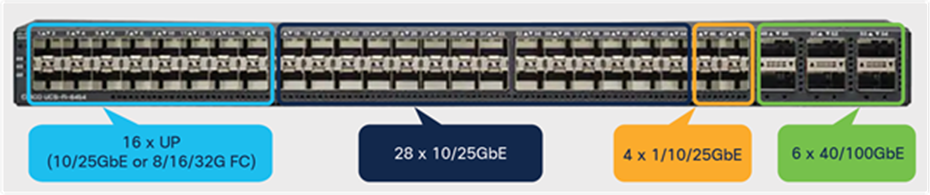

For the purposes of this document, we use the Cisco UCS 6454 Fabric Interconnect model; the migration procedure is the same for the 64108 model. The 6454 has 28 10/25-Gbps Ethernet ports, 4 1/10/25-Gbps Ethernet ports, 6 40/100-Gbps Ethernet uplink ports, and 16 unified ports that can support 10/25-Gbps Ethernet or 8/16/32-Gbps Fibre Channel (see Figure 2). All Ethernet ports are capable of supporting FCoE.

Cisco UCS 6454 Fabric Interconnect ports (rear view)
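When preparing cabling records, it can help to cross-check the port groups listed above; they add up to 54 physical ports on the 6454. The following is a minimal Python sketch of that arithmetic. The `UCS_6454_PORTS` dictionary and `total_ports` helper are illustrative names, not a Cisco data format.

```python
# Port groups of the Cisco UCS 6454, transcribed from the paragraph above.
# The dictionary layout is only an illustration, not a Cisco data format.
UCS_6454_PORTS: dict[str, int] = {
    "10/25-Gbps Ethernet": 28,
    "1/10/25-Gbps Ethernet": 4,
    "40/100-Gbps Ethernet uplink": 6,
    "Unified (10/25-Gbps Ethernet or 8/16/32-Gbps FC)": 16,
}


def total_ports(inventory: dict[str, int]) -> int:
    """Return the number of physical ports across all port groups."""
    return sum(inventory.values())


if __name__ == "__main__":
    for group, count in UCS_6454_PORTS.items():
        print(f"{count:>3}  {group}")
    print(f"{total_ports(UCS_6454_PORTS):>3}  total")  # 54
```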

Two hot-swappable power supplies and four variable-speed fans insert into the front of the chassis with front-to-back cooling. Server-facing ports are at the rear of the chassis to simplify the connections to the servers. Serial, management, and USB ports are provided at the front of the chassis for easy access from the front of the rack (Figure 3).

Cisco UCS 6454 Fabric Interconnect (front view)

The Cisco UCS 6200 Series Fabric Interconnects are the only Fabric Interconnects that can be migrated to the Cisco UCS 6454/64108 Fabric Interconnects while in service (Table 1). No other Fabric Interconnect series is supported.


Table 1. Migration support matrix for infrastructure hardware

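| From | To | Support |
|---|---|---|
| Cisco UCS 6100 Series Fabric Interconnects | Cisco UCS 6454 or UCS 64108 Fabric Interconnects | Not supported |
| Cisco UCS 6200 Series Fabric Interconnects | Cisco UCS 6454 or UCS 64108 Fabric Interconnects | Supported |
| Cisco UCS 6332 and 6332-16UP Fabric Interconnects | Cisco UCS 6454 or UCS 64108 Fabric Interconnects | Not supported |
| Cisco UCS 6324 Fabric Interconnects | Cisco UCS 6454 or UCS 64108 Fabric Interconnects | Not supported |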
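Table 1 reduces to a lookup in which only one source series is allowed. The sketch below encodes it in Python for use in a planning script; the `MIGRATION_SUPPORT` mapping and `can_migrate_to_6400` helper are hypothetical names, and any series not listed defaults to unsupported, matching the statement that no other Fabric Interconnect series is supported.

```python
# Source Fabric Interconnect series -> is an in-service migration to
# UCS 6454/64108 supported? Values transcribed from Table 1; keys are illustrative labels.
MIGRATION_SUPPORT: dict[str, bool] = {
    "6100": False,
    "6200": True,
    "6332": False,
    "6332-16UP": False,
    "6324": False,
}


def can_migrate_to_6400(source_series: str) -> bool:
    """Return True only for a source series that Table 1 marks as supported.

    Series missing from the table are treated as unsupported.
    """
    return MIGRATION_SUPPORT.get(source_series, False)


if __name__ == "__main__":
    for series in ("6200", "6332-16UP", "6324"):
        print(series, "supported" if can_migrate_to_6400(series) else "not supported")
```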

Note: Even though the migration can be done live without impacting data traffic, it is recommended to perform the migration in a planned maintenance window. If your 6200 Series Fabric Interconnects have Fibre Channel (FC) ports configured, you need to unconfigure those ports for the migration.

For a successful Fabric Interconnect migration, the current pair of Fabric Interconnects and the new pair must run the same Cisco UCS Manager (UCSM) release. The UCS 64108 FI requires UCSM Release 4.1 or later, and the UCS 6454 FI requires UCSM Release 4.0 or later.
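The two version rules (identical builds on both pairs, plus a per-model minimum) can be checked mechanically once you have the `show version` output from each side. The Python sketch below assumes version strings of the form `4.1(3b)`; that format, the regular expression, and the helper names are assumptions for illustration, and strings with extra suffixes would need a broader pattern.

```python
import re

# Minimum UCS Manager releases stated in this document, as (major, minor, maintenance).
MINIMUM_RELEASE: dict[str, tuple[int, int, int]] = {
    "6454": (4, 0, 1),
    "64108": (4, 1, 1),
}

# Assumed version format: "<major>.<minor>(<maintenance><patch letters>)", e.g. "4.1(3b)".
_VERSION_RE = re.compile(r"^(\d+)\.(\d+)\((\d+)([a-z]*)\)$")


def parse_release(version: str) -> tuple[int, int, int, str]:
    """Split a UCSM version string into comparable parts."""
    match = _VERSION_RE.match(version.strip())
    if match is None:
        raise ValueError(f"unrecognized UCSM version string: {version!r}")
    major, minor, maintenance, patch = match.groups()
    return int(major), int(minor), int(maintenance), patch


def versions_ready(current_fi: str, new_fi: str, new_model: str) -> bool:
    """True when both FI pairs run the same build and it meets the target model's minimum."""
    current = parse_release(current_fi)
    if current != parse_release(new_fi):
        return False  # the old and new pairs must run the same build
    return current[:3] >= MINIMUM_RELEASE[new_model]
```

For example, `versions_ready("4.0(4e)", "4.0(4e)", "6454")` passes, while the same build fails for a 64108 target because it is below Release 4.1.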

Before you start the migration, make sure to perform the activities described below:

● Power on the new Fabric Interconnects and check the UCSM version running on them.

◦ Connect the console.

◦ Configure the new Fabric Interconnect in “standalone” mode, and check the UCSM version by running the command show version.

● Based on your new Fabric Interconnect model and the UCSM version that is running, download the required Cisco UCS Manager Infrastructure (Bundle A) package from the Cisco software downloads page, and upgrade the current 6200 Fabric Interconnects.

● If the new Fabric Interconnects were part of another UCSM cluster, they may still carry a different configuration. Therefore, clean up each new Fabric Interconnect by running the commands below on it:

◦ # connect local-mgmt

◦ # erase configuration

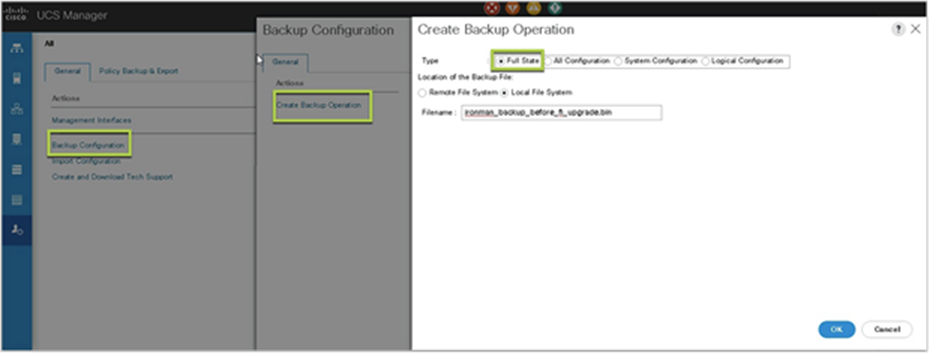

● Back up and export the Cisco UCS Manager configuration so that it can be used to restore the configuration in the future if needed.

◦ Connect to UCS Manager and navigate to Admin menu -> Backup Configuration and take a “Full State” backup (Figure 4).

Cisco UCS Manager configuration backup

● Note down the current Fabric Interconnect connection details, such as which server connects to which port in each FI and the uplink port connections.

● Note down the current Fabric Interconnect IP details (FI-A IP, FI-B IP, UCSM Cluster IP, Netmask, and the Gateway).

● Check and confirm that all connected servers have redundant data paths visible and online with appropriate failover policies configured. Servers not configured with network redundancy will experience a network outage during the migration.

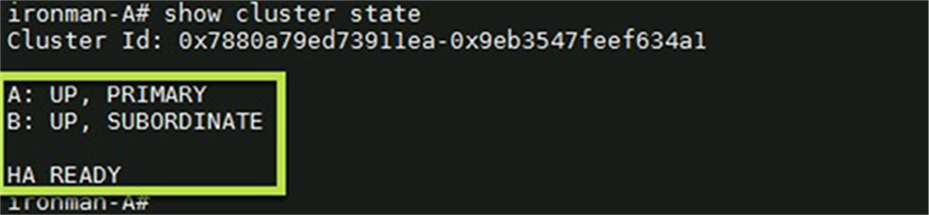

● Check and confirm that the current UCSM cluster is in a healthy state by running the command show cluster state, and confirm that the HA status is “READY.”

UCSM cluster status

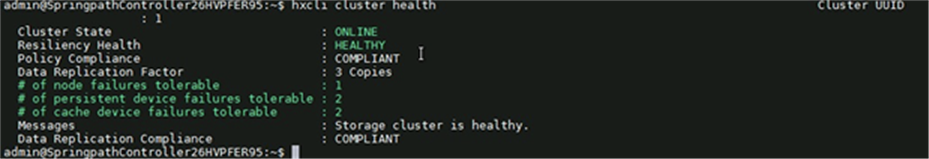

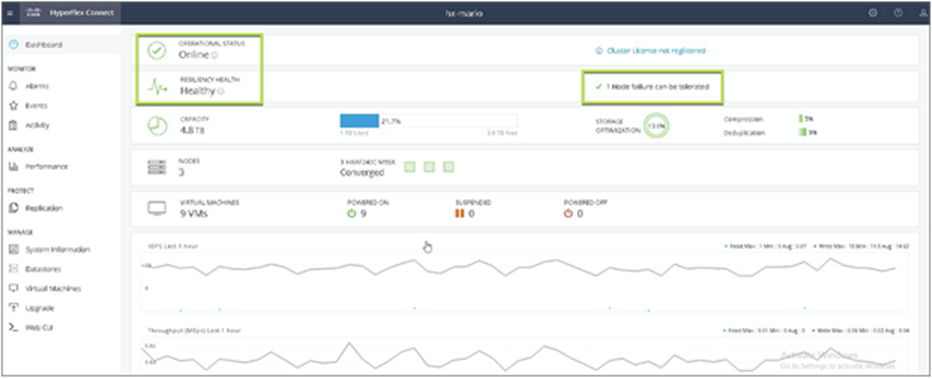

● Log in to HyperFlex Connect or SSH to the HyperFlex cluster IP and verify that the cluster is healthy and all the nodes are online.

◦ Run the command hxcli cluster state, and confirm that the cluster state is “ONLINE” and the Resiliency Health is “HEALTHY.”

HyperFlex cluster status

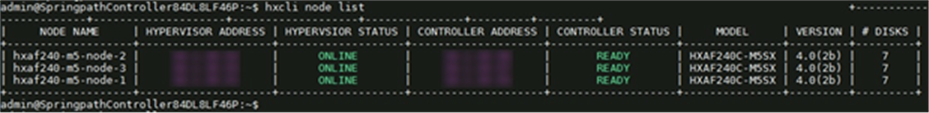

◦ Run the command hxcli node list, and verify that the storage controller and hypervisor status are “ONLINE” for all nodes in the cluster.

HyperFlex node and controller status
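The UCSM and HyperFlex checks above can be combined into a single go/no-go gate. The Python sketch below parses text assumed to resemble `show cluster state` output (verify the layout against your own system) and takes the HyperFlex results as plain strings copied from `hxcli cluster state` and `hxcli node list`; it does not run those commands. The sample text and all function names are hypothetical.

```python
import re

# Illustrative text assumed to resemble `show cluster state` output.
SAMPLE_CLUSTER_STATE = """\
Cluster Id: 0x0000000000000000-0x0000000000000000

A: UP, PRIMARY
B: UP, SUBORDINATE

HA READY
"""

# One line per Fabric Interconnect, for example "B: UP, SUBORDINATE".
_FI_LINE = re.compile(r"^([AB]):\s*(\w+),\s*(PRIMARY|SUBORDINATE)", re.MULTILINE)
# Matches "HA READY" but not "HA NOT READY".
_HA_READY = re.compile(r"^HA READY\s*$", re.MULTILINE)


def parse_cluster_state(text: str) -> tuple[dict[str, tuple[str, str]], bool]:
    """Return ({fi: (state, role)}, ha_ready) parsed from the cluster state text."""
    fis = {fi: (state, role) for fi, state, role in _FI_LINE.findall(text)}
    return fis, _HA_READY.search(text) is not None


def subordinate_fi(text: str) -> str:
    """Return "A" or "B" for the Fabric Interconnect currently in the subordinate role."""
    fis, _ = parse_cluster_state(text)
    for fi, (_state, role) in fis.items():
        if role == "SUBORDINATE":
            return fi
    raise ValueError("no subordinate Fabric Interconnect found")


def preflight_ok(
    cluster_state_text: str,
    hx_cluster_state: str,
    hx_resiliency: str,
    hx_node_states: list[str],
) -> bool:
    """Combine the UCSM and HyperFlex checks from the pre-migration list."""
    fis, ha_ready = parse_cluster_state(cluster_state_text)
    both_fis_up = len(fis) == 2 and all(state == "UP" for state, _ in fis.values())
    return (
        both_fis_up
        and ha_ready
        and hx_cluster_state == "ONLINE"
        and hx_resiliency == "HEALTHY"
        and bool(hx_node_states)
        and all(state == "ONLINE" for state in hx_node_states)
    )
```

The `subordinate_fi` helper also answers the first question of the migration procedure: which Fabric Interconnect to work on first.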

Now we are ready to start the migration. Perform the steps below in the order given for a successful online migration.

Note: This Fabric Interconnect migration is an online process with very quick failover of physical NICs. The migration can be done without powering down any workloads running on the HyperFlex cluster. Even though the migration can be done live without impacting the data traffic, it is recommended to perform the migration in a planned maintenance window.

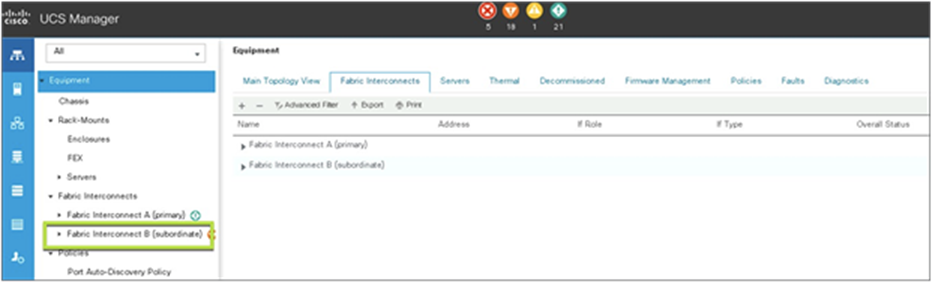

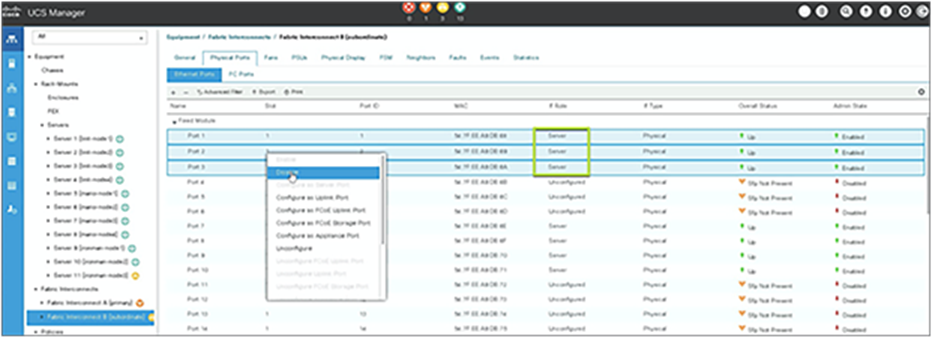

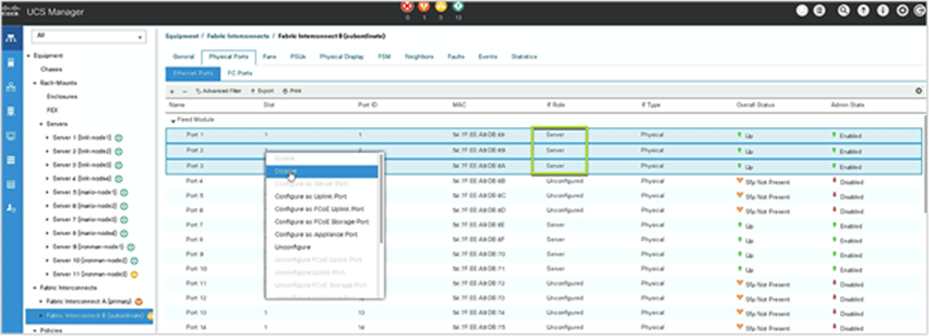

1. Log in to UCS Manager and identify the subordinate Fabric Interconnect, then disable the server ports connected to each HyperFlex node.

Identifying subordinate Fabric Interconnect

Note: If you have a Cisco UCS B-Series chassis connected to the same pair of Fabric Interconnects, follow the Cisco UCS–recommended process to evacuate the traffic from the Fabric Interconnect before you disable the server ports. Refer to UCS Fabric Interconnect Traffic Evacuation for more details.

Disable subordinate Fabric Interconnect server ports

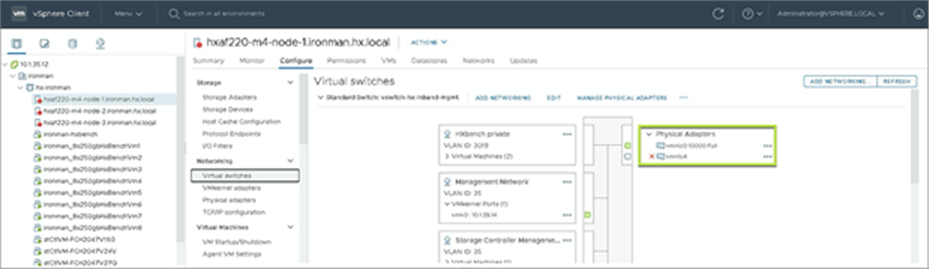

2. Confirm from the hypervisor side that the data path has failed over and that data traffic is not disrupted.

Hypervisor traffic failover

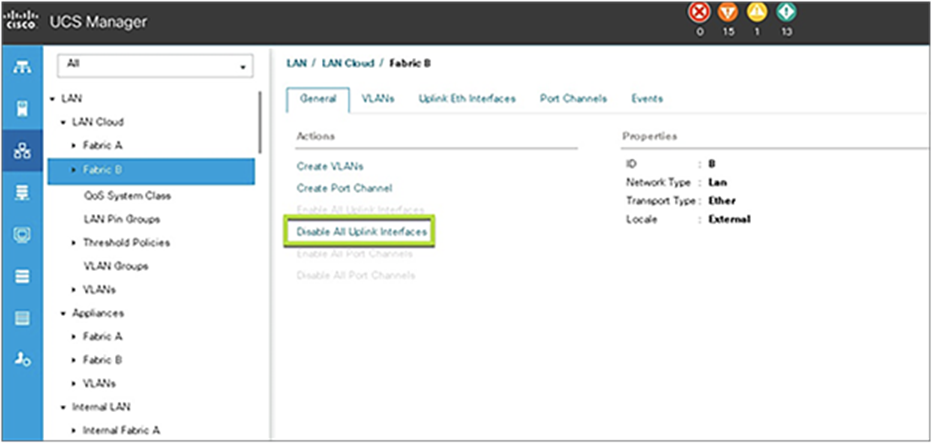

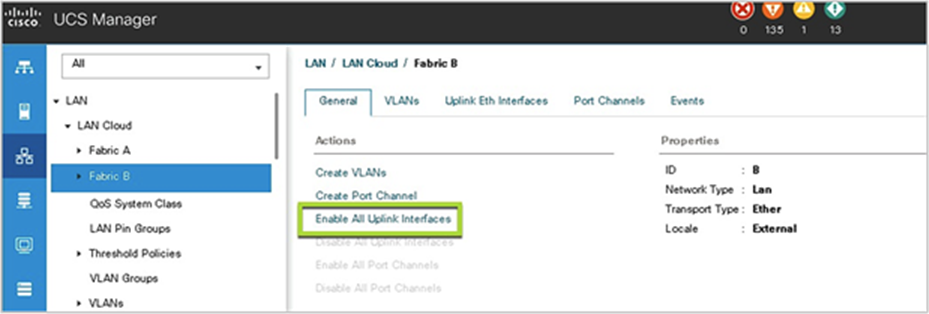

3. Disable all the uplink interfaces on the subordinate fabric: go to LAN -> Fabric A/B (subordinate fabric) and click Disable All Uplink Interfaces.

Disable subordinate Fabric Interconnect uplink ports

4. Power down the subordinate Fabric Interconnect by unplugging it from the power source.

5. Mount the replacement Cisco UCS 6400 Series Fabric Interconnect into either the same rack or an adjacent rack. Refer to the Cisco UCS 6400 Series Installation Guide for details.

6. Disconnect the cables from the HyperFlex nodes to the subordinate Fabric Interconnect ports on the UCS 6200 Series Fabric Interconnect.

7. Connect these cables to the corresponding ports on one of the new Cisco UCS 6400 Series Fabric Interconnects, using the connection records to preserve the port mapping. The servers may need to be reacknowledged (that is, may require a reboot) if the server port numbers change.

8. Connect the L1/L2 cables that were disconnected from the old Fabric Interconnect to the new Cisco UCS 6400 Series Fabric Interconnect. L1 connects to L1, L2 connects to L2.

9. Connect the power to the new Cisco UCS 6400 Series Fabric Interconnect. It will automatically boot and run POST tests. If it reboots itself, this is normal behavior.

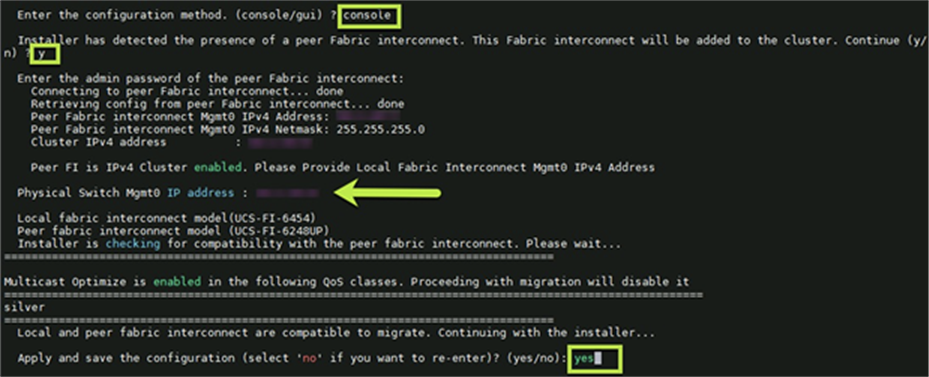

Important: Directly connect the console port to a terminal and observe the boot sequence. You should at some point see the Basic System Configuration Dialog, where you will configure the switch as a subordinate interconnect. If you do not see this dialog, either you have different builds of software on your old primary and new subordinate Fabric Interconnect, or the new subordinate was previously part of a cluster and will now need to have all configuration information wiped before it can be added to a cluster as a subordinate. In either case, immediately disconnect the L1 and L2 connections, bring the Fabric Interconnect up as standalone, then correct the issue before proceeding further. The primary and subordinate Fabric Interconnects must be running the same software build.

10. Enter the IP address details for the Fabric Interconnect. After this step, the installer will perform all the required configurations and sync with the primary Fabric Interconnect.

Note: If the installer detects any unsupported configuration, it shows the error in the console. Resolve any displayed configuration issues, then press any key to restart the installer.

Subordinate Fabric Interconnect IP configuration

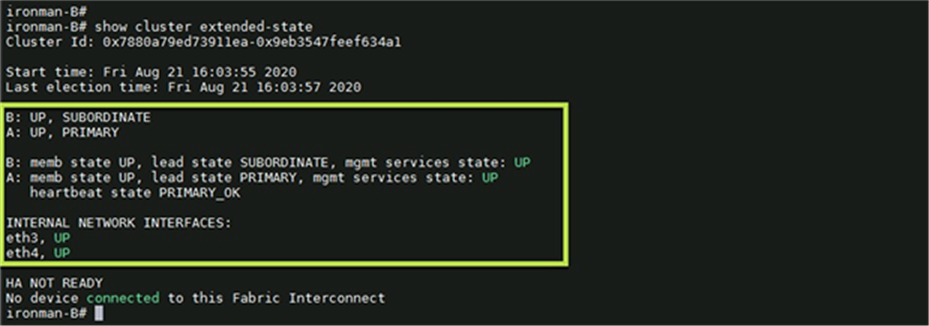

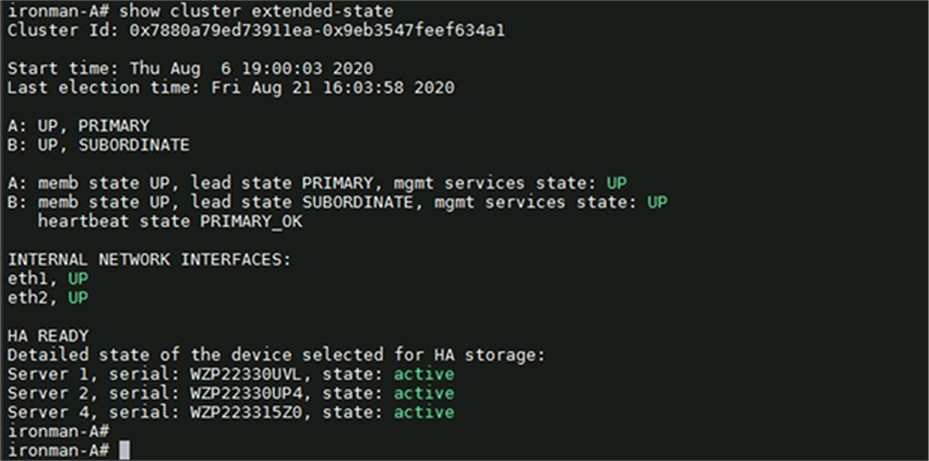

11. Once the configuration is complete, you will see the login prompt in the console. Log in to the new subordinate Fabric Interconnect and check the cluster status by running the command show cluster extended-state. Check and confirm that the management services are up on both Fabric Interconnects. Also, check and confirm that L1 and L2 (eth3 and eth4, as shown in Figure 13, below) report status as “UP.”

Note: An HA status of “NOT READY” is expected at this point; it persists until the server ports are enabled, which allows the Fabric Interconnects to form a cluster.

Cluster status after replacing the subordinate Fabric Interconnect

12. Enable the uplink ports from the UCS Manager UI on the subordinate Fabric Interconnect.

Enable uplink interfaces

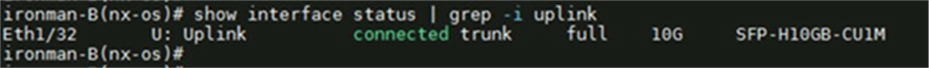

13. Confirm that the uplink ports are up by running the commands below from the Fabric Interconnect CLI:

◦ # connect nxos

◦ # show interface status | grep -i uplink

Uplink interface status

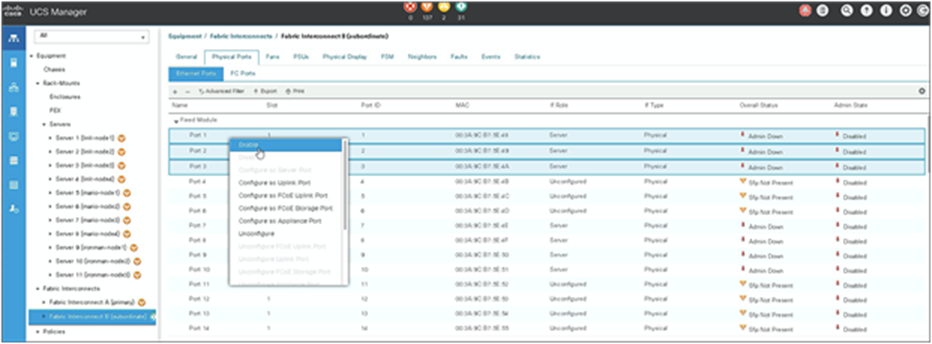

14. Enable the previously disabled server ports from the UCS Manager UI.

Enable server interfaces

Note:

● If you have a Cisco UCS B-Series chassis connected to this pair of Fabric Interconnects and you enabled Evacuation Mode, you can disable Evacuation Mode now.

● Be patient. Once the server interfaces are enabled, the server will transition through a shallow discovery process (no reboot or traffic interruption will occur) and then the interfaces will be up. This process will take time to complete.

15. Verify the UCS cluster status and confirm that the HA status is “READY.”

UCS cluster HA status after subordinate Fabric Interconnect replacement

16. Verify from the hypervisor side that the data path is back up and redundant.

Data path recovered

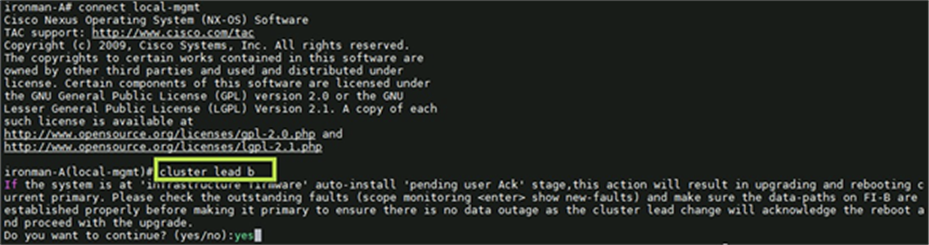

17. SSH to the UCSM cluster IP and run the commands given below to promote the subordinate Fabric Interconnect to primary. (Note: Verify that the cluster status is HA Ready before proceeding to the next step)

◦ # connect local-mgmt

◦ # cluster lead <a/b (current subordinate)>

Promoting the subordinate Fabric Interconnect

Note: You may lose connectivity to UCS Manager when this failover happens. If so, connect to the UCSM virtual IP again.

18. Check and verify that the UCS cluster status is healthy and HA status is “READY.”

19. Cable the second new Fabric Interconnect identically to the Fabric Interconnect that it is replacing and allow the configuration to be applied to the second new Fabric Interconnect.

20. Repeat steps 1 through 16 for the second new Cisco UCS 6400 Series Fabric Interconnect.
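The order of these steps is what keeps the migration online: hardware is only ever swapped on the subordinate, and the new Fabric Interconnect is promoted with `cluster lead` before the remaining old one is removed. The toy Python model below captures that sequence and asserts the key invariant. The class and function names are hypothetical and the model has no connection to any Cisco API.

```python
from dataclasses import dataclass


@dataclass
class FabricInterconnect:
    name: str   # "A" or "B"
    model: str  # for example "6248" or "6454"
    role: str   # "PRIMARY" or "SUBORDINATE"


def find_role(pair: list[FabricInterconnect], role: str) -> FabricInterconnect:
    return next(fi for fi in pair if fi.role == role)


def replace_subordinate(pair: list[FabricInterconnect], new_model: str) -> str:
    """Swap the hardware of the current subordinate (steps 1-16) and return its name."""
    target = find_role(pair, "SUBORDINATE")
    target.model = new_model
    return target.name


def promote_subordinate(pair: list[FabricInterconnect]) -> None:
    """Model `cluster lead <a/b>`: the subordinate and primary exchange roles (step 17)."""
    for fi in pair:
        fi.role = "PRIMARY" if fi.role == "SUBORDINATE" else "SUBORDINATE"


def migrate_pair(pair: list[FabricInterconnect], new_model: str) -> list[str]:
    """Return the order in which Fabric Interconnects were replaced."""
    replaced = [replace_subordinate(pair, new_model)]
    promote_subordinate(pair)
    # The new hardware must lead the cluster before the old primary is removed.
    assert find_role(pair, "PRIMARY").model == new_model
    replaced.append(replace_subordinate(pair, new_model))  # step 20
    return replaced
```

Starting from a pair where A is primary, `migrate_pair` replaces B first, promotes it, and only then replaces A.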

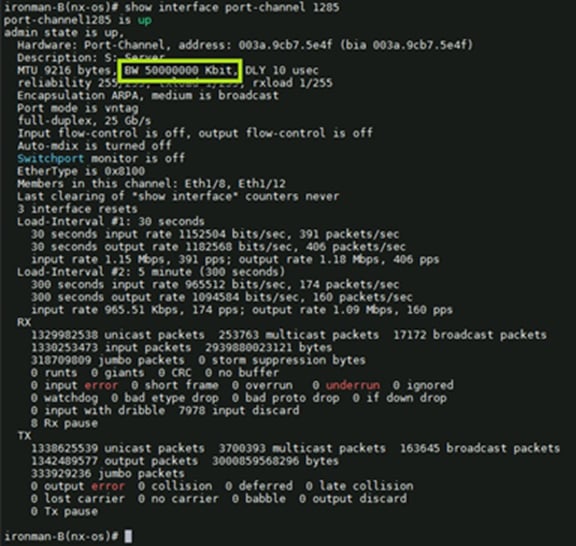

Optional: Upgrade HyperFlex server uplinks to 25Gbps with Cisco VIC 1457

The fourth-generation Cisco UCS 6400 Series Fabric Interconnects offer line-rate, low-latency, lossless 10/25/40/100 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE), and Fibre Channel functions. Combined with Cisco VIC 1457 adapters, the new 6400 Series Fabric Interconnects can deliver up to 100Gbps (50Gbps per Fabric Interconnect) of network bandwidth from the Fabric Interconnects toward the servers. Cisco VIC 1457 is a 10/25-Gbps quad-port Small Form-Factor Pluggable (SFP28) mLOM card designed for the M5 generation of Cisco HyperFlex and UCS servers. If your HyperFlex nodes have Cisco VIC 1457, you can upgrade the current southbound 10Gbps connectivity to 25Gbps without any downtime. New 25Gbps cables are required for the interfaces to negotiate at the higher speed. Refer to the Transceiver and Cable Support section of the Cisco UCS Virtual Interface Cards (VICs) 1400 series data sheet for supported transceiver and cable details.

Note: Although this upgrade is an online upgrade with vNIC failover, it is recommended to plan the activity during a maintenance window.

Procedure:

Before you start the server uplink upgrade, make sure that the vNIC failover policies are correctly configured and that redundant paths are up.

1. Check and confirm that the redundant paths are up from the hypervisor side.

2. Note down the exact server port numbers on both Fabric Interconnects for each HyperFlex node.

3. SSH to the UCSM Management IP. Then do the following:

◦ Run the command show cluster state to identify the subordinate Fabric Interconnect.

◦ Run the command connect nxos <a/b> to connect to the NX-OS shell of the subordinate Fabric Interconnect.

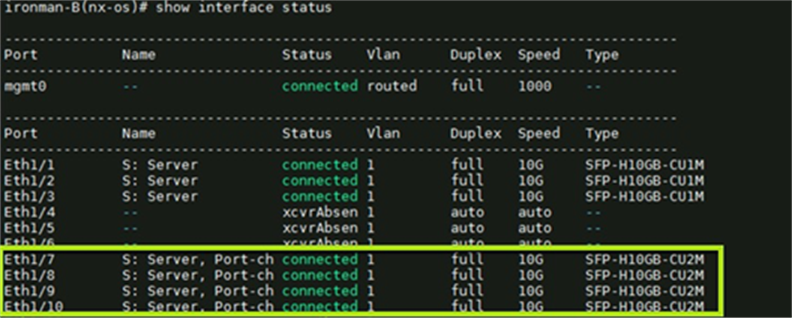

◦ Run the command show interface status and identify the server ports.

Fabric Interconnect server interfaces before the link upgrade

Note: As Figure 20 shows, with VIC 1457 the server ports are configured as port channels by default.

4. Log in to UCS Manager and navigate to Equipment -> Rack-Mounts -> Servers, and select the first HyperFlex node. In the right-hand pane, go to VIF paths and expand all the paths. Check and confirm that all the virtual NICs are up and no “unpinned” virtual NICs are present.

HyperFlex node VIF paths

5. Check and confirm that the HyperFlex cluster state is online and healthy by running the command hxcli cluster health.

6. Disconnect the link from the HyperFlex node to the subordinate Fabric Interconnect.

7. Connect the new 25G-capable cable to the same Fabric Interconnect port that was previously connected.

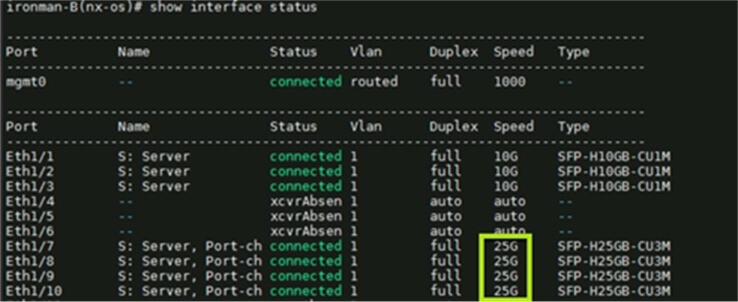

8. From the UCS Fabric Interconnect CLI, verify that the physical link is up and has negotiated 25G speed.

Fabric Interconnect server interfaces after link upgrade

9. Wait for the virtual NICs to be pinned to the appropriate uplink ports as shown in Figure 22, above.

Note: It may take a few minutes for dynamic pinning to complete and for the vNICs to be ready for failover. Check and make sure all the vNICs are in a pinned state before moving to the next step.

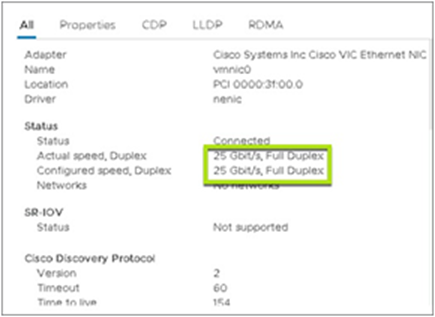

10. Check and verify that the virtual NIC in the hypervisor is up and showing a negotiated 25Gbps link speed.

Hypervisor uplink speed

11. Disconnect the link from the same HyperFlex node to the primary Fabric Interconnect and replace it with a new 25G-capable cable.

12. Repeat steps 8 through 10 for the second link to confirm the speed increase.

13. Repeat steps 4 through 12 on each node in the HyperFlex cluster.

Note: All the nodes in a HyperFlex cluster must be running with the same link speed. It is therefore imperative to ensure that all server links in the cluster are upgraded during the same maintenance window.
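Because every node must end up at the same speed, it is safer to check all the server ports recorded in step 2 than to spot-check a few. The Python sketch below parses rows assumed to resemble NX-OS `show interface status` output and confirms that each listed port is up at 25G. The sample text, port numbers, descriptions, and transceiver types are hypothetical, and the column layout (single-token Status, Vlan, Duplex, Speed, and Type at the end of each row) is an assumption to verify against your Fabric Interconnect's actual output.

```python
# Illustrative rows assumed to resemble `show interface status` output on a
# Fabric Interconnect; ports, names, and transceiver types are hypothetical.
SAMPLE_INTERFACE_STATUS = """\
--------------------------------------------------------------------------------
Port           Name                 Status    Vlan      Duplex  Speed   Type
--------------------------------------------------------------------------------
Eth1/17        server 1/1           up        trunk     full    25G     SFP-H25GB-CU3M
Eth1/18        server 2/1           up        trunk     full    10G     SFP-H10GB-CU3M
Eth1/49        uplink               up        trunk     full    100G    QSFP-100G-SR4-S
"""


def parse_interface_status(text: str) -> dict[str, dict[str, str]]:
    """Map each Ethernet port to its name, status, and speed columns.

    Assumes the last five columns are Status, Vlan, Duplex, Speed, and Type
    and that everything between Port and Status is the Name.
    """
    rows: dict[str, dict[str, str]] = {}
    for line in text.splitlines():
        tokens = line.split()
        # Data rows start with an Ethernet port name such as "Eth1/17".
        if len(tokens) < 6 or not tokens[0].startswith("Eth"):
            continue
        status, _vlan, _duplex, speed, _type = tokens[-5:]
        rows[tokens[0]] = {"name": " ".join(tokens[1:-5]), "status": status, "speed": speed}
    return rows


def links_at_speed(rows: dict[str, dict[str, str]], ports: list[str], speed: str = "25G") -> bool:
    """True when every listed port is present, up, and negotiated at `speed`."""
    return all(
        rows.get(port, {}).get("status") == "up" and rows.get(port, {}).get("speed") == speed
        for port in ports
    )
```

In the sample, Eth1/18 is still at 10G, so a cluster-wide check over both server ports fails until that link is upgraded too.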

Optional: Adding more links for higher network bandwidth

Cisco VIC 1457 is a 10/25-Gbps quad-port Small Form-Factor Pluggable (SFP28) mLOM card designed for the M5 generation of Cisco HyperFlex servers. If your applications require higher network bandwidth and your HyperFlex servers have the VIC 1457 adapter installed, you have an option to double the network bandwidth to each Fabric Interconnect. This is accomplished by adding one additional network link from each HyperFlex node to each Fabric Interconnect.

Note: This is a complete online upgrade without any downtime. However, it is recommended to perform this upgrade in a maintenance window.

Procedure:

Before you start adding additional links, ensure that the new cables are of the same speed as the existing cables. For example, if your current connectivity is 25Gbps, add an identical 25Gbps cable as the second link to each fabric interconnect. Mixing the speeds is not a recommended configuration.
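The bandwidth figures in this section follow from simple arithmetic: one 25Gbps link per Fabric Interconnect gives 50Gbps per node across both fabrics, and adding a second identical link per Fabric Interconnect doubles that to the 100Gbps (50Gbps per Fabric Interconnect) quoted earlier. The Python sketch below computes these nominal numbers and rejects mixed speeds, per the recommendation above; the function names are illustrative.

```python
def per_fi_bandwidth_gbps(link_speeds_gbps: list[int]) -> int:
    """Nominal aggregate bandwidth from one HyperFlex node to one Fabric Interconnect.

    Rejects mixed speeds, which this document does not recommend.
    """
    if not link_speeds_gbps:
        raise ValueError("at least one link is required")
    if len(set(link_speeds_gbps)) > 1:
        raise ValueError(f"mixed link speeds are not recommended: {link_speeds_gbps}")
    return sum(link_speeds_gbps)


def node_bandwidth_gbps(links_per_fi: int, speed_gbps: int, fabrics: int = 2) -> int:
    """Nominal bandwidth across both fabrics when each FI gets identical links."""
    return fabrics * per_fi_bandwidth_gbps([speed_gbps] * links_per_fi)


if __name__ == "__main__":
    print(node_bandwidth_gbps(links_per_fi=1, speed_gbps=25))  # 50
    print(node_bandwidth_gbps(links_per_fi=2, speed_gbps=25))  # 100
```

These are aggregate figures. Because a port channel distributes traffic by flow, a single flow is typically limited to the speed of one member link.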

1. Check and confirm that the HyperFlex cluster state is online and healthy by running the command hxcli cluster health.

2. Connect the new cables as shown in Figure 24 below.

Cisco VIC 1457 quad-port connections

3. Log in to UCS Manager and configure the newly connected ports as server ports on both Fabric Interconnects.

Configure the server ports

4. In UCS Manager, navigate to Equipment -> Rack-Mounts -> Servers -> your HyperFlex Node -> Adapters -> Adapter 1 -> DCE Interfaces, and confirm that all four links are now shown and that they are members of the port channel.

5. Optionally log in to each of the UCS Fabric Interconnects and confirm that the port channel bandwidth is now doubled.

Server bandwidth doubled

Important Note: Due to a known cosmetic defect, neither the ESXi CLI nor vCenter updates its status to show the new link speed. However, the added bandwidth is available for workloads to consume when needed. Rebooting the server resolves this display issue; the reboot can be scheduled during the next planned patch upgrade or any other maintenance window. The server reboot steps below are optional and are needed only if you want the status updated right away.

6. Reboot each HyperFlex node one by one.

◦ Log in to HyperFlex Connect and confirm that the cluster is online and healthy and that one or more node failures can be tolerated.

HyperFlex cluster health

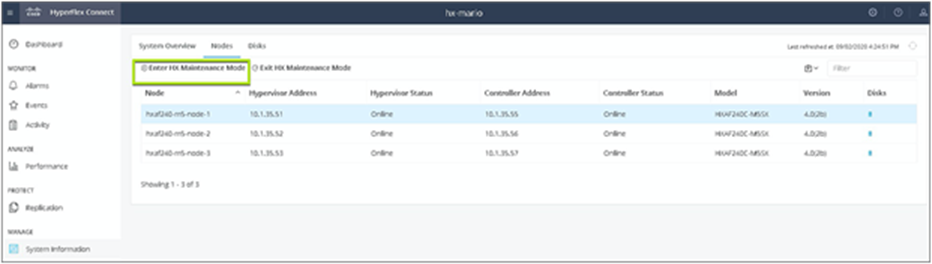

◦ Place the HyperFlex node in Maintenance Mode.

Enter HyperFlex Maintenance Mode

◦ Once the node is successfully in Maintenance Mode, reboot the node from vCenter.

◦ Wait for the node to reboot and reconnect to vCenter.

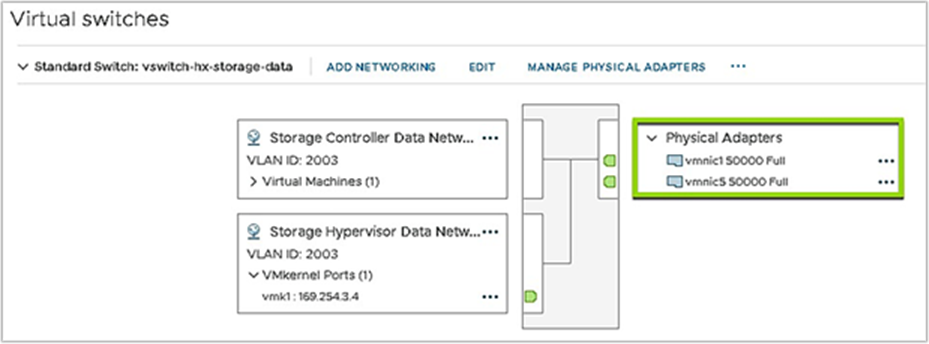

◦ Check and confirm that the updated link speed is displayed in vCenter or the ESXi shell.

Updated physical NIC bandwidth

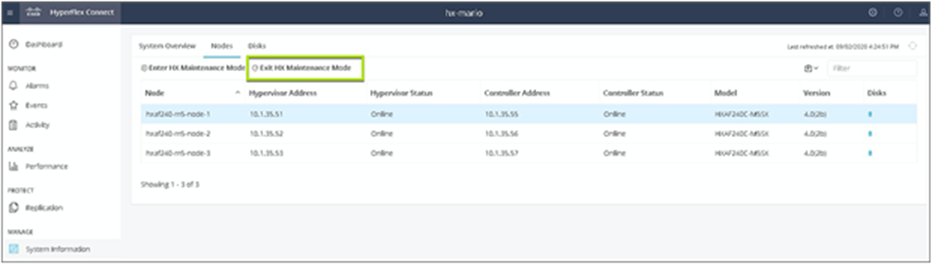

◦ From HyperFlex Connect, take the node out of Maintenance Mode.

Exit HyperFlex Maintenance Mode

◦ Wait until the cluster is online and healthy and the number of node failures it can tolerate is 1 or higher.

◦ Repeat the steps above for each remaining node in the cluster.
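The reboot sequence above is a classic rolling operation: one node at a time, and never while the cluster cannot tolerate a node failure. The Python sketch below shows that control flow. Every callable is a hypothetical placeholder for a manual or scripted action in HyperFlex Connect or vCenter; none of them is a real Cisco or VMware API.

```python
from typing import Callable, Iterable


def rolling_reboot(
    nodes: Iterable[str],
    failures_tolerated: Callable[[], int],
    enter_maintenance: Callable[[str], None],
    reboot_and_wait: Callable[[str], None],
    exit_maintenance: Callable[[str], None],
    wait_until_healthy: Callable[[], None],
) -> list[str]:
    """Reboot HyperFlex nodes one at a time, in the order of the steps above."""
    rebooted: list[str] = []
    for node in nodes:
        # Only take a node down while the cluster can tolerate at least one node failure.
        if failures_tolerated() < 1:
            raise RuntimeError(f"cluster cannot tolerate a node failure; stopping before {node}")
        enter_maintenance(node)  # HyperFlex Connect: enter Maintenance Mode
        reboot_and_wait(node)    # vCenter: reboot, then wait for the host to reconnect
        exit_maintenance(node)   # HyperFlex Connect: exit Maintenance Mode
        wait_until_healthy()     # block until the cluster is online and healthy again
        rebooted.append(node)
    return rebooted
```

Stopping with an error, rather than skipping ahead, reflects the intent of the procedure: if the cluster is not able to tolerate a failure, no further node should be taken down until that is resolved.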

Read more about Cisco HyperFlex:

https://www.cisco.com/site/us/en/products/computing/hyperconverged-infrastructure/index.html

Read more about the Cisco UCS 6454 Fabric Interconnect:

https://www.cisco.com/c/en/us/products/servers-unified-computing/fabric-interconnects.html

Read more about Cisco VIC 1457 adapters:

Read more about UCS Fabric Interconnect hardware migration: